Abstract

Behavioral flexibility is vital for survival in an environment of changing contingencies. The nucleus accumbens may play an important role in behavioral flexibility, representing learned stimulus–reward associations in neural activity during response selection and learning from results. To investigate the role of nucleus accumbens neural activity in behavioral flexibility, we used light-activated halorhodopsin to inhibit nucleus accumbens shell neurons during specific time segments of a bar-pressing task requiring a win–stay/lose–shift strategy. We found that optogenetic inhibition during action selection in the time segment preceding a lever press had no effect on performance. However, inhibition occurring in the time segment during feedback of results, whether rewards or nonrewards, reduced the errors that occurred after a change in contingency. Our results demonstrate critical time segments during which nucleus accumbens shell neurons integrate feedback into subsequent responses. Inhibiting nucleus accumbens shell neurons in these time segments, during reinforced performance or after a change in contingencies, increases lose–shift behavior. We propose that the activity of nucleus accumbens shell neurons in these time segments plays a key role in integrating knowledge of results into subsequent behavior, as well as in modulating lose–shift behavior when contingencies change.

Behavioral flexibility, the ability to change responses in accordance with feedback of results, is crucial for adaptive behavior. Tasks that include a switch in contingencies from a previously reinforced response to another response provide a sensitive measure of behavioral flexibility (Bitterman 1975; Kehagia et al. 2010; Rayburn-Reeves et al. 2013). The nucleus accumbens (NAcc) plays an important role in several forms of behavioral flexibility, including latent inhibition, attentional set shifting, and reversal learning (Stern and Passingham 1995; Cools et al. 2006; Floresco et al. 2006; O’Neill and Brown 2007), with the core and shell subregions of the NAcc regulating separate components (Weiner et al. 1996; Parkinson et al. 1999; Corbit et al. 2001; Ito et al. 2004; Cardinal and Cheung 2005; Pothuizen et al. 2005a,c; Granon and Floresco 2009). Inactivation of the NAcc shell prior to initial discrimination learning improves subsequent set-shifting performance (Floresco et al. 2006) and blocks latent inhibition (Weiner et al. 1996; Jongen-Relo et al. 2002; Pothuizen et al. 2005b, 2006). The NAcc shell plays a particular role in responses to changes in the incentive value of conditioned stimuli (Floresco et al. 2008; Granon and Floresco 2009), which may be important in different forms of behavioral flexibility. Here we investigate the neural mechanisms underlying behavioral flexibility in a task requiring a shift in responses after a contingency switch, using brief optogenetic inhibition to silence NAcc shell neurons in specific time segments.

At the cellular level, changes in the firing activity of NAcc neurons are associated with different phases of behavior, including preparation and response, reward expectation, and reward delivery (Carelli and Deadwyler 1994; Bowman et al. 1996; Carelli et al. 2000; Hollerman et al. 2000). During the response preparation phase, anticipatory increases in firing related to reward expectation occur (Carelli and Deadwyler 1994; Carelli et al. 2000). Similarly, phasic increases and more prolonged decreases in firing occur in response to a conditioned stimulus or the associated approach response (Day et al. 2006). Later in the sequence, when the outcome of the response is made known, some cells exhibit excitation, while others exhibit inhibition (Carelli and Deadwyler 1994; Carelli et al. 2000). This activation and inhibition of different neural subpopulations in the NAcc occurs in time segments related to different phases of action, from decision to feedback of results. Neural activity in these different time segments—response selection, reward expectancy, and reward delivery—may therefore play a specific causal role in response selection.

Correlation of activation and inhibition of neural activity with behavior establishes the possibility of a causal relationship between the neural activity and the behavior, but a causal relationship cannot be inferred from recording studies showing only correlation. For example, behavior may cause the neural activity, rather than the converse. To show a causal role of neural activity during particular intervals it is necessary to manipulate this activity on similar timescales to the recorded activations and inhibitions. The recent introduction of optogenetic methods has made it possible to modify ongoing neural activity on millisecond timescales (Aravanis et al. 2007; Arenkiel et al. 2007; Gradinaru et al. 2007, 2008; Zhang et al. 2008; Tsai et al. 2009; Gunaydin et al. 2010; Liu and Tonegawa 2010; Lobo et al. 2010; Zhang et al. 2010). This temporal precision of optogenetics makes it possible to investigate the causal role of neural activity in different time segments of a response on a second-by-second basis, extending previous work based on correlation of neural activity and behavior (Tye et al. 2012; Nakamura et al. 2013; Steinberg et al. 2013). In particular, the light-activated halorhodopsin (Han and Boyden 2007; Zhang et al. 2007; Gradinaru et al. 2008) provides a means to optogenetically inhibit neurons in the rodent brain. Recent developments support the use of halorhodopsin in rats (Witten et al. 2011; Stefanik et al. 2012; Nakamura et al. 2013), which have some advantages for testing behavioral flexibility (Whishaw 1995; Cressant et al. 2007).

In the present study we investigated the effect on behavioral flexibility of optogenetic inhibition of the NAcc shell neurons in behaving rats during specific time segments related to task events. Based on evidence that NAcc shell neurons encode the incentive value of conditioned stimuli, we hypothesized that inhibition during feedback of results would change the probability of a shift in response after a switch in contingencies. To test this hypothesis we used viral-mediated gene transfer to express halorhodopsin in neurons of the rat NAcc shell. We injected a lentiviral vector (pLenti-hSyn-eNpHR3.0-EYFP) into the NAcc shell bilaterally and implanted optic fibers above the injection sites on both sides. A light-emitting diode (LED) delivered light to the optic fibers, so that infected NAcc shell neurons were inhibited when the LED was on. The LED was turned on or off in specific time segments of a task in which contingencies switched several times during a session. We used this approach to investigate the causal role of NAcc shell neurons in integration of the results of previous responses into subsequent responses, focusing on the role of their activity in specific time segments of the sequence of behavior.

Results

Functional expression of halorhodopsin in medium spiny neurons

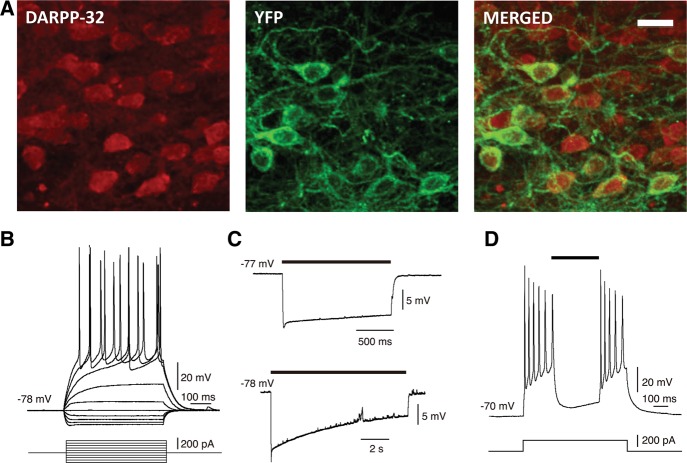

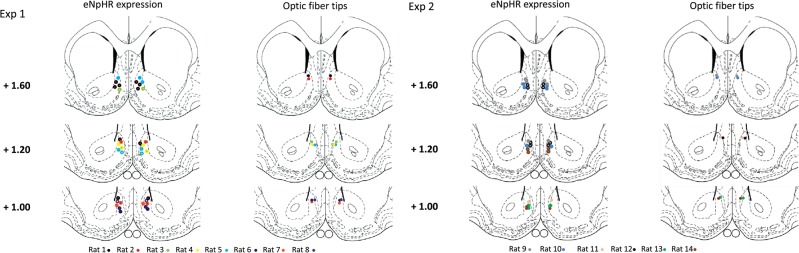

Halorhodopsin expression and optical fiber placement in the NAcc shell were confirmed by histology in all animals at the end of the experiments (Fig. 1). Cellular expression in the principal neurons of the NAcc shell, the medium spiny neurons (MSNs), was shown by colocalization of yellow fluorescent protein (YFP) and a specific marker for MSNs (DARPP-32, dopamine and cyclic adenosine monophosphate responsive phosphoprotein of molecular weight 32 kDa) that labels both D1 and D2 subtypes of MSN (Bertran-Gonzalez et al. 2008; Matamales et al. 2009; Rajput et al. 2009) (Fig. 2A). In total, 93% (114/122) of halorhodopsin-expressing cells were MSNs, consistent with the percentage of MSNs measured in the striatum by quantitative neuroanatomy (Oorschot 1996). The infected neurons exhibited electrophysiological characteristics of MSNs, including inward rectification and delayed action potential firing (Fig. 2B).

Figure 1.

Halorhodopsin expression and position of optical fibers in the NAcc shell. Location of maximal expression of halorhodopsin (eNpHR expression, left) and tips of optical fibers (right) are indicated by circles, color-coded by animal. Several animals showed halorhodopsin expression in multiple sections. Halorhodopsin expression extended ∼300 µm in the medial–lateral and 400 µm in the dorsal–ventral directions.

Figure 2.

Cellular expression of halorhodopsin and electrophysiology of light-activated inhibition in medium spiny neurons. (A) Medium spiny neurons (DARPP-32, red), halorhodopsin (YFP, green), and their colocalization (MERGED) indicating halorhodopsin expression in medium spiny neurons (red + green). Scale bar, 20 µm. (B) Electrophysiological recording from YFP-positive neurons in NAcc shows the characteristic voltage response (above) of a medium spiny neuron to depolarizing and hyperpolarizing current pulses (below). (C) Optical stimulation of YFP-positive neurons induces hyperpolarization in medium spiny neurons on short and long timescales. Black bars indicate illumination time (upper trace, 1.5 sec; lower trace, 10 sec). (D) Illumination (black bar) blocked repetitive action potential firing induced by suprathreshold current injection.

Functional expression of halorhodopsin was confirmed by hyperpolarization of YFP-positive neurons on exposure to yellow light for 1.5 sec or 10 sec (Fig. 2C). Illumination for 1.5 sec hyperpolarized neurons by 26.8 mV below the resting membrane potential on average (n = 3), indicating strong inhibition. Action potential firing induced by strong depolarizing current was completely stopped by illumination (Fig. 2D). Control neurons (YFP-negative) from outside the injected areas were not responsive to light stimulation. Thus, optogenetic inhibition of MSNs was able to block firing in response to excitatory currents that were many times greater than synaptic inputs recorded in vivo (Wickens and Wilson 1998).

Experiment 1: Behavioral flexibility is increased by inhibition during feedback

We investigated the effect on behavioral flexibility of optogenetic inhibition during specific time segments of a task that involved within-session switching of contingencies. Rats (n = 8) were trained to criterion on tasks of increasing difficulty, initially learning to press one of two levers for an immediate food reward, and then progressing through stages: between-session reversal, single reversal within a session, and multiple reversals within a session. In the final multiple-reversal testing sessions there were 80 rewards per session. After 20 rewards had been given on one lever, the stimulus–reward contingencies were reversed so that pressing the other lever was required for reward delivery. Each session therefore comprised four contingency blocks of 20 rewards separated by three reversals, with the switching sequence counterbalanced. Rats had 90 minutes to complete the task and could choose to lever press at any time within a session.
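The multiple-reversal schedule can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not the control software used in the experiments: the lever labels "L"/"R", the class name `ReversalTask`, and the reading that the 20th, 40th, and 60th rewards each trigger a reversal are our interpretation of the text, and the 90-minute limit is not modeled.

```python
class ReversalTask:
    """Hypothetical model of the multiple-reversal schedule.

    The rewarded lever switches after every `rewards_per_block` rewards;
    the session ends after `n_blocks` blocks (80 rewards by default).
    """

    def __init__(self, first_correct: str = "L", rewards_per_block: int = 20,
                 n_blocks: int = 4) -> None:
        if first_correct not in ("L", "R"):
            raise ValueError("first_correct must be 'L' or 'R'")
        self.correct = first_correct          # currently rewarded lever
        self.rewards_per_block = rewards_per_block
        self.n_blocks = n_blocks
        self.rewards = 0
        self.reversals = 0

    @property
    def done(self) -> bool:
        return self.rewards >= self.rewards_per_block * self.n_blocks

    def press(self, lever: str) -> bool:
        """Register a lever press; return True if it is rewarded."""
        if self.done:
            raise RuntimeError("session already complete")
        if lever != self.correct:
            return False                      # error: no reward, no state change
        self.rewards += 1
        # Reverse the contingency at the end of every block except the last.
        if self.rewards % self.rewards_per_block == 0 and not self.done:
            self.correct = "R" if self.correct == "L" else "L"
            self.reversals += 1
        return True
```

In this sketch, counterbalancing the switching sequence would correspond to varying `first_correct` across sessions; an 80-reward session always contains three reversals.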

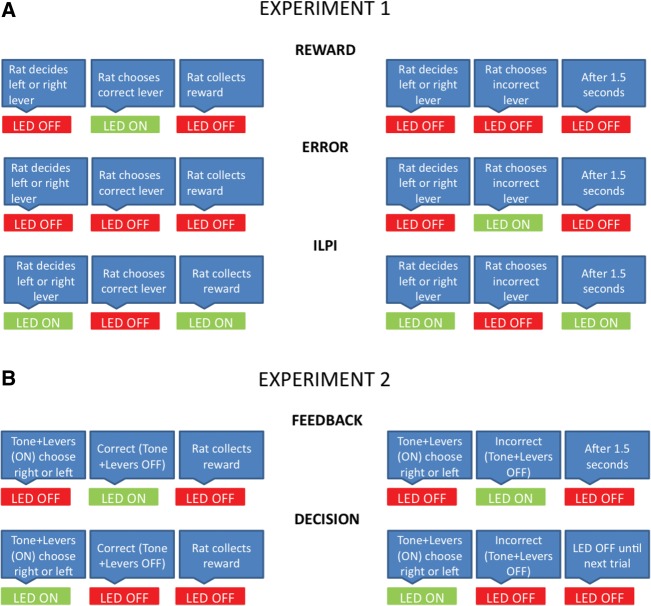

To investigate the role of neural activity in the NAcc shell in different phases of their responses, rats were subjected to optogenetic inhibition by turning on the LED during specific time segments (Fig. 3A). In the REWARD condition, the LED was turned on by a correct lever press and stayed on until reward collection. In the ERROR condition, the LED was turned on after an incorrect lever press and stayed on for 1.5 sec. In the ILPI (inter lever-press interval) condition, the LED was on throughout the session, but turned off whenever the rat made a lever press. If the lever press was correct the LED stayed off until reward collection. If the lever press was incorrect the LED stayed off for 1.5 sec. Control conditions included leaving the LED off throughout all sessions (OFF condition), or turning the LED on at random for 1.5 sec every 30, 45, or 60 sec (RANDOM condition). In addition, a separate group of rats received an inactive halorhodopsin to control for direct effects of LED illumination. The total time of LED illumination for each condition is shown in Supplemental Table 1.
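The LED schedules above, together with the FEEDBACK condition of Experiment 2 (REWARD and ERROR epochs in the same session), can be expressed as interval arithmetic over a session's event log. This is a hypothetical sketch, not the authors' control code: the event labels "correct", "incorrect", and "collect", times in seconds, and the choice to merge overlapping windows are assumptions, and the RANDOM condition is omitted.

```python
from typing import List, Tuple

Interval = Tuple[float, float]   # (start_s, end_s)
Event = Tuple[float, str]        # (time_s, "correct" | "incorrect" | "collect")


def feedback_windows(events: List[Event], error_duration: float = 1.5):
    """Split events into reward windows (correct press -> reward collection)
    and error windows (incorrect press -> +error_duration s)."""
    reward_w: List[Interval] = []
    error_w: List[Interval] = []
    pending = None                       # time of an uncollected correct press
    for t, kind in sorted(events):
        if kind == "correct":
            pending = t
        elif kind == "collect" and pending is not None:
            reward_w.append((pending, t))
            pending = None
        elif kind == "incorrect":
            error_w.append((t, t + error_duration))
    return reward_w, error_w


def merge(intervals: List[Interval]) -> List[Interval]:
    """Merge overlapping or touching intervals."""
    out: List[Interval] = []
    for start, end in sorted(intervals):
        if out and start <= out[-1][1]:
            out[-1] = (out[-1][0], max(out[-1][1], end))
        else:
            out.append((start, end))
    return out


def complement(intervals: List[Interval], session_end: float) -> List[Interval]:
    """Return the parts of [0, session_end] not covered by `intervals`."""
    gaps: List[Interval] = []
    cursor = 0.0
    for start, end in merge(intervals):
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < session_end:
        gaps.append((cursor, session_end))
    return gaps


def led_on_intervals(events: List[Event], condition: str, session_end: float,
                     error_duration: float = 1.5) -> List[Interval]:
    reward_w, error_w = feedback_windows(events, error_duration)
    if condition == "OFF":
        return []
    if condition == "REWARD":
        return merge(reward_w)
    if condition == "ERROR":
        return merge(error_w)
    if condition == "FEEDBACK":          # both epochs within one session
        return merge(reward_w + error_w)
    if condition == "ILPI":              # on except during the feedback epochs
        return complement(reward_w + error_w, session_end)
    raise ValueError(f"unsupported condition: {condition}")
```

Framing ILPI as the complement of the REWARD and ERROR windows makes explicit that it targets the inter-press (decision) period. Summing `end - start` over the returned intervals would give total illumination time per condition.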

Figure 3.

Schematic representation of optogenetic conditions. (A) Experiment 1. REWARD: LED on after a correct lever press and off when the reward was collected. ERROR: LED on after an incorrect lever press and off after 1.5 sec. ILPI: LED on throughout, but turned off after a correct lever press until reward collection, and after an incorrect lever press for 1.5 sec. (B) Experiment 2. FEEDBACK: LED on during both REWARD and ERROR epochs, as defined in Experiment 1, within the same session. DECISION: LED on during tone and lever-out period until a correct or incorrect lever press.

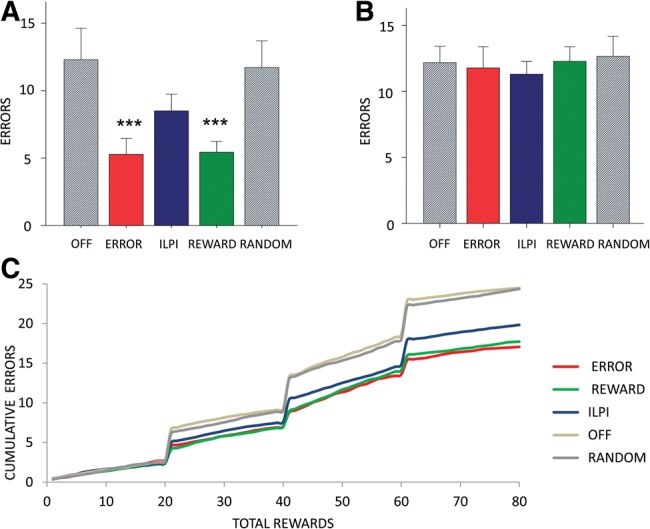

We first analyzed errors that occurred immediately after the switch in contingencies at the end of each block of 20 rewards. Such errors may be due to a failure to cease pressing an unreinforced lever and could be considered a failure to implement a lose–shift strategy. We found a main effect of LED condition (F(1.29,9.05) = 33.5, P < 0.001). We then made post-hoc comparisons of the error rate for each optogenetic manipulation (ERROR, REWARD, ILPI, RANDOM, OFF). There was a significant reduction in errors in REWARD or ERROR conditions compared to the two control conditions (REWARD vs. OFF, P = 0.002; REWARD vs. RANDOM, P = 0.001; ERROR vs. OFF, P = 0.002; ERROR vs. RANDOM, P = 0.002) (Fig. 4A). The reduction in errors in ERROR and REWARD conditions was also significant when compared to the ILPI condition (ERROR vs. ILPI, P = 0.001; REWARD vs. ILPI, P = 0.001). There was no significant difference between ILPI and control conditions (ILPI vs. OFF, P = 0.175; ILPI vs. RANDOM, P = 0.215), suggesting that inhibition of activity in the decision period preceding action selection had no effect on the errors. These results suggest that the activity of NAcc cells in the time after action selection (lever-pressing) until outcome (reward or nonreward), corresponding to feedback of reward or error results, is important for lose–shift behavior.
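The split between reversal errors (Fig. 4A) and all remaining errors (Fig. 4B) can be sketched as follows. This is an illustrative reading of the figure definitions, not the authors' analysis code: the trial representation `(block, correct)` and the function name are hypothetical, and errors in the first block (which follows no reversal) are assumed to fall into the "other" category.

```python
from typing import List, Tuple

Trial = Tuple[int, bool]   # (block index 0..3, response was correct)


def split_errors(trials: List[Trial]) -> Tuple[int, int]:
    """Return (reversal_errors, other_errors).

    Reversal errors are errors made in blocks 1..n after a contingency switch
    and before the first correct response in that block; every other error,
    including all errors in block 0, is counted as an 'other' error.
    """
    reversal_errors = other_errors = 0
    seen_correct = set()                 # blocks with at least one correct response
    for block, correct in trials:
        if correct:
            seen_correct.add(block)
        elif block > 0 and block not in seen_correct:
            reversal_errors += 1
        else:
            other_errors += 1
    return reversal_errors, other_errors
```

Summing the first value over the three reversals of a session gives the quantity plotted in Fig. 4A; the second corresponds to Fig. 4B.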

Figure 4.

Reversal errors are reduced by optogenetic inhibition during REWARD or ERROR epochs. (A) Mean total number of lever-pressing errors after reversal until first correct response summed over three reversals. There is a significant decrease in these errors in the ERROR and REWARD conditions. (B) Mean total number of lever-pressing errors excluding those shown in A. There is no difference between the conditions. (C) Learning curve showing cumulative errors over rewards acquired (80 rewards per session) for the three optogenetic conditions (ERROR, REWARD, ILPI) and two control conditions (OFF, RANDOM), confirming that the main effect is in the errors after reversal and before the first correct response.

To investigate whether win–stay behavior was also affected, we analyzed errors that occurred after the first correct response. There was no significant main effect of optogenetic manipulation on lever-pressing errors that occurred after the first correct response (Fig. 4B). We then examined trial by trial whether optogenetically stimulated rats and controls learned the task differently. A learning curve (Fig. 4C) showing cumulative errors confirmed that the main effects occurred in the errors after reversal. The learning rate during rewarded correct responding was similar across conditions. These results indicate that win–stay behavior was not affected by any of the optogenetic manipulations.

We further examined the microstructure of learning by counting the number of times the animal made an error and then chose the correct lever on the next trial, expressed as a percentage of the total number of errors (lose–shift percentage). We also counted the number of times the animal received a reward for pressing one lever and then chose the same lever on the next trial, expressed as a percentage of the total number of rewards (win–stay percentage). Consistent with the statistical analysis of the number of errors between contingency shifts, lose–shift percentages were higher in the REWARD (62.1%) and ERROR (61.6%) conditions than in the ILPI (49.4%) or OFF (45.1%) conditions. In contrast, win–stay percentages were similar across all conditions (range 83.9% to 85.9%). These results confirm that the main effect of optogenetic inhibition in REWARD and ERROR conditions is increased probability of lose–shift behavior without an effect on win–stay behavior.
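A minimal sketch of the win–stay and lose–shift percentages, assuming a hypothetical trial log of `(lever, rewarded)` pairs. Because contingencies in this task switch only after a reward, choosing the correct lever after an error is, with two levers, the same as shifting to the other lever, so the sketch counts shifts. Only trials followed by another trial enter the denominators; that handling of the last trial is our assumption.

```python
from typing import List, Tuple

Trial = Tuple[str, bool]   # (lever pressed, rewarded)


def win_stay_lose_shift(trials: List[Trial]) -> Tuple[float, float]:
    """Return (win_stay_pct, lose_shift_pct) from consecutive trial pairs."""
    wins = stays = losses = shifts = 0
    for (lever, rewarded), (next_lever, _) in zip(trials, trials[1:]):
        if rewarded:
            wins += 1
            if next_lever == lever:      # win followed by the same lever
                stays += 1
        else:
            losses += 1
            if next_lever != lever:      # loss followed by the other lever
                shifts += 1
    win_stay = 100.0 * stays / wins if wins else float("nan")
    lose_shift = 100.0 * shifts / losses if losses else float("nan")
    return win_stay, lose_shift
```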

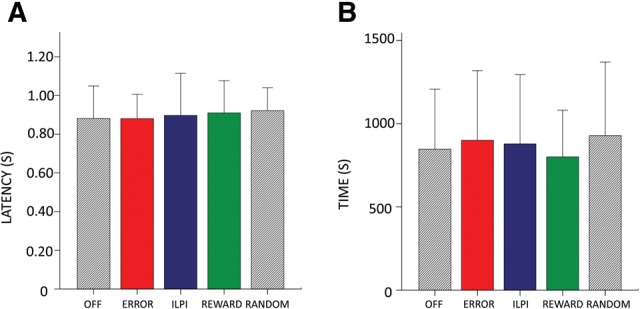

Optogenetic inhibition had no effect on overall task performance. Analysis of discrimination percentages for each block (Supplemental Table 2) confirmed that rats’ overall performance was similar across all conditions. Optogenetic condition also had no effect on motivational measures such as total time to complete each session (F(2.27,11.38) = 0.97, P > 0.05) (Fig. 5A) and latency of reward collection after a correct lever press (F(2.22,11.12) = 0.33, P > 0.05) (Fig. 5B).
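The fractional degrees of freedom in the repeated-measures F tests of this section are consistent with a sphericity correction of the Greenhouse–Geisser type: for example, F(1.29, 9.05) with eight rats and five conditions corresponds to the uncorrected (4, 28) scaled by ε ≈ 0.32. The text does not name the correction, so this is an assumption. The sketch below estimates ε from a subjects × conditions matrix using the double-centered covariance matrix; function names are ours, not a library API.

```python
from typing import List, Tuple


def gg_epsilon(data: List[List[float]]) -> float:
    """Greenhouse-Geisser epsilon for a one-way repeated-measures design.

    `data` has one row per subject and one column per condition (k >= 2).
    With D the double-centred sample covariance matrix of the k conditions,
    epsilon = tr(D)^2 / ((k - 1) * sum of squared elements of D).
    """
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    cov = [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
            for j in range(k)] for i in range(k)]
    row_means = [sum(cov[i]) / k for i in range(k)]
    grand = sum(row_means) / k
    # The covariance matrix is symmetric, so column means equal row means.
    d = [[cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
         for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    sum_sq = sum(d[i][j] ** 2 for i in range(k) for j in range(k))
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(epsilon: float, k: int, n: int) -> Tuple[float, float]:
    """Scale the uncorrected (k - 1, (k - 1)(n - 1)) degrees of freedom by epsilon."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)
```

ε always lies between 1/(k − 1) and 1; with only two conditions it equals 1, so no correction applies.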

Figure 5.

Optogenetic stimulation has no effect on motivational variables. (A) There is no significant effect of condition on latency from correct lever press to reward collection. (B) There is no significant effect of condition on time to complete the task.

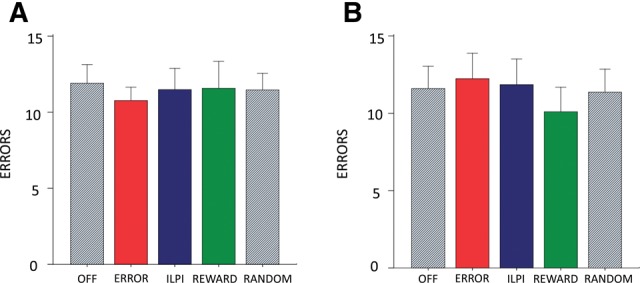

Light delivery may, in theory, alter neural activity even in nonexpressing cells. Although this is unlikely with the light levels used in the current experiment (Yizhar et al. 2011), to control for the nonoptogenetic effects of the LED we tested a separate group of rats (n = 6) that received an inactive halorhodopsin (pLenti-YFP). In these rats the three LED manipulations (ERROR, REWARD, and ILPI) had no effect on performance (Fig. 6A,B). This indicates that the effects on reversal errors in halorhodopsin-expressing rats were not caused by direct physiological effects of LED illumination.

Figure 6.

Optical stimulation has no effect on control rats with inactive halorhodopsin. (A) Total number of lever-pressing errors after reversal until first correct response summed over three reversals. There is no effect of condition. (B) Total number of lever-pressing errors excluding errors in the period after reversal until first correct response. There is no effect of condition.

Experiment 2: Behavioral flexibility is increased by optogenetic inhibition during FEEDBACK but not DECISION periods

In Experiment 2 we made two modifications to the task in light of results from Experiment 1. First, we delineated a decision period so that optogenetic inhibition could be applied in a distinct time segment. In this DECISION time segment, optogenetic inhibition started with the onset of a discriminative stimulus (a tone starting with the protrusion of the two levers), and stopped either after 5 sec (when the tone ceased and the levers retracted) or when the rat pressed a lever (correct or incorrect). Second, to exclude the possibility that optogenetic inhibition in the former REWARD and ERROR conditions might act as a discriminative stimulus and increase the effect of reward or error outcomes, we applied optogenetic inhibition during both errors and correct responses (FEEDBACK) within the same session.
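The DECISION window described above can be sketched as a single function. This is a hypothetical helper, not the authors' code; times are in seconds, and a press after the 5-sec tone (when the levers have retracted) is assumed not to shorten the window.

```python
from typing import Optional, Tuple


def decision_window(tone_onset: float, press_time: Optional[float] = None,
                    max_duration: float = 5.0) -> Tuple[float, float]:
    """LED-on interval for one DECISION epoch: from tone and lever onset until
    the first lever press (correct or incorrect) or the end of the tone."""
    end = tone_onset + max_duration
    if press_time is not None and tone_onset <= press_time < end:
        end = press_time                 # a press terminates inhibition early
    return tone_onset, end
```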

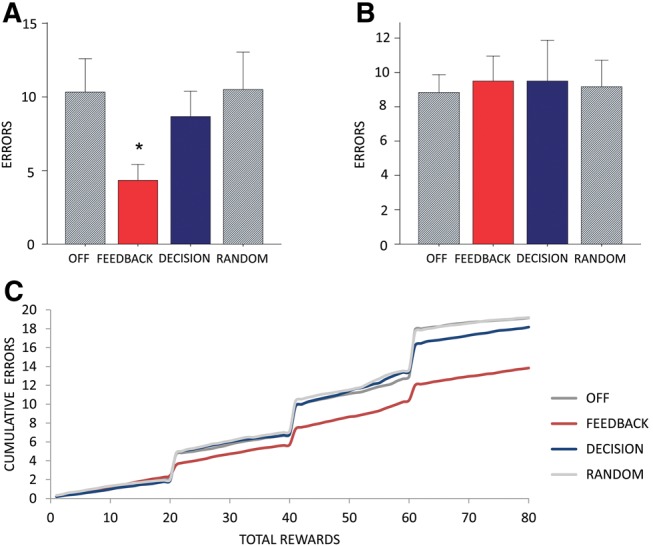

In Experiment 2 (n = 6) we found a main effect of condition on the total number of lever-pressing errors after reversal until the first correct response over three reversals (F(3,15) = 19.3, P < 0.001). There was a significant error reduction in sessions in which the LED was turned on during either a correct or incorrect response (FEEDBACK vs. OFF, P = 0.015; FEEDBACK vs. RANDOM, P = 0.024; FEEDBACK vs. DECISION, P = 0.027), but not in sessions in which it was turned on during decisions (DECISION vs. OFF, P = 0.630; DECISION vs. RANDOM, P = 0.170) (Fig. 7A). We found no significant main effect of optogenetic manipulation on the total number of lever-pressing errors excluding errors in the period after reversal until the first correct response (Fig. 7B). These results confirm that the activity of NAcc cells during FEEDBACK is important for lose–shift behavior, regardless of whether feedback is of reward or error results. These results also confirm that inhibition during a more distinct DECISION period has no significant effect on the measures tested.

Figure 7.

Reversal errors are reduced by optogenetic inhibition during FEEDBACK. (A) Mean total number of lever-pressing errors after reversal until first correct response summed over three reversals. There is a significant decrease in these errors in the FEEDBACK condition but not in the DECISION, RANDOM, or OFF conditions. (B) Mean total number of lever-pressing errors excluding those shown in A. There is no difference between the conditions. (C) Learning curve showing cumulative errors over rewards acquired (80 rewards per session) for the two optogenetic conditions (FEEDBACK, DECISION) and the control conditions (OFF, RANDOM), confirming that the main effect occurs in the period between contingency switch and the first correct response.

As in Experiment 1, the learning curves show that across the 80 rewards in a session, rats in all conditions learned at a similar rate (with an increase in the error rate between the 2nd and 3rd reversal), but made fewer errors immediately after the switch in the FEEDBACK condition (Fig. 7C). Consistent with the appearance of the learning curves, the lose–shift percentage was higher in the FEEDBACK condition (57.8%) than in the other conditions (40.7%–44.6%). In contrast, win–stay percentages were similar across all conditions (88.1%–88.8%). Analysis of discrimination percentages for each block confirmed that overall task performance was similar across all conditions (Supplemental Table 3). These results confirm that the main effect of optogenetic inhibition in FEEDBACK conditions is increased probability of lose–shift behavior.

Discussion

To the best of our knowledge, this is the first demonstration that optogenetic inhibition of NAcc shell neurons during reward or error feedback intervals increases behavioral flexibility in a task requiring a win–stay/lose–shift strategy. We found that optogenetic inhibition of NAcc shell activity in the time segment between action selection and outcome reduced the number of errors after a stimulus–reward contingency switch. However, optogenetic inhibition in other time segments had no effect on our behavioral measures. Our results demonstrated critical time windows during which NAcc shell neurons (1) integrate reward or error feedback history and (2) use this integrated history to resist lose–shift behavior after a contingency switch. Inhibiting NAcc shell neurons in these critical time windows increased lose–shift behavior, thus facilitating behavioral flexibility.

The optogenetic manipulation we used enabled us to inhibit halorhodopsin-expressing neurons within range of the optic fiber. Based on the wavelength, the fiber numerical aperture, and the light power output from the tip of the optic fiber, we estimate that the light emanating from the tip would penetrate 0.2–0.3 mm into the tissue, inactivating a volume of 0.034–0.11 mm³. Since the density of MSNs is 84,900 mm⁻³ (Oorschot 1996), we estimate that about 10⁴ neurons caused the effects we observed. In our intracellular recordings, the inhibitory effect of halorhodopsin was strong enough to block firing in response to injected currents that were several times larger than synaptic currents. No rebound spiking was observed in the MSNs, consistent with previous studies (Wickens and Wilson 1998; Lansink 2008). Thus, the main effect of the optogenetic manipulation was reduced firing of the MSN output neurons of the NAcc shell.
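The volume and neuron-count estimate can be reproduced with a few lines of arithmetic. The quoted volume range matches a sphere of radius 0.2–0.3 mm centered on the fiber tip; the text does not state the geometry, so the sphere is our assumption (a hemisphere would give half these values). Multiplying by the MSN density gives roughly 2,800–9,600 MSNs per fiber, or about 5,700–19,000 for the two bilateral fibers, which is of the order of 10⁴.

```python
import math

MSN_DENSITY_PER_MM3 = 84_900  # MSN density cited in the text (Oorschot 1996)


def sphere_volume_mm3(radius_mm: float) -> float:
    """Volume of a sphere of the given radius (assumed illuminated geometry)."""
    return 4.0 / 3.0 * math.pi * radius_mm ** 3


def neurons_in_volume(volume_mm3: float, density: float = MSN_DENSITY_PER_MM3) -> float:
    """Expected number of MSNs in a tissue volume at the given density."""
    return volume_mm3 * density


for radius in (0.2, 0.3):                # penetration depths quoted in the text
    v = sphere_volume_mm3(radius)
    per_fiber = neurons_in_volume(v)
    print(f"r = {radius} mm: V = {v:.3f} mm^3, "
          f"~{per_fiber:,.0f} MSNs per fiber, ~{2 * per_fiber:,.0f} for two fibers")
```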

In addition to the MSNs, the NAcc has a small population of fast-spiking interneurons (Kawaguchi et al. 1995). These interneurons are relatively few in number (<1% of the total neurons), but they have strong inhibitory effects on MSNs (Koos and Tepper 1999; Koos et al. 2004). The human synapsin (hSyn) promoter we used may have driven expression of halorhodopsin in fast-spiking interneurons as well as in MSNs, raising the possibility of a disinhibitory action on MSNs. However, our intracellular recordings indicate that this is unlikely, because the inhibitory effect of halorhodopsin in MSNs is greater than the inhibitory currents caused by fast-spiking interneurons (Koos et al. 2004; Tepper et al. 2004). Moreover, although low-threshold spiking interneurons do exhibit rebound low-threshold calcium bursts after release from hyperpolarization (Kubota and Kawaguchi 2000), such bursts would increase the inhibitory effect of halorhodopsin on MSNs rather than diminish it. Therefore, inhibition of MSN output is the dominant effect of the optogenetic manipulation.

Our main finding is that the optogenetic inhibition of MSNs in the NAcc shell causes improved switching performance when inhibition is applied in either of two time windows: after correct responses (REWARD), or after errors (ERROR). We suggest that these effects are most probably mediated by the same neurons—those that express halorhodopsin—involved in a mechanism that first integrates feedback during learning and is later engaged after a shift in contingencies. We discuss this putative mechanism below.

In the REWARD condition, NAcc shell neurons were inhibited only after correct responses. The effect of inhibition in the REWARD condition, seen after the reversal, was evident in the smaller number of errors before the first correct response. However, rats in the REWARD condition received no optogenetic inhibition during the unrewarded responding on the incorrect lever immediately after the contingency switch, because they were making incorrect responses. This means that the cause of the improvement must have arisen during the initial learning, before the reversal, when the rats were making correct responses. Yet there is no evidence in our data of fewer correct responses in the periods before the reversal, where the learning curves are similar for all conditions.

To explain the effect of optogenetic inhibition in the REWARD condition, we postulate weaker learning of reward expectancy prior to the switch in contingencies, caused by inhibition of NAcc shell neurons after a correct response. The discharge rate of ventral striatal neurons has been reported to correlate with reward magnitude and expectancies and encode cues related to reward (Cromwell and Schultz 2003; Roitman et al. 2005; Wood et al. 2011). Optogenetic inhibition of such neurons during learning experiences would reduce activity that is necessary for synaptic plasticity (Reynolds and Wickens 2000, 2002; Reynolds et al. 2001), leading to weaker reward expectation signals. Weaker reward expectation signals in turn may cause a reduced tendency to resist lose–shift behavior, resulting in more labile responding after a contingency switch.
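The postulated mechanism can be illustrated with a toy delta-rule model. This is our own illustrative sketch, not a model fitted or proposed by the authors: reward expectancy for the correct lever is updated with a constant step size α (a recency-weighted average, as in Sutton and Barto), inhibition during REWARD epochs is represented, hypothetically, as a smaller α on rewarded trials, and the agent shifts levers once its expectancy falls below an arbitrary threshold. All function names and parameter values are assumptions.

```python
def delta_update(q: float, reward: float, alpha: float) -> float:
    """Constant step-size (recency-weighted) update: Q <- Q + alpha * (r - Q)."""
    return q + alpha * (reward - q)


def expectancy_after_block(n_rewards: int, alpha_reward: float, q0: float = 0.0) -> float:
    """Reward expectancy for the correct lever after n consecutive rewards."""
    q = q0
    for _ in range(n_rewards):
        q = delta_update(q, 1.0, alpha_reward)
    return q


def perseverative_errors(q: float, alpha_loss: float, shift_threshold: float,
                         max_errors: int = 1000) -> int:
    """Count unrewarded presses on the formerly correct lever after a reversal.

    The hypothetical agent keeps pressing while its expectancy stays at or
    above `shift_threshold` and shifts as soon as it falls below it.
    """
    errors = 0
    while errors < max_errors:
        errors += 1                      # nonrewarded press on the old lever
        q = delta_update(q, 0.0, alpha_loss)
        if q < shift_threshold:
            break
    return errors


# Illustrative parameters only (not fitted to any data).
control_q = expectancy_after_block(20, alpha_reward=0.20)
inhibited_q = expectancy_after_block(20, alpha_reward=0.05)  # weaker reward learning
print(perseverative_errors(control_q, alpha_loss=0.20, shift_threshold=0.3))
print(perseverative_errors(inhibited_q, alpha_loss=0.20, shift_threshold=0.3))
```

With these arbitrary values the control agent reaches an expectancy of about 0.99 after 20 rewards and makes 6 errors before shifting, whereas the "inhibited" agent reaches about 0.64 and makes 4, mirroring the proposed link between weaker reward expectancy and more labile responding.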

The effect of optogenetic inhibition in the ERROR condition requires a different explanation. Optogenetic inhibition when an incorrect response was made after a shift in contingency was sufficient to reduce the number of reversal errors. The mechanism mediating this effect presumably involves the same neurons as in the REWARD condition, but at a different time point. In the ERROR condition, inhibiting the same neurons that represent reward expectancy in the REWARD condition would likewise weaken the reward expectation signal, in this case at the time of error feedback. The weaker reward expectancy activity might result in more labile responding after a contingency shift.

We considered but rejected an alternative interpretation: that the increase in behavioral flexibility in the REWARD and ERROR conditions was caused by LED-induced neural inhibition acting as a cue that increased the effect of feedback. Such a cue might have been informative if it occurred only in association with errors in the ERROR condition, or only in association with rewards in the REWARD condition. To test this possibility we added a FEEDBACK condition in Experiment 2, in which optogenetic inhibition was given during both REWARD and ERROR epochs. This ensured that the optogenetic inhibition provided no additional information about the outcome. We found that the FEEDBACK condition also increased lose–shift behavior, resulting in fewer errors after a switch, and thus failed to support the alternative interpretation.

Considering our results in the framework of behavioral flexibility, decision-making, and reinforcement learning, it is plausible to suggest that normally the tendency to shift after a loss is reduced by an expectancy of reward. Even in the absence of a reward on the most recent trial, the integrated history of previous association of the lever press with reward may sustain responding. This would cause continued incorrect responding after errors brought about by a switch in contingencies. Inhibiting such an expectancy of reward signal, as in our experiments, would lead to more rapid switching to the correct response. Such a framework combines rule-based win–stay, lose–shift models (Worthy et al. 2012) with reinforcement learning models (Sutton and Barto 1981; Barto and Sutton 1982) by basing decisions on the recency-weighted average reward, leading to selection of the option with the greatest expected reward value (Worthy et al. 2013). Optimal behavior in such contingency switch tasks is possible using a win–stay, lose–shift strategy that repeats the response from the last trial if it was correct but switches to the alternative response if it was incorrect (Rayburn-Reeves et al. 2013). Such a strategy requires integration of the results of previous responses into subsequent responses. Our results may be explained in this framework if REWARD, ERROR, and FEEDBACK conditions result in weaker reward expectancy signals.
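Why win–stay, lose–shift is optimal here can be checked with a deterministic agent run through the schedule of 80 rewards with a reversal every 20. This is an illustrative sketch under our own assumptions: the agent starts on the rewarded lever, and the parameter `losses_to_shift` is a hypothetical stand-in for an expectancy that resists shifting after a loss (1 gives pure win–stay, lose–shift).

```python
from typing import Tuple


def other(lever: str) -> str:
    return "R" if lever == "L" else "L"


def simulate(losses_to_shift: int = 1, rewards_per_block: int = 20,
             n_blocks: int = 4, first_correct: str = "L") -> Tuple[int, int]:
    """Run a deterministic stay/shift agent through the reversal schedule.

    Returns (rewards, errors).
    """
    correct = choice = first_correct     # assume the agent starts on the rewarded lever
    rewards = errors = losses_in_row = 0
    while rewards < rewards_per_block * n_blocks:
        if choice == correct:            # win: stay on the same lever
            rewards += 1
            losses_in_row = 0
            if rewards % rewards_per_block == 0:
                correct = other(correct)  # contingency switch
        else:                            # loss: shift after enough consecutive losses
            errors += 1
            losses_in_row += 1
            if losses_in_row >= losses_to_shift:
                choice = other(choice)
                losses_in_row = 0
    return rewards, errors
```

The pure strategy (`simulate(1)`) makes exactly one error per reversal, three per session, whereas requiring three consecutive losses before shifting (`simulate(3)`) triples that to nine.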

The present findings contribute to a larger body of work in which prolonged inactivation or lesions of the NAcc shell improve behavioral flexibility in various forms, including latent inhibition, Pavlovian-instrumental transfer, and attentional set-shifting (Weiner et al. 1996; Corbit et al. 2001; Pothuizen et al. 2005a; Floresco et al. 2006). For example, Floresco et al. (2006) found that NAcc shell inhibition during the first day of discrimination learning led to improved performance (fewer trials to reach criterion) relative to controls receiving inhibition after a set switch. The improvement in shifting from the previously learned strategy was interpreted as an inhibition-induced inability of the rats to fully ignore the irrelevant stimulus during the first discrimination, making this stimulus more salient during the shift and thus reducing perseverative errors. However, Ambroggi et al. (2011) found that NAcc shell inactivation did not impair the ability to discriminate between cues, even though it reduced inhibition of responding to a nonrewarded stimulus. Our findings are consistent with Ambroggi et al. (2011) but not with Floresco et al. (2006), because we did not see increased lose–shift behavior in the ILPI or DECISION conditions. However, the firing of a subset of NAcc neurons in the delay period preceding movement is correlated with the direction of subsequent movement (Taha et al. 2007), leaving open the possibility that these neurons contribute to decisions if inactivation over both response directions leaves some differential activation.

Some effects of optogenetic inhibition of the NAcc shell may be mediated by dopamine neurons in the ventral tegmental area that receive inputs from the NAcc (Zahm and Heimer 1990; Heimer et al. 1991; Usuda et al. 1998; Aggarwal et al. 2012). Alterations in dopamine signaling have previously been associated with changes in behavioral flexibility. For example, Colpaert et al. (2007) found that systemic dopamine D2 antagonists increased win–shift behavior after a rewarded trial. Conversely, Haluk and Floresco (2009) found that infusions of the D2 agonist quinpirole directly into the NAcc impaired reversal learning without disrupting initial response learning. St Onge et al. (2011) found that injection of a D1 agonist into the prefrontal cortex biased rats' choices toward a lose–shift strategy more than in controls. Together, these studies suggest that some of the effects of NAcc shell inhibition may be mediated by dopaminergic projections to the prefrontal cortex or NAcc (Floresco et al. 2009).

In conclusion, our optogenetic experiments identify critical time segments during which NAcc shell neurons integrate the history of reward or error feedback and use this integrated history to make decisions. Inhibiting NAcc shell neurons in these critical time segments increased lose–shift behavior, thereby facilitating behavioral flexibility. The effects we observed may be explained either by reduced integration of reinforcement history, leading to weaker reward expectancy, or by disrupted readout of reward expectancy during decision making. Weaker reward expectancy signals might explain the more labile responding we observed after a contingency shift. Further work is needed to examine the natural firing patterns of the infected neurons in these critical time windows; our evidence suggests that their activity plays a key role in integrating knowledge of results into subsequent behavior and in modulating lose–shift behavior when contingencies change.

Materials and Methods

Subjects

Twenty Long Evans rats, weighing 250–275 g on arrival, were obtained from Charles River. The animals were initially housed in pairs and then housed individually after cannula implantation. They were maintained on a 12-h light–dark cycle. Rats were restricted to 15 g of chow per day with free access to water. All procedures were approved by the Okinawa Institute of Science and Technology Animal Care and Use Committee.

Virus production and purification

The pLenti-hSyn-eNpHR3.0-EYFP lentiviral vector was kindly provided by Karl Deisseroth’s lab. This vector encodes an eNpHR3.0-EYFP fusion protein under the control of the human synapsin (hSyn) promoter, which is highly specific for neurons (Kugler et al. 2003). It was used together with the packaging plasmid psPAX2 and the envelope plasmid pMD2.G in a liposome-mediated triple transfection (FuGene-6, Roche) of HEK293T cells (ATCC). After 6 h, the medium was replaced with Ultraculture serum-free medium (Lonza Bio) supplemented with 5 mM sodium butyrate, and viral particles shed from the cells were collected over 36 h. The virus-containing medium was then filtered through a 0.45-µm SFCA filter unit (Nalgene) and centrifuged for 2 h at 16,000 rpm and 4°C in a CP100WX refrigerated ultracentrifuge (Hitachi). Supernatants were discarded, and the viral pellets were resuspended in PBS and frozen at −80°C until use. Final concentrated viral titers, determined by RT-PCR with the Lenti-X qRT-PCR Titration Kit (Clontech), were 1.78 × 10¹¹ copies/mL.

Stereotaxic optic fiber implantation and virus injection

We injected pLenti-eNpHR3.0-YFP bilaterally at stereotaxic coordinates for the NAcc shell and implanted an optical fiber at each injection site. For these procedures, rats were anesthetized with a mixture of isoflurane and oxygen at a ratio of 5:1 (induction) and placed in a stereotaxic frame (David Kopf Instruments). The isoflurane-to-oxygen ratio was changed to 2:1 for the remainder of the surgical procedure. Two holes were drilled, and 1.0 µL of virus was injected with a Hamilton syringe into the NAcc shell of each hemisphere at the following coordinates relative to bregma: anterior–posterior, +1.6 mm; dorsoventral, −7.0 mm; medial–lateral, ±0.8 mm. After the injections, the two fiber-optic cannulae were inserted and anchored to the skull with stainless-steel screws and dental cement. To allow viral expression to develop fully, the animals were rested for 2 wk before behavioral training began.
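The titer and injection volume together fix the amount of virus delivered to each site. The worked calculation below assumes that the final titer of 1.78 × 10¹¹ copies/mL applies unchanged to the injected aliquot; note that an RT-PCR titer counts genome copies, not infectious units, so this is not a count of transduced cells. The constant and function names are illustrative.

```python
TITER_COPIES_PER_ML = 1.78e11  # final concentrated titer (genome copies/mL, by RT-PCR)
VOLUME_PER_SITE_UL = 1.0       # injection volume into each NAcc shell
SITES_PER_RAT = 2              # bilateral injection


def copies_injected(titer_per_ml: float, volume_ul: float) -> float:
    """Genome copies delivered in a given volume; 1 µL = 1e-3 mL."""
    return titer_per_ml * volume_ul * 1e-3


per_site = copies_injected(TITER_COPIES_PER_ML, VOLUME_PER_SITE_UL)
per_rat = SITES_PER_RAT * per_site
print(f"{per_site:.2e} copies per site, {per_rat:.2e} per rat")
# 1.78e+08 copies per site, 3.56e+08 per rat
```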

Behavioral procedures and optogenetic conditions

Rats were trained and tested in sound-attenuated testing chambers (34 × 29 × 25 cm, Med Associates), and the behavioral task was programmed using a MED-PC system. Retractable levers were fitted on the left and right walls of the chamber, with a pellet receptacle in the center. A head-entry detector was used to measure reward collection. A house light was located at the top center of the response panel, and a Sonalert attachment was mounted above the cage. Food pellets (45 mg) were delivered via a pellet dispenser (ENV-203M). Light delivery to the rat’s fiber-optic cannulae was gated by a digital logic control signal sent from the MED-PC system to an LED driver. The LED (wavelength 590 nm) was connected via a two-channel fiber-optic swivel that allowed the animal to turn freely. Two mono-fiber patch cords provided the optical connection from the swivel to the fiber-optic cannulae. All optical equipment was obtained from Doric Lenses. The optical fiber diameter was 200 µm, with a numerical aperture of 0.37. Power output at the fiber tip was 0.40 mW.
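The power, fiber diameter, and numerical aperture above determine the light dose at and below the fiber tip. As a rough sketch, the code below computes irradiance assuming the light leaves the core as a cone whose half-angle is set by the numerical aperture in tissue; this is the geometric-spread term of the model described by Aravanis et al. (2007), with scattering and absorption omitted, so values below the tip are upper bounds. The tissue refractive index of 1.36 is an assumption not given in the text, and the function name is hypothetical. At the tip this gives about 12.7 mW/mm².

```python
import math

N_TISSUE = 1.36  # assumed refractive index of brain tissue (not given in the text)


def irradiance_mw_per_mm2(power_mw: float, core_diameter_um: float, na: float,
                          depth_mm: float = 0.0, n_tissue: float = N_TISSUE) -> float:
    """Geometric-spread estimate of irradiance at depth_mm below the fiber tip.

    Light leaving the core is modeled as a cone whose half-angle is set by the
    numerical aperture in tissue. Scattering and absorption are ignored, so
    values below the tip overestimate the true irradiance.
    """
    core_radius_mm = core_diameter_um / 2000.0  # µm diameter -> mm radius
    half_angle = math.asin(na / n_tissue)
    spot_radius_mm = core_radius_mm + depth_mm * math.tan(half_angle)
    return power_mw / (math.pi * spot_radius_mm ** 2)


for depth in (0.0, 0.25, 0.5):
    value = irradiance_mw_per_mm2(0.40, 200, 0.37, depth_mm=depth)
    print(f"{depth:.2f} mm: {value:.1f} mW/mm^2")
```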

Experiment 1

We began training 2 wk after pLenti-eNpHR3.0-YFP injection and tested the rats 4–8 wk after injection. Training took place in four stages. In the first stage, fixed-ratio 1 (FR1) discrimination, rats learned to press the left or right lever (counterbalanced) for a food reward. Each correct lever press resulted in the simultaneous illumination of a visual cue, the onset of an auditory stimulus, and the delivery of a 45-mg food pellet. Rats had 90 min to complete the task and could earn up to 80 rewards. The criterion for advancing to the next stage of training was 90% discrimination accuracy. In the second stage, between-session reversal, the stimulus–reward contingencies were reversed: if presses on the left lever had previously been rewarded, presses on the right lever now became rewarded instead, and vice versa. Rats underwent 3 d of training in this stage before moving on to the third stage. In the third stage, within-session reversal, the stimulus–reward contingencies were switched twice within the same session, so that after earning 40 food pellets by pressing the left lever, rats had to press the right lever to earn the remaining 40 pellets.

In the final stage, four stimulus–reward contingencies were tested in each session. Rats obtained 20 rewards on one lever before a switch occurred, with the switching sequence counterbalanced, for a total of 80 rewards per session. Rats could press either lever at any time during a session. After 3 d of training on this paradigm, two mono-fiber patch cords from the LED were connected to the implanted fiber-optic cannulae, and the effects of optogenetic inhibition at different time points were tested. We conducted a total of 27 testing sessions, each lasting 90 min and held on a different day.
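The final-stage contingencies can be summarized as a small state machine: the rewarded lever changes after every 20 rewards until 80 have been earned, which gives three reversals per session. The sketch below is a hypothetical model of that logic under FR1 reinforcement, not the MED-PC program used in the study; the class and attribute names are illustrative, and LED timing is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class ReversalSchedule:
    """Hypothetical model of the final-stage contingencies (not the MED-PC code).

    The rewarded lever switches after every `rewards_per_block` rewards until
    `total_rewards` have been earned; with 20 and 80 this gives four blocks
    separated by three reversals.
    """
    first_lever: str = "left"      # starting lever; counterbalanced in the study
    rewards_per_block: int = 20
    total_rewards: int = 80
    rewards: int = field(init=False, default=0)
    reversals: int = field(init=False, default=0)
    correct_lever: str = field(init=False, default="")

    def __post_init__(self) -> None:
        self.correct_lever = self.first_lever

    @property
    def done(self) -> bool:
        return self.rewards >= self.total_rewards

    def press(self, lever: str) -> bool:
        """Register one lever press (FR1); return True if it earns a pellet."""
        if self.done or lever != self.correct_lever:
            return False  # error or session over: no pellet, contingency unchanged
        self.rewards += 1
        # Switch the rewarded lever at each block boundary, except at session end.
        if self.rewards % self.rewards_per_block == 0 and not self.done:
            self.correct_lever = "right" if self.correct_lever == "left" else "left"
            self.reversals += 1
        return True


# A perfectly informed agent that always presses the currently rewarded lever.
schedule = ReversalSchedule(first_lever="left")
while not schedule.done:
    schedule.press(schedule.correct_lever)
print(schedule.rewards, schedule.reversals)  # 80 3
```

A real rat, unlike the informed agent here, keeps pressing the old lever for a while after each switch; those presses are the errors analyzed in the statistics.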

To investigate the role of NAcc shell neural activity in different time segments, each rat received optogenetic inhibition on days 10–36. Optogenetic conditions were defined by the timing of LED illumination, as shown in Figure 3A.

Experiment 2

All training and testing procedures were identical to those in Experiment 1 in terms of stimulus–reward contingencies, but optogenetic conditions were changed to those shown in Figure 3B.

Histology

Expression of pLenti-eNpHR3.0-YFP and the location of the optical fiber tips in the NAcc shell were confirmed histologically in all animals at the end of the experiments (Fig. 1). After the optogenetic behavioral experiments, all animals were killed by injection of pentobarbital and perfused transcardially with PBS/heparin (60 U/mL) followed by 4% PFA. The brains were removed and postfixed in 4% PFA overnight. The following day, the PFA was discarded and the brains were immersed overnight in 20% sucrose in PBS. Brains were cut into 60-µm-thick sections on a freezing microtome (Yamato), and the sections were stored in PBS at 4°C.

Immunocytochemistry

To determine the efficiency of eNpHR3.0-YFP expression in NAcc medium spiny neurons, slices were permeabilized and quenched with 0.05 M NH4Cl and 0.02% (w/v) saponin in PBS for 15 min and blocked for 1 h with PGAS containing 2% goat serum (Jackson) and 1% (w/v) BSA. For double staining of eNpHR and DARPP-32, sections were incubated overnight at 4°C with chicken-derived anti-GFP (1:2000, Abcam) and rabbit-derived anti-DARPP-32 (1:1000, Chemicon) antibodies. The following day, after PGAS washes to remove unbound primary antibodies, the bound antibodies were labeled with goat-derived anti-chicken Alexa 488 and anti-rabbit Alexa 594 conjugated secondary antibodies (both at 1:500, Invitrogen) for 4 h at 25°C. After the secondary antibodies were washed off with PGAS and PBS, slices were mounted on slides with Vectashield (Vector Labs), and images were acquired with a Zeiss LSM 510 Meta confocal microscope. We quantified infection efficacy by counting the YFP-positive cells that were also DARPP-32 positive (double labeled). The analysis was applied to each NAcc shell section (N = 4) in which YFP was present within a counting frame (200 × 200 × 50 µm). The total number of double-labeled (YFP+/DARPP-32+) cells was compared with the total number of YFP-positive cells.
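The quantification above reduces to a pooled ratio: total double-labeled cells divided by total YFP-positive cells across the counted sections. The sketch below assumes the counts are summed before dividing, as the text's comparison of totals suggests, and also converts the counting frame to a volume, which would be needed to express counts as densities. Function names and the example counts are hypothetical, not data from this study.

```python
from typing import Iterable, Tuple

COUNTING_FRAME_UM = (200, 200, 50)  # x, y, z dimensions of the counting frame


def pooled_double_label_percent(sections: Iterable[Tuple[int, int]]) -> float:
    """Percentage of YFP+ cells that are also DARPP-32+, pooled over sections.

    `sections` holds (double_labeled, yfp_positive) counts, one pair per section;
    totals are summed before dividing, as the text compares total counts.
    """
    total_double = total_yfp = 0
    for double_labeled, yfp_positive in sections:
        if not 0 <= double_labeled <= yfp_positive:
            raise ValueError("double-labeled count must lie between 0 and the YFP+ count")
        total_double += double_labeled
        total_yfp += yfp_positive
    if total_yfp == 0:
        raise ValueError("no YFP-positive cells were counted")
    return 100.0 * total_double / total_yfp


def frame_volume_mm3(frame_um: Tuple[int, int, int] = COUNTING_FRAME_UM) -> float:
    """Volume of one counting frame in mm^3 (1 mm^3 = 1e9 µm^3)."""
    x, y, z = frame_um
    return x * y * z / 1e9


# Hypothetical counts for four sections, for illustration only.
print(pooled_double_label_percent([(45, 50), (38, 40), (52, 60), (29, 30)]))
print(frame_volume_mm3())  # 0.002
```

Pooling weights each section by its number of YFP-positive cells; averaging per-section percentages instead would weight every section equally.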

Electrophysiology

Electrophysiological studies were performed 2 wk after bilateral injection of pLenti-eNpHR3.0-YFP at 5 wk, the time point at which training began in behaviorally tested rats. To prepare brain slices for electrophysiology, coronal sections (300 µm thick) containing the NAcc were cut on a VT1000S vibrating microtome (Leica) in cold modified artificial CSF (ACSF) containing 50 mM NaCl, 2.5 mM KCl, 7 mM MgCl2, 0.5 mM CaCl2, 1.25 mM NaH2PO4, 25 mM NaHCO3, 95 mM sucrose, and 25 mM glucose, saturated with 95% O2/5% CO2. Slices were then incubated in oxygenated standard ACSF containing 120 mM NaCl, 2.5 mM KCl, 2 mM CaCl2, 1 mM MgCl2, 25 mM NaHCO3, 1.25 mM NaH2PO4, and 15 mM glucose. After 1–4 h of recovery, slices were transferred to a recording chamber and perfused with standard ACSF (3–4 mL/min, 30°C).

Before recording, eNpHR-expressing neurons were identified by YFP fluorescence. Whole-cell current-clamp recordings were made from eNpHR-YFP-positive neurons in the NAcc using patch pipettes (2–4 MΩ) filled with internal solution (115 mM K-gluconate, 1.2 mM MgCl2, 10 mM HEPES, 4 mM ATP, 0.3 mM GTP, 0.5% biocytin, pH 7.2–7.4). Voltage responses were amplified with a Multiclamp 700B amplifier and digitized at 10 kHz. Light activation of eNpHR was achieved by epifluorescence illumination (100-W xenon arc lamp, 560-nm excitation filter; Semrock FF01-562/40) gated by a Uniblitz VS25 shutter under through-the-lens control.

Statistical analyses

Statistical analyses were performed with SPSS version 18 (SPSS Inc). Data were analyzed using one-way repeated-measures analysis of variance (ANOVA). For each optogenetic manipulation, errors were totaled over three sessions (three sessions per LED condition) and classified into two measures: errors during the reversal phase, defined as the total number of lever-press errors made after a reversal until the first correct response, summed over the three reversals; and errors before the reversal, defined as the total number of lever-press errors excluding those made in the period after a reversal until the first correct response. When a main effect was found, post hoc pairwise comparisons were conducted with Bonferroni correction. P < 0.05 was considered significant.
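The two error measures can be made precise with a short function. The sketch below assumes each session is logged as a chronological list of (block index, correct) pairs, with the block index increasing by one at each reversal; that representation, the function names, and the example fragment are hypothetical. It also shows the Bonferroni adjustment used for the post hoc comparisons (each p value multiplied by the number of comparisons, capped at 1), but does not reimplement the repeated-measures ANOVA itself.

```python
from typing import List, Sequence, Tuple


def count_errors(presses: Sequence[Tuple[int, bool]]) -> Tuple[int, int]:
    """Split lever-press errors into (reversal_errors, pre_reversal_errors).

    `presses` is a chronological list of (block_index, correct) pairs, where
    block_index increases by one at each contingency reversal (0 = first block).
    Reversal errors are errors made after a reversal until the first correct
    press; every other error is counted as a pre-reversal error.
    """
    reversal_errors = pre_reversal_errors = 0
    current_block = 0
    after_reversal = False
    for block, correct in presses:
        if block != current_block:  # the contingency has just reversed
            current_block = block
            after_reversal = True
        if correct:
            after_reversal = False  # first correct press closes the reversal window
        elif after_reversal:
            reversal_errors += 1
        else:
            pre_reversal_errors += 1
    return reversal_errors, pre_reversal_errors


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni-adjusted p values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical session fragment spanning two reversals (not data from this study).
fragment = [(0, True), (0, False), (0, True),
            (1, False), (1, False), (1, True), (1, False),
            (2, False), (2, True)]
print(count_errors(fragment))  # (3, 2)
print(bonferroni([0.01, 0.02, 0.4]))
```

Totals for an LED condition would then be obtained by summing the per-session tuples over its three sessions.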

Acknowledgments

We thank M. Aggarwal and J. Johansen for helpful discussions, and C. Vickers for technical assistance with electrophysiology recording.

Footnotes

[Supplemental material is available for this article.]

Freely available online through the Learning & Memory Open Access option.

References

- Aggarwal M, Hyland BI, Wickens JR 2012. Neural control of dopamine neurotransmission: implications for reinforcement learning. Eur J Neurosci 35: 1115–1123

- Ambroggi F, Ghazizadeh A, Nicola SM, Fields HL 2011. Roles of nucleus accumbens core and shell in incentive-cue responding and behavioral inhibition. J Neurosci 31: 6820–6830

- Aravanis AM, Wang LP, Zhang F, Meltzer LA, Mogri MZ, Schneider MB, Deisseroth K 2007. An optical neural interface: in vivo control of rodent motor cortex with integrated fiberoptic and optogenetic technology. J Neural Eng 4: S143–S156

- Arenkiel BR, Peca J, Davison IG, Feliciano C, Deisseroth K, Augustine GJ, Ehlers MD, Feng GP 2007. In vivo light-induced activation of neural circuitry in transgenic mice expressing channelrhodopsin-2. Neuron 54: 205–218

- Barto AG, Sutton RS 1982. Simulation of anticipatory responses in classical conditioning by a neuron-like adaptive element. Behav Brain Res 4: 221–235

- Bertran-Gonzalez J, Bosch C, Maroteaux M, Matamales M, Herve D, Valjent E, Girault JA 2008. Opposing patterns of signaling activation in dopamine D1 and D2 receptor-expressing striatal neurons in response to cocaine and haloperidol. J Neurosci 28: 5671–5685

- Bitterman ME 1975. The comparative analysis of learning. Science 188: 699–709

- Bowman EM, Aigner TG, Richmond BJ 1996. Neural signals in the monkey ventral striatum related to motivation for juice and cocaine rewards. J Neurophysiol 75: 1061–1073

- Cardinal RN, Cheung TH 2005. Nucleus accumbens core lesions retard instrumental learning and performance with delayed reinforcement in the rat. BMC Neurosci 6: 9

- Carelli RM, Deadwyler SA 1994. A comparison of nucleus accumbens neuronal firing patterns during cocaine self-administration and water reinforcement in rats. J Neurosci 14: 7735–7746

- Carelli RM, Ijames SG, Crumling AJ 2000. Evidence that separate neural circuits in the nucleus accumbens encode cocaine versus “natural” (water and food) reward. J Neurosci 20: 4255–4266

- Colpaert F, Koek W, Kleven M, Besnard J 2007. Induction by antipsychotics of “win–shift” in the drug discrimination paradigm. J Pharmacol Exp Ther 322: 288–298

- Cools R, Lewis SJG, Clark L, Barker RA, Robbins TW 2006. L-dopa disrupts activity in the nucleus accumbens during reversal learning in Parkinson’s disease. Neuropsychopharmacology 32: 180–189

- Corbit LH, Muir JL, Balleine BW 2001. The role of the nucleus accumbens in instrumental conditioning: evidence of a functional dissociation between accumbens core and shell. J Neurosci 21: 3251–3260

- Cressant A, Besson M, Suarez S, Cormier A, Granon S 2007. Spatial learning in Long-Evans Hooded rats and C57BL/6J mice: different strategies for different performance. Behav Brain Res 177: 22–29

- Cromwell HC, Schultz W 2003. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. J Neurophysiol 89: 2823–2838

- Day JJ, Wheeler RA, Roitman MF, Carelli RM 2006. Nucleus accumbens neurons encode Pavlovian approach behaviors: evidence from an autoshaping paradigm. Eur J Neurosci 23: 1341–1351

- Floresco SB, Ghods-Sharifi S, Vexelman C, Magyar O 2006. Dissociable roles for the nucleus accumbens core and shell in regulating set shifting. J Neurosci 26: 2449–2457

- Floresco SB, McLaughlin RJ, Haluk DM 2008. Opposing roles for the nucleus accumbens core and shell in cue-induced reinstatement of food-seeking behavior. Neuroscience 154: 877–884

- Floresco SB, Zhang Y, Enomoto T 2009. Neural circuits subserving behavioral flexibility and their relevance to schizophrenia. Behav Brain Res 204: 396–409

- Gradinaru V, Thompson KR, Zhang F, Mogri M, Kay K, Schneider MB, Deisseroth K 2007. Targeting and readout strategies for fast optical neural control in vitro and in vivo. J Neurosci 27: 14231–14238

- Gradinaru V, Thompson KR, Deisseroth K 2008. eNpHR: a natronomonas halorhodopsin enhanced for optogenetic applications. Brain Cell Biol 36: 129–139

- Granon S, Floresco SB 2009. Functional neuroanatomy of flexible behaviors in mice and rats. In Endophenotypes of psychiatric and neurodegenerative disorders in rodent models (ed. Granon S), pp. 83–103. Transworld Research Network, Kerala, India

- Gunaydin LA, Yizhar O, Berndt A, Sohal VS, Deisseroth K, Hegemann P 2010. Ultrafast optogenetic control. Nat Neurosci 13: 387–392

- Haluk DM, Floresco SB 2009. Ventral striatal dopamine modulation of different forms of behavioral flexibility. Neuropsychopharmacology 34: 2041–2052

- Han X, Boyden ES 2007. Multiple-color optical activation, silencing, and desynchronization of neural activity, with single-spike temporal resolution. PLoS One 2: e299

- Heimer L, Zahm DS, Churchill L, Kalivas PW, Wohltmann C 1991. Specificity in the projection patterns of accumbal core and shell in the rat. Neuroscience 41: 89–125

- Hollerman JR, Tremblay L, Schultz W 2000. Involvement of basal ganglia and orbitofrontal cortex in goal-directed behavior. Prog Brain Res 126: 193–215

- Ito R, Robbins TW, Everitt BJ 2004. Differential control over cocaine-seeking behavior by nucleus accumbens core and shell. Nat Neurosci 7: 389–397

- Jongen-Relo AL, Kaufmann S, Feldon J 2002. A differential involvement of the shell and core subterritories of the nucleus accumbens of rats in attentional processes. Neuroscience 111: 95–109

- Kawaguchi Y, Wilson CJ, Augood SJ, Emson PC 1995. Striatal interneurones: chemical, physiological and morphological characterization. Trends Neurosci 18: 527–535

- Kehagia AA, Murray GK, Robbins TW 2010. Learning and cognitive flexibility: frontostriatal function and monoaminergic modulation. Curr Opin Neurobiol 20: 199–204

- Koos T, Tepper JM 1999. Inhibitory control of neostriatal projection neurons by GABAergic interneurons. Nat Neurosci 2: 467–472

- Koos T, Tepper JM, Wilson CJ 2004. Comparison of IPSCs evoked by spiny and fast-spiking neurons in the neostriatum. J Neurosci 24: 7916–7922

- Kubota Y, Kawaguchi Y 2000. Dependence of GABAergic synaptic areas on the interneuron type and target size. J Neurosci 20: 375–386

- Kugler S, Kilic E, Bahr M 2003. Human synapsin 1 gene promoter confers highly neuron-specific long-term transgene expression from an adenoviral vector in the adult rat brain depending on the transduced area. Gene Ther 10: 337–347

- Lansink CS 2008. “The ventral striatum in goal-directed behavior and sleep: intrinsic network dynamics, motivational information and relation with the hippocampus.” PhD thesis, Swammerdam Institute for Life Sciences, Amsterdam, The Netherlands

- Liu X, Tonegawa S 2010. Optogenetics 3.0. Cell 141: 22–24

- Lobo MK, Covington HE III, Chaudhury D, Friedman AK, Sun H, Damez-Werno D, Dietz DM, Zaman S, Koo JW, Kennedy PJ, et al. 2010. Cell type-specific loss of BDNF signaling mimics optogenetic control of cocaine reward. Science 330: 385–390

- Matamales M, Bertran-Gonzalez J, Salomon L, Degos B, Deniau JM, Valjent E, Herve D, Girault JA 2009. Striatal medium-sized spiny neurons: identification by nuclear staining and study of neuronal subpopulations in BAC transgenic mice. PLoS One 4: e4770

- Nakamura S, Baratta MV, Cooper DC 2013. A method for high fidelity optogenetic control of individual pyramidal neurons in vivo. J Vis Exp 77: e50142

- O’Neill M, Brown VJ 2007. The effect of striatal dopamine depletion and the adenosine A2A antagonist KW-6002 on reversal learning in rats. Neurobiol Learn Mem 88: 75–81

- Oorschot DE 1996. Total number of neurons in the neostriatal, pallidal, subthalamic, and substantia nigral nuclei of the rat basal ganglia: a stereological study using the cavalieri and optical disector methods. J Comp Neurol 366: 580–599

- Parkinson JA, Olmstead MC, Burns LH, Robbins TW, Everitt BJ 1999. Dissociation in effects of lesions of the nucleus accumbens core and shell on appetitive Pavlovian approach behavior and the potentiation of conditioned reinforcement and locomotor activity by D-amphetamine. J Neurosci 19: 2401–2411

- Pothuizen HH, Jongen-Relo AL, Feldon J 2005a. The effects of temporary inactivation of the core and the shell subregions of the nucleus accumbens on prepulse inhibition of the acoustic startle reflex and activity in rats. Neuropsychopharmacology 30: 683–696

- Pothuizen HH, Jongen-Relo AL, Feldon J, Yee BK 2005b. Double dissociation of the effects of selective nucleus accumbens core and shell lesions on impulsive-choice behaviour and salience learning in rats. Eur J Neurosci 22: 2605–2616

- Pothuizen HHJ, Jongen-Rêlo AL, Feldon J, Yee BK 2005c. Double dissociation of the effects of selective nucleus accumbens core and shell lesions on impulsive-choice behaviour and salience learning in rats. Eur J Neurosci 22: 2605–2616

- Pothuizen HH, Jongen-Relo AL, Feldon J, Yee BK 2006. Latent inhibition of conditioned taste aversion is not disrupted, but can be enhanced, by selective nucleus accumbens shell lesions in rats. Neuroscience 137: 1119–1130

- Rajput PS, Kharmate G, Somvanshi RK, Kumar U 2009. Colocalization of dopamine receptor subtypes with dopamine and cAMP-regulated phosphoprotein (DARPP-32) in rat brain. Neurosci Res 65: 53–63

- Rayburn-Reeves RM, Laude JR, Zentall TR 2013. Pigeons show near-optimal win–stay/lose–shift performance on a simultaneous-discrimination, midsession reversal task with short intertrial intervals. Behav Processes 92: 65–70

- Reynolds JN, Wickens JR 2000. Substantia nigra dopamine regulates synaptic plasticity and membrane potential fluctuations in the rat neostriatum, in vivo. Neuroscience 99: 199–203

- Reynolds JN, Wickens JR 2002. Dopamine-dependent plasticity of corticostriatal synapses. Neural Netw 15: 507–521

- Reynolds JN, Hyland BI, Wickens JR 2001. A cellular mechanism of reward-related learning. Nature 413: 67–70

- Roitman MF, Wheeler RA, Carelli RM 2005. Nucleus accumbens neurons are innately tuned for rewarding and aversive taste stimuli, encode their predictors, and are linked to motor output. Neuron 45: 587–597

- Stefanik MT, Moussawi K, Kupchik YM, Smith KC, Miller RL, Huff ML, Deisseroth K, Kalivas PW, LaLumiere RT 2012. Optogenetic inhibition of cocaine seeking in rats. Addict Biol 18: 50–53

- Steinberg EE, Keiflin R, Boivin JR, Witten IB, Deisseroth K, Janak PH 2013. A causal link between prediction errors, dopamine neurons and learning. Nat Neurosci 16: 966–973

- Stern CE, Passingham RE 1995. The nucleus accumbens in monkeys (Macaca fascicularis). Exp Brain Res 106: 239–247

- St Onge JR, Abhari H, Floresco SB 2011. Dissociable contributions by prefrontal D1 and D2 receptors to risk-based decision making. J Neurosci 31: 8625–8633

- Sutton RS, Barto AG 1981. Toward a modern theory of adaptive networks: expectation and prediction. Psychol Rev 88: 135–170

- Taha SA, Nicola SM, Fields HL 2007. Cue-evoked encoding of movement planning and execution in the rat nucleus accumbens. J Physiol 584: 801–818

- Tepper JM, Koos T, Wilson CJ 2004. GABAergic microcircuits in the neostriatum. Trends Neurosci 27: 662–669

- Tsai HC, Zhang F, Adamantidis A, Stuber GD, Bonci A, de Lecea L, Deisseroth K 2009. Phasic firing in dopaminergic neurons is sufficient for behavioral conditioning. Science 324: 1080–1084

- Tye KM, Mirzabekov JJ, Warden MR, Ferenczi EA, Tsai HC, Finkelstein J, Kim SY, Adhikari A, Thompson KR, Andalman AS, et al. 2012. Dopamine neurons modulate neural encoding and expression of depression-related behaviour. Nature 493: 537–541

- Usuda I, Tanaka K, Chiba T 1998. Efferent projections of the nucleus accumbens in the rat with special reference to subdivision of the nucleus: biotinylated dextran amine study. Brain Res 797: 73–93

- Weiner I, Gal G, Rawlins JN, Feldon J 1996. Differential involvement of the shell and core subterritories of the nucleus accumbens in latent inhibition and amphetamine-induced activity. Behav Brain Res 81: 123–133

- Whishaw IQ 1995. A comparison of rats and mice in a swimming pool place task and matching to place task: some surprising differences. Physiol Behav 58: 687–693

- Wickens JR, Wilson CJ 1998. Regulation of action potential firing in spiny neurons of the rat neostriatum, in vivo. J Neurophysiol 79: 2358–2364

- Witten IB, Steinberg EE, Lee SY, Davidson TJ, Zalocusky KA, Brodsky M, Yizhar O, Cho SL, Gong S, Ramakrishnan C, et al. 2011. Recombinase-driver rat lines: tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron 72: 721–733

- Wood DA, Walker TL, Rebec GV 2011. Experience-dependent changes in neuronal processing in the nucleus accumbens shell in a discriminative learning task in differentially housed rats. Brain Res 1390: 90–98

- Worthy DA, Otto AR, Maddox WT 2012. Working-memory load and temporal myopia in dynamic decision making. J Exp Psychol Learn Mem Cogn 38: 1640–1658

- Worthy DA, Hawthorne MJ, Otto AR 2013. Heterogeneity of strategy use in the Iowa gambling task: a comparison of win-stay/lose-shift and reinforcement learning models. Psychon Bull Rev 20: 364–371

- Yizhar O, Fenno LE, Davidson TJ, Mogri M, Deisseroth K 2011. Optogenetics in neural systems. Neuron 71: 9–34

- Zahm DS, Heimer L 1990. Two transpallidal pathways originating in the rat nucleus accumbens. J Comp Neurol 302: 437–446

- Zhang F, Wang LP, Brauner M, Liewald JF, Kay K, Watzke N, Wood PG, Bamberg E, Nagel G, Gottschalk A, et al. 2007. Multimodal fast optical interrogation of neural circuitry. Nature 446: 633–639

- Zhang F, Prigge M, Beyriere F, Tsunoda SP, Mattis J, Yizhar O, Hegemann P, Deisseroth K 2008. Red-shifted optogenetic excitation: a tool for fast neural control derived from Volvox carteri. Nat Neurosci 11: 631–633

- Zhang F, Gradinaru V, Adamantidis AR, Durand R, Airan RD, de Lecea L, Deisseroth K 2010. Optogenetic interrogation of neural circuits: technology for probing mammalian brain structures. Nat Protoc 5: 439–456