Abstract

Memories relating to a painful, negative event are adaptive and can be stored for a lifetime to support preemptive avoidance, escape, or attack behavior. However, under unfavorable circumstances such memories can become overwhelmingly powerful. They may trigger excessively negative psychological states and uncontrollable avoidance of locations, objects, or social interactions. It is therefore obvious that any process to counteract such effects will be of value. In this context, we stress from a basic-research perspective that painful, negative events are “Janus-faced” in the sense that there are actually two aspects about them that are worth remembering: What made them happen and what made them cease. We review published findings from fruit flies, rats, and man showing that both aspects, respectively related to the onset and the offset of the negative event, induce distinct and oppositely valenced memories: Stimuli experienced before an electric shock acquire negative valence as they signal upcoming punishment, whereas stimuli experienced after an electric shock acquire positive valence because of their association with the relieving cessation of pain. We discuss how memories for such punishment- and relief-learning are organized, how this organization fits into the threat-imminence model of defensive behavior, and what perspectives these considerations offer for applied psychology in the context of trauma, panic, and nonsuicidal self-injury.

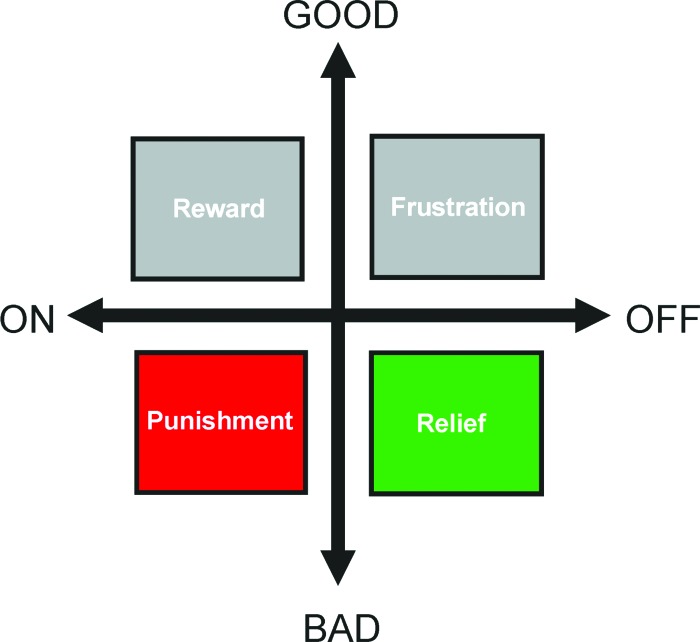

The acknowledged “negative” mnemonic effects of adverse experiences mostly relate to what happens before the onset of an aversive, painful event. However, there is a less widely acknowledged type of memory that relates to what happens after the offset of or after escape from such a painful event, at the moment of “relief” (Fig. 1) (we use “relief” to refer specifically to the acute effects of punishment offset; an equally legitimate yet broader use of the word in, e.g., “fear relief,” encompasses any process that eases fear [Riebe et al. 2012]). Indeed, in experimental settings, it turns out that stimuli experienced before and during a punishing episode are later avoided as they signal upcoming punishment, whereas stimuli experienced after a painful episode can subsequently prompt approach behavior, arguably (Box 1) because of their association with the relieving cessation of pain (Konorski 1948; Smith and Buchanan 1954; Wolpe and Lazarus 1966; Zanna et al. 1970; Solomon and Corbit 1974; Schull 1979; Solomon 1980; Wagner 1981; Walasek et al. 1995; Tanimoto et al. 2004; Yarali et al. 2008, 2009b; Andreatta et al. 2010, 2012; Yarali and Gerber 2010; Ilango et al. 2012; Navratilova et al. 2012; Diegelmann et al. 2013b); for a corresponding finding in the appetitive domain, see Hellstern et al. (1998) and Felsenberg et al. (2013). Such relief can both support the learning of the cues associated with the disappearance of the threat and reinforce those behaviors that helped to escape it. Obviously, the positive conditioned valence of and ensuing learned approach behavior toward such cues would decrease the probability of encountering the threat again and/or keep exposure time to a minimum. We review the literature of what is known, and discuss what should be asked, about the mechanisms of such punishment- and relief-learning in the fruit fly Drosophila, as well as in rat and in man, as in these three species fairly concordant approaches have been taken. 
This is timely, because despite the rich literature on punishment–learning (e.g., for reviews regarding Drosophila, see: Dubnau and Tully 2001; Heisenberg 2003; Gerber et al. 2004; Davis 2005; Keene and Waddell 2007; Kahsai and Zars 2011; regarding Aplysia, see: Lechner and Byrne 1998; Baxter and Byrne 2006; Benjamin et al. 2008; regarding rodents, see: Davis et al. 1993; Fendt and Fanselow 1999; LeDoux 2000; Maren 2001; Christian and Thompson 2003; Fanselow and Poulos 2005; Pape and Pare 2010; regarding monkeys, see: Davis et al. 2008; regarding humans, see: Rosen and Schulkin 1998; Öhman and Mineka 2001; Delgado et al. 2006; Öhman 2008; Davis et al. 2009; Milad and Quirk 2012), little is known about the neurobiological mechanisms or the psychological corollaries of relief-learning. Such knowledge would be important also from an applied perspective: The more distinct the underlying processes of punishment- and relief-learning are, the more likely they contribute independently to pathology, and the easier it will be to selectively interfere with either of them.

Figure 1.

Event-timing and valence. For the “Good” and the “Bad” things in life, two aspects matter in particular: What made them happen? What made them cease? The diagram illustrates that the On-set of something good (top left; e.g., finding food, a salary increase) can act as a reward, while the On-set of something bad (bottom left; e.g., being stung by a bee, being sent to prison) can act as punishment. In turn, however, the Off-set of pain upon cooling the sting or release from prison entails an oppositely valenced aspect, relief (bottom right); likewise, having your lollipop pilfered as a kid or experiencing a salary cut entails negatively valenced frustration (top right). This review is chiefly concerned with the mnemonic consequences of punishment (red color code throughout) and relief (green color code throughout). We consistently plot those behavioral measures toward the top of the y-axes that indicate positive valence, and consistently plot measures indicating negative valence toward the bottom of the y-axes. Please note that despite detailed coverage of the good and the bad, the ugly is ignored throughout (Leone 1966).

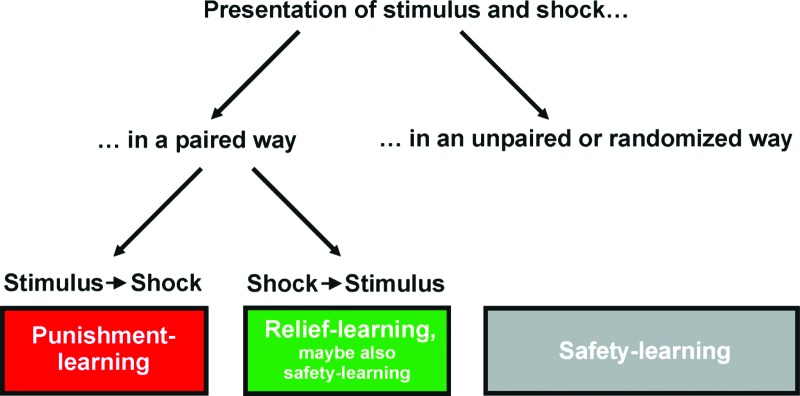

BOX 1. Relief-learning—safety-learning.

We use the term relief-learning specifically to imply the learning of an association between a stimulus and the offset of punishment. It was Konorski (1948) who initially proposed that the occurrence of a stimulus during the falling phase of a punishment signal could result in a positively valenced memory for this stimulus. In other words, in addition to their punishing effect, punishments may induce a delayed state of relief supporting positively valenced memories.

An alternative view is that repeated exposure to the punishment within the experimental context establishes this context as a dangerous one. Within such a dangerous context, a stimulus that is presented in an explicitly unpaired manner with the punishment could come to signal a subsequent period of safety. In other words, the actual absence of a contextually predicted punishment during and after the stimulus can lead to a positive prediction error (“better-than-expected”), supporting a positively valenced memory for it (Rescorla 1988 and references therein).

Safety-learning, but not relief-learning, would thus be possible with unpaired or temporally randomized presentations of stimulus and punishment (for review, see Kong et al. 2013). In such safety-learning procedures, the stimulus becomes a conditioned inhibitor predicting the nonoccurrence of the punishment and can reduce contextual fear, increase the exploration of unprotected environments, act as a positive secondary reinforcer, reduce immobility in the forced swim test, ameliorate the consequences of chronic mild stress, and promote neurogenesis (Rogan et al. 2005; Pollak et al. 2008; Christianson et al. 2012). Notably, after safety-learning of an auditory stimulus, evoked auditory potentials are reduced below baseline in the lateral amygdala but increased in the caudoputamen (Rogan et al. 2005). At the same time, there is an activation of inhibitory pathways inside the amygdala that is different from those activations underlying fear extinction (Amano et al. 2010). No amygdaloid effects are found for relief-learning, however (see main text) (Andreatta et al. 2012). We finally note that neurons in the basolateral amygdala that show increased firing to a safety-learned stimulus appear to partially overlap with those activated by reward-related stimuli (Sangha et al. 2013).

Returning to procedures that consistently present the stimulus shortly after punishment, the issue is that under these conditions theoretically relief- as well as safety-learning could occur (see Fig. 2). How can one, in these cases, experimentally discriminate between relief- and safety-based explanations (see also discussions in Wagner and Larew 1985; Malaka 1999)?

In a repetitive training design, safety-learning should monotonically decrease as the inter-stimulus interval (ISI) lengthens, because the stimulus moves closer in time toward the next punishment, shortening the following safety period. In most preparations looked at, the ISI-dependency appears bell-shaped (Plotkin and Oakley 1975; Maier et al. 1976; Tanimoto et al. 2004; for related findings, see Hellstern et al. 1998; Franklin et al. 2013a; for a counter-example, see Moscovitch and LoLordo 1968), fitting more naturally to a relief-based explanation, as the relief signal peaks shortly after punishment offset. However, the differences in the shape of the ISI-functions as proposed by relief- and safety-based models are subtle (Malaka 1999 loc. cit. Figs. 4b,5) and difficult to ascertain experimentally, in particular when the learning scores are low.
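The qualitative contrast drawn here, a monotonic decline for safety-learning versus a bell-shaped dependency for relief-learning, can be illustrated with a toy sketch. This is not the model of Malaka (1999) or of any cited study: the linear "remaining safe time" function, the Gaussian "relief bump", and all parameter values (`cycle_s`, `peak_s`, `width_s`) are assumptions chosen only to make the two shapes visible.

```python
import math

def safety_score(isi_s: float, cycle_s: float = 100.0) -> float:
    """Toy safety account: score is the fraction of the inter-punishment
    cycle that remains punishment-free after stimulus onset (assumption)."""
    if not 0.0 <= isi_s <= cycle_s:
        raise ValueError("ISI must lie within one punishment cycle")
    return (cycle_s - isi_s) / cycle_s

def relief_score(isi_s: float, peak_s: float = 20.0, width_s: float = 10.0) -> float:
    """Toy relief account: a bump that peaks shortly after punishment
    offset (Gaussian shape and parameters are arbitrary assumptions)."""
    return math.exp(-((isi_s - peak_s) ** 2) / (2.0 * width_s ** 2))

# Arbitrary ISIs in seconds, measured from punishment to stimulus
isis = [0, 10, 20, 40, 80]
safety = [safety_score(t) for t in isis]
relief = [relief_score(t) for t in isis]

for t, s, r in zip(isis, safety, relief):
    print(f"ISI {t:3d} s  safety {s:.2f}  relief {r:.2f}")
```

With scores this idealized the two shapes are easy to tell apart; the difficulty noted in the text arises because real learning scores are low and noisy, so that a shallow bell and a shallow decline can look alike.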

Safety-learning arguably requires multiple training trials, because only when the context is sufficiently “charged” by previous trials will there be a positive prediction error during the subsequent trials, and the stimulus can become a safety-signal. While a single presentation of a stimulus with punishment offset can result in a positively valenced memory (e.g., Wagner and Larew 1985; for related findings, see Hellstern et al. 1998; Franklin et al. 2013a), in most cases multiple pairings are required (Heth 1976 and references therein; for Drosophila, see Yarali et al. 2008). Note that, unless one assumes implausibly rapid context-learning, the sufficiency of a single training trial argues against a safety-based explanation. A requirement for multiple trials, on the other hand, is consistent with both relief- and safety-based explanations (for detailed discussions, see Heth 1976; Wagner and Larew 1985; Malaka 1999).

As noted, safety-learning should rely on the value of the experimental context as a signal for the punishment. In rats, Chang et al. (2003) argued for such context-dependency and thus for a safety-based explanation: An extinction treatment for the context–punishment association diminished the effect of prior punishment–stimulus training. However, following a very similar reasoning, Yarali et al. (2008) tested in a Drosophila paradigm whether the initial punishment–stimulus pairings of a multiple-trial training session can be substituted by mere exposure to punishment within the experimental context. This was not found to be the case, offering no support for a safety-based explanation. Neither argument is particularly strong, though, because Chang et al. (2003) did not actually demonstrate extinction of the context–punishment association, and because in flies direct evidence for contextual learning under the employed conditions is lacking. We note that in honeybee learning using odors and a sugar reward, changing the context during the inter-stimulus interval leaves scores unaffected, also failing to provide evidence for context-mediation (Hellstern et al. 1998).

Obviously, these experimental strategies have given mixed or tentative results across studies, paradigms, and species, making firm and general conclusions premature. By our assessment, however, the relief-based explanation seems to be in the lead at this point—when it comes to grasping the mnemonic processes related to punishment offset.

We note that despite the above-sketched conceptual dichotomy, animals may well have the capacity for both relief- and safety-learning, so the parametric boundary conditions for their respective operation and their underlying mechanisms would need to be clarified. Indeed, one can imagine experiments that would parametrically turn experimental procedures ideal for relief-learning (pairing of the stimulus with punishment offset) into paradigms optimal for safety-learning (unpaired presentations of punishment and stimulus).

Figure 2.

Fly

Punishment- and reward-learning

When flies receive an odor followed by an electric shock, they subsequently avoid this odor because it predicts shock (Quinn et al. 1974; Tully and Quinn 1985). Specifically, a two-group reciprocal-training paradigm is used (Fig. 3): One group of flies receives odor A followed by electric shock (denoted as “–”), whereas odor B is presented alone (A–/B). The second group of flies receives reciprocal training (A/B–). Then, both groups are tested in a forced-choice situation for their relative preferences between A and B. It turns out that punishment of A tips the balance between A and B in favor of B, while punishment of B biases choice in favor of A. An associative learning index (abbreviated as LI in Figs. 3, 5 [below]) is then calculated on the basis of this difference in preference between the two reciprocally trained groups. In addition to such punishment-learning, an appetitive version of the paradigm is available which uses sugar as reward (Tempel et al. 1983): When flies receive an odor together with a sugar reward, they subsequently show an increase in preference for this odor because it predicts reward. Thus, the paradigm is “bivalent” in the sense that it can reveal both decreases in odor preference after punishment-learning, and increases in odor preference after reward-learning. This bivalent nature of the task is essential for the ensuing discussion.
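As a minimal sketch of how such a learning index can be computed from the two reciprocally trained groups: the text states only that the index is based on the difference in preference between the groups, so the specific preference formula, the halving of the difference, and the fly counts below are assumptions for illustration (see Yarali et al. 2008 for the procedure actually used). The function names are hypothetical.

```python
def preference(n_a: int, n_b: int) -> float:
    """Relative preference for odor A over odor B, scaled to -100..100."""
    total = n_a + n_b
    if total == 0:
        raise ValueError("no flies counted in either test tube")
    return 100.0 * (n_a - n_b) / total

def learning_index(pref_a_trained: float, pref_b_trained: float) -> float:
    """Half the difference in A-preference between the group trained with
    odor A and the reciprocal group trained with odor B (assumed formula)."""
    return (pref_a_trained - pref_b_trained) / 2.0

# Hypothetical counts, NOT data from any cited study.
pref_group1 = preference(n_a=20, n_b=60)  # group 1: odor A was punished
pref_group2 = preference(n_a=60, n_b=20)  # group 2: odor B was punished

li = learning_index(pref_group1, pref_group2)
print(li)  # negative value: conditioned avoidance of the punished odor
```

Because the index is a difference between reciprocal groups, any innate bias toward one of the two odors shifts both preference scores in the same direction and cancels out, which is the point of the reciprocal design.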

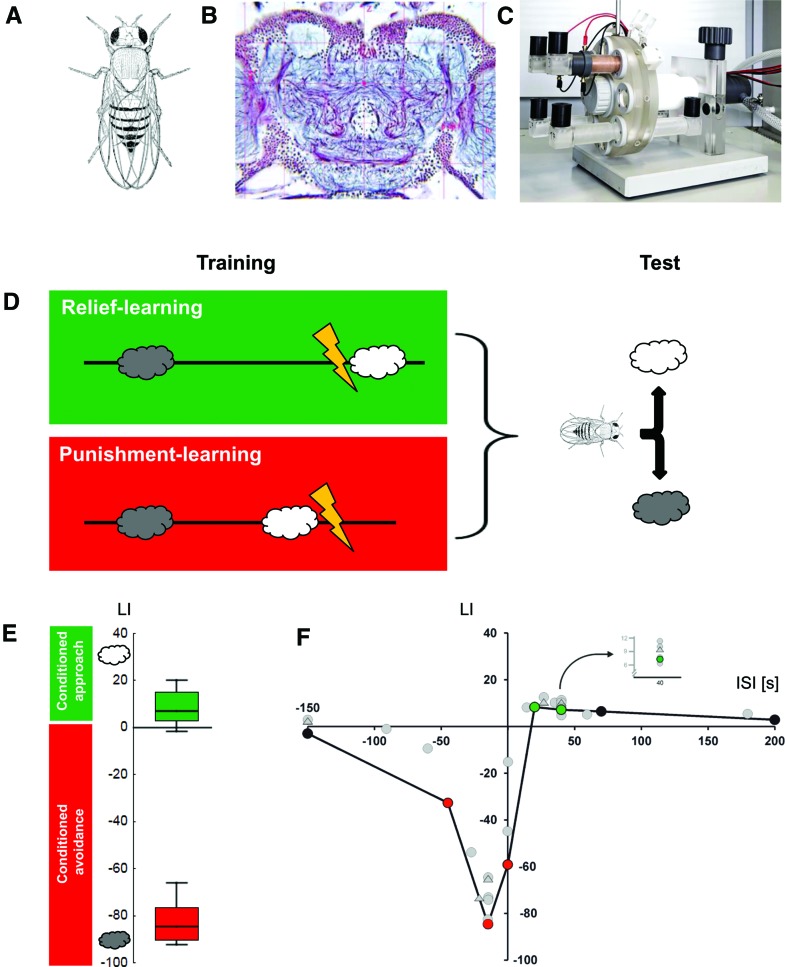

Figure 3.

Punishment- and relief-learning in Drosophila. (A) Image of a female adult fruit fly Drosophila melanogaster (from Demerec and Kaufmann 1973). A typical female fly is ∼3–4 mm in length; males are ∼0.5 mm smaller. (B) Histological preparation of an adult Drosophila brain. Shown is a frontal section stained using the reduced silver technique. Cell nuclei are visible as small purple dots; nerve tracts can be discerned as purple fibers. Blue and pale-blue stains indicate regions of synaptic contact (neuropil). The mushroom body calyces can be distinguished as large, round, paired pale-blue structures toward the top of the preparation (from www.flybrain.org); please note that the laterally situated visual brain areas (retina, lamina, medulla, lobula), which comprise almost half of the fly's brain, are cropped from the image. A typical fly brain comprises ∼100,000 neurons and is ∼450 μm in width. (C) Wheel apparatus for Drosophila relief- and punishment-learning, partially disassembled for clarity. For training, a cohort of 60–80 flies is loaded to a training tube lined inside with an electrifiable copper grid (brown tube at top of device); to the left of the training tube, a black odor container can be discerned. These odor containers can be changed such that either odor A is presented with or another odor B without electric shock. After training, flies are transferred to a neighboring position on the wheel. In that position, visible in the lower part of the apparatus, two testing tubes are attached, each linked with an odor container, such that flies face a choice between the two odors. Air is sucked out of the apparatus by an exhaust pump, meaning that air flows from the outside via the odor containers and tubes with the flies inside into the exhaust. The floor plate of the apparatus is ∼30 × 30 cm in size. When fully assembled, it allows the training and testing of four cohorts of flies simultaneously. 
(D) Sketch of the sequence of events for relief- and punishment-learning, using a between-group design. For both groups of flies, one odor (gray cloud) is presented temporally so far removed from the electric shock (typically 3–4 min) that no association takes place. For those flies that undergo relief-learning, a second odor (white cloud) is presented only after an electric shock, at the moment of “relief” (relief-learning) (please note that for subgroups the chemical identity of the involved odors is swapped). In contrast, for those flies that undergo punishment-learning this sequence is reversed, such that the second odor is presented before the shock. These respective cycles of training are repeated six times; then, flies are given the choice between the two odors in a final test. From this choice behavior a learning index (LI) (–100; 100) is calculated such that positive scores imply conditioned approach to the second odor, while negative values imply conditioned avoidance of it (for details, see Yarali et al. 2008; Diegelmann et al. 2013b). Note that when very long intervals between the second odor (white cloud) and the shock are used, essentially both odors are presented in an explicitly unpaired way. This could either entail no learning at all, about either odor; or it could establish both odors as safety-signals (see Box 1). In either case, flies would distribute equally between the two odors in the final choice test (and this is indeed observed: see F). In other words, the employed discriminative training–binary choice test paradigm “purifies” scores for punishment- and relief-learning, yet factors out safety-learning effects. (E) Experimental data showing punishment- or relief-memory, dependent on the inter-stimulus interval (ISI) during training (the ISI is defined as the time interval from shock onset to odor onset). The ISI is calculated such that a negative ISI implies odor → shock training, while a positive ISI implies shock → odor training. 
The box plots show that for an ISI of −15 sec, strong punishment-learning is observed in terms of conditioned avoidance of the odor (negative LIs), while for an ISI of 40 sec, relief-learning is observed in terms of conditioned approach toward the odor, which is notably weaker. Box plots show the median as the middle line, and the 25%/75% and 10%/90% quantiles as box boundaries and whiskers, respectively. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample sizes are N = 32 and 35 for punishment- and relief-memory, respectively. (F) Event-timing and conditioned valence. Test behavior is plotted across the ISI (–150, –45, –15, 0, 20, 40, 70, 200 sec). For clarity, only the median learning indices (LI) are displayed. As odor presentation during training is shifted in time past the shock episode, conditioned behavior changes valence from “Bad” to “Good”: It turns from conditioned avoidance to conditioned approach. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample sizes are N = 8, 24, 32, 47, 24, 35, 12, 12. Data in E,F taken from Yarali et al. (2009b). Gray circles represent data from Tanimoto et al. (2004), Yarali et al. (2008), or Diegelmann et al. (2013b); the five gray triangles represent medians of datasets from unpublished experiments using the very same methods as the aforementioned papers.
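The timing convention and the group layout of panel F can be written down compactly. In this sketch, the ISI values and sample sizes are copied from the caption; the function name `training_order` is hypothetical, and the uncorrected significance level of 0.05 is an assumption, as the caption states only that a Bonferroni correction was applied.

```python
# ISIs (seconds, shock onset to odor onset) and sample sizes from the caption
ISIS_S = [-150, -45, -15, 0, 20, 40, 70, 200]
SAMPLE_SIZES = [8, 24, 32, 47, 24, 35, 12, 12]

def training_order(isi_s: float) -> str:
    """Map the sign of the ISI onto the order of events during training."""
    if isi_s < 0:
        return "odor -> shock"
    if isi_s > 0:
        return "shock -> odor"
    return "simultaneous onset"

groups = [(isi, n, training_order(isi)) for isi, n in zip(ISIS_S, SAMPLE_SIZES)]

# Bonferroni correction: divide the uncorrected level by the number of tests.
ASSUMED_ALPHA = 0.05  # assumption; not stated in the caption
bonferroni_threshold = ASSUMED_ALPHA / len(groups)

for isi, n, order in groups:
    print(f"ISI {isi:5d} s  N = {n:2d}  {order}")
print(f"per-test threshold: {bonferroni_threshold}")
```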

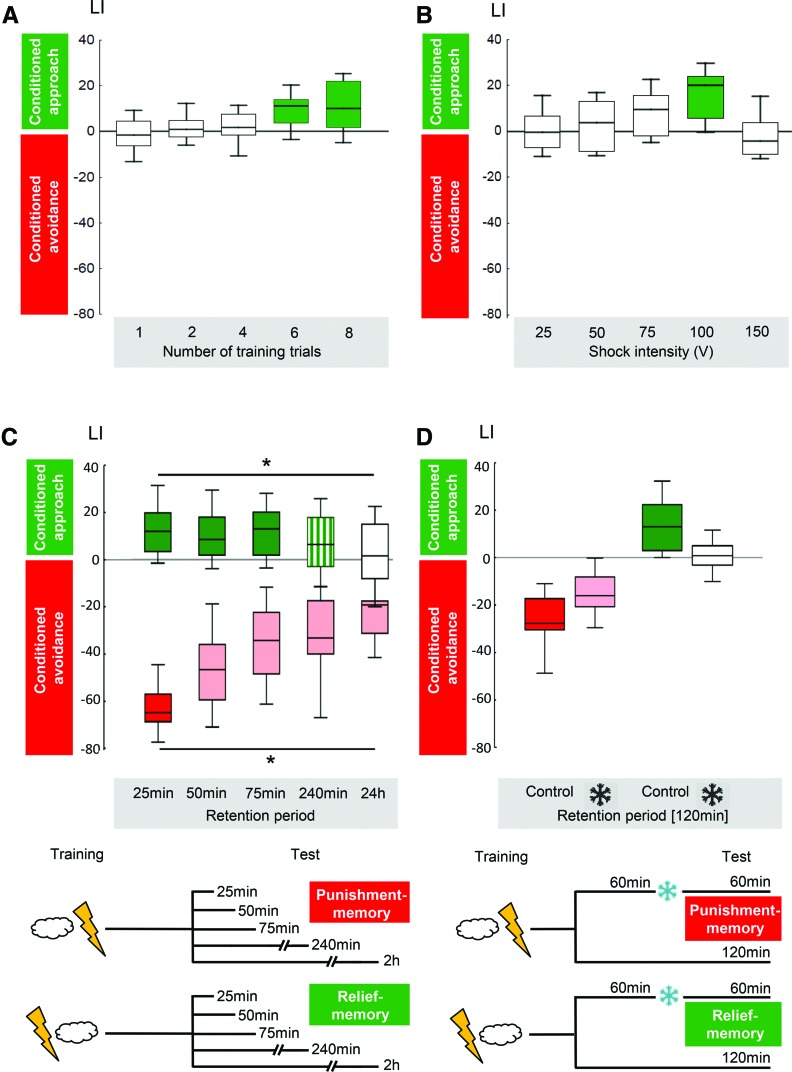

Figure 5.

Features of relief-learning in wild-type Drosophila. (A) Relief-learning requires multiple trials. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample size from left to right: 16, 15, 20, 19, 23. Data taken from Yarali et al. (2008). (B) Relief-learning is strongest at intermediate shock intensities. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample size from left to right: 8, 7, 12, 15, 7. Data taken from Yarali et al. (2008). (C) Time course of memory decay differs between relief-learning and punishment-learning. Relief-memory scores are stable for 75 min, yet have decayed fully by 24 h after training. In contrast, punishment-memory scores decay to about half within the first 75 min and then remain stable. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample size N = 51, 35, 46, 43, 40 for relief-memory and N = 20 in all cases of punishment-memory. Data taken from Diegelmann et al. (2013b). (D) Cold-amnesia abolishes relief-memory, but spares about half of punishment-memory scores. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample size N = 14 in all cases. Data taken from Diegelmann et al. (2013b).

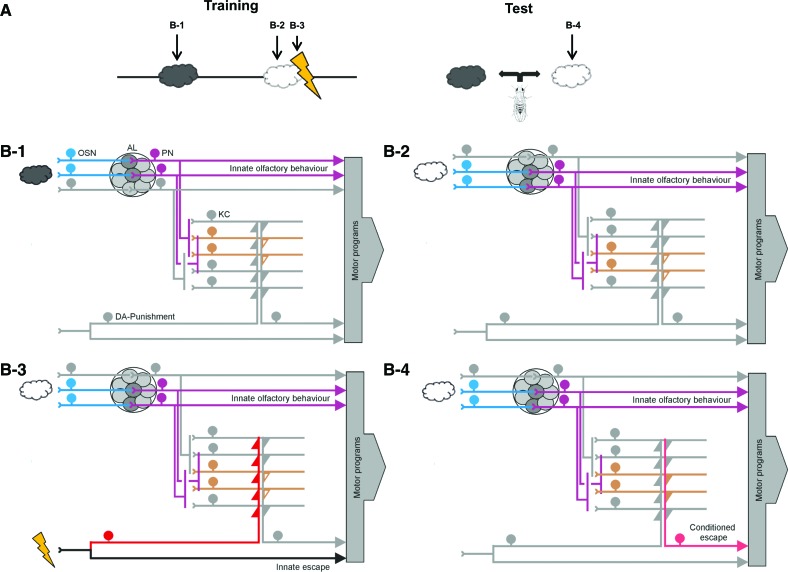

The cellular and molecular networks underlying short-term memory after punishment-learning have been studied in some detail (Fig. 4; regarding longer-term forms of memory and mechanisms of memory consolidation, see the recent studies by, e.g., Placais et al. 2012 or Perisse et al. 2013 and references therein). Briefly, upon presentation of an odor, a particular combination of olfactory sensory neurons on the antennae and maxillary palps is activated according to the ligand profiles of the respectively expressed receptor proteins. As a rule, all sensory neurons expressing the same receptor protein then converge at a single glomerulus in the antennal lobe, where they provide output to mostly uniglomerular projection neurons. The combination of projection neurons activated by a given odor is shaped, in addition, by lateral connections among the antennal lobe glomeruli. In the next step, projection neurons connect to both mushroom body Kenyon cells and lateral horn neurons as the third-order processing stages (see Laurent et al. 2001 for a discussion of temporal-coding aspects in olfaction). The electric shock, in turn, triggers a reinforcement signal, likely in a subset of dopaminergic neurons which carry this signal to many if not all Kenyon cells (Schwaerzel et al. 2003; Riemensperger et al. 2005; Kim et al. 2007; Claridge-Chang et al. 2009; Mao and Davis 2009; Aso et al. 2010, 2012; Pech et al. 2013; for larval Drosophila, see Schroll et al. 2006; Selcho et al. 2009). In contrast, as mentioned above, only a subset of the Kenyon cells is activated by the odor. It is only in these particular Kenyon cells, due to the coincidence of the odor-induced activity and the shock-induced reinforcement signal, that an odor–shock short-term memory trace is formed. Such a memory trace conceivably consists in a modulation of connection between the Kenyon cells and their output neurons (Sejourne et al. 2011), with the AC-cAMP-PKA signaling cascade as one of the necessary processes involved in molecular coincidence detection (Zars et al. 2000; Thum et al. 2007; Blum et al. 2009; Tomchik and Davis 2009; for a similar conclusion for appetitive learning, see Gervasi et al. 2010; for discussions of purely physiology-based conclusions about memory trace localization, see Heisenberg and Gerber 2008; for a general critique of the concept of memory trace localization, see Menzel 2013). If, after training, the learned odor is perceived, activity in the mushroom body output neurons, by virtue of their modified input from the Kenyon cells, is altered such that conditioned olfactory avoidance can take place (Sejourne et al. 2011). Interestingly, in accord with prediction-error signaling (Rescorla 1988), punishment-trained odors not only enable conditioned behavior but also apparently induce feedback onto dopaminergic neurons (Riemensperger et al. 2005; see the seminal study of Hammer 1993 for a corresponding finding in honeybee appetitive learning). Other, nontrained odors support conditioned avoidance only to the extent that they are similar in quality (Niewalda et al. 2011; Campbell et al. 2013; Barth et al. 2014) and/or intensity (Yarali et al. 2009a) to the actually trained odor. Appetitive learning, using sugar as reward is, in principle, organized in a similar way (e.g., Schwaerzel et al. 2003; Keene et al. 2006; Trannoy et al. 2011), with at least two significant differences:

It has been argued that, in addition to the Kenyon cells, there may be an odor-reward short-term memory trace in the projection neurons (Drosophila, Thum et al. 2007; honeybee, Menzel 2001; Giurfa and Sandoz 2012; Menzel 2012; but see Peele et al. 2006). However, in Drosophila at least, independent confirmation of this is so far lacking (see discussions in Heisenberg and Gerber 2008; Michels et al. 2011).

The reinforcing effect of reward involves aminergic neurons distinct from those mediating punishment (Schwaerzel et al. 2003; Burke et al. 2012; Liu et al. 2012), such that distinct sets of Kenyon cell output synapses are likely to be modulated to accommodate conditioned approach.

Figure 4.

Simplified working model of punishment-learning in Drosophila. (A) Timing of events for punishment-learning, and indication of the time points at which snapshots of activity patterns are displayed in B1–B4. (B) Snapshots of stimulus-evoked activity during training (B1–B3) and testing (B4). Coloring indicates activity. Please note that both the odors as well as the electric shock activate two pathways each: one to trigger the respective innate behavior and one detour toward the mushroom body Kenyon cells. It is these detour pathways that feature coincidence detection and associative plasticity. Also, please note the combined divergence–convergence connectivity between the projection neurons and mushroom body Kenyon cells. (OSN) Olfactory sensory neurons, (AL) antennal lobe, (PN) projection neurons, (KC) mushroom body Kenyon cells, (DA) a subset of dopaminergic neurons mediating an internal punishment signal. (B1,B2) Depending on the ligand profiles of the expressed olfactory receptors and the connectivity within the circuit, a given odor (gray cloud in B1) activates a particular set of olfactory sensory neurons, antennal lobe glomeruli, projection neurons, and mushroom body Kenyon cells. A different odor (white cloud in B2) activates a different pattern of the respective neurons. As for both these odors the animals are experimentally naïve, only innate olfactory behavior is expressed; that is, the output of the mushroom body Kenyon cells (open orange triangles) is not sufficiently strong to activate their postsynaptic partners and thus cannot steer conditioned escape. (B3,B4) Coincidence of a dopaminergic punishment signal and odor-induced activity in the odor-specific subset of mushroom body Kenyon cells (B3) (as flies are confined to the training tube, and no behavioral observations are possible [Fig. 3], the integration of innate shock-related escape with innate olfactory behavioral tendencies remains unknown). 
Coincidence is molecularly detected by the type I adenylate cyclase and arguably enacted, at least in part, by phosphorylation of synapsin and the recruitment of synaptic vesicles for subsequent release. If following this coincidence the previously punished odor is presented again (B4), it can activate a set of thus potentiated output synapses from the mushroom body Kenyon cells (filled triangles), such that conditioned escape is possible. Please note that the interplay of innate and conditioned olfactory behavior (B3) remains distressingly little understood. For clarity, the circuit is numerically simplified and altogether omits a number of neuronal classes including within-antennal lobe interneurons, multiglomerular projection neurons, and a host of modulatory inputs as well as of mushroom body intrinsic feedback neurons (for references to more detailed accounts, see main text; consult Laurent et al. 2001 for possible temporal-coding aspects of olfaction).
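The logic of this working model can be caricatured in a few lines: the odor activates only a subset of Kenyon cells, the punishment signal reaches all of them, and output synapses change only where the two coincide. The sketch below is a deliberate oversimplification under stated assumptions: the cell indices, the odor patterns, the unit weight increment, and the function names `train` and `conditioned_drive` are all hypothetical and carry no quantitative meaning.

```python
from typing import List, Set

def train(weights: List[float], odor_kcs: Set[int],
          dopamine_on: bool, gain: float = 1.0) -> List[float]:
    """Return updated Kenyon cell output weights. A weight changes only if
    its Kenyon cell is odor-activated AND the dopaminergic punishment
    signal is present (coincidence detection)."""
    return [w + gain if (kc in odor_kcs and dopamine_on) else w
            for kc, w in enumerate(weights)]

def conditioned_drive(weights: List[float], odor_kcs: Set[int]) -> float:
    """Summed drive onto output neurons through the odor-activated cells."""
    return sum(weights[kc] for kc in odor_kcs)

N_KC = 10                    # toy number of Kenyon cells (assumption)
odor_a = {0, 1, 2}           # cells activated by the punished odor
odor_b = {5, 6, 7}           # cells activated by the unpunished odor
odor_a_like = {1, 2, 3}      # a novel odor overlapping partly with odor A

w = [0.0] * N_KC
w = train(w, odor_a, dopamine_on=True)    # odor A coincides with shock
w = train(w, odor_b, dopamine_on=False)   # odor B presented alone

print(conditioned_drive(w, odor_a))       # trained odor: strongest drive
print(conditioned_drive(w, odor_a_like))  # similar odor: partial drive
print(conditioned_drive(w, odor_b))       # control odor: no conditioned drive
```

In this caricature, the partial drive evoked by the overlapping odor corresponds to the observation in the main text that nontrained odors support conditioned avoidance only to the extent that they resemble the trained odor.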

The net effect of driving or blocking signaling through a set of dopaminergic neurons covered by the TH-Gal4 expression pattern is to induce or impair, respectively, aversive memories (e.g., Schwaerzel et al. 2003; Schroll et al. 2006; Aso et al. 2010, 2012). The corresponding manipulations using the TDC-Gal4 expression pattern to drive octopaminergic neurons, or the tβHM18 mutant (CG1543) to impair octopamine biosynthesis, have corresponding net effects in the appetitive domain (e.g., Schwaerzel et al. 2003; Schroll et al. 2006; Burke et al. 2012). There is, however, clearly no simple dichotomy in reinforcement processing, dopamine for punishment and octopamine for reward: A subset of dopaminergic neurons from the so-called PAM-cluster mediates appetitive reinforcement (Burke et al. 2012; Liu et al. 2012), and genetic distortions of the dopamine receptor gene Dop1R1 (CG9652, also known as dumb) fittingly compromise both appetitive and aversive learning (Kim et al. 2007; Burke et al. 2012; Qin et al. 2012). Current work is beginning to disentangle the specific roles for subsets of aminergic neurons, their target Kenyon cells, and the respectively expressed amine receptors in the acquisition, consolidation, or retrieval of various forms of olfactory memory as well as in motivational control of behavior (e.g., Krashes et al. 2009; Berry et al. 2012; Placais et al. 2012; Perisse et al. 2013; see also Burke et al. 2012); for these aspects too, the roles of dopamine and octopamine apparently transgress the above-mentioned dichotomy. Thus, the striking valence-specificity of driving or impairing aminergic signaling seems to be a property of particular neurons and of their specific target receptors and cellular connectivity, rather than a property of transmitter systems as a whole. A similar picture may be emerging for mammals as well (Brischoux et al. 2009; Bromberg-Martin et al. 2010; Ilango et al. 2012; Lammel et al. 2012).
Strikingly, even within the aversive domain there may be dissociations on the level of aminergic neurons: Activations caused by aversive air puff or bad tastants in individual midbrain dopamine neurons in the monkey were either not observed at all (most midbrain dopamine neurons are instead activated strongly by unpredicted rewards and reward-predicting stimuli [Schultz 2013]) or were seen only for aversive air puff, or only for bad taste—but not both (Fiorillo et al. 2013b, loc. cit. Fig. 9A) (about a third of the neurons, however, were inhibited by both). This could provide the basis for memories that are specific for the kind, rather than the mere aversiveness, of the punishment employed; indeed, distinct dopamine neurons were activated by cues associated with air puff versus cues associated with bad taste (Fiorillo et al. 2013b, loc. cit. Fig. 9B) (again, about a third of the neurons were inhibited by both). In flies it has so far not been systematically tested whether memories refer to the specific quality of the reinforcer (but see Eschbach et al. 2011); if they did, this would call for a fundamental revision of current thinking on how memories are organized in the Drosophila brain.

Relief-learning

Compared to punishment- and reward-learning, relief-learning in Drosophila (Figs. 3, 5) is much less well understood. It was first described by Tanimoto et al. (2004): If an odor is presented after shock, flies subsequently approach that odor, arguably (Box 1) because of its association with the relieving cessation of shock (Figs. 3, 5). That is, a fairly minor parametric change such as inverting event timing during training has a rather drastic qualitative effect: It inverts behavioral valence, such that odor → shock training establishes conditioned avoidance, while shock → odor training establishes conditioned approach toward the odor.

In a follow-up study, Yarali et al. (2008) demonstrated that after relief-training, the preference toward the trained odor is indeed associatively increased; this effect does not seem to interact with the innate valence of the odor, but rather adds on to it. That study also suggested that relief-learning is likely independent of context–shock associations and that it needs more repetitive but less intense training than punishment-learning (Fig. 5; see Box 1 for the theoretical implications of these findings). Namely, relief-learning in Drosophila reaches asymptote after six training trials (together with the given signal-to-noise ratio, this makes relief-learning 10- to 20-fold more laborious than the standard one-trial version of punishment-learning; note that in all direct comparisons reviewed in this paper nothing but the inter-stimulus interval is varied between punishment- and relief-learning procedures) and is optimal at relatively mild shock intensities. The latter may be because shocks that are too strong induce anterograde amnesic effects, such that a subsequent odor presentation remains unrecognized by the flies, preventing the relatively weak effects of relief-learning. Alternatively, it may be that the more intense the shock, the longer is the aversive state it induces, such that the ensuing relief is further delayed. In that case, the optimal timing of the odor with respect to shock for yielding either type of memory would depend on shock intensity (Bayer et al. 2007). Both of these scenarios may offer perspectives on the nature of “trauma” (Box 2) in the sense that massive or mild adverse experiences may induce punishment and relief memories to a lesser or stronger extent.

BOX 2. Punishment, relief, and “trauma”.

Remembering past adverse, punishing events is, in principle, adaptive since it helps us to avoid or to cope with future dangerous situations. However, emotional memories of extremely distressing “traumatic” events can become overwhelming, leading to psychiatric complications such as post-traumatic stress disorder (PTSD). Core symptoms of PTSD are intrusions and flashbacks, i.e., unusually vivid memories of the traumatic event. Such traumatic events include criminal victimization, accidents, combat experiences, and childhood maltreatment (summarized in Nemeroff et al. 2006). While the frequency, type, and intensity of such episodes are critical determinants for developing PTSD, it remains striking that <30% of those exposed to comparable sequences of events go on to develop PTSD. Person-related risk factors include polymorphisms in several genes (e.g., DRD2 or MAO), female gender, preexisting psychiatric conditions such as depression and alcohol abuse, and ineffective coping strategies. To develop a conceptual handle on PTSD, therefore, not only do the status of the subject and aspects of punishment-learning have to be considered (e.g., genotype and personal history of the subject, graveness of the traumatic experiences, levels of generalization, the temporal dynamics of memory consolidation, retrieval-induced reconsolidation, extinction, and spontaneous recovery), but mechanisms of operant learning as well as nonassociative processes are also likely to be of significance (for discussions, see Siegmund and Wotjak 2006; Riebe et al. 2012).

Such complexity makes it difficult to establish comprehensive experimental models of PTSD. Also, as the graveness of the events is of significance for the switch from adaptive aversive learning to trauma, it is intrinsically problematic to develop such experimental models because this graveness defines the boundaries of what is ethically acceptable in experimental settings, not only in humans but in animals as well. In other words, if the experimental treatment is grave enough to induce PTSD, it may arguably not be ethical, whereas when it remains within ethical bounds, it may not be grave enough to induce PTSD. The existing rodent models of PTSD employ a single exposure to an intensive foot shock or to a predator to model the traumatic experience (e.g., Adamec and Shallow 1993; Siegmund and Wotjak 2007) and are useful for observing and understanding the long-lasting associative and nonassociative symptoms of fear (e.g., conditioned fear of contextual features of the traumatic experience, social anxiety, neophobia, exaggerated startle). Such models should allow investigation into whether such experiences also lead to conditioned relief and whether such conditioned relief impacts “rodent-PTSD.”

Regarding PTSD in humans, we find it reasonable to suppose that, though perhaps restricted to an implicit level, there is a moment of relief upon the cessation of a traumatic event and that this may be of mnemonic, psychological, and behavioral significance to the traumatized person. In particular, to the extent that repetitions are “needed” to induce trauma, increasing or broadening of generalization of relief-type memories may be relevant entry points to ameliorate the impact of a first relatively mild such episode and/or to protect the patient in a possible next such episode. Furthermore, one may ask whether the cessation of intrusive flashback memories may cause relief, whether such relief contributes to the maintenance of the disorder or can be exploited in therapy, or whether it may rather complicate therapy if such relief-learning repeatedly happens in therapeutic settings so as to unwittingly induce attachment to these settings. Basic and translational research on punishment- and relief-learning with relatively mild aversive events may therefore also turn out useful with regard to trauma and PTSD.

The temporal pattern of decay of relief-memory differs from both punishment- and reward-memories (Fig. 5; Yarali et al. 2008; Diegelmann et al. 2013b): Over the first 4 h following training, relief-memory decays much more slowly than punishment-memory, as is reminiscent of the slow initial decay rate of reward-memory (Tempel et al. 1983; Schwaerzel et al. 2007; Krashes and Waddell 2008). For longer retention periods, however, only multiple, temporally spaced training trials result in longer-term (24-h) punishment-memory (Tully et al. 1994; Isabel et al. 2004; Diegelmann et al. 2013b), whereas for longer-term reward-memory even a single training trial suffices (Krashes and Waddell 2008; Colomb et al. 2009). For relief-learning, despite using multiple, spaced training trials, Diegelmann et al. (2013b) found no longer-term relief-memory (Fig. 5). Also, unlike both punishment- and reward-learning, relief-learning fails to induce amnesia-resistant memory (Fig. 5; Diegelmann et al. 2013b). If both punishment- and relief-memories were formed in a natural string of events around a painful experience, these findings may be of practical importance: While trying to erase punishment-memory, one may unwittingly also erase relief-memory. Depending on the relative strength of these memories and the relative effectiveness of the treatment, the net effect of such manipulation may be to make the overall mnemonic effect of the experience even more adverse. Again, these conclusions may be of relevance for the understanding of “trauma” and its treatment (Box 2).

In two further studies, the neurogenetic bases of fly relief-learning were investigated: First, the role of the white gene (CG2759) in relief-learning was tested (Yarali et al. 2009b). The white gene product forms one-half of an ATP-binding transmembrane transporter (O'Hare et al. 1984). Its reported cargoes are the signaling molecule cGMP, as well as a heterogeneous set of molecules required for the synthesis of eye pigments and serotonin (Sullivan and Sullivan 1975; Howells et al. 1977; Sullivan et al. 1979, 1980; Koshimura et al. 2000; Evans et al. 2008). The white1118 mutation enhances punishment-learning (Diegelmann et al. 2006; Yarali et al. 2009b) and reduces relief-learning (Yarali et al. 2009b), while leaving unaltered the reflexive avoidance behavior toward the shock. It thus seems that specifically the mnemonic effect of shock, the “take-home message” of the painful event, is more negative for the white1118 mutant. Whether this genetic effect in the white1118 mutant is molecularly related to altered levels of biogenic amines is a matter of controversy (Sitaraman et al. 2008; Yarali et al. 2009b). Interestingly, genetic variance in the human homolog of the white gene (ABCG1) has been related to susceptibility to mood and panic disorders (Nakamura et al. 1999).

Second, Yarali and Gerber (2010) compared relief-learning to both punishment- and reward-learning in terms of sensitivity to manipulations of aminergic signaling. Blocking synaptic output from a subset of dopaminergic neurons defined by the TH-Gal4 driver partially impairs punishment-learning (see also Schwaerzel et al. 2003; Aso et al. 2010, 2012); however, relief-learning remains intact. As for the comparison to reward-learning, interfering with another set of dopaminergic neurons, which need to provide output for reward-learning to occur (defined by the DDC-Gal4 driver) (Burke et al. 2012; Liu et al. 2012), also leaves relief-learning intact. Furthermore, the tβhM18 mutation, compromising octopamine biosynthesis, impairs reward-learning (see also Schwaerzel et al. 2003), but not relief-learning. It therefore appears that relief-learning is neurogenetically distinguishable from both punishment- and reward-learning. Importantly, however, roles of dopamine or octopamine signaling in relief-learning cannot be ruled out because in none of the experiments described was the respective type of aminergic signaling completely shut off, due to methodological constraints (for a detailed discussion, see Yarali and Gerber 2010). Also, in the tests for octopamine signaling, relief-learning scores in the genetic controls were low, making it practically impossible to ascertain decreases in relief-learning scores; however, as the data provided by Yarali and Gerber (2010) show, if anything, a tendency toward increased relief-learning scores, it still seems fair not to assume a strict requirement for octopamine in relief-learning.

We would like to note that despite the above-mentioned differences across punishment-, reward-, and relief-learning, these three processes are certainly not completely distinct; indeed, much of the sensory and motor circuitry as well as the molecular mechanisms will be shared. Thus, the research problem is rather to understand exactly which processes are shared and which are specific across these three tasks—and how the fly brain is organized to accommodate them.

Possible mechanisms underlying relief-learning

The relative timing of events is also a key factor in synaptic plasticity. Typically, in spike-timing dependent plasticity (STDP) (for reviews, see Bi and Poo 2001; Caporale and Dan 2008; Markram et al. 2011) a synaptic connection is strengthened when action potentials in the presynaptic cell precede those in the postsynaptic cell, while a reverse order of events weakens the synaptic connection.
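The timing rule just described can be illustrated with a minimal sketch of a pair-based STDP window. The exponential shape, the amplitudes (`a_plus`, `a_minus`), and the time constants are hypothetical choices made for illustration only; they are not taken from any of the studies cited here.

```python
import math

def stdp_weight_change(dt_ms, a_plus=0.01, a_minus=0.012,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Weight change for one pre/post spike pair.

    dt_ms is t_post - t_pre in milliseconds. All default parameter
    values are illustrative assumptions, not measured quantities.
    """
    if dt_ms > 0:
        # Presynaptic spike precedes postsynaptic spike: strengthening.
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        # Postsynaptic spike precedes presynaptic spike: weakening.
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0

if __name__ == "__main__":
    for dt in (-50, -10, 10, 50, 1000):
        print(dt, stdp_weight_change(dt))
```

With these assumed time constants the change is negligible once the two spikes are more than a few hundred milliseconds apart, which is why it is not self-evident that such a rule could explain behavioral effects unfolding over seconds.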

Given the conspicuous parallelism of STDP on the synaptic level with the timing-dependent switch between punishment and relief-learning in Drosophila, Drew and Abbott (2006) ventured a computational study to establish whether STDP, operating at the time scale of milliseconds, can indeed lead to behavioral effects on the time scale of seconds. The authors modeled a circuit in which the odor activates a subset of Kenyon cells, whereas the shock excites their postsynaptic partner, which mediates avoidance. For both odor- and shock-induced activity, rather high firing rates were assumed that decay only slowly (several seconds) upon termination of the respective stimulus. Then, the authors implemented an STDP rule, operating at the millisecond-scale (indeed, STDP takes place at the Kenyon cell output synapses, as shown in the locust [Cassenaer and Laurent 2007, 2012]). As long as relatively high spiking rates and relatively slow decay rates are assumed, this model does account for the effect of the relative timing of odor and shock at the behavioral level, which occurs at the scale of several seconds. However, the assumed strong and persistent spiking activity has not been observed in Drosophila Kenyon cells; rather, it turns out that these cells fire strikingly few spikes at the beginning and/or at the end of odor presentation (Murthy et al. 2008; Turner et al. 2008; for moth Kenyon cells, see Ito et al. 2008). Also, it remains unclear whether the shock signal indeed impinges upon the postsynaptic partners of the Kenyon cells. The data so far rather suggest that the Kenyon cells themselves receive a dopaminergic reinforcement signal: Dopamine receptors are enriched in the Kenyon cells (Han et al. 1996; Kim et al. 2003), and restoring receptor function in the Kenyon cells rescues the learning impairment of a dopamine receptor mutant (Kim et al. 2007). 
Furthermore, synaptic output from Kenyon cells during the training phase has repeatedly been found to be dispensable for punishment-learning (Dubnau et al. 2001; McGuire et al. 2001; Schwaerzel et al. 2003). Altogether, Drew and Abbott's (2006) STDP-based model thus does not embrace the empirical findings particularly well. We note, however, that the STDP rule is sensitive to neuromodulatory effects, as shown for the locust Kenyon cell output synapses (Cassenaer and Laurent 2012), and incorporating such effects may result in more realistic STDP-based models of punishment-, reward-, and relief-learning. In any event, the STDP-based model by Drew and Abbott (2006) does not predict safety-learning as a result of unpaired presentations of odor and shock. Such unpaired-training can, however, have mnemonic consequences: In larval Drosophila unpaired presentations of odor and a sugar reward turn the odor into a predictor of no-reward (Saumweber et al. 2011; Barth et al. 2014; concerning honeybees, see Hellstern et al. 1998 and references therein). Also, given the innate avoidance of odors typically observed in the current paradigm, the attraction to odors after unpaired presentations of odor and shock in adult Drosophila can be understood as reflecting safety-learning (e.g., Niewalda et al. 2011, loc. cit. Fig. S5; Barth et al. 2014).

Alternatively, the event timing-dependent transition between punishment and relief-learning may be rooted in the very molecular mechanism of coincidence detection. It is likely that during punishment-training the type I adenylate cyclase rutabaga (CG9533) acts as a molecular detector of the coincidence between a neuromodulatory reinforcement signal and the odor-evoked activity in the mushroom body Kenyon cells (Tomchik and Davis 2009; Gervasi et al. 2010). Due to this coincidence, in the respective odor-activated Kenyon cells, cAMP would be produced and PKA be activated, leading to the phosphorylation of downstream effectors, conceivably including Synapsin (CG3985). Synapsin phosphorylation would, in turn, allow the recruitment of reserve-pool vesicles toward the readily releasable pool (for discussion, see Diegelmann et al. 2013a), enabling a stronger synaptic output upon odor presentation after training and eventually leading to conditioned avoidance. Consistent with this scenario, the impairments in punishment-learning by mutations in the rutabaga and synapsin genes are not additive (Knapek et al. 2010); also, in odor–sugar associative learning of larval Drosophila, the impairment of the syn97 mutant cannot be rescued by a transgenically expressed synapsin that lacks functional PKA/CaMKII recognition sites (Michels et al. 2011; for recent data suggesting a role of CaMKII for Synapsin phosphorylation in olfactory habituation, see Sadanandappa et al. 2013).

Could both punishment and relief-learning possibly be accommodated in the same Kenyon cells, based on an event timing-dependent, bidirectional modulation of AC-cAMP-PKA signaling? This was explored in a computational study by Yarali et al. (2012). In their model, upon the application of shock-alone, all Kenyon cells receive a shock-induced neuromodulatory signal, which triggers G-protein signaling, consequently activating the adenylate cyclase. As active adenylate cyclase accumulates, the reverse reaction, that is, deactivation of the adenylate cyclase, also takes place. Once shock is over and the neuromodulatory signal has waned, this deactivation of the adenylate cyclase becomes the dominant reaction, leading eventually to the deactivation of all adenylate cyclase molecules. The level of cAMP production caused by such shock-alone stimulation is taken as a “baseline” level and assumed to already potentiate the output from all Kenyon cells to the downstream avoidance circuit. Application of an odor, in turn, raises the presynaptic Ca2+ concentration specifically in the respective subset of model Kenyon cells coding for this odor. Such presynaptic Ca2+ multiplicatively increases the rate constants for both the G-protein-dependent activation of adenylate cyclase and its deactivation (Yovell and Abrams 1992; Abrams et al. 1998). Thus, the net effect of Ca2+ on the adenylate cyclase-dynamics depends on its timing. That is, Ca2+ has no net effect if it arrives long before the neuromodulatory activation of adenylate cyclase has begun, or long after the deactivation of adenylate cyclase has been completed. Note that this model thus also does not predict safety-learning resulting from unpaired presentations of odor and shock, although such learning likely does take place. 
On the other hand, if the Ca2+ arrives while adenylate cyclase-activation is dominant, it speeds up this activation, whereas if it arrives while adenylate cyclase is predominantly being deactivated, this deactivation is accelerated. Accordingly, in simulated punishment-training the shock-induced neuromodulatory signal activates the adenylate cyclase in all Kenyon cells. Only in those Kenyon cells that code for the particular odor does a rise in Ca2+ coincide with this activation phase, speeding it up. The resulting above-baseline level of cAMP is then assumed to potentiate the output from these Kenyon cells further, beyond the potentiation in all Kenyon cells due to the shock-alone, thus enabling the trained odor subsequently to induce conditioned avoidance more readily than other odors. In turn, in simulated relief-training, the odor-induced rise in Ca2+ follows the shock-induced neuromodulatory signal that coincides with the deactivation phase of the adenylate cyclase. Therefore, in the particular odor-coding Kenyon cells, the adenylate cyclase is deactivated faster, resulting in cAMP production below the baseline level. Consequently, the output from these Kenyon cells is less potentiated compared to that of all other Kenyon cells (or compared to a situation where this odor is presented long before or long after the shock), rendering the trained odor less likely to induce conditioned avoidance than other odors. In a choice situation this would result in relative approach toward the trained odor.
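The logic of this scenario can be sketched numerically. The following is a deliberately simplified toy version, not the published model of Yarali et al. (2012): the neuromodulatory signal is assumed to be a square pulse lasting exactly as long as the shock, cAMP production is taken as the time integral of the active adenylate cyclase fraction, and all rate constants, durations, and the Ca2+ gain are hypothetical values chosen for illustration.

```python
def camp_produced(odor_onset_s=None, shock_dur_s=2.0, odor_dur_s=2.0,
                  k_act=1.0, k_deact=0.5, ca_gain=2.0,
                  t_start_s=-25.0, t_end_s=40.0, dt_s=0.01):
    """Integrate the active adenylate cyclase fraction over time (Euler).

    Shock starts at t = 0. odor_onset_s is the odor onset relative to
    shock onset (None means shock-alone). All defaults are assumptions.
    """
    active = 0.0   # fraction of adenylate cyclase that is active
    camp = 0.0     # accumulated cAMP production (arbitrary units)
    n_steps = int(round((t_end_s - t_start_s) / dt_s))
    for i in range(n_steps):
        t = t_start_s + i * dt_s
        # Assumed square-pulse neuromodulatory signal during shock.
        modulator = 1.0 if 0.0 <= t < shock_dur_s else 0.0
        odor_on = (odor_onset_s is not None
                   and odor_onset_s <= t < odor_onset_s + odor_dur_s)
        # Ca2+ multiplies BOTH the activation and the deactivation rate.
        ca_factor = 1.0 + ca_gain if odor_on else 1.0
        d_active = ca_factor * (k_act * modulator * (1.0 - active)
                                - k_deact * active)
        active += d_active * dt_s
        camp += active * dt_s
    return camp

def camp_relative_to_shock_alone(odor_onset_s):
    """cAMP production relative to the shock-alone baseline."""
    return camp_produced(odor_onset_s) - camp_produced(None)

if __name__ == "__main__":
    for onset in (-20.0, -1.0, 2.0, 20.0):
        print(onset, round(camp_relative_to_shock_alone(onset), 3))
```

In this toy version, an odor that overlaps the onset of the shock yields cAMP production above the shock-alone baseline, an odor that starts at shock offset yields production below baseline, and an odor presented long before or long after the shock leaves production essentially at baseline.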

Thus, with this model, the timing-dependence of associative function on the behavioral level can be simulated, using Ca2+ as a stand-in for odor, and neuromodulator as a stand-in for shock. The bidirectional regulation of the very coincidence detector, the adenylate cyclase, is used to explain the effect of event-timing on learning. This now invites experimental scrutiny, especially with respect to the requirement for the AC-cAMP-PKA cascade and the Kenyon cells for relief-learning as well as the identity of the respective neuromodulatory signal.

In any event, both Drew and Abbott's (2006) STDP-based model and the adenylate cyclase-based model by Yarali et al. (2012) follow the “canonical” view of short-term mnemonic odor coding in the mushroom body, holding that odors are coded combinatorially across the full array of γ-lobe Kenyon cells (regarding longer-term memory, this has recently been challenged by Perisse et al. 2013, suggesting that the α/β set of Kenyon cells responsible for 3-h memory may be internally “multiplexed” by valence and/or memory strength). That is, punishment and relief-learning rely on the same olfactory representation such that both kinds of learning modify the same Kenyon cell output synapses onto the same downstream circuit (depicted in Fig. 4 for punishment-learning), but in opposite ways. While this scenario readily accounts for the observed opposite conditioned behaviors of avoidance and approach, more fine-grained investigations into conditioned behavior, and into the anatomy of the mushroom body lobes, may reveal the shortcomings of such scenarios: Punishment-learning may modulate kinds of behavior that relief-learning leaves unaffected and vice versa.

Rat

Punishment-learning

Learning that a cue predicts an electric shock is one of the best-studied cases of Pavlovian conditioning in the rat (e.g., for reviews, see Fendt and Fanselow 1999; LeDoux 2000; Maren 2001; Davis 2006; Ehrlich et al. 2009; Pape and Pare 2010). The range of conditioned behaviors toward the learned cue can be understood as making the animal ready for the predicted aversive event and includes protective and defense-related behaviors such as orienting, avoidance, freezing, and potentiation of reflexes including the startle response to facilitate fight-or-flight behavior, as well as autonomic changes such as tachycardia, hypertension, and an activation of the hypothalamic–pituitary–adrenal axis. Because this syndrome of conditioned effects is similar to the signs of fear in humans, this paradigm is typically referred to as fear conditioning. However, given that for flies there are no arguments for such a comprehensive similarity to human fear, and given that for the present cross-species review we want to use a terminology that is consistent across species, we will use the term punishment-learning instead of fear conditioning for the remainder of this contribution.

In punishment-learning procedures for the rat, the cues to be learned can be visual, as already mentioned, or olfactory, tactile, auditory, or comprising contextual combinations of stimuli from multiple modalities, provided the respective sensory systems are mature (Hunt et al. 1994; Richardson et al. 1995, 2000). Rats can learn the association between cue and punishment, which is typically delivered as a foot shock via a metal floor grid, after just one pairing (e.g., Sacchetti et al. 1999); however, more pairings lead to stronger and more stable memories. Further variables which modulate the strength and speed of learning are the intensity of the cue as well as of the shock, and in particular the timing of cue and shock relative to each other: According to the predictive character of the process, learning is not best when shock is applied with the beginning of the cue but, rather, when the shock is applied upon the end of the cue (“delay” procedure). When a temporal gap is left between cue offset and shock onset (“trace” procedure), rats learn less well as the duration of the gap increases. This timing-dependency not only matches that discussed above for the fly, but it is indeed one of the rather few universally observed features of associative learning, on the respectively adaptive time scales ranging from hundreds of milliseconds in the case of eye-blink conditioning to hours in the case of flavor-aversion learning (cf. Rescorla 1988, loc. cit. Fig. 1).

There are different ways to test behaviorally whether the rats have established the association between cue and shock. Here, we focus on the modulation of startle amplitude as a read-out. The startle response (Figs. 6, 8) can be elicited by sudden and intense stimuli (historically, the sound of pistol shots has been used in human subjects [Strauss 1929]) and consists of short-latency muscle contractions collectively serving to protect the organism (especially eyes and neck) and preparing it for subsequent fight-or-flight. It is an evolutionarily well-conserved and much-studied reflex (Koch 1999), such that the neural mechanisms of the startle response itself and of its modulations are known in quite some detail in rodents (Fendt and Fanselow 1999; Davis 2006; Davis et al. 2009), monkeys (Davis et al. 2008), and man (Davis et al. 2008, 2009; van Well et al. 2012). The startle response is experimentally elicited by a sudden, very loud noise from a loudspeaker and can be measured by motion-sensitive transducers underneath the floor grid of the measurement apparatus (Fig. 6). This startle response, despite being phylogenetically ancient, is certainly not completely hard-wired: Although the motor programs are qualitatively rather stereotyped, the amplitude of the startle response is higher when the animal is afraid. That is, after animals (or humans) are trained such that they associate a cue with an electric shock, the amplitude of the startle response is increased in the presence as compared to the absence of the cue, an effect called fear-potentiated startle (Brown et al. 1951; Lipp et al. 1994; Koch 1999; Grillon 2002; Davis 2006). It is particularly important in the current context that the startle response has a nonzero baseline; that is, both increases and decreases in startle amplitude can be measured.
Indeed, after associating a cue with a reward such as food or rewarding brain stimulation, the amplitude of the startle response is decreased in the presence of the cue (Schmid et al. 1995; Koch et al. 1996; Steidl et al. 2001; Schneider and Spanagel 2008). Thus, as in the case already discussed for flies, the paradigm of startle modulation is bivalent: Under neutral conditions startle is normal, while when expecting something bad startle is potentiated, and when expecting something good startle is attenuated.
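The bivalent read-out described above can be sketched as a simple calculation. The percent-change score used here is only one plausible way to express startle modulation relative to the noise-alone baseline and is an assumption for illustration; the function names and the `tolerance` parameter are hypothetical and do not reproduce the scoring used in the cited studies.

```python
def startle_modulation(amplitude_with_cue, amplitude_noise_alone):
    """Percent change in startle amplitude relative to noise alone.

    Positive values mean potentiated startle, negative values mean
    attenuated startle. The percent-change form is an assumption.
    """
    if amplitude_noise_alone <= 0:
        raise ValueError("baseline startle amplitude must be positive")
    return 100.0 * (amplitude_with_cue - amplitude_noise_alone) / amplitude_noise_alone

def conditioned_valence(modulation_percent, tolerance=0.0):
    """Map startle modulation onto the conditioned valence of the cue."""
    if modulation_percent > tolerance:
        return "negative"   # potentiated startle: something bad is expected
    if modulation_percent < -tolerance:
        return "positive"   # attenuated startle: something good is expected
    return "neutral"

if __name__ == "__main__":
    for with_cue in (150.0, 100.0, 80.0):
        score = startle_modulation(with_cue, 100.0)
        print(with_cue, score, conditioned_valence(score))
```

Such a score is only meaningful because the baseline is nonzero: with a baseline of zero, attenuation could not be expressed at all.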

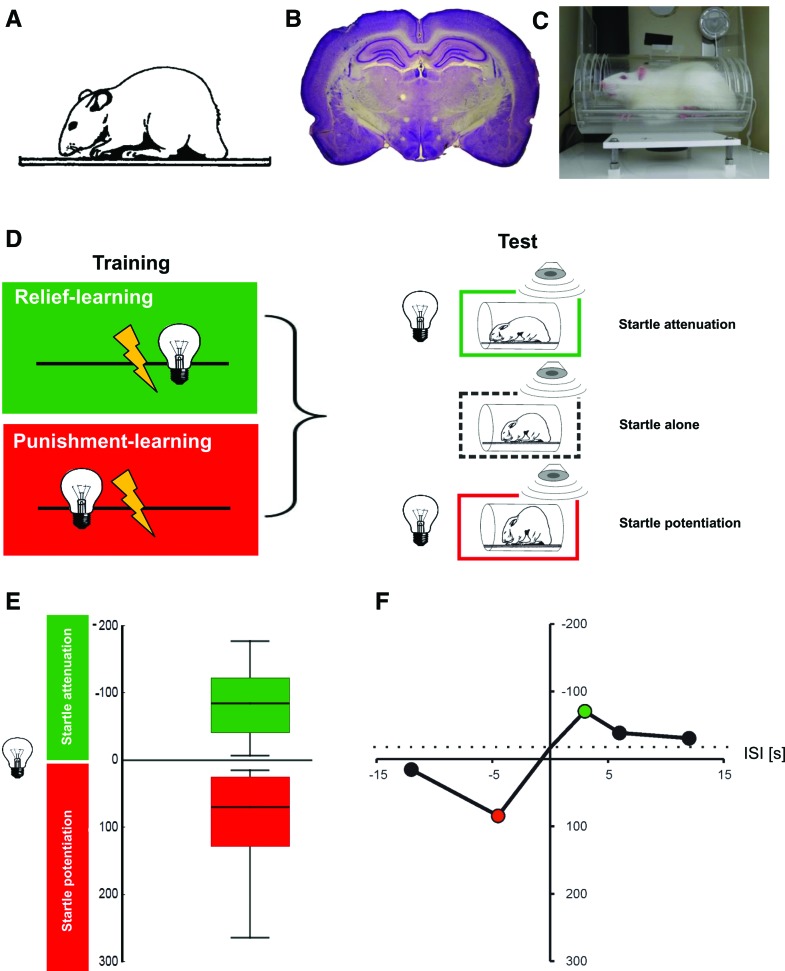

Figure 6.

Punishment- and relief-learning in the rat. (A) Image of an adult rat (adapted from Koch 1999 with permission from Elsevier © 1999). (B) Histological preparation of a rat brain. Depicted is a Nissl-stained frontal section at the level of the amygdala and dorsal hippocampus. Intensely stained regions are somata-rich, lighter stain indicates fiber tracts. A typical rat brain consists of ∼100 million neurons and is ∼2.5 cm in width. (C) Apparatus for measuring the modulation of the startle response by relief- and punishment-learning in the rat. The animal is placed in a small enclosure (9-cm diameter, 16-cm length). During training, light stimuli can be presented; electric shocks are administered via a floor grid. During the test a speaker can be used to deliver a loud noise that makes the animal startle, either in the presence or in the absence of the trained light stimulus. The amplitude of the startle response is measured by motion-sensitive transducers mounted underneath the floor grid. (D) Sketch of the sequence of events for relief- or punishment-learning, using a between-group design. For the group undergoing relief-learning, the light stimulus follows the shock, while for punishment-learning this sequence is reversed, such that the light stimulus precedes the shock. In the test the amplitude of the startle response is measured, either in the presence or in the absence of the light stimulus. Relative to the startle amplitude upon the loud noise alone, the startle amplitude is attenuated after relief-learning (indicating positive conditioned valence), while after punishment-learning the startle amplitude is potentiated (indicating negative conditioned valence). Images of startled rats modified from Koch (1999) (adapted with permission from Elsevier © 1999). 
(E) Experimental data showing relief- or punishment-memory, depending on the inter-stimulus interval during training (the inter-stimulus interval [ISI] is defined as the time interval from shock onset to light onset). In order to display “Good” (positive conditioned valence) toward the top, startle attenuation is plotted toward the top of the y-axis; in turn, and in deliberate breach of the convention in the field, startle potentiation is plotted toward the bottom of the y-axis in order to display “Bad” (negative conditioned valence) toward the bottom. In line with convention, the sign of the startle modulation is presented as, respectively, negative or positive, because the actual behavior of the rats consists of less or more startle, respectively. Please note that the ISI is defined such that a negative ISI implies light → shock training, while a positive ISI implies shock → light training. The box plots to the left show that for an ISI of −4.5 sec, punishment-learning is observed in terms of potentiated startle (red), while for an ISI of 2.5 sec, relief-learning is observed in terms of attenuated startle (green). Notably, the two types of startle modulation do not appear as drastically different in strength as in flies (Fig. 3). Box plots show the median as the bold middle line, and the 25%/75% and 10%/90% quantiles as box boundaries and whiskers, respectively. Sample size is N = 16 per group. (F) Event-timing and conditioned valence. Test behavior is plotted across the ISI. For clarity, only the median scores of startle modulation are displayed, derived from five experimental groups. As the light presentation is shifted in time past the shock episode during training, conditioned valence changes from “Bad” to “Good”: it turns from startle potentiation to startle attenuation. Coloring implies Bonferroni-corrected significance from chance, i.e., from zero. Sample sizes are N = 12–16 per group. 
The stippled line shows the behavior of two control groups that had received either only the cue but no electric shocks at all, or cue and electric shocks at randomized ISIs. In these control groups a slight decrease in startle is observed, relative to the startle-alone testing condition (see text for discussion). Data in E,F taken from Andreatta et al. (2012).

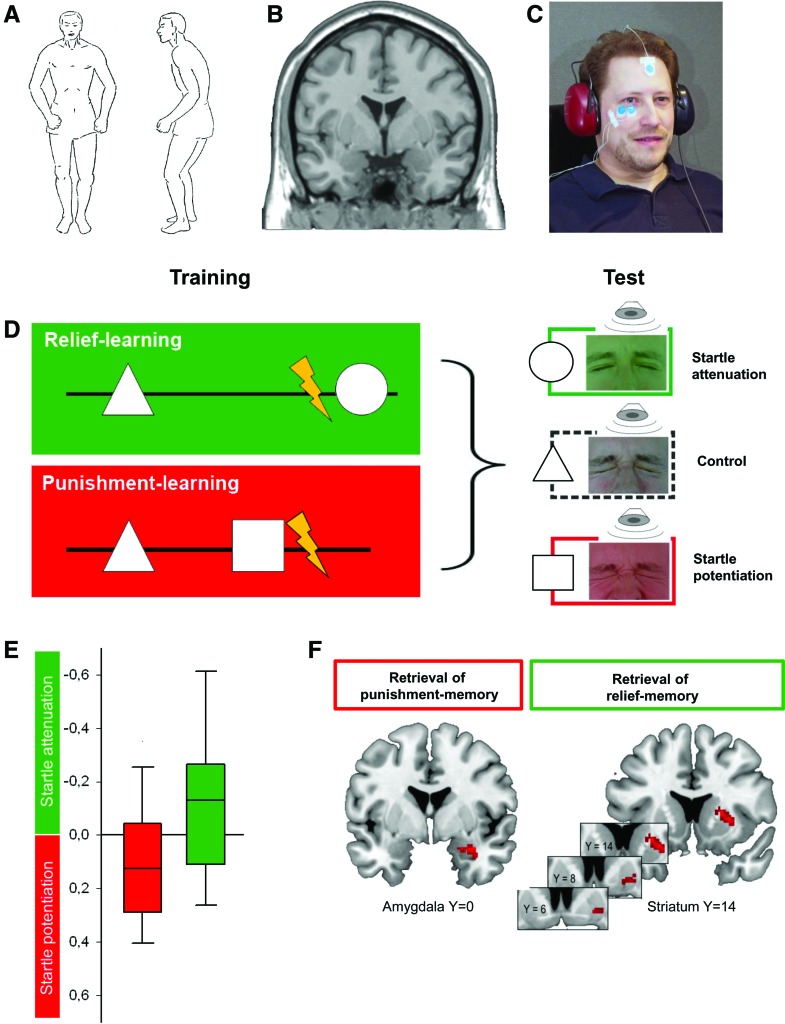

Figure 8.

(A) Body posture upon a pistol shot illustrating the startle response pattern (from Landis and Hunt 1939) initially described as “Zusammenschrecken” by Strauss (1929). Together with the immediate, brief concomitant closure of the eyes, startle serves to protect from imminent threat and to prepare the subject for fight-or-flight behavior. (B) Magnetic resonance image of a human brain, coronal slice. (C) Experimental setup for relief- and punishment-learning in humans. The subject faces a computer monitor (not shown) on which visual stimuli can be displayed. During training mild electric shock punishment can be delivered to the left forearm. During the test, a loud noise can be delivered through the earphones to induce the startle response while the subjects watch the screen on which visual stimuli are displayed. Measurement electrodes (blue) record the eye-closure component of the startle response via electromyography (EMG) from the musculus orbicularis oculi. (D) Sketch of the sequence of events for relief- or punishment-learning, using a between-group design. During training, in both groups a control stimulus (e.g., a triangle) is presented temporally removed from shock. In the relief-learning group, a second geometrical stimulus (e.g., a circle) is presented upon cessation of shock (ISI = 6 sec). During the test the startle amplitude, evoked by a loud noise from the earphones and measured by the eye-EMG, is less when subjects are viewing the relief-trained than when viewing the control stimulus. In contrast, in the punishment-learning group the second stimulus (e.g., a square) is presented before shock (ISI = −8 sec). During the test the startle amplitude is higher when subjects are viewing the punishment-trained than when viewing the control stimulus. Note that the experimental role of the geometrical shapes is counterbalanced across subjects. 
(E) Experimental data showing relief- or punishment-memory, dependent on the relative timing of the visual stimulus and shock during training. As in Figure 6, positive conditioned valence (“Good”) is plotted toward the top of the y-axis indicating the degree of startle attenuation; in turn, startle potentiation is plotted toward the bottom of the y-axis in order to display negative conditioned valence (“Bad”) toward the bottom. The sign of the startle modulation is presented as, respectively, negative or positive, because the actual behavior of the subjects consists of less or more startle, respectively. Box plots show the median as the bold middle line, and the 25%/75% and 10%/90% quantiles as box boundaries and whiskers, respectively. Data taken from Andreatta et al. 2010, sample sizes are N = 34 and N = 33 for the punishment- and the relief-learning groups, respectively. (F) After punishment-learning, the learned visual stimulus induces activation of the right amygdala (left panel), but not of the striatum. In contrast, a relief-conditioned visual stimulus induces activation of the right striatum (right panel), but not of the amygdala; striatum activation extends to the ventral striatum/nucleus accumbens. Both the punishment- and the relief-conditioned stimulus induce activation of the left insula as well (not shown). From Andreatta et al. (2012), sample sizes are N = 14 for both the punishment- and the relief-learning groups.

Concerning the circuits underlying punishment-learning in rats (Fig. 7), it is well established that the amygdala plays a critical role (summarized in Maren 2001, Ehrlich et al. 2009, and Pape and Pare 2010). The lateral/basolateral part of the amygdala features as a convergence site for cue and shock processing. Information about the cue is carried by projections from the thalamic geniculate nucleus and the sensory cortices, while information about the shock is carried by projections from posterior intralaminar nuclei of the thalamus and caudal insular cortex. During acquisition of the cue–shock association, long-term potentiation of the synapses from the cortical sensory and thalamic geniculate projections to projection neurons within the lateral/basolateral amygdala is induced, thus effectively potentiating the cue's sensory pathway to the lateral/basolateral amygdala (McKernan and Shinnick-Gallagher 1997; Rogan et al. 1997). Furthermore, it is believed that long-term depression occurs in sensory pathways mediating cues to the lateral/basolateral amygdala whose activity is un- or anti-correlated with shock occurrence (Heinbockel and Pape 2000). Thus, when after training the learned cue is presented alone, projection neurons of the lateral/basolateral amygdala will be activated. These neurons can, in turn, activate projection neurons in the central nucleus of the amygdala. The conditioned behavior is organized via projections from these central amygdala neurons toward the midbrain and the brainstem (Fendt and Fanselow 1999; LeDoux 2000; Maren 2001), i.e., the potentiation of startle takes place by direct and indirect projections from the central amygdala to giant neurons within the caudal pontine reticular nucleus that in turn activate spinal motor neurons (Fendt and Fanselow 1999; Koch 1999).

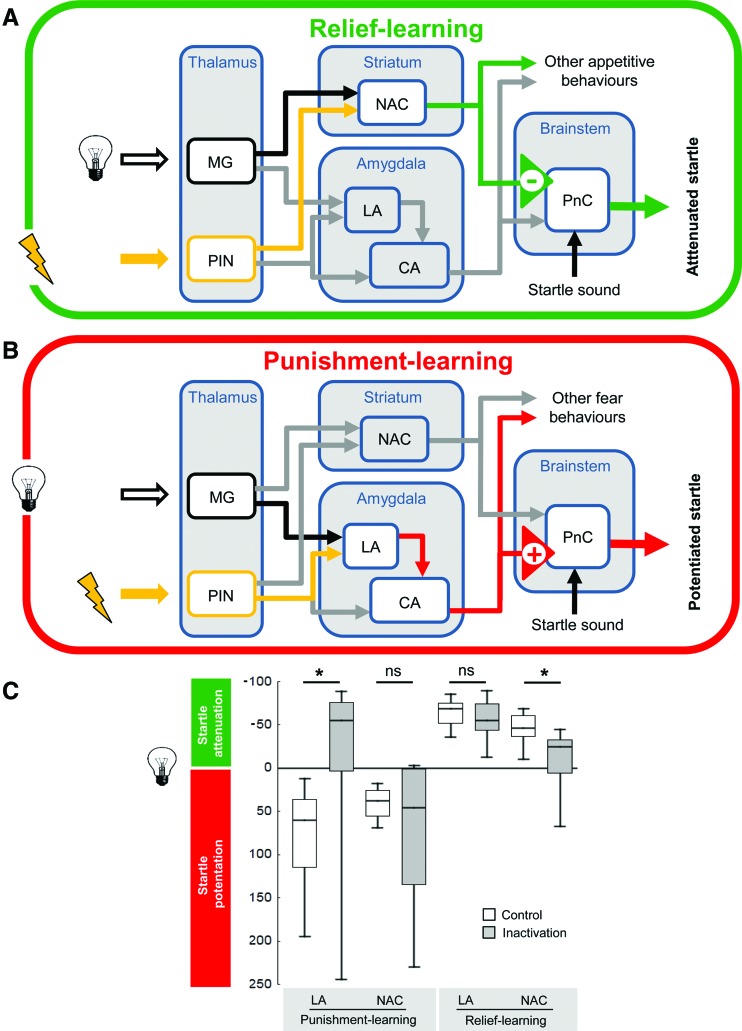

Figure 7.

Simplified working model of relief- and punishment-learning in the rat. (A) During relief-learning, the shock is presented first and the light is presented only afterward. This, we propose, leads to memory trace formation by the coincidence of light processing and internal reinforcement processing in the nucleus accumbens (NAC) of the striatum where some neurons are active upon shock offset. Upon testing with the light stimulus, output from the nucleus accumbens is suggested to impinge upon the pontine reticular nucleus (PnC) in the brainstem to mediate startle attenuation. (B) During punishment-learning, the light is presented first and the shock is presented only afterward. This is known to lead to memory trace formation by the coincidence of light processing and internal reinforcement processing in the lateral amygdala (LA). Upon testing with the light stimulus, output from the lateral amygdala, via the central amygdala (CA), also projects to the pontine reticular nucleus (PnC) in the brainstem, but by a pathway that leads to startle potentiation. (MG) Medial geniculate nucleus, (PIN) posterior intralaminar nuclei. (C) Local transient inactivation, using the GABA-A receptor agonist muscimol, of either the lateral amygdala (LA) or the nucleus accumbens (NAC) during the test for conditioned punishment or conditioned relief. Open plots refer to controls injected with saline. Punishment-learning leads to negative conditioned valence and thus is plotted downward (startle potentiation); relief-learning leads to positive conditioned valence and thus is plotted upward (startle attenuation). Inactivation of the lateral amygdala abolishes punishment-memory but leaves relief-memory intact; in turn, inactivation of the nucleus accumbens leaves punishment-memory intact yet impairs relief-memory. Sample sizes are N = 7–9 per group. Data in C are taken from Andreatta et al. (2012).

Key observations to support this working hypothesis come from pharmacological inactivation or lesions of the lateral amygdala in rats, which robustly block punishment-learning (Hitchcock and Davis 1986; Helmstetter and Bellgowan 1994; Muller et al. 1997). Importantly, the functional integrity of the lateral amygdala is necessary for both establishing and remembering cue–shock associations (Muller et al. 1997). It has further been revealed that long-term potentiation of the thalamic/cortical input to the lateral/basolateral amygdala underlying punishment-learning is NMDA receptor-dependent (Miserendino et al. 1990; Maren et al. 1996) and is controlled by a complex network of GABA-ergic interneurons (summarized in Ehrlich et al. 2009). Activity of these interneurons can be modulated by several neuropeptides as well as by serotonin, noradrenalin, and acetylcholine.

As briefly mentioned above, the amplitude of startle is not only potentiated by learned punishment but can also be attenuated by cues associated with reward. Such conditioned “pleasure-attenuated startle” was first established by Schmid et al. (1995): In their study, a light cue was repeatedly paired with a food reward. After this association was learned, startle amplitude was attenuated by the light cue. This effect can be blocked by lesions of the dopamine neurons within the nucleus accumbens but not by lesions of the amygdala (Koch et al. 1996). This first study on the neural basis of pleasure-attenuated startle suggested that the mesoaccumbal system that is generally crucial for reward-related learning (for reviews, see, e.g., Cardinal and Everitt 2004; Schultz 2013; but see also Bromberg-Martin et al. 2010; Ilango et al. 2012; Lammel et al. 2012) is also important for pleasure-attenuated startle.

Several studies have also demonstrated that the nucleus accumbens is able to modulate punishment-learning. For example, temporary inactivation of the nucleus accumbens by local injections of tetrodotoxin or of the muscarinic agonist carbachol blocks the acquisition and expression of punishment-learning (Schwienbacher et al. 2004, 2006; Cousens et al. 2011). However, manipulating dopamine signaling within the nucleus accumbens has no effect on punishment-learning (Josselyn et al. 2005; Schwienbacher et al. 2005). This is supported by a recent study showing that the GABA-ergic but not the dopaminergic neurons in the ventral tegmental area (projecting to the nucleus accumbens) are activated by punishment (Cohen et al. 2012).

Relief-learning

For the establishment of a relief-learning paradigm on the basis of the startle response in rats (Andreatta et al. 2012), it seemed significant that relief-learning in flies works best with a relatively long gap between electric shock offset and cue onset (5–25 sec) (Tanimoto et al. 2004; Yarali et al. 2008). In the vertebrate literature this kind of procedure has been called “backward-trace” conditioning; for the remainder of this contribution, however, we use the term relief-learning instead, in order to apply a consistent nomenclature across the three species covered. In any case, such a relief-learning type of procedure has been employed relatively rarely: Often punishment and cue have a coincident onset, but the cue outlasts the punishment by a long time (Siegel and Domjan 1974; Walasek et al. 1995), or a rather short cue is presented during or after a rather long aversive stimulus (Heth and Rescorla 1973). Also, the question investigated has mostly been whether such a relief-learning type of procedure leads to less strong learning than punishment-learning. However, there have been a few studies directly suggesting a positive valence of the learned cue after relief-learning types of procedure: Walasek and colleagues (1995) used a 1-sec shock and a 1-min cue which had simultaneous onsets such that the cue outlasted the punishment by 59 sec. In a subsequent test session, the authors observed an increase in bar pressing for food during the presence of the cue (compared with bar pressing under baseline conditions), which was interpreted as “conditioned safety” (see Box 1). In contrast, punishment-learning (i.e., presentation of the 1-sec shock at the end of the 1-min cue) in this paradigm resulted in a strong suppression of bar pressing.
This suggests that in rats, too, changes in the relative timing of cue and punishment do more than affect whether and how much learning occurs; rather, they can affect the valence of the respective mnemonic effect (for a related finding see also Smith and Buchanan 1954). A different approach was used by Navratilova et al. (2012): The authors investigated tonic post-surgical pain and induced relief by pharmacological treatment of that pain. Using a place preference/avoidance apparatus, such treatment was paired with one compartment of the apparatus whereas the other compartment was paired with a placebo treatment. In a subsequent test session, the animals preferred the compartment that had been paired with the pain-relief treatment.

Andreatta et al. (2012) decided to use the modulation of the startle response as a behavioral measure to compare punishment- and relief-learning because it can be modulated bivalently (Koch 1999); that is, negatively valenced cues, established by punishment-learning, increase startle amplitude (Brown et al. 1951), whereas positively valenced cues, established by cue–reward associative training, decrease startle amplitude (Schmid et al. 1995). The relief-learning protocol was matched closely to the established punishment-learning protocol (i.e., 15 pairings of a 5-sec light and a 0.5-sec, 0.8-mA electric foot shock), and only the inter-stimulus interval (ISI, defined as the time interval from shock onset to light onset) was varied (Fig. 6): Different groups of rats received a relief-learning protocol such that the onset of the electric shock preceded the onset of the 5-sec cue (denoted as positive ISIs: 3, 6, 12 sec). In addition, groups were included that underwent punishment-learning with a delay procedure (ISI: −4.5 sec) or a trace procedure (ISI: −12 sec). Last, but not least, control groups received either the cue but no electric shock at all, or cue and electric shock at randomized ISIs. In these control groups it was observed that, relative to the startle-alone testing condition, startle is slightly decreased when the cue is present (in Fig. 6, bottom right, the stippled line corresponds to the mean across these control groups and is referred to as the “baseline” in the following). Interpretations of this baseline level might be that it represents an unconditioned distraction effect of the light stimulus on startle, and/or that a mild degree of conditioned safety was induced. In any event, as the cue is moved in time toward shock onset, startle amplitude increases (Fig. 6). As the cue is moved past the shock, however, this effect is reversed and startle amplitude decreases.
Importantly, for even longer gaps between shock and cue, startle amplitude returns to baseline levels (Andreatta et al. 2012). Thus, with respect to startle modulation as a measure, the cue has acquired negative conditioned valence with an ISI of −4.5 sec, but positive conditioned valence with an ISI of 3 sec; we refer to these effects as punishment- and relief-memory, respectively.
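The sign convention of the ISI can be made concrete with a small sketch. It is an illustration only, not part of the procedures of Andreatta et al. (2012): the stimulus durations follow the protocol described above (5-sec light, 0.5-sec shock), whereas the function names, the labels, and the choice to treat a light that still overlaps or touches the shock as a "delay" arrangement are assumptions made here. The sketch describes stimulus timing only; whether a given timing actually yields relief- or punishment-memory is an empirical matter.

```python
# Illustrative sketch only. Durations follow the protocol described in the text;
# all function names and labels are hypothetical.
LIGHT_DURATION_S = 5.0
SHOCK_DURATION_S = 0.5


def stimulus_windows(isi_s):
    """Return (light_on, light_off, shock_on, shock_off), with shock onset at t = 0.

    The ISI is the interval from shock onset to light onset, so a positive
    ISI means that the light starts after the shock has started.
    """
    return isi_s, isi_s + LIGHT_DURATION_S, 0.0, SHOCK_DURATION_S


def classify_timing(isi_s):
    """Label the temporal arrangement of light and shock for a given ISI."""
    light_on, light_off, shock_on, shock_off = stimulus_windows(isi_s)
    if light_on >= shock_off:
        # Light starts only once the shock is over.
        return "relief-type"
    if light_off < shock_on:
        # Light ends before the shock starts: a stimulus-free gap remains.
        return "punishment-type (trace)"
    if light_on < shock_on:
        # Light starts first and lasts at least until shock onset (assumed convention).
        return "punishment-type (delay)"
    # Light onset falls within the shock; this case is not covered in the text.
    return "light onset during shock"


def gap_shock_offset_to_light_onset(isi_s):
    """Seconds between shock offset and light onset (negative if the light starts earlier)."""
    light_on, _, _, shock_off = stimulus_windows(isi_s)
    return light_on - shock_off


if __name__ == "__main__":
    for isi in (-12.0, -4.5, 3.0, 6.0, 12.0):
        print(isi, classify_timing(isi), gap_shock_offset_to_light_onset(isi))
```

Under these assumptions, an ISI of −4.5 sec means that the 5-sec light ends together with the 0.5-sec shock, whereas an ISI of 3 sec means that the light begins 2.5 sec after shock offset.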

Nucleus accumbens and amygdala are respectively required for relief- and punishment-memory

Given that relief-learning in a startle paradigm can be demonstrated in the rat, Andreatta et al. (2012) asked what its neuronal underpinnings are. A way to probe whether a brain structure is acutely required for a particular behavior is by temporarily inactivating it. This can be done by optogenetic tools (for the rat, see Zalocusky and Deisseroth 2013), or by local microinjections of drugs that suppress neuronal firing. A suitable drug for this purpose is the GABA-A receptor agonist muscimol; local muscimol injections have previously been applied to a number of different brain structures and behaviors (e.g., Fendt et al. 2003; Schulz et al. 2004; Müller and Fendt 2006). Notably, these local injections silence neural activity quickly, but their effects are remarkably transient (∼120 min) (Martin 1991).

Given that relief-learning, just like reward-learning, manifests itself as a decrease in startle in the presence of the learned cue, it seemed plausible that brain structures concerned with reward-learning are required for relief-learning as well. The usual first suspect here is the nucleus accumbens, because, like its human terminological counterpart, the ventral striatum (which, however, includes the olfactory tubercle), it is a critical brain structure for organizing learning and behavior in the appetitive domain (Ikemoto and Panksepp 1996, 1999; Berridge and Robinson 1998; Cardinal and Everitt 2004; Schultz 2013). Indeed, the decrease in startle amplitude supported by a reward-associated cue (Schmid et al. 1995) can be blocked by lesions of the nucleus accumbens (Koch et al. 1996).

To test for a role of the nucleus accumbens in relief-memory, cannulas aiming at the respective region were chronically and bilaterally implanted. After recovering from surgery, the animals underwent the relief-learning procedure, without any injections. One day later, muscimol was injected to acutely inactivate the nucleus accumbens, and the ability of the learned cue to modulate the startle response was tested. It turned out that under such accumbal inactivation the learned cue does not support startle attenuation beyond baseline (see Fig. 7). In contrast, silencing the nucleus accumbens did not prevent startle potentiation after punishment-learning. In turn, performance after punishment-learning was abolished by silencing the lateral/basolateral amygdala, a procedure which leaves the performance after relief-learning unaffected. Thus, there is a double dissociation between the requirement of the nucleus accumbens and lateral/basolateral amygdala for memory after relief-learning or after punishment-learning, respectively (Andreatta et al. 2012). Notably, the “signature” of relief-memory thus corresponds to that of reward-memory.

It is important to note that the above experiment specifically tested for effects on the expression of memory, not for effects on the acquisition process. Thus, the reviewed findings raise the question of the role of the nucleus accumbens during training, i.e., during the acquisition of relief-memory, as well as of the task-relevant pathways from the nucleus accumbens onto the startle pathway, potentially including the ventral pallidum and/or the pedunculopontine tegmental nucleus (Koch 1999). A perhaps more immediate question was whether similar neuronal dissociations of punishment- and relief-memory with regard to amygdala and nucleus accumbens are found in humans as well.

Humans

Punishment- and reward-learning