Abstract

A major challenge in single particle reconstruction from cryo-electron microscopy is to establish a reliable ab initio three-dimensional model using two-dimensional projection images with unknown orientations. Common-lines–based methods estimate the orientations without additional geometric information. However, such methods fail when the detection rate of common-lines is too low due to the high level of noise in the images. An approximation to the least squares global self-consistency error was obtained in [A. Singer and Y. Shkolnisky, SIAM J. Imaging Sci., 4 (2011), pp. 543–572] using convex relaxation by semidefinite programming. In this paper we introduce a more robust global self-consistency error and show that the corresponding optimization problem can be solved via semidefinite relaxation. In order to prevent artificial clustering of the estimated viewing directions, we further introduce a spectral norm term that is added as a constraint or as a regularization term to the relaxed minimization problem. The resulting problems are solved using either the alternating direction method of multipliers or an iteratively reweighted least squares procedure. Numerical experiments with both simulated and real images demonstrate that the proposed methods significantly reduce the orientation estimation error when the detection rate of common-lines is low.

Keywords: angular reconstitution, cryo-electron microscopy, single particle reconstruction, common-lines, least unsquared deviations, semidefinite relaxation, alternating direction method of multipliers, iteratively reweighted least squares

1. Introduction

Single particle reconstruction (SPR) from cryo-electron microscopy (cryo-EM) [12, 57] is an emerging technique for determining the three-dimensional (3D) structure of macromolecules. One of the main challenges in SPR is to attain a resolution of 4Å or better, thereby allowing interpretation of atomic coordinates of macromolecular maps [14, 60]. Although X-ray crystallography and NMR spectroscopy can achieve higher resolution levels (~ 1Å by X-ray crystallography and 2–5Å by NMR spectroscopy), these traditional methods are often limited to relatively small molecules. In contrast, cryo-EM is typically applied to large molecules or assemblies with size ranging from 10 to 150 nm, such as ribosomes [13], protein complexes, and viruses.

Cryo-EM is used to acquire 2D projection images of thousands of individual, identical frozen-hydrated macromolecules at random unknown orientations and positions. The collected images are extremely noisy due to the limited electron dose used for imaging to avoid excessive beam damage. A 3D electron density map can be reconstructed computationally from the 2D projection images. However, a broad computational framework is required for the reconstruction.

1.1. The algorithmic pipeline of SPR

The main steps of a full SPR procedure can be summarized as follows:

1. Particle selection: The individual molecules are detected and extracted from the micrograph by image segmentation.

2. Class averaging: Images with similar projection directions are averaged together to improve their signal-to-noise ratio (SNR).

3. Orientation estimation: This is done via the random-conical tilt technique, or common-lines–based approaches, or reprojection. This step may also include a shift estimation.

4. Reconstruction: A 3D volume is generated by a tomographic inversion algorithm.

5. Iterative refinement: The whole procedure is repeated from step 3, using the outcome of the most recent reconstruction until convergence.

Numerous technical and computational advances have contributed to the different processing steps in SPR. First, the particles can be automatically selected from micrographs using algorithms based on template matching, computer vision, neural network, or other approaches [67]. Then multivariate statistical data compression [22, 56] and classification techniques [54, 34, 49] can be used to sort and partition the large set of particle images by their viewing directions, producing “class averages” of enhanced SNR. After class averaging, an ab initio estimation of the pose parameters can be obtained using the random-conical tilt technique [40] or common-lines–based approaches [55, 47, 48]. Using the ab initio estimation of the pose parameters, a preliminary 3D map is reconstructed from the projection images by a tomographic inversion algorithm. The tomographic inversion is computed either by applying a weighted adjoint operator [40, 39, 19, 35] or by using an iterative approach for inversion [17, 28, 15]. We refer to the preliminary 3D map as the ab initio model or simply initial model. The initial model is then iteratively refined [33] in order to obtain a higher-resolution 3D reconstruction. In each iteration of the refinement process, the current 3D model is projected at several pre-chosen viewing directions, and the resulting reprojection images are matched with the particle images, giving rise to new estimates of their pose parameters. The new pose parameters are then used to produce a refined 3D model using a 3D reconstruction algorithm. This process is repeated for several iterations until convergence is achieved.

In this paper, we address the problem of orientation estimation using common-lines between cryo-EM images in order to obtain an improved initial model. This is a major challenge in SPR due to the low SNR of the raw images, especially for small particles.

1.2. Orientation determination using common-lines between images

The Fourier projection-slice theorem (see, e.g., [31]) plays a fundamental role in common-lines–based reconstruction methods. The theorem states that restricting the 3D Fourier transform of the volume to a planar central slice yields the Fourier transform of a 2D projection of the volume in a direction perpendicular to the slice (Figure 1). Thus, any two projections imaged from nonparallel viewing directions intersect at a line in Fourier space, which is called the common-line between the two images. The common-lines between any three images with linearly independent projection directions determine their relative orientation up to handedness. This is the basis of the “angular reconstitution” technique of van Heel [55], which was also developed independently by Vainshtein and Goncharov [53]. In this technique, the orientations of additional projections are determined in a sequential manner. Farrow and Ottensmeyer [10] used quaternions to obtain the relative orientation of a new projection in a least squares sense. The main problem with such techniques is that they are sensitive to false detection of common-lines, which leads to the accumulation of errors. Penczek, Zhu, and Frank [37] tried to obtain the rotations corresponding to all projections simultaneously by minimizing a global energy functional, which requires a brute force search in an exponentially large parametric space of all possible orientations for all projections. Mallick et al. [26] and Singer et al. [47] applied Bayesian approaches to use common-lines information from different groups of projections. Recently, Singer and Shkolnisky [48] developed two algorithms based on eigenvectors and semidefinite programming for estimating the orientations of all images. These two algorithms correspond to convex relaxations of the global self-consistency error minimization and can accurately estimate all orientations at relatively low common-line detection rates.

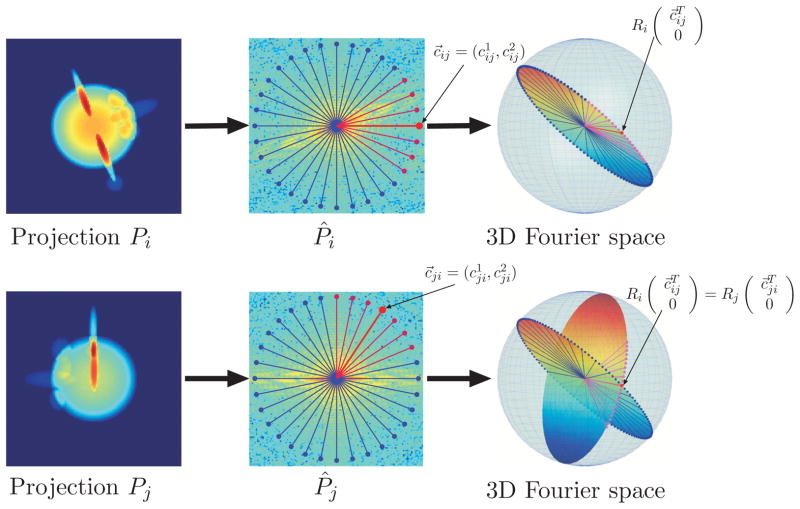

Figure 1.

Fourier projection-slice theorem. In the middle, P̂i is the 2D Fourier transform of projection Pi (left) computed on a polar grid. c⃗ij is a unit vector representing the direction of the common-line (indicated in red) between P̂i and P̂j on P̂i. On the right, the two transformed images P̂i and P̂j intersect with each other at the common-line after rotations Ri and Rj, yielding (3.3), where Ri and Rj represent the orientations of the projections Pi and Pj, respectively.
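As a minimal numerical illustration of the theorem in its discrete form, the sketch below projects a random volume along one grid axis and compares the 2D DFT of the projection with the corresponding central slice of the 3D DFT. The toy volume, the grid size, and the choice of the z-axis as the projection direction are assumptions made for illustration only; real cryo-EM projections are taken along arbitrary directions and require interpolation.

```python
import numpy as np

rng = np.random.default_rng(0)
volume = rng.standard_normal((16, 16, 16))  # toy "density" on a 16^3 grid

# Project along the z-axis (axis 2) to obtain a 2D image.
projection = volume.sum(axis=2)

# 2D DFT of the projection versus the k_z = 0 central slice of the 3D DFT.
projection_ft = np.fft.fft2(projection)
central_slice = np.fft.fftn(volume)[:, :, 0]

# Largest entrywise discrepancy between the two (floating-point noise only).
slice_error = np.max(np.abs(projection_ft - central_slice))
```

For an axis-aligned projection the identity is exact for the DFT, so `slice_error` is at the level of rounding error.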

When the SNR of the images is significantly low, the detected common-lines consist of a modest number of noisy inliers, which are explained well by the image orientations, along with a large number of outliers, which have no structure. The standard common-lines–based methods, including those using least squares (LS) [10, 48], are sensitive to these outliers. In this paper we estimate the orientations using a different, more robust self-consistency error, which is the sum of unsquared residuals [32, 51] rather than the sum of squared residuals of the LS formulation. Convex relaxations of least unsquared deviations (LUD) have recently been proposed for other applications, such as robust principal component analysis [23, 64] and robust synchronization of orthogonal transformations [61]. Under certain noise models for the distribution of the outliers (e.g., the haystack model of [23, 64]), such convex relaxations enjoy proven guarantees for exact and stable recovery with high probability. Such theoretical and empirical improvements that LUD brings compared to LS serve as the main motivation for considering in this paper the application of LUD to the problem of orientation estimation from common-lines in SPR.

The LUD minimization problem is solved here via semidefinite relaxation. When the detection rate of common-lines is extremely low, the estimated viewing directions of the projection images are observed to cluster together (see Figure 4). This artificial clustering can be explained by the fact that images that share the same viewing direction also share more than one common-line (see more details in section 5). In order to mitigate this spurious clustering of the estimated viewing directions, we add to the minimization formulation a spectral norm term, either as a constraint or as a regularization term. The resulting minimization problem is solved by the alternating direction method of multipliers (ADMM). We also consider the application of the iteratively reweighted least squares (IRLS) procedure, which is not guaranteed to converge to the global minimizer but performs well in our numerical experiments. We demonstrate that the ab initio models resulting from our new methods are more accurate and require fewer refinement iterations compared to LS-based methods.

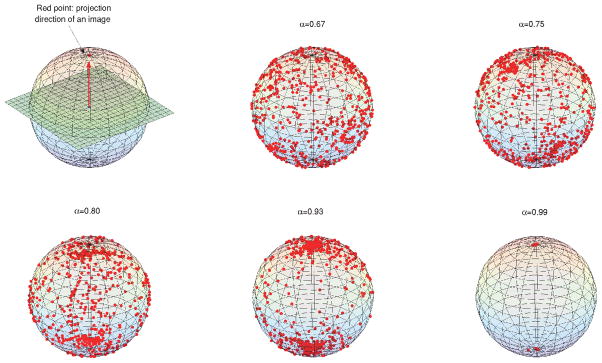

Figure 4.

The dependency of the spectral norm of G (denoted as αK here) on the distribution of orientations of the images. Each red point denotes the viewing direction of a projection. Five different distributions of viewing directions of K = 500 projections are plotted on spheres. The larger the spectral norm αK is, the more clustered the viewing directions are.

The paper is organized as follows. In section 2 we review the detection procedure of common-lines between images. Section 3 presents the LS and LUD global self-consistency cost functions. Section 4 introduces the semidefinite relaxation and rounding procedure for the LUD formulation. The additional spectral norm constraint is considered in section 5. The ADMM method for obtaining the global minimizer is detailed in section 6, and the IRLS procedure is described in section 7. Numerical results for both simulated and real data are provided in section 8. Finally, section 9 is a summary with discussion.

2. Detection of common-lines between images

Typically, the first step for detecting common-lines is to compute the 2D Fourier transform of each image on a polar grid using, e.g., the nonuniform fast Fourier transform (NUFFT) [9, 11, 18]. The transformed images have resolution nr in the radial direction and resolution nθ in the angular direction; that is, the radial resolution nr is the number of equispaced samples along each ray in the radial direction, and the angular resolution nθ is the number of angularly evenly distributed Fourier rays computed for each image (Figure 1). For simplicity, we let nθ be an even number. The transformed images are denoted by $(\vec{l}^k_0, \vec{l}^k_1, \ldots, \vec{l}^k_{n_\theta-1})$, where $\vec{l}^k_m = (l^k_{m,1}, l^k_{m,2}, \ldots, l^k_{m,n_r})$ is an nr-dimensional vector, m ∈ {0, 1, …, nθ − 1} is the index of a ray, k ∈ {1, 2, …, K} is the index of an image, and K is the number of images. The zero frequency term is shared by all lines independently of the image and is therefore excluded from comparison.

To determine the common-line between two images Pi and Pj, the similarity between all nθ radial lines from the first image and all nθ radial lines from the second image is measured (overall, $n_\theta^2$ comparisons), and the pair of radial lines $\vec{l}^i_{m_{i,j}}$ and $\vec{l}^j_{m_{j,i}}$ with the highest similarity is declared as the common-line pair between the two images. However, as a radial line is the complex conjugate of its antipodal line, the similarity measure between $\vec{l}^i_{m_1}$ and $\vec{l}^j_{m_2}$ has the same value as that between their antipodal lines $\vec{l}^i_{m_1+n_\theta/2}$ and $\vec{l}^j_{m_2+n_\theta/2}$ (where addition of indices is taken modulo nθ). Thus the number of distinct similarity measures that need to be computed is $n_\theta^2/2$, obtained by restricting the index m1 to take values between 0 and nθ/2 and letting m2 take any of the nθ possibilities (see also [55] and [36, p. 255]).

Equivalently, it is possible to compare real-valued 1D line projections of the 2D projection images, instead of comparing radial Fourier lines that are complex-valued. According to the Fourier projection-slice theorem, each 1D projection is obtained by the inverse Fourier transform of the corresponding Fourier radial line $\vec{l}^k_m$ and its antipodal line $\vec{l}^k_{m+n_\theta/2}$. The 1D projection lines of a cryo-EM image can be displayed as a 2D image known as a “sinogram” (see [55, 45]).

Traditionally, the pair of radial lines (or sinogram lines) that has the maximum normalized cross correlation is declared as the common line, that is,

$$(m_{i,j}, m_{j,i}) = \underset{0 \le m_1 < n_\theta/2,\ 0 \le m_2 < n_\theta}{\arg\max}\ \frac{\langle \vec{l}^i_{m_1}, \vec{l}^j_{m_2} \rangle}{\|\vec{l}^i_{m_1}\| \, \|\vec{l}^j_{m_2}\|} \quad \text{for all } i \neq j, \tag{2.1}$$

where mi,j is a discrete estimate for where the jth image intersects with the ith image. In practice, a weighted correlation, which is equivalent to applying a combination of high-pass and low-pass filters, is used to determine proximity. As noted in [55], the normalization is performed so that the correlation coefficient becomes a more reliable measure of similarity between radial lines. Note that, even with clean images, this estimate will have a small deviation from its ground truth (unknown) value due to discretization errors. With noisy images, large deviations of the estimates from their true values (say, errors of more than 10°) are frequent, and their frequency increases with the level of noise. We refer to common lines whose mi,j and mj,i values were estimated accurately (up to the discretization error tolerance 10°) as “correctly detected” common lines, or “inliers,” and to the remaining common lines as “falsely detected” or “outliers.”
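The exhaustive search described in this section can be sketched as follows. This is an illustrative sketch only, not the implementation used for the experiments: the function name `detect_common_line`, the array layout (one Fourier ray per row), and the use of the real part of the Hermitian inner product as the correlation are assumptions, and the weighted (filtered) correlation mentioned above is omitted.

```python
import numpy as np

def detect_common_line(rays_i, rays_j):
    """Return (m_ij, m_ji) maximizing the normalized correlation of Fourier rays.

    rays_i, rays_j: complex arrays of shape (n_theta, n_r); row m holds the
    samples of radial line m, with the zero-frequency term already excluded.
    """
    n_theta = rays_i.shape[0]
    unit_i = rays_i / np.linalg.norm(rays_i, axis=1, keepdims=True)
    unit_j = rays_j / np.linalg.norm(rays_j, axis=1, keepdims=True)
    # Antipodal rays are complex conjugates, so m1 only needs to cover half
    # of the rays of image i; m2 ranges over all rays of image j.
    corr = np.real(unit_i[: n_theta // 2] @ unit_j.conj().T)
    m1, m2 = np.unravel_index(np.argmax(corr), corr.shape)
    return int(m1), int(m2)

# Synthetic check: two sets of random rays with one planted shared line.
rng = np.random.default_rng(1)
n_theta, n_r = 36, 50

def random_rays():
    half = rng.standard_normal((n_theta // 2, n_r)) \
        + 1j * rng.standard_normal((n_theta // 2, n_r))
    return np.vstack([half, half.conj()])  # enforce antipodal conjugacy

rays_a, rays_b = random_rays(), random_rays()
shared = rng.standard_normal(n_r) + 1j * rng.standard_normal(n_r)
rays_a[5], rays_a[5 + n_theta // 2] = shared, shared.conj()
rays_b[25], rays_b[(25 + n_theta // 2) % n_theta] = shared, shared.conj()

detected = detect_common_line(rays_a, rays_b)
```

In this noiseless toy setting the planted pair has correlation exactly 1, so the search recovers it; with noisy images the maximizer is frequently an outlier, which is the situation the rest of the paper addresses.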

A simple inliers-outliers model was introduced and analyzed in [48, sections 5.1 and 7]. That model assumes that each common-line pair is an inlier with probability p, independent of the remaining common-lines, while the directions of the outliers are uniformly distributed. It was noted, however, already in [48, p. 560] that this model is too simplistic as it assumes independence while there must be dependency: The number of common-line pairs is O(K²), and since there are only O(Knθ) radial lines, there must be dependency among the pairs for sufficiently large K. It is therefore difficult to provide a theoretical analysis for the evaluation of the practical performance of the different common-lines–based orientation determination algorithms.

3. Weighted LS and LUD

We define the directions of detected common-lines between the Fourier transformed image P̂i and the Fourier transformed image P̂j as the following unit vectors (Figure 1):

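$$\vec{c}_{ij} = (c_{ij}^1, c_{ij}^2) = \left(\cos(2\pi m_{ij}/n_\theta),\ \sin(2\pi m_{ij}/n_\theta)\right), \tag{3.1}$$

$$\vec{c}_{ji} = (c_{ji}^1, c_{ji}^2) = \left(\cos(2\pi m_{ji}/n_\theta),\ \sin(2\pi m_{ji}/n_\theta)\right), \tag{3.2}$$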

where c⃗ij and c⃗ji are on the Fourier transformed images P̂i and P̂j, respectively, and mij and mji are discrete estimates for the common lines’ positions using (2.1). Let the rotation matrices Ri ∈ SO(3), i = 1, …, K, represent the orientations of the K images. According to the Fourier projection-slice theorem (Figure 1), the common lines on every two images should be the same after the 2D Fourier transformed images are inserted in the 3D Fourier space using the corresponding rotation matrices; that is,

$$R_i(\vec{c}_{ij}, 0)^T = R_j(\vec{c}_{ji}, 0)^T \quad \text{for } 1 \le i < j \le K. \tag{3.3}$$

These can be viewed as linear equations for the 6K variables corresponding to the first two columns of the rotation matrices. (The third column of each rotation matrix does not contribute in (3.3) since it is multiplied by the zero third entries in (c⃗ij, 0) and (c⃗ji, 0).) The weighted LS approach for solving this system can be formulated as the minimization problem

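$$\min_{R_1, \ldots, R_K \in SO(3)} \sum_{i \neq j} w_{ij} \left\| R_i(\vec{c}_{ij}, 0)^T - R_j(\vec{c}_{ji}, 0)^T \right\|^2, \tag{3.4}$$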

where the weights wij indicate the confidence in the detections of common-lines between pairs of images. Since (c⃗ij, 0)T and (c⃗ji, 0)T are 3D unit vectors, their rotations are also unit vectors; that is, ||Ri(c⃗ij, 0)T|| = ||Rj(c⃗ji, 0)T|| = 1. It follows that the minimization problem (3.4) is equivalent to the maximization problem of the sum of dot products:

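$$\max_{R_1, \ldots, R_K \in SO(3)} \sum_{i \neq j} w_{ij} \left\langle R_i(\vec{c}_{ij}, 0)^T,\ R_j(\vec{c}_{ji}, 0)^T \right\rangle. \tag{3.5}$$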

When the weight wij = 1 for each pair i ≠ j, (3.5) is equivalent to the LS problem that was considered in [37], and more recently in [48] using convex relaxation of the nonconvex constraint set. The solution to the LS problem may not be optimal due to the typically large proportion of outliers (Figure 2).
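The equivalence between (3.4) and (3.5) can be checked in one line. Writing $a_{ij} = R_i(\vec{c}_{ij}, 0)^T$ and $b_{ij} = R_j(\vec{c}_{ji}, 0)^T$ (shorthand introduced here for illustration only), both of which are unit vectors, we have

$$\|a_{ij} - b_{ij}\|^2 = \|a_{ij}\|^2 + \|b_{ij}\|^2 - 2\langle a_{ij}, b_{ij} \rangle = 2 - 2\langle a_{ij}, b_{ij} \rangle,$$

so that

$$\sum_{i \neq j} w_{ij} \|a_{ij} - b_{ij}\|^2 = 2\sum_{i \neq j} w_{ij} - 2\sum_{i \neq j} w_{ij} \langle a_{ij}, b_{ij} \rangle.$$

Assuming the weights are fixed in advance, the first term on the right is a constant, and minimizing the left-hand side is the same as maximizing the weighted sum of inner products.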

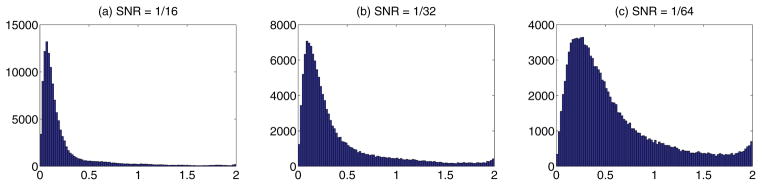

Figure 2.

The histogram plots of errors in the detected common-lines c⃗ij for all i and j, i.e., ||Ri(c⃗ij, 0)T − Rj(c⃗ji, 0)T||, where Ri is the true rotation matrix for all i. The fat tail in (c) indicates that the detected common-lines contain a large number of outliers. The cryo-EM images used for common-line detection are shown in Figure 3.

To guard the estimation of the orientations from outliers, we replace the sum of weighted squared residuals in (3.4) with the more robust sum of unsquared residuals and obtain

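$$\min_{R_1, \ldots, R_K \in SO(3)} \sum_{i \neq j} \left\| R_i(\vec{c}_{ij}, 0)^T - R_j(\vec{c}_{ji}, 0)^T \right\|, \tag{3.6}$$

or equivalently,

$$\min_{R_1, \ldots, R_K \in SO(3)} \sum_{i \neq j} \left\| (\vec{c}_{ij}, 0)^T - R_i^T R_j(\vec{c}_{ji}, 0)^T \right\|. \tag{3.7}$$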

We refer to the minimization problem (3.6) as the least unsquared deviation (LUD) problem. The self-consistency error given in (3.6) reduces the contribution from large residuals that may result from outliers. We note that it is also possible to consider the weighted version of (3.6), namely,

$$\min_{R_1, \ldots, R_K \in SO(3)} \sum_{i \neq j} w_{ij} \left\| R_i(\vec{c}_{ij}, 0)^T - R_j(\vec{c}_{ji}, 0)^T \right\|.$$

For simplicity, we focus here on the unweighted version.
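To make the difference between the two self-consistency errors concrete, the following sketch evaluates the unweighted LS and LUD costs for a given set of rotations and detected common-lines. The function names and the dictionary layout for the common-lines are hypothetical choices made for this illustration.

```python
import numpy as np

def lift(c):
    """Embed a 2D common-line direction c as the 3D vector (c, 0)."""
    return np.array([c[0], c[1], 0.0])

def self_consistency_costs(rotations, common_lines):
    """Return (LS cost, LUD cost) summed over the given pairs.

    rotations:    list of 3x3 rotation matrices R_i.
    common_lines: dict {(i, j): (c_ij, c_ji)} of unit 2-vectors.
    """
    ls_cost, lud_cost = 0.0, 0.0
    for (i, j), (c_ij, c_ji) in common_lines.items():
        residual = np.linalg.norm(
            rotations[i] @ lift(c_ij) - rotations[j] @ lift(c_ji)
        )
        ls_cost += residual ** 2   # squared residual (LS)
        lud_cost += residual       # unsquared residual (LUD)
    return ls_cost, lud_cost

# One consistent pair and one worst-case outlier (antipodal direction).
identity = np.eye(3)
lines = {
    (0, 1): ((1.0, 0.0), (1.0, 0.0)),    # residual 0
    (0, 2): ((1.0, 0.0), (-1.0, 0.0)),   # residual 2
}
ls_cost, lud_cost = self_consistency_costs([identity] * 3, lines)
```

The single outlier contributes 4 to the LS cost but only 2 to the LUD cost, which is the sense in which the unsquared error reduces the influence of large residuals.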

4. Semidefinite programming relaxation (SDR) and the rounding procedure

Both the weighted LS problem (3.4) and the LUD problem (3.6) are nonconvex and therefore extremely difficult to solve if one requires the matrices Ri to be rotations, that is, when adding the constraints

$$R_i R_i^T = I_3, \quad \det(R_i) = 1, \quad i = 1, \ldots, K, \tag{4.1}$$

where I3 is the 3 × 3 identity matrix. A relaxation method that neglects the constraints (4.1) will simply collapse to the trivial solution R1 = ··· = RK = 0, which obviously does not satisfy the constraint (4.1).

The relaxation in [48] that uses semidefinite programming (SDP) can be modified in a straightforward manner in order to deal with nonunity weights wij in (3.5). We present this modification here for three reasons. First, the weighted version is required by the IRLS procedure (see section 7). Second, the rounding procedure after the SDP employed here is slightly different than the deterministic rounding procedure presented in [48] and is closer in spirit to the randomized rounding procedure of Goemans and Williamson for the MAX-CUT problem [16]. Finally, in the appendix we prove exact recovery of the rotations by the SDR procedure when the detected common-lines are all correct.

4.1. Constructing the Gram matrix G from the rotations Ri

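We denote the columns of the rotation matrix Ri by $R_i^1$, $R_i^2$, and $R_i^3$, and write the rotation matrices as

$$R_i = \begin{pmatrix} R_i^1 & R_i^2 & R_i^3 \end{pmatrix}, \quad i = 1, \ldots, K.$$

We define a 3 × 2K matrix R by concatenating the first two columns of all rotation matrices:

$$R = \begin{pmatrix} R_1^1 & R_1^2 & \cdots & R_K^1 & R_K^2 \end{pmatrix}. \tag{4.2}$$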

The Gram matrix G for the matrix R is a 2K × 2K matrix of inner products between the 3D column vectors of R; that is,

$$G = R^T R. \tag{4.3}$$

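Clearly, G is a rank-3 positive semidefinite matrix (G ≽ 0), which can be conveniently written as a block matrix

$$G = (G_{ij})_{i,j=1,\ldots,K} = \begin{pmatrix} G_{11} & G_{12} & \cdots & G_{1K} \\ G_{21} & G_{22} & \cdots & G_{2K} \\ \vdots & \vdots & \ddots & \vdots \\ G_{K1} & G_{K2} & \cdots & G_{KK} \end{pmatrix},$$

where Gij is the 2 × 2 upper left block of the rotation matrix $R_i^T R_j$, that is,

$$G_{ij} = \begin{pmatrix} (R_i^1)^T \\ (R_i^2)^T \end{pmatrix} \begin{pmatrix} R_j^1 & R_j^2 \end{pmatrix}.$$

In addition, the orthogonality of the rotation matrices ($R_i^T R_i = I_3$) implies that

$$G_{ii} = I_2, \quad i = 1, \ldots, K, \tag{4.4}$$

where I2 is the 2 × 2 identity matrix.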
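The properties of G stated above can be verified numerically. The sketch below is illustrative only: the helper names are hypothetical, and the rotations are drawn at random (QR factorization of a Gaussian matrix, with a sign fix and a column flip to obtain determinant +1) purely to have test data.

```python
import numpy as np

def random_rotation(rng):
    """Draw a rotation from the uniform (Haar) distribution on SO(3)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))   # sign fix makes q Haar-distributed on O(3)
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]        # flip one column so that det(q) = +1
    return q

def gram_matrix(rotations):
    """Stack the first two columns of every rotation and form G = R^T R."""
    R = np.hstack([rot[:, :2] for rot in rotations])  # shape (3, 2K)
    return R.T @ R                                    # shape (2K, 2K)

rng = np.random.default_rng(0)
K = 5
G = gram_matrix([random_rotation(rng) for _ in range(K)])
eigenvalues = np.linalg.eigvalsh(G)  # ascending order
```

Only three eigenvalues of `G` are nonzero, all eigenvalues are nonnegative up to rounding, and every diagonal 2 × 2 block is the identity, in agreement with the rank-3, positive semidefinite, and block-diagonal properties used in the relaxation.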

4.2. SDR for weighted LS

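We first define two 2K × 2K matrices S = (Sij)i,j=1,…,K and W = (Wij)i,j=1,…,K, where the 2 × 2 subblocks Sij and Wij are given by

$$S_{ij} = \vec{c}_{ij}^{\,T} \vec{c}_{ji}$$

and

$$W_{ij} = w_{ij} \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}$$

for i ≠ j, with the diagonal blocks Sii set to zero. Both matrices S and W are symmetric, and they store all available common-line information and weight information, respectively. It follows that the objective function (3.5) is the trace of the matrix (W ∘ S) G:

$$\sum_{i \neq j} w_{ij} \left\langle R_i(\vec{c}_{ij}, 0)^T,\ R_j(\vec{c}_{ji}, 0)^T \right\rangle = \operatorname{trace}\left((W \circ S)\, G\right), \tag{4.5}$$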

where the symbol ∘ denotes the Hadamard product between two matrices. A natural relaxation of the optimization problem (3.5) is thus given by the SDP problem

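$$\max_{G \in \mathbb{R}^{2K \times 2K}} \operatorname{trace}\left((W \circ S)\, G\right) \tag{4.6}$$

$$\text{s.t. } G_{ii} = I_2, \quad i = 1, 2, \ldots, K, \tag{4.7}$$

$$G \succeq 0. \tag{4.8}$$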

The nonconvex rank-3 constraint on the Gram matrix G is missing from this SDR [25]. The problem (4.6)–(4.8) is an SDP that can be solved by standard SDP solvers. In particular, it can be well solved by the solver SDPLR [4], which takes advantage of the low-rank property of G. SDPLR is a first-order algorithm via low-rank factorization and hence can provide approximate solutions for large-scale problems. Moreover, the iterations of SDPLR are extremely fast.
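The identity between the weighted sum of inner products in (3.5) and the trace form used in the SDP can be checked numerically. The sketch below is a sanity check under stated assumptions, not part of the method: the blocks of S are taken as the outer products of the row vectors c_ij and c_ji, the blocks of W are constant and equal to w_ij, the diagonal blocks are zero, and the directions and weights are random test data.

```python
import numpy as np

def random_rotation(rng):
    """Uniformly distributed rotation via sign-fixed QR of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def random_unit2(rng):
    v = rng.standard_normal(2)
    return v / np.linalg.norm(v)

rng = np.random.default_rng(0)
K = 6
rots = [random_rotation(rng) for _ in range(K)]
R = np.hstack([r[:, :2] for r in rots])
G = R.T @ R

pairs = [(i, j) for i in range(K) for j in range(K) if i != j]
c = {pair: random_unit2(rng) for pair in pairs}  # c[(i, j)] plays the role of c_ij
w = rng.uniform(size=(K, K))
w = (w + w.T) / 2                                # symmetric weights

S = np.zeros((2 * K, 2 * K))
W = np.zeros((2 * K, 2 * K))
direct = 0.0
for i, j in pairs:
    S[2*i:2*i+2, 2*j:2*j+2] = np.outer(c[(i, j)], c[(j, i)])
    W[2*i:2*i+2, 2*j:2*j+2] = w[i, j]
    a = rots[i] @ np.append(c[(i, j)], 0.0)      # R_i (c_ij, 0)^T
    b = rots[j] @ np.append(c[(j, i)], 0.0)      # R_j (c_ji, 0)^T
    direct += w[i, j] * (a @ b)

via_trace = np.trace((W * S) @ G)                # W * S is the Hadamard product
```

The two quantities `direct` and `via_trace` agree up to rounding for arbitrary unit directions, since the identity is purely algebraic and does not require the common-lines to be consistent with the rotations.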

4.3. SDR for LUD

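Similar to defining the Gram matrix G in (4.3), we define a 3K × 3K matrix G̃ as G̃ = (G̃ij)i,j=1,…,K, where each G̃ij is a 3 × 3 block defined as $\tilde{G}_{ij} = R_i^T R_j$. Then, a natural SDR for (3.7) is given by

$$\min_{\tilde{G} \in \mathbb{R}^{3K \times 3K}} \sum_{i < j} \left\| (\vec{c}_{ij}, 0)^T - \tilde{G}_{ij} (\vec{c}_{ji}, 0)^T \right\| \quad \text{s.t. } \tilde{G}_{ii} = I_3,\ \tilde{G} \succeq 0. \tag{4.9}$$

The constraints missing in this SDP formulation are the nonconvex rank-3 constraint and the determinant constraints det(G̃ij) = 1 on the Gram matrix G̃. However, the solution G̃ to (4.9) is not unique. Note that if a set of rotation matrices {Ri} is a solution to (3.7), then the set of conjugated rotation matrices {JRiJ} is also a solution to (3.7), where the matrix J is defined as

$$J = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & -1 \end{pmatrix}.$$

Thus, another solution to (4.9) is the Gram matrix $\tilde{G}^J$ with the 3 × 3 sub-blocks given by $\tilde{G}^J_{ij} = J \tilde{G}_{ij} J$. It can be verified that $\frac{1}{2}(\tilde{G} + \tilde{G}^J)$ is also a solution to (4.9). Using the fact that

$$\frac{1}{2}\left(\tilde{G}_{ij} + \tilde{G}^J_{ij}\right) = \begin{pmatrix} G_{ij} & 0 \\ 0 & (\tilde{G}_{ij})_{33} \end{pmatrix},$$

where Gij denotes the upper left 2 × 2 block of G̃ij, the problem (4.9) is reduced to

$$\min_{G \in \mathbb{R}^{2K \times 2K}} \sum_{i < j} \left\| \vec{c}_{ij}^{\,T} - G_{ij} \vec{c}_{ji}^{\,T} \right\| \quad \text{s.t. } G_{ii} = I_2,\ G \succeq 0. \tag{4.10}$$

This is an SDR for the LUD problem (3.6). The problem (4.10) can be solved using ADMM (see details in section 6.2).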

4.4. The randomized rounding procedure

The matrix R is recovered from a random projection of the solution G of the SDP (4.6). We randomly draw a 2K ×3 matrix P from the Stiefel manifold V3(ℝ2K). The random matrix P is computed using the orthogonal matrix Q and the upper triangular matrix R from QR factorization of a random matrix with standard i.i.d. Gaussian entries; that is, P = Q sign (diag (R)), where sign stands for the entrywise sign function and diag(R) is a diagonal matrix whose diagonal entries are the same as those of the matrix R. The matrix P is shown to be drawn uniformly from the Stiefel manifold in [29]. We compute the Cholesky decomposition of G = LLT and then project the lower triangular matrix L onto the subspace spanned by the columns of the 2K × 3 matrix LP.1

The 2K × 3 matrix LP is a proxy for the matrix RT (up to a global 3 × 3 orthogonal transformation). In other words, we can regard the 3 × 2K matrix (LP)T as composed from K matrices of size 3 × 2, denoted Ai (i = 1, …, K), namely,

$$(LP)^T = \begin{pmatrix} A_1 & A_2 & \cdots & A_K \end{pmatrix}.$$

The two columns of each Ai correspond to $R_i^1$ and $R_i^2$ (compare to (4.2)). We therefore estimate the matrix $(R_i^1\ R_i^2)$ as the closest matrix to Ai on the Stiefel manifold V2(ℝ3) in the Frobenius matrix norm. The closest matrix is given by (see, e.g., [1]) $U_i V_i^T$, where $A_i = U_i \Sigma_i V_i^T$ is the singular value decomposition of Ai. We note that, except for the orthogonality constraint (4.7), the SDP (4.6)–(4.8) is identical to the Goemans–Williamson SDP for finding the maximum cut in a weighted graph [16], where the SDR and the randomized rounding procedure [50, 25] for the maximum cut problem are proved to have a 0.87 performance guarantee. Currently we do not have a similar performance guarantee for the SDP relaxation of the LS problem (3.5). From the complexity point of view, SDP can be solved in polynomial time to any given precision. The idea of using SDP for determining image orientations in cryo-EM was originally proposed in [48].
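Two ingredients of the rounding step can be sketched in a few lines: drawing P uniformly from the Stiefel manifold as Q sign(diag(R)), and replacing each 3 × 2 block by its nearest matrix with orthonormal columns via the SVD. Note that this sketch is a simplified stand-in and not the procedure described above: instead of the Cholesky factor and the random projection, it builds the 2K × 3 proxy deterministically from the three leading eigenpairs of G. All function names are hypothetical.

```python
import numpy as np

def random_rotation(rng):
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def random_stiefel(n, p, rng):
    """Uniform draw of an n x p matrix with orthonormal columns."""
    q, r = np.linalg.qr(rng.standard_normal((n, p)))  # reduced QR: q is n x p
    return q * np.sign(np.diag(r))                    # P = Q sign(diag(R))

def nearest_stiefel(a):
    """Closest matrix with orthonormal columns in the Frobenius norm."""
    u, _, vt = np.linalg.svd(a, full_matrices=False)
    return u @ vt

def round_gram(G):
    """Deterministic stand-in for the rounding: top-3 eigenpairs of G."""
    vals, vecs = np.linalg.eigh(G)                    # ascending eigenvalues
    proxy = vecs[:, -3:] * np.sqrt(np.clip(vals[-3:], 0.0, None))  # 2K x 3
    K = G.shape[0] // 2
    return [nearest_stiefel(proxy[2*i:2*i+2].T) for i in range(K)]  # 3 x 2 each

rng = np.random.default_rng(0)
K = 8
rots = [random_rotation(rng) for _ in range(K)]
R = np.hstack([r[:, :2] for r in rots])
G = R.T @ R                                           # exact rank-3 Gram matrix

R_hat = np.hstack(round_gram(G))                      # estimate, up to a global
P = random_stiefel(2 * K, 3, rng)                     # orthogonal transformation
```

When G is an exact rank-3 Gram matrix, the rounded columns reproduce it, that is, `R_hat.T @ R_hat` equals `G` up to rounding, which reflects the fact that the rotations can only be recovered up to a global 3 × 3 orthogonal transformation.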

5. The spectral norm constraint

In our numerical experiments (see section 8) we observed that in the presence of many “outliers” (i.e., a large proportion of misidentified common-lines) the estimated viewing directions2 that are obtained by solving either (4.6)–(4.8) or (4.10) are highly clustered (Figure 4). This empirical behavior of the solutions can be explained by the fact that images whose viewing directions are parallel share many common-lines, which results from the overlapping Fourier slices. In other words, when the viewing directions of Ri and Rj are nearby, the fidelity term ||Ri(c⃗ij, 0)T − Rj(c⃗ji, 0)T|| (that appears in all cost functions) can become small (i.e., close to 0), even when the common-line pair (c⃗ij, c⃗ji) is misidentified. When the proportion of outliers in the detected common-lines is large enough, the solution to either the LS problem or the LUD problem can consist of many rotations with similar viewing directions, so that most of the fidelity terms have small values. In such cases, the Gram matrix G has just two dominant eigenvalues, instead of three.

In order to prevent the viewing directions from clustering and the Gram matrix G from collapsing to a rank-2 matrix, we add the following constraint on the spectral norm of the Gram matrix G to the optimization problem (4.6)–(4.8) or (4.10):

$$G \preceq \alpha K I_{2K}, \tag{5.1}$$

where I2K is the 2K × 2K identity matrix, or equivalently,

$$\|G\|_2 \le \alpha K, \tag{5.2}$$

where ||G||2 is the spectral norm of the matrix G, and the parameter α ∈ [2/3, 1) controls the spread of the viewing directions. If the true imaging orientations are uniformly sampled from the rotation group SO(3), then by the law of large numbers and the symmetry of the distribution of orientations, the spectral norm of the true Gram matrix Gtrue is approximately (2/3)K. (To see this, notice that Tr(G) = Tr(KI2) = 2K. Thus, the sum of eigenvalues of G is 2K. Recall that G is of rank 3, so if the rotations are uniformly distributed, then each of its three nontrivial eigenvalues approximately equals (2/3)K.) On the other hand, if the true viewing directions are highly clustered, then the spectral norm of the true Gram matrix Gtrue is close to K. For a known distribution of orientations, we can compute the spectral norm of the true Gram matrix Gtrue accordingly, which can be verified to equal αK for a number α between 2/3 and 1. In practice, however, the distribution of the viewing directions is usually unknown a priori, and often it cannot be assumed to be uniform. To prevent a solution with clustered viewing directions, we fix the parameter α to some number satisfying 2/3 ≤ α < 1, and perhaps even try a few possible values for α and choose the best value by examining the resulting reconstructions. Another method for choosing the best value for α is to compare the likelihood scores. We defer the definition of the likelihood score to section 9.
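The two regimes described above are easy to reproduce numerically. The sketch below computes the ratio ||G||2 / K for K = 500 uniformly distributed rotations and for K identical rotations; the helper names and the sampling scheme are assumptions made for illustration.

```python
import numpy as np

def random_rotation(rng):
    """Uniformly distributed rotation via sign-fixed QR of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def alpha_of(rotations):
    """Return ||G||_2 / K for the Gram matrix G = R^T R of the rotations."""
    R = np.hstack([rot[:, :2] for rot in rotations])      # 3 x 2K
    # The spectral norm of G equals the squared largest singular value of R.
    return np.linalg.norm(R, 2) ** 2 / len(rotations)

rng = np.random.default_rng(0)
K = 500
alpha_uniform = alpha_of([random_rotation(rng) for _ in range(K)])
alpha_clustered = alpha_of([np.eye(3)] * K)
```

For uniformly distributed rotations the ratio is slightly above 2/3 (it can never be smaller, because the three nonzero eigenvalues sum to 2K), whereas for identical rotations it equals 1.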

6. The alternating direction method of multipliers (ADMM) for SDRs with spectral norm constraint

The application of ADMM to SDP problems was considered in [62]. Here we generalize the application of ADMM to the optimization problems considered in the previous sections. ADMM is a multiple-splitting algorithm that minimizes the augmented Lagrangian function in an alternating fashion such that in each step it minimizes over one block of the variables with all other blocks fixed and then updates the Lagrange multipliers. We apply ADMM to the dual problems since the linear operator $\mathcal{A}$ in the constraint (6.2) satisfies $\mathcal{A}\mathcal{A}^* = I$, which simplifies the computation of subproblems. The strong duality theorem, which is known as Slater’s theorem, guarantees that in the presence of a strictly feasible solution, a primal problem can be solved by solving its dual problem. To obtain a strictly feasible solution to the primal problems with a positive semidefinite constraint, the linear constraint (6.2), and the spectral norm constraint (6.3), we can construct a Gram matrix G in (4.3) using rotations sampled from a uniform distribution over the rotation group. Therefore, strong duality holds for the primal problems, and the primal problems can be solved by applying ADMM to their corresponding dual problems.

6.1. The relaxed weighted LS problem

The weighted LS problem after SDR (4.6)–(4.8) can be efficiently solved using SDPLR [4]. However, SDPLR is not suitable for the problem once the spectral norm constraint on G (5.2) is added to (4.6)–(4.8). This is because the constraint (5.2) can be written as αKI − G ≽ 0, but the matrix αKI − G does not have a low-rank structure. Moreover, SDP solvers using polynomial-time primal-dual interior point methods are designed for small to medium-sized problems. Therefore, they are not suitable for our problem. Instead, here we devise a version of the ADMM which takes advantage of the low-rank property of G.

After the spectral norm constraint (5.2) is added, the problem (4.6)–(4.8) becomes

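$$\min_{G \succeq 0}\ -\langle C, G \rangle \tag{6.1}$$

$$\text{s.t. } \mathcal{A}(G) = b, \tag{6.2}$$

$$\|G\|_2 \le \alpha K, \tag{6.3}$$

where

$$\mathcal{A}(G) = \left( G_{ii}^{11},\ G_{ii}^{22},\ \tfrac{\sqrt{2}}{2} G_{ii}^{12} + \tfrac{\sqrt{2}}{2} G_{ii}^{21} \right)_{i=1,2,\ldots,K}, \quad b = \left( b_i^1, b_i^2, b_i^3 \right)_{i=1,2,\ldots,K},\ b_i^1 = b_i^2 = 1,\ b_i^3 = 0, \tag{6.4}$$

$G_{ij}^{pq}$ denotes the (p, q)th element in the 2 × 2 subblock Gij, C = W ∘ S is a symmetric matrix, and 〈C, G〉 = trace (CG). Following the equality $\langle \mathcal{A}(G), y \rangle = \langle G, \mathcal{A}^*(y) \rangle$ for arbitrary $y = (y_i^1, y_i^2, y_i^3)_{i=1,2,\ldots,K}$, the adjoint of the operator $\mathcal{A}$ is defined as

$$\mathcal{A}^*(y) = Y = (Y_{ij})_{i,j=1,\ldots,K},$$

where for i = 1, 2, …, K

$$Y_{ii} = \begin{pmatrix} y_i^1 & y_i^3/\sqrt{2} \\ y_i^3/\sqrt{2} & y_i^2 \end{pmatrix}, \quad Y_{ij} = 0 \text{ for } i \neq j.$$

It can be verified that $\mathcal{A}\mathcal{A}^* = I$. The dual problem of problem (6.1)–(6.3) is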

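$$\max_{y,\ X \succeq 0}\ \min_{\|G\|_2 \le \alpha K}\ -\langle C, G \rangle - \langle y, \mathcal{A}(G) - b \rangle - \langle G, X \rangle. \tag{6.5}$$

By rearranging terms in (6.5), we obtain

$$\max_{y,\ X \succeq 0}\ \min_{\|G\|_2 \le \alpha K}\ -\langle C + X + \mathcal{A}^*(y), G \rangle + y^T b. \tag{6.6}$$

Using the fact that the dual norm of the spectral norm is the nuclear norm (Proposition 2.1 in [41]), we can obtain from (6.6) the dual problem

$$\max_{y,\ X \succeq 0}\ y^T b - \alpha K \left\| C + X + \mathcal{A}^*(y) \right\|_*, \tag{6.7}$$

where ||·||* denotes the nuclear norm. By introducing a variable $Z = C + X + \mathcal{A}^*(y)$, we obtain from (6.7) that

$$\max_{y,\ X \succeq 0,\ Z}\ y^T b - \alpha K \|Z\|_* \tag{6.8}$$

$$\text{s.t. } Z = C + X + \mathcal{A}^*(y). \tag{6.9}$$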

Since Z is a symmetric matrix, ||Z||* is the summation of the absolute values of the eigenvalues of Z. The augmented Lagrangian function of (6.8)–(6.9) is defined as

| (6.10) |

where μ > 0 is a penalty parameter. Using the augmented Lagrangian function (6.10), we devise an ADMM that minimizes (6.10) with respect to y, Z, X, and G in an alternating fashion; that is, given some initial guess, in each iteration the following three subproblems are solved sequentially:

| (6.11) |

| (6.12) |

| (6.13) |

and the Lagrange multiplier G is updated by

| (6.14) |

where is an appropriately chosen step length.

To solve the subproblem (6.11), we use the first-order optimality condition

and the fact that $\mathcal{A}\mathcal{A}^* = I$, and we obtain

By rearranging the terms of $\mathcal{L}(y^{k+1}, Z, X^k, G^k)$, it can be verified that the subproblem (6.12) is equivalent to

where . Let Bk = U Λ UT be the spectral decomposition of the matrix Bk, where Λ = diag (λ) = diag (λ1, …, λ2K). Then Zk+1 = U diag (ẑ) UT, where ẑ is the optimal solution of the problem

| (6.15) |

It can be shown that the unique solution of (6.15) admits a closed-form solution called the soft-thresholding operator, following a terminology introduced by Donoho and Johnstone [8]; it can be written as

The problem (6.13) can be shown to be equivalent to

where . The solution is the Euclidean projection of Hk onto the semidefinite cone (section 8.1.1 in [3]), where

is the spectral decomposition of the matrix Hk, and Σ+ and Σ− are the positive and negative eigenvalues of Hk.
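The two eigenvalue-based updates described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the threshold `tau` is an assumed generic parameter (in the problem above it would be determined by α, K, and μ, which are not reproduced here). The first routine applies soft-thresholding to the eigenvalues of a symmetric matrix, which solves a nuclear-norm-regularized least squares problem of the same type as (6.15); the second is the Euclidean projection onto the semidefinite cone obtained by discarding the negative eigenvalues.

```python
import numpy as np


def soft_threshold(v, tau):
    """Entrywise soft-thresholding: sign(v) * max(|v| - tau, 0)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def nuclear_prox_symmetric(B, tau):
    """Minimizer of tau * ||Z||_* + 0.5 * ||Z - B||_F^2 over symmetric Z.

    `tau` is a generic (assumed) threshold; B must be symmetric.
    """
    lam, U = np.linalg.eigh(B)           # B = U diag(lam) U^T
    z = soft_threshold(lam, tau)         # shrink each eigenvalue toward zero
    return (U * z) @ U.T                 # U diag(z) U^T


def project_psd(H):
    """Euclidean projection of a symmetric matrix H onto the PSD cone."""
    sig, V = np.linalg.eigh(H)           # H = V diag(sig) V^T
    sig_plus = np.maximum(sig, 0.0)      # keep only the nonnegative eigenvalues
    return (V * sig_plus) @ V.T
```

Both routines cost one symmetric eigendecomposition per call, which is consistent with the later remark that these projections account for a large portion of the CPU time.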

It follows from the update rule (6.14) that

6.2. The relaxed LUD problem

Consider the LUD problem after SDR:

| (6.16) |

where G, 𝒜, and b are defined in (4.3) and (6.4). The ADMM devised to solve (6.16) is similar to and simpler than the ADMM devised to solve the version with the spectral norm constraint. We focus on the more difficult problem with the spectral norm constraint. By introducing

and adding the spectral norm constraint ||G||2 ≤ αK, we obtain

| (6.17) |

The dual problem of problem (6.17) is

| (6.18) |

By rearranging terms in (6.18), we obtain

| (6.19) |

where θ = (θij)i,j=1,…, K, ,

for p, q = 1, 2. It is easy to verify that for 1 ≤ i < j ≤ K

| (6.20) |

In fact, (6.20) is obtained using the inequality

| (6.21) |

and equality in (6.21) holds when θij and xij have the same direction. Using (6.20) and the fact that the dual norm of the spectral norm is the nuclear norm, we can obtain from (6.19) the dual problem

| (6.22) |

| (6.23) |

The augmented Lagrangian function of problem (6.22)–(6.23) is defined as

| (6.24) |

for ||θij|| ≤ 1, where μ > 0 is a penalty parameter. Similar to section 6.1, using the augmented Lagrangian function (6.24), ADMM is used to minimize (6.24) with respect to y, θ, Z, X, and G alternately; that is, given some initial guess, in each iteration the following four subproblems are solved sequentially:

| (6.25) |

| (6.26) |

| (6.27) |

| (6.28) |

and the Lagrange multiplier G is updated by

| (6.29) |

where γ is an appropriately chosen step length. The methods for solving subproblems (6.25), (6.27), and (6.28) are similar to those used in (6.11), (6.12), and (6.13). To solve subproblem (6.26), we rearrange the terms of ℒ(yk+1, θ, Zk, Xk, Gk) and obtain an equivalent problem,

| (6.30) |

where , and . Problem (6.30) is further simplified as

whose solution is

The practical issues related to how to take advantage of low-rank assumptions of G in the eigenvalue decomposition performed at each iteration, strategies for adjusting the penalty parameter μ, the use of a step size γ for updating the primal variable X, and termination rules using the infeasibility measures are discussed in detail in [62]. The convergence analysis of ADMM using more than two blocks of variables can be found in [20]. However, some of the conditions of Assumption A in [20, p. 5] cannot be satisfied for our problem. For example, Assumption A requires that the feasible set be polyhedral, whereas the SDP cone in our problem is not polyhedral. To generalize the convergence analysis in [20] to our problem, we would need to show that the local error bounds (see [20, pp. 8–9]) hold for the SDP cone. Currently we do not have a rigorous convergence proof for ADMM for our problem. In practice, we observe that the primal infeasibility, the dual infeasibility,³ and the relative gap between the primal and dual problems converge simultaneously to zero: quickly during the first few hundred iterations, and then more slowly as the iterates approach an optimal solution.

7. The iteratively reweighted least squares (IRLS) procedure

Since c⃗ij and c⃗ji are unit vectors, it is tempting to replace the LUD problem (3.7) with the following SDR:

| (7.1) |

| (7.2) |

| (7.3) |

| (7.4) |

where α is a fixed number between 2/3 and 1, and the spectral norm constraint on G (7.4) is added when the solution to the problem (7.1)–(7.3) is a set of highly clustered rotations. Notice, however, that this relaxed problem is not convex, since the objective function (7.1) is concave. We propose to solve (7.1)–(7.3) (possibly with (7.4)) by a variant of the IRLS procedure [6, 23, 64, 5], which at best converges to a local minimizer. With a good initial guess for G, one can hope to obtain the global minimizer. Such an initial guess can be taken as the LS solution. In section 7.2, we prove that the IRLS sequence converges to a stationary point. However, our convergence analysis cannot exclude the possibility of convergence to saddle points that are not stable.

7.1. Main idea

Before the rounding procedure, the IRLS procedure finds an approximate solution to the optimization problem (7.1)–(7.3) (possibly with (7.4)) by solving its smoothing version

| (7.5) |

| (7.6) |

| (7.7) |

| (7.8) |

where ε > 0 is a small number. The solution to the smoothing version is close to the solution to the original problem. In fact, let and G* = arg min F(G); then we shall verify that

| (7.9) |

Using the definition of F(G, ε) and F(G), we obtain that

and thus

Since and , inequality (7.9) holds.

In each iteration, the IRLS procedure solves the problem

| (7.10) |

on the (k + 1)th iteration, where , and

In other words, in each iteration, more emphasis is given to detected common-lines that are better explained by the current estimate Gk of the Gram matrix. The inclusion of the regularization parameter ε ensures that no single detected common-line can gain undue influence when solving problem (7.10), which is equivalent to

| (7.11) |

We repeat the process until the residual sequence {rk} has converged or the maximum number of iterations has been reached.
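The reweighting step can be illustrated with a short NumPy sketch. It is not the authors' code. It assumes that G and S are stored as 2K × 2K arrays of 2 × 2 blocks, and that the smoothed residual of the pair (i, j) has the form sqrt(2 − 2〈Gij, Sij〉 + ε²), so that the weight is its reciprocal; this form is an assumption consistent with c⃗ij and c⃗ji being unit vectors, since the displayed formula is not reproduced here. The names `block` and `irls_weights` are hypothetical.

```python
import numpy as np


def block(M, i, j):
    """Return the 2 x 2 block (i, j) of a 2K x 2K matrix."""
    return M[2 * i:2 * i + 2, 2 * j:2 * j + 2]


def irls_weights(G, S, eps):
    """Weights w_ij = 1 / sqrt(2 - 2 <G_ij, S_ij> + eps^2) for i != j.

    Assumed residual form (see the lead-in). Pairs that the current Gram
    matrix G explains well get a large weight, capped at 1 / eps.
    """
    K = G.shape[0] // 2
    W = np.zeros((K, K))
    for i in range(K):
        for j in range(K):
            if i == j:
                continue
            inner = np.sum(block(G, i, j) * block(S, i, j))   # <G_ij, S_ij>
            r2 = max(2.0 - 2.0 * inner, 0.0)                  # guard against rounding
            W[i, j] = 1.0 / np.sqrt(r2 + eps ** 2)
    return W
```

Because the weight of any single pair is bounded by 1/ε, no detected common-line can dominate the weighted problem, which is the role of the regularization parameter described above.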

7.2. Convergence analysis

In the following lemma and theorem we verify, for the problem without the spectral norm constraint on G, that the value of the cost function is nonincreasing and that every cluster point of the IRLS sequence is a stationary point of (7.5)–(7.7). The arguments can be generalized to the case with the spectral norm constraint. The proof of Theorem 7.2 follows the method of proof for Theorem 3 in the paper [30] by Mohan and Fazel.

Lemma 7.1

The value of the cost function sequence is monotonically nonincreasing; i.e.,

| (7.12) |

where {Gk} is the sequence generated by the IRLS procedure of Algorithm 1.

Algorithm 1.

(the IRLS procedure). Solve optimization problem (7.1)–(7.3) (with the spectral norm constraint on G (7.4) if the input parameter α satisfies ), and then recover the orientations by rounding.

| Input: a 2K × 2K common-line matrix S, a regularization parameter ε, a parameter α, and the total number of iterations Niter. |

| for all i, j = 1, …, K; |

| G0 = 0; |

| for k = 1 → Niter, step size = 1 do |

| update W by setting ; |

| if , obtain Gk by solving the problem (6.1)–(6.3) using ADMM; otherwise, obtain Gk by solving (4.6)–(4.8) using SDPLR (with initial guess Gk−1); |

| ; |

| ; |

| the residual ; |

| end for |

| obtain estimated orientations from GNiter using the randomized rounding procedure in section 4.4. |

| Output: estimated orientations R̂1, …, R̂K . |

Proof

Since Gk is the solution of (7.11), using the Karush–Kuhn–Tucker (KKT) conditions, there exist yk ∈ ℝ2K and Xk ∈ ℝ2K×2K such that

| (7.13) |

| (7.14) |

Hence we have

| (7.15) |

| (7.16) |

where the first and third equalities use (7.13), and the inequality (7.15) uses (7.14). From (7.16) we obtain

| (7.17) |

where the last inequality uses the Cauchy–Schwarz inequality and the equality holds if and only if

| (7.18) |

where c is a constant. Thus (7.12) is confirmed.

Theorem 7.2

The sequence of iterates {Gk} of IRLS is bounded, and every cluster point of the sequence is a stationary point of (7.5)–(7.7).

Proof

Since trace(Gk) = 2K and Gk ≽ 0, the sequence {Gk} is bounded. It follows that Wk and trace((Wk ∘ S)Gk+1) are bounded. Using the strong duality of SDP, we conclude that bTyk+1 = trace((Wk ∘ S)Gk+1) is bounded. In addition, from the KKT conditions (7.13)–(7.14) we obtain –𝒜*(yk+1) + Wk ∘ S ≽ 0. Using the definition of 𝒜* and S, the property of semidefinite matrices, and the fact that Wk is bounded, it can be verified that yk is bounded. Using (7.13) again, we obtain

which implies that Xk is bounded.

We now show that every cluster point of {Gk} is a stationary point of (7.5)–(7.7). Suppose, to the contrary, that Ḡ is a cluster point of {Gk} that is not a stationary point. By the definition of a cluster point, there exists a subsequence {Gki, Wki, Xki, yki} of {Gk, Wk, Xk, yk} converging to (Ḡ, W̄, X̄, ȳ). By passing to a further subsequence if necessary, we can assume that {Gki+1, Wki+1, Xki+1, yki+1} is also convergent, and we denote its limit by (Ĝ, Ŵ, X̂, ŷ). The iterate Gki+1 is defined by (7.10) or (7.11) and satisfies the KKT conditions (7.13)–(7.14). Passing to limits, we see that

Thus we conclude that Ĝ is a maximizer of the following convex optimization problem:

Next, by assumption, Ḡ is not a stationary point of (7.5)–(7.7). This implies that Ḡ is not a maximizer of the problem above, and thus 〈 W̄ ∘ S, Ĝ 〉 > 〈 W̄ ∘ S, Ḡ 〉. From this last relation and (7.17)–(7.18) it follows that

| (7.19) |

Otherwise if F(Ĝ, ε) = F (Ḡ, ε), then 〈 Ĝij, Sij 〉 = 〈 Ḡij, Sij 〉 due to (7.17)–(7.18), and thus we would obtain 〈 W̄ ∘ S, Ĝ 〉 = 〈 W̄ ∘ S, Ḡ 〉, which is a contradiction.

On the other hand, it follows from Lemma 7.1 that the sequence {F(Gk, ε)} converges. Thus we have that

which contradicts (7.19). Hence, every cluster point of the sequence is a stationary point of (7.5)–(7.7).

In addition, using Hölder’s inequality, the analysis can be generalized to the reweighted approach to solve

| (7.20) |

where 0 < p < 1. The convergence analysis of IRLS (p = 1) in [6, 23] can be extended to different applications with p < 1. The problem (7.20) is an SDR of the problem

| (7.21) |

The smaller p is, the more strongly the outliers in the detected common-lines are down-weighted.
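The monotonicity property of Lemma 7.1 and the effect of the exponent p can be observed on a toy problem that is much simpler than the SDR above. The sketch below is an illustration under stated assumptions, not the procedure of Algorithm 1: it minimizes the smoothed cost Σ (||x − a_i||² + ε²)^(p/2) over a point x by repeatedly solving a weighted least squares problem with weights (||x − a_i||² + ε²)^(p/2 − 1). The function names and the test data are hypothetical.

```python
import numpy as np


def smoothed_cost(x, a, eps, p):
    """Smoothed cost sum_i (||x - a_i||^2 + eps^2)^(p/2)."""
    r2 = np.sum((a - x) ** 2, axis=1)
    return np.sum((r2 + eps ** 2) ** (p / 2))


def irls_location(a, eps=1e-3, p=1.0, n_iter=50):
    """Toy IRLS: robust location estimate of the rows of `a`.

    Starts from the least squares solution (the mean), mirroring the use of
    the LS solution as the initial guess in the text.
    """
    x = a.mean(axis=0)
    costs = [smoothed_cost(x, a, eps, p)]
    for _ in range(n_iter):
        r2 = np.sum((a - x) ** 2, axis=1)
        w = (r2 + eps ** 2) ** (p / 2 - 1)          # large residual -> small weight
        x = (w[:, None] * a).sum(axis=0) / w.sum()  # weighted least squares step
        costs.append(smoothed_cost(x, a, eps, p))
    return x, np.array(costs)
```

In this toy setting the recorded costs are nonincreasing for 0 < p ≤ 2, and points far from the current estimate receive smaller weights as p decreases.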

8. Numerical results

All numerical experiments were performed on a machine with 2 Intel(R) Xeon(R) X5570 CPUs, each with 4 cores, running at 2.93 GHz. In all the experiments, the polar Fourier transform of images for common-line detection had radial resolution nr = 100 and angular resolution nθ = 360. The number of iterations was set to be Niter = 10 in all IRLS procedures. Reconstruction from the images with estimated orientations used the Fourier-based 3D reconstruction package FIRM⁴ [59]. The reconstructed volumes are shown in Figures 5, 6, and 9 using the visualization system Chimera [38].

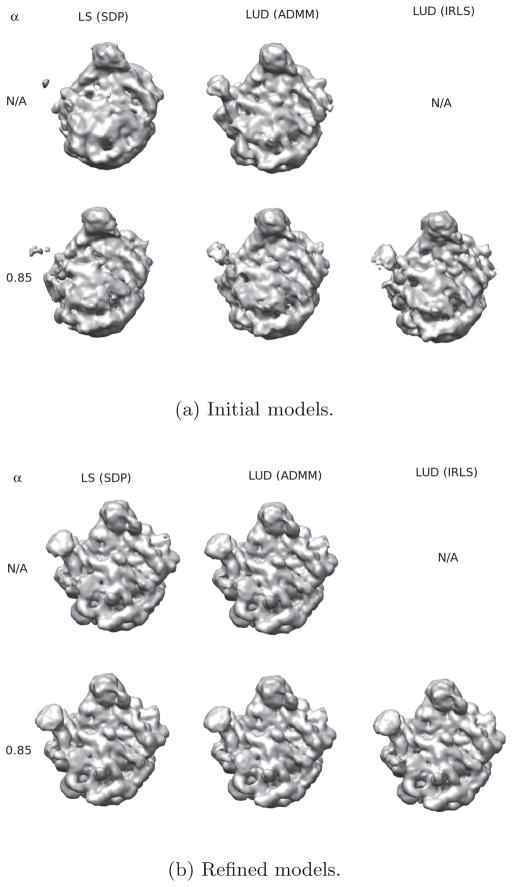

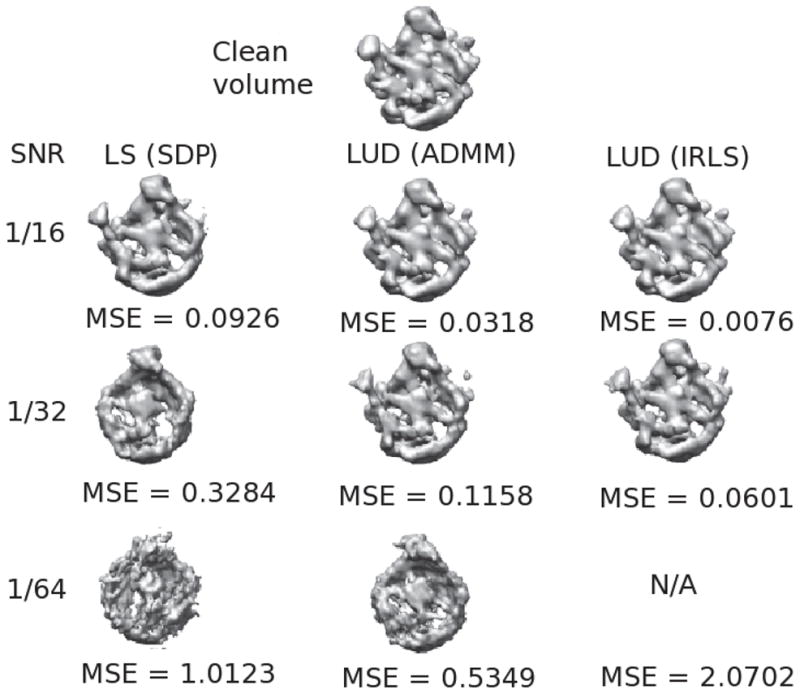

Figure 5.

The clean volume (top), the reconstructed volumes, and the MSEs (8.2) of the estimated rotations. No spectral norm constraint was used (i.e., α = N/A) for all algorithms. The result using the IRLS procedure without α is not available due to the highly clustered estimated projection directions. All the volumes have size 129 × 129 × 129 voxels.

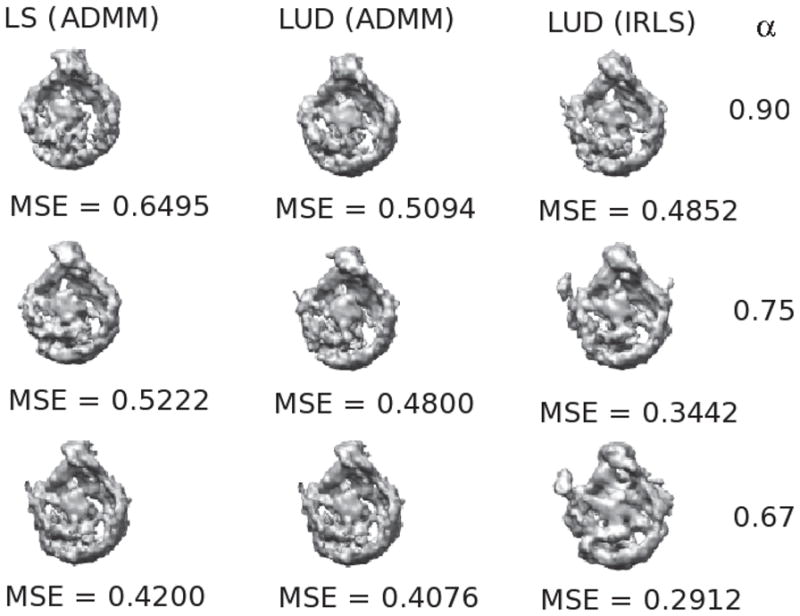

Figure 6.

The reconstructed volumes from images with SNR = 1/64 and the MSEs (8.2) of the estimated rotations using spectral norm constraints (i.e., α = 0.90, 0.75, and 0.67) for all algorithms. All the volumes have size 129 × 129 × 129 voxels. The result from the IRLS procedure with α = 0.67 for the spectral norm constraint is best.

Figure 9.

Initial models and refined models. (a) The ab initio models estimated by merging two independent reconstructions, each obtained from 1000 class averages. The resolutions of the models are 17.2Å, 16.7Å, 16.7Å, 16.7Å, and 16.1Å (from top to bottom, left to right) using the FSC 0.143 resolution cutoff (Figure 10). The model using the IRLS procedure without the spectral norm constraint (i.e., α = N/A) is not available, since the estimated projection directions are highly clustered. (b) The refined models corresponding to the ab initio models in (a). The resolutions of the models are all 11.1Å.

To evaluate the accuracy or the resolution of the reconstructions, we used the 3D Fourier shell correlation (FSC) [43]. FSC measures the normalized cross correlation coefficient between two 3D volumes over corresponding spherical shells in Fourier space, i.e.,

| (8.1) |

where ℱ(V1) and ℱ(V2) are the Fourier transforms of volumes V1 and V2, respectively; the spatial frequency i ranges from 1 to N/2 − 1 times the unit frequency 1/(N · pixel size); N is the size of a volume; and Shelli := {j : 0.5 + (i − 1) + ε ≤ ||j|| < 0.5 + i + ε}, where ε = 1e−4. In this form, the FSC takes two 3D volumes and converts them into a 1D array. In section 8.2, we used the FSC 0.143 cutoff criterion [44, 2] to determine the resolutions of the ab initio models and the refined models.
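A minimal NumPy sketch of the shell-wise correlation described above is given below. It assumes cubic volumes of equal size, uses the shell definition stated in the text, and takes the usual normalized cross correlation (the sum of ℱ(V1) times the conjugate of ℱ(V2) over a shell, divided by the square root of the product of the two shell energies). The function names, the integer rounding of N/2 − 1 for odd N, and the rule of reading the resolution at the first shell below the cutoff are assumptions made for illustration.

```python
import numpy as np


def fsc(v1, v2, eps=1e-4):
    """Fourier shell correlation of two cubic volumes of equal size N."""
    n = v1.shape[0]
    f1 = np.fft.fftn(v1)
    f2 = np.fft.fftn(v2)
    k = np.fft.fftfreq(n) * n                        # integer frequency indices
    kx, ky, kz = np.meshgrid(k, k, k, indexing="ij")
    radius = np.sqrt(kx ** 2 + ky ** 2 + kz ** 2)
    n_shells = n // 2 - 1                            # shells i = 1, ..., N/2 - 1
    curve = np.zeros(n_shells)
    for i in range(1, n_shells + 1):
        shell = (radius >= 0.5 + (i - 1) + eps) & (radius < 0.5 + i + eps)
        num = np.sum(f1[shell] * np.conj(f2[shell]))
        den = np.sqrt(np.sum(np.abs(f1[shell]) ** 2) * np.sum(np.abs(f2[shell]) ** 2))
        curve[i - 1] = np.real(num) / den
    return curve


def resolution_at_cutoff(curve, n, pixel_size, cutoff=0.143):
    """Resolution N * pixel_size / i at the first shell i with FSC < cutoff.

    Returns None if the curve never drops below the cutoff. No interpolation
    between shells is performed (an assumed, simplified convention).
    """
    below = np.nonzero(curve < cutoff)[0]
    if below.size == 0:
        return None
    i = below[0] + 1                                 # shells are numbered from 1
    return n * pixel_size / i
```

For example, with N = 129 and a pixel size of 2.4 Å, shell i corresponds to a resolution of 129 · 2.4 / i Å.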

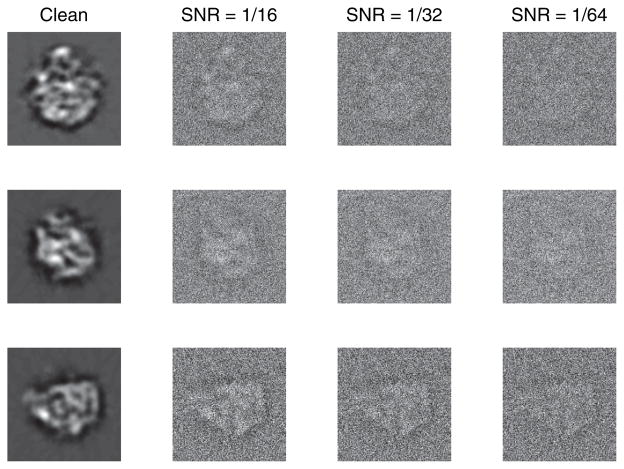

8.1. Experiments on simulated images

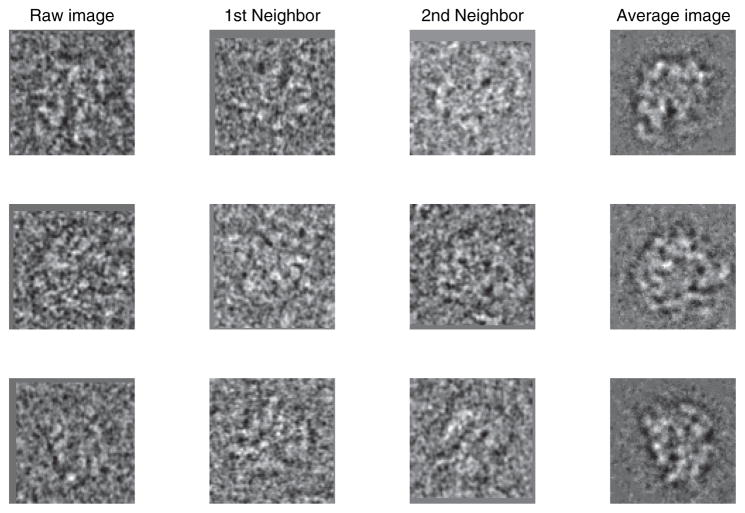

We simulated 500 centered images of size 129 × 129 pixels with pixel size 2.4Å of the 50S ribosomal subunit (the top image in Figure 5), where the orientations of the images were sampled from the uniform distribution over SO(3). White Gaussian noise was added to the clean images to generate noisy images with SNR = 1/16, 1/32, and 1/64 (Figure 3). Common-line pairs that were detected with an error smaller than 10° were considered to be correct. The common-line detection rates were 64%, 44%, and 23% for images with SNR = 1/16, 1/32, and 1/64, respectively (Figure 2).

Figure 3.

The first column shows three clean images of size 129×129 pixels generated from a 50S ribosomal subunit volume with different orientations. The other three columns show three noisy images corresponding to those in the first column with SNR = 1/16, 1/32, and 1/64, respectively.

To measure the accuracy of the estimated orientations, we defined the mean squared error (MSE) of the estimated rotation matrices R̂1, …, R̂K to be

| (8.2) |

where Ô is the optimal solution to the registration problem between the two sets of rotations {R1, …, RK} and {R̂1, …, R̂K} in the sense of minimizing the MSE. As shown in [48], there is a simple procedure for obtaining both Ô and the MSE from the singular value decomposition of the matrix .
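The registration step can be sketched with a standard orthogonal Procrustes computation. The sketch below is an illustration and may differ in detail from the procedure of [48]: it assumes that the MSE is the average of ||R_i − Ô R̂_i||_F² over the K images and that Ô is restricted to rotations, and the function name `rotation_mse` is hypothetical.

```python
import numpy as np


def rotation_mse(R_true, R_est):
    """Register R_est to R_true by a global rotation O and return (mse, O).

    R_true, R_est: arrays of shape (K, 3, 3).
    Assumed error measure: (1/K) * sum_i ||R_i - O @ R_est_i||_F^2.
    """
    # M = sum_i R_i @ R_est_i^T; maximizing trace(O^T M) minimizes the MSE.
    M = np.einsum("kij,klj->il", R_true, R_est)
    U, _, Vt = np.linalg.svd(M)
    d = np.sign(np.linalg.det(U @ Vt))               # enforce det(O) = +1
    O = U @ np.diag([1.0, 1.0, d]) @ Vt
    diff = R_true - np.einsum("ij,kjl->kil", O, R_est)
    mse = np.mean(np.sum(diff ** 2, axis=(1, 2)))
    return mse, O
```

If the estimated rotations differ from the true ones only by a global rotation, the returned MSE is zero up to rounding, which is why the error measure is insensitive to the unavoidable global ambiguity.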

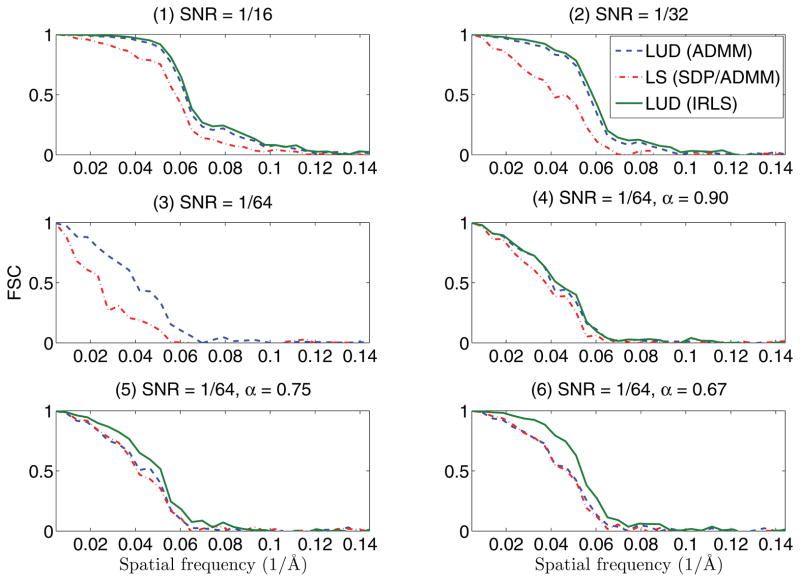

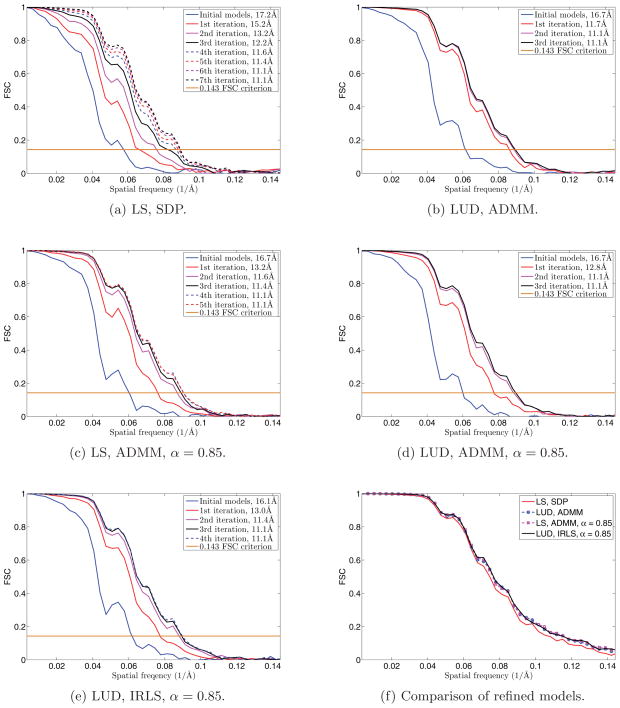

We applied the LS approach using SDP and ADMM, and the LUD approach using ADMM and IRLS to estimate the images’ orientations, then computed the MSEs of the estimated rotation matrices, and lastly reconstructed the volume (Figures 5 and 6). In order to measure the accuracy of the reconstructed volumes, we measured each volume’s FSC (8.1) (Figure 7) against the clean 50S ribosomal subunit volume; that is, in our measurement V1 was the reconstructed volume, and V2 was the “ground truth” volume.

Figure 7.

FSCs (8.1) of the reconstructed volumes against the clean volume in Figures 5 and 6. The plots of the correlations show that the LUD approach using ADMM and IRLS (denoted as the blue dashed lines and green solid lines) outperformed the LS approach using SDP or ADMM (denoted as the red dot-dashed lines). Note that all of the last four subfigures are results for images with SNR = 1/64, where the last three subfigures are results using different α for the spectral norm constraint. In the third subfigure (left to right, top to bottom), there is no green solid line for the LUD approach using IRLS, since the IRLS procedure without the spectral norm constraint converges to a solution where the estimated projection directions are highly clustered and no 3D reconstruction can be computed.

When SNR = 1/16 and 1/32, the common-line detection rate was relatively high (64% and 44%), and the algorithms without the spectral norm constraint on G were sufficient to give good estimates. The LUD approach using ADMM and IRLS outperformed the LS approach in terms of accuracy measured by MSE and FSC (Figures 5–7). Note that the LS approach using SDP failed when SNR = 1/32, while the LUD approach using either ADMM or IRLS succeeded. When SNR = 1/64, the common-line detection rate was relatively low (23%), and most of the detected common-lines were outliers (Figure 2). As a consequence, we observed that the algorithms without the spectral norm constraint ||G||2 ≤ αK did not work. In particular, the viewing directions of images estimated by the IRLS procedures without ||G||2 ≤ αK converged to two clusters around two antipodal directions, yielding no 3D reconstruction. The LUD approach using ADMM failed in this case; however, the IRLS procedure with an appropriate regularization on the spectral norm (i.e., α = 0.67 since the true rotations were uniformly sampled over SO(3)) gave the best reconstruction.

8.2. Experiments on a real dataset

A set of micrographs of E. coli 50S ribosomal subunits was provided by Dr. M. van Heel. These micrographs were acquired by a Philips CM20 at defocus values between 1.37 and 2.06μm, and they were scanned at 3.36 Å/pixel. The particles (particularly E. coli 50S ribosomal subunits) were picked using the automated particle picking algorithm in EMAN Boxer [24]. Then, using the IMAGIC software package [52, 58], the 27,121 particle images of size 90 × 90 pixels were phase-flipped to remove the phase-reversals in the contrast transfer function (CTF), bandpass filtered at 1/150 and 1/8.4 Å, normalized by their variances, and then translationally aligned with the rotationally averaged total sum. The particle images were randomly divided into two disjoint groups of equal number of images. The following steps were performed on each group separately.

The images were rotationally aligned and averaged to produce class averages of better quality, following the procedure detailed in [66]. For each group, the images were denoised and compressed using Fourier–Bessel-based steerable principal component analysis (FBSPCA) [65]. Then, triple products of Fourier–Bessel expansion coefficients obtained in FBSPCA were used to compute rotationally invariant features of the images, i.e., the bispectra [42, 21, 27]. For each image, an initial set of neighboring images was computed using the normalized cross-correlation of the bispectra, which was later refined using the method described in [49] to produce new sets of neighbors. Finally, we averaged each image with its ten nearest neighbors after alignment.⁵ Three examples of averaged images are shown in Figure 8.

Figure 8.

Noise reduction by image averaging. Three raw ribosomal images are shown in the first column. Their closest two neighbors (i.e., raw images having similar orientations after alignments) are shown in the second and third columns. The average images shown in the last column were obtained by averaging over 10 neighbors of each raw image.

One thousand class averages were randomly selected from each group. The LS and LUD approaches with and without the spectral norm constraint were applied. Two reconstructed volumes were obtained from the two groups of images. The two resulting volumes were aligned and averaged to obtain the ab initio model (Figure 9(a)). We observed that the LUD approach gives much more reasonable ab initio models than the LS approach. In addition, the FSC of the two volumes was computed to estimate the resolution of the ab initio model (Figure 10). Among all the ab initio models, the one obtained by LS has the lowest resolution, 17.2Å, while the one obtained by LUD through the IRLS procedure has the highest resolution, 16.1Å. Notice that the FSC measures the variance error but not the bias error of the ab initio model. We also notice that the viewing directions of images estimated by the IRLS procedures without the spectral norm constraint converged to two clusters around two antipodal directions, resulting in no 3D reconstruction. Moreover, for this dataset, adding the spectral norm constraint on G with α = 0.85 did not improve the accuracy of the result, although it helped to regularize the convergence of the IRLS procedure.

Figure 10.

Convergence of the refinement process. In panels (a)–(e), the FSC plots show the convergence of the refinement iterations. The ab initio models (Figure 9(a)) used in (a)–(e) were obtained by solving the LS/LUD problems using SDP/ADMM/IRLS. The numbers of refinement iterations performed in (a)–(e) are 7, 3, 5, 3, and 4, respectively. Panel (f) shows FSC plots of the refined models in (a), (b), (c), and (e) against the refined model in (d), which are measurements of similarities between the refined models in Figure 9(b).

The two resulting volumes were then iteratively refined using 10,000 raw images in each group. In each refinement iteration, 2,000 template images were generated by projecting the 3D model from the previous iteration, then the orientations of the raw images were estimated using reference-template matching, and finally a new 3D model was reconstructed from the 10,000 raw images with highest correlation with the reference images. Each refinement iteration took about four hours. Therefore, a good ab initio model should be able to accelerate the refinement process by reducing the total number of refinement iterations. The FSC plots in Figure 10(a)–10(e) show the convergence of the refinement process using different ab initio models. We observed that all the refined models are at the resolution of 11.1Å. However, the worst ab initio model obtained by LS needed 7 iterations (about 28 hours) for convergence (Figure 10(a)), while the best ab initio model obtained by LUD needed 3 iterations (about 12 hours) for convergence (Figure 10(b) and (d)). Figure 10(f) uses FSC plots to compare the refined models. We observed that the refined models in Figure 10(b)–10(e) were consistent with each other, while the refined model obtained by LS in Figure 10(a) was slightly different from the others.

The average CPU times for computing the ab initio models in these two subsections are shown in Table 1. It is not surprising to see that the LS approach was the fastest and that adding the spectral norm constraint slowed down the ADMM and IRLS procedures. The reason for this slowing is that a large portion of the CPU time in ADMM and IRLS is due to the projections onto the semidefinite cone. These steps are expected to be accelerated by the recent advance in eigenspace computation [63]. However, when using the LUD approach for the real dataset, the time saved in the refinement was about 16 hours, which is much more than the time cost for computing the ab initio models (about 0.5–1 hour when ADMM was used).

Table 1.

The average running time of the different algorithms on 500 and 1000 images in the two experimental subsections. The notation α = N/A means that no spectral norm constraint ||G||2 ≤ αK is used.

| K | α = N/A: LS (SDP) | α = N/A: LUD (ADMM) | α = N/A: LUD (IRLS) | With spectral norm constraint: LS (ADMM) | With spectral norm constraint: LUD (ADMM) | With spectral norm constraint: LUD (IRLS) |
|---|---|---|---|---|---|---|
| 500 | 7 s | 266 s | 469 s | 78 s | 454 s | 3353 s |
| 1000 | 31 s | 1864 s | 3913 s | 619 s | 1928 s | 20918 s |

9. Discussion

To estimate image orientations, we introduced a robust self-consistency error and used the ADMM or IRLS procedure to solve the associated LUD problem after SDR. Numerical experiments demonstrate that the solution is less sensitive to outliers in the detected common-lines than is the LS approach. In addition, when the common-line detection rate is low, the spectral norm constraint on the Gram matrix G can help to tighten the semidefinite relaxation, and thus improves the accuracy of the estimated rotations in some cases. Moreover, the numerical experiments using the real dataset (section 8.2) demonstrate that the ab initio models resulting from the LUD-based methods are more accurate than initial models that result from least squares–based methods. In particular, our initial models require fewer time-consuming refinement iterations. We note that it is also possible to consider other self-consistency errors involving the unsquared deviations raised to some power p (e.g., the cases p = 1, 2 correspond to LUD and LS, respectively). We observed that the accuracy of the estimated orientations can be improved by using p < 1, provided that the initial guess is “sufficiently good.” The LUD approach and the spectral norm constraint on G can be generalized to the synchronization approach to estimate the images’ orientations [46].

Both LS and LUD-based algorithms have weaknesses and strengths in applications. The LS method is much faster than the LUD method, while the LUD method is more robust to outliers in the detected common-lines than is the LS method. Moreover, adding a spectral norm constraint can tighten the convex relaxation and thus improve the accuracy of the result, while it slows down the whole procedure. Based on the observations in our numerical experiments, we recommend using the LS method, the LUD method, and both methods with spectral norm constraints, respectively, for images with SNR from high to low. However, it is hard to quantify the actual value of SNR, due to the unknown 3D structure, and to choose a method accordingly. We suggest trying the LS method without any spectral norm constraint first, and then examining the top four or five eigenvalues of the Gram matrix G to see whether G has three dominant eigenvalues. If the spectrum of G is reasonable, we can examine the ab initio model that is reconstructed using the estimated rotations. If one of the two examinations fails, the LUD and the spectral norm constraint can be considered to improve the result. One can also experiment with different orientation determination algorithms and choose the result with the highest likelihood score. Once an ab initio estimation of orientations is given, it is possible to evaluate the likelihood score of the estimation in one of two ways: (1) determine the “common-lines” by the estimated rotations, compute the Fourier coefficients in the common-lines, and then compute the sum of squared distances between the implied common-lines in the Fourier space; or (2) reconstruct a 3D volume using the estimated rotations and compute the sum of deviations between the original input 2D images and the corresponding 2D projections of the 3D volume (i.e., the reprojection error).
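The suggested spectrum check is straightforward to automate. The following NumPy sketch is illustrative only: it lists the largest eigenvalues of a symmetric Gram matrix and flags whether the third eigenvalue clearly dominates the fourth. The function names and the `ratio` threshold are hypothetical choices; the text does not prescribe a numerical criterion.

```python
import numpy as np


def top_eigenvalues(G, k=5):
    """Return the k largest eigenvalues of a symmetric matrix, in descending order."""
    lam = np.linalg.eigvalsh(G)          # ascending order
    return lam[::-1][:k]


def has_three_dominant(G, ratio=10.0):
    """Heuristic check that G has three dominant eigenvalues.

    `ratio` is an assumed threshold: the third eigenvalue must exceed
    `ratio` times the fourth (negative rounding noise is clipped to zero).
    """
    lam = top_eigenvalues(G, 4)
    return bool(lam[2] > ratio * max(lam[3], 0.0))
```

A Gram matrix built from the first two columns of K rotation matrices has rank 3, so it passes this check, whereas a matrix with a flat spectrum does not.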

In [61], the LUD approach is shown to be more robust than the LS approach for the synchronization problem over the rotation group SO(d). Given some relative rotations , the synchronization problem is to estimate the rotations Ri ∈ SO(d), i = 1, …, K, up to a global rotation. It is verified that under a specific model of the measurement noise and the measurement graph for , the rotations can be exactly and stably recovered using LUD, exhibiting a phase transition behavior in terms of the proportion of noisy measurements. The problem of orientation determination using common-lines between cryo-EM images is similar to the synchronization problem. The difference is that the pairwise information given by the relative rotation is full, while that given by the common-lines is partial. Moreover, the measurement noise of each detected common-line c⃗ij depends on images i and j, and thus it cannot be simply modeled, which makes it difficult to verify the conditions for the exact and stable orientation determination that we observed.

Acknowledgments

This author was partially supported by NSFC grants 11101274 and 11322109.

The authors would like to thank Zhizhen Zhao for producing class averages from the experimental ribosomal images. The authors would also like to thank the SIIMS Associate Editor and three anonymous referees for their detailed and valuable comments and suggestions.

Appendix. Exact recovery of the Gram matrix G from correct common-lines

Here we prove that if the detected common-lines c⃗ji (defined in (3.1)) are all correct and at least three images have linearly independent projection directions (i.e., the viewing directions of the three images are not on the same great circle on the sphere shown in Figure 4), then the Gram matrix G obtained by solving the LS problem (4.6)–(4.8) or the LUD problem (4.10) is uniquely that defined in (4.3). To verify the uniqueness of the solution G, it is enough to show rank(G)= 3 due to the SDP solution uniqueness theorem (see [7, pp. 36–39], [68]). Without loss of generality, we consider the SDP for the LS approach when applied on three images (i.e., K = 3 and wij = 1 in the problem (4.6)–(4.8)):

Since the solution G is positive semidefinite, we can decompose G as

where , p = 1, 2 and i = 1, 2, 3, are column vectors. We will show rank(G)= 3; i.e., any four vectors among { } span a space with dimensionality at most 3.

Define arrays ui as

then the inner product

where the last inequality follows from the Cauchy–Schwarz inequality and the facts that all c⃗ij are unit vectors, and are unit vectors and orthogonal to each other due to the constraint Gii = I2, and thus all are unit vectors on the Fourier slices of the images. The equality holds if and only if

| (A.1) |

Thus when the maximum is achieved, due to (A.1) and the fact that the projection directions of the images are linearly independent, , and thus . Therefore, without loss of generality, we only have to show that . Using (A.1), assume that and , where v1 and v2 are linearly independent vectors (otherwise all three projection directions are linearly dependent, and thus the three Fourier slices of the images intersect at the same line). Therefore we have , and . Thus .

Footnotes

The work of the first and second authors was partially supported by awards FA9550-12-1-0317 and FA9550-13-1-0076 from AFOSR, by award R01GM090200 from the NIGMS, and by award LTR DTD 06-05-2012 from the Simons Foundation.

The 3D subspace can also be spanned by the eigenvectors associated with the top three eigenvalues of G, while the fourth largest eigenvalue is expected to be significantly smaller; see also [48].

The viewing direction is the third column of the underlying rotation matrix.

The primal infeasibility and dual infeasibility are the residuals in the primal and dual equality constraints.

The FIRM package is available at https://web.math.princeton.edu/~lanhuiw/software.html.

We observed that, using either the LS or LUD method, the initial model reconstructed from 200 averaged images, which are averaged over 50 nearest neighbors, has the highest resolution, 11.1Å, that we can achieve. However, averaging too many neighbors may result in oversmoothing.

Contributor Information

Amit Singer, Email: amits@math.princeton.edu.

Zaiwen Wen, Email: wenzw@math.pku.edu.cn.

References

- 1. Arun KS, Huang TS, Blostein SD. Least-squares fitting of two 3-D point sets. IEEE Trans Pattern Anal Mach Intell. 1987;9:698–700. doi: 10.1109/tpami.1987.4767965.

- 2. Bai X, Fernandez IS, McMullan G, Scheres SHW. Ribosome structures to near-atomic resolution from thirty thousand cryo-EM particles. eLife Sciences. 2013;2:e00461. doi: 10.7554/eLife.00461.

- 3. Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; New York: 2004.

- 4. Burer S, Monteiro RDC. A nonlinear programming algorithm for solving semidefinite programs via low-rank factorization. Math Program. 2003;95:329–357.

- 5. Candès EJ, Wakin MB, Boyd SP. Enhancing sparsity by reweighted ℓ1 minimization. J Fourier Anal Appl. 2008;14:877–905.

- 6. Daubechies I, DeVore R, Fornasier M, Güntürk CS. Iteratively reweighted least squares minimization for sparse recovery. Comm Pure Appl Math. 2010;63:1–38.

- 7. de Klerk E. Applied Optimization. Springer; New York: 2002. Aspects of Semidefinite Programming: Interior Point Algorithms and Selected Applications.

- 8. Donoho DL, Johnstone IM. Adapting to unknown smoothness via wavelet shrinkage. J Amer Statist Assoc. 1995;90:1200–1224.

- 9. Dutt A, Rokhlin V. Fast Fourier transforms for nonequispaced data. SIAM J Sci Comput. 1993;14:1368–1393.

- 10. Farrow NA, Ottensmeyer FP. A posteriori determination of relative projection directions of arbitrarily oriented macromolecules. J Opt Soc Amer A. 1992;9:1749–1760.

- 11. Fessler JA, Sutton BP. Nonuniform fast Fourier transforms using min-max interpolation. IEEE Trans Signal Process. 2003;51:560–574.

- 12. Frank J. Three Dimensional Electron Microscopy of Macromolecular Assemblies. Academic Press; New York: 1996.

- 13. Frank J. Cryo-electron microscopy as an investigative tool: The ribosome as an example. BioEssays. 2001;23:725–732. doi: 10.1002/bies.1102.

- 14. Frank J. Single-particle imaging of macromolecules by cryo-electron microscopy. Annu Rev Biophys Biomol Struct. 2002;31:303–319. doi: 10.1146/annurev.biophys.31.082901.134202.

- 15. Gilbert P. Iterative methods for the three-dimensional reconstruction of an object from projections. J Theoret Biol. 1972;36:105–117. doi: 10.1016/0022-5193(72)90180-4.

- 16. Goemans MX, Williamson DP. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J ACM. 1995;42:1115–1145.

- 17. Gordon R, Bender R, Herman GT. Algebraic reconstruction techniques (ART) for three-dimensional electron microscopy and X-ray photography. J Theoret Biol. 1970;29:471–481. doi: 10.1016/0022-5193(70)90109-8.

- 18. Greengard L, Lee JY. Accelerating the nonuniform fast Fourier transform. SIAM Rev. 2004;46:443–454.

- 19. Harauz G, van Heel M. Exact filters for general geometry three dimensional reconstruction. Optik. 1986;73:146–156.

- 20. Hong M, Luo Z-Q. On the Linear Convergence of the Alternating Direction Method of Multipliers. Preprint. 2012. http://arxiv.org/abs/1208.3922.

- 21. Kondor RI. A Complete Set of Rotationally and Translationally Invariant Features for Images. 2007. http://arxiv.org/abs/cs/0701127.

- 22. Lebart L, Morineau A, Warwick KM. Wiley Ser Prob Math Statist. Wiley-Interscience; New York: 1984. Multivariate Descriptive Statistical Analysis: Correspondence Analysis and Related Techniques for Large Matrices.

- 23. Lerman G, McCoy M, Tropp JA, Zhang T. Robust Computation of Linear Models, or How to Find a Needle in a Haystack. 2012. http://arxiv.org/abs/1202.4044.

- 24. Ludtke SJ, Baldwin PR, Chiu W. EMAN: Semiautomated software for high-resolution single-particle reconstructions. J Struct Biol. 1999;128:82–97. doi: 10.1006/jsbi.1999.4174.

- 25. Luo Z, Ma W, So A, Ye Y, Zhang S. Semidefinite relaxation of quadratic optimization problems. IEEE Signal Process Mag. 2010;27:20–34.

- 26. Mallick SP, Agarwal S, Kriegman DJ, Belongie SJ, Carragher B, Potter CS. Computer Vision and Pattern Recognition, Proceedings of the 2006 IEEE Computer Society Conference. Vol. 2. IEEE Press; Piscataway, NJ: 2006. Structure and view estimation for tomographic reconstruction: A Bayesian approach; pp. 2253–2260.

- 27. Marabini R, Carazo JM. On a new computationally fast image invariant based on bispectral projections. Pattern Recognition Lett. 1996;17:959–967.

- 28. Marabini R, Herman GT, Carazo JM. 3D reconstruction in electron microscopy using ART with smooth spherically symmetric volume elements (blobs). Ultramicroscopy. 1998;72:53–65. doi: 10.1016/s0304-3991(97)00127-7.

- 29. Mezzadri F. How to generate random matrices from the classical compact groups. Notices Amer Math Soc. 2007;54:592–604.

- 30. Mohan K, Fazel M. Iterative reweighted algorithms for matrix rank minimization. J Mach Learning Res. 2012;13:3441–3473.

- 31. Natterer F. Classics in Appl Math. Vol. 32. SIAM; Philadelphia: 2001. The Mathematics of Computerized Tomography.

- 32. Nyquist H. Least orthogonal absolute deviations. Comput Statist Data Anal. 1988;6:361–367.

- 33. Penczek P, Grassucci R, Frank J. The ribosome at improved resolution: New techniques for merging and orientation refinement in 3D cryo-electron microscopy of biological particles. Ultramicroscopy. 1994;53:251–270. doi: 10.1016/0304-3991(94)90038-8.

- 34. Penczek P, Radermacher M, Frank J. Three-dimensional reconstruction of single particles embedded in ice. Ultramicroscopy. 1992;40:33–53.

- 35. Penczek P, Renka R, Schomberg H. Gridding-based direct Fourier inversion of the three-dimensional ray transform. J Opt Soc Amer A. 2004;21:499–509. doi: 10.1364/josaa.21.000499.

- 36. Penczek PA, Grassucci RA, Frank J. The ribosome at improved resolution: New techniques for merging and orientation refinement in 3D cryo-electron microscopy of biological particles. Ultramicroscopy. 1994;53:251–270. doi: 10.1016/0304-3991(94)90038-8.

- 37. Penczek PA, Zhu J, Frank J. A common-lines based method for determining orientations for N > 3 particle projections simultaneously. Ultramicroscopy. 1996;63:205–218. doi: 10.1016/0304-3991(96)00037-x.

- 38. Pettersen EF, Goddard TD, Huang CC, Couch GS, Greenblatt DM, Meng EC, Ferrin TE. UCSF Chimera—A visualization system for exploratory research and analysis. J Comput Chem. 2004;25:1605–1612. doi: 10.1002/jcc.20084.

- 39. Radermacher M. Electron Tomography. Springer; New York: 2006. Weighted back-projection methods; pp. 245–273.

- 40. Radermacher M, Wagenknecht T, Verschoor A, Frank J. A new 3-D reconstruction scheme applied to the 50S ribosomal subunit of E. coli. J Microscopy. 1986;141:RP1–2. doi: 10.1111/j.1365-2818.1986.tb02693.x.

- 41. Recht B, Fazel M, Parrilo PA. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 2010;52:471–501.

- 42. Sadler BM, Giannakis GB. Shift- and rotation-invariant object reconstruction using the bispectrum. J Opt Soc Amer A. 1992;9:57–69.

- 43. Saxton WO, Baumeister W. The correlation averaging of a regularly arranged bacterial cell envelope protein. J Microscopy. 1982;127:127–138. doi: 10.1111/j.1365-2818.1982.tb00405.x.

- 44. Scheres SHW, Chen S. Prevention of overfitting in cryo-EM structure determination. Nature Methods. 2012;9:853–854. doi: 10.1038/nmeth.2115.

- 45. Serysheva II, Orlova EV, Chiu W, Sherman MB, Hamilton SL, van Heel M. Electron cryomicroscopy and angular reconstitution used to visualize the skeletal muscle calcium release channel. Nature Struct Biol. 1995;2:18–24. doi: 10.1038/nsb0195-18.

- 46. Shkolnisky Y, Singer A. Viewing direction estimation in cryo-EM using synchronization. SIAM J Imaging Sci. 2012;5:1088–1110. doi: 10.1137/120863642.

- 47. Singer A, Coifman RR, Sigworth FJ, Chester DW, Shkolnisky Y. Detecting consistent common lines in cryo-EM by voting. J Struct Biol. 2010;169:312–322. doi: 10.1016/j.jsb.2009.11.003.

- 48. Singer A, Shkolnisky Y. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM J Imaging Sci. 2011;4:543–572. doi: 10.1137/090767777.

- 49. Singer A, Zhao Z, Shkolnisky Y, Hadani R. Viewing angle classification of cryo-electron microscopy images using eigenvectors. SIAM J Imaging Sci. 2011;4:723–759. doi: 10.1137/090778390.

- 50. So A, Zhang J, Ye Y. On approximating complex quadratic optimization problems via semidefinite programming relaxations. Math Program. 2007;110:93–110.

- 51. Späth H, Watson GA. On orthogonal linear approximation. Numer Math. 1987;51:531–543.

- 52. Stark H, Rodnina MV, Wieden H, Zemlin F, Wintermeyer W, van Heel M. Ribosome interactions of aminoacyl-tRNA and elongation factor Tu in the codon-recognition complex. Nature Struct Mol Biol. 2002;9:849–854. doi: 10.1038/nsb859.

- 53. Vainshtein B, Goncharov A. Determination of the spatial orientation of arbitrarily arranged identical particles of an unknown structure from their projections. Proceedings of the 11th International Congress on Electron Microscopy; Tokyo: Japanese Society for Electron Microscopy; 1986. pp. 459–460.

- 54. van Heel M. Multivariate statistical classification of noisy images (randomly oriented biological macromolecules). Ultramicroscopy. 1984;13:165–183. doi: 10.1016/0304-3991(84)90066-4.

- 55. van Heel M. Angular reconstitution: A posteriori assignment of projection directions for 3D reconstruction. Ultramicroscopy. 1987;21:111–123. doi: 10.1016/0304-3991(87)90078-7.

- 56. van Heel M, Frank J. Use of multivariate statistics in analysing the images of biological macromolecules. Ultramicroscopy. 1981;6:187–194. doi: 10.1016/0304-3991(81)90059-0.

- 57. van Heel M, Gowen B, Matadeen R, Orlova EV, Finn R, Pape T, Cohen D, Stark H, Schmidt R, Schatz M, Patwardhan A. Single-particle electron cryo-microscopy: Towards atomic resolution. Quart Rev Biophys. 2000;33:307–369. doi: 10.1017/s0033583500003644.

- 58. van Heel M, Harauz G, Orlova EV, Schmidt R, Schatz M. A new generation of the IMAGIC image processing system. J Struct Biol. 1996;116:17–24. doi: 10.1006/jsbi.1996.0004.

- 59. Vonesch C, Wang L, Shkolnisky Y, Singer A. Fast wavelet-based single-particle reconstruction in Cryo-EM. Biomedical Imaging: From Nano to Macro; Proceedings of the 2011 IEEE International Symposium; Piscataway, NJ: IEEE Press; 2011. pp. 1950–1953.

- 60. Wang L, Sigworth FJ. Cryo-EM and single particles. Physiology. 2006;21:13–18. doi: 10.1152/physiol.00045.2005.

- 61. Wang L, Singer A. Exact and stable recovery of rotations for robust synchronization. Inform and Inference. 2013; to appear. doi: 10.1093/imaiai/iat005.

- 62. Wen Z, Goldfarb D, Yin W. Alternating direction augmented Lagrangian methods for semidefinite programming. Math Program Comput. 2010;2:203–230.

- 63. Wen Z, Yang C, Liu X, Zhang Y. Trace-penalty minimization for large-scale eigenspace computation. Optimization Online. 2013. http://www.optimization-online.org/DBHTML/2013/03/3799.html.

- 64. Zhang T, Lerman G. A Novel M-Estimator for Robust PCA. arXiv preprint. 2012. http://arxiv.org/abs/1112.4863.

- 65. Zhao Z, Singer A. Fourier-Bessel rotational invariant eigenimages. J Opt Soc Amer A. 2013;30:871–877. doi: 10.1364/JOSAA.30.000871.

- 66. Zhao Z, Singer A. Rotationally Invariant Image Representation for Viewing Angle Classification. 2013; submitted. Available at http://arxiv.org/abs/1309.7643. doi: 10.1016/j.jsb.2014.03.003.

- 67. Zhu Y, Carragher B, Glaeser RM, Fellmann D, Bajaj R, Bern M, Mouche F, Haas FD, Hall RJ, Kriegman DJ, Ludtke C, Mallick SP, Penczek PA, Roseman AM, Sigworth FJ, Potter CS. Automatic particle selection: Results of a comparative study. J Struct Biol. 2004;145:3–14. doi: 10.1016/j.jsb.2003.09.033.

- 68. Zhu Z, So AMC, Ye Y. Universal rigidity and edge sparsification for sensor network localization. SIAM J Optim. 2010;20:3059–3081.