Abstract

Guided by a comprehensive implementation model, this study examined training/implementation processes for a tailored contingency management (CM) intervention instituted at a Clinical Trials Network-affiliate opioid treatment program (OTP). Staff-level training outcomes (intervention delivery skill, knowledge, and adoption readiness) were assessed before and after a 16-hour training, and again following a 90-day trial implementation period. Management-level implementation outcomes (intervention cost, feasibility, and sustainability) were assessed at study conclusion in a qualitative interview with OTP management. Intervention effectiveness was also assessed via independent chart review of trial CM implementation vs. a historical control period. Results included: 1) robust, durable increases in delivery skill, knowledge, and adoption readiness among trained staff; 2) positive managerial perspectives of intervention cost, feasibility, and sustainability; and 3) significant clinical impacts on targeted patient indices. Collective results offer support for the study’s collaborative intervention design and the applied, skills-based focus of staff training processes. Implications for CM dissemination are discussed.

Keywords: Contingency management, Dissemination, Implementation science

1. Introduction

A schism between treatment research and community-based practice, identified by the Institute of Medicine (IOM, 1998) 15 years ago, continues to plague healthcare delivery efforts. Challenges in disseminating empirically-supported approaches are particularly acute in addiction treatment settings, where this gap is disproportionately large (Brown, 2000; Compton et al., 2005; McLellan, Carise, & Kleber, 2003). One large-scale bridging effort is NIDA’s Clinical Trials Network [CTN; (Hanson, Leshner, & Tai, 2002)], which enables multisite trials of promising treatments at community clinics. Other prominent efforts seek to expose community treatment personnel to empirically-supported treatments via SAMHSA’s National Registry of Evidence Based Programs and Practices (www.nrepp.samhsa.gov), regional Addiction Technology Transfer Centers, and a joint effort with NIDA to develop clinician-friendly ‘blending products’ (Martino et al., 2010). Nevertheless, one national estimate suggests that just 11% of U.S. treatment-seekers receive empirically-supported treatment (McGlynn et al., 2003).

Contingency management (CM) is a cogent example of an empirically-supported method of treating substance abuse for which dissemination has proven challenging [for review, see Hartzler, Lash, & Roll (2012)]. CM encompasses a family of behavioral reinforcement systems, and Petry (2012) notes as binding tenets of contemporary CM methods that: 1) a focal, desired patient behavior be closely monitored, 2) a tangible, positive reinforcer be immediately provided when the behavior occurs, and 3) the reinforcer be withheld when the behavior does not occur. Meta-analyses document reliable, small-to-medium therapeutic effects with substance abusers (Dutra et al., 2008; Griffith, Rowan-Szal, Roark, & Simpson, 2000; Lussier, Heil, Mongeon, Badger, & Higgins, 2006; Prendergast, Podus, Finney, Greenwell, & Roll, 2006). Greater staff receptivity to CM is found in opioid treatment programs (OTPs), where opiate-dependent patients access a daily dose of agonist medication and medical/psychosocial support services (Fuller et al., 2007; Hartzler et al., 2012). Regarding community effectiveness, a CTN trial with six OTPs found that CM increased treatment adherence among 388 patients (Peirce et al., 2006). Despite this widely-cited trial, just 12% of CTN clinics report sustained implementation (Roman, Abraham, Rothrauff, & Knudsen, 2010), and comparatively less interest and implementation are noted outside the CTN (Hartzler & Rabun, 2013a, 2013b). As more than 100 published RCTs support CM efficacy, dissemination efforts will benefit from trials focused on implementation issues. Indeed, pressing needs for scientific attention to clinician training are gaining broad recognition (Beidas & Kendall, 2010; Herschell, Kolko, Baumann, & Davis, 2010; McHugh & Barlow, 2010).

Historically, many CM approaches have been validated in OTPs. Early methods offered patients the convenience and autonomy of take-home medication doses to reinforce drug abstinence (Milby, Garrett, English, Fritschi, & Clarke, 1978; Stitzer, Bigelow, & Liebson, 1980; Stitzer et al., 1977). Contemporary methods, promoted under a motivational incentives moniker, use monetary reinforcers and derive from hallmark studies of voucher-based CM by Higgins et al. (1993, 1994) and the ‘fishbowl technique’ of earning prize draws described by Petry, Martin, Cooney, and Kranzler (2000). As the salience of psychosocial support for OTP patients gained international recognition (WHO, 2004), CM methods increasingly targeted counseling attendance (Alessi, Hanson, Wieners, & Petry, 2007; Jones, Haug, Silverman, Stitzer, & Svikis, 2001; Ledgerwood, Alessi, Hanson, Godley, & Petry, 2008). Academicians may debate the optimal patient behavior to target or type of reinforcer to offer, but ultimately treatment professionals’ opinions guide whether and how CM is disseminated. To that end, OTPs appear well-served by targeting patient behaviors that are meaningful in their setting and devising CM systems matched to their implementation capacity in terms of affordability, patient interest, and logistical compatibility with existing clinic services.

Effective dissemination of CM may be guided by implementation science models that incorporate real-world systems issues (Damschroder & Hagedorn, 2011; Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Rogers, 2003). Often overshadowed by patient outcomes in controlled treatment trials, such issues are the focal outcomes of staff training and implementation activities. Proctor et al. (2011) define these implementation outcomes as “effects of deliberate and purposive actions to implement new treatments, practices, and services” (p. 65). Their corresponding taxonomy includes an intervention’s: 1) acceptability, or philosophical palatability among staff, 2) appropriateness, or setting compatibility for staff, 3) fidelity, or staff knowledge and skill to deliver as intended, 4) adoption, or staff intent to use, 5) cost, or the clinic resources required to implement, 6) feasibility, or clinic navigation of logistical hurdles, 7) sustainability, or its maintenance as a stable clinic service, and 8) penetration, or its integration into regular clinic practice by staff. The unit of analysis for some domains (acceptability, appropriateness, fidelity, and adoption) is the individual staff member, who can provide quantifiable self-report or skill demonstration at repeated points. Other domains (cost, feasibility, sustainability, and penetration) treat the OTP as the unit, and can be assessed by qualitative management report or independent review of clinic records after a trial period of implementation. As suggested in a psychotherapy review (Beidas & Kendall, 2010), implementation is well-suited to mixed-method evaluation.

Extant literature on CM dissemination to the treatment community, dominated by the widely-promoted motivational incentives methods, may inform expectations about staff-focused implementation domains. A bleak picture is painted of attitudes toward these CM methods, with lower acceptability and perceived appropriateness than other therapeutic approaches (Bride, Abraham, & Roman, 2010; Herbeck, Hser, & Teruya, 2008; McCarty et al., 2007; McGovern, Fox, Xie, & Drake, 2004). Prevailing objections include perceived inefficacy, procedural confusion, and philosophical incongruence (Ducharme, Knudsen, Abraham, & Roman, 2010; Kirby, Benishek, Dugosh, & Kerwin, 2006; Ritter & Cameron, 2007), with routine use of CM supported by just 27% of addiction treatment staff (Benishek, Kirby, Dugosh, & Padovano, 2010). Staff members’ clinic role appears to moderate such attitudes, with greater appeal voiced by those in managerial positions (Ducharme et al., 2010; Henggeler et al., 2008; Kirby et al., 2006). Opposition by direct-care staff may be overcome via championing by agency leadership (Kellogg et al., 2005), with strong training attendance observed after simple forms of executive advocacy (Henggeler, Chapman, et al., 2008). Direct training exposure does appear to enhance CM attitudes, knowledge, and adoption among counseling staff in the related field of criminal justice (Henggeler, Chapman, Rowland, Sheidow, & Cunningham, 2013). A broader training literature also notes the importance of active learning strategies, specifically expert demonstration and applied trainee practice, in developing new clinical expertise (Beidas & Kendall, 2010; Cucciare, Weingardt, & Villafranca, 2008; Herschell et al., 2010). To that end, performance-based feedback has been suggested to reduce staff drift in procedural CM adherence (Petry, Alessi, & Ledgerwood, 2012) and to enable longitudinal improvement in delivery skill (Henggeler, Sheidow, Cunningham, Donohoe, & Ford, 2008).
Taken together, it appears that staff-based implementation outcomes may be enhanced if CM training: 1) is advocated by management, 2) addresses concerns of direct-care staff, and 3) focuses on building clinical competencies through active learning strategies.

Prior research also informs expectations about management-focused CM implementation domains. As core costs (e.g., staff time, purchase of reinforcers) are a common reason clinics forgo adoption (Roman et al., 2010; Walker et al., 2010), CM interventions must be affordable in the eyes of the clinics adopting them. Another common sentiment is that clinical methods be adopted only if ‘they don’t conflict with treatments already in place’ (Haug, Shopshire, Tajima, Gruber, & Guydish, 2008). In this respect, CM systems that rely on staff to perform foreign or complicated tasks (such as dense arithmetic calculations), even if efficacious in controlled trials, are likely to prompt logistical problems (Chutuape, Silverman, & Stitzer, 1998; Petry et al., 2001). One way to address potential fiscal constraints and logistical incompatibilities is to engage clinic management in CM intervention design (Kellogg et al., 2005; Squires, Gumbley, & Storti, 2008). This allows an OTP to tailor a CM system to its fiscal and logistical implementation capacities, thereby enhancing the likelihood of sustainability and breadth of staff penetration. To support sustainment of a CM intervention once designed, conceptual primers and procedural descriptions enhance knowledge acquisition and adoption readiness (Benishek et al., 2010; Ledgerwood et al., 2008). Thus, the impact of CM trainings may be augmented if curricula include reproducible aids for staff to reference as needed in their daily work. This collective literature suggests that positive managerial implementation outcomes are more likely achieved for a CM intervention if: 1) management contributes to its design, 2) it is compatible with clinic fiscal and logistical constraints, 3) foreign or complicated staff procedures are avoided, and 4) the training curriculum includes reproducible materials for staff to later reference as needed.

Guided by a widely-cited implementation framework (Proctor et al., 2011), the current study evaluates a range of implementation and clinical impacts of CM training delivered to staff at a community OTP. This mixed-method trial evaluated staff-focused domains over time (prior to, immediately following, and 3 months after training) in a design accounting for potential assessment reactivity, and examined qualitative report of management-focused domains after a 90-day implementation period. The OTP’s executive director was enlisted in a collaborative intervention design process, specifying a target behavior, target population, reinforcers, and reinforcement system. Resulting clinical impacts were assessed via independent chart review and comparison with a historical control period. Herein, processes of intervention design and staff training are described, followed by evaluation of: 1) immediate and eventual impacts of staff training on CM delivery skills, knowledge, attitudes, and adoption readiness, 2) management perspectives of intervention affordability, feasibility, and sustainability after a 90-day period of implementation, and 3) intervention effects on the treatment adherence of targeted patients.

2. Materials and method

2.1. Trial Design

The mixed factorial design of this training and implementation trial includes three salient features. First, within-subjects analyses of temporal changes in four staff-focused domains (acceptability, appropriateness, adoption, and fidelity) examined potential assessment reactivity, by way of random assignment of counselors to a single or multiple baseline assessment condition, as well as immediate and eventual impacts of training. The omission of a waitlist or no-training control group was a pragmatic design choice, given the finite number of counseling staff available to participate and the risk of contamination effects in their common work setting. It did, however, preclude between-group experimental comparisons of training impact. Second, management-focused domains (cost, feasibility, and sustainability) were examined via qualitative report of a subset of managerial staff at the end of the trial implementation period. Given the nature of the information of interest, qualitative narrative reports of managerial implementation experience were thought preferable to potentially reductionistic quantitative alternatives. Third, review of clinic records enabled comparison of OTP patients who received CM with a historical control group, as well as calculation of CM intervention penetration among staff. This also was a pragmatic design choice, one allowing the clinic to implement its CM intervention with all (rather than a randomized subset) of target patients but necessitating quasi-experimental comparisons. Taken together, these three design features, while introducing complexity and experimental caveats, address issues highlighted in models of implementation science (Damschroder & Hagedorn, 2011; Lash et al., 2013; Proctor et al., 2011) using methods suggested in recent training literature reviews (Fairburn & Cooper, 2011; Herschell et al., 2010).

2.2. Clinic Selection

A key consideration was how a collaborating OTP would be selected, as the aim was to partner with a clinic possessing attributes suggestive of potential to effectively implement CM. Prior research has identified the following clinic-level predictors of favorable CM adoption attitudes: CTN affiliation, non-profit corporation status, stable clinician staffing, e-communication capacity, and a clinic culture tolerant of stress and open to new clinical methods (Hartzler, Donovan, et al., 2012; Henggeler, Chapman, et al., 2008; Squires et al., 2008). Accordingly, the PI accessed SAMHSA- and CTN-sponsored websites to identify local OTPs and review many of these clinic-level attributes. Once a top candidate was identified, its executive director was contacted about study collaboration. The selected clinic is a private, non-profit OTP located in an urban area of a large U.S. city, with a census of 1000 patients receiving agonist medication, individual/group counseling, and monthly drug screen urinalysis (UA). As a CTN-affiliate clinic, it had previously participated in multisite trials of other treatment innovations. Other desired attributes (staffing pattern, e-communication capacity, and organizational culture) were evident from the OTP’s involvement in prior research studies (Baer et al., 2009; Hartzler & Rabun, 2013a).

2.3. Intervention Description

As clinic engagement in CM intervention design appears to promote implementation (Kellogg et al., 2005; Squires et al., 2008), the executive director was invited to specify several key features. These were: 1) a target population of introductory phase patients, or those in the initial 90 days of OTP services, 2) a target behavior of attendance at individual counseling visits, occurring on a weekly basis during this introductory treatment phase, 3) $5 gift cards to local vendors and take-home doses as the available reinforcers, and 4) a simple, ‘point-based’ token economy as the reinforcement system. Once these features were determined, the PI devised a reinforcement schedule incorporating two specific operant conditioning principles (priming and reinforcement escalation/reset) to enhance potential clinical impact. The resulting intervention design (outlined below) was then reviewed with the executive director, with a focus on anticipating and resolving potential fiscal or logistical implementation challenges for the OTP.

Consistent with suggestions in the literature (Haug et al., 2008), a premium was placed on creating a CM intervention compatible with existing clinic operations. Accordingly, staff members were to monitor the target behavior, track earned points, and deliver reinforcers amidst usual care in their weekly counseling visits with introductory phase patients. Upon stabilization of agonist medication, newly-enrolled patients were notified in writing of the CM ‘point system’ related to counseling attendance and scheduled for an initial visit with an assigned counselor. At this initial visit, the counselor confirmed the patient’s understanding of the CM system, clarifying details as needed. Patients earned two points per weekly visit attended, which could be accumulated or exchanged for a gift card (4 points) or a take-home medication dose (10 points, with the date scheduled in advance and with appropriate clinic safeguards). To promote initiation of attendance, a priming feature enabled patients to earn two bonus points at the initial counseling visit (allowing an immediate gift card exchange). To promote continuity of attendance, a limited escalation/reset feature enabled patients to earn a bonus point at each consecutively attended visit. With these provisions, a patient could earn up to 40 points in the 13-week introductory treatment phase (26 points for 13 attended visits, 2 priming points, and 12 consecutive-attendance bonus points), equivalent to ten $5 gift cards or four take-home doses. To aid tracking, the OTP’s electronic medical record system was adapted to include documentation of the patient’s point total (and any reinforcers provided) in the note corresponding to each individual counseling visit.
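To make the point rules concrete, a minimal Python sketch follows. It is an illustration, not the clinic’s actual record-keeping logic: the constant and function names are hypothetical, and it assumes that the priming bonus applies only when the first weekly visit is attended and that a missed visit resets the consecutive-attendance bonus, so the bonus resumes only once two visits in a row are again attended.

```python
# Hypothetical encoding of the point system described above.
GIFT_CARD_COST = 4    # points per $5 gift card
TAKE_HOME_COST = 10   # points per take-home dose
POINTS_PER_VISIT = 2
PRIMING_BONUS = 2     # extra points for attending the initial visit (assumed: week 1 only)
STREAK_BONUS = 1      # flat (non-growing) extra point for a consecutively attended visit


def points_earned(attended: list[bool]) -> int:
    """Total points for a sequence of weekly visits (True = attended).

    Assumption: a visit earns the streak bonus only if the preceding weekly
    visit was also attended, so any missed visit resets the streak.
    """
    total = 0
    for week, came in enumerate(attended):
        if not came:
            continue
        total += POINTS_PER_VISIT
        if week == 0:
            total += PRIMING_BONUS
        elif attended[week - 1]:
            total += STREAK_BONUS
    return total


full = points_earned([True] * 13)  # perfect attendance over 13 weeks
print(full, full // GIFT_CARD_COST, full // TAKE_HOME_COST)  # 40 10 4
```

Under these assumptions, partial attendance is equally easy to score; for instance, attending weeks 1, 2, 4, and 5 of the first five weeks yields 12 points.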

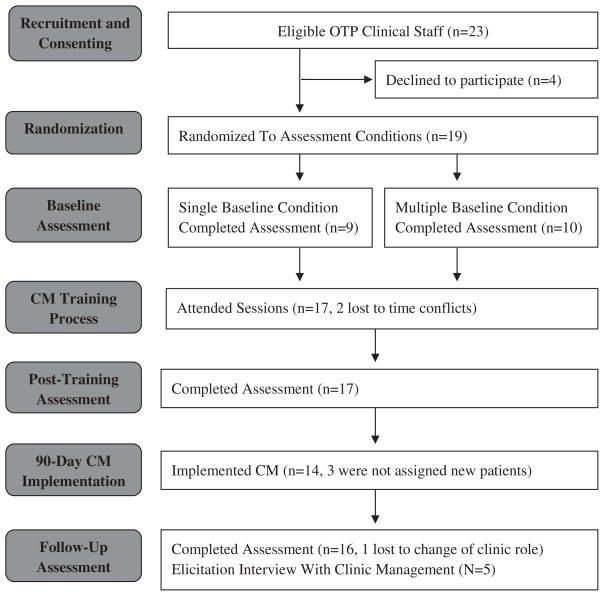

2.4. Procedures

All procedures were approved by the university IRB, and a corresponding flow diagram for the principal trial participants (OTP clinical staff) is included as Fig. 1. Recruitment occurred via an on-site presentation, at which both the PI and executive director emphasized that participation in intervention training and implementation was voluntary. Staff could also attend CM training and implement the intervention even if they opted out of formal research participation (i.e., completion of a sequence of training outcome assessments). Interested staff members provided informed consent and, based on simple randomization, were assigned to complete either: 1) a single baseline assessment battery a week before training, or 2) a baseline assessment battery 2 weeks prior to training, repeated the following week (to account for any practice effects in training outcome measures). Staff members were notified of their condition assignment immediately upon consent. All other study activities were uniform: a series of training sessions, followed by training outcome assessments 1 week after training and again after a 90-day trial implementation period.
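Simple randomization assigns each consenting staff member independently and with equal probability, so the two conditions are not forced to be the same size. A minimal Python sketch follows; the staff IDs, condition labels, seed, and the week offsets in `BASELINE_WEEKS` (taken from the schedule described above, relative to the first training session) are hypothetical encodings.

```python
import random

CONDITIONS = ("single_baseline", "multiple_baseline")  # hypothetical labels

# Weeks relative to training at which baseline batteries are completed.
BASELINE_WEEKS = {"single_baseline": (-1,), "multiple_baseline": (-2, -1)}


def simple_randomize(staff_ids, seed=None):
    """Assign each ID independently, with probability 0.5, to a condition.

    No blocking or stratification is used, so group sizes may differ.
    """
    rng = random.Random(seed)
    return {sid: rng.choice(CONDITIONS) for sid in staff_ids}


# Hypothetical usage: 19 consenting counselors, fixed seed for reproducibility.
assignments = simple_randomize(range(1, 20), seed=2013)
n_multiple = sum(c == "multiple_baseline" for c in assignments.values())
```

Fixing the seed makes an allocation auditable; omitting it draws a fresh allocation each time.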

Fig. 1.

Flow diagram of formal trial participation for OTP clinical staff.

Initial baseline assessments elicited staff demography and professional background. All assessment batteries included three self-report measures: 1) the Provider Survey of Incentives [PSI; (Kirby et al., 2006)], which assessed acceptability/appropriateness via 42 ratings (1 = strongly disagree, 5 = strongly agree) forming positive and negative attitudinal subscales (α = .83–.90); 2) a Readiness to Adopt Scale (McGovern et al., 2004), which consisted of one 6-point item (0 = not familiar, 5 = using with efforts to maintain); and 3) an adapted Knowledge Test (Petry & Stitzer, 2002), which assessed conceptual knowledge via 18 multiple-choice questions (α = .76). Assessments also targeted intervention delivery skill, for which demonstration in a standardized role-play is a suggested method (Fairburn & Cooper, 2011). In each standardized patient (SP) encounter, an SP presented at a staff member’s office at an arranged time to enact a 20-minute, audio-recorded scenario simulating an introductory phase patient’s initial counseling session. Unlike role-plays conducted in training (in which trainers provided immediate, performance-based feedback to trainees), staff members received no feedback on their SP encounter performance, as these encounters served solely for formal training evaluation. All SP encounter recordings were scored by two independent raters, blind to the timing of assessment, using a relevant version of the Contingency Management Competence Scale [CMCS; (Petry & Ledgerwood, 2010)] that included Likert ratings (1 = very poor, 7 = excellent) of six skill areas: 1) notification of earned reinforcers, 2) planning for prospective reinforcers, 3) delivery of earned reinforcers, 4) assessment of client interest in reinforcer choices, 5) provision of social reinforcement, and 6) linkage of the target behavior to treatment goals. Inter-rater reliability was excellent across these skill areas (ICCs = .77–.89), enabling computation of CMCS summary scores (range: 6–42).
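The text reports inter-rater ICCs but does not state which ICC form was used. The Python sketch below assumes the ICC(2,1) form in Shrout and Fleiss’s notation (two-way random effects, absolute agreement, single rater), computed from a targets × raters table, and adds a helper that sums six 1–7 CMCS skill-area ratings into a summary score on the 6–42 range. Function names and the illustrative data are hypothetical, not study data.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one per rated target (e.g., an SP recording),
    with one column per rater.
    """
    n = len(ratings)      # targets
    k = len(ratings[0])   # raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


def cmcs_summary(item_ratings):
    """Sum six 1-7 skill-area ratings into a summary score (range 6-42)."""
    if len(item_ratings) != 6 or not all(1 <= r <= 7 for r in item_ratings):
        raise ValueError("expected six ratings on a 1-7 scale")
    return sum(item_ratings)


# Illustrative 6 targets x 4 raters table with poor absolute agreement.
data = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
        [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2_1(data), 2))  # 0.29
```

Because ICC(2,1) penalizes systematic differences between raters, a rater who scores consistently higher lowers it even when rank orders agree.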

The training process was informed by preferences broadly reported in a national sample of OTP directors and staff (Hartzler & Rabun, in press). These included: 1) on-site sessions (to limit cost and logistical hurdles), 2) a less intensive format, with shorter sessions distributed over time, and 3) a curriculum dominated by active learning strategies (e.g., trainer demonstration, experiential role-play activities, performance-based feedback) rather than passive learning strategies (e.g., didactic instruction). Accordingly, training was structured as four weekly half-day sessions, held on-site in a clinic room that enabled fishbowl observational methods (for trainer demonstrations and dyadic trainee role-plays) and offered breakout areas for small group exercises. Training was facilitated by two psychologists, both with CM expertise and prior clinical experience in an OTP setting, who provided 16 hours of direct contact time. The trainers also met with OTP management for 30 minutes prior to each training session to provide consultative support for preparatory clinic activities (i.e., identification of staff implementation leaders, development of written patient orientation materials, adaptation of the electronic records system, purchase and accounting of reinforcers) in advance of the trial implementation period. A copy of all training materials (i.e., handouts, session audio-recordings) was provided to the OTP as an ongoing resource and to facilitate potential post-study training of non-participating staff.

A week after training, clinic management specified an implementation start date for the CM intervention. Participating staff concurrently completed post-training assessment on the aforementioned measures and a 20-item Training Satisfaction Survey (Baer et al., 2009) with Likert ratings (1 = not at all, 7 = extremely) of format, applicability, facilitators, and the overall experience. Implementation began soon after with newly-enrolled patients and continued without interruption or adaptation for 90 days. Participating staff then completed follow-up assessment batteries identical to those described for post-training, and the PI conducted an audio-recorded elicitation interview with five management personnel (the executive director, deputy executive director, treatment director, assistant treatment director, and special projects assistant). The interview featured open discussion of implementation experiences related to intervention cost, feasibility, and prospective sustainability. It was later transcribed in its entirety, after which a phenomenological narrative analysis (Bernard & Ryan, 2010) targeted management-focused implementation domains.

Implementation outcomes were the principal trial focus, yet a circumscribed set of patient outcomes was examined via anonymized chart review. The reviews targeted a pair of 90-day intervals: the clinic’s trial implementation period, and the 3-month period just prior to staff recruitment (a historical control). Idiographic data for individual counseling visits were extracted, from which three indices were computed: 1) initiation (binary: did/did not attend initial visit), 2) continuity (longest number of consecutive weeks with an attended visit), and 3) overall rate (% of scheduled visits attended). Records from the trial implementation period also enabled calculation of intervention penetration among staff, computed as the percentage of OTP staff for whom chart documentation noted CM implementation with at least one introductory phase patient.

2.5. Participants

Of the 23 OTP counseling staff who were eligible to participate, 19 consented. All were active in delivery of patient services at the OTP. Their mean age was 59.32 years (SD = 12.73), and 89% were female. Hispanic ethnicity was identified by 5%, and distribution of race was 79% Caucasian, 16% multi-racial, and 5% Native American. With respect to formal education, 58% held master’s degrees, 26% bachelor’s degrees, and 16% associate degrees. Many were longstanding clinic employees, with a mean clinic tenure of 12.24 years (SD = 9.72). The focal CM intervention was designed as a novel service at this OTP, so clinic staff had no prior experience with its implementation. In terms of prior exposure to CM concepts, 11% had attended a presentation, 31% had reviewed published or online resources, 27% reported both types of prior exposure, and 31% reported no prior CM exposure. With respect to attrition, all 19 participating OTP counselors completed their assigned baseline assessment procedures, but two subsequently withdrew due to scheduling conflicts with the CM training sessions. An additional counselor withdrew for personal reasons after completing the training process and post-training assessment. Demographic data were not collected from management personnel, who were involved only in the concluding elicitation interview, and no identifying information was available for targeted OTP patients given the anonymized nature of the chart review.

2.6. Data Analytic Strategy

Analyses of staff-focused domains examined: 1) staff assessment reactivity, 2) immediate training impact, and 3) eventual impact of training and 90 days of implementation. Assessment reactivity was examined with repeated-measures analyses of variance (RM-ANOVA), restricted to the subset of OTP staff (n = 10) assigned to the multiple baseline condition, evaluating change between their 1st and 2nd baseline assessment batteries. Immediate training impact was also examined by RM-ANOVA, expanded to the full remaining sample (n = 17) and evaluating change between their 1st baseline and post-training assessment batteries. Eventual training impact was likewise examined by RM-ANOVA, evaluating change between the 1st baseline and follow-up assessment batteries for the 16 staff participants who remained at that time. Given the small staff sample, Cohen’s d for dependent measures was computed as a standard effect-size metric for all analyses of training impact.
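With only two occasions per comparison, a one-way RM-ANOVA reduces to a paired t-test (F = t²), and the dependent-measures effect size d_RM described by Morris and DeShon (2002) divides the mean change by the SD of the change scores, so that d_RM = t/√n. The Python sketch below computes both from paired scores. The function name is hypothetical, and because the text does not spell out the exact effect-size formula used, this sketch may not reproduce Table 1 exactly.

```python
import math
from statistics import mean, stdev


def paired_effects(before, after):
    """Return (F, df_error, d_rm) for a two-occasion repeated-measures design.

    F is the squared paired t statistic with (1, n - 1) degrees of freedom;
    d_rm is the mean change divided by the sample SD of the change scores.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    d_rm = mean(diffs) / stdev(diffs)
    t = d_rm * math.sqrt(n)
    return t ** 2, n - 1, d_rm


# Hypothetical toy scores for four staff members.
f_stat, df_error, d_rm = paired_effects([1, 2, 3, 4], [2, 4, 4, 6])
```

For these toy scores the change scores are 1, 2, 1, 2, giving d_RM = 1.5√3 ≈ 2.60 and F(1, 3) = 27.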

Management-focused implementation outcomes and intervention effectiveness were also examined. The former were explored via phenomenological narrative analysis of the elicitation interview with clinic management, which offered a ‘window into the lived experience’ of CM implementation (Bernard & Ryan, 2010). Accordingly, the interviewer: 1) structured questions to elicit experiential narrative, 2) reviewed the full interview transcript for a broad understanding of implementation processes, and 3) selected salient excerpts about intervention cost, feasibility, and sustainability. Regarding intervention effectiveness, the nested data structure (i.e., patients within OTP staff caseloads) necessitated multilevel or ‘mixed’ models to compare enrollees during trial implementation (n = 106) with those of a historical control period (n = 111). To test the binary initiation outcome (i.e., whether the 1st scheduled visit was attended), a generalized linear mixed model was computed with temporal period (90-day CM implementation period vs. 90-day historical control period) as a fixed effect and corresponding staff member as a random effect. To test continuity (i.e., duration of longest continuous weekly attendance) and aggregate rate (i.e., % of scheduled visits attended), random-effects ANOVAs were first computed to estimate the intra-class correlation (ICC) due to clustering of patients within caseloads. These were followed by linear mixed models, with temporal period as a fixed effect and corresponding staff member as a random effect. All models were run in SPSS version 19.0 (Chicago, IL).
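The clustering ICC from a one-way random-effects ANOVA is the share of outcome variance lying between caseloads; a clearly non-zero value is what motivates a random effect for staff member. This Python sketch assumes caseloads of unequal size and therefore uses the adjusted average group size n₀; truncating a negative between-caseload variance estimate at zero is a common convention adopted here as an assumption. The function name and input layout are hypothetical.

```python
def clustering_icc(groups):
    """ICC(1) from a one-way random-effects ANOVA.

    groups: dict mapping a counselor ID to a list of patient outcome values
    (e.g., continuity in weeks). Handles unequal caseload sizes via n0.
    """
    values = [x for g in groups.values() for x in g]
    N, J = len(values), len(groups)
    grand = sum(values) / N
    means = {key: sum(g) / len(g) for key, g in groups.items()}
    ss_between = sum(len(g) * (means[key] - grand) ** 2 for key, g in groups.items())
    ss_within = sum((x - means[key]) ** 2 for key, g in groups.items() for x in g)
    ms_between = ss_between / (J - 1)
    ms_within = ss_within / (N - J)
    # Adjusted average group size for unbalanced designs.
    n0 = (N - sum(len(g) ** 2 for g in groups.values()) / N) / (J - 1)
    var_between = max((ms_between - ms_within) / n0, 0.0)
    return var_between / (var_between + ms_within)


# Hypothetical caseloads: outcomes differ strongly between counselors.
example = {"c1": [1, 2, 3], "c2": [4, 5, 6]}
print(round(clustering_icc(example), 3))  # 0.806
```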

3. Results

3.1. Assessment Reactivity Among Staff

Assessment reactivity was examined with five RM-ANOVAs, individually targeting as dependent variables the: 1) CMCS summary score, 2) CM knowledge test summary score, 3) adoption readiness rating, 4) PSI subscale score for positive CM attitude, and 5) PSI subscale score for negative CM attitude. None of the five RM-ANOVAs detected meaningful assessment reactivity among the subset of 10 staff assigned to the multiple baseline assessment condition (all F-values < .80, p-values > .40). Consequently, subsequent RM-ANOVAs testing immediate and eventual training impacts on these indices among the full staff sample used data from each staff member’s initial exposure to the baseline assessment instruments.

3.2. Immediate Impact of Training on Staff

Table 1 lists corresponding sample means and effect sizes among the 17 remaining counselors. With respect to intervention delivery, RM-ANOVA detected a substantial baseline to post-training increase in CMCS scores, F(1, 16) = 64.57, p < .001. RM-ANOVAs also detected a large increase in knowledge, F(1, 16) = 17.81, p < .001, and a medium increase in adoption readiness, F(1, 16) = 6.23, p < .05. The two RM-ANOVAs focused on CM attitudes revealed no significant immediate training impact. In terms of staff reactions to the CM training, sample means of Likert ratings for format (M = 5.60, SD = 1.05), applicability (M = 5.40, SD = 1.05), the trainers (M = 5.81, SD = 1.00), and overall experience (M = 5.67, SD = 1.19) suggested that, on average, participating staff felt ‘moderately’ to ‘very’ satisfied.

Table 1.

Immediate and eventual effects for clinician-focused implementation indices.

| Implementation Outcome | Baseline M (N = 19) | Baseline (SD) | Post-Training M (n = 17) | Post-Training (SD) | Post-Training Cohen’s d | Follow-Up M (n = 16) | Follow-Up (SD) | Follow-Up Cohen’s d |
|---|---|---|---|---|---|---|---|---|
| CMCS Summary Score | 21.11 | (5.72) | 33.06 | (3.29) | 2.09*** | 31.88 | (2.47) | 2.43*** |
| CM Knowledge Test | 12.16 | (3.25) | 15.76 | (1.82) | 1.10*** | 14.63 | (2.09) | .96** |
| CM Adoption Readiness | 2.53 | (1.65) | 3.47 | (1.01) | .63* | 3.81 | (1.17) | .88** |
| PSI Positive CM Attitude | 3.71 | (.46) | 3.92 | (.51) | .43 | 3.83 | (.53) | .26 |
| PSI Negative CM Attitude | 2.34 | (.45) | 2.17 | (.50) | .44 | 2.08 | (.49) | .92** |

Table Notes: Relative to the baseline assessment, post-training and follow-up assessments occurred 1 month and 4 months later, respectively; all Cohen’s d values are effect sizes for dependent measures calculated per Morris and DeShon (2002), relative to the initial baseline assessment; interpretive ranges for Cohen’s d: small = .20–.50, medium = .51–.80, large = .81+; possible score ranges were 6–42 for CMCS summary score, 0–18 for CM knowledge test, 0–5 for CM adoption readiness, and 1–5 for PSI attitudinal subscales. Statistics for the corresponding RM-ANOVAs are provided in text.

p < .001.

p < .01.

p < .05.

3.3. Eventual Impact of Training and Implementation Experience on Staff

Eventual staff impacts represent the influence of both training and 90 days of implementation experience among the 16 counselors completing the trial’s follow-up assessment. RM-ANOVA detected a substantial eventual effect on CMCS scores, F(1, 15) = 67.94, p < .001. A stronger correlation between baseline and follow-up CMCS scores (r = .41), relative to that between the baseline and post-training scores used in testing immediate training impact (r = .19), lowers the standard deviation of change scores and thus contributed to this larger eventual effect despite a slightly smaller mean raw-score difference. RM-ANOVAs detected large eventual increases in both knowledge, F(1, 15) = 12.31, p < .01, and adoption readiness, F(1, 15) = 12.22, p < .01. Relative to the magnitude of post-training effects, the former was slightly reduced while the latter was enhanced (see Table 1). An eventual training impact on the attitudinal indices did emerge: while the RM-ANOVA for positive attitudes remained nonsignificant, the RM-ANOVA for negative attitudes showed a significant decrease, F(1, 15) = 13.20, p < .01 (see Table 1). In terms of staff reactions to CM training 90 days later, the sample mean Likert ratings for format (M = 5.74, SD = 1.11), applicability (M = 5.43, SD = .83), trainers (M = 5.68, SD = 1.01), and overall experience (M = 5.66, SD = 1.03) suggested that staff, on average, remained ‘moderately’ to ‘very’ satisfied.
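The role of the pre/post correlation can be made concrete with one repeated-measures metric discussed by Morris and DeShon (2002): mean change divided by the standard deviation of change scores, where SD_D = √(SD_pre² + SD_post² − 2r·SD_pre·SD_post). The minimal Python sketch below plugs in the CMCS summaries from Table 1 and the correlations reported above. It is an approximation under a stated assumption (the baseline mean and SD come from all 19 counselors rather than only completers), so it will not reproduce the Table 1 values exactly; the function names are hypothetical.

```python
import math


def sd_of_change(sd_pre: float, sd_post: float, r: float) -> float:
    """SD of change scores implied by two occasion SDs and their correlation r."""
    return math.sqrt(sd_pre**2 + sd_post**2 - 2 * r * sd_pre * sd_post)


def d_change(m_pre: float, sd_pre: float, m_post: float, sd_post: float, r: float) -> float:
    """Repeated-measures effect size: mean change divided by SD of change."""
    return (m_post - m_pre) / sd_of_change(sd_pre, sd_post, r)


# CMCS summary statistics from Table 1. Assumption: the full-sample (N = 19)
# baseline mean and SD stand in for the completers' baseline values.
BASE_M, BASE_SD = 21.11, 5.72
immediate = d_change(BASE_M, BASE_SD, 33.06, 3.29, r=0.19)  # post-training
eventual = d_change(BASE_M, BASE_SD, 31.88, 2.47, r=0.41)   # 90-day follow-up

print(f"immediate: mean diff = {33.06 - BASE_M:.2f}, d = {immediate:.2f}")
print(f"eventual:  mean diff = {31.88 - BASE_M:.2f}, d = {eventual:.2f}")
```

With these inputs the immediate d is about 1.98 and the eventual d about 2.06, even though the mean difference falls from 11.95 to 10.77. The narrower follow-up SD also contributes, so the higher correlation is one contributor among several rather than the sole cause.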

3.4. Management Perspectives of Clinic Implementation

Narrative analysis of the elicitation interview with five managerial staff detailed clinic perspectives on intervention cost, feasibility, and sustainability. A noteworthy organizational attribute, evident throughout the trial, was managerial respect for individual staff members’ opinions about CM adoption. This was evidenced in the freedom staff members were given to decide whether to formally participate as research participants, to attend CM training sessions, and to implement the CM intervention thereafter with new clients assigned to their caseloads during the 90-day trial implementation period. This managerial approach is consistent with what Rogers (2003) has characterized as optional innovation decisions, in which choices to adopt or reject an innovation are made by individual members of a system independently of other members’ choices. This contrasts with Rogers’ (2003) description of the more common collective and authority innovation decisions, in which one systemic choice is made by member consensus or leadership dictate, respectively. The willingness of this OTP’s leadership to empower its staff to make individual adoption decisions was also evident in their elicitation interview remarks, which were generally positive about experiences in the trial implementation period.

With respect to fiscal costs of the CM intervention, managerial sentiments, as expected, revealed little budgetary concern about the inexpensive reinforcers selected by the OTP director during intervention design. Fiscal concerns about staff time spanned various implementation tasks, including CM delivery by clinical staff, program monitoring by administrative staff, and staff supervision by managers. Staff time is a common dimension in cost-effectiveness analyses of CM interventions (Lash et al., 2013; Olmstead & Petry, 2009; Sindelar, Ebel, & Petry, 2007; Sindelar, Olmstead, & Peirce, 2007), and the managerial perspectives excerpted below offer a range of views regarding its salience as a cost dimension in implementing this CM intervention:

Executive Director: Actually, the cost of the reinforcers is trivial. If you think about the counselors, they’re going to be seeing these folks anyway. So they’re delivering this in a session we were already going to be paying staff time for, so there is no additional cost. The amount of administration time, leadership time is relatively trivial, mostly in ramp-up time when you’re trying to decide what the reinforcers are going to be, and so forth.

Deputy Executive Director: To implement this longer-term we need someone internally to track the data, to see if there’s variability within counselors, how we’re doing as an agency, etc. That is an additional cost.

Treatment Director: And I think that…to do this and do it consistently, we’ll need to have periodic booster trainings. You have to have booster training to have staff keep up on it.

Assistant Treatment Director: It is an extra component that is added to an already loaded initial treatment burden that counselors have with folks that are coming into treatment. So that is an extra burden. But I think it’s gone pretty well.

Special Projects Assistant: My sense is that more [of the counselors] enjoy it than find it cumbersome. It is a little more work… another piece of paper to fill out, another thing to do.

Management sentiments were broadly positive about the procedural feasibility of the intervention, which was unsurprising given efforts to construct it to be compatible with pre-existing OTP services. While specific logistical challenges encountered in trial implementation were noted, most were circumstantial and all had been effectively resolved by clinic staff. Most sentiments during the interview were broader and more positive, with the value of administrative preparedness, intervention simplicity, and identification of implementation leaders highlighted as contributing factors to what they regarded as successful, continuing CM implementation:

Executive Director: An agency thinking about doing this…they have to be confident that procedures are tight and people doing this work are diligent. If they have concerns about… administrative procedures, documentation, or follow-through, it’s going to lead to people not being reinforced in a timely fashion and the whole thing will just fall apart.

Deputy Executive Director: In terms of the logistics, we’ve come up with solutions for just about everything that’s come up. The implementation doesn’t need to be all that sophisticated to be done successfully. What made it manageable was it was very circumscribed in scope, and we had two point-people that all questions could be directed to. That was critical.

Treatment Director: Overall, I’ve been really pleased. Even though it may take 5 minutes of a session to make sure [counselors] add this, if you have people who are coming in regularly then [counselors] can focus on things other than their noncompliance.

Assistant Treatment Director: I think it’s gone pretty well. There was the initial push to get it formalized and ready to go. That took a little bit of work. Beyond that, there have been a few, very small concerns that have come up. But, overall, I’d say that it’s gone well.

Special Projects Assistant: I’m the one distributing the gift cards and keeping track of them, and it seems it has been well-received [by clinic staff]. The clients really like it, too.

Discussion of intervention sustainability was marked by managerial confidence in the clinic’s capability to continue implementation, and by guarded optimism about the corresponding outcome data. At the time of the elicitation interview, the clinic’s official position was to sustain use of the CM intervention on a provisional basis, pending a report of patient outcomes from the trial implementation period; on the basis of that report, clinic staff would decide whether to sustain, amend, or discontinue it as a clinic-wide practice. This marked a notable change from the optional innovation decision approach earlier ascribed to clinic management toward what more closely resembled a consensus-based collective innovation decision (Rogers, 2003), one that would be heavily influenced by the clinic’s own site-specific empirical support for this CM intervention. Managerial sentiments underscored the clinic’s intent to make a data-driven decision about sustaining the CM intervention, the common affinity held for it among staff and patients, and the possibility of adapting it or applying CM principles for other clinic purposes:

Executive Director: We’re continuing on for right now…to decide whether this becomes an integrated part of what we do agency-wide depends on the data. So, the folks—the staff—who are doing it now can continue to do it. When we get the data, then we decide.

Deputy Executive Director: We have the majority of the counselors interested in continuing it. If people hated it, that would be a different issue. But that’s not the case here. Going forward, there’s a lot of evidence in the literature that this is an effective retention technique. Once we get the data, assuming the data shows a positive effect, we’re all inclined to continue implementing this.

Treatment Director: I think there are a number of people who have said ‘if the data supports it, do we then want to utilize contingency management in any other kind of areas that are like this, with a specific target behavior?’ I think there could be some other potential uses of it.

Assistant Treatment Director: It really is just a question of adding another thing to the plate, because people really like acknowledging their patients and positively reinforcing their patients in this way. And patients, in turn, also like it. But it’s an extra thing—and that’s really it, as opposed to “not wanting to pay people to come” and things like that.

Special Projects Assistant: It seems the majority of people do enjoy it. I mean, counselors have actually called me to walk in when their clients are there…and they’ll say “I really like this program,” you know. I hear that kind of thing a lot.

A final implementation outcome was intervention penetration, conceptualized as three percentages, each reflecting the share of a given OTP staff group that employed the intervention with an introductory phase patient. As noted earlier, staff members attended training on a voluntary basis. Because the intervention was intended to be compatible with existing clinic operations, well-established procedures for assigning newly enrolled patients to OTP staff (based on staff availability and therapeutic matching considerations) were unaltered. This prevented staff members who were already carrying a full caseload from implementing the intervention, as they were not assigned any new patients. Based on independent chart review, 14 staff members were assigned one or more introductory phase patients, and all delivered the CM intervention. Accordingly, intervention penetration was 61% among the OTP’s 23 clinical staff, 82% among the 17 CM-trained staff, and 100% among the 14 staff who both attended training and had a new patient added to their caseload during the trial implementation period (see Fig. 1).
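The three penetration figures share one numerator (the 14 staff who delivered CM) and differ only in their denominators. A minimal Python sketch of that arithmetic follows; the function and variable names are hypothetical, and rounding to whole percentages is assumed to match the reported values.

```python
def penetration_pct(adopters: int, group_size: int) -> int:
    """Share of a staff group that delivered CM, rounded to a whole percent."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    return round(100 * adopters / group_size)


# Staff assigned at least one introductory-phase patient, all of whom delivered CM.
ADOPTERS = 14

DENOMINATORS = {
    "all clinical staff": 23,
    "CM-trained staff": 17,
    "trained staff assigned a new patient": 14,
}

for group, size in DENOMINATORS.items():
    print(f"{group}: {penetration_pct(ADOPTERS, size)}%")
```

This yields 61%, 82%, and 100%, showing that the apparent spread in penetration comes entirely from the choice of denominator.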

3.5. Patient-Focused Intervention Effectiveness

Table 2 outlines model statistics and parameter estimates for each of the three counseling attendance indices. In model #1, which examined the binary index of attendance initiation, the generalized linear mixed model identified temporal period as a significant fixed effect (p < .05): 85% of the 111 introductory phase patients enrolled during the CM implementation period attended their 1st scheduled counseling visit, relative to 70% of the 106 introductory phase patients enrolled during the historical control period (OR = 2.37). The random effect of counselor was nonsignificant (see Table 2), suggesting a lack of caseload-level clustering in attendance initiation. In model #2 (testing continuity of attendance), an initial random-effects ANOVA also showed little caseload-level clustering (ICC = .05). The linear mixed model again found temporal period to be a significant fixed effect (p < .001), with the 111 enrollees during the trial implementation period attending a longer run of consecutive weekly visits (M = 3.93, SD = 2.82) than the 106 historical controls (M = 2.61, SD = 1.99). In model #3 (testing aggregate attendance rate), the initial random-effects ANOVA again showed little caseload-level clustering (ICC = .06). Similarly, the linear mixed model found temporal period to be a significant fixed effect (p < .01), with a higher mean attendance rate among the 111 patients during the trial implementation period (82%) than among the 106 seen during the historical control period (68%). Effect sizes for models #2 and #3 (d = .53 and .45, respectively) were medium in magnitude.
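The model #1 temporal-period coefficient in Table 2 is on the log-odds scale, so exponentiating it and its confidence bounds gives the reported odds ratio and an interval for it. A crude odds ratio from the rounded percentages lands nearby but is not adjusted for the counselor random effect. The sketch below also computes a conventional pooled-SD Cohen’s d for model #2 from the reported group means and SDs. It is a back-of-the-envelope check under stated assumptions (hypothetical function names, the pooled-SD form of d), not a reconstruction of the authors’ analysis.

```python
import math


def odds_ratio(log_odds: float) -> float:
    """Convert a logistic (log-odds) coefficient to an odds ratio."""
    return math.exp(log_odds)


def crude_odds_ratio(p_treat: float, p_control: float) -> float:
    """Unadjusted odds ratio from two proportions."""
    return (p_treat / (1 - p_treat)) / (p_control / (1 - p_control))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Mean difference divided by the pooled (n - 1 weighted) standard deviation."""
    pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled


# Model #1: temporal-period estimate and 95% CI bounds from Table 2 (log-odds).
or_model = odds_ratio(0.863)
or_ci = (odds_ratio(0.182), odds_ratio(1.545))
or_crude = crude_odds_ratio(0.85, 0.70)  # from the rounded percentages in the text

# Model #2: consecutive weekly visits, CM period (n = 111) vs. controls (n = 106).
d_continuity = cohens_d(3.93, 2.82, 111, 2.61, 1.99, 106)

print(f"model OR = {or_model:.2f}, 95% CI {or_ci[0]:.2f} to {or_ci[1]:.2f}")
print(f"crude OR = {or_crude:.2f}")
print(f"continuity d = {d_continuity:.2f}")
```

This gives an odds ratio of about 2.37 (95% CI roughly 1.20 to 4.69), a crude odds ratio of about 2.43, and a continuity d of about .54, close to the reported .53; the small gap presumably reflects rounding of the published summaries or a different pooling choice.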

Table 2.

Intervention effectiveness for indices of patient counseling attendance.

| Parameter | Estimate | SE | df | t | Wald Z | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|---|
| Model #1 (Initiation): Estimates of Fixed Effects | | | | | | | |
| Intercept | 1.723 | .407 | | 4.236*** | | .921 | 2.525 |
| Temporal Period | .863 | .346 | | 2.497* | | .182 | 1.545 |
| Model #1 (Initiation): Random Effects | | | | | | | |
| Residual | .088 | | | | | | |
| Counselor | .046 | .167 | | | | .000 | 61.289 |
| Model #2 (Continuity): Estimates of Fixed Effects | | | | | | | |
| Intercept | 3.913 | .249 | 30.215 | 15.741*** | | 3.405 | 4.420 |
| Temporal Period | 1.284 | .335 | 157.613 | 3.838*** | | .623 | 1.946 |
| Model #2 (Continuity): Random Effects | | | | | | | |
| Residual | 5.819 | .589 | | | 9.874*** | 4.771 | 7.100 |
| Counselor | .091 | .228 | | | .397 | .001 | 12.586 |
| Model #3 (Aggregate Rate): Estimates of Fixed Effects | | | | | | | |
| Intercept | .811 | .033 | 35.868 | 24.781*** | | .745 | .877 |
| Temporal Period | .133 | .042 | 188.340 | 3.165** | | .050 | .216 |
| Model #3 (Aggregate Rate): Random Effects | | | | | | | |
| Residual | .088 | .009 | | | 9.935*** | .072 | .107 |
| Counselor | .003 | .004 | | | .864 | .000 | .033 |

Table Notes: Initiation was a binary outcome reflecting whether the 1st scheduled visit was attended, and was tested via a generalized linear mixed model (#1); Continuity was a continuous outcome reflecting the longest run of consecutive weekly visits attended, and was tested via a linear mixed model (#2); Aggregate rate was a continuous outcome reflecting the overall percentage of scheduled visits attended, and was tested via a linear mixed model (#3); client sample sizes were 106 during the historical control period and 111 during the trial CM implementation period.

*** p < .001; ** p < .01; * p < .05.

4. Discussion

Guided by the conceptual framework of Proctor et al. (2011), this study identified impacts of training and implementation processes for a tailored CM intervention instituted at a community OTP. These include: 1) robust, durable increases in delivery skill, knowledge, and adoption readiness among trained staff members; 2) a 100% penetration rate among those staff members who had a post-training opportunity to use the CM intervention with new patients; 3) positive managerial perspectives (informed by 90 days of clinic implementation) of the CM intervention’s affordability, feasibility, and sustainability; and 4) significant clinical impacts on targeted patients’ initiation, continuity, and rate of counseling attendance as compared to those observed during a historical control period. Collective results offer preliminary support for the collaborative approach taken to the design of the focal CM intervention and for the applied, skills-based focus of the staff training processes. Given the disparity between voluminous empirical support for the efficacy of CM methods and their limited community dissemination (Roman et al., 2010), the current work may offer a useful template for the processes of planning and design, training and consultation, and trial implementation and evaluation that enabled this CM intervention to be effectively transported for use in this community-based substance abuse treatment setting.

One of the more novel study findings was the robust, durable training impact on staff intervention delivery skills. While the sample size was admittedly small, the rigor of the SP methodology used (Beidas, Cross, & Dorsey, 2014; Fairburn & Cooper, 2011) and the lack of observed assessment reactivity should strengthen confidence in these effects. The findings also support a growing consensus that active learning strategies are necessary for health professionals to learn new clinical skills and integrate them into daily practice (Beidas & Kendall, 2010; Cucciare et al., 2008; Herschell et al., 2010; McHugh & Barlow, 2010; Miller, Sorensen, Selzer, & Brigham, 2006). Further inspection of study data also indicates that the training, structured around live trainer demonstration and experiential practice, led to consistent attainment of competency among staff. Specifically, all staff members met or exceeded a benchmark proposed by the CMCS developers (Petry & Ledgerwood, 2010) in both their post-training and follow-up assessments. Whether staff members’ implementation experience over the 90-day trial period contributed to the durability of this effect, and what level of intervention delivery skill might be expected with further time and experience, are both questions that await future implementation studies.

Immediate training impacts on staff conceptual knowledge, adoption readiness, and attitudes were consistent in direction and magnitude with those of recently evaluated regional CM workshops attended by VA program leaders (Rash, DePhilippis, McKay, Drapkin, & Petry, 2013). This replication is important for three reasons. First, it may enhance confidence among both CM advocates and community treatment directors about the utility of multi-day workshop attendance. Second, the composition of the current study sample (an intact group of direct-care counseling staff working in a single OTP) shows that a range of training impacts observed among selected VA program leaders extends to frontline counselors. Whereas the VA CM Training Initiative (Petry, DePhilippis, Rash, Drapkin, & McKay, in press) reflects a ‘top-down’ dissemination approach (i.e., training select program leaders, who were then responsible for transporting the treatment technology to their respective workforces), the current work instead exposed an OTP workforce directly to CM training. Notably, this latter approach of directly training the workforce who would then implement CM with patients mirrors an effective CM dissemination effort in the criminal justice field (Henggeler et al., 2013; McCart, Henggeler, Chapman, & Cunningham, 2012). Finally, replicated effects in knowledge and attitudinal indices occurred as a result of a CM training that was decidedly skills-focused, suggesting that oft-cited conceptual and philosophical barriers to CM adoption (Ducharme et al., 2010; Kirby et al., 2006) need not always be the sole or even primary training focus. Training and 90 days of implementation experience culminated in retention of conceptual knowledge, increased adoption readiness, and further reduction of attitudinal concerns among staff.
Taken together, these effects are consistent with prior research in which implementation experience also promotes staff knowledge and favorable attitudes toward CM (Ducharme et al., 2010; Lott & Jencius, 2009).

Qualitative managerial perspectives about the intervention, voiced after 90 days of clinic implementation, were also very encouraging. Some may consider these opinions unsurprising, insofar as having an OTP director define key intervention features may predictably contribute to later perceptions of affordability and logistical feasibility. Surprising or not, the intent of the study was clearly to frame the clinic’s experiences in intervention design, training, and implementation so that positive managerial views had an opportunity to develop. Implementation science models highlight compatibility as an attribute of successful innovations (Damschroder & Hagedorn, 2011; Rogers, 2003), and this OTP’s successful experience may be attributable in part to the intervention’s fit within existing clinic operations. Targeting counseling attendance of introductory clients may also have afforded secondary therapeutic benefits, as such visits are a primary mechanism whereby these typically less stable clients work on case management issues with an assigned counselor. Indeed, centralization of OTP services is known to increase patient access and utilization (Berkman & Wechsberg, 2007; Gourevitch, Chatterji, Deb, Schoenbaum, & Turner, 2007). With respect to sustainability, guarded optimism was pervasive as management awaited a report of site-specific empirical support to inform a data-driven, consensus-based clinic decision. This apparent shift in organizational philosophy, from a series of what Rogers (2003) would classify as optional innovation decisions at earlier points in the study to a collective innovation decision at its conclusion, offers a practical example of how nuanced clinic policy decisions can unfold over time. While some clinic-level predictors of favorable CM attitudes have been identified (Bride, Abraham, & Roman, 2011; Hartzler, Donovan, et al., 2012), much remains to be learned about organizational factors that promote or deter adoption decisions.

It is hoped that the attendance initiation, continuity, and rate observed during CM implementation are indicative of patient interest in treatment. Prior research identifies such indices as markers of patient engagement, which in turn predicts long-term retention in addiction care (Peirce et al., 2006; Schon, 1987). That counselor–client interactions occurred earlier, in closer succession, and in greater aggregation also suggests potential for a host of secondary therapeutic benefits. The magnitude of the observed clinical effects (d = .46–.53) is also salient, as these effects appear to exceed those reported in other trials conducted in addiction treatment settings where counseling attendance has been targeted for behavioral reinforcement (Alessi et al., 2007; Ledgerwood et al., 2008). As noted earlier, the current study’s effects were achieved via: 1) independent clinic implementation (no external funds or staffing support were provided), 2) comparison with a historical control patient group receiving the clinic’s treatment-as-usual services, and 3) very low-cost incentives. With respect to cost-effectiveness, these OTP patients earned a maximum of $50 or four take-home medication doses (and often some combination of the two), a fiscal commitment specified by the OTP director that represented a fraction (roughly one-eighth to one-fifth) of the per-patient $250–400 maximal earnings in a widely cited multisite CTN study conducted in OTPs (Peirce et al., 2006). In all such respects, the clinical impacts reported herein may offer clinic directors hope of replicating similar success at other community OTPs.

Several methodological caveats bear acknowledgement. As noted earlier, the pragmatic design approach enabled recruitment of all interested staff for training as well as application of the CM intervention to all eligible OTP clientele. However, it also necessitated uncontrolled and quasi-experimental comparisons for staff- and patient-based study outcomes, respectively. This single-site trial involved collaboration with a clinic selected for its configuration of attributes thought to predict implementation success. Clearly, replication attempts with a variety of clinics are warranted, and generalization of study findings to the OTP community at large should not be assumed. OTPs unaffiliated with the CTN may require greater effort from university collaborators, given fewer staff resources (Ducharme & Roman, 2009) and less receptivity to CM (Hartzler & Rabun, 2013b). Potential for selection bias also applies to staff members, whose participation in CM training and subsequent intervention delivery during the trial implementation period was optional. Further, some attrition was observed over the course of the study, though this was limited and reflected either time conflicts or a change in clinic role for the involved staff. Beyond potential self-selection influences, the small size of the staff sample suggests that the magnitude of reported training effects should be interpreted with caution. Staff sample size also precluded analyses of background attributes as predictors of learning or baseline skill. Other design caveats relate to the study timeline. A longer follow-up window would have provided greater certainty about the durability of staff training impacts, as well as greater experience from which clinic management could draw in discussing prospective CM sustainability. Stronger conclusions could also be drawn about the clinical effectiveness of the CM intervention had the trial implementation period been extended beyond 90 days.
Finally, the quality of intervention delivery by staff during contacts with patients on their caseload was not directly assessed. The described SP methodology has many psychometric advantages for formal assessment of intervention delivery skill, yet is only a proxy measure for how the intervention was delivered. The documented clinical impacts of that actual CM delivery may mitigate such concerns in the current study, but OTPs seeking to replicate this work in their setting should strongly consider use of routine fidelity-monitoring practices (e.g., direct observation or review of recorded sessions by a clinical supervisor) as suggested for effective implementation of empirically-supported behavioral interventions (Baer et al., 2007; Herschell et al., 2010; Miller et al., 2006).

Caveats notwithstanding, this mixed-method study offers a comprehensive examination of CM implementation at the level of an individual, community-based OTP clinic. Its design was informed by a widely cited implementation framework (Proctor et al., 2011), both in its breadth of staff- and clinic-level indices and in its evaluation of intervention effectiveness once implemented. Similarly comprehensive designs are needed for prospective studies of other CM methods, and more broadly of other empirically-supported therapeutic approaches, to advance our understanding of how to bridge the persistent translational gaps that plague addiction treatment (Schon, 1983). Dissemination research brings advocates of an empirically-supported approach face-to-face not only with treatment community professionals but also with opportunities to incorporate their input into study design, conduct, and evaluation, which in turn enhances ecological validity. Indeed, a collaborative spirit, evidenced through mutual respect and recognition of inevitable real-world contextual constraints, may be a particularly under-appreciated ingredient in examining and understanding how a clinical method like CM is integrated into routine clinical practice. We hope this work offers guidance to those spearheading similar translational efforts.

Acknowledgments

The research reported herein was supported by the National Institute on Drug Abuse of the National Institutes of Health under award number K23DA025678 (Integrating Behavioral Interventions in Substance Abuse Treatment, Hartzler PI). The content of this report is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors wish to thank the following contributors: Molly Carney, Carol Davidson and Michelle Peavey, for their support of clinic implementation efforts; Esther Ricardo-Bulis, for her assistance in coordinating study assessment processes; Robert Brooks, for his SP character portrayal; and the participating OTP staff and their clients for allowing their efforts to be examined for purposes of this trial.

References

- Alessi SM, Hanson T, Wieners M, Petry NM. Low-cost contingency management in community clinics: Delivering incentives partially in group therapy. Experimental and Clinical Psychopharmacology. 2007;15:293–300. doi: 10.1037/1064-1297.15.3.293.

- Baer JS, Ball SA, Campbell BK, Miele GM, Schoener EP, Tracy K. Training and fidelity monitoring of behavioral interventions in multi-site addictions research. Drug and Alcohol Dependence. 2007;87:107–118. doi: 10.1016/j.drugalcdep.2006.08.028.

- Baer JS, Wells EA, Rosengren DB, Hartzler B, Beadnell B, Dunn C. Context and tailored training in technology transfer: Evaluating motivational interviewing training for community counselors. Journal of Substance Abuse Treatment. 2009;37:191–202. doi: 10.1016/j.jsat.2009.01.003.

- Beidas RS, Cross W, Dorsey S. Show me, don’t tell me: Behavioral rehearsal as a training and analogue fidelity tool. Cognitive and Behavioral Practice. 2014;21:1–11. doi: 10.1016/j.cbpra.2013.04.002.

- Beidas RS, Kendall PC. Training therapists in evidence-based practice: A critical review from a systems-contextual perspective. Clinical Psychology: Science and Practice. 2010;17:1–31. doi: 10.1111/j.1468-2850.2009.01187.x.

- Benishek LA, Kirby KC, Dugosh KL, Padovano A. Beliefs about the empirical support of drug abuse treatment interventions: A survey of outpatient treatment providers. Drug and Alcohol Dependence. 2010;107:202–208. doi: 10.1016/j.drugalcdep.2009.10.013.

- Berkman ND, Wechsberg WM. Access to treatment-related and support services in methadone treatment programs. Journal of Substance Abuse Treatment. 2007;32:97–104. doi: 10.1016/j.jsat.2006.07.004.

- Bernard HR, Ryan GW. Analyzing qualitative data: Systematic approaches. Los Angeles, CA: Sage Publications, Inc; 2010.

- Bride BE, Abraham AJ, Roman PM. Diffusion of contingency management and attitudes regarding its effectiveness and acceptability. Substance Abuse. 2010;31:127–135. doi: 10.1080/08897077.2010.495310.

- Bride BE, Abraham AJ, Roman PM. Organizational factors associated with the use of contingency management in publicly funded substance abuse treatment centers. Journal of Substance Abuse Treatment. 2011;40:87–94. doi: 10.1016/j.jsat.2010.08.001.

- Brown BS. From research to practice: The bridge is out and the water’s rising. In: Levy J, Stephens R, McBride D, editors. Emergent issues in the field of drug abuse: Advances in medical sociology. Stamford, CT: JAI Press; 2000. pp. 345–365.

- Chutuape MA, Silverman K, Stitzer ML. Survey assessment of methadone treatment services as reinforcers. American Journal of Drug and Alcohol Abuse. 1998;24:1–16. doi: 10.3109/00952999809001695.

- Compton WM, Stein JB, Robertson EB, Pintello D, Pringle B, Volkow ND. Charting a course for health services research at the National Institute on Drug Abuse. Journal of Substance Abuse Treatment. 2005;29:167–172. doi: 10.1016/j.jsat.2005.05.008.

- Cucciare MA, Weingardt KR, Villafranca S. Using blended learning to implement evidence-based psychotherapies. Clinical Psychology: Science and Practice. 2008;15:299–307.

- Damschroder LJ, Hagedorn H. A guiding framework and approach for implementation research in substance use disorders treatment. Psychology of Addictive Behaviors. 2011;25:194–206. doi: 10.1037/a0022284.

- Ducharme LJ, Knudsen HK, Abraham AJ, Roman PM. Counselor attitudes toward the use of motivational incentives in addiction treatment. American Journal on Addictions. 2010;19:496–503. doi: 10.1111/j.1521-0391.2010.00081.x.

- Ducharme LJ, Roman PM. Opioid treatment programs in the Clinical Trials Network: Representativeness and buprenorphine adoption. Journal of Substance Abuse Treatment. 2009;37:90–94. doi: 10.1016/j.jsat.2008.09.003.

- Dutra L, Stathopoulou G, Basden SL, Leyro TM, Powers MB, Otto MW. A meta-analytic review of psychosocial interventions for substance use disorders. American Journal of Psychiatry. 2008;165:179–187. doi: 10.1176/appi.ajp.2007.06111851.

- Fairburn CG, Cooper Z. Therapist competence, therapy quality, and therapist training. Behaviour Research and Therapy. 2011;49:373–378. doi: 10.1016/j.brat.2011.03.005.

- Fixsen D, Naoom S, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005.

- Fuller BE, Rieckmann T, Nunes EV, Miller M, Arfken C, Edmundson E, et al. Organizational readiness for change and opinions toward treatment innovations. Journal of Substance Abuse Treatment. 2007;33:183–192. doi: 10.1016/j.jsat.2006.12.026.

- Gourevitch MN, Chatterji P, Deb N, Schoenbaum EE, Turner BJ. On-site medical care in methadone maintenance: Associations with health care use and expenditures. Journal of Substance Abuse Treatment. 2007;32:143–151. doi: 10.1016/j.jsat.2006.07.008.

- Griffith JD, Rowan-Szal GA, Roark RR, Simpson DD. Contingency management in outpatient methadone treatment: A meta-analysis. Drug and Alcohol Dependence. 2000;58:55–66. doi: 10.1016/s0376-8716(99)00068-x.

- Hanson GR, Leshner AI, Tai B. Putting drug abuse research to use in real-life settings. Journal of Substance Abuse Treatment. 2002;23:69–70. doi: 10.1016/s0740-5472(02)00269-6.

- Hartzler B, Donovan DM, Tillotson C, Mongoue-Tchokote S, Doyle S, McCarty D. A multi-level approach to predicting community addiction treatment attitudes about contingency management. Journal of Substance Abuse Treatment. 2012;42:213–221. doi: 10.1016/j.jsat.2011.10.012.

- Hartzler B, Lash SJ, Roll JM. Contingency management in substance abuse treatment: A structured review of the evidence for its transportability. Drug and Alcohol Dependence. 2012;122:1–10. doi: 10.1016/j.drugalcdep.2011.11.011.

- Hartzler B, Rabun C. Community treatment adoption of contingency management: A conceptual profile of U.S. clinics based on innovativeness of executive staff. International Journal of Drug Policy. 2013a;24:333–341. doi: 10.1016/j.drugpo.2012.07.009.

- Hartzler B, Rabun C. Community treatment perspectives on contingency management: A mixed-method approach to examining feasibility, effectiveness, and transportability. Journal of Substance Abuse Treatment. 2013b;45:242–248. doi: 10.1016/j.jsat.2013.01.014.

- Hartzler B, Rabun C. Training addiction professionals in empirically-supported treatments: Perspectives from the treatment community. Substance Abuse. In press. doi: 10.1080/08897077.2013.789816.

- Haug NA, Shopshire M, Tajima B, Gruber VA, Guydish J. Adoption of evidence-based practices among substance abuse treatment providers. Journal of Drug Education. 2008;38:181–192. doi: 10.2190/DE.38.2.f.

- Henggeler SW, Chapman JE, Rowland MD, Holliday-Boykins CA, Randall J, Shackelford J, et al. Statewide adoption and initial implementation of contingency management for substance-abusing adolescents. Journal of Consulting and Clinical Psychology. 2008;76:556–567. doi: 10.1037/0022-006X.76.4.556.

- Henggeler SW, Chapman JE, Rowland MD, Sheidow AJ, Cunningham PB. Evaluating training methods for transporting contingency management to therapists. Journal of Substance Abuse Treatment. 2013;45:466–474. doi: 10.1016/j.jsat.2013.06.008.

- Henggeler SW, Sheidow AJ, Cunningham PB, Donohoe BC, Ford JD. Promoting the implementation of an evidence-based intervention for adolescent marijuana abuse in community settings: Testing the use of intensive quality assurance. Journal of Clinical Child and Adolescent Psychology. 2008;37:682–689. doi: 10.1080/15374410802148087.

- Herbeck DM, Hser Y, Teruya C. Empirically supported substance abuse treatment approaches: A survey of treatment providers’ perspectives and practices. Addictive Behaviors. 2008;33:699–712. doi: 10.1016/j.addbeh.2007.12.003.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010;30:448–466. doi: 10.1016/j.cpr.2010.02.005.

- Higgins ST, Budney AJ, Bickel WK, Hughes J, Foerg FE, Badger GJ. Incentives improve outcome in outpatient behavioral treatment of cocaine dependence. Archives of General Psychiatry. 1994;51:568–576. doi: 10.1001/archpsyc.1994.03950070060011.

- Higgins ST, Budney AJ, Bickel WK, Hughes JR, Foerg F, Badger GJ. Achieving cocaine abstinence with a behavioral approach. American Journal of Psychiatry. 1993;150:763–769. doi: 10.1176/ajp.150.5.763.

- IOM. Bridging the gap between practice and research: Forging partnerships with community-based drug and alcohol treatment. Washington, DC: National Academy Press; 1998.

- Jones HE, Haug NN, Silverman K, Stitzer ML, Svikis DS. The effectiveness of incentives in enhancing treatment attendance and drug abstinence in methadone-maintained pregnant women. Drug and Alcohol Dependence. 2001;61:297–306. doi: 10.1016/s0376-8716(00)00152-6.

- Kellogg SH, Burns M, Coleman P, Stitzer ML, Wale JB, Kreek MJ. Something of value: The introduction of contingency management interventions into the New York City Health and Hospital Addiction Treatment Service. Journal of Substance Abuse Treatment. 2005;28:57–65. doi: 10.1016/j.jsat.2004.10.007.

- Kirby KC, Benishek LA, Dugosh KL, Kerwin ME. Substance abuse treatment providers’ beliefs and objections regarding contingency management: Implications for dissemination. Drug and Alcohol Dependence. 2006;85:19–27. doi: 10.1016/j.drugalcdep.2006.03.010.

- Lash SJ, Burden JL, Parker JD, Stephens RS, Budney AJ, Horner RD, et al. Contracting, prompting, and reinforcing substance use disorder continuing care. Journal of Substance Abuse Treatment. 2013;44:449–456. doi: 10.1016/j.jsat.2012.09.008.

- Ledgerwood DM, Alessi SM, Hanson T, Godley MD, Petry NM. Contingency management for attendance to group substance abuse treatment administered by clinicians in community clinics. Journal of Applied Behavior Analysis. 2008;41:517–526. doi: 10.1901/jaba.2008.41-517.

- Lott DC, Jencius S. Effectiveness of very low-cost contingency management in a community adolescent treatment program. Drug and Alcohol Dependence. 2009;102:162–165. doi: 10.1016/j.drugalcdep.2009.01.010.

- Lussier JP, Heil SH, Mongeon JA, Badger GJ, Higgins ST. A meta-analysis of voucher-based reinforcement therapy for substance use disorders. Addiction. 2006;101:192–203. doi: 10.1111/j.1360-0443.2006.01311.x.

- Martino S, Brigham GS, Higgins C, Gallon S, Freese TE, Albright LM, et al. Partnerships and pathways of dissemination: The National Institute on Drug Abuse-Substance Abuse and Mental Health Services Administration Blending Initiative in the Clinical Trials Network. Journal of Substance Abuse Treatment. 2010;38(Supplement 1):S31–S43. doi: 10.1016/j.jsat.2009.12.013.

- McCart MR, Henggeler SW, Chapman JE, Cunningham PB. System-level effects of integrating a promising treatment into juvenile drug courts. Journal of Substance Abuse Treatment. 2012;43:231–243. doi: 10.1016/j.jsat.2011.10.030.

- McCarty DJ, Fuller BE, Arfken C, Miller M, Nunes EV, Edmundson E, et al. Direct care workers in the National Drug Abuse Treatment Clinical Trials Network: Characteristics, opinions, and beliefs. Psychiatric Services. 2007;58:181–190. doi: 10.1176/appi.ps.58.2.181.

- McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, et al. The quality of health care delivered to adults in the United States. New England Journal of Medicine. 2003;348:2635–2645. doi: 10.1056/NEJMsa022615.

- McGovern MP, Fox TS, Xie H, Drake RE. A survey of clinical practices and readiness to adopt evidence-based practices: Dissemination research in an addiction treatment system. Journal of Substance Abuse Treatment. 2004;26:305–312. doi: 10.1016/j.jsat.2004.03.003.

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. American Psychologist. 2010;65:73–84. doi: 10.1037/a0018121.

- McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public’s demand for quality care? Journal of Substance Abuse Treatment. 2003;25:117–121.

- Milby JB, Garrett C, English C, Fritschi O, Clarke C. Take-home methadone: Contingency effects on drug-seeking and productivity of narcotic addicts. Addictive Behaviors. 1978;3:215–220. doi: 10.1016/0306-4603(78)90022-9.

- Miller WR, Sorensen JL, Selzer JA, Brigham GS. Disseminating evidence-based practices in substance abuse treatment: A review with suggestions. Journal of Substance Abuse Treatment. 2006;31:25–39. doi: 10.1016/j.jsat.2006.03.005.

- Morris SB, DeShon RP. Combining effect size estimates in meta-analysis with repeated measures and independent-groups designs. Psychological Methods. 2002;7:105–125. doi: 10.1037/1082-989x.7.1.105.

- Olmstead TA, Petry NM. The cost-effectiveness of prize-based and voucher-based contingency management in a population of cocaine- or opioid-dependent outpatients. Drug and Alcohol Dependence. 2009;102:108–115. doi: 10.1016/j.drugalcdep.2009.02.005.

- Peirce J, Petry NM, Stitzer ML, Blaine J, Kellogg S, Satterfield F, et al. Effects of lower-cost incentives on stimulant abstinence in methadone maintenance treatment: A National Drug Abuse Treatment Clinical Trials Network Study. Archives of General Psychiatry. 2006;63:201–208. doi: 10.1001/archpsyc.63.2.201.

- Petry NM. Contingency management for substance abuse treatment: A guide to implementing this evidence-based practice. New York: Routledge; 2012.

- Petry NM, Alessi SM, Ledgerwood DM. A randomized trial of contingency management delivered by community therapists. Journal of Consulting and Clinical Psychology. 2012;80:286–298. doi: 10.1037/a0026826.

- Petry NM, DePhilippis D, Rash CJ, Drapkin M, McKay JR. Nationwide dissemination of contingency management: The Veterans Administration Initiative. The American Journal on Addictions. In press. doi: 10.1111/j.1521-0391.2014.12092.x.

- Petry NM, Ledgerwood DM. The Contingency Management Competence Scale for reinforcing attendance. Farmington, CT: University of Connecticut Health Center; 2010.

- Petry NM, Martin B, Cooney JL, Kranzler HR. Give them prizes, and they will come: Contingency management for treatment of alcohol dependence. Journal of Consulting and Clinical Psychology. 2000;68:250–257. doi: 10.1037//0022-006x.68.2.250.

- Petry NM, Petrakis I, Trevisan L, Wiredu G, Boutros NN, Martin B, et al. Contingency management interventions: From research to practice. American Journal of Psychiatry. 2001;158:694–702. doi: 10.1176/appi.ajp.158.5.694.

- Petry NM, Stitzer ML. Contingency management: Using motivational incentives to improve drug abuse treatment. Training Series, #6. West Haven, CT: Yale University Psychotherapy Development Center; 2002.

- Prendergast M, Podus D, Finney JW, Greenwell L, Roll JM. Contingency management for treatment of substance use disorders: A meta-analysis. Addiction. 2006;101:1546–1560. doi: 10.1111/j.1360-0443.2006.01581.x.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons GA, Bunger A, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

- Rash CJ, DePhilippis D, McKay JR, Drapkin M, Petry NM. Training workshops positively impact beliefs about contingency management in a nationwide dissemination effort. Journal of Substance Abuse Treatment. 2013;45:306–312. doi: 10.1016/j.jsat.2013.03.003.

- Ritter A, Cameron J. Australian clinician attitudes towards contingency management: Comparing down under with America. Drug and Alcohol Dependence. 2007;87:312–315. doi: 10.1016/j.drugalcdep.2006.08.011.

- Rogers EM. Diffusion of innovations. 5th ed. New York: The Free Press; 2003.

- Roman PM, Abraham AJ, Rothrauff TC, Knudsen HK. A longitudinal study of organizational formation, innovation adoption, and dissemination activities within the National Drug Abuse Treatment Clinical Trials Network. Journal of Substance Abuse Treatment. 2010;38(Supplement 1):S44–S52. doi: 10.1016/j.jsat.2009.12.008.

- Schon DA. The reflective practitioner: How professionals think in action. New York: Basic Books; 1983.

- Schon DA. Educating the reflective practitioner. San Francisco, CA: Jossey-Bass; 1987.