Abstract

A limited set of attributes can guide visual selective attention. Thus, it is possible to deploy attention to an item defined by an appropriate color, size, or orientation but not to a specific type of line intersection or a specific letter (assuming other attributes like orientation are controlled). What defines the set of guiding attributes? Perhaps it is the set of attributes of surfaces or materials in the world. L. Sharan, R. E. Rosenholtz, and E. H. Adelson (submitted for publication) have shown that observers are extremely adept at identifying materials. Are they equally adept at guiding attention to one type of material among distractors of another? A series of visual search experiments shows that the answer is “no.” It may be easy to identify “fur” or “stone,” but search for a patch of fur among the stones will be inefficient.

Keywords: middle vision, search, attention, material properties, surface properties

Introduction

We search for things all day long. Even if the object of desire is in plain view, we may have to search because we are incapable of instantly and simultaneously recognizing all of the objects in the visual field. Most, if not all, acts of object recognition require that we select the target object by directing attention to it. Fortunately, we do not search randomly. Our attention can be guided by attributes of the target object (Egeth, Virzi, & Garbart, 1984; Williams, 1966; Wolfe, Cave, & Franzel, 1989). Defining the set of guiding attributes has been a research project for over 25 years (Treisman, 1985). In its original form, this was a search for the “preattentive” features that could be found in the first, parallel stage of processing proposed in Treisman's Feature Integration Theory (Treisman & Gelade, 1980). (Note: Henceforth, we try to use “attribute” to refer to a type of property like color and “feature” to refer to an instance of an attribute; e.g., red.) One of the diagnostics of a preattentive feature was that it could be found in a visual search display in a time that was independent of the number of distractor items in the display. That is, the slope of the function relating set size to reaction time (RT) would be near zero.
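The slope diagnostic described above can be illustrated with a short sketch. It fits an ordinary least-squares line to mean RT as a function of set size; the function name `search_slope` and the example RT values are hypothetical and are not taken from the experiments reported here.

```python
def search_slope(set_sizes, mean_rts):
    """Least-squares slope (ms/item) and intercept (ms) of RT against set size."""
    n = len(set_sizes)
    if n != len(mean_rts) or n < 2:
        raise ValueError("need at least two matching (set size, RT) pairs")
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts) / n
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical mean RTs (ms) at four set sizes; illustrative values only.
set_sizes = [1, 2, 3, 4]
flat_rts = [450.0, 452.0, 449.0, 451.0]    # RT roughly independent of set size
steep_rts = [500.0, 530.0, 560.0, 590.0]   # RT grows with every added item

for label, rts in (("flat", flat_rts), ("steep", steep_rts)):
    slope, intercept = search_slope(set_sizes, rts)
    print(f"{label}: slope = {slope:.1f} ms/item, intercept = {intercept:.1f} ms")
```

A slope near zero is the signature of a search in which adding distractors costs essentially nothing; a slope of tens of milliseconds per item is the signature of an inefficient search.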

In Treisman's original formulation, there were parallel searches that produced these near-zero slopes and serial searches that did not. It subsequently became clear that there was a continuum of search efficiencies (Wolfe, 1998a) and that an important factor in determining search efficiency was the degree to which preattentive feature information could be used to “guide” attention (Wolfe, 1994, 2007; Wolfe et al., 1989). Thus, if observers (Os) were looking for a red “T” among black and red “Ls”, the RT would depend on the number of red items. Attention could be guided toward them and not wasted on black letters (Egeth et al., 1984; Kaptein, Theeuwes, & Van der Heijden, 1994).

What are the attributes that can guide attention? It is possible to catalog them (Thornton & Gilden, 2007; Treisman, 1986b; Wolfe, 1998a; Wolfe & Horowitz, 2004). Fundamental properties like color, size, motion, and orientation appear on essentially all lists. Most lists include a variety of less obvious properties like lighting direction (Sun & Perona, 1998) and various depth cues (e.g., Epstein & Babler, 1990; He & Nakayama, 1995; Ramachandran, 1988). What has been lacking is a principled reason why some attributes can guide attention and others do not. Treisman originally proposed that preattentive features were those extracted from the input at early stages in visual processing, perhaps primary visual cortex (V1; Treisman, 1986a), but there are difficulties with this idea. First, there are attributes such as the aforementioned lighting direction and depth cues that seem to guide attention but are unlikely to be attributes processed by V1. Moreover, even the basic attributes that guide attention sometimes seem to have been processed by later stages before guiding attention. For example, in search for targets defined by size, the size attribute is not retinal size but the perceived size calculated after size constancy mechanisms have done their work (Aks & Enns, 1996) though not all guiding attributes are “post-constancy” attributes (Moore & Brown, 2001).

One alternative hypothesis is that the basic attributes are those that would describe the properties of surfaces (He & Nakayama, 1992; Nakayama & He, 1995; Wolfe, Birnkrant, Kunar, & Horowitz, 2005). Thus, search for a lime might be guided by its green, curved, glossy, lime-textured surface properties. This idea is appealing because the goal of search, at least outside of the laboratory, is not to find “green” or “vertical” or “shiny.” Typically, the searcher is searching for some object and the attributes of the visible surface of that object are what the searcher can use to locate the object. Thus, it might make sense for the guiding attributes to be the mid-level vision properties of surfaces rather than earlier representations of the stimulus. This would be analogous to the situation elsewhere in vision. For example, it is the task of the visual system to estimate the size of objects and not to sense the size of the retinal image (Gogel, 1969).

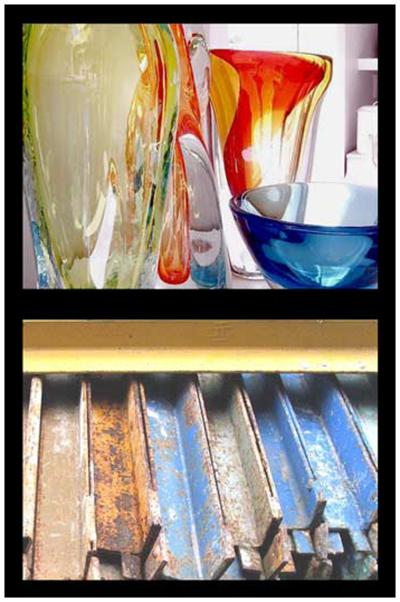

This hypothesis receives an indirect boost from recent work by Sharan, Rosenholtz, and Adelson (submitted for publication). In a series of experiments, they showed that Os could identify material properties of objects very rapidly. In their study, Os were 80% accurate with a 40-ms exposure and 92% accurate after 120 ms (Sharan et al., submitted for publication). Figure 1 shows examples from one of their sets of stimuli.

Figure 1.

It is quite easy to identify the material in the top panel as “glass” and the material in the bottom panel as “metal.” Stimuli from Sharan et al. (submitted for publication).

If Os can identify high-level material category very rapidly, perhaps material properties can guide search and perhaps the sets of basic attributes that support efficient visual search are those that define materials. Sharan et al.'s work does not address this issue because they presented Os with a single image at the focus of attention. To test this hypothesis, we had Os search for targets defined by one material property among distractors of other materials. In Experiment 1, we used stimuli from Sharan et al. They had deliberately developed a highly heterogeneous set of material exemplars in order to make sure that no non-material property was supporting the good identification behavior shown by their Os. That is, it would be of limited interest if Os could rapidly identify water only because it was always blue. As search stimuli in our Experiment 1, however, Sharan et al.'s stimuli proved to give rise to very inefficient search. Therefore, in Experiment 2, we used a set of much more homogeneous surface textures. Nevertheless, we still failed to find efficient search for one material type among distractors of another type. We conclude that it is unlikely that material type can guide search.

Experiment 1: Diverse material exemplars

Stimuli and procedure

Sharan et al. developed two sets of stimuli: close-up and whole object. Our stimuli were drawn from the close-up set. Each stimulus showed part of an object. Some shape and depth information was available as shown in Figure 1, though the identity of the object was not always obvious. There were nine material categories: fabric, glass, leather, metal, paper, plastic, stone, water, and wood. Each image was 256 by 256 pixels and subtended 4.1 × 4.1 degrees at a viewing distance of 57.4 cm. The center of each image was 3.2 degrees from the central fixation point. Set sizes of 1, 2, 3, or 4 were displayed on a mid-gray background that subtended 12.7 × 12.7 deg.
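The relationship between physical size, viewing distance, and visual angle used in these stimulus descriptions can be sketched as follows. This is a minimal illustration that assumes a flat screen viewed head-on; the function names are hypothetical, and the derived on-screen size and pixel pitch are computed values, not measurements reported for the apparatus.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (deg) subtended by an extent of size_cm viewed at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_cm_for_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 57.4  # from the text
IMAGE_DEG = 4.1             # from the text
IMAGE_PIXELS = 256          # from the text

# Derived quantities (assumption: flat screen, image centered on the line of sight).
image_cm = size_cm_for_angle(IMAGE_DEG, VIEWING_DISTANCE_CM)
pixel_pitch_cm = image_cm / IMAGE_PIXELS

print(f"image side on screen: {image_cm:.2f} cm")
print(f"implied pixel pitch: {pixel_pitch_cm * 10:.3f} mm")
```

At a viewing distance near 57 cm, 1 cm on the screen subtends roughly 1 degree, which is why distances of this order are convenient in psychophysics.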

Four conditions were tested: Stone targets among heterogeneous distractors, plastic targets among heterogeneous distractors, stone among plastic, and plastic among stone. There are dozens of other possible experiments. These conditions were picked because stone stimuli appeared to be quite distinct from plastic. Pilot experiments with other pairings produced qualitatively similar results. For each condition, Os were tested for 330 trials, the first 30 of which were considered to be practice trials. Targets were present on 50% of trials. Stimuli were visible until Os responded. Os made target-present or -absent responses via the keyboard. Os were told to respond quickly and accurately. Feedback was given after each trial.
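The trial structure for one condition can be sketched as below. The counts (330 trials, 30 practice trials, targets on 50% of trials, set sizes 1 to 4) come from the text. The balancing of set size across trials, the shuffling scheme, and the name `build_trials` are assumptions made for illustration and do not describe the software actually used.

```python
import random


def build_trials(n_trials=330, n_practice=30, set_sizes=(1, 2, 3, 4), seed=0):
    """Return a shuffled trial list for one condition.

    Assumption: set size and target presence are crossed and cycled so that
    each combination occurs about equally often.
    """
    rng = random.Random(seed)
    cells = [(size, present) for size in set_sizes for present in (True, False)]
    design = [cells[i % len(cells)] for i in range(n_trials)]
    rng.shuffle(design)
    return [
        {"set_size": size, "target_present": present, "practice": i < n_practice}
        for i, (size, present) in enumerate(design)
    ]


trials = build_trials()
n_present = sum(t["target_present"] for t in trials)
n_practice = sum(t["practice"] for t in trials)
print(f"{len(trials)} trials, {n_present} target-present, {n_practice} practice")
```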

Observers

Twelve paid participants between the ages of 18 and 55 were tested on all conditions. Each participant reported no history of eye or muscle disorders. All had at least 20/25 acuity and passed the Ishihara Test for Color Blindness. Informed consent was obtained for all participants and each participant was paid $10/h.

Results

Reaction times

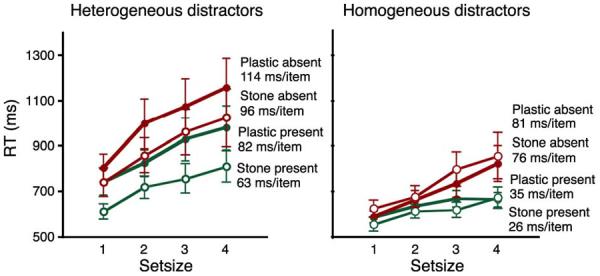

Figure 2 shows mean RT as a function of set size for the heterogeneous distractor conditions on the left and the homogeneous distractor conditions on the right. The main finding is that all of the conditions produce inefficient searches. All slopes are significantly greater than zero (all t(11) > 3.3, all p < 0.01). The most efficient condition (stone among plastic) has a slope of 26 ms/item with a lower bound on its 95% confidence interval of 14 ms/item.

Figure 2.

Reaction time × set size functions for the four conditions of Experiment 1. Error bars are 1 SEM. Green lines show target-present trials. Red lines show target-absent trials. Closed symbols show plastic target data. Open symbols show stone target data.
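The slope statistics reported for Experiment 1 (a one-sample t-test of per-observer slopes against zero, with a 95% confidence interval) can be sketched as follows. The per-observer slopes in the example are hypothetical and are not the data of this study. The critical value 2.201 is the standard two-tailed 5% value of Student's t for 11 degrees of freedom, matching the twelve observers tested.

```python
import math
import statistics

T_CRIT_DF11 = 2.201  # two-tailed 5% critical value of Student's t, 11 df


def slope_t_test(slopes, t_crit):
    """One-sample t-test of the mean slope against zero, plus a confidence interval."""
    n = len(slopes)
    mean = statistics.mean(slopes)
    sem = statistics.stdev(slopes) / math.sqrt(n)  # standard error of the mean
    return {
        "mean": mean,
        "t": mean / sem,
        "df": n - 1,
        "ci_low": mean - t_crit * sem,
        "ci_high": mean + t_crit * sem,
    }


# Hypothetical slopes (ms/item) for twelve observers; illustrative values only.
slopes = [12.0, 35.0, 22.0, 41.0, 18.0, 30.0, 27.0, 15.0, 38.0, 24.0, 29.0, 21.0]
result = slope_t_test(slopes, T_CRIT_DF11)
print(f"mean slope = {result['mean']:.1f} ms/item, t({result['df']}) = {result['t']:.2f}")
print(f"95% CI: [{result['ci_low']:.1f}, {result['ci_high']:.1f}] ms/item")
```

If the lower bound of the interval is well above zero, as reported for even the most efficient condition, the search cannot be described as efficient.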

The heterogeneous distractor conditions are harder than the homogeneous conditions, as would be expected (Duncan & Humphreys, 1989), for both target-present and -absent conditions (both F(1,11) > 5.9, p < 0.05, partial eta-squared (pes) > 0.35).

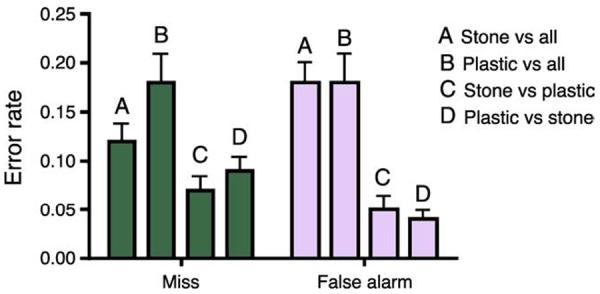

Errors

Figure 3 shows error rates for each of the four conditions. Os made more errors than is typical in simple visual search tasks. Typical error rates in basic search tasks would be less than 10%, even at the larger set sizes (Wolfe, Palmer, & Horowitz, 2010). This is especially notable in the false alarm rates in the conditions in which distractors were heterogeneous (A and B). False alarm rates in this sort of experiment are typically very low. Here, apparently, Os confused some distractors for targets and vice versa. Two-way ANOVAs document that errors are greater in the heterogeneous distractor conditions than in the homogeneous conditions (Miss errors: F(1,11) = 16.5, p = 0.0019, pes = 0.60, FA: F(1,11) = 38.2, p < 0.0001, pes = 0.78). Miss errors are more common for plastic targets than for stone targets (F(1,11) = 6.4, p = 0.027, pes = 0.37). False alarms did not differ between target types (F(1,11) = 0.53, p = 0.48, pes = 0.05).

Figure 3.

Error rates for Experiment 1. Error bars are 1 SEM.
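The partial eta-squared (pes) values reported with the error ANOVAs can be recovered from each F ratio and its degrees of freedom using the standard identity pes = (F × df_effect) / (F × df_effect + df_error). The sketch below applies that identity to the four F values given in the text; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared computed from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, pes as reported in the text)
reported = [
    ("misses, distractor type", 16.5, 1, 11, 0.60),
    ("false alarms, distractor type", 38.2, 1, 11, 0.78),
    ("misses, target type", 6.4, 1, 11, 0.37),
    ("false alarms, target type", 0.53, 1, 11, 0.05),
]

for label, f_value, df1, df2, pes_reported in reported:
    pes = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: computed pes = {pes:.2f}, reported pes = {pes_reported:.2f}")
```

The computed values agree with the reported ones to two decimal places.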

Discussion

It is clear that while these stimuli may support rapid identification, they do not support efficient search. Indeed, these slopes are comparable to or steeper than those of standard “inefficient” searches like a search for a T among Ls or a 2 among 5s (Wolfe, 1998b). It is important to note that our data in no way contradict Sharan et al.'s findings. The ability to perform very rapid identification of an attended item does not imply an ability to guide search on the basis of that identification. For example, a “T” can be rapidly distinguished from an “L” but a search for a T among Ls is inefficient. The same applies to isolated objects. They might be easy to identify, but search for one object among assorted other objects is quite inefficient (Vickery, King, & Jiang, 2005). Still, it seemed possible that Sharan et al.'s materials might have been too complex and too heterogeneous for present purposes. Accordingly, in Experiment 2, we repeated the experiment using a simpler, more homogeneous set of stimuli.

Experiment 2: Simple material stimuli

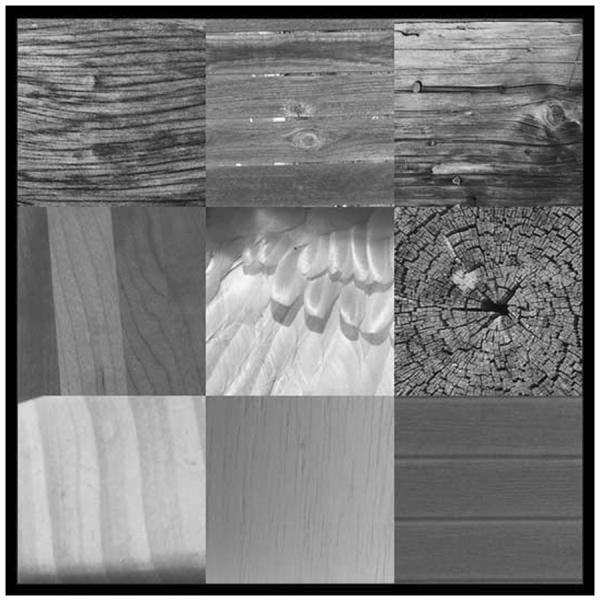

Experiment 2 used relatively close-up, planar images of feather, wood, fur, water, and stone. Three conditions were tested: Feather among wood in grayscale (example shown in Figure 4), fur among water in grayscale, and stone among fur in color. Again, many other search tasks are possible, but these materials seemed very distinct from one another. If a condition such as fur among water fails to produce efficient search, it does not seem likely that material would be a general, guiding attribute. As shown in Figure 4, stimuli were packed together since denser stimuli can produce higher salience (Nothdurft, 2000) and more efficient search for weak preattentive features. For example, it is easier to find a red target among reddish orange distractors if the target and distractors are closely packed, facilitating comparisons between items.

Figure 4.

Search for feather among wood.

Larger set sizes were used in this experiment because, again, there are circumstances under which feature guidance is more effective with larger set sizes (Bravo & Nakayama, 1992). Two of the three conditions were performed with grayscale images. This eliminated the possibility of a color cue (e.g., water might be bluer than fur) and it eliminated the distracting effects of irrelevant color variation. The third condition, stone among fur, was performed in color in case the presence of color was a vital part of material identification even if it was not diagnostic. The fur and stone stimuli had heavily overlapping distributions of colors (at least, by observation).

Stimuli were displayed against a deep blue background to provide contrast with the grayscale images. Each individual image subtended 4.1 × 4.1 degrees at a viewing distance of 57.4 cm. Set size was 4 (2 by 2 matrix), 9 (3 by 3 matrix), 16 (4 by 4 matrix), or 25 (5 by 5 matrix). Before performing the search task, Os saw each of the stimuli individually. They were asked to verbally categorize the stimuli and were corrected on the rare occasion of an error. Due to a programming error, the upper left corner of the stimulus matrix was always aligned with the upper left corner of the 20.5 × 20.5 degree display area. Thus, the 5 × 5 grid (set size 25) was always centered on the screen while the grids for the smaller set sizes were not. A subsequent control experiment suggested that this did not substantially alter the results. Methods were otherwise the same as Experiment 1.
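The consequence of the alignment error described above can be illustrated with a small geometric sketch. It assumes that items were packed edge to edge with no gaps, which is consistent with five 4.1 degree images filling the 20.5 degree display area; the function names are hypothetical, and the code is not the experiment software.

```python
ITEM_DEG = 4.1      # side of one image, from the text
DISPLAY_DEG = 20.5  # side of the display area, from the text


def grid_origin(n_per_side, centered):
    """Upper-left corner (deg) of an n x n grid inside the display area."""
    grid_deg = n_per_side * ITEM_DEG
    offset = (DISPLAY_DEG - grid_deg) / 2 if centered else 0.0
    return offset, offset


def grid_center(n_per_side, centered):
    """Center (deg) of the grid, measured from the display's upper-left corner."""
    x0, y0 = grid_origin(n_per_side, centered)
    half = n_per_side * ITEM_DEG / 2
    return x0 + half, y0 + half


# With upper-left alignment, only the 5 x 5 grid sits at the display center.
for n in (2, 3, 4, 5):
    cx, _ = grid_center(n, centered=False)
    shift = DISPLAY_DEG / 2 - cx
    print(f"set size {n * n:2d}: grid center is {shift:.2f} deg up and left of display center")
```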

Observers

Eleven paid participants between the ages of 18 and 55 were tested on all conditions. Participants reported no history of eye or muscle disorders and had 20/25 vision or better. All passed the Ishihara Test for Color Blindness. Informed consent was obtained for all participants and each participant was paid $10/h.

Results

In the preliminary categorization task, Os were 95% correct on average. The worst performance was in the fur category (89%) because fur stimuli were occasionally mistaken for feather stimuli. Since Os did not search for fur among feather, this is unlikely to have been a significant factor in the search results. For the target–distractor pairs used here (stone–feather, fur–water, and feather–wood), there were no categorization confusions (i.e., no stones labeled as “feather”).

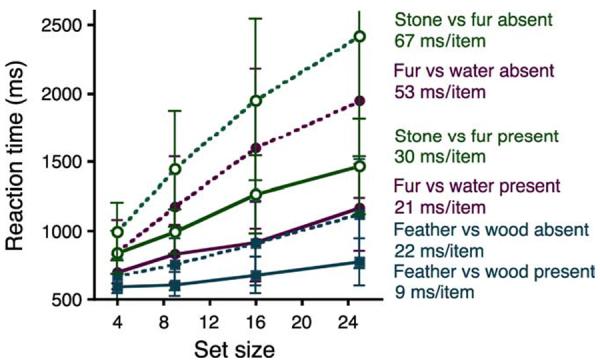

RTs less than 200 and over 5000 ms were removed from analysis. This eliminated less than 1% of trials. Mean RTs are shown in Figure 5.

Figure 5.

RT × set size functions for the three conditions of Experiment 2. Solid lines show target-present data. Dashed lines show target-absent data. Open circles (green) show search for stone among fur, filled circles (purple) show fur among water, and filled squares (blue) show feather among wood. Error bars show 1 SEM.
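The RT trimming rule stated above (removing RTs below 200 ms and above 5000 ms) can be sketched as follows. The function name and the example RTs are hypothetical; only the two cutoffs come from the text.

```python
def trim_rts(rts_ms, low=200.0, high=5000.0):
    """Keep RTs within [low, high] ms; also return the fraction of trials removed."""
    kept = [rt for rt in rts_ms if low <= rt <= high]
    removed_fraction = 1 - len(kept) / len(rts_ms) if rts_ms else 0.0
    return kept, removed_fraction


# Hypothetical RTs (ms); illustrative values only.
example_rts = [150.0, 200.0, 850.0, 1320.0, 5000.0, 5200.0]
kept, removed = trim_rts(example_rts)
print(f"kept {len(kept)} of {len(example_rts)} trials ({removed:.0%} removed)")
```

Values exactly at a cutoff are retained here, following the wording "less than 200 and over 5000 ms."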

Overall, these simpler, more homogeneous stimuli did not produce particularly efficient search. The stone–fur and fur–water conditions produce the standard inefficient search results that would be seen in a search for a 2 among 5s or a T among Ls. The fact that the stone–fur stimuli were in color while the fur–water stimuli were in grayscale does not seem to have made any qualitative difference to the results. Feather–wood, however, initially appeared to be something of an exception to the general rule that search for material type is inefficient. The target-present slope of 9 ms/item is quite efficient (but see discussion below). ANOVAs with factors of Condition and Set Size reveal significant effects on RT of condition for correct target-present (F(2,22) > 58, p < 0.0001, pes > 0.84) and -absent responses (F(2,22) > 61, p < 0.0001, pes > 0.85). The main effects of set size and the interaction of set size and condition are similarly highly significant (all p < 0.0001).

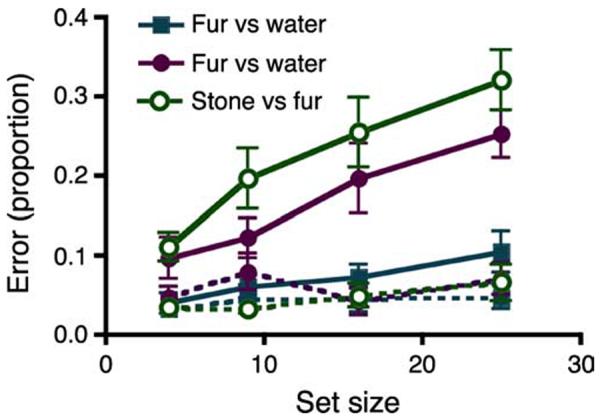

Error data are shown in Figure 6. The high miss error rates, increasing with set size, for the fur–water and stone–fur conditions indicate that the RT × set size slopes for those conditions would be even less efficient without the apparent speed–accuracy tradeoff. The lower false alarm rates in this experiment are more typical of standard search tasks where observers' criteria favor misses over false alarms (Wolfe et al., 2010).

Figure 6.

Error data for the three conditions of Experiment 2. Solid lines show target-present data. Dashed lines show target-absent data. Open circles show search for stone among fur, filled circles show fur among water, and filled squares show feather among wood. Error bars are 1 SEM.

The feather–wood condition produced more accurate as well as more efficient search. However, the efficiency of the feather–wood condition in this experiment is probably merely an informative artifact. Wood and feather textures have non-isotropic orientation distributions. As can be seen in Figure 4, a typical patch of wood will have a grain with a predominant orientation. As it happens, in Experiment 2, the feather textures tended to be oriented vertically while the wood textures tended to be oriented horizontally. Of course, this was accidental and neither the experimenters nor the observers were explicitly aware of this confound when the experiment was run. Nevertheless, Os seem to have been sensitive to this regularity and to have used the orientation cue to guide attention. In a subsequent control experiment with orientations randomized, five new observers produced an average target-present slope of 20 ms/item and an average target-absent slope of 46 ms/item, very similar to the inefficient fur among water condition. Overall, Experiment 2 fails to produce convincing evidence for efficient search for material type.

General discussion

Because these search tasks produce consistently inefficient search results, we conclude that material type is not an attribute that effectively guides the deployment of attention in visual search. This conclusion suffers from the usual problem of a negative finding. Perhaps there is some set of material stimuli that would produce efficient search. This is possible. The present experiments do not come close to exhausting the possible sets of materials. Nevertheless, one would think that, if guidance by material properties were a routine aspect of guided search, it should be possible to find fur among water or stone among fur quite easily. It is hard to imagine that there are substantially more dramatic material contrasts that await testing and that would support efficient search.

As noted above, the failure to find efficient search for material type does not mean that Os cannot rapidly identify materials. The identification task at the start of Experiment 2 shows that Os had no trouble distinguishing our target materials from the distractor materials and Sharan et al.'s work shows that these identifications can be made rapidly even with the much more diverse materials of Experiment 1.

The present results notwithstanding, it seems intuitively clear that material properties guide our search in the real world. Here the artifactually efficient search for feather among wood in Experiment 2 may be informative. Search for a material will be guided by the basic preattentive attributes that characterize that material if that information is available. To take a trivial example, a child's plastic toy, misplaced in the living room, is likely to have some distinctive color that is not part of the parents' interior design palette. It will be found efficiently, not because it is plastic material, but because it possesses unique features from the already established list of basic attributes. This is a persistent issue in the search for preattentive, guiding attributes. Once the stimulus is complex enough, it can be quite difficult to guarantee that no basic feature distinguishes between targets and distractors. For example, this fact has bedeviled the question of guidance to faces or facial expression for years (Eastwood, Smilek, & Merikle, 2001; Hansen & Hansen, 1988; Hershler & Hochstein, 2005, 2006; Nothdurft, 1993; Purcell & Stewart, 1988; Purcell, Stewart, & Skov, 1996; VanRullen, 2006).

In an effort to eliminate other attributes as sources of guidance, we adopted the expedient of using heterogeneous collections of targets (several different patches of fur) and of distractors (several different patches of water). Even then, one can fall victim to a feature artifact, as we did in the feather–wood condition. Moreover, this strategy introduces its own problem when search turns out to be inefficient. Even well-established features may fail to produce efficient search if the distractors are suitably inhomogeneous. Thus, while color is irrefutably a basic, preattentive attribute, search will be inefficient if the target lies between the distractors in color space (as long as the colors are quite close to each other in that space). Search for a blue–green among blue and green, for example, will be inefficient even if separate searches for blue–green among blue and blue–green among green are efficient (Bauer, Jolicœur, & Cowan, 1996; D'Zmura, 1991). The logic of the present experiments is that the heterogeneity arises in dimensions other than the hypothetical material dimension under test. The wood is all wood even if it varies in orientation, spatial frequency content, and so forth. This works for a feature like orientation. One vertical object will be easily found among horizontal objects even if all the objects differ in their color, size, and so forth (Snowden, 1998; Treisman, 1988). It does not work for materials. Finding the one stone region among the fur requires inefficient search.

What, then, do we know about the guiding attributes in visual search?

Guiding attributes do not seem to be the same as the attributes of the first stages of visual processing. There are guiding attributes that are not represented in early vision such as the various cues to depth (Enns & Rensink, 1990; Kleffner & Ramachandran, 1992) and attributes that are attributes of early vision (e.g., orientation) seem to be differently represented in search and early vision (Wolfe, Friedman-Hill, Stewart, & O'Connell, 1992).

Guiding attributes do not seem to map on to some simple set of middle vision properties. To offer a few examples, intersections, important for calculation of objects and occlusion, do not guide search (Bergen & Julesz, 1983; Wolfe & DiMase, 2003). Subjective contours may begin driving cells in V2 (Von der Heydt, Peterhans, & Baumgartner, 1984), but they are limited in their ability to guide search (Davis & Driver, 1994; Gurnsey, Humphrey, & Kapitan, 1992; Li, Cave, & Wolfe, 2008; Senkowski, Rottger, Grimm, Foxe, & Herrmann, 2005). In addition, the present results show that material properties do not guide search.

The ability of a feature to guide attention may be dissociated from its perceptual salience. Most models of salience assume that guidance and perception are making use of the same signal, but this is demonstrably untrue. The present results show a failure to efficiently search for apparently salient surface properties. As a clearer example of the dissociation of salience and guidance, consider a recent experiment in which Os searched for a desaturated target among saturated and achromatic distractors. Os might search for pink among red and white or pale green among green and white. The stimuli were carefully calibrated so that all targets lay at the perceptual midpoint between their two distractors. Nevertheless, search for the desaturated red targets was much faster than search for any other desaturated target (Lindsey et al., 2010).

These negative facts about guiding attributes lead to the conclusion that guidance makes idiosyncratic, non-perceptual use of a limited set of attributes. The guiding attributes are derived from the visual input for the purposes of guidance. In the color example just given, it is possible to model the precise way in which photoreceptor signals are used to guide search and to show that this guiding combination of signals is different from the combination that produces color perception. It is harder to do this with other guiding attributes, even quite basic ones like orientation. It is clear that the guiding attributes are not a simple subset of some other visual processing stage (e.g., V1). The principle that governs admission to the set of guiding attributes, if there is a principle, remains unclear. The present results indicate that the set is not the set of attributes that permits rapid categorization of materials.

Acknowledgments

We thank Michelle Greene and Lavanya Sharan for comments. We also thank Lavanya Sharan for sharing her stimuli with us. This work was supported by NEI EY017001 and ONR N000141010278.

Footnotes

Commercial relationships: none.

References

- Aks DJ, Enns JT. Visual search for size is influenced by a background texture gradient. Journal of Experimental Psychology: Human Perception and Performance. 1996;22:1467–1481. doi: 10.1037//0096-1523.22.6.1467. [DOI] [PubMed] [Google Scholar]

- Bauer B, Jolicœur P, Cowan WB. Visual search for colour targets that are or are not linearly-separable from distractors. Vision Research. 1996;36:1439–1466. doi: 10.1016/0042-6989(95)00207-3. [DOI] [PubMed] [Google Scholar]

- Bergen JR, Julesz B. Rapid discrimination of visual patterns. IEEE Transactions on Systems, Man, and Cybernetics. 1983;13:857–863. [Google Scholar]

- Bravo M, Nakayama K. The role of attention in different visual search tasks. Perception & Psychophysics. 1992;51:465–472. doi: 10.3758/bf03211642. [DOI] [PubMed] [Google Scholar]

- Davis G, Driver J. Parallel detection of Kanisza subjective figures in the human visual system. Nature. 1994;371:791–793. doi: 10.1038/371791a0. [DOI] [PubMed] [Google Scholar]

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433. [DOI] [PubMed] [Google Scholar]

- D'Zmura M. Color in visual search. Vision Research. 1991;31:951–966. doi: 10.1016/0042-6989(91)90203-h. [DOI] [PubMed] [Google Scholar]

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotion. Perception & Psychophysics. 2001;63:1004–1013. doi: 10.3758/bf03194519. [DOI] [PubMed] [Google Scholar]

- Egeth HE, Virzi RA, Garbart H. Searching for conjunctively defined targets. Journal of Experimental Psychology: Human Perception and Performance. 1984;10:32–39. doi: 10.1037//0096-1523.10.1.32. [DOI] [PubMed] [Google Scholar]

- Enns JT, Rensink RA. Sensitivity to three-dimensional orientation in visual search. Psychological Science. 1990;1:323–326. [Google Scholar]

- Epstein W, Babler T. In search of depth. Perception & Psychophysics. 1990;48:68–76. doi: 10.3758/bf03205012. [DOI] [PubMed] [Google Scholar]

- Gogel WC. The sensing of retinal size. Vision Research. 1969;9:1079–1094. doi: 10.1016/0042-6989(69)90049-2. [DOI] [PubMed] [Google Scholar]

- Gurnsey R, Humphrey GK, Kapitan P. Parallel discrimination of subjective contours defined by offset gratings. Perception & Psychophysics. 1992;52:263–276. doi: 10.3758/bf03209144. [DOI] [PubMed] [Google Scholar]

- Hansen CH, Hansen RD. Finding the face in the crowd: An anger superiority effect. Journal of Personality & Social Psychology. 1988;54:917–924. doi: 10.1037//0022-3514.54.6.917. [DOI] [PubMed] [Google Scholar]

- He JJ, Nakayama K. Surfaces vs. features in visual search. Nature. 1992;359:231–233. doi: 10.1038/359231a0. [DOI] [PubMed] [Google Scholar]

- He ZJ, Nakayama K. Visual attention to surfaces in three-dimensional space. Proceedings of the National Academy of Sciences of the United States America. 1995;92:11155–11159. doi: 10.1073/pnas.92.24.11155. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hershler O, Hochstein S. At first sight: A high-level pop out effect for faces. Vision Research. 2005;45:1707–1724. doi: 10.1016/j.visres.2004.12.021. [DOI] [PubMed] [Google Scholar]

- Hershler O, Hochstein S. With a careful look: Still no low-level confound to face pop-out. Vision Research. 2006;46:3028–3035. doi: 10.1016/j.visres.2006.03.023. [DOI] [PubMed] [Google Scholar]

- Kaptein NA, Theeuwes J, Van der Heijden AHC. Search for a conjunctively defined target can be selectively limited to a color-defined subset of elements. Journal of Experimental Psychology: Human Perception and Performance. 1994;21:1053–1069. [Google Scholar]

- Kleffner DA, Ramachandran VS. On the perception of shape from shading. Perception & Psychophysics. 1992;52:18–36. doi: 10.3758/bf03206757. [DOI] [PubMed] [Google Scholar]

- Li X, Cave K, Wolfe JM. Kanisza-style subjective contours do not guide attentional deployment in visual search but line termination contours do. Perception & Psychophysics. 2008;70:477–488. doi: 10.3758/pp.70.3.477. [DOI] [PubMed] [Google Scholar]

- Lindsey DT, Brown AM, Reijnen E, Rich AN, Kuzmova Y, Wolfe JM. Psychology Science. 2010. Color channels, not color appearance or color categories, guide visual search for desaturated color targets. first published online on August 16, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moore CM, Brown LE. Preconstancy information can influence visual search: The case of lightness constancy. Journal of Experimental Psychology: Human Perception and Performance. 2001;27:178–195. doi: 10.1037//0096-1523.27.1.178. [DOI] [PubMed] [Google Scholar]

- Nakayama K, He JJ. Attention to surfaces: Beyond a Cartesian understanding of focal attention. In: Papathomas TV, editor. Attention to surfaces: Beyond a Cartesian understanding of visual attention. MIT Press; Cambridge, MA: 1995. [Google Scholar]

- Nothdurft HC. Faces and facial expression do not pop-out. Perception. 1993;22:1287–1298. doi: 10.1068/p221287. [DOI] [PubMed] [Google Scholar]

- Nothdurft HC. Salience from feature contrast: Variations with texture density. Vision Research. 2000;40:3181–3200. doi: 10.1016/s0042-6989(00)00168-1. [DOI] [PubMed] [Google Scholar]

- Purcell DG, Stewart AL. The face-detection effect: Configuration enhances detection. Perception & Psychophysics. 1988;43:355–366. doi: 10.3758/bf03208806. [DOI] [PubMed] [Google Scholar]

- Purcell DG, Stewart AL, Skov RB. It takes a confounded face to pop out of a crowd. Perception. 1996;25:1091–1108. doi: 10.1068/p251091. [DOI] [PubMed] [Google Scholar]

- Ramachandran VS. Perception of shape from shading. Nature. 1988;331:163–165. doi: 10.1038/331163a0. [DOI] [PubMed] [Google Scholar]

- Senkowski D, Rottger S, Grimm S, Foxe J, Herrmann C. Kanizsa subjective figures capture visual spatial attention: Evidence from electro-physiological and behavioral data. Neuropsychologia. 2005;43:872–886. doi: 10.1016/j.neuropsychologia.2004.09.010. [DOI] [PubMed] [Google Scholar]

- Sharan L, Rosenholtz RE, Adelson EH. Material perception in real-world images is fast and accurate. submitted for publication. [Google Scholar]

- Snowden RJ. Texture segregation and visual search: A comparison of the effects of random variations along irrelevant dimensions. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:1354–1367. doi: 10.1037//0096-1523.24.5.1354. [DOI] [PubMed] [Google Scholar]

- Sun J, Perona P. Where is the sun? Nature Neuroscience. 1998;1:183–184. doi: 10.1038/630.

- Thornton TL, Gilden DL. Parallel and serial processes in visual search. Psychological Review. 2007;114:71–103. doi: 10.1037/0033-295X.114.1.71.

- Treisman A. Preattentive processing in vision. Computer Vision, Graphics, and Image Processing. 1985;31:156–177.

- Treisman A. Features and objects in visual processing. Scientific American. 1986a;255:114B–125.

- Treisman A. Properties, parts, and objects. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance. 1st ed. vol. 2. John Wiley and Sons; New York: 1986b. pp. 35.31–35.70.

- Treisman A. Features and objects: The 14th Bartlett memorial lecture. Quarterly Journal of Experimental Psychology. 1988;40:201–237. doi: 10.1080/02724988843000104.

- Treisman A, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- VanRullen R. On second glance: Still no high-level pop-out effect for faces. Vision Research. 2006;46:3017–3027. doi: 10.1016/j.visres.2005.07.009.

- Vickery TJ, King L-W, Jiang Y. Setting up the target template in visual search. Journal of Vision. 2005;5(1):8, 81–92. doi: 10.1167/5.1.8. http://www.journalofvision.org/content/5/1/8.

- Von der Heydt R, Peterhans E, Baumgartner G. Illusory contours and cortical neuron responses. Science. 1984;224:1260–1262. doi: 10.1126/science.6539501.

- Williams LG. The effect of target specification on objects fixated during visual search. Perception & Psychophysics. 1966;1:315–318.

- Wolfe JM. Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review. 1994;1:202–238. doi: 10.3758/BF03200774.

- Wolfe JM. Visual search. In: Pashler H, editor. Attention. Psychology Press; Hove, East Sussex, UK: 1998a. pp. 13–74.

- Wolfe JM. What do 1,000,000 trials tell us about visual search? Psychological Science. 1998b;9:33–39.

- Wolfe JM. Guided search 4.0: Current progress with a model of visual search. In: Gray W, editor. Integrated models of cognitive systems. Oxford; New York: 2007. pp. 99–119.

- Wolfe JM, Birnkrant RS, Kunar MA, Horowitz TS. Visual search for transparency and opacity: Attentional guidance by cue combination? Journal of Vision. 2005;5(3):9, 257–274. doi: 10.1167/5.3.9. http://www.journalofvision.org/content/5/3/9.

- Wolfe JM, Cave KR, Franzel SL. Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:419–433. doi: 10.1037//0096-1523.15.3.419.

- Wolfe JM, DiMase JS. Do intersections serve as basic features in visual search? Perception. 2003;32:645–656. doi: 10.1068/p3414.

- Wolfe JM, Friedman-Hill SR, Stewart MI, O'Connell KM. The role of categorization in visual search for orientation. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:34–49. doi: 10.1037//0096-1523.18.1.34.

- Wolfe JM, Horowitz TS. What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience. 2004;5:495–501. doi: 10.1038/nrn1411.

- Wolfe JM, Palmer EM, Horowitz TS. Reaction time distributions constrain models of visual search. Vision Research. 2010;50:1304–1311. doi: 10.1016/j.visres.2009.11.002.