Abstract

Brain-machine interfaces (BMIs) are artificial systems that aim to restore sensation and movement to severely paralyzed patients. However, previous BMIs enabled only single-arm functionality, and control of bimanual movements remained a major challenge. Here, we developed and tested a bimanual BMI that enabled rhesus monkeys to control two avatar arms simultaneously. The bimanual BMI was based on the extracellular activity of 374–497 neurons recorded from several frontal and parietal cortical areas of both cerebral hemispheres. Cortical activity was transformed into movements of the two arms with a decoding algorithm called the 5th-order unscented Kalman filter (UKF). The UKF is well suited for BMI decoding because it accounts both for the characteristics of reaching movements and for their representation by cortical neurons. The UKF was trained either during a manual task performed with two joysticks or while the monkeys passively observed the movements of the avatar arms. Most cortical neurons changed their modulation patterns when both arms were engaged simultaneously. Representing the two arms jointly in a single UKF decoder resulted in improved decoding performance compared with using separate decoders for each arm. As the animals’ performance in bimanual BMI control improved over time, we observed widespread plasticity in frontal and parietal cortical areas. Neuronal representation of the avatar and reach targets was enhanced with learning, whereas pairwise correlations between neurons initially increased and then decreased. These results suggest that cortical networks may assimilate the two avatar arms through BMI control.

Introduction

The complexity and variety of motor behaviors in humans and other primates are vastly augmented by the remarkable ability of the central nervous system to control bimanual movements (1). Yet this functionality has not been implemented in previous brain-machine interfaces (BMIs), hybrid systems that directly connect brain tissue to machines in order to restore motor and sensory functions to paralyzed individuals (2). The advancement of BMIs has been driven by two fundamental goals: investigation of the physiological principles that guide the operation of large neural ensembles (3) and the development of neuroprosthetic devices that could restore limb movements and sensation to paralyzed patients (4, 5). Previous BMIs emulated only single-arm control, with the effector represented by a computer cursor (6–11), a robot (8, 12, 13), or an avatar arm (14), and did not enable simultaneous bimanual control of arm movements.

Studies in non-human primates have shown that the brain does not encode bimanual movements simply by superimposing two independent single-limb representations (15, 16). Cortical regions, such as the supplementary motor area (SMA) (17–19) and the primary motor cortex (M1) (16, 18, 19), exhibit specific patterns of activity during bimanual movements. These complex neuronal representations pose a major challenge for BMIs enabling bimanual control because such BMIs cannot be designed simply by combining single-limb modules. Here, we tested the ability of a bimanual BMI to enable rhesus monkeys to control two avatar arms simultaneously.

Results

Large scale recordings and experimental paradigms

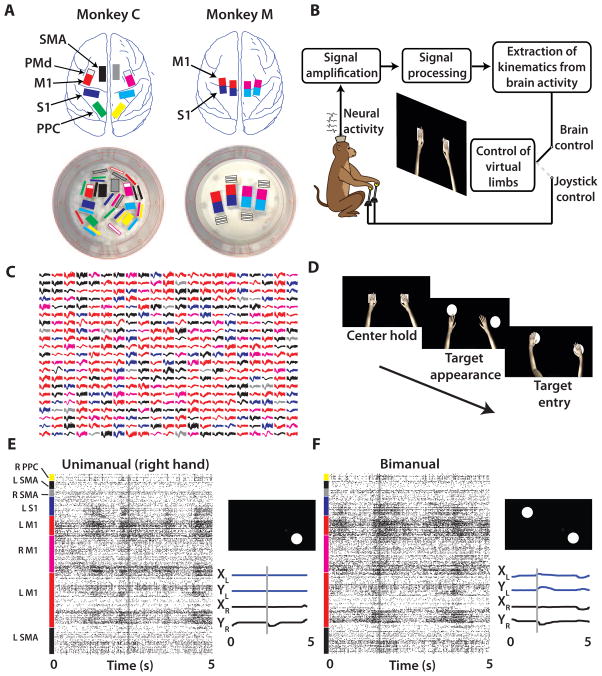

We set out to discover whether large-scale cortical recordings could provide sufficient neuronal signals to accurately control a bimanual BMI (4, 20). We implanted volumetric multielectrode arrays in two monkeys (768 microelectrodes in monkey C; 384 in monkey M) (Fig. 1A) as described previously (20). Neural signals were sorted using template matching algorithms within commercially available software (Plexon Inc., Dallas, TX). In monkey C, we simultaneously sampled (Fig. 1C,E–F) from the SMA (73–110 units in the left hemisphere, 0–20 units in the right hemisphere; ranges for all experiments), M1 (176–218 units in the left hemisphere, 45–62 units in the right hemisphere), primary somatosensory cortex (S1) (9–64 units in the left hemisphere, 0–34 in the right hemisphere), and posterior parietal cortex (PPC) (0–4 units in the left hemisphere, 22–47 in the right hemisphere). In monkey M, we sampled from M1 (80–90 units in the left hemisphere, 195–204 in the right hemisphere) and S1 (47–56 units in the left hemisphere, 127–149 in the right hemisphere). The daily unit count neared 500 for each monkey, which constitutes the highest number of simultaneously recorded units in non-human primates to date (21). The high unit count for monkey M has persisted for 48 months after the implantation surgery, and for monkey C has persisted for 18 months after the surgery. Recordings are still continuing in these two animals.

Fig. 1. Large scale electrode implants and behavioral tasks.

(A) Monkey C (left) and monkey M (right) were chronically implanted with eight and four 96-channel arrays, respectively. (B) The monkey is seated in front of a screen showing two virtual arms and uses either joystick movements or modulations in neural activity to control the avatar arms. (C) 441 sample waveforms from typical monkey C recording sessions, with the color of each waveform indicating the recording site (shown in A). (D) Left to right: the trial sequence began with both hands holding a center target for a random interval. Next, two peripheral targets appeared, which had to be reached and held with the respective hands to receive a juice reward. (E, F) Raster plot of spike events from 438 neurons (y-axis) over time (x-axis) for a single unimanual (E) and bimanual (F) trial. Target locations and position traces for the trial are shown to the right of the raster panel.

Using this large-scale BMI, both monkeys were able to directly control simultaneous reaching movements performed by two avatar arms. Moreover, the monkeys learned to operate the bimanual BMI without producing overt movements of their own arms. A realistic virtual monkey avatar (Fig. S1A) was chosen as the actuator for the bimanual BMI because, in prior studies (14, 22) and in experiments for this study (Fig. 2), we observed that monkeys readily engaged in both active control and passive observation of avatar movements. Each monkey observed two avatar arms on a computer monitor from a first-person perspective (Fig. 1B). Each trial began with two square targets appearing on the screen. Their positions were the same in all trials, and they served as the start positions for the avatar hands. The monkey had to place the avatar hands over their respective targets and hold these positions for a delay drawn randomly from a uniform distribution (400 to 1000 ms; Fig. 1D). The two squares were then replaced by two circular targets in one of 16 possible configurations (right, left, up, or down relative to the start position for each hand). At this point, the monkey had to place both avatar hands over the targets indicated by the two circles and hold them for a minimum of 100 ms to receive a fruit juice reward. In the unimanual version of this task, a single avatar arm had to reach a single target.

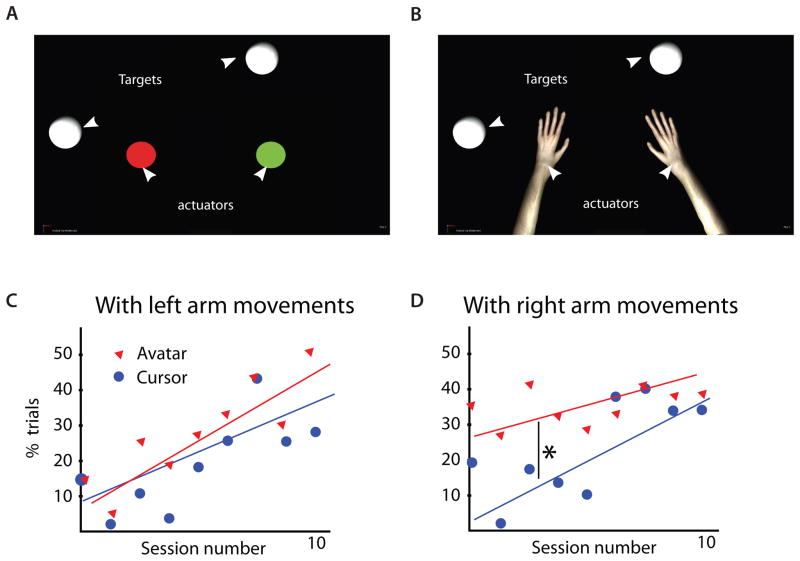

Fig. 2. Comparison of bimanual behavioral training with cursor and avatar actuators.

(A) Two 2D cursors or (B) two avatar arms were controlled by joystick movements. In both environments, the target for each hand is a white circle. (C, D, lower panels) Percentage of total trials containing a threshold amount of movement (the avatar arm reached beyond 80% of the distance from center to target) with the left arm (C) or right arm (D). The first ten sessions of avatar and cursor bimanual training, conducted on alternating days, are distinguished by blue and red markers. *p<0.05, t-test.

The tasks were performed in three possible ways: joystick control, brain control with arm movements (BC with arms), and brain control without arm movements (BC without arms). Both monkeys learned to perform BC without arms but through different learning sequences. Monkey C began with joystick control, a paradigm in which the right and left avatar arms were controlled directly by movements of the two joysticks (Fig. 1F) (18). Monkey C then learned BC with arms, a paradigm in which movements of the avatar arms were directed by cortical activity, although the monkey was permitted to continue manipulating the joysticks. Finally, monkey C learned BC without arms, a mode of operation in which decoded brain activity once again controlled avatar arm movements, but overt limb movements were now prevented by gently restraining both arms. Monkey M did not use the joystick in any task. Rather, this monkey’s task training began by having it passively observe the avatar arms moving on the screen as an initial step before learning BC without arms. This type of BMI training has clinical relevance for paralyzed subjects who cannot produce any overt movements, and it has been used in several human studies (13, 23).

To set up BC with arms for monkey C, we followed our previously established routine (8, 10) of training the BMI decoder on joystick control data to extract arm kinematics from cortical activity. Daily sessions dedicated solely to joystick control lasted 20–40 min. Next, brain control sessions began with 5–7 min of the joystick control task, before switching to BC with arms for the final 20–40 min. Despite the complexities of independent control of two virtual limbs, the decoding accuracy for our bimanual BMI was sufficient for online control (Movie S3) and matched the accuracy previously reported for less challenging unimanual BMIs (7, 8, 10, 24, 25).

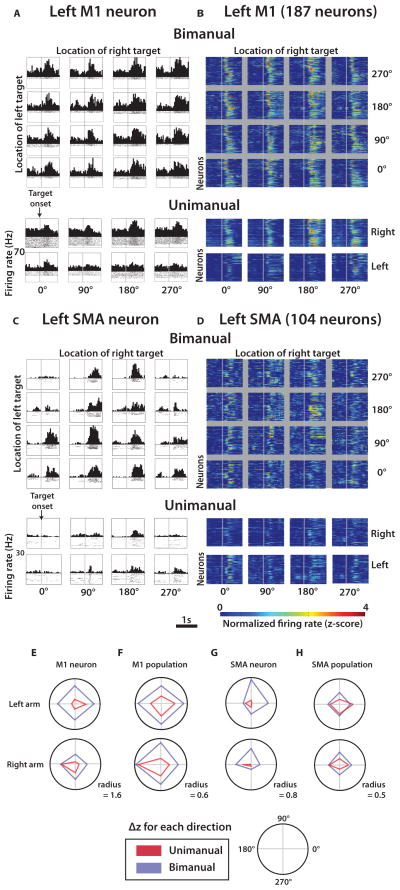

Bimanual Joystick Control

Monkey C was trained to perform both unimanual and bimanual joystick control tasks very accurately (greater than 97% of the trials were correct) (Fig. S1B–E; Movies 1, 2). Cortical recordings collected from this monkey revealed widespread neuronal modulations that reflected movement timing and direction (Fig. 1E,F; Figs. 3–5). Consistent with previous studies (15, 16, 18), cortical activity from multiple areas differed between unimanual and bimanual movements (Figs. 3–4). In the motor areas M1 (Fig. 3A,B,E,F) and SMA (Fig. 3C,D,G,H), individual units (Fig. 3A,C,E,G) and neuronal populations (Fig. 3B,D,F,H) alike exhibited directionally selective modulations during both unimanual and bimanual performance. For each configuration of the pair of targets, we characterized neuronal modulations as Δz, the mean difference between the firing rate in the movement epoch (from 150 to 600 ms after target appearance) and the baseline rate, both expressed in normalized units (z-scores). Each unit’s firing rate was normalized to z-scores before any grouping or averaging of individual trials. Average modulation across target positions, ⟨|Δz|⟩, was quantified as the absolute value of Δz averaged over all target configurations. Directional selectivity was measured as the standard deviation of Δz across target configurations, σ(Δz).
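The modulation measures defined above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ analysis code: z-scoring is done over one unit’s whole-session binned rates (the normalization window is not specified), inputs are per-trial mean z-scored rates in the movement and baseline epochs keyed by target configuration, and σ(Δz) is taken as the population standard deviation. The function names `zscore` and `modulation_metrics` are hypothetical.

```python
import numpy as np

def zscore(rates):
    """Z-score one unit's binned firing rates over the whole session
    (assumption: the normalization window is not specified in the text)."""
    rates = np.asarray(rates, dtype=float)
    sd = rates.std()
    return (rates - rates.mean()) / sd if sd > 0 else np.zeros_like(rates)

def modulation_metrics(move_z, base_z):
    """move_z, base_z: dicts mapping a target configuration to per-trial
    z-scored rates in the movement (150-600 ms) and baseline epochs.
    Returns (delta_z per configuration, mean |delta_z|, SD of delta_z)."""
    configs = sorted(move_z)
    dz = np.array([np.mean(move_z[c]) - np.mean(base_z[c]) for c in configs])
    # Average modulation: mean of |delta_z| over configurations.
    # Directional selectivity: spread of delta_z over configurations (ddof=0 assumed).
    return dz, np.mean(np.abs(dz)), np.std(dz)
```

A unit that fires above baseline for one configuration, below for the opposite one, and at baseline otherwise gets Δz = [1, 0, −1, 0], a mean |Δz| of 0.5, and σ(Δz) ≈ 0.71. Its mean signed Δz would be zero, which is why the absolute value is averaged.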

Fig. 3. Modulations of cortical neurons during manually performed unimanual and bimanual movements.

(A) Representative left M1 neuron peri-event time histogram (PETH) aligned on target appearance (grey line) for each of 16 left and right target location combinations during bimanual movements. Below the 4×4 grid are corresponding PETHs for the same neuron during unimanual trials in each of the four directions. (B) Same layout as (A) for the population of left M1 neurons. Each row of each color plot panel represents a single neuron, and the pixel color is the normalized firing rate or z-score (color scale at bottom). (C–D) Representative neuron (C) and neuronal population (D) in the supplementary motor area (SMA). (E–H) Modulations for each of the four movement directions for unimanual (red) and bimanual (blue) trials for the left (top) and right (bottom) arms: for one M1 neuron (E), for a population of M1 neurons (F), for one SMA neuron (G), and for a population of SMA neurons (H).

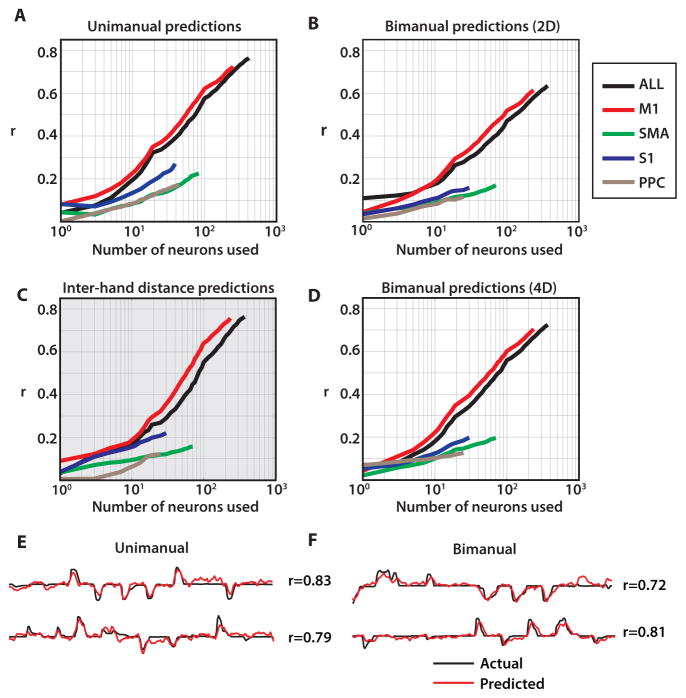

Fig. 5. Neuron dropping curves for joystick control.

(A) Neuron dropping curves for unimanual joystick control, (B) bimanual joystick control using two 2D decoding models, (C) inter-hand spacing, and (D) bimanual joystick control using one 4D decoding model. Curves are shown separately for each area, indicated by color. (E–F) Offline predictions using the 2D UKF for unimanual movements (E) and the 4D UKF for bimanual movements (F).

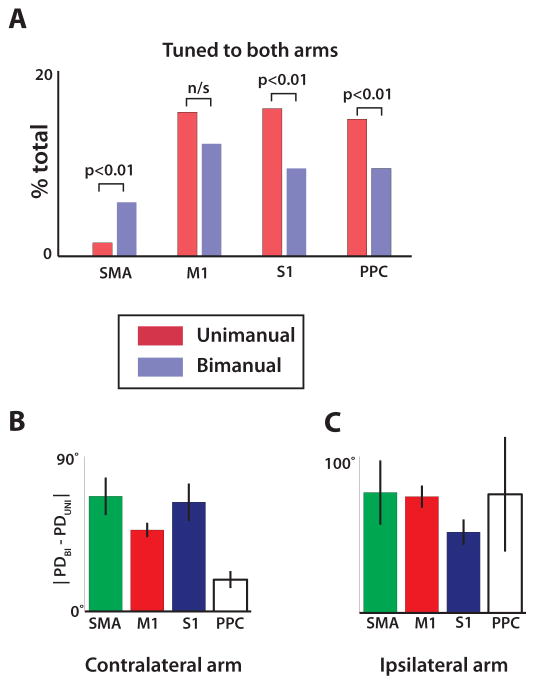

Fig. 4. Directional tuning during bimanual versus unimanual movements.

(A) Fraction of neurons in each cortical area which had significant tuning to both arms during unimanual (red) and bimanual (blue) trials, determined from regression. (B) The absolute value of the difference between preferred direction of the contralateral arm computed from bimanual trials and unimanual trials. Data shown separately for each cortical area. (C) Same analysis as (B) but showing the difference in preferred direction for the ipsilateral arm. All data are mean ± standard error. Analysis compiled from activity of 492 M1 neurons, 203 SMA neurons, 90 S1 neurons, and 61 PPC neurons.

The transition from unimanual to bimanual movements (Table S1) induced several effects in M1 and SMA. First, we observed a prominent increase in ⟨|Δz|⟩ during bimanual movements: by 76.7% and 34.6% for left and right M1, respectively, and by 35.8% and 37.9% for left and right SMA (p<0.01, t-test). M1 neurons exhibited a clear preference for the contralateral rather than the ipsilateral arm during unimanual performance, both in overall modulation (28.3% increase in ⟨|Δz|⟩ for the contralateral vs. ipsilateral arm; p<0.01) and in tuning depth (22.3% increase in σ(Δz)). The opposite, ipsilateral preference was observed for SMA (19.1% decrease in ⟨|Δz|⟩ and 11.1% decrease in σ(Δz); p<0.01). For both M1 and S1, directional tuning depth during the bimanual task was approximately equal for the left and right arms (left σ(Δz): 0.08; right σ(Δz): 0.09; p>0.01). Notably, SMA was the only area in which more neurons were tuned to both arms after the transition from unimanual to bimanual movements (p<0.01) (Fig. 4A). In addition to changes in overall modulation and directional tuning depth, bimanual control changed neuronal preferred directions, which shifted between the unimanual and bimanual conditions by 53.1±4.0° (mean ± s.e.m.) for the contralateral arm and 66.0±5.4° for the ipsilateral arm (Fig. 4B,C).
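The preferred-direction shifts above can be illustrated with a cosine tuning fit. This is a sketch under assumptions: the text says only that tuning was determined from regression, so the cosine model r = b0 + b1·cos θ + b2·sin θ is an assumed, commonly used choice, and the fit here uses one arm’s target direction at a time (a bimanual fit would add regressors for the other arm). Shifts are wrapped into the range 0° to 180°. The helper names are hypothetical.

```python
import numpy as np

def preferred_direction(directions_deg, rates):
    """Least-squares cosine tuning fit r = b0 + b1*cos(theta) + b2*sin(theta);
    returns the preferred direction in degrees, in [0, 360)."""
    theta = np.deg2rad(np.asarray(directions_deg, dtype=float))
    X = np.column_stack([np.ones_like(theta), np.cos(theta), np.sin(theta)])
    b0, b1, b2 = np.linalg.lstsq(X, np.asarray(rates, dtype=float), rcond=None)[0]
    return np.rad2deg(np.arctan2(b2, b1)) % 360.0

def pd_shift(pd_a, pd_b):
    """Absolute angular difference between two preferred directions, in [0, 180]."""
    d = abs(pd_a - pd_b) % 360.0
    return min(d, 360.0 - d)
```

Wrapping matters: a unit whose preferred direction moves from 350° to 10° has shifted by 20°, not 340°, so averaging unwrapped differences would inflate shifts like those reported in Fig. 4B,C.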

Offline decoding of bimanual movements with UKF

The unscented Kalman filter (UKF) (24) was selected as the BMI decoder in this study. The UKF has previously been employed to extract motor commands from brain activity to control a cursor (24) or a virtual arm (14) in real time. It represents both the reaching parameters, such as position and velocity (the state model), and their nonlinear relationship to neuronal rates (the tuning model). Additionally, it incorporates a history of neuronal rates (the higher the UKF order, the richer the history). In this study, the UKF updated its state every 100 ms based on the previous state and the recorded neuronal activity. The UKF was first applied to cortical recordings obtained during the joystick control task in monkey C. To decode the position of one arm during unimanual movements, we used a UKF with a two-dimensional (2D) output (X and Y coordinates of the hand; Fig. 5A,E). To decode bimanual movements, we applied either two separate 2D UKFs (Fig. 5B) or a UKF with a four-dimensional (4D) output (X and Y for both hands; Fig. 5D,F).
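To make the state-model/tuning-model split concrete, here is a minimal first-order UKF sketch. It is not the decoder of ref. 24. It assumes a single hand with state (x, y, vx, vy), a linear constant-velocity state model stepped every 100 ms, and a hypothetical tuning model in which each unit’s rate is linear in the state plus a speed term. Symmetric sigma points with one κ parameter (Julier–Uhlmann form) carry the nonlinearity. History taps (higher UKF orders) are omitted. A 4D bimanual variant would stack both hands’ positions and velocities into one state vector so a single filter models both arms. `demo` runs on synthetic data only.

```python
import numpy as np

def sigma_points(x, P, kappa=1.0):
    """Symmetric sigma points and weights for mean x and covariance P."""
    n = x.size
    L = np.linalg.cholesky((n + kappa) * P)   # columns of L are the spread vectors
    pts = np.vstack([x, x + L.T, x - L.T])    # shape (2n+1, n)
    w = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    w[0] = kappa / (n + kappa)
    return pts, w

def ukf_step(x, P, z, F, Q, h, R, kappa=1.0):
    """One update: linear state model F, nonlinear tuning model h (state -> rates)."""
    # Predict the next kinematic state with the state model.
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    # Push sigma points through the tuning model to predict firing rates.
    pts, w = sigma_points(x_pred, P_pred, kappa)
    Z = np.array([h(p) for p in pts])
    z_hat = w @ Z
    dZ, dX = Z - z_hat, pts - x_pred
    Pzz = (w[:, None] * dZ).T @ dZ + R        # predicted rate covariance
    Pxz = (w[:, None] * dX).T @ dZ            # state-rate cross-covariance
    K = np.linalg.solve(Pzz, Pxz.T).T         # Kalman gain (Pzz is symmetric)
    x_new = x_pred + K @ (z - z_hat)
    P_new = P_pred - K @ Pzz @ K.T
    return x_new, 0.5 * (P_new + P_new.T)     # keep P numerically symmetric

def demo(seed=0, n_units=30, steps=200, dt=0.1):
    """Decode a simulated circular hand path from synthetic tuned units;
    returns Pearson r between decoded and true x-position."""
    rng = np.random.default_rng(seed)
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt                    # position += velocity * dt
    b0 = rng.normal(5.0, 1.0, n_units)
    B = rng.normal(0.0, 1.0, (n_units, 4))
    c = rng.normal(0.0, 1.0, n_units)

    def h(s):                                 # hypothetical tuning model
        return b0 + B @ s + c * np.hypot(s[2], s[3])

    t = np.arange(steps) * dt
    truth = np.column_stack([np.cos(0.5 * t), np.sin(0.5 * t),
                             -0.5 * np.sin(0.5 * t), 0.5 * np.cos(0.5 * t)])
    Q = np.diag([1e-4, 1e-4, 1e-3, 1e-3])
    R = 0.09 * np.eye(n_units)
    x, P, est = np.zeros(4), np.eye(4), []
    for s in truth:
        z = h(s) + rng.normal(0.0, 0.3, n_units)
        x, P = ukf_step(x, P, z, F, Q, h, R)
        est.append(x[0])
    return np.corrcoef(truth[:, 0], est)[0, 1]
```

Because the state model here is linear, the prediction step uses the ordinary Kalman equations, and the unscented transform is needed only for the nonlinear tuning model.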

The complexity of neuronal representations of bimanual movements became apparent from the neuron dropping curves (8, 10). Neuron dropping curves describe how decoding accuracy, measured as the correlation coefficient r, deteriorates as neurons are removed (dropped) from the population used for decoding. They therefore show how neural ensemble size affects the ability to decode motor parameters. In the present study, this method clearly indicated that more neurons were needed to achieve the same decoding accuracy for each arm during the bimanual task than during the unimanual task (compare Fig. 5A with Fig. 5B). For example, to achieve a decoding accuracy of r = 0.5 with the 2D UKF, a mean of 80 neurons (drawn from the full population) was needed for unimanual hand control and 145 neurons for bimanual hand control, despite using the same 2D UKF for each hand. In each case, decoding accuracy r increased linearly with the logarithm of neuron count (Fig. 5A–D). Additionally, bimanual movements required a longer time to train the UKF than unimanual movements (Fig. S2A). Furthermore, individual neurons contributed more strongly to decoding one of the arms when movements were unimanual, but represented each arm more homogeneously during bimanual movements (Fig. S2B,C). Both distributions were shifted leftward relative to the null distribution (Fig. S2D), which was collected from the same recording session but during periods without task execution (p<0.05 for both right and left arms, Wilcoxon signed rank test). We obtained better bimanual prediction accuracy when the 2D UKF was trained on bimanual movements than when the same model was trained on unimanual movements of each arm separately (Fig. S2E, p<0.01). Similarly, training the UKF on bimanual movements yielded more accurate predictions for bimanual than for unimanual movements (Fig. S2F, p<0.01).
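A neuron dropping curve can be sketched as below. To keep the example short, an ordinary least-squares linear decoder stands in for the UKF (an assumption; the study’s curves were computed with the UKF). For each ensemble size, units are drawn at random without replacement. The decoder is fit on the first half of the samples and scored by Pearson r on the second half, and the mean r over draws forms the curve. `log_fit` then fits r = a + b·ln n. All function names are hypothetical.

```python
import numpy as np

def decode_r(rates, target, idx, split):
    """Fit a linear decoder (stand-in for the UKF) on samples [:split] using
    the units in idx, and return Pearson r on the held-out samples [split:]."""
    X = np.column_stack([np.ones(len(target)), rates[:, idx]])
    w = np.linalg.lstsq(X[:split], target[:split], rcond=None)[0]
    return np.corrcoef(X[split:] @ w, target[split:])[0, 1]

def dropping_curve(rates, target, sizes, n_draws=20, seed=0):
    """Mean held-out r for random ensembles of each size (rates: samples x units)."""
    rng = np.random.default_rng(seed)
    split = len(target) // 2
    curve = []
    for n in sizes:
        draws = [decode_r(rates, target, rng.choice(rates.shape[1], n, replace=False), split)
                 for _ in range(n_draws)]
        curve.append(np.mean(draws))
    return np.array(curve)

def log_fit(sizes, curve):
    """Least-squares fit of r = a + b*ln(n); returns (a, b)."""
    b, a = np.polyfit(np.log(sizes), curve, 1)
    return a, b
```

Given a fitted (a, b), the ensemble size needed for a criterion such as r = 0.5 can be read off as n = exp((0.5 − a)/b). Comparing that value between unimanual and bimanual fits is one way to express the difference between Fig. 5A and Fig. 5B.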

During bimanual hand control, the positions of the right and left arms were decoded from multi-area ensemble activity with high accuracy (r=0.85±0.02 and r=0.62±0.03, respectively; Fig. S4C). Looking at cortical areas separately, the best decoding was achieved with M1 neurons (n = 245; r = 0.73±0.03, average of the two arms’ r values). A smaller population of SMA neurons performed worse (n = 73; r = 0.22±0.02). Even so, SMA and the other areas clearly contributed to the overall predictions: the entire-ensemble dropping curve rose beyond the maximum M1 performance, and the individual area dropping curves rose steadily (Fig. 5A,B,D). Moreover, when UKF predictions were run for individual neurons and all neurons were ranked by the accuracy of these predictions, many non-M1 neurons received high rankings: of the 50 top-ranked neurons, 27 (54%) were from M1, 16 (32%) from SMA, 4 (8%) from PPC, and 3 (6%) from S1. Therefore, even though M1 neurons contributed the most to kinematic predictions, non-M1 areas such as SMA, PPC, and S1 also provided informative signals.
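The ranking analysis reduces to sorting units by their single-unit prediction accuracy and tallying the areas of the top entries. The sketch below assumes those per-unit r values have already been computed (each by running the decoder on that unit alone). `area_composition` is a hypothetical helper, not part of the study’s software.

```python
from collections import Counter

def area_composition(single_unit_r, areas, top_k=50):
    """Rank units by the accuracy (r) of predictions made from each unit alone
    and return {area: (count, percent)} for the top_k units."""
    order = sorted(range(len(single_unit_r)), key=lambda i: single_unit_r[i], reverse=True)
    top = order[:top_k]
    counts = Counter(areas[i] for i in top)
    return {area: (c, 100.0 * c / len(top)) for area, c in counts.most_common()}
```

With top_k = 50, counts of 27, 16, 4, and 3 correspond to the 54%, 32%, 8%, and 6% reported for M1, SMA, PPC, and S1.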

In addition to predictions of the coordinates of two hands, the distance between hands was represented with similar accuracy (Fig. 5C) when derived from the predictions of two hand positions made with the 4D UKF model.
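Deriving inter-hand distance from the 4D output is a direct Euclidean computation on the two predicted hand positions. The sketch assumes the 4D output is ordered as [xL, yL, xR, yR]. The text does not say how distance accuracy was scored, so it assumes Pearson r, matching the rest of this section. Both function names are hypothetical.

```python
import numpy as np

def inter_hand_distance(pos4):
    """pos4: (T, 4) array of [xL, yL, xR, yR] (assumed order);
    returns the (T,) Euclidean distance between the two hands."""
    pos4 = np.asarray(pos4, dtype=float)
    return np.hypot(pos4[:, 0] - pos4[:, 2], pos4[:, 1] - pos4[:, 3])

def distance_accuracy(pred4, true4):
    """Pearson r between predicted and actual inter-hand distance."""
    return np.corrcoef(inter_hand_distance(pred4), inter_hand_distance(true4))[0, 1]
```

Because distance is a nonlinear function of both hands’ coordinates, its accuracy need not equal that of either hand. Similar accuracy therefore suggests that the 4D model’s errors for the two hands did not compound strongly.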

Brain control of bimanual movements with UKF

After testing the 4D UKF on monkey C’s hand control data and finding that it consistently outperformed the 2D UKF (Figs. 5D, S3A), we chose this decoder for real-time BMI control. This decoder incorporated the kinematics of both arms in its state variables, allowing the algorithm to represent both arms jointly in a single model. Even with this improved decoder, switching from joystick control (Fig. S1E) to BC with arms resulted in a sharp drop in monkey C’s performance during the initial sessions, even though the monkey continued to operate the joysticks (Fig. 6C). However, over the next 24 sessions of BC with arms, monkey C’s proficiency improved substantially in both bimanual task performance (i.e., the percentage of trials in which both arms reached their targets within the maximal allowed trial duration; Fig. 6C) and individual arm performance (i.e., the percentage of trials in which a single arm reached its correct target within the same duration; Fig. 6D). Performance improved further after the decoder was upgraded from a 1st-order to a 5th-order UKF (Figs. 6C, S3B–D), which incorporated a more detailed history of prior neuronal activity into the decoder. By the end of BC with arms training, performance consistently exceeded 70% correct trials (Fig. 6C), including over 90% correct trials for each arm individually (Fig. 6D).
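The two performance measures defined above differ only in whether success is required of both arms or of one. A minimal sketch, assuming per-trial booleans that record whether each avatar arm reached its target within the maximal allowed trial duration (`performance` is a hypothetical name):

```python
import numpy as np

def performance(left_ok, right_ok):
    """left_ok, right_ok: one boolean per trial, True if that avatar arm reached
    its target within the maximal allowed trial duration.
    Returns bimanual and per-arm percent correct."""
    left_ok = np.asarray(left_ok, dtype=bool)
    right_ok = np.asarray(right_ok, dtype=bool)
    return {
        "bimanual": 100.0 * np.mean(left_ok & right_ok),  # both arms succeeded
        "left": 100.0 * np.mean(left_ok),
        "right": 100.0 * np.mean(right_ok),
    }
```

Because a bimanual success requires both arms to succeed, the bimanual rate can never exceed the lower of the two per-arm rates. If the two arms’ failures were independent, 90% correct per arm would give roughly 81% bimanual, which is why over 90% per arm is compatible with just over 70% bimanual.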

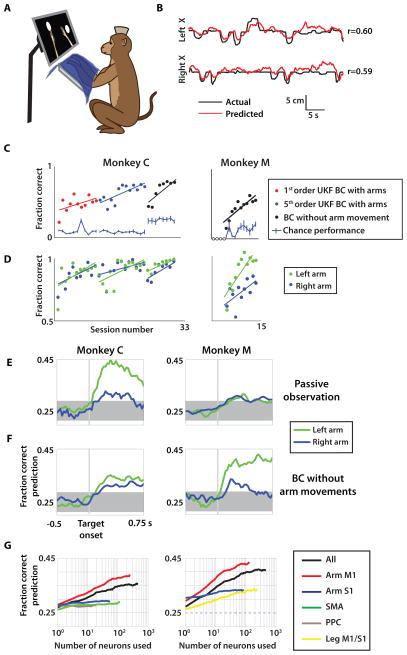

Fig. 6. Passive observation and brain control paradigms.

(A) A monkey was seated in front of a screen with both arms gently restrained and covered by an opaque material during passive observation and BC without arms experiments. (B) Actual left and right arm X-position (black) compared with predicted X-position (red) for passive observation sessions. Pearson’s correlation, r, is indicated. (C) Performance of monkey C (left) and monkey M (right) quantified as the fraction of correct trials. Shown separately for monkey C are different decoding model parameter settings (red, blue markers) as well as brain control without arm movement sessions (black, both monkeys). Sessions with fewer than 10 attempted trials were set to zero due to insufficient data (open circles). (D) Fraction of trials in which the left arm (green circles) and right arm (blue circles) acquired their respective targets during brain control. Linear fits for the learning trend of each paradigm are shown as in (C). (E–F) Fraction of correct k-NN predictions of target location for each arm (blue/green) over the trial period during passive observation (E) and brain control without arm movements (BC without arms) (F) in monkey C (left column) and monkey M (right column). (G) Mean k-NN target prediction fraction correct from neuron dropping curves, separated by cortical area, for each monkey (same columns as E–F). UKF, unscented Kalman filter.

We performed BC without arms experiments in both monkeys. These experiments were designed to match the practical needs of paralyzed people, who have to learn BMI control without being able to produce overt upper limb movements. To reach the same goal, previous single-effector BMI studies employed a co-adaptive decoding model with iteratively updated tuning properties (25), asked subjects to imagine movements (13, 26), or had them passively observe effector movements (27, 28). In our study, a passive observation paradigm provided the basis for training the BMI decoder without requiring the monkeys to produce overt arm movements. For these experiments, the monkeys passively observed the movements of the avatar while both of their arms were gently restrained (Fig. 6A). The screen displayed two avatar arms moving in center-out trajectories towards the targets. The trajectories were either replayed from a previous joystick control session (monkey C) or preprogrammed using estimates of kinematic parameters (monkey M). These bimanual passive observation movements were clearly reflected by cortical modulations (Fig. S4A–C). Neuronal modulations during passive observation do not appear to be explained by muscle activity (Fig. S5A–B). To explore these neuronal modulations, the 4D UKF was applied to extract the passively observed movements of the avatar hands from cortical activity (Fig. 6B). The accuracy of these extractions differed between the left and right avatar arms (monkey C: r=0.46±0.05 for the left avatar arm versus r=0.12±0.05 for the right; monkey M: r=0.47±0.03 versus r=0.23±0.02) (Fig. S4C) and fluctuated in time (r in the range of −0.29 to 0.64; Fig. S6). The running accuracies for the two arms were weakly positively correlated with each other (correlation coefficient of 0.25±0.12 in monkey C and 0.31±0.14 in monkey M, across all sessions; mean±s.e.m.), likely reflecting drifts in the overall level of attention to both arms.
One could further speculate that attention was occasionally distributed unevenly between the two avatar arms (e.g., the negative correlation of running accuracies during the interval 155–200 s in Fig. S6), but this issue will need more careful investigation in future studies using more precise eye-tracking methods.
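The running-accuracy comparison can be sketched as a sliding-window correlation followed by a correlation between the two arms’ running accuracies. The window length and the one-sample step are assumptions, since the text does not give the parameters behind Fig. S6. Both function names are hypothetical.

```python
import numpy as np

def running_r(pred, actual, win):
    """Pearson r between predicted and actual position in sliding windows of
    `win` samples, advanced one sample at a time."""
    pred, actual = np.asarray(pred, float), np.asarray(actual, float)
    return np.array([np.corrcoef(pred[i:i + win], actual[i:i + win])[0, 1]
                     for i in range(len(pred) - win + 1)])

def accuracy_coupling(pred_l, act_l, pred_r, act_r, win):
    """Correlation between left- and right-arm running accuracies; positive
    values mean decoding of the two arms improved and degraded together."""
    return np.corrcoef(running_r(pred_l, act_l, win), running_r(pred_r, act_r, win))[0, 1]
```

A shared factor that degrades both arms at once, such as a lapse in overall attention, pushes the coupling toward positive values. Attention shifting from one arm to the other would instead produce stretches of negative coupling.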

After the UKF was trained on 5–7 minutes of passive observation, the mode of operation was switched to BC without arms for 25–45 min (Movie S4). The monkeys controlled the avatar with their cortical activity while still fully arm-restrained. Both monkeys rapidly improved their performance for each arm in the bimanual brain control task within 5–10 sessions (monkey C rising from 43% to 79%; monkey M from 15% to 62%; Fig. 6C, black circles). Similar to the passive observation pattern (Figs. 6E–F, S4C), performance accuracy for the left and right arms was unequal during the BC without arms task (monkey C: left 98.5%, right 94.4%; monkey M: left 96.4%, right 77.7%; average over the last three BC without arms sessions, Fig. 6D). The first four sessions of BC without arms for monkey M (open circles, Fig. 6C) contained fewer than 10 trials that met the minimum attention threshold and were counted as zero accuracy. To compute a chance performance level for each mode of control, we performed a shuffled-target analysis (Fig. 6C). Cursor trajectories from each trial were replayed with peripheral target locations drawn from a randomly shuffled set of target combinations. Correct performance was defined as in online sessions: both center and peripheral targets had to be acquired within the 10 s timeout limit. Chance-level performance derived from the shuffle test was very low for BC with arms data (less than 10% correct trials, Fig. 6C) and slightly higher, but still far below monkey performance, for BC without arms (20–30% for monkey C, 10–20% for monkey M, Fig. 6C). For 20 of the 21 BC without arms sessions, monkey performance was significantly greater than chance (p<0.05, t-test), the lone exception being the second session for monkey M.
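A simplified sketch of the shuffled-target chance estimate follows. Each recorded trial’s pair of hand trajectories is re-scored against the target pair of another trial, chosen by a random permutation of the session’s target combinations. The success test here is a simplifying assumption: each hand must pass within a hypothetical `radius` of its target. It omits the hold times, the center-target requirement, and the 10 s timeout that the study applied, and it assumes trajectories are already truncated at the timeout.

```python
import numpy as np

def trial_correct(traj_l, traj_r, tgt_l, tgt_r, radius):
    """True if each hand's trajectory (T x 2) comes within `radius` of its target.
    Hold times and the center-target requirement are omitted in this sketch."""
    def hit(traj, tgt):
        return np.any(np.linalg.norm(np.asarray(traj) - tgt, axis=1) <= radius)
    return bool(hit(traj_l, tgt_l) and hit(traj_r, tgt_r))

def shuffled_chance(trials, radius, n_shuffles=100, seed=0):
    """trials: list of (traj_l, traj_r, tgt_l, tgt_r). Replays every trajectory
    pair against target pairs taken from a random permutation of the trials;
    returns the mean fraction correct across shuffles."""
    rng = np.random.default_rng(seed)
    pairs = [(t[2], t[3]) for t in trials]
    scores = []
    for _ in range(n_shuffles):
        perm = rng.permutation(len(trials))
        ok = [trial_correct(tr[0], tr[1], *pairs[j], radius) for tr, j in zip(trials, perm)]
        scores.append(np.mean(ok))
    return float(np.mean(scores))
```

Shuffling targets while keeping the recorded trajectories preserves the movement statistics of the session, so any above-chance performance reflects a match between movements and the targets actually shown. Chance can rise when trajectories wander widely, as they might during early BC without arms sessions, because a wandering hand may pass over the wrong target.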

To obtain further evidence that cortical modulations during brain control sessions reflected the goal of the bimanual task, we evaluated how cortical ensembles represented the location of the targets on each trial. It was essential that we decoded target position, rather than the position or velocity of the avatar arms, in this analysis. Unlike the position of the avatar arms, target locations were not algorithmically linked to neuronal activity during real-time BMI control. Therefore, this analysis was not confounded by the fact that neuronal tuning to arm kinematics was preset by the UKF tuning model; such a confound would have been a hindrance had we attempted to derive tuning from the relationship between movement direction and neuronal rates. Because target locations were neither a parameter nor an output of the UKF, their tracking by cortical activity reflected a representation of movement goals rather than an epiphenomenon of the real-time BMI. It should be noted that the UKF model may have influenced this analysis indirectly: it drove the avatar arms, and the position of the avatar arms likely contributed to this estimate of the neuronal representation of the targets. We used a k-nearest neighbor (k-NN) classifier to extract target locations from cortical modulations (Fig. 6E–G). To quantify the cortical representation of each target, which could potentially differ for neurons from different hemispheres and/or areas, we used two classifiers, one for each target. Both target locations were clearly reflected by cortical ensembles, starting with the appearance of the targets and continuing throughout the trial (Fig. 6E–G). Cortical activity represented the targets during both BC without arms sessions (Fig. 6F,G) and passive observation sessions (Fig. 6E). The accuracy of each representation was measured as fraction correct.
Using k-NN, the target location of the left arm was decoded more accurately than that of the right arm in both monkeys (Fig. 6F). This matches the behavioral results in Fig. 6D, which show better BC without arms performance with the left arm in both monkeys. Despite this difference, both right and left target locations could be decoded at significant levels within the same epoch following target appearance. This dual representation persisted through the reaction time and movement epoch of a typical trial (Fig. 6E–F).
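A minimal k-NN target decoder in the spirit of this analysis is sketched below. Several details are assumptions not given in the text: each trial is one vector of binned firing rates from a single time window, each arm’s target location is coded 0–3, and the sketch uses k = 5, Euclidean distance, and leave-one-out cross-validation. Running it once per arm mirrors the two-classifier design. Running it once per time bin would give accuracy-over-time curves like those in Fig. 6E–F.

```python
import numpy as np

def knn_predict(train_X, train_y, test_X, k=5):
    """Majority vote among the k nearest training trials (Euclidean distance);
    ties go to the smallest label."""
    d = np.linalg.norm(test_X[:, None, :] - train_X[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = train_y[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])

def loo_fraction_correct(X, y, k=5):
    """Leave-one-out fraction correct for one arm's target labels (ints 0-3).
    X: trials x units firing-rate features."""
    hits = 0
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        hits += int(knn_predict(X[keep], y[keep], X[i:i + 1], k)[0] == y[i])
    return hits / len(y)
```

With four equally likely target locations per arm, chance is 0.25, which is the reference level used for the fraction-correct values in this section.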

We next assessed the effect of the number of recorded neurons, and the relative contributions of cortical areas, on k-NN decoding of target position. As in Fig. 6F, k-NN decoded left and right target locations during the BC without arms task. The mean prediction accuracy for both arms improved approximately linearly with the logarithm of neuronal ensemble size (e.g., for monkey M, M1 ensembles: fraction correct=0.34 for n=5, fraction correct=0.37 for n=10, and fraction correct=0.43 for n=100, with chance level 0.25; Fig. 6G, right). This followed the same trend observed for predictions of arm kinematics (Fig. 5A–D). For monkey M, in which microelectrodes were implanted in both the leg and arm areas of M1, the targets were better represented by neurons in the arm area (fraction correct: 0.43±0.02, n=100) than by neurons in the leg area (fraction correct: 0.31±0.03, n=100; p<0.01). Yet neurons in the leg area of M1 also contributed to predicting target location: a population of about 100 leg-area M1 neurons rivaled the accuracy of 100 neurons in the S1 arm region.
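As a worked check of the log-linear trend, the three monkey M values quoted above can be fit by least squares against log10 of ensemble size. The fit gives a gain of roughly 0.067 in fraction correct per tenfold increase in neurons, with every residual under 0.01. Only these three reported points are used; the full curves in Fig. 6G are not reproduced.

```python
import numpy as np

# Reported fraction correct, monkey M, M1 ensembles (chance level 0.25).
n_units = np.array([5, 10, 100])
frac_correct = np.array([0.34, 0.37, 0.43])

# Least-squares line: frac_correct ~ intercept + slope * log10(n_units).
slope, intercept = np.polyfit(np.log10(n_units), frac_correct, 1)
residuals = frac_correct - (intercept + slope * np.log10(n_units))
print(f"gain per tenfold increase: {slope:.3f}; max |residual|: {np.abs(residuals).max():.3f}")
```

Using log10 rather than the natural log makes the slope directly readable as the gain per tenfold increase in ensemble size.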

Neuronal plasticity during brain control without arms training

In parallel with each monkey’s learning of the BC without arms task, we observed plastic changes in the firing patterns of cortical ensembles. These changes were particularly clear in the functional reorganization of the cortical representation of the avatar during the passive observation task, which was measured at the beginning of each session of BC without arms (Fig. 7A–B). The decoding accuracy of passively observed avatar kinematics (measured as prediction r) was clearly enhanced as the training progressed.

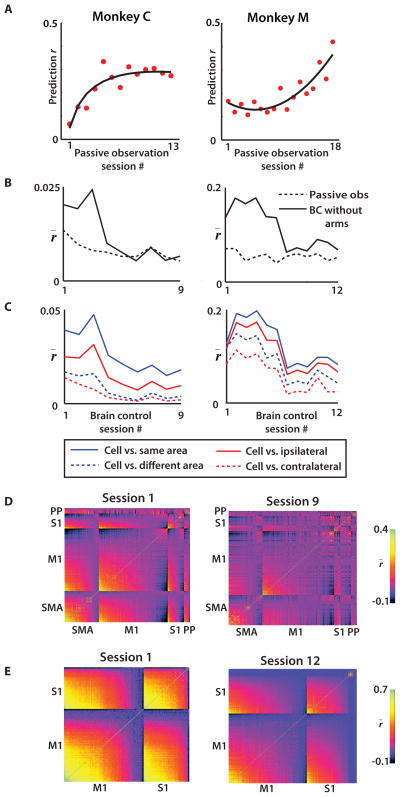

Fig. 7. Cortical plasticity during passive observation and brain control without arm movement experiments.

(A) UKF prediction performance r over time using passive observation data from the beginning of each session. (B) Mean correlation r of neural firing among recorded neuronal populations throughout the passive observation and brain control without arm movements (BC without arms) epochs of training sessions. (C) Mean inter- and intra-hemispheric (red) and inter- and intra-area (blue) correlation r̄ vs. session. (D) Pairwise neuron-to-neuron correlations (pixel color) on the first (left) and last (right) day of BC without arms training for monkey C. Within each panel, neurons are sorted by cortical area and mean correlation strength. (E) Same as (D), except for monkey M. (A–C) Left column: monkey C, right column: monkey M. UKF, unscented Kalman filter; SMA, supplementary motor area; PP, posterior parietal cortex.

Furthermore, we observed a gradual reduction (p<0.01; ANOVA) in firing-rate correlations among cortical neurons as the animals trained in the BC without arms task (Fig. 7B–E). During early BC without arms sessions, correlations between neurons were 1.7–2.2 times greater than during passive observation periods tested on the same day. Over the next few days, however, these cortical correlations decreased until they reached the same level as during passive observation (Fig. 7B). During this reduction, correlations between neurons from the same hemisphere (solid red line, Fig. 7C) and the same cortical area (solid blue line) remained higher than correlations between neurons from different hemispheres or areas (dashed lines). An ANOVA showed that both area and hemispheric relationships were factors related to the decrease in correlation (p<0.01). In both monkeys, cross-hemisphere correlations decreased proportionally more than within-hemisphere correlations during BC without arms learning (monkey C: 85.2% reduction across hemispheres vs. 54.0% within hemisphere; monkey M: 56.6% across hemispheres vs. 36.1% within hemisphere). Similarly, correlations between cortical areas (same hemisphere) decreased more than those within an area (monkey C: 76.6% reduction between cortical areas versus 54.0% within cortical area; monkey M: 53.7% reduction between cortical areas versus 29.9% within cortical area).
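The within- versus across-group comparison can be sketched as follows. The sketch assumes binned firing rates (time bins × units) and one group label per unit, either hemisphere or cortical area. It averages signed Pearson r over all distinct unit pairs in each category. Percent reduction is taken as the drop from the first to the last session relative to the first. The bin size, the averaging of signed rather than absolute r, and the function names are assumptions.

```python
import numpy as np

def grouped_mean_corr(rates, labels):
    """rates: (T, N) binned firing rates; labels: length-N group labels
    (e.g. hemisphere or area). Returns (mean r within groups,
    mean r across groups) over all distinct unit pairs."""
    C = np.corrcoef(rates, rowvar=False)          # N x N unit-by-unit correlations
    labels = np.asarray(labels)
    iu = np.triu_indices(len(labels), k=1)        # each unordered pair once
    same = labels[iu[0]] == labels[iu[1]]
    vals = C[iu]
    return vals[same].mean(), vals[~same].mean()

def percent_reduction(first, last):
    """Relative drop from the first to the last session, in percent."""
    return 100.0 * (first - last) / first
```

Expressing the change as a percentage of each category’s own starting level is what allows the across-hemisphere decrease to be compared with the within-hemisphere decrease, even though the two categories start at different absolute correlations.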

Discussion

Our findings suggest that BMI technology can be applied to the challenging task of enabling bimanual control in subjects who do not produce overt arm movements. The current study enhances the degree of sophistication of an upper limb BMI by enabling simultaneous control of two avatar arms. This was achieved by introducing a bimanual decoding/training paradigm – one that takes full advantage of large-scale simultaneous bi-hemispheric chronic cortical recordings of up to 500 neurons, a virtual reality training environment, an optimal bimanual decoding algorithm, and the recently discovered (22) phenomenon of visually-driven cross-modal cortical plasticity.

From our earlier studies, we have argued that BMIs provide important insights into the physiological principles that govern the function of brain circuits (3). In this context, the present study tested whether these principles apply to bimanual motor control. To examine the role of neural ensembles in bimanual control, we used simultaneous, very large-scale recordings from fronto-parietal cortical ensembles (2, 3) and extracted bimanual motor parameters from them. Neuron dropping curves constructed from both manual control data (Fig. 5A–D) and real-time BC without arms session data (Fig. 6G) indicate that the accuracy of neural decoding steadily and linearly improves with the logarithm of neuronal ensemble size. With our distributed multi-site recording approach, we demonstrated that bimanual movements are represented in several fronto-parietal cortical areas. This result is consistent with previous neurophysiological studies of bimanual motor control (15–19). In our data, M1 made the largest contribution to decoding, likely because it contained a higher proportion of task-related neurons. Supplementing the M1 ensemble (red line in Fig. 5A–D) with neuronal data from the other areas (black line) further improved the decoding. Furthermore, non-M1 areas such as PPC (29) and PMd (8, 10, 30) are known to be reliable sources for BMI decoding, with a role that overlaps with, but is often distinct from, M1 output (31–33). This is especially true of SMA, which is known to be involved in bimanual coordination (17, 19).

Our results support a highly distributed representation of bimanual movements by cortical ensembles, with individual neurons and neural populations representing both avatar arms simultaneously (17–19). Most neurons recorded in this study contributed to the predictions of kinematics of each arm (Fig. S2B–D). Moreover, during online BMI control of bimanual movements such multiplexing of the kinematics of both arms by individual neurons became even more prominent (Fig. S2C).

It should be emphasized that the distributed cortical representation of bimanual movements could not be described as a linear superposition of the representations of unimanual movements. Most clearly in SMA and M1 (Fig. 3), but also in S1 and PPC (Fig. 4), the activity of individual neurons and neural populations during bimanual tasks was not a weighted sum of the unimanual patterns recorded separately for the right and left arms. These observations point to the existence of a separate, bimanual network state in which modulations represent the movements of both arms simultaneously through non-linear transformations of the separate neural tuning profiles of each arm (Fig. 3A–D). At this point, we can only speculate about the function of this nonlinearity. From one moment to the next, the two arms need to be able to switch between unimanual and bimanual functionality. During unimanual control, it is important that the motor drive to the non-working arm is inhibited. Conversely, during two-arm behaviors, it becomes important that a motor program in one arm does not interfere with the other arm or evoke unwanted synergies in both arms, but rather permits a degree of independence. Nonlinear phenomena have also been reported at the behavioral level in bimanual motor control studies, which showed that motor systems can favor stable (inter-limb coordination, nonlinear) over less stable (inter-limb independence, linear) motor programs in a task-dependent way (34–36).
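One way to make the superposition question concrete is to regress a neuron's bimanual PETH on its two unimanual PETHs and ask how much variance a weighted sum explains. The sketch below is illustrative only: the least-squares fit with an intercept, the use of R² as the criterion, the function name, and the synthetic PETHs are our assumptions, not the analysis behind Figs. 3 and 4.

```python
import numpy as np

def superposition_r2(bi, uni_left, uni_right):
    """Fit bi ≈ wL*uni_left + wR*uni_right + c by least squares; return (weights, R^2)."""
    bi = np.asarray(bi, dtype=float)
    X = np.column_stack([uni_left, uni_right, np.ones_like(bi)])
    w, *_ = np.linalg.lstsq(X, bi, rcond=None)
    resid = bi - X @ w
    r2 = 1.0 - np.sum(resid ** 2) / np.sum((bi - bi.mean()) ** 2)
    return w, r2

# Hypothetical PETHs on a time axis aligned to target onset (s)
t = np.linspace(-0.5, 1.0, 31)
left = np.exp(-((t - 0.3) / 0.1) ** 2)      # unimanual-left response
right = np.exp(-((t - 0.4) / 0.15) ** 2)    # unimanual-right response
additive_bi = 0.6 * left + 0.8 * right      # a purely additive bimanual response
# A response present only when both unimanual responses are strong (AND-like interaction)
gated_bi = ((left > 0.5) & (right > 0.5)).astype(float)
```

An additive response is fit almost perfectly, whereas an interaction such as the gated example leaves substantial unexplained variance; a low R² is the kind of signature that argues against simple superposition.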

Overall, our findings support the notion that very large neuronal ensembles, not single neurons, define the true physiological module underlying normal motor functionality (2, 3, 10, 14, 37). This suggests that small cortical neuronal samples (9, 25, 38, 39) may be insufficiently informative to control complex motor behaviors using a BMI, although BMIs with few neurons could be useful testbeds for experimentation with less challenging motor tasks. Although we did not attempt bimanual control with just a few neurons in this study, we probed small ensembles indirectly with a neuron dropping analysis, using a k-NN classifier to estimate the contribution of ensemble size to target representation during BC without arms (Fig. 6E–G). In this analysis, larger ensembles consistently performed better.

Our study provides new insights into the plasticity of cortical ensembles. Previously, we demonstrated that cortical ensembles can undergo substantial plasticity during learning of BMI tasks (10). We even observed the emergence of visually evoked responses in S1 and M1 when the monkeys attended to virtual avatar arms (14, 22). Here, we observed improvements in performance as the monkeys enacted real-time BMI control of bimanual movements. These improvements were accompanied by a steady decline of correlated neural activity throughout the four recorded cortical areas and in both hemispheres (Fig. 7B–E). Previous work (3, 40, 41) has shown that cortical modulations and inter-neuronal correlations initially increase during BMI operation, changes that have been suggested to play a role in the initial learning of unfamiliar tasks. Additionally, we have reported a decrease in the variance of neuronal modulations during brain control learning (40). Still, the function of the decrease in neuronal correlations observed in our study and others (42, 43) remains to be fully understood. The simplest interpretation of this result is that correlated activity benefits early learning, whereas independent firing rates sustain later stages of learning, when independent control of both arms is acquired. Notably, these changes were observed in multiple fronto-parietal structures across both cortical hemispheres.

Previously, we reported elevated correlated activity between neurons (3) and increased neuronal modulations unrelated to movement kinematics (40) during early sessions of unimanual BC without arms. A similar change in neural correlations was reported in human EEG studies in which inter-hemispheric EEG coherence decreased during bimanual task learning (42, 43). Thus, our monkey data indicate that inter-area and inter-hemispheric correlations may transiently increase during the initial learning phase and then decrease after subjects perfected bimanual motor behavior.

Our findings demonstrate a BMI that controls movements of two limbs in real-time, utilizing neuronal ensemble data recorded from both cerebral hemispheres. Our data may contribute to the development of future clinical neuroprosthetics systems aimed at restoring bimanual motor behaviors. A key observation is that the inclusion of two limbs within a single BMI decoding paradigm produced the best predictions and that this approach demonstrated that both arms could be controlled independently. The importance of bimanual movements in our everyday activities and specialized skills cannot be overestimated (44). Future clinical applications of BMIs aimed at restoring mobility in paralyzed patients will benefit greatly from the incorporation of multiple limbs. It still remains to be tested how well BMIs would control motor activities requiring precise inter-limb coordination. From this demonstration of BMI control over independent movements in two arms, it is clear that performance would benefit from the inclusion of large populations of neurons and multiple areas in both hemispheres.

Another key finding from the current study is that our bimanual BMI allowed four degrees of freedom to be decoded across two limbs. In a practical sense, our results suggest that to reproduce complex body movements using BMI control, the contributions of separate but functionally related body parts should be modeled jointly, not separately. Future studies will have to clarify such conjoint representation for a broader range of bimanual movements.

Another feature of our study with implications for neuroprosthetics was the utilization of a virtual environment within which a subject would control realistic avatar arms both during joystick control and brain control tasks. Decoding arm movements from neural activity of both monkeys proved robust and persistent across many sessions, even when subjects passively observed avatar arm movements (Fig. 7A). In future clinical BMI applications, particularly in those involving patients suffering from devastating levels of paralysis, the employment of realistic, intuitive virtual limb effectors may become a critical component of the BMI training. In upper limb control, arm-centric spatial reference frames play a pivotal role in goal-oriented movements (45, 46) and may provide a basis for the perception of body schema (47). Even when the arm movements were simply observed, sensorimotor (14) and premotor (47, 48) neurons in macaques have been shown to encode limb kinematics. Within a BMI context, providing the subject with virtual arms, instead of cursor circles, could tap into the existing, arm-centric biological framework (45–49) and enhance the process of experience-dependent plasticity, which is believed to underlie the mechanism through which subjects learn to operate a BMI. Preliminary results from our laboratory (Fig. 2) provide an early indication that this difference (cursor vs. avatar arm) is evident to macaques. Therefore, it could be further exploited both in behavioral and brain-control research paradigms aimed at enhancing the user’s experience as they learn to operate a BMI.

Overall, our study demonstrated for the first time that large-scale cortical recordings can enable bimanual BMI operation in primates, a type of operation that advanced clinical neural prostheses will employ in the future.

Materials and Methods

Study design

The objective of the study was to elucidate key differences in cortical control between unimanual and bimanual movements and to implement a BMI paradigm based on real-time decoding of large-scale, multi-area cortical recordings to produce control of two virtual arms simultaneously. Furthermore, a BMI strategy was sought that required no movements of the subjects’ own arms during the execution of bimanual movements. The study’s design conformed to the conventional requirements for neurophysiological studies in nonhuman primates. All studies were conducted with approved protocols from Duke University Institutional Animal Care and Use Committee and were in accordance with NIH Guidelines for the Use of Laboratory Animals. Two rhesus monkeys were used, and each was recorded for more than 19 days. The major findings were replicated in both monkeys for multiple experimental sessions. Statistical analyses of the data involved parametric and nonparametric tests. ANOVA tests were used to analyze the influence of multiple factors, followed by the appropriate post-hoc comparisons.

The first monkey, Monkey C (female; 6.2 kg), was overtrained for 12 months on unimanual and bimanual center-out reaching tasks prior to the implantation surgery and the experiments in the present study. The second monkey (Monkey M; male; 10.6 kg) had been extensively trained prior to this study on a unimanual joystick task performed with the left arm, but was never introduced to the bimanual joystick control task. Monkey C performed bimanual joystick experiments until it exceeded 95% correct trials on consecutive sessions. Monkey M was intentionally kept naïve to the bimanual BMI task prior to the passive observation experiments. Monkey C next began BC with arms experiments, which continued for 24 sessions, by which point we observed consistent performance exceeding 75% correct. Both monkeys participated in four experiments that consisted exclusively of passive observation of bimanual avatar movements. For all passive observation experiments, both arms were fully restrained. For the final passive observation session of each monkey, EMG recordings of both arms were obtained. Next, both monkeys participated in the BC without arm movement experiments. Monkey C reached proficiency after 9 sessions and monkey M after 15 sessions. Brain control experiments with fewer than 10 trials in which the monkey attended to the screen for at least 90% of the trial were designated as null performance and excluded from subsequent analyses.

Task design

The avatar had been previously developed by our research group (14) and used for reaching movements by assigning joystick or BMI output to the position of the center of each hand (near the base of the middle finger). This hand location was also used as the reference point to indicate whether the hand was inside/outside a target.

Bimanual joystick control trials began with the monkey moving the right and left spring-loaded joysticks so that the right and left avatar hands were placed inside the right and left center targets, respectively. The center targets were squares with 8 cm sides located in the center of the right and left halves of the screen. Both hands then had to remain inside these center targets simultaneously for a hold interval drawn from a uniform distribution between 400 and 1000 ms. After this hold, the center targets disappeared and two reach targets appeared, one on each side of the screen. The objective was to reach with both hands to their respective targets. The nearest edge of each peripheral target appeared at a fixed distance of 8 cm to the left of, to the right of, above, or below the center target. The peripheral targets were 8 cm in diameter during joystick control and BC with arms and 10 cm in diameter during BC without arms experiments. With 4 potential locations per arm, there were 16 potential left/right combinations, each equally likely and assigned randomly. Once both targets were entered and simultaneously held for 100 ms, the monkey received a juice reward.
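The trial structure lends itself to a compact sketch: the 16 equiprobable left/right target pairs and the uniformly drawn center-hold interval. The direction labels and function names are hypothetical, and target sizes and distances are left out.

```python
import itertools
import random

# Peripheral target offsets relative to each hand's center target (hypothetical labels)
DIRECTIONS = ("left", "right", "up", "down")

def all_target_combinations():
    """All (left-hand target, right-hand target) direction pairs: 4 x 4 = 16."""
    return list(itertools.product(DIRECTIONS, repeat=2))

def draw_trial(rng: random.Random):
    """Draw one bimanual trial: center hold time (ms) and an equiprobable target pair."""
    hold_ms = rng.uniform(400.0, 1000.0)   # uniform center-hold interval
    left_dir, right_dir = rng.choice(all_target_combinations())
    return hold_ms, left_dir, right_dir

rng = random.Random(0)
trials = [draw_trial(rng) for _ in range(5)]
```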

In two recording sessions, joystick control performance included unimanual left, unimanual right, and bimanual trials. During this paradigm, the first 150 trials were bimanual, then 150 unimanual left, then 150 unimanual right, then 100–200 bimanual trials or until the monkey voluntarily declined to continue the task. During unimanual trials, only the single working arm was displayed on the computer screen for the monkey. The avatar arm and targets continued to appear in the same locations, on the corresponding right or left half of the screen. The door on the primate chair for the non-working arm was closed such that only the working arm could reach and manipulate the joystick.

Passive observation experiment

During passive observation experiments, the monkey was seated in a customized chair that immobilized both arms and allowed minimal movement of the lower limbs. The arms were restrained on a foam-padded shelf fixed at a comfortable and natural angle in front of the monkey (Fig. 6A). The pronated arms were fastened to the shelf with secure, foam-padded adjustable straps. Both monkey C and monkey M were used in this experimental paradigm, although the movements they observed differed slightly. Monkey C observed replayed arm movements from its previous bimanual center-out joystick control session. Monkey M had no prior joystick control sessions on this task and instead observed movements of the avatar limbs along ideal trajectories. These automated movements were generated with realistic distributions of reaction times and peak velocities, as well as realistic acceleration profiles. We obtained the distribution of monkey M’s typical reaction times for each arm from prior unimanual data. Each automated passive observation movement was initiated after a reaction time drawn from a distribution with the same mean and variance as the monkey’s own reaction time distribution. The same approach was used to obtain realistic mean reach velocities from prior unimanual training. Acceleration and deceleration periods at the beginning and end of the reach were added to make the generated movement look natural and smooth. Passive observation trials followed the same task sequence as the bimanual center-out joystick control task. The monkey was rewarded when both avatar arms moved into and held their corresponding peripheral targets. In addition to these target-based rewards, a smaller quantity of juice was dispensed at random intervals of 2 to 8 seconds to encourage the monkey to look at the screen throughout the trial.
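The automated movements shown to monkey M can be sketched in Python. The text specifies only that reaction times matched the mean and variance of the monkey's own distribution, that mean reach velocities came from prior unimanual data, and that smooth acceleration and deceleration phases were added. The Gaussian reaction-time draw, the minimum-jerk position profile used for the smooth phases, and all names and example values are our assumptions, not the laboratory's actual generator.

```python
import random

def min_jerk(s: float) -> float:
    """Normalized minimum-jerk profile: 0 at s=0, 1 at s=1, zero velocity at both ends."""
    return 10 * s**3 - 15 * s**4 + 6 * s**5

def automated_reach(start, end, rt_mean, rt_sd, mean_speed, dt, rng):
    """Return (reaction_time, list of (x, y) samples) for one synthetic avatar reach.

    rt_mean, rt_sd (s) and mean_speed (cm/s) would come from the monkey's unimanual data.
    """
    rt = max(0.0, rng.gauss(rt_mean, rt_sd))      # assumed Gaussian reaction time
    dx, dy = end[0] - start[0], end[1] - start[1]
    duration = (dx**2 + dy**2) ** 0.5 / mean_speed  # mean speed = distance / duration
    n_wait = int(round(rt / dt))
    n_move = max(1, int(round(duration / dt)))
    path = [start] * n_wait                         # hand waits at the center during the RT
    for k in range(n_move + 1):
        s = min_jerk(k / n_move)
        path.append((start[0] + s * dx, start[1] + s * dy))
    return rt, path

def random_reward_interval(rng):
    """Interval (s) before the next small, attention-maintaining juice reward."""
    return rng.uniform(2.0, 8.0)

rng = random.Random(1)
rt, path = automated_reach((0.0, 0.0), (12.0, 0.0), 0.3, 0.05, 30.0, 0.01, rng)
```

With this profile the peak speed is 1.875 times the mean speed, so matching the mean speed also fixes the peak; the original generator may have used a different smooth shape.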

To track the monkeys’ attention to the task during non-joystick experiments, we implemented an eye tracking system. A single camera above the display screen recorded the monkey. A modified version of the TLD tracking algorithm (50) was used to track eye position in real time and to identify periods of the experiment when the monkey did not attend to the screen. In addition, persistent attention to the screen was required to receive juice rewards throughout the experiment. For offline analysis, trials were separated into attended and unattended trials; a trial was considered attended if the monkey’s eyes were directed at the screen for at least 90% of the trial epoch.
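A minimal sketch of the 90% attention criterion, assuming the eye tracker yields one on-screen/off-screen flag per video frame of the trial epoch (the tracker's actual output format is not described here; function names are hypothetical):

```python
def is_attended(on_screen_flags, threshold=0.90):
    """True if the eyes were on the screen for at least `threshold` of the trial's frames."""
    if not on_screen_flags:
        return False
    return sum(bool(f) for f in on_screen_flags) / len(on_screen_flags) >= threshold

def split_trials(trials, threshold=0.90):
    """Partition per-trial flag lists into (attended, unattended) trial indices."""
    attended, unattended = [], []
    for i, flags in enumerate(trials):
        (attended if is_attended(flags, threshold) else unattended).append(i)
    return attended, unattended
```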

Both monkey C and monkey M performed four 20–30 minute passive observation sessions. Following these experiments, passive observation was used on a daily basis for 5–7 minutes at the beginning of each experiment to train the decoding model. For both monkeys, the fourth passive observation session was identical to the prior three except for EMG electrodes placed on the forearm flexor and extensor, biceps, and triceps of each arm.

Brain control experiment

The first phase of brain control experiments is called brain control with arms (BC with arms) and was completed by monkey C immediately following bimanual joystick sessions. The decoding model for BC with arms was trained on data collected during 5–7 minutes of joystick control trials at the beginning of the experiment. Next, the model was fit and movements of the avatar right and left arm were under the control of the decoding algorithm. The arms remained unrestrained and the hands continued to manipulate the joysticks. Monkey C performed 24 BC with arms sessions within a span of 7 weeks. The first 11 BC with arms sessions used different model parameters than the final 13 BC with arms sessions (see Neural Decoding section).

The latter phase of brain control, brain control without arms (BC without arms), began after both monkey C and monkey M completed the four passive observation-only experiments. Monkey M began BC without arms immediately following session 4, whereas monkey C began approximately 4 weeks after day 1 of passive observation. During BC without arms, the monkeys’ arms were restrained in the same way as for passive observation. The decoding model for BC without arms was retrained each session with 5–7 minutes of passive observation trials. The observed movements in this initial 5–7 min training window were generated in the same way as those in passive observation-only experiments. Next, the model was fit and the movements of the avatar were controlled by the decoding algorithm. The major difference between BC without arms and BC with arms was that during the brain control phase, the arms were completely restrained and covered.

Surgery and electrode implantation

Monkey C was implanted approximately 3 months prior to the beginning of the experiments of this study. Eight 96-channel multielectrode microwire arrays (768 total channels) were implanted into bilateral SMA, M1, S1, PMd, and PPC using previously described surgical methods (20, 51). Electrode arrays were organized as 4×10 grids, with each shaft composed of 2 or 3 polyimide-insulated stainless steel microwire electrodes with exposed tips. Within the same shaft, three microwires were staggered by 400 μm in depth. Lateral spacing between shafts was 1 mm center-to-center. Microelectrodes were lowered using independent microdrives such that the deepest microwire was 2 mm below the cortical surface. Because of the recording system’s limitations at the time, only 384 of the 768 channels could be recorded simultaneously. The PMd arrays yielded a low number of quality units and therefore were not included in the 384 selected channels.

Monkey M was implanted 42 months prior to the beginning of the experiments of this study. Arm and leg M1 and S1 were implanted in both hemispheres for a total of eight areas, each with 48 electrodes. During experiments, all 384 channels were recorded; however, only arm M1/S1 channels were used for both online and offline predictions. Each 48-channel implant consisted of a 4×4 grid with 3 electrodes per cannula staggered by 400 μm.

Neurophysiological analysis of joystick control trials

Modulations in cortical neuronal activity were analyzed using peri-event time histograms (PETHs). Spike timestamps for each neuron were first put into 50 ms bins, and the activity of each neuron was normalized by subtracting the mean bin count and dividing by the standard deviation, i.e., converted to a z-score. This normalization expresses each neuron’s modulations in units of its own overall variability. Single-neuron PETHs were computed in terms of firing rate (Hz); however, population analyses used the normalized mean firing rate to facilitate comparison between neurons with different baseline firing rates. After the normalized mean firing rate was computed for each neuron, event-related modulations were analyzed by constructing PETHs. Movement-related modulations were computed as the difference between the normalized mean firing rate during the typical movement epoch (150–600 ms post-stimulus) and the normalized mean firing rate during the baseline epoch (600–100 ms pre-stimulus). This represents a difference in z-scores and is referred to as Δz in the analysis of this study. We computed Δz on a single-trial basis and fit multiple linear regression models to compute parameters of directional tuning:

$$\Delta z = A\,L_x + B\,L_y + C\,R_x + D\,R_y \qquad (1)$$

where (L_x, L_y) and (R_x, R_y) are the (x, y) positions of the left and right targets on each trial. Coefficients A, B, C, and D were fit for each neuron by regression. The preferred direction of each hand for each neuron was computed from the vectors (A, B) (left hand) and (C, D) (right hand).
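The directional-tuning regression of Eq. 1 and the preferred-direction calculation can be sketched with NumPy least squares. We assume preferred direction is the angle of (A, B) for the left hand and of (C, D) for the right hand, measured counterclockwise from the +x axis; the function name and the unit-vector target coordinates in the example are hypothetical.

```python
import numpy as np

def fit_directional_tuning(dz, left_xy, right_xy):
    """Least-squares fit of dz = A*Lx + B*Ly + C*Rx + D*Ry across trials.

    dz: (trials,) single-trial modulation; left_xy, right_xy: (trials, 2) target positions.
    Returns the coefficients and each hand's preferred direction in degrees [0, 360).
    """
    X = np.column_stack([left_xy, right_xy])        # columns Lx, Ly, Rx, Ry
    (A, B, C, D), *_ = np.linalg.lstsq(X, dz, rcond=None)
    pd_left = np.degrees(np.arctan2(B, A)) % 360.0
    pd_right = np.degrees(np.arctan2(D, C)) % 360.0
    return (A, B, C, D), pd_left, pd_right

# Hypothetical example: unit-vector targets, all 16 left/right combinations
dirs = np.array([[1, 0], [-1, 0], [0, 1], [0, -1]], dtype=float)
left = np.repeat(dirs, 4, axis=0)
right = np.tile(dirs, (4, 1))
dz = 1.0 * left[:, 0] + 2.0 * right[:, 1]           # tuned rightward (left hand), upward (right hand)
coefs, pd_l, pd_r = fit_directional_tuning(dz, left, right)
```

Because every direction appears equally often across the 16 combinations, each regressor has zero mean, so omitting an intercept does not bias A to D in this balanced design.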

A second metric based on Δz was used to evaluate depth of directional tuning. A mean Δz was computed for each neuron, for each direction. Tuning depth was measured as the standard deviation of the mean Δz distribution across different directions. Overall movement-related modulations were estimated as the mean of Δz absolute value.
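Tuning depth and overall modulation, as defined above, in a short sketch. We assume the population standard deviation (NumPy's default, ddof=0), since the text does not specify the estimator; function names are hypothetical.

```python
import numpy as np

def tuning_depth(dz, directions):
    """SD across directions of the per-direction mean Δz."""
    dz = np.asarray(dz, dtype=float)
    directions = np.asarray(directions)
    means = [dz[directions == d].mean() for d in np.unique(directions)]
    return float(np.std(means))

def overall_modulation(dz):
    """Mean absolute single-trial Δz."""
    return float(np.mean(np.abs(dz)))
```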

For comparisons between unimanual and bimanual modulation, all data were collected within the same session. The bimanual and unimanual PETHs shown in Fig. 3 represent neural activity normalized by the same mean and standard deviation from that single session. For unimanual trials, Eq. 1 was modified to compute only the coefficients reflecting modulations of the working arm.
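The single-trial Δz computation described in this section can be sketched as follows. The sketch assumes spike timestamps in seconds, normalizes each neuron with the mean and SD of all 50 ms bins in the session (so unimanual and bimanual trials from one session share the same normalization), and averages z over bins lying entirely within the movement (150–600 ms) and baseline (600–100 ms before onset) windows. Function names are hypothetical.

```python
import numpy as np

BIN = 0.05  # 50 ms bins

def zscored_bins(spike_times, t_end, bin_size=BIN):
    """Bin one neuron's spikes over the whole session and z-score the bin counts."""
    edges = np.arange(0.0, t_end + bin_size, bin_size)
    counts, _ = np.histogram(spike_times, bins=edges)
    z = (counts - counts.mean()) / counts.std()
    return edges[:-1], z  # bin start times and z-scored counts

def window_mean(starts, z, t0, t1, bin_size=BIN, eps=1e-9):
    """Mean z over bins lying entirely inside [t0, t1] (eps absorbs float rounding)."""
    inside = (starts >= t0 - eps) & (starts + bin_size <= t1 + eps)
    return z[inside].mean()

def delta_z(spike_times, event_times, t_end):
    """Single-trial Δz: movement epoch (150–600 ms) minus baseline (600–100 ms before)."""
    starts, z = zscored_bins(spike_times, t_end)
    return np.array([
        window_mean(starts, z, ev + 0.15, ev + 0.60) - window_mean(starts, z, ev - 0.60, ev - 0.10)
        for ev in event_times
    ])
```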

Neurophysiological analysis of passive observation and brain control

Similar analyses were also applied to passive observation and brain control experiment data. First, PETHs aligned on target presentation were computed as described above. From the PETH, the modulation strength Δz was computed for each area and for each of the 16 potential movement directions. We also computed Δz in this way for each passive observation experiment day to track short- and long-term changes in this parameter. When computed from the normalized mean firing rate (Fig. S4), this metric estimates the population response amplitude as a z-score, which allows comparisons across sessions even when not all units recorded in the previous session persisted.

A second neurophysiological analysis used during passive observation and brain control experiments was spike count correlation. The activity of each neuron, binned in 25 ms bins over the full experiment (not single trials, as in the PETH analysis), was compared pairwise with the activity of every other neuron in the population. The similarity between two activity profiles was quantified as Pearson’s correlation coefficient r between the equal-length time series a and b (Eq. 2):

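$$r = \frac{n\sum_{i=1}^{n} a_i b_i - \sum_{i=1}^{n} a_i \sum_{i=1}^{n} b_i}{\sqrt{n\sum_{i=1}^{n} a_i^2 - \left(\sum_{i=1}^{n} a_i\right)^2}\,\sqrt{n\sum_{i=1}^{n} b_i^2 - \left(\sum_{i=1}^{n} b_i\right)^2}} \qquad (2)$$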

where n is the length of a and b. Spike count correlation was then summarized as the mean r over all pairwise comparisons (Eq. 3):

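$$\bar{r} = \frac{2}{|\Psi|\left(|\Psi|-1\right)} \sum_{i=1}^{|\Psi|-1} \sum_{j=i+1}^{|\Psi|} \operatorname{corr}(n_i, n_j), \qquad n_i, n_j \in \Psi \qquad (3)$$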

where Ψ is the ensemble of all recorded neurons and corr(n_i, n_j) is defined in Eq. 2. We extended this analysis to compute correlations within a cortical area (e.g., an M1 neuron with another M1 neuron), between areas (e.g., an M1 neuron with an SMA neuron), and within vs. between hemispheres. Only cortical activity recorded while the monkey was attending to the screen was used for spike count correlation comparisons.
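The pairwise correlation summary (Eqs. 2 and 3) and the within/between split can be computed from a matrix of binned spike counts. This sketch assumes the counts are already restricted to attended periods; np.corrcoef computes Pearson's r for all pairs at once, and neurons with zero variance (no spikes) would yield NaN and should be excluded beforehand. The grouping labels and function name are hypothetical.

```python
import numpy as np

def mean_pairwise_corr(counts, labels=None):
    """Mean Pearson r over all neuron pairs, optionally split into within/between groups.

    counts: (neurons, bins) spike counts, e.g. 25 ms bins over attended periods.
    labels: optional per-neuron group (cortical area or hemisphere).
    Returns the overall mean, or (overall, within, between) when labels are given.
    """
    counts = np.asarray(counts, dtype=float)
    C = np.corrcoef(counts)                        # Pearson r for every pair of rows
    i, j = np.triu_indices(len(counts), k=1)       # each unordered pair once
    r = C[i, j]
    overall = float(r.mean())
    if labels is None:
        return overall
    labels = np.asarray(labels)
    same = labels[i] == labels[j]
    return overall, float(r[same].mean()), float(r[~same].mean())
```

Passing hemisphere labels instead of area labels gives the intra- vs. inter-hemispheric split in the same way.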

Neural decoding

Right and left arm kinematics were decoded using an unscented Kalman filter (UKF) as described elsewhere (24). The same model was used for both offline and online predictions, with 3 past taps and 2 future taps of neural activity binned at 100 ms. During the first 11 sessions of BC with arms, a 1st order UKF with only a single past tap of neural activity was used (Fig. 6C, red circles). Later BC with arms sessions and all BC without arms sessions employed the 5th order UKF. These settings were determined empirically by offline comparison (Fig. S3) and from previous studies in our lab (14). Offline predictions were computed in the same way for active and passive observation experiments. Offline, we used 6 minutes of neural data collected during attempted trials (joystick control) or while the monkey attended to the screen (passive observation) to fit the UKF tuning model. For unimanual offline analysis, the 2D tuning model expressed the binned neural activity y(t) as a function of single-arm (x, y) position, velocity, and quadratic terms of both (Eq. 4):

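$$y(t) = h_0 + h_1 p_x(t) + h_2 p_y(t) + h_3 v_x(t) + h_4 v_y(t) + h_5 p_x(t)^2 + h_6 p_y(t)^2 + h_7 v_x(t)^2 + h_8 v_y(t)^2 \qquad (4)$$

where (p_x, p_y) and (v_x, v_y) are the hand position and velocity and h_0, …, h_8 are fitted coefficients.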

For bimanual experiments (both joystick control and passive observation), the quadratic tuning model of Eq. 4 was extended to 4D. Specifically, y(t) was formulated as a function of bimanual (x, y) positions, velocities, and quadratic terms of each (Eq. 5):

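$$y(t) = h_0 + \sum_{s \in \{L, R\}} \left[ h^{s}_1 p^{s}_x + h^{s}_2 p^{s}_y + h^{s}_3 v^{s}_x + h^{s}_4 v^{s}_y + h^{s}_5 \left(p^{s}_x\right)^2 + h^{s}_6 \left(p^{s}_y\right)^2 + h^{s}_7 \left(v^{s}_x\right)^2 + h^{s}_8 \left(v^{s}_y\right)^2 \right] \qquad (5)$$

where the superscripts L and R denote the left and right hands, and the time argument t is omitted on the right-hand side.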
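To illustrate how a bimanual quadratic tuning model of this kind can be fit, the sketch below builds per-bin regressors for both hands and solves for each neuron's coefficients by least squares. It is a simplification under stated assumptions: the quadratic terms are taken to be the squares of each position and velocity coordinate, only a single time lag is used (the 3 past and 2 future taps of the actual decoder are omitted), and the function names are hypothetical. The UKF itself (24) is not reproduced here.

```python
import numpy as np

def hand_features(pos, vel):
    """Per-hand regressors: x, y, vx, vy and their squares. pos, vel: (bins, 2)."""
    return np.column_stack([pos, vel, pos ** 2, vel ** 2])

def bimanual_design(pos_l, vel_l, pos_r, vel_r):
    """Intercept plus left- and right-hand regressors: shape (bins, 1 + 8 + 8)."""
    ones = np.ones((len(pos_l), 1))
    return np.hstack([ones, hand_features(pos_l, vel_l), hand_features(pos_r, vel_r)])

def fit_tuning(Y, F):
    """Least-squares coefficient matrix H such that Y ≈ F @ H.T. Y: (bins, neurons)."""
    Ht, *_ = np.linalg.lstsq(F, Y, rcond=None)
    return Ht.T
```

Fitting left and right hands jointly in one design matrix, rather than two separate models, mirrors the single 4D model described in the text.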

We performed several preliminary analyses to optimize the amount of training data required for each model and generally found that 5–7 minutes was sufficient; longer training periods yielded only marginal improvements. Prediction performance was measured using the correlation coefficient r. For each analysis, r was computed 5 times per data point using k-fold cross-validation and averaged. We therefore report prediction r as a distribution (mean ± standard error) rather than as a single value. We chose r as the metric of prediction accuracy because it is commonly used in other BMI studies. Offline predictions from EMG activity were performed using a similar procedure: each of the eight EMG voltage channels was resampled at 10 Hz, the same rate used for neural decoding, and all other decoding steps and models (Eq. 5) were the same for the two signal types.
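The cross-validated r reported as mean ± standard error can be sketched as below, with an ordinary least-squares linear decoder standing in for the UKF purely to keep the example short. Splitting the session into contiguous blocks with np.array_split is our assumption about how the blocks were defined; the function name is hypothetical.

```python
import numpy as np

def blocked_kfold_r(F, y, k=5):
    """Cross-validated Pearson r for a linear decoder y ≈ F @ w using k contiguous blocks.

    Each fold trains on k-1 blocks and tests on the held-out block; returns (mean r, SEM).
    """
    folds = np.array_split(np.arange(len(y)), k)
    rs = []
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        w, *_ = np.linalg.lstsq(F[train], y[train], rcond=None)
        pred = F[test_idx] @ w
        rs.append(np.corrcoef(pred, y[test_idx])[0, 1])
    rs = np.array(rs)
    return float(rs.mean()), float(rs.std(ddof=1) / np.sqrt(k))
```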

In addition to computing r using all recorded neurons, we computed random neuron dropping curves to evaluate the effect of the number of recorded neurons on offline prediction performance. This analysis was conducted separately for unimanual and bimanual conditions, with the amount of training data held equal for both analyses. For each neuron dropping curve, the number of neurons was increased at fixed intervals. At each ensemble size, n randomly selected neurons were used both to train the model and to predict bimanual kinematics on a separate block of test data. This procedure was repeated 5 times at each step; each time, a new random subset of neurons was selected and a different block of the session was designated as training data in order to cross-validate our results. Neuron dropping curves were computed both overall and by cortical area (Figs. 5A–D, 6G).
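A random neuron dropping curve, with a straight-line fit of score against log10(ensemble size) to summarize the log-linear trend noted in the Discussion, might be organized as below. The scoring function is passed in (for example, a cross-validated r), so this sketch varies only the neuron subset; redrawing the training block on each repeat, as in the text, would happen inside score_fn. Function names are hypothetical.

```python
import numpy as np

def neuron_dropping_curve(X, y, sizes, score_fn, repeats=5, seed=0):
    """Mean and SD of a decoding score for random neuron subsets of each size.

    X: (bins, neurons) binned activity; score_fn(X_subset, y) -> score.
    A fresh random subset is drawn on every repeat.
    """
    rng = np.random.default_rng(seed)
    n_neurons = X.shape[1]
    means, sds = [], []
    for n in sizes:
        scores = [score_fn(X[:, rng.choice(n_neurons, size=n, replace=False)], y)
                  for _ in range(repeats)]
        means.append(np.mean(scores))
        sds.append(np.std(scores))
    return np.array(means), np.array(sds)

def log_fit(sizes, scores):
    """Slope and intercept of score vs. log10(ensemble size)."""
    slope, intercept = np.polyfit(np.log10(sizes), scores, 1)
    return slope, intercept
```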

When the UKF model was fit, both offline and online, one of the computed parameters was the noise covariance matrix R. R was estimated from the product of the regression residual matrix and its transpose, normalized by several constant terms (24). The diagonal of R has a practical interpretation: values close to 1 indicate that a neuron’s contribution could largely be accounted for by noise, whereas neurons with lower R-diagonal values contribute more to the fit of the neural tuning model. We used each neuron’s R-diagonal term as a proxy for its involvement in representing the movements of a single arm. By computing R separately for the right and left arms within a single experiment, we were able to evaluate the multifunctionality of individual neurons and how this property was affected by bimanual modes of movement (Fig. S2B–D).
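A minimal sketch of reading the R diagonal as an unexplained-variance proxy. The normalization used in (24) is not reproduced; here we assume each neuron's binned activity is z-scored, the kinematic regressors include an intercept column, and the residual cross-product is divided by the number of bins, so a diagonal value near 1 means the tuning model explains almost none of that neuron's variance. The linear least-squares tuning fit and the function name are our assumptions.

```python
import numpy as np

def noise_covariance(Y, F):
    """Residual covariance R of a least-squares tuning fit Y ≈ F @ H.T.

    Y: (bins, neurons) activity; F: (bins, features) regressors including an intercept.
    With z-scored Y and division by the number of bins, diag(R) equals the fraction
    of each neuron's variance left unexplained by the regressors.
    """
    Y = (Y - Y.mean(axis=0)) / Y.std(axis=0)       # z-score each neuron
    Ht, *_ = np.linalg.lstsq(F, Y, rcond=None)
    E = Y - F @ Ht                                  # residual matrix (bins, neurons)
    return E.T @ E / len(Y)
```

Fitting separate models for the left and right arms and comparing the two diagonals gives, per neuron, the left/right involvement proxy described above.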

Online predictions using the UKF were computed using the same model as was used for offline analysis. Neuronal timestamp data from each of the three acquisition systems was sent over the local network to a single master computer. UKF algorithms within the custom BMI suite decoded the activity of all recorded (monkey C) or all arm area (monkey M) neurons. Output from the single 4D UKF assigned (x,y) position of both right and left hands of the avatar.

To estimate chance performance during brain control experiments, each session was replayed offline with the peripheral target locations drawn from a randomly shuffled array of the session’s actual target location combinations. Each trial proceeded according to the same contingencies as during online BMI control, including the 10 s timeout period and target hold times. A trial was scored as correct if each virtual hand passed into and held its center target and then moved into and held its peripheral target at the shuffled location. This procedure was repeated ten times for each BC with arms session (monkey C) and each BC without arms session (both monkeys).
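The shuffle-based chance estimate can be sketched as follows, assuming the replay scores the decoded hand trajectories recorded on each trial against a shuffled target pair. For brevity the sketch checks only the simultaneous peripheral-target hold and omits the center-target phase and the 10 s timeout; the function names, target radius, and hold length (in samples) are hypothetical parameters.

```python
import numpy as np

def trial_correct(left, right, tgt_left, tgt_right, radius, hold):
    """True if both decoded hands are simultaneously inside their targets for `hold` samples.

    left, right: (samples, 2) decoded hand positions for one replayed trial.
    """
    in_l = np.linalg.norm(left - tgt_left, axis=1) <= radius
    in_r = np.linalg.norm(right - tgt_right, axis=1) <= radius
    run = 0
    for both in in_l & in_r:
        run = run + 1 if both else 0
        if run >= hold:
            return True
    return False

def shuffled_chance(lefts, rights, targets, radius, hold, n_repeats=10, seed=0):
    """Fraction correct when each trial is scored against a shuffled target pair.

    targets: (trials, 2, 2) array of (left target, right target) positions.
    Returns the mean and SD of the fraction correct across shuffles.
    """
    rng = np.random.default_rng(seed)
    targets = np.asarray(targets, dtype=float)
    rates = []
    for _ in range(n_repeats):
        shuffled = targets[rng.permutation(len(targets))]
        hits = [trial_correct(l, r, t[0], t[1], radius, hold)
                for l, r, t in zip(lefts, rights, shuffled)]
        rates.append(np.mean(hits))
    return float(np.mean(rates)), float(np.std(rates))
```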

A discrete classifier was used to predict target location from both passive observation and BC without arms sessions. We used a k-nearest neighbor (k-NN) model with k = 5 for these predictions. k-NN is a non-parametric classification algorithm that finds, for each test sample, the nearest training samples in a feature space; for neural predictions, this is the space defined by the binned firing rates of all the neurons. Using the k nearest neighbors rather than a single one makes the classification more robust to noise: the class of each neighbor counts as one vote toward the predicted class of the unknown sample. Neural activity was binned into a single 250 ms window aligned on target onset. We slid this window in 25 ms increments along the task interval from −0.5 to 0.75 s relative to target onset; at each step, the k-NN model was fit with 75% of the session’s trials, and target locations were predicted for the remaining 25%. Training and test trials were randomly selected five times, and the resulting prediction performance (fraction correct) was averaged. In addition to the sliding-window k-NN analysis, we used k-NN to generate a neuron dropping curve from activity during BC without arms experiments. As with the continuous UKF model, we predicted target locations using varying numbers of neurons, ranging from 1 to all available. Predictions for the 50–500 ms window were compiled, and the most common output was taken as the trial’s “vote”. Each test trial therefore contributed one vote, rather than a performance value computed as a function of time. Performance was again computed as fraction correct as a function of population size.
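The sliding-window k-NN analysis can be sketched with a plain NumPy nearest-neighbor search and majority vote (k = 5, 75/25 random splits, five repeats). We assume Euclidean distance on raw spike counts and windows that lie entirely within −0.5 to 0.75 s; the text does not specify the distance metric or how window edges were handled, and the function names are hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, test_X, k=5):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    preds = []
    for x in test_X:
        nearest = np.argsort(np.linalg.norm(train_X - x, axis=1), kind="stable")[:k]
        preds.append(Counter(train_y[nearest].tolist()).most_common(1)[0][0])
    return np.array(preds)

def knn_fraction_correct(X, y, k=5, train_frac=0.75, repeats=5, seed=0):
    """Mean fraction correct over random train/test splits of trials."""
    rng = np.random.default_rng(seed)
    n_train = int(round(train_frac * len(y)))
    scores = []
    for _ in range(repeats):
        perm = rng.permutation(len(y))
        tr, te = perm[:n_train], perm[n_train:]
        scores.append(np.mean(knn_predict(X[tr], y[tr], X[te], k) == y[te]))
    return float(np.mean(scores))

def window_counts(spikes, start, width=0.25):
    """Spike counts per trial and neuron in [start, start + width).

    spikes[trial][neuron] is an array of spike times (s) aligned on target onset.
    """
    return np.array([[np.count_nonzero((np.asarray(s) >= start) & (np.asarray(s) < start + width))
                      for s in trial] for trial in spikes])

# 25 ms steps for 250 ms windows kept inside -0.5 to 0.75 s
window_starts = np.round(np.arange(-0.5, 0.75 - 0.25 + 1e-9, 0.025), 3)
```

For each start in window_starts, window_counts produces the trial-by-neuron feature matrix that knn_fraction_correct scores, yielding performance as a function of time.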

Statistical Analysis

We used the following statistical methods. Parametric comparisons used a t-test (α = 0.05); when data were not drawn from a normal distribution, we used the nonparametric Wilcoxon signed-rank test or Wilcoxon rank-sum test (α = 0.05). One-way and two-way ANOVAs (α = 0.05) were used to analyze the correlated neural activity shown in Fig. 4. k-NN classifier performance (Fig. 3) was measured as the fraction of correct predictions; in this analysis, chance-level performance was ¼. The 95% confidence interval for chance-level performance was constructed using the one-proportion z-test (Eq. 6):

$$\mathrm{CI}_{95\%} = p_0 \pm 1.96\sqrt{\frac{p_0\,(1-p_0)}{n}} \qquad (6)$$

where n is the number of test trials and p0 = 0.25 is the chance level. Offline predictions of cursor X and Y position were compared with actual cursor positions using Pearson’s product-moment correlation coefficient r. To generate a distribution of r, the prediction was repeated five times using 5-fold cross-validation, with each fold using a different block of data as test data and the remaining blocks as training data.
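The chance-level interval and the cross-validated correlation can be sketched as below. We assume Eq. 6 is the usual normal-approximation interval p0 ± 1.96·√(p0(1 − p0)/n), and we use a one-dimensional least-squares line (`fit_line`, hypothetical) purely as a stand-in for the UKF decoder so that the example runs on its own; the fold layout (five contiguous blocks) follows the description above, and all function names are illustrative.

```python
import math


def chance_interval(n, p0=0.25, z=1.96):
    """95% interval around chance level p0 for n test trials (normal approximation)."""
    half_width = z * math.sqrt(p0 * (1.0 - p0) / n)
    return p0 - half_width, p0 + half_width


def pearson_r(a, b):
    """Pearson product-moment correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    var_a = sum((u - ma) ** 2 for u in a)
    var_b = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def contiguous_folds(n, k=5):
    """Split sample indices 0..n-1 into k contiguous blocks."""
    edges = [round(i * n / k) for i in range(k + 1)]
    return [list(range(edges[i], edges[i + 1])) for i in range(k)]


def fit_line(xs, ys):
    """Placeholder 1-D least-squares decoder (hypothetical stand-in for the UKF)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def cross_validated_r(X, y, fit=fit_line, predict=lambda m, x: m[0] * x + m[1], k=5):
    """One r per fold: train on k - 1 blocks, correlate predictions with the held-out block."""
    rs = []
    for test_idx in contiguous_folds(len(y), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        y_hat = [predict(model, X[i]) for i in test_idx]
        rs.append(pearson_r(y_hat, [y[i] for i in test_idx]))
    return rs
```

With n = 100 test trials, for example, the interval is roughly 0.165 to 0.335, so classifier performance above about 0.335 would fall outside the chance interval.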

Supplementary Material

Fig. S1. Behavioral results from bimanual and unimanual joystick control experiments.

Fig. S2. Decoding performance during joystick control.

Fig. S3. Improvements to UKF model.

Fig. S4. Plasticity during passive observation training.

Fig. S5. Arm EMGs during passive and active trials.

Fig. S6. Temporal changes in prediction accuracy during passive observation.

Table S1. Unimanual and bimanual modulation differences by area.

Movie S1. Screen capture of bimanual center-out joystick control trials.

Movie S2. Video of monkey performing bimanual joystick control trials using two joysticks.

Movie S3. Video of monkey performing bimanual BC with arms trials.

Movie S4. Screen capture of bimanual BC without arms trials.

Accessible Summary

Millions of people worldwide suffer from sensory and motor deficits caused by complete or partial paralysis. Brain-machine interfaces (BMIs) are artificial systems that hold promise to restore mobility and sensation to such patients by connecting the intact structures of their brains to assistive devices. Previous studies have developed BMIs that control single prosthetic arms, but have not offered users the ability to coordinate two artificial arms, an important functionality that humans employ in a variety of motor activities, from opening a can to typing on a keyboard. In this study, we developed for the first time a BMI that used cortical activity to control two arms. For this bimanual BMI, a large sample of nearly 500 neurons was recorded from multiple cortical areas in monkeys. A custom decoding algorithm transformed this large-scale activity into independent control of two avatar arms, which performed reaching movements in virtual reality. Monkeys perfected bimanual operation within 15 days of training and eventually were able to move the avatar arms without moving their own arms. A detailed analysis revealed widespread cortical plasticity that underlay this learning.

Acknowledgments

We are grateful for the work of Gary Lehew and Jim Meloy in the building of the experimental setup and the multielectrode arrays; Dragan Dimitrov and Laura Oliveira for conducting neurosurgeries; Tamara Phillips for experimental support; and Susan Halkiotis for administrative support.

Funding: This work was supported by NIH grant DP1MH099903 and NIH (NINDS) R01NS073952 awarded to Miguel A. L. Nicolelis. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Office of the Director or NIH.

Footnotes

Author contributions: M.A.L.N., M.A.L., P.I. and S.S. designed the study. P.I. performed all the joystick and brain control experiments. P.I. and S.S. performed all the passive observation experiments. Z.L. and P.I. wrote the decoding software for the custom BMI suite used in the experiments. P.I., S.S., M.A.L., and M.A.L.N. analyzed the data and wrote the manuscript.

Competing interests: The authors declare that they have no competing interests.

References and Notes

- 1. Swinnen SP, Duysens J. Neuro-behavioral determinants of interlimb coordination: a multidisciplinary approach. Kluwer Academic; Boston: 2004. xxx, 329 p.

- 2. Lebedev MA, Nicolelis MA. Brain-machine interfaces: past, present and future. Trends Neurosci. 2006 Sep;29:536. doi: 10.1016/j.tins.2006.07.004.

- 3. Nicolelis MA, Lebedev MA. Principles of neural ensemble physiology underlying the operation of brain-machine interfaces. Nat Rev Neurosci. 2009 Jul;10:530. doi: 10.1038/nrn2653.

- 4. Lebedev MA, Nicolelis MA. Toward a whole-body neuroprosthetic. Prog Brain Res. 2011;194:47. doi: 10.1016/B978-0-444-53815-4.00018-2.

- 5. Chestek CA, et al. Neural prosthetic systems: current problems and future directions. Conf Proc IEEE Eng Med Biol Soc. 2009;2009:3369. doi: 10.1109/IEMBS.2009.5332822.

- 6. Patil PG, Carmena JM, Nicolelis MA, Turner DA. Ensemble recordings of human subcortical neurons as a source of motor control signals for a brain-machine interface. Neurosurgery. 2004 Jul;55:27.

- 7. Wessberg J, et al. Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature. 2000 Nov 16;408:361. doi: 10.1038/35042582.

- 8. Carmena JM, et al. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biol. 2003 Nov;1:E42. doi: 10.1371/journal.pbio.0000042.

- 9. Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002 Mar 14;416:141. doi: 10.1038/416141a.

- 10. Lebedev MA, et al. Cortical ensemble adaptation to represent velocity of an artificial actuator controlled by a brain-machine interface. J Neurosci. 2005 May 11;25:4681. doi: 10.1523/JNEUROSCI.4088-04.2005.

- 11. O’Doherty JE, Lebedev MA, Hanson TL, Fitzsimmons NA, Nicolelis MA. A brain-machine interface instructed by direct intracortical microstimulation. Front Integr Neurosci. 2009;3:20. doi: 10.3389/neuro.07.020.2009.

- 12. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008 Jun 19;453:1098. doi: 10.1038/nature06996.

- 13. Hochberg LR, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012 May 17;485:372. doi: 10.1038/nature11076.

- 14. O’Doherty JE, et al. Active tactile exploration using a brain-machine-brain interface. Nature. 2011 Nov 10;479:228. doi: 10.1038/nature10489.

- 15. Rokni U, Steinberg O, Vaadia E, Sompolinsky H. Cortical representation of bimanual movements. J Neurosci. 2003 Dec 17;23:11577. doi: 10.1523/JNEUROSCI.23-37-11577.2003.

- 16. Steinberg O, et al. Neuronal populations in primary motor cortex encode bimanual arm movements. Eur J Neurosci. 2002 Apr;15:1371. doi: 10.1046/j.1460-9568.2002.01968.x.

- 17. Tanji J, Okano K, Sato KC. Neuronal activity in cortical motor areas related to ipsilateral, contralateral, and bilateral digit movements of the monkey. J Neurophysiol. 1988 Jul;60:325. doi: 10.1152/jn.1988.60.1.325.

- 18. Donchin O, Gribova A, Steinberg O, Bergman H, Vaadia E. Primary motor cortex is involved in bimanual coordination. Nature. 1998 Sep 17;395:274. doi: 10.1038/26220.

- 19. Kazennikov O, et al. Neural activity of supplementary and primary motor areas in monkeys and its relation to bimanual and unimanual movement sequences. Neuroscience. 1999 Mar;89:661. doi: 10.1016/s0306-4522(98)00348-0.

- 20. Nicolelis MA, et al. Chronic, multisite, multielectrode recordings in macaque monkeys. Proc Natl Acad Sci U S A. 2003 Sep 16;100:11041. doi: 10.1073/pnas.1934665100.

- 21. Stevenson IH, Kording KP. How advances in neural recording affect data analysis. Nat Neurosci. 2011 Feb;14:139. doi: 10.1038/nn.2731.

- 22. Shokur S, et al. Expanding the primate body schema in sensorimotor cortex by virtual touches of an avatar. Proc Natl Acad Sci U S A. 2013 Aug 26; doi: 10.1073/pnas.1308459110.

- 23. Collinger JL, et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013 Feb 16;381:557. doi: 10.1016/S0140-6736(12)61816-9.

- 24. Li Z, et al. Unscented Kalman filter for brain-machine interfaces. PLoS One. 2009;4:e6243. doi: 10.1371/journal.pone.0006243.

- 25. Taylor DM, Tillery SI, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002 Jun 7;296:1829. doi: 10.1126/science.1070291.

- 26. Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006 Jul 13;442:164. doi: 10.1038/nature04970.

- 27. Wahnoun R, He J, Helms Tillery SI. Selection and parameterization of cortical neurons for neuroprosthetic control. J Neural Eng. 2006 Jun;3:162. doi: 10.1088/1741-2560/3/2/010.

- 28. Kim SP, et al. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011 Apr;19:193. doi: 10.1109/TNSRE.2011.2107750.

- 29. Hauschild M, Mulliken GH, Fineman I, Loeb GE, Andersen RA. Cognitive signals for brain-machine interfaces in posterior parietal cortex include continuous 3D trajectory commands. Proc Natl Acad Sci U S A. 2012 Oct 16;109:17075. doi: 10.1073/pnas.1215092109.

- 30. Hatsopoulos N, Joshi J, O’Leary JG. Decoding continuous and discrete motor behaviors using motor and premotor cortical ensembles. J Neurophysiol. 2004 Aug;92:1165. doi: 10.1152/jn.01245.2003.

- 31. Scherberger H, Andersen RA. Target selection signals for arm reaching in the posterior parietal cortex. J Neurosci. 2007 Feb 21;27:2001. doi: 10.1523/JNEUROSCI.4274-06.2007.

- 32. Santucci DM, Kralik JD, Lebedev MA, Nicolelis MA. Frontal and parietal cortical ensembles predict single-trial muscle activity during reaching movements in primates. Eur J Neurosci. 2005 Sep;22:1529. doi: 10.1111/j.1460-9568.2005.04320.x.

- 33. Requin J, Lecas JC, Vitton N. A comparison of preparation-related neuronal activity changes in the prefrontal, premotor, primary motor and posterior parietal areas of the monkey cortex: preliminary results. Neurosci Lett. 1990 Mar 26;111:151. doi: 10.1016/0304-3940(90)90360-l.

- 34. Saha DJ, Morasso P. Stabilization strategies for unstable dynamics. PLoS One. 2012;7:e30301. doi: 10.1371/journal.pone.0030301.

- 35. Peper CE, Carson RG. Bimanual coordination between isometric contractions and rhythmic movements: an asymmetric coupling. Exp Brain Res. 1999 Dec;129:417. doi: 10.1007/s002210050909.

- 36. Ridderikhoff A, Peper CL, Beek PJ. Unraveling interlimb interactions underlying bimanual coordination. J Neurophysiol. 2005 Nov;94:3112. doi: 10.1152/jn.01077.2004.

- 37. Fitzsimmons NA, Lebedev MA, Peikon ID, Nicolelis MA. Extracting kinematic parameters for monkey bipedal walking from cortical neuronal ensemble activity. Front Integr Neurosci. 2009;3:3. doi: 10.3389/neuro.07.003.2009.

- 38. Tillery SI, Taylor DM. Signal acquisition and analysis for cortical control of neuroprosthetics. Curr Opin Neurobiol. 2004 Dec;14:758. doi: 10.1016/j.conb.2004.10.013.

- 39. Georgopoulos AP, Kettner RE, Schwartz AB. Primate motor cortex and free arm movements to visual targets in three-dimensional space. II. Coding of the direction of movement by a neuronal population. J Neurosci. 1988 Aug;8:2928. doi: 10.1523/JNEUROSCI.08-08-02928.1988.

- 40. Zacksenhouse M, et al. Cortical modulations increase in early sessions with brain-machine interface. PLoS One. 2007;2:e619. doi: 10.1371/journal.pone.0000619.

- 41. Olson B, Si J. Evidence of a mechanism of neural adaptation in the closed loop control of directions. International Journal of Intelligent Computing and Cybernetics. 2008;3:5.

- 42. Andres FG, et al. Functional coupling of human cortical sensorimotor areas during bimanual skill acquisition. Brain. 1999 May;122(Pt 5):855. doi: 10.1093/brain/122.5.855.

- 43. Gerloff C, Andres FG. Bimanual coordination and interhemispheric interaction. Acta Psychol (Amst). 2002 Jun;110:161. doi: 10.1016/s0001-6918(02)00032-x.

- 44. Swinnen SP, Wenderoth N. Two hands, one brain: cognitive neuroscience of bimanual skill. Trends Cogn Sci. 2004 Jan;8:18. doi: 10.1016/j.tics.2003.10.017.

- 45. Colby CL. Action-oriented spatial reference frames in cortex. Neuron. 1998 Jan;20:15. doi: 10.1016/s0896-6273(00)80429-8.

- 46. Rizzolatti G, Fadiga L, Fogassi L, Gallese V. The space around us. Science. 1997 Jul 11;277:190. doi: 10.1126/science.277.5323.190.