Abstract

In order to cross a street without being run over, we need to be able to extract very fast hidden causes of dynamically changing multi-modal sensory stimuli, and to predict their future evolution. We show here that a generic cortical microcircuit motif, pyramidal cells with lateral excitation and inhibition, provides the basis for this difficult but all-important information processing capability. This capability emerges in the presence of noise automatically through effects of STDP on connections between pyramidal cells in Winner-Take-All circuits with lateral excitation. In fact, one can show that these motifs endow cortical microcircuits with functional properties of a hidden Markov model, a generic model for solving such tasks through probabilistic inference. Whereas in engineering applications this model is adapted to specific tasks through offline learning, we show here that a major portion of the functionality of hidden Markov models arises already from online applications of STDP, without any supervision or rewards. We demonstrate the emergent computing capabilities of the model through several computer simulations. The full power of hidden Markov model learning can be attained through reward-gated STDP. This is due to the fact that these mechanisms enable a rejection sampling approximation to theoretically optimal learning. We investigate the possible performance gain that can be achieved with this more accurate learning method for an artificial grammar task.

Author Summary

It has recently been shown that STDP installs in ensembles of pyramidal cells with lateral inhibition networks for Bayesian inference that are theoretically optimal for the case of stationary spike input patterns. We show here that if the experimentally found lateral excitatory connections between pyramidal cells are taken into account, theoretically optimal probabilistic models for the prediction of time-varying spike input patterns emerge through STDP. Furthermore a rigorous theoretical framework is established that explains the emergence of computational properties of this important motif of cortical microcircuits through learning. We show that the application of an idealized form of STDP approximates in this network motif a generic process for adapting a computational model to data: expectation-maximization. The versatility of computations carried out by these ensembles of pyramidal cells and the speed of the emergence of their computational properties through STDP is demonstrated through a variety of computer simulations. We show the ability of these networks to learn multiple input sequences through STDP and to reproduce the statistics of these inputs after learning.

Introduction

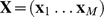

An ubiquitous motif of cortical microcircuits is ensembles of pyramidal cells (in layers 2/3 and in layer 5) with lateral inhibition [1]–[3]. This network motif is called a winner-take-all (WTA) circuit, since inhibition induces competition between pyramidal neurons [4]. We investigate in this article which computational capabilities emerge in WTA circuits if one also takes into account the existence of lateral excitatory synaptic connections within such ensembles of pyramidal cells (Fig. 1A). This augmented architecture will be our default notion of a WTA circuit throughout this paper.

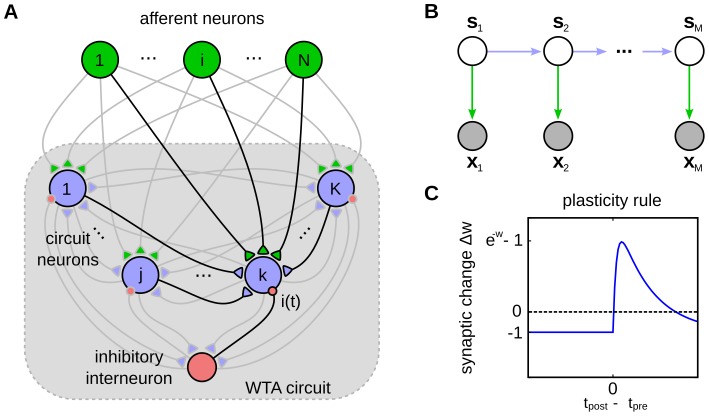

Figure 1. Illustration of the network model.

(A) The structure of the network. It consists of  excitatory neurons (blue) that receive feedforward inputs (green synapses) and lateral excitatory all-to-all connections (blue synapses). Interneurons (red) install soft winner-take-all behavior by injecting a global inhibition to all neurons of the circuit in response to the network's spiking activity. (B) The Bayesian network representing the HMM over

excitatory neurons (blue) that receive feedforward inputs (green synapses) and lateral excitatory all-to-all connections (blue synapses). Interneurons (red) install soft winner-take-all behavior by injecting a global inhibition to all neurons of the circuit in response to the network's spiking activity. (B) The Bayesian network representing the HMM over  time steps. The prediction model (blue arrows) is implemented by the lateral synapses. It determines the evolution of the hidden states

time steps. The prediction model (blue arrows) is implemented by the lateral synapses. It determines the evolution of the hidden states  over time. The observation model (green arrows) is implemented by feedforward connections. The inference task for the HMM is to determine a sequence of hidden states

over time. The observation model (green arrows) is implemented by feedforward connections. The inference task for the HMM is to determine a sequence of hidden states  (white), given the afferent activity

(white), given the afferent activity  (gray). (C) The STDP window that is used to update the excitatory synapses. The synaptic weight change is plotted against the time difference between pre- and postsynaptic spike events.

(gray). (C) The STDP window that is used to update the excitatory synapses. The synaptic weight change is plotted against the time difference between pre- and postsynaptic spike events.

We show that this network motif endows cortical microcircuits with the capability to encode and process information in a highly dynamic environment. This dynamic environment of generic cortical mircocircuits results from quickly varying activity of neurons at the sensory periphery, caused for example by visual, auditory, and somatosensory stimuli impinging on a moving organism that actively probes the environment for salient information. Quickly changing sensory inputs are also caused by movements and communication acts of other organisms that need to be interpreted and predicted. Finally, a generic cortical microcircuit also receives massive inputs from other cortical areas. Experimental data with simultaneous recordings of many neurons suggest that these internal cortical codes are also highly dynamic, and often take the form of characteristic assembly sequences or trajectories of local network states [5]–[10]. We show in this article that WTA circuits have emergent coding and computing capabilities that are especially suited for this highly dynamic context of cortical microcircuits.

We show that spike-timing-dependent plasticity (STDP) [11], [12], applied on both the lateral excitatory synapses and synapses from afferent neurons, implements in these networks the capability to represent the underlying statistical structure of such spatiotemporal input patterns. This implies the challenge to solve two different learning tasks in parallel. First it is necessary to learn to recognize the salient high-dimensional patterns from the afferent neurons, which was already investigated in [13]. The second task consists in learning the temporal structure underlying the input spike sequences. We show that augmented WTA circuits are able to detect the sequential arrangements of the learned salient patterns. Synaptic plasticity for lateral excitatory connections provides the ability to discriminate even identical input patterns according to the temporal context in which they appear. The same STDP rule, that leads to the emergence of sparse codes for individual input patterns in the absence of lateral excitatory connections [13] now leads to the emergence of context specific neural codes and even predictions for temporal sequences of such patterns. The resulting neural codes are sparse with respect to the number of neurons that are tuned for a specific salient pattern and the temporal context in which it appears.

The basic principles of learning sequences of forced spike activations in general recurrent networks were studied in previous work [14], [15] and resulted in the finding that an otherwise local learning rule (like STDP) has to be enhanced by a global third factor which acts as an importance weight, in order to provide a – theoretically provable – approximation to temporal sequence learning. The possible role of such importance weights for probabilistic computations in spiking neural networks with lateral inhibition was already investigated earlier in [16].

In this article we establish a rigorous theoretical framework which reveals that each spike train generated by WTA circuits can be viewed as a sample from the state space of a hidden Markov model (HMM). The HMM has emerged in machine learning and engineering applications as a standard probabilistic model for detecting hidden regularities in sequential input patterns, and for learning to predict their continuation from initial segments [17]–[19]. The HMM is a generative model which relies on the assumption that the statistics of input patterns  over

over  time steps is governed by a sequence of hidden states

time steps is governed by a sequence of hidden states  , such that the

, such that the  hidden state

hidden state  “explains” or generates the input pattern

“explains” or generates the input pattern  . We show that the instantaneous state

. We show that the instantaneous state  of the HMM is realized by the joint activity of all neurons of a WTA circuit, i.e. the spikes themselves and their resulting postsynaptic potentials. The stochastic dynamics of the WTA circuit implements a forward sampler that approximates exact HMM inference by propagating a single sample from the hidden state

of the HMM is realized by the joint activity of all neurons of a WTA circuit, i.e. the spikes themselves and their resulting postsynaptic potentials. The stochastic dynamics of the WTA circuit implements a forward sampler that approximates exact HMM inference by propagating a single sample from the hidden state  forward in time [19], [20].

forward in time [19], [20].

We show analytically that a suitable STDP rule in the WTA circuit – notably the same rule on both the recurrent and the feedforward synaptic connections – realizes theoretically optimal parameter acquisition in terms of an online expectation-maximization (EM) algorithm [21], [22], for a certain pair  if the stochastic network dynamics describes the state sequence

if the stochastic network dynamics describes the state sequence  upon the input sequence

upon the input sequence  . We further show that when the STDP rule is applied within the approximative forward sampling network dynamics of the WTA circuit, it instantiates a weak but well defined approximation of theoretically optimal HMM learning through EM. This is remarkable insofar as no additional mechanisms are needed for this approximation – it is automatically implemented through the stochastic dynamics of the WTA circuit, in combination with STDP. In this paper we focus on the analysis of this approximation scheme, its limits and its behavioral relevance.

. We further show that when the STDP rule is applied within the approximative forward sampling network dynamics of the WTA circuit, it instantiates a weak but well defined approximation of theoretically optimal HMM learning through EM. This is remarkable insofar as no additional mechanisms are needed for this approximation – it is automatically implemented through the stochastic dynamics of the WTA circuit, in combination with STDP. In this paper we focus on the analysis of this approximation scheme, its limits and its behavioral relevance.

We test this model in computer simulations that duplicate a number of experimental paradigms for evaluating emergent neural codes and behavioral performance in recognizing and predicting temporal sequences. We analyze evoked and spontaneous dynamics that emerges in our model network after learning an object sequence memory task as in the experiments of [23], [24]. We show that the pyramidal cells of a WTA circuit learn through STDP to encode the hidden states that underlie the input statistics in such tasks, which enables these cells to recognize and distinguish multiple pattern sequences and to autonomously predict their continuation from initial segments. Furthermore, we find neural assemblies emerging in neighboring interconnected WTA circuits that encode different abstract features underlying the task. The resulting neural codes resemble the highly heterogeneous codes found in the cortex [25]. Furthermore, neurons often learn to fire preferentially after specific predecessors, building up stereotypical neural trajectories within neural assemblies, that are also commonly observed in cortical activity [5]–[7], [26].

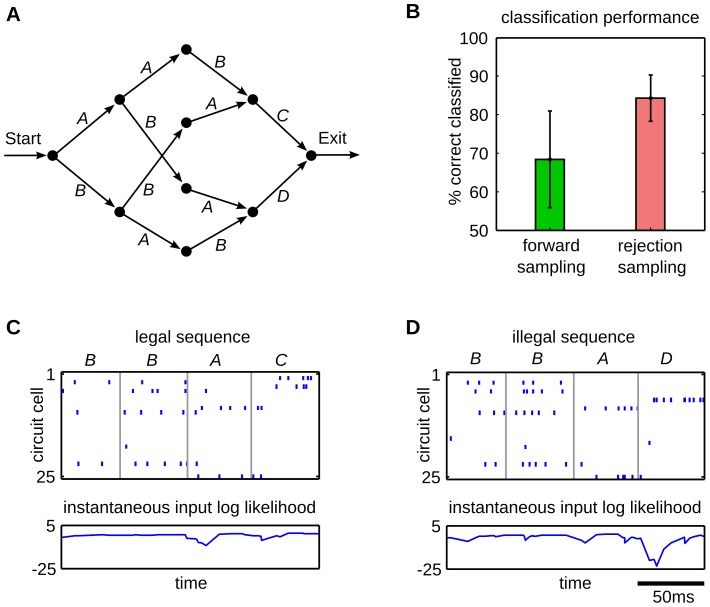

Our generative probabilistic perspective of synaptic plasticity in WTA circuits naturally leads to the question whether the proposed learning approximation is able to solve complex problems beyond simple sequence learning. Therefore we reanalyze data on artificial grammar learning experiments from cognitive science [27], where subjects were exposed to sequences of symbols generated by some hidden artificial grammar, and then had to judge whether subsequently presented unseen test sequences had been generated by the same grammar. We show that STDP learning in our WTA circuits is able to infer the underlying grammar model from a small number of training sequences.

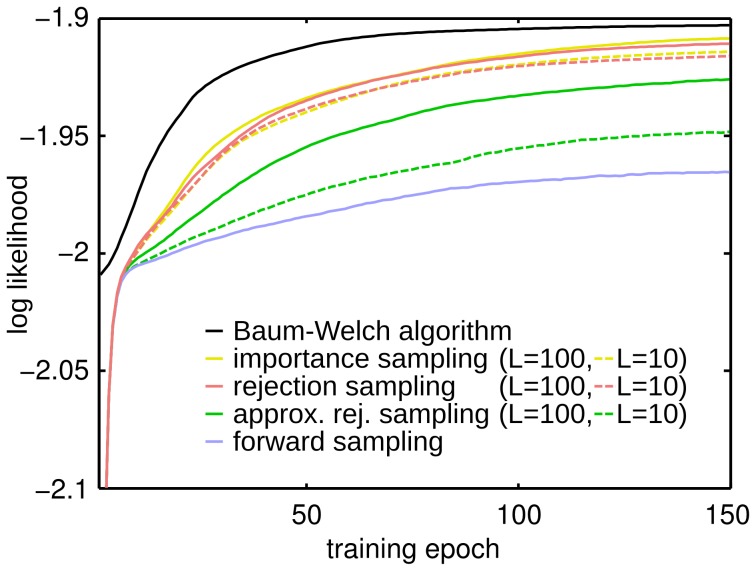

The simple approximation by forward sampling, however, clearly limits the learning performance. We show that the full power of HMM-learning can be attained in a WTA circuit based on the rejection sampling principle [19], [20]. A binary factor is added to the STDP learning rule, that gates the expression of synaptic plasticity through a subsequent global modulatory signal. The improvement in accuracy of this more powerful learning method comes at the cost that every input sequence has to be repeated a number of times, until one generated state sequence is accepted. We show that a significant performance increase can be achieved already with a small number of repetitions. We demonstrate this for a simple and a more complex grammar learning task.

Results

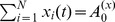

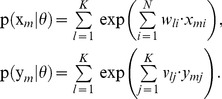

We first define the spiking neural network model for the winner-take-all (WTA) circuit considered throughout this paper. The architecture of the network is illustrated in Fig. 1A. It consists of stochastic spiking neurons, which receive excitatory input from an afferent population (green synapses) and from lateral excitatory connections (blue synapses) between neighboring pyramidal neurons. To clarify the distinction between these connections, we denote the synaptic efficacies of feedforward and lateral synapses by different weight matrices  and

and  , respectively, where

, respectively, where  denotes the number of afferent neurons and

denotes the number of afferent neurons and  the size of the circuit (i.e., the number of pyramidal cells in the circuit). In addition, all neurons within the WTA circuit project to interneurons and in turn all receive the same common inhibition

the size of the circuit (i.e., the number of pyramidal cells in the circuit). In addition, all neurons within the WTA circuit project to interneurons and in turn all receive the same common inhibition  . Thus the membrane potential of neuron

. Thus the membrane potential of neuron  at time

at time  is given by

is given by

|

(1) |

| (2) |

where  and

and  denote the time courses of the excitatory postsynaptic potentials (EPSP) under the feedforward and lateral synapses, where

denote the time courses of the excitatory postsynaptic potentials (EPSP) under the feedforward and lateral synapses, where  and

and  are the elements of

are the elements of  and

and  respectively, and

respectively, and  is a parameter that controls the excitability of the neuron. The two sums in (1) describe the time courses of the membrane potential in response to synaptic inputs from feedforward and lateral synapses. In equation (2) we used the assumption of additive EPSPs, where

is a parameter that controls the excitability of the neuron. The two sums in (1) describe the time courses of the membrane potential in response to synaptic inputs from feedforward and lateral synapses. In equation (2) we used the assumption of additive EPSPs, where  denotes a kernel function that determines the time course of an EPSP [28]. The sums run over all spike times of the presynaptic neuron. For the theoretical analysis we used a single exponential decay for the sake of simplicity, throughout the simulations we used double exponential kernels, if not stated otherwise. Our theoretical model can be further extended to other EPSP shapes (see the Methods section for details).

denotes a kernel function that determines the time course of an EPSP [28]. The sums run over all spike times of the presynaptic neuron. For the theoretical analysis we used a single exponential decay for the sake of simplicity, throughout the simulations we used double exponential kernels, if not stated otherwise. Our theoretical model can be further extended to other EPSP shapes (see the Methods section for details).

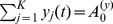

As proposed in [29], we employ an exponential dependence between the membrane potential and the firing probability. Therefore the instantaneous rate of neuron  is given by

is given by  , where

, where  is a constant that scales the firing rate. The inhibitory feedback loop

is a constant that scales the firing rate. The inhibitory feedback loop  in equation (1), that depresses the membrane potentials whenever the network activity rises, has a normalizing effect on the circuit-wide output rate. Although, each neuron

in equation (1), that depresses the membrane potentials whenever the network activity rises, has a normalizing effect on the circuit-wide output rate. Although, each neuron  generates spikes according to an individual Poisson process, this inhibition couples the neural activities and thereby installs the required competition between all cells in the circuit. We model the effect of this inhibition in an abstract way, where we assume, that all WTA neurons receive the same inhibitory signal

generates spikes according to an individual Poisson process, this inhibition couples the neural activities and thereby installs the required competition between all cells in the circuit. We model the effect of this inhibition in an abstract way, where we assume, that all WTA neurons receive the same inhibitory signal  such that the overall spiking rate of the WTA circuit stays approximately constant. Ideal WTA behavior is attained if the network rate is normalized to the same value at any point in time, i.e.

such that the overall spiking rate of the WTA circuit stays approximately constant. Ideal WTA behavior is attained if the network rate is normalized to the same value at any point in time, i.e.  . Using this, we find the circuit dynamics to be determined by

. Using this, we find the circuit dynamics to be determined by

|

(3) |

This ideal WTA circuit realizes a soft-max or soft WTA function, granting the highest firing rate to the neuron with the highest membrane potential, but still allowing all other neurons to fire with non-zero probability.

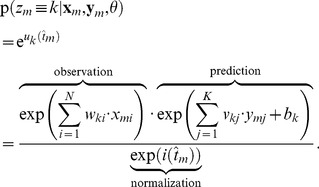

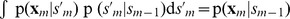

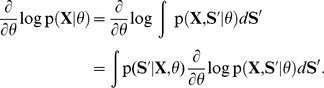

Recapitulation of hidden Markov model theory

In this section we briefly summarize the relevant concepts for deriving our theoretical results. An exhaustive discussion on hidden Markov model theory can be found in [17]–[19]. Throughout the paper, to keep the notation uncluttered we use the common short-hand notation  to denote

to denote  , i.e. the probability that the random variable

, i.e. the probability that the random variable  takes on the value

takes on the value  . If it is not clear from the context, we will use the notation

. If it is not clear from the context, we will use the notation  to remind the reader of the underlying random variable, that is only implicitly defined.

to remind the reader of the underlying random variable, that is only implicitly defined.

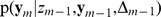

The HMM is a generative model for input pattern sequences over  time steps

time steps  (the input patterns are traditionally called observations in the context of HMMs). It relies on the assumption that a sequence of hidden states

(the input patterns are traditionally called observations in the context of HMMs). It relies on the assumption that a sequence of hidden states  and a set of parameters

and a set of parameters  exist, which govern the statistics of

exist, which govern the statistics of  . This assumption allows to write the joint distribution of

. This assumption allows to write the joint distribution of  and

and  as

as

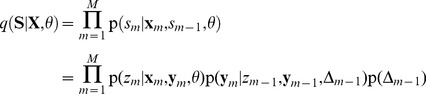

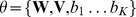

| (4) |

where we suppress an explicit representation of the initial state  , for the sake of brevity. The joint distribution (4) factorizes in each time step into the observation model

, for the sake of brevity. The joint distribution (4) factorizes in each time step into the observation model

and the state transition or prediction model

and the state transition or prediction model

[19]. This independence property is illustrated by the Bayesian network for a HMM in Fig. 1B.

[19]. This independence property is illustrated by the Bayesian network for a HMM in Fig. 1B.

The HMM is a generative model and therefore we can recover the distribution over input patterns by marginalizing out the hidden state sequences  . Learning in this model means to adapt the model parameters

. Learning in this model means to adapt the model parameters  such that this marginal distribution

such that this marginal distribution  comes as close as possible to the empirical distribution

comes as close as possible to the empirical distribution  of the observable input sequences. A generic method for learning in generative models with hidden variables is the expectation-maximization (EM) algorithm [30], and its application to HMMs is known as the Baum-Welch algorithm [31]. This algorithm consists of iterating two steps, the E-step and the M-step, where the model parameters

of the observable input sequences. A generic method for learning in generative models with hidden variables is the expectation-maximization (EM) algorithm [30], and its application to HMMs is known as the Baum-Welch algorithm [31]. This algorithm consists of iterating two steps, the E-step and the M-step, where the model parameters  are adjusted at each M-step (for the updated posterior generated at the preceding E-step). A remarkable feature of the algorithm is that the fitting of the model to the data is guaranteed to improve at each M-step of this iterative process. Whereas the classical EM algorithm is restricted to offline learning (where all training data are available right at the beginning), there exist also stochastic online versions of EM learning.

are adjusted at each M-step (for the updated posterior generated at the preceding E-step). A remarkable feature of the algorithm is that the fitting of the model to the data is guaranteed to improve at each M-step of this iterative process. Whereas the classical EM algorithm is restricted to offline learning (where all training data are available right at the beginning), there exist also stochastic online versions of EM learning.

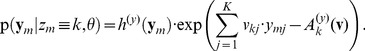

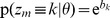

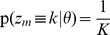

In its stochastic online variant [21], [22] the E-step consists of generating one sample  from the posterior distribution

from the posterior distribution

, given one currently observed input sequence

, given one currently observed input sequence  . Given these sampled values for

. Given these sampled values for  , the subsequent M-step adapts the model parameters

, the subsequent M-step adapts the model parameters  such that the probability

such that the probability  increases. The adaptation is confined to acquiring the conditional probabilities that govern the observation and the prediction model.

increases. The adaptation is confined to acquiring the conditional probabilities that govern the observation and the prediction model.

It would be also desirable to realize the inference and sampling of one such posterior sample sequence  in a fully online processing, i.e. generating each state

in a fully online processing, i.e. generating each state  in parallel to the arrival of the corresponding input pattern

in parallel to the arrival of the corresponding input pattern  . Yet this seems to be impossible as the probabilistic model according to (4) implies a statistical dependence between any

. Yet this seems to be impossible as the probabilistic model according to (4) implies a statistical dependence between any  and the whole future observation sequence

and the whole future observation sequence  . However, it is well known that the inference of

. However, it is well known that the inference of  can be approximated by a so-called forward sampling process [19], [20], where every single time step

can be approximated by a so-called forward sampling process [19], [20], where every single time step  of the sequence

of the sequence  is sampled online, based solely on the knowledge of the observations

is sampled online, based solely on the knowledge of the observations  received so far, rather than the observation of the complete sequence

received so far, rather than the observation of the complete sequence  . Hence sampling the sequence

. Hence sampling the sequence  is approximated by propagating a single sample from the HMM state space forward in time.

is approximated by propagating a single sample from the HMM state space forward in time.

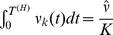

Forward sampling in WTA circuits

In this section we show that the dynamics of the network realizes a forward sampler for the HMM. We make use of the fact that equations (1), (2) and (3) realize a Markov process, in the sense that future network dynamics is independent from the past, given the current network state (for a suitable notion of network state). This property holds true for most reasonable choices of EPSP kernels. For the sake of brevity we focus in the theoretical analysis on the simple case of a single exponential decay with time constant  .

.

We seek a description of the continuous-time network dynamics in response to afferent spike trains over a time span of length  that can be mapped to the state space of a corresponding HMM with discrete time steps. Although the network works in continuous time, its dynamics can be fully described taking only those points in time into account, where one of the neurons in the recurrent circuit produces a spike. This allows to directly link spike trains generated by the network to a sequence of samples from the state space of a corresponding HMM.

that can be mapped to the state space of a corresponding HMM with discrete time steps. Although the network works in continuous time, its dynamics can be fully described taking only those points in time into account, where one of the neurons in the recurrent circuit produces a spike. This allows to directly link spike trains generated by the network to a sequence of samples from the state space of a corresponding HMM.

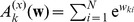

Let the  spike times produced during this time window be given by

spike times produced during this time window be given by  . The neuron dynamics are determined by the membrane time courses (2). For convenience let us introduce the notation

. The neuron dynamics are determined by the membrane time courses (2). For convenience let us introduce the notation  , with

, with  and by analogy

and by analogy  , with

, with  .

.

Due to the exponentially decaying EPSPs the synaptic activation  at time

at time  is fully defined by the synaptic activation

is fully defined by the synaptic activation  at the time of the previous spike

at the time of the previous spike  , and the identity of the neuron that spiked in that previous time step, which we denote by a discrete variable

, and the identity of the neuron that spiked in that previous time step, which we denote by a discrete variable  . We thus conclude that the sequence of tuples

. We thus conclude that the sequence of tuples  (with

(with  ) fulfills the Markov condition, i.e. the conditional independence

) fulfills the Markov condition, i.e. the conditional independence  and thus fully represents the continuous dynamics of the network (see Methods). We call

and thus fully represents the continuous dynamics of the network (see Methods). We call  the network state. The corresponding HMM forward sampler follows a simple update scheme that samples a new state

the network state. The corresponding HMM forward sampler follows a simple update scheme that samples a new state  given the current observation

given the current observation  and the previous state

and the previous state  . This dynamic is equivalent to the WTA network model.

. This dynamic is equivalent to the WTA network model.

This state representation allows us to update the network dynamics online, jumping from one spike time  to the next. Using this property, we find that the dynamics of the network realizes a probability distribution over state sequences

to the next. Using this property, we find that the dynamics of the network realizes a probability distribution over state sequences  , given an afferent sequence

, given an afferent sequence  , which can be written as

, which can be written as

|

(5) |

where  is the set of network parameters. The factorization and independence properties in (5) are induced by the state representation and the circuit dynamics. We assume here that the lateral inhibition within the WTA circuit ensures that the output rate of the whole circuit is normalized, i.e.

is the set of network parameters. The factorization and independence properties in (5) are induced by the state representation and the circuit dynamics. We assume here that the lateral inhibition within the WTA circuit ensures that the output rate of the whole circuit is normalized, i.e.  at all times

at all times  . This allows to introduce the distribution over the inter-spike-time intervals

. This allows to introduce the distribution over the inter-spike-time intervals  independent from

independent from  (see Methods for details). Note, that

(see Methods for details). Note, that  determines the interval between spikes of all circuit neurons, realized by a homogeneous Poisson process with a constant rate

determines the interval between spikes of all circuit neurons, realized by a homogeneous Poisson process with a constant rate  . The second term in the second line of (5) determines the course of the membrane potential, i.e. it assures that

. The second term in the second line of (5) determines the course of the membrane potential, i.e. it assures that  follows the membrane dynamics. Since the EPSP kernels are deterministic functions this distribution has a single mass point, where (2) is satisfied. The first factor in the second line of (5) is given by the probability of each individual neuron to spike. This probability depends on the membrane potential (1), which in turn is determined by

follows the membrane dynamics. Since the EPSP kernels are deterministic functions this distribution has a single mass point, where (2) is satisfied. The first factor in the second line of (5) is given by the probability of each individual neuron to spike. This probability depends on the membrane potential (1), which in turn is determined by  ,

,  and the network parameters

and the network parameters  . Given that the circuit spikes at time

. Given that the circuit spikes at time  , the firing probability of neuron

, the firing probability of neuron  can be expressed as a conditional distribution

can be expressed as a conditional distribution  . The lateral inhibition in (1) ensures that this probability distribution is correctly normalized. Therefore, the winner neuron

. The lateral inhibition in (1) ensures that this probability distribution is correctly normalized. Therefore, the winner neuron  is drawn from a multinomial distribution at each spike time.

is drawn from a multinomial distribution at each spike time.

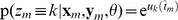

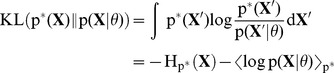

For the given architecture the functional parts of the network can be related directly to hidden Markov model dynamics. In the Methods section we show in detail that by rewriting  the membrane potential (1) can be decomposed into three functional parts

the membrane potential (1) can be decomposed into three functional parts

|

(6) |

The lateral excitatory connections predict a prior belief about the current network activity and the feedforward synapses match this prediction against the afferent input. The inhibition  implements the normalization that is required to make (6) a valid multinomial distribution. The functional parts of the membrane potential can be directly linked to the prediction and observation models of a HMM, where the network state is equivalent to the hidden state of this HMM. The WTA circuit realizes a forward-sampler for this HMM, which approximates sampling from the posterior distribution

implements the normalization that is required to make (6) a valid multinomial distribution. The functional parts of the membrane potential can be directly linked to the prediction and observation models of a HMM, where the network state is equivalent to the hidden state of this HMM. The WTA circuit realizes a forward-sampler for this HMM, which approximates sampling from the posterior distribution  in an online fashion [20]. Its sampling is carried out step by step, i.e. it generates through each spike a new sample from the network state space, taking only the previous time step sample into account. Furthermore this forward sampling requires no additional computational organization, but is achieved by the inherent dynamics of the stochastically firing WTA circuit.

in an online fashion [20]. Its sampling is carried out step by step, i.e. it generates through each spike a new sample from the network state space, taking only the previous time step sample into account. Furthermore this forward sampling requires no additional computational organization, but is achieved by the inherent dynamics of the stochastically firing WTA circuit.

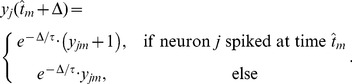

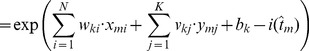

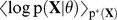

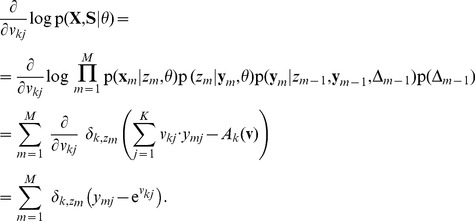

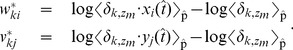

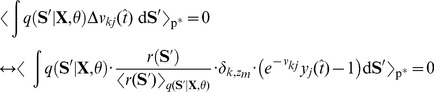

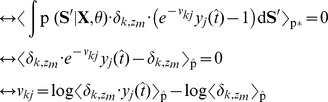

STDP instantiates a stochastic approximation to EM parameter learning

Formulating the network dynamics in terms of a probabilistic model is beneficial for two reasons: First, it gives rise to a better understanding of the network dynamics by relating it to samples from the HMM state space. Second, the underlying model allows us to derive parameter estimation algorithms and to compare them with biological mechanisms for synaptic plasticity. For the HMM, this approach results in an instantiation of the EM algorithm [19], [30] in a network of spiking neurons (stochastic WTA circuit). In the Methods section we derive this algorithm for the WTA circuit and show that the M-step evaluates to weight updates that need to be applied whenever neuron  emits a spike at time

emits a spike at time  , according to

, according to

| (7) |

where  is a positive constant that controls the learning rate. Note that the update rules for the feedforward and the recurrent connections are identical, and thus all excitatory synapses in the network are handled uniformly. These plasticity rules (7) are equivalent to the updates that previously emerged as theoretically optimal synaptic weight changes, for learning to recognize repeating high-dimensional patterns in spike trains from afferent neurons, in related studies [13], [32], [33]. The update rules consist of two parts: A Hebbian long-term potentiating (LTP) part that depends on presynaptic activity and a constant depression term. The dependence on the EPSP time courses (2) makes the first part implicitly dependent on the history of presynaptic spikes. The STDP window is shown in Fig. 1C for

is a positive constant that controls the learning rate. Note that the update rules for the feedforward and the recurrent connections are identical, and thus all excitatory synapses in the network are handled uniformly. These plasticity rules (7) are equivalent to the updates that previously emerged as theoretically optimal synaptic weight changes, for learning to recognize repeating high-dimensional patterns in spike trains from afferent neurons, in related studies [13], [32], [33]. The update rules consist of two parts: A Hebbian long-term potentiating (LTP) part that depends on presynaptic activity and a constant depression term. The dependence on the EPSP time courses (2) makes the first part implicitly dependent on the history of presynaptic spikes. The STDP window is shown in Fig. 1C for  -shaped EPSPs. Potentiation is triggered when the postsynaptic neuron fires after the presynaptic neuron. This term is commonly found in synaptic plasticity measured in biological neurons, and for common EPSP windows it closely resembles the shape of the pre-before-post part of standard forms of STDP [11], [12]. The dependence on the current value of the synaptic weight has a local stabilizing effect on the synapse. The depressing part of the update rule is triggered whenever the postsynaptic neuron fires independent of presynaptic activity. It contrasts LTP and assures that the synaptic weights stay globally in a bounded regime. It is shown in Fig. 4 of [13] that the simple rule (7) reproduces the standard form of STDP curves when it is applied with an intermediate pairing rate.

-shaped EPSPs. Potentiation is triggered when the postsynaptic neuron fires after the presynaptic neuron. This term is commonly found in synaptic plasticity measured in biological neurons, and for common EPSP windows it closely resembles the shape of the pre-before-post part of standard forms of STDP [11], [12]. The dependence on the current value of the synaptic weight has a local stabilizing effect on the synapse. The depressing part of the update rule is triggered whenever the postsynaptic neuron fires independent of presynaptic activity. It contrasts LTP and assures that the synaptic weights stay globally in a bounded regime. It is shown in Fig. 4 of [13] that the simple rule (7) reproduces the standard form of STDP curves when it is applied with an intermediate pairing rate.

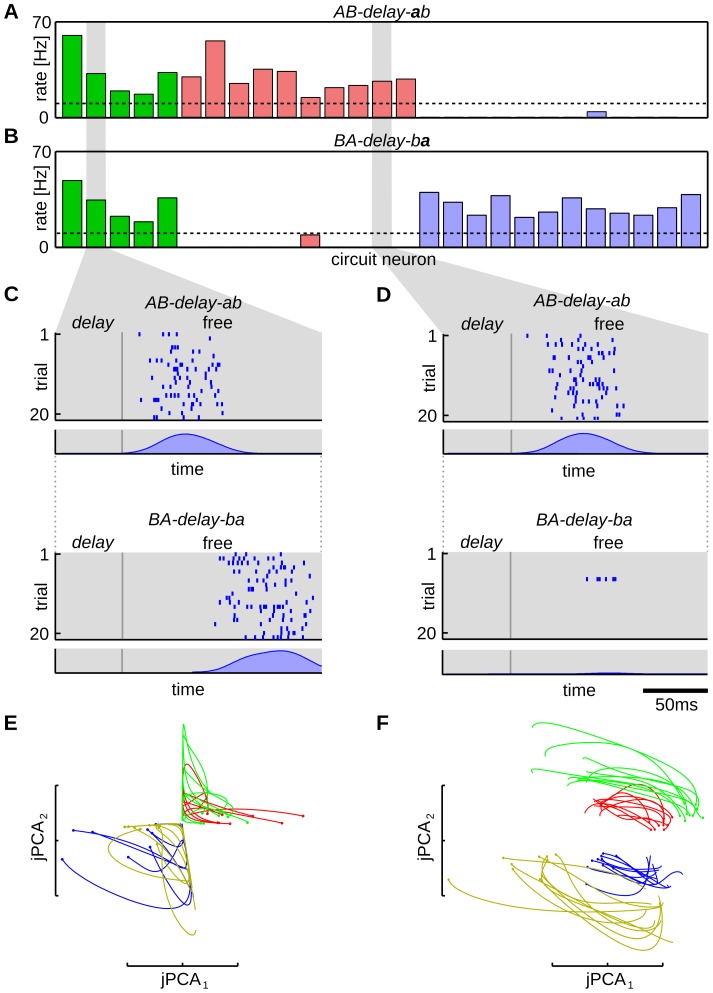

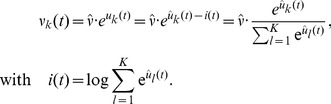

Figure 4. Mixed selectivity in networks of multiple interconnected WTA circuits.

(A,B) Mean firing rate of the circuit neurons for evoked activity during pattern a in sequence AB-delay-ab (A) and BA-delay-ba (B). A threshold of 10 Hz (dashed line) was used to distinguish between neurons that were active or inactive during the pattern. Firing rates of neurons that were not context selective are shown in green, that of neurons selective for starting sequences AB and BA are shown in red and blue, respectively. Neurons that did not fall in one of these groups are not shown. Spike trains of one context selective (C) and one non-selective (D) neuron are presented for spontaneous completion of sequence AB-delay-ab (upper) and BA-delay-ba (lower) (cue phase is not shown). Spike raster plots over 20 trial runs and corresponding averaged neural activity (PETH) are shown. The two neurons encode the input on different levels of abstraction. The neuron in panel (D) shows context cell behavior, since it encodes pattern a only if it occurs in the context of sequence ab. During ba it remains (almost) perfectly silent. The neuron in (C) is not context selective, but nevertheless fires reliably during the time slot of pattern a during the free run by integrating information from other (context selective) neurons. It belongs to a WTA circuit with 15 neurons, for which the network state projection is shown in panel (E). (E,F) Linear projection of the network activity during the delay phase to the first two components of the jPCA, for a single WTA circuit with 15 neurons (E) and for the whole network (F). 10 trajectories are plotted for each sequence (AB-delay-ab red, BA-delay-ba green, CD-delay-cd blue, DC-delay-dc yellow). The dots at the beginning of each line, indicate the onsets of the delay state, i.e. the beginning of the trajectories. The plots have arbitrary scale. The projection of the WTA circuit in (E) does not allow a linear separation between all four sequences, whereas the activity of the whole network (F) clusters into four sequence-specific regions. The network neurons use this state representation to modulate their behavior during spontaneous activity.

While these M-step updates emerge as exact solutions for the underlying HMM, the WTA circuit implements an approximation of the E-step, using forward sampling from the distribution in equation (5). In the following experiments we will first focus on this simple approximation, and analyze what computational function emerges in the network using the STDP updates (7) without any third signal related to reward or a “teacher”. In the last part of the Results section we will introduce a possible implementation of a refined approximation, and assess the advantages and disadvantages of this method.

Learning to predict spike sequences through STDP

In this section we show through computer simulations that our WTA circuits learn to encode the hidden state that underlies the input statistics via the STDP rule (7). We demonstrate this for a simple sequence memory task and analyze in detail how the hidden state underlying this task is represented in the network. The experimental paradigm reproduces the structure of object sequence memory tasks, where monkeys had to memorize a sequence of movements and reproduce it after a delay period [23], [24], [34], [35]. The task consisted of three phases: An initial cue phase, a delay phase and a recall phase. Each phase is characterized by a different input sequence, where the cue sequence defines the identity of the recall sequence. We used four cue/recall pairs in this experiment.

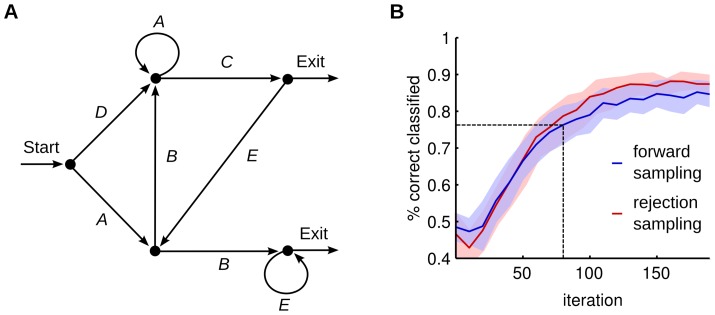

The structure of this task is illustrated in Fig. 2A. The graph represents a finite state grammar that can be used to generate symbol sequences by following a path from Start to Exit. In this first illustrative example the only stochastic decision is made at the beginning, randomly choosing one of the four cue phases with equal probabilities while the rest of the sequence is deterministic. On each arc that is passed, the symbol next to the arc is generated, e.g. AB-delay-ab is one possible symbolic sequence. Note that all symbols can appear in different temporal contexts, e.g. A appears in sequence AB-delay-ab and in BA-delay-ba. The delay symbol is completely unspecific since it appears in all four possible sequences. Therefore this task does not fulfill the Markov condition with respect to the input symbols, e.g. knowing that the current symbol is delay does not identify the next one as it might be any of a,b,c,d. Only additional knowledge about the temporal context of the symbol allows to uniquely identify the continuation of the sequence.

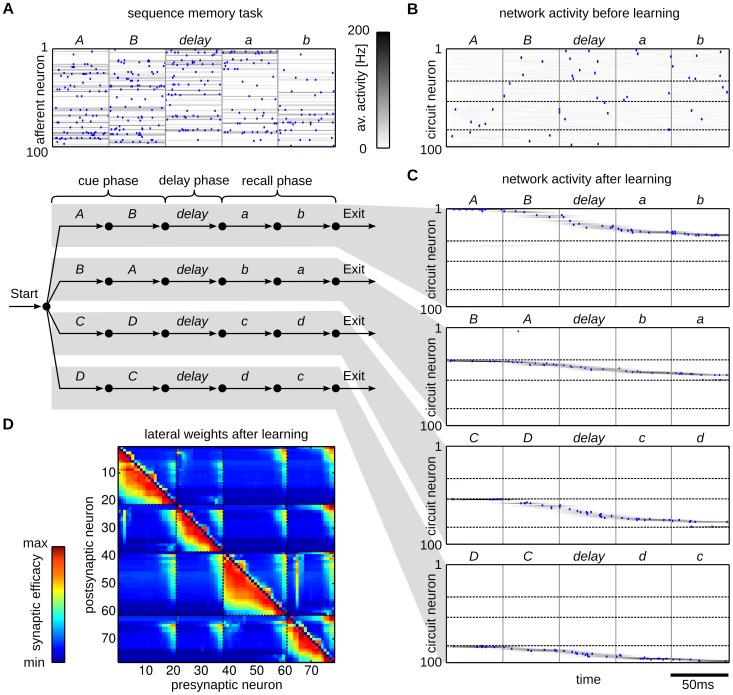

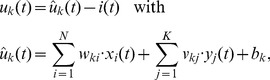

Figure 2. Emergence of working memory encoded in neural assemblies through weak HMM learning in a WTA circuit through STDP.

(A) Illustration of the input encoding for sequence AB-delay-ab. The upper plot shows one example input spike train (blue dots) plotted on top of the mean firing rate (100 out of 200 afferent neurons shown). The lower panel shows the finite state grammar graph that represents the simple working memory task. The graph can be used to generate symbol sequences by following any path from Start to Exit. In the first state (Start) a random decision is made, which of the four paths to take. This decision determines all arcs that are passed throughout the sequence. On each arc that is passed the symbol next to the arc is emitted (and provided as input to the WTA circuit in the form of some 200-dimensional rate pattern). (B,C) Evoked activity of the WTA circuit for one example input sequence before learning (B) and for each of the four sequences after learning (C). The network activity is averaged and smoothed over 100 trial runs (gray traces), the blue dots show the spiking activity for one trial run. The input sequences are labeled by their pattern symbols on top of each plot. The neurons are sorted by the time of their highest average activity over all four sequences, after learning. For each sequence a different assembly of neurons becomes active in the WTA circuit. Dotted black lines indicate the boundaries between assemblies. Since the 4 assemblies that emerged have virtually no overlap, the WTA circuit has recovered the structure of the hidden states that underlie the task. (D) The lateral weights  that emerged through STDP. The neurons are sorted using the same sorting algorithm as in (B,C). The black dotted lines correspond to assembly boundaries, neurons that fired on average less than one spike per sequence are not shown. Each neuron has learned to fire after a distinct set of predecessors, which reflects the sequential order of assembly firing. The stochastic switches between sequences are represented by enhanced weights between neurons active at the sequence onsets.

that emerged through STDP. The neurons are sorted using the same sorting algorithm as in (B,C). The black dotted lines correspond to assembly boundaries, neurons that fired on average less than one spike per sequence are not shown. Each neuron has learned to fire after a distinct set of predecessors, which reflects the sequential order of assembly firing. The stochastic switches between sequences are represented by enhanced weights between neurons active at the sequence onsets.

This additional knowledge can be represented in a hidden state that encodes the required information, which renders this task a simple example of a HMM. The hidden states of this HMM have to encode the input patterns and the temporal context in which they appear in order to maintain the Markov property throughout the sequences, e.g. a distinct state  encodes pattern B when it appears in sequence AB-delay-ab. The temporal structure of the hidden state can be related to the finite state grammar in Fig. 2A. The arcs of the grammar directly correspond to the hidden states, i.e. given knowledge about the currently visited arc allows us to complete the sequence. The symbols next to the arcs define the observation model, i.e. the most likely symbol throughout each state. In this simple symbolic HMM the observation model is in fact deterministic, since exactly one symbol is allowed in each state.

encodes pattern B when it appears in sequence AB-delay-ab. The temporal structure of the hidden state can be related to the finite state grammar in Fig. 2A. The arcs of the grammar directly correspond to the hidden states, i.e. given knowledge about the currently visited arc allows us to complete the sequence. The symbols next to the arcs define the observation model, i.e. the most likely symbol throughout each state. In this simple symbolic HMM the observation model is in fact deterministic, since exactly one symbol is allowed in each state.

In the neural implementation of this task, the symbolic sequences are presented to the WTA circuit encoded by afferent spike trains. Every symbol A,B,C,D,a,b,c,d,delay is represented by a rate pattern with fixed length of 50 ms, during which each afferent neuron emits spikes with a symbol-specific, fixed Poisson rate (see Methods). One example input spike train encoding the symbolic sequence AB-delay-ab is shown in the top panel of Fig. 2A. The input spike times are not kept fixed but newly drawn for each pattern presentation. This input encoding adds extra variability to the task, which is not directly reflected by the simple symbolic finite state grammar. Still, the statistics underlying the input sequences  follow the dynamics of a HMM of the form (4), and therefore our WTA circuit and the spike trains that encode sequences generated by the artificial grammar share a common underlying model.

follow the dynamics of a HMM of the form (4), and therefore our WTA circuit and the spike trains that encode sequences generated by the artificial grammar share a common underlying model.

The observation model  of that HMM covers the uncertainty induced by the noisy rate patterns by assigning a certain likelihood to each observed input activation

of that HMM covers the uncertainty induced by the noisy rate patterns by assigning a certain likelihood to each observed input activation  . The hidden state representation has to encode the context-dependent symbol identity and the temporal structure of the sequences, i.e. the duration of each individual symbol. In our continuous-time formulation the hidden state is updated at the time points

. The hidden state representation has to encode the context-dependent symbol identity and the temporal structure of the sequences, i.e. the duration of each individual symbol. In our continuous-time formulation the hidden state is updated at the time points  . Therefore, throughout the presentation of a rate pattern of 50 ms length, several state updates are encountered during which the hidden state has to be maintained. In principle this can be done by allowing each hidden state to persist over multiple update steps by assigning non-zero probabilities to

. Therefore, throughout the presentation of a rate pattern of 50 ms length, several state updates are encountered during which the hidden state has to be maintained. In principle this can be done by allowing each hidden state to persist over multiple update steps by assigning non-zero probabilities to  . However, this approach is well known to result in a poor representation of time as it induces an exponential distribution over the state durations, which is inappropriate in most physical systems and obviously also for the case of deterministic pattern lengths, considered here [17], [19]. The accuracy of the model can be increased at the cost of a larger state space by introducing intermediate states, e.g. by representing pattern B in sequence AB-delay-ab by an assembly of states

. However, this approach is well known to result in a poor representation of time as it induces an exponential distribution over the state durations, which is inappropriate in most physical systems and obviously also for the case of deterministic pattern lengths, considered here [17], [19]. The accuracy of the model can be increased at the cost of a larger state space by introducing intermediate states, e.g. by representing pattern B in sequence AB-delay-ab by an assembly of states  that form an ordered state sequence throughout the pattern presentation. Each of these assemblies encodes a specific input pattern, the temporal context and its sequential structure throughout the pattern, and with sufficiently large assemblies the temporal resolution of the model achieves reasonable accuracy. We found that this coding strategy emerges unsupervised in our WTA circuits through the STDP rule (7).

that form an ordered state sequence throughout the pattern presentation. Each of these assemblies encodes a specific input pattern, the temporal context and its sequential structure throughout the pattern, and with sufficiently large assemblies the temporal resolution of the model achieves reasonable accuracy. We found that this coding strategy emerges unsupervised in our WTA circuits through the STDP rule (7).

To show this, we trained a WTA circuit with  afferent cells and

afferent cells and  circuit neurons by randomly presenting input spike sequences until convergence. In this experiment, the patterns were presented as a continuous stream of input spikes, without intermediate pauses or resetting the network activity at the beginning of the sequences. Training started from random initial weights, and therefore the observation and prediction model had to be learned from the presented spike sequences. Prior to learning the neural activity was unspecific to the patterns and their temporal context (see Fig. 2B). Fig. 2C shows the evoked activities for all four sequences after training. The output of the network is represented by the perievent time histogram (PETH) averaged over 100 trial runs and a single spike train that is plotted on top. To simplify the interpretation of the network output we sorted the neurons according to their preferred firing times (see Methods). Each sequence is encoded by a different assembly of neurons. This reflects the structure of the hidden state that underlies the task. Since the input is presented as continuous spike train, the network has also learned intermediate states that represent a gradual blending between patterns. About 25 neurons were used to encode the information required to represent the hidden state of each sequence.

circuit neurons by randomly presenting input spike sequences until convergence. In this experiment, the patterns were presented as a continuous stream of input spikes, without intermediate pauses or resetting the network activity at the beginning of the sequences. Training started from random initial weights, and therefore the observation and prediction model had to be learned from the presented spike sequences. Prior to learning the neural activity was unspecific to the patterns and their temporal context (see Fig. 2B). Fig. 2C shows the evoked activities for all four sequences after training. The output of the network is represented by the perievent time histogram (PETH) averaged over 100 trial runs and a single spike train that is plotted on top. To simplify the interpretation of the network output we sorted the neurons according to their preferred firing times (see Methods). Each sequence is encoded by a different assembly of neurons. This reflects the structure of the hidden state that underlies the task. Since the input is presented as continuous spike train, the network has also learned intermediate states that represent a gradual blending between patterns. About 25 neurons were used to encode the information required to represent the hidden state of each sequence.

This coding scheme installs different representations of the patterns depending on the temporal context they appeared in, e.g. the pattern delay within the sequence AB-delay-ab was represented by another assembly of neurons than the one in the sequence BA-delay-ba. Small assemblies of about five neurons became tuned for each pattern and temporal context. This sparse representation emerged through learning and is not merely a consequence of the inherent sparseness of the WTA dynamics. Prior to learning all WTA neurons are broadly tuned and show firing patterns that are unordered and nonspecific (see Fig. 2B). After learning their afferent synapses are tuned for specific input patterns, whereas the temporal contexts in which they appear are encoded in the excitatory lateral synapses. The latter can be seen by inspecting the synaptic weights  shown in Fig. 2D. They reflect the sparse code and also the sequential order in which the neurons are activated. They also learned to encode the stochastic transitions at the beginning of the cue phase, where randomly one of the four sequences is selected. These stochastic switches are reflected in increased strength of synapses that connect neurons activated at the end and the beginning of the sequences.

shown in Fig. 2D. They reflect the sparse code and also the sequential order in which the neurons are activated. They also learned to encode the stochastic transitions at the beginning of the cue phase, where randomly one of the four sequences is selected. These stochastic switches are reflected in increased strength of synapses that connect neurons activated at the end and the beginning of the sequences.

The behavior of the circuit is further examined in Fig. 3. The average network activity over 100 trial runs of the neurons that became most active during sequence AB-delay-ab are shown in Fig. 3A. In addition the spike trains for 20 trials are shown for three example neurons. The same sorting was applied as in Fig. 2. Using the hidden state encoded by the network it should be possible to predict the recall patterns after seeing the cue, if it correctly learned the input statistics. We demonstrate this by presenting incomplete inputs to the network. After presentation of the delay pattern the input was turned off and the network was allowed to run freely. The delay pattern was played three times longer than in the training phase  . During this time the network was required to store its current state (the identity of the cue sequence). After this delay time the input was turned off – no spikes were generated by the afferent neurons during this phase, the network was purely driven by the lateral connections. Since the delay time was much longer than the EPSP windows the network had to keep track of the sequence identity in its activity pattern throughout this time to solve the task. Fig. 3B shows the output behavior of the network for sequence AB-delay-free (where free denotes a

. During this time the network was required to store its current state (the identity of the cue sequence). After this delay time the input was turned off – no spikes were generated by the afferent neurons during this phase, the network was purely driven by the lateral connections. Since the delay time was much longer than the EPSP windows the network had to keep track of the sequence identity in its activity pattern throughout this time to solve the task. Fig. 3B shows the output behavior of the network for sequence AB-delay-free (where free denotes a  time window with no external input). After the initial sequence AB was presented, a small assembly of neurons became active that represents the delay pattern that was associated with that specific sequence. After the delay pattern was turned off, the network completed the hidden state sequence using its memorized activity, which can be seen by comparing the evoked and spontaneous spike trains in Fig. 3A and B, respectively.

time window with no external input). After the initial sequence AB was presented, a small assembly of neurons became active that represents the delay pattern that was associated with that specific sequence. After the delay pattern was turned off, the network completed the hidden state sequence using its memorized activity, which can be seen by comparing the evoked and spontaneous spike trains in Fig. 3A and B, respectively.

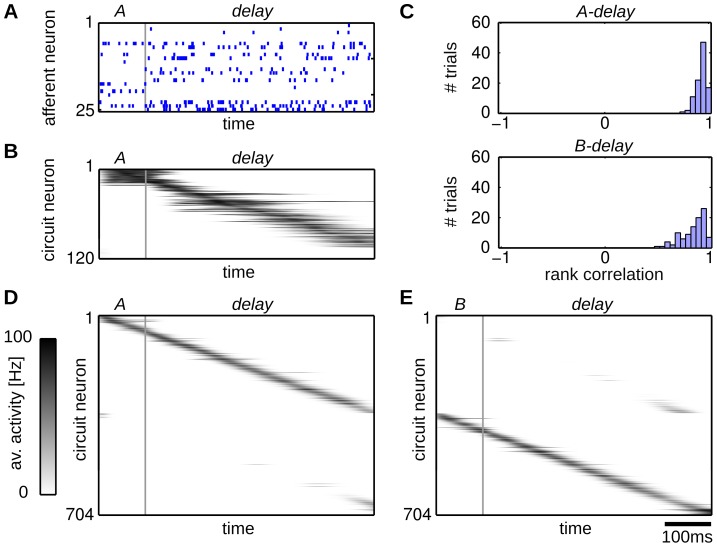

Figure 3. Spontaneous replay of pattern sequences.

(A,B) The output behavior of a trained network for sequence AB-delay-ab. The network input is indicated by pattern symbols on top of the plot and pattern borders (gray vertical lines). (A) The average firing behavior of the network during evoked activity. The 30 circuit neurons that showed highest activity for this sequence are shown. The remaining neurons were almost perfectly silent. The network activity is averaged over 100 trial runs and neurons are sorted by the time of maximum average activity. Detailed spiking activities for three example neurons that became active after the delay pattern are shown. Each plot shows 20 example spike trains. (B) Spontaneous completion of sequence AB-delay-free. After presenting the cue sequence AB and the delay pattern for 150 ms the afferent input was turned off, letting the network run driven solely by lateral connections. During this spontaneous activity, the neurons are activated in the same sequential order as in the evoked trials. Detailed spiking activity is shown for the same three example neurons as in (A). (C) Histograms of the rank order correlation between the evoked and spontaneous network activity for all four sequences, computed over 100 trial runs. The sequential order of neural firing is reliably reproduced during the spontaneous activity and thus the structure of the hidden state is correctly completed.

In order to quantify the ability of the network to reproduce the structure of the hidden state, we evaluated the similarity between the spontaneous and evoked network activity using the rank order correlation coefficient, which is a similarity measure normalized between  and

and  , where

, where  means that the order is perfectly preserved. This measure has been previously proposed to detect stereotypical temporal order in neural firing patterns [6]. Fig. 3C shows the histograms over the correlation coefficients for all four sequences. The histograms were created by calculating the rank order correlation between the spontaneous sequences and the PETH of the evoked sequences. It can be seen that the temporal order of the evoked sequence was reliably reproduced during the free run. To that end, for each of the input sequences, a stable representation has been trained into the network, that is encoded in the lateral synapses. This structure emerged completely unsupervised using the local STDP rule, solely from the intrinsic dynamics of the network.

means that the order is perfectly preserved. This measure has been previously proposed to detect stereotypical temporal order in neural firing patterns [6]. Fig. 3C shows the histograms over the correlation coefficients for all four sequences. The histograms were created by calculating the rank order correlation between the spontaneous sequences and the PETH of the evoked sequences. It can be seen that the temporal order of the evoked sequence was reliably reproduced during the free run. To that end, for each of the input sequences, a stable representation has been trained into the network, that is encoded in the lateral synapses. This structure emerged completely unsupervised using the local STDP rule, solely from the intrinsic dynamics of the network.

Mixed selectivity emerges in multiple interconnected WTA circuits

The first experiment demonstrated that through STDP, single neurons of a WTA circuit get tuned for distinct input patterns and the temporal context in which they appear. The neural code that emerged is reminiscent of some features found in cortical activity of monkeys solving similar tasks, namely the emergence of context cells that respond specifically to certain symbols when they appear in a specific temporal context [34], [36], [37]. However, the overall competition of a single WTA circuit hinders the building of codes for more abstract features, which are also found in the cortex in the very same experiments where neurons in the same cortical area encode different functional aspects of stimuli and actions. They seem to integrate information on different levels of abstraction which results in a diverse and rich neural code, where close-by neurons are often tuned to different task-related features [25].

We show that our model reproduces this mixed selectivity of cortical neurons if multiple interconnected WTAs are trained on a common input. The strong competition is restricted to neurons within every single WTA, whereas there is no competition between neurons of different circuits and lateral connections allow full information exchange between the circuits. Therefore, the model is extended by splitting the network into smaller WTA groups, each of which receives input from a distinct inhibitory feedback loop that implements competition between members of that group. In addition all neurons receive lateral excitatory input from the whole network. Every WTA group still follows the dynamics of a forward sampler for a HMM. Each of these WTA circuits adapts its synaptic weights through STDP to best represent the observed input spike sequences. In addition, the lateral connections between WTA groups introduce a coupling between the network states of individual groups. The dynamics of the whole network of WTA circuits can be understood as a forward sampler for a coupled HMM [38], where every WTA group encodes one multinomial variable of a compound state such that from one time step to the next all single state variables have influence on each other [20], [38].

In the first experiment we have seen that the WTA circuit learned to use about  of the available neurons to encode each of the four sequences. We have also seen that the network used small assemblies of neurons to represent each of the patterns in favor of a finer temporal resolution. This implies that WTA circuits of different size can learn to decode the input sequence on different levels of detail, where small circuits only learn the most salient features of the input sequences. To show this we trained a network with

of the available neurons to encode each of the four sequences. We have also seen that the network used small assemblies of neurons to represent each of the patterns in favor of a finer temporal resolution. This implies that WTA circuits of different size can learn to decode the input sequence on different levels of detail, where small circuits only learn the most salient features of the input sequences. To show this we trained a network with  WTA groups of random size between 10 and 50 units, giving a total network size of

WTA groups of random size between 10 and 50 units, giving a total network size of  , on the simple object sequence memory task (Fig. 2A). The neural code that emerges in this network after training is shown in Fig. 4. The output rates of the circuit neurons were measured during the presentation of pattern a appearing in the sequence AB-delay-ab, BA-delay-ba, shown in Fig. 4A,B respectively. Three classes of neurons can be distinguished: 10 neurons were tuned to pattern a in the context AB-delay-ab only (shown in red), 12 neurons were tuned to pattern a exclusievly in the context BA-delay-ba (shown in blue) and 5 additional neurons encode pattern a independent of its context (green), i.e. they get activated by the pattern a in both sequences AB-delay-ab and BA-delay-ba. The remaining neurons were not significantly tuned for pattern a (average firing rate during pattern a was less than

, on the simple object sequence memory task (Fig. 2A). The neural code that emerges in this network after training is shown in Fig. 4. The output rates of the circuit neurons were measured during the presentation of pattern a appearing in the sequence AB-delay-ab, BA-delay-ba, shown in Fig. 4A,B respectively. Three classes of neurons can be distinguished: 10 neurons were tuned to pattern a in the context AB-delay-ab only (shown in red), 12 neurons were tuned to pattern a exclusievly in the context BA-delay-ba (shown in blue) and 5 additional neurons encode pattern a independent of its context (green), i.e. they get activated by the pattern a in both sequences AB-delay-ab and BA-delay-ba. The remaining neurons were not significantly tuned for pattern a (average firing rate during pattern a was less than  , not shown in the plot).

, not shown in the plot).

To pinpoint the computational function that emerged in the network we compared the spontaneous activity of individual neurons from different WTA circuits. Spike trains for one context-specific and one non-specific neuron are compared in Fig. 4C and D, respectively. Both panels show spike raster plots over 20 trial runs and averaged neuron activities (PETH) for sequences AB-delay-free and BA-delay-free. The neuron in Fig. 4C belongs to a small WTA group with a total size of 15 neurons and shows context unspecific behavior, whereas the neuron in Fig. 4D which belongs to a larger WTA group (42 neurons) is context specific (see Fig. 4A,B). This behavior is also reproduced during the free run, when the neurons are only driven by their lateral synapses. The neuron in Fig. 4D remains silent during BA-delay-free and thus shows the properties of context cells observed in the cortex, whereas the neuron in Fig. 4C is active during both sequences. Still, during spontaneous replay that neuron correctly reproduces the temporal structure of the input sequences. In sequences starting with AB the neural activity peaks at  after the onset of the free run – the time pattern a was presented in the evoked phase. If the sequence starts with BA this behavior is modulated and the activity is delayed by roughly 50 ms, to the time point a would appear in the recall phase. The required information to control this modulation was not available within the small WTA group the neuron belongs to, but provided by neighboring context-specific neurons from other groups.

after the onset of the free run – the time pattern a was presented in the evoked phase. If the sequence starts with BA this behavior is modulated and the activity is delayed by roughly 50 ms, to the time point a would appear in the recall phase. The required information to control this modulation was not available within the small WTA group the neuron belongs to, but provided by neighboring context-specific neurons from other groups.

To see this we trained a linear classifier on the evoked activity during the delay phase of AB-delay-ab and BA-delay-ba (see Methods for details). If the neurons reliably encode the sequence identity a separating plane should divide the  -dimensional space of network activities between the sequences. Training the classifier only on the 15-dimensional state space of the group the neuron in Fig. 4C belongs to, did not reveal such a plane (the classification performance was

-dimensional space of network activities between the sequences. Training the classifier only on the 15-dimensional state space of the group the neuron in Fig. 4C belongs to, did not reveal such a plane (the classification performance was  ). Therefore, this small WTA circuit did not encode the required memory item to distinguish between the two sequences after the delay phase. However, the whole network of all WTA groups reliably encoded this information and the classifier trained on the

). Therefore, this small WTA circuit did not encode the required memory item to distinguish between the two sequences after the delay phase. However, the whole network of all WTA groups reliably encoded this information and the classifier trained on the  -dimensional state space could distinguish between the delay phases of AB-delay-ab and BA-delay-ba with

-dimensional state space could distinguish between the delay phases of AB-delay-ab and BA-delay-ba with  accuracy.

accuracy.

To illustrate the different emergent representations, we compared linear projections of the state of the small WTA group with 15 neurons and the state of the whole network in Fig. 4E,F, respectively. The plots show the network activity during the delay phase for all four sequences. Each line corresponds to a trajectory of the evoked network activity, where the line colors indicate the sequence identity. The state trajectories were projected onto the first two dimensions of the dynamic principal component analysis (jPCA), that was recently introduced as an alternative to normal PCA that is applicable to data with rotational dynamics [39]. Empirically, we found this analysis method superior to normal PCA in finding linear projections that separate the network states for different input sequences. One explanation for this lies in the dynamical properties of WTA circuits. Due to the global normalization which induces a constant network rate, the dynamics of the network are roughly energy-preserving. Since this implies that the corresponding linear dynamical system is largely non-expanding/contracting, a method that identifies purely rotational dynamics such as the jPCA was found to be beneficial here.

Fig. 4E shows the first two jPCA components of the neural activities during the delay phase for the WTA circuit with 15 neurons, which the neuron in Fig. 4C belongs to. This circuit was not able to distinguish between all four input sequences, since it activated the same neurons to encode them. This is also reflected in the jPCA projections shown in Fig. 4E, which show a large overlap for sequences AB-delay-ab and BA-delay-ba. On the other hand, the network state comprising all  neurons reliably encoded the sequence identities (see Fig. 4F). The delay state for each sequence spans an area in the 2-D projection and therefore the network found a state space that allows a linear separation between the sequences. Such a representation is important since the neuron model employs a linear combination of the network state in the membrane dynamics (1) and therefore provides the information required by the neurons in Fig. 4C,D to modulate their spontaneous behavior.

neurons reliably encoded the sequence identities (see Fig. 4F). The delay state for each sequence spans an area in the 2-D projection and therefore the network found a state space that allows a linear separation between the sequences. Such a representation is important since the neuron model employs a linear combination of the network state in the membrane dynamics (1) and therefore provides the information required by the neurons in Fig. 4C,D to modulate their spontaneous behavior.

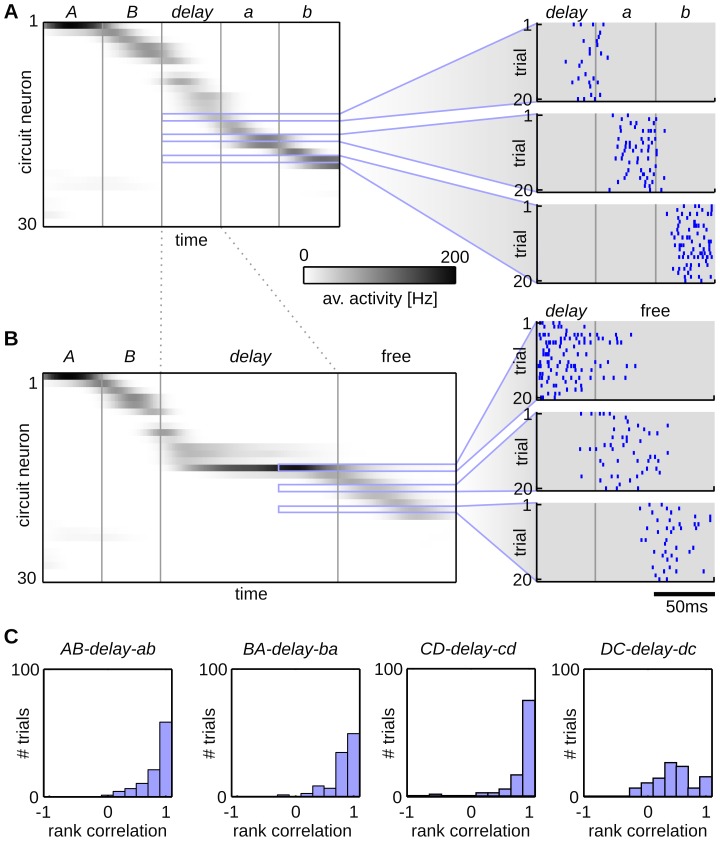

Trajectories in network assemblies emerge for stationary input patterns

Information about transient stimuli is often kept available over long time spans in trajectories of neural activity in the mammalian cortex [5], [7], [26], [40] and in songbirds [41]–[43]. In the previous experiment we saw that our model is in principle capable to develop such trajectories in neural assemblies (see Fig. 3B), which emerged to encode salient input patterns and the temporal structure throughout them. However, in that experiment the input sequences comprised a rich temporal structure, since each pattern was only shown for a  time bin which might have facilitated the development of these activity patterns. In this section we study whether a similar behavior also emerges when the input signal is stationary over long time spans.

time bin which might have facilitated the development of these activity patterns. In this section we study whether a similar behavior also emerges when the input signal is stationary over long time spans.

In analogy to the previous experiment we generated two input sequences A-delay and B-delay. The patterns A, B were played for  and the pattern delay for 500 ms. As in all other experiments, the patterns were rate patterns, i.e. each input neuron fired with a constant Poisson rate during the pattern and spike times were not kept fixed throughout trials. One example input spike train is shown in Fig. 5A.

and the pattern delay for 500 ms. As in all other experiments, the patterns were rate patterns, i.e. each input neuron fired with a constant Poisson rate during the pattern and spike times were not kept fixed throughout trials. One example input spike train is shown in Fig. 5A.

Figure 5. Neural trajectories emerge for stationary input patterns.

(A) A network was trained with an extended delay phase of 500 ms. Input spike trains of a single run for sequence A-delay (25 out of 100 afferent neurons). Throughout the delay phase the afferent neurons fire with fixed stationary Poisson rates. (B) The output behavior for sequence A-delay averaged over 100 trial runs. The circuit neurons are sorted according to their mean firing time within the sequences (120 out of 704 neurons are shown). (C) Histograms of the rank order correlation between the evoked and spontaneous network activity. The sequential order of neural firing is preserved during spontaneous activity. (D,E) Homeostatic plasticity enhances the formation of this sequential structure. The output behavior of the network trained with STDP and the homeostatic plasticity mechanism is shown. Approximately 50% of the neurons encode each of the two sequence. The neurons learn to fire at a specific point in time within the delay patterns, building up stable trajectories.

Although the input was stationary for  during the delay pattern, we could still observe the emergence of neural trajectories in the network after training. Again, we used a network composed of multiple interconnected WTA circuits to learn these patterns. We employed a network of

during the delay pattern, we could still observe the emergence of neural trajectories in the network after training. Again, we used a network composed of multiple interconnected WTA circuits to learn these patterns. We employed a network of  WTA groups of random size in the range from 10 to 100 neurons. The total network had a size of

WTA groups of random size in the range from 10 to 100 neurons. The total network had a size of  circuit neurons and we used

circuit neurons and we used  afferent cells. Fig. 5B shows the sorted average output activity after training. For each of the two sequences a distinct assembly of neurons emerged and the neurons composing these assemblies fired in a distinct sequential order. Fig. 5C shows the rank order correlations between the evoked and spontaneous activities. The trajectories of neural firing were reliably reproduced during spontaneous activity, but only about 100 neurons were used for each of the two assemblies, leaving the remaining 500 neurons (almost) perfectly silent.

afferent cells. Fig. 5B shows the sorted average output activity after training. For each of the two sequences a distinct assembly of neurons emerged and the neurons composing these assemblies fired in a distinct sequential order. Fig. 5C shows the rank order correlations between the evoked and spontaneous activities. The trajectories of neural firing were reliably reproduced during spontaneous activity, but only about 100 neurons were used for each of the two assemblies, leaving the remaining 500 neurons (almost) perfectly silent.

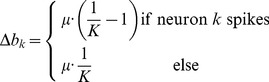

The emergence of these trajectories can be further enhanced using a homeostatic intrinsic plasticity mechanism which enforces that on average all network neurons participate equally in the representation of the hidden state. This can be achieved by a mechanism that regulates the excitability  of each neuron, such that the overall output rate

of each neuron, such that the overall output rate  of neuron

of neuron  (measured over a long time window) converges to a given target rate