Abstract

A systematic review is a summary of existing evidence that answers a specific clinical question, contains a thorough, unbiased search of the relevant literature, explicit criteria for assessing studies and structured presentation of the results. A systematic review that incorporates quantitative pooling of similar studies to produce an overall summary of treatment effects is a meta-analysis. A systematic review should have clear, focused clinical objectives containing four elements expressed through the acronym PICO (Patient, group of patients, or problem, an Intervention, a Comparison intervention and specific Outcomes). An explicit and thorough search of the literature is a prerequisite of any good systematic review. Reviews should have pre-defined explicit criteria for which studies will be included, and the analysis should include only those studies that fit the inclusion criteria. The quality (risk of bias) of the primary studies should be critically appraised. In particular, the role of publication and language bias should be acknowledged and addressed by the review whenever possible. Structured reporting of the results, with quantitative pooling of the data, should be attempted whenever appropriate. The review should include interpretation of the data, including implications for clinical practice and further research. Overall, the current quality of reporting of systematic reviews remains highly variable.

Keywords: Bias, meta-analysis, number needed to treat, publication bias, randomized controlled trials, systematic review

Introduction

A systematic review is a summary of existing evidence that answers a specific clinical question, contains a thorough, unbiased search of the relevant literature, explicit criteria for assessing studies and structured presentation of the results. A systematic review can be distinguished from a narrative review because it will have explicitly stated objectives (the focused clinical question), materials (the relevant medical literature) and methods (the way in which studies are assessed and summarized).[1,2] A systematic review that incorporates quantitative pooling of similar studies to produce an overall summary of treatment effects is a meta-analysis.[1,2] Meta-analysis may allow recognition of important treatment effects by combining the results of small trials that individually might lack the power to consistently demonstrate differences among treatments.[1]

With over 200 speciality dermatology journals being published, the amount of data published just in the dermatologic literature exceeds our ability to read it.[3] Therefore, keeping up with the literature by reading journals is an impossible task. Systematic reviews provide a solution to handle information overload for practicing physicians.

Criteria for reporting systematic reviews have been developed by a consensus panel first published as Quality of Reporting of Meta-analyses (QUOROM) and later refined as Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA).[4,5] This detailed, 27-item checklist contains items that should be included and reported in high quality systematic reviews and meta-analyses. The methods for understanding and appraising systematic reviews and meta-analyses presented in this paper are a subset of the PRISMA criteria.

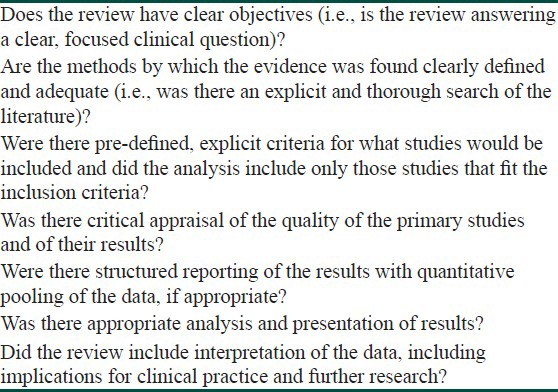

The items that are the essential features of a systematic review include having clear objectives, explicit criteria for study selection, an assessment of the quality of included studies, criteria for which studies can be combined, appropriate analysis and presentation of results and practical conclusions that are based on the evidence evaluated [Table 1]. Meta-analysis is only appropriate if the included studies are conceptually similar. Meta-analyses should only be conducted after a systematic review.[1,6]

Table 1.

Criteria for evaluating a systematic review or meta-analysis

A Systematic Review Should Have Clear, Focused Clinical Objectives

A focused clinical question for a systematic review should contain the same four elements used to formulate well-built clinical questions for individual studies, namely a Patient, group of patients, or problem, an Intervention, a Comparison intervention and specific Outcomes.[7] These features can be remembered by the acronym PICO. The interventions and comparison interventions should be adequately described so that what was done can be reproduced in future studies and in practice. For diseases with established effective treatments, comparisons of new treatments or regimens to established treatments provide the most useful information. The outcomes reported should be those that are most relevant to physicians and patients.[1]

Explicit and Thorough Search of the Literature

A key question to ask of a systematic review is: “Is it unlikely that important, relevant studies were missed?” A sound systematic review can be performed only if most or all of the available data are examined. An explicit and thorough search of the literature should be performed. It should include searching several electronic bibliographic databases including the Cochrane Controlled Trials Registry, which is part of the Cochrane Library, Medline, Embase and Literatura Latino Americana em Ciências da Saúde. Bibliographies of retrieved studies, review articles and textbooks should be examined for studies fitting inclusion criteria. There should be no language restrictions. Additional sources of data include scrutiny of citation lists in retrieved articles, hand-searching for conference reports, prospective trial registers (e.g., clinicaltrials.gov for the USA and clinicaltrialsregister.eu for the European Union) and contacting key researchers, authors and drug companies.[1,8]

Reviews should have Pre-defined Explicit Criteria for what Studies would be Included and the Analysis should Include Only those Studies that Fit the Inclusion Criteria

The overwhelming majority of systematic reviews involve therapy. Randomized, controlled clinical trials should therefore be used for systematic reviews of therapy if they are available, because they are generally less susceptible to selection and information bias in comparison with other study designs.[1,9]

Systematic reviews of diagnostic studies and harmful effects of interventions are increasingly being performed and published. Ideally, diagnostic studies included in systematic reviews should be cohort studies of representative populations. The studies should include a criterion (gold) standard test used to establish a diagnosis that is applied uniformly and blinded to the results of the test(s) being studied.[1,9]

Randomized controlled trials can be included in systematic reviews of studies of adverse effects of interventions if the events are common. For rare adverse effects, case-control studies, post-marketing surveillance studies and case reports are more appropriate.[1,9]

The Quality (Risk of Bias) of the Primary Studies should be Critically Appraised

The risk of bias of included therapeutic trials is assessed using the criteria that are used to evaluate individual randomized controlled clinical trials. The quality criteria commonly used include concealed, random allocation; groups similar in terms of known prognostic factors; equal treatment of groups; blinding of patients, researchers and analyzers of the data to treatment allocation and accounting for all patients entered into the trial when analyzing the results (intention-to-treat design).[1] Absence of these items has been demonstrated to increase the risk of bias of systematic reviews and to exaggerate the treatment effects in individual studies.[10]

Structured Reporting of the Results with Quantitative Pooling of the Data, if Appropriate

Systematic reviews that contain studies that have results that are similar in magnitude and direction provide results that are most likely to be true and useful. It may be impossible to draw firm conclusions from systematic reviews in which studies have results of widely different magnitude and direction.[1,9]

Meta-analysis should only be performed to synthesize results from different trials if the trials have conceptual homogeneity.[1,6,9] The trials must involve similar patient populations, have used similar treatments and have measured results in a similar fashion at a similar point in time.

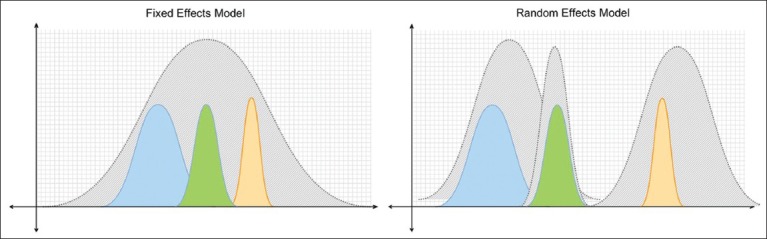

Once conceptual homogeneity is established and the decision to combine results is made, there are two main statistical methods by which results are combined: random-effects models (e.g., DerSimonian and Laird) and fixed-effects models (e.g., Peto or Mantel-Haenszel).[11] Random-effects models assume that the results of the different studies may come from different populations with varying responses to treatment. Fixed-effects models assume that each trial represents a random sample of a single population with a single response to treatment [Figure 1]. In general, random-effects models are more conservative (i.e., random-effects models are less likely to show statistically significant results than fixed-effects models). When the combined studies have statistical homogeneity (i.e., when the studies are reasonably similar in direction, magnitude and variability), random-effects and fixed-effects models give similar results.
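The difference between the two pooling approaches can be illustrated with a minimal sketch. It assumes generic inverse-variance weighting for the fixed-effects estimate (a simpler stand-in for the Peto or Mantel-Haenszel methods named above) and the DerSimonian and Laird moment estimator for the between-study variance. The log odds ratios and variances are hypothetical values chosen for illustration, not data from any trial.

```python
import math


def pool_fixed(effects, variances):
    """Inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))


def between_study_variance(effects, variances):
    """DerSimonian and Laird estimate of tau^2 (needs at least two studies)."""
    weights = [1.0 / v for v in variances]
    pooled, _ = pool_fixed(effects, variances)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


def pool_random(effects, variances):
    """Random-effects pooling: add tau^2 to every within-study variance."""
    tau2 = between_study_variance(effects, variances)
    return pool_fixed(effects, [v + tau2 for v in variances])


def confidence_interval_95(pooled, se):
    """Normal-approximation 95% confidence interval."""
    return pooled - 1.96 * se, pooled + 1.96 * se


# Hypothetical log odds ratios and their variances for four trials.
log_odds_ratios = [0.10, 0.80, -0.20, 1.10]
variances = [0.04, 0.09, 0.05, 0.16]

for label, pool in (("fixed", pool_fixed), ("random", pool_random)):
    estimate, se = pool(log_odds_ratios, variances)
    low, high = confidence_interval_95(estimate, se)
    print(f"{label}: OR {math.exp(estimate):.2f} "
          f"(95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

With these deliberately heterogeneous inputs the estimated between-study variance is greater than zero, so the random-effects interval is wider than the fixed-effects interval. When the inputs are homogeneous the between-study variance is estimated as zero and the two models coincide.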

Figure 1.

Fixed-effects models (a) assume that each trial represents a random sample (colored curves) of a single population with a single response to treatment. Random-effects models (b) assume that the different trials’ results (colored curves) may come from different populations with varying responses to treatment.

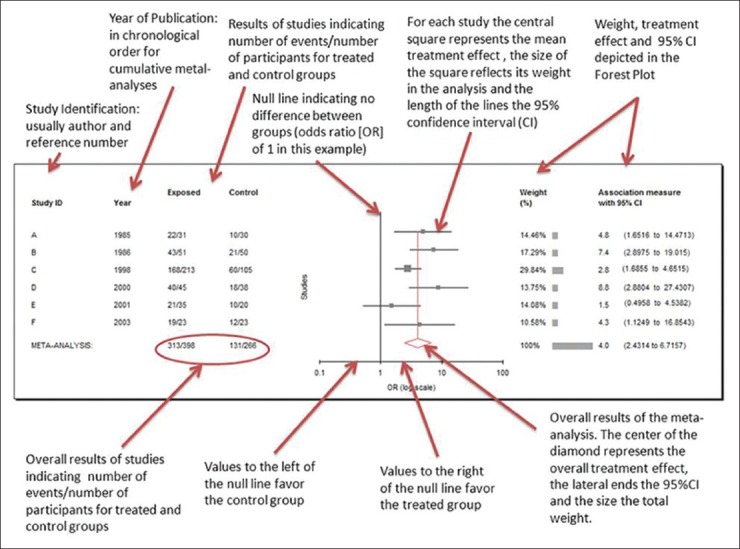

The point estimates and confidence intervals of the individual trials and the synthesis of all trials in meta-analysis are typically displayed graphically in a forest plot [Figure 2].[12] Results are most commonly expressed as the odds ratio (OR) of the treatment effect (i.e., the odds of achieving a good outcome in the treated group divided by the odds of achieving a good result in the control group) but can be expressed as risk differences (i.e., difference in response rate) or relative risk (probability of achieving a good outcome in the treated group divided by the probability in the control group). An OR of 1 (null) indicates no difference between treatment and control and is usually represented by a vertical line passing through 1 on the x-axis. An OR of greater or less than 1 implies that the treatment is superior or inferior to the control respectively.
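As a rough illustration of how the three effect measures relate, the sketch below computes each from the counts of a single two-arm trial. The function name and the counts are hypothetical, and a "good outcome" is assumed to be the event being counted.

```python
def effect_measures(treated_good, treated_total, control_good, control_total):
    """Odds ratio, relative risk and risk difference for a good outcome."""
    p_treated = treated_good / treated_total
    p_control = control_good / control_total
    odds_treated = p_treated / (1 - p_treated)
    odds_control = p_control / (1 - p_control)
    return {
        "odds_ratio": odds_treated / odds_control,
        "relative_risk": p_treated / p_control,
        "risk_difference": p_treated - p_control,
    }


# Hypothetical trial: 65 of 100 treated and 50 of 100 control patients respond.
measures = effect_measures(65, 100, 50, 100)
for name, value in measures.items():
    print(f"{name}: {value:.2f}")
```

In this example the odds ratio (about 1.86) is noticeably larger than the relative risk (1.30), which is typical when the outcome is common.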

Figure 2.

Annotated results of a meta-analysis of six studies, using random-effects models, reported as odds ratios using MIX version 1.7 (Bax L, Yu LM, Ikeda N, Tsuruta H, Moons KGM. Development and validation of MIX: comprehensive free software for meta-analysis of causal research data. BMC Med Res Methodol http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1626481/). The central graph is a typical forest plot

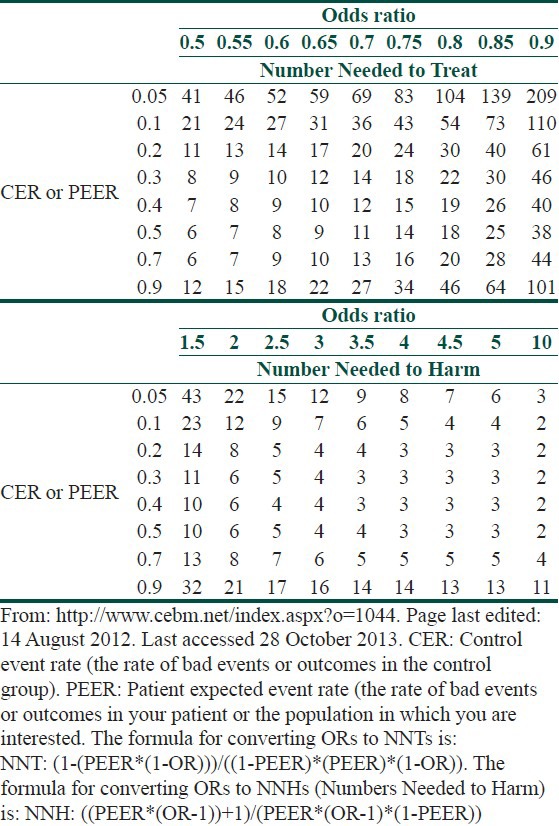

The point estimate of each individual trial is indicated by a square whose size is proportional to the size of the trial (i.e., number of patients analyzed). The precision of the trial is represented by the 95% confidence interval, which appears in forest plots as the brackets surrounding the point estimate. If the 95% confidence interval (brackets) does not cross null (OR of 1), then the individual trial is statistically significant at the P = 0.05 level.[12] The summary value for all trials is shown graphically as a parallelogram whose size is proportional to the total number of patients analyzed from all trials. The lateral tips of the parallelogram represent the 95% confidence interval and if they do not cross null (OR of 1), then the summary value of the meta-analysis is statistically significant at the P = 0.05 level. ORs can be converted to risk differences and numbers needed to treat (NNTs) if the event rate in the control group is known [Table 2].[13,14]
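The conversion from an OR to an NNT can be sketched as follows. This uses the standard algebraic relationship between odds and probabilities and is not necessarily laid out the same way as the cited calculators. The function names are hypothetical, and the event is assumed to be a good outcome, so an OR above 1 favors treatment.

```python
def expected_treated_rate(odds_ratio, control_rate):
    """Event rate expected under treatment, given the OR and control rate."""
    control_odds = control_rate / (1 - control_rate)
    treated_odds = odds_ratio * control_odds
    return treated_odds / (1 + treated_odds)


def nnt_from_odds_ratio(odds_ratio, control_rate):
    """Unrounded NNT: reciprocal of the absolute risk difference."""
    difference = expected_treated_rate(odds_ratio, control_rate) - control_rate
    if abs(difference) < 1e-12:
        raise ValueError("no risk difference, so the NNT is undefined")
    return 1 / abs(difference)


# Hypothetical pooled OR applied to three plausible control event rates.
pooled_or = 65 / 35
for control_rate in (0.50, 0.30, 0.10):
    nnt = nnt_from_odds_ratio(pooled_or, control_rate)
    print(f"control rate {control_rate:.0%}: NNT about {nnt:.1f}")
```

The same OR yields a different NNT at each control event rate, which is why the control rate must be known before the conversion can be made.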

Table 2.

Deriving numbers needed to treat from a treatment's odds ratio and the observed or expected event rates of untreated groups or individuals

The difference in response rate and its reciprocal, the NNT, are the most easily understood measures of the magnitude of the treatment effect.[1,9] The NNT represents the number of patients one would need to treat in order to achieve one additional cure. Whereas the interpretation of NNT might be straightforward within one trial, interpretation of NNT requires some caution within a systematic review, as this statistic is highly sensitive to baseline event rates.[1]

For example, if a treatment A is 30% more effective than treatment B for clearing psoriasis and 50% of people on treatment B are cleared with therapy, then 65% will clear with treatment A. These results correspond to a rate difference of 15% (65-50) and an NNT of 7 (1/0.15). This difference sounds quite worthwhile clinically. However if the baseline clearance rate for treatment B in another trial or setting is only 30%, the rate difference will be only 9% and the NNT now becomes 11 and if the baseline clearance rate is 10%, then the NNT for treatment A will be 33, which is perhaps less worthwhile.[1]
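The arithmetic in this example can be reproduced with a short sketch. It assumes, as the example does, that the 30% relative benefit is constant across baseline rates. The function name is hypothetical.

```python
def nnt_for_baseline(baseline_rate, relative_benefit=1.30):
    """Rate difference and unrounded NNT for a fixed relative benefit."""
    treated_rate = baseline_rate * relative_benefit
    rate_difference = treated_rate - baseline_rate
    return rate_difference, 1 / rate_difference


# Baseline clearance rates for treatment B used in the example above.
for baseline in (0.50, 0.30, 0.10):
    difference, nnt = nnt_for_baseline(baseline)
    print(f"baseline {baseline:.0%}: rate difference {difference:.0%}, "
          f"NNT about {nnt:.1f}")
```

The unrounded values (roughly 6.7, 11.1 and 33.3) correspond to the NNTs of 7, 11 and 33 quoted in the text, which are rounded to the nearest whole number. Some authors instead round every NNT up to the next whole patient.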

Therefore, NNT summary measures within a systematic review should be interpreted with caution because “control” or baseline event rates usually differ considerably between studies.[1,15] Instead, a range of NNTs for a range of plausible control event rates that occur in different clinical settings should be given, along with their 95% confidence intervals.[1,16]

The data used in a meta-analysis can be tested for statistical heterogeneity. Methods to test for statistical heterogeneity include the χ² test and the I² statistic.[11,17] Tests for statistical heterogeneity typically have low power; hence, a finding of statistical homogeneity does not establish clinical homogeneity. When there is evidence of heterogeneity, reasons for heterogeneity between studies – such as different disease subgroups, intervention dosage, or study quality – should be sought.[11,17] Detecting the source of heterogeneity generally requires sub-group analysis, which is only possible when data from many or large trials are available.[1,9]
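A minimal sketch of the two heterogeneity measures is given below, assuming inverse-variance weights. Cochran's Q is the χ² test statistic, referred to a χ² distribution with k − 1 degrees of freedom for k studies, and I² expresses the share of total variation attributed to between-study differences rather than chance. The input values are hypothetical.

```python
def cochran_q(effects, variances):
    """Weighted sum of squared deviations from the pooled estimate."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def i_squared(effects, variances):
    """I^2 as a percentage, truncated at zero when Q is below its df."""
    q = cochran_q(effects, variances)
    degrees_of_freedom = len(effects) - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - degrees_of_freedom) / q) * 100


# Hypothetical log odds ratios and variances for four trials.
log_odds_ratios = [0.10, 0.80, -0.20, 1.10]
variances = [0.04, 0.09, 0.05, 0.16]
print(f"Q = {cochran_q(log_odds_ratios, variances):.2f}, "
      f"I^2 = {i_squared(log_odds_ratios, variances):.0f}%")
```

The sketch stops short of a P value because that requires the χ² distribution function, which is not in the Python standard library.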

In some systematic reviews in which a large number of trials have been performed, it is possible to evaluate whether certain subgroups (e.g. children versus adults) are more likely to benefit than others. Subgroup analysis is rarely possible in dermatology, because few trials are available. Subgroup analyses should always be pre-specified in a systematic review protocol in order to avoid spurious post hoc claims.[1,9]

The Importance of Publication Bias

Publication bias is the tendency for studies that show positive effects to be more likely to be published and easier to find.[1,18] It results from allowing factors other than the quality of the study to influence its acceptability for publication. Factors such as the sample size, the direction and statistical significance of findings, or the investigators’ perception of whether the findings are “interesting,” are related to the likelihood of publication.[1,19,20] Negative studies with small sample sizes are less likely to be published.[1,19,20] Published studies are often dominated by pharmaceutical company-sponsored trials of new, expensive treatments, often compared with placebo.

Such industry-sponsored trials are almost always “positive.”[1,21,22] This bias in publication can result in data-driven systematic reviews that draw more attention to those medicines. Systematic reviews that have been sponsored directly or indirectly by industry are also prone to bias through over-inclusion of unpublished “positive” studies that are kept “on file” by that company and by not including or not finishing registered trials whose results are negative.[1,23] The creation of study registers (e.g. http://clinicaltrials.gov) and advance publication of research designs have been proposed as ways to prevent publication bias.[1,24,25] Many dermatology journals now require all their published trials to have been registered beforehand, but this policy is not well policed.[1]

Language bias is the tendency for studies that are “positive” to be published in an English-language journal and be more quickly found than inconclusive or negative studies.[1,26] A thorough systematic review should therefore not restrict itself to journals published in English.[1]

Publication bias can be detected by using a simple graphic test (funnel plot), or by calculating the fail-safe N, Begg's rank correlation method, the Egger regression method and others.[1,9,11,27,28] These techniques are of limited value when fewer than 10 randomized controlled trials are included. Testing for publication bias is often not possible in systematic reviews of skin diseases, due to the limited number and sizes of trials.[1,9]
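As one illustration, the core of the Egger regression method can be sketched with ordinary least squares. Each trial's standardized effect (effect divided by its standard error) is regressed on its precision (the reciprocal of the standard error), and an intercept far from zero suggests funnel plot asymmetry. The sketch omits the significance test on the intercept, and the five trials are hypothetical and far fewer than the ten or more needed for a meaningful test.

```python
def egger_regression(effects, standard_errors):
    """Intercept and slope of standardized effect regressed on precision."""
    precision = [1.0 / se for se in standard_errors]
    standardized = [y / se for y, se in zip(effects, standard_errors)]
    n = len(precision)
    mean_x = sum(precision) / n
    mean_y = sum(standardized) / n
    sxx = sum((x - mean_x) ** 2 for x in precision)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(precision, standardized))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical trials in which smaller studies (larger standard errors)
# report larger effects, the pattern expected under publication bias.
log_odds_ratios = [0.2, 0.3, 0.5, 0.8, 1.2]
standard_errors = [0.10, 0.15, 0.25, 0.40, 0.60]
intercept, slope = egger_regression(log_odds_ratios, standard_errors)
print(f"Egger intercept: {intercept:.2f}, slope: {slope:.2f}")
```

If every trial estimated the same underlying effect regardless of its size, the intercept would be close to zero and the slope would approximate that common effect.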

Question-driven systematic reviews answer the clinical questions of most concern to practitioners. In many cases, studies that are of most relevance to doctors and patients have not been done in the field of dermatology, due to inadequate sources of independent funding.[1,9]

The Quality of Reporting of Systematic Reviews

The quality of reporting of systematic reviews is highly variable.[1] One cross-sectional study of 300 systematic reviews indexed in Medline showed that over 90% were reported in specialty journals. Funding sources were not reported in 40% of reviews. Only two-thirds reported the range of years over which the literature was searched for trials. Around a third of reviews failed to provide a quality assessment of the included studies and only half of the reviews included the term “systematic review” or “meta-analysis” in the title.[1,29]

The Review should Include Interpretation of the Data, Including Implications for Clinical Practice and Further Research

The conclusions in the discussion section of a systematic review should closely reflect the data that have been presented within that review. Clinical recommendations can be made when conclusive evidence is found, analyzed and presented. The authors should make it clear which of the treatment recommendations are based on the review data and which reflect their own judgments.[1,9]

Many reviews in dermatology, however, find little evidence to address the questions posed. The review may still be of value even if it lacks conclusive evidence, especially if the question addressed is an important one.[1,30] For example, the systematic review may provide the authors with the opportunity to call for primary research in an area and to make recommendations on study design and outcomes that might help future researchers.[1,31]

Footnotes

Source of Support: Nil

Conflict of Interest: Nil.

References

- 1. Bigby M, Williams HC. Appraising systematic reviews and meta-analyses. In: Williams HC, Bigby M, Diepgen T, Herxheimer A, Naldi L, Rzany B, editors. Evidence-based dermatology. London: BMJ Books; 2003. pp. 38–43.

- 2. Robinson JK, Dellavalle RP, Bigby M, Callen JP. Systematic reviews: Grading recommendations and evidence quality. Arch Dermatol. 2008;144:97–9. doi: 10.1001/archdermatol.2007.28.

- 3. Williams H. Dowling Oration 2001. Evidence-based dermatology: A bridge too far? Clin Exp Dermatol. 2001;26:714–24. doi: 10.1046/j.1365-2230.2001.00925.x.

- 4. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: The QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999;354:1896–900. doi: 10.1016/s0140-6736(99)04149-5.

- 5. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. BMJ. 2009;339:b2535. doi: 10.1136/bmj.b2535.

- 6. Egger M, Smith GD, Sterne JA. Uses and abuses of meta-analysis. Clin Med. 2001;1:478–84. doi: 10.7861/clinmedicine.1-6-478.

- 7. Richardson WS, Wilson MC, Nishikawa J, Hayward RS. The well-built clinical question: A key to evidence-based decisions. ACP J Club. 1995;123:A12–3.

- 8. Lefebvre C, Manheimer E, Glanville J. Searching for studies. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Available from: www.cochrane-handbook.org.

- 9. Bigby M, Williams H. Evidence-based dermatology. In: Burns T, Breathnach S, Cox N, Griffiths C, editors. Rook's Textbook of Dermatology. 8th ed. Oxford, UK: Blackwell Publishing; 2010.

- 10. Jadad AR, Moore RA, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, et al. Assessing the quality of reports of randomized clinical trials: Is blinding necessary? Control Clin Trials. 1996;17:1–12. doi: 10.1016/0197-2456(95)00134-4.

- 11. Abuabara K, Freeman EE, Dellavalle R. The role of systematic reviews and meta-analysis in dermatology. J Invest Dermatol. 2012;132:e2. doi: 10.1038/jid.2012.392.

- 12. Schriger DL, Altman DG, Vetter JA, Heafner T, Moher D. Forest plots in reports of systematic reviews: A cross-sectional study reviewing current practice. Int J Epidemiol. 2010;39:421–9. doi: 10.1093/ije/dyp370.

- 13. CEBM.net. Centre for Evidence-Based Medicine. [Last accessed on 2013 Oct 28]. Available from: http://www.cebm.net/index.aspx?o=1044.

- 14. Stattools.net. [Last accessed on 2013 Oct 28]. Available from: http://www.stattools.com/RiskOddsConv_Pgm.php.

- 15. Ebrahim S, Smith GD. The ‘number need to treat’: Does it help clinical decision making? J Hum Hypertens. 1999;13:721–4. doi: 10.1038/sj.jhh.1000919.

- 16. Manriquez JJ, Villouta MF, Williams HC. Evidence-based dermatology: Number needed to treat and its relation to other risk measures. J Am Acad Dermatol. 2007;56:664–71. doi: 10.1016/j.jaad.2006.08.024.

- 17. Deeks JJ, Higgins JPT, Altman DG. Heterogeneity. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Available from: www.cochrane-handbook.org.

- 18. Sterne JA, Egger M, Smith GD. Systematic reviews in health care: Investigating and dealing with publication and other biases in meta-analysis. BMJ. 2001;323:101–5. doi: 10.1136/bmj.323.7304.101.

- 19. Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA. 1992;267:374–8.

- 20. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–72. doi: 10.1016/0140-6736(91)90201-y.

- 21. Smith R. Conflicts of interest: How money clouds objectivity. In: Smith R, editor. The Trouble with Medical Journals. London: Royal Society of Medicine; 2006. pp. 125–38.

- 22. Perlis CS, Harwood M, Perlis RH. Extent and impact of industry sponsorship conflicts of interest in dermatology research. J Am Acad Dermatol. 2005;52:967–71. doi: 10.1016/j.jaad.2005.01.020.

- 23. Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B. Evidence b(i)ased medicine: Selective reporting from studies sponsored by pharmaceutical industry: Review of studies in new drug applications. BMJ. 2003;326:1171–3. doi: 10.1136/bmj.326.7400.1171.

- 24. Dickersin K. Report from the panel on the case for registers of clinical trials at the Eighth Annual Meeting of the Society for Clinical Trials. Control Clin Trials. 1988;9:76–81. doi: 10.1016/0197-2456(88)90010-4.

- 25. Piantadosi S, Byar DP. A proposal for registering clinical trials. Control Clin Trials. 1988;9:82–4. doi: 10.1016/0197-2456(88)90011-6.

- 26. Bassler D, Antes G, Egger M. Non-English reports of medical research. JAMA. 2000;284:2996–7. doi: 10.1001/jama.284.23.2996.

- 27. Macaskill P, Walter SD, Irwig L. A comparison of methods to detect publication bias in meta-analysis. Stat Med. 2001;20:641–54. doi: 10.1002/sim.698.

- 28. Sutton AJ, Duval SJ, Tweedie RL, Abrams KR, Jones DR. Empirical assessment of effect of publication bias on meta-analyses. BMJ. 2000;320:1574–7. doi: 10.1136/bmj.320.7249.1574.

- 29. Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Med. 2007;4:e78. doi: 10.1371/journal.pmed.0040078.

- 30. Parker ER, Schilling LM, Diba V, Williams HC, Dellavalle RP. What is the point of databases of reviews for dermatology if all they compile is “insufficient evidence”? J Am Acad Dermatol. 2004;50:635–9. doi: 10.1016/s0190-9622(03)00883-1.

- 31. Eleftheriadou V, Thomas KS, Whitton ME, Batchelor JM, Ravenscroft JC. Which outcomes should we measure in vitiligo? Results of a systematic review and a survey among patients and clinicians on outcomes in vitiligo trials. Br J Dermatol. 2012;167:804–14. doi: 10.1111/j.1365-2133.2012.11056.x.