INTRODUCTION

The Clinical and Translational Science Awards (CTSA) program supported by the National Institutes of Health (NIH) represents a consortium of approximately 60 biomedical research institutions across the United States. The goals of the consortium are: “to accelerate the translation of laboratory discoveries into treatments for patients, to engage communities in clinical research efforts, and to train a new generation of clinical and translational researchers.”1 Research education is clearly a major objective of the CTSA program. The Education Core Competency Work Group, a joint effort of the NIH and the CTSA Education and Career Development (EdCD) Key Function Committee (KFC), undertook an effort to define core competencies for Master’s degree programs in clinical and translational research, which include 14 thematic areas defining the knowledge, attributes and skills that are essential to success in clinical and translational science.2 Two of the 14 thematic areas specifically address competency in interdisciplinary team research, as summarized in Table 1.

TABLE 1.

Selected competencies for Master’s-level training in clinical and translational science developed and approved by the CTSA Education and Career Development Key Function Committee (2009)

| Thematic Area | Selected Competencies |

|---|---|

| Translational Teamwork | |

| Leadership | |

Whereas individual CTSAs strive to advance integrated and interdisciplinary approaches to education and career development in clinical and translational science, they may do so in very different ways, as there is no single approach practiced uniformly in all the centers. Perhaps not surprisingly, therefore, strategies, resources, evaluation processes, and effectiveness may vary substantially between institutions. It is important to learn about the different approaches and, ultimately, determine what works and what does not. That process starts with examining methods that are currently in place.

With this goal in mind, members of the EdCD KFC of the national CTSA consortium created a new working group on “Team Science Training”, with the objectives of assessing, describing, and critiquing approaches to preparing scholars for careers in interdisciplinary team science (defined below). To begin examining these approaches, the committee developed a survey instrument to distribute to the education leaders at 60 CTSA institutions nationwide. The survey asked about each institution’s approaches to “teaching” or fostering team science skills and strategies, and about the perceived utility and effectiveness of these efforts. This paper presents the findings from that survey, which combined multiple-choice questions with open-text items that were analyzed qualitatively. We present these findings as a reference aid for future program design and evaluation efforts in training for interdisciplinary science.

Note that for the purposes of this investigation, we use the taxonomy of interdisciplinary science provided by Rosenfield (1992): “Researchers work jointly but still from [their] disciplinary-specific basis to address [a] common problem.”3 Similarly, we adopt the definition of team science proposed by Stokols et al. (2008): group initiatives “designed to promote collaborative – and often cross-disciplinary – approaches to analyzing research questions about particular phenomena.”4 Because interdisciplinary science most often involves team efforts, we restrict attention in this paper to “interdisciplinary team science”, i.e., team projects that involve contributions and ongoing collaboration by scientists representing at least two distinct disciplines as they address together a common research question. Thus, our findings are applicable to research projects involving interdisciplinary teams.

MATERIALS AND METHODS

To learn about beliefs, perceptions and approaches to “team science training” being undertaken by CTSA institutions, we created a web-based questionnaire. CTSA education leaders across the nation (n=60) were contacted by e-mail between August 2012 and September 2012 and asked to participate in the study. A direct link to the survey was provided in an e-mail generated by the REDCap survey web application,5 and three e-mail reminders and one “last chance” e-mail were sent to maximize the overall response rate. A cover letter about the study was sent with the survey request and was accompanied by the list of competencies in translational teamwork and leadership (Table 1).

The survey

The questionnaire asked about each institution’s approaches to “teaching” team science skills and strategies, and about the perceived utility and effectiveness of these efforts. It combined multiple-choice questions with open-text items; the full instrument is shown in Table 2.

TABLE 2.

SURVEY QUESTIONNAIRE

| PAGE 1 – BELIEFS REGARDING TEAM SCIENCE TRAINING |

| 1. How important is it to offer specific training opportunities in interdisciplinary team science for young investigators? |

| a. Very important |

| b. Somewhat important |

| c. Not very important |

| d. Not at all important |

| If the answer to #1 was c or d, the survey continued to question #2; otherwise, it skipped to question #3. |

| 2. Please tell us why you responded as you did to question #1 about teaching team science skills. |

| a. I don’t believe these skills are important to success as a clinical/translational scientist. |

| b. I don’t believe these skills can be taught effectively. |

| c. Other; please describe:___________________________ |

| PAGE 2 – CURRICULUM FOR TEAM SCIENCE |

| 3. Does your institution offer specific training in interdisciplinary team science as part of your educational portfolio for the CTSA? |

| a. Yes |

| b. No |

| c. Unsure |

| If the answer to #3 was YES, the survey continued to the following questions; otherwise, it skipped to question #10. |

| 4. Is training in interdisciplinary team science required of trainees and scholars enrolled in your CTSA educational programs? |

| a. Yes |

| b. No |

| c. Unsure |

| 5. Do you collaborate with another school/unit of the university (e.g., school of business, education, law or communication) to deliver interdisciplinary team science training to your scholars and trainees? |

| a. Yes |

| b. No |

| c. Unsure |

| 6. Please tell us what types of training opportunities are provided. Please note that you may select more than one option from the list below. |

| a. A formal, credit-bearing course offered by a health sciences school (e.g., medicine, nursing, public health) |

| b. A formal, credit-bearing course offered by another school (e.g., education, business, engineering) |

| c. A non-credit seminar or workshop |

| d. An experiential requirement outside of the classroom (e.g., a rotation or practicum placement in an interdisciplinary setting) |

| e. Other; please describe:___________________________ |

| If the answer to question #6 was a, b, or c, the survey continued to the following question; otherwise, it skipped to question #11. |

| 7. How would you best describe the content of your course or workshop on interdisciplinary team science? Please check all that apply. |

| a. A survey of the literature on skills and strategies for successfully engaging in team science |

| b. A case-based course in which students review case studies in team science and develop strategies for pursuing interdisciplinary science despite obstacles |

| c. A course in negotiation skills and conflict management |

| d. Other; please describe:___________________________ |

| 8. Please share with us some of your strategies/methods for evaluating the effectiveness of the interdisciplinary training opportunities at your institution. OPEN TEXT RESPONSE:______________________________________ |

| 9. How effective do you believe your program’s training efforts in interdisciplinary science to be? |

| a. Very effective |

| b. Somewhat effective |

| c. Neutral |

| d. Mostly ineffective |

| 10. Are you planning to develop training opportunities in interdisciplinary team science as part of your educational portfolio for the CTSA? |

| a. Yes |

| b. No |

| c. Unsure |

| PAGE 3 – Final question for all respondents. |

| 11. Thank you very much for your participation. Are there any other thoughts, suggestions, or comments you’d like to share with us at this time? _______________________________ |
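The branching rules embedded in Table 2 can be sketched in a few lines of Python. This is an illustrative reading of the skip logic, not the REDCap configuration actually used; the function name `questions_shown` and its argument names are hypothetical. One assumption is made explicit in the code: Table 2 does not say where respondents go after question 9, so the sketch assumes they proceed directly to question 11.

```python
def questions_shown(q1, q3="", q6=()):
    """Return the question numbers a respondent would see, in order.

    q1 and q3 are single option letters ("a" to "d"); q6 is a collection of
    option letters, because question 6 allows more than one selection.
    """
    shown = [1]
    # Question 2 is asked only after "not very" or "not at all important".
    if q1 in ("c", "d"):
        shown.append(2)
    shown.append(3)
    if q3 == "a":  # "Yes": the institution offers team science training
        shown += [4, 5, 6]
        # Questions 7-9 follow only if a course, seminar, or workshop was chosen.
        if set(q6) & {"a", "b", "c"}:
            shown += [7, 8, 9]
        # ASSUMPTION: after question 9 the survey goes straight to question 11.
    else:
        # "No" or "Unsure" skips ahead to question 10.
        shown.append(10)
    shown.append(11)  # final question for all respondents
    return shown


print(questions_shown("a", "a", {"a", "d"}))
print(questions_shown("c", "b"))
```

Writing the rules out this way makes it easier to see which respondents form the denominator for each question.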

Quantitative methodology

The responses to multiple-choice questions were summarized through percentages and bar charts. Comparisons of qualitative characteristics across groups were evaluated by use of the chi-squared test. All analyses were conducted in Stata 12.
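For readers unfamiliar with the chi-squared test, the following is a minimal sketch of the Pearson test of independence for a 2×2 table. It is written in Python rather than Stata, so it is not the analysis code used in the study, and the counts in `illustrative_table` are hypothetical values chosen only to show the calculation. The function name `chi_squared_2x2` is likewise an assumption, and no continuity correction is applied.

```python
import math


def chi_squared_2x2(table):
    """Pearson chi-squared test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# HYPOTHETICAL counts, not survey data: rows are two groups of programs,
# columns are "somewhat/very effective" versus "neutral/ineffective".
illustrative_table = [[10, 20], [20, 10]]
statistic, p_value = chi_squared_2x2(illustrative_table)
print(f"chi-squared = {statistic:.2f}, p = {p_value:.4f}")
```

With expected cell counts as small as those that can arise in a survey of 60 institutions, an exact test may be preferable to the chi-squared approximation.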

Qualitative methodology

Open-ended text generated from the survey questionnaire items was reviewed to extract important indicator quotes. Analyst triangulation was used throughout the data analysis process to enhance confirmability of the findings.6 A list of hierarchical codes was developed by consensus of the coders.

Two investigators independently reviewed the indicator quotations to identify constructs. The second investigator coded the indicator quotations with the first investigator’s coding removed. Saturation was achieved when no new codes emerged from the data, confirming the adequacy of the sample size and providing an endpoint to the coding portion of the data analyses. A total of 45 constructs were agreed upon to classify the data, derived from 44 indicator quotations. After the initial identification of constructs, the team re-examined the data and identified additional categories of constructs, reorganized and labeled existing constructs, and identified relationships between constructs. To assist in data reporting, constructs were categorized by types of factors as described in the Results section. Whenever divergent interpretations of constructs occurred, indicator quotations were re-reviewed and discussed until consensus was achieved.7

This analysis technique has been used in other qualitative studies without quantifying qualitative results.8 Qualitative research designs are not meant to provide quantitative estimates; rather, qualitative research probes beyond the level of survey data and allows a deeper exploration of dimensions that quantitative studies cannot uncover.9

RESULTS

Survey response rate

Of the 60 individuals contacted, 57 responded to the survey, for an overall response rate of 95%. Thus, we believe that we have captured the views of nearly all the CTSA institutions and can be confident that these results are representative of the target audience.

Quantitative findings

The marginal response percentages for each multiple-choice question are given in Table 3. Overall, 86% of respondents felt that interdisciplinary team science training is important (very or somewhat) for young investigators. Of the 8 (14%) who felt that this training is not very or not at all important, 2 endorsed the notion that interdisciplinary skills are not important for success as a clinical/translational scientist. Three stated that while these skills are important, they cannot be taught. Just over half of institutions (29 institutions, or 52% of the total respondents) stated that they offer training in interdisciplinary team science skills as part of their CTSA educational portfolio, and 72% of these require such training of their scholars. Most of the institutions that offer interdisciplinary training (62%) do so in collaboration with another school or unit within the university (e.g., with a school of business, education, law, or communication).

TABLE 3.

Responses to multiple-choice survey questions

| Survey question | Count | Percent |

|---|---|---|

| Question 1: How important is it to offer specific training opportunities in interdisciplinary team science for young investigators? | 57 | |

| Very important | 36 | 63% |

| Somewhat important | 13 | 23% |

| Not very important | 5 | 9% |

| Not at all important | 3 | 5% |

| FOR THOSE WHO ANSWERED NOT VERY/NOT AT ALL IMPORTANT TO QUESTION 1: Question 2: Please tell us why you responded as you did to the first question about teaching team science skills. | 8 | |

| I don’t believe that these skills are important to success as a clinical/translational scientist | 2 | 25% |

| I believe it’s important but I don’t believe that these skills can be taught effectively. | 3 | 38% |

| Other | 3 | 38% |

| Question 3: Does your institution offer specific training in interdisciplinary team science as part of your educational portfolio for the CTSA? | 56 | |

| Yes | 29 | 52% |

| No | 18 | 32% |

| Unsure | 9 | 16% |

| FOR THOSE WHO ANSWERED YES TO QUESTION 3: Question 4: Is training in interdisciplinary team science required of trainees and scholars enrolled in your CTSA educational programs? | 29 | |

| Yes | 21 | 72% |

| No | 8 | 28% |

| FOR THOSE WHO ANSWERED YES TO QUESTION 3: Question 5: Do you collaborate with another school/unit of the university (e.g., school of business, education, law or communication) to deliver interdisciplinary team science training to your scholars and trainees? | 29 | |

| Yes | 18 | 62% |

| No | 11 | 38% |

| FOR THOSE WHO ANSWERED YES TO QUESTION 3: Question 6: Please tell us what types of training opportunities are provided. Please note that you may select more than one option from the list below. | 29 | |

| (a) A formal, credit-bearing course offered by a health sciences school (e.g., medicine, nursing, public health) | 16 | 55% |

| (b) A formal, credit-bearing course offered by another school (e.g., education, business, engineering) | 4 | 14% |

| (c) A non-credit seminar or workshop | 17 | 59% |

| (d) An experiential requirement outside of the classroom (e.g., rotation or practicum placement in an interdisciplinary setting) | 8 | 28% |

| (e) Other | 2 | 7% |

| FOR THOSE WHO ANSWERED a, b, OR c TO QUESTION 6: Question 7: How would you best describe the content of your course or workshop on interdisciplinary team science? Please check all that apply. | 27 | |

| (a) A survey of the literature on skills and strategies for successfully engaging in team science | 11 | 41% |

| (b) A case-based course in which students review case studies in team science and develop strategies for pursuing interdisciplinary science despite obstacles | 19 | 70% |

| (c) A course in negotiation skills and conflict management | 10 | 37% |

| (d) Other | 7 | 26% |

| FOR THOSE WHO ANSWERED YES TO QUESTION 3: Question 9: How effective do you believe your program’s training efforts in interdisciplinary science to be? | 27 | |

| Very effective | 8 | 30% |

| Somewhat effective | 16 | 59% |

| Neutral | 2 | 7% |

| Mostly ineffective | 1 | 4% |

| FOR THOSE WHO ANSWERED NO OR UNSURE TO QUESTION 3: Question 10: Are you planning to develop training opportunities in interdisciplinary team science as part of your educational portfolio for the CTSA? | 19 | |

| Yes | 13 | 68% |

| No | 2 | 11% |

| Unsure | 4 | 21% |
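As a consistency check, the percentages in Table 3 can be recomputed from the reported counts. The sketch below does this for three of the single-choice questions; the helper name `percentages` is hypothetical, and rounding to the nearest whole percent is assumed to match how the table was prepared.

```python
def percentages(counts):
    """Map each response label to its whole-number percentage of the total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Counts copied from Table 3.
question_1 = {"Very important": 36, "Somewhat important": 13,
              "Not very important": 5, "Not at all important": 3}
question_3 = {"Yes": 29, "No": 18, "Unsure": 9}
question_9 = {"Very effective": 8, "Somewhat effective": 16,
              "Neutral": 2, "Mostly ineffective": 1}

for name, counts in [("Question 1", question_1),
                     ("Question 3", question_3),
                     ("Question 9", question_9)]:
    print(name, percentages(counts))
```

For the multiple-selection questions (6 and 7), the denominator is the number of respondents rather than the number of selections, so those percentages need not sum to 100%.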

Of the 29 institutions that offer interdisciplinary team science training, the majority offer a formal, credit-bearing course based in the health sciences (55%), or a non-credit seminar or workshop (59%). Most of these training options can be described as case-based courses in which students review case studies in team science and develop strategies for pursuing interdisciplinary science despite obstacles. About 28% require an experiential activity outside of the classroom, such as a research rotation.

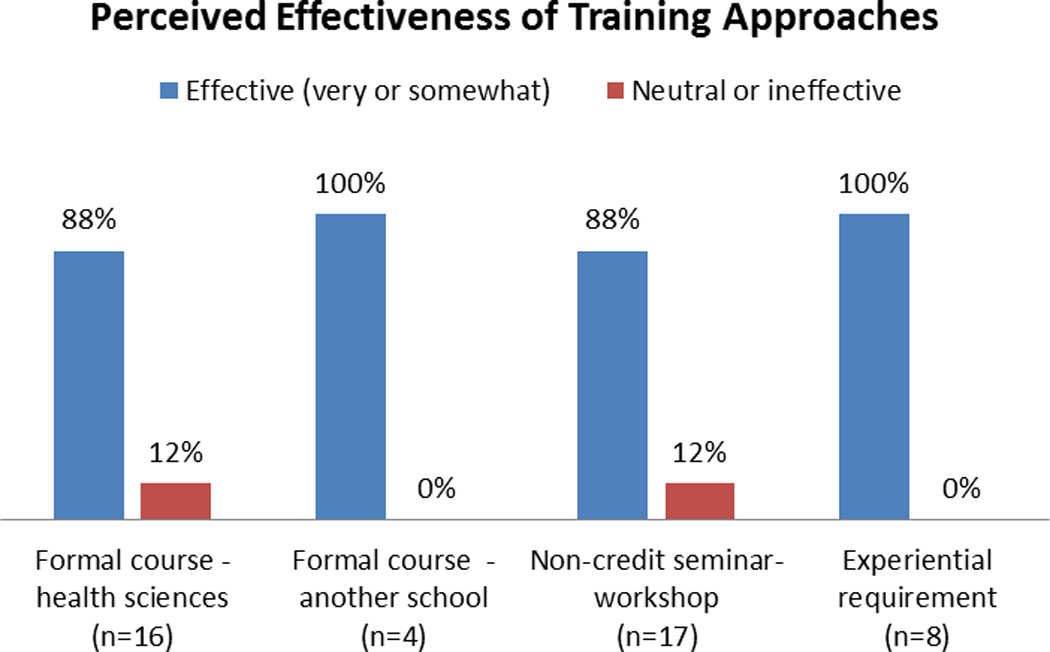

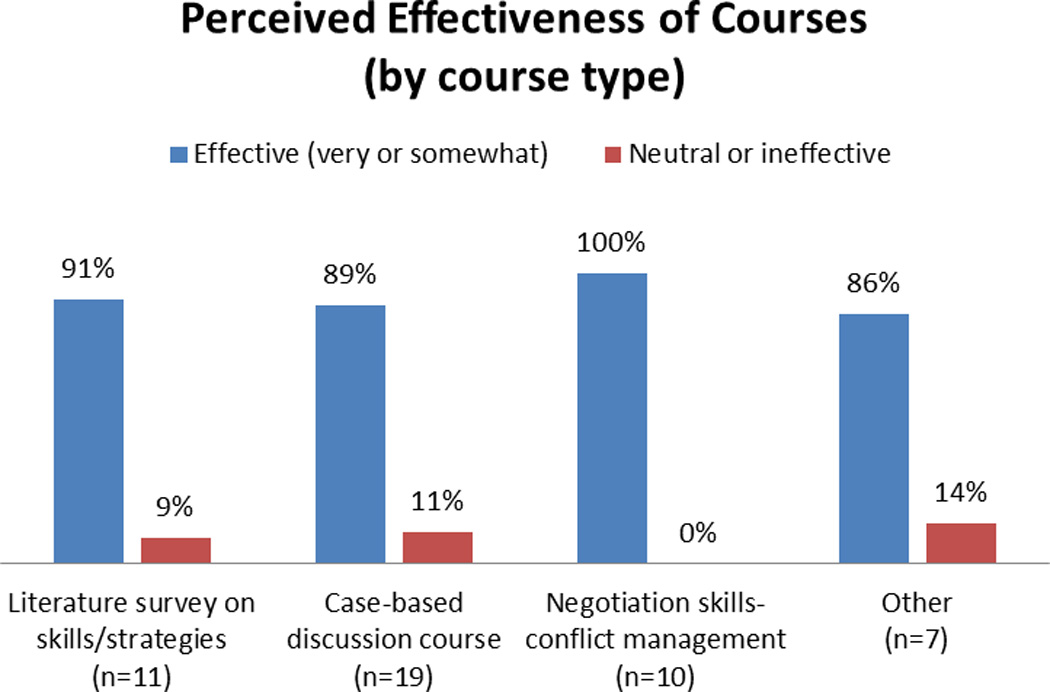

Most education leaders (89%) perceived the training they offer as at least somewhat effective. There was little variation in perceptions of effectiveness across types of training opportunities provided (question 6), with percentages reporting somewhat or very effective ranging from 88% to 100% (see Figure 1a). Perceptions of effectiveness also did not vary substantially by type of course (content of classroom-based or seminar-style courses), with percentages of somewhat or very effective falling between 86% and 100% (see Figure 1b).

Figure 1.

Perceived effectiveness of various approaches to team science training: (a) by training method; (b) by course type.

Qualitative findings

In the survey, open text opportunities were offered in questions 2, 6, 7, 8 and 11. Themes and subthemes of these responses can be found in Table 4. It is important to clarify how these responses were elicited within the context of the survey. In question 1, most education leaders (86%) agreed that opportunities in interdisciplinary team science were “very important” or “somewhat important.” The remaining 14% rated them “not very important” or “not at all important,” in which case the survey was programmed to branch to question 2, which offered three choices for explaining the initial response.

Table 4.

Qualitative data themes, subthemes and data extracts from open ended option “other” for survey questions 2, 6-8, and 11.

| Themes and subthemes | Data extracts |

|---|---|

| 2. Offering specific training opportunities for interdisciplinary team science is not important | |

| Previous established successful paradigm | I have (been) part of interdisciplinary teams for 35 years both in research and as a clinician. |

| Conceptual uncertainty of term “Team Science” | How (is) team science different than interdisciplinary Research? Are the competencies different, are the skills different? |

| I have an issue with the term team science. | |

| 6. Additional training opportunities in Team Science | |

| Integration within existing non-credit and credit bearing educational offerings. | Course in bioinformatics computational biology and clinical informatics; one session in a seminar here and there. |

| We integrate this (Team Science) into a workshop and journal club; we include this as a part of the annual symposium and integrated into two other courses that we offer. | |

| A couple of seminars embedded in our weekly KL2 seminar program. | |

| Course in Leadership. | |

| Experiential Learning | Team Science through the trainees multidisciplinary KL2 mentors. |

| Basic scientist trainees and clinical scientist trainees collaborate to compete for a pilot grant awarded in the category of team science. | |

| There is also a wireless scholar position in our program, where clinical research is conducted with the help of communication engineers. | |

| Pre-existing online courses | Online course from NUCATS (Northwestern University Clinical and Translational Science Team Science Course). |

| 7. Content offered related to Team Science | |

| Integration within existing non-credit and credit bearing educational offerings. | Conflict management and team management. |

| Leadership. | |

| 8. Effectiveness of programs’ interdisciplinary strategies | |

| What constructs of team science are evaluated? | |

| Collaborations | Students in our elective class, Instruction to Translational Research in the Health Sciences class work in groups to produce an interdisciplinary project on a health issue of their choice. All disciplines of the students must be represented. |

| We require trainees to have mentors from different disciplines and we see evidence of interdisciplinary in the student’s progress report | |

| We track faculty trainees’ collaborative research efforts. | |

| Projects are assessed on their interdisciplinary approach. | |

| Team formation pre post with individuals that have gone through the programs or course work. | |

| Research completed through the team approach. | |

| Manuscripts/co-authorships | Excellent question, wish we had the right answer. We evaluate the homes of authors on manuscripts, investigators on studies for this purpose. |

| Tracking team grant applications and papers. | |

| Social Networks | SNA pre and post. |

| Students are asked, as part of the course evaluation, to what extent, “Group project provided an authentic experience to understand multidisciplinary teamwork.” | |

| Skills | Participants role play negotiation skills around a complex conflict common to scientists, such as authorship, allocation of lab resources, clinical vs. research time. |

| Attitudes | We use our educations outcomes unit to do pre and post course testing of the attitudes of the scholars toward Interdisciplinary Science as well as evaluation of the course itself. |

| No formal evaluation | While team science is taught the evaluation is minimal. |

| Currently our evaluation is limited to paper/pencil post-training evaluation. | |

| When is the effectiveness of Team Science measured? | |

| Post course or training; Pre and post course training; During training | Course evaluations for the class in which the grant writing is introduced. |

| SNA (social network) pre and post. | |

| We see evidence of interdisciplinary in the student’s progress reports. | |

| Who provides information being evaluated? | |

| Scholars and Trainee | Student evaluations are the sole source of evaluation data at this point. |

| Students are asked, as part of the course evaluation, to what extent, “Group project provided an authentic experience to understand multidisciplinary teamwork.” | |

| Survey students at the end of training about competencies including team science. | |

| Joint effort (mentor/mentee/supervisor) | Our KL2 scholars attend a multidisciplinary meeting twice a month, and also have 4 mentors from different departments and disciplines. The mentors evaluate the scholars’ progress, and the scholars evaluate their relationships with their mentors. |

| While this is not evaluation of the effectiveness of interdisciplinary training per se, their mentoring is interdisciplinary and instrumental to their training, and we evaluate how their training and mentoring is going. | |

| Phone survey of mentor and mentees. | |

| Post-rotation evaluation completed by Scholar rotation supervisor. | |

| External entity | Our auditing process and MARC committees assess how well our trainees perform in team science and the trainees are evaluated on this metric. |

| This year, for the first time, we have asked a colleague in our translational science center to create and implement a pre-tests and post-test survey for our team science participants. | |

| We use our educations outcomes unit to do pre and post course testing of the attitudes of the scholars toward interdisciplinary science as well as evaluation of course itself. | |

| What methodology is used to evaluate effectiveness of team Science? | |

| Pen and paper evaluation; Telephone survey; Progress report | Currently our evaluation is limited to paper/pencil post-training evaluation. |

| Phone survey of mentor and mentees. | |

| We require trainees to have mentors from different disciplines and we see evidence of interdisciplinary in the student’s progress report. | |

| 11. General comments from CTSA Education and Career Development Leaders | |

| Sharing of Curriculum Content (Syllabi) | It would be great to share all teaching strategies on this and perhaps even consider launching an on-line course together. |

| Please see 2 course syllabi from [redacted]/ The courses are [redacted] and [redacted](our main interdisciplinary course). | |

| I can send you a draft of a manuscript about the course that is under review with the CTS journal. Please e mail at [redacted]. | |

| We believe this is a very important topic. We currently have a MS in Clinical Research and are now in the midst of creating a MS in Translational Science. This latter degree program has one of its three main components as team science. | |

| Curriculum in developmental stage | We have multiple-mentor programs for all trainees. Some experience in interdisciplinary team science occurs in these settings. However, formal coursework or didactic programming has not yet been developed. We will look forward to hearing of the other CTSA programs. |

| Developing curricula or strategies to accomplish this would be very useful. | |

| Our trainees are part of translational research clusters which are multidisciplinary groups of researchers. They have an immersion experience in team science during their tenures in our program. As of yet there is no formal didactic program in team science. | |

| What are other CTSAs doing? | Very interested to learn what others are doing. |

| The new RFA puts great emphasis on this, but it is clear that there are few if any accepted models or standards for this kind of education. One has no clue as to whether one’s attempt to address this will be adequate or not. | |

| This is a difficult task to accomplish. I would very much like to learn from the experiences of others. | |

| Yes, I am interested in learning if some CTSA’s have curricula or workshops specifically addressing this issue. | |

| I am very interested in hearing other approaches. | |

The first two choices were “I don’t believe these skills are important to success as a clinical/translational scientist” and “I don’t believe these skills can be taught effectively”; the third choice, “other,” allowed an open text response. As seen in Table 4, three statements under “other” illustrate some of the tensions and ambiguities between the terms “team science” and “interdisciplinary research.” The first construct is the belief in an established successful paradigm:

“I have (been) part of interdisciplinary teams for 35 years both in research and as a clinician.”

The second construct is the conceptual uncertainty of the term “team science,” which shares many skills and competencies with interdisciplinary research and is therefore difficult to distinguish definitively from it.

“I have an issue with the term team science. How (is) team science different than interdisciplinary research, are the competencies different, are the skills different?”

When asked in Question 6, “… what types of training opportunities are provided (in team science),” respondents could select more than one option. The options were: (a) a formal, credit-bearing course offered by a health sciences school; (b) a formal, credit-bearing course offered by another school (e.g., business, education, engineering); (c) a non-credit seminar or workshop; (d) an experiential requirement outside the classroom (e.g., a rotation or practicum placement in an interdisciplinary setting); and (e) “Other; please describe.” Respondents who selected “other” described integrating team science training into existing non-credit and credit-bearing course offerings and into existing curricula such as bioinformatics, computational biology, clinical informatics, journal clubs, and annual symposia. There was also an indication of a “when needed” approach to implementing “team science,” in which mentoring teams consisting of two or more mentors from differing disciplines would require team science training; however, no further information was provided as to what that training entailed.

In Question 7 we asked respondents to “… describe the content of your course or workshop on interdisciplinary team science.” If their description was not among the choices in our survey, they could write a description under “Other.” Respondents described a variety of ways in which interdisciplinary team science knowledge and skills were integrated into existing non-credit-bearing courses and/or embedded into weekly KL2 seminars, with an emphasis on conflict and team management through experiential learning. Others adopted distance learning opportunities, taking advantage of “Team Science Course” offerings such as the “Online resource from NUCATS,” or used outcome-based learning strategies such as team collaboration (competition) for pilot funding or distance-based experiential learning. Didactic course offerings in team science leadership were also given as course content.

Evaluation of Team Science

Question 8 asked respondents to share “… strategies/methods for evaluating the effectiveness of the interdisciplinary training opportunities at your institution.” Responses varied greatly and were coded along four dimensions of the evaluation process: when (time point), who (provides information), what (subject area content), and how (evaluation instruments). A total of 109 comments were coded from 27 survey responses, as summarized in Table 5. Sample survey responses are shown in Table 4.

Table 5.

Constructs for coded responses to Question 8 (strategies/methods for evaluating effectiveness of interdisciplinary training)

| 1. When Evaluated (n=27) | |

| a. Pre/Post Course/Training | 4 (15%) |

| b. Post Course/Training | 20 (74%) |

| c. During Course/Training | 3 (11%) |

| 2. Who Evaluated (n=28) | |

| a. Scholar/Trainee | 22 (78%) |

| b. Joint Effort (Mentor/Mentee) | 3 (11%) |

| c. External Entity | 3 (11%) |

| 3. What Was Evaluated (n=38) | |

| a. Generic “course evaluation” | 9 (24%) |

| b. Course/Rotation Experience | 2 (5%) |

| c. Scholar Knowledge | 4 (11%) |

| d. Scholar Attitude | 2 (5%) |

| e. Scholar Skills | 4 (11%) |

| i. Role Play | 1 |

| ii. Writing | 1 |

| iii. Not specified | 2 |

| f. Competency-based team performance | 13 (34%) |

| i. Team Research Protocol / Project | 4 |

| ii. Team Research Manuscript / Coauthorship | 3 |

| iii. Social Network Analysis | 2 |

| iv. Not specified | 4 |

| g. Mentor/Mentee Relationship | 1 (3%) |

| h. Mentee Progress | 3 (8%) |

| 4. How Evaluated (n=26) | |

| a. Informal | 1 (4%) |

| b. Formal | 25 (96%) |

| i. Pen & Paper | 1 |

| ii. Phone | 1 |

| iii. Not specified | 23 |

When: Respondents indicated post course/training (74%) as the most common time point for evaluation, with others stating a combination of pre and post (15%) and during (11%) course training as data collection time points.

Who: The majority of responses indicated the scholars or trainees (78%) provided evaluation information, with others indicating both mentor and scholar supervisor (11%) or an external entity (11%).

What: The two most common content areas identified as the subject of evaluation were competency-based team performance measures (34%), such as collaborative projects, manuscripts and co-authorships, and social network analysis (SNA); and generic course evaluations or post-training evaluations (24%).

How: The most common method of evaluation was a formal evaluation (96%), but details of the method for collecting information were usually not specified.

Shared Community Experience

In Question 11 we asked “… are there any other thoughts, suggestions, or comments you'd like to share with us at this time?” We received a wide spectrum of suggestions and comments, which we grouped into statements of importance and queries as to what other CTSAs were doing. Many of the responses indicated a need for sharing curriculum content, course syllabi, and evaluation instruments. Some respondents stated they were in the “developmental stage” of their team science curriculum, and expressed great interest in what other CTSAs are doing. Perhaps the most urgent comment stated: “The new RFA puts great emphasis on this [team science education], but it is clear that there are few, if any accepted models or standards for this kind of education. One has no clue as to whether one's attempt to address this will be adequate or not.”

In sum, these qualitative data reveal the tensions, ambiguities and diversity of perceptions, attitudes and applications of the term “team science” in clinical translational sciences education and career development. These data suggest that there is a need for guidance in curriculum development, instructional strategies and pedagogical methods to support interdisciplinary team science training and education.

DISCUSSION

It is widely perceived that the research landscape is changing for biomedical research.4,10–15 The biomedical literature is increasingly filled with team-based science, as reflected by larger numbers of authors (larger teams) on published papers.10–12 Cutting edge research often takes place at the interstices between established disciplines; thus, the skills and competencies to succeed as members and leaders of interdisciplinary teams have been cited as a critical need among young investigators.13–15 Meyers et al. (2012) argue that there is a need for a “qualitatively different investigator” than in years past; and that “curricula designed to promote teamwork and interdisciplinary training will promote innovation” when it is delivered in combination with strong disciplinary skills.16 The unsettled question, however, is how to define interdisciplinary team skills, and how to deliver them. Several authors have attempted to tackle this question; see, for example, the papers by Gebbie and colleagues (2008)17 and Borrego and Newswander (2010).18 If training programs are to succeed in fostering interdisciplinary skills, then an analysis of the work of these authors (and many others) suggests that we focus on: strong disciplinary skills (so as to be perceived as a valuable member of interdisciplinary teams); regular interaction with colleagues from multiple disciplines; exposure to the theory and practice of building and sustaining a high-functioning team; and opportunities to practice interdisciplinary team-building, management, and communication skills. A number of different strategies could be adopted that would provide training in these areas. We decided, therefore, to take a systematic approach (i.e., a survey) to identify and describe the range of strategies currently used and the perceived utility of those strategies in practice.

In this exploratory cross-sectional study we describe beliefs, activities, and perceptions of CTSA institution Education Directors about interdisciplinary team science and related training opportunities offered to young investigators as part of their training portfolio in clinical and translational science research. The results suggest that although the majority believe that offering training in Team Science is very important, only about half of the institutions offer specific training opportunities and just over a third have made Team Science a formal program requirement. Among those with formal training programs, 89% believe that their efforts are at least somewhat effective, but only about 30% believe that their educational efforts have been very effective, which may be due to the dearth of methods for evaluating interdisciplinary “readiness.”

The CTSA survey results suggest two broad themes. The first is that specific training in interdisciplinary team science for young investigators is very important, especially given the changing nature of how science is being conducted in the 21st century;12 and the second is that there is no one methodology, universally practiced in CTSA institutions, designed to meet this identified need. This outcome (i.e., lots of experimentation and little uniformity across CTSA institutions) is not surprising: when a new problem or opportunity emerges, there is typically a proliferation of strategies trying to fill the niche. A lack of uniformity across the CTSAs, when it comes to teaching interdisciplinary team science, is a natural evolutionary stage. It is the intention of this paper to move CTSAs beyond the present stage of evolutionary development, when it comes to teaching young investigators interdisciplinary team science, through the natural process of winnowing.

Another important observation, however, is that not all CTSAs have had the award the same length of time. As a consequence, some institutions are, no doubt, still building their educational portfolios. Moreover, while teams of scientists working together might be dated, at a minimum, to the Manhattan Project (1942–45), the formal study of “interdisciplinary team science” is more recent in the biomedical research realm, and there is an understandable lack of clarity about many things, including a curriculum designed to train young investigators for this type of work. That may explain in part why 89% of the respondents to the survey considered the training they offer at their sites as at least somewhat effective, yet no two institutions conducted their training programs in exactly the same manner.

The challenge of interdisciplinary team science “training,” reflected in the CTSA survey results, may simply reflect the challenges that appear repeatedly in the literature about interdisciplinary team science.19–21 Like interdisciplinary team science itself, any robust training program for young investigators will require that various points of view be represented. These differing points of view, however, will at times result in conflict.20 The challenge will be to manage these “conflicts” productively within the training program, which will provide young investigators with the skills needed to do so once they are working in interdisciplinary teams.

In order to answer the primary question implied in the CTSA survey, i.e., “What methodology works best for training young investigators in interdisciplinary team science?”, long-term studies will need to be conducted that define, with precision, what is meant by “effectiveness” as an interdisciplinary investigator, and then young investigators will need to be followed over time to gauge their effectiveness based upon the type of training they received. We should note that a limitation of this study is the lack of a definition of “effective” in survey question #9, leaving the definition of effective training subject to interpretation by the program directors completing the survey. This points to an additional concern raised by this study: not only do CTSA institutions use different training approaches for team science, but there is also no consistent or widely accepted method for assessing interdisciplinary or team skills and evaluating trainee progress.

We view the results of these analyses as a “call to arms” for CTSA institutions nationwide. We cannot leave these questions unanswered if we are to achieve our goal of training a workforce that is well prepared for interdisciplinary team science. Therefore, we strongly recommend that the following four steps be taken by the members of the CTSA consortium.

Recommendation 1

The Education and Career Development key function committee for the CTSA should conduct a review of already described competencies and develop a common, agreed-upon set of competencies specific to interdisciplinary team science for all scholars and trainees. For example, Gebbie et al. (2008) used a Delphi survey process with a panel of interdisciplinary center directors to identify 17 competencies in three overarching domains: conducting research (e.g., “Use theories and methods of multiple disciplines in developing integrated theoretical and research frameworks”); communicating (e.g., “Advocate interdisciplinary research in developing initiatives within a substantive area of study”); and interacting with others (e.g., “Engage colleagues from other disciplines to gain their perspectives on research problems”).17 Similarly, Borrego and Newswander (2010) specified four domains of competency: grounding in multiple traditional disciplines; integration skills and broad perspective of the interdisciplinary domain; teamwork; and interdisciplinary communication.18 Most recently, Holt (2013) used a Delphi panel approach to identify 24 competencies for interdisciplinary research collaboration, which were clustered into five domains: intrapersonal; disciplinary awareness and exchange; integration; teamwork, management, and leadership; and fruition.22 These three competency sets are overlapping and similar, and provide a solid foundation for establishing a uniform recommended set of CTSA competencies for team science education and training.

Recommendation 2

Core curricular components from various institutions should be broadly shared on the CTSA website. These materials may include course syllabi, case studies, instructions for student projects, links to web-based training, and other materials that educators have deemed valuable in this domain. The desire for a shared community experience was evident in the survey results. As more curriculum components are shared and become available for comparison, some consensus may be reached as to best practices, which may be of particular benefit not only to new CTSA institutions, but also to non-CTSA institutions that become interested in expanding the scope of their biomedical research training programs.

Recommendation 3

New methods of instruction may be needed to support the development of team science competencies. Rather than relying on teaching methods that promote passive learning, such as traditional didactic lectures and even some web-based on-line learning, the social nature of the competencies involved in interdisciplinary team science suggests that more effective teaching strategies will support active learning and incorporate small group teaching such as problem-based learning and team-based learning.23–25 Such strategies have been used extensively in health sciences education, but less so in research training. Team-based learning (TBL) was recently suggested to be particularly useful for nurturing the ability to engage in interdisciplinary team science,26 which is supported by the recent report that TBL supports the reasoning strategies and social mechanisms that underlie ethical decision-making required for the responsible conduct of research.27 In the TBL method, students learn collaboratively, working through individual and group tasks and holding one another accountable for completing assignments and preparing for in-class activities. In addition to developing new methods of instruction to foster interdisciplinary team science skills, CTSA educators may lead faculty development efforts to support this training on a broad scale, across the consortium.

Recommendation 4

The CTSA Evaluation key function committee should undertake a review of the literature and select a set of assessment metrics that all CTSAs can use to gauge the success of their trainees in interdisciplinary team engagement and productivity. These may include “process” measures that are assessed before, during, or immediately after training, as well as “outcome” measures that reflect career achievements over the longer term. A working group of the EdCD KFC recently identified a set of metrics that may be used to evaluate career success for clinical and translational scientists and that includes metrics to assess collaboration and team science.28 For example, networking may be assessed using the 6-item Cross-Disciplinary Collaborative Activities Scale, which measures participation in cross-disciplinary collaboration, and the 10-item Research Orientation Scale, which measures researcher preference for unidisciplinary, multidisciplinary or interdisciplinary research. Both of these measures were developed by the Transdisciplinary Research on Energetics and Cancer initiative of the National Cancer Institute.29 The 23-item Research Collaboration Scale, developed by the Transdisciplinary Tobacco Use Research Center Program, measures trust and respect for team members, satisfaction with collaboration, and perception of the impact of collaboration.30 Additional assessment tools are available from the National Cancer Institute’s “Team Science Toolkit”,31 from the Science of Team Science (SciTS) website (sponsored by the Northwestern University Clinical and Translational Sciences Institute),32 and from another NIH publication: Collaboration and Team Science: A Field Guide.33 Another option may be to try to assess, over time, the degree of interdisciplinarity of an individual’s set of publications. Porter and colleagues (2007) have proposed using measurements based on the range of subject categories in the Thomson Institute for Scientific Information Web of Knowledge website for citations; that is, they suggest counting the number of subject categories cited by all the papers published by an individual author, in addition to the number of subject categories referenced by the publications that cite that author’s work.34 In combination, these factors would represent the “reach” of the author’s work across disciplines. While intriguing, these measures are cumbersome to compute and could require years of follow-up to be meaningful. Nevertheless, it would be useful to the CTSA consortium and to the field to identify several key metrics to calculate over time to enable valid, reproducible, commonly used strategies for evaluation of interdisciplinary team skills and productivity.
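To make the counting idea concrete, the following is a minimal sketch of the two tallies described above: the number of distinct subject categories cited by an author's papers, and the number of distinct subject categories of the publications that cite them. It is a simplified illustration only and does not reproduce the scoring formulas of Porter and colleagues. The `Paper` structure, the `category_reach` function, and the example data are all hypothetical; in practice the subject categories would have to be obtained from a citation database.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Paper:
    """One publication by the author (hypothetical structure for illustration)."""
    title: str
    # Subject categories of the works this paper cites.
    cited_categories: List[str] = field(default_factory=list)
    # Subject categories of the works that cite this paper.
    citing_categories: List[str] = field(default_factory=list)


def category_reach(papers: List[Paper]) -> Dict[str, int]:
    """Count distinct subject categories cited by, and citing, an author's papers."""
    cited = set()
    citing = set()
    for paper in papers:
        cited.update(paper.cited_categories)
        citing.update(paper.citing_categories)
    return {"categories_cited": len(cited), "categories_citing": len(citing)}


# Made-up example data for a single author with two papers.
example_papers = [
    Paper(
        title="Paper A",
        cited_categories=["Oncology", "Statistics", "Oncology"],
        citing_categories=["Oncology", "Public Health"],
    ),
    Paper(
        title="Paper B",
        cited_categories=["Statistics", "Psychology"],
        citing_categories=["Nursing"],
    ),
]

reach = category_reach(example_papers)
print(reach)
```

Repeated categories are counted once, so the tallies reflect breadth across disciplines rather than citation volume. Recomputing them at intervals would give the longitudinal view discussed above, subject to the same caveat that meaningful values may take years to accumulate.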

As the CTSA Consortium enters the next phase of its evolution through a strategic planning process as recommended by the recent Institute of Medicine report about the opportunities that lie ahead for advancing clinical and translational research,35 we hope the results of this survey and our recommendations are useful in developing measurable strategic goals for team science in the context of clinical and translational research. The groundwork has been laid for collaborations across CTSA institutions to identify and disseminate best practices for education and training in interdisciplinary team science, and for individual institutions to implement them as best fits the needs and resources specific to their environments.

Acknowledgments

This project has been funded in whole or in part with Federal funds from the National Center for Research Resources and National Center for Advancing Translational Sciences (NCATS), National Institutes of Health, through the Clinical and Translational Science Awards Program (CTSA). The manuscript was approved by the CTSA Consortium Publications Committee. All institutions are supported by the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (NIH), through the Clinical and Translational Science Awards Program (CTSA), a trademark of DHHS, part of the Roadmap Initiative, “Re-Engineering the Clinical Research Enterprise”: Columbia University, Irving Institute for Clinical and Translational Research (Grant #UL1TR000040); Georgetown-Howard Universities Center for Clinical and Translational Science (Grant #UL1TR000101); University of California, Davis (Grant #UL1TR000002); University of Florida Clinical & Translational Science Institute (Grant #UL1TR000064); University of Rochester School of Medicine and Dentistry (Grant #UL1TR000042); University of Southern California (Grant #UL1TR000130); Vanderbilt University (Grant #UL1TR000445).

References

- 1.Clinical and Translational Science Awards. [Accessed September 9, 2013];National Center for Advancing Translational Sciences. 2013 Summer; Available at: http://www.ncats.nih.gov/files/factsheet-ctsa.pdf.

- 2.Core Competencies for Clinical and Translational Research. [Accessed September 9, 2013];National Center for Advancing Translational Sciences. Available at: https://www.ctsacentral.org/education_and_career_development/core-competencies-clinical-and-translational-research.

- 3.Rosenfield PL. The potential of transdisciplinary research for sustaining and extending linkages between the health and social sciences. Soc Sci Med. 1992 Dec;35(11):1343–1357. doi: 10.1016/0277-9536(92)90038-r.

- 4.Stokols D, Misra S, Moser RP, et al. The ecology of team science: understanding contextual influences on transdisciplinary collaboration. Am J Prev Med. 2008 Aug;35(2 Suppl):S96–S115. doi: 10.1016/j.amepre.2008.05.003.

- 5.Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009 Apr;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 6.Merriam S. What Can You Tell From An N of 1?: Issues of Validity and Reliability in Qualitative Research. PAACE Journal of Lifelong Learning. 1995;4:50–60.

- 7.Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks. 1998

- 8.Vadaparampil ST, Quinn GP, Brzosowicz J, et al. Experiences of genetic counseling for BRCA1/2 among recently diagnosed breast cancer patients: a qualitative study. J Psychosoc Oncol. 2008;26(4):33–52. doi: 10.1080/07347330802359586.

- 9.Nekhlyudov L, Ross-Degnan D, Fletcher SW. Beliefs and expectations of women under 50 years old regarding screening mammography: a qualitative study. J Gen Intern Med. 2003 Mar;18(3):182–189. doi: 10.1046/j.1525-1497.2003.20112.x.

- 10.Weeks WB, Wallace AD, Surrott Kimberly BC. Changes in authorship patterns in prestigious US medical journals. Soc Sci Med. 2004;59:1949–1954. doi: 10.1016/j.socscimed.2004.02.029.

- 11.Baethge C. Publish together or perish. Dtsch Arztebl Int. 2008 May;105(20):380–383. doi: 10.3238/arztebl.2008.0380.

- 12.Wuchty S, Jones BF, Uzzi B. The increasing dominance of teams in production of knowledge. Science. 2007 May 18;316(5827):1036–1039. doi: 10.1126/science.1136099.

- 13.Zerhouni E. The NIH Roadmap. Science. 2003;302:63–72. doi: 10.1126/science.1091867.

- 14.Rhoten D. Interdisciplinary research: trend or transition. Items Issues. 2004;5:6–11.

- 15.Committee on Facilitating Interdisciplinary Research, National Academy of Sciences, National Academy of Engineering, Institute of Medicine. Facilitating Interdisciplinary Research. The National Academies Press; 2004.

- 16.Meyers FJ, Begg MD, Fleming M, et al. Strengthening the career development of clinical translational scientist trainees: a consensus statement of the Clinical Translational Science Award (CTSA) Research Education and Career Development Committees. Clin Transl Sci. 2012 Apr;5(2):132–137. doi: 10.1111/j.1752-8062.2011.00392.x.

- 17.Gebbie KM, Meier BM, Bakken S, et al. Training for interdisciplinary health research: defining the required competencies. J Allied Health. 2008 Summer;37(2):65–70.

- 18.Borrego M, Newswander LK. Definitions of Interdisciplinary Research: Toward Graduate-Level Interdisciplinary Learning Outcomes. Rev Higher Ed. 2010 Fall;34(1):61–84.

- 19.Bergman JZ, Rentsch JR, Small EE, et al. The shared leadership process in decision-making teams. J Soc Psychol. 2012;152(1):17–42. doi: 10.1080/00224545.2010.538763.

- 20.Guimerà R, Uzzi B, Spiro J, et al. Team assembly mechanisms determine collaboration network structure and team performance. Science. 2005 Apr 29;308(5722):697–702. doi: 10.1126/science.1106340.

- 21.Christensen C, Larson JR, Abbott A, Ardolino A, Franz T, Pfeiffer C. Decision making of clinical teams: communication patterns and diagnostic error. Med Decis Making. 2000 Jan-Mar;20(1):45–50. doi: 10.1177/0272989X0002000106.

- 22.Holt VC. Graduate education to facilitate interdisciplinary research collaboration: Identifying individual competencies and developmental activities; Presentation at the Annual International Science of Team Science (SciTS) Conference; June 24-27, 2013; Northwestern University, Evanston, IL.

- 23.Schmidt HG, Rotgans JI, Yew EH. The process of problem-based learning: what works and why. Med Educ. 2011;45:792–806. doi: 10.1111/j.1365-2923.2011.04035.x.

- 24.Michaelsen L, Knight A, Fink L, editors. Team-Based Learning: A Transformative Use of Small Groups in College Teaching. Sterling, Va: Stylus; 2004.

- 25.Michaelsen L, Sweet M, Parmelee D. Team-Based Learning: Small Group Learning’s Next Big Step. [Accessed September 9, 2013];New Directions for Teaching and Learning. 2008;116. Available at: http://onlinelibrary.wiley.com/doi/10.1002/tl.v2008:116/issuetoc.

- 26.McCormack WT. Facilitating (Learning) Interdisciplinary Research with Team-Based Learning (TBL); Presentation at the Annual International Science of Team Science (SciTS) Conference; June 24-27, 2013; Northwestern University, Evanston, IL.

- 27.McCormack WT, Garvan CW. Team-Based Learning Instruction for Responsible Conduct of Research Positively Impacts Ethical Decision-Making. Accountability in Research. 2013 doi: 10.1080/08989621.2013.822267. (in press)

- 28.Lee LS, Pusek SN, McCormack WT, Helitzer DL, Martina CA, Dozier AM, Ahluwalia JS, Schwartz LS, McManus LM, Reynolds BD, Haynes EN, Rubio DM. Clinical and translational scientist career success: metrics for evaluation. Clin Transl Sci. 2012;5(5):400–407. doi: 10.1111/j.1752-8062.2012.00422.x.

- 29.Hall KL, Stokols D, Moser RP, Taylor BK, Thornquist MD, Nebeling LC, Ehret CC, Barnett MJ, McTiernan A, Berger NA, Goran MI, Jeffery RW. The collaboration readiness of transdisciplinary research teams and centers: Findings from the National Cancer Institute's TREC Year-One evaluation study. Am J Prev Med. 2008;35(2 Suppl):S161–S172. doi: 10.1016/j.amepre.2008.03.035.

- 30.Masse LC, Moser RP, Stokols D, Taylor BK, Marcus SE, Morgan GD, Hall KL, Croyle RT, Trochim WM. Measuring collaboration and transdisciplinary integration in team science. Am J Prev Med. 2008;35(2 Suppl):S151–S160. doi: 10.1016/j.amepre.2008.05.020.

- 31. [Accessed September 9, 2013];Team Science Toolkit. Available at: https://www.teamsciencetoolkit.cancer.gov.

- 32. [Accessed September 9, 2013];SciTS & Team Science Resources. Available at: http://www.scienceofteamscience.org.

- 33.Bennett LM, Gadlin H, Levine-Finley S. [Accessed September 9, 2013];Collaboration and Team Science: A Field Guide. National Institutes of Health. [NIH Publication No. 10-7660] 2010 Aug; Available at: https://ccrod.cancer.gov/confluence/download/attachments/47284665/TeamScience_FieldGuide.pdf?version=2&modificationDate=1285330231523.

- 34.Porter AL, Cohen AS, Roessner JD, Perreault M. Measuring researcher interdisciplinarity. Scientometrics. 2007;72:117–147.

- 35. [Accessed September 9, 2013];The CTSA Program at NIH: Opportunities for Advancing Clinical and Translational Research. Institute of Medicine of the National Academies. 2013 Jun 25; Available at: http://www.iom.edu/Reports/2013/The-CTSA-Program-at-NIH-Opportunities-for-Advancing-Clinical-and-Translational-Research.aspx.