Abstract

Using fMRI, we examined how the affective experience of choice, the means by which individuals exercise control, is modulated by the valence of potential outcomes (gains, losses). When trials involved potential gains, participants reported liking cues predicting a choice opportunity better than cues predicting no-choice – an effect that corresponded with BOLD increases in ventral striatum (VS). Surprisingly, no differences were observed between choice and no-choice cues when anticipating potential losses. Individual differences in subjective choice preference in the loss condition, however, corresponded to choice-related BOLD activity in the VS. We conducted a second study to examine whether monetary losses were perceived differently in the context of simultaneous gains. In this second study, which presented losses only, participants reported greater liking of choice trials and showed greater choice cue-related VS activity. Collectively, the findings suggest that the affective experience of choice involves reward processing circuitry when anticipating appetitive and aversive outcomes, but may be sensitive to context and individual differences.

Keywords: fMRI, striatum, anticipation, choice, perceived control, reward

INTRODUCTION

Imagine you are driving to work on the highway when suddenly traffic comes to a standstill – you see the nearest exit and consider the choice between sitting in traffic or getting off the highway to take a longer route to work. Now imagine you are sitting on a bus in that traffic, and you are at the will of the driver. In the first scenario, neither option is necessarily better; yet somehow the situation seems less stressful when a choice is available and you are the one in control. Is there something inherently valuable in exercising control through choice?

Belief in one’s ability to exert control over the environment is essential for an individual’s well-being. Converging evidence suggests that perception of control enhances positive emotions and can also buffer negative emotional responses to aversive events (e.g. Leotti, Iyengar, & Ochsner, 2010; Ryan & Deci, 2006; Shapiro, Schwartz, & Astin, 1996). For instance, impaired coping ability and exacerbated stress responses to aversive events are observed in the absence of perceived control (e.g. Bandura, 1992; Maier, 1986), suggesting that perceived control may mitigate the negative emotional response elicited by anticipating potentially negative outcomes. Since perception of control is integral for adaptive behavior and emotion regulation, it is important to identify the behavioral and brain mechanisms by which perceived control exerts its modulatory effects.

Every day, individuals make countless choices aimed to approach positive outcomes and avoid negative outcomes. When a causal action (i.e. choice) results in a positive consequence, future opportunity to exercise choice becomes desirable, and may be experienced as inherently rewarding. Thus, expectancies of control, through choice, may serve an important role in regulation of affect and motivated behavior. However, it is unknown whether the affective experience of choice and control depends on the valence of the resulting outcome.

Recently, we demonstrated that, when anticipating positive outcomes, choice opportunity recruits brain regions involved in affective and motivational processes (Leotti & Delgado, 2011). Here, we examine whether similar neural circuitry is involved when anticipating choice opportunity in cases where that choice results in avoidance of a negative outcome. While previous research has demonstrated greater activity in reward circuitry during the anticipation of reward and the avoidance of negative outcomes (Kim, Shimojo, & O'Doherty, 2006; Knutson, Adams, Fong, & Hommer, 2001), as well as for rewards contingent on behavior (Bjork & Hommer, 2007; O'Doherty et al., 2004; Tricomi, Delgado, & Fiez, 2004), the present study is unique because it investigates the affective experience involved when merely anticipating the opportunity to exercise control, through choice, rather than looking at the actual choice behavior or anticipating a positive or negative monetary outcome.

Our hypothesis is that perceiving control carries motivational significance, regardless of whether the choice is followed by a potentially positive or negative outcome. If this is the case, we expect cues that predict choice to produce greater activity in regions involved in reward anticipation, such as the ventral striatum (VS; Knutson, Taylor, Kaufman, Peterson, & Glover, 2005). An alternative hypothesis, however, is that choice may be undesirable in the context of potential losses, since incorrect choices may lead to feelings of regret, and individuals may prefer to accept the status quo rather than make errors of commission (Samuelson & Zeckhauser, 1988). The present study tests these hypotheses using a simple choice paradigm designed to examine the affective experience of anticipating choice in the context of positive and negative outcomes (adapted from Leotti & Delgado, 2011).

METHODS

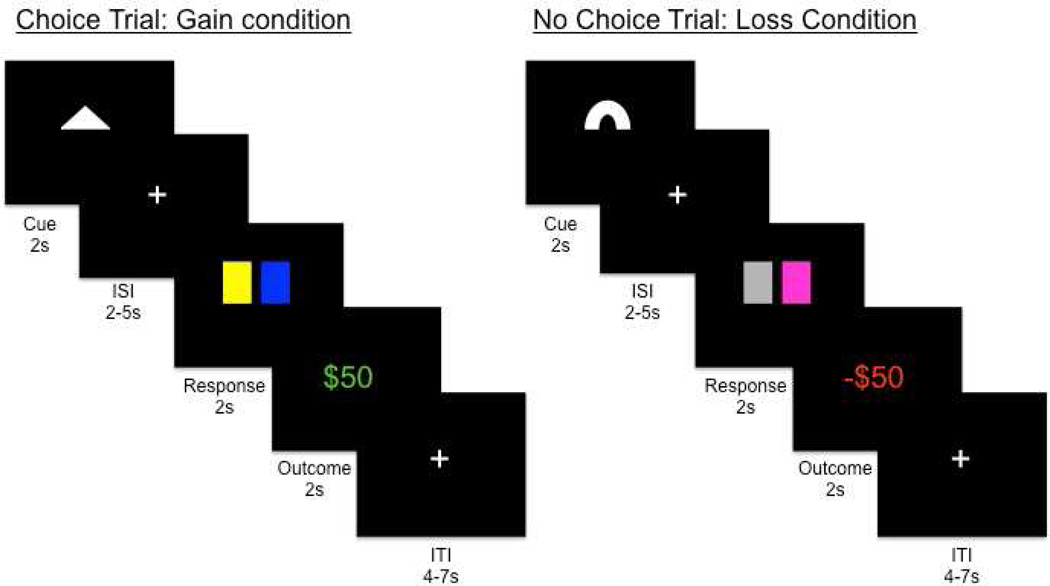

The experimental paradigm (Figure 1) involved the opportunity to choose between two keys (choice condition), or acceptance of a computer-selected key, which led to similar outcomes (no-choice condition). Symbolic cues predicted upcoming choice vs. no-choice trial types and whether key presses would result in a potential monetary gain (Gain condition) or monetary loss (Loss condition). Participants provided subjective ratings of these symbolic cues, and fMRI analyses focused on BOLD activity in response to the cue during the anticipation of choice. Experiment 1 used a 2×2 design to examine the anticipation of choice opportunity (choice vs. no-choice) as a function of outcome valence (Gain vs. Loss). Experiment 2 examined choice effects for losses occurring in the absence of potential gains, to determine the role of context in the affective experience of choice.

Figure 1.

Example trial structure for choice trial in the gain block (left) and a no choice trial in the loss block (right). At the start of each trial, a symbolic cue explicitly indicating both the Choice Opportunity (Choice vs. No-Choice) and Outcome Valence (Gain vs. Loss) was presented centrally for 2s. Following the cue, there was a randomly jittered inter-stimulus interval (ISI) marking the anticipation phase. Participants were then presented with the response phase (presented for 2s): either a choice scenario, in which they indicated the location (left or right) of the key they wished to choose, or a no-choice scenario, where participants indicated the location of the one available key selected by the computer. Responses and reaction times were recorded on each trial. Immediately after the response phase, the monetary outcome was presented for 2s during an outcome phase. The monetary outcome was followed by a randomly jittered inter-trial interval (ITI).

Experiment 1

Participants

Twenty-four healthy right-handed individuals (12 males; median age = 21 years) were recruited from the Rutgers University-Newark campus and gave informed consent according to the review boards at Rutgers and UMDNJ (see Participants in Supplementary Materials for details).

Procedure

Participants were presented with blocks of either gain or loss trials, which were separated into blocks to minimize the cost of switching between trial types. At the start of a new block, participants saw a screen with either “Gain Next” or “Loss Next” (presented for 2s). Each trial (Figure 1) in a block involved the presentation of a cue that announced the type of condition (Choice or No-Choice) followed by two colored keys. In the Choice condition, participants could freely choose between the two keys and in the No-Choice condition, participants were forced to accept a computer-selected key. In the Gain blocks, participants were instructed to select the key (choice between blue and yellow keys) that would win the most money and key presses could result in potential monetary gains ($0, $50, or $100 experimental dollars). In the Loss blocks, participants were instructed to select the key (choice between cyan and magenta keys) that would lose the least money and key presses could result in potential monetary losses (-$0, -$50, -$100). Within the Gain and Loss conditions, the two colored keys were rewarded or penalized equally, regardless of the specific key selected (See Supplemental Materials for more details). Participants were not informed of reward probabilities in advance. Participants were informed that the overall goal was to earn as many experimental dollars as possible, which would be translated into a monetary bonus at the end of the experiment. Because both keys (within a Gain or Loss condition) had the same average value, all subjects earned the same range of experimental dollars, which was translated into real monetary compensation of $50 at the end of the experiment. Data collection and stimuli presentation were done with E-prime-2.0 (PST, Pittsburgh, PA).

There were four types of symbolic cues presented. Each cue marked the beginning of a new trial and indicated which one of four trial types would occur: (1) Choice Gain: choice trials that could result in a gain (e.g. $50 or $100) or non-gain ($0); (2) Choice Loss: choice trials that could result in a loss (e.g. -$50 or -$100) or a non-loss (-$0); (3) No-choice Gain: no-choice trials that could result in a gain or non-gain; and (4) No-choice Loss: no-choice trials that could result in a loss or non-loss. Choice vs. no-choice trials were differentiated by cue shape (e.g. triangle vs. horseshoe), and the gain and loss conditions were differentiated by the orientation of the shape (pointing upward or downward). Associations between cues and trial types were learned explicitly prior to the scanning session. Each of the four trial types occurred 32 times throughout the task and trial order was pseudorandomized within four valence blocks (4 choice and 4 no-choice trials per block) in each of four separate fMRI runs. The order of the valence blocks varied within each functional run, and the order of the runs varied across subjects.
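The trial counts described above can be checked with a short sketch. This is only an illustration of the stated design (four runs, four valence blocks per run, 4 choice and 4 no-choice trials per block), not the E-Prime script used in the study. The function names, the assumption of two Gain and two Loss blocks per run, and the use of a plain shuffle for the pseudorandomization are hypothetical choices made for the example.

```python
import random
from collections import Counter

RUNS = 4                   # separate fMRI runs (from the text)
BLOCKS_PER_RUN = 4         # valence blocks per run (from the text)
TRIALS_PER_CONDITION = 4   # 4 choice + 4 no-choice trials per block (from the text)


def build_schedule(seed=0):
    """Return a list of (run, block, valence, trial_type) tuples.

    Assumption: each run contains two Gain and two Loss blocks in shuffled
    order. The text only states that block order varied within each run.
    """
    rng = random.Random(seed)
    schedule = []
    for run in range(1, RUNS + 1):
        valences = ["Gain", "Loss"] * (BLOCKS_PER_RUN // 2)
        rng.shuffle(valences)
        for block, valence in enumerate(valences, start=1):
            trials = (["Choice"] * TRIALS_PER_CONDITION
                      + ["No-choice"] * TRIALS_PER_CONDITION)
            rng.shuffle(trials)  # stand-in for the study's pseudorandomization
            for trial_type in trials:
                schedule.append((run, block, valence, trial_type))
    return schedule


def count_trial_types(schedule):
    """Count how often each (valence, trial_type) combination occurs."""
    return Counter((valence, trial_type) for _, _, valence, trial_type in schedule)


if __name__ == "__main__":
    for cell, n in sorted(count_trial_types(build_schedule()).items()):
        print(cell, n)
```

Under these assumptions the schedule contains 128 trials, with each of the four trial types occurring 32 times, which matches the counts reported in the text.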

Immediately following the scanning session, participants were asked to rate how much they liked/disliked each of the trial types on a scale from 1 (disliked a lot) to 5 (liked a lot). A rating of 3 indicated that the trial was neither liked nor disliked (neutral rating).

fMRI Data Acquisition and Analyses

A 3T Siemens Allegra head-only scanner and a Siemens standard head coil were used for data acquisition at Rutgers’ University Heights Center for Advanced Imaging. Analysis of imaging data was conducted using BrainVoyager software (version 2.3; Brain Innovation, Maastricht, The Netherlands). A random effects analysis was performed on the functional data using a general linear model, including the four cue conditions described above, as well as the response/outcome phase and nuisance variables (see Supplementary Materials for details). To explore our a priori hypotheses, we first conducted a region of interest (ROI) analysis, with ROIs in the midbrain and VS, defined by coordinates reported in the literature as selective to the anticipation of monetary reward (Knutson et al., 2005), and used previously to investigate the affective value of anticipating choice (Leotti & Delgado, 2011). Parameter estimates (betas) extracted from these regions were compared across cue types using paired t-tests, and significant statistical tests are reported at a corrected threshold of p < .05. To complement the use of functionally defined ROIs, we confirmed and expanded these results with a whole-brain analysis examining differences in anticipatory BOLD activity associated with the four cue types using a 2 (Choice Availability)×2 (Outcome Valence) repeated measures ANOVA (p < .005, k = 6 contiguous voxels for corrected threshold of p < .05). See fMRI Acquisition and Analyses in the Supplemental Material for additional details.
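For readers less familiar with the ROI comparison, the paired t-test on per-participant betas can be sketched as follows. This is a minimal stdlib illustration of the statistic itself, not the BrainVoyager pipeline used in the study. The function name `paired_t` and the beta values in the usage example are hypothetical and are not study data.

```python
import math
import statistics


def paired_t(condition_a, condition_b):
    """Paired-samples t statistic and degrees of freedom.

    Each list holds one value per participant (e.g. an ROI beta for the
    choice cue and for the no-choice cue), in the same participant order.
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("both conditions need one value per participant")
    n = len(condition_a)
    if n < 2:
        raise ValueError("at least two participants are required")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    sd = statistics.stdev(diffs)  # sample SD of the within-subject differences
    if sd == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Hypothetical betas for four participants, for illustration only.
    choice_betas = [1.0, 2.0, 3.0, 4.0]
    no_choice_betas = [0.0, 0.0, 1.0, 1.0]
    t, df = paired_t(choice_betas, no_choice_betas)
    print(f"t({df}) = {t:.2f}")
```

With 24 participants, as in Experiment 1, the test has 23 degrees of freedom, which is the form in which the ROI results are reported.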

Experiment 2

Participants

Fourteen right-handed individuals (8 females; median age = 21 years) were included in this study and gave informed consent.

Procedure

The task used in Experiment 2 was identical to that in Experiment 1, with the exception that the Gain trial blocks were removed. Thus, the task consisted of 32 choice trials and 32 no-choice trials, all of which could lead to potential monetary losses (-$0, -$50, -$100). Participants were first endowed with $5000 play money, and instructed to keep as much money as possible. As in Experiment 1, all participants ended up losing the same range of experimental dollars, resulting in a final payment of $50. After the scanning session, participants rated how much they liked or disliked the cues on a scale from 1 (disliked a lot) to 7 (liked a lot), where a rating of 4 indicated that the trial was neither liked nor disliked (neutral rating).

fMRI Data Acquisition and Analyses

Data were acquired using a Siemens 3T Magnetom Trio whole-body scanner at Rutgers University Brain Imaging Center (RUBIC), and analyzed using BrainVoyager QX (v2.3, Brain Innovation). Choice and no-choice cues were compared in the previously defined ROIs and across the whole brain.

RESULTS

Experiment 1

Behavioral Results

Cue Ratings

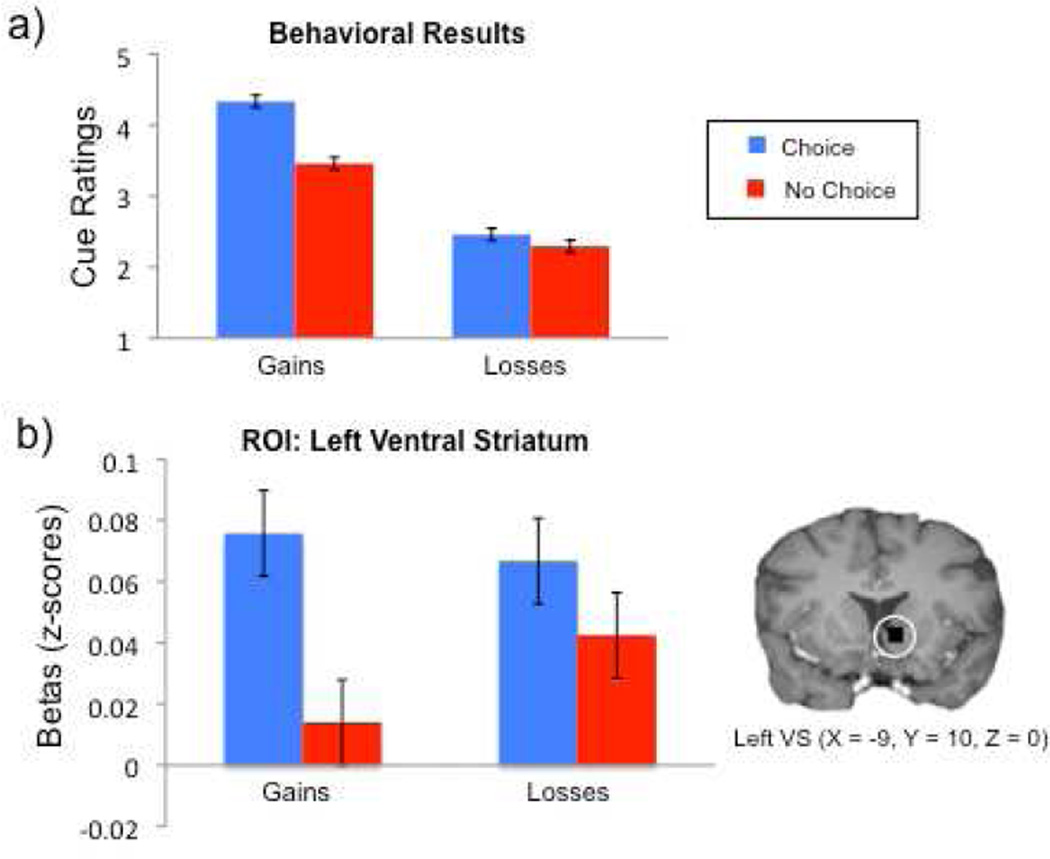

Participants reported liking cues predicting choice more than cues predicting no-choice when anticipating potential monetary gains. However, in the context of monetary losses, the difference in cue ratings was not significant (see Figure 2a).

Figure 2.

(a) Cues predicting choice were liked better than those predicting no choice opportunity when playing for monetary gains, but not monetary losses. (b) In the ventral striatum (a priori ROI sensitive to anticipation of monetary gain), BOLD activity was significantly greater for choice cues in the monetary gain condition, but not in the monetary loss condition.

The full factorial analysis revealed a significant main effect of choice (F(1,23) = 7.78, p = .01), a significant main effect of valence, such that ratings were positive (above a score of 3) for the Gain condition, and ratings were negative (below 3) for the Loss condition (F(1,23) = 38.4, p < .0005), and a marginally significant interaction term (F(1,23) = 4.136, p=.054), illustrating the larger preference for choice cues in the Gain condition relative to the Loss condition.
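In a 2×2 fully within-subject design such as this one, the interaction term has one numerator degree of freedom and is equivalent to a one-sample t-test on each participant's difference of differences, with F = t². The sketch below illustrates that relationship. The function name `interaction_f` and the ratings in the usage example are hypothetical and are not the study's data or analysis code.

```python
import math
import statistics


def interaction_f(choice_gain, nochoice_gain, choice_loss, nochoice_loss):
    """Interaction F for a 2x2 within-subject design.

    Each argument is a list with one rating per participant, in the same
    participant order. Returns (F, (df_numerator, df_denominator)).
    """
    # Per-participant contrast: choice effect in Gain minus choice effect in Loss.
    contrast = [
        (cg - ng) - (cl - nl)
        for cg, ng, cl, nl in zip(choice_gain, nochoice_gain,
                                  choice_loss, nochoice_loss)
    ]
    n = len(contrast)
    if n < 2:
        raise ValueError("at least two participants are required")
    sd = statistics.stdev(contrast)
    if sd == 0:
        raise ValueError("F is undefined when all contrasts are identical")
    t = statistics.mean(contrast) / (sd / math.sqrt(n))
    return t * t, (1, n - 1)


if __name__ == "__main__":
    # Hypothetical 1-5 liking ratings for four participants.
    f_value, df = interaction_f(
        choice_gain=[5, 4, 5, 4],
        nochoice_gain=[3, 3, 4, 2],
        choice_loss=[3, 2, 2, 3],
        nochoice_loss=[2, 2, 3, 2],
    )
    print(f"F{df} = {f_value:.2f}")
```

With 24 participants the denominator degrees of freedom are 23, consistent with the F(1,23) values reported above.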

Participants also rated the cues on several other dimensions and reported preferences for specific choice options (see Supplementary Results and Supplementary Table 1).

Reaction Times

Reaction times (RT) were collected during the response phase of the choice task. There was a significant main effect of Choice Opportunity (F(1,23) = 28.025, p < .001), such that responses were slower when making a decision between two keys (choice condition) than when simply responding to the location of a key (no-choice condition). There was a main effect of Outcome Valence (F(1,23) = 26.6, p < .001), such that responses were slower overall when making decisions leading to potential monetary losses. There was also a significant interaction (F(1,23) = 5.01, p = .035), such that the difference in response time between the choice and no-choice conditions was greater in the loss condition than in the gain condition, which suggests that making a choice was more difficult when anticipating potential losses, perhaps due to anticipated regret. Reaction time differences between choice conditions were not correlated with differences in BOLD activity.

Neuroimaging Results

Region of Interest Analysis

Within the Gain condition, betas associated with the Choice cues were significantly greater than those for the No-choice cues in both left (t(23)= 3.9, p < .05; see Figure 2b), and right VS (t(23)= 4.78, p < .05), as well as midbrain (t(23)= 2.7, p < .05), replicating previous findings of choice opportunity in the context of potential rewards (Leotti & Delgado, 2011). However, within the loss condition, there were no significant differences between cue types in any of the ROIs. Results of the full factorial analysis are provided in the Supplemental Materials.

Choice Opportunity×Outcome Valence ANOVA: Whole-brain Analysis

We observed significant main effects of Choice Opportunity, where activity was greater for choice cues relative to no-choice cues, in the left VS, midbrain, dorsal anterior cingulate cortex (dACC), and supplementary motor area (SMA). Activity was greater for no-choice cues relative to choice cues in the right ventrolateral prefrontal cortex (vlPFC), bilateral inferior parietal lobule, and right posterior middle temporal gyrus. Main effects of Outcome Valence were found only in the precuneus. We observed significant Choice Opportunity×Outcome Valence interactions in the dorsal and ventral striatum, midbrain, dACC, ventromedial PFC (vmPFC), and bilateral hippocampus. For all interactions, activity was greater for choice in the Gain condition, but greater for no-choice in the Loss condition, though given the individual differences in choice preference (see below and Supplementary Materials), it is difficult to interpret these interactions. Supplementary Table 2 provides a list of all main effects and interactions.

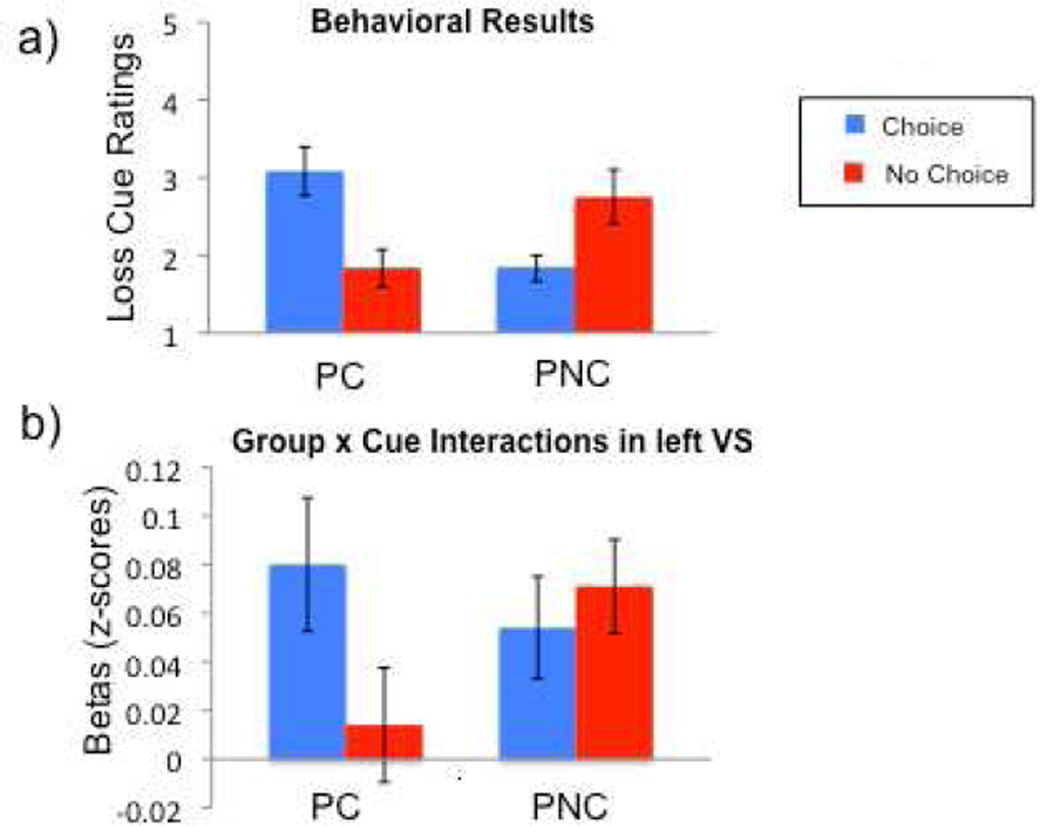

Individual Differences in Loss Condition

Contrary to our primary hypothesis that choice would be rewarding independent of outcome valence, we observed no significant effect of choice in the Loss condition. However, given that individuals may vary in their sensitivity to losses in the context of simultaneous gains, we explored whether individual differences in perceived value of choice in the Loss condition may explain why we did not observe main effects of Choice Opportunity. We looked at post-task questionnaires of cue ratings to establish whether individuals rated cues predicting choice as better liked than those predicting no-choice (choice preference). In the Gain condition, most of the participants reported an explicit preference for choice cues. In contrast, in the Loss condition, only half of the sample population (n=12) reported liking choice cues better than no-choice cues, while the remaining subjects reported a preference for the no-choice cues. Subjects were categorized into two groups based on their preference for choice in the Loss condition to examine individual differences in valuation of choice (see Figure 3a).

Figure 3.

Individual differences analyses in monetary loss condition. Based on loss cue ratings, participants were separated into groups: those who preferred choice (PC) and those who preferred no-choice (PNC). (a) Cue ratings of different trial types, split by group, illustrating the basis for grouping. (b) Choice Opportunity by Group interactions in the left ventral striatum (VS) ROI reveals BOLD activity mirrors behavioral ratings of cues.

Individuals in the Prefer Choice (PC) group (N=12) reported a preference for choice cues over no-choice cues (Choice rating – no-choice rating: M = 1.25) and individuals in the Prefer No-choice (PNC) group (N=12) reported no preference or an explicit preference for the no-choice cues (M = −.92). A Choice Opportunity×Group ANOVA demonstrated no significant main effect of choice, as expected, but a significant interaction (F(1,22) = 55.5, p < .001).
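The grouping rule described above can be written out as a short sketch. It follows the stated definition (PC if the choice cue was rated higher than the no-choice cue in the Loss condition; PNC if the ratings were equal or the no-choice cue was rated higher). The function names and the ratings in the usage example are hypothetical and are not the study's data.

```python
import statistics


def classify_preference(choice_rating, no_choice_rating):
    """Assign a participant to the PC or PNC group from Loss-condition cue ratings.

    PC: choice cue rated higher than the no-choice cue.
    PNC: no preference, or no-choice cue rated higher.
    """
    return "PC" if choice_rating - no_choice_rating > 0 else "PNC"


def group_mean_differences(ratings):
    """Mean (choice minus no-choice) rating difference within each group.

    `ratings` is a list of (choice_rating, no_choice_rating) pairs,
    one pair per participant.
    """
    diffs_by_group = {"PC": [], "PNC": []}
    for choice_rating, no_choice_rating in ratings:
        group = classify_preference(choice_rating, no_choice_rating)
        diffs_by_group[group].append(choice_rating - no_choice_rating)
    return {
        group: statistics.mean(diffs) if diffs else None
        for group, diffs in diffs_by_group.items()
    }


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for four participants, for illustration only.
    example = [(4, 2), (3, 2), (2, 2), (1, 3)]
    print(group_mean_differences(example))
```

Because group membership is defined by the sign of the rating difference, a Choice Opportunity×Group interaction on the ratings is expected by construction; the informative test is whether BOLD activity shows the same pattern.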

Because there was considerable individual variation in reported preference for choice in the Loss condition, we compared BOLD activity for cues predicting potential losses for the PC and PNC groups. Activity in the VS was consistent with reported preference for cue types, such that those in the PC group demonstrated greater activity in the VS when anticipating choice, and those in the PNC group demonstrated greater activity in the VS when anticipating no-choice (see Figure 3b). This Choice Opportunity by Group interaction was significant in the left VS (F(1,22) = 7.1, p = .014), and marginally significant in the right VS (F(1,22) = 3.7, p = .067), but was not significant in the midbrain. However, there were no significant interactions for cue ratings (p = 0.4) or for any of the ROIs in the gain condition (p > 0.4), suggesting the interaction is specific to the loss condition.

Experiment 2

Experiment 1 replicated previous findings regarding the anticipation of choice opportunity in the context of potential monetary gains (Leotti & Delgado, 2011). However, results were more variable when anticipating choice in the context of potential monetary losses. One explanation for this variability in brain and behavioral responses in choice valuation under aversive conditions (i.e., loss) lies in individual differences in choice preference, as suggested by Experiment 1. A contributing factor could be the context effect inherent in our experimental design, where losses co-occur with gains, which may have magnified the individual differences observed in Experiment 1. Indeed, the impact of context effects (e.g. endowment effects, shifts in reference point) on loss aversion and decision-making has been well established (De Martino, Kumaran, Holt, & Dolan, 2009; De Martino, Kumaran, Seymour, & Dolan, 2006; Kahneman & Tversky, 2000). Because losses were experienced concurrently with gains, the anticipation of losses may have been more salient for some participants. We hypothesized that if losses were experienced in the absence of monetary gains, subjective reports of preference and brain correlates of choice would be more consistent than those observed in Experiment 1. To test this hypothesis, we conducted a follow-up experiment, where choice anticipation was examined in the context of monetary losses only.

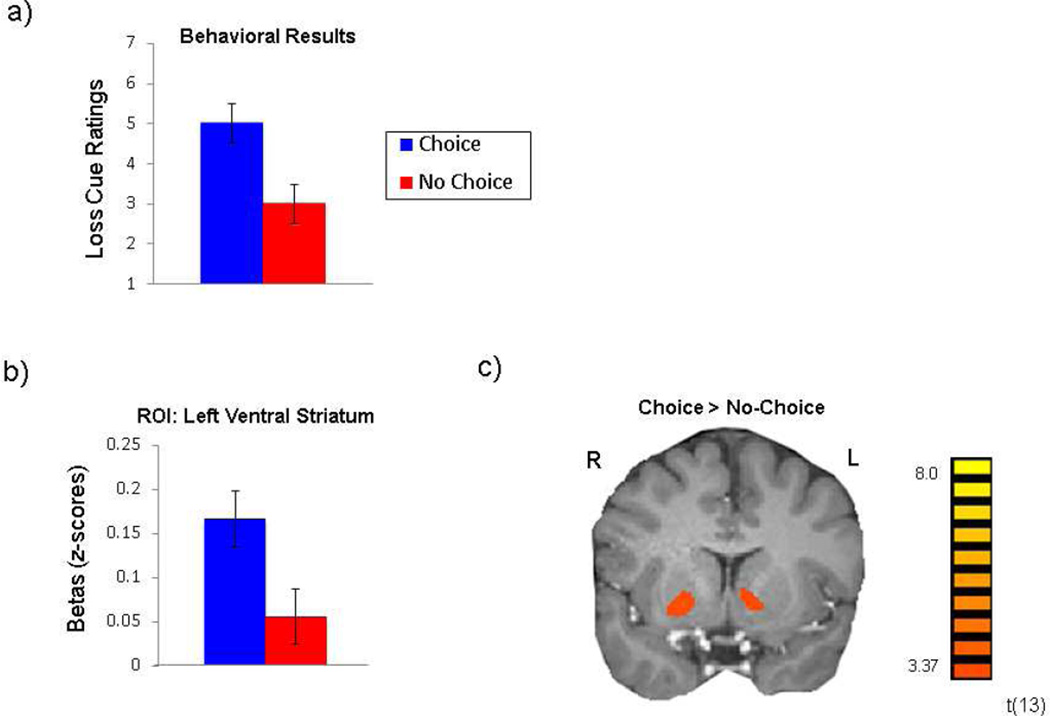

Experiment 2: Behavioral Results

Participants in Experiment 2 reported liking cues predicting an opportunity for choice (M= 5, SD= 1.2) significantly more than cues predicting no-choice (M = 3, SD = 1.5; t(13) = 4.1, p =.001) in the Loss condition (Figure 4a).

Figure 4.

Experiment 2: the context of choice opportunity. When the opportunity for choice is presented in the context of losses only, we observed significantly greater (a) liking of choice cues and choice-related BOLD activity in the ventral striatum, both in the (b) a priori ROI and (c) in the unmasked whole-brain analysis.

This is in contrast to the results of Experiment 1, suggesting that choice opportunity was valued differently in the context of negative outcomes alone (see Supplementary Table 1 for study comparison).

Experiment 2: Neuroimaging Results

Region of Interest Analysis

Linear contrasts of choice effects were analyzed within the a priori ROIs defined in Experiment 1 (Figure 4b). Consistent with our findings from our previous study (Leotti & Delgado, 2011), in the gain condition in Experiment 1, and in the loss condition for the PC group in Experiment 1, betas associated with the Choice cues were significantly greater than those for the No-choice cues in the left VS (t(13) = 3.5, p = .004), and right VS (t(13) = 2.8, p = .014), but not in the midbrain or right anterior insula ROIs.

Whole-brain analysis

A linear contrast of BOLD activity for Choice cues vs. No-Choice cues revealed greater choice-related activity in the bilateral ventral striatum and anterior insula (Figure 4c; Supplementary Table 3).

DISCUSSION

Consistent with our previous study (Leotti & Delgado, 2011), participants in Experiment 1 reported greater preference for choice opportunity and demonstrated increased activity in the VS and midbrain when anticipating choices leading to potential monetary gains. The recruitment of neural circuitry involved in reward-related processes, such as the VS (Delgado, 2007; Montague & Berns, 2002; O'Doherty, 2004; Rangel, Camerer, & Montague, 2008; Robbins & Everitt, 1996), suggests that in the context of positive outcomes, choice opportunity may be inherently valuable. However, in the context of negative outcomes, specifically monetary losses, there was greater variability in the behavioral and brain correlates of the value of choice. Individuals who reported liking choice in the context of potential losses demonstrated increased activity in the ventral striatum in response to Choice cues relative to No-choice cues, whereas those who reported disliking choice in the loss condition demonstrated the opposite pattern. The findings from Experiment 2 suggest that this variability is reduced when potential losses occur in the absence of monetary gains. Specifically, participants more consistently reported a behavioral preference for choice over no-choice and demonstrated a corresponding increase in striatal activity when anticipating choice opportunity in the Loss condition only. The discrepancy between the two studies when examining choice in the loss condition suggests an important role of context in the affective experience of choice and control, and highlights individual differences in the perceived value of choice.

A major difference between the two experiments presented here is the interpretation of the potentially negative outcomes. In the context of Experiment 1, avoiding a monetary loss may be perceived as a negative outcome, relative to receipt of a monetary gain, and as a result, the value of choice is more variable across participants, depending on possible individual differences in loss aversion and perceived control in the face of failure, which people tend to attribute to external sources beyond one’s control (Brewin & Shapiro, 1984; Rotter, 1966). In contrast, in the context of Experiment 2, avoiding a monetary loss may be perceived as a positive outcome (Kim et al., 2006), thus enhancing perceived control (Thompson, Armstrong, & Thomas, 1998), and producing greater reward-related activity. This may explain the differences in choice-related VS activity across the two studies, consistent with previous findings demonstrating that the VS is particularly susceptible to context effects from a shift in reference point (De Martino et al., 2009).

A potential consequence of the context effect present in our design is that it may also have amplified individual differences, in turn contributing to the variability in behavioral and brain responses observed in the loss condition. The act of choosing may be more stressful to some individuals when there is not sufficient information to make an informed selection (Paterson & Neufeld, 1995), as is the case in the current experiments, where there is no real difference between options, and outcomes occur randomly (see Supplemental Discussion). In fact, greater activity in the lateral PFC has been associated with a tendency to avoid cognitive demand in decision-making (McGuire & Botvinick, 2010). This may explain why, in the context of potential losses in Experiment 1, we observed greater activity in the vlPFC for the no-choice condition across participants. Furthermore, individuals may vary in their tendency to accept the status quo, as opposed to overtly acting in a way that could lead to an error and potential regret (Baron & Ritov, 1994; Samuelson & Zeckhauser, 1988). Additionally, there may be individual differences in the perceived threat of monetary losses (Sokol-Hessner et al., 2009), or loss aversion (Tom, Fox, Trepel, & Poldrack, 2007) that could influence neural activity in the VS and the subjective value of choice opportunity.

Collectively, the findings suggest that the opportunity to exercise control, through choice, is desirable for both choices that lead to obtaining rewards or avoiding punishment, and recruits a common neural circuitry involved in reward processing. Though the VS is commonly reported in the reward literature, it has also been linked to regulation of negative emotion, including avoidance learning (Delgado, Jou, Ledoux, & Phelps, 2009), reappraisal of negative affect (Schiller & Delgado, 2010; Wager, Davidson, Hughes, Lindquist, & Ochsner, 2008), and re-evaluation of aversive events (Sharot, Shiner, & Dolan, 2010). In the current experiments, choice-related activity in the VS following the cue may suggest that reward processes are activated when anticipating an opportunity to choose. The recruitment of the VS has been demonstrated in many other affective and motivational processes beyond reward. For example, others have found that the VS responds to the anticipation of action, when contrasting execution and withholding of responses (Guitart-Masip et al., 2011). However, while the choice condition may be seen as more engaging relative to no-choice condition in the current study, anticipation of action does not likely account for our findings since both conditions involve active responding following the cue (See Supplemental Discussion).

Importantly, the current study is also distinguishable from other studies investigating action-outcome contingency (e.g., O'Doherty et al., 2004; Tricomi, Delgado, & Fiez, 2004), because previous studies focused on outcomes or action-contingent cues predicting such outcomes, whereas the present study focuses on the cue that predicted having control (irrespective of outcome), that is, the affective experience when anticipating an opportunity to choose. Although the expected values associated with choice and no-choice trials are equated in the current study, it is nonetheless possible that participants perceive a difference, and as a result believe that choice affords the opportunity to choose the best possible option (see Supplementary Materials). The present study characterizes the affective experience of choice, and future research will be necessary to understand what is driving the perceived value of choice.

In conclusion, by exploiting choice opportunity as the simple, yet fundamental basis of perceiving control, the current study provides the basis for understanding how the perception of control influences our ability to regulate emotional responses to appetitive and aversive stimuli. Across the two experiments, we observed involvement of the striatum and anterior insula in processing the affective experience of choice, revealing that expectations of control recruit neural circuitry important in affective and motivational processing. Furthermore, our findings demonstrate that context effects previously observed in the ventral striatum (De Martino et al., 2009) also extend to the mere opportunity for choice. Because deficits in perceived control are believed to be at the core of many psychiatric diseases (Beck, Emery, & Greenberg, 1985; Maier & Seligman, 1976; Shapiro et al., 1996), fully characterizing the affective experience of choice will be critical for learning about both the development and effective therapeutic treatment of many psychiatric disorders.

Supplementary Material

ACKNOWLEDGMENTS

This research was supported by funding from the National Institute on Drug Abuse to M.R.D. (DA027764) and to L.A.L (F32-DA027308). We thank Vicki Lee and Meg Speer for data collection assistance.

Footnotes

AUTHOR CONTRIBUTIONS: L.A. Leotti and M.R. Delgado developed the study concept and contributed to the study design. Testing and data collection were performed by L.A. Leotti. L.A. Leotti and M.R. Delgado wrote the manuscript and approved the final version of the paper for submission.

REFERENCES

- Bandura A. Self efficacy mechanism in psychobiologic functioning. In: Schwarzer R, editor. Self efficacy: Thought control of action. London: Hemisphere; 1992. pp. 355–394. [Google Scholar]

- Baron J, Ritov I. Reference points and omission bias. Organ Behav Hum Decis Process. 1994;59:475–498. doi: 10.1006/obhd.1999.2839. [DOI] [PubMed] [Google Scholar]

- Beck AT, Emery G, Greenberg RL. Anxiety disorders and phobias. New York: Basic Books; 1985. [Google Scholar]

- Bjork JM, Hommer DW. Anticipating instrumentally obtained and passively-received rewards: a factorial fMRI investigation. Behav Brain Res. 2007;177(1):165–170. doi: 10.1016/j.bbr.2006.10.034. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brewin CR, Shapiro DA. Beyond locus of control: Attribution of responsibility for positive and negative outcomes. British Journal of Psychology. 1984;75(1):43–49. [Google Scholar]

- De Martino B, Kumaran D, Holt B, Dolan RJ. The neurobiology of reference-dependent value computation. J Neurosci. 2009;29(12):3833–3842. doi: 10.1523/JNEUROSCI.4832-08.2009.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Delgado MR. Reward-related responses in the human striatum. Ann N Y Acad Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002.

- Delgado MR, Jou RL, LeDoux JE, Phelps EA. Avoiding negative outcomes: tracking the mechanisms of avoidance learning in humans during fear conditioning. Front Behav Neurosci. 2009;3:33. doi: 10.3389/neuro.08.033.2009.

- Guitart-Masip M, Fuentemilla L, Bach DR, Huys QJ, Dayan P, Dolan RJ, et al. Action dominates valence in anticipatory representations in the human striatum and dopaminergic midbrain. J Neurosci. 2011;31(21):7867–7875. doi: 10.1523/JNEUROSCI.6376-10.2011.

- Kahneman D, Tversky A. Choices, values, and frames. Cambridge University Press; 2000.

- Kim H, Shimojo S, O'Doherty JP. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biology. 2006;4(8):e233. doi: 10.1371/journal.pbio.0040233.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21(16):RC159. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25(19):4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Leotti LA, Delgado MR. The inherent reward of choice. Psychol Sci. 2011;22(10):1310–1318. doi: 10.1177/0956797611417005.

- Leotti LA, Iyengar SS, Ochsner KN. Born to Choose: The Origins and Value of the Need for Control. Trends in Cognitive Sciences. 2010;14(10):457–463. doi: 10.1016/j.tics.2010.08.001.

- Maier SF. Stressor controllability and stress-induced analgesia. In: Kelly DD, editor. Stress-induced analgesia. Annals of the New York Academy of Sciences. Vol. 467. New York: New York Academy of Sciences; 1986. pp. 55–72.

- Maier SF, Seligman ME. Learned helplessness: Theory and evidence. Journal of Experimental Psychology: General. 1976;105(1):3–46.

- McGuire JT, Botvinick MM. Prefrontal cortex, cognitive control, and the registration of decision costs. Proc Natl Acad Sci U S A. 2010;107(17):7922–7926. doi: 10.1073/pnas.0910662107.

- Montague P, Berns G. Neural economics and the biological substrates of valuation. Neuron. 2002;36(2):265. doi: 10.1016/s0896-6273(02)00974-1.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14(6):769–776. doi: 10.1016/j.conb.2004.10.016.

- O'Doherty JP, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304(5669):452–454. doi: 10.1126/science.1094285.

- Paterson RJ, Neufeld RW. What are my options? Influences of choice availability on stress and the perception of control. Journal of Research in Personality. 1995;29(2):145–167.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9(7):545–556. doi: 10.1038/nrn2357.

- Robbins TW, Everitt BJ. Neurobehavioural mechanisms of reward and motivation. Curr Opin Neurobiol. 1996;6(2):228–236. doi: 10.1016/s0959-4388(96)80077-8.

- Rotter J. Generalised expectancies for internal versus external control of reinforcement. Psychological Monographs. 1966;80(1, Whole no. 609):1–28.

- Ryan RM, Deci EL. Self-regulation and the problem of human autonomy: does psychology need choice, self-determination, and will? J Pers. 2006;74(6):1557–1585. doi: 10.1111/j.1467-6494.2006.00420.x.

- Samanez-Larkin GR, Gibbs SE, Khanna K, Nielsen L, Carstensen LL, Knutson B. Anticipation of monetary gain but not loss in healthy older adults. Nat Neurosci. 2007;10(6):787–791. doi: 10.1038/nn1894.

- Samuelson W, Zeckhauser R. Status quo bias in decision making. Journal of Risk and Uncertainty. 1988;1:7–59.

- Schiller D, Delgado MR. Overlapping neural systems mediating extinction, reversal and regulation of fear. Trends Cogn Sci. 2010;14(6):268–276. doi: 10.1016/j.tics.2010.04.002.

- Shapiro DH, Jr, Schwartz CE, Astin JA. Controlling ourselves, controlling our world. Psychology's role in understanding positive and negative consequences of seeking and gaining control. Am Psychol. 1996;51(12):1213–1230. doi: 10.1037//0003-066x.51.12.1213.

- Sharot T, Shiner T, Dolan RJ. Experience and choice shape expected aversive outcomes. J Neurosci. 2010;30(27):9209–9215. doi: 10.1523/JNEUROSCI.4770-09.2010.

- Sokol-Hessner P, Hsu M, Curley NG, Delgado MR, Camerer CF, Phelps EA. Thinking like a trader selectively reduces individuals' loss aversion. Proc Natl Acad Sci U S A. 2009;106(13):5035–5040. doi: 10.1073/pnas.0806761106.

- Thompson SC, Armstrong W, Thomas C. Illusions of control, underestimations, and accuracy: A control heuristic explanation. Psychological Bulletin. 1998;123:143–161. doi: 10.1037/0033-2909.123.2.143.

- Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315(5811):515–518. doi: 10.1126/science.1134239.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron. 2004;41(2):281–292. doi: 10.1016/s0896-6273(03)00848-1.

- Wager TD, Davidson ML, Hughes BL, Lindquist MA, Ochsner KN. Prefrontal-subcortical pathways mediating successful emotion regulation. Neuron. 2008;59(6):1037–1050. doi: 10.1016/j.neuron.2008.09.006.
