Abstract

Executive functions and learning share common neural substrates essential for their expression, notably in prefrontal cortex and basal ganglia. Understanding how they interact requires studying how cognitive control facilitates learning, but also how learning provides the (potentially hidden) structure, such as abstract rules or task-sets, needed for cognitive control. We investigate this question from three complementary angles. First, we develop a new computational “C-TS” (context-task-set) model inspired by non-parametric Bayesian methods, specifying how the learner might infer hidden structure and decide whether to re-use that structure in new situations, or to create new structure. Second, we develop a neurobiologically explicit model to assess potential mechanisms of such interactive structured learning in multiple circuits linking frontal cortex and basal ganglia. We systematically explore the link betweens these levels of modeling across multiple task demands. We find that the network provides an approximate implementation of high level C-TS computations, where manipulations of specific neural mechanisms are well captured by variations in distinct C-TS parameters. Third, this synergism across models yields strong predictions about the nature of human optimal and suboptimal choices and response times during learning. In particular, the models suggest that participants spontaneously build task-set structure into a learning problem when not cued to do so, which predicts positive and negative transfer in subsequent generalization tests. We provide evidence for these predictions in two experiments and show that the C-TS model provides a good quantitative fit to human sequences of choices in this task. These findings implicate a strong tendency to interactively engage cognitive control and learning, resulting in structured abstract representations that afford generalization opportunities, and thus potentially long-term rather than short-term optimality.

1 Introduction

Life is full of situations that require us to appropriately select simple actions, like clicking Reply rather than Delete to an email, or more complex actions requiring cognitive control, like changing modes of operation when switching from a Mac to a Linux machine. These more complex actions themselves define simple rules, or task-sets, i.e., abstract constructs that signify appropriate stimulus-response groupings in a given context (Monsell, 2003). Extensive task-switching literature has revealed the existence of task-set representations in both mind and brain (fMRI: Dosenbach et al. (2006), monkey electrophysiology: Sakai (2008), etc). Notably, these task-set representations are independent of the context in which they are valid (Reverberi et al., 2011; Woolgar et al., 2011) and even of the specific stimuli and actions to which they apply (Haynes et al., 2007), and are thus abstract latent constructs that constrain simpler choices.

Very little research addresses how such task-sets are constructed during uninstructed learning, and for what purpose (i.e. do they facilitate learning?). Task-switching studies are typically supervised: the relevant rule is explicitly indicated and the rules themselves are either well known (e.g., arrows pointing to the direction to press) or highly trained (e.g., vowel-consonant discriminations). In some studies, participants need to discover when a given rule has become invalid and switch to a new valid rule from a set of known candidate options (Nagano-Saito et al., 2008; Mansouri et al., 2009; Imamizu et al., 2004; Yu & Dayan, 2005; Hampton et al., 2006), without having to learn the nature of the rules themselves. Conversely, the reinforcement learning literature has largely focused on how a single rule is learned and potentially adapted, in the form of a mapping between a set of stimuli and responses.

However, we often need to solve these two problems simultaneously: in an unknown context, the appropriate rules might be completely new and hence need to be learned, or they might be known rules that only need to be identified as valid, and simply reused in the current context. How do humans simultaneously learn (i) the simple stimulus-response associations that apply for a given task-set, and (ii) at the more abstract level, which of the candidate higher order task-set rules to select in a given context (or whether to build a new one)? Though few studies have confronted this problem directly, a few of them have examined simultaneous learning at different hierarchical levels of abstraction. For example, subjects learned more efficiently when a simplifying rule-like structure was available in the set of stimulus-action associations to be learned (“policy abstraction”, Badre et al., 2010). Collins & Koechlin (2012) showed that subjects build repertoires of task-sets, and learn to discriminate between whether they should generalize one of the stored rules or learn a new one in a new temporal context. Both studies thus showed that when structure was available in the learning problem (signified by either contextual cues or by temporal structure), subjects were able to discover such structure and make efficient use of it to speed learning. However, these studies do not address whether and how subjects spontaneously and simultaneously learn such rules and sets of rules when the learning problem does not in some way cue that organization. One might expect such structure building in part because it may afford a performance advantage for subsequent situations that permit generalization of learned knowledge.

Here, we develop computational models to explore the implications of building task-set structure into learning problems, whether or not there is an immediate advantage to doing so. We then examine how, when confronted with new contexts, humans and models can decide whether to re-use existing structured representations or to create new ones.

We have thus far considered how rules requiring cognitive control (task-sets) are created and learned. We now turn to the reciprocal question needed to close the loop: how does cognitive control facilitate learning?

For example, the computational reinforcement learning (RL) framework typically assumes that subjects learn for each state (e.g., observed stimulus) to predict their expected (discounted) future rewards for each of the available actions. These state-action reward values are used to determine the appropriate action to select (e.g., Sutton & Barto, 1998; Samejima et al., 2005; Daw & Doya, 2006; Frank et al., 2007a). Most reinforcement learning studies assume that the relevant state space is known and fully observable. However, there could be uncertainty about the nature of the state to be learned, or this state might be hidden (e.g., it may not represent a simple sensory stimulus, but could be, for example, a sequential pattern of stimuli, or it could depend on the subject’s own previous actions). When given explicit cues informative about these states (but not which actions to take), participants are much more likely to discover the optimal policy in such environments (Gureckis & Love, 2010). Without such cues, learning requires making decisions based on the (partially) hidden states. Thus, cognitive control may be necessary for hypothesis testing about current states which act as contexts for learning motor actions (e.g., treating the internally maintained state as if it was an observable stimulus in standard RL).

Indeed, recent behavioral modeling studies have shown that subjects can learn hidden variables such as latent states relevant for action selection, as captured by Bayesian inference algorithms or approximations thereof (Redish et al., 2007; Gershman et al., 2010; Todd et al., 2009; Frank & Badre, 2011; Collins & Koechlin, 2012; Wilson & Niv, 2011). In most of these studies, there is a clear advantage to be gained by learning these hidden variables, either in optimizing learning speed (Behrens et al., 2007), or to separate superficially similar conditions into two different latent states. Thus learning often implicates more complex strategies including identification and manipulation of hidden variables. Some studies have shown that subjects even tend to infer hidden patterns in the data when they do not exist and afford no behavioral advantage (Yu & Cohen, 2009), or when it is detrimental to do so (Gaissmaier & Schooler, 2008; Lewandowsky & Kirsner, 2000). Thus humans may exhibit a bias to use more complex strategies even when they’re not useful, potentially because these strategies are beneficial in many real life situations.

We can thus predict that subjects might adopt this same approach to create task-set structure – identifying cues as indicative of task-sets which contextualize lower level stimulus-response mappings – when learning very simple stimulus-action associations that require no such structure. This prediction relies on the three previously described premises found in the literature:

when cued, rules requiring cognitive control can be discovered and leveraged;

learning may involve complex cognitive control-like strategies which can in turn improve learning;

subjects have a bias to infer more structure than needed in simple sequential decision tasks.

The first two points define the reciprocal utility of cognitive control and learning mechanisms. The third point implies that there must be an inherent motivation for building such structure. One such motivation, explored in more detail below, is that applying structure to learning of task-sets may afford the possibility of reusing these task-sets in other contexts, thus affording generalization of learned behaviors to future new situations. Next, we motivate the development of computational models inspired by prior work in the domain of category learning but extended to handle the creation and re-use of task-sets in policy selection.

1.1 Computational models of reinforcement learning, category learning, and cognitive control

Consider the problem of being faced with a new electronic device (e.g., your friend’s cell phone) or a new software tool. Although these examples constitute new observable contexts or situations, figuring out the proper actions often does not require to relearn from “scratch”. Instead, with just a little trial and error, we can figure out the general class of software or devices to which this applies, and act accordingly. Occasionally, however, we might need to recognize a veridical novel context that requires new learning, without unlearning existing knowledge (e.g., learning actions for a Mac without interfering with actions for a PC). In the problems we define below, people need to learn a set of rules (hidden variables) that is discrete but of unknown size, informed by external observable cues and which serve to condition the observed stimulus-action-feedback contingencies. They also need to infer the current hidden state/rule for action selection in any given trial. Three computational demands are critical for this sort of problem:

the ability to represent a rule in an abstract form, dissociated from the context with which it has been typically associated, as is the case for task-sets (Reverberi et al., 2011; Woolgar et al., 2011), such that it is of potentially general rather than local use;

the ability to cluster together different arbitrary contexts linked to a similar abstract task-set;

the ability to build a new task-set cluster when needed, to support learning of that task-set without interfering with those in other contexts.

This sort of problem can be likened to a class of well-known nonparametric Bayesian generative processes, often used in Bayesian models of cognition, Chinese restaurant processes (Blei et al., 2004)1. Computational approaches suitable for addressing the problem of inferring hidden rules (and where the number of rules is unknown) include Dirichlet process mixture models (e.g. Teh et al., 2006) and infinite partially observable Markov decision processes (iPOMDP; Doshi, 2009). This theoretical framework has been successfully leveraged in the domain of category learning (e.g. Sanborn et al., 2006, 2010; Gershman et al., 2010; Gershman & Blei, 2012), where latent category clusters are created that allow principled grouping of perceptual inputs to support generalization of learned knowledge, even potentially inferring simultaneously more than one possible relevant structure for categorization (Shafto et al., 2011). Further, although optimal inference is too computationally demanding and has high memory cost, reasonable approximations have been adapted to account for human behavior (Sanborn et al., 2010; Anderson, 1991).

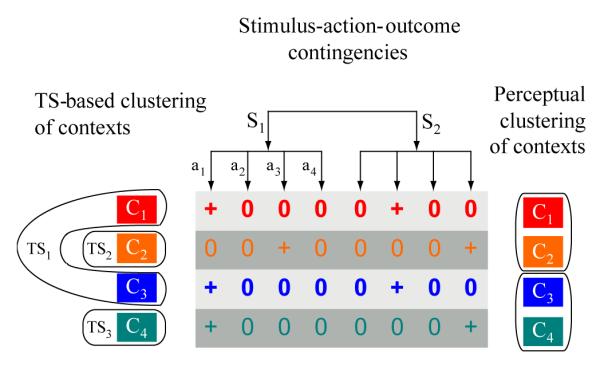

Here, we take some inspiration from these models of perceptual clustering and extend them to support clustering of more abstract task-set states which then serve to contextualize lower level action selection. We discuss the relationship with our work and the category learning models in more detail in the General Discussion. In brief, the perceptual category learning literature typically focuses on learning categories based on similarity between multidimensional visual exemplars. In contrast, useful clustering of contexts for defining task-sets relies not on their perceptual similarity but rather in their linking to similar stimulus-action-outcome contingencies (Figure 1, only one ‘dimension’ of which are observable in any given trial. We thus extend similarity-based category learning from the mostly observable perceptual state-space to an abstract, mostly hidden but partially observable rule space.

Figure 1. Task-set clustering vs perceptual category clustering.

A task-set defines a set of (potentially probabilistic) stimulus-action-outcome (S-A-O) contingencies, depicted here with deterministic binary outcomes for simplicity. To identify similarity between disparate contexts pointing to the same latent task-set (left), the agent has to actively sample and experience multiple distinct S-A-O contingencies across trials (only one S and one A from the potentially much larger set is observable in a single trial). In contrast, in perceptual category learning, clustering is usually built from similarity among perceptual dimensions (shown simplistically here as color grouping, right), with all (or most) relevant dimensions observed at each trial. Furthermore, from the experimenter perspective, subject beliefs about category labels are observed directly by their actions; in contrast, abstract task-sets remain hidden to the experimenter (e.g., the same action can apply to multiple task-sets and a single task-set consists of multiple S-A contingencies).

In a mostly separate literature, computational models of cognitive control and learning have been fruitfully applied to studying a wide range of problems. However, these too have limitations. In the vast majority, learning problems are modeled with RL algorithms that assume perfect knowledge of the state, although some recent models include state uncertainty or learning about problem structure in specific circumstances (Acuña & Schrater, 2010; Kruschke, 2008; Nassar et al., 2010; Green et al., 2010; Wilson & Niv, 2011; Botvinick, 2008; Frank & Badre, 2011; Collins & Koechlin, 2012).

Thus our contribution here is to establish a link between the clustering algorithms of category learning models on the one hand, and the task-set literature and models of cognitive control and reinforcement learning on the other. The merger of these modeling frameworks allows us to address the computational tradeoffs inherent in building vs. reusing task-sets for guiding action selection and learning. We propose a new computational model inspired by the Dirichlet process mixture framework, while including reinforcement learning heuristics simple enough to allow for quantitative trial by trial analysis of subjects’ behavior. This simplicity allows us to assume a plausible neural implementation of this approximate process, grounded by the established and expanding literature on the neurocomputational mechanisms of reinforcement learning and cognitive control. In particular, we show that a multiple loop corticostriatal gating network using reinforcement learning can implement the requisite computations to allow task-sets to be created or re-used. The explicit nature of the mechanisms in this model allows us to derive predictions regarding the effects of biological manipulations, and disorders on structured learning and cognitive control. Because it is a process model it also affords predictions about the dynamics of action selection within a trial, and hence response times.

1.2 Neural mechanisms of learning and cognitive control

Many neural models of learning and cognitive control rely on the known organization of multiple parallel frontal cortico-basal ganglia loops (Alexander & DeLong, 1986). These loops implement a gating mechanism for action selection, facilitating selection of the most rewarding actions while suppressing less rewarding actions, where the reward values are acquired via dopaminergic reinforcement learning signals (e.g. Doya, 2002; Frank, 2005). Moreover, the same mechanisms have been co-opted to support the gating of more cognitive actions, such as working memory updating and maintenance via loops connecting more anterior prefrontal regions and basal ganglia (Frank et al., 2001; O’Reilly & Frank, 2006; Gruber et al., 2006; Todd et al., 2009).

In particular, O’Reilly & Frank (2006) have shown how multiple PFC-BG circuits can learn to identify and gate stimuli into working memory, and to represent these states in active form such that subsequent motor responses can be appropriately contextualized. Todd et al. (2009) provided an analysis of this gating and learning process in terms of POMDPs. Recently, Frank & Badre (2011) proposed a hierarchical extension of this gating architecture for increasing efficiency and reducing conflict when learning multiple tasks. Noting the similarity between learning to choose a higher order rule, and learning to select an action within a rule, they implement these mechanisms in parallel gating loops, with hierarchical influence of one loop over another. This generalized architecture enhanced learning and, when reduced to a more abstract computational level model, provided quantitative fits to human subjects behavior, with support for its posited mechanisms provided by functional imaging analysis (Frank & Badre, 2011; Badre et al., 2012). However, neither that model nor its predecessors can account for the sort of task-set generalization to novel contexts afforded by the iPOMDP framework and observed in the experiments reported below. We thus develop a novel hierarchical extension of the cortico-basal ganglia architecture to simultaneously support the selection of abstract task-sets in response to arbitrary cues, and of actions in response to stimuli, contextualized by the abstract rule.

The remainder of the paper is organized as follows. We first present the C-TS model, an approximate non-parametric Bayesian framework for creation, learning and clustering task-set structure and show that it supports improved performance and generalization when multiple contextual states are indicative of previously acquired task-sets. We consider cases in which building task-set structure is useful for improving learning efficiency and also when it is not. We then show how this functionality can be implemented in a nested corticostriatal neural network model, with associated predictions about dynamics of task-set and motor response selection. We provide a principled linking between the two levels of modeling to show how selective biological manipulations in the neural model are captured by distinct parameters within the non-parametric Bayesian framework. This formal analysis allows us to derive a new behavioral task protocol to assess human subjects’ tendency to incidentally build, use, and transfer task-set structure without incentive to do so. We validate these predictions in two experiments.

2 C-TS Model description

We first present the C-TS model for building TS-structure given a known context and stimulus space. Below we extend this to the general case allowing inference about which input dimension constitutes context, which constitutes lower level stimulus, and whether this hierarchical structure is present at all.

2.1 C-TS Model

We begin by describing the problem in terms of the following structure. As in standard RL problems, at each time t the agent needs to select an action at that, depending on the current state (sensory input), leads to reinforcement rt. We confront the situation in which the link between state and action depends on higher task-set rules which are hidden (and of unknown size, i.e. the learner does not know how many different rules exist). To do so, we assume that the state is itself determined hierarchically. Specifically, we assume that the agent considers some input dimensions to act as higher order context ct potentially indicative of a task-set, and other dimensions to act as lower level stimulus st for determining which motor actions to produce. In the examples we consider below, ct could be a color background in an experiment, and st could be a shape.

We further assume that at any point in time, a non observable variable indicates the valid rule or task-set TSt and determines the contingencies of reinforcement: P(rt∣st, at, ct) = ∑TSi P(rt∣st, at, TSi)P(TSi∣ct). For simplicity, we assume probabilistic binary feedback, such that P(rt∣st, at, TSt) are Bernoulli probability distributions. In words, the action that should be selected in the current state is conditioned on the latent task set variable TSt, which is itself cued by the context. Note that there is not necessarily a one-to-one mapping from contexts to task-sets: indeed, a given task-set may be cued by multiple different contexts. We assume that the prior on clustering of the task-sets corresponds to a Dirichlet (“chinese restaurant”) process: if contexts {c1:n} are clustered on N ≤ n task-sets, then for any new context cn+1 ∉ {c1:n},

| (1) |

Where Ni is the number of contexts clustered on task-set i, α > 0 is a clustering parameter and A = α + ∑k=1…N Nk = α + N is a normalizing constant (Gershman et al., 2010). Thus, for each new context, the probability of creating a new task-set is proportional to α, and the probability of reusing one of the known task-sets is proportional to the popularity of that task-set across multiple other contexts.

We do not propose that humans solve the inference problem posed by such a generative model. Indeed, near optimal inference is computationally extremely demanding both in memory and computation capacities, which does not fit with our objective of representing the learning problem as an online, incremental and efficient process in a way that may be plausibly achieved by human subjects. Instead, we propose a reinforcement-learning-like algorithm that approximates this inference process well enough to produce adequate learning and generalization abilities, but simple enough to be plausibly carried out and to allow analysis of trial-by-trial human behavior. Nevertheless, as a benchmark, we did simulate a more exact version of the inference process using a particle filter with large number of particles. As expected, learning overall is more efficient with exact inference, but all qualitative patterns presented below for the approximate version are similar.

The crucial aims of the C-TS model are (i) to create representations of task-set (TS) and of their parameters (stimulus-response-outcome mappings); (ii) to infer at each trial which TS is applicable and should thus guide action selection; and (iii) to discover the unknown space of hidden TS rules. Given that a particular TSi is created, the model must learn predicted reward outcomes following action selection in response to the current stimulus P(r∣s, a, TSi). We assume Beta probability distribution priors on the parameter of the Bernoulli distribution. Identification of the valid hidden TS rule is accomplished through Bayesian inference, as follows. For all TSi in the current TS space, and all contexts cj, we keep track of the probability that this task-set is valid given the context, p(TSi∣cj), and the most probable task-set TSt in context ct is used for action selection. Specifically, after observation of reward outcome, the estimated posterior validities of all TSi are updated:

| (2) |

where NTS(t) is the number of task-sets created by the model up to time t (see details below), and all probabilities are implicitly conditioned on past history of trials. This ex-post calculation determines the most likely hidden rule corresponding to the trial once the reward has been observed. We assign this trial definitively to that particular latent state, rather than keeping track of the entire probability history. This posterior then determines (i) which task-set’s parameters (stimulus-action associations) is updated, and (ii) the inferred task-set on subsequent encounters of context ct. Motor action selection is then determined as a function of the expected reward values of each stimulus action pair given the TS, Q(s, ak) = E(r∣st, ak, TSt), where the choice function can be greedy or noisy, for example softmax (see equation 4)2.

The last critical aspect of this model is the building of the hidden TS space itself, the size of which is unknown. Each time a new context is observed we allow the model the potential to be linked to a TS in the existing set or to expand the considered TS space, such that NTS(t + 1) = NTS(t) + 1. Thus, upon each first encounter of a context cn+1, we increase the current space of possible hidden TS by adding a new (blank) TSnew to that space (formally, a blank TS is defined by initializing P(r∣s, a, TSnew) to an uninformative prior). We then initialize the prior probability that this new context is indicative of TSnew or whether it should instead be linked to an existing TS, as follows.

| (3) |

Here, α determines the likelihood of visiting a new TS state (as in a Dirichlet / Chinese restaurant process), and A is a normalizing factor: A = α + ∑i,j P(TSi∣cj). Intuitively, this prior allows a popular TS to be more probably associated to the new context, weighed against factor α determining the likelihood of constructing a new hidden rule. α can be thought of as a clustering parameter, with lower values yielding more clustering of new contexts to existing TS’s.3 Note that the new task-set might never have been estimated as valid either a priori (and thus never chosen), or a posteriori (and thus remain blank). Therefore, multiple contexts could feasibly link to the same TS and the number of filled (not blank) task-sets does not need to increase proportionally with the number of contexts. We can estimate the expected number of existing TS by summing across all potential TS their expected probability across contexts. Finally, note that since there is no backward inference, and only one history of assignments is tracked (partly analogous to a particle filter with a single particle), we use probabilities rather than discrete number assignments to clusters to initialize the prior. The approximation made in the Bayesian inference, which in its exact form would require keeping track of all possible clustering of previous trials and summing over them, which is computationally intractable, means that at each trial, we collapse the joint posterior on a single high probability task-set assignment. We still keep track of and propagate uncertainty about that assignment and the clustering of contexts, but forget uncertainty about the specific earlier assignments.

2.2 Flat model

As a benchmark, we compare the above structured C-TS model’s behavior to a “flat” learner model, which represents and learns all inputs independently from one-another (ie. so that contexts are treated just like other stimuli; fig 11a). We refer readers to the appendix for details. Briefly, the flat model represents the “state” as the conjunction of stimulus and context and then estimates expected reward for state-action pairs, Q((ct, st), at).

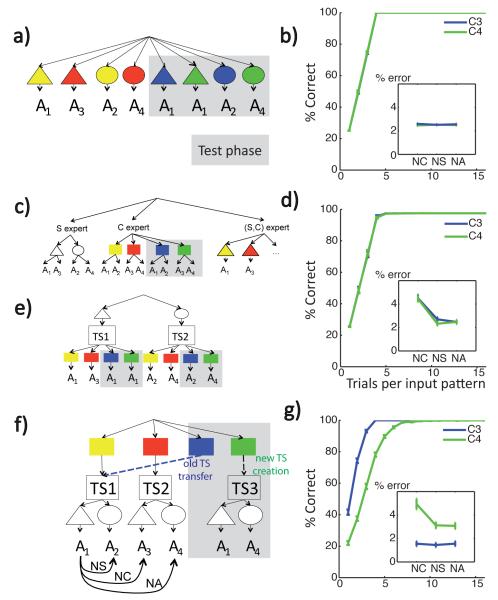

Figure 11. Various Model Predictions.

(a,c,e,f) Graphical representation of model information structures. Grey areas represent test-phase only associations. (b,d,g) Model test phase predictions for the transfer condition (blue) and the new condition (green): Proportion of correct responses as a function of input repetition, inset: proportion of errors of type; neglect color (NC), neglect shape (NS) or neglect all (NA). Model simulations were conducted using parameters chosen for best model performance within a qualitatively representative range, over 1000 repetitions. a) Flat model: all input-action associations are represented independently of each other (ie conjunctively). b) The flat model predicts no effect of test condition on learning or error type. c) Dimension-experts model. Appropriate actions for shapes and colors are represented separately. In the test phase the shape expert does not have any new links to learn (no new shapes, no new correct actions for the old shapes in new colors), while the color expert learns links for the new colors. d) No effect of test condition in this model, but a main effect of error type. e) S-TS(c) structure model: shape acts as a context for selecting task-sets that determine color stimulus-action associations, so that new test-phase colors are new stimuli to be learned within already created task-sets. Predictions for this model are qualitatively the same as for the dimension experts model (d). f) C-TS(s) structure model: color context determines a latent task-set, that contextualizes the learning of shape stimulus-action asociations. The C3 transfer context may be linked to TS1, whereas the C4 new context should be assigned to a new task-set. Curbed arrows indicate different kinds of errors: NS, NC or NA. g) C-TS(s) model predicts faster learning for test transfer condition than test new condition, and an interaction between condition and error type.

Policy is determined by the commonly used softmax rule for action selection as a function of the expected reward for each action:

| (4) |

where β is an inverse temperature parameter determining the degree of exploration vs exploitation, such that very high β values lead to a greedy policy.

2.3 Generalized structure model

The C-TS model described earlier arbitrarily imposed one of the input dimensions (C) as the context cueing the task-sets, and the other (S) acting as stimulus to be linked to an action according to the defined task-set. We will denote S-TS the symmetrical model that would have made the contrary assignment of input dimensions.

A more adaptive inference model would not choose one fixed dimension as context but instead would infer the identity of the contextual dimension. Indeed, the agent should be able to infer whether there is task-set structure at all. We thus develop a generalized model that simultaneously considers potential C-TS structure, S-TS structure, or flat structure and makes inferences about which of these generative models is valid. For more details, see the appendix.

3 C-TS Model behavior

3.1 Initial clustering

We first simulated the C-TS model to verify that this approximate inference model can leverage structure and appropriately cluster contexts around corresponding abstract task-sets when such structure exists. We therefore first simulated a learning task in which there is a strong immediate advantage to learning structure (see figure 2, top left). This task included sixteen different contexts and three stimuli, presented in interleaved fashion. Six actions were available to the agent. Critically, the structure was designed so that eight contexts were all indicative of the same task-set TS1, while the other eight signified another task-set TS2. Feedback was binary and deterministic.

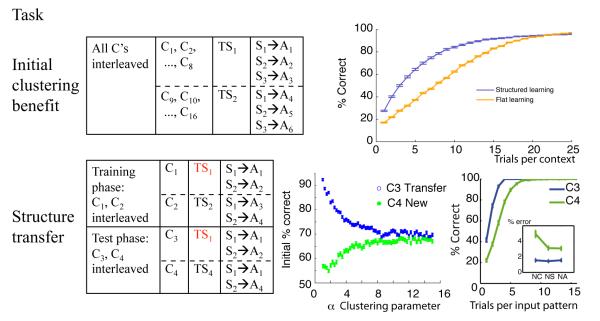

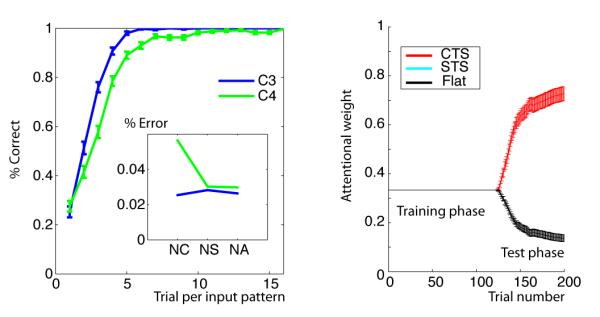

Figure 2. Paradigms used to assess task-set clustering as a function of clustering parameter α.

Top: Initial clustering benefit task: demonstration of advantage to clustering during a learning task in which there are 16 “redundant” contexts, signifying just two distinct TS (see protocol on the left). Speeded learning is observed for structured model (with low Dirichlet parameter α = 1, thus high prior for clustering), compared to flat learning model (high α, so that state-action-outcome mappings are learned separately for each context). Bottom: Structure transfer task: Effect of clustering on subsequent transfer, when there is no advantage to clustering during initial learning (protocol on left). Bottom middle. Proportion of correct responses as a function of clustering α parameter in the first 10 trials, for C3 transfer (blue) and C4 new (green) test conditions. Large α’s indicate a strong prior to assign a new hidden state to a new context, thus leading to no performance difference between conditions. Low α’s indicate a strong prior to re-use existing hidden states in new contexts, leading to positive transfer for C3, but negative transfer for C4, due to the ambiguity of the new task-set. Bottom right. Example of learning curves for C3 and C4, and error repartition pattern (Inset). NC: neglect C errors, NS: neglect S errors, NA: neglect all errors.

As predicted, the C-TS model learned faster than a flat learning model (figure 2 bottom). It did so by grouping contexts together on latent task-sets (building mean N = 2.16 latent task-sets), rather than building sixteen unique ones for each context – and successfully leveraged knowledge from one context to apply to other contexts indicative of the same TS. Thus the model identifies hidden TS and generalizes them across contexts during learning. Although feedback was deterministic for illustration here, we also confirmed that the model is robust to non-deterministic feedback by adding 0.2 random noise on the identity of the correct action at each trial. Initial learning remains significantly better for the C-TS model than a flat model (t = 9.46, p < 10−4), again due to creation of a limited number of task-sets for the 16 contexts (mean N = 2.92). As expected, the number of created task-sets and its effects on learning efficiency varied inversely with parameter α (Spearman’s ρ = 0.99, p = 0.0028 and ρ = −0.94, p = 0.016 respectively). This effect is explored in more detail below, and hence the data are not shown here.

3.2 Transfer after initial learning

In a second set of simulations, we explore the nature of transfer afforded by structure learning even when no clear structure is present in the learning problem. These simulations include two successive learning phases, which for convenience we label training and test phase (see figure 2, bottom left). The training phase involved just two contexts (C1 and C2), two stimuli (S1 and S2), and four available actions. Although the problem can be learned optimally by simply defining each state as a CS conjunction, it can also be represented such that the contexts determine two different, non overlapping task-sets, with rewarded actions as follows: TS1: S1-A1 and S2-A2; TS2: S1-A3 and S2-A4. In the ensuing transfer phase, new contexts C3 and C4 are presented together with old stimuli S1 and S2, in an interleaved fashion. Importantly, the mappings are such that C3 signifies the learned TS1, whereas C4 signifies a new TS4 which overlaps with both old task-sets (Figure 2, bottom). Thus a tendency to infer structure should predict positive transfer for C3 and negative transfer for C4 (see below).

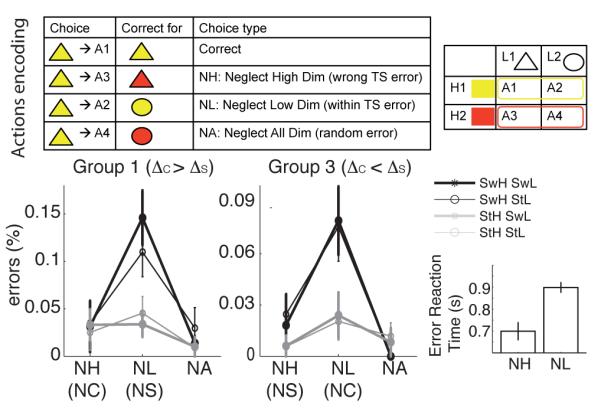

The inclusion of four actions (as opposed to two which is overwhelmingly used in the task-switching literature, but see Meiran & Daichman (2005)) allows us to analyze not only accuracy, but also the different types of errors that can be made. This error repartition is equally informative about structure building and allows for a richer set of behavioral predictions. Specifically, the learning problem is designed such that for any input, the set of three incorrect actions could be usefully recoded in a one-to-one fashion to a set of three different kinds of errors:

a neglect C error (NC), meaning that the incorrect action would have been correct for the same stimulus but a different context;

a neglect S error (NS), meaning that the incorrect action would have been correct for the same context but a different stimulus;

a neglect all error (NA), where the incorrect action would not be correct for any input sharing same stimulus or same context.

Thus, all incorrect action choices could be encoded as NC, NS, or NA in a one-to-one fashion, given the stimulus for which it was chosen.

The model is able to learn near optimally during the initial learning phase (not shown here because this optimal learning is also possible in a flat model). Notably, during the test phase, this model recognizes that a new context is representative of a previous task-set and thus reuses rather than relearns it. Accordingly, it predicts better performance in the transfer (C3) than new (C4) condition, due to both positive transfer for C3 and negative transfer for C4 (fig 2 bottom right). Negative transfer occurs because a rewarding response for one of the stimulus-action pairs for C4 will be suggestive of one of the previously learned task-sets, increasing its posterior probability conditioned on C4, leading to incorrect action selection for the other stimulus and also slower recognition of the need to construct a new TS for C4. This is observable in the pattern of errors, with those corresponding to actions associated with an old TS more frequent than other errors. Specifically, this model predicts preferentially more NC errors (due to applying a different task-set than that indicated by the current C) in the new (C4) condition (fig 2 bottom right inset).

Recall that parameter α encodes the tendency to transfer previous hidden TS states vs create new ones. We systematically investigated the effects of this clustering across a range of α values and simulated 500 times per parameter set (fig 2 bottom left). We observed the expected tradeoff, with C3 transfer performance decreasing, and C4 new performance increasing, as a function of increasing α. For large αs (equivalent to a flat model), performance was similar in both conditions, thus no positive or negative transfer.

In sum, the C-TS model proposes that the potential for structure is represented during learning and incidentally creates such structure even when it is not necessarily needed. This allows the model to subsequently leverage structure when it is helpful, leading to positive transfer, but can also lead to negative transfer. Below we show evidence for this pattern of both positive and negative transfer in humans performing this task.

3.3 Generalized structure model behavior

For clarity of exposition, above we imposed the context C to be the input dimension useful for task-set clustering. However, subjects wouldn’t know this in advance. Thus, we also simulated these protocols with the generalized structure model (see figure 16 in appendix). As expected, this model correctly infers that the most likely generative model is C-TS rather than S-TS or flat. For the structure transfer simulations, all three structures are weighted equally during learning (since the task contingencies are not diagnostic), but the model quickly recognizes that C-TS structure applies during the test phase (and could not have done so if this structure wasn’t incidentally created during learning); all qualitative patterns presented above hold (see appendix).

Figure 16. Generalized structure model results.

Example simulation of general structure model. Left panel: model performance on transfer task. Qualitative results are similar to C-TS model predictions. Right panel: average attentional weights. During the training phase, no structure is a better predictor of outcomes. However, the model infers the C-TS structure over the test phase.

We proposed a high level model to study the interaction of cognitive control and learning of context-task-set hidden structure, and for re-using this structure for generalization. This model does not however address the mechanisms that support its computations (and hence it does not consider whether they are plausibly implemented), nor does it consider temporal dynamics (and hence reaction times). In the next section, we propose a biologically detailed neural circuit model which can support, at the functional level, an analogous learning of higher and lower level structure using purely reinforcement learning. The architecture and functionality of this model is constrained by a wide range of anatomical, physiological data, and it builds on existing models in the literature. We then explore this model’s dynamics and its internal representations, and relate them to the hidden structure model described above. This allows us to make further predictions for human behavioral experiments described thereafter.

4 Neural network implementation

Our neural model builds on an extensive literature of the mechanisms of gating of motor and cognitive actions and reinforcement learning in corticostriatal circuits, extended here to accommodate hidden structure. We first describe the functionality and associated biology in terms of a single corticostriatal circuit for motor action selection, before discussing extensions to structure building and task-switching. All equations can be found in the appendix.

In these networks, the frontal cortex “proposes” multiple competing candidate actions (e.g., motor responses), and the basal ganglia selectively gates the execution of the most appropriate response via parallel re-entrant loops linking frontal cortex to basal ganglia, thalamus and back to cortex (Alexander & DeLong, 1986; Mink, 1996; Frank & Badre, 2011). The most appropriate response for a given sensory state is learned via dopaminergic reinforcement learning signals (Montague et al., 1996) allowing networks to learn to gate responses that are probabilistically most likely to produce a positive outcome and least likely to lead to a negative outcome (Doya, 2002; Houk, 2005; Frank, 2005; Maia, 2009; Dayan & Daw, 2008). Notably, in the model proposed below, there are two such circuits, with one learning to gate an abstract task-set (and to cluster together contexts indicative of the same task-set), and the other learning to gate a motor response conditioned on the selected task-set and the perceptual stimulus. These circuits are arranged hierarchically, with two main “diagonal” frontal-BG connections from the higher to the lower loop striatum and subthalamic nucleus. The consequences are that: (i) motor actions to be considered as viable are constrained by task-set selection; (ii) conflict at the level of task-set selection leads to delayed responding in the motor loop, preventing premature action selection until the valid task-set is identified. As we show below, this mechanism not only influences local within-trial RTs, but also renders learning more efficient across trials by effectively expanding the state space for motor action selection and thereby reducing interference between stimulus-response mappings across task-sets.

The mechanics of gating and learning in our specific implementation (Frank, 2005, 2006) are as follows (described first for a single motor loop). Cortical motor response units are organized in terms of “stripes” (groups of interconnected neurons that are capable of representing a given action; see Figure 3). There is lateral inhibition within cortex, thus supporting competition between multiple available responses (e.g. Usher & McClelland, 2001). But unless there is a strong learned mapping between sensory and motor cortical response units, this sensory-to-motor cortico-cortical projection is not sufficient to elicit a motor response, and alternative candidate actions are all noisily activated in PMC with no clear winner. However, motor units within a stripe also receive strong bottom-up projections from, and send top-down projections to, corresponding stripes within the motor thalamus. If a given stripe of thalamic units becomes active, the corresponding motor stripe receives a strong boost of excitatory support relative to its competitors, which are then immediately inhibited via lateral inhibition. Thus, gating relies on selective activation of a thalamic stripe.

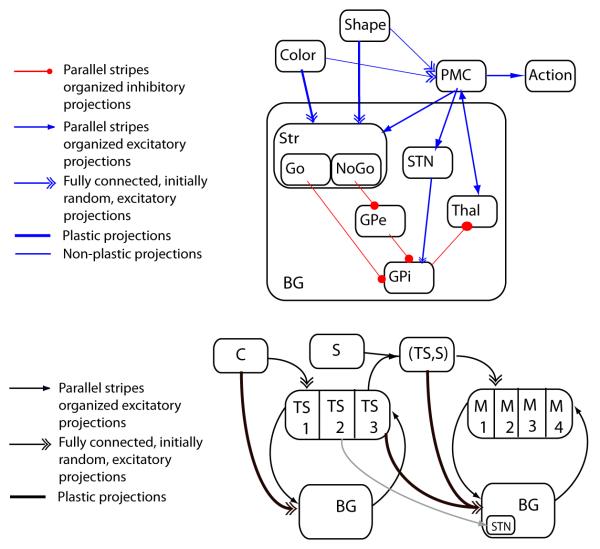

Figure 3. Neural network models.

Top: Schematic representation of a single loop corticostriatal network. Here, input features are represented in two separate input layers. Bottom: schematic representation of the two loop corticostriatal gating network. Color context serves as input for learning to select the TS in the first PFC loop. The PFC TS representation is multiplexed with the shape stimulus in the parietal cortex, the representation of which acts as input to the second motor loop. Before the TS has been selected, multiple candidate TS representations are active in PFC. This TS-conflict results in greater excitation of the subthalamic nucleus in the motor loop (due to a diagonal projection), thus making it more difficult to select motor actions until TS conflict is resolved. PMC: Premotor cortex; STN: subthalamic nucleus; Str: Striatum; Thal: Thalamus; GPe: Globus Pallidus external segment; GPi: Globus Pallidus internal segment.

Critically, the thalamus is under inhibition from the output nucleus of the basal ganglia, the globus pallidus internal segment, GPi. GPi neurons fire at high tonic rates, and hence the default state is for the thalamus to be inhibited, thereby preventing gating. Two opposing populations of neurons in the striatum contribute positive and negative evidence in favor of gating the thalamus. These populations are intermingled and equally represented, together comprising 95% of all neurons in the striatum (Gerfen & Wilson, 1996). Specifically, the “Go” neurons send direct inhibitory projections to the GPi. Hence, Go activity in favor of a given action promotes inhibition and disinhibition of the corresponding stripes in GPi and thalamus respectively, and hence gating. Conversely, the “NoGo” neurons influence the GPi indirectly, via inhibitory projections first to the external segment of the globus pallidus (GPe), which in turn tonically inhibits GPi. Thus, whereas Go activity inhibits GPi and disinhibits thalamus, NoGo activity opposes this effect. The net likelihood of a given action to be gated is then a function of the relative difference in activation states between Go and NoGo populations in a stripe, relative to that in other stripes.

The excitability of these populations are dynamically modulated by dopamine: whereas Go neurons express primarily D1 receptors, NoGo neurons express D2 receptors, and dopamine exerts opposing influences on these two receptors. Thus, increases in dopamine promote relative increases in Go vs. NoGo activity, whereas decreases in dopamine have the opposite effect. The learning mechanism leverages this effect: positive reward prediction errors (when outcomes are better than expected) elicit phasic bursts in dopamine, whereas negative prediction errors (worse than expected) elicit phasic dips in dopamine. These dopaminergic prediction error signals transiently modify Go and NoGo activation states in opposite directions, and these activation changes are associated with activity-dependent plasticity, such that synaptic strengths from corticostriatal projections to active Go neurons are increased during positive prediction errors, while those to NoGo neurons are decreased, and vice-versa for negative prediction errors. These learning signals increase and decrease the probability of gating the selected action when confronted with the same state in the future.

This combination of mechanisms has been shown to produce adaptive learning in complex probabilistic reinforcement environments using solely reinforcement learning (e.g. Frank, 2005). Various predictions based on this model, most notably using striatal dopamine manipulations, have been confirmed empirically (see e.g., Maia & Frank (2011) for recent review). Moreover, an extension of the basic model includes a third pathway involving the subthalamic nucleus (STN), a key node in the BG circuit. The STN receives direct excitatory projections from frontal cortical areas and sends direct and diffuse excitatory projections to the GPi. This ‘hyperdirect’ pathway bypasses the striatum altogether, and in the model supports a ‘global NoGo’ signal which temporarily suppresses the gating of all alternative responses, particularly under conditions of cortical response conflict (Frank (2006); see also Bogacz (2007)). This functionality provides a dynamic regulation of the model’s decision threshold as a function of response conflict (Ratcliff & Frank, 2012), such that more time is taken to accumulate evidence among noisy corticostriatal signals to prevent impulsive responding and to settle on a more optimal response. Imaging, STN stimulation, and electrophysiological data combined with behavior and drift diffusion modeling are consistent with this depiction of frontal-STN communication (Aron et al., 2007; Frank et al., 2007a; Wylie et al., 2010; Isoda & Hikosaka, 2008; Cavanagh et al., 2011; Zaghloul et al., 2012). Below we describe a novel extension of this mechanism to multiple frontal-BG circuits, where conflict at the higher level (e.g. during task-switching) changes motor response dynamics.

4.1 Base network - No structure

We first apply this single corticostriatal circuit to the problems simulated in the more abstract models above (Fig 3, top). Here, the loop contains two input layers, encoding separately the two input dimensions (eg. color and shape). The premotor cortex layer contains four stripes, representing four motor actions available. Each premotor stripe projects to a corresponding striatal ensemble of 20 units (10 Go and 10 NoGo) encoding a distributed representation of input stimuli, and which learn the probability of obtaining (or not obtaining) a reward if the corresponding action is gated. Input-striatum weights are initialized randomly, while input projections to premotor cortical (PMC) units are uniform. Only input-striatum synaptic weights are plastic (subject to learning) (see appendix for weight update equations). This network is able to learn all basic tasks presented using pure reinforcement learning (i.e. using only simulated changes in dopamine, without direct supervision about the correct response) in very efficient time. However, it has no mechanisms for representing hidden structure and is thus forced to learn in a ‘flat’ way, binding together the input features, similar to the flat computational model. Thus it should not show evidence of transfer or structure in its pattern of errors or RTs.

4.2 Hidden structure network

We thus extended the network to include two nested corticostriatal circuits. The anterior circuit initiates in the prefrontal cortex (PFC), and actions gated into PFC provide contextual input to the second posterior premotor cortex (PMC) circuit (fig 3 bottom, fig 4). The interaction between these two corticostriatal circuits is in accordance with anatomical data showing that distinct frontal regions project preferentially to their corresponding striatal region (at the same rostrocaudal level), but that there is also substantial convergence between loops (see Haber, 2003; Calzavara et al., 2007; Draganski et al., 2008; Nambu, 2011). Moreover, this rostrocaudal organization at the level of corticostriatal circuits is a generalization of the hierarchical rostrocaudal organization of the frontal lobe (Koechlin et al., 2003; Badre, 2008). A related neural network architecture was proposed in Frank & Badre (2011), but we modify it here to accommodate hidden structure, to include BG gating dynamics including the STN and GP layers, and pure reinforcement learning at all levels.4

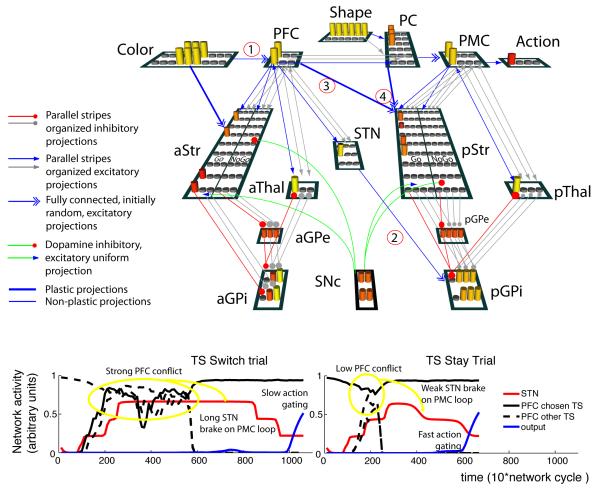

Figure 4. Neural network model.

Top: Detailed representation of the two-loop network. See text for detailed explanation of connectivity and dynamics. Parametrically manipulated projection strengths are highlighted: (1) connectivity between color input and PFC (fully connected vs. one-to-one organized C-PFC mapping, which increases the likelihood that the network assigns distinct PFC states to distinct contexts); (2) STN to GPi strength (modulating the extent to which motor action selection is inhibited given conflict at the level of PFC task-set selection); (3) diagonal PFC to pStr connection strength (modulating task-set motor action preparation); (4) pStr learning rate. PFC: Prefrontal cortex; PC: Parietal cortex; PMC: Premotor cortex; STN: subthalamic nucleus; Str: Striatum; Thal: Thalamus; GPe: Globus Pallidus external segment; GPi: Globus Pallidus internal segment; SNc: Substancia nigra pars compacta. a and p indicate anterior and posterior loops. Bottom Example of the time course of PFC activations (for chosen and other TS), average STN activity and chosen motor output unit activity in correct stay and switch trials. In switch trials, co-activation of PFC stripes results in stronger STN activation, thus preventing action selection in the motor loop until conflict is resolved, leading to increased reaction times.

As in the Bayesian C-TS model, we do not consider here the learning of which dimension should act as context or stimulus, but assume they are given as such to the model and investigate the consequential effects on learning. We extend and discuss this point further down in the paper. Thus, only the context (eg. color) part of the sensory input projects to PFC, whereas the stimulus (eg. shape) projects to posterior visual cortex. The stimulus representation in parietal cortex (PC) is then contextualized by top-down projections from PFC. Weights linking the shape stimulus inputs to parietal cortex are predefined and organized (top half of layer reflects shape 1 and bottom half shape 2). In contrast, projections linking color context inputs to PFC are fully and randomly connected with all PFC stripes, such that PFC representations are not simply frontal “copies” of these contexts; rather they have (initially) no intrinsic meaning, but as we shall see, come to represent abstract states that contextualize action selection in the lower motor action selection loop.

There are three PFC stripes, each subject to gating signals from the anterior striatum, with dynamics identical to those described above for a single loop – but with PFC stripes reflecting abstract states rather than motor responses. When a PFC stripe is gated in response to the Color context, this PFC representation is then multiplexed with the input Shape stimulus in the parietal cortex (PC), such that PC units contain distinct representations for the same sensory stimulus in the context of distinct (abstract) PFC representations (Reverberi et al., 2011). Specifically, while the entire top half (all three columns) of the PC layer represents shape 1 and the bottom half shape 2, once a given PFC stripe is gated, it provides preferential support to only one column of PC units (and the others are suppressed due to lateral inhibition). Thus the anterior BG-PFC loop acts to route information about a particular incoming stimulus to different PC “destinations”, similar to a BG model proposed by Stocco et al. (2010). In our model, the multiplexed PC representation then serves as input to the second PMC loop for motor action selection. The PMC loop contains 4 stripes, corresponding to the 4 action choices, as in the single circuit model above.

Dopaminergic reinforcement signals modify activity and plasticity in both loops. Accordingly, the network can learn to select the most rewarding of four responses but will do so efficiently only if it also learns to gate the different input Color contexts to two different PFC stripes. Note, however, that unlike for motor responses, there is no single a priori “correct” PFC stripe for any given context – the network creates its own structure. Heuristically, PFC stripes represent the hidden states the network gradually learns to gate in response to contexts. The PMC gating network learns to select actions for a stimulus in the context of a hidden state (via their multiplexed representation in parietal cortex), thus precisely comprising the definition of a task-set. Consequently, this network contains a higher level PFC loop allowing for the selection of task-sets (conditioned on contexts, with those associations to be learned), and a lower level MC loop allowing for the selection of actions conditioned on stimuli and the PFC-task-sets (again with learned associations). In accordance with the role of PFC for maintaining task-set in working memory, we allow PFC layer activations to persist from the end of one trial to the beginning of the next.

4.2.1 Cross-loop “diagonal” projections

We include two additional new features to the model whereby the anterior loop communicates along “diagonal” projections with the posterior BG (Nambu, 2011, e.g.,). First, it is important that motor action gating in the second loop does not occur before the task set has been gated in the first loop. Indeed, this would lead to action selection according only to stimulus, neglecting task-set. This is accomplished by incorporating the STN role as implemented in Frank (2006), but here where STN in the motor loop detects conflict in PFC from the first loop, instead of just conflict between alternative motor responses. Indeed, PFC to STN projection is structured in parallel stripes, so that coactivation of multiple PFC stripes elicits greater STN activity, and thus a stronger global No-Go signal in the GPi. Thus, early during processing, when a task-set has not yet been selected, there is co-activation between multiple PFC stripes, and gating of motor actions is prevented by the STN until conflict is resolved in the first loop (i.e., a PFC stripe has been gated). See specific dynamics in figure 4, bottom.

Second, we also include a diagonal input from the PFC to the striatum of the second loop, thereby contextualizing motor action selection according to cognitive state (see also Frank & Badre, 2011). This projection enables a task-set preparatory effect: the motor striatum can learn associations from the selected PFC task-set independently of the lower level stimulus, thus preferentially preparing both actions related to a given task-set. As discussed earlier, these features are in accordance with known anatomy: indeed, although the cortico-basal ganglia circuits involve parallel loops, there is a degree of transversal overlap across parallel loops, as required by this diagonal PFC-lower loop striatum projection, as well as influence of first loop conflict on second loop STN (Draganski et al., 2008).

It should be emphasized that the tasks of interest are expected to be difficult to learn by such a structured network without explicit supervision and using only reinforcement learning across 4 motor responses, especially due to credit assignment issues. Indeed, initially, both TS and action gating are random. Thus, feedback is ambiguously applied to both loops: an error is interpreted both as an inappropriate TS selection to the color context and incorrect action selection in response to the shape stimulus within the selected TS. However, this is the same problem faced by human participants, who do not receive supervised training and have to learn on their own how to structure the representations.

5 Neural network results

Although such networks include a large number of parameters pertaining to various neurons’ dynamics and their connectivity strengths, the results presented below are robust across a wide range of parameter settings. We validate this claim below.

5.1 Neural network simulations: initial clustering benefit

As for the C-TS model, we first assess the neural network’s ability to cluster contexts onto task-sets when doing so provides an immediate learning advantage. We do so in a minimal experimental design permitting assessment of the critical effects. We ran 200 networks, from which 3 were removed from analysis due to outlier learning. Simulation details are found in appendix and figure 5.

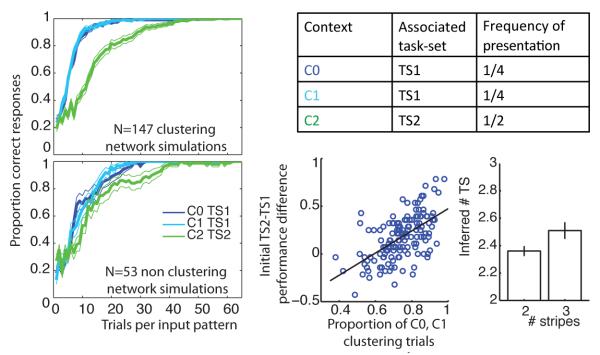

Figure 5. Neural Network Simulation 1.

Top Right: Experimental design summary Left Learning curves for different conditions. Top: 75% of networks adequately learned to select a common PFC representation for the two contexts corresponding to the same rule, and thus learned faster (clustering networks). Bottom: the remaining 25% of the networks created two different rules for C0 and C1, and thus showed no improved learning. Bottom Middle Performance advantage for the clustering networks was significantly correlated with the proportion of trials in which the network gated the common PFC representation. Bottom Right Quantitative fits to network behavior with the C-TS model showed a significant increase in inferred number of hidden TS for clustering compared to non-clustering simulations.

Rapid recognition of the fact that two contexts C0 and C1 are indicative of the same underlying TS should permit the ability to generalize stimulus-response mappings learned in each of these contexts to the other. As such, if the neural network creates one single abstract rule that is activated to both C0 and C1, we expect faster learning in contexts C0 and C1 than in C2, which is indicative of a different TS. Indeed, figure 5 (left) shows that the network’s learning curves were faster for C0 and C1 than they are for C2 (initial performance on first 15 trials of all stimuli: t = 7.8 ; p < 10−4).

This performance advantage relates directly to identifying one single hidden rule associated to contexts C0 and C1. Because the network is a process model, we can directly assess the mechanisms that give rise to observed effects. For each network simulation, we determined which PFC stripe is gated in response to contexts C0, C1 and C2 (assessed at the end of learning, during the last 5 error-free presentations of each input). All networks selected a different stripe for C2 than for C1 and C0, thus correctly identifying C2 as indicative of a distinct task-set. Moreover, 75% of networks (147) learned to gate the same stripe for C0 and C1, correctly identifying that these corresponded to the identical latent task-set. The remaining 25% (50) selected two different stripes for C0 and C1, thus learning their rules independently – that is, like a flat model.

Importantly, the tendency to cluster contexts C0 and C1 into a single PFC stripe was predictive of performance advantages. Learning efficiency in C0/C1 was highly significantly improved relative to context C2 for the clustering networks (figure 5 top left, N = 147; t = 9.4 ; p < 10−4) whereas no such effect was observed in non-clustering networks (figure 5 bottom left, N = 50; t = 0.3; p = 0.75). Directly contrasting these networks, clustering networks performed selectively better than non-clustering networks in C0/C1 (t = 4.9; p < 10−4), with no difference in C2 (t = −0.94; p = 0.35).

Within the clustering networks, we compute the proportion of trials in which the network gated the common stripe for TS1 in contexts C0 and C1, as a measure of efficiency in identifying a common task-set. This proportion correlated significantly with the increase in C0/C1 performance (figure 5 bottom middle, r = 0.72 ; p < 10−4), with no relation to C2 performance (r = −0.01; p = 0.89).

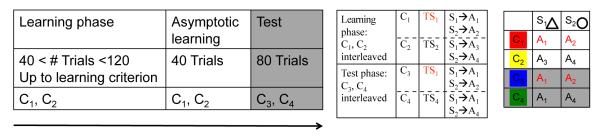

5.2 Neural network simulations: structure transfer

5.2.1 Neural network dynamics lead to similar behavioral predictions as C-TS model

This second set of simulations investigate structure transfer after learning, as described above for the C-TS model. Recall that these simulations include two consecutive learning phase, labeled training phase followed by a test phase. During the training phase, interleaved inputs include two contexts (C1 and C2) and two stimuli (S1 and S2). During the test phase, new contexts are presented to test transfer of a previously used TS (C3-Transfer), or learning of a new TS (C4-new).

The two-loop nested network was able to learn the task, with mean time to criterion 22.1(±2.6) repetitions of each of the four inputs.5

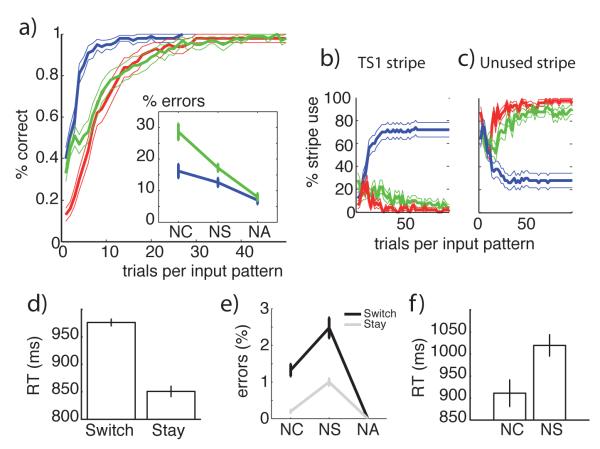

Moreover, as in the C-TS model, a clear signature and potential advantage of structure became clear in the test phase. First, learning was significantly faster in the C3 transfer condition than in the C4-new condition, thus positive transfer (fig 6a). Second, the repartition of error types was similar to that expected by the C-TS model (and as we shall see below, exhibited by human subjects). In particular, the network exhibited more errors corresponding to the wrong TS selection (NC) than other errors, especially in the new condition (figure 6i). As explained earlier, this is a sign of negative transfer – the tendency to reapply previous task-sets to situations that ultimately require creating new task-sets.

Figure 6. Neural network results.

Top a-c): test phase results, for Transfer (blue), New-overlap (green) and New-incongruent (red) conditions. Left: Proportion of correct trials as a function of input repetitions, inset: proportion of NC, NS and NA errors. Positive transfer is visible in the faster Transfer than New learning curves; Negative transfer is visible in the interaction between condition and error types and in the slower slope in New-overlap than New-Incongruent conditions. Right: Proportion of task-set TS1 (b), and blank TS (c) hidden state selections as a function of trials, for all conditions. Positive transfer is visible in the reuse of TS1 stripe in the transfer condition, and negative transfer in the reduced recruitment of the new TS stripe for new-overlap compared to new-incongruent conditions. Bottom: Asymptotic learning phase results. d): reaction-time switch-cost; e) error type and switch effects on error proportions. f): slower reaction-times for neglect L than neglect H errors.

To further investigate the source of negative transfer, we also tested networks with a third test condition, “C5-new-incongruent”, which was new but completely incongruent with previous stimulus response associations. While both C4 and C5 involved learning new task-sets, in the C5 test-condition the task-set did not overlap at all with the two previously learned task-sets: if either was gated into PFC, it led to incorrect action selection for both stimuli. This situation contrasts with that for C4, in which application of either of the previous task-sets leads to correct feedback for one stimulus and incorrect for the other, making inference about the hidden state more difficult. Indeed, networks were better able to recruit a new stripe in the C5 compared to C4 test condition (p < 0.02, t > 2.4, fig 6 b, c), leading to more efficient learning. Although initial performance was better in the C4 overlap condition (t = 3.7, p = 5 10−4, fig 6a), due to the 0.5 probability of reward resulting from selection of a previous task-set, subsequent learning curves were steeper in the C5 condition, due to faster identification of the necessity for a new hidden state.

Again, we can directly assess the mechanisms that give rise to these effects. Similarly to the previous simulations, for each network simulation, we determined which PFC stripe is gated in response to contexts C1 and C2 at the end of learning, corresponding to TS1 and TS2. We then assessed during the test phase which of the three stripes was gated for each transfer condition.

This analysis largely confirmed the proposed mechanisms of task-set transfer. In the C3 transfer condition (fig 6b), more than 70% of the networks learned to reselect stripe TS1 in response to the new context, thus transferring TS1 stimulus-action associations to the new situation, despite the fact that the weights from the units corresponding to C3 were initially random. The remaining 30% of networks selected the third previously unused (“blank”) TS stripe, and thus relearned the task-set as if it were new. In contrast, in the C4 new test condition (fig 6c), ≈90% of networks appropriately learned to select the blank TS stripe. The remaining 10% of networks selected either the TS1 or TS2 stripes, due to overlap between these task-sets and the new one, leading to negative transfer. In this small number of cases, rather than creating a new task-set networks simply learned to modify the stimulus-action associations linked to the old task-set; eventually performance converged to optimal in all networks.

To confirm the presumed link between the generalization advantage in the C3 transfer condition and the gating of a previously learned task-set, we investigated the correlation between performance and the proportion of blank TS stripe selection. This analysis was conducted over the wide array of simulations, including those designed to explore the robustness of the parameter space (fig 7). The selection of the blank stripe was highly significantly (both p’s < 10−13) anti-correlated with C3 transfer performance (r = −0.11), and positively correlated with C4 new-overlap performance (r = 0.55).6

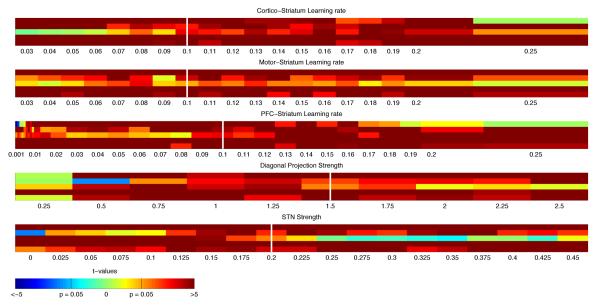

Figure 7. Neural Network parameter robustness.

Exploration of systematic modulations of key network parameters across a wide range. For each parameter, the significance values are plotted for each of five main behavioral effects (see descriptions in main text), from top to bottom: 1) Transfer versus new-overlap performance difference; 2) asymptotic learning phase error repartition effect; 3) asymptotic learning phase error reaction times NH < NL; 4) Test-phase old > new PFC stripe selection for the transfer condition; 5) Test phase new > old PFC stripe selection for the new condition. Simulations were conducted 100 times each, in each case with the other 4 parameters fixed to the corresponding white bar value, and 1 parameter varied along a wide range. 1st line: Cortico-striatal learning rate (here fixing learning rates to be the same for both loops); 2nd line: motor-cortex striatum learning rate; 3rd line: PFC-striatum learning rate; 4th line: Diagonal PFC-posterior striatum relative projection strength; 5th line: STN to 2nd loop GPi relative projection strength. Results across all five effects were largely robust to parameter changes.

Thus, these first analyses show that the neural network model creates and re-uses task-sets linked to contexts as specified by the high level C-TS computational model. Below we provide a more systematic and quantitative analysis showing how each level of modeling relates to the other. But first, we consider behavioral predictions from the neural model dynamics.

5.2.2 Neural network dynamics lead to behavioral predictions: switch costs, RTs, and error repartition

While we have shown that the neural network affords similar predictions as the C-TS structure learning model, in terms of positive and negative transfer, it also allows us to make further behavioral predictions most notably related to dynamics of selection. We assess these predictions during the asymptotic learning phase.

The persistence of PFC activation states from the end of one trial to beginning of next (a simple form of working memory), resulted in performance advantage for task-set repeat trials, or conversely, a switch-cost, with significantly more errors (fig 6e) and slower reaction times (fig 6d) in switch trials. This is because in a switch trial, gating of a different PFC stripe than that in the previous trial took longer than simply keeping the previous representation active. This longer hesitation in PFC layer led to three related effects.

First, it initially biased the PC input to the second loop to reflect the stimulus in context of the wrong task-set, thus leading to an increased chance of an error if the motor loop responds too quickly, and hence an accuracy switch-cost (and a particular error type).

Second, when the network was able to overcome this initial bias and respond correctly, it was slower to do so (due to the additional time associated with updating the PFC task-set and then processing the new PC stimulus representation), and hence a reaction-time switch-cost.

Third, and counter-intuitively, the error repartition favored NS errors over NC errors, over NA errors (fig 6e). This pattern arose because the hierarchical influence of PFC onto posterior (motor) striatum led to a task-set preparatory effect, where the two actions associated with the TS were activated before the stimulus was itself even processed. Thus, actions valid for the task-set (but not necessarily the stimulus) were more likely to be gated than other actions, leading to more NS errors. In contrast, NC errors resulted from impulsive action selection due to application of the previous trial’s task-set (particularly in switch trials). Indeed, during switch trials, error reaction-times were significantly faster for NC errors than NS errors (fig 6f). If these dynamics are accurate, we thus predict a very specific pattern of errors by the end of the learning phase:

presence of an error and reaction time switch cost when ct ≠ ct+1, but not st ≠ st+1

prevalence of within task-set errors (neglect of the stimulus), rather than perseveration errors (neglect of the context) on switch trials

faster within than across task-sets errors

We also ensured that all the behavioral predictions are robust to parameter manipulation of the network. In particular, we show in figure 7 that the majority of predicted effects hold across systematic variations in key parameters, including corticostriatal learning rates and connection strengths between various layers, including PFC-striatum and STN-GPi. The main results presented above were obtained with parameters representative from this range.

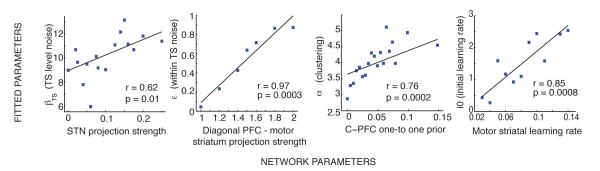

6 Linking levels of modeling analysis

In this section we show that the approximate Bayesian C-TS formulation provides a good description of the behavior of the network, and moreover, that distinct mechanisms with the neural model correspond to selective modulation of parameters within the higher level model. To do so, we quantitatively fit the behavior generated by the neural network simulations (including both experimental protocols) with the C-TS, by optimizing the parameters of the latter model that maximizes the log likelihood of networks’ choices given the history of observations Frank & Badre (2011). Parameters optimized include the clustering parameter α, the initial Beta prior strength on task-sets n0 (potentially reported as i0 = 1/n0 for a positively monotonous relationship with a learning rate equivalent), and a general action selection noise parameter, the softmax β. For comparison, we also fit a flat model, including parameters n0 and β, taking model complexity into account by evaluating fits using the Akaike Information Criterion (AIC; Akaike, 1974), as well as exceedance probability on AIC (Stephan et al., 2009).

6.1 Simulation 1: Initial clustering benefit

First, the C-TS structure model fit the networks’ behavior significantly better than a flat model (t = 5.45; p < 10−4, exceedance probability p = 0.84), for both clustering networks (t = 5.06; p < 10−4) and non-clustering networks (t = 2.17; p = 0.035), with no significant difference in fit improvement between groups (t = 0.49, ns).7 Correlation between empirical and predicted probabilities choice (grouped in deciles) over all simulations was high: r2 = 0.965, p < 10−4. Mean pseudo-r2 value comparing the likelihood of the precise sequence of individual trials to chance was also strong, at 0.46.

Given that the fits were reasonable, we then assessed the degree to which network tendencies to develop a clustered gating policy corresponded to inferred number of task-sets from the C-TS structure model. If a gated PFC stripe corresponds to use of an independent task-set, then the clustering networks (which by definition use fewer PFC stripes) should be characterized by lower inferred number of latent task-sets in the fits. As expected, the inferred number of task-sets was significantly lower for the clustering networks compare to non-clustering ones (figure 5 bottom right, t = 2.28, p = 0.023). Within the clustering networks, the proportion of common final TS1 stripe use for C0 and C1 was significantly correlated with the fitted number of task-sets inferred by the models (p = 0.027, r = −0.18).

Notably, there were no differences in the prior clustering parameter α across the two groups of networks, as expected from their common initial connectivity structure. Rather, differences in clustering were produced by random noise and choices leading to different histories of action selection which happen to sometimes reinforce a common stripe gating policy or not. Due to its approximate inference scheme, C-TS is also sensitive to specific trial order. We investigate systematically the effect of priors below by manipulating the connectivity. The fact that the hidden structure C-TS model can detect these differences in clustering due to specific trial history (without having access to the internal PFC states) provides some evidence that the two levels of modeling use information in similar ways for building abstract task-sets while learning from reinforcement. This claim is reinforced by subsequent simulations below.

6.2 Simulation 2: Structure transfer

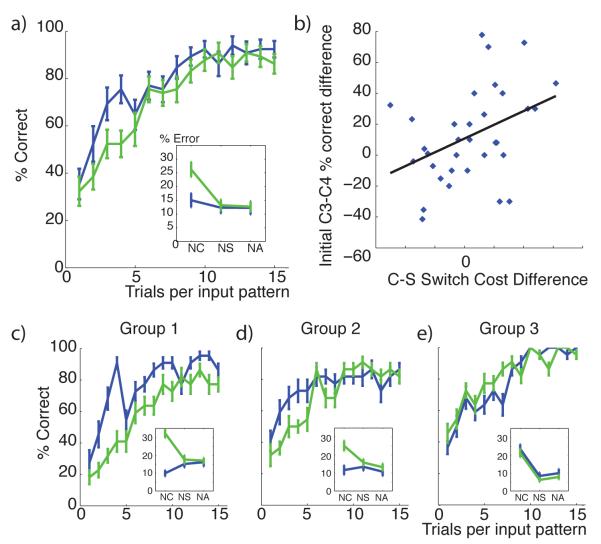

We applied the same quantitative fitting of network choices with the C-TS model for the second set of simulations for the structure transfer task. Again, the C-TS structure model fits better than a at model, penalizing for model complexity (t = 5:8, p < 10−6, true for 46 out of 50 simulations, exceedance probability p = 1:0). Moreover, these fits indicated that networks were likely to re-use existing task-sets in the C3 transfer condition, whereas networks were more likely to create a new task-set in C4 and C5. Indeed, the inferred number of additional task-sets created in the transfer phase (beyond the two created for all simulations during the learning phase), was E(N) = 0:05 for C3 vs 0:84 for C4; p < 10−4 ; t = −13:8). Networks were even more likely to create a new task-set for the C5 new-incongruent condition (E(N) = 0.99; significantly greater than C4; p = 0.0009 ; t = −3.53).