Abstract

Background

Despite many decades of research on the effective development of clinical systems in medicine, the adoption of health information technology to improve patient care continues to be slow, especially in ambulatory settings. This applies to dentistry as well, a primary care discipline with approximately 137,000 practitioners in the United States. A critical reason for slow adoption is the poor usability of clinical systems, which makes it difficult for providers to navigate through the information and obtain an integrated view of patient data.

Objective

In this study, we documented the cognitive processes and information management strategies used by dentists during a typical patient examination. The results will inform the design of a novel electronic dental record interface.

Methods

We conducted a cognitive task analysis (CTA) study to observe ten general dentists (five practicing general dentists and five general dental faculty members, each with more than two years of clinical experience) examining three simulated patient cases using a think-aloud protocol.

Results

Dentists first reviewed the patient’s demographics, chief complaint, medical history and dental history to determine the general status of the patient. Subsequently, they proceeded to examine the patient’s intraoral status using radiographs, intraoral images, hard tissue and periodontal tissue information. The results also identified dentists’ patterns of navigation through patient information and their additional information needs during a typical clinician-patient encounter.

Conclusion

First, this study reinforced the significance of applying cognitive engineering methods to inform the design of a clinical system. Second, applying CTA to a scenario closely simulating an actual patient encounter helped with capturing participants’ knowledge states and decision-making when diagnosing and treating a patient. The resultant knowledge of dentists’ patterns of information retrieval and review will significantly contribute to designing flexible and task-appropriate information presentation in electronic dental records.

Keywords: cognitive task analysis, electronic health records, usability, cognitive engineering, system design, electronic dental records

1. INTRODUCTION

Providing patient-centered cognitive support in electronic health records (EHR) for clinicians is a significant research challenge in informatics [1]. Clinicians spend a great deal of time and energy searching and sifting through raw data about patients, and trying to integrate these data with their general medical knowledge, as they care for their patients. Multiple shortcomings of EHR, such as usability problems and the resultant loss of time and productivity [2–4], steep learning curves and unintended adverse consequences [5–13], aggravate this situation. As a result, the adoption of health information technology (HIT) to improve patient care continues to be slow, especially in ambulatory settings.

Multiple problems in human-computer interaction design contribute to the often suboptimal support of clinical work by EHRs. For instance, cluttered screen designs and separation of information across multiple screens make it difficult for clinicians to gain a quick overview of the patient’s status. As a result, clinicians sometimes miss key information required to make decisions, which in turn increases the chance of errors. Several studies suggest that dentistry, a primary care discipline with approximately 137,000 practitioners in the United States, may have problems similar to those found in medicine [14–19]. Studies conducted in dentistry [15–21] have demonstrated that poor usability and a steep learning curve are major barriers to the use of electronic dental record (EDR) systems. These studies suggest that there is significant room for improvement of EDR systems. Similarly, despite widespread adoption of EDRs at U.S. dental schools, investigators continue to find that many users are not convinced that they improve efficiency and effectiveness [15, 19, 21]. Users want better design, shorter learning curves, reliable hardware and digital imaging capabilities [15, 19].

On the other hand, empirical studies have reported that clinical performance improves when information displays match the users’ mental models and their clinical work processes [22–27]. Clinicians are able to focus their attention entirely on patient problems and devote their cognitive resources to clinical reasoning, strategy and treatment planning [28]. These observations have led to the application of techniques and methodologies adapted from applied cognitive psychology to study how HIT can support clinicians’ work processes and decision-making activities [24, 27, 29]. For example, Patel and Kushniruk [24] proposed cognitive engineering methods to study individual interactions of users with HIT, and to understand group processes and interactions among health professionals and HIT using a distributed cognitive framework. The results helped with assessing the clinicians’ information needs and understanding the problems they experienced when interacting with clinical systems. They also contributed to redesigning these systems to support clinicians’ work.

Few studies have explored how cognitive engineering methods can inform the design of a clinical system [22, 30–34]. These studies have typically employed methods such as think-aloud protocols, work-flow observations and semi-structured interviews to understand the cognitive processes and information management strategies of clinicians during patient care. The resulting cognitive models were then used as the basis to design systems. Zhang and colleagues [34–37] developed a human-centered distributed information design framework to study the dynamic interactions among humans, artificial agents and the context in which the system is situated. Preliminary results have demonstrated that these approaches may improve systems’ cognitive support during patient care and thus suggest the possibility of improving patient care quality and safety. Recently, investigators identified clinical data presentation that facilitates the clinicians’ formulation of a patient’s problem as a critical component for improving patient care through HIT [1, 38]. However, research is still nascent as many methods have been proposed with little empirical evaluation. It is this gap in knowledge – how cognitive engineering methods can be optimally applied to inform the system design process – that we sought to address in this study.

Existing cognitive engineering studies have mostly focused on information processing and management when users interacted with patient documentation or records or during their clinical workflow [22, 31, 32, 39–41]. They do not reflect the actual patient or the ‘clinicians’ physical and cognitive interactions with a patient’ [38, 42, 43]. Thus, capturing interactions with records may not necessarily capture the clinicians’ knowledge states and decision-making as well as doing so with less abstract representations of patients. In this paper, we adapt the current cognitive task analysis methods to closely simulate activities during an actual patient visit, and to study how dentists access and interact with raw patient data to diagnose a patient. We coded cognitive processes and information accessed in order to learn what information dentists needed and how they used it in diagnosing and treatment planning.

The objective of this study was to document how dentists review information and make decisions during a new patient visit. We focused on the following questions:

What information sources do dentists retrieve and in what sequence when examining simulated patient cases of varying complexities?

What information do dentists use to make clinical decisions and how do they use it?

What cognitive processes characterize dentists’ information management and decision-making activities when examining patients?

2. METHODS

Cognitive task analysis (CTA) is the “extension of traditional (behavioral) task analysis techniques to yield information about the knowledge, thought processes and goal structures that underlie observable task performance” [44]. It is typically used to identify the concepts, contextual cues, goals and strategies that contribute to the mental activities of an individual when solving a specific problem or a task. In this study, we conducted a CTA to observe ten general dentists examining three simulated patient cases using a think-aloud protocol. The three simulated patient cases referred to here include only patient information and do not include real patients or actors. From here on, we refer to simulated patient cases as ‘cases’ in this manuscript. We recruited a purposive sample of ten general dentists, five general dentists practicing in Pittsburgh and five general dental faculty members, each with more than two years of clinical experience. The three cases were selected from the pool of approximately 80 patient cases that senior dental students at the University of Pittsburgh School of Dental Medicine develop each year as part of their Senior Case Presentation course. All the cases are based on actual patients. Three dental faculty selected the clinical cases from this pool to ensure that they represented patients typically seen in general dental practice. One case represented a low complexity case (a patient who maintains regular dental visits); the second, a medium complexity case (patient with multiple dental needs); and the third, a high complexity case (patient with multiple dental needs and underlying systemic disease).

Each session was video- and audio-recorded to capture participants’ interactions with the patient information. Before starting the session, the goal and the process of conducting the experiment were reviewed with the participant. Participants were trained through practice with the think-aloud process, using one or two tasks that were not used in the study.

After practice, the cases were presented to the participants one by one in random order to prevent sequential bias. The observer began by handing out the first patient information, which included basic patient information and the chief complaint. All other information about the case was provided only if the participant requested it while assessing the patient and developing a treatment plan. Each participant was instructed to verbalize both the type of information desired and what s/he was thinking while reviewing and assessing patient information. The observer reminded participants to keep thinking aloud if they fell silent for more than 15 seconds.

The transcribed sessions were analyzed to identify and code both the kinds of information dentists reviewed and the cognitive processes they used.

2.1 Coding of Data

The transcribed data were first segmented into individual protocol statements (data segments). Each individual protocol statement (data segment) represents a phrase, sentence or a group of multiple sentences that refer to the same thought (content or knowledge) [45]. From here on, individual protocol statements will be referred to as data segments in this manuscript. Initially, two researchers (TT and TS) reviewed the verbal reports from two sessions and drafted a coding scheme. An incremental and iterative process was followed to develop and refine the coding scheme. Once it was finalized, the two researchers coded two sessions independently to refine and validate the coding scheme. Table I shows the final coding scheme and examples of individual protocol statements. One researcher (TT) coded all thirty sessions. Inter-rater reliability was then calculated for each variable using the κ-statistic for a randomly selected case coded by the two researchers. Data coding followed the process described in the two research studies on cognitive processing published by Jaspers [22] and Crowley [45].
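
The agreement calculation can be illustrated with a minimal sketch. It assumes the κ-statistic is Cohen's kappa for two raters, which the text does not state explicitly; the function name `cohen_kappa` and the example labels are hypothetical and are not study data.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who coded the same data segments."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must code the same non-empty list of segments")
    n = len(labels_a)
    # Observed agreement: share of segments that received the same code.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical code throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical process-type codes for eight segments (illustration only).
rater_1 = ["retrieval", "processing", "deciding", "deciding",
           "other", "retrieval", "processing", "deciding"]
rater_2 = ["retrieval", "processing", "deciding", "processing",
           "other", "retrieval", "processing", "deciding"]
kappa = cohen_kappa(rater_1, rater_2)
```

In this made-up example the raters agree on seven of eight segments, and kappa discounts the agreement expected by chance from each rater's code frequencies.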

Table I.

Coding scheme showing the variables and examples of coding

| Variables | Values | Example(s) |
|---|---|---|
| Independent variables | | |
| Dentist | ID of the dentist | n/a |
| Case Complexity (CASE) | Case 1 (low complexity), Case 2 (medium complexity), Case 3 (high complexity) | n/a |
| Time (time_percent) | Time taken to complete each case converted to percentage | |
| Dependent variables | | |
| Process type (PROCESS) | Information retrieval/review | “Looking at that, the next thing that I would like to know is a medical history.” |
| | Processing | “But the patient, in my clinical charting, I would note that tooth No. 16 has retained root tips.” |
| | Deciding | “I can already tell that she has a lot of cavities or caries.” |
| | Other | “That’s basically it. I think she’s in good shape. I would just do those things I recommended. That would be it.” |
| Information source (INFO) | Identification of form or artifact (e.g., radiograph); section on form (if applicable); data element (if applicable) | Medical history form → cardiovascular system → stroke in 2000 |
| Segment order (ORDER) | Sequential number assigned to each successive segment | n/a |

Each data segment of the verbal protocol was coded for process type, process code and information source. Process type refers to “information processes that produce new states of knowledge by acting on existing states of knowledge” [45]. In this study, four major process types were identified: 1) Information retrieval or review; 2) Processing; 3) Deciding; and 4) Other. Information retrieval or review included actions or processes involved with retrieving and reviewing patient information, such as requesting information, asking a follow-up question, scanning records or reviewing images. Processing included actions involved with processing the information reviewed, such as setting a goal, hypothesizing, contextualizing and comparing/cross checking. Deciding included decision-making actions such as establishing a finding, diagnosis or a treatment and making recommendations for a treatment or on a diagnostic procedure. Other, the fourth process type, included actions that led to the conclusion or evaluation of one’s own reasoning, such as summarizing, wrapping up and expressing ignorance.

Process codes refer to actions or processes that occur within each of the four major processing types described above. The final coding scheme consisted of 28 specific process codes – four within information retrieval or review, 11 within processing, nine within deciding and four within other. Last, there was an option for “not coded.”
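
One way to picture a coded data segment is as a small record. The sketch below is illustrative only: the class and field names (`CodedSegment`, `dentist_id`, `info_sources`) and the identifier format are assumptions, not the structure used in the study. The counts of process codes per type are taken from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class ProcessType(Enum):
    """The four process types described in the text."""
    RETRIEVAL_REVIEW = "information retrieval or review"
    PROCESSING = "processing"
    DECIDING = "deciding"
    OTHER = "other"


# Number of specific process codes within each type, as reported in the text.
PROCESS_CODE_COUNTS = {
    ProcessType.RETRIEVAL_REVIEW: 4,
    ProcessType.PROCESSING: 11,
    ProcessType.DECIDING: 9,
    ProcessType.OTHER: 4,
}


@dataclass(frozen=True)
class CodedSegment:
    dentist_id: str                      # hypothetical identifier format
    case: int                            # 1 = low, 2 = medium, 3 = high complexity
    order: int                           # sequential segment number (ORDER)
    process_type: Optional[ProcessType]  # None stands for "not coded"
    process_code: Optional[str]          # a specific code within the process type
    info_sources: Tuple[str, ...] = ()   # e.g. ("radiographs", "intraoral images")


# Hypothetical example segment (not study data).
segment = CodedSegment("D01", 3, 42, ProcessType.RETRIEVAL_REVIEW,
                       "requesting information", ("medical history",))
total_codes = sum(PROCESS_CODE_COUNTS.values())
```

The per-type counts sum to the 28 process codes reported above, which gives a quick consistency check on any coding table built this way.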

The third major variable coded, information source, included the different patient information participants reviewed during the simulated patient examination. In this study, information sources included chief complaint, dental history and medical history, intraoral exam findings recorded on hard tissue and periodontal information, and intraoral images and radiographs. As shown in Table II, we organized these information sources into three major categories for data analysis: patient meta-information, examination information and images.

Table II.

Three categories of information sources and the information sources organized under these categories

| No. | Category of information sources | Information sources |
|---|---|---|
| 1 | Patient meta-information (general status and attitudes of the patient) | Patient information, medical history, medications, medical consult, dental history, social history |
| 2 | Examination information (information typically acquired during a patient examination) | Hard tissue and periodontal information, extraoral and intraoral exam findings, study models, notes, pathology consults |
| 3 | Images | Intraoral images and radiographs |

To summarize, each data segment from every examination was coded as to one of the four process types, one of the process codes within that type and by the relevant information source(s). Since the time taken was not expected to be the same for each case, an additional time-percent variable was created by calculating duration completed against total duration in ten percent intervals. This helped in plotting data from all 30 cases on the same graph in ten percent time intervals for comparison purposes.
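
The time normalization can be sketched as follows. This is a minimal illustration that assumes each segment carries an elapsed time in seconds and that intervals are labeled by their upper edge (10, 20, ..., 100); the function name `time_percent_bin` and this labeling convention are assumptions, not details given in the study.

```python
import math


def time_percent_bin(elapsed_seconds, total_seconds, width=10):
    """Return the upper edge (10, 20, ..., 100) of the interval containing a segment."""
    if total_seconds <= 0 or not 0 <= elapsed_seconds <= total_seconds:
        raise ValueError("elapsed time must lie between 0 and the total case time")
    percent = 100.0 * elapsed_seconds / total_seconds
    # Round up to the next interval edge; a segment at 0% falls in the first interval.
    return max(width, math.ceil(percent / width) * width)


# Segments halfway through a 17-minute case and a 25-minute case share one interval.
low_case_bin = time_percent_bin(510, 17 * 60)
medium_case_bin = time_percent_bin(750, 25 * 60)
```

Because both values are expressed as a share of their own case duration, segments from cases of different lengths can be pooled on one axis.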

2.2 Data Analysis

We performed the following data analysis to identify the dentists’ information usage and their patterns of navigation:

quantified, analyzed and compared the specific information sources used across case types, including frequency and sequence of use (Table IV)

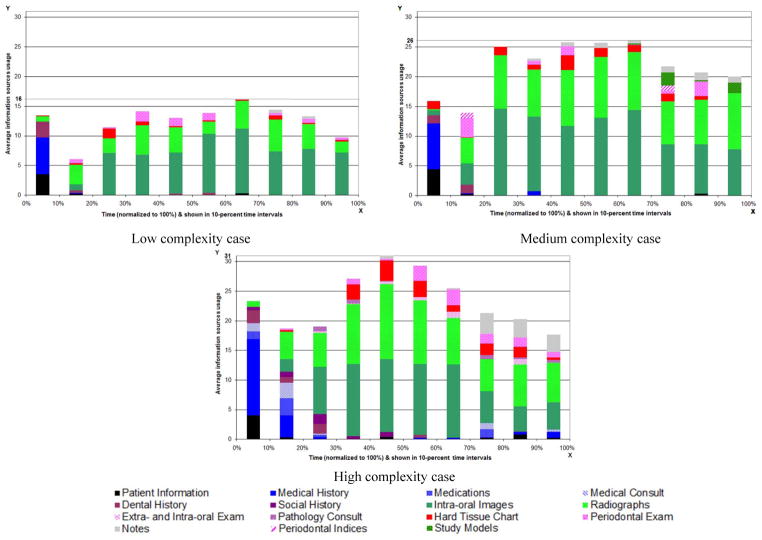

graphed the average frequency of information sources used against time-percent in ten-percent time intervals to show which information sources supported their decision-making process and the sequence in which they used information sources (Figure 1)

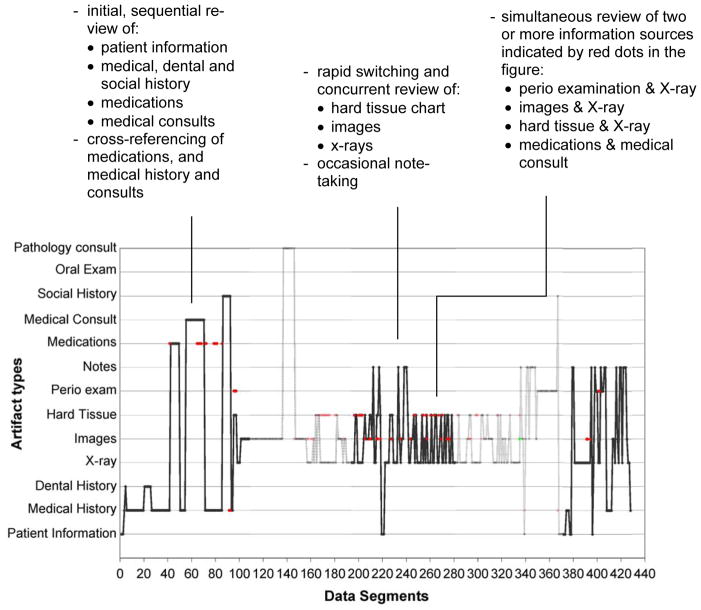

graphed each participant’s information source usage against data segments to identify patterns of navigation (Figure 2)

determined instances when participants reviewed at least two information sources simultaneously and when they needed additional information (Figure 2 and Table V)

graphed average frequency of process types used against time-percent in ten-percent time intervals.

Table IV.

Total Frequency (Percent) of Information Sources Usage by the Ten Participants for the Three Cases

| Patient information sources | Total frequency | % | Low complexity | % | Medium complexity | % | High Complexity | % |

|---|---|---|---|---|---|---|---|---|

| Intraoral images | 1958 | 37 | 626 | 51 | 758 | 39 | 574 | 28 |

| Radiographs | 1810 | 35 | 340 | 28 | 747 | 38 | 723 | 35 |

| Medical history | 336 | 6 | 64 | 5 | 88 | 4 | 184 | 9 |

| Notes | 157 | 3 | 8 | 1 | 45 | 2 | 104 | 5 |

| Periodontal information | 251 | 5 | 63 | 5 | 81 | 4 | 91 | 4 |

| Hard tissue information | 211 | 4 | 39 | 3 | 84 | 4 | 88 | 4 |

| Basic patient information | 152 | 3 | 40 | 3 | 52 | 3 | 60 | 3 |

| Dental history | 121 | 2 | 38 | 3 | 29 | 1 | 54 | 3 |

| Medical consult | 56 | 1 | n/a | 0 | n/a | 0 | 56 | 3 |

| Medications | 46 | 0.9 | n/a | 0 | n/a | n/a | 46 | 2 |

| Study models | 44 | 0.8 | n/a | 0 | 44 | 2 | n/a | n/a |

| Social history | 43 | 0.8 | n/a | 0 | n/a | n/a | 43 | 2 |

| Extra-oral and intraoral exam | 33 | 0.6 | n/a | 0 | n/a | n/a | 33 | 2 |

| Pathology consult | 31 | 0.6 | n/a | 0 | n/a | n/a | 31 | 2 |

| Total | 5249 | 100 | 1218 | 100 | 1944 | 100 | 2087 | 100 |

Figure 1.

Information source usage over case time (normalized to 100% for all participants/cases since absolute case times differed). Information source usage demonstrates specific patterns as participants progress through each case.

Figure 2.

Three patterns of navigation through the information sources for the high complexity case. 1) reviewing information sources in a linear sequence 2) rapidly switching between information sources and 3) reviewing multiple information sources simultaneously.

Table V.

Frequency and the time participants spent working with at least two information sources

| Information source | Reviewed simultaneously with | High complexity case: frequency | High complexity case: time (minutes, seconds) | Medium complexity case: frequency | Medium complexity case: time (minutes, seconds) | Low complexity case: frequency | Low complexity case: time (minutes, seconds) |
|---|---|---|---|---|---|---|---|
| radiographs | intraoral images | 115 | 14.33 | 163 | 20.53 | 19 | 2.4 |
| radiographs | hard tissue chart | 53 | 5.0 | 23 | 2.27 | 5 | 1.41 |
| radiographs | periodontal chart | 17 | 2.0 | 3 | 0.29 | 5 | 0.17 |
| hard tissue chart | intraoral images | 18 | 2.17 | 26 | 2.36 | 1 | 0.14 |
| periodontal chart | intraoral images | 19 | 1.58 | 3 | 0.35 | 1 | 0.8 |

Generalized linear mixed models for count data were used to carry out the primary analyses. These models account for the inherent correlation within and heterogeneity between dentists. In particular, Poisson or negative binomial regression models with a random intercept were fitted to determine 1) whether the distribution of information sources was the same or different; 2) whether the average number of dental history utilizations differed from the average number of radiograph utilizations; 3) whether the mean number of each information source used varied by complexity of the case; and 4) whether the distribution of information sources used varied across dentists. Process types were also analyzed to determine 1) whether the distribution of process types was the same; 2) whether the average number of information retrieval/review events differed from the average number of decision events; 3) whether the mean number of occurrences of each process type varied by case; and 4) whether the distribution of process types varied across dentists.
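
For reference, a random-intercept Poisson model of the kind named above is commonly written in the following generic form. The notation is illustrative and is not taken from the study: $y_{ij}$ stands for a count (for example, uses of an information source) for dentist $i$ in observation $j$, $\mathbf{x}_{ij}$ for covariates such as case complexity or information source, and $b_i$ for the dentist-specific random intercept. The exact covariates and parameterization fitted in the study are not stated in the text.

$$
\begin{aligned}
y_{ij} \mid b_i &\sim \mathrm{Poisson}(\mu_{ij}),\\
\log \mu_{ij} &= \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + b_i,\\
b_i &\sim \mathcal{N}(0, \sigma_b^2).
\end{aligned}
$$

The random intercept $b_i$ lets counts from the same dentist be correlated while allowing baseline rates to differ between dentists. A negative binomial variant keeps the same linear predictor but adds a dispersion parameter; in the common NB2 form, with dispersion $\theta$ (again an illustrative symbol), the conditional variance is $\mu_{ij} + \mu_{ij}^2/\theta$ rather than $\mu_{ij}$.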

3. RESULTS

Six of the ten participants were male and five participants were graduates of the University of Pittsburgh. All of them completed diagnosis and treatment planning for all three standardized cases. The time spent on working through the cases varied by participant and case complexity (average: 73 minutes; std. dev. 22 minutes). Table III shows that time and average number of segments per case increased with case complexity.

Table III.

Average Time Spent By Participants and Average Number of Data Segments

| Case complexity | Avg. time (min.) reviewing case | Standard deviation (min.) | Avg. number of segments per case (rounded) | Standard Deviation (segments) |

|---|---|---|---|---|

| Low | 17 | 7 | 158 | 65 |

| Medium | 25 | 7 | 240 | 89 |

| High | 31 | 14 | 266 | 106 |

The transcribed sessions consisted of a total of 6,631 data segments, an average of 664 segments per participant. In the sections below, we describe which information sources the ten participants requested and reviewed while working with the three cases and their information needs during different time periods of the patient examination.

3.1 Use of Specific Information Sources by Participants

Participants spent approximately 80 percent of their time interacting with patient information during diagnosis and treatment planning of the three cases. In the remaining 20% of the time, participants recalled facts from past clinical experiences and personal knowledge. Table IV shows that radiographs and intraoral images were the most often used information, constituting 72% of information source usage. In general, the frequency of participants’ information source usage increased with case complexity, with few exceptions.

Figure 1 shows information source usage over time as participants worked through each case. (We normalized absolute time spent on each case to percent in order to be able to group participants by case.) Each vertical bar shows how often participants used particular information sources during the particular 10% increment in case time. In all three cases, participants started by reviewing patient information to understand the patient’s reason for the dental visit. They then reviewed the patient’s medical history and/or dental history. Once they determined the patient’s general health status, they examined the patient’s oral status through radiographs, intraoral images, hard tissue and periodontal information. One participant did not ask for the medical or dental history of any patient. Below, we describe in more detail how participants reviewed specific information and how the process of discovery led them to review additional information.

3.1.1 Patient Demographics

All participants started with patient information and looked for the patient’s age, sex, ethnicity and chief complaint. More than half of the participants also looked for specific information, such as whether the patient had a toothache and what the patient expected from treatment. Seven participants commented that a patient’s ability to pay for dental treatment (for example, whether the patient is insured or uninsured) influences the treatment plan.

3.1.2 Medical History

Dentists reviewed the patients’ medical history to determine any medical conditions that contraindicated dental treatment, or needed additional precautions/pre-medications before receiving dental treatment [46]. Important questions concerned the patient’s vital signs, drug or food allergies and any underlying systemic diseases. Specific diseases such as HIV (in the high complexity case) triggered participants to seek more information on the patient’s medications, medical consults and current lab values for viral load. They also asked about the patients’ occupation, drinking and smoking habits.

3.1.3 Dental History

More than half of the study participants asked for the dental history to review the patient’s past dental treatment. This information helped participants to determine the patient’s preferences for dental treatment, previous experiences and treatment anxiety levels. The latter is important when planning treatment because about 5–10% of U.S. adults are estimated to have dental anxiety or fear.

3.1.4 Transition to Oral Examination

To examine the patient’s oral status, participants primarily looked at intraoral images, radiographs, and hard tissue and periodontal information. Some participants asked for additional information such as study models to check the patient’s occlusion, pulp testing results to determine vitality of the teeth, and the patient’s smile or front profile photo to determine the teeth’s influence on the patient’s facial aesthetics. For all three cases, participants first reviewed the intraoral images and the radiographs to gather information and to make decisions for a case, and then integrated information about hard tissue and the periodontal status to diagnose and plan treatment.

3.1.5 Intraoral Images and Radiograph Usage

Typically, participants started with the intraoral images to examine the patient’s oral condition. For instance, they looked at the oral hygiene of the patient, any obvious signs of inflammation or swelling as well as color changes of the oral soft tissue. They also looked for missing and carious teeth before they proceeded to examine each tooth individually, starting from the upper right third molar to the upper left third molar and then moving to the lower left third molar and progressing to the lower right third molar, in a standard sequence (UR→LR). During this time, they checked for any discontinuity or cracks on tooth structure that indicated tooth decay or fracture. They also checked for tooth mobility, which plays an important role in deciding whether to save or extract teeth. They looked at radiographs for more information (such as extent of tooth decay or bone loss) or for confirmation of what they saw in the images. If participants started by reviewing the radiographs, they essentially followed the same order and integrated information from the images to confirm their observations.

Participants reviewed the hard tissue and periodontal information either to confirm their findings or to obtain more information. However, radiographs and images were the primary information sources they used to diagnose and develop a treatment plan for each of the three cases. An interesting finding is that while the five dental faculty participants requested and reviewed the hard tissue information, the five practicing dentists did not. Instead, they said they would document all findings in the hard tissue chart during the oral examination.

3.2 Variations among Participants and across Cases

Although participants used almost the same information sources for all three cases, there were some differences in how they used them based on case complexity. For instance, participants reviewed patient meta-information (patient information, medical history, dental history, social history, medication list, medical consult) at significantly higher frequencies (p < 0.01) for the high complexity case at the beginning of the session (20th time percent) than for the low and medium complexity cases. This difference was most likely due to the fact that the high complexity case had additional information on medications, medical consults and social history. Another interesting finding was that while participants rarely went back to patient demographics, medical history, medical consult and medications at the end of the low or medium complexity case sessions, they revisited this information for the high complexity case. Five of the 10 participants reviewed medical history, medications and medical consult again when finalizing the treatment plan at the end of the session. Few participants recorded notes as they reviewed the three cases. Those who did so took fewer notes when reviewing the low complexity case than the medium and the high complexity cases. Participants also started writing halfway through the session for the medium and the high complexity cases and only towards the end of the session for the low complexity case.

3.3 Patterns of Navigation through Information Sources

It is important to learn how clinicians navigate through various information sources when diagnosing and planning treatment. Analysis revealed that participants exhibited three common patterns of reviewing information sources: 1) reviewing information in a linear sequence, which was observed mostly when they reviewed the low complexity case; 2) rapid switching between different information sources when they needed additional information or confirmation to decide on a finding, diagnosis or treatment; and 3) reviewing at least two information sources simultaneously, especially when confirming their findings or finalizing their diagnoses and treatments. Figure 2 illustrates these three patterns of navigation when a participant reviewed a high complexity case and Table V shows the frequency and time they spent reviewing at least two information sources simultaneously.
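
As a rough illustration of how such patterns could be quantified from coded segments, the sketch below counts switches between information sources and segments that reference at least two sources at once. It is a simple heuristic written for this explanation, not the procedure used in the study, where patterns were identified from graphs of information source usage. The function name `summarize_navigation` and the example sequence are hypothetical.

```python
def summarize_navigation(segments):
    """Count source switches and simultaneous reviews in an ordered list of segments.

    Each segment is a set of the information sources referenced in it.
    """
    switches = 0
    simultaneous = 0
    previous = None
    for sources in segments:
        if not sources:
            continue  # no information source, e.g. recall from clinical experience
        if len(sources) >= 2:
            simultaneous += 1  # at least two sources reviewed together
        if previous is not None and sources != previous:
            switches += 1  # the participant moved to a different source (or set)
        previous = sources
    return {"switches": switches, "simultaneous": simultaneous}


# Hypothetical sequence of segments (not study data).
sequence = [
    {"patient information"},
    {"medical history"},
    {"radiographs"},
    {"intraoral images"},
    {"radiographs"},
    {"radiographs", "intraoral images"},
    set(),
    {"radiographs", "intraoral images"},
]
summary = summarize_navigation(sequence)
```

Under this heuristic, a mostly linear review yields few switches relative to the number of segments, while rapid switching yields many.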

3.3.1 Analyzing Process Types Over Time

Of the 6,631 coded segments, 13.8% were coded as the process type information retrieval or review, 26% as processing, 40.5% as deciding and 4.25% as other. Process type other comprised segments coded as expressing ignorance, summarizing and wrapping up. Of the total segments, 15.4% were not coded as they did not contain any information related to the patient case. In all 30 sessions, information retrieval or review occurred predominantly in the first quarter and leveled off about halfway through the case. At that point, deciding started to peak. Participants made most decisions in the middle phase and few at the end of each case. Processing was observed to be constant throughout all the cases. The pattern of process types, i.e. information retrieval/review, processing, deciding and other, appeared to be the same over time in the three cases. The incidence rates of process types were highest for the low complexity case (p < 0.01). This may be because dentists processed information more quickly with the low complexity case than with the medium or high complexity cases. The overall inter-rater reliability (κ-statistic) for coded process types was 0.7. The individual κ-statistic for each process type was 0.82 for deciding, 0.97 for information review, 0.54 for processing and 0.82 for other.

These results suggest that clinicians spent the early phases of patient examination gathering information. The process types information retrieval or review, processing and deciding occur throughout the patient examination. The process type other was infrequent in all sessions. These findings suggest that it is important to display clinically relevant information together and in context right from the beginning to support the clinicians’ continuous information review, processing and decision-making activities. Clinicians currently spend significant time searching and sifting through electronic patient information, which makes it difficult to process and diagnose without making mistakes.

4. DISCUSSION

In this project, we studied how dentists review and process information, and make decisions, when examining new patients. To do so, we used cognitive task analysis, a method to capture and document manual and mental activities, artifacts, and task characteristics during a work process. CTA has only rarely been used during the needs analysis phase of clinical systems development, despite enhancing the understanding of clinician-patient encounters necessary for systems design [22, 24, 25, 31, 40].

We combined cognitive task analysis with a think-aloud protocol, for two reasons, to simulate clinician activities during the patient encounter as closely as possible. First, capturing clinicians’ knowledge states and decision-making is likely to work better when the clinical care process is simulated more closely than is possible through a simple interaction with a patient record. Second, using humans as real or simulated patients is costly, time-consuming and logistically difficult.

Previous studies have focused on the clinical workflow or the interaction with patient documentation to understand how clinicians work [22, 31, 32, 39–41]. Observing the clinical workflow often faces logistical and practical challenges, which may make it difficult for researchers to observe the behavior of interest. On the other hand, inferring clinical behavior solely from EHR data is challenging [38, 43] because they are a reflection of the recording process inherent in healthcare, not the patient care process [43]. In our study, participants started with the basic information patients typically provide. Subsequently, they gathered, reviewed and processed information on their own, as they would with an actual patient. We simulated the patient as closely as possible with rich visual, graphical and other materials.

Our study produced three major insights into how clinicians reviewed and processed information relevant to the design of EDR systems. First, we determined which information was critical to the review, diagnosis and treatment planning during initial patient examinations. Second, we identified three main ways in which dentists navigate through the information in the process. Third, we identified information needs that EDRs currently may not be equipped to satisfy optimally. Below, we discuss these findings in more detail.

1. Information critical to review, diagnosis and treatment planning

Although participants reviewed information in the order they preferred, the study identified some common patterns. For instance, all participants had reviewed the general patient information, and the medical, medication and dental histories, before they made their first decision on the general status of the patient. Then, they typically reviewed radiographs and photos together, and integrated hard tissue and periodontal information. Dentists often use multiple information artifacts together, and work fluidly between high-fidelity information (e.g. radiographs and photos) and its abstract representation(s) (hard tissue and periodontal charts). In most EDRs, radiographs and intraoral photos are displayed on separate screens, making navigation cumbersome, and forcing recall rather than supporting recognition. These findings argue for designs that present clinically relevant information together and in context.

2. Three main navigation patterns through information

Participants navigated through information using three main patterns: (1) reviewing information artifacts in a linear sequence; (2) switching rapidly between information artifacts; and (3) reviewing multiple information artifacts simultaneously. This finding is significant because previous studies of EDRs indicated significant usability problems as a result of information that is fragmented across multiple screens [15, 17, 19, 20]. EDR users must remember key information while navigating through different screens, requiring them to focus on locating information and avoiding mistakes, rather than on the task itself. In contrast, paper-based records provide a higher degree of flexibility, because they allow the clinician to arrange forms as needed, for instance side-by-side. Supporting the navigation patterns identified in our study in EDRs may enhance access and navigation, and possibly improve the efficiency and effectiveness of users’ clinical decision-making processes.

3. Information needs

Our CTA also helped identify information needs that may not necessarily be part of routine patient documentation. For instance, most participants wanted to know whether the patient was in pain. Acute pain is an important part of the patient’s chief complaint, and helps the clinician prioritize and plan treatment. Yet, as one of our earlier studies has found [47], the chief complaint, as a separate data field, is not part of the four market-leading EDR systems. Similarly, participants wanted to check the patient’s bite and occlusion, and rule out parafunctional habits, often using study models. Anecdotal reports suggest that dentists continue to use plaster study models even after they have moved to electronic records. Despite the increasing availability and adoption of digital models, they are yet to become a part of the EDR in general dentistry.

Financial information also played a crucial role when the participants planned treatment. Currently, all EDRs manage patients’ financial and insurance information separately from the patient’s clinical information. This is because front desk personnel typically handle financial transactions, while clinical personnel manage clinical information. Given the relevance of financial considerations, access to financial and insurance information in the context of the treatment planning process should be improved. For instance, EDR systems could provide real-time financial estimates for planned treatment.
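As an illustration of the kind of estimate suggested above, the following is a minimal sketch of a patient cost calculation for a treatment plan. Everything in it is an assumption made for illustration: the `PlannedProcedure` fields, the fees, the coverage fractions and the single remaining annual benefit are hypothetical, and real benefit rules (deductibles, waiting periods, frequency limits) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedProcedure:
    name: str        # hypothetical procedure label
    fee: float       # hypothetical office fee in dollars
    coverage: float  # assumed fraction paid by insurance (0.0 to 1.0)


def estimate_patient_cost(plan, remaining_benefit):
    """Return (insurance_pays, patient_pays) for a list of planned procedures.

    Insurance pays its share of each procedure, in plan order, until the
    remaining annual benefit is used up; the patient pays the rest.
    """
    insurance_total = 0.0
    patient_total = 0.0
    for proc in plan:
        insurer_share = min(proc.fee * proc.coverage, remaining_benefit)
        remaining_benefit -= insurer_share
        insurance_total += insurer_share
        patient_total += proc.fee - insurer_share
    return round(insurance_total, 2), round(patient_total, 2)


# Hypothetical treatment plan (illustrative values only).
plan = [
    PlannedProcedure("periodic exam", 60.0, 1.0),
    PlannedProcedure("composite filling", 200.0, 0.8),
    PlannedProcedure("crown", 1200.0, 0.5),
]
insurance_pays, patient_pays = estimate_patient_cost(plan, remaining_benefit=500.0)
print(f"insurance: ${insurance_pays:.2f}, patient: ${patient_pays:.2f}")
```

Showing such an estimate next to the treatment plan, rather than in a separate billing module, is one way to bring financial information into the clinical context described above.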

5. LIMITATIONS

Our study only approximated, but did not replicate, a clinical patient examination. This design may have influenced our results in multiple ways. First, dentists do not generally verbalize their thoughts during a clinical examination. Second, reviewing intraoral images and written information had to substitute for examining and interacting with the patient. Last, some of the nuances of the workflow of the clinical appointment may have been lost. A second limitation was that only a convenience sample of dentists participated in the study. We thus do not know whether the study results would generalize.

However, we believe that the CTA was one of the best options to achieve our study objectives. Lab studies are typically more appropriate for studying clinical decision-making than field studies. In the clinic, dentists often combine data gathering and treatment planning into a single activity [20], making it difficult to “tease out” specific cognitive processes. Our participants were able to focus their attention completely on the patient case and verbalize their thoughts, thus facilitating data collection in ways that would have been difficult to achieve in the clinical setting.

6. FUTURE DIRECTIONS

In future research, we plan to elucidate how EDRs can be designed optimally for clinical work using additional methods, such as contextual inquiry. While our current study focused on the cognitive processes of a single clinician in examining and planning treatment for a single patient, our next step will be to study the clinical encounter in its actual work context. In this way, we will capture not only the dentist’s activities, but also the dentist’s interaction with the dental team as well as the entire workflow. Another question for future research is how an EDR system designed on the basis of a CTA differs from one that was not with respect to usability, usefulness and user perceptions. Last, a long-term question we pursue is how CTA compares with other methods in helping optimize the design of health information technology systems.

7. CONCLUSION

Our study made several important contributions to understanding the requirements of and methods for designing EDR systems. First, our results provide a detailed and rich representation of how dental clinicians review, diagnose and plan treatment for patient cases. Using cases at three levels of complexity, we identified what information participants needed, in what sequence they reviewed it, how they navigated through it, and what information needs were unlikely to be met with current EDRs. We expect our results to significantly contribute to the development of flexible and task-appropriate information and interaction designs for EDRs. Second, our study helped reinforce the significance of applying cognitive engineering methods to the design of clinical systems, as other authors [22, 24, 25, 31, 40] have already suggested.

RESEARCH HIGHLIGHTS.

Human-computer interaction problems of EHRs contribute to suboptimal support of clinical work.

Scant literature exists on applying cognitive engineering methods to support system design.

Existing studies examined how and why users interacted with patient records.

The study extended cognitive methods to clinicians’ activities during the patient encounter.

10. SUMMARY TABLE.

What was already known on the topic:

What this study added to our knowledge:

Acknowledgments

This project was supported in part by grant number KL2 RR024154 (Clinical Research Scholar) from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH) and NIH Roadmap for Medical Research and 5K08DE018957 from the National Institute for Dental and Craniofacial Research, a component of NIH. This publication was made possible by the Lilly Endowment, Inc. Physician Scientist Initiative. We gratefully acknowledge the study participants. We acknowledge Dr. Heiko Spallek for assisting with preparing the cases, Dr. James Bost for assisting with conceptualizing the study and analyzing the data and Dr. Andre Kushniruk for his feedback and advice during the conduct of the study. We also acknowledge Ms. Diane Davis for her editorial assistance with this manuscript.

Footnotes

AUTHORS’ CONTRIBUTIONS

Dr. Thankam P. Thyvalikakath conceptualized the study, recruited participants, collected data, conducted analysis, wrote, and finalized the manuscript.

Mr. Michael P. Dziabiak assisted with preliminary coding, cross-checking data results, creating the charts and graphs and reviewed the final manuscript.

Mr. Raymond Johnson assisted with preliminary coding, cross-checking data results and reviewed the final manuscript.

Dr. Miguel Humberto Torres-Urquidy helped to conceptualize the study methods and to collect data.

Dr. Amit Acharya helped to conceptualize the study methods and data analysis of the study.

Dr. Jonathan Yabes conducted data analysis and reviewed the final manuscript.

Dr. Titus K. Schleyer guided conceptualizing the study and data analysis. He also reviewed and finalized the manuscript.

9. CONFLICT OF INTEREST

All authors report no conflict of interest with the study, “Advancing cognitive engineering methods to support user interface design for electronic health records”.

Contributor Information

Thankam P. Thyvalikakath, Department of Restorative Dentistry, Indiana University School of Dentistry, 1121 W Michigan Street, S316, Indianapolis, IN 46202-5186.

Michael P. Dziabiak, Office of Faculty Affairs, School of Dental Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Raymond Johnson, School of Dental Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Miguel Humberto Torres-Urquidy, Department of Biomedical Informatics, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Amit Acharya, Biomedical Informatics Research Center, Marshfield Clinic, Marshfield, Wisconsin.

Jonathan Yabes, Department of Medicine, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Titus K. Schleyer, Center for Biomedical Informatics, Regenstrief Institute, Inc., 410 West 10th Street, Suite 2000, Indianapolis, IN 46202-3012.

References

- 1. Stead WW, Lin HS, editors. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Washington (DC): National Academies Press (US); 2009. 2010/07/28. Strategic Directions.

- 2. Fitzpatrick J, Koh JS. If you build it (right), they will come: the physician-friendly CPOE. Not everything works as planned right out of the box. A Mississippi hospital customizes its electronic order entry system for maximum use by physicians. Health Manag Technol. 2005 Jan;26(1):52–3.

- 3. Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health Aff (Millwood) 2004 Mar-Apr;23(2):116–26. doi: 10.1377/hlthaff.23.2.116.

- 4. Simon SR, Kaushal R, Cleary PD, Jenter CA, Volk LA, Poon EG, et al. Correlates of electronic health record adoption in office practices: a statewide survey. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):110–7. doi: 10.1197/jamia.M2187.

- 5. Adams MJ, Tenney YJ, Pew RW. Situation awareness and the cognitive management of complex systems. Hum Factors. 1995;37:85–104.

- 6. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004 Mar-Apr;11(2):104–12. doi: 10.1197/jamia.M1471.

- 7. Blyth A. Issues arising from medical system’s failure. SIGSOFT Softw Eng Notes. 1997;22(2):85–6.

- 8. Campbell EM, Guappone KP, Sittig DF, Dykstra RH, Ash JS. Computerized provider order entry adoption: implications for clinical workflow. J Gen Intern Med. 2009 Jan;24(1):21–6. doi: 10.1007/s11606-008-0857-9.

- 9. Han YY, Carcillo JA, Venkataraman ST, Clark RS, Watson RS, Nguyen TC, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2005 Dec;116(6):1506–12. doi: 10.1542/peds.2005-1287.

- 10. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA: the journal of the American Medical Association. 2005 Mar 9;293(10):1197–203. doi: 10.1001/jama.293.10.1197.

- 11. Kuperman GJ, Bobb A, Payne TH, Avery AJ, Gandhi TK, Burns G, et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):29–40. doi: 10.1197/jamia.M2170.

- 12. Patel VL, Zhang J, Yoskowitz NA, Green R, Sayan OR. Translational cognition for decision support in critical care environments: a review. J Biomed Inform. 2008 Jun;41(3):413–31. doi: 10.1016/j.jbi.2008.01.013.

- 13. Tang PC, Patel VL. Major issues in user interface design for health professional workstations: summary and recommendations. Int J Biomed Comput. 1994 Jan;34(1–4):139–48. doi: 10.1016/0020-7101(94)90017-5.

- 14. Atkinson JC, Zeller GG, Shah C. Electronic patient records for dental school clinics: more than paperless systems. Journal of dental education. 2002 May;66(5):634–42.

- 15. Hill HK, Stewart DC, Ash JS. Health Information Technology Systems profoundly impact users: a case study in a dental school. Journal of dental education. 2009 Apr;74(4):434–45.

- 16. Schleyer TK, Thyvalikakath TP, Spallek H, Torres-Urquidy MH, Hernandez P, Yuhaniak J. Clinical computing in general dentistry. J Am Med Inform Assoc. 2006 May-Jun;13(3):344–52. doi: 10.1197/jamia.M1990.

- 17. Thyvalikakath TP, Monaco V, Thambuganipalle HB, Schleyer T. A usability evaluation of four commercial dental computer-based patient record systems. Journal of the American Dental Association. 2008 Dec;139(12):1632–42. doi: 10.14219/jada.archive.2008.0105.

- 18. Thyvalikakath TP, Schleyer TK, Monaco V. Heuristic evaluation of clinical functions in four practice management systems: a pilot study. Journal of the American Dental Association. 2007 Feb;138(2):209–10. 12–8. doi: 10.14219/jada.archive.2007.0138.

- 19. Walji MF, Taylor D, Langabeer JR, 2nd, Valenza JA. Factors influencing implementation and outcomes of a dental electronic patient record system. Journal of dental education. 2009 May;73(5):589–600.

- 20. Irwin JY, Torres-Urquidy MH, Schleyer T, Monaco V. A preliminary model of work during initial examination and treatment planning appointments. British dental journal. 2009 Jan 10;206(1):E1. doi: 10.1038/sj.bdj.2008.1151. discussion 24–5.

- 21. Walji MF, Kalenderian E, Tran D, Kookal KK, Nguyen V, Tokede O, et al. Detection and characterization of usability problems in structured data entry interfaces in dentistry. International journal of medical informatics. 2012 Jun 29; doi: 10.1016/j.ijmedinf.2012.05.018.

- 22. Jaspers MW, Steen T, van den Bos C, Geenen M. The think aloud method: a guide to user interface design. International journal of medical informatics. 2004 Nov;73(11–12):781–95. doi: 10.1016/j.ijmedinf.2004.08.003.

- 23. Wachter SB, Agutter J, Syroid N, Drews F, Weinger MB, Westenskow D. The employment of an iterative design process to develop a pulmonary graphical display. J Am Med Inform Assoc. 2003 Jul-Aug;10(4):363–72. doi: 10.1197/jamia.M1207.

- 24. Patel VL, Kushniruk AW. Interface design for health care environments: the role of cognitive science. Proc AMIA Symp. 1998:29–37.

- 25. Kushniruk AW, Patel VL. Cognitive evaluation of decision making processes and assessment of information technology in medicine. International journal of medical informatics. 1998 Aug-Sep;51(2–3):83–90. doi: 10.1016/s1386-5056(98)00106-3.

- 26. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004 Feb;37(1):56–76. doi: 10.1016/j.jbi.2004.01.003.

- 27. Patel VL, Arocha JF, Diermeier M, Greenes RA, Shortliffe EH. Methods of cognitive analysis to support the design and evaluation of biomedical systems: the case of clinical practice guidelines. J Biomed Inform. 2001 Feb;34(1):52–66. doi: 10.1006/jbin.2001.1002.

- 28. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer-assisted clinical ordering. J Biomed Inform. 2003 Feb-Apr;36(1–2):4–22. doi: 10.1016/s1532-0464(03)00062-5.

- 29. Kushniruk AW, Patel VL, Marley AA. Small worlds and medical expertise: implications for medical cognition and knowledge engineering. International journal of medical informatics. 1998 May;49(3):255–71. doi: 10.1016/s1386-5056(98)00044-6.

- 30. Fafchamps D, Young CY, Tang PC. Modelling work practices: input to the design of a physician’s workstation. Proc Annu Symp Comput Appl Med Care. 1991:788–92.

- 31. Nygren E, Wyatt JC, Wright P. Helping clinicians to find data and avoid delays. Lancet. 1998 Oct 31;352(9138):1462–6. doi: 10.1016/S0140-6736(97)08307-4.

- 32. Sharda P, Das AK, Cohen TA, Patel V. Customizing clinical narratives for the electronic medical record interface using cognitive methods. International journal of medical informatics. 2006 May;75(5):346–68. doi: 10.1016/j.ijmedinf.2005.07.027.

- 33. Jalote-Parmar A, Badke-Schaub P, Ali W, Samset E. Cognitive processes as integrative component for developing expert decision-making systems: a workflow centered framework. J Biomed Inform. 2010 Feb;43(1):60–74. doi: 10.1016/j.jbi.2009.07.001.

- 34. Johnson CM, Turley JP. The significance of cognitive modeling in building healthcare interfaces. International journal of medical informatics. 2006 Feb;75(2):163–72. doi: 10.1016/j.ijmedinf.2005.06.003.

- 35. Zhang J, Patel V, Johnson KA, Malin J, Smith JW. Designing Human-Centered Distributed Information Systems. IEEE Intelligent Systems. 2002;17(5):42–6.

- 36. Rinkus S, Johnson-Throop KA, Zhang J, editors. AMIA Annual Symposium proceedings / AMIA Symposium AMIA Symposium. 2003. Designing a knowledge management system for distributed activities: a human centered approach; p. 1479896.

- 37. Rinkus S, Walji M, Johnson-Throop KA, Malin JT, Turley JP, Smith JW, et al. Human-centered design of a distributed knowledge management system. J Biomed Inform. 2005 Feb;38(1):4–17. doi: 10.1016/j.jbi.2004.11.014.

- 38. Zheng K, Padman R, Johnson MP, Diamond HS. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc. 2009 Mar-Apr;16(2):228–37. doi: 10.1197/jamia.M2852.

- 39. Patel VL, Kushniruk AW, Yang S, Yale JF. Impact of a computer-based patient record system on data collection, knowledge organization, and reasoning. J Am Med Inform Assoc. 2000 Nov-Dec;7(6):569–85. doi: 10.1136/jamia.2000.0070569.

- 40. Nygren E, Henriksson P. Reading the medical record. I. Analysis of physicians’ ways of reading the medical record. Comput Methods Programs Biomed. 1992 Sep-Oct;39(1–2):1–12. doi: 10.1016/0169-2607(92)90053-a.

- 41. Nygren E, Johnson M, Henriksson P. Reading the medical record. II. Design of a human-computer interface for basic reading of computerized medical records. Comput Methods Programs Biomed. 1992 Sep-Oct;39(1–2):13–25. doi: 10.1016/0169-2607(92)90054-b.

- 42. Cusack CM, Hripcsak G, Bloomrosen M, Rosenbloom ST, Weaver CA, Wright A, et al. The future state of clinical data capture and documentation: a report from AMIA’s 2011 Policy Meeting. J Am Med Inform Assoc. 2013 Sep 8;20(1):134–40. doi: 10.1136/amiajnl-2012-001093.

- 43. Hripcsak G, Albers DJ. Next-generation phenotyping of electronic health records. J Am Med Inform Assoc. 2013 Sep 30;20(1):117–21. doi: 10.1136/amiajnl-2012-001145.

- 44. Weir CR, Nebeker JJ, Hicken BL, Campo R, Drews F, Lebar B. A cognitive task analysis of information management strategies in a computerized provider order entry environment. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):65–75. doi: 10.1197/jamia.M2231.

- 45. Crowley RS, Naus GJ, Stewart J, 3rd, Friedman CP. Development of visual diagnostic expertise in pathology -- an information-processing study. J Am Med Inform Assoc. 2003 Jan-Feb;10(1):39–51. doi: 10.1197/jamia.M1123.

- 46. Stefanac SJ, Nesbit SP. Treatment Planning in Dentistry. 2. St. Louis, Mo: Mosby; 2006.

- 47. Schleyer T, Spallek H, Hernandez P. A qualitative investigation of the content of dental paper-based and computer-based patient record formats. J Am Med Inform Assoc. 2007 Jul-Aug;14(4):515–26. doi: 10.1197/jamia.M2335.