Summary

The current study demonstrates, for the first time, a specific enhancement of auditory spatial cue discrimination due to eye gaze. Whereas the region of sharpest visual acuity, called the fovea, can be directed at will by moving one's eyes, auditory spatial information is primarily derived from head-related acoustic cues. Past auditory studies have found better discrimination in front of the head [1–3], but have not manipulated subjects' gaze, thus overlooking potential oculomotor influences. Electrophysiological studies have shown that the inferior colliculus (IC), a critical auditory midbrain nucleus, shows visual and oculomotor responses [4–6] and modulations of auditory activity [7–9], and auditory neurons in the superior colliculus (SC) show shifting receptive fields [10–13]. How the auditory system leverages this crossmodal information at the behavioral level remains unknown. Here, we directed subjects' gaze (with an eccentric dot) or auditory attention (with lateralized noise) while they performed an auditory spatial cue discrimination task. We found that directing gaze toward a sound significantly enhances discrimination of both interaural level and time differences, whereas directing auditory spatial attention does not. These results show that oculomotor information variably enhances auditory spatial resolution even when the head remains stationary, revealing a distinct behavioral benefit possibly arising from auditory-oculomotor interactions at an earlier level of processing than previously demonstrated.

Results

When making judgments about an object, we generally rely on the most informative sensory cues available [14, 15]. For visible objects, the eyes are more spatially reliable than the ears. As a result, auditory localization is strongly biased by a coincident visual stimulus [16]. Additionally, gazing toward a visual stimulus biases sound localization away from the direction of gaze over short time periods [17, 18], and toward it over longer ones [19], suggesting multiple mechanisms by which eye position influences auditory localization. These studies, however, have focused on absolute tasks (locating a sound) instead of relative ones (discriminating two sounds' locations), and do not measure acuity. The observed oculomotor-based realignments of auditory localization behavior could reasonably emerge at any stage of processing from brainstem to cortex. However, performance on relative spatial discrimination tasks has been linked to the acuity of midbrain spatial receptive fields in owls [20, 21]. Thus, gaze-driven improvements in auditory spatial cue discrimination could be linked to oculomotor modulation of subcortical coding of these cues observed in a number of studies [7, 9–13].

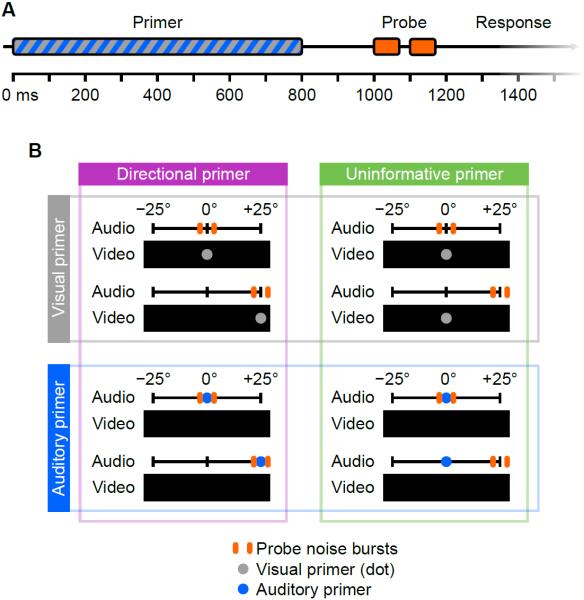

Here we determine whether directing gaze to an auditory target increases behavioral sensitivity to binaural cues in that direction. We presented 16 subjects (11 female; age 23.9 ± 3.1 years, mean ± SD; thresholds ≤20 dB HL at octave frequencies 250–8000 Hz) with trials consisting of an auditory or visual primer followed by a probe comprising two brief noise bursts at slightly different perceived positions (i.e., differing binaural cue values; Figure 1A), in a two-alternative forced-choice task. Sounds were presented via insert earphones. Subjects reported whether the second probe noise burst was to the right or left of the first (i.e., discriminated their relative positions). One subject was removed due to abnormal binaural perception, yielding N=15.

Figure 1.

Stimulus presentation and blocking.

(A) The time course of a trial. In visual trials, the dot brightened on fixation and darkened after 800 ms; in auditory trials the primer was a noise burst. The probe noise bursts lasted 70 ms each with 30 ms between them. The subject responded by button press any time after the stimulus. Primers provided the same timing information whether visual or auditory, directional or uninformative.

(B) Experimental blocks are shown one per quadrant. Each quadrant shows an example of a center trial above a side trial. The positions of the visual or auditory primers, where present, are shown as gray and blue dots respectively. In auditory trials, subjects were presented with a black screen and not instructed where to direct their eyes. The probe noise bursts are shown as orange bars of different lateralizations centered about the primer. See also Figure S1 for example eye gaze traces.

Typically, interaural level differences (ILDs) are more informative regarding azimuth at frequencies above approximately 3 kHz and interaural time differences (ITDs) below about 1.5 kHz. ILDs and ITDs are extracted in separate auditory brainstem nuclei, the lateral and medial superior olive (LSO and MSO), respectively. Because oculomotor activity may influence these parallel pathways differently, we manipulated the cues independently, using octave-wide noise bursts in the relevant high (centered at 4 kHz) or low (500 Hz) frequency ranges. This created a lateralized percept, but stimuli were generally not perceived to be externalized as they would have been using free-field presentation. Since ILD and ITD were separately manipulated (alternate cue set to 0), some subjects may have perceived conflicting cues. This possibility was mitigated by filtering the stimuli to the relevant frequency ranges; further, any potential impact on discrimination was taken into account by the initial calibration for each subject (see below).
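As a minimal sketch of how a single noise burst could be lateralized by one binaural cue while the other is held at 0, the NumPy snippet below applies an ILD as a symmetric gain split across the ears and an ITD as a whole-waveform delay of the lagging ear. The sampling rate, the function names, the sign convention (positive values favor the right ear), and the example cue values are assumptions made for illustration; this is not the authors' stimulus code.

```python
import numpy as np

FS = 48000  # sampling rate in Hz (an assumption; the text does not state it)

def apply_ild(mono, ild_db):
    """Return (left, right) with the right ear ild_db dB more intense than the left."""
    half_gain = 10.0 ** (ild_db / 40.0)  # +ILD/2 dB in one ear, -ILD/2 dB in the other
    return mono / half_gain, mono * half_gain

def delay(signal, delay_s, fs=FS):
    """Delay a signal by delay_s >= 0 seconds using a linear phase shift.

    Zero-padding to twice the length keeps the circular shift from wrapping
    into the returned samples; the tail pushed past the end is truncated.
    """
    n = len(signal)
    spec = np.fft.rfft(signal, 2 * n)
    freqs = np.fft.rfftfreq(2 * n, 1.0 / fs)
    shifted = np.fft.irfft(spec * np.exp(-2j * np.pi * freqs * delay_s), 2 * n)
    return shifted[:n]

def apply_itd(mono, itd_s, fs=FS):
    """Return (left, right); a positive itd_s makes the right ear lead."""
    left = delay(mono, max(itd_s, 0.0), fs)    # left lags when itd_s > 0
    right = delay(mono, max(-itd_s, 0.0), fs)  # right lags when itd_s < 0
    return left, right

rng = np.random.default_rng(0)
burst = rng.standard_normal(int(round(0.070 * FS)))  # 70 ms placeholder noise burst

# Illustrative cue values only; in the study each cue was calibrated per subject.
left_ild, right_ild = apply_ild(burst, 12.0)    # ILD in dB, ITD left at 0
left_itd, right_itd = apply_itd(burst, 231e-6)  # ITD in seconds, ILD left at 0
```

Because the delay is applied to the whole waveform, it shifts both envelope and fine structure. The phase-shift approach also allows delays that are not a whole number of samples.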

The ILD or ITD was set for each subject so that sounds were perceived to be centered (0° azimuth midpoint) or to the side (±25° midpoint), with offsets of 12.0±5.0 dB for ILD and 231±89 μs for ITD. The excluded subject gave unnaturally large ILD and ITD offsets (42 dB, 1157 μs). ILD and ITD discrimination thresholds were determined for each subject (5.9±1.3 dB, 217±70 μs) at their measured offset; performance was tested at these values thereafter. These thresholds are larger than in previous studies, which may result from differing stimulus parameters or because subjects were inexperienced in interaural discrimination tasks [22].

Each experimental block employed either visual or auditory primers lasting 800 ms followed by a 200 ms pause before the probe started. Visual primers were a white fixation dot subtending 0.85° (turning gray after 800 ms to allow maintained fixation). Auditory primers were noise lateralized by the complementary binaural cue in the complementary frequency band (e.g., high-frequency ILD primer preceded low-frequency ITD probe), to test for effects of directed auditory attention. Primers were either directional, indicating the midpoint lateralization of the impending probe, or uninformative, occurring in the center regardless of the probe's position. These variables resulted in four experimental blocks (Figure 1B). Fixation on visual primers was confirmed with eye tracking before each trial (Figure S1 and Supplementary Experimental Procedures). The probe comprised two noise bursts lasting 70 ms each, with 30 ms between them. Subjects' gaze was not controlled in auditory trials, and they gazed near the center in the vast majority of trials.

Using four-factor within-subjects ANOVA, we found significant effects of probe position (F(1,14)=96.0, p=1.2×10⁻⁷), binaural cue type (F(1,14)=6.15, p=0.026), and an interaction between the two (F(1,14)=13.7, p=0.0024). Specifically, performance was better for center than for side probes (Figure 2), as expected [1–3]. Primer informativeness was significant (F(1,14)=4.75, p=0.047), but its interaction with primer modality was much more so (F(1,14)=20.5, p=0.00048), reflecting the fact that only directional visual primers improved performance. No other factors or interactions were significant. All statistics were performed on arcsine-transformed data.

Figure 2.

Directing eye gaze improves spatial cue discrimination.

(A and B) For ILD (A) and ITD (B) stimuli, the subject performance is shown for all conditions. Center performance was better than side performance. For ILD, performance was better in visual directional trials than in visual uninformative trials at both the center and side positions. For ITD, directional visual trials showed improved discrimination when the stimulus was located on the side. Auditory primers offered no benefit. Error bars indicate ±1 SEM (across the 15 intra-subject means). Asterisks indicate one-tailed paired t test significance: * p<0.00625, ** p<0.00125 (Bonferroni-corrected values of 0.05 and 0.01, respectively). Effect size (within-subjects Cohen's d) of Directional – Uninformative contrast is bold where >0.5. Arcsine-transformed values were used for t tests and effect sizes; the means and error bars plotted are based on raw percent correct scores.
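The statistical quantities named in this caption can be sketched as follows. The snippet assumes the common arcsin(√p) form of the arcsine transform and the d_z form of within-subjects Cohen's d (mean paired difference divided by the SD of the differences); the text specifies neither variant. The corrected thresholds imply a family of 8 comparisons (0.05/0.00625), which is assumed here to be 2 cues × 2 probe positions × 2 primer modalities. The proportions are made-up placeholders, not data from the study.

```python
import math
import statistics

def arcsine_transform(p_correct):
    """Variance-stabilizing transform for a proportion in [0, 1] (assumed variant)."""
    return math.asin(math.sqrt(p_correct))

def paired_t_and_d(directional, uninformative):
    """Paired t statistic and within-subjects Cohen's d (d_z) on transformed scores."""
    diffs = [arcsine_transform(a) - arcsine_transform(b)
             for a, b in zip(directional, uninformative)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 in the denominator)
    t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t_stat, mean_diff / sd_diff

N_COMPARISONS = 8                      # implied by 0.05 / 0.00625 (family is assumed)
alpha_star = 0.05 / N_COMPARISONS      # threshold for *
alpha_2star = 0.01 / N_COMPARISONS     # threshold for **

# Hypothetical proportions correct for five subjects (illustration only)
directional = [0.80, 0.75, 0.85, 0.70, 0.78]
uninformative = [0.72, 0.70, 0.80, 0.68, 0.70]
t_stat, d_z = paired_t_and_d(directional, uninformative)
print(f"alpha*={alpha_star}, alpha**={alpha_2star}, t={t_stat:.2f}, d_z={d_z:.2f}")
```

A one-tailed p value would then be read from the t distribution with n − 1 degrees of freedom and compared against the corrected thresholds.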

The biggest improvement was due to gazing toward a side probe. Subjects benefitted from informative visual primers on both ILD (p=0.00083 one-tailed paired t test, Bonferroni-corrected α=0.00625; Figures 2A,3A Visual) and ITD trials (p=0.0044; Figures 2B,3B Visual). These results show that gazing toward an off-center stimulus enhances binaural discrimination, but could this come simply from knowing where to listen? Using an auditory primer instead of a visual one (meaning listeners were spatially primed but not directing gaze) provided no improvements (p=0.53 ILD, p=0.41 ITD). This result is surprising given previous experiments have shown intelligibility benefits of knowing where to listen [23]. Those gains may come from facilitated selective attention, likely a cortical process [24]. The present results are consistent with a gaze-directed refinement of subcortical binaural cue coding.

Figure 3.

Most subjects show improved discrimination from directing eye gaze.

Directional primer performance is plotted against uninformative primer performance for each subject (N=15). Panels are arranged and labeled as in Figure 2. Blue x's show individual subject mean performance on center probe trials and orange o's show performance on side probe trials. Centroids and SEM in each direction are shown as black crosses overlaying colored ellipses whose radii are also defined by the SEM. Points in the upper-left half of the plot show an improvement of the directional primer over the uninformative one, points on the gray diagonal show no effect, and points below (in the lower-right half) show a performance detriment. Note that in all four panels, center probe points and centroids (blue) are higher than side probe points and centroids (orange), indicating that there was a marked improvement in discrimination of ILD and ITD in the center compared to the side.

(A) For ILD stimuli, most subjects show an improvement of the directional visual primer for both side and center probes, particularly for side probes. For auditory primers, performance is scattered evenly above and below, leading to centroids on the diagonal, showing no effect of a directional primer.

(B) Results are similar for ITD stimuli, though not significant for center probe trials.

Within one experimental block, all trials had either directional or uninformative primers. In trials with a central probe, informative visual primers improved discrimination for ILD probes (p=0.0051). Auditory primers offered no benefit (p≈0.9 for both ILD and ITD). Thus, while only knowing where to listen is not enough to improve discrimination, it does appear to affect whether a centered visual stimulus improves performance, at least for ILD-lateralized stimuli.

Discussion

Gaze-mediated modulations of auditory spatial processing have often been discussed in the context of bringing auditory information (innately head-centric) and visual information (innately eye-centric) into a common reference frame [9–12, 25]. However, as in vision, dynamically directing the region of highest auditory acuity (even if that region is broad, unlike the visual fovea) also likely has important behavioral benefits. When a listener attends to one speech stream while suppressing others, spatial separation between these sources increases intelligibility, an effect known as spatial release from masking that improves with increasing separations [26]. Functionally speaking, improving spatial cue discrimination could effectively increase the perceptual separation (by decreasing the spatial ambiguity) of two sources that are physically close together. Studies of concurrent minimum audible angles (in which probe sounds were presented at the same time, rather than sequentially) [27, 28], of the spatial acuity with which streams can be segregated [3], and of the angles by which two sources need to be separated to be perceived distinctly [29] all suggest that there is indeed room for improvement when segregating lateral sound sources.

Over short periods of directed gaze, like those used here, shifts in the apparent sound-source location away from the direction of gaze have been demonstrated behaviorally [17, 18]. Unlike changes in acuity, such biases in perceived location could conceivably emerge at any level of processing, including in the cortex. However, it is worth speculating whether there is a physiological mechanism that could explain such shifts together with the discrimination enhancements of the current study (Figure 4A,C centered gaze, Figure 4B,D eccentric gaze).

Figure 4.

Behavioral results are consistent with shifting spatial receptive fields.

(A) When gaze is centered there is some ambiguity in the difference between eccentric sources (blurred orange circles), and a centered sound is perceived correctly from the center (blue circle).

(B) When gaze is to the side, discrimination between eccentric sounds' positions increases (blur is reduced for orange circles), and the centered sound source is perceived as shifted in the direction opposite gaze (blue circle moved right).

(C) The unbiased spatial receptive field, plotting spike rate of a binaural neuron versus azimuth. Here, true azimuth and perceived azimuth are in alignment.

(D) The receptive field and perceptual azimuth axis have been shifted in the direction of gaze (left). This shift has two effects: 1) a bigger difference between the neural firing rates from the eccentric targets (see orange bars on vertical axis), improving discrimination; and 2) the centered sound being right of center on the perceptual azimuth axis. The shift has been exaggerated for illustrative purposes.

Such a mechanism may arise from the fact that auditory brainstem spatial receptive fields are typically nonlinear, with many showing a transition zone between low and high spike rates where the slope is steepest. The best coding resolution, i.e., the most information, is found in this steeper region [30]. Intensity and ITD response functions have been shown to shift as a result of adapting to stimulus statistics [31, 32]. Such shifts of nonlinear response functions change the operating point, resulting in larger differences in neural firing rates. Shifting rate-azimuth curves in the direction of eye gaze (Figure 4C,D orange sound sources) is one mechanism that would allow the sound sources' locations to be better distinguished, improving discrimination (Figure 4B orange circles more punctate than in A). Moving the receptive field in this manner would predict that localization estimates would move opposite gaze direction to some degree, as has been observed [17, 18] (Figure 4, blue sources). For example, gazing leftward would shift the receptive field to the left, resulting in 1) better discrimination of the left-lateralized sounds, as well as 2) a rightward shift in the centered sound source's perceived azimuth (Figure 4D, blue arrow meeting perceived azimuth axis right of center). This shifting receptive field would not explain long-term localization biases in the same direction as gaze [19], but further investigation with varied timing may reconcile the present results with these. Further, behavioral work has shown that gaze's interaction with sound localization is frequency-dependent, implicating centers of the brain with tonotopic organization such as those in the ascending auditory pathway [33].
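The two predicted consequences of a shifted rate-azimuth curve can be illustrated with a toy model. The sketch below assumes a single logistic rate-azimuth function, hypothetical probe azimuths, a deliberately exaggerated shift, and a readout that still interprets firing rates with the unshifted curve. All of these are illustrative assumptions rather than a model fitted to any data.

```python
import math

def rate(azimuth_deg, center_deg=0.0, width_deg=10.0, r_max=100.0):
    """Logistic rate-azimuth curve in spikes/s; negative azimuth is to the left."""
    return r_max / (1.0 + math.exp(-(azimuth_deg - center_deg) / width_deg))

def decode(rate_sps, center_deg=0.0, width_deg=10.0, r_max=100.0):
    """Invert the curve: the azimuth that yields rate_sps for a given curve center."""
    return center_deg + width_deg * math.log(rate_sps / (r_max - rate_sps))

SHIFT = -25.0              # leftward shift of the curve under leftward gaze (exaggerated)
TARGETS = (-30.0, -20.0)   # two left-lateralized probe azimuths (hypothetical)

# 1) Difference in firing rate between the two probes, before and after the shift
diff_centered = rate(TARGETS[1]) - rate(TARGETS[0])
diff_shifted = rate(TARGETS[1], SHIFT) - rate(TARGETS[0], SHIFT)

# 2) Perceived azimuth of a centered source when the readout assumes the unshifted curve
perceived_center = decode(rate(0.0, SHIFT))

print(f"rate difference, centered gaze: {diff_centered:.1f} spikes/s")
print(f"rate difference, leftward gaze: {diff_shifted:.1f} spikes/s")
print(f"perceived azimuth of a 0 deg source: {perceived_center:+.1f} deg")
```

With these parameters the shift moves the steep part of the curve onto the probes, so the rate difference grows from roughly 7 to roughly 24 spikes/s, while the centered source is read out to the right of center, opposite the direction of gaze.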

Gaze's effect on ILD discrimination may stem from such mechanisms. There is evidence of gaze-mediated shifts of auditory spatial receptive fields in superior colliculus (SC) and its avian analog [11–13]. The SC is the principal midbrain nucleus involved in executing saccadic eye movements, and is thought to integrate spatial information across modalities [34]. It contains spatially tuned auditory neurons that typically respond to high frequencies, and are generally more sensitive to ILDs [35, 36]. It is not clear if these spatial receptive field shifts originate in SC, or if they emerge in a previous level of processing, such as the external and pericentral nuclei of the IC and the nucleus of the brachium of the IC which project to SC [35, 37] or the superior olivary complex (which projects to IC [38]). In IC, gaze interacts in a complex way with auditory responses producing a representation that is neither fully head- nor eye-centered, but can be used to compute an eye-centric representation [7, 9]. Combinations of these responses, especially of units tuned to opposite azimuths, could result in shifting receptive fields downstream, which could in turn help explain the improvements seen here in ILD discrimination for eccentric probes.

For ITD, a possible physiological mechanism for the improvements in discrimination may lie in the MSO, where ITD cues are calculated. Recordings in the MSO show that rate-ITD curves often peak outside the physiological range, with their region of maximal slope covering the relevant range [39]. Inhibition plays a role in ITD processing in the MSO [39], and while the details are debated, modulating this inhibition could shift ITD receptive fields [40]. It is conceivable that this modulatory signal could originate from non-auditory regions of the brainstem, midbrain, or cortex, though this is yet to be demonstrated. While not related to gaze, adaptive changes to ITD response functions observed in IC (which receives MSO inputs [38]) indicate that such modulations are at least feasible [31].

What is driving these modulations of discrimination performance? The cortical network controlling visual attention is well studied [41], and the frontal eye fields region (FEF), an important node in this network, has strong connections to SC [42] as well as to the auditory cortex (AC) [43]. FEF is also important to auditory attention [44], and studies have shown sharpening of auditory spatial receptive fields by microstimulation in the avian FEF analog [13], suggesting a role in supramodal attention. Though AC is likely important to selective auditory attention [24], electrophysiological work has shown only weak effects of gaze in AC [7] compared to stronger modulations in IC [6, 9]. We posit that the improvement in discrimination observed here when directing gaze but not auditory attention alone results from modulation of subcortical auditory activity by the oculomotor, and even possibly the visual attentive system [45], independent of auditory attention.

Here we observed an enhancement in auditory spatial cue discrimination when gazing toward an auditory stimulus lateralized by manipulating either ILD or ITD. Crucially, discrimination saw no improvement in any experimental conditions where location was cued acoustically (leaving gaze undirected), demonstrating that simply knowing where to listen is not enough to improve discrimination, and that the oculomotor system is a necessary part of the observed enhancements. ILD discrimination also improved when the subject gazed toward a centered visual primer and knew the auditory probe would also be centered, suggesting that attention may affect gaze's impact on auditory spatial perception. Taken together, the results of this study are consistent with interaction of the oculomotor system with subcortical binaural processing pathways benefitting human spatial hearing.

Experimental Procedures

Methods were approved by the University of Washington Institutional Review Board.

Acoustic stimuli were ramped on/off by a 10 ms cos² envelope. Filtering was performed as frequency domain multiplication, yielding negligible energy outside passbands. There were 40 trials per data point per subject.
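A band-limited, ramped burst of this kind might be generated along the following lines. This is a sketch under stated assumptions: the sampling rate, the geometric placement of the octave band around its center (center/√2 to center·√2), the brick-wall filter shape, and the function name are not given in the text.

```python
import numpy as np

FS = 48000  # sampling rate in Hz (assumed)

def octave_noise_burst(center_hz, dur_s=0.070, ramp_s=0.010, fs=FS, seed=0):
    """Octave-wide Gaussian noise burst with squared-cosine onset/offset ramps."""
    rng = np.random.default_rng(seed)
    n = int(round(dur_s * fs))
    noise = rng.standard_normal(n)

    # Band-pass by zeroing FFT bins outside the passband (frequency-domain multiplication)
    spec = np.fft.rfft(noise)
    freqs = np.fft.rfftfreq(n, 1.0 / fs)
    lo, hi = center_hz / np.sqrt(2.0), center_hz * np.sqrt(2.0)
    spec[(freqs < lo) | (freqs > hi)] = 0.0
    burst = np.fft.irfft(spec, n)

    # Raised-cosine (cos^2-shaped) ramps: rise from 0 to 1, mirrored at the offset
    n_ramp = int(round(ramp_s * fs))
    ramp = np.sin(0.5 * np.pi * np.arange(n_ramp) / n_ramp) ** 2
    envelope = np.ones(n)
    envelope[:n_ramp] = ramp
    envelope[-n_ramp:] = ramp[::-1]
    return burst * envelope

high_burst = octave_noise_burst(4000.0)  # band used for ILD probes
low_burst = octave_noise_burst(500.0)    # band used for ITD probes
```

Applying the ramps after filtering spreads a small amount of energy just beyond the band edges. A 10 ms ramp keeps that spread narrow relative to an octave-wide passband.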

Individual ILD and ITD offsets were determined for each subject by aligning repeating acoustic noise bursts with a visual fixation dot at ±25° several times and averaging those estimates. We used a weighted up-down adaptive track to measure ILD and ITD discrimination at 75% performance at the center-gaze, side-probe condition, and these values separated probe bursts for the entire experiment. ITDs were applied to both envelope and fine structure.
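For readers unfamiliar with weighted up-down tracking, the sketch below shows the general idea: the track converges on a target proportion correct p when the step down after a correct response and the step up after an error stand in the ratio (1 − p) : p, which is 1 : 3 for 75%. The step size, starting level, number of trials, and the simulated logistic observer are all hypothetical. The long run is used only to make the convergence visible and does not reflect the track length used in the study.

```python
import math
import random

TARGET = 0.75
STEP_DOWN = 0.2                                # dB decrease after a correct response (assumed)
STEP_UP = STEP_DOWN * TARGET / (1.0 - TARGET)  # three times larger increase after an error

def p_correct(delta_db, threshold_db=6.0, slope_db=1.0):
    """Hypothetical 2AFC observer: 50% at chance, 75% correct at threshold_db."""
    return 0.5 + 0.5 / (1.0 + math.exp(-(delta_db - threshold_db) / slope_db))

def run_track(n_trials=2000, start_db=12.0, seed=1):
    """Simulate one weighted up-down track and return the level on every trial."""
    rng = random.Random(seed)
    level, levels = start_db, []
    for _ in range(n_trials):
        levels.append(level)
        if rng.random() < p_correct(level):
            level = max(level - STEP_DOWN, 0.0)  # correct: make the task harder
        else:
            level += STEP_UP                     # error: make the task easier
    return levels

levels = run_track()
settled = levels[500:]                 # discard the initial descent toward threshold
estimate = sum(settled) / len(settled)
print(f"estimated 75%-correct separation: {estimate:.2f} dB")
```

At equilibrium the expected movement per trial is zero, p·STEP_DOWN = (1 − p)·STEP_UP, which is why the track hovers around the 75% point of the simulated psychometric function.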

Supplementary Material

Highlights

Listeners' eye gaze was manipulated in an auditory spatial cue discrimination task

Gazing toward a sound improves auditory spatial cue discrimination

Just knowing where to listen does not account for the observed behavioral benefit

Results are consistent with oculomotor modulations of subcortical auditory activity

Acknowledgments

Thanks to Mihwa Kim for assistance with data collection and Eric Larson and Jennifer Thornton for comments. This work was funded by NIH grants R00DC010196, R01DC013260 (AKCL), T32DC005361 (RKM).

References

- 1. Mills AW. On the Minimum Audible Angle. J. Acoust. Soc. Am. 1958;30:237–246.

- 2. Hafter ER, De Maio J. Difference thresholds for interaural delay. J. Acoust. Soc. Am. 1975;57:181–187. doi: 10.1121/1.380412.

- 3. Middlebrooks JC, Onsan ZA. Stream segregation with high spatial acuity. J. Acoust. Soc. Am. 2012;132:3896–3911. doi: 10.1121/1.4764879.

- 4. Porter KK, Metzger RR, Groh JM. Visual- and saccade-related signals in the primate inferior colliculus. Proc. Natl. Acad. Sci. 2007;104:17855–17860. doi: 10.1073/pnas.0706249104.

- 5. Bulkin DA, Groh JM. Distribution of visual and saccade related information in the monkey inferior colliculus. Front. Neural Circuits. 2012;6:61. doi: 10.3389/fncir.2012.00061.

- 6. Bulkin DA, Groh JM. Distribution of eye position information in the monkey inferior colliculus. J. Neurophysiol. 2012;107:785–795. doi: 10.1152/jn.00662.2011.

- 7. Maier JX, Groh JM. Comparison of gain-like properties of eye position signals in inferior colliculus versus auditory cortex of primates. Front. Integr. Neurosci. 2010;4:121. doi: 10.3389/fnint.2010.00121.

- 8. Bergan JF, Knudsen EI. Visual Modulation of Auditory Responses in the Owl Inferior Colliculus. J. Neurophysiol. 2009;101:2924–2933. doi: 10.1152/jn.91313.2008.

- 9. Groh JM, Trause AS, Underhill AM, Clark KR, Inati S. Eye Position Influences Auditory Responses in Primate Inferior Colliculus. Neuron. 2001;29:509–518. doi: 10.1016/s0896-6273(01)00222-7.

- 10. Jay MF, Sparks DL. Auditory receptive fields in primate superior colliculus shift with changes in eye position. Nature. 1984;309:345–347. doi: 10.1038/309345a0.

- 11. Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J. Neurophysiol. 1987;57:35–55. doi: 10.1152/jn.1987.57.1.35.

- 12. Populin LC, Tollin DJ, Yin TCT. Effect of eye position on saccades and neuronal responses to acoustic stimuli in the superior colliculus of the behaving cat. J. Neurophysiol. 2004;92:2151–2167. doi: 10.1152/jn.00453.2004.

- 13. Winkowski DE, Knudsen EI. Top-down gain control of the auditory space map by gaze control circuitry in the barn owl. Nature. 2006;439:336–339. doi: 10.1038/nature04411.

- 14. Talsma D, Senkowski D, Soto-Faraco S, Woldorff MG. The multifaceted interplay between attention and multisensory integration. Trends Cogn. Sci. 2010;14:400–410. doi: 10.1016/j.tics.2010.06.008.

- 15. Whitchurch EA, Takahashi TT. Combined auditory and visual stimuli facilitate head saccades in the barn owl (Tyto alba). J. Neurophysiol. 2006;96:730–745. doi: 10.1152/jn.00072.2006.

- 16. Recanzone GH. Interactions of auditory and visual stimuli in space and time. Hear. Res. 2009;258:89–99. doi: 10.1016/j.heares.2009.04.009.

- 17. Lewald J. The effect of gaze eccentricity on perceived sound direction and its relation to visual localization. Hear. Res. 1998;115:206–216. doi: 10.1016/s0378-5955(97)00190-1.

- 18. Lewald J, Ehrenstein WH. The effect of eye position on auditory lateralization. Exp. Brain Res. Exp. Hirnforsch. Expérimentation Cérébrale. 1996;108:473–485. doi: 10.1007/BF00227270.

- 19. Razavi B, O'Neill WE, Paige GD. Auditory spatial perception dynamically realigns with changing eye position. J. Neurosci. 2007;27:10249–10258. doi: 10.1523/JNEUROSCI.0938-07.2007.

- 20. Bala ADS, Spitzer MW, Takahashi TT. Prediction of auditory spatial acuity from neural images on the owl's auditory space map. Nature. 2003;424:771–774. doi: 10.1038/nature01835.

- 21. Bala ADS, Spitzer MW, Takahashi TT. Auditory Spatial Acuity Approximates the Resolving Power of Space-Specific Neurons. PLoS ONE. 2007;2:e675. doi: 10.1371/journal.pone.0000675.

- 22. Wright BA, Fitzgerald MB. Different patterns of human discrimination learning for two interaural cues to sound-source location. Proc. Natl. Acad. Sci. 2001;98:12307–12312. doi: 10.1073/pnas.211220498.

- 23. Best V, Ozmeral EJ, Shinn-Cunningham BG. Visually-guided Attention Enhances Target Identification in a Complex Auditory Scene. J. Assoc. Res. Otolaryngol. 2007;8:294–304. doi: 10.1007/s10162-007-0073-z.

- 24. Mesgarani N, Chang EF. Selective cortical representation of attended speaker in multi-talker speech perception. Nature. 2012;485:233–236. doi: 10.1038/nature11020.

- 25. Lee J, Groh JM. Auditory signals evolve from hybrid- to eye-centered coordinates in the primate superior colliculus. J. Neurophysiol. 2012;108:227–242. doi: 10.1152/jn.00706.2011.

- 26. Marrone N, Mason CR, Kidd G. Tuning in the spatial dimension: Evidence from a masked speech identification task. J. Acoust. Soc. Am. 2008;124:1146–1158. doi: 10.1121/1.2945710.

- 27. Perrott DR, Saberi K. Minimum audible angle thresholds for sources varying in both elevation and azimuth. J. Acoust. Soc. Am. 1990;87:1728–1731. doi: 10.1121/1.399421.

- 28. Divenyi PL, Oliver SK. Resolution of steady-state sounds in simulated auditory space. J. Acoust. Soc. Am. 1989;85:2042–2052.

- 29. Best V, Schaik A. van, Carlile S. Separation of concurrent broadband sound sources by human listeners. J. Acoust. Soc. Am. 2004;115:324–336. doi: 10.1121/1.1632484.

- 30. Harper NS, McAlpine D. Optimal neural population coding of an auditory spatial cue. Nature. 2004;430:682–686. doi: 10.1038/nature02768.

- 31. Maier JK, Hehrmann P, Harper NS, Klump GM, Pressnitzer D, McAlpine D. Adaptive coding is constrained to midline locations in a spatial listening task. J. Neurophysiol. 2012;108:1856–1868. doi: 10.1152/jn.00652.2011.

- 32. Dean I, Robinson BL, Harper NS, McAlpine D. Rapid Neural Adaptation to Sound Level Statistics. J. Neurosci. 2008;28:6430–6438. doi: 10.1523/JNEUROSCI.0470-08.2008.

- 33. Grootel TJV, Wanrooij MMV, Opstal AJV. Influence of Static Eye and Head Position on Tone-Evoked Gaze Shifts. J. Neurosci. 2011;31:17496–17504. doi: 10.1523/JNEUROSCI.5030-10.2011.

- 34. Lewald J, Dörrscheidt GJ. Spatial-tuning properties of auditory neurons in the optic tectum of the pigeon. Brain Res. 1998;790:339–342. doi: 10.1016/s0006-8993(98)00177-2.

- 35. Slee SJ, Young ED. Linear Processing of Interaural Level Difference Underlies Spatial Tuning in the Nucleus of the Brachium of the Inferior Colliculus. J. Neurosci. 2013;33:3891–3904. doi: 10.1523/JNEUROSCI.3437-12.2013.

- 36. Wise LZ, Irvine DR. Topographic organization of interaural intensity difference sensitivity in deep layers of cat superior colliculus: implications for auditory spatial representation. J. Neurophysiol. 1985;54:185–211. doi: 10.1152/jn.1985.54.2.185.

- 37. Sparks DL, Hartwich-Young R. The deep layers of the superior colliculus. Rev. Oculomot. Res. 1989;3:213–255.

- 38. Huffman RF, Henson OW., Jr. The descending auditory pathway and acousticomotor systems: connections with the inferior colliculus. Brain Res. Rev. 1990;15:295–323. doi: 10.1016/0165-0173(90)90005-9.

- 39. Pecka M, Brand A, Behrend O, Grothe B. Interaural Time Difference Processing in the Mammalian Medial Superior Olive: The Role of Glycinergic Inhibition. J. Neurosci. 2008;28:6914–6925. doi: 10.1523/JNEUROSCI.1660-08.2008.

- 40. Zhou Y, Carney LH, Colburn HS. A Model for Interaural Time Difference Sensitivity in the Medial Superior Olive: Interaction of Excitatory and Inhibitory Synaptic Inputs, Channel Dynamics, and Cellular Morphology. J. Neurosci. 2005;25:3046–3058. doi: 10.1523/JNEUROSCI.3064-04.2005.

- 41. Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- 42. Leichnetz GR, Spencer RF, Hardy SGP, Astruc J. The prefrontal corticotectal projection in the monkey; An anterograde and retrograde horseradish peroxidase study. J. Neurosci. 1981;6:1023–1041. doi: 10.1016/0306-4522(81)90068-3.

- 43. Barbas H, Mesulam M-M. Organization of afferent input to subdivisions of area 8 in the rhesus monkey. J. Comp. Neurol. 1981;200:407–431. doi: 10.1002/cne.902000309.

- 44. Lee AKC, Rajaram S, Xia J, Bharadwaj H, Larson E, Shinn-Cunningham BG. Auditory selective attention reveals preparatory activity in different cortical regions for selection based on source location and source pitch. Front. Audit. Cogn. Neurosci. 2013;6:190. doi: 10.3389/fnins.2012.00190.

- 45. Müller JR, Philiastides MG, Newsome WT. Microstimulation of the superior colliculus focuses attention without moving the eyes. Proc. Natl. Acad. Sci. U. S. A. 2005;102:524–529. doi: 10.1073/pnas.0408311101.
