Abstract

The advent of cochlear implantation has given thousands of deaf infants and children access to speech and the opportunity to learn spoken language. Whether deaf infants successfully learn spoken language after implantation may depend in part on the extent to which they listen to speech rather than just hear it. We explore this question in two ways. First, we examine the role that attention to speech plays in early language development according to a prominent model of infant speech perception, Jusczyk’s WRAPSA model. Second, we review the kinds of speech input that maintain normal-hearing infants’ attention. We then review recent findings suggesting that cochlear-implanted infants’ attention to speech is reduced compared to that of normal-hearing infants, and that speech input to these infants differs from input to infants with normal hearing. Finally, in light of these findings and predictions from Jusczyk’s WRAPSA model, we discuss the roles attention to speech may play in deaf children’s language acquisition after cochlear implantation.

Before the advent of cochlear implantation, most children with severe-to-profound hearing loss had little to no chance of acquiring a spoken language. Only a small minority of deaf children, those who were highly talented lip readers, could learn a spoken language in addition to (or instead of) a manual language (e.g., American Sign Language). Today, most children with severe-to-profound hearing loss receive cochlear implants and acquire at least some proficiency in a spoken language. However, spoken language outcomes after cochlear implantation vary enormously (Niparko et al., 2010). Moreover, children with cochlear implants, on average, lag behind their normal-hearing peers in spoken language proficiency even after more than ten years of experience with their implants (Geers, Strube, Tobey, Pisoni, & Moog, 2011).

That many children who receive cochlear implants do not reach the same level of spoken language proficiency as their normal-hearing peers is not very surprising. The auditory input cochlear implants provide is greatly impoverished compared to typical acoustic hearing. In cochlear implant users, the spiral ganglion neurons of the auditory nerve are stimulated by up to 22 electrodes. In normal-hearing listeners, they are stimulated by thousands of hair cells tuned to different frequencies (Zeng, 2004). As a result, frequency resolution is poor for cochlear implant users compared to normal-hearing listeners (Loizou, 2006). In addition, hearing through a cochlear implant does not provide the same dynamic range as acoustic hearing, because electrical stimulation produces a steeper rate-intensity function (Zeng, 2004; Zeng et al., 2002). Taken together, the input that cochlear implants provide to the spiral ganglia is less rich than the input provided by the hair cells of a normally functioning cochlea. Moreover, early auditory deprivation may affect the development of the auditory-neural pathway, which may result in poorer processing of input that is already impoverished (Moore & Linthicum, 2007).

Poor audibility of speech is a primary contributor to cochlear implant users’ difficulty with speech perception (Baudhuin, Cadieux, Firszt, Reeder, & Maxson, 2012). However, other factors also influence language development after cochlear implantation. Evidence that audibility is not the only factor comes from studies that tested multiple factors for their relationship to language outcome measures. For example, Ann Geers and her colleagues studied 181 8- and 9-year-olds, all of whom had received their cochlear implants before 5 years of age (Geers, 2003). They examined how several sets of factors affected a composite score from a battery of speech perception tests:

- child and family characteristics (e.g., age at implantation, nonverbal IQ, family size);
- educational factors;
- device characteristics (length of time using a particular processing strategy, dynamic range, number of electrodes inserted into the cochlea, and loudness growth).

Device characteristics, which included measures of audibility through the device, accounted for 22% of the variance after controlling for child and family characteristics.

If audibility does not explain all, or even most, of the variance in speech perception outcomes, what other factors contribute to it? Some of the variance has been associated with environmental differences that are well known to contribute to variance in language outcomes in all populations of children. These include socioeconomic status and family environment (Geers et al., 2011; Holt, Beer, Kronenberger, Pisoni, & Lalonde, 2012). Some has been associated with differences in educational and therapeutic approaches (Bergeson, Pisoni, & Davis, 2005). Much of the variance has been associated with subject characteristics such as age at cochlear implantation, amount of residual hearing before implantation, duration of hearing loss, and performance IQ (Dettman, Pinder, Briggs, Dowell, & Leigh, 2007; Geers, 2003; Holt & Svirsky, 2008; Nicholas & Geers, 2006; Nott, Cowan, Brown, & Wigglesworth, 2009; Tomblin, Barker, Spencer, Zhang, & Gantz, 2005; Zwolan et al., 2004). These findings are informative for predicting which children are at increased risk for poor language outcomes. However, they do little to explain how differences in patient characteristics lead to differences in language outcomes. What basic cognitive and linguistic skills underlie the relationship between these factors and language outcomes?

Fundamental linguistic skills that are affected by factors such as age at implantation and residual hearing include speech perception and word learning (Geers, 2003; Houston, Stewart, Moberly, Hollich, & Miyamoto, 2012). These skills have been found to correlate with later language outcome measures. This suggests that the effects of early auditory experience on these skills account for some of the variability in language outcomes.

Speech perception and word learning may be affected by early auditory experience, and may in turn affect language outcomes. This finding constitutes progress toward understanding the relationship between early auditory experience and spoken language development. To understand this relationship further, we must continue to explore the effects of deafness on the fundamental components of language processing. For example, word learning, even simple word-object association, involves several cognitive processes. It is possible that difficulties with linguistic skills such as word learning stem from effects of auditory deprivation on basic cognitive skills.

Until fairly recently, there was little research on the effects of deafness on general cognitive skills. It was assumed that deafness affected only audition and auditory-related language processes (but see Myklebust & Brutten, 1953). There is now a large and growing literature on perceptual and cognitive differences between typically hearing and deaf adults and children (Marschark & Hauser, 2008). Most of this research has investigated deaf individuals without cochlear implants who primarily or exclusively use sign language. However, research on verbal and nonverbal cognitive skills in children who receive cochlear implants has revealed differences in that population as well (Burkholder & Pisoni, 2003; Cleary, Pisoni, & Geers, 2001; Conway, Pisoni, Anaya, Karpicke, & Henning, 2011; Horn, Davis, Pisoni, & Miyamoto, 2004, 2005; Lyxell et al., 2009; Schlumberger, Narbona, & Manrique, 2004; Smith, Quittner, Osberger, & Miyamoto, 1998; Tharpe, Ashmead, & Rothpletz, 2002). Moreover, differences in cognitive skills among children with cochlear implants help predict some of the variability in later language outcomes. These findings make it clear that continued research in this area will contribute to our understanding of spoken language development in deaf children who receive cochlear implants. That research concerns the effects of deafness and subsequent cochlear implantation on verbal and nonverbal cognitive skills.

The cognitive skill that is the focus of this paper is attention, specifically attention to speech. Investigating attention to speech in deaf infants who receive cochlear implants can provide important new information about how sensory input affects cognitive processes. Attention to speech is also at the forefront of discussions among clinicians and parents of deaf children. These discussions concern which approach to take to speech and language therapy after cochlear implantation. Although most therapists recognize that attending to speech plays a role in learning spoken language, the emphasis on attention to speech varies considerably across therapy approaches (Cole & Flexer, 2011).

Encouraging children with hearing loss to attend to speech in order to learn spoken language has intuitive appeal. It may also be effective according to many clinicians’ subjective experience. However, there is virtually no evidence that increasing attention to speech (i.e., “listening”) facilitates language development in children with cochlear implants, or even in normal-hearing children. Moreover, little is known about attention to speech and its role in language learning, even though the importance of attention in learning has long been acknowledged (e.g., Trabasso & Bower, 1968). The goal of this paper is to explore the role attention to speech might play for normal-hearing infants and for deaf infants who receive cochlear implants. Because so little work has been done in this area, this paper focuses more on raising questions for future research than on reviewing our current state of knowledge, which is highly limited at this point.

The outline of this paper is as follows.

First, we define what we mean by attention to speech. This involves describing different aspects of attention, their development, and how they relate to the auditory domain.

Second, we specify which aspects of early language development are likely to be affected by attention to speech. This is important because speech and hearing scientists and clinicians assert that listening is important for language development. Yet we know of no publications in that literature that specify exactly what listening helps with, or how. We approach this task by reviewing Peter Jusczyk’s Word Recognition and Phonetic Structure Acquisition (WRAPSA) model (Jusczyk, 1993, 1997), a relatively comprehensive model of early language comprehension. We then discuss how attention to speech may affect each of the components and processes it describes.

Third, we consider how attention-getting characteristics of speech (e.g., infant-directed speech) affect infants’ attention to speech. If attention to speech affects particular processes, then we expect those processes to be relatively more influenced by how attention-getting the input is.

Fourth, we review recent research on attention to speech in deaf infants with cochlear implants. We also discuss future directions for understanding how reduced attention to speech may affect their speech perception and early language acquisition.

Finally, we review recent findings on input to infants with cochlear implants. We discuss future directions for understanding how differences in input may affect their attention to speech.

As will be seen in the latter two sections, there has been very little research so far on attention to speech in children with cochlear implants despite assertions that it is important for language development. We hope that by using an existing detailed model of early language development to specify more precisely how attention to speech may affect it, we will help formulate some of the research questions that need to be addressed to fill the gaps in our understanding. A better understanding of both the input that affects implanted infants’ attention to speech and how attention to speech influences language development may help inform therapists of how listening skills can be improved to facilitate language development after cochlear implantation.

Development of Attention

Attention is a multifaceted concept and has been described by developmental scientists as involving various combinations of systems, components, and functions. We review some of the more prominent theories and then summarize the elements they share. These theories have focused on visual attention, so we end this section by relating their concepts to auditory attention.

In a seminal book on the development of attention, Ruff and Rothbart (1996) discuss two systems and three components of attention. The systems are an external system and an internal system. The external system comprises the forces in the environment that influence not only what we attend to at the moment but also how we attend in general. For example, caregiving style can influence how an infant attends, as can other environmental influences such as how often the TV is on. The internal system includes the orienting/investigative subsystem and the goal-oriented subsystem.

The orienting/investigative subsystem is the first to develop. Its selectivity is determined by intensity and novelty. This reflects the observation that infants (as well as children and adults) often orient to objects and events that are relatively perceptually salient. The second subsystem to develop is the goal-oriented system. This system is engaged when a person attends as part of a goal, such as attending to the words on this page with the goal of reading this paper.

These systems impinge on three components of attention: selectivity, state, and higher-level control.

- Attentional selectivity refers to the process of selecting something to attend to among an almost limitless number of possibilities.
- Attentional state refers to the level of arousal, or the degree to which a person is engaged in what they are attending to.
- Higher-level control is mediated by both the internal goal-oriented system and the external systems. It is intimately related to the organization of behavior, and it serves to regulate selectivity and state.

Selectivity and state are initially dominated entirely by the orienting/investigative system. Across development, they become increasingly mediated by the goal-oriented system.

Ruff and Rothbart’s model provides one conceptualization of how attention develops. It shares similarities with other conceptualizations even where the components differ. For example, Colombo (2001) conceptualizes the development of visual attention in terms of four functions: spatial orienting, attention to object features, alertness, and endogenous control. Colombo’s model is motivated by systems of neural networks but maps well onto Ruff and Rothbart’s model:

- Spatial orienting and attention to object features are similar to the orienting system and the selectivity component of the Ruff and Rothbart model.
- Alertness maps onto state.
- Endogenous control maps onto the goal-oriented system and higher-level control.

In both models, selectivity and state are initially dominated by characteristics of the input and then come increasingly under self-control. In this paper, we discuss the role these two components play in specific aspects of language development.

Both selectivity and state are crucial for learning. Selectivity (what the infant attends to) acts as a filter on what the infant may learn. The degree to which the infant encodes the information they are selectively attending to may be influenced by their state of attention. Seminal work on infant attentional states has been carried out by John Richards and his colleagues (e.g., Richards & Casey, 1992) using a combination of behavioral, heart rate, and neurophysiological measures. They have identified at least four phases of attentional state: pre-attention, orienting, sustained attention, and attention termination. They define these phases with respect to changes in heart rate.

Baseline heart rate indicates that the infant is in a pre-attentive phase (i.e., relatively inattentive). An orienting phase is defined by a deceleration in heart rate from baseline, which occurs as a byproduct of increased activity in the parasympathetic nervous system. The orienting phase may optionally be followed by a sustained attention phase, defined by a continued lower-than-baseline heart rate. Finally, attention termination is defined by a return to baseline heart rate. Whether a young infant orients to a stimulus, sustains attention to it, or terminates attention to it depends on the characteristics of the stimulus. As the infant develops, these processes come increasingly under endogenous control. This is consistent with the Ruff and Rothbart and the Colombo models described above.
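The heart-rate definitions above amount to a labeling rule over a sequence of beats. The Python sketch below is illustrative only and makes two assumptions. First, the baseline is the median prestimulus heart rate. Second, a hypothetical `min_run` of consecutive below-baseline beats separates orienting from sustained attention. Richards and colleagues’ actual scoring criteria are not given here and may differ. For simplicity, the sketch treats any beat below baseline as part of a deceleration.

```python
from enum import Enum
from statistics import median


class Phase(Enum):
    PRE_ATTENTION = "pre-attention"
    ORIENTING = "orienting"
    SUSTAINED = "sustained attention"
    TERMINATION = "attention termination"


def label_phases(baseline_bpm, trial_bpm, min_run=3):
    """Label each beat of a trial with a heart-rate-defined attention phase.

    Simplified, hypothetical rule: the baseline is the median prestimulus
    heart rate. The first (min_run - 1) consecutive beats below baseline are
    orienting, later consecutive beats below baseline are sustained
    attention, and the first beat back at or above baseline is attention
    termination. Any other beat at or above baseline is pre-attention.
    """
    base = median(baseline_bpm)
    labels = []
    run = 0  # number of consecutive beats below baseline so far
    for bpm in trial_bpm:
        if bpm < base:
            run += 1
            labels.append(Phase.ORIENTING if run < min_run else Phase.SUSTAINED)
        else:
            labels.append(Phase.TERMINATION if run > 0 else Phase.PRE_ATTENTION)
            run = 0
    return labels
```

Consider a trial recorded as 141, 135, 132, 130, 131, 140, 141 bpm against a 140-bpm median baseline. The rule labels these beats pre-attention, orienting, orienting, sustained, sustained, termination, and pre-attention.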

Richards and colleagues have investigated how state of attention affects learning using a visual recognition task (Frick & Richards, 2001; Richards, 1997). They presented 20- to 26-week-old infants with visual stimuli while the infants were in different heart-rate-defined phases of attention. They then tested the infants’ recognition of the stimuli using a paired-comparison paradigm, in which each previously presented (old) stimulus was paired with a novel stimulus. Infants preferred the novel stimulus only when it was paired with an old stimulus that had been presented during a sustained attention phase. When the old stimulus had originally been presented during any other phase of attention, infants showed no preference. These findings demonstrate the importance of sustained attention for encoding new information.
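The Python code below sketches one way paired-comparison data of this kind might be summarized. It computes a novelty preference, defined as the proportion of looking time directed at the novel stimulus, where 0.5 is chance. It then averages that preference by the phase in which the old stimulus was originally encoded. The function names and the proportion-of-looking measure are assumptions for illustration. The numbers in the usage note are made up and are not data from the studies cited.

```python
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def novelty_preference(novel_look_s: float, familiar_look_s: float) -> float:
    """Proportion of looking time on the novel stimulus (0.5 = no preference)."""
    total = novel_look_s + familiar_look_s
    if total <= 0:
        raise ValueError("no looking time recorded on this trial")
    return novel_look_s / total


def mean_preference_by_phase(
    trials: Iterable[Tuple[str, float, float]]
) -> Dict[str, float]:
    """Average novelty preference, grouped by the attention phase in which
    the old (familiar) stimulus was originally presented.

    Each trial is (encoding_phase, novel_look_s, familiar_look_s).
    """
    by_phase: Dict[str, List[float]] = {}
    for phase, novel, familiar in trials:
        by_phase.setdefault(phase, []).append(novelty_preference(novel, familiar))
    return {phase: mean(scores) for phase, scores in by_phase.items()}
```

The made-up trials `[("sustained", 6.0, 4.0), ("sustained", 7.0, 3.0), ("orienting", 5.0, 5.0)]` illustrate the computation. The sustained-attention group averages 0.65, and the orienting group sits at chance (0.5).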

Although sustained attention enhances learning in general, learning can also take place without attention. Dichotic listening tasks (Cherry, 1953) illustrate this. In these tasks, subjects are asked to attend only to the sound presented to one ear. Afterward, subjects are sometimes able to report whether there was speech in the unattended ear, or even whether that speech changed (e.g., a change of talker). Even when there is no awareness of sounds in the unattended ear, learning can take place. Corteen and Dunn (1974) tested implicit learning in the unattended ear. They first conditioned subjects to expect electric shocks when they heard city names. They then presented subjects with a dichotic listening task in which city names were sometimes presented to the unattended ear. Subjects showed physiological responses to those city names even when they were unaware of hearing them. Whether subjects can process any of the unattended information depends on several factors. These include the acoustic properties of the stimulus, the nature of the task, and its cognitive load (Spence & Santangelo, 2010). Nevertheless, it is clear that not all auditory processing requires attention.

Attention and Early Language Development

A goal of this paper is to discuss which aspects of language development require sustained attention to speech and which can be accomplished through passive hearing. We approach this task by reviewing Jusczyk’s WRAPSA model as described in his book The Discovery of Spoken Language (Jusczyk, 1997). For each of the model’s components and processes, we discuss the role that attentional selectivity and state may play. More specifically, we discuss whether each process requires sustained attention to the speech stimulus in order to succeed.

For each process in the WRAPSA model, whether sustained attention to speech is required depends on whether the process requires active processing or can be accomplished passively. According to resource-limitation views of attention (Shiffrin & Schneider, 1977), attention-demanding active processes are needed when there is not a one-to-one mapping between stimulus and response. When there is a one-to-one mapping, passive processes suffice for learning.

Initial Encoding

According to WRAPSA, speech processing begins with an initial encoding that preserves most of the acoustic detail. Auditory analyzers detect auditory input and provide a description of the spectral and temporal features present in the acoustic signal. These features include the intensity, duration, and bandwidth of sounds, as well as their periodicity and change characteristics. The analyzers also identify syllable boundaries by detecting amplitude peaks and minima. The analyzers capture most of the acoustic detail, but they do not code a perfectly detailed representation of the signal. They have limitations, and these limitations define infants’ early discrimination abilities. For example, suppose infants do not discriminate a 40-ms vs. 60-ms voice-onset-time difference. This would suggest that the auditory analyzers produce the same set of responses to both sounds. By contrast, discrimination of a 20-ms vs. 40-ms voice-onset-time difference would suggest that these two sounds result in two different sets of responses. Each auditory analyzer responds independently of all the other analyzers. This means that there is a one-to-one mapping between the input and the responses of the auditory analyzers. This one-to-one mapping suggests that sustained attention is not necessary for the auditory analyzers to operate. Consequently, sustained attention to speech is not necessary for speech discrimination at this level.
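A toy encoder can illustrate the independence of the analyzers. In this encoder, each analyzer is a function of the stimulus alone, so the mapping from input to analyzer responses is one-to-one. The analyzers in the Python sketch below and their 30-ms voice-onset-time boundary are hypothetical. They were chosen only so that 20 vs. 40 ms yields different response sets while 40 vs. 60 ms does not. They are not parameters of WRAPSA.

```python
from typing import Callable, Dict, Tuple

# A stimulus is described by a few acoustic measurements (hypothetical set).
Stimulus = Dict[str, float]


def vot_analyzer(s: Stimulus) -> int:
    """Hypothetical voice-onset-time analyzer: 1 above 30 ms, else 0."""
    return int(s["vot_ms"] > 30)


def duration_analyzer(s: Stimulus) -> int:
    """Hypothetical duration analyzer: 1 above 150 ms, else 0."""
    return int(s["duration_ms"] > 150)


# Each analyzer reads only the stimulus, never another analyzer's output.
ANALYZERS: Tuple[Callable[[Stimulus], int], ...] = (vot_analyzer, duration_analyzer)


def encode(s: Stimulus) -> Tuple[int, ...]:
    """Initial encoding: the tuple of all analyzer responses."""
    return tuple(analyzer(s) for analyzer in ANALYZERS)


def discriminable(a: Stimulus, b: Stimulus) -> bool:
    """Two sounds are discriminable only if their response sets differ."""
    return encode(a) != encode(b)
```

Because `encode` depends only on the stimulus, nothing in it could be modulated by attention. This is the property the argument above relies on.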

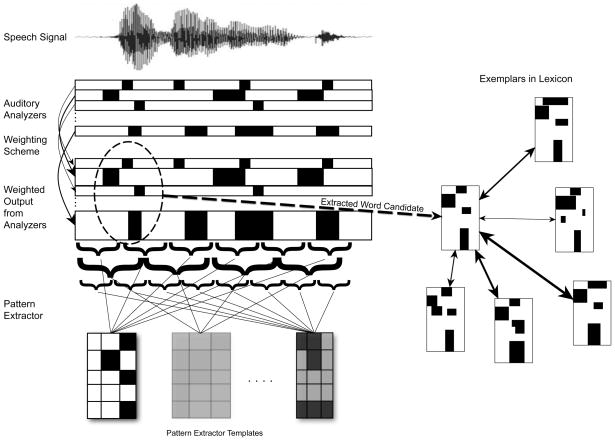

Figure 1 represents our schematic interpretation of Jusczyk’s WRAPSA model. In the figure, auditory analyzers are represented in a grid where each one responds to a specific aspect of the acoustic signal. Each analyzer is activated directly by the signal and not influenced by other processes.

Figure 1.

Interpretation of WRAPSA (Jusczyk, 1997).

Acoustic information is represented at the top with a waveform. Auditory analyzers are represented in a grid. Each row is an analyzer that detects each time a particular feature is present in the signal, represented by filled rectangles. Note that the widths of the rectangles vary because features can vary in duration. For example, the voicing feature of a stop consonant is much shorter than a feature like spectral noise, which can continue for hundreds of milliseconds. The output from the auditory analyzers is weighted according to its importance in the ambient language (represented by arrows of varying thickness). Below the auditory analyzers is the weighted output, where the weighting of each feature is represented by vertical height.

Below that level is the pattern extractor, which identifies potential word candidates. Brackets represent processing windows for segmentation cues (e.g., rhythmic structure, phonotactic properties, allophonic properties) that allow infants to detect words and word boundaries. Suppose a string of acoustic-phonetic information within a processing window or set of processing windows is consistent with what the infant knows so far about words and word boundaries. In that case, a potential word candidate is extracted. Sets of word/word-boundary cues are represented as templates in the figure. The intensity of a template represents the degree to which the infant uses that set of cues for segmentation. Jusczyk did not discuss a specific criterion for how much acoustic-phonetic information had to be consistent with word/word-boundary knowledge for a potential word to be extracted. We assume that the process is probabilistic: the more acoustic-phonetic information that is consistent with word/word-boundary cues, the more likely the string is to be extracted as a potential word.

On the right side is a representation of the mental lexicon with stored exemplars.
Arrows connecting the extracted word candidate and the exemplars in the lexicon represent WRAPSA’s exemplar-based word recognition system. The darker the arrow, the more similar the potential word candidate is to a stored exemplar. Recognition of a word is determined both by how similar it is to specific exemplars and by how many exemplars it is similar to.

Weighting Scheme

It is well established that experience with language shapes infants’ perception of speech. A classic example is Werker and Tees’s (1984) finding on the Hindi retroflex and dental /d/. English-learning 6-month-olds are able to discriminate these sounds, whereas English-learning 10-month-olds and adults are not. WRAPSA accounts for this and similar findings with its weighting scheme. The scheme weights some features more than others and thereby shapes the perception of the input encoded by the auditory analyzers.

The weighting scheme is represented in Figure 1 by the arrows from the auditory analyzers to the next level of representation. The thickness of the arrows represents their weight. Note that the representations of features linked with thick arrows are larger relative to the representations of features linked with the thin arrows. Size represents the importance of each feature for pattern extraction and word recognition.

The weighting scheme is shaped by the ambient language. Some features are important for differentiating words or for conveying indexical information (talker identity, accent, etc.). These features come to be weighted more heavily, whereas features that are not meaningful diminish in weight. For example, WRAPSA would explain Werker and Tees’s (1984) Hindi findings as follows. In early infancy, the features that differentiate dental and retroflex /d/ start out with average weights in all infants. The weights of those features then diminish for infants who are exposed only to languages that do not differentiate dental and retroflex /d/ (e.g., English). The variants of /d/ thus become increasingly perceptually similar until they are indiscriminable.
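The weighting scheme can be pictured as feature weights that drift toward high values for features the ambient language uses contrastively, and toward zero for features it does not. The Python sketch below makes several assumptions, none of which come from Jusczyk (1997). It uses a hypothetical exponential-approach update rule, a single hypothetical place feature (`retroflex_place`), a learning rate of 0.1, and 30 exposures. The update contains no attention term, in keeping with Jusczyk’s description of the weighting scheme as automatic.

```python
from typing import Dict, Set

Weights = Dict[str, float]


def update_weights(weights: Weights, contrastive: Set[str],
                   rate: float = 0.1, exposures: int = 1) -> Weights:
    """Hypothetical rule: on each exposure, move every feature weight a
    fraction `rate` of the way toward 1.0 if the ambient language uses the
    feature to distinguish words, or toward 0.0 if it does not."""
    w = dict(weights)  # leave the caller's starting weights untouched
    for _ in range(exposures):
        for feature in w:
            target = 1.0 if feature in contrastive else 0.0
            w[feature] += rate * (target - w[feature])
    return w


def weighted_difference(a: Dict[str, float], b: Dict[str, float],
                        weights: Weights) -> float:
    """Perceptual distance between two sounds after weighting each feature."""
    return sum(weights[f] * abs(a[f] - b[f]) for f in weights)


# All infants start with average weights (0.5) on both hypothetical features.
START = {"voicing": 0.5, "retroflex_place": 0.5}
DENTAL_D = {"voicing": 1.0, "retroflex_place": 0.0}
RETROFLEX_D = {"voicing": 1.0, "retroflex_place": 1.0}

english = update_weights(START, contrastive={"voicing"}, exposures=30)
hindi = update_weights(START, contrastive={"voicing", "retroflex_place"}, exposures=30)
```

Under these assumed settings, the weighted difference between dental and retroflex /d/ changes in opposite directions. It shrinks to roughly 0.02 for the English-learning case and grows to roughly 0.98 for the Hindi-learning case.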

Jusczyk explained that the weighting scheme was “…an automatic means of setting the focus of attention on properties that are relevant for recognizing words in a particular native language” (Jusczyk, 1997: 221). This statement addresses both the selectivity and state of attention involved in the weighting scheme. The weighting occurs automatically, suggesting that the infant does not have to be in a state of sustained attention to speech for the weighting scheme to operate.

Pattern Extractor

In WRAPSA, the weighted output from the auditory analyzers is analyzed by a pattern extractor, which extracts potential word candidates and encodes them into the lexicon. As with the weighting scheme, pattern extraction is affected by the ambient language. For example, English-learning infants learn that stressed syllables in English are more likely to signal word onsets than offsets, and their pattern extractors are modified accordingly. Research over the last 20 years has identified several potential cues to word boundaries:

- lexical stress (Jusczyk et al., 1999b);
- phonotactics (Mattys & Jusczyk, 2001);
- allophonics (Jusczyk et al., 1999a);
- transitional probabilities between syllables (Saffran et al., 1996);
- prosodic word-boundary cues (Johnson, 2008);
- familiar words (Bortfeld et al., 2005).

Moreover, cross-linguistic studies have found that cues to word boundaries differ across languages (Nazzi et al., 2006). The language-specific nature of the pattern extractor reflects these findings.
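Of the cues listed above, transitional probability is the easiest to state precisely. The probability of syllable B given syllable A is the count of the pair AB divided by the count of A. The Python sketch below computes these probabilities. As a simplification, it posits a word boundary wherever the probability falls below a fixed threshold. The threshold and the nonsense words are assumptions for illustration. The words are modeled loosely on the artificial-language streams used by Saffran et al. (1996).

```python
from collections import Counter
from typing import Dict, List, Tuple


def transitional_probabilities(syllables: List[str]) -> Dict[Tuple[str, str], float]:
    """P(B | A) = count(A followed by B) / count(A as the first of a pair)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


def segment_at_tp_dips(syllables: List[str], threshold: float) -> List[str]:
    """Insert a word boundary wherever P(next | current) < threshold."""
    if not syllables:
        return []
    tps = transitional_probabilities(syllables)
    words, current = [], [syllables[0]]
    for a, b in zip(syllables, syllables[1:]):
        if tps[(a, b)] < threshold:
            words.append("".join(current))  # low TP: close the current word
            current = []
        current.append(b)
    words.append("".join(current))
    return words
```

In a stream built from the nonsense words "bidaku", "padoti", and "golabu" in varying order, within-word transitions have a probability of 1.0. Across-word transitions fall to 0.5, so a threshold of 0.75 recovers the three words.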

Jusczyk wrote that pattern extraction routines must encode sequential information and “…be developed to accord with those properties that are likely to be predictive of word boundaries in the particular language that the infant is learning” (Jusczyk, 1997: 225). Jusczyk did not detail the process of extracting potential word candidates. To create a complete schematic of WRAPSA, we therefore supplemented it with some additional concepts. In particular, we added the template concept described below. It provides a mechanism for encoding into memory the acoustic-phonetic information relevant to speech segmentation.

The pattern extractor is represented in Figure 1 as a set of templates with arrows pointing to a bracketed set of the weighted output from the auditory analyzers. Each template represents a collection of acoustic-phonetic information that the infant has associated with words and/or word boundaries. For example, a template might encode that a stressed syllable is likely to be a word or a word onset. The intensity of each template represents the probabilistic nature of the extraction information; more intense templates have higher probabilities of correctly identifying words or word boundaries. As infants gain more language experience, these templates change in both form and intensity. New templates can form in the pattern extractor quickly, within minutes or even seconds. Suppose, for example, that the infant encounters a multisyllabic word several times during an interaction. The infant may initially extract each syllable individually. The infant may then detect the consistent ordering of the syllables and form a new template that leads to the extraction of the word as a cohesive unit.

The arrows pointing from the pattern extractor to the brackets represent the pattern extractor analyzing speech. It searches for strings of acoustic-phonetic information that match one or more templates. The figure depicts each template being compared to the acoustic-phonetic information within strings of weighted output from the auditory analyzers. The comparisons of the templates to the strings of speech occur independently and in parallel.

By our interpretation of WRAPSA, when a string of speech is consistent with one or more word/word-boundary templates, the string may be extracted as a potential word candidate. The probability of extraction depends on the amount of converging evidence from the templates. Jusczyk did not specify this particular process. He may have envisioned the pattern extractor as operating with only a single set of criteria for identifying word candidates, which became increasingly sophisticated with language experience. However, we speculate that the processes depicted in Figure 1 are closer to what he had in mind.

Whatever the case, the pattern extractor in WRAPSA operates on a combination of probabilistic cues, none of which individually provides certain evidence of a word boundary. The complex nature of the pattern extractor suggests that sustained attention to speech would facilitate its processes.
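The converging-evidence idea can be sketched by letting each matching template independently contribute to the probability of extraction. The Python code below combines template matches with a noisy-OR. This combination rule is our assumption and is not a mechanism Jusczyk specified. The two templates, their intensities (0.6 and 0.5), and the familiar-word list are likewise hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Syllable = Dict[str, object]  # e.g. {"syl": "ba", "stressed": True}


@dataclass
class Template:
    name: str
    matches: Callable[[Sequence[Syllable]], bool]
    intensity: float  # hypothetical reliability of this cue set, 0..1


def extraction_probability(string: Sequence[Syllable],
                           templates: List[Template]) -> float:
    """Noisy-OR: each matching template independently 'votes' for
    extraction, so converging cues raise the probability."""
    p_no_extraction = 1.0
    for template in templates:
        if template.matches(string):
            p_no_extraction *= 1.0 - template.intensity
    return 1.0 - p_no_extraction


KNOWN_WORDS = {"baby"}  # hypothetical familiar words

TEMPLATES = [
    # Strong-weak (trochaic) disyllable: a stressed syllable marks a word onset.
    Template("strong-weak",
             lambda s: len(s) == 2 and bool(s[0]["stressed"]) and not s[1]["stressed"],
             0.6),
    # Familiar word: the syllables spell a word the infant already knows.
    Template("familiar word",
             lambda s: "".join(str(x["syl"]) for x in s) in KNOWN_WORDS,
             0.5),
]
```

The templates yield different probabilities depending on how many of them match:

- A strong-weak string that is also a familiar word ("baby") gets 1 - (0.4 × 0.5) = 0.8.
- A strong-weak string alone ("doggy") gets 0.6.
- A weak-strong string that matches neither template gets 0.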

Word Recognition

In WRAPSA, recognition of a potential word candidate depends on its similarity to exemplars of words stored in the lexicon. Word candidates from the pattern extractor resonate with stored exemplars based on similarity. If a candidate resonates strongly enough with stored exemplars, it is categorized with those exemplars and recognized. If it resonates only weakly, it may be stored as a potential new word and not recognized. The potential role of attention in these processes is fairly complex. On the one hand, Jusczyk posited that attention was involved in pattern extraction: more acoustic detail of words would be represented when infants attended to the speech. Encoding more detail would allow a more accurate similarity comparison with the stored exemplars. Moreover, greater attention would increase the chances of a new instance of a word being encoded into the lexicon. Thus, attention has a role in word recognition in WRAPSA, because word recognition involves extracting and encoding acoustic-phonetic information.

On the other hand, it is likely that in WRAPSA the process of comparing extracted and encoded word candidates with stored exemplars would be automatic and not attention-demanding. If the mechanism for determining similarity between a probe and the exemplars were an active, controlled process, then we would predict that more and more attentional resources would be required as more exemplars were stored in the lexicon, because active processes are resource-demanding. We know of no empirical support for a prediction of slower and/or more resource-demanding processing as more exemplars are encoded into the lexicon, and Jusczyk never made such a prediction. Therefore, we assume that attention is not involved in WRAPSA’s mechanism of comparing word candidates to stored exemplars. According to WRAPSA, any effect of attention on word recognition would arise in extracting and encoding the word candidate rather than in comparing that candidate to stored exemplars for recognition.

In Figure 1, the arrow pointing from the bracketed pattern to the pattern on the right represents the pattern extractor forming a word-candidate representation. The arrows from that representation to the circle containing many small patterns represent the word candidate being compared to exemplars in the lexicon, and the darker arrows indicate greater similarity to those stored exemplars. In this example, the word candidate resonates strongly with a cluster of similar exemplars, so it would be recognized as a familiar word and encoded as a member of the cluster representing variants of that word.
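The resonance-and-threshold account of recognition can be sketched in Python. In this sketch we swap in a summed-similarity rule of the kind used in exemplar models of categorization, in which similarity decays exponentially with distance. WRAPSA does not commit to this particular rule. Word candidates are assumed to be feature vectors, and the sensitivity and threshold values are hypothetical. The comparison step has no attention parameter, which is consistent with our assumption that comparison is automatic. On this view, attention would act upstream, on what gets extracted and encoded.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = Tuple[float, ...]         # hypothetical acoustic-phonetic feature vector
Lexicon = Dict[str, List[Vector]]  # word label -> stored exemplars (variants)


def similarity(a: Vector, b: Vector, sensitivity: float = 2.0) -> float:
    """Similarity decays exponentially with Euclidean distance (assumed rule)."""
    return math.exp(-sensitivity * math.dist(a, b))


def recognize(candidate: Vector, lexicon: Lexicon,
              threshold: float = 1.0) -> Optional[str]:
    """Return the word whose exemplar cluster resonates most, if strongly enough."""
    # Summed similarity to every stored exemplar of each word ("resonance").
    resonance = {word: sum(similarity(candidate, ex) for ex in exemplars)
                 for word, exemplars in lexicon.items()}
    if not resonance:
        return None
    best = max(resonance, key=resonance.get)
    return best if resonance[best] >= threshold else None


def encode(candidate: Vector, lexicon: Lexicon, threshold: float = 1.0) -> str:
    """Try to recognize the candidate, then store it in the lexicon either way."""
    word = recognize(candidate, lexicon, threshold)
    if word is None:
        # Weak resonance: store as a potential new word under a placeholder label.
        word = f"new_{len(lexicon)}"
        lexicon[word] = []
    # Either way, the candidate becomes another stored exemplar of that label.
    lexicon[word].append(candidate)
    return word
```

A candidate that falls near an existing cluster is recognized and added to that cluster as another variant. A candidate that resonates only weakly with every cluster is stored under a placeholder label as a potential new word.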

Word Learning

Although not addressed in any detail in Jusczyk’s WRAPSA model, word learning is one of the most important and extensively studied aspects of language acquisition. Children draw on perceptual cues, attentional biases, social cues, sentential contexts, and possibly innate conceptual categories to form associations between the sound patterns of words and their referents (Golinkoff et al., 2000). Given the complexity of the task and the many sources of information that must be integrated, there is little doubt that word learning is greatly facilitated by attention. However, because word learning often involves associating the sound pattern of a word with a whole object, a characteristic, or an action observed visually, attending only to speech will usually not be a good strategy for learning words. Instead, attention must be divided between speech and other cues, many of them visual. Still, at least some sustained attention to speech is necessary to encode the acoustic-phonetic information of words to be added to the lexicon.

In summary, according to Jusczyk’s WRAPSA model, several aspects of early speech perception and language development require sustained attention. Infants’ attention to speech depends in large part on the nature of the speech presented to them. Next, we turn to how input characteristics affect infants’ attention to speech.

The Role of Input Characteristics on Infants’ Attention to Speech

Although an infant’s environment might initially seem to be a “blooming, buzzing confusion” (James, 1890), selective attention to one auditory signal over another might suggest not only that infants can discriminate the two signals but also that the signals differ in saliency or attractiveness. Several studies have shown that infants are particularly attracted to the human voice. Three-month-olds attend more to speech than rhesus monkey vocalizations (Vouloumanos et al., 2010), 4-month-olds attend more to fluent speech than silence or white noise (Colombo and Bundy, 1981), 6- and 9-month-olds attend more to speech sounds such as “hop hop hop” and “ahhh” than silence (Houston et al., 2003), 9- to 18-month-olds attend more to nursery rhymes than white noise (Friedlander and Wisdom, 1971), and 9-month-olds attend more to sung than instrumental nursery rhymes and repeated tones (Glenn and Cunningham, 1983; Glenn et al., 1981). These findings all suggest that human speech has particular qualities that naturally attract infants’ attention. If this is the case, we might expect even newborn infants to pay more attention to speech than non-speech auditory signals.

In fact, a recent study by Vouloumanos and Werker (2007) showed that even neonates exhibit an attentional preference for speech over non-speech analogues matched in spectral and temporal patterns. A follow-up study, however, suggested that such speech preferences may be learned from infants’ experience with their auditory environment (Vouloumanos et al., 2010). Vouloumanos et al. presented human and rhesus monkey vocalizations to newborns and 3-month-old infants and found a human voice preference only in the older infants. Moreover, newborn infants in the Vouloumanos and Werker (2007) study showed a preference for speech over non-speech only in the second block of trials, suggesting that both types of auditory signals initially attracted infants’ attention equally. Interestingly, the speech, the non-speech analogues, and the rhesus monkey vocalizations all conveyed positive affect. Positive affect is typically associated with high pitch and other acoustic features that are also found in maternal speech to infants (e.g., Scherer, 1981).

It is likely no accident that caregivers across the world speak to infants in a “special” infant-directed (ID) speech register differentiated from adult-directed (AD) speech by acoustic correlates of positive affect (Ferguson, 1964; Fernald, 1989, 1991; Papoušek et al., 1985; Singh et al., 2004; Trainor et al., 2000). Features of ID speech include high pitch level, exaggerated pitch contours, slow tempo, long pauses, and short, syntactically simple utterances (Bergeson and Trehub, 2002; Fernald, 1991, 1992; Fernald and Simon, 1984; Papoušek et al., 1985). In fact, a number of studies have shown that infants prefer to listen to ID rather than AD speech (Cooper and Aslin, 1990; Fernald, 1985; Pegg et al., 1992; Werker and McLeod, 1989).

What drives infants’ attention to ID speech? Evidence across several studies suggests that it is a combination of infants’ auditory experience and the exaggerated acoustic characteristics of ID speech. For example, very young infants prefer ID over AD speech of unfamiliar women (Cooper et al., 1997; Cooper and Aslin, 1990), a preference very likely due to acoustic salience. On the other hand, newborns also prefer their own mother’s voice to unfamiliar female voices (DeCasper and Fifer, 1980; Fifer and Moon, 1995; Mehler et al., 1978), which is more likely due to prenatal auditory experience than acoustic salience. In fact, Cooper and her colleagues (1997) found that infants do not show the typical ID speech preference when presented with maternal ID and AD speech until 4 months of age. That is, the exaggerated features of ID speech and the familiar prosodic characteristics of maternal speech, and, in particular, the AD maternal speech that infants were presumably most exposed to in the womb, all initially attract infants’ attention.

By the time infants are 4–6 months of age, mothers have already adjusted the features of their speech. For example, their speech includes higher and more exaggerated pitch than their speech to newborn infants (Kitamura and Burnham, 2003; Kitamura et al., 2002; Stern et al., 1983). In fact, these longitudinal studies have shown that the individual features of mothers’ speech to their infants continue to change as infants grow older, gain more auditory experience, and reach various milestones of speech and spoken language acquisition. Moreover, recent studies have shown that infants’ preference for ID over AD speech also changes over time (Hayashi et al., 2001; Newman and Hussain, 2006), likely reflecting the dynamic and reciprocal interactions between mothers and infants. For example, Kitamura and Burnham (2003) found that mothers’ pitch was higher when their infants were 6 and 12 months of age than when they were 9 months of age. Hayashi et al. (2001) demonstrated that 4- to 6-month-olds and 10- to 14-month-olds attended longer to ID than AD speech, whereas 7- to 9-month-olds attended equally to both speech registers. Stern and colleagues (1983) obtained slightly different results: pitch height was higher in mothers’ speech to infants at 4 months than at 12 months of age. This pattern is more in line with the findings of Newman and Hussain (2006), who found a steady decline in the ID speech preference from 6 to 13 months of age. Despite the differences across these sets of experiments, it is likely that ID speech features and ID speech preferences match within individual mother-infant dyads across the first postnatal year.

Pitch height and pitch exaggeration are two prosodic characteristics of speech that may serve to capture and maintain infants’ attention, in addition to contributing to the social-emotional bond between infants and their caregivers. Researchers (Cooper et al., 1997; Fernald, 1992; Hayashi et al., 2001; Jusczyk, 1997) have proposed that ID speech also highlights linguistic information that infants need to encode in order to shape their speech perception abilities and develop spoken language. For example, mothers hyperarticulate vowels when speaking to 2- to 6-month-old infants (Burnham et al., 2002; Kuhl et al., 1997), and the degree of their hyperarticulation correlates with their infants’ later speech perception abilities (Liu et al., 2003). Mothers also use prosodic cues to highlight clause boundaries (e.g., Bernstein Ratner, 1986; Kondaurova and Bergeson, 2011), and infants perceive clause boundaries more easily when listening to ID than AD speech (Kemler Nelson et al., 1989). These and other cues also seem to facilitate infants’ word recognition and word learning (Ma et al., in press; Singh et al., 2009). How do mothers know when to draw infants’ attention to vowel categories and clause boundaries? A logistically challenging but very interesting study would track both mothers’ use of attention-getting and linguistically enhancing speech features and infants’ perception of those features. Such a study would follow mother-infant pairs over the period in which infants acquire spoken language. In any case, maternal speech is naturally responsive and complementary to infants’ skills and behavior (Smith and Trainor, 2008). Some segmental and suprasegmental cues attract and maintain infants’ attention to speech in general. Others spotlight individual linguistic components such as vowel categories and clause boundaries. The combination of these cues likely plays a role in infants’ development of speech perception and spoken language.

The research presented up to this point has assumed that there are no communicative breakdowns on the part of either the caregiver or the infant. However, there are several ways in which these reciprocal interactions could be less than optimal. For example, maternal depression can result in less exaggerated ID speech and poorer learning in infants (e.g., Kaplan et al., 2002). Newman and Hussain (2006) posited that infant hearing loss would also affect attention to ID and AD speech. They used background noise to simulate the listening conditions of infants with hearing loss and measured attention to ID and AD speech in infants at 4.5, 9, and 13 months of age. The 4.5-month-olds listened longer in quiet than in noisy conditions, but they preferred ID to AD speech regardless of background babble. The 9- and 13-month-olds, by contrast, showed neither a speech register preference nor an effect of background noise. Thus, only the younger infants showed a general attention deficit in noisy conditions. This finding has important implications for speech and spoken language development in infants who experience a period of profound deafness before receiving auditory input through cochlear implants.

Attention to Speech in Deaf Infants with Cochlear Implants

The review of Jusczyk’s WRAPSA model suggested that attention to speech may be important for normal-hearing infants’ speech perception and word recognition. Attention to speech may be even more important for infants who have difficulty encoding speech due to compromised auditory processing, such as deaf infants with cochlear implants. Congenitally deaf infants develop and interact with the world without auditory input. Until they receive input through a cochlear implant, they are attending to and learning about their environment through their other senses. The addition of auditory input does not necessarily mean that these infants will automatically interpret it as meaningful. Moreover, the electrical signal from cochlear implants is severely impoverished compared to natural acoustic hearing. Because of both the poverty of the auditory input and the experience of interacting with the environment without sound, the attention to speech of deaf infants with cochlear implants may differ substantially from that of infants with normal hearing.

Investigating attention to speech in cochlear-implanted deaf infants can provide insights into the role of early auditory experience in infants’ attention to speech. In addition to these theoretical implications, there are important clinical implications. Suppose deaf infants’ attention to speech after cochlear implantation is reduced compared to that of normal-hearing infants. They may then face a greater challenge in acquiring spoken language than the delay in auditory input and the impoverished signal alone would predict. Another potential clinical implication is that, if attention to speech predicts later language development, assessing it may be useful for tracking the progress deaf infants make in spoken language development after cochlear implantation. Moreover, it will be clinically relevant to determine what types of speech input may increase cochlear-implanted deaf infants’ attention to speech.

Here, we review findings in our lab that address attention to speech in deaf infants after cochlear implantation. We also discuss specific ways that attention to speech may be relevant to this population based in part on the earlier review of the role of attention to speech in normal-hearing infants’ speech perception.

In our first study, we investigated deaf infants’ sustained attention to repeating speech sounds versus silence from 1 day to 18 months after cochlear implantation and compared their performance to that of normal-hearing infants (Houston et al., 2003)¹. Sustained attention was assessed using a modified version of the visual habituation procedure (VHP). The basic idea of the VHP is that infants will attend longer to a simple visual display if what they are hearing is interesting to them (i.e., it captures their attention). This cross-modal measure of auditory attention is a validated and widely used methodology (Horowitz, 1975), and the VHP has been used extensively to assess normal-hearing infants’ speech discrimination (Best et al., 1988; Polka and Werker, 1994). We modified it to assess infants’ sustained attention to speech as well as their ability to discriminate one of two contrasts. The first contrast pitted repetitions of a 4-s continuous /a/ with minimal pitch change (from 217 Hz to 172 Hz) against repetitions of the 269-ms syllable /hap/ separated by 150 ms of silence (“hop hop hop”). In the /a/ stimulus (“ahhh”), no pause was inserted between repetitions, so the break between them was barely detectable. The second contrast pitted repetitions of a 4-s /i/ with rising intonation (167 Hz to 435 Hz) against repetitions of a 4-s /i/ with falling intonation (417 Hz to 164 Hz).

In the VHP, the infant is seated on the caregiver’s lap in front of a TV monitor. During the habituation phase, the infant is presented with the same checkerboard pattern and the same repeating speech sound (e.g., “hop hop hop”) on each trial. The infant’s gaze is monitored through a hidden camera and a monitor in a control room. Each trial continues until the infant looks away from the checkerboard pattern for 1 s or more, or until a maximum looking time (approximately 20 s) is reached. Habituation trials continue until the infant’s looking times decrease enough to meet a habituation criterion. In a typical version of the VHP, every trial in the habituation phase is identical. In our version, half of the trials were silent; that is, the same checkerboard pattern was presented without the repeating speech sound. In every block of four trials, two were “sound trials” and two were “silent trials.” This modification allowed us to obtain a measure of attention to speech: mean looking time to the sound trials minus mean looking time to the silent trials.
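The attention measure described above is simple arithmetic, which the following minimal Python sketch makes explicit. It assumes that each trial is recorded as a (trial type, looking time in seconds) pair. Both the record format and the example looking times are hypothetical and are not data from the study.

```python
from statistics import mean
from typing import List, Tuple

# Hypothetical record format: (trial type, looking time in seconds),
# where trial type is "sound" or "silent".
Trial = Tuple[str, float]


def attention_to_speech(trials: List[Trial]) -> float:
    """Mean looking time on sound trials minus mean looking time on silent trials."""
    sound = [t for kind, t in trials if kind == "sound"]
    silent = [t for kind, t in trials if kind == "silent"]
    if not sound or not silent:
        raise ValueError("need at least one sound trial and one silent trial")
    return mean(sound) - mean(silent)


# Illustrative (made-up) block of four trials: two sound, two silent.
block = [("sound", 12.0), ("silent", 8.0), ("sound", 10.0), ("silent", 6.0)]
print(attention_to_speech(block))  # 4.0
```

In this example, the sound trials average 11 s and the silent trials average 7 s, so the score is 4 s. A score near zero would indicate no more attention to speech than to silence.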

Infants reached habituation when their mean looking time during a block of four trials was 50% or less of their mean looking time during the first block. Following the habituation phase, infants were presented with two test trials, one with the same repeating speech sound (e.g., “hop hop hop”) and one with a novel speech sound (e.g., “ahhh”), both accompanied by the same checkerboard pattern. Infants showed discrimination of the “hop hop hop” versus “ahhh” contrast but not of the intonational contrast. Infants discriminated one of the contrasts, and all of the stimuli were presented at the same intensity (70 ± 5 dB SPL). Together, these facts suggest that the stimuli were sufficiently above the infants’ hearing thresholds to be attended to. However, the focus of this paper is on the attention results, so the discrimination results will not be discussed further.
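The habituation criterion can be expressed as a short check. The following Python sketch assumes non-overlapping blocks of four consecutive trials, with sound and silent trials pooled, each compared against the first block. Some habituation procedures use sliding windows instead, so the blocking scheme is an assumption here, as are the example looking times.

```python
from statistics import mean
from typing import List, Optional


def habituation_block(looking_times: List[float],
                      block_size: int = 4,
                      criterion: float = 0.5) -> Optional[int]:
    """Return the index of the first block whose mean looking time is at most
    `criterion` times the first block's mean, or None if no block qualifies.

    Assumes non-overlapping blocks of consecutive trials, with sound and silent
    trials pooled; an incomplete final block is ignored.
    """
    n_blocks = len(looking_times) // block_size
    blocks = [looking_times[i * block_size:(i + 1) * block_size]
              for i in range(n_blocks)]
    if len(blocks) < 2:
        return None
    baseline = mean(blocks[0])
    for index, block in enumerate(blocks[1:], start=1):
        if mean(block) <= criterion * baseline:
            return index
    return None


# Illustrative looking times in seconds (not data from the study).
times = [16, 14, 18, 12, 10, 9, 11, 8, 7, 6, 8, 7]
print(habituation_block(times))  # 2 (third block: mean 7.0 <= 0.5 * 15.0)
```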

Houston et al. (2003) compared normal-hearing 6-month-olds with deaf infants who had 6 months of cochlear implant experience and whose implants had been switched on between 7 and 24 months of age. The normal-hearing infants showed a significantly greater looking-time preference for the sound over the silent trials than the implanted infants did. These findings suggest that implanted infants’ sustained attention to speech is reduced compared to that of their hearing-age-matched peers. Infants with cochlear implants showed even less attention to speech at earlier post-implantation intervals. A follow-up report found that implanted 13- to 30-month-olds’ attention to speech is more similar to that of chronologically age-matched normal-hearing infants (Houston, 2009). Taken together, these findings lead to somewhat mixed conclusions. On the one hand, attention comparable to that of chronologically age-matched peers may suggest that implanted infants’ attention to speech is developing normally. On the other hand, attention to speech during the first year of exposure may be important for developing the weighting scheme and pattern extraction procedures (Jusczyk, 1997). If so, implanted infants’ reduced attention relative to hearing-age-matched peers may matter more than their similarity to chronologically age-matched peers.

If attention to speech is important for cochlear-implanted infants’ development of speech perception skills, then we would expect their attention to speech to relate to a later measure of a language skill closely tied to speech perception. Houston (2009) reported that deaf infants’ attention to speech 6 months after implantation correlated significantly with their performance on a word recognition task two to three years later. These findings are consistent with the possibility that more attention to speech leads to better speech perception skills. However, they do not rule out the possibility that the causal direction runs the other way: better speech perception may result in more attention to speech. We will explore this question in future work.

Infants with cochlear implants may show reduced attention to repetitions of isolated syllables and vowels. However, their attention may be better when they are presented with speech that is more natural and meaningful. Two ways to achieve this are to use stimuli that consist of continuous discourse rather than individual sounds or words, and stimuli that feature the prosody associated with ID speech. Bergeson, Houston, Spisak, and Caffrey (in preparation) asked whether continuous speech (relative to silence) and ID speech (relative to AD speech) would increase attention in infants with cochlear implants. They compared attention to a passage spoken in ID and AD registers with attention to silent trials in infants with profound deafness over the first year after implantation. We also compared the behavior of infants with cochlear implants to that of normal-hearing infants matched by chronological age. Using a visual preference procedure, we measured attention to the speech and silent trials as looking time to a checkerboard pattern in each condition.

The results of this study showed that normal-hearing infants initially preferred ID to AD speech, but this preference was reversed after approximately one year. As expected, they also preferred both speech conditions to silent trials. Profoundly deaf infants, by contrast, initially showed no preference among the conditions, but after a year they developed a preference for ID speech over both the AD speech and silent conditions. Surprisingly, they did not attend more to AD speech than to silence even after one year of implant experience. This suggests that deaf infants with cochlear implants do not naturally attend to speech, even though previous research has shown that stimuli in such experiments are audible enough to support speech sound discrimination (Houston et al., 2003). Moreover, infants with cochlear implants appear to require extensive hearing experience before displaying an attentional preference for ID over AD speech. One possible explanation is that implant technology transmits pitch information quite poorly, so the cues of pitch height and exaggeration are likely less salient to these infants than to normal-hearing infants. It could also be the case that it takes time to learn the associations between maternal affect and ID speech characteristics. Regardless of the reasons, the results of this study demonstrate that deaf infants with cochlear implants do not attend to speech in the same ways that normal-hearing infants do, which has important implications for speech perception and spoken language development.

How Might Attention to Speech Affect Infants with Cochlear Implants?

According to Jusczyk’s WRAPSA model, the more words encoded into the lexicon, the more accurate infants will be at word recognition. Jusczyk also posited that attention to speech was important for encoding representations into memory. Thus, the finding that attention to speech is correlated with later word recognition (Houston, 2009) is consistent with WRAPSA’s predictions. As reviewed above, attention to speech was important for several components of the WRAPSA model. We will now revisit that model briefly so as to have a framework to speculate about other ways in which reduced attention to speech may impact cochlear-implanted infants’ spoken language development.

Initial encoding

WRAPSA posits that the initial encoding is automatic, but it also assumes normal hearing. We do not know if the mechanisms that are automatic for normal-hearing infants and adults will necessarily be automatic (i.e., not attention-demanding) for deaf infants (or even adults) who use cochlear implants. It is possible that even picking up information by the auditory analyzers could be attention-demanding for congenitally deaf infants with cochlear implants. This would depend on whether suprathreshold hearing is automatic in this population.

Weighting system and pattern extraction

According to Jusczyk’s WRAPSA model, the components of the speech perception system after the initial analyzers are shaped by encoding the sound patterns of words (or word candidates) into memory. The level of detail of the encoding depends, in part, on whether or not sustained attention is allocated to the task. All else being equal, infants who attend more to speech should form more representations than infants who attend less, and their representations should be more detailed, affording them more information from which to shape the weighting and pattern extraction systems. Likewise, if deaf infants with cochlear implants attend less to speech than normal-hearing infants, then there may be additional delays in shaping these systems.

Given the possible role that attention and encoding may play in shaping basic components of speech perception, it is worth revisiting the implications of Houston et al.’s (2003) finding that infants with cochlear implants attend much less to speech than their hearing-age-matched peers. It may come as no surprise that older infants with cochlear implants behave differently from infants who have the same hearing age but are younger. This is especially so given evidence that they behave similarly to their chronologically age-matched normal-hearing peers (Houston, 2009). However, at any given chronological age, what normal-hearing infants can potentially learn from nonsensical speech is likely to be very different from what infants with cochlear implants could learn from it. The normal-hearing infants who served as chronologically age-matched controls were well past the stages of development in which, according to the WRAPSA model, infants do most of the work of shaping their perceptual systems. Normal-hearing infants of that age thus have little to gain from listening to repetitions of “hop hop hop” and “ahhh.” Deaf infants with less than a year of implant experience, by contrast, are likely still shaping their perceptual systems. It is possible, then, that to avoid falling further behind, they would need to attend to speech like their hearing-age-matched peers rather than their chronologically age-matched peers. This does not seem to be the case. That finding warrants further research into how their attention to speech differs from that of hearing-age-matched peers, and into how any such differences may influence early speech perception development.

The stimuli in the Houston et al. (2003) study were not meaningful, whereas the stimuli in Bergeson et al. (in preparation) were. This difference may help explain why infants with 6 months of cochlear implant experience attended to speech like chronologically age-matched normal-hearing infants in the former study but not in the latter. The stimuli in the Bergeson et al. study were semantically meaningful, which may have made them more interesting to the normal-hearing infants than the stimuli in the Houston et al. study were. Taken together, both studies suggest that implanted infants as a group may not be attending to speech enough to keep pace with normal-hearing infants in shaping the weighting scheme and pattern extraction system. As a result, we would expect delays beyond those attributable to later access to sound. These delays would appear in speech segmentation and in other speech perception tasks that involve shaping from the input. Examples include preference for the rhythmic properties of the ambient language (Jusczyk et al., 1993) and language-specific speech discrimination (Best et al., 1988; Werker and Tees, 1984).

Reduced attention to speech may cause delays through forming fewer and less detailed representations. It may also result in poorer online speech segmentation, even after sufficient learning of language-specific properties has taken place. Segmentation may remain poorer because it involves integrating multiple cues. By Nusbaum and Magnuson’s (1997) criteria for what requires active mechanisms, this means that segmentation involves active, attention-demanding cognitive processes even for adults. We would therefore expect attention to be even more necessary for infant speech segmentation.

Word recognition and learning

As noted earlier, WRAPSA predicts that sustained attention affects word recognition because of its role in extracting and encoding word candidates. Consistent with that prediction, research on adult word recognition suggests that it is an attention-demanding process (Magnuson & Nusbaum, 2007). These findings, combined with WRAPSA’s predictions, raise the possibility that reduced attention to speech may make word recognition more difficult for children with cochlear implants. Houston (2009) found a correlation between early measures of attention to speech and later word recognition skills. This finding is consistent with attention to speech playing a role in word recognition in children with cochlear implants. Likewise, work by others indicates that word learning involves integrating a wide array of information (e.g., Hollich et al., 2000). This suggests that attention is important for word learning, and that word learning may prove difficult for children with cochlear implants who have reduced attention to speech.

Maternal Speech to Infants with Cochlear Implants

Reduced attention to speech in infants with cochlear implants could also affect their caregivers’ speech characteristics. ID speech seems to rely in part on the mutually reinforcing roles of both interaction partners. If the infant does not pay special attention to ID over AD speech, or even over silence, the lack of reinforcement could well lead caregivers to use less ID speech (e.g., Wedell-Monnig and Lumley, 1980). In a recent study (Bergeson et al., 2006), we examined mothers’ speech to three groups of infants. The first group consisted of 10- to 37-month-old infants with hearing loss who had used a cochlear implant for 3–18 months. The second consisted of normal-hearing infants matched by hearing experience (3–18 months of age), and the third of normal-hearing infants matched by chronological age (10–37 months of age). We instructed the mothers (all normal-hearing) to speak to their infants as they normally would at home. We also recorded the mothers in a semi-structured interview with an adult experimenter to obtain baseline measures of their speech. We measured prosodic characteristics such as pitch, utterance duration, and speaking rate in both ID and AD conditions.

The results of the Bergeson et al. (2006) study revealed that mothers in all three groups spoke to their infants in an ID speech register and to adults in an AD speech register. Moreover, mothers of infants with cochlear implants adjusted the prosodic features of their speech in ways more similar to mothers of normal-hearing infants matched by hearing experience than to mothers of infants matched by chronological age. This suggests that mothers spontaneously tailor their speech to match the hearing skills (and presumably attentiveness) of their infants. Consider a 12-month-old infant who had used a cochlear implant for 4 months. That infant’s mother would speak using very high pitch (relative to AD speech), similar to the pitch used by mothers of normal-hearing 4-month-olds rather than by mothers of normal-hearing 12-month-olds. Thus, mothers intuitively provide infants with cochlear implants with the prosodic characteristics of speech that are known to attract and maintain the attention of normal-hearing infants.

Do mothers of deaf infants with cochlear implants also highlight linguistically relevant components of speech for their infants? Previous research has shown that mothers’ speech to normal-hearing children has an expanded vowel space relative to their AD speech (Burnham et al., 2002; Kuhl et al., 1997), and this expansion has been linked to enhanced speech perception skills later in development (Liu et al., 2003). We are currently investigating mothers’ use of such vowel hyperarticulation when speaking with their hearing-impaired infants who use a cochlear implant (Dilley and Bergeson, 2010). We used the recordings described above of mothers’ speech to their infants and to an adult experimenter. From these, we compared mothers’ vowel spaces in speech to deaf infants at 3 and 6 months post-amplification with their vowel spaces in speech to normal-hearing infants matched by chronological age and hearing experience. We measured the first formant, second formant, and fundamental frequency for the point vowels /i/, /a/, and /u/ in stressed syllables, and used the resulting vowel triangle areas for group comparisons.
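A vowel triangle area is the area of the triangle formed by the three point vowels in F1 × F2 space. It can be computed with the shoelace formula, as in the following Python sketch. We assume the area is taken over mean F1 and F2 values only, since fundamental frequency does not enter the triangle. The formant values and the 20% expansion about the centroid are illustrative and are not measurements from the study. Expressing ID expansion as an ID-to-AD area ratio is likewise only one possible summary.

```python
from typing import Dict, Tuple

# Vowel -> (F1 in Hz, F2 in Hz), e.g., averaged over stressed syllables.
Formants = Dict[str, Tuple[float, float]]


def vowel_triangle_area(formants: Formants) -> float:
    """Area (Hz^2) of the /i/-/a/-/u/ triangle in F1 x F2 space (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = (formants[v] for v in ("i", "a", "u"))
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def vowel_space_expansion(id_speech: Formants, ad_speech: Formants) -> float:
    """Ratio above 1 means the ID vowel space is expanded relative to AD speech."""
    return vowel_triangle_area(id_speech) / vowel_triangle_area(ad_speech)


# Illustrative formant values, not measurements from the study.
ad = {"i": (310.0, 2790.0), "a": (850.0, 1220.0), "u": (370.0, 950.0)}
# Hypothetical ID speech: each vowel pushed 20% farther from the triangle's centroid.
cx = sum(f1 for f1, _ in ad.values()) / 3
cy = sum(f2 for _, f2 in ad.values()) / 3
id_ = {v: (cx + 1.2 * (f1 - cx), cy + 1.2 * (f2 - cy)) for v, (f1, f2) in ad.items()}

print(vowel_triangle_area(ad))                   # 449700.0
print(round(vowel_space_expansion(id_, ad), 2))  # 1.44
```

Because area scales with the square of linear distance, pushing every point vowel 20% farther from the centroid enlarges the triangle by a factor of 1.2² = 1.44.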

Preliminary results of Dilley and Bergeson’s (2010) study showed that mothers’ vowel spaces were, on average, expanded when they spoke to their hearing-impaired infants with cochlear implants relative to when they spoke to another adult. However, individual variation was considerable: some mothers produced a high degree of vowel expansion, whereas others produced little to none. In other words, some mothers of deaf infants with cochlear implants are providing exaggerated cues to the differences among vowel categories, which would likely help direct their infants’ attention to those categories. Future studies will examine whether the variation in mothers’ use of vowel hyperarticulation is related to later speech perception skills in deaf infants with cochlear implants.

Finally, previous studies have shown that mothers use prosodic cues to highlight clause boundaries (e.g., Bernstein Ratner, 1986), and that infants perceive clause boundaries more easily when listening to ID than to AD speech (Kemler Nelson et al., 1989). We recently completed a study that investigated mothers’ use of clause boundary cues (pitch change, preboundary vowel lengthening, and postboundary pause duration) in speech to deaf infants before they received a cochlear implant and 6 months after implantation, as well as in speech to normal-hearing infants matched by chronological age and by hearing experience (Kondaurova & Bergeson, 2011). As in the previously described studies, we found that mothers produced exaggerated prosodic cues to clause boundaries in ID speech regardless of the infants’ hearing status. We also found that mothers of the infants with cochlear implants tailored at least one of the clause boundary cues (preboundary vowel lengthening) to the infants’ hearing experience rather than to their chronological age.

Taken together, these findings reveal that normal-hearing mothers use typical ID speech characteristics such as high pitch, vowel space expansion, and exaggerated cues to clause boundaries when interacting with their hearing-impaired infants who use cochlear implants. Moreover, mothers naturally adjust their speech characteristics to fit the needs of their infants according to the infants’ hearing experience. In other words, the auditory environment of infants with cochlear implants is, in many cases, set up in a way that attracts and maintains their attention to speech and directs their attention to specific linguistic components in speech. We are currently investigating whether these and other characteristics of ID speech are related to the development of speech and spoken language skills in deaf infants as they gain hearing experience with a cochlear implant.

Future Directions

The purpose of this manuscript was to explore specific aspects of early language acquisition that may be affected by attention to speech.

Another important direction is to use more sophisticated measures of attention. Looking time has long been the standard measure of infant attention. However, an infant’s arousal state can vary substantially while looking at an object or event. As reviewed earlier, Colombo found that infants who spend a greater proportion of their looking time to an object in a state of sustained attention, as indexed by continued heart rate deceleration, later show higher vocabulary levels than infants who spend a relatively greater proportion of their looking time in states of orienting or attention termination. Obtaining heart rate measures during exposure to speech would provide more detailed information about the degree to which infants with cochlear implants attend to speech.
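As an illustration of how such heart rate data might be summarized, each heartbeat recorded during a speech trial could be labeled as falling inside or outside a period of sustained attention, and the share of trial time spent in sustained attention could then be computed. The Python sketch below rests on a simplified, hypothetical criterion: deceleration is defined relative to the median interbeat interval (IBI) of a prestimulus baseline, sustained attention begins with a run of five consecutive IBIs longer than that median, and it ends with a run of five consecutive IBIs at or below it. The run length, the median threshold, and the function names are assumptions made for illustration. The sketch also does not distinguish orienting from attention termination; both are treated as "not sustained".

```python
from statistics import median
from typing import List, Sequence


def label_sustained_attention(baseline_ibis: Sequence[float],
                              trial_ibis: Sequence[float],
                              run_length: int = 5) -> List[bool]:
    """Label each trial beat True if it falls in a sustained-attention phase.

    Hypothetical criterion: an IBI longer than the baseline median means a
    slower heart rate (deceleration); a run of `run_length` such beats starts
    sustained attention, and a run of `run_length` non-decelerated beats ends it.
    """
    threshold = median(baseline_ibis)
    labels = [False] * len(trial_ibis)
    in_sustained = False
    run_start = None  # index where the current state-switching run began

    for idx, ibi in enumerate(trial_ibis):
        decelerated = ibi > threshold
        # A beat pushes toward a switch when it disagrees with the current state.
        if decelerated != in_sustained:
            if run_start is None:
                run_start = idx
            if idx - run_start + 1 == run_length:
                # Criterion met: date the new phase from the run's first beat.
                in_sustained = not in_sustained
                for j in range(run_start, idx + 1):
                    labels[j] = in_sustained
                run_start = None
                continue
        else:
            run_start = None  # run broken; the current state continues
        labels[idx] = in_sustained

    return labels


def sustained_attention_proportion(baseline_ibis: Sequence[float],
                                   trial_ibis: Sequence[float],
                                   run_length: int = 5) -> float:
    """Share of trial time (summed IBIs) spent in sustained attention."""
    labels = label_sustained_attention(baseline_ibis, trial_ibis, run_length)
    total = sum(trial_ibis)
    if total == 0:
        return 0.0
    return sum(ibi for ibi, sustained in zip(trial_ibis, labels) if sustained) / total
```

Weighting each beat by its IBI makes the result a proportion of time rather than of beats, which is closer to the "proportion of looking time" measure described above.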

To understand how the nature of the input affects attention to speech after cochlear implantation, it will be important to examine that speech within the broader context of social interaction. Do parents of children with cochlear implants engage their infants with pointing gestures, eye gaze, and other nonverbal cues in the same way that parents of normal-hearing infants do? And how do these cues influence implanted infants’ attention to speech?

Concluding Remarks

Acquiring spoken language is easy for normal-hearing, typically developing children. This may be partly because they naturally attend to speech; moreover, the people in their environment naturally provide them with the kind of speech they are most interested in. It may be because attention to speech is, in a sense, always there for normal-hearing infants that the development of that attention and its role in language acquisition have received little investigation.

The story is quite different when we think about the language development of congenitally deaf infants who receive cochlear implants. Because they develop for a period without sound, we cannot assume that, once they gain access to sound, they will automatically attend to it the way normal-hearing infants do. Indeed, the evidence reviewed here suggests that they do not. This forces us to examine more carefully the role that attention to speech plays in language acquisition if we are to fully understand the challenges children with cochlear implants face in learning spoken language.

Reviewing Jusczyk’s WRAPSA model component by component makes it evident that reduced attention to speech may affect early language development in several ways, from shaping basic components of the perceptual system to learning associations between the sound patterns of words and their referents. The possibility that reduced attention to speech may add to the difficulties deaf infants face in learning spoken language makes the relationship between their attention to speech and their language development a pressing and clinically relevant topic of investigation. Moreover, it is important to further investigate how the input directed at infants with cochlear implants affects their attention, so that we can better understand what the optimal input for their acquisition of spoken language might be.

Highlights.

We examine the role of sustained attention to speech in early speech perception development.

We examine how the characteristics of speech directed to infants influence their attention to speech.

We discuss the possibility that infants who receive cochlear implants may have reduced attention to speech.

We discuss potential consequences of reduced attention to speech for deaf infants who receive cochlear implants.

Acknowledgments

Preparation of this manuscript was supported in part by NIH Research Grants R01-DC006235 (DMH) and R01-DC008581 (TRB). The authors would like to thank Elizabeth Johnson for helpful feedback on a previous version of this manuscript.

Footnotes

Houston et al. (2003) reported preliminary findings. A final report is in preparation.


Contributor Information

Derek M. Houston, Department of Otolaryngology – Head & Neck Surgery, Indiana University School of Medicine

Tonya R. Bergeson, Department of Otolaryngology – Head & Neck Surgery, Indiana University School of Medicine

References

- Baudhuin J, Cadieux J, Firszt JB, Reeder RM, Maxson JL. Optimization of programming parameters in children with the Advanced Bionics cochlear implant. Journal of the American Academy of Audiology. 2012;23(5):302–12. doi: 10.3766/jaaa.23.5.2.

- Bergeson TR, Miller RJ, McCune K. Mothers’ speech to hearing-impaired infants with cochlear implants. Infancy. 2006;10:221–240.

- Bergeson TR, Pisoni DB, Davis RAO. Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. Ear & Hearing. 2005;26:149–164. doi: 10.1097/00003446-200504000-00004.

- Bergeson TR, Trehub SE. Absolute pitch and tempo in mothers’ songs to infants. Psychological Science. 2002;13:72–75. doi: 10.1111/1467-9280.00413.

- Bernstein Ratner N. Durational cues which mark clause boundaries in mother-child speech. Journal of Phonetics. 1986;14:303–309.

- Best CT, McRoberts GW, Sithole NM. Examination of perceptual reorganization for nonnative speech contrasts: Zulu click discrimination by English-speaking adults and infants. Journal of Experimental Psychology: Human Perception and Performance. 1988;14:345–360. doi: 10.1037//0096-1523.14.3.345.

- Bortfeld H, Morgan JL, Golinkoff RM, Rathbun K. Mommy and me: Familiar names help launch babies into speech-stream segmentation. Psychological Science. 2005;16(4). doi: 10.1111/j.0956-7976.2005.01531.x.

- Burkholder RA, Pisoni DB. Speech timing and working memory in profoundly deaf children after cochlear implantation. Journal of Experimental Child Psychology. 2003;85:63–88. doi: 10.1016/S0022-0965.

- Burnham D, Kitamura C, Vollmer-Conna U. What’s new, pussycat? On talking to babies and animals. Science. 2002;296:1435. doi: 10.1126/science.1069587.

- Cheour M, Martynova O, Näätänen R, Erkkola R, Sillanpää M, Kero P, Raz A, Kaipio ML, Hiltunen J, Aaltonen O, Savela J, Hämäläinen H. Speech sounds learned by sleeping newborns. Nature. 2002;415:599–600. doi: 10.1038/415599b.

- Cherry EC. Some experiments on the recognition of speech, with one and with two ears. Journal of the Acoustical Society of America. 1953;25:975–979.

- Cleary M, Pisoni DB, Geers AE. Some measures of verbal and spatial working memory in eight- and nine-year-old hearing-impaired children with cochlear implants. Ear and Hearing. 2001;22(5):395–411. doi: 10.1097/00003446-200110000-00004.

- Cole EB, Flexer CA. Children with hearing loss: Developing listening and talking, birth to six. San Diego, CA; 2011. xi, 434 p.

- Colombo J. The development of visual attention in infancy. Annual Review of Psychology. 2001;52:337–367. doi: 10.1146/annurev.psych.52.1.337.

- Colombo JA, Bundy RS. A method for the measurement of infant auditory selectivity. Infant Behavior and Development. 1981;4:219–223.

- Conway CM, Pisoni DB, Anaya EM, Karpicke J, Henning SC. Implicit sequence learning in deaf children with cochlear implants. Developmental Science. 2011;14(1):69–82. doi: 10.1111/j.1467-7687.2010.00960.x.

- Cooper RP, Abraham J, Berman S, Staska M. The development of infants’ preference for motherese. Infant Behavior and Development. 1997;20:477–488.

- Cooper RP, Aslin RN. Preference for infant-directed speech in the first month after birth. Child Development. 1990;61:1584–1595.

- Corteen RS, Dunn D. Shock-associated words in a nonattended message: A test for momentary awareness. Journal of Experimental Psychology. 1974;102:1143–1144.

- Cutler A, Carter DM. The predominance of strong initial syllables in the English vocabulary. Computer Speech and Language. 1987;2:133–142.

- Cutler A, Norris D. The role of strong syllables in segmentation for lexical access. Journal of Experimental Psychology: Human Perception & Performance. 1988;14:113–121.

- DeCasper AJ, Fifer WP. Of human bonding: Newborns prefer their mother’s voices. Science. 1980;208:1174–11. doi: 10.1126/science.7375928.

- Dettman SJ, Pinder D, Briggs RJ, Dowell RC, Leigh JR. Communication development in children who receive the cochlear implant younger than 12 months: Risks versus benefits. Ear and Hearing. 2007;28(2 Suppl):11S–18S. doi: 10.1097/AUD.0b013e31803153f876.

- Dilley L, Bergeson TR. Mothers’ use of exaggerated vowel space in speech to hearing-impaired infants with hearing aids or cochlear implants. 11th International Conference on Cochlear Implants and Other Implantable Auditory Technologies; Stockholm, Sweden. 2010.

- Ferguson CA. Baby talk in six languages. American Anthropologist. 1964;66:103–114.

- Fernald A. Four-month-old infants prefer to listen to motherese. Infant Behavior and Development. 1985;8:181–195.

- Fernald A. Intonation and communicative intent in mothers’ speech to infants: Is the melody the message? Child Development. 1989;60:1497–1510.

- Fernald A. Prosody in speech to children: Prelinguistic and linguistic functions. Annals of Child Development. 1991;8:43–80.

- Fernald A. Meaningful melodies in mothers’ speech to infants. In: Papoušek H, Juergens U, Papoušek M, editors. Nonverbal vocal communication: Comparative and developmental approaches. 1992. pp. 262–282.

- Fernald A, Simon T. Expanded intonation contours in mothers’ speech to newborns. Developmental Psychology. 1984;20:104–113.

- Fifer WP, Moon CM. The effects of fetal experience with sound. In: Lecanuet J, Fifer WP, Krasnegor NA, Smotherman WP, editors. Fetal development: A psychobiological perspective. Lawrence Erlbaum Associates; Hillsdale, NJ: 1995. pp. 351–366.

- Fischer C, Luauté J. Evoked potentials for the prediction of vegetative state in the acute stage of coma. Neuropsychological Rehabilitation. 2005;15:372–380. doi: 10.1080/09602010443000434.

- Francis AL, Nusbaum HC. Effects of intelligibility on working memory demand for speech perception. Attention, Perception, & Psychophysics. 2009;71:1360–1374. doi: 10.3758/APP.71.6.1360.

- Frick JE, Richards JE. Individual differences in infants’ recognition of briefly presented visual stimuli. Infancy. 2001;2:331–352. doi: 10.1207/S15327078IN0203_3.