Abstract

Purpose

Despite the endorsement of several quality measures for prostate cancer by the National Quality Forum and the Physician Consortium for Performance Improvement, how consistently physicians adhere to these measures has not been examined. We evaluated regional variation in adherence to these quality measures in order to identify targets for future quality improvement.

Materials and Methods

For this retrospective cohort study, we used Surveillance, Epidemiology, and End Results (SEER) – Medicare data for 2001–2007 to identify 53,614 patients with newly diagnosed prostate cancer. Patients were assigned to 661 regions (Hospital Service Areas [HSAs]). Hierarchical generalized linear models were used to examine reliability-adjusted regional adherence to the endorsed quality measures.

Results

Adherence at the patient level was highly variable, ranging from 33% for treatment by a high-volume provider to 76% for receipt of adjuvant androgen deprivation therapy while undergoing radiotherapy for high-risk cancer. Additionally, there was considerable regional variation in adherence to several measures, including pretreatment counseling by both a urologist and radiation oncologist (range 9% to 89%, p<0.001), avoiding overuse of bone scans in low-risk cancer (range 16% to 96%, p<0.001), treatment by a high-volume provider (range 1% to 90%, p<0.001), and follow-up with radiation oncologists (range 14% to 86%, p<0.001).

Conclusions

We found low adherence rates for most established prostate cancer quality of care measures. Within most measures, regional variation in adherence was pronounced. Measures with low adherence and a large amount of regional variation may be important low-hanging targets for quality improvement.

Keywords: health services research, prostate cancer, quality improvement, quality of care, small area variation

Introduction

Prostate cancer prevalence will increase by 40% from 2010 to 2020, with costs for prostate cancer care approaching $18 billion by the end of this decade.1 In light of this high prevalence and cost, providing efficient and high-quality prostate cancer care is of utmost importance. To better assess the quality of prostate cancer care, quality measures have been identified based on consensus opinion of prostate cancer professionals and stakeholders, incorporating the available evidence base. This was done most comprehensively in 2000 by RAND.2 More recently, the Physician Consortium for Performance Improvement (PCPI) and the National Quality Forum (NQF) endorsed several quality measures, incorporating some of the RAND measures and an up-to-date evidence base.3,4 Three of these measures have also been included into the Centers for Medicare and Medicaid Services' Physician Quality Reporting System.5

Despite the development and endorsement of these measures, little is known about overall adherence to these established standards of care and about variation in adherence across the United States. One cross-sectional study found low adherence rates to several of these measures, including pretreatment and follow-up care, as well as certain aspects of radiation technique.6 However, this study was limited to hospitals approved by the Commission on Cancer and may therefore not reflect the quality of prostate cancer care across all clinical settings in the United States.6,7 In addition, this study demonstrated significant regional variation in processes of care, but regions were broadly defined as one of nine census divisions.6 To the extent that variation in quality is primarily determined by physicians, who deliver prostate cancer care locally,8 these large regions may not have completely captured variation in quality across the United States.

For these reasons, we used Surveillance, Epidemiology, and End Results (SEER) – Medicare linked data representative of 26% of the United States' population9 to evaluate adherence to established quality of care measures across all clinical settings and to examine the full extent of regional variation in quality of prostate cancer care.

Methods

Study population

We used Surveillance, Epidemiology, and End Results (SEER) – Medicare data to identify patients newly diagnosed with localized prostate cancer between 2001 and 2007.10 To ensure that health status could be assessed for the year preceding the diagnosis, we limited our study to patients 66 years of age and older. Further, only patients in the fee-for-service program eligible for Parts A and B of Medicare for at least 12 months before and after prostate cancer diagnosis were included. We only included patients treated with radical prostatectomy or radiotherapy (Supplemental Table 1) because the endorsed quality measures only apply to these patients.3,4 Using these criteria, our study population consisted of 53,614 patients who were followed with Medicare claims through December 31, 2009.
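As an illustration only, the inclusion criteria above can be expressed as a single eligibility check. This is a minimal sketch: the `Patient` record, its field names, and the treatment labels are hypothetical and do not correspond to actual SEER-Medicare variables or to the code used in the study.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    # All field names are hypothetical; they are not SEER-Medicare variable names.
    age_at_diagnosis: int
    diagnosis_year: int
    localized: bool
    ffs_ab_months_before: int  # months of fee-for-service Parts A and B coverage before diagnosis
    ffs_ab_months_after: int   # months of fee-for-service Parts A and B coverage after diagnosis
    treatment: str             # hypothetical labels, e.g. "radical_prostatectomy", "radiotherapy", "none"


ELIGIBLE_TREATMENTS = {"radical_prostatectomy", "radiotherapy"}


def is_eligible(p: Patient) -> bool:
    """Apply the inclusion criteria described in the text to one patient."""
    return (
        p.localized
        and 2001 <= p.diagnosis_year <= 2007
        and p.age_at_diagnosis >= 66
        and p.ffs_ab_months_before >= 12
        and p.ffs_ab_months_after >= 12
        and p.treatment in ELIGIBLE_TREATMENTS
    )
```

Writing the criteria as one predicate keeps each exclusion step visible and easy to audit against the text.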

Identifying healthcare regions

We divided the SEER registries into healthcare regions using the Hospital Service Area (HSA) boundaries specified by the Dartmouth Atlas.11 Briefly, HSAs represent a collection of ZIP codes in which Medicare patients residing in these areas primarily receive their hospital care.11 Patients were assigned to their respective HSA (n=661) based on the ZIP code of their primary residence.

Measuring quality of care

We used five nationally endorsed RAND and PCPI measures that could be assessed in Medicare claims to ensure a broad view of quality of care (Supplemental Table 2):2,3 (1) the proportion of patients seen by both a urologist and a radiation oncologist between diagnosis and start of treatment (RAND process measure), (2) the proportion of patients with low-risk cancer avoiding receipt of a non-indicated bone scan (PCPI process measure endorsed by NQF, only patients diagnosed in 2004 and later included due to availability of PSA and Gleason grade in dataset), (3) the proportion of patients with high-risk cancer receiving adjuvant androgen deprivation therapy while undergoing radiotherapy (PCPI process measure endorsed by NQF, only patients diagnosed in 2004 and later included due to availability of PSA and Gleason grade in dataset), (4) the proportion of patients treated by a high volume provider (RAND structure measure), and (5) the proportion of patients having at least two follow-up visits with a treating radiation oncologist or urologist (RAND process measure).

Statistical analyses

We described bivariate associations between patient characteristics (age in years, race, comorbidity,12 clinical stage, tumor grade, D'Amico risk group,13 year of diagnosis, socioeconomic status,14 and urban residence) or regional characteristics (number of urologists, number of radiation oncologists, and number of hospital beds per 100,000 men aged 65 and older; Medicare managed care penetration; all obtained from the Health Resources and Services Administration’s Area Resource File) and receipt of care according to the quality measures using chi square tests.

Our primary binary outcomes were whether or not a patient received care according to each of the quality measures described above. We fit a series of hierarchical generalized linear models with a logit link to examine adherence to these measures. These models allowed us to account for the nested structure of our data (i.e., patients nested within HSAs) by introducing an HSA-level random effect.15 The first model used was a random intercept model with no explanatory variables included (empty model). This allowed us to understand the basic partitioning of the data's variability between the patient- and HSA-level.
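For readers who prefer notation, the empty random-intercept model described above can be written as follows. The symbols are our own shorthand and are not taken from the original analysis: $y_{ij}$ indicates whether patient $i$ in HSA $j$ received care adherent to a given measure, $\beta_0$ is the overall intercept, and $u_j$ is the HSA-level random effect.

```latex
\operatorname{logit}\,\Pr(y_{ij} = 1) = \beta_0 + u_j,
\qquad u_j \sim \mathcal{N}\!\left(0, \sigma_u^2\right)
```

Under this formulation, $\sigma_u^2$ summarizes how much the log-odds of adherent care differ between HSAs before any patient or regional characteristics are taken into account.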

We then introduced patient and regional covariates, as well as year of diagnosis (treated as a categorical variable) as fixed effects in an attempt to explain the observed variation. We used Empirical Bayes estimation to calculate the adjusted probability of receiving care adherent to the quality measure for each HSA. This approach accounted for differences in reliability of individual HSA adherence rates resulting from differences in sample size across HSAs. HSAs were then ranked from lowest to highest based on their adjusted adherence rates and these rates (and their associated 95% confidence intervals) were plotted for each quality measure. To examine HSA-level variation for statistical significance, we used a likelihood-ratio test comparing the hierarchical generalized linear models to models without an HSA-level random intercept.
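The idea behind reliability adjustment can be illustrated with a deliberately simplified sketch. The study obtained Empirical Bayes estimates from hierarchical logistic models; the function below instead works directly on the proportion scale and treats the between-region variance as known, so it is an assumption-laden illustration of shrinkage rather than the procedure used here. The function name and its parameters are hypothetical.

```python
def shrink_rate(events: int, n: int, overall_rate: float, between_var: float) -> float:
    """Shrink one region's observed adherence rate toward the overall mean.

    reliability = between-region variance / (between-region variance + sampling variance)
    Small regions have a large sampling variance, hence low reliability, and are
    pulled more strongly toward the overall rate.
    """
    if n <= 0:
        return overall_rate  # no data: fall back on the overall mean
    observed = events / n
    # Binomial sampling variance evaluated at the overall rate (a simplification).
    sampling_var = overall_rate * (1.0 - overall_rate) / n
    total_var = between_var + sampling_var
    if total_var == 0.0:
        return observed  # degenerate case: no variance on either level
    reliability = between_var / total_var
    return reliability * observed + (1.0 - reliability) * overall_rate


# Two regions with the same observed rate (10%) but very different sample sizes.
small_region = shrink_rate(events=1, n=10, overall_rate=0.5, between_var=0.01)
large_region = shrink_rate(events=100, n=1000, overall_rate=0.5, between_var=0.01)
```

In this toy example the small region's estimate moves most of the way toward the overall rate, whereas the large region's estimate stays close to its observed 10%, which is why rankings based on shrunken rates are less dominated by noise in small HSAs.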

To quantify the amount of variation that was due to HSA-level effects, we calculated the intraclass correlation (ICC).16 To quantify the amount of variation at the HSA-level that could be explained by measured patient- and regional characteristics, we calculated the proportional change in variance at the HSA-level, comparing each adjusted model to the prior simpler model.17
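Both quantities are simple functions of the estimated HSA-level variance. The sketch below assumes the latent-variable formulation of the ICC, a common choice for multilevel logistic models in which the patient-level variance is fixed at π²/3; the text does not state which ICC formulation was used, so this choice and the function names are our assumptions.

```python
import math

# Variance of the standard logistic distribution, used as the patient-level
# variance in the latent-variable formulation of the ICC (an assumption here).
LOGISTIC_VAR = math.pi ** 2 / 3


def icc_latent(hsa_var: float) -> float:
    """Share of total latent variance attributable to the HSA level."""
    return hsa_var / (hsa_var + LOGISTIC_VAR)


def hsa_var_from_icc(icc: float) -> float:
    """Invert icc_latent: HSA-level variance implied by a given ICC (0 <= icc < 1)."""
    return icc * LOGISTIC_VAR / (1.0 - icc)


def proportional_change_in_variance(var_simpler: float, var_adjusted: float) -> float:
    """Fraction of the simpler model's HSA-level variance explained by the added covariates."""
    return (var_simpler - var_adjusted) / var_simpler
```

For example, `proportional_change_in_variance(2.0, 1.5)` returns 0.25, meaning that the added covariates would account for a quarter of the HSA-level variance in that hypothetical comparison.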

We assessed whether HSAs performing well in one quality measure also performed well in other measures by first categorizing HSAs into those with the best (top 20% ranking), intermediate (middle 60% ranking), or worst (bottom 20% ranking) performance for each quality measure.18 We then calculated the proportion of high-performing HSAs for one quality measure that were also high-performing (in top 20%) for other quality measures.
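The overlap calculation can be sketched in a few lines. The function names, the tie-breaking rule, and the rounding of the group size are illustrative assumptions; the text does not specify how ties or non-integer cut-offs were handled.

```python
def top_fraction(rates: dict[str, float], fraction: float = 0.2) -> set[str]:
    """Return the regions whose rate ranks in the top `fraction` (ties broken by region name)."""
    k = max(1, round(len(rates) * fraction))
    ranked = sorted(rates, key=lambda region: (-rates[region], region))
    return set(ranked[:k])


def overlap_share(
    rates_a: dict[str, float], rates_b: dict[str, float], fraction: float = 0.2
) -> float:
    """Share of top performers on measure A that are also top performers on measure B."""
    top_a = top_fraction(rates_a, fraction)
    top_b = top_fraction(rates_b, fraction)
    return len(top_a & top_b) / len(top_a)


# Toy example with ten made-up regions; the rates are not study data.
measure_a = {f"r{i}": i / 10 for i in range(10)}  # r9 and r8 rank highest
measure_b = {f"r{i}": 0.5 for i in range(10)}
measure_b["r9"] = 0.9
measure_b["r0"] = 0.8                             # r9 and r0 rank highest
share = overlap_share(measure_a, measure_b)
```

If performance on two measures were unrelated, roughly 20% of the top performers on one would be expected to fall in the top 20% on the other, which gives a baseline against which the observed shares can be read. Both rate dictionaries are assumed to be non-empty.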

We performed all analyses using Stata (version 12MP) and SAS (version 9.3). All tests were 2-tailed, and we set the probability of a Type 1 error at 0.05 or less. The University of Michigan Medical School Institutional Review Board exempted this study from review.

Results

Patient and regional characteristics and their bivariate associations with quality measures are summarized in Table 1. Unadjusted adherence to the quality measures ranged from 32.9% for treatment by a high-volume provider to 76.8% for use of adjuvant androgen deprivation therapy among high-risk prostate cancer patients treated with radiation. Adherence rates varied according to several patient and regional characteristics (Table 1). For example, with increasing age, more patients were being seen by both a urologist and radiation oncologist and were receiving recommended adjuvant androgen deprivation therapy. Regional density of urologists and radiation oncologists was associated with higher adherence to recommended follow-up care.

Table 1.

Adherence to quality measures stratified by demographics and clinical characteristics of the study population. Quality measures: (1) proportion of patients seen by both a urologist and a radiation oncologist between diagnosis and start of treatment (“seen both”), (2) proportion of patients with low-risk cancer avoiding receipt of a non-indicated bone scan (only patients diagnosed 2004–2007 included, “bone scan”), (3) proportion of patients with high-risk cancer receiving adjuvant androgen deprivation therapy while undergoing radiotherapy (only patients diagnosed 2004–2007 included, “adjuvant ADT”), (4) proportion of patients treated by a high volume provider (“high-volume provider”), (5a) proportion of patients having at least two follow-up visits with a treating radiation oncologist (“f/u with radonc”), (5b) proportion of patients having at least two follow-up visits with a treating urologist (“f/u with urologist”).

Abbreviations: radonc: radiation oncologist; nr: not reported because of low numbers (<100) in the denominator; na: not applicable

Columns (1) to (5b) give the % of patients receiving care adherent to the quality measure.

| Characteristics | N | (1) seen both | (2) bone scan | (3) adjuvant ADT | (4) high-volume provider | (5a) f/u with radonc | (5b) f/u with urologist |

|---|---|---|---|---|---|---|---|

| Overall | 53,614 | 51.2 | 65.9 | 76.8 | 32.9 | 53.4 | 66.3 |

| Patient characteristics | |||||||

| Age, years | |||||||

| 66–69 | 17,768 | 40.9* | 68.5* | 72.6* | 32.4 | 55.9* | 65.5 |

| 70–74 | 19,656 | 51.9* | 65.3* | 75.6* | 33.1 | 54.6* | 67.7 |

| 75–79 | 11,984 | 61.1* | 63.8* | 77.9* | 33.4 | 52.1* | 68.7 |

| 80–84 | 3,599 | 63.9* | 58.4* | 81.2* | 32.7 | 47.3* | nr |

| 85+ | 607 | 58.5* | 68.4* | 88.4* | 29.9 | 40.1* | nr |

| Race/ethnicity | |||||||

| White | 45,796 | 51.6** | 66.5** | 76.4 | 33.2** | 53.9** | 65.7 |

| Black | 3,857 | 47.4** | 60.5** | 77.7 | 32.9** | 52.4** | 71.7 |

| Hispanic | 1,042 | 47.8** | 63.7** | 83.5 | 23.3** | 49.4** | 70.2 |

| Asian | 1,492 | 50.0** | 70.6** | 80.0 | 31.5** | 48.8** | 69.8 |

| Other/unknown | 1,427 | 50.2** | 58.6** | 78.0 | 32.4** | 47.1** | 67.9 |

| Comorbidity | |||||||

| 0 | 36,873 | 49.7* | 67.2* | 76.4 | 33.1 | 54.0* | 65.6 |

| 1 | 11,567 | 53.7* | 63.7* | 76.8 | 32.5 | 53.6* | 69.3 |

| 2 | 3,383 | 54.4* | 63.2* | 79.0 | 33.2 | 49.5* | 68.9 |

| 3+ | 1,791 | 58.6* | 57.9* | 77.6 | 30.7 | 48.1* | 60.1 |

| Clinical stage | |||||||

| T1 | 25,902 | 50.6* | 66.1 | 80.3 | 35.3* | 54.6* | 65.1 |

| T2 | 26,138 | 51.4* | 65.3 | 72.5 | 31.1* | 52.9* | 67.8 |

| T3 | 1,328 | 61.8* | na | 90.0 | 23.3* | 42.4* | 64.0 |

| T4 | 159 | 54.1* | na | 92.4 | 20.1* | 34.3* | nr |

| Tumor grade | |||||||

| Well/moderately differentiated | 30,436 | 51.6 | 66.2** | 54.2** | 33.0 | 55.7** | 67.2 |

| Poorly/undifferentiated | 22,661 | 50.6 | 56.0** | 82.5** | 32.9 | 49.7** | 65.3 |

| Unknown | 517 | 51.3 | na | na | 26.9 | 59.4** | nr |

| D'Amico risk (2004–2007) | |||||||

| Low | 8,944 | 53.7 | 65.9 | na | 38.2* | 57.2* | 62.3 |

| Intermediate | 10,525 | 50.4 | na | na | 37.2* | 51.9* | 63.2 |

| High | 8,468 | 55.6 | na | 76.8 | 32.1* | 48.9* | 64.5 |

| Missing | 3,296 | 43.7 | na | na | 33.1* | 54.2* | 66.1 |

| Year of diagnosis | |||||||

| 2001 | 7,256 | 46.3* | na | na | 22.8* | 52.2 | 70.6* |

| 2002 | 7,745 | 51.5* | na | na | 31.6* | 54.6 | 70.5* |

| 2003 | 7,380 | 51.9* | na | na | 32.3* | 55.1 | 69.8* |

| 2004 | 7,735 | 51.3* | 66.7 | 79.0 | 33.3* | 54.4 | 67.6* |

| 2005 | 7,426 | 52.4* | 66.5 | 73.9 | 33.8* | 51.5 | 65.3* |

| 2006 | 7,932 | 53.0* | 65.1 | 77.2 | 37.0* | 53.5 | 62.0* |

| 2007 | 8,140 | 51.6* | 65.4 | 76.9 | 38.5* | 52.2 | 60.5* |

| Socioeconomic status14 | |||||||

| Low | 16,042 | 47.7* | 63.3* | 75.4 | 28.1* | 51.9* | 67.4 |

| Medium | 17,821 | 53.7* | 66.7* | 78.2 | 32.8* | 52.7* | 67.0 |

| High | 18,668 | 51.9* | 67.2* | 76.7 | 37.0* | 55.3* | 65.0 |

| Residing in urban area | |||||||

| non-urban | 5,063 | 49.8 | 62.9 | 74.9 | 25.8* | 45.8* | 61.7* |

| urban | 48,544 | 51.3 | 66.1 | 77.0 | 33.6* | 54.2* | 66.8* |

| Regional characteristics | |||||||

| Urologists per 100,000 men | |||||||

| Low (≤ 53) | 16,848 | 50.9 | 69.0* | 76.5 | 29.2* | 52.6* | 65.9 |

| Intermediate | 16,647 | 52.1 | 67.1* | 77.3 | 32.3* | 51.3* | 67.4 |

| High (≥ 87) | 20,112 | 50.7 | 62.4* | 76.6 | 36.5* | 55.6* | 65.7 |

| Radiation oncologists per 100,000 men | |||||||

| Low (≤ 21) | 17,449 | 50.3 | 66.7 | 76.4 | 33.1 | 53.0* | 65.0* |

| Intermediate | 17,132 | 54.5 | 66.5 | 77.4 | 31.7 | 51.4* | 65.2* |

| High (≥ 37) | 19,026 | 49.1 | 64.7 | 76.6 | 33.8 | 55.5* | 68.4* |

| Hospital beds per 100,000 men | |||||||

| Low (≤ 4,735) | 16,975 | 51.5* | 70.6* | 76.3 | 30.6 | 51.2 | 67.1 |

| Intermediate | 18,544 | 55.3* | 64.8* | 77.6 | 38.1 | 55.7 | 63.4 |

| High (≥ 6,854) | 18,088 | 46.7* | 62.5* | 76.4 | 29.6 | 52.9 | 68.0 |

| Medicare Managed Care Penetration | |||||||

| Low (≤ 5.1%) | 16,284 | 53.9* | 66.9* | 77.9* | 24.9* | 51.6 | 65.0 |

| Intermediate | 19,327 | 53.0* | 60.5* | 79.2* | 44.0* | 57.9 | 68.8 |

| High (≥ 19.4%) | 17,996 | 46.8* | 71.1* | 73.7* | 28.0* | 49.5 | 65.5 |

\* p≤0.001, Mantel-Haenszel chi square test

\*\* p≤0.001, chi square test

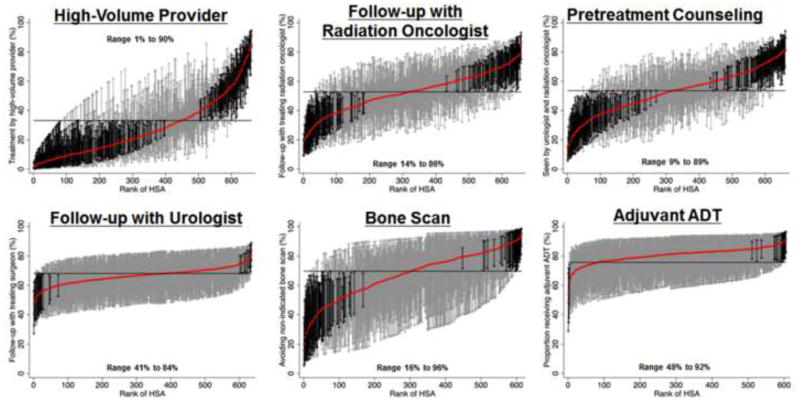

After adjusting for patient and regional characteristics in multivariable hierarchical regression, mean adherence was lowest for treatment by a high-volume provider and highest for receipt of adjuvant androgen deprivation therapy (horizontal lines in graphs in Figure 1). Adherence to recommended care varied widely across HSAs, particularly for treatment by a high-volume provider (range 1% to 90%, p<0.001, n=48,801), follow-up with the treating radiation oncologist (range 14% to 86%, p<0.001, n=38,888), pretreatment counseling by both a urologist and radiation oncologist (range 9% to 89%, p<0.001, n=52,439), and avoiding unnecessary bone scans in low-risk prostate cancer patients (range 16% to 96%, p<0.001, n=9,014, Figure 1, Table 2). For each of these measures, 70 to 245 regions had adherence rates significantly lower than the mean and 42 to 113 regions had adherence rates significantly higher than the mean (black error bars in Figure 1). There was less variation for follow-up with urologists (range 41% to 84%, p<0.001, n=13,551) and adjuvant androgen deprivation therapy (range 48% to 92%, n=6,363, Figure 1, Table 2). Less than 5% of the differences in adherence rates at the HSA-level were explained by patient characteristics. Measured regional characteristics were not consistently associated with better adherence to the quality measures in multivariable analyses. These HSA-level characteristics explained only 7% to 16% of the variation in adherence rates across HSAs. Thus, unmeasured characteristics accounted for the majority of the regional variation in adherence.

Figure 1.

Variation in quality of prostate cancer care across 661 Hospital Service Areas (HSAs) located within the SEER areas. HSAs were ranked from lowest to highest adherence to each quality measure. The probability of receiving care adherent to each quality measure was calculated by use of hierarchical generalized linear modeling, adjusting for patient and regional characteristics. These models also account for differences in reliability of individual HSA-level adherence rates resulting from variations in the number of patients per HSA. The solid red line represents the adjusted adherence rate for each HSA. The horizontal line represents the adjusted overall mean rate of adherence. Error bars represent 95% confidence intervals for the rates of the individual HSAs. Black error bars represent rates that are statistically significantly different from the overall mean. Grey error bars represent rates that are not significantly different from the overall mean.

Table 2.

Amount of variation in adherence to quality measures that was due to HSA-level effects. We calculated the intraclass correlation (ICC) to obtain the proportion of the total variance in a patient's propensity to get care adherent to a quality measure that was attributable to the HSA-level. For example, an ICC of 0.15 means that 15% of the variation in adherence to a quality measure was due to differences at the HSA-level. To put these numbers into context, the ICCs found in our study were much higher than those found in other clinical settings, e.g., physician-level variation in care of diabetic patients (ICCs 0.01 to 0.04).19 ICCs did not change much after inclusion of patient and regional characteristics into the models, which implies that much of the HSA-level variation was due to unmeasured characteristics.

| Quality measure | Seen by both a urologist and a radiation oncologist | Avoiding receipt of a non-indicated bone scan | Receipt of adjuvant androgen deprivation therapy while undergoing radiotherapy | Treated by a high volume provider | At least two follow-up visits with a treating radiation oncologist | At least two follow-up visits with a treating surgeon |

|---|---|---|---|---|---|---|

| | ICC | ICC | ICC | ICC | ICC | ICC |

| Empty model | 0.15 | 0.27 | 0.09 | 0.32 | 0.13 | 0.06 |

| After including patient characteristics | 0.15 | 0.26 | 0.11 | 0.33 | 0.13 | 0.06 |

| After including patient and regional characteristics | 0.14 | 0.24 | 0.10 | 0.31 | 0.12 | 0.05 |

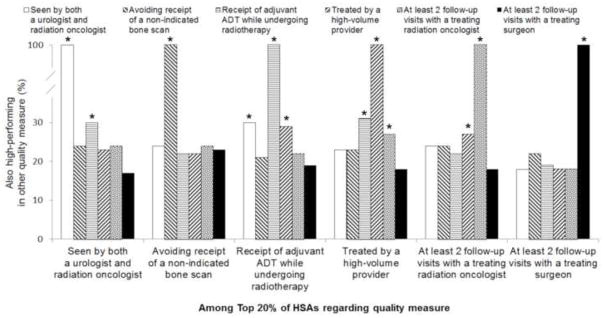

For several quality measures, high performance on one was associated with higher performance on others (Figure 2). For example, among the HSAs in the top 20% for being seen by both a urologist and radiation oncologist before treatment, 30% were also in the top 20% for receipt of adjuvant androgen deprivation therapy while undergoing radiotherapy. Similarly, among the top-performing HSAs on the provider volume measure, 31% and 27% were high-performing on the adjuvant androgen deprivation therapy and follow-up with radiation oncology measures, respectively.

Figure 2.

Proportion of HSAs that were high-performing for one quality measure and also high-performing (top 20%) for the other quality measures. * Denotes p<0.05 from chi square test.

Discussion

We found low adherence rates for all but one of the quality measures among patients diagnosed with prostate cancer between 2001 and 2007. In addition, regional variation in quality of care was substantial. The largest amount of variation was seen for pretreatment counseling, avoiding unnecessary bone scans, treatment by a high-volume provider, and follow-up with radiation oncologists. This variation was primarily due to unmeasured HSA-level effects, rather than due to measured differences between patients or HSAs. For several, but not all, quality measures, high performance on one measure was associated with higher performance on others.

This study is the first to evaluate the adherence to established quality of care measures and the variation in quality of care across all clinical settings. A previous analysis of the American College of Surgeons National Cancer Data Base found low adherence rates to several of these measures, but only included patients treated in Commission on Cancer approved hospitals.6 These hospitals have more hospital beds, a higher surgical volume, and are more likely to be designated as Comprehensive Cancer Centers by the National Cancer Institute than non-approved hospitals, which may have affected adherence rates to the quality measures.7 By using data representative of 26% of the United States' population,9 we could provide a comprehensive picture of the quality of prostate cancer care provided across all clinical settings. While the previous study found significant variation in quality across the nine United States census divisions with regard to follow-up visits and adherence to radiation process of care measures,6 our current study expands on these findings by evaluating small area variation rather than broadly categorizing the United States into nine census divisions. Prostate cancer care is primarily delivered locally, so focusing on the regions in which patients receive their hospital care provided a more in-depth assessment of variations in quality. For example, we found substantially more variation in recommended follow-up (range 14% to 86% for radiation oncologists, Figure 1) than the prior study (range 44% to 62%).

We quantified the amount of variation in adherence to the quality measures by calculating ICCs, which were larger than 0.10 for four of the measures, indicating substantial regional variation. To put these numbers into context, the ICCs found in our study were much higher than those found in other clinical settings, including physician-level variation in care of diabetic patients (ICCs 0.01 to 0.04)19 and hospital variation in readmissions after coronary artery bypass surgery (ICC 0.004).20 Similar to our findings, other studies have found considerable regional variation in quality of cancer care, including receipt of recommended cancer screenings, breast cancer care, and lymph node assessment for patients with gastric cancer.21–23 Together, these findings indicate that quality of cancer care varies widely across the United States and that effective quality improvement efforts are needed.

The variation in quality of prostate cancer care was largely due to unmeasured HSA-level effects, rather than due to measured differences between patients or HSAs. We hypothesize that at least some of these unmeasured differences between HSAs may have been due to differences in patient-provider communication, in provider knowledge of clinical guidelines, or in care coordination between the involved specialists.24 Further mixed-methods research will be needed to gain insight into these potential drivers of quality of care.

This study has several limitations. First, claims data are primarily designed to provide billing information and therefore do not provide granular clinical data.25 Additional data regarding quality is currently captured by the Physician Quality Reporting System,5 but reporting is voluntary and prostate cancer measures were not included in this program until 2008.5 Therefore, we limited our quality measures to those that could be measured based on evaluation and management or procedure codes, which have been shown to have high accuracy.26 Second, with the exception of treatment by a high-volume provider (a structural measure of care), this study focused on the evaluation of process measures of quality. Although a direct association between these measures and outcomes in prostate cancer patients has not been demonstrated, better processes of care were associated with improved quality of life among patients with chronic diseases and with survival among community-dwelling vulnerable elders.27,28 In addition, the prostate cancer quality measures used in our study have been nationally endorsed by several entities.2–4 Therefore, they are felt to be meaningful and will likely be incorporated into future systematic assessments of quality of care by the Centers for Medicare and Medicaid Services.3,5 Third, while the quality measures examined in this study were initially developed by RAND in 2000,29 they were not published in the peer-reviewed literature until 2003,2 and endorsement by the PCPI and NQF happened several years later. Thus, with increasing public attention to these quality measures, adherence to them may have increased in more recent years. However, we accounted for possible time-trends as much as possible by adjusting our models for year of diagnosis.

Conclusions

Based on our findings, several aspects of care exhibited low adherence to established quality measures and a large amount of regional variation: (1) pretreatment counseling by both a urologist and radiation oncologist, (2) avoiding overuse of bone scans, (3) treatment by a high-volume provider, and (4) follow-up with the treating radiation oncologist. While it would seem prudent to improve adherence to these measures, changing physician practice to improve quality is a complex task. Physicians may face multilevel barriers to reporting or delivery of quality care, including skepticism due to lack of validation of the quality measures, knowledge gaps, peer pressure, and financial, operational, and time constraints.24 Identifying and addressing these barriers will be essential for improving quality of care in the future.

Supplementary Material

Acknowledgments

Funding: American Cancer Society (PF-12-118-01-CPPB to F.R.S., PF-12-008-01-CPHPS to B.L.J.); NIDDK / NIH (T32 DK07782 to F.R.S.); and NCI / NIH (R01 CA168691 to V.B.S. and B.K.H.).

Abbreviations

- HSA: Hospital Service Area

- ICC: Intraclass Correlation

- PCPI: Physician Consortium for Performance Improvement

- NQF: National Quality Forum

- SEER: Surveillance, Epidemiology, and End Results

Footnotes

Financial disclosures: VBS is a paid consultant for Amgen, Inc.

References

- 1. Mariotto AB, Yabroff KR, Shao Y, et al. Projections of the cost of cancer care in the United States: 2010–2020. J Natl Cancer Inst. 2011;103:117–128. doi: 10.1093/jnci/djq495.

- 2. Spencer BA, Steinberg M, Malin J, et al. Quality-of-care indicators for early-stage prostate cancer. J Clin Oncol. 2003;21:1928–1936. doi: 10.1200/JCO.2003.05.157.

- 3. Thompson IM, Clauser S. Prostate Cancer Physician Performance Measurement Set. 2007. Available at: http://www.ama-assn.org/apps/listserv/x-check/qmeasure.cgi?submit=PCPI. Accessed September 13, 2011.

- 4. National Quality Forum (NQF): NQF-endorsed standards. 2012. Available at: www.qualityforum.org/QPS/QPSTool.aspx?Exact=false&Keyword=prostatecancer. Accessed January 26, 2013.

- 5. Centers for Medicare & Medicaid Services. Physician Quality Reporting System: 2010 Reporting Experience Including Trends (2007–2011). 2012. Available at: http://www.facs.org/ahp/pqri/2013/2010experience-report.pdf. Accessed July 5, 2012.

- 6. Spencer BA, Miller DC, Litwin MS, et al. Variations in Quality of Care for Men With Early-Stage Prostate Cancer. J Clin Oncol. 2008;26:3735–3742. doi: 10.1200/JCO.2007.13.2555.

- 7. Bilimoria KY, Bentrem DJ, Stewart AK, et al. Comparison of Commission on Cancer–Approved and –Nonapproved Hospitals in the United States: Implications for Studies That Use the National Cancer Data Base. J Clin Oncol. 2009;27:4177–4181. doi: 10.1200/JCO.2008.21.7018.

- 8. McPherson K, Wennberg JE, Hovind OB, et al. Small-area variations in the use of common surgical procedures: an international comparison of New England, England, and Norway. N Engl J Med. 1982;307:1310–1314. doi: 10.1056/NEJM198211183072104.

- 9. Howlader N, Noone AM, Krapcho M, et al. SEER Cancer Statistics Review, 1975–2008. Bethesda, MD: National Cancer Institute; 2011. Available at: http://seer.cancer.gov/csr/1975_2008/. Accessed September 2, 2011.

- 10. Warren JL, Klabunde CN, Schrag D, et al. Overview of the SEER-Medicare data: content, research applications, and generalizability to the United States elderly population. Med Care. 2002;40:IV–3. doi: 10.1097/01.MLR.0000020942.47004.03.

- 11. The Dartmouth Institute for Health Policy & Clinical Practice. Glossary - Dartmouth Atlas of Health Care. 2012. Available at: http://www.dartmouthatlas.org/tools/glossary.aspx. Accessed April 7, 2012.

- 12. Klabunde CN, Potosky AL, Legler JM, et al. Development of a comorbidity index using physician claims data. J Clin Epidemiol. 2000;53:1258–1267. doi: 10.1016/s0895-4356(00)00256-0.

- 13. D’Amico AV, Whittington R, Malkowicz SB, et al. Biochemical outcome after radical prostatectomy, external beam radiation therapy, or interstitial radiation therapy for clinically localized prostate cancer. JAMA. 1998;280:969–974. doi: 10.1001/jama.280.11.969.

- 14. Diez Roux AV, Merkin SS, Arnett D, et al. Neighborhood of residence and incidence of coronary heart disease. N Engl J Med. 2001;345:99–106. doi: 10.1056/NEJM200107123450205.

- 15. Rabe-Hesketh S, Skrondal A. Multilevel and Longitudinal Modeling Using Stata. 2nd ed. Stata Press; 2008.

- 16. Merlo J, Chaix B, Ohlsson H, et al. A brief conceptual tutorial of multilevel analysis in social epidemiology: using measures of clustering in multilevel logistic regression to investigate contextual phenomena. J Epidemiol Community Health. 2006;60:290–297. doi: 10.1136/jech.2004.029454.

- 17. Merlo J, Yang M, Chaix B, et al. A brief conceptual tutorial on multilevel analysis in social epidemiology: investigating contextual phenomena in different groups of people. J Epidemiol Community Health. 2005;59:729–736. doi: 10.1136/jech.2004.023929.

- 18. Dimick JB, Staiger DO, Hall BL, et al. Composite measures for profiling hospitals on surgical morbidity. Ann Surg. 2013;257:67–72. doi: 10.1097/SLA.0b013e31827b6be6.

- 19. Hofer TP, Hayward RA, Greenfield S, et al. The unreliability of individual physician “report cards” for assessing the costs and quality of care of a chronic disease. JAMA. 1999;281:2098–2105. doi: 10.1001/jama.281.22.2098.

- 20. Li Z, Armstrong EJ, Parker JP, et al. Hospital Variation in Readmission After Coronary Artery Bypass Surgery in California. Circ Cardiovasc Qual Outcomes. 2012;5:729–737. doi: 10.1161/CIRCOUTCOMES.112.966945.

- 21. Radley DC, Schoen C. Geographic Variation in Access to Care - The Relationship with Quality. N Engl J Med. 2012;367:3–6. doi: 10.1056/NEJMp1204516.

- 22. Malin JL, Schneider EC, Epstein AM, et al. Results of the National Initiative for Cancer Care Quality: how can we improve the quality of cancer care in the United States? J Clin Oncol. 2006;24:626–634. doi: 10.1200/JCO.2005.03.3365.

- 23. Coburn NG, Swallow CJ, Kiss A, et al. Significant regional variation in adequacy of lymph node assessment and survival in gastric cancer. Cancer. 2006;107:2143–2151. doi: 10.1002/cncr.22229.

- 24. Zapka J, Taplin SH, Ganz P, et al. Multilevel Factors Affecting Quality: Examples From the Cancer Care Continuum. J Natl Cancer Inst Monogr. 2012;2012:11–19. doi: 10.1093/jncimonographs/lgs005.

- 25. Finlayson E, Birkmeyer JD. Research based on administrative data. Surgery. 2009;145:610–616. doi: 10.1016/j.surg.2009.03.005.

- 26. Fowles JB, Lawthers AG, Weiner JP, et al. Agreement between physicians’ office records and Medicare Part B claims data. Health Care Financ Rev. 1995;16:189–199.

- 27. Kahn KL, Tisnado DM, Adams JL, et al. Does ambulatory process of care predict health-related quality of life outcomes for patients with chronic disease? Health Serv Res. 2007;42:63–83. doi: 10.1111/j.1475-6773.2006.00604.x.

- 28. Zingmond DS, Ettner SL, Wilber KH, et al. Association of claims-based quality of care measures with outcomes among community-dwelling vulnerable elders. Med Care. 2011;49:553–559. doi: 10.1097/MLR.0b013e31820e5aab.

- 29. Litwin M, Steinberg M, Malin J, et al. Prostate Cancer Patient Outcomes and Choice of Providers: Development of an Infrastructure for Quality Assessment. 2000. Available at: http://www.rand.org/pubs/monograph_reports/MR1227.html. Accessed October 7, 2013.
