Abstract

This study examined sex differences in categorization of facial emotions and activation of brain regions supportive of those classifications. In Experiment 1, performance on the Facial Emotion Perception Test (FEPT) was examined among 75 healthy females and 63 healthy males. Females were more accurate in the categorization of fearful expressions relative to males. In Experiment 2, 3T functional magnetic resonance imaging data were acquired for a separate sample of 21 healthy females and 17 healthy males while performing the FEPT. Activation to neutral facial expressions was subtracted from activation to sad, angry, fearful and happy facial expressions. Although females and males demonstrated activation in some overlapping regions for all emotions, many regions were exclusive to females or males. For anger, sad and happy, males displayed a larger extent of activation than did females, and greater height of activation was detected in diffuse cortical and subcortical regions. For fear, males displayed greater activation than females only in right postcentral gyrus. With one exception in females, performance was not associated with activation. Results suggest that females and males process emotions using different neural pathways, and these differences cannot be explained by performance variations.

Keywords: face emotion processing, affect perception, sex differences, gender differences

INTRODUCTION

During the past decade, there has been a growing interest in understanding sex differences in the ability to process emotional stimuli, with healthy females consistently performing better than healthy males, both in terms of accuracy and speed of processing (Mufson and Nowicki, 1991; Thayer and Johnsen, 2000; Hall and Matsumoto, 2004; Montagne et al., 2005; Mathersul et al., 2008; Wright et al., 2009). Following from these behavioral findings, functional neuroimaging studies have sought to identify the neural correlates underlying these sex differences, yet few have integrated functional imaging with performance considerations. Because mood and anxiety disorders are thought to be secondary to dysfunction in emotion processing circuitry (Chan et al., 2009), sex comparison studies may shed light on biological processes that underlie the greater susceptibility to mood and anxiety disorders in females when compared with males (American Psychiatric Association, 2000).

Processing of facial emotions is of particular interest to cognitive and affective neuroscientists because it entails both cognitive and interpersonal elements and may be particularly disrupted in affective disorders; consistent with differences in prevalence, some sex differences have been observed (for review see Wright and Langenecker, 2009). Among healthy adults, face identification has been linked to the fusiform face area (Grill-Spector et al., 2004), whereas emotion discrimination has been shown to recruit multiple cortical and limbic areas. One meta-analysis of 55 positron emission tomography and functional magnetic resonance imaging (fMRI) studies investigating emotion processing in healthy adults suggested that the medial prefrontal cortex is involved in processing a number of different emotion types. At the same time, fear was specifically associated with amygdala activation, sadness with subgenual cingulate activation and happiness and disgust with activation in the basal ganglia (Phan et al., 2002).

Studies employing a range of tasks and stimuli generally suggest that although some similarities exist, females and males process emotions using different brain regions. Sex differences in functional activation have been noted during viewing of emotionally laden pictures (Schienle et al., 2005; Hofer et al., 2006; Caseras et al., 2007), viewing of positively and negatively valenced words (Hofer et al., 2007), rating of unpleasant words (Shirao et al., 2005) and during facial emotion processing (Killgore and Yurgelun-Todd, 2001, 2004; Lee et al., 2002; Hall and Matsumoto, 2004; Hall et al., 2004; Aleman and Swart, 2008). A meta-analysis of 105 studies that examined processing of human emotional facial expressions among healthy adults concluded that males generally show greater activation relative to females in a region spanning the right amygdala and parahippocampal gyrus, the right medial frontal gyrus and the left fusiform gyrus; furthermore, females demonstrate greater activation than males in right subgenual cingulate (Fusar-Poli et al., 2009). Similarly, a meta-analysis of 65 studies found increased activation in females in the subgenual anterior cingulate, the thalamus and the midbrain, and greater activation in males in the inferior frontal cortex and posterior regions (Wager et al., 2003). Of note, neither of these meta-analyses specified the number of studies in which sex was directly evaluated; however, given the extant literature on this topic, they are likely to be few in number.

Importantly, these prior studies reported differential regional activations in males and females and drew inferences about sex differences in behavioral performance based on the different brain localizations, across a variety of stimuli, but without actual performance correlates. Clearly, these types of inferential conclusions are premature without direct demonstration of relationships between performance and activation, and no study to date has pursued correlational analyses to support the interpretations that have been made. Specifically, it is not clear whether activation differences might underlie performance variations observed between females and males or whether they represent relatively separate developmental processes and/or strategic approaches to tasks. Findings from a study by Derntl et al. (2009) that utilized a face emotion recognition paradigm suggest that relationships between activation and performance are important and may differ by sex. While both females and males demonstrated bilateral amygdala activation to every emotional condition, only in males was a significant relationship found between amygdala activation and accuracy for fearful expressions.

The majority of neuroimaging studies investigating facial emotion perception have utilized experimental tasks with implicit emotion processing paradigms, such as passive viewing of stimuli (e.g. Lee et al., 2008), presenting masked faces theoretically outside of conscious awareness (e.g. Dannlowski et al., 2007, 2008) or oblique sex/age discrimination tasks (e.g. Canli et al., 2005; Costafreda et al., 2009). A few studies have utilized tasks requiring explicit emotion judgments, although these tasks have required simpler emotional classification decisions about facial expressions (e.g. emotional vs neutral; Almeida et al., 2010) or matching emotions presented in different faces (e.g. Phan et al., 2006; Frodl et al., 2009). Often, prior explicit studies have used tasks with ceiling effects, and thus did not capitalize upon challenging subjects to the point of dysfunctional performance, precluding the analysis of the functional correlates of poor performance in emotion perception, as has been observed behaviorally in males. As a result, although such oblique, implicit and easy explicit paradigms are an excellent way to understand subtle perturbations of emotion processing circuitry, they do not provide an exportable behavioral performance paradigm for translation to clinical settings (Langenecker et al., 2005, 2007).

The present study extended prior research exploring sex differences in the identification and processing of facial emotions. Experiment 1 examined how females and males process facial emotions differently, using an event-related design with an emotion identification task consisting of fearful, sad, happy and angry facial expressions. Consistent with previous research, we expected in Experiment 1 that females would make more accurate responses in categorizing facial emotion stimuli than males. Having established the task and pattern of performance among males and females, Experiment 2 examined neural correlates of behavioral performance in males and females. Also in line with prior studies, we expected that males and females would display a differential pattern of activation to emotional faces, such as increased subgenual cingulate activation in females and increased inferior frontal cortex activation in males. Finally, as an exploratory hypothesis, we expected that differences in patterns of functional activation between females and males would be related to performance variations.

METHODS

Participants

In Experiment 1, 75 females, aged 18–64 years (M = 31.81, s.d. = 15.72), and 63 males, aged 18–65 years (M = 34.02, s.d. = 15.72), completed a facial emotion and animal categorization test outside of the scanner. These participants were screened with the Diagnostic Interview for Genetic Studies (Nurnberger et al., 1994), as part of the Prechter Longitudinal Study of Bipolar Disorder (Langenecker et al., 2010), to confirm that they had no history of mental illness. Independent t-tests indicated that the groups did not differ significantly in age [t(119) = 0.89, P = 0.37] or education [females M = 15.7, s.d. = 2.2, males M = 15.4, s.d. = 2.2; t(134) = 0.88, P = 0.38]. In Experiment 2, a separate sample of 21 females, aged 19–63 years (M = 32.9, s.d. = 14.8), and 17 males, aged 17–61 years (M = 31.2, s.d. = 14.2), completed the facial emotion and animal categorization test inside the fMRI scanner after screening to determine that they had no personal history of any psychiatric illness and no family history of depression or any other psychiatric disorder. Exclusion criteria also included neurological or any other medical disorder that could impact cognitive functioning. Females and males in this second sample were similar in age [t(36) = −0.38, P = 0.71] and education [females M = 16.10, s.d. = 2.32, males M = 14.90, s.d. = 1.95; t(36) = 1.63, P = 0.11].

One male and one female (not included in sample descriptive analyses) were initially recruited for inclusion in Experiment 2 and then excluded due to performance of <65% accuracy for facial emotion or animal categorization while inside the scanner. All participants who completed the task inside the scanner were right-handed. Participants were recruited through advertisements posted at a local university health system and in the surrounding area. Informed consent was obtained and documented for all participants per University of Michigan Medical institutional review board guidelines, and they were reimbursed $15–30 per hour spent in the experiment.

Facial emotion perception task

The Facial Emotion Perception Test (FEPT) was used to assess the accuracy with which participants categorized facial expressions (Ekman and Friesen, 1976; Rapport et al., 2002; Tottenham et al., 2002; Langenecker et al., 2005, 2007). This test required participants to categorize briefly presented faces into one of four emotion categories (happy, sad, fear or angry), including neutral trials in which they were forced to select one of these four emotions, or to categorize pictured animals into one of four categories (primate, dog, cat or bird; for use in other studies in which a block design is employed). Each presentation, whether face or animal, began with an orienting cross in the center of the screen that was presented for 500 ms. The orienting cross was followed by a facial emotion stimulus (300 ms), then a visual mask to prevent visual afterburn phenomena (100 ms) and then a response period (2600 ms). Each trial lasted 3500 ms and there was no intertrial interval (ITI).
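As an arithmetic check on the trial structure just described, the short sketch below sums the four phase durations given in the text. The phase labels are illustrative names chosen here, not identifiers from the task software.

```python
# Durations (ms) of the phases of a single FEPT trial, as stated in the text.
# The phase labels are illustrative, not names taken from the task software.
TRIAL_PHASES_MS = {
    "orienting_cross": 500,
    "stimulus": 300,
    "visual_mask": 100,
    "response_period": 2600,
}


def trial_duration_ms(phases):
    """Return the total duration of one trial in milliseconds."""
    return sum(phases.values())


total_ms = trial_duration_ms(TRIAL_PHASES_MS)
print(total_ms)  # 3500
```

The total agrees with the stated 3500 ms trial length, which is consistent with there being no additional interval between trials.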

The out-of-scanner version of the FEPT had seven face blocks and two animal blocks and took 7 min to complete, using Ekman faces (Ekman and Friesen, 1976). It was completed by both samples of participants. The fMRI version of the FEPT included 21 face blocks and 8 animal blocks, with presentation of specific emotions counterbalanced to the second order, such that each emotion was followed by every other emotion an equal number of times (e.g. happy followed by neutral and happy followed by sad). The animal block trials were used as a method of controlling for visual processing and praxis, for use in a block design that is not part of the current study. The in-scanner version consisted of five 3.5-min runs and entailed 56 animal presentations and 147 facial emotion presentations, using the MACBrain Foundation faces (Tottenham et al., 2002). It included presentation of 38 neutral faces. To avoid habituation effects, no actor/actress was repeated with the same emotion.

Scanning procedures

During the fMRI scan, participants lay flat on their backs in the scanner and recorded responses on a five-button key-press device, using only the index through little fingers to respond to each stimulus. Stimuli were displayed either through goggles attached to the head coil or by projection onto a screen viewed through prism glasses. Participants wore earplugs to reduce the 95 dB scanner noise to well below 75 dB. Foam padding and a Velcro fixation strap were used to reduce head motion artifact.

MRI acquisition

Whole-brain imaging was performed using a GE Signa 3T scanner (release VH3). The fMRI series consisted of 30 contiguous oblique-axial sections, each 4 mm thick, covering the brain; these were acquired using a forward/reverse spiral sequence, which provides excellent fMRI sensitivity (Glover and Thomason, 2004). The image matrix was 64 × 64 over a 24 cm field of view, resulting in a 3.75 mm × 3.75 mm × 4 mm voxel. The 30-slice volume was acquired serially at 1750 ms temporal resolution for a total of 590 time points for the FEPT task. One subject had fewer repetition times (530 total), with briefer rest blocks at the end of each run, though the scan was otherwise identical with regard to design and order. Additionally, this subject was scanned with an echo-planar acquisition sequence rather than a forward/reverse spiral sequence; the reported results did not differ when this subject was excluded. One hundred six high-resolution Fast SPGR IR axial anatomic images [TE (echo time) = 3.4 ms; TR (repetition time) = 10.5 ms, 27° flip angle, NEX (number of excitations) = 1, slice thickness = 1.5 mm, FOV (field of view) = 24 cm, matrix size = 256 × 256] were acquired for each participant for co-registration.
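The voxel dimensions and functional scan length follow directly from the acquisition parameters above. The sketch below recomputes them; the variable names are illustrative, and the comparison with the five 3.5-min runs is offered only as a plausibility check.

```python
# Acquisition parameters as stated in the text (variable names are illustrative).
fov_mm = 240.0       # 24 cm field of view
matrix_size = 64     # 64 x 64 image matrix
slice_mm = 4.0       # slice thickness
n_slices = 30
tr_s = 1.75          # 1750 ms per volume
n_volumes = 590

in_plane_mm = fov_mm / matrix_size            # in-plane voxel edge
voxel_volume_mm3 = in_plane_mm ** 2 * slice_mm
coverage_mm = n_slices * slice_mm             # superior-inferior coverage
scan_seconds = tr_s * n_volumes

print(in_plane_mm)        # 3.75
print(voxel_volume_mm3)   # 56.25
print(coverage_mm)        # 120.0
print(scan_seconds / 60)  # about 17.2 min, close to five 3.5-min runs (17.5 min)
```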

MRI processing

Images were processed using SPM2 (Friston et al., 1995). Images were realigned, stereotactically normalized and smoothed using a 5 mm full width at half maximum Gaussian filter. Contrast images were then derived based on the event-related design and used within the group analyses conducted with SPM5.

Analyses

Behavioral data were examined using independent samples two-tailed t-tests of accuracy for fear, anger, happy and sad stimuli. Differences in d′, which represents the sensitivity to discriminate a particular stimulus from noise accurately (Corwin, 1994; Todorov, 1999), were also examined for each emotion. d′ was calculated by subtracting the false alarm rate from the hit rate for each particular emotion (e.g. correct anger responses minus anger false alarms). Behavioral performance analyses employed a statistical threshold of P < 0.05.
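A minimal sketch of the sensitivity index, following the worked example in the text (correct anger responses minus anger false alarms). It rests on two assumptions that the text does not state: hits are divided by the number of target-emotion trials, and false alarms by the number of non-target trials. The function name and the toy trial data are hypothetical. This is a difference of rates, not the z-transformed d′ of classical signal detection theory.

```python
def sensitivity_index(stimuli, responses, target):
    """Hit rate minus false-alarm rate for one emotion category.

    Assumption: hits are normalized by the number of target trials and
    false alarms by the number of non-target trials.
    """
    target_resp = [r for s, r in zip(stimuli, responses) if s == target]
    other_resp = [r for s, r in zip(stimuli, responses) if s != target]
    if not target_resp or not other_resp:
        raise ValueError("need at least one target and one non-target trial")
    hit_rate = sum(r == target for r in target_resp) / len(target_resp)
    false_alarm_rate = sum(r == target for r in other_resp) / len(other_resp)
    return hit_rate - false_alarm_rate


# Hypothetical toy data: 4 anger trials and 4 non-anger trials.
stimuli = ["anger", "anger", "anger", "anger", "fear", "fear", "sad", "happy"]
responses = ["anger", "anger", "anger", "fear", "anger", "fear", "sad", "happy"]

score = sensitivity_index(stimuli, responses, "anger")
print(score)  # 0.5 (hit rate 0.75 minus false-alarm rate 0.25)
```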

For fMRI data, activation in response to identification of neutral facial expressions was subtracted from activation in response to identification of fear, anger, sad and happy facial expressions. These emotion-minus-neutral subtractions were computed for each individual by taking the BOLD signal for each emotion identification event (fear, anger, sad, happy) and subtracting the corresponding BOLD signal change for neutral identification events. Group analyses used these individual contrasts for comparisons and were run in SPM5. Second-level analysis employed multivariate analysis of variance, with sex as the independent variable and fear-neutral, anger-neutral, sad-neutral and happy-neutral contrasts as dependent variables. We conducted whole-brain analysis with correction for multiple comparisons. Separately, based on prior convention and theoretical interest, we also conducted region of interest (ROI) analysis using the amygdala. First, we examined activation for each emotion (relative to neutral) in females and males separately. Second, we examined which regions were exclusive to females and to males by masking significant areas of activation for the males (in females-only analyses) and for the females (in males-only analyses). Third, we examined the overall effect of sex, emotion and the interaction of sex and emotion on activation for each of the emotions, followed by post hoc t-tests when appropriate. Finally, we tested whether activation differences between females and males are related to sex, rather than to between-sex differences in performance. To do so, we used MarsBAR to extract activation values for individuals in regions where significant activation differences were observed between females and males, and assessed the relationship between performance and activation with Pearson correlational analyses. A threshold of P < 0.001, mm3 > 216 was employed for whole-brain statistical tests conducted in SPM5. This threshold meets the combined height and extent threshold as determined by 1000 Monte Carlo simulations using AlphaSim (whole-brain corrected P < 0.05). For amygdala ROI analyses, a threshold of P < 0.05, mm3 > 39 was used.
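The final analysis step, correlating performance with extracted activation values, can be illustrated with a plain Pearson correlation. This is a sketch only: the accuracy and activation numbers below are hypothetical placeholders, not data from the study, and the function is a textbook implementation rather than the authors' analysis code.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical values: per-participant fear accuracy (%) and the mean
# activation extracted from one region of interest (arbitrary units).
accuracy = [70.0, 80.0, 90.0, 85.0, 75.0]
activation = [0.10, 0.30, 0.50, 0.35, 0.20]

r = pearson_r(accuracy, activation)
print(round(r, 3))
```

In practice one such correlation would be computed per region and per sex, which is why the number of tests matters when interpreting any single significant result.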

RESULTS

Experiment 1—FEPT performance

Performance on the FEPT in Experiment 1 (n = 75 females, n = 63 males) revealed that females were significantly more accurate than males for fear stimuli, t(136) = 2.37, P < 0.05, Cohen’s d = 0.40, but females and males performed equivalently for anger, happy and sad stimuli (all ps > 0.17). Table 1 provides the descriptive statistics, t statistics and effect sizes for the comparisons. d′ was also higher in females for fear stimuli, indicating greater sensitivity to fear, relative to males, t(135) = −2.53, P = 0.01, but not for anger, happy or sad stimuli (ps > 0.20). For the smaller sample (part of Experiment 2), in-scanner behavioral results were not significantly different between the two groups for accuracy or d′ for any of the emotions (though the difference for fear was in the same direction and of similar effect size as that observed in the larger sample). Table 2 provides the descriptive statistics, t statistics and effect sizes for these comparisons.
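The fear comparison can be recomputed from the summary statistics reported in Table 1. The sketch below assumes a pooled-variance (Student) t-test and a pooled-SD Cohen's d; the text specifies only independent samples two-tailed t-tests, so the pooling is an assumption, and the function name is illustrative.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's t (equal variances assumed) and Cohen's d from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    d = (m1 - m2) / pooled_sd
    t = d / math.sqrt(1 / n1 + 1 / n2)
    return t, d, df


# Fear accuracy (%) from Table 1: females 86.4 (13.1), n = 75; males 79.5 (20.8), n = 63.
t, d, df = pooled_t_and_d(86.4, 13.1, 75, 79.5, 20.8, 63)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # t(136) = 2.37, d = 0.40
```

Under these assumptions the recomputed values match the reported t(136) = 2.37 and Cohen's d = 0.40 for fear.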

Table 1.

Descriptive statistics comparing males (n = 63) and females (n = 75) on FEPT performance from Experiment 1

| Variable (accuracy, %) | Males M (s.d.) | Females M (s.d.) | t(136) | P | Cohen’s d |
|---|---|---|---|---|---|
| Sad | 74.1 (17.3) | 74.5 (17.3) | 0.13 | 0.897 | 0.02 |
| Angry | 80.1 (19.4) | 84.3 (13.7) | 1.18 | 0.254 | 0.25 |
| Fear | 79.5 (20.8) | 86.4 (13.1) | 2.37 | 0.019 | 0.40 |
| Happy | 94.0 (14.9) | 96.7 (9.3) | 1.35 | 0.179 | 0.22 |

Table 2.

Descriptive statistics comparing males (n = 17) and females (n = 21) on FEPT performance from Experiment 2

| Variable (accuracy, %) | Males M (s.d.) | Females M (s.d.) | t(36) | P | Cohen’s d |
|---|---|---|---|---|---|
| Sad | 82.1 (8.7) | 80.6 (12.6) | 0.44 | 0.664 | 0.14 |
| Angry | 82.3 (12.0) | 82.2 (8.0) | 0.99 | 0.986 | 0.01 |
| Fear | 75.4 (12.2) | 81.4 (11.9) | −1.53 | 0.134 | 0.50 |
| Happy | 95.3 (3.5) | 96.5 (3.7) | −0.98 | 0.332 | 0.33 |

Experiment 2—fMRI areas of activation for females and males

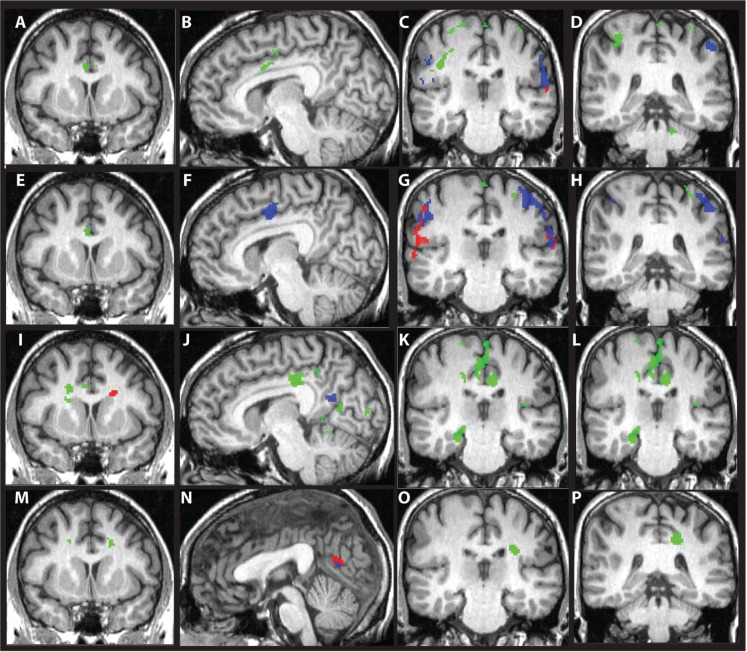

Table 3 and Figure 1 display areas of significant activation assessed for females and males separately. For the anger-neutral contrast, females activated far fewer areas than males, in general, with lesser extent of activation (females = 6408 mm3, males = 15 360 mm3). Two of four regions activated among females were exclusive to females. For the fear-neutral contrast, both females and males activated frontal and parietal regions, though the majority of these regions were exclusive to females or males. Females demonstrated greater extent of activation than males (females = 19 728 mm3, males = 11 160 mm3). Females additionally activated right insula and superior temporal gyrus, as well as cerebellum (declive), while males additionally activated right parahippocampal gyrus. For the happy-neutral contrast, females activated only right precuneus/cuneus and left caudate body. Males displayed much more widespread activation for the happy-neutral contrast, including frontal, temporal, parietal, occipital and subcortical regions (females = 6432 mm3, males = 45 304 mm3), with the majority of regions being exclusive to males. For the sad-neutral contrast, females activated only right posterior cingulate, while males activated bilateral mid-cingulate, left middle frontal gyrus, precuneus, insula and caudate. Again, males displayed more widespread activation than did females (females = 832 mm3, males = 4160 mm3).
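The extent figures quoted for the anger-neutral contrast can be reproduced by summing the whole-brain cluster volumes listed in Table 3. The sketch below does so; leaving out the amygdala ROI clusters (marked ^ in the table) is an inference from the arithmetic, not something the text states.

```python
# Whole-brain cluster volumes (mm3) for the anger-neutral contrast, copied from
# Table 3. Amygdala ROI clusters (marked ^ in the table) are left out; that
# exclusion is an assumption inferred from the totals quoted in the text.
ANGER_CLUSTERS_MM3 = {
    "females": [2944, 688, 1120, 1424, 232],
    "males": [5144, 440, 512, 432, 224, 4976, 1712, 840, 608, 248, 224],
}

totals = {group: sum(volumes) for group, volumes in ANGER_CLUSTERS_MM3.items()}
print(totals)  # {'females': 6408, 'males': 15360}
```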

Table 3.

Foci of significant activation for females and males from Experiment 2

| Group | Lobe | Region | BA | MNI x | MNI y | MNI z | Z | mm3 |
|---|---|---|---|---|---|---|---|---|
| Anger-neutral | | | | | | | | |
| Females | Temporal | Superior temporal | 40/42 | 62 | −24 | 18 | 4.5 | 2944 |
| | | Transverse temporal/insula | 41/13 | −64 | −20 | 16* | 3.9 | 688 |
| | Parietal | Postcentral/precentral | 2/6 | −60 | −18 | 38 | 4.4 | 1120 |
| | Subcortical | Caudate body | – | −18 | 28 | 10* | 4.0 | 1424 |
| | | | – | −18 | 0 | 28 | 4.1 | 232 |
| | | Amygdala^ | – | −26 | −8 | −14* | 2.3 | 40 |
| | | | | −26 | −8 | −10* | 2.0 | 40 |
| Males | Frontal | Precentral/postcentral | 4/2 | 36 | −16 | 66* | 4.2 | 5144 |
| | | Paracentral lobule | 4 | 2 | −32 | 72* | 4.1 | 440 |
| | | Mid-cingulate | 24 | −4 | 10 | 32* | 3.6 | 512 |
| | | | 24 | 6 | −4 | 48* | 3.6 | 432 |
| | | | | (6 | −6 | 46) | | |
| | Temporal | Superior temporal | 42 | 58 | −32 | 18* | 3.4 | 224 |
| | Parietal | Inferior parietal lobule | 40 | −64 | −26 | 32 | 4.4 | 4976 |
| | | | 40 | −42 | −36 | 58* | 4.6 | 1712 |
| | | | | (−46 | −32 | 54) | | |
| | | Postcentral | 3 | −24 | −26 | 72* | 3.7 | 840 |
| | | Precuneus | 7 | 28 | −52 | 62* | 3.8 | 608 |
| | | | 7 | −14 | −50 | 68* | 3.5 | 248 |
| | Subcortical | Amygdala^ | – | 26 | −6 | −18* | 3.1 | 440 |
| | | | | −26 | −2 | −14* | 2.1 | 48 |
| | Cerebellum | Anterior lobe | – | 16 | −34 | −28* | 3.8 | 224 |
| Fear-neutral | | | | | | | | |
| Females | Frontal | Mid-cingulate | 24 | 8 | −2 | 46 | 4.6 | 3360 |
| | | Middle frontal | 6 | 22 | −8 | 70 | 3.8 | 336 |
| | Temporal | Insula | 13 | −46 | −16 | −8* | 3.8 | 568 |
| | | Superior temporal | 22 | −58 | 6 | 0* | 4.2 | 344 |
| | | | | −66 | −24 | 8* | 4.1 | 224 |
| | Parietal | Postcentral | 3 | −60 | −16 | 44* | 4.9 | 6712 |
| | | | | (−60 | −18 | 40) | | |
| | | Inferior parietal lobule | 40 | 50 | −34 | 52 | 4.5 | 6568 |
| | | Superior parietal lobule | 7 | −20 | −60 | 64 | 4.0 | 904 |
| | Subcortical | Amygdala^ | – | 22 | −2 | −14* | 2.9 | 208 |
| | | | | −24 | −2 | −14* | 2.5 | 72 |
| | Cerebellum | Declive | – | 24 | −64 | −20* | 4.3 | 712 |
| Males | Frontal | Mid-cingulate | 24 | −6 | 12 | 32* | 4.3 | 1800 |
| | | | | (−4 | 12 | 32) | | |
| | | Medial frontal | 6 | 6 | −6 | 64* | 3.5 | 864 |
| | Parietal | Postcentral/inferior parietal gyrus | 5/40 | 36 | −38 | 68 | 4.4 | 8128 |
| | | Superior parietal lobule | 7 | −20 | −44 | 74 | 3.5 | 368 |
| | Subcortical | Amygdala^ | – | 22 | −8 | −12 | 1.9 | 72 |
| Happy-neutral | | | | | | | | |
| Females | Parietal | Precuneus/cuneus | 23/17 | −6 | −60 | 24 | 5.2 | 5984 |
| | Subcortical | Caudate body | – | 22 | 12 | 26* | 4.3 | 448 |
| Males | Frontal | Paracentral lobule/mid-cingulate/posterior cingulate | 31/24/23 | −8 | −20 | 54* | 5.0 | 30 472 |
| | | Superior frontal | 6/8 | 28 | 20 | 56* | 4.2 | 888 |
| | | Mid-cingulate | 24 | −6 | 4 | 34* | 4.4 | 616 |
| | Temporal | Middle temporal/superior occipital | 39/19 | 46 | −64 | 28* | 4.3 | 2408 |
| | | Insula | 13 | −50 | −8 | 8* | 3.8 | 312 |
| | | | 13 | 38 | −22 | 16* | 3.6 | 304 |
| | | | | (38 | −22 | 20) | | |
| | Parietal | Postcentral | 3 | −20 | −36 | 72* | 3.9 | 1408 |
| | | | 3 | 54 | −14 | 44 | 3.6 | 584 |
| | Occipital | Lingual/fusiform | 19 | −36 | −68 | 6* | 3.9 | 576 |
| | | Cuneus | 19 | −12 | −88 | 32 | 3.9 | 520 |
| | | | 17 | 10 | −92 | 12* | 3.8 | 456 |
| | | Middle occipital | 18 | 18 | −84 | 20* | 3.8 | 312 |
| | | | | (18 | −82 | 20) | | |
| | Subcortical | Caudate | – | −20 | 8 | 30* | 4.6 | 976 |
| | | | – | −24 | −32 | 30* | 3.7 | 568 |
| | | Amygdala^ | – | 22 | −8 | −12* | 2.4 | 280 |
| | | | | −26 | −2 | −14* | 2.7 | 72 |
| | Cerebellum | Culmen | – | 24 | −54 | −16* | 4.8 | 2720 |
| | | | – | −26 | −48 | −14* | 3.9 | 1120 |
| | | | – | −12 | −38 | −6* | 4.1 | 280 |
| Sad-neutral | | | | | | | | |
| Females | Parietal | Posterior cingulate | 31 | −2 | −64 | 20* | 4.5 | 832 |
| | | | | (0 | −64 | 20) | | |
| Males | Frontal | Mid-cingulate | 24 | −22 | 4 | 44* | 3.9 | 1072 |
| | | | | (−22 | 6 | 44) | | |
| | | | | 20 | 6 | 34* | 4.2 | 592 |
| | | | | (20 | 8 | 34) | | |
| | | | | −8 | 6 | 34 | 3.9 | 224 |
| | | Posterior cingulate | 31 | 16 | −36 | 40* | 4.3 | 712 |
| | | Middle frontal | 8 | 24 | 26 | 42* | 4.1 | 608 |
| | Parietal | Precuneus | 7 | 16 | −48 | 64* | 3.7 | 416 |
| | Subcortical | Insula | 13 | 36 | −2 | 22* | 4.1 | 320 |
| | | Caudate | – | −22 | 0 | 22* | 3.7 | 216 |

*Indicates a region that is exclusive to females or to males (within five voxels per x, y, z coordinate; exact coordinates in parentheses if different from original coordinate identified in females only/male only analyses).

Note: Combined height (P < 0.05 corrected) and cluster level (mm3 > 216) threshold for significance in whole-brain analyses. Combined height (P < 0.05 corrected) and cluster level (mm3 > 39) threshold for significance in ROI (amygdala) analyses (^ denotes ROI analysis).

Fig. 1.

Areas of activation for females and males. Panels (A–D) illustrate statistically significant activation for anger, panels (E–H) for fear, panels (I–L) for happy and panels (M–P) for sad. In every panel, areas exclusive to females are shown in red, areas exclusive to males in green and regions common to females and males in blue. Panels A, E, I and M are located at coordinates −4 +10 +32. Panels B, F and J are located at coordinates +8 +7 +32. Panel N is located at coordinates 0 −18 13. Panels C, G, K and O are located at coordinates +62 −24 +18. Panels D, H, L and P are located at coordinates −42 −36 +58.

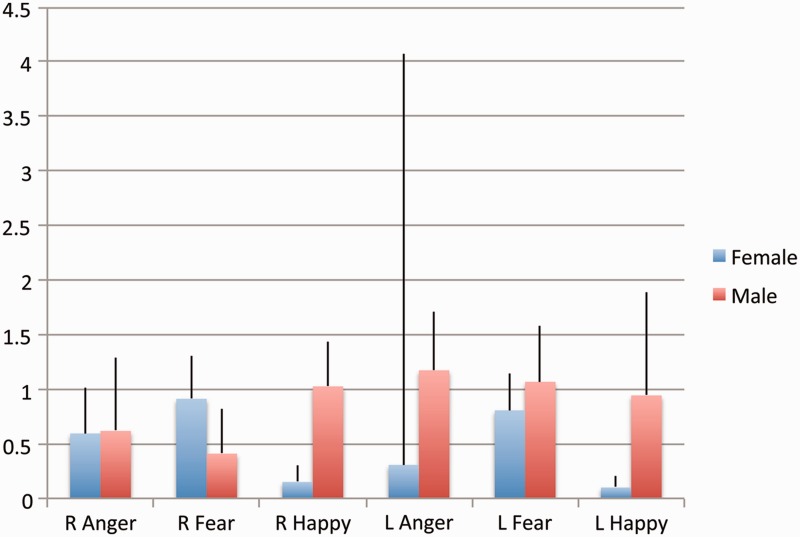

For the amygdala ROI analyses, males demonstrated nominally greater lateralization to the left for fear and anger, but bilateral activation for happy. Males also demonstrated indiscriminate levels of activation for positive and negative emotions, whereas females activated amygdala to a greater extent in response to anger and fear, relative to happy. Activation of amygdala for sad was not significant for females or males (see Table 3 and Figure 2). To specifically test whether there is an interaction of gender × emotion × hemisphere, we ran a repeated measures ANOVA. For anger, there were two regions of activation in each hemisphere, so we created an average for each hemisphere, weighted by extent of activation. Here we observed a significant interaction of gender × emotion, F(1, 36) = 6.03, P < 0.05, primarily driven by happy, but the interaction of gender × emotion × hemisphere was not significant (P = 0.15).
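The extent-weighted hemisphere average described above can be sketched as follows. The activation values and cluster extents in the example are hypothetical placeholders chosen for easy arithmetic, and the function name is illustrative rather than taken from the analysis software.

```python
def extent_weighted_mean(values, extents_mm3):
    """Average cluster activation values, weighting each by its extent (mm3)."""
    if len(values) != len(extents_mm3):
        raise ValueError("values and extents must have the same length")
    total_extent = sum(extents_mm3)
    if total_extent <= 0:
        raise ValueError("total extent must be positive")
    weighted_sum = sum(v * w for v, w in zip(values, extents_mm3))
    return weighted_sum / total_extent


# Hypothetical example: two clusters in one hemisphere.
cluster_values = [1.0, 3.0]    # activation estimates (arbitrary units)
cluster_extents = [40, 120]    # cluster sizes in mm3

hemisphere_mean = extent_weighted_mean(cluster_values, cluster_extents)
print(hemisphere_mean)  # 2.5, i.e. (1.0 * 40 + 3.0 * 120) / 160
```

Weighting by extent lets the larger cluster dominate the hemisphere estimate, so a small secondary cluster does not pull the average as strongly as it would in an unweighted mean.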

Fig. 2.

Emotion × hemisphere × gender amygdala activation. Weighted averages (weighted by extent of activation) of right and left amygdala activation for anger, fear and happy in females and males.

Sex differences in emotion activation

The independent sex effect was significant (F = 11.29, P < 0.05 corrected). Activation was significantly different between females and males in a wide range of frontal, temporal, parietal, occipital and subcortical regions, in addition to cerebellum and midbrain (see Table 4).

Table 4.

Foci of significant sex effects across all emotions for Experiment 2

| Lobe | Region | BA | MNI x | MNI y | MNI z | Z | mm3 |
|---|---|---|---|---|---|---|---|
| Frontal | Superior frontal | 10 | 22 | 62 | 14 | 6.6 | 12 048 |
| | | 8 | −22 | 26 | 54 | 4.7 | 1008 |
| | | | 2 | 34 | 54 | 4.1 | 224 |
| | Superior/middle frontal | 10/46 | −30 | 50 | 28 | 4.7 | 888 |
| | Middle frontal | 46 | 48 | 26 | 22 | 5.3 | 4768 |
| | Precentral | 4 | 52 | −10 | 52 | 4.7 | 3080 |
| | | | 24 | −22 | 64 | 4.0 | 1240 |
| | | 6 | −48 | 0 | 38 | 4.0 | 624 |
| | Medial frontal | 6 | 4 | −2 | 68 | 3.6 | 384 |
| | | 10 | 18 | 46 | 12 | 3.7 | 304 |
| | Inferior frontal | 47 | 38 | 22 | −16 | 3.9 | 312 |
| Temporal | Middle temporal | 21 | 62 | −54 | 6 | 5.2 | 736 |
| | | | 44 | 4 | −24 | 3.5 | 264 |
| Parietal | Posterior cingulate | 30 | 24 | −58 | 10 | 6.7 | 1680 |
| | | | −20 | −50 | 12 | 4.8 | 944 |
| | Postcentral | 2 | −42 | −20 | 36 | 4.3 | 592 |
| | | 3 | 26 | −30 | 66 | 3.6 | 240 |
| | Inferior parietal lobule | 40 | 66 | −32 | 34 | 4.3 | 256 |
| Occipital | Cuneus | 17 | 10 | −92 | 12 | 5.5 | 1656 |
| | Lingual | 18 | 6 | −66 | 10 | 3.9 | 552 |
| | | 19 | 36 | −52 | 10 | 4.6 | 512 |
| Subcortical | Amygdala/parahippocampal | – | −34 | −8 | −12 | 6.5 | 51 176 |
| | Claustrum | – | 34 | −18 | 12 | 4.9 | 2456 |
| | | – | 36 | −4 | 10 | 3.6 | 256 |
| | Insula | 13 | 48 | 6 | −6 | 5.5 | 1200 |
| | | | 42 | 22 | 8 | 4.5 | 536 |
| | Hippocampus/parahippocampus | – | 36 | −10 | −16 | 4.5 | 1120 |
| | Thalamus | – | 12 | −12 | 18 | 4.0 | 432 |
| | Amygdala^ | – | −32 | −6 | −18 | 4.0 | 288 |
| | Medial globus pallidus | | −8 | 4 | −4 | 5.6 | 272 |
| Cerebellum | Declive/parahippocampal/fusiform | – | 24 | −32 | 24 | 5.0 | 2616 |
| | Fusiform gyrus/cerebellar tonsil | | −18 | −36 | −36 | 5.1 | 920 |
| | Culmen | | −6 | −52 | 0 | 4.6 | 840 |
| | | | 24 | −42 | −10 | 3.7 | 216 |
| Midbrain | – | | 0 | −26 | −24 | 4.6 | 624 |
| | Red nucleus | | 0 | −14 | −6 | 4.2 | 392 |

Note: Combined height (P < 0.05 corrected) and cluster level (mm3 > 216) threshold for significance in whole-brain analyses. Combined height (P < 0.05 corrected) and cluster level (mm3 > 39) threshold for significance in ROI (amygdala) analyses (^ denotes ROI analysis).

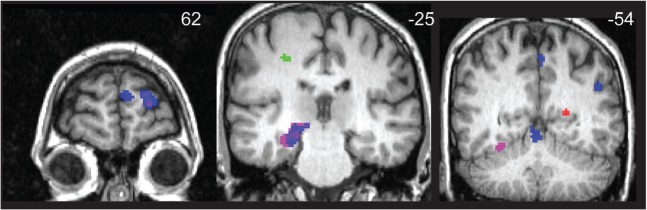

Post hoc t-tests were conducted in SPM5 to assess the direction of activation differences for females and males for each emotion-neutral contrast. No area of activation was significantly greater in females than in males for any of the four emotions (i.e. happy, fear, anger, sad). Several areas for each emotion were significantly more active in males than in females, however. For anger, males showed significantly greater activation in a large region encompassing right amygdala/hippocampus/medial geniculum body of the thalamus and right mid-cingulate and fusiform gyri, left middle frontal gyrus and a number of regions in bilateral cerebellum. For fear, males demonstrated greater activation relative to females in right postcentral gyrus. For happy, males demonstrated greater activation than females in left superior and middle frontal and middle temporal gyri, left precuneus and right cerebellum (culmen); the sex difference for happy was especially striking. For sad, males displayed greater activation than females in left posterior cingulate and caudate body. Table 5 and Figure 3 display specific areas of activation for each emotion activated more in males, relative to females. For ROI analyses of the amygdala, males exhibited greater left amygdala activation, relative to females, for anger and happy and greater right amygdala activation for sad (see Table 5).

Table 5.

Significantly greater activation for males relative to females for emotion-specific areas in Experiment 2

| Contrast/lobe | Region | BA | MNI x | MNI y | MNI z | Z | mm3 |
|---|---|---|---|---|---|---|---|
| Anger-neutral | | | | | | | |
| Frontal | Cingulate gyrus | 23 | −4 | −10 | 34 | 3.4 | 256 |
| | Middle frontal gyrus | 10 | 24 | 58 | 20 | 3.4 | 240 |
| Parietal | Fusiform gyrus/cerebellum declive | – | −30 | −54 | −16 | 4.0 | 992 |
| | Fusiform gyrus | 37 | −46 | −58 | −14 | 3.9 | 288 |
| Subcortical | Amygdala/hippocampus/thalamus-medial geniculum body | – | −18 | −22 | −4 | 4.2 | 3064 |
| | Amygdala^ | – | 28 | −8 | −16 | 2.1 | 104 |
| Cerebellum | Culmen | – | 14 | −34 | −24 | 3.4 | 280 |
| | Tonsil/pons | | −18 | −36 | −36 | 4.0 | 256 |
| Fear-neutral | | | | | | | |
| Parietal | Postcentral | 3 | −30 | −24 | 48 | 3.6 | 264 |
| Happy-neutral | | | | | | | |
| Frontal | Superior frontal gyrus | 10 | 22 | 62 | 14 | 4.3 | 1312 |
| | | 8 | 28 | 22 | 56 | 4.1 | 376 |
| Temporal | Middle temporal gyrus | 39 | 48 | −56 | 32 | 3.9 | 744 |
| Parietal | Precuneus | 7 | 2 | −50 | 58 | 3.9 | 480 |
| Subcortical | Amygdala^ | – | 24 | −2 | −14 | 2.3 | 80 |
| Cerebellum | Culmen | – | −2 | −56 | −8 | 3.5 | 392 |
| Sad-neutral | | | | | | | |
| Parietal | Posterior cingulate | 31 | 16 | −46 | 28 | 4.7 | 504 |
| | | | 20 | −36 | 38 | 3.4 | 344 |
| | | 30 | 22 | −56 | 12 | 4.0 | 224 |
| Subcortical | Caudate body | – | −22 | 0 | 22 | 3.8 | 224 |
| | Amygdala^ | – | −30 | −4 | −18 | 2.0 | 70 |

Note: Combined height (P < 0.05 corrected) and cluster level (mm3 > 216) threshold for significance in whole-brain analyses. Combined height (P < 0.05 corrected) and cluster level (mm3 > 39) threshold for significance in ROI (amygdala) analyses (^ denotes ROI analysis).

Fig. 3.

Effect of sex on emotion activation. Areas of activation that were greater for males relative to females are depicted. Anger is displayed in magenta, fear in green, happy in blue and sad in red.

In post hoc analyses, we also examined whether females and males differ in activation during identification of facial emotions when specific emotions are compared directly with one another. In prior (unpublished) analyses and in the broader literature, we have observed that activation for the negative emotions (each relative to neutral) is quite similar when they are directly compared. Therefore, before modeling each emotion separately in the overall model, we compared the negative emotions with one another in the entire group of females and males. Only one pairwise comparison of the negative emotions (fear-neutral vs anger-neutral) yielded a significant difference, in a small cluster in the prefrontal cortex (34 −16 66, mm3 = 248). We therefore combined all negative emotions for the All Negative-Happy and Happy-All Negative contrasts. A number of regions differed significantly for these contrasts in the whole group (reported in Table 6). We then tested the gender by emotion interaction in the regions that were significant in these whole-group analyses; significant interactions were detected in two regions of the culmen of the cerebellum, as well as in left lingual gyrus, left insula and left middle frontal gyrus. These regions are denoted by asterisks in Table 6. Follow-up t-tests demonstrated that for happy (relative to all negative emotions), males activated one region of the cerebellum culmen (12 −38 −6) significantly more than females [for whom this region was actually deactivated, t(36) = 2.7, P = 0.01]. For All Negative (relative to happy), males deactivated lingual gyrus significantly more than females, t(36) = −2.2, P = 0.04. Males also deactivated one region of the cerebellum culmen (24 −54 −16) significantly more than did females, t(36) = −2.4, P = 0.02.
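As a rough illustration of the follow-up comparisons described above, the sketch below computes an independent-samples t statistic with pooled variance. It assumes, because the text does not specify the test variant, that the follow-up tests compared mean extracted activation between the 21 females and 17 males of Experiment 2, which would yield the reported 36 degrees of freedom (21 + 17 − 2). The function name `pooled_t` and all input values are hypothetical; none of the numbers are data from this study.

```python
import math


def pooled_t(group_a, group_b):
    """Return (t, df) for an independent-samples t-test with pooled variance."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    mean_a = sum(group_a) / n_a
    mean_b = sum(group_b) / n_b
    # Sums of squared deviations from each group's own mean.
    ss_a = sum((v - mean_a) ** 2 for v in group_a)
    ss_b = sum((v - mean_b) ** 2 for v in group_b)
    df = n_a + n_b - 2
    pooled_var = (ss_a + ss_b) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se, df


# Hypothetical extracted signal values (arbitrary units), NOT study data.
males = [0.10 + 0.02 * i for i in range(17)]
females = [-0.05 + 0.02 * i for i in range(21)]

t_stat, df = pooled_t(males, females)
print(f"t({df}) = {t_stat:.2f}")
```

With 17 and 21 observations the function returns 36 degrees of freedom, matching the form of the t(36) statistics reported in the text.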

Table 6.

Whole group effects of all negative vs happy contrasts on activation

| Contrast/lobe | Region | BA | MNI x | MNI y | MNI z | Z | mm3 |
|---|---|---|---|---|---|---|---|
| Happy-all negative | | | | | | | |
| Frontal | Middle frontal gyrus | 6 | 28 | 20 | 56 | 4.0 | 696 |
| | Cingulate gyrus | 24 | −6 | 4 | 34 | 4.0 | 288 |
| Temporal | Middle temporal | 39 | 46 | −64 | 28 | 4.3 | 2248 |
| | Fusiform gyrus | 37 | −44 | −56 | −4 | 3.5 | 224 |
| Parietal | Posterior cingulate | 30 | −2 | −50 | 22 | 4.9 | 27 024 |
| Occipital | Cuneus | 17 | 10 | −92 | 12 | 3.7 | 432 |
| | | 19 | −12 | −88 | 32 | 3.8 | 336 |
| | Lingual | 19 | −36 | −68 | 6* | 4.0 | 296 |
| | Middle occipital | 18 | 18 | −84 | 20 | 3.7 | 248 |
| Subcortical | Caudate | – | −20 | 8 | 30 | 4.3 | 624 |
| | | – | −24 | −32 | 30 | 3.5 | 344 |
| Cerebellum | Culmen | – | 24 | −54 | −16* | 4.7 | 2424 |
| | | | −26 | −48 | −14 | 3.9 | 1112 |
| | | | −24 | −26 | −16 | 4.1 | 776 |
| | | | −12 | −38 | 6* | 4.1 | 296 |
| All negative-happy | | | | | | | |
| Frontal | Middle frontal | 9 | 44 | 18 | 28* | 3.6 | 536 |
| Subcortical | Insula | – | −34 | 28 | −4 | 4.4 | 842 |
| | | | 34 | 26 | 2* | 4.0 | 720 |

Note: Combined height (P < 0.05 corrected) and cluster level (mm3 > 216) threshold for significance in whole-brain analyses.

*Indicates a region that is significant for gender × emotion interaction at P < 0.05.

Relationship of performance to regions with significant sex effects

Finally, Pearson product-moment correlations were conducted to investigate associations between FEPT performance (i.e. accuracy) and extracted fMRI activation in areas that were significantly different between females and males. This set of analyses was exploratory and descriptive; therefore, no correction for multiple comparisons was employed. In males, there were no significant relationships between extracted mean BOLD signal for any of the four emotions and performance. In females, only activation in left precuneus (happy extracted mean from Table 3; 2, −50, 58) was related to accuracy for happy faces (r = 0.48, P < 0.05).
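The correlation analysis described above can be sketched as follows. This is a minimal illustration, not the authors' code: `pearson_r` and `r_to_t` are hypothetical helper names, and the sample size of 21 assumes that all females in the imaging sample contributed to the reported correlation. Under that assumption, converting r = 0.48 to a t statistic with n − 2 = 19 degrees of freedom gives a value of about 2.38, which exceeds the two-tailed 0.05 critical value of 2.093 and so agrees with the reported P < 0.05.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length, n >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether a correlation differs from zero."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Reported coefficient for happy accuracy vs. precuneus activation in females.
# n = 21 is an assumption (the full female imaging sample).
t_reported = r_to_t(0.48, 21)
print(f"t(19) = {t_reported:.2f}")
```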

DISCUSSION

The two experiments presented demonstrate that males show generally greater extent and height of activation than females when processing facial emotions; moreover, these findings held for facial emotions for which females did not show better accuracy than males, and by and large, activation was unrelated to performance. Thus, the present study identified sex differences in functional activation during facial emotion processing among healthy males and females and reduced ambiguity in the prior literature regarding whether sex-related activation differences are explained by performance differences. The present findings indicate that activation differences between males and females appear to reflect differences in how emotion is processed, irrespective of accuracy, at least at a linear level.

Experiment 1 confirmed the hypothesis that females and males differ in their accuracy and sensitivity in categorizing facial emotions; females outperformed males in identifying fearful faces. This finding strengthens existing literature demonstrating that females are more adept than males at classifying facial emotions, and specifically negatively valenced emotions (Montagne et al., 2005). At the same time, females have been found to be more accurate than males at classifying facial expressions of anger (Montagne et al., 2005); while we did not replicate those findings here, the observed effects were in the same direction as in previous work.

As initially hypothesized, females and males displayed different patterns of activation during identification of facial emotions. For anger, happy and sad emotions, males showed substantially more widespread activation than females, and this was especially true for happy expressions of emotion. Conversely, females activated more widespread regions for fear than did males, although the magnitude of activation was not greater in females than in males for any region.

For anger, males activated several regions that were not activated in females and that prior studies have shown to be important for processing angry facial expressions, including right cingulate cortex (Fusar-Poli et al., 2009; Jehna et al., 2011). Males also activated areas of the temporal and parietal cortex that were not activated in females, including the right inferior parietal lobule and the precuneus bilaterally. Both females and males demonstrated activation for angry faces in left superior temporal gyrus, although the extent of activation was much more widespread in females when compared with males. The superior temporal gyrus has been shown in previous studies to be important for processing of emotional faces in general, and it is even activated during processing of neutral faces (Kesler-West et al., 2001). That females activated this region to a greater extent than did males and activated fewer other regions may suggest that females are more efficient at processing angry emotion, utilizing an area that is generally important for face processing, but requiring few other regions for achieving equivalent performance to males, who activated with greater regional extent. The suggestion here is one of compensation: although females and males do equally well in identifying angry emotion, males may activate more diffuse brain regions to achieve similar levels of performance.

A similar picture of more diffuse activation in males relative to females was observed in identification of happy emotional expressions. Males activated larger extents of widespread cortical regions and limbic regions than did females, whereas females only significantly activated the right precuneus/cuneus and the left caudate body. For sad, again, a similar picture emerged, whereby females activated only posterior cingulate/precuneus. Males activated posterior cingulate and precuneus as well, but more so than females, with additional activation observed in areas shown to be important for emotion processing, including mid-cingulate, insula, caudate, precuneus and middle frontal gyrus (Phan et al., 2002; Fusar-Poli et al., 2009). The posterior cingulate/precuneus region has been associated with self-referential processing or mediation of emotion and memory-related processing (Maddock et al., 2003; Rameson et al., 2010) and has been shown to be active especially during processing of negative emotions (Phan et al., 2002). That males required engagement of additional regions associated with emotion processing again suggests a compensation hypothesis, as with processing of anger and happy emotions.

In contrast to anger, happy and sad faces, females demonstrated much more widespread activation for fearful faces, relative to males. Overall, females and males showed activation in similar regions for fear, including cingulate, postcentral gyrus and superior parietal lobule. At the same time, the laterality of these regions was different for females and males in several areas, with females generally demonstrating left-sided activation in frontal regions and right-sided activation in temporal regions. The pattern was much more mixed for males, who showed activation of frontal regions bilaterally. This finding contradicts studies that have demonstrated greater lateralization (typically to the right hemisphere) of brain functioning in males than in females (Bowers et al., 1985; Bowers and LaBarba, 1988; Hines et al., 1992; Russo et al., 1999), although these studies have not specifically studied emotion processing (with the exception of Bowers et al., 1985). That females showed more widespread activation for fear relative to males is interesting in light of the finding that females were also more accurate in identifying fearful faces. Taken together, these findings suggest that females process fearful facial expressions differently than males, with greater hemispheric specialization, and use several additional brain regions, including right superior temporal gyrus, left middle frontal gyrus and right insula. The insula has been found to be important for processing fear in a meta-analysis, although this study did not examine sex effects (Phan et al., 2002).

In ROI analyses of the amygdala, we demonstrate a pattern trending toward greater left lateralization in males for negative emotions (fear and anger), but not for happy, where males demonstrated a more bilateral pattern of activation. Neither females nor males displayed significant amygdala activation for sad. These findings are consistent with previous studies showing greater lateralization in the amygdala among males during tasks of emotion processing (Schneider et al., 2000; Killgore et al., 2001), but differ from the results of a meta-analysis showing that females and males demonstrate equivalent patterns of bilateral amygdala activation, though with different regions of peak density, with males showing greater peak density in the right sublenticular area and females in the left sublenticular area (Wager et al., 2003). This prior investigation of Wager and colleagues included studies using a variety of emotion processing tasks and also collapsed findings across emotions. It is notable that in the current study, males demonstrated a pattern of bilateral activation for happy, but not for negative emotions (fear and anger), suggesting that collapsing emotions may mask laterality differences in females and males. The other relevant finding from the ROI analyses was that females activated the amygdala to a greater extent for fear and anger, relative to happy, whereas males activated amygdala indiscriminately regardless of valence. Given that the amygdala has been conceptualized as a ‘relevance detector’ (Sander et al., 2003), this finding suggests that for females, negative emotions are most relevant, whereas for males, positive and negative emotions are salient. This hypothesis would also be consistent with findings of less activation of posterior regions in males than in females during processing of negative emotions, relative to happy. It is also supportive of findings that females demonstrate smaller priming to emotionally negative, relative to positive conditions compared with males, interpreted as females having more sensitivity to emotional stimuli with a negative valence (Gohier et al., 2013).

Increased sensitivity of select brain regions to specific emotions among females may confer risk of mood disorder and help to explain higher rates of mood disorders in females (American Psychiatric Association, 2000). While the current study did not address this hypothesis, per se, it is interesting to consider findings in light of studies showing greater activation in males of regions important for emotion regulation (i.e. prefrontal regions and anterior cingulate) when asked to modulate their responsiveness to emotional stimuli (Mak et al., 2009; Domes et al., 2010). Greater sensitivity to negative emotions among females may bestow greater difficulty in employing regulatory mechanisms at times when doing so would be beneficial. Clinicians working with females who struggle with emotion regulation might consider the role that increased sensitivity to negative emotion has in the regulatory process. For example, emotion modulatory strategies might be combined with building skills that work to ‘unlearn’ emotion sensitization in the initial stages of emotion processing.

To our knowledge, this is the first study to address the influence of sex on brain activation during processing of facial emotions that concurrently evaluates the effect of performance on differential patterns of activation. Females and males who underwent fMRI also showed a wide range of accuracies, allowing for the opportunity to examine relationships between performance and activation in regions that differed among males and females in the imaging data. Given that these were healthy adults, it is difficult to evoke truly dysfunctional performance; however, future studies might add more and subtler emotions (e.g. disgust, contempt, surprise) to those examined in the present study. A wider and subtler range of emotions might further reduce the sex-specific patterns of activation observed during facial emotion identification. Additionally, metacognitive processes such as confidence in accuracy might be interesting to pursue; it would be of interest to know whether these adults are aware when they incorrectly identify emotions and how this phenomenon relates to neural activation. Studies that systematically examine errors in emotion identification also might be fruitful toward understanding sex-related differences in emotion processing. One additional limitation of the present study is that we did not assess for phase of menstrual cycle. This characteristic may be predictive of accuracy for identification of facial emotions and patterns of functional activation during facial emotion processing (Derntl et al., 2008). The number of subjects may also have been relatively small for addressing relationships between BOLD activation and performance variations.

In sum, the findings support the view that females and males process facial emotions with different neural circuitry, and this is not fully accounted for by variations in performance. They emphasize the importance of considering sex as a moderating variable in studies of facial emotion processing. More broadly, these data have relevance for psychopathological processes where sex differences have been described, such as in mood and anxiety disorders, and where sex differences in their neurobiological substrates are just starting to be explored.

Acknowledgments

This work was supported by the National Institutes of Health [KL2 Career Development Award RR024987 and K23 Award MH074459 to S.A.L.]; a Young Investigators Award from the National Alliance for Research on Schizophrenia and Depression [to S.A.L.], the University of Michigan Depression Center [Rachel Upjohn Clinical Scholars Awards to S.L.W. and S.A.L.]; the University of Michigan Department of Psychiatry [Psychiatry Research Committee Award to S.A.L.]; the University of Michigan General Clinical Research Center [MUL1RR024986 to S.A.L. and M.N.S.]; the University of Michigan fMRI Center Pilot Scans [to S.L.W./S.A.L.] and the Phil F. Jenkins Foundation [to J.K.Z.]. The behavioral performance for some of the female subjects (outside the scanner, N = 5) has been reported previously (Langenecker et al., 2005, 2007; Wright and Langenecker, 2009; Wright et al., 2009). Michael T. Ransom, Ph.D., Matthew Mordhorst, Ph.D., Michael L. Brinkman, Psy.D., Elisabeth A. Wilde, Ph.D., Jennifer L. Huffman, Ph.D., Kathleen E. Hazlett, Leslie M. Guidotti, M.S., Allison M. Kade, Benjamin D. Long, Lawrence S. Own, Hadia M. Leon, Thomas Hooven, Karandeep Singh, Ciaran Considine, Michael-Paul Schallmo, Erich S. Avery and Lindsay Franti and the staff and technicians at the University of Michigan fMRI Lab are thanked for their assistance in task design, data collection and processing with this work.

REFERENCES

- Aleman A, Swart M. Sex differences in neural activation to facial expressions denoting contempt and disgust. PLoS One. 2008;3(11):e3622. doi: 10.1371/journal.pone.0003622.

- Almeida JRC, Versace A, Hassel S, Kupfer DJ, Phillips ML. Elevated amygdala activity to sad facial expressions: a state marker of bipolar but not unipolar depression. Biological Psychiatry. 2010;67(5):414–21. doi: 10.1016/j.biopsych.2009.09.027.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 4th edn, text revision. Washington, DC: Author; 2000.

- Bowers CA, LaBarba RC. Sex differences in the lateralization of spatial abilities: a spatial component analysis of extreme group scores. Brain and Cognition. 1988;8(2):165–77. doi: 10.1016/0278-2626(88)90047-4.

- Bowers D, Bauer RM, Coslett HB, Heilman KM. Processing of faces by patients with unilateral hemisphere lesions. I. Dissociation between judgments of facial affect and facial identity. Brain and Cognition. 1985;4(3):258–72. doi: 10.1016/0278-2626(85)90020-x.

- Canli T, Cooney RE, Goldin P, et al. Amygdala reactivity to emotional faces predicts improvement in major depression. Neuroreport. 2005;16(12):1267–70. doi: 10.1097/01.wnr.0000174407.09515.cc.

- Caseras X, Mataix-Cols D, An SK, et al. Sex differences in neural responses to disgusting visual stimuli: implications for disgust-related psychiatric disorders. Biological Psychiatry. 2007;62(5):464–71. doi: 10.1016/j.biopsych.2006.10.030.

- Chan SW, Norbury R, Goodwin GM, Harmer CJ. Risk for depression and neural responses to fearful facial expressions of emotion. British Journal of Psychiatry. 2009;194(2):139–45. doi: 10.1192/bjp.bp.107.047993.

- Corwin J. On measuring discrimination and response bias: unequal numbers of targets and distractors and two classes of distractors. Neuropsychology. 1994;8(1):110–7.

- Costafreda SG, Khanna A, Mourao-Miranda J, Fu CHY. Neural correlates of sad faces predict clinical remission to cognitive behavioral therapy in depression. Neuroreport. 2009;20(7):637–41. doi: 10.1097/WNR.0b013e3283294159.

- Dannlowski U, Ohrmann P, Bauer J, et al. Amygdala reactivity to masked negative faces is associated with automatic judgmental bias in major depression: a 3 T fMRI study. Journal of Psychiatry & Neuroscience. 2007;32(6):423–9.

- Dannlowski U, Ohrmann P, Bauer J, et al. 5-HTTLPR biases amygdala activity in response to masked facial expressions in major depression. Neuropsychopharmacology. 2008;33(2):418–24. doi: 10.1038/sj.npp.1301411.

- Derntl B, Habel U, Windischberger C, et al. General and specific responsiveness of the amygdala during explicit emotion recognition in females and males. BMC Neuroscience. 2009;10:91. doi: 10.1186/1471-2202-10-91.

- Derntl B, Windischberger C, Robinson S, et al. Facial emotion recognition and amygdala activation are associated with menstrual cycle phase. Psychoneuroendocrinology. 2008;33(8):1031–40. doi: 10.1016/j.psyneuen.2008.04.014.

- Domes G, Schulze L, Böttger M, et al. The neural correlates of sex differences in emotional reactivity and emotion regulation. Human Brain Mapping. 2010;31:758–69. doi: 10.1002/hbm.20903.

- Ekman P, Friesen W. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Friston KJ, Holmes A, Worsley K, Poline JB, Frith CD, Frackowiak RS. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995;2:189–210.

- Frodl T, Scheuerecker J, Albrecht J, et al. Neuronal correlates of emotional processing in patients with major depression. World Journal of Biological Psychiatry. 2009;10(3):202–8. doi: 10.1080/15622970701624603.

- Fusar-Poli P, Placentino A, Carletti F, et al. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry and Neuroscience. 2009;34(6):418–32.

- Glover GH, Thomason ME. Improved combination of spiral-in/out images for BOLD fMRI. Magnetic Resonance in Medicine. 2004;51(4):863–8. doi: 10.1002/mrm.20016.

- Gohier B, Senior C, Brittain PJ, et al. Gender differences in the sensitivity to negative stimuli: cross-modal affective priming study. European Psychiatry. 2013;28(2):74–80. doi: 10.1016/j.eurpsy.2011.06.007.

- Grill-Spector K, Knouf N, Kanwisher N. The fusiform face area subserves face perception, not generic within-category identification. Nature Neuroscience. 2004;7(5):555–62. doi: 10.1038/nn1224.

- Hall GB, Witelson SF, Szechtman H, Nahmias C. Sex differences in functional activation patterns revealed by increased emotion processing demands. Neuroreport. 2004;15(2):219–23. doi: 10.1097/00001756-200402090-00001.

- Hall JA, Matsumoto D. Gender differences in judgments of multiple emotions from facial expressions. Emotion. 2004;4(2):201–6. doi: 10.1037/1528-3542.4.2.201.

- Hines M, Chiu L, McAdams LA, Bentler PM, Lipcamon J. Cognition and the corpus callosum: verbal fluency, visuospatial ability, and language lateralization related to midsagittal surface areas of callosal subregions. Behavioral Neuroscience. 1992;106(1):3–14. doi: 10.1037//0735-7044.106.1.3.

- Hofer A, Siedentopf CM, Ischebeck A, et al. Gender differences in regional cerebral activity during the perception of emotion: a functional MRI study. Neuroimage. 2006;32(2):854–62. doi: 10.1016/j.neuroimage.2006.03.053.

- Hofer A, Siedentopf CM, Ischebeck A, Rettenbacher MA, Verius M, Felber S, Wolfgang FW. Sex differences in brain activation patterns during processing of positively and negatively valenced emotional words. Psychological Medicine. 2007;37(1):109–19. doi: 10.1017/S0033291706008919.

- Jehna M, Neuper C, Ischebeck A, et al. The functional correlates of face perception and recognition of emotional face expressions as evidenced by fMRI. Brain Research. 2011;1393:73–83. doi: 10.1016/j.brainres.2011.04.007.

- Kesler-West ML, Andersen AH, Smith CD, et al. Neural substrates of facial emotion processing using fMRI. Cognitive Brain Research. 2001;11(2):213–26. doi: 10.1016/s0926-6410(00)00073-2.

- Killgore WD, Oki M, Yurgelun-Todd DA. Sex-specific developmental changes in amygdala responses to affective faces. Neuroreport. 2001;12(2):427–33. doi: 10.1097/00001756-200102120-00047.

- Killgore WD, Yurgelun-Todd DA. Sex differences in amygdala activation during the perception of facial affect. Neuroreport. 2001;12:2543–7. doi: 10.1097/00001756-200108080-00050.

- Killgore WD, Yurgelun-Todd DA. Sex-related developmental differences in the lateralized activation of the prefrontal cortex and amygdala during perception of facial affect. Perceptual and Motor Skills. 2004;99:371–91. doi: 10.2466/pms.99.2.371-391.

- Langenecker SA, Bieliauskas LA, Rapport LJ, Zubieta JK, Wilde EA, Berent S. Face emotion perception and executive functioning deficits in depression. Journal of Clinical and Experimental Neuropsychology. 2005;27(3):320–33. doi: 10.1080/13803390490490515720.

- Langenecker SA, Caveney AF, Giordani B, et al. The sensitivity and psychometric properties of a brief computer-based cognitive screening battery in a depression clinic. Psychiatry Research. 2007;152(2–3):143–54. doi: 10.1016/j.psychres.2006.03.019.

- Langenecker SA, Saunders EFH, Kade AM, Ransom MT, McInnis MG. Intermediate cognitive phenotypes in bipolar disorder. Journal of Affective Disorders. 2010;122(3):285–93. doi: 10.1016/j.jad.2009.08.018.

- Lee BT, Seok JH, Lee BC, et al. Neural correlates of affective processing in response to sad and angry facial stimuli in patients with major depressive disorder. Progress in Neuro-Psychopharmacology & Biological Psychiatry. 2008;32(3):778–85. doi: 10.1016/j.pnpbp.2007.12.009.

- Lee TM, Liu HL, Hoosain R, et al. Sex differences in neural correlates of recognition of happy and sad faces in humans assessed by functional magnetic resonance imaging. Neuroscience Letters. 2002;333(1):13–6. doi: 10.1016/s0304-3940(02)00965-5.

- Maddock RJ, Garrett AS, Buonocore MH. Posterior cingulate cortex activation by emotional words: fMRI evidence from a valence decision task. Human Brain Mapping. 2003;18(1):30–41. doi: 10.1002/hbm.10075.

- Mak AKY, Hu Z, Zhang JXX, Xiao Z, Lee TMC. Sex-related differences in neural activity during emotion regulation. Neuropsychologia. 2009;47:2900–8. doi: 10.1016/j.neuropsychologia.2009.06.017.

- Mathersul D, Palmer DM, Gur RC, et al. Explicit identification and implicit recognition of emotions: II. Core domains and relationships with general cognition. Journal of Clinical and Experimental Neuropsychology. 2008;31(3):278–91. doi: 10.1080/13803390802043619.

- Montagne B, Kessels RPC, Frigerio E, de Haan EHF, Perrett DI. Sex differences in the perception of affective facial expressions: do men really lack emotional sensitivity? Cognitive Processing. 2005;6(2):136–41. doi: 10.1007/s10339-005-0050-6.

- Mufson L, Nowicki S., Jr Factors affecting the accuracy of facial affect recognition. Journal of Social Psychology. 1991;131(6):815–22. doi: 10.1080/00224545.1991.9924668.

- Nurnberger JI, Jr, Blehar MC, Kaufmann CA, et al. Diagnostic interview for genetic studies. Rationale, unique features, and training. NIMH Genetics Initiative. Archives of General Psychiatry. 1994;51(11):849–59. doi: 10.1001/archpsyc.1994.03950110009002.

- Phan KL, Fitzgerald DA, Nathan PJ, Tancer ME. Association between amygdala hyperactivity to harsh faces and severity of social anxiety in generalized social phobia. Biological Psychiatry. 2006;59(4):424–9. doi: 10.1016/j.biopsych.2005.08.012.

- Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. NeuroImage. 2002;16:331–48. doi: 10.1006/nimg.2002.1087.

- Rameson LT, Satpute AB, Lieberman MD. The neural correlates of implicit and explicit self-relevant processing. Neuroimage. 2010;50(2):701–8. doi: 10.1016/j.neuroimage.2009.12.098.

- Rapport LJ, Friedman SR, Tzelepis A, Van VA. Experienced emotion and affect recognition in adult attention-deficit hyperactivity disorder. Neuropsychology. 2002;16(1):102–10. doi: 10.1037//0894-4105.16.1.102.

- Russo P, Persegani C, Papeschi LL, Nicolini M, Trimarchi M. Sex differences in hemisphere preference as assessed by a paper-and-pencil test. International Journal of Neuroscience. 1999;100(1–4):29–37.

- Sander G, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Reviews in Neuroscience. 2003;14(4):303–16. doi: 10.1515/revneuro.2003.14.4.303.

- Schneider F, Habel U, Kessler C, Salloum JB, Posse S. Gender differences in regional cerebral activity during sadness. Human Brain Mapping. 2000;9(4):226–38. doi: 10.1002/(SICI)1097-0193(200004)9:4<226::AID-HBM4>3.0.CO;2-K.

- Schienle A, Schafer A, Stark R, Walter B, Vaitl D. Gender differences in the processing of disgust- and fear-inducing pictures: an fMRI study. Neuroreport. 2005;16(3):277–80. doi: 10.1097/00001756-200502280-00015.

- Shirao N, Okamoto Y, Okada G, Ueda K, Yamawaki S. Sex differences in brain activity toward unpleasant linguistic stimuli concerning interpersonal relationships: an fMRI study. European Archives of Psychiatry and Clinical Neuroscience. 2005;255(5):327–33. doi: 10.1007/s00406-005-0566-x.

- Thayer JF, Johnsen BH. Sex differences in judgment of facial affect: a multivariate analysis of recognition errors. Scandinavian Journal of Psychology. 2000;41(3):243–6. doi: 10.1111/1467-9450.00193.

- Todorov N. Calculation of signal detection theory measures. Behaviour Research Methods, Instruments & Computers. 1999;31(1):137–49. doi: 10.3758/bf03207704.

- Tottenham N, Borscheid A, Ellertsen K, Marcus DJ, Nelson CA. Categorization of facial expressions in children and adults: establishing a larger stimulus set. Poster session at the annual meeting of the Cognitive Neuroscience Society; San Francisco, CA; 2002.

- Wager T, Phan KL, Liberzon I, Taylor SF. Valence, gender, and lateralization of functional brain anatomy in emotion: a meta-analysis of findings from neuroimaging. NeuroImage. 2003;19:513–31. doi: 10.1016/s1053-8119(03)00078-8.

- Wright SL, Langenecker SA. Differential risk for emotion processing difficulties by sex and age in major depressive disorder. In: Hernandez P, Alonso S, editors. Females and Depression. New York: Nova Science Publishers; 2009. pp. 183–216.

- Wright SL, Langenecker SA, Deldin PJ, et al. Sex-specific disruptions in emotion processing in younger adults with depression. Depression and Anxiety. 2009;26(2):182–9. doi: 10.1002/da.20502.