Abstract

Recent functional magnetic resonance imaging studies have reported activation of primary and secondary somatosensory cortices when participants observe another person or object being touched. In this study, we used event-related potentials to examine the nature and time-course of the neural mechanisms associated with the observation of humans and non-human objects being touched. Participants were presented with short video clips of a human arm or a non-human cylindrical object being touched by an object, compared with an object moving in front of the arms or cylinders without touching them. Touch vs non-touch effects were observed in the amplitudes of the N100 and N250 components, as well as a late slow wave component (500–600 ms), measured from electrodes over primary somatosensory cortex. Human vs non-human stimulus effects were reflected in the latencies of the N100, P170 and N250 components recorded over somatosensory cortex, as well as the temporal–parietal visual-perceptual N170 and N250 components. These findings suggest that human and non-human touch observation are associated with somatosensory processing at both an early sensory-perceptual stage and a relatively late cognitive stage, both preceding and following the perceptual encoding of the humanness of stimuli that typically occurs in extrastriate visual areas.

Keywords: touch observation, ERP, somatosensory processing, social context

INTRODUCTION

Recent functional magnetic resonance imaging (fMRI) studies have demonstrated that observation of another person being touched can activate both primary (SI) and secondary (SII) somatosensory cortices, core brain regions for the processing of bodily sensation. Some studies report similar activations of SI or SII when participants experience touch and when they observe another person or object being touched (Keysers et al., 2004; Blakemore et al., 2005; Schaefer et al., 2006, 2009; Ebisch et al., 2008, 2010; Osborn and Derbyshire, 2010). In a recent review of somatosensory processing in social contexts, Keysers et al. (2010) concluded that higher stages of somatosensory processing are activated during the observation of touch, as well as during the observation of an action or of someone experiencing somatic pain. They further suggest that this somatosensory activity may be related to visuotactile mirroring mechanisms, whereby the observation of an action automatically activates portions of the corresponding neural circuits in the observer (see e.g. Rizzolatti and Craighero, 2004; Cattaneo and Rizzolatti, 2010).

Because fMRI has low temporal resolution, event-related studies using electromagnetic imaging measures, such as electroencephalography (EEG) and magnetoencephalography (MEG), are critical for determining the neural time-course of observed touch processing. Two electromagnetic imaging studies have previously examined the time-course of somatosensory cortex activation during the observation of another person being touched. Pihko et al. (2010) recorded event-related MEG data while finger taps were delivered to the dorsum of participants’ right hand (touch condition) and while participants observed an experimenter being touched in a similar manner (observation condition). SI was activated during the first 300 ms of tactile stimulation, and similar activations were observed between 300 and 600 ms during the observation of touch. In an earlier event-related EEG study, somatosensory-evoked potentials (SEPs) were measured during the observation of painful and neutral tactile stimulation (Bufalari et al., 2007). The amplitude of the P45 component, a positive-going SEP component peaking ∼45 ms after somatosensory stimulus onset, was modulated during the observation of both painful and neutral touch.

These studies provide initial MEG and EEG/SEP evidence that somatosensory cortex is modulated by the observation of touch. However, the particular time-course of somatosensory cortex activation during human touch observation differed dramatically between studies. In addition, neither study examined the specificity of the activation of somatosensory cortex during the observation of touching humans vs non-human objects. The results of fMRI studies suggest that activation levels in somatosensory regions are similar during the observation of these two types of touch (Keysers et al., 2004; Ebisch et al., 2008; but see also Blakemore et al., 2005). It remains unknown, however, whether or not the time-course of the activation of somatosensory cortex differs when observing humans vs objects being touched.

In this study, we examine the time-course of somatosensory processing components during the observation of humans vs objects being touched. Previous event-related electromagnetic imaging research on the observation of humans being touched suggests that touch vs non-touch effects should occur at an early sensory processing stage (i.e. within 100 ms) and/or at a much later cognitive stage (i.e. 300–600 ms), whereas previous fMRI studies suggest no difference in the level of somatosensory activity between human and object touch observation. We therefore predict that human and object touch vs non-touch effects would differ in their timing, but not in the level of activity that they evoke.

METHODS

Participants

Participants were 16 undergraduate students (4 males and 12 females) from the University of Birmingham, with a mean age of 21 years (range: 18–26 years). All participants included in the study reported being right-handed. Data from three additional participants were excluded from analysis because they produced fewer than 30 trials of viable EEG/event-related potential (ERP) data in one or more of the four experimental conditions. All participants reported no history of neurological or psychiatric disorder and normal or corrected-to-normal vision. Informed consent was obtained from all participants prior to participation, in accordance with an ethical protocol approved by the Ethical Review Committee of the University of Birmingham.

Materials

Videos were recorded in .avi format using a digital camera positioned 60 cm from the actor or object, with a resolution of 720 × 480 colour pixels and a frame rate of 29.97 frames/s. The following parameters were used for all of the video recordings: frequency rate: 25 Hz, 75 frames and pixel aspect ratio: 1.067. All video clips were created using Pinnacle Studio 12 and edited down to a length of 25 frames, corresponding to a total duration of ∼830 ms. All human stimulus video clips presented the right or left palm and forearm of a male or female actor, from an egocentric point of view. All non-human stimulus video clips presented a cylindrical object from either a right or left side orientation. The stimulus set for each condition comprised 12 different video clips, 6 for each actor or object, for a total of 48 stimuli (see example stimuli in Figure 1). Each video in the touch condition showed either the left or right palm and forearm of an actor, or a cylindrical object, together with one of three touching objects (peacock feather, brush or plastic arm massager) that approached the arm or cylinder and then touched it. In the non-touch condition, the same three objects approached the arm or cylinder and moved in front of it without touching it. Each video was repeated six times, so that 72 trials were presented in total for each condition. On average, the onset of the touch, or of the movement in front of the arm or cylinder, occurred 63 ms after the visual onset of the video in each condition. Finally, four additional video clips, one corresponding to each of the four experimental conditions, were modified using a Pinnacle colour-editing toolbox so that they showed arms and objects in unusual colours. These videos served as ‘target’ trials (4% of all video clips) for participant behavioural responses and were not included in further data analysis.
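The ∼830 ms clip duration and the 72 trials per condition follow from simple arithmetic. The short Python sketch below reproduces them, assuming that playback used the 29.97 frames/s capture rate (the rate consistent with ∼830 ms for 25 frames); the constant and function names are illustrative and not part of the original materials.

```python
# Sketch: stimulus timing arithmetic, ASSUMING playback at the 29.97 frames/s
# capture rate. Names are illustrative, not from the original materials.
CAPTURE_FPS = 29.97          # camera frame rate (frames per second)
CLIP_FRAMES = 25             # frames per edited clip
TOUCH_ONSET_MS = 63          # mean onset of touch/movement after video onset

CLIPS_PER_CONDITION = 12     # 6 per actor or object
REPETITIONS = 6              # each clip shown six times


def frames_to_ms(n_frames: int, fps: float) -> float:
    """Duration in milliseconds of n_frames at the given frame rate."""
    return n_frames * 1000.0 / fps


def ms_to_frames(ms: float, fps: float) -> float:
    """Number of frames spanned by a duration given in milliseconds."""
    return ms * fps / 1000.0


clip_ms = frames_to_ms(CLIP_FRAMES, CAPTURE_FPS)           # ~834 ms ("~830 ms")
touch_frames = ms_to_frames(TOUCH_ONSET_MS, CAPTURE_FPS)   # ~1.9 frames into the clip
trials_per_condition = CLIPS_PER_CONDITION * REPETITIONS   # 72

print(f"clip duration: {clip_ms:.1f} ms")
print(f"touch onset: frame {touch_frames:.1f}")
print(f"trials per condition: {trials_per_condition}")
```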

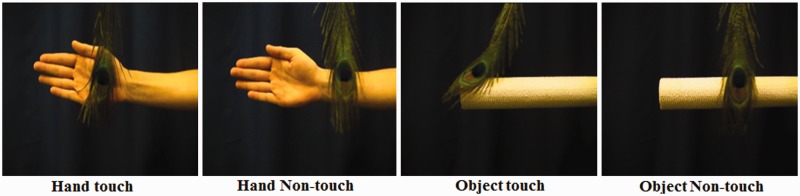

Fig. 1.

Stimuli. Example frames extracted from the video clips in the four experimental conditions: (1) hand touch (an object moving in front of a male arm and palm and touching it); (2) hand non-touch (an object moving in front of an arm and palm without touching it); (3) object touch (an object moving in front of a white roll and touching it) and (4) object non-touch (an object moving in front of a white roll without touching it).

Experimental procedure

Participants were seated comfortably in an isolated sound-attenuated EEG/ERP testing room in front of the computer stimulus monitor. Participants were asked to watch all of the video clips and to press a button on a response box when they saw a movie in which the human or object stimulus was an unusual colour (e.g. green hand or green cylinder). To ensure that participants understood which were the target stimuli, they were shown pictures of the video clips with normally coloured human and non-human stimuli alongside pictures of off-colour target stimuli that required a response, prior to initiating the experiment.

The experiment consisted of two separate blocks of trials: observation of human touch and non-touch (HUMAN) and observation of object touch and non-touch (OBJECT). In the HUMAN block, participants were presented with videos showing an object either touching an arm (touch) or moving in front of it (non-touch). Similarly, in the OBJECT block, participants were presented with videos showing a cylindrical object with another object either touching it or moving in front of it. To prime participants for the experiment, the participant’s arm and palm were touched gently with the same objects that were presented in the video clips (peacock feather, brush and plastic arm massager). This tactile stimulation lasted ∼6 min in total, 3 min per arm. The same soft force and medium speed of stimulation were maintained for both arms and across participants; to keep the velocity of the tactile movements constant, the experimenter counted the rhythm silently. This tactile priming procedure was repeated before the second block of ERP trials.

Each ERP observation trial began with a 1000 ms baseline period during which a blank black screen was presented. This was followed by a central fixation cross, which varied in duration from 800 to 1000 ms. Finally, the touch or non-touch video stimulus was presented for 830 ms (25 frames). As described above, the stimuli were presented in two separate blocks: HUMAN (human touch and non-touch) and OBJECT (object touch and non-touch). The order of the blocks was randomized across participants, and the order of trials within each block was also randomized.
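The trial structure can be summarised as a small trial-list generator. This is a hypothetical Python sketch, not the E-Prime script used in the study: the names `Trial`, `build_block` and `run_order` are illustrative, fixation durations are drawn in 1 ms steps (real presentation would be locked to the display refresh), and the colour-changed target trials are omitted.

```python
import random
from dataclasses import dataclass

# HYPOTHETICAL sketch of the trial structure; not the study's E-Prime script.
BLANK_MS = 1000                  # blank black screen (baseline period)
FIXATION_RANGE_MS = (800, 1000)  # jittered fixation-cross duration
VIDEO_MS = 830                   # 25-frame video clip
CLIPS_PER_CONDITION = 12
REPETITIONS = 6

BLOCKS = {
    "HUMAN": ("human touch", "human non-touch"),
    "OBJECT": ("object touch", "object non-touch"),
}


@dataclass
class Trial:
    block: str
    condition: str
    clip: int          # clip index within the condition (0-11)
    fixation_ms: int   # drawn uniformly (inclusive) from FIXATION_RANGE_MS


def build_block(block: str, rng: random.Random) -> list[Trial]:
    """All trials of one block (2 conditions x 12 clips x 6 repetitions), shuffled."""
    trials = [
        Trial(block, cond, clip, rng.randint(*FIXATION_RANGE_MS))
        for cond in BLOCKS[block]
        for clip in range(CLIPS_PER_CONDITION)
        for _ in range(REPETITIONS)
    ]
    rng.shuffle(trials)  # trial order randomized within the block
    return trials


def run_order(seed: int) -> list[Trial]:
    """Randomize block order for one participant, then concatenate the blocks."""
    rng = random.Random(seed)
    order = list(BLOCKS)
    rng.shuffle(order)   # block order randomized across participants
    return [t for b in order for t in build_block(b, rng)]


session = run_order(seed=1)
trial_ms = [BLANK_MS + t.fixation_ms + VIDEO_MS for t in session]
print(len(session), min(trial_ms), max(trial_ms))
```

Because each participant's order is derived from a seed, the same sequence can be regenerated for checking, while different seeds yield different block and trial orders.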

EEG recording and data analysis

EEG data were acquired using a 128-channel Hydrocel Geodesic Sensor Net and recorded with NetStation 4.3.1 software (Electrical Geodesics, Inc., Eugene, Oregon). EEG was sampled at 500 Hz, and electrode impedances were kept below 80 kΩ. Raw EEG data were recorded with the vertex (Cz) as the online reference and re-referenced offline to an average reference. Stimuli were presented using E-Prime 2.0 software (Psychology Software Tools) on a 17-inch computer monitor at a viewing distance of 80 cm, in a sound-attenuated, dimly lit room.

EEG recordings were processed offline using NetStation 4.3.1 software (Electrical Geodesics, Inc.). EEG data were bandpass filtered offline at 0.3–40 Hz and then segmented into epochs extending from 100 ms before to 800 ms after stimulus onset. The data were then processed using an artefact detection tool that marked a channel as bad if the recording was poor for >20% of the time [amplitude threshold (max–min) >100 µV] and that flagged eye-blinks [amplitude threshold (max–min) >100 µV] and eye-movements [amplitude threshold (max–min) >100 µV]. All trials containing an eye movement, an eye-blink or more than 10 bad channels were excluded from further analysis. Following this automatic artefact detection, each trial was inspected by hand by a trained observer, and any remaining trials containing eye-blink or eye-movement artefacts were removed from further analysis. In trials with fewer than 10 bad electrodes, bad channels were replaced using a spherical spline interpolation algorithm (Srinivasan et al., 1996). The data were then averaged for each participant, re-referenced to an average reference and baseline corrected to the 100 ms pre-stimulus interval.
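The epoch-level rejection, re-referencing and baseline steps can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the NetStation implementation: epochs are assumed to be sampled at 500 Hz from −100 to +800 ms with amplitudes in µV, a channel is flagged bad from a single max−min excursion within the epoch (rather than NetStation's proportion-of-time criterion), eye-channel checks, filtering and spherical spline interpolation are left out, and all function names are hypothetical.

```python
from statistics import mean

# SIMPLIFIED sketch (not NetStation): epochs sampled at 500 Hz from -100 to
# +800 ms, amplitudes in microvolts. Function names are hypothetical.
SAMPLING_HZ = 500
EPOCH_START_MS = -100
THRESHOLD_UV = 100.0     # max-min threshold from the text
MAX_BAD_CHANNELS = 10    # trials with more than this many bad channels are rejected

Epoch = dict[str, list[float]]  # channel name -> samples


def is_bad(samples: list[float], threshold: float = THRESHOLD_UV) -> bool:
    """Flag a channel whose max-min excursion in this epoch exceeds the threshold."""
    return max(samples) - min(samples) > threshold


def keep_trial(epoch: Epoch) -> bool:
    """Keep a trial only if it has at most MAX_BAD_CHANNELS bad channels."""
    n_bad = sum(is_bad(s) for s in epoch.values())
    return n_bad <= MAX_BAD_CHANNELS


def average_reference(epoch: Epoch) -> Epoch:
    """Subtract the across-channel mean from every channel, sample by sample."""
    n = len(next(iter(epoch.values())))
    ref = [mean(ch[i] for ch in epoch.values()) for i in range(n)]
    return {name: [x - r for x, r in zip(s, ref)] for name, s in epoch.items()}


def baseline_correct(samples: list[float]) -> list[float]:
    """Subtract the mean of the 100 ms pre-stimulus interval (first 50 samples)."""
    n_base = -EPOCH_START_MS * SAMPLING_HZ // 1000  # 100 ms * 500 Hz = 50 samples
    base = mean(samples[:n_base])
    return [x - base for x in samples]
```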

Grand average ERPs were generated from the average ERPs of the 16 participants, who contributed on average 52 viable trials per condition, with a minimum of 30 viable trials per condition for each participant. An average of 52 (human touch), 53 (human non-touch), 55 (object touch) and 51 (object non-touch) trials per participant, out of 72 trials presented in each condition, were used in the analysis. Electrodes and time windows for data analysis were chosen on the basis of visual inspection of both individual and grand-averaged ERP data across the scalp, guided initially by the hypotheses of the experiment and by pilot data from 10 participants, whose data are not included in the current analyses because of a slight difference in the experimental design. Clusters of left/right hemisphere electrodes (five left hemisphere and five right hemisphere) over the parietal–central region were selected for the statistical analysis of effects related to somatosensory processing (Figure 2). Additional clusters of left/right hemisphere electrodes (five left and five right) were selected for the statistical analysis of effects related to visual perceptual processing (Figure 3).

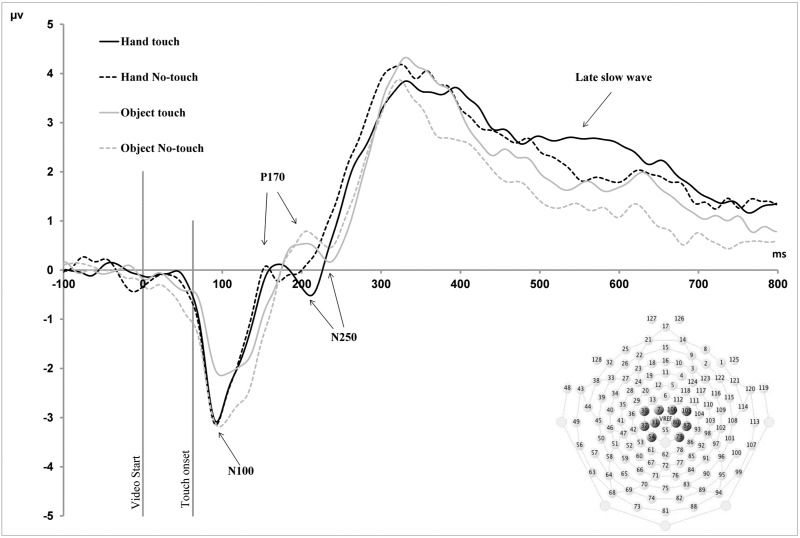

Fig. 2.

Parietal–central (somatosensory) waveforms. Grand average ERP waveforms for parietal–central (somatosensory) electrodes in the four observation conditions. All components are labelled according to their timing in relation to the initial onset of the video stimulus. N100, P170 and N250 component latencies exhibited a main effect of Stimulus Type, whereby latencies for human stimuli were shorter than for objects. The mean amplitudes of the late slow wave (LSW; 500–600 ms) component exhibited a main effect of Stimulus Type, whereby amplitudes for human stimuli were larger than for objects. Finally, the peak amplitudes of the N100 and N250 components and the mean amplitudes of the LSW component exhibited a main effect of Touch, with larger amplitudes for touch compared with non-touch stimuli.

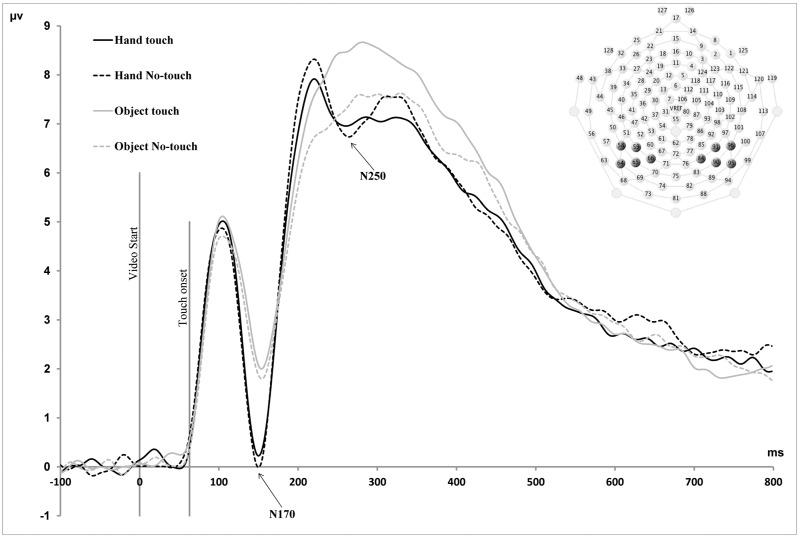

Fig. 3.

Temporal–parietal waveforms. Grand average ERP waveforms for temporal–parietal electrodes in the four observation conditions. All components are labelled according to their timing in relation to the initial onset of the video stimulus. The peak amplitudes of the N170 and N250 components exhibited a main effect of Stimulus Type, whereby amplitudes were larger for human stimuli compared with object stimuli. Latencies of the N250 component exhibited a main effect of Stimulus Type, indicating shorter latencies for human vs object stimuli.

Mean amplitude, peak amplitude and latency-to-peak amplitude values during the time window of each ERP component were averaged across relevant electrode montages for each participant for each observation condition (see ‘Results’ section). Repeated measures analyses of variance (ANOVAs) with within-subject factors Stimulus Type (Human, Object), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) were performed for both the amplitudes and latencies of the somatosensory and temporal–parietal visual processing components. Additionally, we investigated whether the occipital P100, which is involved in lower level visual sensory-perceptual processing, was influenced by the conditions. For this purpose, an ANOVA with within-subject factors Stimulus Type (Human, Object), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) was performed at O1 and O2 electrodes.
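Because every factor in this design has two levels, each main effect and interaction has 1 numerator degree of freedom, and its repeated-measures F(1, n − 1) equals the square of a one-sample t-test on a per-subject ±1 contrast score. The Python sketch below uses that identity; it is an illustrative check rather than the software used for the reported analyses, the data layout (one dictionary of eight cell means per participant) is an assumption, and p-values are omitted because they would require an F or t distribution function.

```python
import math
from itertools import product
from statistics import mean, stdev

# Sketch: 2 x 2 x 2 fully within-subject design. Each effect has 1 df, and
# F(1, n-1) equals the squared one-sample t of a per-subject +/-1 contrast.
# ASSUMED data layout: one dict per subject mapping cell tuple -> cell mean.
FACTORS = {
    "stimulus": ("human", "object"),
    "touch": ("touch", "non-touch"),
    "hemisphere": ("left", "right"),
}
CELLS = list(product(*FACTORS.values()))  # 8 condition cells


def contrast_score(subject: dict, effect: tuple[str, ...]) -> float:
    """Per-subject +/-1 contrast for a main effect (one factor) or interaction."""
    names = list(FACTORS)
    total = 0.0
    for cell in CELLS:
        sign = 1
        for factor in effect:
            level = cell[names.index(factor)]
            sign *= 1 if level == FACTORS[factor][0] else -1
        total += sign * subject[cell]
    return total


def rm_anova_f(data: list[dict], effect: tuple[str, ...]) -> float:
    """F(1, n-1) for one effect, computed as t**2 of the contrast scores."""
    scores = [contrast_score(s, effect) for s in data]
    m, sd = mean(scores), stdev(scores)
    if sd == 0:  # no between-subject variability in the contrast
        return math.inf if m != 0 else math.nan
    t = m / (sd / math.sqrt(len(scores)))
    return t * t
```

An effect such as the Touch × Hemisphere interaction is requested as `rm_anova_f(data, ("touch", "hemisphere"))`; the sign product across the named factors builds the interaction contrast.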

RESULTS

ERP components

Three ERP components were identified in parietal–central electrodes over somatosensory cortex. Based on their timing in relation to the initial onset of the visual stimulus, these somatosensory components were: an early negative-going component peaking at ∼100 ms (N100), a positive-going component peaking at ∼170 ms (P170) and a late slow wave (LSW) component exhibiting a mean amplitude difference between 500 and 600 ms (Figure 2). Additional component peaks were observed at 250 (N250) and 300 ms (P300) in these central electrodes, although condition effects were not clearly observed in these components. In addition to these somatosensory components, condition effects were also observed in visual components recorded from electrodes over temporal–parietal cortex (Figure 3): two negative-going peaks, the N170 and N250, that have been shown in previous studies to be involved in face processing (Bentin et al., 1996; Tanaka et al., 2006; Flevaris et al., 2008).

Electrodes used to measure each component were determined through examination of both grand average and individual subject data of pilot participants, and then confirmed as appropriate for the final sample of 16 participants reported here. Ten parietal–central electrodes (five left hemisphere and five right hemisphere) and 10 temporal–parietal electrodes (five left hemisphere and five right hemisphere) were identified for data analysis. Peak amplitudes and latencies to peak amplitude were analysed for all components except the LSW component, for which mean amplitudes were analysed. Time windows were selected so that each window encompassed the peak of the grand average for each condition and also captured the peak of the component for each condition in each individual participant. Time windows for the parietal–central components were as follows: N100: 70–120 ms, P170: 120–220 ms, N250: 200–270 ms, P300: 240–340 ms and LSW: 500–600 ms. For the temporal–parietal components, the time windows were: N170: 120–200 ms and N250: 200–270 ms.
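Windowed peak and mean-amplitude measurement of this kind can be sketched as follows. The Python code is a hypothetical illustration assuming ROI-averaged waveforms sampled at 500 Hz with the epoch beginning 100 ms before video onset; the window bounds are those listed above, and the rule that components named N… take the most negative sample and all others the most positive is an assumption made for the sketch.

```python
from statistics import mean

# HYPOTHETICAL sketch of windowed peak measurement. ASSUMES waveforms sampled
# at 500 Hz with the epoch starting 100 ms before video onset.
SAMPLING_HZ = 500
EPOCH_START_MS = -100
MS_PER_SAMPLE = 1000 / SAMPLING_HZ  # 2 ms per sample

WINDOWS_MS = {
    "N100": (70, 120), "P170": (120, 220), "N250": (200, 270),
    "P300": (240, 340), "LSW": (500, 600), "N170": (120, 200),
}


def roi_average(channels: list[list[float]]) -> list[float]:
    """Average the electrodes of one cluster (e.g. five per hemisphere) sample by sample."""
    return [mean(vals) for vals in zip(*channels)]


def index_of(ms: float) -> int:
    """Sample index corresponding to a time (ms) relative to video onset."""
    return round((ms - EPOCH_START_MS) / MS_PER_SAMPLE)


def peak(wave: list[float], component: str) -> tuple[float, float]:
    """(peak amplitude, latency in ms) within the component's window.
    Names starting with 'N' take the minimum; all others take the maximum."""
    lo, hi = WINDOWS_MS[component]
    idx = range(index_of(lo), index_of(hi) + 1)
    pick = min if component.startswith("N") else max
    i = pick(idx, key=lambda k: wave[k])
    return wave[i], EPOCH_START_MS + i * MS_PER_SAMPLE


def mean_amplitude(wave: list[float], component: str = "LSW") -> float:
    """Mean amplitude across the component's window (used for the LSW)."""
    lo, hi = WINDOWS_MS[component]
    return mean(wave[index_of(lo): index_of(hi) + 1])
```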

Behavioural responses

The accuracy of the detection of target videos was >90% for all participants.

ERP effects

Parietal–central (somatosensory) components

A repeated measures ANOVA with Stimulus Type (Human, Non-Human), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) as within-subjects factors revealed a main effect of Touch for the peak amplitudes of the N100 (F(1;15) = 6.67, P < 0.05) and N250 components (F(1;15) = 5.1, P ≤ 0.05). A significant interaction between Touch and Stimulus Type was also found for the amplitude of the N100 component (F(1;15) = 9.2, P < 0.01). Follow-up paired-sample t-tests revealed a significant difference between non-human touch and non-touch observation (MD = 0.6, SE = 0.2, P < 0.05), but not between human touch and non-touch observation (P > 0.05). For the N100, the amplitude in the object non-touch condition was greater than in the object touch condition (MD = 0.4, SE = 0.2, P < 0.05). For both the N250 and LSW components, amplitudes were larger for touch than for non-touch stimuli (Figure 2). A main effect of Stimulus Type was observed for the mean amplitude of the LSW component (F(1;15) = 5.18, P < 0.05), indicating larger amplitudes for human stimuli than for objects (Figure 2). Finally, a significant interaction between Touch and Hemisphere was found for the mean amplitude of the LSW component (500–600 ms; F(1;15) = 6.8, P < 0.05). Post hoc paired-sample t-tests showed a difference between touch and non-touch conditions only in the right hemisphere (MD = 0.58, SE = 0.2, P = 0.01). No other significant amplitude effects were observed for the P170 and N250 components, and no significant effects were observed for the amplitude of the P300 component.

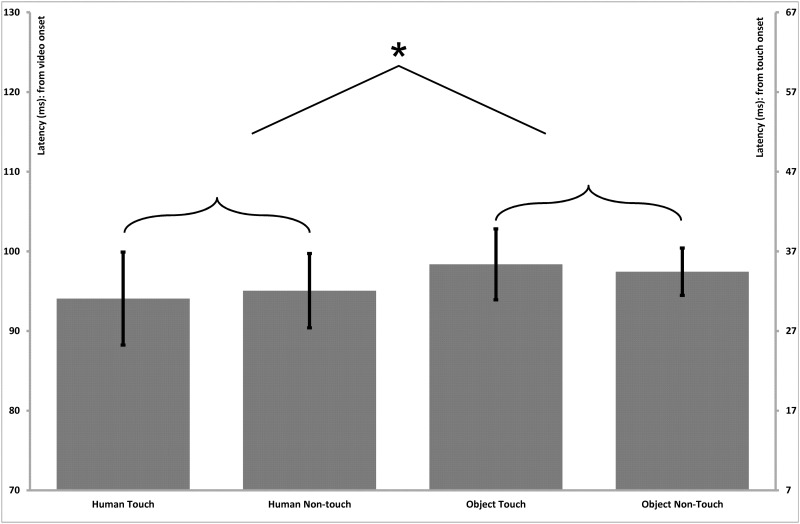

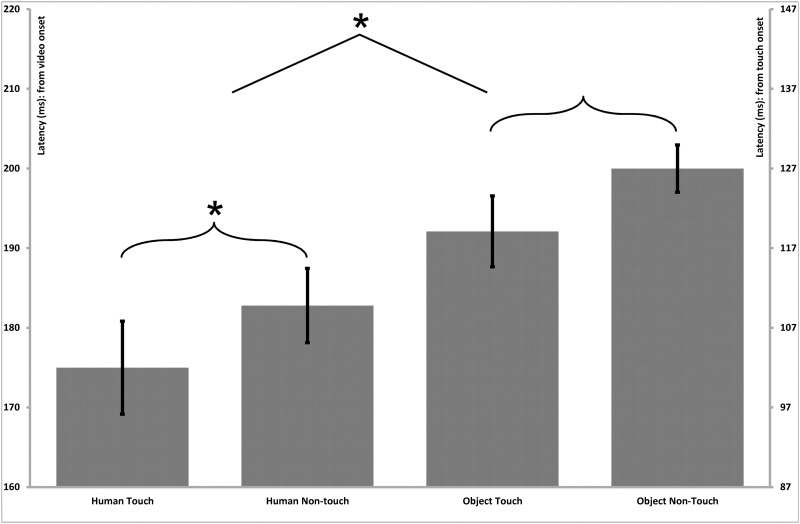

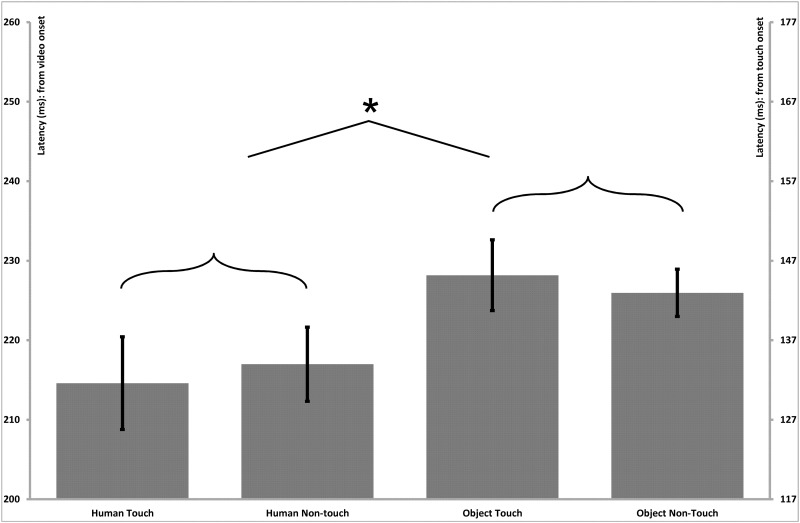

A repeated measures ANOVA with Stimulus Type (Human, Non-Human), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) as within-subjects factors revealed a main effect of Stimulus Type for the latencies of the parietal–central N100 component (F(1;15) = 7.7, P = 0.01), indicating shorter peak latencies for human stimuli (Human = 95 ms, SE = 2.2) than for object stimuli (Object = 98 ms, SE = 2.2; Figure 4). A main effect of Stimulus Type was also found for the P170 (F(1;15) = 15.4, P = 0.01; Figure 5) and N250 (F(1;15) = 27.4, P < 0.001; Figure 6) components, which both also reflected shorter latency responses for human vs object stimuli (P170: Human = 183 ms, SE = 4.5; Object = 191 ms, SE = 3.4; N250: Human = 215 ms, SE = 3.0; Object = 227 ms, SE = 3.7). Additionally, a main effect of Touch was revealed for the P170 component (F(1;15) = 10.2, P < 0.01), with shorter latencies for touch compared with non-touch stimuli (MD = 7.8, SE = 2.5; Figure 5). No other significant main effects or interactions were observed for the latencies of any of the parietal–central components.

Fig. 4.

Parietal–central (somatosensory) N100 latency effects. Bar graphs present the mean (standard error) ERP latency differences for the N100 component in the parietal–central region. The left vertical axis presents timing in relation to the initial onset of the video stimulus, and the right vertical axis presents timing in relation to the onset of the touch within the video stimulus. The latencies exhibited a main effect of Stimulus Type, indicating shorter latencies for human vs object stimuli.

Fig. 5.

Parietal–central (somatosensory) P170 latency effects. Bar graphs present the mean (standard error) ERP latency differences for the P170 component in the parietal–central region. The left vertical axis presents timing in relation to the initial onset of the video stimulus, and the right vertical axis presents timing in relation to the onset of the touch within the video stimulus. A main effect of Stimulus Type indicated shorter latencies for human vs object stimuli, and a main effect of Touch indicated shorter latencies for touch compared with non-touch stimuli.

Fig. 6.

Parietal–central (somatosensory) N250 latency effects. Bar graphs present the mean (standard error) ERP latency differences for the N250 component in the parietal–central somatosensory region. The left vertical axis presents timing in relation to the initial onset of the video stimulus, and the right vertical axis presents timing in relation to the onset of the touch within the video stimulus. The latencies exhibited a main effect of Stimulus Type, indicating shorter latencies for human vs object stimuli.

Temporal-parietal (visual perceptual) components

A repeated measures ANOVA with Stimulus Type (Human, Non-Human), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) as within-subjects factors revealed a main effect of Stimulus Type for the peak amplitudes of both the N170 (F(1;15) = 14.9, P < 0.01) and N250 components (F(1;15) = 4.7, P < 0.05), whereby greater amplitudes were observed for human vs object stimuli. There was also a significant interaction between Stimulus Type and Touch for the amplitude of the N250 component (F(1;15) = 13.7, P < 0.05). Post hoc paired-sample t-tests indicated that the peak amplitude was greater for object touch than for object non-touch (MD = 1.2, SE = 0.4, P < 0.01). There were no significant effects of Hemisphere on the amplitudes of either the N170 or the N250 component. For latencies, there was a main effect of Stimulus Type for both the N170 (F(1;15) = 4.8, P = 0.05) and N250 components (F(1;15) = 17.7, P = 0.01), reflecting shorter latencies for human vs object stimuli (N170: Human = 148 ms, SE = 1.8; Non-Human = 153 ms, SE = 2.6; N250: Human = 222 ms, SE = 2.8; Non-Human = 230 ms, SE = 3.5). There were no other significant main effects or interactions for the latencies of the P100, N170 or N250 components.

Central occipital (visual sensory-perceptual) component

A repeated measures ANOVA with Stimulus Type (Human, Non-Human), Touch (Touch, Non-Touch) and Hemisphere (Left, Right) as within-subjects factors revealed no significant main effects or interactions for either the latencies or the amplitudes of the occipital P100 component (F(1;15) < 1, Ps > 0.1). Mean amplitude (standard error) and mean latency (standard error) values for all parietal–central, temporal–parietal and occipital components analysed are presented in Table 1.

Table 1.

Mean ERP component amplitudes and latencies

| Component | Measure | PC human touch | PC human non-touch | PC object touch | PC object non-touch | TP human touch | TP human non-touch | TP object touch | TP object non-touch | Occ human touch | Occ human non-touch | Occ object touch | Occ object non-touch |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| N100 (Occ: P100) | Amplitude (µV) | −6.3 (0.59) | 5.75 (0.73) | −5.91 (0.48) | −6.85 (0.66) | 5.79 (0.47) | 5.77 (0.37) | 5.87 (0.39) | 5.85 (0.42) | 8.3 (0.69) | 7.9 (0.6) | 8.5 (0.64) | 8.6 (0.84) |
| | Latency (ms) | 94 (2.20) | 95 (2.50) | 98 (2.39) | 97 (2.25) | 104 (2.29) | 102 (2.72) | 106 (2.04) | 106 (2.46) | 105 (2.45) | 103 (2.42) | 105 (2.29) | 105 (2.36) |
| P170 (TP: N170) | Amplitude (µV) | −2.86 (0.39) | 2.74 (0.52) | −2.73 (0.44) | −3.04 (0.45) | 0.23 (0.41) | −0.09 (0.43) | 1.74 (0.49) | 1.43 (0.42) | – | – | – | – |
| | Latency (ms) | 175 (5.83) | 183 (4.66) | 192 (4.45) | 200 (2.97) | 150 (2.04) | 149 (2.29) | 153 (2.69) | 153 (2.67) | – | – | – | – |
| N250 | Amplitude (µV) | −1.21 (1.06) | −0.19 (1.30) | −0.53 (1.44) | 0.02 (1.42) | 6.93 (0.62) | 7.32 (0.74) | 6.84 (0.68) | 6.08 (0.7) | – | – | – | – |
| | Latency (ms) | 215 (2.2) | 217 (2.5) | 229 (2.39) | 227 (2.25) | 209 (2.77) | 207 (3.4) | 218 (2.96) | 209 (3.62) | – | – | – | – |
| P300 | Amplitude (µV) | 3.47 (0.6) | 3.99 (0.72) | 3.41 (0.79) | 3.39 (0.66) | – | – | – | – | – | – | – | – |
| | Latency (ms) | 316 (5.48) | 312 (5.08) | 318 (4.78) | 312 (5.09) | – | – | – | – | – | – | – | – |
| LSW (500–600 ms), left hemisphere | Mean amplitude (µV) | 1.28 (0.32) | 1.7 (0.43) | 2.45 (0.61) | 2 (0.51) | – | – | – | – | – | – | – | – |
| LSW (500–600 ms), right hemisphere | Mean amplitude (µV) | 1.59 (0.39) | 2 (0.50) | 2.3 (0.58) | 2.19 (0.55) | – | – | – | – | – | – | – | – |

Notes: Values are means (standard errors) for each component in the parietal–central (PC), temporal–parietal (TP) and occipital (Occ) regions. All components are labelled according to their timing in relation to the initial onset of the video stimulus. For each component, the first row gives amplitude (µV) and the second row gives latency (ms); LSW mean amplitudes are given separately for the left and right hemispheres. In the P170 row, the TP columns report the temporal–parietal N170; in the N100 row, the Occ columns report the occipital P100.

DISCUSSION

In this study, we investigated the time-course of activation of somatosensory processing mechanisms during the observation of humans vs objects being touched. The results demonstrate that touch processing elicited different levels of somatosensory activity at early sensory, perceptual and later cognitive stages of processing. The results further demonstrate that somatosensory (parietal–central) and visual perceptual (temporal–parietal) ERP component responses have shorter latencies for stimuli that involve humans vs objects at several stages of processing. Importantly, no differences were observed in the central occipital P100 component, suggesting that these differences observed in somatosensory and visual perceptual processing components were not driven by lower level visual sensory processing differences.

The current findings are largely consistent with previous fMRI results that suggest that there is significant overlap in levels of activation of the somatosensory cortex during the observation of human and non-human touch (Keysers et al., 2004; Ebisch et al., 2008). The current results extend this research by providing evidence that the time-course of the activation of somatosensory processing mechanisms is also similar during human and non-human touch observation. The touch effects in our experiment were observed in larger amplitude responses recorded from electrodes over somatosensory cortex at 100 ms (N100), at 250 ms (N250) and then again between 500 and 600 ms (LSW), for the observation of both human and object touch. These findings suggest that touch observation modulates somatosensory cortex at the early sensory-perceptual (N100), perceptual (N250) and late cognitive (LSW) stages. We note here that the onset of touch within our videos occurred 63 ms after video onset, indicating that the modulation of somatosensory processing occurred at ∼40 (N100), 190 (N250) and 440–540 ms (LSW), following observed touch.
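The conversion from video-locked to touch-locked timing is a fixed subtraction. A minimal Python sketch, assuming the 63 ms mean touch onset applies to every clip, reproduces the approximate post-touch values given above:

```python
# Sketch: re-express video-locked timing relative to observed touch,
# ASSUMING the 63 ms mean touch onset applies uniformly to all clips.
TOUCH_ONSET_MS = 63


def post_touch_ms(video_locked_ms: float) -> float:
    """Convert a latency measured from video onset to one measured from touch onset."""
    return video_locked_ms - TOUCH_ONSET_MS


landmarks = {"N100": 100, "N250": 250, "LSW start": 500, "LSW end": 600}
for name, ms in landmarks.items():
    # rounded to the nearest 10 ms, matching the approximate values in the text
    print(name, round(post_touch_ms(ms), -1))
# Output: N100 40 / N250 190 / LSW start 440 / LSW end 540 (one per line)
```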

The finding of modulation of somatosensory cortex 40 ms (N100) after observed touch is consistent with previous evidence for early modulation of somatosensory cortex responses during the observation of human touch (Bufalari et al., 2007). In addition, however, we observed modulation of the late somatosensory cortex response from 440 to 540 ms (LSW) after observed touch. This finding is consistent with a previous MEG study demonstrating that the observation of human touch vs non-touch elicited somatosensory differences between 300 and 600 ms after stimulus onset (Pihko et al., 2010).

Only the N100 recorded from electrodes over somatosensory cortex exhibited an interaction between Stimulus Type (Human, Non-Human) and Touch (Touch, Non-Touch). Specifically, the amplitudes of this negative-going component were less negative in response to the observation of objects not being touched than in all other conditions. One possible explanation is that the presence of touch in the videos showing non-human objects led participants to associate the inanimate object (white roll) with a human arm. Alternatively, or additionally, the observation of objects being touched may have generated representations of the intentions of a human touching an object with another object, even though no human was visible. As a result, the observation of non-human touch might engage mechanisms similar to those engaged by human touch and human non-touch at this stage of processing, whereas the observation of non-human non-touch might not.

Although none of the ERP component differences observed for touch vs non-touch processing in this study were specific to the observation of human touch, the latencies of several ERP components recorded over these same somatosensory regions (central N100, P170 and N250) were significantly shorter for human than for non-human object stimuli. These latency differences between human and non-human stimuli began at a relatively early sensory-perceptual stage of information processing, ∼40 ms after observed touch (N100), and continued through to an early cognitive stage of processing (N250). These results are consistent with other findings that demonstrate the involvement of somatosensory cortex in the processing of social information. For example, Pitcher et al. (2008) found that repetitive transcranial magnetic stimulation (rTMS) targeted at the face representation region in right somatosensory cortex impaired participants’ accuracy in recognizing facial expressions of emotion, relative to rTMS targeted at either the finger region or the vertex. Interestingly, emotion recognition was impaired when the pulses were delivered before 170 ms after stimulus onset, which is known to be a critical time for the visual-perceptual encoding of faces in temporal–parietal regions (Utama et al., 2009; Righi et al., 2012). The authors therefore suggest that social information with somatosensory content is encoded in somatosensory cortex simultaneously with, or prior to, its visual-perceptual encoding in temporal–parietal regions. Our results are consistent with these previous findings, in that differences between human and non-human stimuli emerged first at 100 ms (N100) over somatosensory regions and later at 170 ms (N170) over temporal–parietal cortex.

The current finding of larger amplitude responses to human stimuli in the temporal–parietal N170 and N250 components is consistent with previous studies suggesting differential processing of objects and socially relevant stimuli, including faces and human bodies, in these components (Bindemann et al., 2008; Rossion and Caharel, 2011). Specifically, the N170 has been shown to be larger in amplitude for faces than for a variety of non-face objects (Bentin et al., 1996), and recent evidence suggests that the face-specific N170 response may reflect specialized mechanisms for encoding face information that arise from extensive exposure to faces as social stimuli (Tanaka and Curran, 2001; Macchi Cassia et al., 2006; Anaki et al., 2007; Flevaris et al., 2008). The N250 component has been found to be larger in amplitude for repeated than for non-repeated faces, suggesting a functional link to the processing of facial identity and semantic information (Tanaka et al., 2006; Pierce et al., 2011).

At both 170 ms (central P170 latencies, temporal–parietal N170 amplitudes) and 250 ms (central N250 amplitudes, temporal–parietal N250 amplitudes), temporal–parietal visual processing effects co-occurred with somatosensory component effects in this study. Previous research suggests that processing in somatosensory and extrastriate visual regions is integrated during socially relevant touch processing (Sereno and Huang, 2006; Serino and Haggard, 2010). For example, Haggard et al. (2007) found evidence that somatosensory cortex activity is influenced by visual input, such that viewing the body increased tactile acuity. There is also evidence for the involvement of SI in the visual processing of tactile events (Bolognini et al., 2011). Given this prior evidence, the simultaneous ERP effects at 170 and 250 ms over central and temporal–parietal regions in this study may reflect the integration and/or coordination of social and/or touch observation processing in these two regions.

The extent to which neural mechanisms associated with the observation of touch are specific to the observation of human touch is especially intriguing, given that existing neuroimaging findings have been somewhat mixed. In 2008, Ebisch and colleagues demonstrated that somatosensory cortex activation during the observation of an object accidentally touched by another object did not differ from that in a condition where a human body was touched with an object. The authors therefore suggested that the same mechanisms are involved in the observation of any type of touch. In contrast, Blakemore et al. (2005) reported increased blood-oxygen-level-dependent (BOLD) responses in both SI and SII for human touch compared with inanimate object touch. Although our ERP results cannot resolve the involvement of particular somatosensory brain regions in such detail, they provide further support for the hypothesis that the observation of both human and non-human object touch elicits neural processing in somatosensory regions (similar to Keysers et al., 2004; Ebisch et al., 2008). Specifically, we observed larger amplitude brain activity during the observation of touch vs non-touch for both human and non-human stimuli in both the N250 component and a late slow wave (LSW) component (500–600 ms) recorded from electrodes over somatosensory cortex. Additionally, for the LSW component, an interaction between Touch and Hemisphere indicated that the touch vs non-touch effect was greater in the right than in the left hemisphere. This lateralization cannot be attributed to experimental design features or to the participant sample, because the presentation of right and left hands was counterbalanced within the experiment and only right-handed participants were included in the study. We therefore suggest that this effect might reflect an increase in late cognitive processing or evaluation of observed touch stimuli in the right hemisphere.

It is worth considering why the neural mechanisms recruited during the observation of touch did not differ for human vs non-human touch in the current ERP study or in Ebisch and colleagues' fMRI study (Ebisch et al., 2008; see also Keysers et al., 2004). As suggested above, the intention implied by touch may make the observed touching of both human and non-human stimuli seem more animate than non-touch. Similarly, it has been argued that the recruitment of neural mechanisms for the observation of human touch may carry over to the observation of non-human object touch. This type of effect may be more likely in studies, including this one, in which participants are primed to the potential self/other nature of the stimulus set by being touched with the same objects used in the experiment (see e.g. Ebisch et al., 2008, for discussion). Although this interpretation limits our full understanding of the implications of the current results, as well as those of several previously published fMRI studies on this topic, we note that it would imply a surprising predominance and flexibility of social mechanisms in somatosensory processing. This is an unlikely but intriguing possibility, and one worth pursuing in future research.

In summary, the current study provides the first examination of the time-course of the neural mechanisms involved in the observation of human vs object touch. Consistent with existing fMRI evidence, the results suggest that somatosensory processing mechanisms are recruited during the observation of both human and object touch. The results further indicate that the time-course of these touch observation mechanisms does not differ for human vs object touch; in both cases, touch-related effects occur at several stages of processing. In addition, we found new evidence for the hypothesis that the somatosensory processing system responds more quickly to human than to object stimuli. This was reflected in ERP component latency effects recorded from electrodes over somatosensory cortex, spanning early sensory-perceptual (N100) through early cognitive (N250) stages of processing. These somatosensory processing mechanisms both precede and follow the expected perceptual encoding of human vs non-human objects in extrastriate visual cortical regions.

Acknowledgments

The authors wish to thank Dr Stuart Derbyshire for helpful comments and feedback on the draft manuscript, as well as Antonios Christou and Natasha Elliot for assistance with data collection. The research was funded by the University of Birmingham; no external funding was provided.

REFERENCES

- Anaki D, Zion-Golumbic E, Bentin S. Electrophysiological neural mechanisms for detection, configural analysis and recognition of faces. NeuroImage. 2007;37(4):1407–16. doi: 10.1016/j.neuroimage.2007.05.054.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8(6):551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bindemann M, Burton AM, Leuthold H, Schweinberger SR. Psychophysiology. 2008;45(4):535–44. doi: 10.1111/j.1469-8986.2008.00663.x.

- Blakemore S-J, Bristow D, Bird G, Frith C, Ward J. Somatosensory activations during the observation of touch and a case of vision-touch synaesthesia. Brain. 2005;128:1571–83. doi: 10.1093/brain/awh500.

- Bolognini N, Rossetti A, Maravita A, Miniussi C. Seeing touch in the somatosensory cortex: a TMS study of the visual perception of touch. Human Brain Mapping. 2011;32:2104–14. doi: 10.1002/hbm.21172.

- Bufalari I, Aprile T, Avenanti A, Di Russo F, Aglioti SM. Empathy for pain and touch in the human somatosensory cortex. Cerebral Cortex. 2007;17:2553–61. doi: 10.1093/cercor/bhl161.

- Cattaneo L, Rizzolatti G. The mirror neuron system. Archives of Neurology. 2010;66(5):557–60. doi: 10.1001/archneurol.2009.41.

- Ebisch SJH, Ferri F, Salone A, et al. Differential involvement of somatosensory and interoceptive cortices during the observation of affective touch. Journal of Cognitive Neuroscience. 2010;23:1808–22. doi: 10.1162/jocn.2010.21551.

- Ebisch SJH, Perrucci MG, Ferretti A, Del Gratta C, Romani GL, Gallese V. The sense of touch: embodied simulation in a visuotactile mirroring mechanism for observed animate or inanimate touch. Journal of Cognitive Neuroscience. 2008;20:1611–23. doi: 10.1162/jocn.2008.20111.

- Flevaris AV, Robertson LC, Bentin S. Using spatial frequency scales for processing face features and face configuration: an ERP analysis. Brain Research. 2008;1194:100–9. doi: 10.1016/j.brainres.2007.11.071.

- Haggard P, Christakou A, Serino A. Viewing the body modulates tactile receptive fields. Experimental Brain Research. 2007;180:187–93. doi: 10.1007/s00221-007-0971-7.

- Keysers C, Kaas JH, Gazzola V. Somatosensation in social perception. Nature Reviews Neuroscience. 2010;11(10):417–29. doi: 10.1038/nrn2833.

- Keysers C, Wicker B, Gazzola V, Anton JL, Fogassi L, Gallese V. A touching sight: SII/PV activation during the observation and experience of touch. Neuron. 2004;42:335–46. doi: 10.1016/s0896-6273(04)00156-4.

- Macchi Cassia V, Kuefner D, Westerlund A, Nelson CA. Modulation of face-sensitive event-related potentials by canonical and distorted human faces: the role of vertical symmetry and up-down featural arrangement. Journal of Cognitive Neuroscience. 2006;18:1343–58. doi: 10.1162/jocn.2006.18.8.1343.

- Osborn J, Derbyshire SW. Pain sensation evoked by observing injury in others. Pain. 2010;148(2):268–74. doi: 10.1016/j.pain.2009.11.007.

- Pierce LJ, Scott LS, Boddington S, Droucker D, Curran T, Tanaka JW. The N250 brain potential to personally familiar and newly learned faces and objects. Frontiers in Human Neuroscience. 2011;5:111. doi: 10.3389/fnhum.2011.00111.

- Pihko E, Nangini C, Jousmäki V, Hari R. Observing touch activates human primary somatosensory cortex. European Journal of Neuroscience. 2010;31:1836–43. doi: 10.1111/j.1460-9568.2010.07192.x.

- Pitcher D, Garrido L, Walsh V, Duchaine BC. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. Journal of Neuroscience. 2008;28(36):8929–33. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Righi S, Marzi T, Toscani M, Baldassi S, Ottonello S, Viggiano MP. Fearful expressions enhance recognition memory: electrophysiological evidence. Acta Psychologica. 2012;139(1):7–18. doi: 10.1016/j.actpsy.2011.09.015.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rossion B, Caharel S. ERP evidence for the speed of face categorization in the human brain: disentangling the contribution of low-level visual cues from face perception. Vision Research. 2011;51(12):1297–311. doi: 10.1016/j.visres.2011.04.003.

- Schaefer M, Flor H, Heinze HJ, Rotte M. Dynamic modulation of the primary somatosensory cortex during seeing and feeling a touched hand. NeuroImage. 2006;29:587–92. doi: 10.1016/j.neuroimage.2005.07.016.

- Schaefer M, Xu B, Flor H, Cohen LG. Effects of different viewing perspectives on somatosensory activations during observation of touch. Human Brain Mapping. 2009;30(9):2722–30. doi: 10.1002/hbm.20701.

- Sereno MI, Huang R-S. A human parietal face area contains aligned head-centered visual and tactile maps. Nature Neuroscience. 2006;9:1337–43. doi: 10.1038/nn1777.

- Serino A, Haggard P. Touch and the body. Neuroscience and Biobehavioral Reviews. 2010;34:224–36. doi: 10.1016/j.neubiorev.2009.04.004.

- Srinivasan R, Nunez PL, Tucker DM, Silberstein RB, Cadusch PJ. Brain Topography. 1996;8(4):355–66. doi: 10.1007/BF01186911.

- Tanaka JW, Curran T. A neural basis for expert object recognition. Psychological Science. 2001;12(1):43–7. doi: 10.1111/1467-9280.00308.

- Tanaka JW, Curran T, Porterfield AL, Collins D. Activation of preexisting and acquired face representations: the N250 event-related potential as an index of face familiarity. Journal of Cognitive Neuroscience. 2006;18:1488–97. doi: 10.1162/jocn.2006.18.9.1488.

- Utama NP, Takemoto A, Koike Y, Nakamura K. Phased processing of facial emotion: an ERP study. Neuroscience Research. 2009;64:30–40. doi: 10.1016/j.neures.2009.01.009.