Abstract

OBJECTIVE

Colorectal cancer (CRC) screening is underutilized. Effective and efficient interventions are needed to increase its utilization in primary care.

METHODS

We used UNC Internal Medicine electronic medical records to perform 2 effectiveness trials. Eligible patients had no documentation of recent CRC screening and were aged 50–75 years. The mailed intervention contained a letter documenting the need for screening signed by the attending physician in wave A and the practice director in wave B, a postcard to request a decision aid about CRC screening options, and information about how to obtain screening.

RESULTS

Three hundred forty patients of attending physicians in wave A, 944 patients of resident physicians in wave B, and 214 patients of attending physicians in wave B were included. The intervention increased screening compared with controls for attending physicians’ patients in wave A (13.1% vs. 4.1%; 95% CI for the difference, 3.1% to 14.9%) but not for resident physicians’ patients in wave B (1.3% vs. 1.9%; 95% CI for the difference, −2.2% to 1.0%). A small, statistically non-significant increase in screening with the intervention was seen in attending physicians’ patients in wave B (6.9% vs. 2.4%; 95% CI for the difference, −1.4% to 10.5%). Requests for decision aids were uncommon in both waves (12.5% wave A and 7.8% wave B).

LIMITATIONS

The group assignments were not individually randomized, and covariate information to explain the differences in effect was limited.

CONCLUSIONS

The intervention increased CRC screening in attending physicians’ patients who received a letter from their physicians, but not resident physicians’ patients who received a letter signed by the practice director.

Effective and efficient methods to promote colorectal cancer (CRC) screening are needed to increase utilization in clinical practice and decrease CRC morbidity and mortality. Some interventions that target barriers to CRC screening have been shown to increase screening [1–6], but not all have been effective [7, 8]. One of these interventions, which provided a CRC screening decision aid (ie, a tool to help patients make informed decisions about screening test options) during a clinic visit, has been shown to increase screening in an efficacy trial [9]. However, to be widely adopted, effective interventions must also be easy to implement, efficient, and cost-effective [10, 11].

Implementing interventions, including decision aids, widely in clinical practice poses difficulties. Space and time constraints limit feasibility, and decision aids may not reach all eligible patients. A more efficient method to increase CRC screening may be to intervene outside the visit. In a previous pilot trial, we found an 11-percentage-point increase in screening test completion in attending physicians’ patients who were mailed a package containing a letter from their doctor encouraging screening, a decision aid to facilitate screening test choice, and information about how to get a screening test completed without an office visit [12]. However, the cost of this intervention was relatively high: $94 per additional patient screened. This high cost was primarily due to the expense of mailing the decision aid to everyone. Further, the burden of repeated mailings to all unscreened patients may not be sustainable; therefore, we wanted to test a less costly and less labor-intensive approach. We also wanted to examine whether the intervention would be as effective among resident physicians’ patients.

Thus, we sought to test whether an intervention that includes a mailed letter signed by a patient’s personal physician, an invitation to receive a decision aid, and instructions for obtaining screening could increase screening among attending physicians’ patients and to compare how differences in the letter (signed by personal physician vs. practice director) and in the group receiving the letter (attending physicians’ patients vs. resident physicians’ patients) would affect screening uptake.

Methods

Our previous work demonstrated the efficacy of the decision aid on screening test completion in a randomized controlled trial conducted among patients recruited from primary care practices that served mainly insured patients [9]. For the studies reported here, we designed an effectiveness trial to test our approach among an unselected, diverse practice population. To do so, we designed a study that used a waiver of signed consent and assessed outcomes without requiring patient contact or questionnaire completion, relying on the electronic medical record to assess test completion and covariates. The University of North Carolina’s Biomedical Institutional Review Board approved this study.

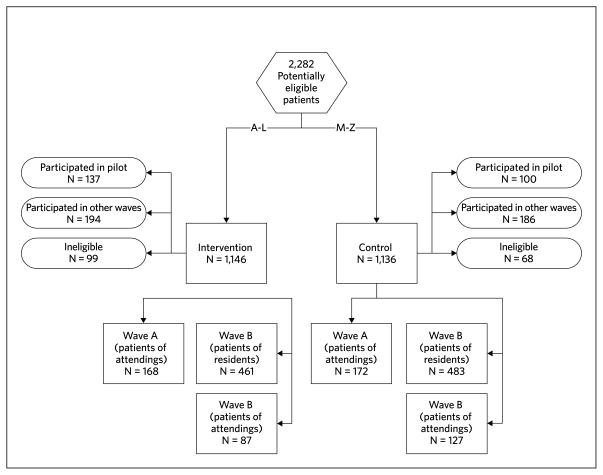

These 2 studies are part of a series of effectiveness trials in one academic internal medicine practice to evaluate mail-out strategies to improve CRC screening. The principal features of the improvement strategies were a letter reminding patients that they were due for CRC screening, a CRC screening decision aid, and improved direct access to CRC screening tests. In our pilot trial, the intervention package, including a letter and the decision aid, was mailed directly to attending physicians’ patients without requiring them to request it. This increased screening by 11 percentage points [13]. We report here the results of 2 of 3 subsequent larger trials (Figure 1). In the 2 trials reported here, called waves A and B, patients initially received a letter, instructions for scheduling tests, and a decision aid request card. Wave A consisted of attending physicians’ patients, and wave B consisted predominantly of resident physicians’ patients. We report the results together because the methods for these trials were identical except for the signature on the study letters in the intervention package. For wave A, study letters were signed by each patient’s physician; for wave B, study letters were signed by the practice director.

FIGURE 1. Wave and Group Assignments

Setting and patient recruitment

We conducted our trial in the UNC Internal Medicine practice. The practice has a diverse population of over 10,000 adults who are cared for by 16 attending physicians and 75 resident physicians. Eligible patients were aged 50 to 75 years, had no record of being up-to-date with screening, and had been seen in the practice within the previous 2 years. “Up-to-date” was defined using the Multi-Society Task Force guidelines for CRC screening and included a fecal occult blood test (FOBT) within the past 12 months, sigmoidoscopy within the past 5 years, barium enema within the past 5 years, or colonoscopy within the past 10 years [14]. We ascertained potentially eligible patients through a review of laboratory and billing databases and divided them alphabetically into intervention and control groups. We then assigned each patient a study identification number.
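The eligibility rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Patient` record layout, the field names, and the use of calendar-year look-backs are hypothetical and are not taken from the study's actual database queries.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict

# Look-back windows (in years) from the "up-to-date" definition in the text.
LOOKBACK_YEARS = {
    "fobt": 1,
    "sigmoidoscopy": 5,
    "barium_enema": 5,
    "colonoscopy": 10,
}


@dataclass
class Patient:
    """Hypothetical record layout; not the study's actual data model."""
    age: int
    last_visit: date
    last_test: Dict[str, date] = field(default_factory=dict)


def years_before(d: date, years: int) -> date:
    """Return the date `years` calendar years before `d`."""
    try:
        return d.replace(year=d.year - years)
    except ValueError:
        # February 29 in a non-leap target year: fall back to February 28.
        return d.replace(year=d.year - years, day=28)


def is_up_to_date(p: Patient, as_of: date) -> bool:
    """True if any recorded test falls inside its look-back window."""
    return any(
        test in p.last_test and p.last_test[test] >= years_before(as_of, yrs)
        for test, yrs in LOOKBACK_YEARS.items()
    )


def is_eligible(p: Patient, as_of: date) -> bool:
    """Aged 50 to 75, seen within the previous 2 years, not up-to-date."""
    return (
        50 <= p.age <= 75
        and p.last_visit >= years_before(as_of, 2)
        and not is_up_to_date(p, as_of)
    )
```

Keeping the look-back windows in a single mapping makes it easy to see that one qualifying test of any type is enough to classify a patient as up-to-date.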

Assignment to intervention or control group

Eligible patients were assigned to intervention or control groups based on their identification numbers, which were allocated based on their last names and stratified by physician type. We chose to assign eligible patients in this manner to avoid individual randomization (which would have required written consent per our IRB) and thus maintain our unselected population. We divided our eligible patients into waves to maintain feasibility given the large number of unscreened patients. All assignments were made at the outset, before any of the intervention mailings were carried out. Before the mailing of each wave, we reassessed eligibility and removed any participant who had evidence of up-to-date screening.

Description of interventions

Intervention patients received a packet containing the study letter documenting the need for screening, instructions for scheduling a screening test without an office visit, and a postcard to request the decision aid either in DVD or VHS format. We also included a short questionnaire to assess current screening status and personal or family history of CRC; however, because we elicited this information only from the intervention group, we did not use it to determine eligibility. If requested, the decision aid was sent with a post-viewing questionnaire and information about how to obtain screening without an office visit, identical to that which was included with the initial mailing. The decision aid was created by the Foundation for Informed Medical Decision Making [15]. Standing orders were implemented in the practice for FOBT, and a nurse facilitator was available by phone to provide FOBT cards for completion without a visit. For flexible sigmoidoscopy and colonoscopy, schedulers in the gastroenterology practice’s endoscopy unit were instructed to schedule patients who requested either test.

The initial mailing occurred on March 1, 2006 and July 24, 2006 for waves A and B, respectively. Reminders containing the same information as the initial intervention mailing were sent at 1 and 2 months after the initial intervention mailing for patients who had not yet responded. Control group patients received the intervention materials after data collection was complete.

Measures

Colon cancer screening test completion

CRC screening test completion was determined by chart reviews of the electronic medical records and was based on evidence of screening test completion from 7 to 130 days after the initial mailing. Research assistants searched our health care system’s electronic medical record for gastroenterology reports, indicating flexible sigmoidoscopy or colonoscopy; lab results for FOBT; radiology reports for barium enema; and clinic notes from the patient’s most recent internal medicine visit for mention of any of the applicable tests not otherwise identified. Age, race, insurance status, and gender were also recorded from the medical record. We used 2 reviewers for each patient. Inter-rater reliability was assessed using the kappa statistic and found to be very high (0.89 for patients in wave A and 0.85 for patients in wave B). Discrepancies were resolved by team review.
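For readers unfamiliar with the kappa statistic, the sketch below computes Cohen's kappa for two reviewers making a yes/no judgment. The counts used in the example call are purely hypothetical and are not the study's chart-review data; the function name and argument names are likewise illustrative.

```python
def cohens_kappa(both_yes: int, yes_no: int, no_yes: int, both_no: int) -> float:
    """Cohen's kappa for two raters and a binary rating.

    both_yes / both_no: counts where the raters agree.
    yes_no / no_yes:    counts where rater 1 and rater 2 disagree.
    """
    n = both_yes + yes_no + no_yes + both_no
    observed = (both_yes + both_no) / n          # observed agreement
    r1_yes = (both_yes + yes_no) / n             # rater 1 "yes" rate
    r2_yes = (both_yes + no_yes) / n             # rater 2 "yes" rate
    # Agreement expected by chance if the raters were independent.
    expected = r1_yes * r2_yes + (1 - r1_yes) * (1 - r2_yes)
    return (observed - expected) / (1 - expected)


# Hypothetical counts: 100 charts, 90 agreements, 10 disagreements.
example_kappa = cohens_kappa(both_yes=20, yes_no=5, no_yes=5, both_no=70)
```

Kappa discounts the agreement that would be expected by chance, which is why it is lower than the raw agreement proportion when one rating category dominates.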

Cost per additional patient screened

We calculated approximate cost per additional patient screened by estimating direct costs of materials and staff time but did not consider other costs, such as patient time (Table 1).

TABLE 1. Cost Per Additional Patient Screened in Wave A

| Item | Unit cost | Quantity | Total |
|---|---|---|---|
| Postage | | | |
| Initial mail out | $0.39 | 194 | $75.66 |
| Reminder mail outs | $0.39 | 292 | $113.88 |
| Mail back | $0.45 | 53 | $23.85 |
| Mail out package | $1.84 | 21 | $38.64 |
| Mail back package | $5.00 | 3 | $15.00 |
| Postage total | | | $267.03 |
| Materials | | | |
| Duplication cost of videos not returned | $2.50 | 18 | $45.00 |
| Paper and envelopes* | $0.10 | 486 | $48.60 |
| Package materials* | $1.00 | 21 | $21.00 |
| Materials total | | | $114.60 |
| Staff | | | |
| Tracking and mailing time** | $17.00 | 4 | $68.00 |
| Staff total | | | $68.00 |
| Grand total | | | $449.63 |

*Estimated costs incurred.

**Based on hourly wage of research assistant.

Post-decision aid survey

The post-viewing survey assessed self-reported decision aid viewing, intent to be screened, screening test preferences, knowledge, and satisfaction.

Power calculations

Based on our pilot study, we estimated that 160 patients per group would be needed to have 80% power to detect a 10-percentage-point difference in screening rates between the intervention and control groups, assuming a control group screening rate of 5% and a 2-sided α of 0.05. To ensure equity, however, we elected to include the full population of available patients.
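The text does not say which sample size formula was used. As a rough check, the sketch below applies one common normal-approximation formula for comparing two proportions, with an optional continuity correction, and assumes the 10% difference means 5% versus 15%. Under those assumptions the corrected formula comes out close to the 160 per group quoted above; the function name and defaults are illustrative only.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p_control: float, p_intervention: float,
                alpha: float = 0.05, power: float = 0.80,
                continuity: bool = True) -> float:
    """Approximate sample size per group for a 2-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    delta = abs(p_intervention - p_control)
    p_bar = (p_control + p_intervention) / 2

    # Uncorrected normal-approximation sample size.
    n = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control)
                        + p_intervention * (1 - p_intervention))
    ) ** 2 / delta ** 2

    if continuity:
        # Continuity correction (an assumption; the text does not say
        # whether one was applied).
        n = n / 4 * (1 + sqrt(1 + 4 / (n * delta))) ** 2
    return n


# Assumed inputs: 5% control rate versus 15% intervention rate.
corrected = ceil(n_per_group(0.05, 0.15))
uncorrected = ceil(n_per_group(0.05, 0.15, continuity=False))
```

The uncorrected formula gives a smaller figure, so the agreement with 160 depends on the continuity correction; other formulas or software defaults could give slightly different numbers.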

Data analysis

First, we compared patient characteristics between intervention and control arms for each group using χ² tests to determine whether the intervention assignment method adequately balanced the treatment groups. We then compared screening rates between intervention and control arms stratified by group (wave A attending, wave B resident, wave B attending) using χ² tests. We also calculated 95% confidence intervals around the absolute difference in screening rates. We attempted to control for clustering within providers but, due to the small number of persons with evidence of screening, these models did not converge. Our principal analysis included all patients not excluded on the basis of chart reviews. Using the same methods, we performed an alternative analysis to examine the effect of excluding those in the intervention group found to have had recent screening.
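The two core calculations described here, a χ² test on a 2×2 table and a 95% confidence interval for the absolute difference in proportions, can be sketched as follows. The sketch assumes a Wald (normal-approximation) interval and an uncorrected Pearson χ² statistic; the text does not state which variants were used. Applied to the counts in Table 3, the Wald interval agrees with the reported intervals to one decimal place.

```python
from math import erfc, sqrt


def risk_difference_ci(x1: int, n1: int, x0: int, n0: int, z: float = 1.96):
    """Absolute difference in proportions with a Wald confidence interval.

    x1/n1: screened / total in the intervention arm.
    x0/n0: screened / total in the control arm.
    """
    p1, p0 = x1 / n1, x0 / n0
    diff = p1 - p0
    se = sqrt(p1 * (1 - p1) / n1 + p0 * (1 - p0) / n0)
    return diff, diff - z * se, diff + z * se


def pearson_chi2(x1: int, n1: int, x0: int, n0: int):
    """Uncorrected Pearson chi-square (1 df) for a 2x2 table, with P value."""
    a, b = x1, n1 - x1          # intervention: screened, not screened
    c, d = x0, n0 - x0          # control: screened, not screened
    n = n1 + n0
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return chi2, p_value


# Counts from Table 3 (intervention screened/total, control screened/total).
wave_a = risk_difference_ci(22, 168, 7, 172)
wave_b_resident = risk_difference_ci(6, 461, 9, 483)
wave_b_attending = risk_difference_ci(6, 87, 3, 127)
wave_a_chi2, wave_a_p = pearson_chi2(22, 168, 7, 172)
```

With so few screening events in wave B, a normal-approximation interval is only approximate, so an exact or score-based method might be preferred in a reanalysis.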

To investigate the differences we found in the intervention effects, we performed additional χ² tests across the 3 groups. First, we compared the characteristics of the patients across the 3 groups: wave A attendings’ patients, wave B residents’ patients, and wave B attendings’ patients. Then we performed a formal test for differences in the intervention effects across the 3 groups using the Breslow-Day test for homogeneity. We used logistic regression to determine if imbalances in patient characteristics (age, sex, race, insurance status) between the intervention and control groups were influencing the crude intervention effects. We also conducted a logistic regression with all groups combined to determine the impact of patient characteristics on the interaction between the intervention effect and the 3-level group variable (wave A attending, wave B attending, and wave B resident). This was done to determine if the differences in characteristics between attending and resident providers’ patients were driving the inconsistency in the intervention effects. Covariates were considered one at a time due to the limited number of screening events.

Results

Figure 1 shows the study flow, divided by intervention status, wave, and physician type (attending vs. resident). Table 2 shows the demographic characteristics by intervention group. Intervention and control groups were relatively similar in terms of available demographic characteristics except for 2 modest differences: in wave A, the control group was more likely to be uninsured than the intervention group and in wave B, the intervention group patients were slightly older.

TABLE 2. Patient Characteristics for Intervention and Control Groups in Each Wave

| Characteristic | Wave A attending patients: intervention (N = 168) | Wave A attending patients: control (N = 172) | Wave B resident patients: intervention (N = 461) | Wave B resident patients: control (N = 483) | Wave B attending patients: intervention (N = 87) | Wave B attending patients: control (N = 127) |
|---|---|---|---|---|---|---|
| Mean age<sup>a</sup> | 62.5 | 61.6 | 61.1<sup>b</sup> | 60.0 | 64.1<sup>c</sup> | 62.3 |
| Women, n (%) | 101 (60) | 96 (56) | 229 (50) | 261 (54) | 45 (52) | 73 (57) |
| Race, n (%)<sup>a,d</sup> | | | | | | |
| White | 114 (68) | 115 (67) | 229 (50) | 222 (46) | 62 (71) | 84 (66) |
| Black | 43 (26) | 47 (27) | 199 (43) | 216 (45) | 20 (23) | 39 (31) |
| Other | 11 (7) | 10 (6) | 33 (7) | 45 (9) | 5 (6) | 4 (3) |
| Insurance status, n (%)<sup>a,d</sup> | | | | | | |
| No insurance | 10 (6)<sup>e</sup> | 22 (13) | 139 (30) | 146 (30) | 11 (13) | 11 (9) |
| Medicaid | 16 (10) | 21 (12) | 78 (17) | 79 (16) | 10 (11) | 17 (14) |
| Medicare | 57 (34) | 38 (22) | 116 (25) | 115 (24) | 35 (40) | 33 (26) |
| Private insurance | 66 (39) | 78 (45) | 113 (25) | 115 (24) | 24 (28) | 56 (45) |
| Other | 19 (11) | 12 (7) | 15 (3) | 28 (6) | 7 (8) | 8 (6) |

<sup>a</sup> P < .0001 comparing the distribution of these variables in the combined intervention and control groups across the 3 groups.

<sup>b</sup> P = .008 comparing intervention and control groups in wave B for age for patients of resident physicians.

<sup>c</sup> P = .06 comparing intervention and control groups in wave B for age for patients of attending physicians.

<sup>d</sup> Percentages may not add to 100% due to rounding.

<sup>e</sup> P = .021 comparing intervention and control groups in wave A for insurance.

Primary outcome: colorectal cancer screening

For the attending physicians’ patients in wave A, the intervention produced a significant net increase in screening rates of 9.0% (95% CI, 3.1% to 14.9%) (Table 3). For resident physicians’ patients in wave B, we observed no difference between groups: −0.6% (95% CI, −2.2% to 1.0%). However, for attending physicians’ patients in wave B, the intervention produced a smaller, statistically non-significant increase of 4.5% (95% CI, −1.4% to 10.5%) in CRC screening test completion.

TABLE 3. Patients Completing Colon Cancer Screening by Chart Review

| Group | Patients | Providers | Intervention | Control | Difference (95% CI)* |
|---|---|---|---|---|---|
| Wave A (attending) | 340 | 14 | 13.1% (22 of 168) | 4.1% (7 of 172) | 9.0% (3.1% to 14.9%) |
| Wave B (residents) | 944 | 83 | 1.3% (6 of 461) | 1.9% (9 of 483) | −0.6% (−2.2% to 1.0%) |
| Wave B (attending) | 214 | 19 | 6.9% (6 of 87) | 2.4% (3 of 127) | 4.5% (−1.4% to 10.5%) |

*Breslow-Day test for homogeneity of intervention effects: across all 3 groups, P = .0412; between attending groups (wave A and wave B), P = .86; between wave B resident patients and wave B attending patients, P = .089.

Among wave A patients, controlling for insurance status (insured vs. not insured) did not reduce the magnitude of the intervention effect (P = .0055, adjusted Wald). Among wave B patients of attending physicians, after adjustment for age, the intervention effect remained positive but not statistically significant (OR, 3.15 [95% CI, 0.76–13.07]). The difference in the intervention effect across the 3 groups was statistically significant (P = .04, Breslow-Day test for homogeneity).

In logistic regression models individually controlling for insurance status, race, and age, the statistical tests for difference in intervention effects across groups did not change appreciably. This suggests that these demographic factors do not explain the differences in intervention effects between groups.

Cost per additional patient screened

For wave A, where the intervention showed a significant effect on screening, we calculated the cost per additional patient screened over that of the control group to be about $30 ($449.63/15 additional patients, Table 1). Most of the cost was attributable to the postage paid for repeated mailings ($267).
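The arithmetic behind this figure can be retraced from Table 1 and Table 3. The sketch below is a simple recomputation, not the authors' costing method; the variable names are illustrative, and the 15 additional patients are taken as 22 screened in the intervention group minus 7 in the control group, which matches the divisor quoted above.

```python
from decimal import Decimal

# (unit cost in dollars, quantity) for each line item in Table 1.
postage = {
    "initial mail out": (Decimal("0.39"), 194),
    "reminder mail outs": (Decimal("0.39"), 292),
    "mail back": (Decimal("0.45"), 53),
    "mail out package": (Decimal("1.84"), 21),
    "mail back package": (Decimal("5.00"), 3),
}
materials = {
    "video duplication": (Decimal("2.50"), 18),
    "paper and envelopes": (Decimal("0.10"), 486),
    "package materials": (Decimal("1.00"), 21),
}
staff = {
    "tracking and mailing time": (Decimal("17.00"), 4),
}


def subtotal(items: dict) -> Decimal:
    """Sum unit cost times quantity over a group of line items."""
    return sum((cost * qty for cost, qty in items.values()), Decimal("0"))


postage_total = subtotal(postage)
materials_total = subtotal(materials)
staff_total = subtotal(staff)
grand_total = postage_total + materials_total + staff_total

# Additional patients screened in wave A: intervention minus control (Table 3).
additional_screened = 22 - 7
cost_per_additional = grand_total / additional_screened
```

Using `Decimal` rather than floating point keeps the cent-level subtotals exact, so they can be compared directly against the table.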

Decision aid viewing

The proportion requesting and viewing decision aids in response to the intervention mailing was small for both waves: for wave A, 12.5% (N = 21) of the patients responded and requested the decision aid; 3 participants returned the decision aid and questionnaire, and 2 of these reported viewing the decision aid. The 2 that viewed the decision aid were among the 26 that were screened. For wave B patients of resident physicians, the results were similar: 7.8% (N = 36) responded and requested the decision aid; 12 returned the decision aid, and 6 reported that they had viewed the decision aid.

Alternative analysis

In our alternative analysis, we excluded intervention group members who self-reported higher risk status or previous screening. The primary results, CRC screening differences between groups, were no longer significant but did not change direction. In wave A, we observed a net increase of 5.4% (95% CI, 0.3% to 11%), and among resident physicians’ patients in wave B, we still observed no difference (−0.9% [95% CI, −2.4% to 0.6%]). The effect sizes in wave A and wave B attending patients were no longer different; we observed a 5.3% net increase in screening in the attending patients in wave B (95% CI, −1.1% to 11.9%).

Discussion

In these 2 effectiveness trials, we found that an intervention mailing that included a letter encouraging screening, a card to request a decision aid, and instructions on how to obtain a screening test outside an office visit was effective in modestly increasing CRC screening in attending physicians’ patients who received a letter signed by their physician, but not effective for resident physicians’ patients who received a letter signed by the practice director. Attending physicians’ patients who received the reminder signed by the practice director had intermediate results. Requests for the decision aids were low for all groups (12.5% and 7.8% for attending and resident patients, respectively) suggesting that the decision aid itself had little effect on screening rates. The cost per additional patient screened (among patients of attending physicians who received the letter signed by their regular provider) was modest ($30) compared to our pilot study ($94) [12].

We found some evidence to suggest that the signature of the patient’s physician on the study letter may have had an effect on the CRC screening test completion for attending physicians’ patients. Attending physicians’ patients in wave B, who received a letter in the intervention package signed by the practice director, were less responsive to the intervention. These findings are consistent with a previous study demonstrating that invitations signed by the patient’s personal physician are more effective at increasing uptake than general invitations, such as from a national screening program [16].

We were unable to fully explain the difference in the effect of our intervention between the attending and resident physicians’ patients with the limited individual characteristics available in the electronic medical records. It is possible that the differences observed could be explained by other unmeasured characteristics that may have differed between groups, such as income, health status, or the duration of the physician-patient relationship. Additionally, when we explored the effect of excluding those with evidence of screening on the pre-questionnaire, differences were smaller.

Recent randomized controlled trials of relatively low-intensity interventions for CRC screening in a variety of populations have shown mixed results. Dietrich and colleagues demonstrated a 13% increase in screening among low-income women with calls from prevention care managers [2]. Denberg and colleagues sent a mailed brochure to patients in 2 academic practices who were scheduled for a colonoscopy and found a 12% increase in adherence to testing compared to usual care [1]. However, Walsh and colleagues did not demonstrate a difference in screening test completion in patients from academic and community practices who were randomized to receive a mailed intervention including a letter from their physician, information about CRC, and FOBT cards [8]. Sequist and colleagues found that a mailed intervention with a tailored letter, educational brochure, and FOBT kit increased screening by 6 percentage points, with a cost of $94 per additional patient screened [17].

Including the option of requesting the decision aid did not appear to have much of an effect on the promotion of screening, as numbers of requests in both groups were low. The intervention may have mainly served as a patient reminder about screening rather than encouraging a better decision-making process. While decision aids have been shown to improve decision quality when viewed [18], this method of encouraging their use was not effective.

We chose our study design—alphabetical pseudo-randomization to intervention and control, no direct patient contact for covariates, and chart review for outcomes assessment—to remove some of the privacy and human subjects concerns that might require written informed consent or otherwise limit the pool of participants. By not filtering our study population, we sought to improve the generalizability of our results, including patients who would not usually actively enroll in a trial. These choices, however, created some potential limitations. Although we alphabetically allocated participants to intervention or control groups and did not observe large differences in the measured covariates, important unmeasured differences may have been present. The lack of covariates also limited our ability to explore and explain the difference in the intervention effect between patient groups, apart from the signature on the study letter.

Our outcomes assessment was also limited by the study design. We had limited follow-up time (130 days), and some patients may have been screened after this time period. However, this would only affect our results if late screening occurred preferentially in the control group. It is also possible that screening could have occurred outside of our health system, but it is again unlikely that this misclassification would differ between intervention and control groups, and there are few other local sources for screening. For video viewing, we relied on self-report. It is conceivable that patients viewed the video and did not respond to the questionnaire. However, when we assessed this in our pilot study, this number was small [12]. Finally, our results may not be generalizable because the study was conducted in 1 academic practice that has been a site for other decision aid and quality improvement studies.

In conclusion, we found that an intervention mailing that included a letter encouraging screening, a card to request a decision aid, and instructions on how to obtain a screening test outside an office visit was effective at increasing CRC screening among attending physicians’ patients who received a letter signed by their physician, but not among resident physicians’ patients who received a letter signed by the practice director.

Acknowledgments

The Foundation for Informed Medical Decision Making (Grant Number 0087-4) supported the research. Dr. Lewis was funded by National Cancer Institute K07 Mentored Career Development Award (#K07-CA104128). Dr. Pignone was partially funded by the University of North Carolina Center for Health Promotion Economics. Dr. Moore was supported by K30 Clinical Research Curriculum RR022267 and Roadmap K12 RR023248.

Footnotes

Presented in part as an oral presentation at the International Shared Decision Making Meeting on May 29, 2007, in Freiburg, Germany.

Potential conflicts of interest. All authors have no relevant conflicts of interest.

Contributor Information

Carmen L. Lewis, Associate professor of medicine, Department of Medicine and Cecil G. Sheps Center for Health Services Research, University of North Carolina–Chapel Hill, Chapel Hill, North Carolina.

Alison Tytell Brenner, Doctoral candidate, School of Public Health, University of Washington, Seattle, Washington.

Jennifer M. Griffith, Assistant professor, Texas A&M University System Health Science Center, College Station, Texas.

Charity G. Moore, Associate professor, Department of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania.

Michael P. Pignone, Professor of medicine, Department of Medicine and Cecil G. Sheps Center for Health Services Research, University of North Carolina–Chapel Hill, Chapel Hill, North Carolina.

References

- 1. Denberg TD, Coombes JM, Byers TE, et al. Effect of a mailed brochure on appointment-keeping for screening colonoscopy: a randomized trial. Ann Intern Med. 2006;145(12):895–900. doi: 10.7326/0003-4819-145-12-200612190-00006.

- 2. Dietrich AJ, Tobin JN, Cassells A, et al. Telephone care management to improve cancer screening among low-income women: a randomized, controlled trial. Ann Intern Med. 2006;144(8):563–571. doi: 10.7326/0003-4819-144-8-200604180-00006.

- 3. Hulscher ME, Wensing M, Grol RP, van der Weijden T, van Weel C. Interventions to improve the delivery of preventive services in primary care. Am J Public Health. 1999;89(5):737–746. doi: 10.2105/ajph.89.5.737.

- 4. Hulscher ME, Wensing M, van Der Weijden T, Grol R. Interventions to implement prevention in primary care. Cochrane Database Syst Rev. 2001;(1):CD000362. doi: 10.1002/14651858.CD000362.

- 5. Shankaran V, McKoy JM, Dandade N, et al. Costs and cost-effectiveness of a low-intensity patient-directed intervention to promote colorectal cancer screening. J Clin Oncol. 2007;25(33):5248–5253. doi: 10.1200/JCO.2007.13.4098.

- 6. Stone EG, Morton SC, Hulscher ME, et al. Interventions that increase use of adult immunization and cancer screening services: a meta-analysis. Ann Intern Med. 2002;136(9):641–651. doi: 10.7326/0003-4819-136-9-200205070-00006.

- 7. Ruffin MT, Fetters MD, Jimbo M. Preference-based electronic decision aid to promote colorectal cancer screening: results of a randomized controlled trial. Prev Med. 2007;45(4):267–273. doi: 10.1016/j.ypmed.2007.07.003.

- 8. Walsh JM, Salazar R, Terdiman JP, Gildengorin G, Pérez-Stable EJ. Promoting use of colorectal cancer screening tests. Can we change physician behavior? J Gen Intern Med. 2005;20(12):1097–1101. doi: 10.1111/j.1525-1497.2005.0245.x.

- 9. Pignone M, Harris R, Kinsinger L. Videotape-based decision aid for colon cancer screening. A randomized, controlled trial. Ann Intern Med. 2000;133(10):761–769. doi: 10.7326/0003-4819-133-10-200011210-00008.

- 10. Andersen MR, Urban N, Ramsey S, Briss PA. Examining the cost-effectiveness of cancer screening promotion. Cancer. 2004;101(5 Suppl):1229–1238. doi: 10.1002/cncr.20511.

- 11. Wolf MS, Fitzner KA, Powell EF, et al. Costs and cost effectiveness of a health care provider-directed intervention to promote colorectal cancer screening among Veterans. J Clin Oncol. 2005;23(34):8877–8883. doi: 10.1200/JCO.2005.02.6278.

- 12. Lewis CL, Brenner AT, Griffith JM, Pignone MP. The uptake and effect of a mailed multi-modal colon cancer screening intervention: a pilot controlled trial. Implement Sci. 2008;3:32. doi: 10.1186/1748-5908-3-32.

- 13. Brenner AT, Griffith JM, Malone R, Pignone M. Effectiveness of a mailed intervention to increase colorectal cancer screening in an academic primary care practice: a controlled trial. Paper presented at: 4th International Shared Decision Making Conference; 2007; Freiburg, Germany.

- 14. Winawer S, Fletcher R, Rex D, et al. Colorectal cancer screening and surveillance: clinical guidelines and rationale-update based on new evidence. Gastroenterology. 2003;124(2):544–560. doi: 10.1053/gast.2003.50044.

- 15. Informed Medical Decisions Foundation. Informed Medical Decisions Foundation Web site. http://www.fimdm.org/. Accessed October 10, 2007.

- 16. Tacken MA, Braspenning JC, Hermens RP, et al. Uptake of cervical cancer screening in The Netherlands is mainly influenced by women’s beliefs about the screening and by the inviting organization. Eur J Public Health. 2007;17(2):178–185. doi: 10.1093/eurpub/ckl082.

- 17. Sequist TD, Franz C, Ayanian JZ. Cost-effectiveness of patient mailings to promote colorectal cancer screening. Med Care. 2010;48(6):553–557. doi: 10.1097/MLR.0b013e3181dbd8eb.

- 18. Stacey D, Bennett CL, Barry MJ, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2001;(10):CD001431. doi: 10.1002/14651858.CD001431.