Abstract

We provide a novel refined attractor-based complexity measurement for Boolean recurrent neural networks that represents an assessment of their computational power in terms of the significance of their attractor dynamics. This complexity measurement is achieved by first proving a computational equivalence between Boolean recurrent neural networks and some specific class of $\omega$-automata, and then translating the most refined classification of $\omega$-automata to the Boolean neural network context. As a result, a hierarchical classification of Boolean neural networks based on their attractive dynamics is obtained, thus providing a novel refined attractor-based complexity measurement for Boolean recurrent neural networks. These results provide new theoretical insights into the computational and dynamical capabilities of neural networks according to their attractive potentialities. An application of our findings is illustrated by the analysis of the dynamics of a simplified model of the basal ganglia-thalamocortical network simulated by a Boolean recurrent neural network. This example shows the significance of measuring network complexity, and how our results bear new founding elements for the understanding of the complexity of real brain circuits.

Introduction

In neural computation, understanding the computational and dynamical properties of biological neural networks is an issue of central importance. In this context, much interest has been focused on comparing the computational power of diverse theoretical neural models with that of abstract computing devices. Nowadays, the computational capabilities of neural models are known to be tightly related to the nature of the activation function of the neurons, to the nature of their synaptic connections, to the eventual presence of noise in the model, to the possibility for the networks to evolve over time, and to the computational paradigm performed by the networks.

The first and seminal results in this direction were provided by McCulloch and Pitts, Kleene, and Minsky, who proved that first-order Boolean recurrent neural networks are computationally equivalent to classical finite state automata [1]–[3]. Kremer extended these results to the class of Elman-style recurrent neural nets [4], and Sperduti discussed the computational power of several other architecturally constrained classes of networks [5].

Later, Siegelmann and Sontag proved that by considering rational synaptic weights and by extending the activation functions of the cells from Boolean to linear-sigmoid, the corresponding neural networks have their computational power drastically increased from finite state automata up to Turing machines [6]–[8]. Kilian and Siegelmann then generalised the Turing universality of neural networks to a broader class of sigmoidal activation functions [9]. The computational equivalence between so-called “rational recurrent neural networks” and Turing machines has now become a standard result in the field.

Following von Neumann's considerations [10], Siegelmann and Sontag further assumed that the variables appearing in the underlying chemical and physical phenomena could be modelled by continuous rather than discrete (rational) numbers, and therefore proposed a study of the computational capabilities of recurrent neural networks equipped with real instead of rational synaptic weights [11]. They proved that the so-called “analog recurrent neural networks” are computationally equivalent to Turing machines with advice, hence capable of super-Turing computational power already in polynomial time of computation [11]. In this context, a proper internal hierarchical classification of analog recurrent neural networks according to the Kolmogorov complexity of their underlying real synaptic weights was described [12].

It was also shown that the presence of an arbitrarily small amount of analog noise seriously reduces the computational capability of both rational- and real-weighted recurrent neural networks to that of finite automata [13]. In the presence of Gaussian or other common analog noise distributions with sufficiently large support, the computational power of recurrent neural networks is reduced to even less than finite automata, namely to the recognition of definite languages [14].

Besides, the concept of evolvability has also turned out to be essential in the study of the computational power of circuits closer to the biological world. The research in this context has initially been focused almost exclusively on the application of genetic algorithms aimed at allowing networks with fully-connected topology and satisfying selected fitness functions (e.g., performing well on specific tasks) to reproduce and multiply [15]–[18]. This approach aimed to optimise the connection weights that determine the functionality of a network with fixed topology. However, the topology of neural networks, i.e. their structure and connectivity patterns, greatly affects their functionality. The evolution of both topologies and connection weights following bioinspired rules that may also include features derived from the study of neural development, differentiation, genetically programmed cell-death and synaptic plasticity rules has become increasingly studied in recent years [19]–[26]. Along this line, Cabessa and Siegelmann provided a theoretical study proving that both models of rational-weighted and analog evolving recurrent neural networks are capable of super-Turing computational capabilities, equivalent to those of static analog neural networks [27].

Finally, from a general perspective, it was argued that the classical computational approach from Turing [28] “no longer fully corresponds to the current notion of computing in modern systems” [29], especially when it refers to bio-inspired complex information processing systems. In the brain (or in organic life in general), information is rather processed in an interactive way [30], where previous experience must affect the perception of future inputs, and where older memories may themselves change in response to new inputs. Following this perspective, Cabessa and Villa described the super-Turing computational power of analog recurrent neural networks involved in a reactive computational framework [31]. Cabessa and Siegelmann provided a characterisation of the Turing and super-Turing capabilities of rational and analog recurrent neural networks involved in a basic interactive computational paradigm, respectively [32]. Moreover, Cabessa and Villa proved that neural models combining the two crucial features of evolvability and interactivity were capable of super-Turing computational capabilities [33].

In this paper, we pursue the study of the computational power of neural models and provide two novel refined attractor-based complexity measurements for Boolean recurrent neural networks. More precisely, as a first step we provide a generalisation to the precise infinite input stream context of the classical equivalence result between Boolean neural networks and finite state automata [1]–[3]. Under some natural condition on the type specification of their attractors, we show that Boolean recurrent neural networks disclose the very same expressive power as deterministic Büchi automata [34]. This equivalence allows us to establish a hierarchical classification of Boolean neural networks by translating the Wagner classification theory from the Büchi automaton to the neural network context [35]. The obtained classification consists of a pre-well ordering of width 2 and height $\omega + 1$ (where $\omega$ denotes the first infinite ordinal). As a second step, we show that by totally relaxing the restrictions on the type specification of their attractors, the Boolean neural networks significantly increase their expressive power from deterministic Büchi automata up to Muller automata. Hence, another more refined hierarchical classification of Boolean neural networks is obtained by translating the Wagner classification theory from the Muller automaton to the neural network context. This classification consists of a pre-well ordering of width 2 and height $\omega^{\omega}$. The complexity measurements induced by these two hierarchical classifications refer to the possibility of networks' dynamics to maximally alternate between attractors of different types along their evolutions. They represent an assessment of the computational power of Boolean neural networks in terms of the significance of their attractor dynamics. Finally, an application of this approach to a Boolean model of the basal ganglia-thalamocortical network is provided. This practical example shows that our automata-theoretical approach might bear new founding elements for the understanding of the complexity of real brain circuits.

Materials and Methods

Network Model

In this work, we focus on synchronous discrete-time first-order recurrent neural networks made up of classical McCulloch and Pitts cells. Such a neural network is modelled by a general labelled directed graph. The nodes and labelled edges of the graph respectively represent the cells and synaptic connections of the network. At each time step, the status of each activation cell can be of only two kinds: firing or quiet. When firing, a cell instantaneously transmits an action potential throughout all its outgoing connections, the intensity of which is equal to the label of the underlying connection. Then, a given cell is firing at time $t+1$ whenever the summed intensity of all the incoming action potentials transmitted at time $t$ by both its afferent cells and background activity exceeds its threshold (which we suppose without loss of generality to be equal to 1). The definition of such a network can be formalised as follows:

Definition 1.

A first-order Boolean recurrent neural network (RNN) consists of a tuple $\mathcal{N} = (X, U, a, b, c)$, where $X = \{x_i : 1 \leq i \leq N\}$ is a finite set of $N$ activation cells, $U = \{u_i : 1 \leq i \leq M\}$ is a finite set of $M$ input units, and $a \in \mathbb{Q}^{N \times N}$, $b \in \mathbb{Q}^{N \times M}$, and $c \in \mathbb{Q}^{N \times 1}$ are rational matrices describing the weighted synaptic connections between cells, the weighted connections from the input units to the activation cells, and the background activity, respectively.

The activation value of cells $x_i$ and input units $u_j$ at time $t$, respectively denoted by $x_i(t)$ and $u_j(t)$, is a Boolean value equal to $1$ if the corresponding cell is firing at time $t$ and equal to $0$ otherwise. Given the activation values $x_j(t)$ and $u_j(t)$, the value $x_i(t+1)$ is then updated by the following equation

$$x_i(t+1) = \theta\left(\sum_{j=1}^{N} a_{ij}\, x_j(t) + \sum_{j=1}^{M} b_{ij}\, u_j(t) + c_i\right), \quad i = 1, \ldots, N \tag{1}$$

where $\theta$ is the classical Heaviside step function, i.e. a hard-threshold activation function defined by $\theta(\alpha) = 1$ if $\alpha \geq 1$ and $\theta(\alpha) = 0$ otherwise.

According to Equation (1), the dynamics of the whole network $\mathcal{N}$ is described by the following governing equation

$$\vec{x}(t+1) = \theta\left(a \cdot \vec{x}(t) + b \cdot \vec{u}(t) + c\right) \tag{2}$$

where $\vec{x}(t) = (x_1(t), \ldots, x_N(t))$ and $\vec{u}(t) = (u_1(t), \ldots, u_M(t))$ are Boolean vectors describing the spiking configuration of the activation cells and input units, and $\theta$ denotes the Heaviside step function applied component by component.
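As a minimal sketch of the update rule above, the following Python snippet performs one synchronous step of a Boolean network. The function names `heaviside` and `step`, the nested-list encoding of the weight matrices, and the two-cell example network are illustrative assumptions that do not come from the original text; the convention that a cell fires when its summed input is at least 1 is likewise assumed here.

```python
from fractions import Fraction


def heaviside(alpha, threshold=1):
    """Hard threshold: 1 if alpha >= threshold, else 0 (assumed convention)."""
    return 1 if alpha >= threshold else 0


def step(a, b, c, x, u):
    """One synchronous update x(t) -> x(t+1) of all activation cells."""
    n, m = len(x), len(u)
    return tuple(
        heaviside(
            sum(a[i][j] * x[j] for j in range(n))    # recurrent input
            + sum(b[i][j] * u[j] for j in range(m))  # external input
            + c[i]                                   # background activity
        )
        for i in range(n)
    )


# Hypothetical network with two cells and one input unit.
half = Fraction(1, 2)
a = [[0, 0], [half, 0]]   # cell 1 receives weight 1/2 from cell 0
b = [[1], [0]]            # the input unit drives cell 0 with weight 1
c = [0, half]             # cell 1 has background activity 1/2

x0 = (0, 0)
x1 = step(a, b, c, x0, (1,))
x2 = step(a, b, c, x1, (0,))
print(x1, x2)
```

With these hypothetical weights, cell 0 fires one step after the input unit fires, and cell 1 fires one step after cell 0 because its background activity of 1/2 adds to the incoming weight of 1/2.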

Such Boolean neural networks have already been proven to have the same computational capabilities as finite state automata [1]–[3]. Furthermore, it can be observed that rational- and real-weighted Boolean neural networks are actually computationally equivalent.

Example 1. Consider the network $\mathcal{N}$ depicted in Figure 1. The dynamics of this network is then governed by the following system of equations:

|

Figure 1. A simple neural network.

The network is formed by two input units ($u_1, u_2$) and three activation cells ($x_1, x_2, x_3$). In this example the synaptic weights all have magnitude 1/2, with a positive sign corresponding to an excitatory input and a negative sign corresponding to an inhibitory input. Notice that two of the cells receive an excitatory background activity weighing 1/2.

Attractors

Neurophysiological Meaningfulness

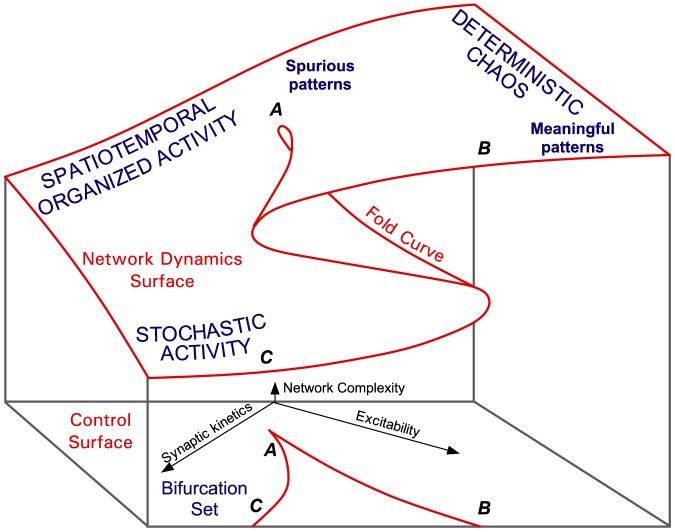

In bio-inspired complex systems, the concept of an attractor has been shown to carry strong biological and computational implications. According to Kauffman: “Because many complex systems harbour attractors to which the system settle down, the attractors literally are most of what the systems do” [36, p. 191]. The central hypothesis for brain attractors is that, once activated by appropriate activity, network behaviour is maintained by continuous reentry of activity [37], [38]. This involves strong correlations between neuronal activities in the network and a high incidence of repeating firing patterns therein, being generated by the underlying attractors. Alternative attractors are commonly interpreted as alternative memories [39]–[46].

Certain pathways through the network may be favoured by preferred synaptic interactions between the neurons following developmental and learning processes [47]–[49]. The plasticity of these phenomena is likely to play a crucial role in shaping the meaningfulness of an attractor, and attractors must be stable at short time scales. Whenever the same information is presented in a network, the same pattern of activity is evoked in a circuit of functionally interconnected neurons, referred to as “cell assembly”. In cell assemblies interconnected in this way, some ordered and precise neurophysiological activity referred to as preferred firing sequences, or spatio-temporal patterns of discharges, may recur above chance levels whenever the same information is presented [50]–[52]. Recurring firing patterns may be detected without a specific association to a stimulus in large spiking neural networks or during spontaneous activity in electrophysiological recordings [53]–[55]. These patterns may be viewed as spurious patterns generated by spurious attractors that are associated with the underlying topology of the network rather than with a specific signal [56]. On the other hand, several examples exist of spatiotemporal firing patterns in behaving animals, from rats to primates [57]–[61], where preferred firing sequences can be associated with specific types of stimuli or behaviours. These can be viewed as meaningful patterns associated with meaningful attractors. However, meaningfulness cannot be reduced to the detection of a behavioural correlate [62]–[64]. The repeating activity in a network may also be considered meaningful if it allows the activation of neural elements that can be associated with other attractors, thus allowing the build-up of higher order dynamics by means of itinerancy between attractor basins and opening the way to chaotic neural dynamics [51], [65]–[70].

The dynamics of rather simple Boolean recurrent neural networks can implement an associative memory with bioinspired features [71], [72]. In the Hopfield framework, stable equilibria of the network that do not represent any valid configuration of the optimisation problem are referred to as spurious attractors. Spurious modes can disappear by “unlearning” [71], but rational successive memory recall can actually be implemented by triggering spurious modes and achieving meaningful memory storage [66], [73]–[77]. In this paper, the notions of attractors, meaningful attractors, and spurious attractors are reformulated in our precise Boolean network context. Networks will then be classified according to their ability to alternate between different types of attractive behaviours. For this purpose, the following definitions need to be introduced.

Formal Definitions

As preliminary notations, for any $k > 0$, the space of $k$-dimensional Boolean vectors is denoted by $\mathbb{B}^k$. For any vector $\vec{x} \in \mathbb{B}^k$ and any $1 \leq i \leq k$, the $i$-th component of $\vec{x}$ is denoted by $x_i$. Moreover, the spaces of finite and infinite sequences of $k$-dimensional Boolean vectors are denoted by $[\mathbb{B}^k]^{*}$ and $[\mathbb{B}^k]^{\omega}$, respectively. Any finite sequence of length $n$ of $[\mathbb{B}^k]^{*}$ will be denoted by an expression of the form $\vec{x}_1 \cdots \vec{x}_n$, and any infinite sequence of $[\mathbb{B}^k]^{\omega}$ by an expression of the form $\vec{x}_1 \vec{x}_2 \vec{x}_3 \cdots$, where each $\vec{x}_i \in \mathbb{B}^k$. For any finite sequence of Boolean vectors $v$, we let the expression $v^{\omega}$ denote the infinite sequence obtained by infinitely many consecutive concatenations of $v$, i.e. $v^{\omega} = vvv\cdots$.

Now, let $\mathcal{N}$ be some network with $N$ activation cells and $M$ input units. For each time step $t \geq 0$, the Boolean vectors $\vec{x}(t) \in \mathbb{B}^N$ and $\vec{u}(t) \in \mathbb{B}^M$ describing the spiking configurations of both the activation cells and input units of $\mathcal{N}$ at time $t$ are called the state of $\mathcal{N}$ at time $t$ and the input submitted to $\mathcal{N}$ at time $t$, respectively. An infinite input stream $s$ of $\mathcal{N}$ is then defined as an infinite sequence of consecutive inputs, i.e. $s = \vec{u}(0)\vec{u}(1)\vec{u}(2)\cdots \in [\mathbb{B}^M]^{\omega}$. Now, assuming the initial state of the network to be $\vec{x}(0) = \vec{0}$, any infinite input stream $s$ induces via Equation (2) an infinite sequence of consecutive states $e_s = \vec{x}(0)\vec{x}(1)\vec{x}(2)\cdots \in [\mathbb{B}^N]^{\omega}$ called the evolution of $\mathcal{N}$ induced by the input stream $s$.

Note that the set of all possible distinct states of a given Boolean network $\mathcal{N}$ is always finite; indeed, if $\mathcal{N}$ possesses $N$ activation cells, then there are at most $2^N$ distinct possible states of $\mathcal{N}$. Hence, any infinite evolution $e_s$ of $\mathcal{N}$ consists of an infinite sequence of only finitely many distinct states. Therefore, in any evolution $e_s$ of $\mathcal{N}$, there necessarily exists at least one state that recurs infinitely many times in the infinite sequence $e_s$, irrespective of whether $e_s$ is periodic or not. The non-empty set of all such states that recur infinitely often in the evolution $e_s$ will be denoted by $\inf(e_s)$.

By definition, every state $\vec{x}$ that is visited only finitely often in $e_s$ will no longer occur in $e_s$ after some time step $t_{\vec{x}}$. By taking the maximum of these time steps $t_{\vec{x}}$, we obtain a global time step $t_s$ such that all states of $e_s$ occurring after time $t_s$ will necessarily repeat infinitely often in $e_s$. Formally, there necessarily exists an index $i_0$ such that, for all $i \geq i_0$, one has $\vec{x}(i) \in \inf(e_s)$. It is important to note that the reoccurrence of the states belonging to $\inf(e_s)$ after time step $t_s$ does not necessarily occur in a periodic manner during the evolution $e_s$. Therefore, any evolution $e_s$ consists of a possibly empty prefix of successive states that repeat only finitely many times, followed by an infinite suffix of successive states that repeat infinitely often, yet not necessarily in a periodic way. A set of states of the form $\inf(e_s) \subseteq \mathbb{B}^N$ for some evolution $e_s$ is commonly called an attractor of $\mathcal{N}$ [36]. A precise definition can be given as follows:

Definition 2. Let $\mathcal{N}$ be some Boolean neural network with $N$ activation cells. A set $A \subseteq \mathbb{B}^N$ is called an attractor for $\mathcal{N}$ if there exists an input stream $s$ such that the corresponding evolution $e_s$ satisfies $\inf(e_s) = A$.

In other words, an attractor of a Boolean neural network is a set of states such that the behaviour of the network could eventually become forever confined to that set of states. In this sense, the definition of an attractor requires the infinite input stream context to be properly formulated.
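For an ultimately periodic input stream, i.e. a finite prefix followed by a finite block repeated forever, the set of states visited infinitely often can be computed by simulation, because the network has only finitely many states. The sketch below illustrates this under stated assumptions: `attractor_of`, `toy_step` and `is_meaningful` are hypothetical names, the two-cell transition function is a stand-in rather than the network of Figure 1, and declaring an attractor meaningful when it contains the all-firing state is just one possible type specification.

```python
def attractor_of(step_fn, x0, prefix, cycle):
    """States visited infinitely often on the input stream prefix . cycle^omega."""
    if not cycle:
        raise ValueError("the repeated block must be non-empty")
    x = x0
    for u in prefix:               # consume the finite prefix
        x = step_fn(x, u)
    seen = {}                      # state at the start of a pass -> pass index
    passes = []                    # states visited during each pass over `cycle`
    while x not in seen:
        seen[x] = len(passes)
        visited = []
        for u in cycle:
            visited.append(x)
            x = step_fn(x, u)
        passes.append(visited)
    # From pass seen[x] onwards, the evolution repeats forever.
    return frozenset(s for p in passes[seen[x]:] for s in p)


def toy_step(x, u):
    # Hypothetical two-cell network: cell 0 copies the input unit,
    # cell 1 copies the previous value of cell 0.
    return (u[0], x[0])


def is_meaningful(attractor):
    # Assumed type specification: meaningful iff the all-firing state occurs.
    return (1, 1) in attractor


zero = (0, 0)
A1 = attractor_of(toy_step, zero, prefix=[], cycle=[(1,), (0,)])
A2 = attractor_of(toy_step, zero, prefix=[], cycle=[(1,)])
print(sorted(A1), is_meaningful(A1))
print(sorted(A2), is_meaningful(A2))
```

In this toy setting the alternating input yields an attractor that never contains the all-firing state, whereas the constant input drives the network into the single state where both cells fire. The procedure only covers ultimately periodic streams; arbitrary infinite streams cannot be handled by finite simulation.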

In this work, we suppose that attractors can only be of two distinct types, namely either meaningful or spurious. For instance, the type of each attractor could be determined by its topological features or by its neurophysiological significance with respect to measurable observations associated with certain behaviours or sensory discriminations (see Section “Neurophysiological Meaningfulness” above). From this point onwards, any given network is assumed to be provided with a corresponding classification of all of its attractors into meaningful and spurious types. The attribution of the attractors to either type will be discussed further in the forthcoming sections.

An infinite input stream $s \in [\mathbb{B}^M]^{\omega}$ of $\mathcal{N}$ is called meaningful if $\inf(e_s)$ is a meaningful attractor, and it is called spurious if $\inf(e_s)$ is a spurious attractor. In other words, an input stream is called meaningful (respectively spurious) if the network dynamics induced by this input stream will eventually become confined into some meaningful (respectively spurious) attractor. Then, the set of all meaningful input streams of $\mathcal{N}$ is called the neural language of $\mathcal{N}$ and is denoted by $L(\mathcal{N})$. Finally, an arbitrary set of input streams $L \subseteq [\mathbb{B}^M]^{\omega}$ is said to be recognisable by some Boolean neural network if there exists a network $\mathcal{N}$ such that $L(\mathcal{N}) = L$.

Besides, if $\mathcal{N}$ denotes some Boolean neural network provided with an additional specification of the type of each of its attractors, then the complementary network $\mathcal{N}^{\complement}$ is defined to be the same network as $\mathcal{N}$ yet with a completely opposite type specification of its attractors. Then, an attractor $A$ is meaningful for $\mathcal{N}$ iff $A$ is a spurious attractor for $\mathcal{N}^{\complement}$ and one has $L(\mathcal{N}^{\complement}) = L(\mathcal{N})^{\complement}$. All preceding definitions are illustrated by the next Example 2.

Example 2. Let us consider the network $\mathcal{N}$ described in Example 1 and illustrated in Figure 1. Let us further assume that the network state where the three cells $x_1, x_2, x_3$ simultaneously fire determines the meaningfulness of the attractors of $\mathcal{N}$. In other words, the meaningful attractors of $\mathcal{N}$ are precisely those containing the state $(1,1,1)^{T}$; all other attractors are assumed to be spurious.

Let us consider a periodic input stream $s$ and its corresponding evolution $e_s$:

|

From some time step onwards, the evolution $e_s$ of $\mathcal{N}$ remains confined in a cyclic visit of a fixed set of states $A$. Thence, the set $A$ is an attractor of $\mathcal{N}$. Moreover, since the state $(1,1,1)^{T}$ does not belong to $A$, the attractor $A$ is spurious. Therefore, the input stream $s$ is also spurious, and hence does not belong to the neural language of $\mathcal{N}$, i.e. $s \notin L(\mathcal{N})$.

Let us consider another periodic input stream $s'$ and its corresponding evolution $e_{s'}$:

|

The set of states $A'$ visited along this evolution is an attractor, and the evolution $e_{s'}$ of $\mathcal{N}$ is confined in $A'$ already from the very first time step $t = 0$. Yet in this case, since the Boolean vector $(1,1,1)^{T}$ belongs to $A'$, the attractor $A'$ is meaningful. It follows that the input stream $s'$ is also meaningful, and thus $s' \in L(\mathcal{N})$.

$\omega$-Automata

Büchi Automata

A finite deterministic Büchi automaton [34] is a 5-tuple $\mathcal{A} = (Q, \Sigma, \iota, \delta, F)$, where $Q$ is a finite set called the set of states, $\Sigma$ is a finite alphabet, $\iota$ is an element of $Q$ called the initial state, $\delta$ is a partial function from $Q \times \Sigma$ into $Q$ called the transition function, and $F$ is a subset of $Q$ called the set of final states. A finite deterministic Büchi automaton is generally represented as a directed labelled graph whose nodes and labelled edges correspond to the states and transitions of the automaton, respectively.

Given some finite deterministic Büchi automaton $\mathcal{A} = (Q, \Sigma, \iota, \delta, F)$, every triple $(q, a, q')$ such that $\delta(q, a) = q'$ is called a transition of $\mathcal{A}$. Then, a path in $\mathcal{A}$ is a sequence of consecutive transitions $\rho = ((q_0, a_1, q_1), (q_1, a_2, q_2), (q_2, a_3, q_3), \ldots)$, also denoted by $\rho : q_0 \xrightarrow{a_1} q_1 \xrightarrow{a_2} q_2 \xrightarrow{a_3} q_3 \cdots$. The path $\rho$ is said to successively visit the states $q_0, q_1, q_2, \ldots$ and the word $a_1 a_2 a_3 \cdots$ is the label of $\rho$. The state $q_0$ is called the origin of path $\rho$ and $\rho$ is said to be initial if its starting state is initial, i.e. if $q_0 = \iota$. If $\rho$ is an infinite path, the set of states visited infinitely many times by $\rho$ is denoted by $\inf(\rho)$.

An infinite initial path $\rho$ of $\mathcal{A}$ is said to be successful if it visits at least one of the final states infinitely often, i.e. if $\inf(\rho) \cap F \neq \emptyset$. An infinite word is then said to be recognised by $\mathcal{A}$ if it is the label of a successful infinite path in $\mathcal{A}$. The language recognised by $\mathcal{A}$, denoted by $L(\mathcal{A})$, is the set of all infinite words recognised by $\mathcal{A}$.
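The acceptance condition above can be checked mechanically for ultimately periodic words, i.e. words of the form "prefix followed by a loop repeated forever". The following is a minimal sketch, assuming the transition function is stored as a Python dictionary; the names `inf_states` and `buchi_accepts` and the example automaton are illustrative assumptions, not part of the original text.

```python
from typing import Dict, Hashable, Optional, Sequence, Set, Tuple

State = Hashable
Letter = Hashable
Delta = Dict[Tuple[State, Letter], State]  # partial transition function


def inf_states(delta: Delta, initial: State,
               prefix: Sequence[Letter], loop: Sequence[Letter]) -> Optional[Set[State]]:
    """Return inf(rho) for the initial path labelled prefix.loop^omega.

    Returns None when the (partial) transition function gets stuck,
    i.e. when the word labels no infinite initial path.
    """
    if not loop:
        raise ValueError("loop must be non-empty")
    q = initial
    for a in prefix:
        if (q, a) not in delta:
            return None
        q = delta[(q, a)]
    boundary_index = {}      # state at the start of a loop round -> round number
    visited_per_round = []   # states entered during each round of the loop
    while q not in boundary_index:
        boundary_index[q] = len(visited_per_round)
        visited = set()
        for a in loop:
            if (q, a) not in delta:
                return None
            q = delta[(q, a)]
            visited.add(q)
        visited_per_round.append(visited)
    # From the first repeated boundary state on, the run is periodic:
    # exactly the states entered in those rounds are visited infinitely often.
    return set().union(*visited_per_round[boundary_index[q]:])


def buchi_accepts(delta: Delta, initial: State, final: Set[State],
                  prefix: Sequence[Letter], loop: Sequence[Letter]) -> bool:
    """Buchi condition: inf(rho) must intersect the set of final states."""
    recurring = inf_states(delta, initial, prefix, loop)
    return recurring is not None and bool(recurring & final)


# Hypothetical example: words over {a, b} containing infinitely many a's.
example_delta = {(0, "a"): 1, (0, "b"): 0, (1, "a"): 1, (1, "b"): 0}
print(buchi_accepts(example_delta, 0, {1}, "", "ab"))   # True
print(buchi_accepts(example_delta, 0, {1}, "a", "b"))   # False
```

Restricting to ultimately periodic words keeps the check finite; the sketch says nothing about arbitrary infinite words, which cannot be given as finite input.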

A cycle in $\mathcal{A}$ consists of a finite set of states $c$ such that there exists a finite path in $\mathcal{A}$ with the same initial and final state and visiting precisely all states of $c$. A cycle $c_1$ is said to be accessible from cycle $c_2$ if there exists a path from some state of $c_2$ to some state of $c_1$. Furthermore, a cycle is called successful if it contains a state belonging to $F$, and non-successful otherwise.

An alternating chain of length $n$ (respectively co-alternating chain of length $n$) is a finite sequence of $n+1$ distinct cycles $(c_0, \ldots, c_n)$ such that $c_0$ is successful (resp. $c_0$ is non-successful), $c_i$ is successful iff $c_{i+1}$ is non-successful, $c_{i+1}$ is accessible from $c_i$, and $c_i$ is not accessible from $c_{i+1}$, for all $0 \leq i < n$. An alternating chain of length $\omega$ is a sequence of two cycles $(c_0, c_1)$ such that $c_0$ is successful, $c_1$ is non-successful, $c_0$ is accessible from $c_1$, and $c_1$ is also accessible from $c_0$ (we recall that $\omega$ denotes the least infinite ordinal). In this case, cycles $c_0$ and $c_1$ are said to communicate. For any $\alpha \leq \omega$, an alternating chain of length $\alpha$ is said to be maximal in $\mathcal{A}$ if there is no alternating chain and no co-alternating chain in $\mathcal{A}$ with a length strictly larger than $\alpha$. A co-alternating chain of length $\alpha$ is said to be maximal in $\mathcal{A}$ if exactly the same condition holds.

The above definitions are illustrated by Example S1 and Figure S1 in File S1.

Muller Automata

A finite deterministic Muller automaton is a 5-tuple $\mathcal{A} = (Q, A, i, \delta, \mathcal{T})$, where $Q$, $A$, $i$, and $\delta$ are defined exactly as for deterministic Büchi automata, and $\mathcal{T}$ is a set of sets of states called the table of the automaton. The notions of transition and path are defined as for deterministic Büchi automata. An infinite initial path $\rho$ of $\mathcal{A}$ is now called successful if $\inf(\rho) \in \mathcal{T}$. Given a finite deterministic Muller automaton $\mathcal{A}$, a cycle in $\mathcal{A}$ is called successful if it belongs to $\mathcal{T}$, and non-successful otherwise. An infinite word is then said to be recognised by $\mathcal{A}$ if it is the label of a successful infinite path in $\mathcal{A}$, and the $\omega$-language recognised by $\mathcal{A}$, denoted by $L(\mathcal{A})$, is defined as the set of all infinite words recognised by $\mathcal{A}$. The class of all $\omega$-languages recognisable by some deterministic Muller automaton is precisely the class of $\omega$-rational languages [79].

It can be shown that deterministic Muller automata are strictly more powerful than deterministic Büchi automata, but have the same expressive power as non-deterministic Büchi automata, Rabin automata, Streett automata, parity automata, and non-deterministic Muller automata [81].
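To make the gap between the two acceptance conditions concrete, the sketch below checks the Muller condition on an ultimately periodic word. The example automaton recognises the words over {a, b} containing only finitely many b's, a standard example of a language that no deterministic Büchi automaton recognises. The function name `muller_accepts`, the state names, and the assumption that the transition function is total are all illustrative choices made here.

```python
from typing import Dict, FrozenSet, Hashable, Sequence, Set, Tuple

State = Hashable
Letter = Hashable


def muller_accepts(delta: Dict[Tuple[State, Letter], State], initial: State,
                   table: Set[FrozenSet[State]],
                   prefix: Sequence[Letter], loop: Sequence[Letter]) -> bool:
    """Muller condition on the word prefix.loop^omega: inf(rho) must belong to the table.

    Assumes a total transition function (a missing entry raises KeyError).
    """
    if not loop:
        raise ValueError("loop must be non-empty")
    q = initial
    for a in prefix:
        q = delta[(q, a)]
    boundary_index = {}      # state at the start of a loop round -> round number
    visited_per_round = []   # states entered during each round of the loop
    while q not in boundary_index:
        boundary_index[q] = len(visited_per_round)
        visited = set()
        for a in loop:
            q = delta[(q, a)]
            visited.add(q)
        visited_per_round.append(visited)
    # States entered during the periodic rounds are exactly inf(rho).
    recurring = frozenset().union(*visited_per_round[boundary_index[q]:])
    return recurring in table


# Hypothetical two-state automaton: the current state remembers the last letter read.
example_delta = {(q, x): "q" + x for q in ("qa", "qb") for x in ("a", "b")}
example_table = {frozenset({"qa"})}  # successful iff only "qa" recurs, i.e. finitely many b's

print(muller_accepts(example_delta, "qa", example_table, "b", "a"))   # True
print(muller_accepts(example_delta, "qa", example_table, "", "ab"))   # False
```

The table lets the automaton require that a state does not recur, which a set of final states alone cannot express.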

For each ordinal $\alpha$ such that $0 < \alpha < \omega^\omega$, we introduce the concept of an alternating tree of length $\alpha$ in a deterministic Muller automaton $\mathcal{A}$, which consists of a tree-like disposition of the successful and non-successful cycles of $\mathcal{A}$ induced by the ordinal $\alpha$, as illustrated in Figure 2. In order to describe this tree-like disposition, we first recall that any ordinal $0 < \alpha < \omega^\omega$ can uniquely be written in the form $\alpha = \omega^{n_p} \cdot m_p + \omega^{n_{p-1}} \cdot m_{p-1} + \cdots + \omega^{n_0} \cdot m_0$, for some $p \geq 0$, $n_p > n_{p-1} > \cdots > n_0 \geq 0$, and $m_i > 0$. Then, given some deterministic Muller automaton $\mathcal{A}$ and some strictly positive ordinal $\alpha$, an alternating tree (respectively co-alternating tree) of length $\alpha$ is a sequence of cycles of $\mathcal{A}$ such that:

Figure 2. An alternating tree of length $\alpha$, for some ordinal $0 < \alpha < \omega^\omega$.

Illustration of the inclusion and accessibility relations between cycles forming an alternating tree of length $\alpha$.

 is successful (respectively non-successful);

 , and  is successful iff  is non-successful;

 is accessible from  , and  is successful iff  is non-successful;

 and  are both accessible from  , and each  is successful whereas each  is non-successful.

An alternating tree of length $\alpha$ is said to be maximal in $\mathcal{A}$ if there is no alternating or co-alternating tree in $\mathcal{A}$ of length $\beta > \alpha$. A co-alternating tree of length $\alpha$ is said to be maximal in $\mathcal{A}$ if exactly the same condition holds. An alternating tree of length $\alpha$ is illustrated in Figure 2.
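As a worked instance of the unique decomposition of an ordinal below $\omega^\omega$ used to define tree lengths, consider the ordinal $\omega^2 \cdot 3 + \omega + 5$. The labels $p$, $n_k$, and $m_k$ below are naming assumptions made for this illustration: $p + 1$ is the number of terms, the $n_k$ are the exponents, and the $m_k$ are the coefficients.

```latex
\[
\alpha \;=\; \omega^{2}\cdot 3 \;+\; \omega^{1}\cdot 1 \;+\; \omega^{0}\cdot 5,
\qquad p = 2,\quad (n_2, n_1, n_0) = (2, 1, 0),\quad (m_2, m_1, m_0) = (3, 1, 5).
\]
```

The exponents are strictly decreasing and every coefficient is a positive integer, which is what makes the decomposition unique.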

The above definitions are illustrated by Example S2 and Figure S2 in File S2.

Results

Hierarchical Classification of Neural Networks

Our notion of an attractor refers to a set of states such that the behaviour of the network could forever be confined into that set of states. In other words, an attractor corresponds to a cyclic behaviour of the network produced by an infinite input stream. According to these considerations, we generalise to this infinite input stream context the classical equivalence result between Boolean neural networks and finite state automata [1]–[3]. More precisely, we show that, under some natural conditions on the specification of the type of their attractors, Boolean recurrent neural networks have the very same expressive power as deterministic Büchi automata. This equivalence result enables us to establish a hierarchical classification of neural networks by translating the Wagner classification theory from the Büchi automaton to the neural network context [35]. The obtained classification is intimately related to the attractive properties of the neural networks, and hence provides a new refined measurement of the computational power of Boolean neural networks in terms of their attractive behaviours.

Boolean Recurrent Neural Networks and Büchi Automata

We now prove that, under some natural conditions, Boolean recurrent neural networks are computationally equivalent to deterministic Büchi automata. Towards this purpose, we consider that the neural networks include selected elements belonging to an output layer. The activation of the output layer communicates the output of the system to the environment.

Formally, let us consider a recurrent neural network $\mathcal{N}$, as described in Definition 1, with $N$ activation cells and $M$ input units. In addition, let us assume that $K$ cells chosen among the $N$ activation cells form the output layer of the neural network, denoted by $X_{\mathrm{output}}$. For graphical purposes, the activation cells of the output layer are represented as double-circled nodes in the next figures. Thus, a recurrent neural network is now defined by a tuple $\mathcal{N} = (X, U, a, b, c, X_{\mathrm{output}})$. Let us assume also that the specification type of the attractors of a network $\mathcal{N}$ is naturally related to its output layer as follows: an attractor $Y$ of $\mathcal{N}$ is considered meaningful if it contains at least one state where some output cell is spiking, i.e. if there exist $\vec{y} \in Y$ and $x_i \in X_{\mathrm{output}}$ such that $y_i = 1$; the attractor $Y$ is considered spurious otherwise. According to these assumptions, meaningful attractors refer to the cyclic behaviours of the network that induce some response activity of the system via its output layer, whereas spurious attractors refer to the cyclic behaviours of the system that do not evoke any response at all of the output layer.

It can be stated that the expressive powers of Boolean recurrent neural networks and deterministic Büchi automata are equivalent. As a first step towards this result, the following proposition shows that any Boolean recurrent neural network can be simulated by some deterministic Büchi automaton.

Proposition 1.

Let $\mathcal{N}$ be some Boolean recurrent neural network provided with an output layer. Then there exists a deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}}$ such that $L(\mathcal{N}) = L(\mathcal{A}_{\mathcal{N}})$.

Proof. Let $\mathcal{N}$ be some neural network given by the tuple $(X, U, a, b, c, X_{\mathrm{output}})$, with $|X| = N$, $|U| = M$, and $X_{\mathrm{output}} \subseteq X$. Consider the deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}} = (Q, A, i, \delta, F)$, where $Q = \mathbb{B}^N$, $A = \mathbb{B}^M$, $i$ is the $N$-dimensional zero vector, $F = \{\vec{y} \in Q : y_k = 1 \text{ for some cell } x_k \in X_{\mathrm{output}}\}$, and $\delta : Q \times A \to Q$ is the function defined by $\delta(\vec{y}, \vec{u}) = \vec{y}'$ iff $\vec{y}'$ is the state reached by $\mathcal{N}$ from state $\vec{y}$ on input $\vec{u}$ according to Equation 1. Note that the complexity of the transformation is exponential, since $|Q| = 2^N$ and $|A| = 2^M$.

According to this construction, any infinite evolution $\vec{y}(0)\vec{y}(1)\vec{y}(2)\cdots$ of $\mathcal{N}$ naturally induces a corresponding infinite initial path $\rho : \vec{y}(0) \xrightarrow{\vec{u}(0)} \vec{y}(1) \xrightarrow{\vec{u}(1)} \vec{y}(2) \cdots$ in $\mathcal{A}_{\mathcal{N}}$. Moreover, by the definitions of meaningful and spurious attractors of $\mathcal{N}$, an infinite input stream $s$ is meaningful for $\mathcal{N}$ iff $s$ is recognised by $\mathcal{A}_{\mathcal{N}}$. In other words, $s \in L(\mathcal{N})$ iff $s \in L(\mathcal{A}_{\mathcal{N}})$, and therefore $L(\mathcal{N}) = L(\mathcal{A}_{\mathcal{N}})$.
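The construction used in the proof of Proposition 1 can be sketched in a few lines. The sketch assumes that the network dynamics of Equation 1 take the usual threshold form, where cell $i$ fires at time $t+1$ iff the weighted sum of the cell activations, the inputs, and the bias at time $t$ reaches a threshold (taken to be 1 here). Since Definition 1 and Equation 1 lie outside this section, the matrix names `a`, `b`, `c`, the threshold value, and the function name `network_to_buchi` are assumptions made for illustration.

```python
from itertools import product


def network_to_buchi(a, b, c, output_cells, threshold=1):
    """Build (Q, A, initial, delta, F) from a Boolean threshold network.

    a: N x N cell-to-cell weights, b: N x M input-to-cell weights,
    c: N biases, output_cells: indices of the cells in the output layer.
    ASSUMPTION: x_i(t+1) = 1 iff sum_j a[i][j]*x_j(t) + sum_j b[i][j]*u_j(t) + c[i] >= threshold.
    """
    n = len(c)
    m = len(b[0]) if n else 0
    states = list(product((0, 1), repeat=n))    # Q: all Boolean vectors of dimension N
    letters = list(product((0, 1), repeat=m))   # A: all Boolean vectors of dimension M

    def step(x, u):
        return tuple(
            1 if (sum(a[i][j] * x[j] for j in range(n))
                  + sum(b[i][j] * u[j] for j in range(m))
                  + c[i]) >= threshold else 0
            for i in range(n)
        )

    delta = {(x, u): step(x, u) for x in states for u in letters}
    initial = (0,) * n                          # the N-dimensional zero vector
    final = {x for x in states if any(x[i] for i in output_cells)}
    return states, letters, initial, delta, final


# Hypothetical network: cell 0 copies the input, cell 1 (the output cell) copies cell 0.
states, letters, initial, delta, final = network_to_buchi(
    a=[[0, 0], [1, 0]], b=[[1], [0]], c=[0, 0], output_cells=[1]
)
print(len(states), len(letters), len(delta))    # 4 2 8
```

The exponential blow-up noted in the proof is visible directly: the state list has $2^N$ entries and the alphabet has $2^M$ entries, whether or not every state is reachable.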

According to the construction given in the proof of Proposition 1, any infinite evolution of the network $\mathcal{N}$ is naturally associated with a corresponding infinite initial path in the automaton $\mathcal{A}_{\mathcal{N}}$, and conversely, any infinite initial path in $\mathcal{A}_{\mathcal{N}}$ corresponds to some possible infinite evolution of $\mathcal{N}$. Consequently, there is a one-to-one correspondence between the attractors of the network $\mathcal{N}$ and the cycles in the graph of the corresponding Büchi automaton $\mathcal{A}_{\mathcal{N}}$. As a result, a procedure to compute all possible attractors of a given network $\mathcal{N}$ is obtained by first constructing the corresponding deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}}$ and then listing all cycles in the graph of $\mathcal{A}_{\mathcal{N}}$.
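The second step of this procedure, listing the cycles of the automaton graph, can be sketched by brute force. A set of states is a cycle exactly when some closed path visits precisely its states, i.e. when the subgraph induced by the set is strongly connected and contains at least one edge. The sketch below restricts attention to states reachable from the initial state, which is a design choice made here; the function names are illustrative assumptions, and the enumeration is exponential in the number of states, so it only suits small examples.

```python
from itertools import combinations


def reachable(delta, initial):
    """Return the states reachable from `initial` and the successor map of the graph."""
    succ = {}
    for (q, _letter), q2 in delta.items():
        succ.setdefault(q, set()).add(q2)
    seen, stack = {initial}, [initial]
    while stack:
        q = stack.pop()
        for q2 in succ.get(q, ()):
            if q2 not in seen:
                seen.add(q2)
                stack.append(q2)
    return seen, succ


def is_cycle(candidate, succ):
    """True iff a closed path visits precisely the states of `candidate`."""
    c = set(candidate)
    start = next(iter(c))

    def closure(neighbours):
        # States of c reached from `start` by a NON-EMPTY path staying inside c.
        seen, stack = set(), [start]
        while stack:
            q = stack.pop()
            for q2 in neighbours(q):
                if q2 in c and q2 not in seen:
                    seen.add(q2)
                    stack.append(q2)
        return seen

    forward = closure(lambda q: succ.get(q, ()))
    backward = closure(lambda q: (p for p in c if q in succ.get(p, ())))
    return forward == c and backward == c


def all_cycles(delta, initial):
    """List every cycle (as a frozenset of states) among the reachable states."""
    states, succ = reachable(delta, initial)
    ordered = sorted(states, key=repr)
    return [frozenset(subset)
            for size in range(1, len(ordered) + 1)
            for subset in combinations(ordered, size)
            if is_cycle(subset, succ)]


# Hypothetical two-state automaton with final state 1.
example_delta = {(0, "a"): 1, (0, "b"): 0, (1, "a"): 1, (1, "b"): 0}
cycles = all_cycles(example_delta, 0)
successful = [c for c in cycles if c & {1}]   # cycles containing a final state
print(len(cycles), len(successful))           # 3 2
```

Under the correspondence described above, the successful cycles play the role of meaningful attractors and the remaining cycles that of spurious attractors.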

As a second step towards the equivalence result, we prove now that any deterministic Büchi automaton can be simulated by some Boolean recurrent neural network.

Proposition 2.

Let $\mathcal{A}$ be some deterministic Büchi automaton over the alphabet $\mathbb{B}^M$, with $M > 0$. Then there exists a Boolean recurrent neural network $\mathcal{N}_{\mathcal{A}}$ provided with an output layer such that $L(\mathcal{A}) = L(\mathcal{N}_{\mathcal{A}})$.

Proof. Let $\mathcal{A} = (Q, A, i, \delta, F)$ be some deterministic Büchi automaton over alphabet $A = \mathbb{B}^M$, with $M > 0$, and $Q = \{q_1, \ldots, q_n\}$. Consider the network $\mathcal{N}_{\mathcal{A}}$ with $2^M + n + 1$ cells given as follows: firstly, the set of activation cells $X$, where $X$ is decomposed into a set of $2^M$ “letter cells” $X_{\mathrm{letter}}$, a “delay-cell” $x_{\mathrm{delay}}$, and a set of $n$ “state cells” $X_{\mathrm{state}}$; secondly, the set of $M$ “input units” $U$; and thirdly, the output layer $X_{\mathrm{output}}$, which consists of the “state cells” associated with the final states of $\mathcal{A}$. The idea of the simulation is that the “letter cells” and “state cells” of the network $\mathcal{N}_{\mathcal{A}}$ simulate the letters and states currently read and entered by the automaton $\mathcal{A}$, respectively.

Towards this purpose, the weight matrices  ,  , and  are described as follows. Concerning the matrix  : for any  , we consider the binary decomposition of  , namely  , with  , and for any  , we set the weight  ; for all other  , we set  , for any  . Concerning the matrix  : for any  , we set  ; we also set  ; for all other  , we set  . Concerning the matrix  : we set  , and for any  and any  , we set  iff  is a transition of $\mathcal{A}$; otherwise, for any pair of indices  such that  has not been set to  or  , we set  . This construction is illustrated in Figure 3.

Figure 3. The network $\mathcal{N}_{\mathcal{A}}$ described in the proof of Proposition 2.

The network is characterised by a set of $M$ input cells reading the alphabet $\mathbb{B}^M$, $2^M$ “letter cells”, a “delay-cell”, and a set of “state cells”. The idea of the simulation is that the “letter cells” and “state cells” of the network $\mathcal{N}_{\mathcal{A}}$ simulate the letters and states currently read and entered by the automaton $\mathcal{A}$, respectively. In this illustration, we assume that the binary decomposition of  is given by  , so that the “letter cell”  receives synaptic connections of intensities  and  from input cells  and  , respectively, and it receives synaptic connections of intensities  from any other input cells. Consequently, the “letter cell”  becomes active at time $t+1$ iff the sole input cells  and  are active at time $t$. The synaptic connections to other “letter cells” are not illustrated. Moreover, the synaptic connections  model the transition  of automaton $\mathcal{A}$. The synaptic connections modelling other transitions are not illustrated.

According to this construction, if we let  denote the Boolean vector whose components are the  's (for  ), one has that the “letter cell”  will spike at time $t+1$ iff the input vector  is received at time $t$. Moreover, at every time step  , a unique “letter cell”  and “state cell”  are spiking, and, if $\mathcal{A}$ performs the transition  at time  , then network $\mathcal{N}_{\mathcal{A}}$ evokes the spiking pattern  . The relation between the final states $F$ of $\mathcal{A}$ and the output layer of $\mathcal{N}_{\mathcal{A}}$ ensures that any infinite input stream $s$ is recognised by $\mathcal{A}$ if and only if $s$ is meaningful for $\mathcal{N}_{\mathcal{A}}$. Therefore, $L(\mathcal{A}) = L(\mathcal{N}_{\mathcal{A}})$.

The proof of Proposition 2 can be generalised to any network dynamics driven by unate local transition functions $f_i$, for $i = 1, \ldots, N$, rather than by the $N$ threshold local transition functions defined by Equation 1. Since unate functions are a generalisation of threshold functions, this proof can be of interest in the broader context of switching theory.

Propositions 1 and 2 yield the following equivalence between recurrent neural networks and deterministic Büchi automata.

Theorem 1.

Let $L \subseteq (\mathbb{B}^M)^\omega$ for some $M > 0$. Then $L$ is recognisable by some Boolean recurrent neural network provided with an output layer iff $L$ is recognisable by some deterministic Büchi automaton.

Proof. Proposition 1 shows that every language recognisable by some Boolean recurrent neural network is also recognisable by some deterministic Büchi automaton. Conversely, Proposition 2 shows that every language recognisable by some deterministic Büchi automaton is also recognisable by some Boolean recurrent neural network.

The two procedures given in the proofs of Propositions 1 and 2 are illustrated by Example S3 and Figure S3 in File S3.

RNN Hierarchy

In the theory of infinite word reading machines, abstract devices are commonly classified according to the topological complexity of their underlying $\omega$-language (i.e., the languages of infinite words that they recognise). Such classifications provide an interesting measurement of the expressive power of various kinds of infinite word reading machines. In this context, the most refined hierarchical classification of $\omega$-automata, or equivalently of $\omega$-rational languages, is the so-called Wagner hierarchy [35].

Here, this classification approach is translated from the $\omega$-automaton to the neural network context. More precisely, according to the equivalence given by Theorem 1, the Wagner hierarchy can naturally be translated from Büchi automata to Boolean neural networks. As a result, a hierarchical classification of first-order Boolean recurrent neural networks is obtained. Interestingly, the obtained classification is tightly related to the attractive properties of the networks, and, more precisely, refers to the ability of the networks to switch between meaningful and spurious attractive behaviours along their evolutions. Hence, the obtained hierarchical classification provides a new measurement of complexity of neural networks associated with their abilities to switch between different types of attractors along their evolutions.

As a first step, the following facts and definitions need to be introduced. To begin with, for any $M > 0$, the space $(\mathbb{B}^M)^\omega$ can naturally be equipped with the product topology of the discrete topology over $\mathbb{B}^M$. Accordingly, one can show that the basic open sets of $(\mathbb{B}^M)^\omega$ are the sets of infinite sequences of $M$-dimensional Boolean vectors which all begin with the same prefix, or formally, the sets of the form $p \cdot (\mathbb{B}^M)^\omega$, where $p \in (\mathbb{B}^M)^*$. An open set is then defined as a union of basic open sets. Moreover, as usual, a function $f : (\mathbb{B}^{M_1})^\omega \to (\mathbb{B}^{M_2})^\omega$ is said to be continuous iff the inverse image by $f$ of every open set of $(\mathbb{B}^{M_2})^\omega$ is an open set of $(\mathbb{B}^{M_1})^\omega$. Now, given two Boolean recurrent neural networks $\mathcal{N}_1$ and $\mathcal{N}_2$ with $M_1$ and $M_2$ input units respectively, we say that $\mathcal{N}_1$ reduces (or Wadge reduces or continuously reduces) to $\mathcal{N}_2$, denoted by $\mathcal{N}_1 \leq_W \mathcal{N}_2$, iff there exists a continuous function $f : (\mathbb{B}^{M_1})^\omega \to (\mathbb{B}^{M_2})^\omega$ such that, for any input stream $s \in (\mathbb{B}^{M_1})^\omega$, one has $s \in L(\mathcal{N}_1) \Leftrightarrow f(s) \in L(\mathcal{N}_2)$, or equivalently, such that $L(\mathcal{N}_1) = f^{-1}(L(\mathcal{N}_2))$ [78]. Intuitively, $\mathcal{N}_1 \leq_W \mathcal{N}_2$ iff the problem of determining whether some input stream $s$ belongs to the neural language of $\mathcal{N}_1$ (i.e. whether $s$ is meaningful for $\mathcal{N}_1$) reduces via some simple function $f$ to the problem of knowing whether $f(s)$ belongs to the neural language of $\mathcal{N}_2$ (i.e. whether $f(s)$ is meaningful for $\mathcal{N}_2$). The corresponding strict reduction, equivalence relation, and incomparability relation are then naturally defined by $\mathcal{N}_1 <_W \mathcal{N}_2$ iff $\mathcal{N}_1 \leq_W \mathcal{N}_2 \not\leq_W \mathcal{N}_1$, as well as $\mathcal{N}_1 \equiv_W \mathcal{N}_2$ iff $\mathcal{N}_1 \leq_W \mathcal{N}_2 \leq_W \mathcal{N}_1$, and $\mathcal{N}_1 \perp_W \mathcal{N}_2$ iff $\mathcal{N}_1 \not\leq_W \mathcal{N}_2 \not\leq_W \mathcal{N}_1$. Moreover, a network $\mathcal{N}$ is called self-dual if $\mathcal{N} \equiv_W \mathcal{N}^{\complement}$; it is called non-self-dual if $\mathcal{N} \not\equiv_W \mathcal{N}^{\complement}$, which can be proved to be equivalent to saying that $\mathcal{N} \perp_W \mathcal{N}^{\complement}$ [78]. We recall that the network $\mathcal{N}^{\complement}$, as defined in Section “Formal Definitions”, corresponds to the network $\mathcal{N}$ whose type specification of its attractors has been inverted. Consequently, $\mathcal{N}^{\complement}$ does not correspond a priori to some neural network provided with an output layer. By extension, an $\equiv_W$-equivalence class of networks is called self-dual if all its elements are self-dual, and non-self-dual if all its elements are non-self-dual.

The continuous reduction relation over the class of Boolean recurrent neural networks naturally induces a hierarchical classification of networks formally defined as follows:

Definition 3. The collection of all Boolean recurrent neural networks ordered by the reduction “$\leq_W$” is called the RNN hierarchy.

We now provide a precise description of the RNN hierarchy. The result is obtained by drawing a parallel between the RNN hierarchy and the restriction of the Wagner hierarchy to Büchi automata. For this purpose, let us define the DBA hierarchy to be the collection of all deterministic Büchi automata over multidimensional Boolean alphabets $\mathbb{B}^M$ ordered by the continuous reduction relation “$\leq_W$”. More precisely, given two deterministic Büchi automata $\mathcal{A}_1$ and $\mathcal{A}_2$, we set $\mathcal{A}_1 \leq_W \mathcal{A}_2$ iff there exists a continuous function $f$ such that, for any input stream $s$, one has $s \in L(\mathcal{A}_1) \Leftrightarrow f(s) \in L(\mathcal{A}_2)$. The following result shows that the RNN hierarchy and the DBA hierarchy are actually isomorphic. Moreover, a possible isomorphism is given by the mapping described in Proposition 1 which associates to every network $\mathcal{N}$ a corresponding deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}}$.

Proposition 3. The RNN hierarchy and the DBA hierarchy are isomorphic.

Proof. Consider the mapping described in Proposition 1 which associates to every network $\mathcal{N}$ a corresponding deterministic automaton $\mathcal{A}_{\mathcal{N}}$. We prove that this mapping is an embedding from the RNN hierarchy into the DBA hierarchy. Let $\mathcal{N}_1$ and $\mathcal{N}_2$ be any two networks, and let $\mathcal{A}_{\mathcal{N}_1}$ and $\mathcal{A}_{\mathcal{N}_2}$ be their corresponding deterministic Büchi automata. Proposition 1 ensures that $L(\mathcal{N}_1) = L(\mathcal{A}_{\mathcal{N}_1})$ and $L(\mathcal{N}_2) = L(\mathcal{A}_{\mathcal{N}_2})$. Hence, one has $\mathcal{N}_1 \leq_W \mathcal{N}_2$ iff by definition there exists a continuous function $f$ such that $L(\mathcal{N}_1) = f^{-1}(L(\mathcal{N}_2))$ iff there exists a continuous function $f$ such that $L(\mathcal{A}_{\mathcal{N}_1}) = f^{-1}(L(\mathcal{A}_{\mathcal{N}_2}))$, iff by definition $\mathcal{A}_{\mathcal{N}_1} \leq_W \mathcal{A}_{\mathcal{N}_2}$. Therefore $\mathcal{N}_1 \leq_W \mathcal{N}_2$ iff $\mathcal{A}_{\mathcal{N}_1} \leq_W \mathcal{A}_{\mathcal{N}_2}$. It follows that $\mathcal{N}_1 <_W \mathcal{N}_2$ iff $\mathcal{A}_{\mathcal{N}_1} <_W \mathcal{A}_{\mathcal{N}_2}$, proving that the considered mapping is an embedding. We now show that, up to the continuous equivalence relation “$\equiv_W$”, this mapping is also onto. Let $\mathcal{A}$ be some deterministic Büchi automaton. By Proposition 2, there exists a network $\mathcal{N}_{\mathcal{A}}$ such that $L(\mathcal{A}) = L(\mathcal{N}_{\mathcal{A}})$. Moreover, by Proposition 1, the automaton $\mathcal{A}_{\mathcal{N}_{\mathcal{A}}}$ satisfies $L(\mathcal{A}_{\mathcal{N}_{\mathcal{A}}}) = L(\mathcal{N}_{\mathcal{A}}) = L(\mathcal{A})$. It follows that for any infinite input stream $s$, one has $s \in L(\mathcal{A})$ iff $s \in L(\mathcal{A}_{\mathcal{N}_{\mathcal{A}}})$, meaning that both $\mathcal{A} \leq_W \mathcal{A}_{\mathcal{N}_{\mathcal{A}}}$ and $\mathcal{A}_{\mathcal{N}_{\mathcal{A}}} \leq_W \mathcal{A}$ hold (via the identity function, which is continuous), and thus $\mathcal{A} \equiv_W \mathcal{A}_{\mathcal{N}_{\mathcal{A}}}$. Therefore, for any deterministic Büchi automaton $\mathcal{A}$, there exists a neural network $\mathcal{N}_{\mathcal{A}}$ such that $\mathcal{A}_{\mathcal{N}_{\mathcal{A}}} \equiv_W \mathcal{A}$, meaning precisely that up to the continuous equivalence relation “$\equiv_W$”, the mapping $\mathcal{N} \mapsto \mathcal{A}_{\mathcal{N}}$ described in Proposition 1 is onto. This concludes the proof.

By Proposition 3 and the usual results of the DBA hierarchy, a precise description of the RNN hierarchy can be given. First of all, the RNN hierarchy is well-founded, i.e. there is no infinite strictly descending sequence of networks $\mathcal{N}_0 >_W \mathcal{N}_1 >_W \mathcal{N}_2 >_W \cdots$. Moreover, the maximal strict chains in the RNN hierarchy have length $\omega + 1$, meaning that the RNN hierarchy has a height of $\omega + 1$. (A strict chain of length $\alpha$ in the RNN hierarchy is a sequence of neural networks $(\mathcal{N}_k)_{k \in \alpha}$ such that $\mathcal{N}_i <_W \mathcal{N}_j$ iff $i < j$; a strict chain is said to be maximal if its length is at least as large as the length of every other strict chain.) Furthermore, the maximal antichains of the RNN hierarchy have length $2$, meaning that the RNN hierarchy has a width of $2$. (An antichain of length $\alpha$ in the RNN hierarchy is a sequence of neural networks $(\mathcal{N}_k)_{k \in \alpha}$ such that $\mathcal{N}_i \perp_W \mathcal{N}_j$ for all $i, j \in \alpha$ with $i \neq j$; an antichain is said to be maximal if its length is at least as large as the length of every other antichain.) More precisely, it can be shown that incomparable networks are equivalent (for the relation $\equiv_W$) up to complementation, i.e., for any two networks $\mathcal{N}_1$ and $\mathcal{N}_2$, one has $\mathcal{N}_1 \perp_W \mathcal{N}_2$ iff $\mathcal{N}_1$ and $\mathcal{N}_2$ are non-self-dual and $\mathcal{N}_1 \equiv_W \mathcal{N}_2^{\complement}$. These properties imply that, up to equivalence and complementation, the RNN hierarchy is actually a well-ordering. In fact, the RNN hierarchy consists of an infinite alternating succession of pairs of non-self-dual and single self-dual classes, overhung by an additional single non-self-dual class at the first limit level $\omega$, as illustrated in Figure 4.

Figure 4. The RNN hierarchy.

An infinite alternating succession of pairs of non-self-dual classes of networks followed by single self-dual classes of networks, all of them overhung by an additional single non-self-dual class at the first limit level. Circles represent the equivalence classes of networks (with respect to the relation “$\equiv_W$”) and arrows between circles represent the strict reduction “$<_W$” between all elements of the corresponding classes.

For convenience reasons, the degree of a network $\mathcal{N}$ in the RNN hierarchy is defined such that the same degree is shared by both non-self-dual networks at some level and self-dual networks located just one level higher, as illustrated in Figure 4:

|

Moreover, the equivalence between the DBA and RNN hierarchies ensures that the RNN hierarchy is actually decidable, in the sense that there exists an algorithmic procedure which is able to compute the degree of any network in the RNN hierarchy. All the above properties of the RNN hierarchy are summarised in the following result.

Theorem 2.

The RNN hierarchy is a decidable pre-well-ordering of width $2$ and height $\omega + 1$.

Proof. The DBA hierarchy consists of a decidable pre-well-ordering of width $2$ and height $\omega + 1$ [79]. Proposition 3 ensures that the RNN hierarchy and the DBA hierarchy are isomorphic.

The following result provides a detailed description of the decidability procedure of the RNN hierarchy. More precisely, it is shown that the degree of a network $\mathcal{N}$ in the RNN hierarchy corresponds precisely to the maximal number of times that this network might switch between visits of meaningful and spurious attractors along some evolution.

Theorem 3.

Let $\mathcal{N}$ be some network provided with an additional specification of an output layer, $\mathcal{A}_{\mathcal{N}}$ be the corresponding deterministic Büchi automaton of $\mathcal{N}$, and $n \in \mathbb{N}$.

If there exists in $\mathcal{A}_{\mathcal{N}}$ a maximal alternating chain of length $n$ and no maximal co-alternating chain of length $n$, then $d(\mathcal{N}) = n$ and $\mathcal{N}$ is non-self-dual.

Symmetrically, if there exists in $\mathcal{A}_{\mathcal{N}}$ a maximal co-alternating chain of length $n$ but no maximal alternating chain of length $n$, then also $d(\mathcal{N}) = n$ and $\mathcal{N}$ is non-self-dual.

If there exist in $\mathcal{A}_{\mathcal{N}}$ a maximal alternating chain of length $n$ as well as a maximal co-alternating chain of length $n$, then $d(\mathcal{N}) = n$ and $\mathcal{N}$ is self-dual.

If there exists in $\mathcal{A}_{\mathcal{N}}$ a maximal alternating chain of length $\omega$, then $d(\mathcal{N}) = \omega$ and $\mathcal{N}$ is non-self-dual.

Proof. By Proposition 3, the degree of a network $\mathcal{N}$ in the RNN hierarchy is equal to the degree of its corresponding deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}}$ in the DBA hierarchy. Moreover, the degree of a deterministic Büchi automaton in the DBA hierarchy corresponds precisely to the length of a maximal alternating or co-alternating chain contained in this automaton [79].
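The case distinction of Theorem 3 can be read as a small lookup from chain lengths to a degree and a duality type. The following is only a sketch under stated assumptions: the function name `degree_from_chains` is hypothetical, the two arguments are assumed to be the lengths of a maximal alternating and a maximal co-alternating chain that have already been computed, and `math.inf` is used as a stand-in for the ordinal $\omega$.

```python
import math


def degree_from_chains(alt, co_alt):
    """Apply the four cases of Theorem 3.

    alt, co_alt: assumed lengths of a maximal alternating and a maximal
    co-alternating chain (math.inf encodes omega; hypothetical encoding).
    Returns (degree, is_self_dual).
    """
    # Last case of the theorem: an alternating chain of length omega.
    if alt == math.inf:
        return math.inf, False
    # Third case: both kinds of chain reach the same maximal length n.
    if alt == co_alt:
        return alt, True
    # First two cases: only one kind of chain reaches the maximal length n.
    return max(alt, co_alt), False


print(degree_from_chains(2, 1))  # alternating chain is strictly longer
print(degree_from_chains(2, 2))  # both chains have the same length
```

The design choice here is simply to keep the theorem's statement and the chain computation separate, so that the classification step stays a direct transcription of the four cases.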

By Theorem 3, the decidability procedure of the degree of a neural network $\mathcal{N}$ in the RNN hierarchy consists firstly in translating the network $\mathcal{N}$ into its corresponding deterministic Büchi automaton $\mathcal{A}_{\mathcal{N}}$, as described in Proposition 1, and secondly in returning the ordinal $\alpha$ corresponding to the length of a maximal alternating chain or co-alternating chain contained in $\mathcal{A}_{\mathcal{N}}$. Note that this procedure can clearly be achieved by some graph analysis of the automaton $\mathcal{A}_{\mathcal{N}}$, since the graph of $\mathcal{A}_{\mathcal{N}}$ is always finite. Furthermore, since alternating and co-alternating chains are defined in terms of cycles in the graph of the automaton, then according to the biunivocal correspondence between cycles in $\mathcal{A}_{\mathcal{N}}$ and attractors of $\mathcal{N}$, it can be deduced that the complexity of a network in the RNN hierarchy is in fact directly related to the attractive properties of this network.
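The graph analysis mentioned above can be sketched as follows. This is an illustrative sketch, not the procedure of [79]. It rests on several assumptions:

- The automaton is given as a plain successor map with input labels dropped.
- A cycle is successful when it contains a final state.
- The length of a chain is counted as the number of switches between successful and non-successful cycles.
- `math.inf` encodes $\omega$.
- The name `alternation_profile` and its arguments are hypothetical.

```python
import math
from functools import lru_cache


def alternation_profile(edges, initial, final):
    """Return (alt, co_alt): maximal number of switches on chains of cycles
    starting with a successful / non-successful cycle (-1 if none exists)."""
    final = set(final)
    # 1. States reachable from the initial state.
    reachable, stack = {initial}, [initial]
    while stack:
        u = stack.pop()
        for v in edges.get(u, ()):
            if v not in reachable:
                reachable.add(v)
                stack.append(v)

    def closure(nodes):
        # reach[u] = states reachable from u in >= 1 step, staying in `nodes`.
        reach = {u: {v for v in edges.get(u, ()) if v in nodes} for u in nodes}
        changed = True
        while changed:
            changed = False
            for u in nodes:
                new = set().union(*(reach[v] for v in reach[u])) - reach[u]
                if new:
                    reach[u] |= new
                    changed = True
        return reach

    reach = closure(reachable)
    # 2. Non-trivial strongly connected components (states lying on a cycle).
    sccs = {frozenset(v for v in reach[u] if u in reach[v])
            for u in reachable if u in reach[u]}
    # 3. Which kinds of cycles does each component contain?
    kind = {}
    for c in sccs:
        successful = bool(c & final)
        inner = closure(c - final)  # cycles avoiding every final state
        non_successful = any(u in inner[u] for u in inner)
        if successful and non_successful:
            # Both kinds are mutually accessible: unbounded alternation.
            return math.inf, math.inf
        kind[c] = successful

    # 4. Longest alternating chain along the acyclic graph of components.
    @lru_cache(maxsize=None)
    def longest(c):
        u = next(iter(c))
        below = [d for d in sccs
                 if d != c and d <= reach[u] and kind[d] != kind[c]]
        return 1 + max(map(longest, below)) if below else 0

    alt = max((longest(c) for c in sccs if kind[c]), default=-1)
    co_alt = max((longest(c) for c in sccs if not kind[c]), default=-1)
    return alt, co_alt


# Three self-looping states 0 -> 1 -> 2, with state 1 final.
print(alternation_profile({0: [0, 1], 1: [1, 2], 2: [2]}, 0, {1}))  # (1, 2)
```

In the usage example, the only chain starting with a successful cycle switches once (from the loop on state 1 to the loop on state 2), whereas a chain starting with the non-successful loop on state 0 switches twice. The transitive-closure approach is chosen for readability rather than efficiency; for large automata a linear-time strongly connected component algorithm would be preferable.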

More precisely, it can be observed that the complexity measurement provided by the RNN hierarchy actually corresponds to the maximal number of times that a network might alternate between visits of meaningful and spurious attractors along some evolution. Indeed, the existence of a maximal alternating or co-alternating chain $(c_0, \ldots, c_n)$ of length $n$ in $\mathcal{A}_{\mathcal{N}}$ means that every infinite initial path in $\mathcal{A}_{\mathcal{N}}$ might alternate at most $n$ times between visits of successful and non-successful cycles. Yet this is precisely equivalent to saying that every evolution of $\mathcal{N}$ can only alternate at most $n$ times between visits of meaningful and spurious attractors before eventually becoming trapped forever by a last attractor. In this case, Theorem 3 ensures that the degree of $\mathcal{N}$ is equal to $n$. Moreover, the existence of an alternating chain $(c_0, c_1)$ of length $\omega$ in $\mathcal{A}_{\mathcal{N}}$ is equivalent to the existence of an infinite initial path in $\mathcal{A}_{\mathcal{N}}$ that might alternate infinitely many times between visits of the cycles $c_0$ and $c_1$. This is equivalent to saying that there exists an evolution of $\mathcal{N}$ that might alternate infinitely many times between visits of a meaningful and a spurious attractor. By Theorem 3, the degree of $\mathcal{N}$ is equal to $\omega$ in this case. Therefore, the RNN hierarchy provides a new measurement of complexity of neural networks according to their ability to maximally alternate between different types of attractors along their evolutions.

Finally, the decidability procedure of the RNN hierarchy is illustrated by Example S4 in File S4.

Refined Hierarchical Classification of Neural Networks

In this section, we show that by relaxing the restrictions on the specification of the type of their attractors, the networks significantly increase their expressive power from deterministic Büchi automata up to Muller automata [80]. Hence, by translating once again the Wagner classification theory from the Muller automaton to the neural network context, another more refined hierarchical classification of Boolean neural networks can be obtained. The obtained classification is also tightly related to the attractive properties of the networks, and hence provides once again a new refined measurement of complexity of Boolean recurrent neural networks in terms of their attractive behaviours.

Boolean Recurrent Neural Networks and Muller Automata

The assumption that the networks are provided with an additional description of an output layer, which would subsequently influence the type specifications (meaningful/spurious) of their attractors, is not necessary anymore from this point onwards. Instead, let us assume that, for any network, the precise classification of its attractors into meaningful and spurious types is known. How the meaningfulness of the attractors is determined is an issue that is not considered here. For instance, the specification of the type of each attractor might have been determined by its neurophysiological significance with respect to measurable observations associated to certain behaviours or sensory discriminations. Formally, in this section, a recurrent neural network consists of a tuple $\mathcal{N} = (X, U, a, b, c)$, as described in Definition 1, but also provided with an additional specification into meaningful and spurious type for each one of its attractors.