Abstract

Optical coherence tomography (OCT) is the de facto standard imaging modality for ophthalmological assessment of retinal eye disease, and is of increasing importance in the study of neurological disorders. Quantification of the thicknesses of various retinal layers within the macular cube provides unique diagnostic insights for many diseases, but the capability for automatic segmentation and quantification remains quite limited. While manual segmentation has been used for many scientific studies, it is extremely time consuming and is subject to intra- and inter-rater variation. This paper presents a new computational domain, referred to as flat space, and a segmentation method for specific retinal layers in the macular cube using a recently developed deformable model approach for multiple objects. The framework maintains object relationships and topology while preventing overlaps and gaps. The algorithm segments eight retinal layers over the whole macular cube, where each boundary is defined with subvoxel precision. Evaluation of the method on single-eye OCT scans from 37 subjects, each with manual ground truth, shows improvement over a state-of-the-art method.

OCIS codes: (100.0100) Image processing, (170.4470) Ophthalmology, (170.4500) Optical coherence tomography

1. Introduction

Optical coherence tomography (OCT) is an interferometric technique that detects reflected or back-scattered light from tissue. An OCT system probes the retina with a beam of light and lets the reflection interfere with a reference beam originating from the same light source. The resultant interfered signal describes the reflectivity profile along the beam axis; this one-dimensional depth scan is referred to as an A-scan. Multiple adjacent A-scans are used to create two-dimensional (B-scans) and three-dimensional image volumes. OCT has allowed for in vivo microscopic imaging of the human retina, enabling the unprecedented study of ocular diseases. OCT as a modality has provided increased access to the inner workings of the retinal substructures, allowing for detailed cohort analyses of multiple sclerosis (MS) with respect to the macular retinal layers [1–3]. MS is an inflammatory demyelinating disorder of the central nervous system, with neuronal loss in the retina being a primary vector of MS, which is independent of any axonal injury [2, 4]. Although the retina is unmyelinated, inflammation and neuronal loss are commonly observed in the retinas of MS patients. The retinal nerve fiber layer (RNFL) principally consists of axons of ganglion cell neurons, which join together at the optic disc to form the optic nerve. MS is clinically manifested in the optic nerves as optic neuritis; it can also be observed through the degeneration of axons in the optic nerves, resulting in the atrophy of various [5, 6]. For approximately 95% of MS patients there is no visibly pathological corruption of the OCT images as a result of MS; the remaining 5% have microcystic macular edema (MME) [3]. MS subjects with MME were excluded from our cohort.
OCT has also contributed to the study of more traditional ophthalmological conditions such as glaucoma [7–10] and myopia [11], as well as to retinal vasculature [12, 13], and to the exploration of more obliquely related conditions such as Alzheimer’s disease (AD) [14–16] and diabetes [17, 18]. The study of such a wide assortment of pathologies necessitates automated processing, which in the case of macular retinal OCT starts with segmentation of the various retinal cellular layers.

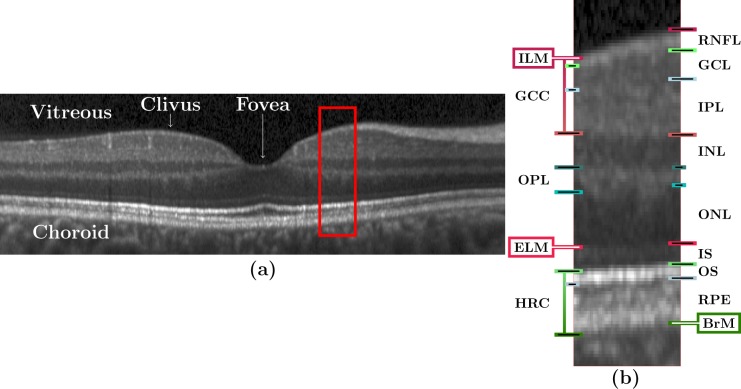

Figure 1 shows a spectral domain OCT image of the macula along with definitions of various regions and layers. In particular, Fig. 1(a) shows a B-scan image through the fovea while Fig. 1(b) shows a zoomed portion with definitions of the various retinal layers and boundaries. It is apparent from these images that the boundary between the GCL and IPL is hard to discern, which is often the case in OCT images. As a result, it is common for OCT layer segmentation methods to treat these two layers as one, which we refer to as the GCIP. The layers our automated algorithm segments (in order from the vitreous to the choroid) are the RNFL, GCIP, INL, OPL, ONL, IS, OS, and RPE. These eight layers (and their associated nine boundaries) are the maximal set that are typically segmented from OCT of the macular retina.

Fig. 1.

(a) A B-scan from a subject in our cohort with annotations indicating the locations of the vitreous, choroid, clivus, and fovea. (The image has been rescaled by a factor of three along each A-scan for display purposes.) The red boxed region is shown magnified (×3) in (b) with markings to denote various layers and boundaries. The layers are: RNFL; ganglion cell (GCL); inner plexiform (IPL); inner nuclear (INL); outer plexiform (OPL); outer nuclear (ONL); inner segment (IS); outer segment (OS); retinal pigment epithelium (RPE). The named boundaries are: inner limiting membrane (ILM); external limiting membrane (ELM); Bruch’s membrane (BrM). The OS and RPE are collectively referred to as the hyper-reflectivity complex (HRC), and the ganglion cell complex (GCC) comprises the RNFL, GCL, and IPL.

There has been a large body of work on macular retinal OCT layer segmentation [19–35]. A diverse array of approaches has been investigated, including methods based on active contours [19, 20], machine learning [23–25], graphs [24–30, 36, 37], dynamic programming [28], texture analysis [21], simple image gradients [31], registration [34], and level sets [35]. None of these methods, with the exception of the active contour method of Ghorbel et al. [19, 20] and the “loosely coupled level sets” (LCLS) method of Novosel et al. [35], defines boundaries between retinal layers in a subvoxel manner. This voxel-level limit in precision manifests itself in layers that are forced to be at least one voxel thick even if they are very thin or absent (which is the case for some layers at the fovea). The thickness constraints can be controlled in the graph-based methods [24–30, 36, 37], allowing for layers of zero thickness, which would enable the segmented surfaces to overlap. However, methods that allow a hard constraint of a zero-thickness layer still do not reflect the true thickness of the layer, which is somewhere between zero and one pixel. Upsampling could be seen as a way to increase the resolution of the data, providing sufficient samples between layers. Upsampling, however, has several drawbacks. First, it fails to address the underlying problem, as layers are still required to be a certain number of voxels thick at the operating resolution used by any of the algorithms. Second, upsampling can introduce spurious artifacts, such as ringing due to the Gibbs phenomenon [38], which would hamper the ability to correctly identify layer boundaries.

The active contour work of Ghorbel et al. [19, 20] generates segmentations on all the layers in a tight window around the fovea (between the clivi) of a B-scan that itself must also pass through the fovea. Hence, this work is limited in its scope and impact. The LCLS method partially addresses the lack of subvoxel precision. Level set methods are inherently capable of subvoxel precision, but since LCLS incorporates a “proper ordering” constraint on pairs of adjacent level sets, it becomes necessary for the zero crossings of these pairs to be separated by at least one voxel position. Therefore, although the thickness of a specific layer can be arbitrarily small at a given voxel position, the layers adjacent to this one must be at least one voxel thick. As in LCLS, our method also uses a level set approach, but we employ three significant extensions. First, we segment all retinal layers simultaneously using a multiple-object geometric deformable model (MGDM) approach [39]. Second, our method operates on the complete 3D volume in concert, unlike many other methods, which use the adjoining B-scans simply to smooth the estimated boundaries [36]. Third, our method uses a geometric transformation of the raw data prior to running MGDM in order to surmount the voxel precision restrictions that are present in LCLS and in the graph-based methods. As MGDM does not allow gaps or overlap of objects, we can create a complete segmentation of the retinal image. In addition, the output MGDM level sets can be considered to represent a partial volume model of the tissues, which is a capability that is not available with current graph-based methods.

2. Methods

Our method builds upon our random forest (RF) [40] based segmentation of the macula [24] and also provides for a new computational domain which we refer to as flat space. First, as is common in the literature, we estimate the boundaries of the vitreous and choroid with the retina. From these estimated boundaries, we apply a mapping that was learned by regression on a collection of manual segmentations, which maps the retinal space between the vitreous and choroid to a domain in which each of the layers is approximately flat, referred to as flat space. In the original space, we use the random forest layer boundary estimation [24] to compute probabilities for the boundaries of each layer and then map them to flat space. These probabilities are then used to drive MGDM [39], providing a segmentation in flat space which is then mapped back to the original space. Detailed descriptions of these steps are provided below.

2.1. Preprocessing

We use several of the preprocessing steps outlined in Lang et al. [24], which we briefly describe here. The first step is intensity normalization, which is necessary because of automatic intensity rescaling and automatic real-time averaging performed by the scanner, both of which lead to differences in the dynamic ranges of B-scans. Intensity normalization is achieved by computing a robust maximum Im and linearly rescaling intensities from the range [0, Im] to the range [0, 1], with values greater than Im being clamped at 1. The robust maximum, Im, is found by first median filtering each individual A-scan within the same B-scan using a kernel size of 15 pixels. The robust maximum is set to a value that is 5% larger than the maximum intensity of the entire median-filtered image. Next, the macular retina is detected by estimating the ILM and the BrM (providing initial estimates that are updated later). Vertical derivatives of a Gaussian-smoothed image (σ = 3 pixels) are computed; the largest positive derivative is associated with the ILM, while the largest negative derivative below the ILM is associated with the BrM. We thus have two collections of gradient extrema, positive and negative, associated with the ILM and BrM respectively. These two collections are crude estimators of the boundaries and contain errors, which are corrected by comparing each collection to the curve obtained by independently median filtering it. Points that are more than 15 voxels from the median-filtered curve are removed as outliers and replaced by interpolated points.
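The intensity normalization described above can be sketched as follows. This is a minimal pure-Python illustration, not the implementation used in this work: the function names are hypothetical, and truncating the median window at the ends of an A-scan is an assumption, since the boundary handling is not specified in the text.

```python
from statistics import median


def median_filter_1d(values, kernel=15):
    """Median-filter one A-scan; the window is truncated at the ends (assumption)."""
    half = kernel // 2
    n = len(values)
    return [median(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def normalize_bscan(bscan, kernel=15, margin=0.05):
    """Rescale a B-scan (a list of A-scans) from [0, Im] to [0, 1], clamping above Im.

    Im is taken to be 5% larger than the maximum of the median-filtered B-scan.
    """
    filtered = [median_filter_1d(ascan, kernel) for ascan in bscan]
    robust_max = (1.0 + margin) * max(max(ascan) for ascan in filtered)
    if robust_max <= 0:
        # Degenerate all-zero image: nothing to rescale.
        return [[0.0 for _ in ascan] for ascan in bscan]
    return [[min(v / robust_max, 1.0) for v in ascan] for ascan in bscan]
```

Because the maximum is taken after median filtering, an isolated bright voxel does not set the dynamic range; it is simply clamped to 1.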

2.2. Flat space

We now introduce the concept of our new computational domain, which we refer to as flat space. We geometrically transform each subject image to a space in which all retina layers of the subject are approximately flat. To learn this transformation, we took OCT scans from 37 subjects comprising a mix of controls and MS patients and manually delineated all retinal layers. We then converted the positions of the boundaries between the manually delineated retinal layers into proportional distances between the BrM (which is given a distance of zero) and the ILM (which is given a distance of one). We then estimated the foveal centers of all scans based on the minimum distance between the ILM and BrM within a small search window about the center of the macula, and then aligned all subjects by placing their foveas at the origin. For each aligned A-scan, we then computed the interquartile mean of the proportional positions of each of the seven retinal layer boundaries between the ILM and BrM.
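The two quantities used to learn the mapping, the proportional position of a boundary and the interquartile mean across subjects, can be illustrated with a short sketch. The function names are hypothetical, depths are assumed to be measured in voxels along the A-scan with the ILM above (at a smaller depth than) the BrM, and the interquartile mean shown is the simple trimmed variant that drops the lowest and highest quarter of the sorted values.

```python
def proportional_position(boundary_depth, ilm_depth, brm_depth):
    """Map a boundary depth to [0, 1]: 0 at the BrM and 1 at the ILM."""
    return (brm_depth - boundary_depth) / (brm_depth - ilm_depth)


def interquartile_mean(values):
    """Mean of the middle half of the sorted values (simple trimmed variant)."""
    ordered = sorted(values)
    q = len(ordered) // 4
    middle = ordered[q:len(ordered) - q]
    return sum(middle) / len(middle)
```

For example, a boundary halfway between an ILM at depth 100 and a BrM at depth 200 has proportional position 0.5, and a single outlying subject has no influence on the interquartile mean of a sufficiently large cohort.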

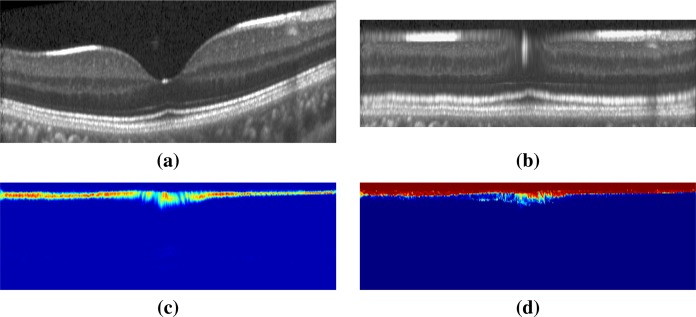

To place OCT data from a new subject into flat space, we first estimate the ILM and BrM boundaries and the foveal center from the subject’s OCT data as outlined above, in Section 2.1. We then estimate the positions of the seven internal boundaries for each A-scan based on the mean proportional positions learned above. Each A-scan is then resampled by linear interpolation so that there is a pixel at each of the nine estimated boundary positions (the ILM, the seven internal boundaries, and the BrM) and ten equally spaced pixels between each pair of adjacent boundaries. In this way, each A-scan is represented by 89 values from the BrM to the ILM (9 boundary pixels plus 8 × 10 intermediate pixels), which we put together into a three-dimensional volume that represents an approximately flat subject macula. An additional ten pixels are added above the ILM and another ten below the BrM to allow for errors in our initial estimates of these two boundaries, resulting in a flat space in which each vertical column has exactly 109 pixels. Figure 2(a) shows a B-scan and Fig. 2(b) shows its flat space mapping.
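The resampling of a single A-scan into flat space can be sketched as below. This is an illustrative sketch only: the function names are hypothetical, depths are in native voxels with the ILM above the BrM, and the spacing of the ten padding samples (one native voxel) is an assumption, since the text does not specify it.

```python
def flat_space_depths(ilm, brm, interior_props, per_gap=10, pad=10):
    """Return the 109 native-space depths sampled for one A-scan.

    interior_props holds the seven learned proportional positions
    (0 at the BrM, 1 at the ILM) of the internal boundaries.
    """
    props = [1.0] + sorted(interior_props, reverse=True) + [0.0]
    bounds = [brm - p * (brm - ilm) for p in props]  # nine boundary depths, ILM first
    depths = [ilm - k for k in range(pad, 0, -1)]  # padding above the ILM
    for top, bottom in zip(bounds, bounds[1:]):
        step = (bottom - top) / (per_gap + 1)
        # the upper boundary of this gap followed by ten intermediate samples
        depths.extend(top + j * step for j in range(per_gap + 1))
    depths.append(bounds[-1])  # the BrM itself
    depths.extend(brm + k for k in range(1, pad + 1))  # padding below the BrM
    return depths


def sample_ascan(ascan, depths):
    """Linearly interpolate an A-scan at fractional depths, clamping at the ends."""
    last = len(ascan) - 1  # assumes at least two samples per A-scan
    out = []
    for d in depths:
        d = min(max(d, 0.0), float(last))
        i = min(int(d), last - 1)
        frac = d - i
        out.append((1 - frac) * ascan[i] + frac * ascan[i + 1])
    return out
```

With nine boundaries, eight gaps of ten samples, and two pads of ten, the returned list has 9 + 80 + 20 = 109 entries, with the ILM at index 10 and the BrM at index 98.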

Fig. 2.

Shown is (a) the original image in native space. In flat space are (b) the original image, (c) a heat map of the probabilities for one of the boundaries (ILM), and (d) the y-component of the GVF field for that same boundary. The color scale in (c) represents zero as blue and one as red.

2.3. RF boundary estimate

We estimate probabilities for the locations of nine boundaries within each OCT image using a previously reported RF method [24]. This method trains a RF using a set of image features and corresponding manual delineations derived from a training set of OCT images. The trained RF can be applied to the image features derived from a new OCT image and used to estimate the probabilities of the locations of nine retinal boundaries within the new OCT image. There are 27 features used in RF training and classification. The first three are the relative location and signed distance of the voxel from the fovea. The next nine are the voxel values in a 3 × 3 neighborhood in the B-scan around the voxel. The last fifteen are various directional derivatives (first and second order) taken after oriented anisotropic Gaussian smoothing at different orientations and scales. The first twelve of these are generated by using the signed value of the first derivative and the magnitude of the second derivative on six images corresponding to two scales and three orientations. The two scales for Gaussian smoothing are σx,y = {5, 1} pixels and σx,y = {10, 2} pixels, while the three orientations are at −10, 0, and 10 degrees from the horizontal. The final three features are the average vertical gradients in an 11 × 11 neighborhood located at 15, 25, and 35 pixels below the current pixel, calculated using a Sobel kernel on the unsmoothed data. Rather than labeling the layers, the RF is trained to label each boundary.

Given a new image, the RF classifier is applied to all of the voxels, producing for each voxel the probability that the voxel belongs to each boundary. Since the RF classification is carried out on the original OCT image, the boundary probabilities are assigned to the voxels of the original OCT image. The RF training and validation were previously carried out in each subject’s native space; for this reason, we keep the RF probability estimation in the subject’s native space. Examples of these nine probability maps are shown in [24]. Here, we add a step to this process: transforming the estimated boundary probability maps to flat space. This allows us to carry out segmentation in flat space while utilizing features (and probabilities) developed on the original images. A probability map for the ILM boundary is shown in flat space in Fig. 2(c).

2.4. MGDM segmentation in flat space

We use MGDM to improve our estimates of the nine boundaries based on the probability maps generated by the RF classifiers. The recently developed MGDM [39] framework allows control over the movement of the boundaries of our ten retinal objects (eight retinal layers plus the choroid and sclera complex, and the vitreous) in order to achieve the final segmentation in flat space. MGDM is a multi-object extension to the conventional geometric level set formulation of active contours [41]. It uses a decomposition of the signed distance functions (SDFs) of all objects that enables efficient contour evolution while preventing object overlaps and gaps from forming. We consider N objects O1, O2, ..., ON that cover a domain Ω with no overlaps or gaps. Let ϕi be the signed distance function for Oi, defined as

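$$\phi_i(x) = \begin{cases} -d_x(O_i), & x \in O_i \\ \phantom{-}d_x(O_i), & x \notin O_i \end{cases} \tag{1}$$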

where

$$d_x(O_i) = \min_{y \in \partial O_i} \lVert x - y \rVert \tag{2}$$

is the shortest distance from point x ∈ Ω to Oi. In a conventional multi-object segmentation formulation, each object is moved according to a speed function fi(x) defined for each object using the standard PDE for level set evolution,

$$\frac{\partial \phi_i(x)}{\partial t} + f_i(x)\,\lvert \nabla \phi_i(x) \rvert = 0. \tag{3}$$

MGDM uses a special representation of the objects and their level set functions to remove the requirement to store all these level set functions. The new representation also enables a convenient capability for boundary-specific speed functions.

We define a set of label functions that give the object at x and all successively nearest neighboring objects

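$$\begin{aligned} L_0(x) &= i \iff x \in O_i \\ L_1(x) &= \arg\min_{i \neq L_0(x)} d_x(O_i) \\ L_2(x) &= \arg\min_{i \neq L_{0,1}(x)} d_x(O_i) \\ &\;\;\vdots \\ L_{N-1}(x) &= \arg\min_{i \neq L_{0,\ldots,N-2}(x)} d_x(O_i). \end{aligned} \tag{4}$$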

We then define functions that give the distance to each successively distant object

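$$\begin{aligned} \psi_0(x) &= d_x\big(O_{L_1(x)}\big) \\ \psi_1(x) &= d_x\big(O_{L_2(x)}\big) - d_x\big(O_{L_1(x)}\big) \\ &\;\;\vdots \\ \psi_{N-2}(x) &= d_x\big(O_{L_{N-1}(x)}\big) - d_x\big(O_{L_{N-2}(x)}\big). \end{aligned} \tag{5}$$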

Together, these functions describe a local “object environment” at each point x. It can be shown that the SDFs at x for all objects can be reconstructed from these functions [39].
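The idea of a local object environment can be made concrete with a small one-dimensional sketch. It is an illustration under stated assumptions rather than the MGDM implementation of [39]: the function names are hypothetical, objects are runs of integer labels on a 1D grid, boundaries are taken to lie halfway between voxel centers, and each stored distance function is assumed to hold the increment in distance from one neighboring object to the next. Only three label functions and three distance functions are kept per point.

```python
def object_environment(labels, x):
    """Return ([L0, L1, L2], [psi0, psi1, psi2]) at grid position x."""
    own = labels[x]
    # Distance from voxel center x to every other object; the boundary is
    # assumed to sit half a voxel before the nearest voxel of that object.
    dists = sorted(
        (min(abs(x - y) for y, lab in enumerate(labels) if lab == obj) - 0.5, obj)
        for obj in set(labels)
        if obj != own
    )
    label_fns = [own] + [obj for _, obj in dists[:2]]
    cumulative = [d for d, _ in dists[:3]]
    # Store increments between successively more distant neighbors.
    dist_fns = [b - a for a, b in zip([0.0] + cumulative, cumulative)]
    return label_fns, dist_fns


def approx_sdf(label_fns, dist_fns, obj):
    """Approximate signed distance at this point for any object."""
    if obj == label_fns[0]:
        return -dist_fns[0]  # inside: minus the distance to the nearest neighbor
    if obj in label_fns[1:]:
        k = label_fns.index(obj)  # 1 for the first neighbor, 2 for the second
        return sum(dist_fns[:k])
    return sum(dist_fns)  # all remaining objects share one value
```

For the label sequence `[0, 0, 0, 1, 1, 1, 2, 2, 3, 3, 3]` and `x = 3`, the stored labels are `[1, 0, 2]` and the stored distances are `[0.5, 2.0, 2.0]`, from which the signed distances to all four objects are recovered.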

The first computational savings of MGDM comes about by realizing that approximations to the SDFs of the closest objects can be computed from just three label functions and three distance functions:

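$$\hat{\phi}_i(x) = \begin{cases} -\psi_0(x), & i = L_0(x) \\ \psi_0(x), & i = L_1(x) \\ \psi_0(x) + \psi_1(x), & i = L_2(x) \\ \psi_0(x) + \psi_1(x) + \psi_2(x), & i \neq L_{0,1,2}(x) \end{cases} \tag{6}$$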

where the notation i ≠ L0,1(x) means i ≠ L0(x) and i ≠ L1(x), while i ≠ L0,1,2(x) means i ≠ L0(x), i ≠ L1(x), and i ≠ L2(x). These are level set functions sharing the same zero level sets as the true signed distance functions; they give a valid representation of the boundary structure of all the objects. Details and conditions of the stability of this approximation are in Bogovic et al. [39]. Therefore, storing just these six functions (three label functions and three distance functions) is sufficient to describe the local object environment of every point in the domain.

The second computational savings of MGDM comes about by realizing that the evolution equations in Eq. (3) can be carried out by updating the distance functions instead of the signed distance functions, as follows

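$$\frac{\partial \psi_k(x)}{\partial t} = \frac{1}{2}\left( f_{L_k}(x) - f_{L_{k+1}}(x) \right) \lvert \nabla \psi_k(x) \rvert \tag{7}$$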

for all k in the narrow band (voxels near the boundaries). Evolving these distance functions must be carried out by starting with ψ2, then ψ1, and finally ψ0; any changes to the ordering of object neighbors during this process are reflected by changes in the associated label functions. Reconstruction of the actual objects themselves need not be carried out until after the very last iteration of the evolution. To accomplish this, an isosurface algorithm is run on each level set function, and the object boundaries will have subvoxel resolution by virtue of the level sets.

Since MGDM keeps track of both the object label and its nearest neighbor at each x, the update Eq. (7) is very fast to carry out. Furthermore, it can use both object-specific and boundary-specific speed functions. Given the index of the object and its neighbor stored at each x, a simple index into a lookup table gives the appropriate speed functions to use for that object and particular boundary. This aspect of MGDM is used in the OCT implementation described in this paper.

We use two speed functions to drive MGDM. The first speed function is the inner product of a gradient vector flow (GVF) [42] field computed from a specific boundary probability map with the gradient of the associated signed distance function. Nine GVF fields are computed, one for each retinal boundary, which ensures a large capture range—i.e., the entire computational domain—for each boundary that MGDM must identify. The second speed function that is used in MGDM is a conventional curvature speed term [41]. Since the curvature speed term encourages flat surfaces, this is a perfect regularizer to use in flat space.
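The first speed term, the inner product of a GVF field with the gradient of a signed distance function, can be illustrated on a 2D grid. This sketch is not the implementation used here: the function names are hypothetical, the GVF field is assumed to be precomputed and stored as (row, column) vector components per pixel, gradients are approximated by central differences (one-sided at the border), and the sign convention of the resulting speed is left unspecified.

```python
def gradient(phi, r, c):
    """Finite-difference gradient (d/drow, d/dcol) of a 2D grid at (r, c)."""
    def diff(get, i, n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        return (get(hi) - get(lo)) / (hi - lo) if hi > lo else 0.0

    d_row = diff(lambda i: phi[i][c], r, len(phi))
    d_col = diff(lambda j: phi[r][j], c, len(phi[0]))
    return d_row, d_col


def gvf_speed(gvf, phi, r, c):
    """Inner product of the GVF vector with the gradient of phi at (r, c)."""
    v_row, v_col = gvf[r][c]
    d_row, d_col = gradient(phi, r, c)
    return v_row * d_row + v_col * d_col
```

In flat space the signed distance function of a layer boundary varies almost entirely along the A-scan direction, so the speed is dominated by the vertical component of the GVF field, as in Fig. 2(d).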

MGDM segments all retinal layers (plus the vitreous and choroid) at once. Like the topology-preserving geometric deformable model (TGDM) [43], which maintains single-object topology using the simple point concept, MGDM is capable of preserving multiple object relationships using the digital homeomorphism concept, which is an extension of the simple point concept to multiple objects [44]. We therefore use the digital homeomorphism constraint in MGDM to maintain proper layer ordering. For the simple object arrangement in the retina (i.e., ordered layers), this constraint amounts to the same constraint used in LCLS [35]. Because MGDM operates in flat space, however, it does not carry the same one-voxel layer thickness limitation on the final boundary segmentation.

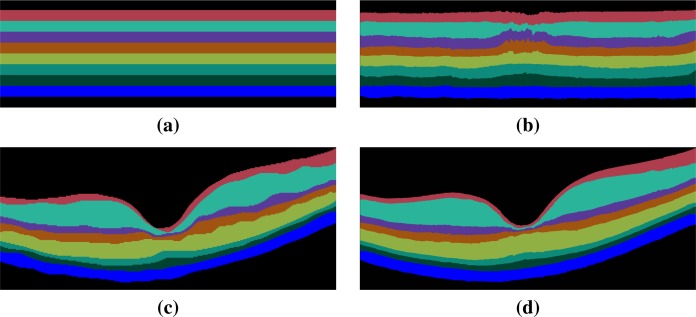

MGDM uses the two forces together with topology control to move the retinal layers in flat space until convergence. The flat space segmentation is then mapped back into the subject’s native space. Using MGDM in flat space has several computational advantages. First, the curvature force is a natural choice since all the layers are nominally flat. Second, flat space allows for greater precision of surface definition, since mapping the MGDM result back to native space can put surfaces much closer together than one voxel. Third, flat space is a smaller digital image domain than native space, since there are only 109 vertices per A-scan by construction, which reduces the computational burden. An example of an MGDM initialization in flat space is shown in Fig. 3(a) and the result for the same subject is shown in flat space in Fig. 3(b). The MGDM result mapped back to native space is shown in Fig. 3(d).

Fig. 3.

Shown in flat space are (a) the MGDM initialization, and (b) the MGDM result. The MGDM result mapped back to the native space of the subject is shown in (d) and for comparison the manual segmentation of the same subject is shown in (c). The same color map is used in this figure and in Fig. 4.

3. Experiments and results

3.1. Data

Data from the right eyes of 37 subjects (a mixture of 16 healthy controls and 21 MS patients) were obtained using a Spectralis OCT system (Heidelberg Engineering, Heidelberg, Germany). Seven subjects from the cohort were picked at random and used to train the RF boundary classifier. The RF classifier has previously been shown [24] to be robust and independent of the training data and thus should not have introduced any bias in the results. The research protocol was approved by the local Institutional Review Board, and written informed consent was obtained from all participants. All scans were screened and found to be free of microcystic macular edema, which is sometimes found in a small percentage of MS subjects [3].

All scans were acquired using the Spectralis scanner’s automatic real-time function in order to improve image quality by averaging 12 images of the same location (ART setting was fixed at 12). The resulting scans had signal-to-noise ratios (SNR) of between 20 dB and 38 dB, with a mean SNR of 30.6 dB. Macular raster scans (20° × 20°) were acquired with 49 B-scans, each B-scan having 1024 A-scans with 496 voxels per A-scan. The B-scan resolution varied slightly between subjects and averaged 5.8 μm laterally and 3.9 μm axially. The through-plane distance (slice separation) averaged 123.6 μm between images, resulting in an imaging area of approximately 6 × 6 mm. We note that our data is of a higher resolution and better SNR than many other recent publications. The nine layer boundaries were manually delineated on all B-scans for all subjects by a single rater using an internally developed protocol and software tool. The manual delineations were performed by clicking on approximately 20–50 points along each layer border followed by interpolation between the points using a cubic B-spline. Visual feedback was used to move points to ensure a correct boundary.

3.2. Results

We compared our multi-object geometric deformable models based approach to our previous work (RF+Graph) [24] on all 37 subjects. In terms of computational performance, we are currently not competitive with RF+Graph, which takes only four minutes on a 3D volume of 49 B-scans. However, our MGDM implementation is written in a generic framework and an optimized method based on a GPU framework could offer 10 to 20 fold speed up [45]. To compare the two methods, we computed the Dice coefficient [46] of the automatically segmented layers against manual delineations. The Dice coefficient is a measure of how much the two segmentations agree with each other. It has a range of [0, 1], with a score of 0 meaning complete contradiction between the two, while 1 represents complete concurrence.
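For reference, the Dice coefficient of one layer can be computed from two label maps as in the following sketch. The function name is hypothetical, the label maps are assumed to be flattened to equal-length sequences of per-voxel layer labels, and returning 1 when a layer is absent from both segmentations is a convention chosen here for illustration.

```python
def dice_coefficient(auto_labels, manual_labels, layer):
    """Dice overlap 2|A ∩ M| / (|A| + |M|) for one layer label."""
    in_auto = [lab == layer for lab in auto_labels]
    in_manual = [lab == layer for lab in manual_labels]
    overlap = sum(a and m for a, m in zip(in_auto, in_manual))
    total = sum(in_auto) + sum(in_manual)
    # Convention (assumption): a layer absent from both maps counts as agreement.
    return 2.0 * overlap / total if total else 1.0
```

For example, if a layer occupies three voxels in each segmentation and two of them coincide, the Dice coefficient is 2 × 2 / (3 + 3) ≈ 0.667.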

The resulting Dice coefficients are shown in Table 1. The mean Dice coefficient is larger for MGDM than for RF+Graph in all layers. Further, we used a paired Wilcoxon rank sum test to compare the distributions of the Dice coefficients. The resulting p-values in Table 1 show that six of the eight layers reach significance (α level of 0.001). MGDM therefore performs significantly better than RF+Graph in six of the eight layers. The two remaining layers (INL and OPL) lack statistical significance because of the large variance.

Table 1.

Mean (and standard deviations) of the Dice Coefficient across the eight retinal layers. A paired Wilcoxon rank sum test was used to test the significance of any improvement between RF+Graph [24] and our method, with strong significance (an α level of 0.001) in six of the eight layers.

| Layer | Dice coefficient: RF+Graph [24] | Dice coefficient: MGDM | P-value |
|---|---|---|---|
| RNFL | 0.877 (±0.0533) | 0.903 (±0.0279) | < 0.001 |
| GCIP | 0.892 (±0.0529) | 0.911 (±0.0290) | < 0.001 |
| INL | 0.806 (±0.0261) | 0.811 (±0.0339) | 0.238 |
| OPL | 0.855 (±0.0164) | 0.860 (±0.0250) | 0.294 |
| ONL | 0.909 (±0.0186) | 0.926 (±0.0163) | < 0.001 |
| IS | 0.751 (±0.0295) | 0.805 (±0.0225) | < 0.001 |
| OS | 0.816 (±0.0323) | 0.846 (±0.0301) | < 0.001 |
| RPE | 0.884 (±0.0227) | 0.900 (±0.0268) | < 0.001 |

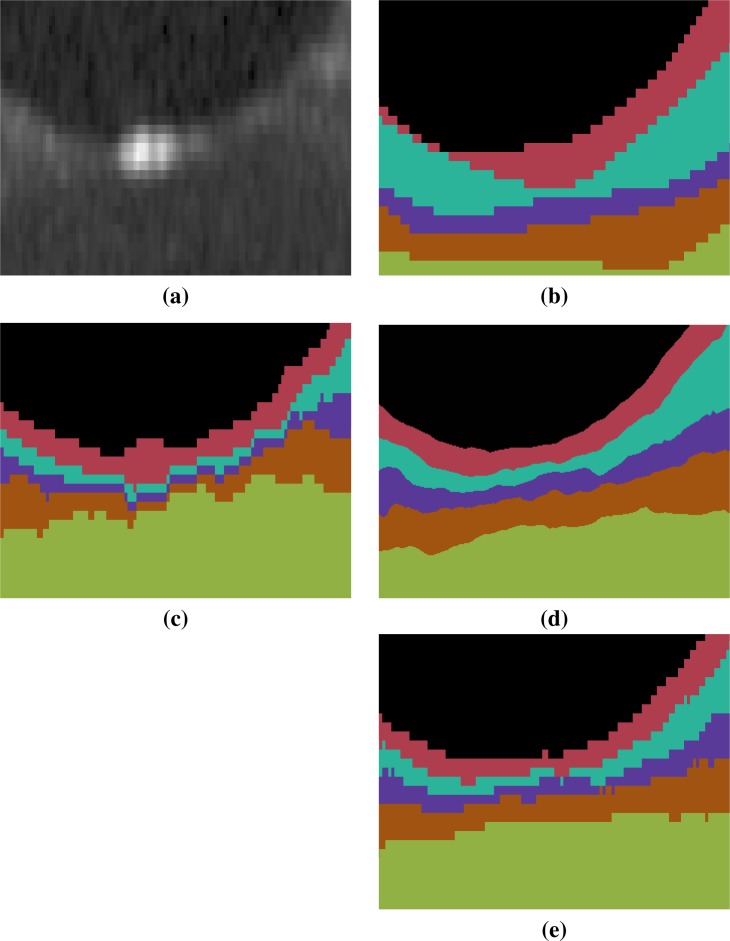

An example of the manual delineations as well as the result of our method on the same subject are shown in Fig. 3, with a magnified region about the fovea in Fig. 4. Table 2 includes the absolute boundary error for the nine boundaries we approximate; these errors are measured along the A-scans in comparison to the same manual rater. We again used a paired Wilcoxon rank sum test to compute p-values between the two methods for the absolute distance error; six of the nine boundaries reach significance (α level of 0.001).
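The absolute boundary error measured along the A-scans can be sketched as follows. The function name is hypothetical, boundary positions are assumed to be given in (possibly fractional) voxels along each A-scan, and the default axial spacing of 3.9 μm is the average value reported in Section 3.1; a per-subject spacing would be used in practice.

```python
def mean_absolute_error_um(auto_depths, manual_depths, axial_res_um=3.9):
    """Mean absolute boundary error in microns over paired A-scan positions."""
    diffs = [abs(a - m) * axial_res_um for a, m in zip(auto_depths, manual_depths)]
    return sum(diffs) / len(diffs)
```

For example, errors of 0, 1, and 1 voxels on three A-scans give a mean absolute error of 2.6 μm at this axial spacing.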

Fig. 4.

Shown is a magnified (×18) region around the fovea for each of (a) the original image, (b) the manual delineation, and automated segmentations generated by (c) RF+Graph [24] and (d) our method. The result in (d) is generated from the continuous representation of the level sets in the subject’s native space; shown in (e) is the voxelated equivalent for our method. The RF+Graph method has to keep each layer at least one voxel thick (the GCIP and INL in this case). We also observe the voxelated nature of the RF+Graph result, whereas our approach has a continuous representation due to its use of level sets, shown in (d), which can also be converted to a voxelated format (e). The same color map is used in this figure and in Fig. 3.

Table 2.

Mean absolute errors (and standard deviation) in microns for our method (MGDM) in comparison to RF+Graph [24] on the nine estimated boundaries. A paired Wilcoxon rank sum test was used to compute p-values between the two methods with strong significance (an α level of 0.001) in six of the nine boundaries.

| Boundary | Absolute error (μm): RF+Graph [24] | Absolute error (μm): MGDM | P-value |
|---|---|---|---|
| ILM | 4.318 (±1.1089) | 3.478 (±0.8084) | < 0.001 |
| RNFL-GCL | 6.192 (±2.1597) | 5.339 (±1.8408) | < 0.001 |
| IPL-INL | 6.319 (±1.1818) | 6.289 (±2.0463) | 0.141 |
| INL-OPL | 5.464 (±2.8283) | 4.467 (±0.4669) | < 0.05 |
| OPL-ONL | 4.657 (±1.1318) | 4.129 (±1.2162) | < 0.001 |
| ELM | 5.229 (±0.9822) | 4.031 (±0.9623) | < 0.001 |
| IS-OS | 4.108 (±1.2415) | 3.309 (±1.0028) | < 0.001 |
| OS-RPE | 4.944 (±1.3937) | 4.437 (±1.5522) | < 0.001 |
| BrM | 4.291 (±0.8959) | 3.739 (±2.4001) | 0.411 |
| Overall | 4.947 (±1.4558) | 4.468 (±1.9830) | < 0.001 |

4. Discussion and conclusion

The Dice coefficient and absolute boundary error, in conjunction with the comparison to RF+Graph, suggest that our method has very good performance characteristics. Among the state-of-the-art methods, Song et al. [27] report a mean unsigned boundary error of 5.14 μm (±0.99) while Dufour et al. [26] report 3.03 μm (±0.54). On the surface, our results appear to lie between these two recent methods. However, the comparison is inherently flawed, as the data used in both of these methods is of differing quality to our own and was acquired on a different scanner with different parameters. The fact that we used a larger cohort than either of these two methods may account for our larger variance. In terms of specific boundaries, again with the caveat of different data and noting that layer definitions seem to vary somewhat by group and scanner used, we compare results for the ILM and BrM. For the ILM, Song et al. report unchanged results from their earlier work [30], which had an unsigned boundary error of 2.85 μm (±0.32), and Dufour et al. report 2.28 μm (±0.48); clearly, for this boundary we have room for improvement. For the BrM, Song et al. report unchanged results from their prior work, which reported 8.47 μm (±2.29), and Dufour et al. report 2.63 μm (±0.59). Our approach is better than that of Song et al. and not as good as that of Dufour et al. However, Song et al. and Dufour et al. segment six and seven boundaries respectively, whereas our method identifies nine boundaries. There are only a few methods that are able to accurately segment nine or more boundaries [20, 21, 33]. The data used in our study is of a very high quality for OCT data, with a mean SNR of 30.6 dB, which might be part of the reason for the improved accuracy of our results. An obvious criticism of our approach is the use of the same 37 subjects for developing our regression mapping to flat space and for the validation study.
Our current study involved healthy controls and MS subjects with essentially normal appearing eyes; a future area of work will be exploring cases of more pronounced pathology, where the flat mapping may fail or the forces driving MGDM may be insufficient. From a computational standpoint, the MGDM process is expensive, and currently takes 2 hours and 9 minutes (±4 minutes) to process a full 3D OCT macular cube, lagging behind the state-of-the-art methods [24, 26], which can run in less than a minute. A GPU-based implementation of MGDM [45] would offer a 10 to 20 fold speed up, taking the run time to the five minute range.

In this paper, a fully automated algorithm for segmenting nine layer boundaries in OCT retinal images has been presented. The algorithm uses a multi-object geometric deformable model of the retinal layers in a unique computational domain, which we refer to as flat space. The forces used for each layer were built from the same principles. These could be refined or modified on a per-layer basis to help improve the results.

Acknowledgments

This work was supported by the NIH/NEI R21-EY022150 and the NIH/NINDS R01-NS082347.

References and links

- 1. Gordon-Lipkin E., Chodkowski B., Reich D. S., Smith S. A., Pulicken M., Balcer L. J., Frohman E. M., Cutter G., Calabresi P. A., “Retinal nerve fiber layer is associated with brain atrophy in multiple sclerosis,” Neurology 69, 1603–1609 (2007). doi:10.1212/01.wnl.0000295995.46586.ae

- 2. Saidha S., Syc S. B., Ibrahim M. A., Eckstein C., Warner C. V., Farrell S. K., Oakley J. D., Durbin M. K., Meyer S. A., Balcer L. J., Frohman E. M., Rosenzweig J. M., Newsome S. D., Ratchford J. N., Nguyen Q. D., Calabresi P. A., “Primary retinal pathology in multiple sclerosis as detected by optical coherence tomography,” Brain 134, 518–533 (2011). doi:10.1093/brain/awq346

- 3. Saidha S., Sotirchos E. S., Ibrahim M. A., Crainiceanu C. M., Gelfand J. M., Sepah Y. J., Ratchford J. N., Oh J., Seigo M. A., Newsome S. D., Balcer L. J., Frohman E. M., Green A. J., Nguyen Q. D., Calabresi P. A., “Microcystic macular oedema, thickness of the inner nuclear layer of the retina, and disease characteristics in multiple sclerosis: a retrospective study,” The Lancet Neurology 11, 963–972 (2012). doi:10.1016/S1474-4422(12)70213-2

- 4. Saidha S., Syc S. B., Durbin M. K., Eckstein C., Oakley J. D., Meyer S. A., Conger A., Frohman T. C., Newsome S., Ratchford J. N., Frohman E. M., Calabresi P. A., “Visual dysfunction in multiple sclerosis correlates better with optical coherence tomography derived estimates of macular ganglion cell layer thickness than peripapillary retinal nerve fiber layer thickness,” Mult. Scler. 17, 1449–1463 (2011). doi:10.1177/1352458511418630

- 5. Kerrison J. B., Flynn T., Green W. R., “Retinal pathologic changes in multiple sclerosis,” Retina 14, 445–451 (1994). doi:10.1097/00006982-199414050-00010

- 6. Green A. J., McQuaid S., Hauser S. L., Allen I. V., Lyness R., “Ocular pathology in multiple sclerosis: retinal atrophy and inflammation irrespective of disease duration,” Brain 133, 1591–1601 (2010). doi:10.1093/brain/awq080

- 7. Schuman J. S., Hee H. R., Puliafito C. A., Wong C., Pedut-Kloizman T., Lin C. P., Hertzmark J. A. I. E., Swanson E. A., Fujimoto J. G., “Quantification of nerve fiber layer thickness in normal and glaucomatous eyes using optical coherence tomography,” Arch. Ophthalmol. 113, 586–596 (1995). doi:10.1001/archopht.1995.01100050054031

- 8. Rao H. L., Zangwill L. M., Weinreb R. N., Sample P. A., Alencar L. M., Medeiros F. A., “Comparison of different spectral domain optical coherence tomography scanning areas for glaucoma diagnosis,” Ophthalmology 117, 1692–1699 (2010). doi:10.1016/j.ophtha.2010.01.031

- 9. Park S. C., De Moraes C. G. V., Teng C. C., Tello C., Liebmann J. M., Ritch R., “Enhanced depth imaging optical coherence tomography of deep optic nerve complex structures in glaucoma,” Ophthalmology 119, 3–9 (2012). doi:10.1016/j.ophtha.2011.07.012

- 10. Kanamori A., Nakamura M., Escano M. F. T., Seya R., Maeda H., Negi A., “Evaluation of the glaucomatous damage on retinal nerve fiber layer thickness measured by optical coherence tomography,” Am. J. of Ophthalmol. 135, 513–520 (2003). doi:10.1016/S0002-9394(02)02003-2

- 11. Kang S. H., Hong S. W., Im S. K., Lee S. H., Ahn M. D., “Effect of myopia on the thickness of the retinal nerve fiber layer measured by Cirrus HD optical coherence tomography,” Invest. Ophthalmol. Vis. Sci. 51, 4075–4083 (2010). doi:10.1167/iovs.09-4737

- 12. Srinivasan V. J., Sakadžić S., Gorczynska I., Ruvinskaya S., Wu W., Fujimoto J. G., Boas D. A., “Quantitative cerebral blood flow with optical coherence tomography,” Opt. Express 18, 2477–2494 (2010). doi:10.1364/OE.18.002477

- 13. Kim D. Y., Fingler J., Werner J. S., Schwartz D. M., Fraser S. E., Zawadzki R. J., “In vivo volumetric imaging of human retinal circulation with phase-variance optical coherence tomography,” Biomed. Opt. Express 2, 1504–1513 (2011). doi:10.1364/BOE.2.001504

- 14. Guo L., Duggan J., Cordeiro M. F., “Alzheimer’s disease and retinal neurodegeneration,” Current Alzheimer Research 7, 3–14 (2010). doi:10.2174/156720510790274491

- 15. Lu Y., Li Z., Zhang X., Ming B., Jia J., Wang R., Ma D., “Retinal nerve fiber layer structure abnormalities in early Alzheimer’s disease: Evidence in optical coherence tomography,” Neurosci. Lett. 480, 69–72 (2010). doi:10.1016/j.neulet.2010.06.006

- 16. Kesler A., Vakhapova V., Korczyn A. D., Naftaliev E., Neudorfer M., “Retinal thickness in patients with mild cognitive impairment and Alzheimer’s disease,” Clin. Neurol. Neurosurgery 113, 523–526 (2011). doi:10.1016/j.clineuro.2011.02.014

- 17. Alasil T., Keane P. A., Updike J. F., Dustin L., Ouyang Y., Walsh A. C., Sadda S. R., “Relationship between optical coherence tomography retinal parameters and visual acuity in diabetic macular edema,” Ophthalmology 117, 2379–2386 (2010). doi:10.1016/j.ophtha.2010.03.051

- 18. De Buc D. C., Somfal G. M., “Early detection of retinal thickness changes in diabetes using Optical Coherence Tomography,” Med. Sci. Monit. 16, 15–21 (2010).

- 19. Ghorbel I., Rossant F., Bloch I., Paques M., “Modeling a parallelism constraint in active contours. Application to the segmentation of eye vessels and retinal layers,” in Image Processing (ICIP), 2011 18th IEEE International Conference on (2011), pp. 445–448.

- 20. Ghorbel I., Rossant F., Bloch I., Tick S., Paques M., “Automated segmentation of macular layers in OCT images and quantitative evaluation of performances,” Pattern Recognition 44, 1590–1603 (2011). doi:10.1016/j.patcog.2011.01.012

- 21. Kajić V., Považay B., Hermann B., Hofer B., Marshall D., Rosin P. L., Drexler W., “Robust segmentation of intraretinal layers in the normal human fovea using a novel statistical model based on texture and shape analysis,” Opt. Express 18, 14730–14744 (2010). doi:10.1364/OE.18.014730

- 22. Koozekanani D., Boyer K., Roberts C., “Retinal thickness measurements from optical coherence tomography using a Markov boundary model,” IEEE Trans. Med. Imag. 20, 900–916 (2001). doi:10.1109/42.952728

- 23. Vermeer K. A., van der Schoot J., Lemij H. G., de Boer J. F., “Automated segmentation by pixel classification of retinal layers in ophthalmic OCT images,” Biomed. Opt. Express 2, 1743–1756 (2011). doi:10.1364/BOE.2.001743

- 24. Lang A., Carass A., Hauser M., Sotirchos E. S., Calabresi P. A., Ying H. S., Prince J. L., “Retinal layer segmentation of macular OCT images using boundary classification,” Biomed. Opt. Express 4, 1133–1152 (2013). doi:10.1364/BOE.4.001133

- 25. Antony B. J., Abràmoff M. D., Sonka M., Kwon Y. H., Garvin M. K., “Incorporation of texture-based features in optimal graph-theoretic approach with application to the 3-D segmentation of intraretinal surfaces in SD-OCT volumes,” Proc. SPIE 8314, 83141G (2012). doi:10.1117/12.911491

- 26. Dufour P. A., Ceklic L., Abdillahi H., Schroder S., Zanet S. D., Wolf-Schnurrbusch U., Kowal J., “Graph-based multi-surface segmentation of OCT data using trained hard and soft constraints,” IEEE Trans. Med. Imag. 32, 531–543 (2013). doi:10.1109/TMI.2012.2225152

- 27. Song Q., Bai J., Garvin M. K., Sonka M., Buatti J. M., Wu X., “Optimal multiple surface segmentation with shape and context priors,” IEEE Trans. Med. Imag. 32, 376–386 (2013). doi:10.1109/TMI.2012.2227120

- 28. Chiu S. J., Li X. T., Nicholas P., Toth C. A., Izatt J. A., Farsiu S., “Automatic segmentation of seven retinal layers in SDOCT images congruent with expert manual segmentation,” Opt. Express 18, 19413–19428 (2010). doi:10.1364/OE.18.019413

- 29. Garvin M. K., Abràmoff M. D., Kardon R., Russell S. R., Wu X., Sonka M., “Intraretinal layer segmentation of macular optical coherence tomography images using optimal 3-D graph search,” IEEE Trans. Med. Imag. 27, 1495–1505 (2008). doi:10.1109/TMI.2008.923966

- 30. Garvin M. K., Abràmoff M. D., Wu X., Russell S. R., Burns T. L., Sonka M., “Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images,” IEEE Trans. Med. Imag. 28, 1436–1447 (2009). doi:10.1109/TMI.2009.2016958

- 31. Mayer M. A., Hornegger J., Mardin C. Y., Tornow R. P., “Retinal nerve fiber layer segmentation on FD-OCT scans of normal subjects and glaucoma patients,” Biomed. Opt. Express 1, 1358–1383 (2010). doi:10.1364/BOE.1.001358

- 32. Mishra A., Wong A., Bizheva K., Clausi D. A., “Intra-retinal layer segmentation in optical coherence tomography images,” Opt. Express 17, 23719–23728 (2009). doi:10.1364/OE.17.023719

- 33. Yang Q., Reisman C. A., Wang Z., Fukuma Y., Hangai M., Yoshimura N., Tomidokoro A., Araie M., Raza A. S., Hood D. C., Chan K., “Automated layer segmentation of macular OCT images using dual-scale gradient information,” Opt. Express 18, 21293–21307 (2010). doi:10.1364/OE.18.021293

- 34. Chen M., Lang A., Sotirchos E., Ying H. S., Calabresi P. A., Prince J. L., Carass A., “Deformable registration of macular OCT using A-mode scan similarity,” in 10th International Symposium on Biomedical Imaging (ISBI 2013) (2013), pp. 476–479.

- 35. Novosel J., Vermeer K. A., Thepass G., Lemij H. G., van Vliet L. J., “Loosely coupled level sets for retinal layer segmentation in optical coherence tomography,” in 10th International Symposium on Biomedical Imaging (ISBI 2013) (2013), pp. 998–1001.

- 36. Lang A., Carass A., Sotirchos E., Calabresi P., Prince J. L., “Segmentation of retinal OCT images using a random forest classifier,” Proc. SPIE 8669, 86690R (2013). doi:10.1117/12.2006649

- 37. Lang A., Carass A., Calabresi P. A., Ying H. S., Prince J. L., “An adaptive grid for graph-based segmentation in macular cube OCT,” Proc. SPIE 9034, 90340A (2014).

- 38. Gibbs J. W., “Fourier’s series,” Nature 59, 200 (1898). doi:10.1038/059200b0

- 39. Bogovic J. A., Prince J. L., Bazin P.-L., “A multiple object geometric deformable model for image segmentation,” Comput. Vis. Image Und. 117, 145–157 (2013).

- 40. Breiman L., “Random forests,” Machine Learning 45, 5–32 (2001). doi:10.1023/A:1010933404324

- 41. Caselles V., Catté F., Coll T., Dibos F., “A geometric model for active contours in image processing,” Numerische Mathematik 66, 1–31 (1993). doi:10.1007/BF01385685

- 42. Xu C., Prince J. L., “Snakes, shapes, and gradient vector flow,” IEEE Trans. Imag. Proc. 7, 359–369 (1998). doi:10.1109/83.661186

- 43. Han X., Xu C., Prince J. L., “A topology preserving level set method for geometric deformable models,” IEEE Trans. Pattern Anal. Mach. Intell. 25, 755–768 (2003). doi:10.1109/TPAMI.2003.1201824

- 44. Bazin P.-L., Ellingsen L., Pham D., “Digital homeomorphisms in deformable registration,” in 20th Inf. Proc. in Med. Imaging (IPMI 2007) (2007), pp. 211–222.

- 45. Lucas B. C., Kazhdan M., Taylor R. H., “Multi-object geodesic active contours (MOGAC),” in 15th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2012) (2012), pp. 404–412.

- 46. Dice L. R., “Measures of the amount of ecologic association between species,” Ecology 26, 297–302 (1945). doi:10.2307/1932409