Abstract

Emotional connection is the main reason people engage with music, and the emotional features of music can influence processing in other domains. Williams syndrome (WS) is a neurodevelopmental genetic disorder where musicality and sociability are prominent aspects of the phenotype. This study examined oscillatory brain activity during a musical affective priming paradigm. Participants with WS and age-matched typically developing controls heard brief emotional musical excerpts or emotionally neutral sounds and then reported the emotional valence (happy/sad) of subsequently presented faces. Participants with WS demonstrated greater evoked fronto-central alpha activity to the happy vs sad musical excerpts. The size of these alpha effects correlated with parent-reported emotional reactivity to music. Although participant groups did not differ in accuracy of identifying facial emotions, reaction time data revealed a music priming effect only in persons with WS, who responded faster when the face matched the emotional valence of the preceding musical excerpt vs when the valence differed. Matching emotional valence was also associated with greater evoked gamma activity thought to reflect cross-modal integration. This effect was not present in controls. The results suggest a specific connection between music and socioemotional processing and have implications for clinical and educational approaches for WS.

Keywords: Williams syndrome, music, emotion, EEG

INTRODUCTION

Emotional connection is the main reason people engage with music, yet the mechanisms by which music conveys emotion are not fully understood (Juslin and Västfjäll, 2008). Recent research has demonstrated that music can both induce emotional changes in listeners (e.g. Salimpoor et al., 2009) and influence conceptual and emotional processing of subsequent auditory, visual and semantic information (Koelsch et al., 2004; Daltrozzo and Schön, 2009; Logeswaran and Bhattacharya, 2009; Steinbeis and Koelsch, 2011). A better understanding of the mechanisms underlying music’s ability to modulate emotions and emotional processing could allow music to be harnessed in an evidence-based fashion for therapeutic purposes in clinical populations.

One population for whom the links between music and emotion may be particularly prominent is Williams syndrome (WS), which is a neurodevelopmental disorder caused by the deletion of ∼28 genes on chromosome 7 (Ewart et al., 1993). WS is associated with a unique constellation of cognitive and behavioral strengths and weaknesses, including cognitive impairment, greater verbal than nonverbal abilities, anxiety and hypersociability (Martens et al., 2008). Individuals with WS also have heightened sensitivities to a variety of sounds, including lowered pain threshold for loud sounds, auditory aversions and auditory fascinations (Levitin et al., 2005). An affinity for music also has long been noted in people with WS (see Lense and Dykens, 2011, for a review). Compared with typically developing (TD) individuals or those with other intellectual and developmental disabilities, parents of individuals with WS report greater musical engagement in their children, including more wide-ranging, intense and longer-lasting emotional responsiveness to music (Don et al., 1999; Levitin et al., 2004).

The sensitivity to musical emotions in individuals with WS is also evident in direct testing. Children and adolescents with WS perform similarly to TD individuals in rating expressive versions of piano pieces as more emotional than mechanical versions or versions where each note has a random amount of temporal and amplitude variation (Bhatara et al., 2010). However, individuals with WS vary in proficiency of identifying specific emotional characteristics of instrumental music, performing better for happy than sad or scary emotions (Hopyan et al., 2001). In general, although individuals with WS appear proficient at distinguishing between overall positive (i.e. happy) and negative (i.e. sad) emotions across domains, they may struggle with differentiating among types of emotions within a domain (e.g. negative emotions of sadness, anger, fear, disgust; Plesa Skwerer et al., 2006). These difficulties have been alternately associated with their lower IQs (Gagliardi et al., 2003) or receptive language abilities (Plesa Skwerer et al., 2006).

Evidence of emotional connections between music and other domains has been demonstrated previously in TD individuals using affective priming paradigms (e.g. Logeswaran and Bhattacharya, 2009; Steinbeis and Koelsch, 2011). In these paradigms, a brief, affectively valenced musical excerpt was followed by an affectively congruent or incongruent target word or picture. When the musical prime and target were affectively related, reaction times (RTs) to identify the target were reduced. Within WS, sensitivity to musical emotion and listening to music during testing have been associated with improved facial emotion identification (Järvinen-Pasley et al., 2010; Ng et al., 2011).

In this study, we aimed to examine sensitivity to the emotional valence of non-target music and whether musical and socioemotional processing are particularly coupled in WS. Previous studies conducted in WS have used musical excerpts several seconds in duration (e.g. Hopyan et al., 2001), while TD individuals can reliably label music as happy vs sad within the first 250–500 ms of a musical stimulus (Peretz et al., 1998). We used electroencephalogram (EEG) oscillatory activity in response to brief musical excerpts to measure the immediacy of musical emotion processing in WS. EEG oscillatory activity offers millisecond-level temporal resolution and provides information about how brain activity at different frequencies evolves over short time periods, thereby shedding light on neural processes apart from and complementary to behavioral responses. It has been proposed that examining oscillatory activity in specific frequencies may better represent activity associated with specific sensory and cognitive processes than traditional event-related potentials (ERPs), which may reflect superposition of multiple EEG processes (Senkowski et al., 2005). Because neural oscillations in the alpha (8–12 Hz) and gamma (25 Hz and above) band frequencies have been previously linked to perceptual processes that were of particular interest, the study was designed with a time-frequency method of EEG analysis in mind.

We were specifically interested in whether brief auditory excerpts would generate changes in evoked alpha-band activity that reflects sensory, attentional and affective processing (e.g. Wexler et al., 1992; Schürmann et al., 1998; Kolev et al., 2001). Increases in alpha-band activity within 0–250 ms have been reported in response to auditory stimulus processing (Kolev et al., 2001) and are sensitive to spectral and temporal characteristics of sound stimuli in TD children and adults (Shahin et al., 2010). For example, earlier phase locking in alpha band occurs in response to sounds with faster vs slower temporal onsets, whereas greater phase locking is seen for spectrally complex musical tones vs pure tones (Shahin et al., 2010). Moreover, in response to longer musical excerpts, alpha-band activity has been associated with the valence and arousal of the music. Greater alpha power (Baumgartner et al., 2006) and increased coherence (Flores-Gutiérrez et al., 2009) are reported in response to positively valenced music or audiovisual stimuli, while suppression of right frontal alpha power occurs during high arousal music (Mikutta et al., 2012). We hypothesized that both the WS and TD groups would demonstrate greater evoked alpha-band activity to the happy and sad musical excerpts vs neutral environmental sounds, reflecting discrimination of emotional music from non-emotional noise. We also hypothesized that increased sensitivity to musical affect in participants with WS would be evident in greater evoked alpha-band activity differences to the happy vs sad valenced musical primes than would be seen in the TD group. Furthermore, we predicted that greater alpha-wave activity would correlate with parent-rated emotional reactivity to music (Hypothesis 1: Musical Valence).

Performance on the affective priming task also served to measure the potential coupling of musical and socioemotional information in WS. We examined whether the musical primes would influence subsequent processing of emotionally valenced faces. We focused on happy and sad emotions because prior research indicates that individuals with WS are similar to TD individuals in their proficiency in recognizing these basic emotions (Plesa Skwerer et al., 2006). We hypothesized that individuals with WS and TD participants would be more accurate and faster at identifying facial emotions that were congruent with the valence of the preceding musical prime, but that these differences would be more pronounced in the WS vs TD group (Hypothesis 2: Affective Priming—Behavior).

Furthermore, in light of the growing literature indicating the role of gamma-band activity in cross-modal binding (e.g. Senkowski et al., 2008; Willems et al., 2008), we also examined evoked gamma-band activity to the emotional facial targets as an index of emotional music–face connections. In TD individuals, increased gamma-band activity is seen when individuals combine information across sensory modalities such as with multisensory stimuli (e.g. simultaneously presented pure tone and visual grid; Senkowski et al., 2005) or successively presented congruent stimuli (e.g. image of a sheep followed by ‘baa’ sound; Schneider et al., 2008). This gamma-band activity is thought to reflect synchronization of neural activity to promote coherence across modalities so that individuals can better navigate their environment (e.g. Schneider et al., 2008; Senkowski et al., 2008). On the basis of prior evidence of enhanced emotional perception in response to multimodal stimuli that combine emotionally congruent visual and auditory information compared with that of the unimodal stimuli (Baumgartner et al., 2006), we hypothesized greater gamma-band activity in response to congruent musical prime–face target pairs. We predicted that differences in gamma-band activity would be greater in the WS than in the TD group, due to the greater sensitivity to musical emotion and increased interest in faces, and the links between musical and social emotion reported in WS (Hypothesis 3: Affective Priming—EEG).

METHODS

Participants

Participants included 13 young adults with WS and 13 age- and sex-matched TD control individuals. Participants with WS were recruited from a residential summer music camp, while TD participants were recruited from the community. The groups did not differ in age, gender or handedness as determined by the Edinburgh Handedness Inventory (Oldfield, 1971) (Table 1). Intellectual functioning was assessed using the Kaufman Brief Intelligence Test, 2nd edition (KBIT-2; Kaufman and Kaufman, 2004). As expected, participants with WS had lower KBIT-2 IQ scores than TD participants, and they also showed the expected verbal-greater-than-non-verbal-IQ discrepancy [t(12) = 2.287, P = 0.041].

Table 1.

Demographic information for TD and WS groups

| | TD (n = 13) | WS (n = 13) | Difference |
|---|---|---|---|
| Age | 27.7 ± 6.0 | 27.1 ± 7.1 | t(24) = 0.261, P = 0.796 |
| Gender (% male) | 61.5 | 61.5 | χ² = 0, P = 1.0 |
| Handedness (LQ) | 0.6 ± 0.3 | 0.6 ± 0.7 | t(24) = 0.002, P = 0.983 |
| Total IQ* | 115.2 ± 12.2 | 67.5 ± 14.1 | t(23) = 9.463, P < 0.001 |
| Verbal IQ* | 113.7 ± 13.2 | 75.7 ± 13.7 | t(23) = 7.032, P < 0.001 |
| Nonverbal IQ* | 115.2 ± 12.2 | 66.3 ± 15.7 | t(24) = 8.842, P < 0.001 |
| MIS total | 46.2 ± 16.1 | 53.6 ± 10.6 | t(24) = −1.398, P = 0.175 |
| MIS interest | 16.6 ± 6.6 | 20.5 ± 4.3 | t(24) = −1.801, P = 0.084 |
| MIS skills | 12.7 ± 5.8 | 15.5 ± 3.7 | t(24) = −1.489, P = 0.150 |
| MIS emotion | 16.8 ± 5.7 | 17.5 ± 3.6 | t(24) = −0.372, P = 0.714 |

IQ scores from KBIT-2.

LQ, laterality quotient from Edinburgh Handedness Inventory; MIS, Music Interest Scale.

*P < 0.001.

TD participants and parents of participants with WS completed the Music Interest Scale (MIS; Blomberg et al., 1996). The MIS consists of 14 items rated on a 6-point rating scale, where a 0 rating corresponds to ‘Does not describe’ and a 5 rating corresponds to ‘Describes perfectly’. The MIS comprises three subscales measuring Musical Interest (e.g. ‘My child is always listening to music’), Skills (e.g. ‘My child has a good sense of rhythm’) and Emotional Reaction to Music (e.g. ‘Music makes my child happy’). Cronbach’s α values for the total score and the three subscales are 0.92 (Total), 0.76 (Interest), 0.90 (Skills) and 0.86 (Emotional Reactions) (Blomberg et al., 1996). TD and WS participants did not differ in musicality on any of the MIS subscales or total score (Table 1).

All participants were reported to have normal hearing and normal or corrected-to-normal vision. Another seven individuals with WS and four controls completed the EEG paradigm but were excluded from analyses because of unusable EEG data (due to blinks or other motion artifacts). The study was approved by the institutional review board of the university. TD controls and parents or guardians of WS participants provided written consent to participate in the study. Participants with WS provided written assent.

Stimuli

The 48 auditory prime stimuli consisted of emotionally valenced music (32 excerpts, 16 happy and 16 sad) and neutral environmental sounds (16 excerpts). The emotional instrumental musical excerpts were created from classical music recordings based on the stimuli used in Peretz et al. (1998). Previous research indicates that the emotional valence of these musical excerpts can be identified by 250–500 ms after music onset (Peretz et al., 1998). Sixteen complex environmental sound excerpts (e.g. typewriter) that were rated as neutral on valence and arousal were taken from the International Affective Digitized Sounds (Bradley and Lang, 2007). All auditory stimuli were sampled at 44.1 kHz and edited in Audacity to be 500 ms in length with 50 ms envelope rise and fall time. Stimuli were presented at ∼70 dB SPL(A) from a speaker positioned ∼60 cm above the participant’s head.

The 32 facial target stimuli consisted of photographs of the happy and sad facial expressions of 16 individuals (both male and female), which were selected from the NimStim Set of Facial Expressions (http://www.macbrain.org/resources.htm; Tottenham et al., 2009). Only individuals with >80% validity ratings for both the happy and sad versions of their face were included. Faces were framed by a white rectangle, 18.5 × 25.5 cm, presented on a flat screen computer monitor with a black background and viewed from a distance of 1 m.

Procedure

To make sure that all participants could accurately identify the emotion of the faces, participants first completed a practice block with only facial stimuli. A face appeared on a computer screen, and participants used a response box to record whether the face was happy or sad by pressing the appropriate response button (e.g. happy face on right side and sad face on left side or vice versa; response side was counterbalanced among participants). Participants needed to achieve at least 75% accuracy in the practice block, with a minimum of four trials. All 13 controls and 11 participants with WS reached the accuracy criterion within 4 trials, while 2 WS participants required 8 trials.

The cross-modal affective priming task included 96 trials evenly divided among three conditions: (i) match (prime and target had the same emotion, both happy or both sad); (ii) mismatch (prime and target had different emotions) and (iii) neutral (neutral sound prime followed by emotionally valenced face target). Thus, each auditory prime was used twice, once followed by the happy version and once followed by the sad version of a given face target. Each face was used three times, once each following a happy, a sad and a neutral auditory prime. All participants had the same prime–target pairings, but presentation order of pairs was randomized across participants. Participants were alerted that a trial was starting by presentation of a fixation cross for 500 ms. Next, participants heard the 500 ms auditory prime. After a 250 ms delay [i.e. stimulus onset asynchrony (SOA) = 750 ms], participants saw the picture of the face, which remained on the screen until they responded via a button press on a response box (Figure 1). Participants were informed that they would hear different sounds but were instructed to focus on the face pictures and to respond whether the face was happy or sad. Stimulus presentation and response collection (accuracy, RT to face) were carried out via E-prime 2.0.
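The trial counts described above can be checked with a short sketch. The pairing rule used here (prime k of each type always goes with face identity k) and all stimulus names are hypothetical; the source states only that pairings were fixed across participants and that order was randomized.

```python
import random
from collections import Counter
from itertools import product

N_FACES = 16                                # face identities, each with a happy and a sad photo
PRIME_TYPES = ("happy", "sad", "neutral")   # 16 primes of each type, 48 in total
FACE_EMOTIONS = ("happy", "sad")

PRIME_MS, GAP_MS = 500, 250
SOA_MS = PRIME_MS + GAP_MS                  # prime onset to face onset


def condition(prime_type, face_emotion):
    """Label a prime-target pair as match, mismatch or neutral."""
    if prime_type == "neutral":
        return "neutral"
    return "match" if prime_type == face_emotion else "mismatch"


# Hypothetical pairing rule: prime k of each type is paired with face identity k.
trials = [
    {"prime": f"{ptype}_{k:02d}",
     "face": f"face{k:02d}_{emotion}",
     "condition": condition(ptype, emotion)}
    for k, ptype, emotion in product(range(N_FACES), PRIME_TYPES, FACE_EMOTIONS)
]

counts = Counter(t["condition"] for t in trials)
prime_uses = Counter(t["prime"] for t in trials)
face_uses = Counter(t["face"] for t in trials)

# Same pairings for everyone; only the order differs per participant.
participant_order = trials[:]
random.Random(7).shuffle(participant_order)   # the seed stands in for a participant ID
```

With this layout each of the 48 primes appears twice and each of the 32 face photographs appears three times, which gives 32 trials per condition.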

Fig. 1.

Cross-modal affective priming procedure.

Participants were tested in a quiet room in the psychophysiology laboratory at the university. A research assistant was present in the testing room to answer questions and make sure the participants attended to the task.

EEG collection and preprocessing

EEG was recorded using a high-density array of 128 Ag/AgCl electrodes embedded in soft sponges (Geodesic Sensor Net, EGI, Inc., Eugene, OR, USA) connected to a high impedance amplifier. Data were collected at 250 Hz with a 0.1–100 Hz filter and all electrodes referenced to vertex. Impedance was below 40 kΩ as measured before and after the EEG session. The Geodesic Sensor Net utilizes an ‘isolated common’ as a ground sensor such that it is connected to the common of the isolated power supply of the amplifier so that participants are not at risk for electric shock. Following the session, data were bandpass filtered at 0.5–55 Hz and re-referenced to an average reference. EEG was epoched separately for the three types of auditory primes (happy music, sad music and neutral sounds) and the three face target conditions [match (to prime), mismatch (from prime) and neutral (following neutral sound prime)], from 800 ms before stimulus onset to 1200 ms post. The long epochs were required for wavelet analyses. Importantly, statistical analysis was performed only on the segment length of interest and did not overlap with other segments/conditions (see section Data Analysis). Trials with ocular artifacts or other movement were excluded from analysis using an automated NetStation screening algorithm. Specifically, for the eye channels, voltage in excess of 140 µV was interpreted as an eye blink and voltage above 100 µV was considered to reflect eye movements. Any channel with voltage range exceeding 200 µV was considered bad, and its data were reconstructed using spherical spline interpolation procedures. Following automated artifact detection, the data were manually reviewed. If more than 10 electrodes within a trial were deemed bad, the entire trial was discarded. There was no difference in the number of trials kept per group (WS: 22.6 ± 5.2 trials; TD: 21.6 ± 5.0 trials, P = NS).
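The bad-channel and trial-rejection rules can be illustrated with a minimal sketch. It is not the NetStation algorithm: it covers only the 200 µV range criterion and the 10-channel limit, leaves out the eye-channel thresholds, the interpolation step and the manual review, and uses hypothetical function names and toy data.

```python
RANGE_LIMIT_UV = 200.0   # a channel whose voltage range exceeds this is treated as bad
MAX_BAD_CHANNELS = 10    # more bad channels than this discards the whole trial


def bad_channels(trial):
    """Return indices of channels whose voltage range exceeds the limit.

    `trial` is a list of channels, each a list of voltages in microvolts.
    """
    return [i for i, ch in enumerate(trial) if max(ch) - min(ch) > RANGE_LIMIT_UV]


def keep_trial(trial):
    """Keep a trial when at most MAX_BAD_CHANNELS channels are bad."""
    return len(bad_channels(trial)) <= MAX_BAD_CHANNELS


def make_trial(n_noisy, n_channels=128):
    """Toy trial: the first `n_noisy` channels have a 300 µV range, the rest 20 µV."""
    quiet = [0.0, 10.0, -10.0]
    noisy = [0.0, 150.0, -150.0]
    return [list(noisy) if i < n_noisy else list(quiet) for i in range(n_channels)]


trial_few_bad = make_trial(3)     # 3 bad channels: kept (channels would be interpolated)
trial_many_bad = make_trial(11)   # 11 bad channels: discarded
```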

Data analysis

Behavioral data

Group (2: WS vs TD) by condition (3: Match vs Mismatch vs Neutral) repeated measures analysis of variances (ANOVAs) were conducted on the accuracy and RT data in response to the faces. Behavioral analyses were conducted in SPSS 18.0.

Time-frequency analyses

Wavelet-based time-frequency decomposition was conducted using the open source Fieldtrip Toolbox (http://www.ru.nl/neuroimaging/fieldtrip; Oostenveld et al., 2011). Evoked (phase-locked) time-frequency representations (TFRs) were computed per condition using the average waveform (ERP) for each participant. The average waveform was convolved with a Morlet wavelet with a width of six cycles, resulting in a frequency resolution with an s.d. of f/6 and a temporal resolution with an s.d. of 1/(2π·f/6). The convolution was completed from 8 to 12 Hz for alpha power and 26 to 45 Hz for gamma power, with a frequency step of 1 Hz and a time step of 4 ms, between −400 and 800 ms relative to stimulus onset (see Herrmann et al., 2005 for details on EEG wavelet analysis).
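As an illustration of the wavelet step, the following pure-Python sketch builds a six-cycle complex Morlet wavelet and convolves it with a signal sampled at 250 Hz. It is not the Fieldtrip implementation. It assumes the common convention in which the spectral s.d. is f/6 and the temporal s.d. is 1/(2π × spectral s.d.); the truncation at ±3 temporal s.d. and the unit-energy normalization are also assumptions.

```python
import cmath
import math

FS = 250.0      # sampling rate in Hz (one sample every 4 ms)
N_CYCLES = 6    # wavelet width in cycles


def morlet(freq, fs=FS, n_cycles=N_CYCLES):
    """Complex Morlet wavelet at `freq` Hz, truncated at +/- 3 temporal s.d."""
    sigma_f = freq / n_cycles                    # spectral s.d. in Hz
    sigma_t = 1.0 / (2.0 * math.pi * sigma_f)    # temporal s.d. in s (assumed convention)
    half = int(round(3.0 * sigma_t * fs))
    taps = []
    for k in range(-half, half + 1):
        t = k / fs
        gauss = math.exp(-t * t / (2.0 * sigma_t ** 2))
        taps.append(gauss * cmath.exp(2j * math.pi * freq * t))
    norm = math.sqrt(sum(abs(w) ** 2 for w in taps))   # unit-energy normalization
    return [w / norm for w in taps]


def wavelet_power(signal, freq):
    """Power over time: squared magnitude of the signal convolved with the wavelet.

    Only samples where the wavelet fits entirely inside the signal are returned.
    """
    w = morlet(freq)
    half = len(w) // 2
    power = []
    for n in range(half, len(signal) - half):
        acc = sum(signal[n - k] * w[k + half] for k in range(-half, half + 1))
        power.append(abs(acc) ** 2)
    return power


# Toy "average waveform": 2 s of a 10 Hz sinusoid.
signal = [math.sin(2.0 * math.pi * 10.0 * n / FS) for n in range(500)]
p_alpha = wavelet_power(signal, 10.0)
p_gamma = wavelet_power(signal, 30.0)
alpha_power = sum(p_alpha) / len(p_alpha)
gamma_power = sum(p_gamma) / len(p_gamma)
```

Applied to the 10 Hz test signal, the 10 Hz wavelet returns far more power than the 30 Hz wavelet, which is the frequency selectivity the decomposition relies on. The wide temporal support of the low-frequency wavelet (about ±0.29 s at 10 Hz under these assumptions) is also why long epochs are needed around the window of interest.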

TFRs were normalized to control for differences in absolute power between individuals. For the auditory prime analyses, the spectra from −100 to 650 ms were averaged across the three auditory prime types (happy music, sad music and neutral sound) for each participant, which yielded a baseline power spectrum at each channel. This latency window was chosen to ensure that signal changes were not due to the following target. For the facial target analyses, data were averaged across the three conditions (match, mismatch and neutral) for each participant. For each condition and participant, the relative percent change in power spectra from the respective baseline (prime or target) was then calculated, yielding normalized power across conditions.
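The normalization can be sketched as follows for a single channel and frequency. The sketch rests on one reading of the procedure, namely that the baseline is the mean power over all conditions and all time points in the window; the function name and the toy values are hypothetical.

```python
from statistics import fmean


def percent_change(power_by_condition):
    """Express each condition's power as percent change from a shared baseline.

    `power_by_condition` maps a condition name to a list of power values over
    the analysis window, for one channel and one frequency. The baseline is the
    mean over all conditions and time points (an assumed reading of the method).
    """
    baseline = fmean(p for values in power_by_condition.values() for p in values)
    return {
        cond: [100.0 * (p - baseline) / baseline for p in values]
        for cond, values in power_by_condition.items()
    }


# Toy values in arbitrary power units.
raw = {"happy": [6.0, 6.0], "sad": [4.0, 4.0], "neutral": [2.0, 2.0]}
normalized = percent_change(raw)
```

Because every condition is divided by the same participant-specific baseline, differences between conditions are preserved while overall differences in absolute power between participants are removed.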

Cluster randomization tests

For both the alpha and gamma band activity analyses, cluster randomization procedures with planned comparisons were used to test differences in normalized power between conditions (Maris and Oostenveld, 2007). Clustering identified power values for each channel, at each frequency and time point, that showed similar effects and tested the significance of the cluster in a given condition vs another condition, compared with clusters computed from 2500 random permutations of values drawn from both conditions via the Monte Carlo method. P < 0.05 was considered significant.

Because only paired comparisons are possible with the clustering tests, analyses were conducted separately for the WS and TD groups using dependent t-tests. For the auditory primes, we computed comparisons in alpha band activity between each of the auditory primes for the WS and TD groups. For the facial targets, we first conducted comparisons between the match vs mismatch conditions for each group. In the event of a significant difference between these conditions, we then separately compared the match and mismatch conditions vs the neutral target condition (i.e. affective face following neutral sound prime).
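The logic of a cluster-based permutation test for paired data can be sketched in a simplified form. This is not the Fieldtrip procedure: it clusters over time points only (no channels or frequencies), uses the sum of t values as the cluster statistic, and hardcodes the two-tailed critical t for 12 degrees of freedom. All names and the simulated data are hypothetical.

```python
import math
import random

T_CRIT = 2.179   # two-tailed critical t, df = 12, alpha = 0.05 (13 participants)


def paired_t(diffs):
    """Dependent-samples t statistic from per-participant differences."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return 0.0 if var == 0 else mean / math.sqrt(var / n)


def max_cluster_mass(diff_rows):
    """Largest |sum of t| over runs of adjacent supra-threshold, same-sign points."""
    best = run = 0.0
    for j in range(len(diff_rows[0])):
        t = paired_t([row[j] for row in diff_rows])
        if abs(t) > T_CRIT and (run == 0.0 or (t > 0) == (run > 0)):
            run += t                                  # extend the current cluster
        else:
            run = t if abs(t) > T_CRIT else 0.0       # start a new cluster or reset
        best = max(best, abs(run))
    return best


def cluster_permutation_p(cond_a, cond_b, n_perm=2500, seed=0):
    """Monte Carlo P value for the largest cluster of a paired comparison."""
    rng = random.Random(seed)
    diffs = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(cond_a, cond_b)]
    observed = max_cluster_mass(diffs)
    exceed = 0
    for _ in range(n_perm):
        # Swapping condition labels within a participant flips the sign of the difference.
        flipped = [row if rng.random() < 0.5 else [-d for d in row] for row in diffs]
        if max_cluster_mass(flipped) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


# Simulated data: 13 participants, 20 time points, an effect at points 5-11.
sim = random.Random(1)
cond_b = [[sim.gauss(0.0, 0.3) for _ in range(20)] for _ in range(13)]
cond_a = [[sim.gauss(0.0, 0.3) + (1.0 if 5 <= j <= 11 else 0.0) for j in range(20)]
          for _ in range(13)]
observed_mass, p_value = cluster_permutation_p(cond_a, cond_b)
```

Comparing the observed cluster against the largest cluster found in each permutation is what controls for the many time points (and, in the full analysis, channels and frequencies) tested at once.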

Cluster sum calculations

To relate EEG results to behavioral measures, we summed EEG power values over significant clusters to obtain a single value per participant, which could then be correlated with behavioral scores. Specifically, when there were significant differences in EEG power between a pair of conditions, cluster sums were computed by summing the EEG power values at each electrode and time point (averaged over the frequency band, as with the clustering analysis itself) for each condition. Correlations were then tested between the alpha cluster sum differences (i.e. differences in total cluster alpha power between pairs of prime conditions) and participants’ scores on the MIS Emotional Reaction to Music subscale (Spearman correlations were used because of the ordinal nature of the MIS). Correlations were also tested between the gamma cluster sum differences (i.e. differences in total cluster gamma power between the match and mismatch conditions) and participants’ difference in RT between the same conditions.
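A minimal sketch of the cluster-sum and rank-correlation steps is given below. The data layout (power indexed by electrode and then time point, a cluster as a list of electrode and time index pairs) is an assumption made for illustration, and the function names are hypothetical.

```python
import math


def cluster_sum(power, cluster):
    """Sum band-averaged power over the (electrode, time) pairs in a cluster."""
    return sum(power[e][t] for e, t in cluster)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy example: one participant's power for two conditions over a 2 x 2 grid.
cluster = [(0, 1), (1, 0)]
power_happy = [[1.0, 2.0], [3.0, 4.0]]
power_sad = [[0.5, 1.0], [1.5, 2.0]]
difference = cluster_sum(power_happy, cluster) - cluster_sum(power_sad, cluster)
```

One such difference per participant would then be passed to `spearman` together with the behavioral score, for example `spearman(alpha_differences, mis_emotion_scores)` with both lists ordered by participant.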

RESULTS

Behavioral results

A Group (TD, WS) × Condition (Match, Mismatch, Neutral) ANOVA for accuracy of facial target emotion identification revealed no effect of Group [F(1,24) = 2.65, P = 0.12, ηp2 = 0.10] or Condition [F(2,23) = 0.54, P = 0.59, ηp2 = 0.05], and no Group × Condition interaction [F(2,23) = 1.91, P = 0.17, ηp2 = 0.14]. Average accuracy across conditions was 92.1% ± 1.6% in the WS group and 96.3% ± 1.2% in the TD group. Thus, the WS and TD groups did not differ in accuracy at identifying the target facial emotion, and accuracy was unaffected by the preceding auditory prime.

In contrast, a Group (TD, WS) × Condition (Match, Mismatch, Neutral) ANOVA for RT to facial target emotion identification revealed a main effect of Group [F(1,24) = 16.27, P < 0.001, ηp2 = 0.40], reflecting overall faster RT in TD (mean ± s.e.: 593.6 ± 1.5 ms) than WS (751.6 ± 5.8 ms) participants. There was no main effect of Condition [F(2,23) = 1.52, P = 0.24, ηp2 = 0.12], but there was a significant Group × Condition interaction [F(2,23) = 6.09, P = 0.008, ηp2 = 0.35]. Specifically, although RT across conditions did not differ in controls, individuals with WS were significantly faster in the match than mismatch condition [t(12) = −2.98, P = 0.012] (Figure 2).
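The reported partial eta squared values can be recovered from the F statistics and their degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies it to the RT analysis; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom from the RT ANOVA.
eta_group = partial_eta_squared(16.27, 1, 24)
eta_condition = partial_eta_squared(1.52, 2, 23)
eta_interaction = partial_eta_squared(6.09, 2, 23)
```

Rounded to two decimals these give 0.40, 0.12 and 0.35, in agreement with the effect sizes reported for Group, Condition and the Group × Condition interaction.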

Fig. 2.

Reaction time to facial targets in WS and TD groups. Although TD individuals did not differ in their reaction time across conditions, individuals with WS responded significantly faster when the emotion of the musical prime and face target matched than when they mismatched [t(12) = −2.975, P = 0.012]. *P < 0.05; **P < 0.01.

EEG results

Auditory primes

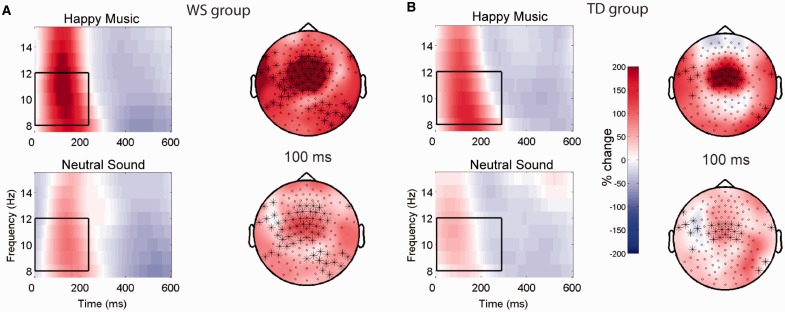

Time-frequency analysis of the EEG recorded during the auditory primes revealed differences in evoked alpha-band activity (8–12 Hz) to the happy music vs neutral sound in both the WS and TD groups. In both groups, a large cluster of frontal-central electrodes showed greater early evoked alpha power during the happy music vs neutral sound (WS: 0–236 ms, P = 0.004; TD: 4–288 ms, P = 0.02; Figure 3). [The extremely early latency of these alpha power differences could be attributed in part to the size of the wavelet (six cycles), which could have resulted in some temporal ‘smearing’. The relatively large wavelet size was chosen to allow for greater frequency resolution (Herrmann et al., 2005), at the expense of less sensitive temporal resolution.] Neither group demonstrated evoked alpha power differences between the sad music and neutral sound.

Fig. 3.

Differences in evoked alpha (8–12 Hz) power in response to happy (top) vs neutral (bottom) auditory primes in (A) WS and (B) TD groups. Electrodes belonging to the significant clusters at the time point indicated in each topographic plot (right) are represented with asterisks, and overlay activity for the data used in the contrast. The scale is the percent change from baseline (i.e. normalized power). Time-frequency representations (left) were generated by averaging together all of the electrodes belonging to the significant cluster; the same power scale applies to both topographic plots and TFRs. In both WS and TD groups, a large cluster of frontal-central electrodes showed greater early evoked alpha power during the happy music vs neutral sound (WS: 0–236 ms, P = 0.004; TD: 4–288 ms, P = 0.02).

In the WS group only, a cluster of frontal-central electrodes showed greater evoked alpha power during the happy vs sad musical primes (P = 0.03) from 0 to 452 ms (Figure 4). Furthermore, the difference in alpha power to the happy vs sad musical primes in WS was positively associated with their emotional reactions to music as rated by the MIS (ρ = 0.615, P = 0.025), whereas their alpha power difference to the happy music vs neutral sound was not (ρ = 0.320, P = 0.286). The stronger correlation between the emotional reactions to music subscale and the happy vs sad musical prime alpha power difference suggests that the musical primes convey greater emotional information than the neutral sound and supports the use of alpha power to index sensitivity to different musical emotions. [To better understand neural responses to the emotional music vs neutral sounds, in a related ongoing research project, complementary analyses are being conducted examining induced alpha power. Induced analyses used the same parameters as the evoked analyses described earlier, except that wavelets were applied to single-trial data before averaging. Thus, induced analyses capture activity that is not phase locked across trials and whose exact phase may be jittered from trial to trial with respect to the stimulus onset. Recent studies have revealed that induced alpha activity to non-target stimuli (such as the auditory primes in this study) may reflect sensory perception and judgment of the stimuli (Peng et al., 2012). Induced analyses complemented the findings of the evoked analyses in only the WS group, with greater induced alpha power to the happy vs neutral primes (420–600 ms, P = 0.026) and happy vs sad primes (0–396 ms, P = 0.044). In addition, the induced analyses revealed greater induced alpha power to the neutral than the sad stimuli in the WS group (192–420 ms, P = 0.024), whereas no alpha power differences between these primes were seen in the TD group.]

Fig. 4.

Differences in evoked alpha (8–12 Hz) power in response to happy (top) vs sad (bottom) auditory primes in (A) WS and (B) TD groups. In the WS group only, there was a significant cluster of frontal-central electrodes that showed greater evoked alpha power during the happy vs sad musical primes (P = 0.03) from 0 to 452 ms. There were no significant clusters in the TD group. See legend of Figure 3 for explanation of plotting.

Facial targets

Time-frequency analysis of the EEG data to the faces in the match vs mismatch condition indicated differences in evoked gamma power (26–45 Hz) in the WS but not the TD group. As depicted in Figure 5, in the WS group only, a cluster of electrodes encompassed two consecutive bursts of greater evoked gamma power to the face targets that matched vs mismatched the valence of the auditory primes, with a frontal-central distribution from 144 to 516 ms (P = 0.001). Differential gamma activity in the match vs mismatch conditions was not associated with the RT differences in these conditions (ρ = 0.049, P = NS), suggesting that the gamma activity difference was not due to the difference in response button press time in the two conditions.

Fig. 5.

Differences in evoked gamma (26–45 Hz) power to the facial targets in the match (top) vs mismatch (bottom) conditions in the (A) WS and (B) TD groups. In the WS group only, there were two consecutive bursts of greater evoked gamma power to the face targets that matched the valence of the auditory primes than to those that mismatched the valence of the auditory primes, with a frontal-central distribution from 144 to 516 ms (P = 0.001). There were no significant clusters in the TD group. See legend of Figure 3 for explanation of plotting.

Because the WS group showed the hypothesized differential evoked gamma activity to the match and mismatch conditions, we further explored differences between match and mismatch facial targets with facial targets that followed the neutral environmental sounds. Again, in the WS group only, there was significantly greater evoked gamma activity to target faces that matched the valence of the musical primes than to those that followed neutral sounds, with a similar frontal-central distribution, from 220 to 372 ms (P = 0.01). There were no gamma activity differences between faces that mismatched the valence of the musical prime vs faces that followed neutral sounds.

DISCUSSION

This study had two aims to further our understanding of musicality and socioemotional processing in WS: (i) examine affective processing of extremely short excerpts of music using neural responses and (ii) test the potential of short emotional musical excerpts to modulate the processing of subsequent socioemotional stimuli. Although both TD and WS participants responded differently to happy music than neutral sounds as indexed by evoked alpha power differences, only the participants with WS demonstrated differentiation of happy vs sad music. Furthermore, only in WS participants did the brief emotional music excerpts influence processing of subsequent faces, with greater gamma power observed when the emotion of the face matched that of the preceding music piece.

Evoked alpha power reflects primary sensory processing (Schürmann et al., 1998) and attentional processes, as greater evoked alpha activity was observed to auditory targets vs distractors (Kolev et al., 2001). Our findings reveal that even 500 ms auditory musical excerpts may attract greater attention than emotionally neutral sounds as reflected by alpha power changes, as brain responses of both TD and WS participants differentiated happy music from non-musical complex environmental sounds. The absence of similar discrimination effects for the sad music vs neutral sounds could be due to differences in psychoacoustic characteristics of the music pieces in the two conditions, such as a lower count of melodic elements within the 500 ms stimulus for the sad excerpts (Peretz et al., 1998), resulting in reduced attentional capture or greater similarity with the neutral sounds, which did not contain melodic information. However, the latter explanation is not supported by additional follow-up analyses examining induced rather than evoked alpha power revealing lower induced alpha power to the sad vs neutral primes in the WS (but not TD) group, suggesting that the sad music and neutral sounds contained different information. The discrimination between the sad and neutral primes in the WS group may have been captured by the induced but not the evoked analyses because the induced analyses capture activity that is not tightly phase locked to the stimulus. Future studies can examine the complementary roles of evoked vs induced activity for understanding emotional music processing in WS.

Happy–sad music discrimination was observed only in the WS group, who showed greater alpha power to the former. Differences in alpha power to the happy vs sad music were positively associated with parent-reported emotional reactivity to music. This finding is consistent with prior reports of heightened auditory sensitivities (Levitin et al., 2005) and increased activation of emotion processing brain regions in response to longer musical excerpts in WS (Levitin et al., 2003; Thornton-Wells et al., 2010). We may not have seen happy–sad differences in the TD group in this study due to the short duration of the excerpts because previous studies with longer musical excerpts have reported greater alpha coherence in response to happy vs unpleasant music (Flores-Gutiérrez et al., 2009) and greater alpha power to happy vs fearful audiovisual stimuli (Baumgartner et al., 2006) in TD individuals.

We also found behavioral and neural evidence of music’s ability to modulate the processing of subsequent emotional faces. However, this finding was only present in participants with WS, supporting the hypothesized enhanced relationship between music and socioemotional processing in WS. The WS group demonstrated an affective priming effect both in faster RT and in greater gamma band activity to emotionally congruous vs incongruous music–face pairings. Evoked gamma activity is thought to reflect cross-modal or multisensory integration, with greater gamma power elicited by related than unrelated stimuli (e.g. Senkowski et al., 2005; Yuval-Greenberg and Deouell, 2007; Schneider et al., 2008; Willems et al., 2008; for a review, see Senkowski et al., 2008).
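As an illustration of how a behavioral priming effect of this kind is typically quantified, the sketch below takes the difference between mean RT on incongruent and congruent music–face trials. The trial tuple layout, the restriction to correct responses and the exclusion of neutral primes are assumptions made for the example, not details taken from this study's analysis, and the function name is hypothetical.

```python
from statistics import mean


def priming_effect_ms(trials):
    """Return mean incongruent RT minus mean congruent RT, in ms.

    trials : iterable of (prime_valence, face_valence, rt_ms, correct)
             tuples; this layout is an assumption for illustration.
    """
    emotional = ("happy", "sad")
    congruent, incongruent = [], []
    for prime, face, rt_ms, correct in trials:
        # Assumed choices: drop errors and neutral-prime trials.
        if not correct or prime not in emotional:
            continue
        (congruent if prime == face else incongruent).append(rt_ms)
    return mean(incongruent) - mean(congruent)
```

A positive value indicates faster responses when the face matches the valence of the preceding music, which is the direction of the effect described above for the WS group.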

Greater cross-modal priming by musical stimuli in persons with WS vs TD individuals is consistent with findings from prior neuroimaging studies that reported atypically diffuse activation in auditory processing areas in WS (Levitin et al., 2003; Thornton-Wells et al., 2010). Moreover, adults with WS also demonstrated activation of early visual and visual association areas in response to music (20–40 s of songs, chords or tones) and other auditory stimuli (white noise, vocalizations) (Thornton-Wells et al., 2010). In addition, diffusion tensor imaging studies in WS report increased anisotropy (fiber coherence) of the inferior longitudinal fasciculus, which connects temporal and occipital lobes and thus may provide physical support for such cross-modal integration (Hoeft et al., 2007; Marenco et al., 2007).

The lack of a priming effect in TD participants could be due to the ease of the facial emotion identification task and the resulting ceiling level target performance regardless of the preceding prime. However, there was no difference in accuracy at identifying the facial emotion targets between the TD and WS groups, with both exhibiting high levels of performance. Affective priming effects for emotional face targets have been previously reported in TD individuals (e.g. Logeswaran and Bhattacharya, 2009; Paulmann and Pell, 2010). Thus, it is unlikely that a ceiling effect in the TD group is alone responsible for the lack of priming effect in TD vs WS participants.

Another possible reason for the lack of a priming effect in the TD group may be the relatively long SOA between the auditory primes and facial targets. However, priming effects have been reported in studies of auditory–visual conceptual/semantic priming in TD individuals with SOAs up to 800–1000 ms (Orgs et al., 2006; Daltrozzo and Schön, 2009). Individuals with WS have a prolonged attentional window and require greater time to disengage from one stimulus and attend to the next (Lense et al., 2011); thus, it is possible that the longer SOA in this study enabled them to integrate the music and face information, whereas the TD group treated auditory and visual stimuli as separate events. Although there is no prior work on multisensory integration in WS, a prolonged multisensory binding window is seen in other developmental disabilities (Foss-Feig et al., 2010).

Finally, the lack of priming effects in the TD group could be due to their information processing strategy. Previous studies reporting cross-modal priming by music in TD individuals have utilized explicit relatedness judgments between the music and target (Koelsch et al., 2004; Daltrozzo and Schön, 2009; Steinbeis and Koelsch, 2011). When asked to respond only to the affective valence of target words following brief emotional musical excerpts and not to their relatedness, TD participants did not demonstrate a priming effect in RT or N400 differences, even when presentation of the musical prime and semantic target overlapped (Goerlich et al., 2011). In this study, participants were specifically instructed to only focus on and respond to the faces. Therefore, they may have relied on top–down attentional processes to minimize processing of the musical prime and focus only on the face targets. In contrast, WS participants may have been unable to only focus on the target face. Given their heightened auditory sensitivities (Levitin et al., 2005), higher than typical interest in music (Levitin et al., 2004) and reported difficulties with attentional disengagement (Lense et al., 2011), reliance on bottom–up processing of the auditory primes may have facilitated music–face priming.

The current finding of the spreading of emotional information from even brief musical excerpts to other emotional information, such as facial expressions, has important clinical implications. Affective auditory information provided by music could be used for socioemotional interventions. Studies in TD individuals reveal more intense emotional experiences in response to social scenes or faces presented with congruent classical emotional music than to isolated socioemotional visual stimuli (Baumgartner et al., 2006). Baumgartner et al. (2006) reported that this enhanced emotional experience was associated with stronger neural activation, perhaps due to integration across emotion and arousal processing areas. Intriguingly, magnetic resonance imaging studies in WS have revealed atypically diminished activity in the amygdala in response to negative faces (Haas et al., 2009, 2010) but greater amygdala activity in response to music (Levitin et al., 2003). Thus, combining music with visual stimuli may lead to greater brain activation in emotion and arousal processing areas to improve emotion recognition and experiences. In addition, although individuals with WS are proficient with happy and sad basic expressions, they struggle with other facial expressions, such as fear and surprise (Plesa Skwerer et al., 2006). Pairing facial emotions with affective musical cues may improve their ability to recognize these higher-order emotions, as music can elicit higher-order emotions such as fear (e.g. classical music; Krumhansl, 1997; Baumgartner et al., 2006) and a variety of more nuanced emotional states (e.g. classical, jazz, techno, Latin American, pop/rock music; Zentner et al., 2008).

In addition, this study suggests that individuals with WS have increased sensitivity to the emotional characteristics of music. Music may help individuals with WS identify and manage their own emotions. For example, individuals can be taught to explicitly recognize and label the emotional experience of specific musical excerpts to build their emotional vocabulary or to use music to communicate their emotional state to caregivers. Anecdotally, many individuals with WS use music as a form of self-administered therapy, and music listening is associated with fewer externalizing symptoms, while music production is associated with fewer internalizing problems such as anxiety (Dykens et al., 2005).

This study also has implications for attentional issues in WS. In this study, even though participants were not explicitly instructed to attend to the auditory primes, the auditory stimuli appeared to have captured their attention via bottom–up processes. Moreover, even though the participants stayed on task and performed with high accuracy, this automatic attention capture by the auditory prime modulated how they processed the subsequent targets. WS is associated with significant attentional problems, including distractibility, in both childhood and adulthood (Elison et al., 2010; Rhodes et al., 2011; Scerif and Steele, 2011). Thus, families and educators need to be aware of the high susceptibility of an individual with WS to distraction by auditory information. Given reported cases of hyperacusis in WS (Levitin et al., 2005), individuals may be distracted by auditory stimuli that may not appear distracting to typical persons. Individuals with WS may need to be given frequent cues to refocus their attention, and learning environments may need to be kept quiet. At the same time, multimodal educational methods, such as pairing auditory cues with visual information (e.g. phoneme-grapheme correspondence in reading), may be particularly beneficial for individuals with WS.

This study has some limitations that need to be addressed in future research. The sample size was relatively small, although consistent with or larger than previous studies examining neural markers of musicality and sociability in WS (e.g. Levitin et al., 2005; Haas et al., 2009, 2010; Thornton-Wells et al., 2010). We did not include a mental age-matched group because previous studies and our own results have demonstrated that WS participants are capable of performing the explicit task (facial emotion identification) at the same level as their TD peers (Plesa Skwerer et al., 2006). Moreover, due to maturational changes in oscillatory brain activity (e.g. Yordanova and Kolev, 1996), an age-matched TD group was the most appropriate comparison group. Finally, this study included only happy and sad emotions, so it is unknown whether the same effects would be seen with other emotions. Future studies could make the cross-modal task more explicit and vary the SOA between the primes and targets to examine what the optimal SOA length is for affective priming in individuals with WS vs TD controls.

In summary, this study used both neural and behavioral data to demonstrate the increased emotional connection to music in WS. The use of very brief emotional musical excerpts highlights the sensitivity of musical emotion processing in WS. Moreover, the neural activity (alpha power) to different musical emotions correlated with parent reports of emotional reactivity to music. The early timing of the alpha activity suggests that this emotional sensitivity may be related to general auditory attention and sensitivities. Future research can manipulate specific psychoacoustic features to examine their role in the emotional responsiveness to music in WS. Finally, this study provided the first direct neural (gamma power) and behavioral (RT) evidence for an enhanced connection between music and socioemotional processing in WS. These results are consistent with previously hypothesized cross-modal processing of auditory stimuli in WS (e.g. Thornton-Wells et al., 2010), with implications for educational and therapeutic interventions.

Acknowledgments

The authors thank the participants and their families for taking part in the study. They also thank Dorita Jones and Amber Vinson for assistance collecting EEG data. Study questionnaire data were collected and managed using Research Electronic Data Capture (REDCap) electronic data capture tools hosted at Vanderbilt (Harris et al., 2009). This work was supported in part by a grant from NICHD (P30 HD015052-30) and a National Science Foundation Graduate Research Fellowship, as well as grant support to the Vanderbilt Institute for Clinical and Translational Research (UL1TR000011 from NCATS/NIH).

REFERENCES

- Baumgartner T, Esslen M, Jäncke L. From emotion perception to emotion experience: emotions evoked by pictures and classical music. International Journal of Psychophysiology. 2006;60:34–43. doi: 10.1016/j.ijpsycho.2005.04.007.

- Bhatara A, Quintin E-M, Levy B, Bellugi U, Fombonne E, Levitin DJ. Perception of emotion in musical performance in adolescents with autism spectrum disorders. Autism Research. 2010;3(5):214–25. doi: 10.1002/aur.147.

- Blomberg S, Rosander M, Andersson G. Fears, hyperacusis and musicality in Williams syndrome. Research in Developmental Disabilities. 2006;27:668–80. doi: 10.1016/j.ridd.2005.09.002.

- Bradley MM, Lang PJ. The International Affective Digitized Sounds: Affective Ratings of Sounds and Instruction Manual. 2nd edn. Gainesville: NIMH Center for the Study of Emotion and Attention; 2007.

- Daltrozzo J, Schön D. Conceptual processing in music as revealed by N400 effects on words and musical targets. Journal of Cognitive Neuroscience. 2009;21(10):1882–92. doi: 10.1162/jocn.2009.21113.

- Don AJ, Schellenberg EG, Rourke BP. Music and language skills of children with Williams syndrome. Child Neuropsychology. 1999;5(3):154–70.

- Dykens EM, Rosner BA, Ly T, Sagun J. Music and anxiety in Williams syndrome: a harmonious or discordant relationship? American Journal of Mental Retardation. 2005;110(5):346–58. doi: 10.1352/0895-8017(2005)110[346:MAAIWS]2.0.CO;2.

- Elison S, Stinton C, Howlin P. Health and social outcomes in adults with Williams syndrome: findings from cross-sectional and longitudinal cohorts. Research in Developmental Disabilities. 2010;31(2):587–99. doi: 10.1016/j.ridd.2009.12.013.

- Ewart AK, Morris CA, Atkinson D, et al. Hemizygosity at the elastin locus in a developmental disorder, Williams syndrome. Nature Genetics. 1993;5(1):11–6. doi: 10.1038/ng0993-11.

- Flores-Gutiérrez EO, Díaz J-L, Barrios FA, et al. Differential alpha coherence hemispheric patterns in men and women during pleasant and unpleasant musical emotions. International Journal of Psychophysiology. 2009;71(1):43–9. doi: 10.1016/j.ijpsycho.2008.07.007.

- Foss-Feig JH, Kwakye LD, Cascio CJ, et al. An extended multisensory temporal binding window in autism spectrum disorders. Experimental Brain Research. 2010;203(2):381–9. doi: 10.1007/s00221-010-2240-4.

- Gagliardi C, Frigerio E, Burt DM, Cazzaniga I, Perrett DI, Borgatti R. Facial expression recognition in Williams syndrome. Neuropsychologia. 2003;41:733–8. doi: 10.1016/s0028-3932(02)00178-1.

- Goerlich KS, Witteman J, Aleman A, Martens S. Hearing feelings: affective categorization of music and speech in alexithymia, an ERP study. PLoS One. 2011;6(5):e19501. doi: 10.1371/journal.pone.0019501.

- Haas BW, Hoeft F, Searcy YM, Mills D, Bellugi U, Reiss A. Individual differences in social behavior predict amygdala response to fearful facial expressions in Williams syndrome. Neuropsychologia. 2010;48(5):1283–8. doi: 10.1016/j.neuropsychologia.2009.12.030.

- Haas BW, Mills D, Yam A, Hoeft F, Bellugi U, Reiss A. Genetic influences on sociability: heightened amygdala reactivity and event-related responses to positive social stimuli in Williams syndrome. The Journal of Neuroscience. 2009;29(4):1132–9. doi: 10.1523/JNEUROSCI.5324-08.2009.

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

- Herrmann CS, Grigutsch M, Busch NA. EEG oscillations and wavelet analysis. In: Handy TC, editor. Event-Related Potentials: A Methods Handbook. Cambridge, MA: MIT Press; 2005. pp. 229–59.

- Hoeft F, Barnea-Goraly N, Haas BW, et al. More is not always better: increased fractional anisotropy of superior longitudinal fasciculus associated with poor visuospatial abilities in Williams syndrome. The Journal of Neuroscience. 2007;27(44):11960–5. doi: 10.1523/JNEUROSCI.3591-07.2007.

- Hopyan T, Dennis M, Weksberg R, Cytrynbaum C. Music skills and the expressive interpretation of music in children with Williams-Beuren syndrome: pitch, rhythm, melodic imagery, phrasing, and musical affect. Child Neuropsychology. 2001;7(1):42–53. doi: 10.1076/chin.7.1.42.3147.

- Järvinen-Pasley A, Vines BW, Hill KJ, et al. Cross-modal influences of affect across social and non-social domains in individuals with Williams syndrome. Neuropsychologia. 2010;48(2):456–66. doi: 10.1016/j.neuropsychologia.2009.10.003.

- Juslin PN, Västfjäll D. Emotional responses to music: the need to consider underlying mechanisms. Behavioral and Brain Sciences. 2008;31(05):559–75. doi: 10.1017/S0140525X08005293.

- Kaufman A, Kaufman N. Kaufman Brief Intelligence Test. 2nd edn. Circle Pines, MN: American Guidance Service; 2004.

- Koelsch S, Kasper E, Sammler D, Schulze K, Gunter T, Friederici AD. Music, language and meaning: brain signatures of semantic processing. Nature Neuroscience. 2004;7(3):302–7. doi: 10.1038/nn1197.

- Kolev V, Yordanova J, Schürmann M, Başar E. Increased frontal phase-locking of event-related alpha oscillations during task processing. International Journal of Psychophysiology. 2001;39(2–3):159–65. doi: 10.1016/s0167-8760(00)00139-2.

- Krumhansl K. An exploratory study of musical emotions and psychophysiology. Canadian Journal of Experimental Psychology. 1997;51(4):336–52. doi: 10.1037/1196-1961.51.4.336.

- Lense MD, Dykens EM. Musical interests and abilities in individuals with developmental disabilities. International Review of Research in Developmental Disabilities. 2011;41:265–312.

- Lense MD, Key AP, Dykens EM. Attentional disengagement in adults with Williams syndrome. Brain and Cognition. 2011;77:201–7. doi: 10.1016/j.bandc.2011.08.008.

- Levitin DJ, Cole K, Chiles M, Lai Z, Lincoln A, Bellugi U. Characterizing the musical phenotype in individuals with Williams Syndrome. Child Neuropsychology. 2004;10(4):223–47. doi: 10.1080/09297040490909288.

- Levitin DJ, Cole K, Lincoln A, Bellugi U. Aversion, awareness, and attraction: investigating claims of hyperacusis in the Williams syndrome phenotype. Journal of Child Psychology and Psychiatry, and Allied Disciplines. 2005;46(5):514–23. doi: 10.1111/j.1469-7610.2004.00376.x.

- Levitin DJ, Menon V, Schmitt JE, et al. Neural correlates of auditory perception in Williams syndrome: an fMRI study. Neuroimage. 2003;18(1):74–82. doi: 10.1006/nimg.2002.1297.

- Logeswaran N, Bhattacharya J. Crossmodal transfer of emotion by music. Neuroscience Letters. 2009;455(2):129–33. doi: 10.1016/j.neulet.2009.03.044.

- Marenco S, Siuta MA, Kippenhan JS, et al. Genetic contributions to white matter architecture revealed by diffusion tensor imaging in Williams syndrome. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(38):15117–22. doi: 10.1073/pnas.0704311104.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 2007;164(1):177–90. doi: 10.1016/j.jneumeth.2007.03.024.

- Martens MA, Wilson SJ, Reutens DC. Research review: Williams syndrome: a critical review of the cognitive, behavioral, and neuroanatomical phenotype. Journal of Child Psychology and Psychiatry, and Allied Disciplines. 2008;49(6):576–608. doi: 10.1111/j.1469-7610.2008.01887.x.

- Mikutta C, Altorfer A, Strik W, Koenig T. Emotions, arousal, and frontal alpha rhythm asymmetry during Beethoven's 5th symphony. Brain Topography. 2012;25:423–30. doi: 10.1007/s10548-012-0227-0.

- Ng R, Järvinen-Pasley A, Searcy Y, Fishman L, Bellugi U. Music and Sociability in Williams Syndrome; Poster sessions presented at the 44th annual Gatlinburg Conference; San Antonio, TX. 2011.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Oostenveld R, Fries P, Maris E, Schoffelen J-M. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience. 2011;2011:1–9. doi: 10.1155/2011/156869.

- Orgs G, Lange K, Dombrowski J-H, Heil M. Conceptual priming for environmental sounds and words: an ERP study. Brain and Cognition. 2006;62(3):267–72. doi: 10.1016/j.bandc.2006.05.003.

- Paulmann S, Pell MD. Contextual influences of emotional speech prosody on face processing: how much is enough? Cognitive, Affective & Behavioral Neuroscience. 2010;10(2):230–42. doi: 10.3758/CABN.10.2.230.

- Peng W, Hu L, Zhang Z, Hu Y. Causality in the association between P300 and alpha event-related desynchronization. PLoS One. 2012;7(4):e34163. doi: 10.1371/journal.pone.0034163.

- Peretz I, Gagnon L, Bouchard B. Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition. 1998;68(2):111–41. doi: 10.1016/s0010-0277(98)00043-2.

- Plesa Skwerer D, Faja S, Schofield C, Verbalis A, Tager-Flusberg H. Perceiving facial and vocal expressions of emotion in Williams syndrome. American Journal of Mental Retardation: AJMR. 2006;111(1):15–26. doi: 10.1352/0895-8017(2006)111[15:PFAVEO]2.0.CO;2.

- Rhodes SM, Riby DM, Matthews K, Coghill DR. Attention-deficit/hyperactivity disorder and Williams syndrome: shared behavioral and neuropsychological profiles. Journal of Clinical and Experimental Neuropsychology. 2011;33(1):147–56. doi: 10.1080/13803395.2010.495057.

- Salimpoor VN, Benovoy M, Longo G, Cooperstock JR, Zatorre RJ. The rewarding aspects of music listening are related to degree of emotional arousal. PLoS One. 2009;4(10):e7487. doi: 10.1371/journal.pone.0007487.

- Scerif G, Steele A. Neurocognitive development of attention across genetic syndromes: inspecting a disorder’s dynamics through the lens of another. Progress in Brain Research. 2011;189 C:285–301. doi: 10.1016/B978-0-444-53884-0.00030-0.

- Schneider TR, Debener S, Oostenveld R, Engel AK. Enhanced EEG gamma-band activity reflects multisensory semantic matching in visual-to-auditory object priming. NeuroImage. 2008;42(3):1244–54. doi: 10.1016/j.neuroimage.2008.05.033.

- Schürmann M, Başar-Eroglu C, Başar E. Evoked EEG alpha oscillations in the cat brain—a correlate of primary sensory processing? Neuroscience Letters. 1998;240(1):41–4. doi: 10.1016/s0304-3940(97)00926-9.

- Senkowski D, Schneider TR, Foxe JJ, Engel AK. Crossmodal binding through neural coherence: implications for multisensory processing. Trends in Neurosciences. 2008;31(8):401–9. doi: 10.1016/j.tins.2008.05.002.

- Senkowski D, Talsma D, Herrmann CS, Woldorff MG. Multisensory processing and oscillatory gamma responses: effects of spatial selective attention. Experimental Brain Research. 2005;166(3–4):411–26. doi: 10.1007/s00221-005-2381-z.

- Shahin AJ, Trainor LJ, Roberts LE, Backer KC, Miller LM. Development of auditory phase-locked activity for music sounds. Journal of Neurophysiology. 2010;103:218–29. doi: 10.1152/jn.00402.2009.

- Steinbeis N, Koelsch S. Affective priming effects of musical sounds on the processing of word meaning. Journal of Cognitive Neuroscience. 2011;23(3):604–21. doi: 10.1162/jocn.2009.21383.

- Thornton-Wells TA, Cannistraci CJ, Anderson AW, et al. Auditory attraction: activation of visual cortex by music and sound in Williams syndrome. American Journal on Intellectual and Developmental Disabilities. 2010;115(2):172–89. doi: 10.1352/1944-7588-115.172.

- Tottenham N, Tanaka J, Leon AC, et al. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research. 2009;168:242–9. doi: 10.1016/j.psychres.2008.05.006.

- Wexler BE, Warrenburg S, Schwartz GE, Janer LD. EEG and EMG responses to emotion-evoking stimuli processed without conscious awareness. Neuropsychologia. 1992;30(12):1065–79. doi: 10.1016/0028-3932(92)90099-8.

- Willems RM, Oostenveld R, Hagoort P. Early decreases in alpha and gamma band power distinguish linguistic from visual information during spoken sentence comprehension. Brain Research. 2008;1219:78–90. doi: 10.1016/j.brainres.2008.04.065.

- Yordanova JY, Kolev VN. Developmental changes in the alpha response system. Electroencephalography and Clinical Neurophysiology. 1996;99(6):527–38. doi: 10.1016/s0013-4694(96)95562-5.

- Yuval-Greenberg S, Deouell LY. What you see is not (always) what you hear: induced gamma band responses reflect cross-modal interactions in familiar object recognition. The Journal of Neuroscience. 2007;27(5):1090–6. doi: 10.1523/JNEUROSCI.4828-06.2007.

- Zentner M, Grandjean D, Scherer KR. Emotions evoked by the sound of music: characterization, classification, and measurement. Emotion. 2008;8(4):494–521. doi: 10.1037/1528-3542.8.4.494.