Abstract

Methods for handling missing data depend strongly on the mechanism that generated the missing values, such as missing completely at random (MCAR) or missing at random (MAR), as well as other distributional and modeling assumptions at various stages. It is well known that the resulting estimates and tests may be sensitive to these assumptions as well as to outlying observations. In this paper, we introduce various perturbations to modeling assumptions and individual observations, and then develop a formal sensitivity analysis to assess these perturbations in the Bayesian analysis of statistical models with missing data. We develop a geometric framework, called the Bayesian perturbation manifold, to characterize the intrinsic structure of these perturbations. We propose several intrinsic influence measures to perform sensitivity analysis and quantify the effect of various perturbations to statistical models. We use the proposed sensitivity analysis procedure to systematically investigate the tenability of the nonignorable, not missing at random (NMAR) assumption. Simulation studies are conducted to evaluate our methods, and a dataset is analyzed to illustrate the use of our diagnostic measures.

Keywords: Influence measure, Missing data mechanism, Perturbation manifold, Sensitivity analysis

1. Introduction

It is common to have missing data in surveys, clinical trials, and longitudinal studies. Various statistical methods have been developed to handle missing data. These methods depend on the missing data mechanism that generates the missing values and other modeling assumptions at various stages, and the resulting estimates and tests can be sensitive to these assumptions. Sensitivity analyses are commonly performed to perturb the model assumptions and/or individual observations to check the sensitivity of a specific influence measure (e.g., a parameter of interest). There is an extensive literature on sensitivity analysis for missing data problems in frequentist analysis (Copas and Eguchi (2005), Little and Rubin (2002), Zhu and Lee (2001), Copas and Li (1997), van Steen et al. (2001), Troxel (1998), Jansen et al. (2006), Jansen et al. (2003), Verbeke et al. (2001), Troxel et al. (2004), Shi, Zhu and Ibrahim (2009), Hens et al. (2006), Daniels and Hogan (2008)).

The literature on influence measures includes Copas and Eguchi (2005), Zhu and Lee (2001), Troxel et al. (2004), Copas and Li (1997), van Steen et al. (2001), Troxel (1998), Jansen et al. (2006), Jansen et al. (2003), Hens et al. (2006), Verbeke et al. (2001), Shi, Zhu and Ibrahim (2009), and Daniels and Hogan (2008). For instance, in frequentist analysis, Copas and Eguchi (2005) developed a general formulation for assessing the bias of maximum likelihood estimates in the presence of small model perturbations for missing data problems. The local influence method in Cook (1986) was successfully applied to carry out sensitivity analyses for various statistical models with missing data (van Steen et al. (2001), Troxel (1998), Jansen et al. (2006), Hens et al. (2006), Jansen et al. (2003), Verbeke et al. (2001)). Shi, Zhu and Ibrahim (2009) further systematically investigated the local influence methods proposed in Zhu et al. (2007) for GLMs with missing at random (MAR) covariates as well as not missing at random (NMAR) covariates, often referred to as nonignorable missing covariates.

In contrast, in the Bayesian literature, several analogues of Cook (1986) were developed to carry out model assessment by using either the curvature of some influence measures (Millar and Stewart (2007), Linde (2007), Lavine (1991)) or the Fréchet derivative of the posterior with respect to the prior (Dey, Ghosh and Lou (1996), Gustafson (1996a), Gustafson (1996b), Berger (1994)). Daniels and Hogan (2008) examined several global and local sensitivity methods in the Bayesian analysis of pattern mixture models (Little (1994), Andridge and Little (2011)). Recently, Zhu, Ibrahim and Tang (2011) developed a general framework of Bayesian influence analysis for assessing various perturbation schemes to the data, the prior and the sampling distribution for a class of statistical models without missing data.

The aim of this paper is to develop a formal Bayesian sensitivity analysis in statistical models with missing data. We introduce various perturbations to the modeling of the missing data mechanism, individual observations, and the prior. We develop a geometric framework, called the Bayesian perturbation manifold, to characterize the intrinsic structure of these perturbations. We examine several influence measures for sensitivity analysis and for quantifying the effect of various perturbations to statistical models with missing data.

In this paper, we (i) develop a Bayesian perturbation manifold for a large class of statistical models with missing data; (ii) examine three Bayesian influence measures, namely the ϕ-divergence, the posterior mean distance, and the Bayes factor; and (iii) focus on assessing the missing data mechanism while simultaneously perturbing other distributional assumptions, the prior, and individual observations.

To motivate our methodology, we consider data on 1116 female sex workers in Philippine cities from a study of the relationship between Acquired Immune Deficiency Syndrome (AIDS) and the use of condoms (Morisky et al. (1998)), which is discussed in more detail in Section 3. The data contain items about knowledge of AIDS, attitude toward AIDS, belief, and self-efficacy of condom use. Nine variables in the original data set (items 33, 32, 31, 43, 72, 74, 27h, 27e, and 27i in the questionnaire) were taken as responses. The primary interest here was to find how the threat of AIDS is associated with aggressiveness of the sex worker and the fear of contracting AIDS. At least one response or covariate is missing for 361 workers (32.35%). In Section 3, we carry out a Bayesian analysis of a structural equations model with both missing covariates and responses to analyze this data set, and present a formal Bayesian sensitivity analysis.

The rest of this paper is organized as follows. In Section 2, we construct a Bayesian perturbation manifold to characterize various perturbations to statistical models with missing data and derive its associated geometric quantities. We propose global and local influence measures to quantify the effects on posterior quantities of perturbing the missing data mechanism while simultaneously perturbing the data, the prior, and other model assumptions. In Section 3, we present simulation studies and a data analysis to illustrate the importance of the proposed method in assessing the missing data mechanism and other potential misspecifications.

2. Bayesian sensitivity analysis

2.1. Statistical models with missing data

Let zobs = (z1,o, . . . , zn,o) and zmis = (z1,m, . . . , zn,m) be the observed and missing data, respectively, and zcom = (z1,c, . . . , zn,c) = (zmis, zobs) be the complete data, where zi,c = (zi,o, zi,m) for i = 1, . . . , n. In applications, the dimensions of zi,c, zi,o and zi,m may be different across i. For instance, the number of observations may vary across clusters for clustered data.

For missing data problems, we consider a statistical model p(zcom | θ) for the complete data such that p(zcom | θ) is the product of a model for the observed data p(zobs | θ) and a model for the missing data given the observed data p(zmis | zobs, θ). This class of statistical models for missing data includes generalized linear models with missing covariates and/or responses, generalized linear mixed models, nonlinear models, parametric survival models, and many others. To carry out Bayesian inference, we usually use Markov chain Monte Carlo (MCMC) methods to simulate samples from the posterior distribution given the observed data

| (2.1) |

Example 1 (Missing Covariates Data). Consider n independent observations zcom = {zi,c = (xi, ci, ri, yi), i = 1, . . . , n}, where yi is the response variable, xi is a p1 × 1 vector of completely observed covariates, and ci = (ci,m, ci,o) is a p2 × 1 vector of partially observed covariates, where ci,m and ci,o denote the missing and observed components of ci, respectively. Let ri be a p2 × 1 vector whose jth component, rij, equals 1 if the jth component of ci, denoted by cij, is observed, and 0 if cij is missing. We assume that p(xi, ci, ri, yi|θ) = p(yi|xi, ci, θ)p(xi, ci|θ) p(ri|yi, xi, ci, θ), where θ denotes the vector of unknown parameters. In this case, zi,m = ci,m and zi,o = (xi, ci,o, ri, yi) for all i.

We assume the generalized linear model (GLM)

| (2.2) |

for i = 1, . . . , n, where ai(·), b1(·), and b2(·,·) are known functions, ηi = η(μi) and , in which g(·) is a known link function, β = (β1, . . . , βp)′ and p = p1 + p2. We assume that

| (2.3) |

Similarly, we model the missing-data mechanism as

| (2.4) |

To carry out a full Bayesian analysis, we need to specify a prior for θ. We can take an independent prior for θ such that p(θ) = p(τ)p(β)p(ξ)p(α). For τ and β, we can take τ ~ gamma(α0/2, λ0/2) and β ~ N(μ0, Σ0), where α0, λ0, μ0 (p × 1), and Σ0 (a p × p positive definite matrix) are pre-specified hyperparameters. Let λmin(Σ0) and λmax(Σ0) denote the smallest and largest eigenvalues of Σ0. If λmin(Σ0) converges to ∞, then N(μ0, Σ0) tends to an improper prior. In contrast, if λmax(Σ0) is very small, then N(μ0, Σ0) tends to a strongly informative prior. For α, we can take an independent prior p(α) = p(α1)p(α21) · · · p(α2p2). Valid Bayesian inference about β requires an appropriate prior p(θ) and correct specification of the sampling distributions (2.2)-(2.4), so it is crucial to assess the robustness of posterior estimates of β to both the prior and the sampling distribution. In particular, there is a growing awareness of the need for a formal method for investigating the sensitivity of inference to the missing-data mechanism (Copas and Eguchi (2005), Little and Rubin (2002), Zhu and Lee (2001), Troxel et al. (2004), Copas and Li (1997), van Steen et al. (2001), Troxel (1998), Jansen et al. (2006), Jansen et al. (2003), Verbeke et al. (2001), Shi, Zhu and Ibrahim (2009), Daniels and Hogan (2008), Ibrahim, Chen and Lipsitz (2005)).

Example 2 (Missing Response Data). We consider n independent observations zcom = {zi,c = (xi, ri, yi), i = 1, . . . , n}, where yi = (yi,m, yi,o) is a py × 1 response vector, in which yi,m and yi,o denote the missing and observed components of yi, respectively, and xi is a px × 1 vector of completely observed covariates. Moreover, ri is a py × 1 vector, whose jth component, rij, equals 1 if the jth component of yi, denoted by yij, is observed, and 0 if yij is missing. It is common to model the joint distribution of (yi, ri) given xi such that

| (2.5) |

where θI is the vector of parameters of interest and θN includes all parameters in the missing data mechanism p(ri|yi,m, yi,o, xi, θN). In this case, zi,m = yi,m and zi,o = (xi, yi,o, ri) for all i.

To carry out a full Bayesian analysis, we need to specify a prior for θ and the missing data mechanism. For instance, a well-known ignorability condition (Rubin, 1976) is commonly used to carry out posterior inference on θI without specifying the missing data mechanism. Specifically, a missing data mechanism is said to be ignorable if it is MAR, (2.5) is true and p(θ) = p(θI)p(θN). Although it is computationally easier to assume the ignorability condition, most missing data mechanisms are nonignorable (Daniels and Hogan (2008)). An alternative method for nonignorable missing data is to use the extrapolation factorization

| (2.6) |

In this case, p(yi,m|yi,o, ri, xi, θN) is an extrapolation model and cannot be identified from the observed data, while p(yi,o, ri|xi, θI) is an observed data model. Here, the components of θN are called sensitivity parameters (Daniels and Hogan (2008)).

2.2. Bayesian Perturbation Manifold

We introduce a perturbation vector ω = ω(zcom, θ) in a set Ω to perturb the complete-data model p(zcom, θ) = p(θ)p(zcom | θ). To ensure that the perturbation ω is meaningful and sensible, we require the following: (1) p(zcom, θ | ω) is the probability density of (zcom, θ) for the perturbed model as ω varies in Ω; and (2) there is an ω0 ∈ Ω such that p(zcom, θ | ω0) = p(zcom, θ) and p(zobs, θ | ω0) = ∫ p(zcom, θ | ω0)dzmis = p(zobs, θ) for all (zcom, θ). The point ω0 can be regarded as the ‘central point’ of Ω representing no perturbation. See Gustafson (2006) and Daniels and Hogan (2008) for general discussions of model expansion from a Bayesian viewpoint.

Example 1 (Continued) We are interested in perturbing the missing-data mechanism p(ri|yi, xi, ci, ξ) in (2.4). For instance, when (2.4) is assumed to be MAR, we can consider a general perturbation scheme

| (2.7) |

where ω = (ω1, . . . , ωm)T is an m×1 vector. The perturbation (2.7) is commonly used to perturb the given GLM with MAR covariates in the direction of NMAR (Shi, Zhu and Ibrahim (2009), Verbeke et al. (2001)). We can also consider the individual-specific infinitesimal perturbation (Verbeke et al. (2001), Hens et al. (2006), Jansen et al. (2006), Jansen et al. (2003))

| (2.8) |

A large effect of ωi in (2.8) indicates that case i has a large influence on the analysis. Influence measures developed for the perturbation (2.8) are closely related to Bayesian case influence measures, such as the conditional predictive ordinate (Geisser (1993), Gelfand et al. (1992)).

We develop a geometric framework, called a Bayesian perturbation manifold, to delineate the effect of introducing each perturbation ω in Ω. Under some conditions, the set of perturbed models ℳ = {p(zcom, θ | ω) : ω ∈ Ω} is a Riemannian Hilbert manifold (Lang (1995)). On ℳ, we consider a smooth curve C(t) given by

| (2.9) |

in which v(ω) is called the tangent (or derivative) vector. The tangent vectors for all possible curves of the form C(t) form the tangent space of the manifold ℳ at ω, denoted by Tωℳ. The inner product of any two tangent vectors v1(ω) and v2(ω) in Tωℳ is given by

| (2.10) |

It can be shown that the length of the curve C(t) from t1 to t2 is

| (2.11) |

We view a geodesic on the manifold ℳ as the direct extension of a straight line in Euclidean space. For a real function f(ω) defined on ℳ, we take df[v](ω) = limt→0t–1(f[p(zcom, θ | ω(t))] – f[p(zcom, θ | ω(0))]) as the directional derivative of f at the perturbation distribution p(zcom, θ | ω) in the direction of v(ω). For any two smooth vector fields u(ω) and v(ω) on ℳ, we define the directional derivative du[v](ω) = limt→0t–1{u(ω(t)) – u(ω(0))} of a vector field u(ω), called the connection, at the perturbation distribution in the direction of v(ω). The popular Levi-Civita connection, denoted by ∇vu(ω), is

| (2.12) |

A geodesic on the manifold ℳ is a smooth curve τ(t) = p(zcom, θ | ω(t)) on ℳ with tangent vector v(ω(t)) such that ∇vv(ω(t)) = 0. The geodesic is (locally) the shortest path between points on ℳ. Finally, based on these geometric quantities, we define ℳ, equipped with the inner product < u, v > and the Levi-Civita connection ∇vu, as the Bayesian perturbation manifold (BPM).
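As a reading aid, the following sketch writes out the curve, its tangent vector, the inner product, and the curve length in the form they take in the standard information-geometric construction. It assumes that the inner product is the Fisher information inner product, which is consistent with the Bayesian Fisher information matrix referred to in (2.16); it is an illustration under that assumption and not a verbatim statement of (2.9)-(2.11).

```latex
\begin{align*}
C(t) &= p(z_{com}, \theta \mid \omega(t)), \qquad
v(\omega(t)) = \frac{d}{dt} \log p(z_{com}, \theta \mid \omega(t)), \\
\langle v_1, v_2 \rangle(\omega)
  &= \int v_1(\omega)\, v_2(\omega)\, p(z_{com}, \theta \mid \omega)\, dz_{com}\, d\theta, \\
S_C(t_1, t_2)
  &= \int_{t_1}^{t_2} \langle v(\omega(t)), v(\omega(t)) \rangle^{1/2}\, dt .
\end{align*}
```

Under this form, a one-dimensional perturbation with constant metric has curve length proportional to |ω(t2) – ω(t1)|, which is the flat case that appears in Section 2.6.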

Compared to the existing sensitivity analysis methods, a key advantage of using the BPM is that it provides a framework for quantifying simultaneous perturbations to the prior, the missing data mechanism and other distributional assumptions, and individual observations. Such simultaneous perturbations can be important, since they allow one to disentangle the uncertainty about unverifiable missing data mechanism assumptions from the misspecification of the prior and other distributional assumptions, as well as the presence of outliers. To the best of our knowledge, no methods currently exist for handling such simultaneous perturbations.

Example 1 (Continued) Consider the simultaneous perturbation model

| (2.13) |

where ω includes ωθ and ωi = (ωiy, ωic, ωir) for all i, and all components of ω are assumed to be independent of zcom and θ. The three terms on the right hand side of (2.13) are assumed to be probability densities, and ωθ, ωiy, ωic, and ωir for all i have no components in common. In this case, the BPM is given by

| (2.14) |

where p(xi, ci, ri, yi|θ, ωi) denotes the product of the three terms on the right hand side of (2.13). Consider ω(t) as a vector of smooth functions of t and vh = dω(0)/dt. It follows from the arguments in Zhu, Ibrahim and Tang (2011) that the tangent space Tω(0)ℳ is spanned by the functions ∂ωθ logp(θ| ωθ), ∂ωiy logp(yi|xi, ci, β, τ, ωiy), ∂ωic logp(xi, ci|α, ωic), and ∂ωir logp(ri|xi, ci, yi, ξ, ωir), where ∂ω = ∂/∂ω. By using the chain rule, we have

| (2.15) |

where

| (2.16) |

is the Bayesian Fisher information matrix with respect to ω (Daniels and Hogan (2008)). Geometrically, ωθ, ωiy, ωic, and ωir are orthogonal to each other with respect to the inner product defined in (2.10) (Cox and Reid (1987)). Similar to Zhu et al. (2007), one can easily separate out the influence of the missing data mechanism from that of the data, the prior, and other distributional assumptions.

Example 2 (Continued). The sensitivity parameters in (2.6) can be either fixed at a range of values, or assigned an appropriate distribution (Daniels and Hogan (2008)). Here we take the first approach and treat θN or its parametrization as a perturbation vector. Generally, we consider a simultaneous perturbation model

| (2.17) |

where ω includes ωθ, ωN, and ωI, which represent the perturbation vectors to the prior, the extrapolation model, and the observed data model, respectively. For simplicity, we assume that ωθ, ωN, and ωI do not share any common components and are independent of zcom and finite dimensional parameters. Moreover, it is assumed that p(θ|ωθ), p(yi,m|yi,o, ri, xi, ωN), and p(yi,o, ri|xi, θI, ωI) for all i are probability densities. Generally, it is possible that ωN and ωI may depend on zcom and vary across i.

Consider ω(t) as a vector of smooth functions of t and vh = dω(0)/dt. In this case, the tangent space Tω(0)ℳ is spanned by ∂ωθ logp(θ | ωθ), ∂ωN logp(yi,m | yi,o, ri, xi, ωN), and ∂ωI logp(yi,o, ri | xi, θI, ωI) for all i. Subsequently, we can calculate the Bayesian Fisher information matrix G(ω(0)) according to (2.16). Geometrically, ωθ, ωN, and ωI are also orthogonal to each other with respect to the inner product defined in (2.10) (Cox and Reid (1987)).

2.3. Intrinsic influence measures

As the purpose of a sensitivity analysis is to assess the uncertainty of the parameter of interest as ω varies in Ω given the data at hand, we take an intrinsic influence measure (IFM) to be a functional of p(θ | zobs, ω) as ω varies in Ω, where p(θ | zobs, ω) is the perturbed posterior distribution of θ given zobs and ω. Generally, let IF(ω) = IF(p(θ | zobs, ω)) denote the IFM. Three common intrinsic influence measures are the ϕ-divergence function, the posterior mean, and the Bayes factor (Kass et al. (1989), Kass and Raftery (1995)).

For the missing data mechanism, one can fix an ω0 ∈ Ω corresponding to MAR and then develop a relative intrinsic influence measure (RIFM) as a functional of p(θ | zobs, ω) and p(θ | zobs, ω0),

| (2.18) |

For instance, RI(ω, ω0) can be the total variation distance between p(θ | zobs, ω0) and p(θ | zobs, ω) (Dey, Ghosh and Lou (1996)). Alternatively, one can take RI(ω, ω0) = IF(ω) – IF(ω0), the difference between the IFMs at ω and ω0. See more examples in Section 2.5.

We also suggest rescaling RI(ω, ω0) by using the minimal geodesic distance between p(zcom, θ | ω) and p(zcom, θ | ω0), g(ω, ω0), on the BPM ℳ. Thus, we define the intrinsic influence measure for comparing p(θ | zobs, ω) to p(θ | zobs, ω0) as

| (2.19) |

The proposed IGIRI(ω, ω0) can be interpreted as the ratio of the change in the objective function to the minimal distance between p(zcom, θ | ω) and p(zcom, θ | ω0) on ℳ. In practice, one can identify the most influential ω in Ω, namely the value that maximizes IGIRI(ω, ω0) over all ω ∈ Ω.

We consider the local behavior of RI(ω(t), ω0) as t approaches zero along all possible smooth curves p(zcom, θ | ω(t)) passing through ω(0) = ω0. Since RI(ω(t), ω0) is a function from R to R, it follows from a Taylor series expansion that

$$RI(\omega(t), \omega_0) = RI(\omega(0), \omega_0) + \partial RI(\omega(0))\, t + \tfrac{1}{2}\, \partial^2 RI(\omega(0))\, t^2 + o(t^2),$$

where ∂RI(ω(0)) and ∂2RI(ω(0)) denote the first- and second-order derivatives of RI(ω(t), ω0) with respect to t evaluated at t = 0. We need to distinguish two cases: ∂RI(ω(0)) ≠ 0 for some smooth curves ω(t), and ∂RI(ω(0)) = 0 for all smooth curves ω(t). For the case ∂RI(ω(0)) ≠ 0, ∂RI(ω(0)) = d(RI)[v](ω(0)) is the directional derivative of RI in the direction of the tangent vector v at ω(0) (Lang (1995)). The first-order local influence measure is defined as

| (2.20) |

We use the tangent vector vFI,max in the tangent space Tω(0)ℳ that maximizes FIRI[v](ω(0)) to carry out a sensitivity analysis.
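For a finite-dimensional ω, finding vFI,max reduces to linear algebra. The sketch below assumes that the first-order measure has the form of a squared directional derivative divided by the metric, (∇RI′v)² / (v′Gv), where G is the Bayesian Fisher information matrix at ω(0). The names `grad`, `G`, and `first_order_direction`, and the numerical values, are hypothetical and chosen for illustration only.

```python
import numpy as np

def first_order_direction(grad, G):
    """Maximise (grad @ v)**2 / (v @ G @ v) over nonzero v.

    By the Cauchy-Schwarz inequality in the G inner product, the maximiser is
    proportional to G^{-1} grad and the maximum equals grad' G^{-1} grad.
    """
    grad = np.asarray(grad, dtype=float)
    G = np.asarray(G, dtype=float)
    v = np.linalg.solve(G, grad)          # direction G^{-1} grad
    fi_max = float(grad @ v)              # maximum value of the ratio
    v_unit = v / np.sqrt(v @ G @ v)       # normalise so that v' G v = 1
    return v_unit, fi_max

# Hypothetical gradient of RI and metric for a three-component perturbation.
grad = np.array([0.2, -1.5, 0.1])
G = np.diag([1.0, 4.0, 0.5])
v_max, fi_max = first_order_direction(grad, G)
print(v_max, fi_max)
```

Components of `v_max` with large absolute value point to the components of ω to which the chosen influence measure is most sensitive.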

For the case ∂RI(ω(0)) = 0, we use ∂2RI(ω(0)) to assess the second-order local influence of ω on a statistical model (Zhu et al. (2007)). The second-order influence measure in the direction v is defined as

| (2.21) |

Geometrically, SIRI[v](ω(0)) is invariant to scalar transformations and smooth transformations. To carry out a sensitivity analysis, we use the tangent vector vS,max in the tangent space Tω(0)ℳ that maximizes SIRI[v](ω(0)) over all tangent vectors v.
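When ω is finite dimensional, the maximizing direction can be obtained from a generalized eigenvalue problem. The sketch below assumes that the second-order measure can be written as the ratio v′Hv / (v′Gv), where H is the symmetric matrix of second derivatives of RI at ω(0) and G is the Bayesian Fisher information matrix. The function name and the matrices are hypothetical.

```python
import numpy as np

def second_order_direction(H, G):
    """Maximise v' H v / v' G v for symmetric H and positive definite G."""
    H = np.asarray(H, dtype=float)
    G = np.asarray(G, dtype=float)
    L = np.linalg.cholesky(G)             # G = L L'
    L_inv = np.linalg.inv(L)
    A = L_inv @ H @ L_inv.T               # same ratio in whitened coordinates
    A = 0.5 * (A + A.T)                   # guard against round-off asymmetry
    evals, evecs = np.linalg.eigh(A)      # eigenvalues in ascending order
    v = L_inv.T @ evecs[:, -1]            # map back; satisfies v' G v = 1
    return v, float(evals[-1])

# Hypothetical second-derivative matrix and metric.
H = np.diag([0.1, 3.0, 0.2])
G = np.diag([1.0, 2.0, 0.5])
v_smax, si_max = second_order_direction(H, G)
print(v_smax, si_max)
```

The sign of the returned vector is arbitrary, so only the absolute values of its components are meaningful when ranking perturbation components.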

2.4. Bayesian Sensitivity Analysis

Our sensitivity analysis consists of four steps.

Step 1. Introduce a Bayesian perturbation manifold based on p(zcom, θ | ω).

Step 2. Calculate the geometric metric < v, v > (ω0) of the perturbation manifold.

Step 3. Choose an intrinsic influence measure IF(ω). If ∂RI(ω(0)) ≠ 0, then we calculate vFI,max to assess local influence of minor perturbations to the model. If ∂RI(ω(0)) = 0, then we compute vS,max. We inspect vFI,max (or vS,max) in order to detect the most influential components of ω.

Step 4. For the most influential subcomponents of ω, we calculate IGIRI(ω, ω0) and its maximizer over Ω.

In practice, we iteratively perform the four-step influence analysis described above. We start with a simultaneous perturbation to zcom, p(θ) and p(zcom|θ). We choose a set of parametric perturbations characterized by a finite dimensional ω such that the perturbed model is large enough to cover a large class of candidate models for the data set. With parametric perturbations, it is computationally simple to carry out the Bayesian sensitivity analysis, and a perturbation model with a large number of perturbations can approximate most perturbation models of interest. We start with a local influence analysis to examine the sensitivity of all components and then focus on a few influential components using an intrinsic influence analysis. For instance, if a few influential hyperparameters of the prior are identified, one further perturbs their associated prior distribution using the additive ε-contamination class and then carries out an intrinsic influence analysis. After combining the information learned from our influence analysis, we might choose a new sampling distribution and/or a new prior. This procedure can be repeated until no further influential perturbations are detected.

2.5. Examples of Bayesian influence measures

We focus on assessing the influence of a perturbation scheme ω on the posterior distribution based on the ϕ-divergence, the posterior mean distance, and the Bayes factor. The Bayes factor, the ϕ-divergence, and the posterior mean quantify the effects of introducing ω on the overall assumed model, on the overall posterior distribution, and on the posterior mean of θ, respectively. Since the Bayes factor measures the overall difference between p(zobs|ω) and p(zobs|ω0), it can be more sensitive to some discrepancies between the assumed model and the observed data. As the ϕ-divergence measures the overall difference between p(zmis, θ|zobs, ω) and p(zmis, θ|zobs, ω0), and such a difference may include the mean, median, etc., it can be more sensitive to some changes in the posterior distributions, whereas the posterior mean distance is more sensitive to a subtle change in the posterior mean.

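Example 3 (Bayes factor). The logarithm of the Bayes factor for comparing ω with ω0 is

$$BF(\omega, \omega_0) = \log p(z_{obs} \mid \omega) - \log p(z_{obs} \mid \omega_0),$$

where p(zobs | ω) = ∫ p(zcom, θ | ω)dzmisdθ is the marginal density of the observed data under the perturbed model. The value of BF(ω, ω0) can be regarded as a statistic for testing hypotheses of ω against ω0 (Kass and Raftery (1995)). Under some smoothness conditions, BF(ω, ω0) is a continuous map from ℳ to R.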

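We set RI(ω, ω0) = BF(ω, ω0), where ω(t) is a smooth curve on ℳ with ω(0) = ω0 and tangent vector v at t = 0, and dt = d/dt. It can be shown that

$$\partial RI(\omega(t)) = d_t \log p(z_{obs} \mid \omega(t)) = E\left[\, d_t \log p(z_{com}, \theta \mid \omega(t)) \mid z_{obs}, \omega(t) \right],$$

where the conditional expectation is taken with respect to p(zmis, θ | zobs, ω(t)). We can use MCMC methods to draw samples {(zmis(s), θ(s)) : s = 1, . . . , S} from p(zmis, θ | zobs, ω0) and then approximate ∂RI(ω(0)) by the sample average of dt log p(zmis(s), zobs, θ(s) | ω(t)) evaluated at t = 0.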
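The Monte Carlo approximation of ∂RI(ω(0)) can be checked on a toy model in which the answer is available in closed form. The sketch below is illustrative only: it assumes a conjugate normal model with no missing data, yi ~ N(θ, 1) with prior θ ~ N(ω, 1) and ω0 = 0, so that exact posterior draws stand in for MCMC output. All names and values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20
y = rng.normal(loc=1.0, scale=1.0, size=n)   # toy data: y_i ~ N(theta, 1)
ybar = y.mean()

# Baseline model: prior theta ~ N(omega, 1) with omega0 = 0, so the posterior
# is N(n * ybar / (n + 1), 1 / (n + 1)). Exact draws replace MCMC here.
post_mean = n * ybar / (n + 1)
post_sd = np.sqrt(1.0 / (n + 1))
theta = rng.normal(post_mean, post_sd, size=50_000)

# Derivative of the log joint density with respect to omega at omega0 = 0:
# d/d omega log N(theta; omega, 1) = theta - omega.
score = theta - 0.0
d_logbf_mc = score.mean()                    # posterior average of the score

# Closed form: marginally ybar ~ N(omega, 1 + 1/n), so at omega = 0
d_logbf_exact = ybar / (1.0 + 1.0 / n)

print(d_logbf_mc, d_logbf_exact)
```

The two printed values should agree up to Monte Carlo error, which illustrates that the derivative of the log Bayes factor is a posterior expectation of a score function.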

We consider a simultaneous perturbation to both the prior and the sampling distribution. We have

For instance, for the perturbation to the prior given by p(θ; t) = p(θ) + t{g(θ) – p(θ)}, it can be shown that

where p(zobs) = ∫ p(zcom; θ)p(θ)dzmisdθ and pg(zobs) = ∫ p(zcom; θ)g(θ)dzmisdθ. Since the ratio of pg(zobs) to p(zobs) is the Bayes factor in favor of g(θ) against p(θ), the first-order local influence measure is the square of the normalized Bayes factor of g(θ) against p(θ).

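Example 4 (ϕ-divergence). The ϕ-divergence between two posterior distributions for ω0 and ω is

$$\Phi_{RI}(\omega, \omega_0) = \int \phi\big(R(z_{mis}, \theta \mid \omega, \omega_0)\big)\, p(z_{mis}, \theta \mid z_{obs}, \omega_0)\, dz_{mis}\, d\theta,$$

where R(zmis, θ | ω, ω0) = p(zmis, θ | zobs, ω)/p(zmis, θ | zobs, ω0) and ϕ(·) is a convex function with ϕ(1) = 0, such as the functions that yield the Kullback-Leibler divergence or the χ2-divergence (Kass et al. (1989)).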
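A ϕ-divergence of this kind can be estimated from baseline posterior draws alone, because the density ratio R is proportional to the ratio of the perturbed to the baseline joint densities. The sketch below assumes the same toy conjugate normal model (yi ~ N(θ, 1), prior θ ~ N(ω, 1), ω0 = 0, no missing data) and the choice ϕ(u) = –log u, which gives the Kullback-Leibler divergence from the baseline to the perturbed posterior. Names and values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n, omega = 20, 1.0
y = rng.normal(1.0, 1.0, size=n)
ybar = y.mean()

# Draws from the baseline posterior N(n * ybar / (n + 1), 1 / (n + 1)).
theta = rng.normal(n * ybar / (n + 1), np.sqrt(1.0 / (n + 1)), size=200_000)

# Unnormalised ratio of perturbed to baseline joint densities. Only the prior
# changes, so the ratio is N(theta; omega, 1) / N(theta; 0, 1).
w = np.exp(theta * omega - 0.5 * omega**2)
R = w / w.mean()                 # self-normalised estimate of the ratio R

def phi(u):
    """Convex function with phi(1) = 0; yields KL(baseline || perturbed)."""
    return -np.log(u)

kl_mc = phi(R).mean()

# Closed form for two normals with common variance 1 / (n + 1) whose means
# differ by omega / (n + 1).
kl_exact = omega**2 / (2.0 * (n + 1))

print(kl_mc, kl_exact)
```

Self-normalizing the weights avoids computing the marginal densities p(zobs | ω) and p(zobs | ω0), which are rarely available in closed form.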

We set RI(ω, ω0) = ΦRI(ω, ω0), where ω(t) is a smooth curve on ℳ with ω(0) = ω0 and tangent vector v at t = 0. It can be shown that ∂RI(ω(0)) = 0 and

where . We need a computational formula. Note that

In practice, we use MCMC methods to draw samples from p(θ, zmis | zobs, ω0) and then approximate ∂2RI(ω(0)) using

For perturbation schemes to the prior distribution, it can be shown that

and , which are, respectively, the Fisher information matrices of ω(t) based on the prior and posterior distributions, where var(· | zobs, ω0) denotes the posterior variance. For instance, for p(θ | ω(θ)) = p(θ) + t{g(θ) – p(θ)}, we can show that

where varP(·) denotes the prior variance.

Example 5 (Posterior mean distance). We measure the distance between the posterior means of h(θ) for ω0 and ω (Kass et al. (1989), Gustafson (1996b)). The posterior mean of h(θ) after introducing ω is

Cook's posterior mean distance for characterizing the influence of ω is then

| (2.22) |

where Gh is a positive definite matrix. Henceforth, we take Gh to be the inverse of the posterior covariance matrix of h(θ) under p(θ | zobs, ω0).

We set RI(ω, ω0) = CMh(ω, ω0), where ω(t) is a smooth curve on ℳ with ω(0) = ω0 and tangent vector v at t = 0. It can be shown that ∂RI(ω(0)) = 0 and ∂2RI(ω(0)) = Ṁh(v)TGhṀh(v), where

We can use MCMC methods to approximate Ṁh(v) and Gh.
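The posterior mean distance can also be approximated from baseline posterior draws. The sketch below assumes that (2.22) is the quadratic form of the difference in posterior means of h(θ) weighted by Gh, and it reuses a toy conjugate normal model (yi ~ N(θ, 1), prior θ ~ N(ω, 1), ω0 = 0, no missing data) with h(θ) = θ. The perturbed posterior mean is obtained by importance reweighting; all names and values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n, omega = 20, 1.0
y = rng.normal(1.0, 1.0, size=n)
ybar = y.mean()

# Draws from the baseline posterior (exact draws stand in for MCMC output).
theta = rng.normal(n * ybar / (n + 1), np.sqrt(1.0 / (n + 1)), size=200_000)

# Normalised importance weights for the perturbed prior N(omega, 1).
w = np.exp(theta * omega - 0.5 * omega**2)
w /= w.sum()

h = theta[:, None]                          # h(theta) = theta, shape (S, 1)
m0 = h.mean(axis=0)                         # baseline posterior mean
m1 = (w[:, None] * h).sum(axis=0)           # perturbed posterior mean
cov_h = np.atleast_2d(np.cov(h, rowvar=False))
G_h = np.linalg.inv(cov_h)                  # inverse baseline posterior covariance

diff = m1 - m0
cm_mc = float(diff @ G_h @ diff)

# Closed form: the means differ by omega / (n + 1), the variance is 1 / (n + 1).
cm_exact = omega**2 / (n + 1)

print(cm_mc, cm_exact)
```

In this toy model the distance equals twice the Kullback-Leibler divergence between the two posteriors, which is specific to normal posteriors with a common variance.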

2.6. A Simple Theoretical Example

We consider a simple example involving missing responses (Daniels and Hogan (2008)). Consider a data set zcom = ((y1, r1), · · · , (yn, rn))T, where ri = 1 if yi is observed and 0 if yi is missing. We focus on perturbing the missing-data mechanism.

First, we fit a pattern mixture model for (yi, ri) such that

| (2.23) |

Model (2.23) assumes that the observed and missing responses differ in their mean but share the same variance. Since the observed data do not contain any information on μ0, we assume μ0 = μ1 + ωμ.

Here ωμ can be regarded as a perturbation and θ = (μ1, σ2, ϕ). The complete-data likelihood function is

where p(y|μ, σ2) denotes the normal density function. Regardless of the prior for θ, it can be shown that the metric tensor G(ωμ) is independent of ωμ, and thus ℳ is flat and g(ωμ,1, ωμ,2) = c|ωμ,1 – ωμ,2|, where c is a scalar (Zhu et al. (2007)). Moreover, since the observed-data likelihood function ∫ p(zcom, θ|ωμ)dzmis does not depend on ωμ, all influence measures based on changes in p(θ|zobs, ωμ) are zero. This indicates that varying ωμ does not influence the posterior inferences on θ given zobs. Instead, if we consider the posterior mean of μ1 + (1 – ϕ)ωμ, the mean of yi, as the influence measure, then we have

where E[·|zobs] denotes the expectation taken with respect to p(θ|zobs). In this case, IF(ωμ) does not belong to any of the three Bayesian influence measures considered in Section 2.5, but our invariant influence measure is applicable. Moreover, the constant IGIRI(ωμ,1, ωμ,2) indicates that any inference about the mean of yi is completely driven by the assumptions regarding the size of ωμ.
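The linearity in ωμ can be checked directly. The derivation below is a sketch that assumes (2.23) specifies ri ~ Bernoulli(ϕ), yi | ri = 1 ~ N(μ1, σ2), and yi | ri = 0 ~ N(μ0, σ2) with μ0 = μ1 + ωμ, which matches the verbal description of the model. It also uses the fact, stated above, that p(θ | zobs) does not depend on ωμ.

```latex
\begin{align*}
E(y_i \mid \theta, \omega_\mu)
  &= \phi\, \mu_1 + (1 - \phi)\, \mu_0
   = \phi\, \mu_1 + (1 - \phi)(\mu_1 + \omega_\mu)
   = \mu_1 + (1 - \phi)\, \omega_\mu, \\
IF(\omega_{\mu,1}) - IF(\omega_{\mu,2})
  &= \{1 - E(\phi \mid z_{obs})\}\, (\omega_{\mu,1} - \omega_{\mu,2}).
\end{align*}
```

Dividing the absolute difference by the geodesic distance c|ωμ,1 – ωμ,2| then gives a ratio that does not depend on the pair (ωμ,1, ωμ,2), which is why the rescaled measure is constant in this example.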

Second, we fit a selection model for (yi, ri) such that

| (2.24) |

where logit(·) denotes the logit function. In (2.24), ωξ = 0 corresponds to MAR, whereas ωξ ≠ 0 corresponds to NMAR. In this case, ωξ can be regarded as a perturbation and θ = (μ1, σ2, ξ1). The complete-data likelihood function is

If p(θ) is the prior for θ, it can be shown that

which does not have a simple form. Moreover, since the observed-data likelihood function ∫ p(zcom, θ|ωξ)dzmis does depend on ωξ, all IFs and IFMs based on p(θ|zobs, ωξ) can be numerically calculated according to the formulas given in Sections 2.3 and 2.5. Generally, in the selection model, varying ωξ does influence the posterior inferences about θ given zobs.
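The dependence of the observed-data likelihood on ωξ can be seen numerically. The sketch below assumes a selection model of the form yi ~ N(μ1, σ2) with logit P(ri = 1 | yi) = ξ1 + ωξ yi; this functional form is an assumption made for illustration and may differ from (2.24). The integral over a missing yi is computed by Gauss-Hermite quadrature, and all names and values are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite import hermgauss

def expit(a):
    return 1.0 / (1.0 + np.exp(-a))

def observed_loglik(y_obs, n_mis, mu, sigma2, xi1, omega_xi, n_nodes=40):
    """Observed-data log-likelihood with the missing responses integrated out."""
    sigma = np.sqrt(sigma2)
    # Observed cases: normal density times the probability of being observed.
    ll = np.sum(-0.5 * np.log(2 * np.pi * sigma2) - 0.5 * (y_obs - mu) ** 2 / sigma2)
    ll += np.sum(np.log(expit(xi1 + omega_xi * y_obs)))
    # Missing cases: integrate P(r = 0 | y) against N(y; mu, sigma2).
    x, wts = hermgauss(n_nodes)
    y_nodes = mu + np.sqrt(2.0) * sigma * x
    p_mis = np.sum(wts * (1.0 - expit(xi1 + omega_xi * y_nodes))) / np.sqrt(np.pi)
    return ll + n_mis * np.log(p_mis)

rng = np.random.default_rng(3)
y = rng.normal(0.0, 1.0, size=200)
observed = rng.random(200) < expit(1.0 + 0.8 * y)   # data generated with omega_xi = 0.8
y_obs, n_mis = y[observed], int((~observed).sum())

for omega_xi in (-1.0, 0.0, 0.8):
    print(omega_xi, observed_loglik(y_obs, n_mis, 0.0, 1.0, 1.0, omega_xi))
```

Because the printed values change with ωξ, the posterior of θ given zobs changes as well, in contrast with the pattern mixture model above.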

3. Simulation Study

We consider a two-level model. We assume that data are obtained from N individuals nested within J groups, with group j containing nj individuals, where N = n1 + · · · + nJ. The level-1 units are the individuals and the level-2 units are the groups. At level-1, for each group j (j = 1, . . . , J), the within-group model is given by

| (3.1) |

where yij is the outcome variable, xij is a q-vector with explanatory variables (including a constant), βj is a q-vector of regression coefficients, and εij is the residual. At level-2, we further assume βj to be a vector of random regression coefficients,

| (3.2) |

where Zj is a q × r matrix with explanatory variables (including a constant) obtained at the group level, γ is an r-vector containing fixed coefficients, and uj is a q-vector of residuals. Assume that uj is independent of εij, uj ~ Nq(0, Σ), and εij ~ N(0, σ2ε). We assume that the covariates xij and Zj are completely observed for i = 1, . . . , nj and j = 1, . . . , J, but the responses yij may be missing.

We simulated a data set according to (3.1)-(3.2). We set J = 100, q = 2, and r = 3, and then we chose varying values of nj in order to create a scenario with different cluster sizes. Specifically, we set n1 = . . . = n10 = 3, n91 = . . . = n100 = 20, and nj ∈ {5, 7, 8, 10, 12, 13, 15, 17} for j = 11, . . . , 90. We independently generated all components (except the intercept) of xij and Zj as U(0, 1). We assumed that the yij's were missing at random (MAR) with missing data mechanism

| (3.3) |

where φ = (φ0, φx), rij = 1 if yij is missing and rij = 0 if yij is observed. We set φ0 = –2.0, φx = (0.5, 0.5)T , γ = (0.8, 0.8, 0.8)T , , and . The missing fraction of the responses is about 18.4%. To add some outliers, we modified the simulated data set by generating new {yij : j = 1, 99, 100; i = 1, . . . , nj} from a distribution with uj ~ N(5.612, 1.96I2 + 0.3Σ) (j = 1, 99, 100).
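A data set with this structure can be generated along the following lines. The sketch assumes that (3.1)-(3.3) take the forms yij = xij′βj + εij, βj = Zjγ + uj, and logit P(rij = 1) = φ0 + φx′xij, that the constant in Zj is a leading column of ones, and that each of the eight intermediate cluster sizes is used for ten groups. The values of `Sigma` and `sigma_eps` are hypothetical placeholders, not the values used in the study, and the outlier step is omitted.

```python
import numpy as np

rng = np.random.default_rng(4)
J, q, r = 100, 2, 3

# Cluster sizes: 3 for groups 1-10, 20 for groups 91-100, and (assumption)
# ten groups for each of the eight intermediate sizes.
mid_sizes = np.repeat([5, 7, 8, 10, 12, 13, 15, 17], 10)
n_j = np.concatenate([np.full(10, 3), mid_sizes, np.full(10, 20)])

gamma = np.array([0.8, 0.8, 0.8])
phi0, phi_x = -2.0, np.array([0.5, 0.5])
Sigma = np.eye(q)          # hypothetical covariance of u_j
sigma_eps = 1.0            # hypothetical residual standard deviation

groups = []
for j in range(J):
    # Level-2 design: q x r matrix with a constant column and U(0, 1) entries.
    Z = np.column_stack([np.ones(q), rng.uniform(size=(q, r - 1))])
    beta = Z @ gamma + rng.multivariate_normal(np.zeros(q), Sigma)
    # Level-1 design: intercept plus U(0, 1) covariates.
    X = np.column_stack([np.ones(n_j[j]), rng.uniform(size=(n_j[j], q - 1))])
    y = X @ beta + rng.normal(scale=sigma_eps, size=n_j[j])
    # MAR mechanism: the missingness probability depends on x_ij only.
    p_mis = 1.0 / (1.0 + np.exp(-(phi0 + X @ phi_x)))
    miss = rng.random(n_j[j]) < p_mis            # r_ij = 1 means y_ij is missing
    groups.append({"X": X, "Z": Z, "y": np.where(miss, np.nan, y), "r": miss.astype(int)})

N = int(n_j.sum())
frac_missing = sum(g["r"].sum() for g in groups) / N
print(N, frac_missing)
```

The missing fraction produced by this sketch depends on the assumed form of the missingness model and need not match the fraction reported for the study.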

We fit (3.1)-(3.3) to the simulated data set and used MCMC sampling to carry out the Bayesian influence analysis (Chen, Shao and Ibrahim (2000)). We took

where γ0, H0ε, α0ε, β0ε, R0, and ρ0 are hyperparameters whose values are prespecified. We assumed that , where φ0 and H0φ are the given hyperparameters. Furthermore, we set γ0 = (0.8, 0.8, 0.8)T, , φ0 = (–2.0, 0.5, 0.5)T, H0φ = I3, αε0 = 10.0, βε0 = 8.0, ρ0 = 10, and H0ε = diag(0.2, 0.2, 0.2).

We simultaneously perturbed the distributions of uj and the prior distributions of γ, Σ, and , whose perturbed complete-data joint (unnormalized) log-posterior density is given by

where is the density of a Gamma (α0ε + 3, β0ε + 1) distribution and ω = (ω1, . . . , ωJ, ωγ, ωΣ, ωσ )T. In this case, ω0 = (1, 1, . . . , 1, 0)′ represents no perturbation. By differentiating with respect to ω, after some calculations, we have

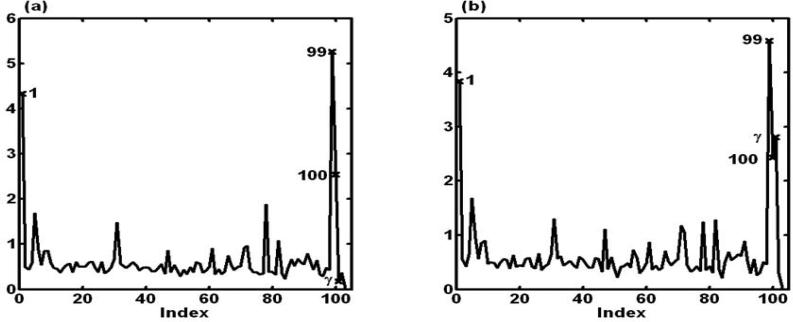

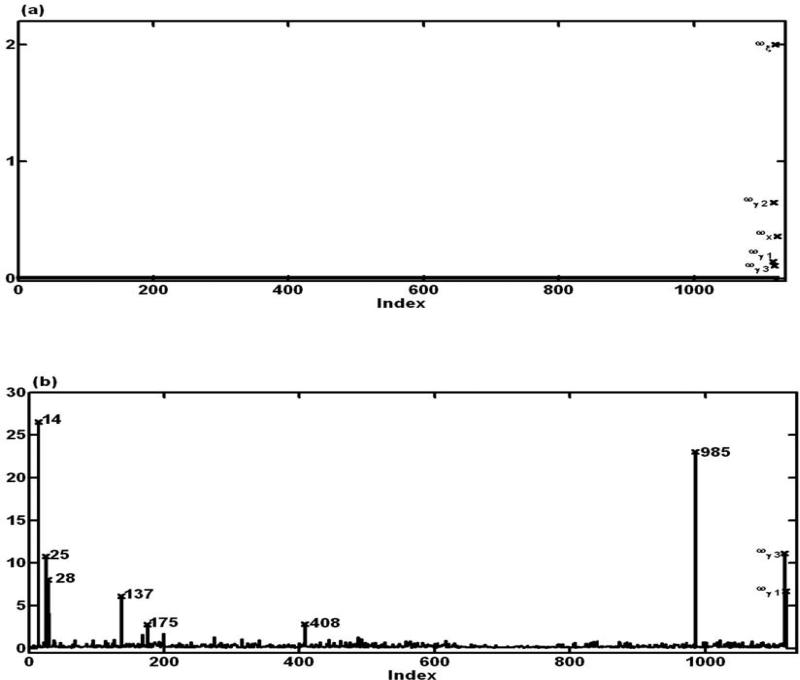

where varΣ and denote the variance with respect to the priors of Σ and , respectively. Then, we chose a new perturbation scheme and calculated the associated local influence measures, including SIΦIR[ej] and SICMh[ej], in which ϕ(·) was chosen to be the Kullback-Leibler divergence and h(θ) = θ. Note that the numbers of observations in groups 1, 99, and 100 were, respectively, 3, 20, and 20. Groups 1, 99 and 100 were detected as influential by all our local influence measures. Selected results for SIΦIR[ej] are presented in Fig. 1(a).

Figure 1.

Simulation Study: group index plots of local influence measures for simultaneous perturbation: (a) SIΦIR[ej] can detect the three influential groups (1, 99, and 100); (b) SIΦIR[ej] can simultaneously detect the three influential groups (1, 99, and 100) and the perturbed prior distribution p(γ).

We used the same setup, except that we employed a perturbed prior distribution for γ, and then applied the same MCMC method, perturbation scheme, and local influence measures. Groups 1, 99, and 100 and the perturbed prior distribution of γ were identified as influential by all our local influence measures. Selected results for SIΦD[ej] are presented in Fig. 1(b).

Next, we explored the potential deviations of the MAR mechanism in the direction of NMAR. We simulated a data set using the same setup except that the missing data mechanism for yij was

| (3.4) |

with φy = 0.5 to make the missing data fraction approximately equal to 25%.

Similar to sensitivity analysis methods in missing data problems (Molenberghs and Kenward (2007), Little and Rubin (2002)), we fit model (3.1)-(3.2) and (3.4), with φy fixed at a value ωy, to the simulated data set. When ωy = 0, the missing data is MAR and hence the missing data mechanism in (3.4) is ignorable. Thus, by varying ωy in an interval Ω1, we can treat ωy as a perturbation scheme to the sampling distribution and then calculate the associated local influence measures. Specifically, we chose ω = (ωy) and obtained a curve C(t) on at t = ω.

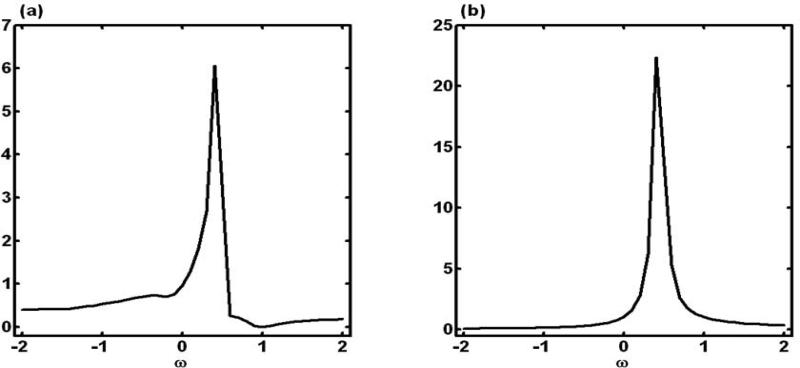

We used the same prior distributions for γ, φ, , and Σ as before and used MCMC sampling to carry out the Bayesian influence analysis. We calculated the intrinsic influence measures IGIf(ω0, Ω1) for ΦD(ω) and Mh(θ), in which we chose ϕ(·) as the Kullback-Leibler divergence, set h(θ) = γ, and treated ω0 = 0 as no perturbation. We set Ω1 = [–2.0, 2.0] and approximated Ω1 via K0 = 41 grid points ωg,(k) = –2.0 + 0.1k for k = 0, . . . , 40. For a given ω ∈ Ω1, d(ω0, ω) was calculated via a composite trapezoidal rule.
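The grid and the trapezoidal step can be illustrated with a short Python sketch. It assumes that, for a scalar perturbation, d(ω0, ω) is the integral of the square root of the metric tensor g(s) between ω0 and ω. The values in `g_values` are placeholders rather than quantities from the simulation, and the function names are hypothetical.

```python
import math


def make_grid(lo=-2.0, step=0.1, n_points=41):
    """Grid points lo + step * k for k = 0, ..., n_points - 1."""
    return [round(lo + step * k, 10) for k in range(n_points)]


def distances_from(index0, omegas, g_values):
    """Composite trapezoidal approximation of d(omega0, omega_k).

    omegas[index0] plays the role of omega0. The integrand is sqrt(g),
    where g_values[k] is the (assumed scalar) metric tensor at omegas[k].
    """
    speed = [math.sqrt(g) for g in g_values]
    d = [0.0] * len(omegas)
    # Accumulate trapezoid areas to the right of omega0.
    for k in range(index0 + 1, len(omegas)):
        h = omegas[k] - omegas[k - 1]
        d[k] = d[k - 1] + 0.5 * h * (speed[k] + speed[k - 1])
    # Accumulate trapezoid areas to the left of omega0.
    for k in range(index0 - 1, -1, -1):
        h = omegas[k + 1] - omegas[k]
        d[k] = d[k + 1] + 0.5 * h * (speed[k] + speed[k + 1])
    return d


omegas = make_grid()
index0 = omegas.index(0.0)          # omega0 = 0 means no perturbation
g_values = [4.0] * len(omegas)      # placeholder: constant metric tensor
d = distances_from(index0, omegas, g_values)
print(round(d[0], 6), round(d[-1], 6))
```

With a constant placeholder metric the rule is exact, so the distances to both ends of the grid equal the interval length times sqrt(g). In practice g would be estimated from MCMC output at each grid point.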

Figures 2(a) and 2(b) present plots of IGIIR(ω0, ω) against ω ∈ Ω1 for ΦIR(ω) and Mh(ω), respectively. The intrinsic influence measures reach their maxima near the true value φy = 0.5, which indicates that the nonignorable missing data mechanism is tenable for the simulated data. We also carried out a standard sensitivity analysis, computing the posterior means and standard deviations of γ for different values of φy (Table 1). Although the posterior distribution of γ varies with φy, it is hard to tell from these summaries alone why φy = 0.5 would be preferred. We also carried out a local influence analysis under this NMAR setting (not presented here) and observed that the proposed local influence method can pick up anomalous features of the data that are not necessarily associated with the missing data mechanism (Jansen et al. (2006)).

Figure 2.

Simulation Study: plots of IGIIR(ω0, ω) against ω ∈ Ω1 for (a) ΦIR(ω) and (b) Mh(ω), in which h(θ) = γ.

Table 1.

Posterior means (PMs) and standard deviations (SDs) of γ at different values of φy

True γ0 = (0.8, 0.8, 0.8)T

| | γ1 PM | γ1 SD | γ2 PM | γ2 SD | γ3 PM | γ3 SD |
|---|---|---|---|---|---|---|
| φy = 0.5 | 0.831 | 0.174 | 0.721 | 0.251 | 0.809 | 0.255 |
| φy = 0.3 | 0.777 | 0.170 | 0.697 | 0.249 | 0.786 | 0.247 |
| φy = 0.15 | 0.738 | 0.167 | 0.661 | 0.243 | 0.776 | 0.249 |
| φy = 0.0 | 0.697 | 0.177 | 0.622 | 0.247 | 0.749 | 0.250 |
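The entries of Table 1 can be re-expressed as standardized deviations from the true value, (PM − 0.8)/SD. The short Python sketch below does this using only the numbers in the table; the variable and function names are illustrative.

```python
TRUE_GAMMA = 0.8

# (PM, SD) pairs for gamma_1, gamma_2, gamma_3, keyed by the fixed phi_y.
TABLE_1 = {
    0.5: [(0.831, 0.174), (0.721, 0.251), (0.809, 0.255)],
    0.3: [(0.777, 0.170), (0.697, 0.249), (0.786, 0.247)],
    0.15: [(0.738, 0.167), (0.661, 0.243), (0.776, 0.249)],
    0.0: [(0.697, 0.177), (0.622, 0.247), (0.749, 0.250)],
}


def standardized_deviations(rows, truth=TRUE_GAMMA):
    """Return (PM - truth) / SD for every component at every phi_y."""
    return {
        phi_y: [(pm - truth) / sd for pm, sd in cells]
        for phi_y, cells in rows.items()
    }


z = standardized_deviations(TABLE_1)
for phi_y, values in z.items():
    print(phi_y, [round(v, 2) for v in values])
```

Every posterior mean lies within one posterior SD of 0.8, even at φy = 0.0. Without knowledge of the true γ, such a table therefore does not single out φy = 0.5.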

4. Real data example

We consider a small portion of a data set from a study of the relationship between acquired immune deficiency syndrome (AIDS) and the use of condoms (Morisky et al. (1998)). This subset contains 11 items, collected from 1116 female sex workers, on such topics as knowledge about AIDS and beliefs, behaviours, and attitudes towards condom use. Nine items, denoted by y = (y1, . . . , y9)T, were taken as responses. Items (y1, y2, y3) are related to a latent variable, η, which can be roughly interpreted as threat of AIDS, while items (y4, y5, y6) and (y7, y8, y9) are related to latent variables ξ1 and ξ2, respectively, which can be interpreted as aggressiveness of the sex worker and worry of contracting AIDS (Lee and Tang (2006)). All response variables were treated as continuous. A continuous item x1 on the duration as a sex worker and an ordered categorical item x2 on the knowledge about AIDS were taken as covariates. At least one response variable or covariate is missing for 361 of the participants (32.35%) (see Table 4 of Lee and Tang (2006)). The covariate x2 is completely observed.

Let yi = (yi1, . . . , yi9)T and . We considered the measurement and structural equations given as

where μ = (μ1, . . . , μ9)T and

in which 0.0* and 1.0* are regarded as fixed values to identify the scale of the latent factor. We took εi distributed as N(0, Ψ), where Ψ = diag(ψ1, . . . , ψ9), and and εi are independent. In the structural equation, Γ = (b1, b2, γ1, γ2) is a vector of unknown parameters, ξi = (ξi1, ξi2)T is distributed as N(0, Φ), δi is distributed as N(0, ψδ), and ξi and δi are independent.

We took the missing data as NMAR, and hence the missingness mechanism of the response variables is non-ignorable (Ibrahim and Molenberghs (2009)). Let ryij = 1 if yij is missing and ryij = 0 if yij is observed. For the missing data mechanism of the response variables, we took logit{pr(ryij = 1 | yi)} = φ0 + φ1yi1 + . . . + φ9yi9, where φ = (φ0, φ1, . . . , φ9)T. We also assumed that the covariate xi1 is NMAR. Let rxi1 = 1 if xi1 is missing and rxi1 = 0 if xi1 is observed. It was assumed that xi1 was distribution and logit{pr(rxi1 = 1 | φx)} = φx0 + ωxi1. When ω = 0, the missingness mechanism reduces to MAR.
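The role of ω in the covariate missingness model can be seen in a small Python sketch of logit{pr(rxi1 = 1)} = φx0 + ωxi1. The value φx0 = −1.0 and the covariate values are placeholders chosen for illustration, not estimates from the AIDS data, and the function names are hypothetical.

```python
import math


def expit(t):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-t))


def prob_x_missing(x_i1, omega, phi_x0=-1.0):
    """pr(r_xi1 = 1) under logit = phi_x0 + omega * x_i1.

    phi_x0 = -1.0 is a placeholder value, not taken from the paper.
    """
    return expit(phi_x0 + omega * x_i1)


for omega in (0.0, 0.5):
    probs = [round(prob_x_missing(x, omega), 3) for x in (-1.0, 0.0, 1.0)]
    print(omega, probs)
```

At ω = 0 the probability of missingness is the same for every value of xi1, so the mechanism does not depend on the possibly unobserved covariate. For ω ≠ 0 the probability changes with xi1, which is what makes the mechanism non-ignorable.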

We fitted the proposed structural equation models to the AIDS data set and used MCMC sampling to carry out the Bayesian influence analysis. We specified the prior distributions for μ, Λ, Ψ, Γ, ω, Φ, ψδ, φ, φx0, and τx as those in Lee and Tang (2006). A total of 40,000 MCMC samples were used to compute the intrinsic and local influence measures.

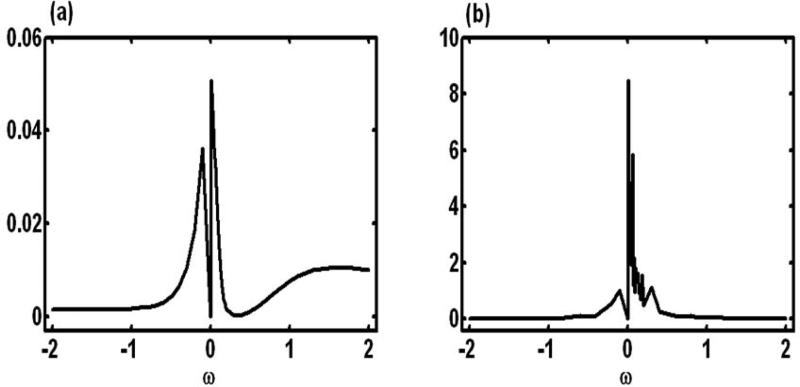

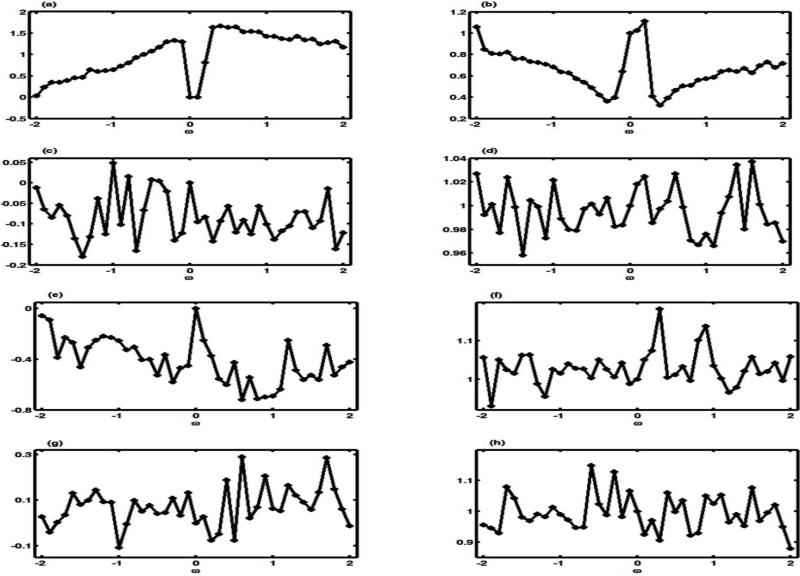

By varying ω in an interval [–2, 2], we can treat ω as a perturbation parameter to the sampling distribution. In this case, ω0 = 0 represents no perturbation. We calculated two intrinsic influence measures for the Kullback-Leibler divergence and the posterior mean distance, denoted by CMh(ω). Specifically, CMh(ω, ω0) = {Mh(ω) – Mh(ω0)}T Ch{Mh(ω) – Mh(ω0)}, where Mh(ω) = ∫ h(θ)p(θ | z, ω)dθ, in which h(θ) = Γ, and Ch is the posterior covariance matrix of Γ based on p(Γ |z, ω0). We calculated IGIRI(ω0, ω) at 41 evenly spaced grid points in [–2, 2] (Fig. 3). An inspection of Figure 3 shows that the largest IGIRI(ω0, ω) values are close to 0.1 for both the Kullback-Leibler divergence and Mh(ω). This indicates that the nonignorable missing data mechanism may be tenable for the AIDS data. We also carried out a standard sensitivity analysis and computed posterior means and standard deviations of Γ at different values of ω, as shown in Figure 4. Although the posterior means and standard deviations of Γ vary with ω, it is difficult to draw any meaningful inference from them alone.
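The posterior mean distance is a quadratic form in the difference of two posterior means, each of which can be approximated by averaging MCMC draws of h(θ). The Python sketch below is only an illustration: the draws are toy numbers, the function names are hypothetical, and the weight matrix is passed in by the caller, so whatever matrix plays the role of Ch in the formula can be supplied.

```python
def column_means(draws):
    """Monte Carlo estimate of M_h from a list of draws of h(theta)."""
    n = len(draws)
    dim = len(draws[0])
    return [sum(row[k] for row in draws) / n for k in range(dim)]


def quadratic_form(diff, weight):
    """Compute diff^T * weight * diff for a square weight matrix."""
    dim = len(diff)
    return sum(
        diff[a] * weight[a][b] * diff[b]
        for a in range(dim)
        for b in range(dim)
    )


def posterior_mean_distance(draws_omega, draws_omega0, weight):
    """Quadratic-form distance between posterior means at omega and omega0."""
    m_omega = column_means(draws_omega)
    m_omega0 = column_means(draws_omega0)
    diff = [a - b for a, b in zip(m_omega, m_omega0)]
    return quadratic_form(diff, weight)


# Toy two-dimensional draws, for illustration only.
draws_omega = [[1.0, 2.0], [3.0, 4.0]]    # posterior mean (2, 3)
draws_omega0 = [[0.0, 0.0], [2.0, 2.0]]   # posterior mean (1, 1)
identity = [[1.0, 0.0], [0.0, 1.0]]
print(posterior_mean_distance(draws_omega, draws_omega0, identity))
```

In the setting described above, the draws would be MCMC samples of Γ obtained at a grid value ω and at ω0 = 0, and the measure would be recomputed at each of the 41 grid points.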

Figure 3.

AIDS data analysis results: plots of IGIRI(ω0, ω) against ω ∈[–2, 2] for (a) ΦRI(ω) and (b) Mh(ω), in which h(θ) = Γ.

Figure 4.

AIDS data analysis results: plots of (posterior mean − posterior mean at ω = 0)/(posterior standard deviation at ω = 0) ((a),(c),(e),(g)) and of the ratio of the posterior standard deviation to the posterior standard deviation at ω = 0 ((b),(d),(f),(h)) for b1, b2, γ1, γ2 as functions of ω ∈ [–2, 2].

We also calculated the local influence measures of the Kullback-Leibler divergence under a simultaneous perturbation scheme. The simultaneous perturbation scheme ω includes variance perturbations ωc for individual observations, perturbations ωs to coefficients in the structural equation model, perturbations ωξ to the sampling distribution of ξi, perturbations ωμ to the prior distribution of μ, perturbations ωΓ to the prior distribution of Γ, perturbations ωφ to the prior distribution of φ, and perturbations ωx to the missing data mechanism. The corresponding kernel of the joint log-posterior density of (z, θ) based on the complete data is given by

| (4.1) |

where

In this case, represents no perturbation, in which , , and .

We calculated and then obtained its metric tensor as

where , , , , , , and . The diagonal elements of the metric tensor G(ω0) reveal that ωγ1, ωγ2, ωγ3, ωξ, and ωx have larger effects compared to other perturbations (see Fig. 5(a)). Then, we chose a new perturbation scheme and calculated the associated local influence measures SIΦIR[ej] for the Kullback-Leibler divergence. The local influence measures based on the ϕ-divergence are able to detect cases {14, 25, 28, 137, 175, 408, 985} as influential observations (see Fig. 5(b)), while ωγ1 and ωγ3 indicate that it may be important to include and ξi1ξi2 in the structural model (see Fig. 5(b)).

Figure 5.

AIDS data analysis results: index plots of (a) metric tensor gjj(ω0) and (b) local influence measures SIΦIR[ej] for simultaneous perturbation.

5. Discussion

We have developed a Bayesian sensitivity analysis method for assessing various perturbations to statistical models with missing data. We have developed a Bayesian perturbation manifold to characterize the intrinsic structure of the perturbation model and to quantify the degree of each perturbation in the perturbation model. We have developed global and local influence measures for selecting the most influential perturbation based on various objective functions and their statistical properties. Finally, we have examined a number of examples to highlight the broad spectrum of applications of this method for Bayesian influence analysis in missing data problems.

Many issues merit further research. Our Bayesian sensitivity analysis method can be extended to more complex data structures (e.g., survival data) and other parametric and semiparametric models with nonparametric priors. In further research, we will generalize our methodology to the setting of estimating equations and empirical likelihood of generalized estimating equations for missing data problems. We will develop Bayesian sensitivity analysis methods to deal with the well-known masking and swamping effects in the diagnostic literature.

Contributor Information

HONGTU ZHU, Department of Biostatistics, University of North Carolina at Chapel Hill, 3109 McGavran-Greenberg Hall, Campus Box 7420, Chapel Hill, North Carolina 27516, U.S.A. hzhu@bios.unc.edu.

JOSEPH G. IBRAHIM, Department of Biostatistics, University of North Carolina at Chapel Hill, 3109 McGavran-Greenberg Hall, Campus Box 7420, Chapel Hill, North Carolina 27516, U.S.A. ibrahim@bios.unc.edu

NIANSHENG TANG, Department of Statistics, Yunnan University, Kunming 650091, P. R. China nstang@ynu.edu.cn.

References

- Andridge RR, Little RJA. Proxy pattern-mixture analysis for survey nonresponse. Journal of Official Statistics. 2011;27:153–180.

- Berger JO. An overview of robust Bayesian analysis. Test. 1994;3:5–58.

- Chen MH, Shao QM, Ibrahim JG. Monte Carlo Methods in Bayesian Computation. Springer-Verlag; New York: 2000.

- Cook RD. Assessment of local influence (with discussion). J. Roy. Statist. Soc. Ser. B. 1986;48:133–169.

- Copas J, Eguchi S. Local model uncertainty and incomplete data bias (with discussion). J. Roy. Statist. Soc. Ser. B. 2005;67:459–512.

- Copas JB, Li HG. Inference for non-random samples (with discussion). J. Roy. Statist. Soc. Ser. B. 1997;59:55–96.

- Cox DR, Reid N. Parameter orthogonality and approximate conditional inference (with discussion). J. Roy. Statist. Soc. Ser. B. 1987;49:1–39.

- Daniels MJ, Hogan JW. Missing Data in Longitudinal Studies: Strategies for Bayesian Modeling and Sensitivity Analysis. Chapman and Hall; London: 2008.

- Dey DK, Ghosh SK, Lou KR. On local sensitivity measures in Bayesian analysis (with discussion). In: Berger JO, Betrò B, Moreno E, Pericchi LR, Ruggeri F, Salinetti G, Wasserman L, editors. Bayesian Robustness. IMS Lecture Notes-Monograph Series. 1996. pp. 21–39.

- Geisser S. Predictive Inference: An Introduction. Chapman and Hall; London: 1993.

- Gelfand AE, Dey DK, Chang H. Model determination using predictive distributions, with implementation via sampling-based methods (disc: P160-167). In: Bernardo JM, Berger JO, Dawid AP, Smith AFM, editors. Bayesian Statistics. Vol. 4. Oxford University Press; Oxford: 1992. pp. 147–159.

- Gustafson P. Local sensitivity of inferences to prior marginals. Journal of the American Statistical Association. 1996a;91:774–781.

- Gustafson P. Local sensitivity of posterior expectations. Annals of Statistics. 1996b;24:174–195.

- Gustafson P. On model expansion, model contraction, identifiability, and prior information: two illustrative scenarios involving mismeasured variables (with discussion). Statistical Science. 2006;20:111–140.

- Hens N, Aerts M, Molenberghs G, Thijs H, Verbeke G. Kernel weighted influence measures. Computational Statistics and Data Analysis. 2006;48:467–487.

- Ibrahim JG, Chen MH, Lipsitz SR, Herring A. Missing-data methods for generalized linear models: a comparative review. Journal of the American Statistical Association. 2005;100:332–346.

- Ibrahim JG, Molenberghs G. Missing data methods in longitudinal studies: a review. Test. 2009;18:1–43. doi: 10.1007/s11749-009-0138-x.

- Jansen I, Hens N, Molenberghs G, Aerts M, Verbeke G, Kenward MG. The nature of sensitivity in monotone missing not at random models. Computational Statistics and Data Analysis. 2006;50:830–858.

- Jansen I, Molenberghs G, Aerts M, Thijs H, Van Steen K. A local influence approach to binary data from a psychiatric study. Biometrics. 2003;59:410–419. doi: 10.1111/1541-0420.00048.

- Kass RE, Raftery AE. Bayes factors. Journal of the American Statistical Association. 1995;90:773–795.

- Kass RE, Tierney L, Kadane JB. Approximate methods for assessing influence and sensitivity in Bayesian analysis. Biometrika. 1989;76:663–674.

- Lang S. Differential and Riemannian Manifolds. 3rd ed. Springer-Verlag; New York: 1995.

- Lavine M. Sensitivity in Bayesian statistics: the prior and the likelihood. Journal of the American Statistical Association. 1991;86:396–399.

- Lee SY, Tang NS. Analysis of nonlinear structural equation models with nonignorable missing covariates and ordered categorical data. Statistica Sinica. 2006;16:1117–1141.

- Little RJA, Rubin DB. Statistical Analysis With Missing Data. Wiley; New York: 2002.

- Little RJA. A class of pattern-mixture models for normal incomplete data. Biometrika. 1994;81:471–483.

- Millar RB, Stewart WS. Assessment of locally influential observations in Bayesian models. Bayesian Analysis. 2007;2:365–384.

- Molenberghs G, Kenward MG. Missing Data in Clinical Studies. Wiley; New York: 2007.

- Morisky DE, Tiglao TV, Sneed CD, Tempongko SB, Baltazar JC, Detels R, Stein JA. The effects of establishment practices, knowledge and attitudes on condom use among Filipina sex workers. AIDS Care. 1998;10:213–320. doi: 10.1080/09540129850124460.

- Shi XY, Zhu HT, Ibrahim JG. Local influence for generalized linear models with missing covariates. Biometrics. 2009;65:1164–1174. doi: 10.1111/j.1541-0420.2008.01179.x.

- Troxel AB. A comparative analysis of quality of life data from a Southwest Oncology Group randomized trial of advanced colorectal cancer. Statistics in Medicine. 1998;17:767–779. doi: 10.1002/(sici)1097-0258(19980315/15)17:5/7<767::aid-sim820>3.0.co;2-b.

- Troxel AB, Ma G, Heitjan DF. An index of local sensitivity to nonignorability. Statistica Sinica. 2004;14:1221–1237.

- van der Linde A. Local influence on posterior distributions under multiplicative modes of perturbation. Bayesian Analysis. 2007;2:319–332.

- van Steen K, Molenberghs G, Thijs H. A local influence approach to sensitivity analysis of incomplete longitudinal ordinal data. Statistical Modelling: An International Journal. 2001;1:125–142.

- Verbeke G, Molenberghs G, Thijs H, Lesaffre E, Kenward MG. Sensitivity analysis for non-random dropout: a local influence approach. Biometrics. 2001;57:43–50. doi: 10.1111/j.0006-341x.2001.00007.x.

- Zhu HT, Ibrahim JG, Lee SY, Zhang HP. Perturbation selection and influence measures in local influence analysis. Annals of Statistics. 2007;35:2565–2588.

- Zhu HT, Ibrahim JG, Tang NS. Bayesian local influence analysis: a geometric approach. Biometrika. 2011;98:307–323. doi: 10.1093/biomet/asr009.

- Zhu HT, Lee SY. Local influence for incomplete-data models. J. Roy. Statist. Soc. Ser. B. 2001;63(1):111–126.