Abstract

Objective

To describe the activities performed by people involved in clinical decision support (CDS) at leading sites.

Materials and methods

We conducted ethnographic observations at seven diverse sites with a history of excellence in CDS using the Rapid Assessment Process and analyzed the data using a series of card sorts, informed by Linstone's Multiple Perspectives Model.

Results

We identified 18 activities and grouped them into four areas. Area 1: Fostering relationships across the organization, with activities (a) training and support, (b) visibility/presence on the floor, (c) liaising between people, (d) administration and leadership, (e) project management, (f) cheerleading/buy-in/sponsorship, and (g) preparing for CDS implementation. Area 2: Assembling the system, with activities (a) providing technical support, (b) CDS content development, (c) purchasing products from vendors, (d) knowledge management, and (e) system integration. Area 3: Using CDS to achieve the organization's goals, with activities (a) reporting, (b) requirements-gathering/specifications, (c) monitoring CDS, (d) linking CDS to goals, and (e) managing data. Area 4: Participation in external policy and standards activities (this area consists of a single activity). We also identified a set of recommended practices associated with these 18 activities.

Discussion

All 18 activities we identified were performed at all sites, although the way they were organized into roles differed substantially. We consider these activities critical to the success of a CDS program.

Conclusions

Sites strong in CDS perform a common set of activities, and sites adopting CDS should ensure that they incorporate these activities into their efforts.

Keywords: clinical decision support, knowledge management, governance, implementation, rapid assessment process

Introduction

Health information technology (HIT), including electronic health records (EHRs), computerized provider order entry (CPOE) systems, and, in particular, clinical decision support (CDS) systems, has been shown to improve healthcare quality, safety, and effectiveness.1–6 Recent federal legislation and regulations have been designed to encourage and guide adoption of HIT.7–9 However, barriers to effective adoption of HIT, and of CDS in particular, persist, including dissatisfaction among providers,10 shortages of trained HIT workers,11 high costs,12 and limited research identifying the components necessary for successful HIT implementation.2 5 6 13

The Provider Order Entry Team (POET) is a multidisciplinary research team composed of physicians, medical informaticians, pharmacists, and medical anthropologists based at Oregon Health & Science University in Portland, Oregon, USA. For the past few years, POET has focused its ethnographic research on understanding problems related to CDS implementation in community hospitals and ambulatory clinics throughout the USA. In previously reported work, the team identified 10 key ‘themes’ for successful implementation of CDS: (1) workflow; (2) knowledge management14; (3) data as a foundation for CDS15; (4) user–computer interaction; (5) measurement and metrics; (6) governance16; (7) translation for collaboration; (8) the meaning of CDS17; (9) roles of special, essential people; and (10) communication, training, and support.18

The focus of this paper is the ninth theme: the roles and activities of the people involved in designing, implementing, maintaining, and evaluating CDS. A similar theme was previously explored by the POET team during a study of CPOE implementation,19 but CDS-related activities differ from those involved in CPOE implementation. In this study, we describe, in detail, the activities of these people in the creation and maintenance of a robust CDS program. Because the job titles of these ‘CDS people’ vary substantially, we focused our study on the activities they perform and their responsibilities rather than their specific job titles.

Methods

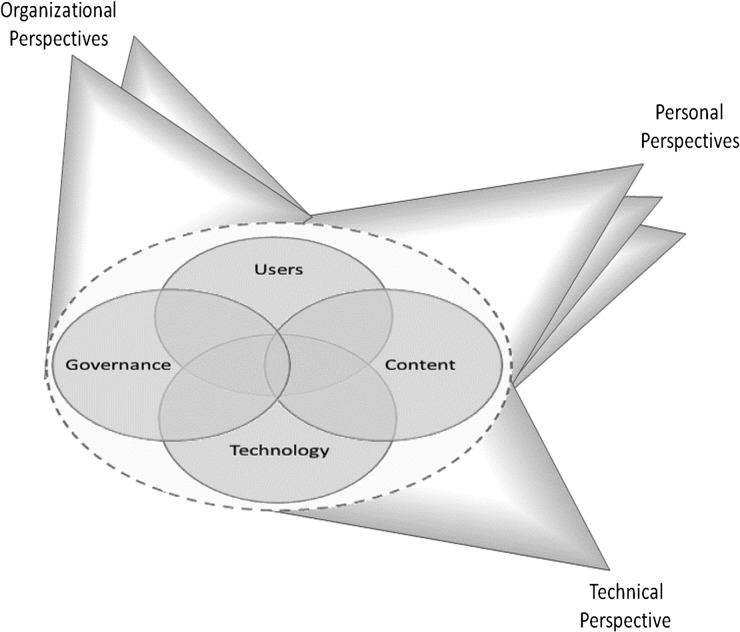

In previous papers, we described the theoretical underpinnings of our perspective on CDS,19 20 which are informed by Linstone's Multiple Perspectives Model (figure 1).21 This framework informed subject selection and data analysis for our study. In brief, we incorporated the perspectives of clinical end users, CDS developers, and administrators, each of whom understands and views CDS differently.

Figure 1.

Multiple Perspectives Model. Figure from Recommended practices for computerized clinical decision support and knowledge management in community settings: a qualitative study. BMC Medical Informatics and Decision Making; republished here with permission of the author.18

This study was conducted using a qualitative dataset collected from 2007 to 2009. Detailed descriptions of our study design, sample selection, and data collection methods have been published elsewhere,18 22 and are outlined here. The study was approved by the institutional review boards (IRBs) at Oregon Health & Science University (OHSU), Portland, Oregon, USA; Brigham and Women's Hospital, Boston, Massachusetts, USA; the University of Texas Health Science Center at Houston, Houston, Texas, USA; Providence Portland Medical Center, Portland, Oregon, USA; El Camino Hospital, Mountain View, California, USA; Wishard Memorial Hospital/Regenstrief Institute, Indianapolis, Indiana, USA; Roudebush Veterans Affairs Hospital, Indianapolis, Indiana, USA; and the University of Medicine and Dentistry of New Jersey, New Brunswick, New Jersey, USA. The Mid-Valley Independent Physicians Association did not have an IRB, so it relied on the OHSU IRB.

Selection of sites

We began our study by selecting a sample of diverse sites with a reputation for excellence, based on their history of publishing or presenting the results of their CDS research. Although we believed that sites that had not yet embarked on a CDS program, or that had a history of CDS failures, would also offer important lessons, we felt that sites with a history of excellence would have the most to teach us about successful CDS implementation. Although all sites in our study had a history of excellence, we intentionally selected sites that varied in maturity of information system use,23 type of system, and organizational structure. Attributes of the chosen sites are outlined in table 1. The two community hospitals represent different histories of CDS use: one has operated its system for over 40 years, the other for 2 years, and they use different commercial systems. The ambulatory sites operate two different commercial systems and three locally developed systems. Each site employs, at minimum, drug-interaction checking, drug-allergy checking, preventive care reminders, order sets, documentation templates, and referential CDS tools. Apart from their shared excellence in CDS, the study sites are diverse and represent a wide range of healthcare organizations.

Table 1.

Attributes of study sites

| Attributes | Providence Portland Medical Center | El Camino Hospital | Partners HealthCare | Wishard Memorial Hospital Clinics | Roudebush Veterans Health Administration | Mid-Valley IPA | RWJ Medical Group |
|---|---|---|---|---|---|---|---|
| Location | Portland, Oregon, USA | Mountain View, California, USA | Boston, Massachusetts, USA | Indianapolis, Indiana, USA | Indianapolis, Indiana, USA | Salem, Oregon, USA | New Brunswick, New Jersey, USA |
| Type of setting | Community hospital | Community hospital | Academic and community outpatient | Academic and county clinics | VA outpatient clinics | Community outpatient | Academic outpatient |
| Type of system | Commercial | Commercial | Locally developed and commercial | Locally developed | Nationally developed | Commercial | Commercial |
| Date of visit | December 2007 | February 2008 | June 2008 | September 2008 | September 2008 | December 2008 | February 2009 |

This table is reproduced with permission from the author.18

IPA, Independent Physicians Association; RWJ, Robert Wood Johnson; VA, Veterans Affairs.

Selection of subjects within sites

Following the Multiple Perspectives Model, we interviewed individuals representing a broad spectrum of people at each site performing a variety of roles in CDS development, management, implementation, training, support, and usage. Additional subjects were identified through recommendations by interviewees and local sponsors.

Data collection methods

We adapted the rapid assessment process (RAP) to collect data appropriately and accurately from the study sites.24 Using a multidisciplinary research team and following the RAP method, we carried out intensive site visits and collected data through interviews, observations, and field surveys in a relatively short period of time. Completing pre-visit ‘site profiles’ and viewing system demonstrations allowed us to design interview questions that incorporated the language and philosophy of each site. These data collection methods were designed to encourage subjects and field investigators to view the CDS system through three lenses: technical, organizational, and personal.

All interviews were recorded and transcribed, and the investigators also took detailed field notes. Both the transcripts and the field notes were entered into the NVivo system (Burlington, Massachusetts, USA) for qualitative data analysis.13

Data analysis

All interview transcripts and field notes were reviewed and coded by the team of anthropologists. The anthropologists initially used the set of 10 themes from our original paper on CPOE,19 coding relevant passages of each document according to these 10 themes. Over the course of the coding process, the definitions of the themes were revised, new themes were added, and others were combined or removed in consultation with the entire study team. Once the coding scheme of 10 CDS-related themes was finalized, all documents were reviewed by two team members trained in anthropology (AB and JW) and passages were coded with the final coding scheme. The results of this analysis have been reported previously.18

For this manuscript, we conducted a more detailed analysis of all passages that had been coded under the ‘people’ theme. We extracted these passages and presented them to a team of six clinical informatics experts. These experts worked in pairs, with each pair analyzing data from two sites and identifying granular activities. The team then conducted a series of pile sorts to identify a set of essential activities. Pile sorting is a standard method for grouping concepts and identifying themes, used in both cognitive research and social science.25 The team took all of the activities identified by the pairs, which showed a high degree of overlap and duplication, and iteratively grouped and split them until consensus was achieved.

Although pile sorting is inherently subjective, we employed several techniques to ensure rigor. First, a multidisciplinary group did the sorting, ensuring that a variety of perspectives were included. Second, we conducted the sorting over several days, allowing each team member to reflect on the evolving piles and to discuss opportunities for splitting or grouping activities. Finally, we continued until consensus was reached and all team members were satisfied with the final piles. Although there is no guarantee that a similar team would arrive at an identical set of piles, we believe our process was rigorous and captured the underlying activities accurately. After the pile sort was complete, we grouped the activities into overarching areas of function.

Results

After the first round of analysis within the people theme, we identified 219 activities described by our informants. After several rounds of pile sorting, we consolidated these into 18 activities, which are described in this section. To facilitate discussion, we further grouped these 18 activities into four areas of function. Below, we describe patterns seen across all the study sites relating to the role of people in CDS. Boxes 1 and 2 give one or two illustrative quotes from our interviews that shed light on each activity, while boxes 3 and 4 present the recommended practices we identified during our site visits.

Box 1. Illustrative informant quotes for theme areas 1 and 2.

1. Fostering relationships across the organization

a) Training and support: “Well, we follow a train-the-trainer model, so what we do with each [clinical] practice is we train a core group of super-users and their administrative champion project manager, whoever that is, on how to use the system, and then we ask them to train everybody else.”—Medical Director

b) Visibility/presence on the floor: “We had experts who actually would just make rounds with the physicians … and tried to do a lot of one-on-one training.” An EHR education and support manager explained the need for individuals who could troubleshoot problems and answer questions in real-time: “If a nurse needs help, she needs it now; and probably, if she has to go through the help desk, she'll suffer and not get [the] help [she needs]…One of the first things I did was decentralize those [help desk] nurses and get them at the sites so that they could be rounding throughout the day and interacting with people.”—Vice President of Patient Care Services

c) Liaising between people: “Dr [X] is kind of the cross pollinator. He attends as many of the development team meetings, clinical program guidance council meetings as he can…he kind of sees what's happening across the system and is able to help cross pollinate.” A healthcare services account manager also expressed the need for individuals who serve to bridge the gap between groups: “You need both analytical experience and that [real-world] nursing experience to make this successful. You need more than just [information system] analysts and nurses…you really need somebody that embodies both sides.”—Chief Medical Officer

d) Administration and leadership: “Our chief nursing officer, who works very closely with me, is a very strong advocate of all these things we're doing...I have to believe there's lots of [people like her]...who will grasp, and grab onto this kind of an approach, and be a catalyst to help in their own area and to develop it.”—Chief Medical Officer

e) Project management: “One of their jobs is sort of the traffic control job, to make sure that all of the people that are doing content development and order set development are working at an appropriate pace, and are working on the things that the hospital wants them to work on.”—Chief Medical Informatics Officer

f) Cheerleading/buy-in/sponsorship: “Early on, we tried to get as many champions as we could. And we wanted those key people who were positive and enthusiastic. That was one key to our success: having the right players.”—Health Systems Account Manager

g) Preparing for CDS implementation: “She came on, and she just started what I call this concierge service, of literally cold calling the doctors and saying, ‘We're coming. I'd like to sit with you, and help you build your order sets.’ And that was really the beginning of success, when she just literally reached out directly to people, and knocked on doors, and started building what they felt they wanted.”—Chief Information Officer

2. Assembling the system

a) Providing technical support: “So no matter what hospital you're in, across the whole system you can dial 3-4-5-6 and you can get any kind of computer support 24 hours a day, 7 days a week, 365 days a year, which I think was a really important step. Because until then, we had people carrying pagers for this application, that application, and you never knew what kind of help you were going to get on the other end of the phone. And sometimes people just had to put up with stuff until daybreak and somebody came on to work. And now if the person on the end of the phone can't help you, then they have someone who is on-call, who can fix the problem, and that helps a lot with I think the frustration with the clinicians.”—Clinical Informatics Specialist

b) CDS content development: “Ideally, having [an] informatics person, and a data management person, and getting some of the technical people in that process can make for a guideline that not only could be written on paper and used by someone who is just going to follow a guideline manually but could have some potential of going into the system. I think there are a lot of little subtleties in creating the knowledge that drives our decision support. Creating all the rules in the system [is] better done in the hands of someone who understands some of the technical side and not just the clinical side.”—Chief Software Architect

c) Purchasing products from vendors: “If you're going to beat up [the vendor] over contracting every single time, they're going to dig in their heels on contracting every single time. And you're going to end up with a not-so-good relationship. I really made a big push to have a good working relationship with our vendor and it's paid off in spades.”—Chief Medical Information Officer // It is also important to interact collaboratively with vendors to build and customize systems for the organization: “I basically work with [the vendor] around the architecture of the new system and how we want to build [our] system, and the requirements and goals, and setting up that kind of information about the system.”—Chief Medical Information Officer

d) Knowledge management: “You'd want some infrastructure to make sure that content is being reviewed regularly. That someone is waking up in the morning worrying about bringing that pool of people together...It's really having people dedicated to managing and I think reviewing and making sure that the content is being regularly turned over and questioned. ‘Is this still standard care?’...There have been other people that have contributed parts but they're not programmers, they're doctors who understand the technical side enough to put it into kind of a high-level language that the system can understand, and that's driven most of our decision support content. And so putting new decision support into the system usually doesn't take programmer time.”—Chief Software Architect

e) System integration: “Part of the issue around decision support...is when you have multiple disparate systems with different data models and you go to build decision support, you are in for a world of pain. And I've been there, done that, got the scars.”—Chief Information Officer

CDS, clinical decision support; EHR, electronic health record.

Box 2. Illustrative informant quotes for theme areas 3 and 4.

3. Using CDS to achieve the organization's goals

a) Reporting: “A recent [query] I did was [to identify] patients that are less than 18 [years old] and have a BMI [body mass index] between [the] 85th and 95th [percentile]. Our EHR doesn't let you pull that stuff. We have some very basic aggregate reporting in our EHR, but if they want specific things, I'll pull those.”—Clinical Super-user // Data need to be translated into an understandable form so that change can be tracked: “I was working with quality data and clinical abstracting professionals to be able to try and pull these data bits and pieces altogether in one usable format, and put it in a program that people can actually use and drill into to see how their patients are getting better, or not getting better, or how they're performing.”—Clinical Analyst

b) Requirements-gathering/specifications: “And then after I get the end-users to buy in to using the computer system, I act as their liaison back to the programmers to help close the loop—taking their needs, their requests back to the programmers so that we can make the system better.”—Application Specialist

c) Monitoring CDS: “We have monitor[ing] programs, 24 hours a day, every day: patients who are on the wrong antibiotics, patients who should be on antibiotics, patients who are on antibiotics too long who need a proper antibiotic change, a patient who probably has an adverse drug event, patients whose dose of antibiotic is too high. Anything we can think of that might indicate there's an error out there or some type of a problem, we will monitor that.”—Senior Medical Informatician

d) Linking CDS to goals: “So I also look at all the requests that the doctors come up with about things that they'd like to see the system be able to do. But the grander objective is to help improve the quality and efficiency of care in our service area.”—Medical Director // “I'm actually hiring a person, who I'm calling manager of informatics, that's going to have really sort of operational, working-type experience...and that person is going to suggest interventions that could potentially get them to their performance improvement goals. So to the degree that that person thinks that an order set or an alert or some sort of relevant display or something like that would be pertinent to achieving those performance improvement goals, they would get worked into that project as part of multi-faceted approach that they would use in order to improve.”—Chief Medical Information Officer

e) Managing data: “We do decision support but we do more of what I think of as population decision support. So we do data warehousing, quality reporting, disease registries, [and] population management… so we'll, for example, look at EMR reminders and look at the logic, or it could just be disease state definitions, it could be actual reminder rules, and we'll implement them against the data warehouse for populations, and create reports or various workflow tools that way.”—Corporate Manager for Decision Support

4. Participation in external policy and standards activities

a) “So as things like meaningful use come in, we kind of sit and discuss, should we be building some new type of decision support or beefing up some old types.” A senior medical informatician related: “I currently have three CDC grants and an Agency for Healthcare Research and Quality grant we're working on...A lot of that is to prevent VTEs (venous thromboembolisms), which includes DVTs (deep venous thrombosis) and PEs (pulmonary embolisms). Of course we're still working on adverse drug events, hospital-acquired-infections has come around again, we're identifying patients that are on urinary catheters too long, we're trying to get those [catheters] removed.”—Principal Informatician

CDC, Centers for Disease Control and Prevention; CDS, clinical decision support; EHR, electronic health record; EMR, electronic medical record.

Box 3. Themes, activities, and recommended practices for theme areas 1 and 2.

1. Fostering relationships across the organization

Training and support:

When a new CDS intervention is created, develop a training curriculum and communication package for the intervention. This package can range from a paragraph-long email to a half-day course, depending on the complexity of the intervention.

Provide training and communication to staff both before and after bringing a new CDS intervention live—don't just silently turn on an intervention and hope it will work.

Deal with post-implementation problems with CDS quickly. Problems with new CDS interventions are common, so it is essential to identify and correct these errors rapidly and to communicate the fixes to clinicians. Clinicians are impressively forgiving if they understand why an error occurred and can see that it was taken seriously, fixed quickly, and that steps were taken to prevent recurrence.

Visibility/presence on the floor:

Place knowledgeable experts on the floor when a new CDS intervention is deployed to answer questions, train users, and gather feedback. It is often helpful to make these experts visible by giving them a special vest, hat, button, or shirt.

Have information technology and quality leaders make regular “walk rounds” to build relationships with end users and hear their concerns and suggestions. Make sure to act on these suggestions, and provide follow-up to those who made the suggestions.

Liaising between people:

Develop staff who can speak both clinical and technical language and act as a liaison between these two groups. Some of the best liaisons we encountered were clinicians with informatics training who understood the realities of the clinical and technical worlds.

These liaison staff should focus much of their effort on building relationships in the organization, and are ideal to provide visibility and presence on the floor.

Administration and leadership:

Involve senior clinical leaders in the development of your CDS strategy. These leaders should ensure that CDS is aligned with broader organizational goals and objectives, and also identify opportunities to use CDS in service of broader organizational purposes.

When possible, have these leaders send communications about new CDS to show the importance of these new interventions to the clinical goals of the organization.

Project management:

Each new CDS initiative should have a project manager assigned to track its goals and drive it to completion.

The project manager should work with the liaison staff from theme 1.c to convene both clinical and technical experts for the project.

Cheerleading/buy-in/sponsorship:

Foster enthusiasm for new CDS interventions among users and leaders through regular, concise, to-the-point communications.

When possible, find ways to involve users before implementation of new CDS, for example, as β testers and usability study subjects.

Preparing for CDS implementation:

Before any implementation, assess the current state of the EHR and clinical process, and then deliberately map out changes that are needed with input from end users.

Test new CDS interventions extensively before going live.

Focus on user needs and on-site support in the period immediately after going live.

2. Assembling the system

Providing technical support:

Train technical personnel to quickly escalate CDS issues requiring clinical evaluation and input.

Permit the use of back channels, such as emailing the chief medical information officer, so that users feel that they have a place to turn when they have a problem with CDS. But make sure to track such communications carefully and refer users back to traditional channels, such as a help desk, when their problem is more appropriately handled there.

Quickly correct problems with CDS content that affect patient care.

CDS content development:

Ensure that newly developed content is consistent with guidelines, evidence, and best practices, and that it matches your organization's goals and procedures.

Develop a process for prioritizing development of new content, both to ensure that limited resources are effectively deployed and also to avoid building content that is likely to be bothersome or unlikely to be used.

Consider purchasing content that you can't develop or maintain effectively in-house.

Purchasing products from vendors:

Develop strong working relationships with vendors who have an impact on CDS, particularly your EHR vendor and any clinical content vendors you use. These relationships are strategically important and shouldn't just be driven by price.

Be the voice of your users—take their feedback to your vendor and communicate the vendor's response back to the users. Even if a problem can't be resolved immediately, communication helps.

Knowledge management:

Create an inventory of all CDS content in your organization, and a process for maintaining it regularly and in response to changes in guidelines or evidence.

If you have a sufficiently large body of CDS content, consider investing in tools for managing it beyond just a spreadsheet.

System integration:

Where possible, work to create a seamless flow of information into your CDS systems.

Never ask a user to re-enter information needed for CDS that is already (or should be) known to an electronic system.

CDS, clinical decision support; EHR, electronic health record.

Box 4. Themes, activities, and recommended practices for theme areas 3 and 4.

3. Using CDS to achieve the organization's goals

Reporting:

Develop a comprehensive strategy for quality measurement and reporting, and use these measures and reports to identify opportunities for new or improved CDS.

Where needed, augment structured data in the EHR with manual abstraction to identify key clinical events and improve the accuracy of measures.

Requirements-gathering/specifications:

Begin all CDS requirements-gathering exercises with a series of conversations with all end users who will be affected by the CDS change. Give their input extra weight.

Appreciate that most CDS development is highly iterative, so consider any specifications to be evolving and pivot as needed.

Monitoring CDS:

Develop metrics and reports about how well CDS is functioning, and monitor them both immediately after going live and on an ongoing basis. Consider implementing a proactive notification system to alert you if a CDS intervention exhibits anomalous performance (eg, significantly increased or decreased firing rate) so that you can investigate.

Consider reporting CDS-related metrics to clinical committees and your organization's board to help them understand how CDS fits into the organization's broader quality and safety agenda.

Linking CDS to goals:

Consider CDS a key tool for achieving organizational quality, safety, and cost goals, and use it deliberately to drive change.

Conversely, remember that CDS is only one tool in your toolbox—also consider quality reports, registries, care management programs, training, forcing functions, and other potential tools.

Foster communication between quality and informatics professionals in your organization.

Managing data:

Track and, where possible, integrate data across sources in your organization to build a complete picture of your CDS program's performance.

If your organization has multiple sites (or is part of a consortium), consider benchmarking your CDS activities across sites to identify best practices and areas for improvement.

4. Participation in external policy and standards activities

Carefully monitor external regulatory programs and policies, as well as the development of technical and clinical standards. Enlist the help of your vendors and professional associations in monitoring these activities.

Consider adding your voice to discussions on these activities—new policies and standards are sometimes developed without significant input from “on-the-ground” users, and clinical perspectives generally carry significant weight.

CDS, clinical decision support; EHR, electronic health record.

Fostering relationships across the organization

Training and support

Training and support are necessary to ensure proper implementation and continued use of CDS. This activity includes educating staff; training physicians, nurses, and pharmacists; and fixing post-implementation system errors. Although all sites had training and support programs covering general use of their clinical information systems, the sites also emphasized the importance of training end users specifically on CDS, and of notifying them when a new CDS intervention would be put into place, so that they would understand the rationale, significance, and appropriate responses to the intervention.

Visibility/presence on the floor

In parallel with ‘training and support’, the sites also emphasized the importance of having individuals who are knowledgeable about CDS present on clinical units to answer questions, provide support, and gather feedback. Several sites had made improvements to their CDS tools based on this ‘on the floor’ feedback, identifying, for example, clinical situations where an alert fired inappropriately, or a reminder's wording that clinicians found unclear.

Liaising between people

All the sites identified challenges in communication between clinical users and technical staff, and each had at least one person in a liaison or bridging role who spoke both ‘languages’, generally a clinician cross-trained in informatics or information technology (IT). These individuals were highly valued by their organizations, and both technical and clinical constituencies considered them essential to the success of their CDS programs.

Administration and leadership

In addition to these liaison staff, who often operate at an individual contributor or middle management level, each site emphasized the importance of people in high-level positions who are ‘champions’ for decision support, are knowledgeable about clinical and socio-technical aspects of the system,20 and oversee quality. Common titles included chief medical officers, chief nursing officers, chief quality officers, and chief executive officers. Although these individuals did not always have technical expertise, their opinion about safety, quality, and clinical issues was highly respected and valued in their organization, so they were effective at communicating the importance of related CDS interventions to end users.

Project management

All sites had project managers who oversaw CDS projects. Generally, a project manager would track a CDS project from request through implementation. Project managers often interacted with, but were generally distinct from, the ‘liaison staff’ in theme 1.c, with the liaison staff focusing on translation and the project managers focusing on coordination.

Cheerleading/buy-in/sponsorship

Because CDS projects often involve a variety of groups in a health system, all sites identified the need to ‘cheerlead’ for CDS projects and get buy-in and sponsorship across the organization. For example, new CDS interventions would often require subject matter expertise from pharmacists, physicians, and nurses while affecting the workflow of one or two of these groups. People involved in CDS would attend meetings, make presentations, cultivate relationships, and even call in favors to align all these groups behind a new CDS initiative.

Preparing for CDS implementation

Each site emphasized the importance of early planning of new CDS interventions, often beginning 6 months to a year before going live. During this planning phase, CDS practitioners worked to understand the functionality of the system before implementation, the needs of end users, and the various approaches that might be taken to implement the CDS intervention. Key to this phase was a focus on user needs and support before and during the early stages of implementation.

Assembling the system

Providing technical support

Fixing technical errors in the system is an ongoing activity for all clinical IT departments; however, our sites reported that it was particularly challenging for CDS. CDS support issues are often difficult for IT departments and technical help desks to resolve because they frequently involve nuanced clinical issues. Most sites reported that they quickly route CDS-related concerns to personnel with clinical knowledge, and many users reported using ‘back channels’, such as emailing a chief medical information officer directly, when they perceived a problem with a CDS intervention.

CDS content development

One of the most resource-intensive activities reported by our sites was developing CDS content, such as rules, order sets, and templates. Most sites reported purchasing some of their content, with all sites using a commercial drug database and (potentially customized) commercial drug-interaction alerts. Many sites also purchased order sets,26 and some sites purchased documentation tools.

Purchasing products from vendors

Among sites with commercial EHRs, most reported that their selection of an EHR system was the decision that had the greatest impact on CDS. Most sites reported that price, compatibility with existing systems, and end-user feedback on usability and workflow were the main drivers of their system selection, with CDS a consideration, but not the driving factor. After system selection, many sites either worked with their EHR vendor or engaged with clinical content vendors to acquire additional CDS capabilities. Even sites that developed their own system still reported important relationships with vendors, especially for medication-related and referential CDS content. Site leaders all emphasized the importance of maintaining positive relationships with vendors.

Knowledge management

Knowledge management activities were challenging for all sites in our sample, with each site tracking clinical guidelines and evidence, quality measures, and regulatory requirements to identify new areas for CDS or areas where CDS content needed to be updated. Many, but not all, sites had regular processes to review their content, though often only every several years (and some sites had backlogs). Some sites reported substantial knowledge management infrastructure (such as tracking systems, version management tools, and online collaboration environments), while others had no tools, or relied on spreadsheets to track content. We explored this theme in detail (with a different sample) in a previous manuscript.27

System integration

Because CDS interventions often depend on data from a variety of sources, CDS practitioners found themselves regularly involved in system integration activities, requiring an understanding of data models, computer interfaces, and controlled clinical vocabularies, as well as the skills to navigate the front and back end of database structures.

Using CDS to achieve the organization's goals

Reporting

All sites regularly generated clinical performance reports. These reports were used by leaders and healthcare providers to monitor performance and identify areas for improvement, which were often addressed through new CDS interventions. Most, but not all, sites also used clinical performance reports to assess the effectiveness of CDS interventions.

Requirements-gathering/specifications

CDS practitioners (often the liaison staff from theme 1.c) defined requirements for new CDS interventions (often based on the results of reporting) and then developed specifications that were used to implement CDS. The specifications differed in their level of formality—most were short written documents, but some were formal documents including detailed logic specifications. All sites reported that they generally went through several iterations of development on a new CDS intervention before deployment.

Monitoring CDS

In addition to the clinical reports described in theme 3.a, each site had at least a rudimentary ability to monitor its CDS by generating reports (for example, of firing and acceptance rates for alerts or of order set usage). Most of the sites, however, reported reviewing such reports only on an ad hoc basis, generally right after implementation of a new CDS intervention or in response to user complaints.
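To make the firing and acceptance rates mentioned above concrete, the following is a minimal sketch of how such a report could be computed from an alert log. It assumes a hypothetical log in which each record carries an alert identifier and a flag indicating whether the clinician accepted the alert; the `AlertEvent` schema, the function names, and the review thresholds are illustrative assumptions, not taken from any site we studied or from any EHR vendor's reporting tools.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertEvent:
    """One firing of a CDS alert (hypothetical log schema)."""
    alert_id: str
    accepted: bool  # True if the clinician acted on the alert


def summarize_alerts(events):
    """Return {alert_id: (firings, acceptance_rate)} for every alert in the log."""
    firings = defaultdict(int)
    acceptances = defaultdict(int)
    for event in events:
        firings[event.alert_id] += 1
        if event.accepted:
            acceptances[event.alert_id] += 1
    return {
        alert_id: (count, acceptances[alert_id] / count)
        for alert_id, count in firings.items()
    }


def flag_for_review(summary, min_firings=20, max_acceptance=0.1):
    """List alerts that fire often but are rarely accepted.

    The thresholds are illustrative assumptions; a real program would set
    them according to its own clinical priorities.
    """
    return sorted(
        alert_id
        for alert_id, (count, rate) in summary.items()
        if count >= min_firings and rate <= max_acceptance
    )


if __name__ == "__main__":
    # Hypothetical data: one frequently overridden alert, one well-accepted reminder.
    log = (
        [AlertEvent("ddi-warfarin-nsaid", False)] * 25
        + [AlertEvent("ddi-warfarin-nsaid", True)]
        + [AlertEvent("flu-vaccine-reminder", True)] * 10
    )
    report = summarize_alerts(log)
    print(report)
    print(flag_for_review(report))
```

Running a report like this on a fixed schedule, rather than only after go-live or in response to complaints, is one way a program might move from the ad hoc monitoring most sites described toward routine review.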

Linking CDS to goals

All sites reported that they used CDS as a key tool in their arsenal for achieving their organizational quality, safety and cost goals. Thus, they all worked to ensure alignment between their CDS interventions, their organization's strategic goals and other quality improvement activities, including training and quality measurement.

Managing data

CDS practitioners all reported the importance of managing large amounts of data, including underlying EHR data, data on performance of CDS, and external data, including error reports, cost data, and clinical data from external sources.

Participation in external policy and standards activities

The final theme we identified was a singleton: participation in external policy (eg, meaningful-use or policy advisory committees) and standards (eg, HL7) activities. All sites reported at least passive involvement, such as participation in webinars or ensuring their organization was on track to meet meaningful-use targets. However, some informants participated more actively by sitting on committees, providing input to standards, or conducting grant-funded research. These activities united the individual (and sometimes solitary) activities performed at each site, creating a sense of community and common purpose for CDS practitioners.

Discussion

A key finding of our work is that each of the 18 categories of activities we identified was performed at every site we visited, although the extent to which they were performed varied significantly. We consider each of these activities essential for any successful CDS program. However, we also observed important differences in how the activities were organized and prioritized at the sites in our study. In particular, we found that the way the activities were organized into roles and assigned to people or teams differed widely. For example, some smaller sites had only one person conducting all 18 activities, while other sites had entire departments dedicated to one or more of those activities. Thus, we reported our results in terms of discrete activities, rather than in terms of individuals or job titles. We also noted that some of the sites outsourced functions (eg, knowledge management or system integration), often with success. However, many of the activities (particularly visibility/presence on the floor, liaising between people, and administration and leadership) must be performed locally.

Based on our analysis of observations at institutions with a history of excellence in CDS and a review of existing literature, we identified a number of recommended practices for each activity type; the full list of recommended practices is presented in boxes 3 and 4. Adoption of these practices varied among the institutions. The recommended practices were selected because informants at the sites attributed the success of their CDS programs to them, or because we observed them to be particularly helpful.

Limitations

Our sample was limited to institutions in the USA with a track record of success in CDS, and all sites had all of the most common CDS types available. Additional activities, functions, and responsibilities may be necessary at institutions that have not yet developed a CDS program or whose CDS program is not successful. Because of the nature of our qualitative, post-implementation interviews and observational methods, we were unable to correlate the activities we observed with quantitative measures of program success. This study was designed to be descriptive, but it would be beneficial to conduct studies of the frequency and intensity with which these activities are carried out in a larger sample of healthcare settings and then correlate these data with measures of success. Likewise, one might correlate different staffing models (eg, centralized vs distributed, and in-house vs out-sourced) designed to achieve these activities with measures of success.

Conclusion

Implementing and maintaining successful CDS programs require many different kinds of skills and activities. This study identified 18 types of activity carried out by different people in a sample of robust CDS programs. Healthcare providers and provider organizations that intend to develop or improve an existing CDS program should ensure that these activities and practices are used. Although employing CDS is challenging, it is also essential if we are to realize the full benefits of the EHRs that we are implementing.

Acknowledgments

We are deeply indebted to the sites that shared their experiences and time with us. We also appreciate the help of Fetiya Belayneh, who reviewed the manuscript and provided helpful feedback.

Correction notice: This article has been corrected since it was published Online First. The article has been made unlocked.

Contributors: AW, JSA, CM, DFS, and EG made substantial contributions to conception and design, acquisition of data, and analysis and interpretation of data. JLE, JW, AB, AS, DSH, and MP made substantial contributions to the analysis and interpretation of the study data, as did CM and BM, who also participated in the conception and design of the study. The manuscript was drafted by AW with assistance from DSH and it was revised critically for important intellectual content by all authors. All authors approved the final version of the manuscript.

Funding: This project is derived from work supported under contract #HHSA290200810010 from the Agency for Healthcare Research and Quality (AHRQ), US Department of Health and Human Services. The findings and conclusions in this document are those of the author(s), who are responsible for its content, and do not necessarily represent the views of AHRQ. No statement in this report should be construed as an official position of AHRQ or of the US Department of Health and Human Services. Identifiable information on which this report, presentation, or other form of disclosure is based is protected by federal law, section 934(c) of the public health service act, 42 U.S.C. 299c-3(c). No identifiable information about any individuals or entities supplying the information or described in it may be knowingly used except in accordance with their prior consent. Any confidential identifiable information in this report or presentation that is knowingly disclosed is disclosed solely for the purpose for which it was provided.

Competing interests: None.

Ethics approval: Oregon Health & Science University (OHSU), Portland, Oregon, USA; Brigham and Women's Hospital, Boston, Massachusetts, USA; the University of Texas Health Science Center at Houston, Houston, Texas, USA; Providence Portland Medical Center, Portland, Oregon, USA; El Camino Hospital, Mountain View, California, USA; Wishard Memorial Hospital/Regenstrief Institute, Indianapolis, Indiana, USA; Roudebush Veterans Affairs Hospital, Indianapolis, Indiana, USA; and the University of Medicine and Dentistry of New Jersey, New Brunswick, New Jersey, USA. The Mid-Valley Independent Physicians Association did not have an institutional review board (IRB), so it relied on the OHSU IRB.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Buntin MB, Burke MF, Hoaglin MC, et al. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff 2011;30:464–71

- 2. Lyman JA, Cohn WF, Bloomrosen M, et al. Clinical decision support: progress and opportunities. J Am Med Inform Assoc 2010;17:487–92

- 3. Kawamoto K, Lobach DF. Proposal for fulfilling strategic objectives of the U.S. Roadmap for national action on clinical decision support through a service-oriented architecture leveraging HL7 services. J Am Med Inform Assoc 2007;14:146–55

- 4. Garg AX, Adhikari NKJ, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes. JAMA 2005;293:1223–38

- 5. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765

- 6. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–52

- 7. Blumenthal D. Stimulating the adoption of health information technology. N Engl J Med 2009;360:1477–9

- 8. Blumenthal D, Tavenner M. The "meaningful use" regulation for electronic health records. N Engl J Med 2010;363:501–4

- 9. Marcotte L, Seidman J, Trudel K, et al. Achieving meaningful use of health information technology: a guide for physicians to the EHR incentive programs. Arch Intern Med 2012;172:731–6

- 10. Love JS, Wright A, Simon SR, et al. Are physicians’ perceptions of healthcare quality and practice satisfaction affected by errors associated with electronic health record use? J Am Med Inform Assoc 2012;19:610–14

- 11. Hersh W, Wright A. What workforce is needed to implement the health information technology agenda? Analysis from the HIMSS analytics database. AMIA Annu Symp Proc 2008:303–7

- 12. Hillestad R, Bigelow J, Bower A, et al. Can electronic medical record systems transform health care? Potential health benefits, savings, and costs. Health Aff 2005;24:1103–17

- 13. Sittig DF, Wright A, Osheroff JA, et al. Grand challenges in clinical decision support. J Biomed Inform 2008;41:387–92

- 14. Sittig DF, Wright A, Meltzer S, et al. Comparison of clinical knowledge management capabilities of commercially-available and leading internally-developed electronic health records. BMC Med Inform Decis Mak 2011;11:13

- 15. McCormack JL, Ash JS. Clinician perspectives on the quality of patient data used for clinical decision support: a qualitative study. AMIA Annu Symp Proc 2012;2012:1302–9

- 16. Wright A, Sittig DF, Ash JS, et al. Governance for clinical decision support: case studies and recommended practices from leading institutions. J Am Med Inform Assoc 2011;18:187–94

- 17. Richardson JE, Ash JS, Sittig DF, et al. Multiple perspectives on the meaning of clinical decision support. AMIA Annu Symp Proc 2010;2010:1427

- 18. Ash JS, Sittig DF, Guappone KP, et al. Recommended practices for computerized clinical decision support and knowledge management in community settings: a qualitative study. BMC Med Inform Decis Mak 2012;12:6

- 19. Ash JS, Stavri PZ, Dykstra R, et al. Implementing computerized physician order entry: the importance of special people. Int J Med Inform 2003;69:235–50

- 20. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010;19(Suppl 3):i68–74

- 21. Linstone H. Decision making for technology executives: using multiple perspectives to improve performance. Artech House, 1999

- 22. McMullen CK, Ash JS, Sittig DF, et al. Rapid assessment of clinical information systems in the healthcare setting: an efficient method for time-pressed evaluation. Methods Inf Med 2010;50:299–307

- 23. Sittig DF, Guappone K, Campbell EM, et al. A survey of U.S.A. acute care hospitals’ computer-based provider order entry system infusion levels. Stud Health Technol Inform 2007;129(Pt 1):252–6

- 24. Trotter R, Needle R, Goosby E. A methodological model for rapid assessment, response, and evaluation: the RARE program in public health. Field Methods 2001;13:137–59

- 25. Ryan GW, Bernard HR. Techniques to identify themes. Field Methods 2003;15:85–109

- 26. Wright A, Feblowitz JC, Pang JE, et al. Use of order sets in inpatient computerized provider order entry systems: a comparative analysis of usage patterns at seven sites. Int J Med Inform 2012;81:733–45

- 27. Sittig DF, Wright A, Simonaitis L, et al. The state of the art in clinical knowledge management: an inventory of tools and techniques. Int J Med Inform 2010;79:44–57