Abstract

Dropout is a recently introduced algorithm for training neural networks by randomly dropping units during training to prevent their co-adaptation. A mathematical analysis of some of the static and dynamic properties of dropout is provided using Bernoulli gating variables, general enough to accommodate dropout on units or connections, and with variable rates. The framework allows a complete analysis of the ensemble averaging properties of dropout in linear networks, which is useful to understand the non-linear case. The ensemble averaging properties of dropout in non-linear logistic networks result from three fundamental equations: (1) the approximation of the expectations of logistic functions by normalized geometric means, for which bounds and estimates are derived; (2) the algebraic equality between normalized geometric means of logistic functions with the logistic of the means, which mathematically characterizes logistic functions; and (3) the linearity of the means with respect to sums, as well as products of independent variables. The results are also extended to other classes of transfer functions, including rectified linear functions. Approximation errors tend to cancel each other and do not accumulate. Dropout can also be connected to stochastic neurons and used to predict firing rates, and to backpropagation by viewing the backward propagation as ensemble averaging in a dropout linear network. Moreover, the convergence properties of dropout can be understood in terms of stochastic gradient descent. Finally, for the regularization properties of dropout, the expectation of the dropout gradient is the gradient of the corresponding approximation ensemble, regularized by an adaptive weight decay term with a propensity for self-consistent variance minimization and sparse representations.

Keywords: machine learning, neural networks, ensemble, regularization, stochastic neurons, stochastic gradient descent, backpropagation, geometric mean, variance minimization, sparse representations

1 Introduction

Dropout is a recently introduced algorithm for training neural networks [27]. In its simplest form, on each presentation of each training example, each feature detector unit is deleted randomly with probability q = 1 – p = 0.5. The remaining weights are trained by backpropagation [40]. The procedure is repeated for each example and each training epoch, sharing the weights at each iteration (Figure 1.1). After the training phase is completed, predictions are produced by halving all the weights (Figure 1.2). The dropout procedure can also be applied to the input layer by randomly deleting some of the input-vector components; typically an input component is deleted with a smaller probability (e.g. q = 0.2).

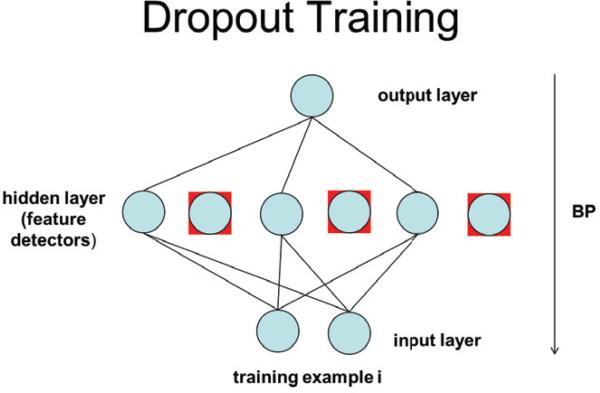

Figure 1.1.

Dropout training in a simple network. For each training example, feature detector units are dropped with probability 0.5. The weights are trained by backpropagation (BP) and shared with all the other examples.

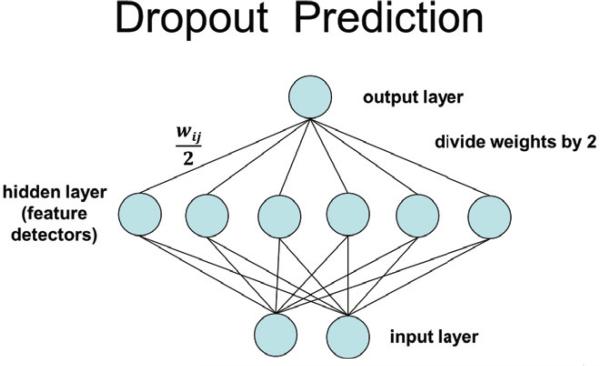

Figure 1.2.

Dropout prediction in a simple network. At prediction time, all the weights from the feature detectors to the output units are halved.

The motivation and intuition behind the algorithm is to prevent overfitting associated with the co-adaptation of feature detectors. By randomly dropping out neurons, the procedure prevents any neuron from relying excessively on the output of any other neuron, forcing it instead to rely on the population behavior of its inputs. It can be viewed as an extreme form of bagging [17], or as a generalization of naive Bayes [23], as well as denoising autoencoders [42]. Dropout has been reported to yield remarkable improvements on several difficult problems, for instance in speech and image recognition, using well known benchmark datasets, such as MNIST, TIMIT, CIFAR-10, and ImageNet [27].

In [27], it is noted that for a single unit dropout performs a kind of “geometric” ensemble averaging and this property is conjectured to extend somehow to deep multilayer neural networks. Thus dropout is an intriguing new algorithm for shallow and deep learning, which seems to be effective, but comes with little formal understanding and raises several interesting questions. For instance:

What kind of model averaging is dropout implementing, exactly or in approximation, when applied to multiple layers?

How crucial are its parameters? For instance, is q = 0.5 necessary and what happens when other values are used? What happens when other transfer functions are used?

What are the effects of different deletion randomization procedures, or different values of q for different layers? What happens if dropout is applied to connections rather than units?

What are precisely the regularization and averaging properties of dropout?

What are the convergence properties of dropout?

To answer these questions, it is useful to distinguish the static and dynamic aspects of dropout. By static we refer to properties of the network for a fixed set of weights, and by dynamic to properties related to the temporal learning process. We begin by focusing on static properties, in particular on understanding what kind of model averaging is implemented by rules like “halving all the weights”. To some extent this question can be asked for any set of weights, regardless of the learning stage or procedure. Furthermore, it is useful to first study the effects of dropout in simple networks, in particular in linear networks. As is often the case [8, 9], understanding dropout in linear networks is essential for understanding dropout in non-linear networks.

Related Work. Here we point out a few connections between dropout and previous literature, without any attempt at being exhaustive, since this would require a review paper by itself. First of all, dropout is a randomization algorithm and as such it is connected to the vast literature in computer science and mathematics, sometimes a few centuries old, on the use of randomness to derive new algorithms, improve existing ones, or prove interesting mathematical results (e.g. [22, 3, 33]). Second, and more specifically, the idea of injecting randomness into a neural network is hardly new. A simple Google search yields dozens of references, many dating back to the 1980s (e.g. [24, 25, 30, 34, 12, 6, 37]). In these references, noise is typically injected either in the input data or in the synaptic weights to increase robustness or regularize the network in an empirical way. Injecting noise into the data is precisely the idea behind denoising autoencoders [42], perhaps the closest predecessor to dropout, as well as more recent variations, such as the marginalized-corrupted-features learning approach described in [29]. Finally, since the posting of [27], three articles with dropout in their title were presented at the NIPS 2013 conference: a training method based on overlaying a dropout binary belief network on top of a neural network [7]; an analysis of the adaptive regularizing properties of dropout in the shallow linear case suggesting some possible improvements [43]; and a subset of the averaging and regularization properties of dropout described primarily in Sections 8 and 11 of this article [10].

2 Dropout for Shallow Linear Networks

In order to compute expectations, we must associate well defined random variables with unit activities or connection weights when these are dropped. Here and everywhere else we will consider that a unit activity or connection is set to 0 when the unit or connection is dropped.

2.1 Dropout for a Single Linear Unit (Combinatorial Approach)

We begin by considering a single linear unit computing a weighted sum of n inputs of the form

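$$S = S(I) = \sum_{i=1}^{n} w_i I_i \tag{1}$$

where $I = (I_1, \ldots, I_n)$ is the input vector. If we delete inputs with a uniform distribution over all possible subsets of inputs, or equivalently with a probability q = 0.5 of deletion, then there are $2^n$ possible networks, including the empty network. For a fixed I, the average output over all these networks can be written as:

$$E(S) = \frac{1}{2^n} \sum_{\mathcal{N}} S(\mathcal{N}, I) \tag{2}$$

where $\mathcal{N}$ is used to index all possible sub-networks, i.e. all possible edge deletions. Note that in this simple case, deletion of input units or of edges are the same thing. The sum above can be expanded using networks of size 0, 1, 2, . . . n in the form

$$E(S) = \frac{1}{2^n} \sum_{k=0}^{n} \left( \sum_{1 \le i_1 < \cdots < i_k \le n} \left( w_{i_1} I_{i_1} + \cdots + w_{i_k} I_{i_k} \right) \right) \tag{3}$$

In this expansion, the term $w_i I_i$ occurs

$$1 + \binom{n-1}{1} + \binom{n-1}{2} + \cdots + \binom{n-1}{n-1} = 2^{n-1} \tag{4}$$

times. So finally the average output is

$$E(S) = \frac{2^{n-1}}{2^n} \sum_{i=1}^{n} w_i I_i = \sum_{i=1}^{n} \frac{w_i}{2} I_i \tag{5}$$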

Thus in the case of a single linear unit, for any fixed input I the output obtained by halving all the weights is equal to the arithmetic mean of the outputs produced by all the possible sub-networks. This combinatorial approach can be applied to other cases (e.g. p ≠ 0.5) but it is much easier to work directly with a probabilistic approach.
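The combinatorial argument can be checked numerically on a small example. The following is a minimal sketch, assuming illustrative weights and inputs that are not taken from the paper; the helper name `subnetwork_average` is hypothetical. It enumerates all $2^n$ input subsets and compares their arithmetic mean with the output of the network with halved weights.

```python
from itertools import product

def subnetwork_average(w, x):
    """Arithmetic mean of sum_i m_i * w_i * x_i over all 2^n binary masks m."""
    n = len(w)
    total = 0.0
    for mask in product((0, 1), repeat=n):
        total += sum(m * wi * xi for m, wi, xi in zip(mask, w, x))
    return total / 2 ** n

w = [0.5, -1.25, 2.0, 0.75]   # illustrative weights (assumption)
x = [1.0, 2.0, -0.5, 3.0]     # illustrative fixed input (assumption)

ensemble_mean = subnetwork_average(w, x)
halved_output = sum((wi / 2) * xi for wi, xi in zip(w, x))
print(ensemble_mean, halved_output)
```

The enumeration grows as $2^n$, so it is only practical for small n; the closed form is what makes the halving rule usable in practice.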

2.2 Dropout for a Single Linear Unit (Probabilistic Approach)

Here we simply consider that the output is a random variable of the form

$$S = \sum_{i=1}^{n} w_i \delta_i I_i \tag{6}$$

where $\delta_i$ is a Bernoulli selector random variable, which deletes the weight $w_i$ (equivalently the input $I_i$) with probability $P(\delta_i = 0) = q_i$. The Bernoulli random variables are assumed to be independent of each other (in fact pairwise independence, as opposed to global independence, is sufficient for all the results to be presented here). Thus $P(\delta_i = 1) = 1 - q_i = p_i$. Using the linearity of the expectation we have immediately

$$E(S) = \sum_{i=1}^{n} w_i E(\delta_i) I_i = \sum_{i=1}^{n} w_i p_i I_i \tag{7}$$

This formula allows one to handle different $p_i$ for each connection, as well as values of $p_i$ that deviate from 0.5. If all the connections are associated with independent but identical Bernoulli selector random variables with $p_i = p$, then

$$E(S) = \sum_{i=1}^{n} w_i E(\delta) I_i = p \sum_{i=1}^{n} w_i I_i \tag{8}$$

For instance, if the inputs are deleted with probability 0.2 then the expected output is given by $E(S) = 0.8 \sum_{i} w_i I_i$. Thus the weights must be multiplied by 0.8. The key property behind Equation 8 is the linearity of the expectation with respect to sums and multiplications by scalar values, and more generally for what follows the linearity of the expectation with respect to the product of independent random variables. Note also that the same approach could be applied for estimating expectations over the input variables, i.e. over training examples, or both (training examples and subnetworks). This remains true even when the distribution over examples is not uniform.

If the unit has a fixed bias b (affine unit), the random output variable has the form

$$S = \sum_{i=1}^{n} w_i \delta_i I_i + b\,\delta_b \tag{9}$$

The case where the bias is always present, i.e. when $\delta_b = 1$ always, is just a special case. And again, by linearity of the expectation

$$E(S) = \sum_{i=1}^{n} w_i p_i I_i + b\,p_b \tag{10}$$

where $P(\delta_b = 1) = p_b$. Under the natural assumption that the Bernoulli random variables are independent of each other, the variance is linear with respect to the sum and can easily be calculated in all the previous cases. For instance, starting from the most general case of Equation 9 we have

$$Var(S) = \sum_{i=1}^{n} w_i^2\, Var(\delta_i)\, I_i^2 + b^2\, Var(\delta_b) = \sum_{i=1}^{n} w_i^2 p_i q_i I_i^2 + b^2 p_b q_b \tag{11}$$

with $q_i = 1 - p_i$. S can be viewed as a weighted sum of independent Bernoulli random variables, which can be approximated by a Gaussian random variable under reasonable assumptions.
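The mean and variance formulas for the affine unit (Equations 10 and 11) can be compared against an exact enumeration over all gate configurations. This is a minimal sketch under stated assumptions: the weights, inputs, bias, and keep probabilities are illustrative values, and `exact_moments` is a hypothetical helper that treats the bias as one more gated term.

```python
from itertools import product

def exact_moments(terms, keep):
    """Exact mean and variance of sum_i d_i * terms[i], where the d_i are
    independent Bernoulli gates with P(d_i = 1) = keep[i]."""
    mean = second = 0.0
    for mask in product((0, 1), repeat=len(terms)):
        prob = 1.0
        for m, p in zip(mask, keep):
            prob *= p if m else 1.0 - p
        s = sum(m * t for m, t in zip(mask, terms))
        mean += prob * s
        second += prob * s * s
    return mean, second - mean * mean

# Illustrative values (assumptions, not from the paper)
w, x, p = [0.5, -1.0, 2.0], [1.0, 3.0, -2.0], [0.8, 0.5, 0.3]
b, p_b = 0.7, 0.9

terms = [wi * xi for wi, xi in zip(w, x)] + [b]   # bias gated by delta_b
keep = p + [p_b]
mean_enum, var_enum = exact_moments(terms, keep)

# Closed forms: E(S) = sum w_i p_i I_i + b p_b, Var(S) = sum w_i^2 p_i q_i I_i^2 + b^2 p_b q_b
mean_formula = sum(wi * pi * xi for wi, pi, xi in zip(w, p, x)) + b * p_b
var_formula = (sum((wi * xi) ** 2 * pi * (1 - pi) for wi, pi, xi in zip(w, p, x))
               + b ** 2 * p_b * (1 - p_b))
print(mean_enum, mean_formula, var_enum, var_formula)
```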

2.3 Dropout for a Single Layer of Linear Units

We now consider a single linear layer with k output units

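$$S_i = \sum_{j=1}^{n} w_{ij} I_j \quad \text{for } i = 1, \ldots, k \tag{12}$$

In this case, dropout applied to input units is slightly different from dropout applied to the connections. Dropout applied to the input units leads to the random variables

$$S_i = \sum_{j=1}^{n} w_{ij} \delta_j I_j \tag{13}$$

whereas dropout applied to the connections leads to the random variables

$$S_i = \sum_{j=1}^{n} \delta_{ij} w_{ij} I_j \tag{14}$$

In either case, the expectations, variances, and covariances can easily be computed using the linearity of the expectation and the independence assumption. When dropout is applied to the input units, we get:

$$E(S_i) = \sum_{j=1}^{n} w_{ij} p_j I_j \tag{15}$$

$$Var(S_i) = \sum_{j=1}^{n} w_{ij}^2 p_j q_j I_j^2 \tag{16}$$

$$Cov(S_i, S_l) = \sum_{j=1}^{n} w_{ij} w_{lj} p_j q_j I_j^2 \quad \text{for } i \neq l \tag{17}$$

When dropout is applied to the connections, we get:

$$E(S_i) = \sum_{j=1}^{n} w_{ij} p_{ij} I_j \tag{18}$$

$$Var(S_i) = \sum_{j=1}^{n} w_{ij}^2 p_{ij} q_{ij} I_j^2 \tag{19}$$

$$Cov(S_i, S_l) = 0 \quad \text{for } i \neq l \tag{20}$$

Note the difference in covariance between the two models. When dropout is applied to the connections, $S_i$ and $S_l$ are entirely independent.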
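The contrast between the two covariance formulas can be illustrated by exact enumeration on a layer with two outputs. This is a minimal sketch with illustrative weights and probabilities (assumptions); `exact_cov` is a hypothetical helper, restricted to two output units to keep the enumeration small.

```python
from itertools import product

def exact_cov(W, x, keep, per_connection):
    """Exact Cov(S_0, S_1) for a layer with two linear outputs.
    keep[j] is the keep probability of input j (unit dropout), or
    keep[i][j] that of connection (i, j) (connection dropout)."""
    n = len(x)
    flat_keep = [p for row in keep for p in row] if per_connection else list(keep)
    e0 = e1 = e01 = 0.0
    for mask in product((0, 1), repeat=len(flat_keep)):
        prob = 1.0
        for m, p in zip(mask, flat_keep):
            prob *= p if m else 1.0 - p
        # gate[i][j] multiplies W[i][j]; unit dropout shares one gate per input
        gate = [mask[i * n:(i + 1) * n] if per_connection else mask for i in range(2)]
        s = [sum(g * wij * xj for g, wij, xj in zip(gate[i], W[i], x)) for i in range(2)]
        e0 += prob * s[0]
        e1 += prob * s[1]
        e01 += prob * s[0] * s[1]
    return e01 - e0 * e1

# Illustrative values (assumptions, not from the paper)
W = [[1.0, -2.0, 0.5], [0.3, 1.5, -1.0]]
x = [2.0, 1.0, -1.0]
p = [0.8, 0.5, 0.6]

cov_units = exact_cov(W, x, p, per_connection=False)
cov_formula = sum(W[0][j] * W[1][j] * p[j] * (1 - p[j]) * x[j] ** 2 for j in range(3))
cov_connections = exact_cov(W, x, [p, p], per_connection=True)
print(cov_units, cov_formula, cov_connections)
```

With shared input gates the two sums are correlated through the common selector variables; with one gate per connection the covariance vanishes up to rounding.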

3 Dropout for Deep Linear Networks

In a general feedforward linear network described by an underlying directed acyclic graph, units can be organized into layers using the shortest path from the input units to the unit under consideration. The activity in unit i of layer h can be expressed as:

$$S_i^h = \sum_{l<h} \sum_{j} w_{ij}^{hl} S_j^l \tag{21}$$

Again, in the general case, dropout applied to the units is slightly different from dropout applied to the connections. Dropout applied to the units leads to the random variables

$$S_i^h = \sum_{l<h} \sum_{j} w_{ij}^{hl} \delta_j^l S_j^l \tag{22}$$

whereas dropout applied to the connections leads to the random variables

$$S_i^h = \sum_{l<h} \sum_{j} \delta_{ij}^{hl} w_{ij}^{hl} S_j^l \tag{23}$$

When dropout is applied to the units, assuming that the dropout process is independent of the unit activities or the weights, we get:

$$E(S_i^h) = \sum_{l<h} \sum_{j} w_{ij}^{hl} p_j^l E(S_j^l) \tag{24}$$

with $E(S_j^0) = I_j$ in the input layer. This formula can be applied recursively across the entire network, starting from the input layer. Note that the recursion of Equation 24 is formally identical to the recursion of backpropagation suggesting the use of dropout during the backward pass. This point is elaborated further at the end of Section 10. Note also that although the expectation is taken over all possible subnetworks of the original network, only the Bernoulli gating variables in the previous layers (l < h) matter. Therefore it coincides also with the expectation taken over only all the induced subnetworks of node i (comprising only nodes that are ancestors of node i).

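Remarkably, using these expectations, all the covariances can also be computed recursively from the input layer to the output layer, by writing $Cov(S_i^h, S_{i'}^{h'}) = E(S_i^h S_{i'}^{h'}) - E(S_i^h) E(S_{i'}^{h'})$ and computing

$$E(S_i^h S_{i'}^{h'}) = E\left[ \sum_{l<h} \sum_{j} w_{ij}^{hl} \delta_j^l S_j^l \sum_{l'<h'} \sum_{j'} w_{i'j'}^{h'l'} \delta_{j'}^{l'} S_{j'}^{l'} \right] = \sum_{l<h} \sum_{l'<h'} \sum_{j} \sum_{j'} w_{ij}^{hl} w_{i'j'}^{h'l'} E(\delta_j^l \delta_{j'}^{l'}) E(S_j^l S_{j'}^{l'}) \tag{25}$$

under the usual assumption that $\delta_j^l \delta_{j'}^{l'}$ is independent of $S_j^l S_{j'}^{l'}$. Furthermore, under the usual assumption that $\delta_j^l$ and $\delta_{j'}^{l'}$ are independent when l ≠ l′ or j ≠ j′, we have in this case $E(\delta_j^l \delta_{j'}^{l'}) = p_j^l p_{j'}^{l'}$, with furthermore $E(\delta_j^l \delta_j^l) = p_j^l$. Thus in short under the usual independence assumptions, $E(S_i^h S_{i'}^{h'})$ can be computed recursively from the values of $E(S_j^l S_{j'}^{l'})$ in lower layers, with the boundary conditions $E(I_i I_j) = I_i I_j$ for a fixed input vector (layer 0). The recursion proceeds layer by layer, from the input to the output layer. When a new layer is reached, the covariances to all the previously visited layers must be computed, as well as all the intralayer covariances.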

When dropout is applied to the connections, under similar independence assumptions, we get:

$$E(S_i^h) = \sum_{l<h} \sum_{j} p_{ij}^{hl} w_{ij}^{hl} E(S_j^l) \tag{26}$$

with $E(S_j^0) = I_j$ in the input layer. This formula can be applied recursively across the entire network. Note again that although the expectation is taken over all possible subnetworks of the original network, only the Bernoulli gating variables in the previous layers (l < h) matter. Therefore it is also the expectation taken over only all the induced subnetworks of node i (corresponding to all the ancestors of node i). Furthermore, using these expectations, all the covariances can also be computed recursively from the input layer to the output layer using a similar analysis to the one given above for the case of dropout applied to the units of a general linear network.

In summary, for linear feedforward networks the static properties of dropout applied to the units or the connections using Bernoulli gating variables that are independent of the weights, of the activities, and of each other (but not necessarily identically distributed) can be fully understood. For any input, the expectation of the outputs over all possible networks induced by the Bernoulli gating variables is computed using the recurrence equations 24 and 26, by simple feedforward propagation in the same network where each weight is multiplied by the appropriate probability associated with the corresponding Bernoulli gating variable. The variances and covariances can also be computed recursively in a similar way.
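The summary above can be illustrated on a small two-layer linear network with dropout on the input and hidden units. This is a minimal sketch under simplifying assumptions: connections exist only between adjacent layers, the weights and keep probabilities are illustrative, and the helper names are hypothetical. The exact expectation over all gate configurations is compared with a single forward pass in which each activity is multiplied by its keep probability, which is equivalent to scaling the outgoing weights.

```python
from itertools import product

def matvec(W, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]

def gated_forward(x, W1, W2, g0, g1):
    """Two-layer linear network; g0 multiplies the inputs and g1 the hidden
    activities (0/1 gates, or keep probabilities for the expectation)."""
    hidden = matvec(W1, [g * xi for g, xi in zip(g0, x)])
    return matvec(W2, [g * h for g, h in zip(g1, hidden)])

def exact_expectation(x, W1, W2, p0, p1):
    """Expectation of the output over all 2^(n0+n1) unit-dropout subnetworks."""
    keep, n0 = p0 + p1, len(p0)
    out = [0.0] * len(W2)
    for mask in product((0, 1), repeat=len(keep)):
        prob = 1.0
        for m, p in zip(mask, keep):
            prob *= p if m else 1.0 - p
        y = gated_forward(x, W1, W2, mask[:n0], mask[n0:])
        out = [o + prob * yi for o, yi in zip(out, y)]
    return out

# Illustrative values (assumptions, not from the paper)
x = [1.0, -2.0, 0.5]
W1 = [[0.5, 1.0, -1.5], [2.0, -0.5, 0.25]]
W2 = [[1.0, -1.0], [0.5, 2.0]]
p0, p1 = [0.8, 0.9, 0.7], [0.5, 0.6]

expected_exact = exact_expectation(x, W1, W2, p0, p1)
expected_scaled = gated_forward(x, W1, W2, p0, p1)   # recursion of Equation 24
print(expected_exact, expected_scaled)
```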

4 Dropout for Shallow Neural Networks

We now consider dropout in non-linear networks that are shallow, in fact with a single layer of weights.

4.1 Dropout for a Single Non-Linear Unit (Logistic)

Here we consider that the output of a single unit with total linear input S is given by the logistic sigmoidal function

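$$O = \sigma(S) = \frac{1}{1 + c e^{-\lambda S}} \tag{27}$$

Here and everywhere else, we must have c ≥ 0. There are $2^n$ possible sub-networks indexed by $\mathcal{N}$ and, for a fixed input I, each sub-network produces a linear value $S(\mathcal{N}, I)$ and a final output value $O_{\mathcal{N}} = \sigma(S(\mathcal{N}, I))$. Since I is fixed, we omit the dependence on I in all the following calculations. In the uniform case, the geometric mean of the outputs is given by

$$G = \prod_{\mathcal{N}} O_{\mathcal{N}}^{1/2^n} \tag{28}$$

Likewise, the geometric mean of the complementary outputs is given by

$$G' = \prod_{\mathcal{N}} (1 - O_{\mathcal{N}})^{1/2^n} \tag{29}$$

The normalized geometric mean (NGM) is defined by

$$NGM = \frac{G}{G + G'} \tag{30}$$

The NGM of the outputs is given by

$$NGM(O) = \frac{\prod_{\mathcal{N}} \sigma(S(\mathcal{N}))^{1/2^n}}{\prod_{\mathcal{N}} \sigma(S(\mathcal{N}))^{1/2^n} + \prod_{\mathcal{N}} (1 - \sigma(S(\mathcal{N})))^{1/2^n}} = \frac{1}{1 + \prod_{\mathcal{N}} \left( \frac{1 - \sigma(S(\mathcal{N}))}{\sigma(S(\mathcal{N}))} \right)^{1/2^n}} \tag{31}$$

Now for the logistic function σ, we have

$$\frac{1 - \sigma(x)}{\sigma(x)} = c e^{-\lambda x} \tag{32}$$

Applying this identity to Equation 31 yields

$$NGM(O) = \frac{1}{1 + \prod_{\mathcal{N}} \left( c e^{-\lambda S(\mathcal{N})} \right)^{1/2^n}} = \frac{1}{1 + c e^{-\lambda \sum_{\mathcal{N}} S(\mathcal{N})/2^n}} = \sigma(E(S)) \tag{33}$$

where here $E(S) = \sum_{\mathcal{N}} S(\mathcal{N})/2^n$. Or, in more compact form,

$$NGM(\sigma(S)) = \sigma(E(S)) \tag{34}$$

Thus with a uniform distribution over all possible sub-networks $\mathcal{N}$, equivalent to having i.i.d. input unit selector variables $\delta_i$ with probability $p_i = 0.5$, the NGM is simply obtained by keeping the same overall network but dividing all the weights by two and applying σ to the expectation $E(S) = \sum_{i=1}^{n} \frac{w_i}{2} I_i$.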

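It is essential to observe that this result remains true in the case of a non-uniform distribution over the subnetworks $\mathcal{N}$, such as the distribution generated by Bernoulli gating variables that are not identically distributed, or with p ≠ 0.5. For this we consider a general distribution $P(\mathcal{N})$. This is of course even more general than assuming that P is the product of n independent Bernoulli selector variables. In this case, the weighted geometric means are defined by:

$$G = \prod_{\mathcal{N}} O_{\mathcal{N}}^{P(\mathcal{N})} \tag{35}$$

and

$$G' = \prod_{\mathcal{N}} (1 - O_{\mathcal{N}})^{P(\mathcal{N})} \tag{36}$$

and similarly for the normalized weighted geometric mean (NWGM)

$$NWGM = \frac{G}{G + G'} \tag{37}$$

Using the same calculation as above in the uniform case, we can then compute the normalized weighted geometric mean NWGM in the form

$$NWGM(O) = \frac{\prod_{\mathcal{N}} \sigma(S(\mathcal{N}))^{P(\mathcal{N})}}{\prod_{\mathcal{N}} \sigma(S(\mathcal{N}))^{P(\mathcal{N})} + \prod_{\mathcal{N}} (1 - \sigma(S(\mathcal{N})))^{P(\mathcal{N})}} = \frac{1}{1 + \prod_{\mathcal{N}} \left( \frac{1 - \sigma(S(\mathcal{N}))}{\sigma(S(\mathcal{N}))} \right)^{P(\mathcal{N})}} \tag{38}$$

$$NWGM(O) = \frac{1}{1 + \prod_{\mathcal{N}} \left( c e^{-\lambda S(\mathcal{N})} \right)^{P(\mathcal{N})}} = \frac{1}{1 + c e^{-\lambda \sum_{\mathcal{N}} P(\mathcal{N}) S(\mathcal{N})}} = \sigma(E(S)) \tag{39}$$

where here $E(S) = \sum_{\mathcal{N}} P(\mathcal{N}) S(\mathcal{N})$. Thus in summary with any distribution $P(\mathcal{N})$ over all possible sub-networks $\mathcal{N}$, including the case of independent but not identically distributed input unit selector variables $\delta_i$ with probability $p_i$, the NWGM is simply obtained by applying the logistic function to the expectation of the linear input S. In the case of independent but not necessarily identically distributed selector variables $\delta_i$, each with a probability $p_i$ of being equal to one, the expectation of S can be computed simply by keeping the same overall network but multiplying each weight $w_i$ by $p_i$ so that $E(S) = \sum_{i=1}^{n} p_i w_i I_i$.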

Note that as in the linear case, this property of logistic units is even more general. That is for any set of $S_1, \ldots, S_m$ and any associated probability distribution and associated outputs $O_1, \ldots, O_m$ (with O = σ(S)), we have $NWGM(O) = \sigma(E(S))$. Thus the NWGM can be computed over inputs, over inputs and subnetworks, or over other distributions than the one associated with subnetworks, even when the distribution is not uniform. For instance, if we add Gaussian or other noise to the weights, the same formula can be applied. Likewise, we can approximate the average activity of an entire neuronal layer, by applying the logistic function to the average input of the neurons in that layer, as long as all the neurons in the layer use the same logistic function. Note also that the property is true for any c and λ and therefore, using the analyses provided in the next sections, it will be applicable to each of the units, in a network where different units have different values of c and λ. Finally, the property is even more general in the sense that the same calculation as above shows that for any function f

$$NWGM(\sigma(f(S))) = \sigma(E(f(S))) \tag{40}$$

and in particular, for any k

$$NWGM(\sigma(S^k)) = \sigma(E(S^k)) \tag{41}$$
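The identity NWGM(σ(S)) = σ(E(S)) for a single logistic unit can be checked by enumerating all subnetworks under non-identical keep probabilities. This is a minimal sketch with illustrative weights, inputs, and logistic parameters c and λ (all assumptions); the helper names are hypothetical. It also reports the true expectation E(σ(S)), which the NWGM only approximates.

```python
import math
from itertools import product

def logistic(s, c=1.0, lam=1.0):
    return 1.0 / (1.0 + c * math.exp(-lam * s))

def ensemble(w, x, p):
    """Yield (probability, linear input S) for every subnetwork of one unit."""
    for mask in product((0, 1), repeat=len(w)):
        prob = 1.0
        for m, pi in zip(mask, p):
            prob *= pi if m else 1.0 - pi
        yield prob, sum(m * wi * xi for m, wi, xi in zip(mask, w, x))

def nwgm(outputs, probs):
    """Normalized weighted geometric mean G / (G + G'), computed in log space."""
    log_g = sum(pr * math.log(o) for pr, o in zip(probs, outputs))
    log_g_comp = sum(pr * math.log(1.0 - o) for pr, o in zip(probs, outputs))
    return 1.0 / (1.0 + math.exp(log_g_comp - log_g))

# Illustrative values (assumptions, not from the paper)
w, x, p = [1.5, -2.0, 0.5, 1.0], [1.0, 0.5, -1.0, 2.0], [0.9, 0.5, 0.2, 0.7]
c, lam = 2.0, 0.5

probs, sums = zip(*ensemble(w, x, p))
outputs = [logistic(s, c, lam) for s in sums]

nwgm_value = nwgm(outputs, probs)
sigma_of_mean = logistic(sum(pr * s for pr, s in zip(probs, sums)), c, lam)
true_mean = sum(pr * o for pr, o in zip(probs, outputs))
print(nwgm_value, sigma_of_mean, true_mean)
```

The first two printed values agree up to rounding, while the third differs slightly; that gap is the approximation error discussed in later sections.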

4.2 Dropout for a Single Layer of Logistic Units

In the case of a single output layer of k logistic functions, the network computes k linear sums $S_i = \sum_{j=1}^{n} w_{ij} I_j$ for i = 1, . . . , k and then k outputs of the form

$$O_i = \sigma(S_i) = \frac{1}{1 + c e^{-\lambda S_i}} \tag{42}$$

The dropout procedure produces a subnetwork $\mathcal{N} = (\mathcal{N}_1, \ldots, \mathcal{N}_k)$ where $\mathcal{N}_i$ here represents the corresponding sub-network associated with the i-th output unit. For each i, there are $2^n$ possible sub-networks for unit i, so there are $2^{kn}$ possible subnetworks $\mathcal{N}$. In this case, Equation 39 holds for each unit individually. If dropout uses independent Bernoulli selector variables $\delta_{ij}$ on the edges, or more generally, if the sub-networks $\mathcal{N}_i$ are selected independently of each other, then the covariance between any two output units is 0. If dropout is applied to the input units, then the covariance between two sigmoidal outputs may be small but non-zero.

4.3 Dropout for a Set of Normalized Exponential Units

We now consider the case of one layer of normalized exponential units. In this case, we can think of the network as having k outputs obtained by first computing k linear sums of the form $S_i = \sum_{j=1}^{n} w_{ij} I_j$ for i = 1, . . . , k and then k outputs of the form

$$O_i = \frac{e^{\lambda S_i}}{\sum_{j=1}^{k} e^{\lambda S_j}} = \frac{1}{1 + \left( \sum_{j \neq i} e^{\lambda S_j} \right) e^{-\lambda S_i}} \tag{43}$$

Thus $O_i$ is a logistic output but the coefficients of the logistic function depend on the values of $S_j$ for j ≠ i. The dropout procedure produces a subnetwork $\mathcal{N} = (\mathcal{N}_1, \ldots, \mathcal{N}_k)$ where $\mathcal{N}_i$ represents the corresponding sub-network associated with the i-th output unit. For each i, there are $2^n$ possible subnetworks for unit i, so there are $2^{kn}$ possible subnetworks $\mathcal{N}$. We assume first that the distribution $P(\mathcal{N})$ is factorial, that is $P(\mathcal{N}) = P(\mathcal{N}_1) \cdots P(\mathcal{N}_k)$, equivalent to assuming that the subnetworks associated with the individual units are chosen independently of each other. This is the case when using independent Bernoulli selector variables applied to the connections. The normalized weighted geometric average of output unit i is given by

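$$NWGM(O_i) = \frac{\prod_{\mathcal{N}} O_i(\mathcal{N})^{P(\mathcal{N})}}{\sum_{l=1}^{k} \prod_{\mathcal{N}} O_l(\mathcal{N})^{P(\mathcal{N})}} \tag{44}$$

Simplifying by the numerator

$$NWGM(O_i) = \frac{1}{1 + \sum_{l \neq i} \prod_{\mathcal{N}} \left( \frac{e^{\lambda S_l(\mathcal{N})}}{e^{\lambda S_i(\mathcal{N})}} \right)^{P(\mathcal{N})}} \tag{45}$$

Factoring and collecting the exponential terms gives

$$NWGM(O_i) = \frac{1}{1 + e^{-\lambda E(S_i)} \sum_{l \neq i} e^{\lambda E(S_l)}} \tag{46}$$

$$NWGM(O_i) = \frac{e^{\lambda E(S_i)}}{\sum_{l=1}^{k} e^{\lambda E(S_l)}} \tag{47}$$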

Thus with any distribution $P(\mathcal{N})$ over all possible sub-networks $\mathcal{N}$, including the case of independent but not identically distributed input unit selector variables $\delta_j$ with probability $p_j$, the NWGM of a normalized exponential unit is obtained by applying the normalized exponential to the expectations of the underlying linear sums $S_i$. In the case of independent but not necessarily identically distributed selector variables $\delta_j$, each with a probability $p_j$ of being equal to one, the expectation of $S_i$ can be computed simply by keeping the same overall network but multiplying each weight $w_{ij}$ by $p_j$ so that $E(S_i) = \sum_{j=1}^{n} p_j w_{ij} I_j$.
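The same kind of check can be carried out for normalized exponential units. This is a minimal sketch under stated assumptions: dropout is applied to the input units, the weights, inputs, keep probabilities, and λ are illustrative, and the NWGM of output i is taken to be the weighted geometric mean of $O_i$ divided by the sum of the weighted geometric means of all k outputs.

```python
import math
from itertools import product

def softmax(s, lam=1.0):
    top = max(s)                                   # subtract the max for stability
    e = [math.exp(lam * (si - top)) for si in s]
    z = sum(e)
    return [ei / z for ei in e]

# Illustrative values (assumptions, not from the paper)
W = [[1.0, -0.5, 2.0], [0.5, 1.5, -1.0], [-1.0, 0.25, 0.75]]
x = [1.0, 2.0, -1.0]
p = [0.9, 0.5, 0.3]
lam = 0.8
k = len(W)

# Accumulate log of the weighted geometric mean of each output over all subnetworks
log_g = [0.0] * k
for mask in product((0, 1), repeat=len(x)):
    prob = 1.0
    for m, pj in zip(mask, p):
        prob *= pj if m else 1.0 - pj
    s = [sum(m * wij * xj for m, wij, xj in zip(mask, row, x)) for row in W]
    o = softmax(s, lam)
    log_g = [lg + prob * math.log(oi) for lg, oi in zip(log_g, o)]

g = [math.exp(lg) for lg in log_g]
nwgm = [gi / sum(g) for gi in g]

# Normalized exponential applied to the expected linear sums (weights scaled by p_j)
mean_s = [sum(pj * wij * xj for pj, wij, xj in zip(p, row, x)) for row in W]
softmax_of_mean = softmax(mean_s, lam)
print(nwgm, softmax_of_mean)
```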

5 Dropout for Deep Neural Networks

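Finally, we can deal with the most interesting case of deep feedforward networks of sigmoidal units, described by a set of equations of the form

$$O_i^h = \sigma_i^h(S_i^h) = \sigma\left( \sum_{l<h} \sum_{j} w_{ij}^{hl} O_j^l \right) \quad \text{with } O_j^0 = I_j \tag{48}$$

Dropout on the units can be described by

$$O_i^h = \sigma_i^h(S_i^h) = \sigma\left( \sum_{l<h} \sum_{j} w_{ij}^{hl} \delta_j^l O_j^l \right) \quad \text{with } O_j^0 = I_j \tag{49}$$

using the selector variables $\delta_j^l$ and similarly for dropout on the connections. For each sigmoidal unit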

| (50) |

and the basic idea is to approximate expectations by the corresponding NWGMs, allowing the propagation of the expectation symbols from outside the sigmoid symbols to inside.

| (51) |

More precisely, we have the following recursion:

$$E(O_i^h) \approx NWGM(O_i^h) \tag{52}$$

$$NWGM(O_i^h) = \sigma_i^h\left( E(S_i^h) \right) \tag{53}$$

$$E(S_i^h) = \sum_{l<h} \sum_{j} w_{ij}^{hl} p_j^l E(O_j^l) \tag{54}$$

Equations 52, 53, and 54 are the fundamental equations underlying the recursive dropout ensemble approximation in deep neural networks. The only direct approximation in these equations is of course Equation 52 which will be discussed in more depth in Sections 8 and 9. This equation is exact if and only if the numbers $O_i^h(\mathcal{N}, I)$ are identical over all possible subnetworks $\mathcal{N}$. However, even when the numbers are not identical, the normalized weighted geometric mean often provides a good approximation. If the network contains linear units, then Equation 52 is not necessary for those units and their average can be computed exactly. The only fundamental assumption for Equation 54 is independence of the selector variables from the activity of the units or the value of the weights so that the expectation of the product is equal to the product of the expectations. Under the same conditions, the same analysis can be applied to dropout gating variables applied to the connections or, for instance, to Gaussian noise added to the unit activities.
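The recursion of Equations 52 to 54 amounts to a single deterministic forward pass in which every gated activity is multiplied by its keep probability. The sketch below compares that pass with the exact ensemble expectation on a tiny logistic network. It is only an illustration under assumptions: one hidden layer, c = λ = 1, dropout on input and hidden units, and illustrative weights chosen so that all 64 subnetworks can be enumerated.

```python
import math
from itertools import product

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def forward(x, W1, W2, g0, g1):
    """Logistic network with one hidden layer. g0 and g1 multiply the input
    and hidden activities: 0/1 gates for a sampled subnetwork, or keep
    probabilities for the deterministic approximation."""
    hidden = [sigmoid(sum(w * g * xi for w, g, xi in zip(row, g0, x))) for row in W1]
    return sigmoid(sum(w * g * h for w, g, h in zip(W2, g1, hidden)))

# Illustrative values (assumptions, not from the paper)
x = [1.0, -0.5, 2.0]
W1 = [[0.5, -1.0, 0.25], [-0.75, 0.5, 0.5], [1.0, 1.0, -0.5]]
W2 = [1.0, -1.0, 0.5]
p0, p1 = [0.8, 0.8, 0.8], [0.5, 0.5, 0.5]

# Exact expectation of the output over all 2^6 unit-dropout subnetworks
keep = p0 + p1
exact = 0.0
for mask in product((0, 1), repeat=len(keep)):
    prob = 1.0
    for m, p in zip(mask, keep):
        prob *= p if m else 1.0 - p
    exact += prob * forward(x, W1, W2, mask[:3], mask[3:])

# Recursive approximation: propagate with activities scaled by keep probabilities
approx = forward(x, W1, W2, p0, p1)
print(exact, approx, abs(exact - approx))
```

The two values are close but not identical here, since Equation 52 is an approximation at every logistic unit; how close they are in general depends on the weights and on the variance of the unit outputs.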

Finally, we measure the consistency of neuron i in layer h for input I by the variance $Var(O_i^h(I))$ taken over all subnetworks $\mathcal{N}$ and their distribution when the input I is fixed. The larger the variance is, the less consistent the neuron is, and the worse we can expect the approximation in Equation 52 to be. Note that for a random variable O in [0,1] the variance is bound to be small anyway, and cannot exceed 1/4. This is because $Var(O) = E(O^2) - (E(O))^2 \le E(O) - (E(O))^2 = E(O)(1 - E(O)) \le 1/4$. The overall input consistency of such a neuron can be defined as the average of $Var(O_i^h(I))$ taken over all training inputs I, and similar definitions can be made for the generalization consistency by averaging over a generalization set.

Before examining the quality of the approximation in Equation 52, we study the properties of the NWGM for averaging ensembles of predictors, as well as the classes of transfer functions satisfying the key dropout NWGM relation (NWGM(f(x)) = f(E(x))) exactly, or approximately.

6 Ensemble Optimization Properties

The weights of a neural network are typically trained by gradient descent on the error function computed using the outputs and the corresponding targets. The error functions typically used are the squared error in regression and the relative entropy in classification. Considering a single example and a single output O with a target t, these error functions can be written as:

$$Error(O, t) = \frac{1}{2}(t - O)^2 \quad \text{and} \quad Error(O, t) = -t \log O - (1 - t) \log(1 - O) \tag{55}$$

Extension to multiple outputs, including classification with multiple classes using normalized exponential transfer functions, is immediate. These error terms can be summed over examples or over predictors in the case of an ensemble. Both error functions are convex up (∪) and thus a simple application of Jensen's inequality shows immediately that the error of any ensemble average is no greater than the average error of the ensemble components. Thus in the case of any ensemble producing outputs $O_1, \ldots, O_m$ and any convex error function we have

$$Error(E) \le E(Error) \tag{56}$$

Note that this is true for any individual example and thus it is also true over any set of examples, even when these are not identically distributed. Equation 56 is the key equation for using ensembles and for averaging them arithmetically.

In the case of dropout with a logistic output unit the previous analyses show that the NWGM is an approximation to E and on this basis alone it is a reasonable way of combining the predictors in the ensemble of all possible subnetworks. However the following stronger result holds. For any convex error function, both the weighted geometric mean WGM and its normalized version NWGM of an ensemble possess the same qualities as the expectation. In other words:

$$Error(WGM) \le E(Error) \tag{57}$$

$$Error(NWGM) \le E(Error) \tag{58}$$

In short, for any convex error function, the error of the expectation, weighted geometric mean, and normalized weighted geometric mean of an ensemble of predictors is never greater than the expected error.

Proof: Recall that if f is convex and g is increasing, then the composition f(g) is convex. This is easily shown by directly applying the definition of convexity (see [39, 16] for additional background on convexity). Equation 57 is obtained by applying Jensen's inequality to the convex function Error(g), where g is the increasing function $g(x) = e^x$, using the points $\log O_1, \ldots, \log O_m$. Equation 58 is obtained by applying Jensen's inequality to the convex function Error(g), where g is the increasing function $g(x) = e^x/(1 + e^x)$, using the points $\log O_1 - \log(1 - O_1), \ldots, \log O_m - \log(1 - O_m)$. The cases where some of the $O_i$ are equal to 0 or 1 can be handled directly, although these are irrelevant for our purposes since the logistic output can never be exactly equal to 0 or 1.
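The inequalities can be spot-checked numerically on a small ensemble. This is a minimal sketch under stated assumptions: the ensemble outputs and weights are pseudo-random illustrative values, the arithmetic mean is checked for both error functions, and the geometric means are checked for the relative entropy only, for which convexity of the composed functions Error($e^x$) and Error($e^x/(1+e^x)$) can be verified directly from their second derivatives.

```python
import math
import random

def relative_entropy(o, t):
    return -t * math.log(o) - (1.0 - t) * math.log(1.0 - o)

def squared_error(o, t):
    return 0.5 * (t - o) ** 2

# Illustrative ensemble (assumption): m outputs in (0, 1) with weights summing to one
rng = random.Random(0)
m = 6
outputs = [rng.uniform(0.05, 0.95) for _ in range(m)]
raw = [rng.random() for _ in range(m)]
probs = [r / sum(raw) for r in raw]

mean = sum(p * o for p, o in zip(probs, outputs))
g = math.exp(sum(p * math.log(o) for p, o in zip(probs, outputs)))
g_comp = math.exp(sum(p * math.log(1.0 - o) for p, o in zip(probs, outputs)))
wgm, nwgm = g, g / (g + g_comp)

results = []
for t in (0.0, 1.0):
    expected_ce = sum(p * relative_entropy(o, t) for p, o in zip(probs, outputs))
    expected_se = sum(p * squared_error(o, t) for p, o in zip(probs, outputs))
    results.append({
        "t": t,
        "mean_ce_ok": relative_entropy(mean, t) <= expected_ce + 1e-12,
        "mean_se_ok": squared_error(mean, t) <= expected_se + 1e-12,
        "wgm_ce_ok": relative_entropy(wgm, t) <= expected_ce + 1e-12,
        "nwgm_ce_ok": relative_entropy(nwgm, t) <= expected_ce + 1e-12,
    })
print(results)
```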

Thus in circumstances where the final output is equal to the weighted mean, weighted geometric mean, or normalized weighted geometric mean of an underlying ensemble, Equations 56, 57, or 58 apply exactly. This is the case, for instance, of linear networks, or non-linear networks where dropout is applied only to the output layer with linear, logistic, or normalized-exponential units.

Since dropout approximates expectations using NWGMs, one may be concerned by the errors introduced by such approximations, especially in a deep architecture when dropout is applied to multiple layers. It is worth noting that the result above can be used at least to “shave off” one layer of approximations by legitimizing the use of NWGMs to combine models in the output layer, instead of the expectation. Similarly, in the case of a regression problem, if the output units are linear then the expectations can be computed exactly at the level of the output layer using the results above on linear networks, thus reducing by one the number of layers where the approximation of expectations by NWGMs must be carried out. Finally, as shown below, the expectation, the WGM, and the NWGM are relatively close to each other and thus there is some flexibility, hence some robustness in how predictors are combined in an ensemble, in the sense that combining models with approximations to these quantities may still outperform the expectation of the error of the individual models.

Finally, it must also be pointed out that in the prediction phase one can also use expected values, estimated at some computational cost using Monte Carlo methods, rather than approximate values obtained by forward propagation in the network with modified weights.

7 Dropout Functional Classes and Transfer Functions

7.1 Dropout Functional Classes

Dropout seems to rely on the fundamental property of the logistic sigmoidal function NWGM(σ) = σ(E). Thus it is natural to wonder what is the class of functions f satisfying this property. Here we show that the class of functions f defined on the real line with range in [0, 1] and satisfying

$$NWGM(f) = \frac{G(f)}{G(f) + G'(f)} = f(E) \tag{59}$$

for any set of points and any distribution, consists exactly of the union of all constant functions f(x) = K with 0 ≤ K ≤ 1 and all logistic functions $f(x) = 1/(1 + c e^{-\lambda x})$. As a reminder, G denotes the geometric mean and G′ denotes the geometric mean of the complements. Note also that all the constant functions with f(x) = K with 0 ≤ K ≤ 1 can also be viewed as logistic functions by taking λ = 0 and c = (1 – K)/K (K = 0 is a limiting case corresponding to c → ∞).

Proof: To prove this result, note first that the [0, 1] range is required by the definitions of G and G′, since these impose that f(x) and 1 – f(x) be positive. In addition, any function f(x) = K with 0 ≤ K ≤ 1 is in the class and we have shown that the logistic functions satisfy the property. Thus we need only to show these are the only solutions.

By applying Equation 59 to pairs of arguments, for any real numbers u and v with u ≤ v and any real number 0 ≤ p ≤ 1, any function in the class must satisfy:

$$f(pu + (1 - p)v) = \frac{f(u)^p f(v)^{1-p}}{f(u)^p f(v)^{1-p} + (1 - f(u))^p (1 - f(v))^{1-p}} \tag{60}$$

Note that if f(u) = f(v) then the function f must be constant over the entire interval [u, v]. Note also that if f(u) = 0 and f(v) > 0 then f = 0 in [u, v). As a result, it is impossible for a non-zero function in the class to satisfy f(u) = 0, f(v1) > 0, and f(v2) > 0. Thus if a function f in the class is not constantly equal to 0, then f > 0 everywhere. Similarly (and by symmetry), if a function f in the class is not constantly equal to 1, then f < 1 everywhere.

Consider now a function f in the class, different from the constant 0 or constant 1 function so that 0 < f < 1 everywhere. Equation 60 shows that on any interval [u, v] f is completely defined by at most two parameters f(u) and f(v). On this interval, by letting x = pu + (1 – p)v or equivalently p = (v – x)/(v – u) the function is given by

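$$f(x) = \frac{1}{1 + \left( \frac{1 - f(u)}{f(u)} \right)^{\frac{v - x}{v - u}} \left( \frac{1 - f(v)}{f(v)} \right)^{\frac{x - u}{v - u}}} \tag{61}$$

or

$$f(x) = \frac{1}{1 + c e^{-\lambda x}} \tag{62}$$

with

$$c = \left( \frac{1 - f(u)}{f(u)} \right)^{\frac{v}{v - u}} \left( \frac{1 - f(v)}{f(v)} \right)^{\frac{-u}{v - u}} \tag{63}$$

and

$$\lambda = \frac{1}{v - u} \log\left( \frac{1 - f(u)}{f(u)} \cdot \frac{f(v)}{1 - f(v)} \right) \tag{64}$$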

Note that a particular simple parameterization is given in terms of

| (65) |

[As a side note, another elegant formula is obtained from Equation 60 for f(0) by taking u = –v and p = 0.5. Simple algebraic manipulations give:

$$f(0) = \frac{1}{1 + \sqrt{\dfrac{(1 - f(-v))(1 - f(v))}{f(-v)\, f(v)}}} \tag{66}$$

]. As a result, on any interval [u, v] the function f must be: (1) continuous, hence uniformly continuous; (2) differentiable, in fact infinitely differentiable; (3) monotone increasing or decreasing, and strictly so unless f is constant; (4) and therefore f must have well defined limits at –∞ and +∞. It is easy to see that the limits can only be 0 or 1. For instance, for the limit at +∞, let u = 0 and v′ = αv, with 0 < α < 1 so that v′ → ∞ as v → ∞. Then

$$f(v') = f(\alpha v) = \frac{f(0)^{1-\alpha} f(v)^{\alpha}}{f(0)^{1-\alpha} f(v)^{\alpha} + (1 - f(0))^{1-\alpha} (1 - f(v))^{\alpha}} \tag{67}$$

As v′ → ∞ the limit must be independent of α and therefore the limit f(v) must be 0 or 1.

Finally, consider $u_1 < u_2 < u_3$. By the above results, the quantities $f(u_1)$ and $f(u_2)$ define a unique logistic function on $[u_1, u_2]$, and similarly $f(u_2)$ and $f(u_3)$ define a unique logistic function on $[u_2, u_3]$. It is easy to see that these two logistic functions must be identical either because of the analyticity or just by taking two new points $v_1$ and $v_2$ with $u_1 < v_1 < u_2 < v_2 < u_3$. Again $f(v_1)$ and $f(v_2)$ define a unique logistic function on $[v_1, v_2]$ which must be identical to the other two logistic functions on $[v_1, u_2]$ and $[u_2, v_2]$ respectively. Thus the three logistic functions above must be identical. In short, f(u) and f(v) define a unique logistic function inside [u, v], with the same unique continuation outside of [u, v].
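The statement that two values f(u) and f(v) determine the logistic function can be made concrete by solving for c and λ. This is a minimal sketch, assuming a reference logistic function with illustrative parameters; `fit_logistic` and `interpolate` are hypothetical helper names. The first recovers (c, λ) from two points, and the second evaluates the two-point NWGM interpolation at pu + (1 − p)v.

```python
import math

def fit_logistic(u, fu, v, fv):
    """Return (c, lam) of f(x) = 1 / (1 + c * exp(-lam * x)) passing
    through (u, fu) and (v, fv), with 0 < fu, fv < 1 and u != v."""
    a = (1.0 - fu) / fu          # equals c * exp(-lam * u)
    b = (1.0 - fv) / fv          # equals c * exp(-lam * v)
    lam = math.log(a / b) / (v - u)
    c = a ** (v / (v - u)) * b ** (-u / (v - u))
    return c, lam

def interpolate(fu, fv, p):
    """Two-point NWGM with weights p and 1 - p."""
    num = fu ** p * fv ** (1.0 - p)
    return num / (num + (1.0 - fu) ** p * (1.0 - fv) ** (1.0 - p))

# Reference logistic with illustrative parameters (assumption)
c0, lam0 = 3.0, 0.7
f = lambda x: 1.0 / (1.0 + c0 * math.exp(-lam0 * x))

u, v, p = -1.0, 2.5, 0.3
c, lam = fit_logistic(u, f(u), v, f(v))
value_from_pair = interpolate(f(u), f(v), p)
value_direct = f(p * u + (1.0 - p) * v)
print(c, lam, value_from_pair, value_direct)
```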

From this result, one may incorrectly infer that dropout is brittle and overly sensitive to the use of logistic non-linear functions. This conclusion is erroneous for several reasons. First, the logistic function is one of the most important and widely used transfer functions in neural networks. Second, regarding the alternative sigmoidal function tanh(x), if we translate it upwards and normalize it so that its range is the [0,1] interval, then it reduces to a logistic function since (1 + tanh(x))/2 = 1/(1 + e^(–2x)). This leads to the formula: NWGM((1 + tanh(x))/2) = (1 + tanh(E(x)))/2. Note also that the NWGM approach cannot be applied directly to tanh, or any other transfer function which assumes negative values, since G and NWGM are defined for positive numbers only. Third, even if one were to use a different sigmoidal function, such as arctan(x) or , when rescaled to [0, 1] its deviations from the logistic function may be small and lead to fluctuations that are in the same range as the fluctuations introduced by the approximation of E by NWGM. Fourth and most importantly, dropout has been shown to work empirically with several transfer functions besides the logistic, including for instance tanh and rectified linear functions. This point is addressed in more detail in the next section. In any case, for all these reasons one should not be overly concerned by the superficially fragile algebraic association between dropout, NWGMs, and logistic functions.
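The tanh identity and the resulting NWGM formula in the paragraph above can be checked numerically. The following is a minimal sketch; the inputs `xs` and the probabilities `probs` are illustrative values chosen here, not taken from the paper.

```python
import math


def logistic(x, lam=1.0):
    """Logistic function with gain lam: 1 / (1 + exp(-lam * x))."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def nwgm(values, probs):
    """Normalized weighted geometric mean G / (G + G') of values in (0, 1)."""
    log_g = sum(p * math.log(o) for o, p in zip(values, probs))
    log_gc = sum(p * math.log(1.0 - o) for o, p in zip(values, probs))
    g, gc = math.exp(log_g), math.exp(log_gc)
    return g / (g + gc)


# Hypothetical inputs x_i with probabilities P_i (illustrative only).
xs = [-1.5, -0.2, 0.4, 2.0]
probs = [0.1, 0.4, 0.3, 0.2]

# Rescaled tanh, which should coincide with a logistic of gain 2.
rescaled = [(1.0 + math.tanh(x)) / 2.0 for x in xs]
identity_gap = max(abs(r - logistic(x, lam=2.0)) for r, x in zip(rescaled, xs))

# NWGM of the rescaled tanh versus the rescaled tanh of the mean input.
mean_x = sum(p * x for x, p in zip(xs, probs))
lhs = nwgm(rescaled, probs)
rhs = (1.0 + math.tanh(mean_x)) / 2.0

print(identity_gap, lhs, rhs)
```

Both quantities agree up to floating-point rounding, because the ratio G/G′ of the rescaled values is the exponential of twice the mean input.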

7.2 Dropout Transfer Functions

In deep learning, one is often interested in using alternative transfer functions, in particular rectified linear functions which can alleviate the problem of vanishing gradients during backpropagation. As pointed out above, for any transfer function it is always possible to compute the ensemble average at prediction time using sampling. However, we can show that the ensemble averaging property of dropout is preserved to some extent also for rectified linear transfer functions, as well as for broader classes of transfer functions.

To see this, we first note that, while the properties of the NWGM are useful for logistic transfer functions, the NWGM is not needed to enable the approximation of the ensemble average by deterministic forward propagation. For any transfer function f, what is really needed is the relation

$$E(f(S)) \approx f(E(S)) \tag{68}$$

Any transfer function satisfying this property can be used with dropout and allow the estimation of the ensemble at prediction time by forward propagation. Obviously linear functions satisfy Equation 68 and this was used in the previous sections on linear networks. A rectified linear function RL(S) with threshold t and slope λ has the form

| (69) |

and is a special case of a piece-wise linear function. Equation 68 is satisfied within each linear portion and will be satisfied around the threshold if the variance of S is small. Everything else being equal, smaller values of λ will also help the approximation. To see this more formally, assume without any loss of generality that t = 0. It is also reasonable to assume that S is approximately normal with mean μS and variance σS² (a treatment without this assumption is given in the Appendix). In this case,

| (70) |

On the other hand,

| (71) |

and thus

| (72) |

where Φ is the cumulative distribution of the standard normal distribution. It is well known that Φ satisfies

| (73) |

when x is large. This allows us to estimate the error in all the cases. If μS = 0 we have

| (74) |

and the error in the approximation is small and directly proportional to λ and σ. If μS < 0 and σS is small, so that |μS|/σS is large, then and

| (75) |

And similarly for the case when μS > 0 and σS is small, so that μS/σS is large. Thus in all these cases Equation 68 holds. As we shall see in Section 11, dropout tends to minimize the variance σS and thus the assumption that σ be small is reasonable. Together, these results show that the dropout ensemble approximation can be used with rectified linear transfer functions. It is also possible to model a population of RL neurons using a hierarchical model where the mean μS is itself a Gaussian random variable. In this case, the error E(RL(S)) – RL(E(S)) is approximately Gaussian distributed around 0. [This last point will become relevant in Section 9.]
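The three regimes discussed above (μS = 0, μS strongly negative, μS strongly positive) can be illustrated numerically. The sketch below assumes t = 0 and a Gaussian S, and uses the standard closed form for the mean of a rectified Gaussian, E(RL(S)) = λ[μS Φ(μS/σS) + σS φ(μS/σS)], where φ is the standard normal density. The function names and the parameter values are chosen here for illustration.

```python
import math
import random


def normal_cdf(x):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def rl(s, lam=1.0):
    """Rectified linear function with threshold 0 and slope lam."""
    return lam * s if s > 0.0 else 0.0


def expected_rl(mu, sigma, lam=1.0):
    """Closed-form E(RL(S)) for S ~ N(mu, sigma^2) and threshold 0."""
    z = mu / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return lam * (mu * normal_cdf(z) + sigma * pdf)


rng = random.Random(0)
n = 50_000
rows = []
for mu, sigma in [(0.0, 0.1), (-1.0, 0.1), (1.0, 0.1), (0.0, 1.0)]:
    monte_carlo = sum(rl(rng.gauss(mu, sigma)) for _ in range(n)) / n
    closed_form = expected_rl(mu, sigma)
    gap = closed_form - rl(mu)  # E(RL(S)) - RL(E(S))
    rows.append((mu, sigma, monte_carlo, closed_form, gap))
    print(f"mu={mu:+.1f} sigma={sigma:.1f} MC={monte_carlo:.4f} "
          f"closed={closed_form:.4f} gap={gap:.2e}")
```

At μS = 0 the gap equals λσS/√(2π), so it shrinks linearly with σS; when |μS|/σS is large the gap is negligible.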

More generally, the same line of reasoning shows that the dropout ensemble approximation can be used with piece-wise linear transfer functions as long as the standard deviation of S is small relative to the length of the linear pieces. Having small angles between subsequent linear pieces also helps strengthen the quality of the approximation.

Furthermore any continuous twice-differentiable function with small second derivative (curvature) can be robustly approximated by a linear function locally and therefore will tend to satisfy Equation 68, provided the variance of S is small relative to the curvature.

In this respect, a rectified linear transfer function can be very closely approximated by a twice-differentiable function by using the integral of a logistic function. For the standard rectified linear transfer function, we have

| (76) |

With this approximation, the second derivative is given by σ′(S) = λσ(S)(1 – σ(S)) which is always bounded by λ/4.
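As a rough illustration, assume the smooth surrogate is the integral of a logistic function with gain λ, that is (1/λ) ln(1 + e^(λS)) (often called the softplus). This specific form is an assumption made for this sketch. The code measures how far the surrogate is from the standard rectified linear function and confirms the λ/4 bound on the second derivative.

```python
import math


def logistic(s, lam):
    """Logistic function with gain lam."""
    return 1.0 / (1.0 + math.exp(-lam * s))


def softplus(s, lam):
    """Integral of the gain-lam logistic: (1 / lam) * ln(1 + exp(lam * s))."""
    return math.log1p(math.exp(lam * s)) / lam


def rl(s):
    """Standard rectified linear function (threshold 0, slope 1)."""
    return max(0.0, s)


lam = 10.0  # illustrative gain
grid = [i / 100.0 for i in range(-300, 301)]

# Largest distance between the smooth surrogate and the rectified linear function.
max_gap = max(abs(softplus(s, lam) - rl(s)) for s in grid)

# Largest second derivative of the surrogate: lam * sigma * (1 - sigma).
max_curvature = max(lam * logistic(s, lam) * (1.0 - logistic(s, lam)) for s in grid)

print(max_gap, math.log(2.0) / lam)
print(max_curvature, lam / 4.0)
```

The largest gap is ln(2)/λ and the largest curvature is λ/4, both reached at S = 0, so a larger gain gives a closer fit to the rectified linear function at the price of a sharper bend at the threshold.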

Finally, for the most general case, the same line of reasoning shows that the dropout ensemble approximation can be used with any continuous, piece-wise twice differentiable transfer function provided the following properties are satisfied: (1) the curvature of each piece must be small; (2) σS must be small relative to the curvature of each piece. Having small angles between the left and right tangents at each junction point also helps strengthen the quality of the approximation. Note that the goal of dropout training is precisely to make σS small, that is to make the output of each unit robust, independent of the details of the activities of the other units, and thus roughly constant over all possible dropout subnetworks.

8 Weighted Arithmetic, Geometric, and Normalized Geometric Means and their Approximation Properties

To further understand dropout, one must better understand the properties and relationships of the weighted arithmetic, geometric, and normalized geometric means and specifically how well the NWGM of a sigmoidal unit approximates its expectation (E(σ) ≈ NWGMS(σ)). Thus consider that we have m numbers O1, . . . , Om with corresponding probabilities P1, . . . , Pm ($\sum_{i=1}^m P_i = 1$). We typically assume that the m numbers satisfy 0 < Oi < 1 although this is not always necessary for the results below. Cases where some of the Oi are equal to 0 or 1 are trivial and can be examined separately. The case of interest of course is when the m numbers are the outputs of a sigmoidal unit of the form Oi = σ(Si) for a given input I = (I1, . . . , In). We let E be the expectation (weighted arithmetic mean) $E = \sum_{i=1}^m P_i O_i$ and G be the weighted geometric mean $G = \prod_{i=1}^m O_i^{P_i}$. When 0 ≤ Oi ≤ 1 we also let $E' = \sum_{i=1}^m P_i (1 - O_i)$ be the expectation of the complements, and $G' = \prod_{i=1}^m (1 - O_i)^{P_i}$ be the weighted geometric mean of the complements. Obviously we have E′ = 1 – E. The normalized weighted geometric mean is given by NWGM = G/(G + G′). We also let V = Var(O). We then have the following properties.

- The weighted geometric mean is always less or equal to the weighted arithmetic mean

  $$G \le E \tag{77}$$

  with equality if and only if all the numbers Oi are equal. This is true regardless of whether the numbers Oi are bounded by one or not. This results immediately from Jensen's inequality applied to the logarithmic function. Although not directly used here, there are interesting bounds for the approximation of E by G, often involving the variance, such as:

  | (78) |

  with equality only if the Oi are all equal. This inequality was originally proved by Cartwright and Field [20]. Several refinements, such as

  | (79) |

  | (80) |

  as well as other interesting bounds can be found in [4, 5, 31, 32, 1, 2].

  Since G ≤ E and G′ ≤ E′ = 1 – E, we have G + G′ ≤ 1, and thus G ≤ G/(G + G′) with equality if and only if all the numbers Oi are equal. Thus the weighted geometric mean is always less or equal to the normalized weighted geometric mean.

- If the numbers Oi satisfy 0 < Oi ≤ 0.5 (consistently low), then

  $$G \le \frac{G}{G + G'} \le E \tag{81}$$

  [Note that if Oi = 0 for some i with Pi ≠ 0, then G = 0 and the result is still true.] This is easily proved using Jensen's inequality and applying it to the function ln x – ln(1 – x) for x ∈ (0, 0.5]. It is also known as the Ky Fan inequality [11, 35, 36] which can also be viewed as a special case of Levinson's inequality [28]. In short, in the consistently low case, the normalized weighted geometric mean is always less or equal to the expectation and provides a better approximation of the expectation than the geometric mean. We will see in a later section why the consistently low case is particularly significant for dropout.

- If the numbers Oi satisfy 0.5 ≤ Oi < 1 (consistently high), then

  $$\frac{G}{G + G'} \ge E \tag{82}$$

  Note that if Oi = 1 for some i with Pi ≠ 0, then G′ = 0 and the result is still true. In short, the normalized weighted geometric mean is greater or equal to the expectation. The proof is similar to the previous case, interchanging x and 1 – x.

  Note that if G/(G + G′) underestimates E then G′/(G + G′) overestimates 1 – E, and vice versa.

- This is the most important set of properties. When the numbers Oi satisfy 0 < Oi < 1, to a first order of approximation we have

  $$G \approx E \quad \text{and} \quad \frac{G}{G + G'} \approx E \tag{83}$$

  Thus to a first order of approximation the WGM and the NWGM are equally good approximations of the expectation. However the results above, in particular property 3, lead one to suspect that the NWGM may be a better approximation, and that bounds or estimates ought to be derivable in terms of the variance. This can be seen by taking a second order approximation, which gives

  $$G \approx E - V \quad \text{and} \quad \frac{G}{G + G'} \approx \frac{E - V}{1 - 2V} \tag{84}$$

  with the differences

  $$E - G \approx V \tag{85}$$

  and

  $$E - \frac{G}{G + G'} \approx \frac{V(1 - 2E)}{1 - 2V} \tag{86}$$

  The difference |E – NWGM| is small to a second order of approximation and over the entire range of values of E. This is because either E is close to 0.5 and then the term 1 – 2E is small, or E is close to 0 or 1 and then the term V is small. Before we provide specific bounds for the difference, note also that if E < 0.5 the second order approximation to the NWGM is below E, and vice versa when E > 0.5.

Since V ≤ E(1 – E), with equality achieved only for 0-1 Bernoulli variables, we have

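$$|E - \mathrm{NWGM}| \lesssim \frac{V|1 - 2E|}{1 - 2V} \le \frac{E(1 - E)|1 - 2E|}{1 - 2V} \le \frac{E(1 - E)|1 - 2E|}{1 - 2E(1 - E)} \le 2E(1 - E)|1 - 2E| \tag{87}$$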

The inequalities are optimal in the sense that they are attained in the case of a Bernoulli variable with expectation E. The function E(1 – E)|1 – 2E|/[1 – 2E(1 – E)] is zero for E = 0, 0.5, or 1, and symmetric with respect to E = 0.5. It is convex down and its maximum over the interval [0, 0.5] is achieved for (Figure 8.1). The function 2E(1 – E)|1 – 2E| is zero for E = 0, 0.5, or 1 , and symmetric with respect to E = 0.5. It is convex down and its maximum over the interval [0, 0.5] is achieved for (Figure 8.2). Note that at the beginning of learning, with small random weights initialization, typically E is close to 0.5. Towards the end of learning, E is often close to 0 or 1. In all these cases, the bounds are close to 0 and the NWGM is close to E.
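The quantities of this section are straightforward to compute for a concrete set of outputs. The sketch below uses illustrative values for the Oi and equal probabilities (both chosen here, not taken from the paper) and evaluates E, V, G, the NWGM, the second order estimate V|1 – 2E|/(1 – 2V), and the three successive bounds quoted in the figure captions.

```python
import math


def dropout_means(values, probs):
    """Return E, V, G, NWGM and the second order estimate and bounds on |E - NWGM|."""
    e = sum(p * o for o, p in zip(values, probs))
    v = sum(p * (o - e) ** 2 for o, p in zip(values, probs))
    g = math.exp(sum(p * math.log(o) for o, p in zip(values, probs)))
    gc = math.exp(sum(p * math.log(1.0 - o) for o, p in zip(values, probs)))
    skew = abs(1.0 - 2.0 * e)
    return {
        "E": e,
        "V": v,
        "G": g,
        "NWGM": g / (g + gc),
        "second_order": v * skew / (1.0 - 2.0 * v),
        "bound_1": e * (1.0 - e) * skew / (1.0 - 2.0 * v),
        "bound_2": e * (1.0 - e) * skew / (1.0 - 2.0 * e * (1.0 - e)),
        "bound_3": 2.0 * e * (1.0 - e) * skew,
    }


# Hypothetical "consistently low" outputs with uniform probabilities.
values = [0.30, 0.40, 0.45, 0.50]
probs = [0.25] * 4

stats = dropout_means(values, probs)
for name, value in stats.items():
    print(f"{name:>12s} = {value:.6f}")
print(f"{'|E - NWGM|':>12s} = {abs(stats['E'] - stats['NWGM']):.6f}")
```

Because these values are consistently low, the output also illustrates the ordering G ≤ NWGM ≤ E; the three bounds are nested because V ≤ E(1 – E) ≤ 0.25.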

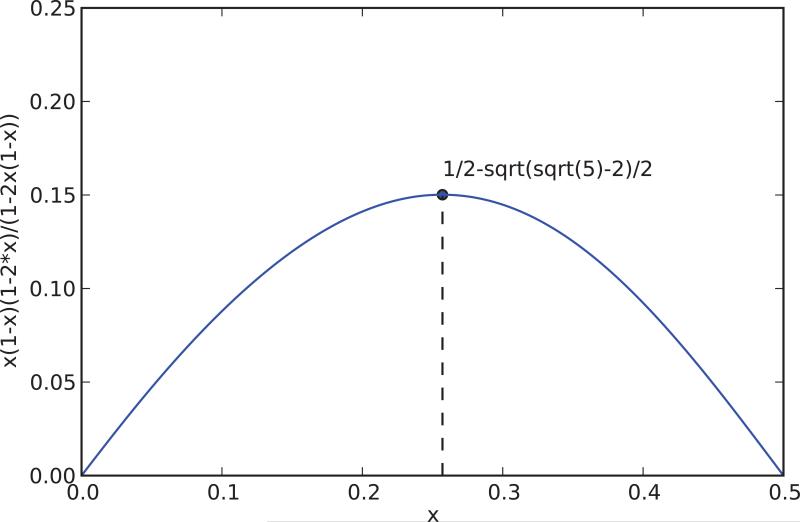

Figure 8.1.

The curve associated with the approximate bound |E – NWGM| ≲ E(1 – E)|1 – 2E|/[1 – 2E(1 – E)] (Equation 87).

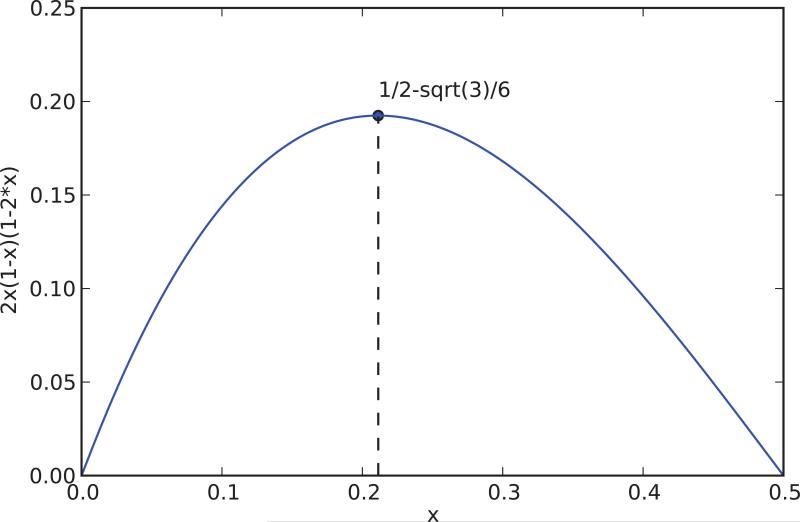

Figure 8.2.

The curve associated with the approximate bound |E – NWGM| ≲ 2E(1 – E)|1 – 2E| (Equation 87).

Note also that it is possible to have E = NWGM even when the numbers Oi are not identical. For instance, if O1 = 0.25, O2 = 0.75, and P1 = P2 = 0.5 we have G = G′ and thus: E = NWGM = 0.5.

In short, in general the NWGM is a better approximation to the expectation E than the geometric mean G. The property is always true to a second order of approximation. Furthermore, it holds exactly whenever NWGM ≤ E, since we must then have G ≤ NWGM ≤ E. Furthermore, in general the NWGM is a better approximation to the mean than a random sample. Using a randomly chosen Oi as an estimate of the mean E leads to an error that scales like the standard deviation √V, whereas the NWGM leads to an error that scales like V.

When NWGM > E, “third order” cases can be found where

$$\mathrm{NWGM} - E \ge E - G \tag{88}$$

An example is provided by: O1 = 0.622459, O2 = 0.731059 with a uniform distribution (p1 = p2 = 0.5). In this case, E = 0.676759, G = 0.674577, G′ = 0.318648, NWGM = 0.679179, E – G = 0.002182 and NWGM – E = 0.002420.
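The numbers in this example can be reproduced directly. The short sketch below recomputes E, G, G′, and the NWGM from the two stated outputs and checks that the NWGM is slightly farther from E than G is.

```python
import math

# Outputs and probabilities as stated in the example above.
o1, o2 = 0.622459, 0.731059
p1 = p2 = 0.5

e = p1 * o1 + p2 * o2
g = (o1 ** p1) * (o2 ** p2)
g_comp = ((1.0 - o1) ** p1) * ((1.0 - o2) ** p2)
nwgm = g / (g + g_comp)

print(f"E      = {e:.6f}")
print(f"G      = {g:.6f}")
print(f"G'     = {g_comp:.6f}")
print(f"NWGM   = {nwgm:.6f}")
print(f"E - G  = {e - g:.6f}")
print(f"NWGM-E = {nwgm - e:.6f}")
```

The two outputs are, to six decimals, the standard logistic function evaluated at 0.5 and 1, so the NWGM is the logistic of the mean input 0.75.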

Extreme Cases: Note also that if for some i, Oi = 1 with non-zero probability, then G′ = 0. In this case, NWGM = 1, unless there is a j ≠ i such that Oj = 0 with non-zero probability.

Likewise if for some i, Oi = 0 with non-zero probability, then G = 0. In this case, NWGM = 0, unless there is a j ≠ i such that Oj = 1 with non-zero probability. If both Oi = 1 and Oj = 0 are achieved with non-zero probability, then NWGM = 0/0 is undefined. In principle, in a sigmoidal neuron, the extreme output values 0 and 1 are never achieved, although in simulations this could happen due to machine precision. In all these extreme cases, whether the NWGM is a good approximation of E or not depends on the exact distribution of the values. For instance, if for some i, Oi = 1 with non-zero probability, and all the other Oj's are also close to 1, then NWGM = 1 ≈ E. On the other hand, if Oi = 1 with small but non-zero probability, and all the other Oj's are close to 0, then NWGM = 1 is not a good approximation of E.

Higher Order Moments: It would be useful to be able to derive estimates also for the variance V, as well as other higher order moments of the numbers O, especially when O = σ(S). While the NWGM can easily be generalized to higher order moments, it does not seem to yield simple estimates as for the mean (see Appendix). However higher order moments in a deep network trained with dropout can easily be approximated, as in the linear case (see Section 9).

Proof: To prove these results, we compute first and second order approximations. Depending on the case of interest, the numbers 0 < Oi < 1 can be expanded around E, around G, or around 0.5 (or around 0 or 1 when they are consistently close to these boundaries). Without assuming that they are consistently low or high, we expand them around 0.5 by writing Oi = 0.5 + εi where 0 ≤ |εi| ≤ 0.5. [Estimates obtained by expanding around E are given in the Appendix]. For any distribution P1, . . . , Pm over the m subnetworks, we have E(O) = 0.5 + E(ε) and Var(O) = Var(ε). As usual, let . To a first order of approximation,

| (89) |

The approximation is obtained using a Taylor expansion and the fact that 2|εi| < 1. In a similar way, we have G′ ≈ 1 – E and G/(G + G′) ≈ E. These approximations become more accurate as εi → 0. To a second order of approximation, we have

| (90) |

where R3(εi) is the remainder of order three

| (91) |

and |ui| ≤ 2|εi|. Expanding the product gives

| (92) |

which reduces to

| (93) |

By symmetry, we also have

| (94) |

where again R3(ε) is the higher order remainder. Neglecting the remainder and writing E = E(O) and V = Var(O) we have

| (95) |

Thus the differences between the mean on one hand, and the geometric mean and the normalized geometric means on the other, satisfy

| (96) |

and

| (97) |

To know when the NWGM is a better approximation to E than the WGM, we consider when the factor |(1 – 2E)/(1 – 2V)| is less or equal to one. There are four cases:

1. E ≤ 0.5 and V ≤ 0.5 and E ≥ V.

2. E ≤ 0.5 and V ≥ 0.5 and E + V ≥ 1.

3. E ≥ 0.5 and V ≤ 0.5 and E + V ≤ 1.

4. E ≥ 0.5 and V ≥ 0.5 and E ≤ V.

However, since 0 < Oi < 1, we have V ≤ E – E² = E(1 – E) ≤ 0.25. So only cases 1 and 3 are possible and in both cases the relationship is trivially satisfied. Thus in all cases, to a second order of approximation, the NWGM is closer to E than the WGM.

9 Dropout Distributions and Approximation Properties

Throughout the rest of this article, we let $W_i^l$ denote the deterministic variables of the dropout approximation (or ensemble network) with

| (98) |

in the case of dropout applied to the nodes. The main question we wish to consider is whether $W_i^l$ is a good approximation to $E(O_i^l)$ for every input, every layer l, and any unit i.

9.1 Dropout Induction

Dropout relies on the correctness of the approximation of the expectation of the activity of each unit over all its dropout subnetworks by the corresponding deterministic variable in the form

| (99) |

for each input, each layer l, and each unit i. The correctness of this approximation can be seen by induction. For the first layer, the property is obvious since , using the results of Section 8. Now assume that the property is true up to layer l. Again, by the results in Section 8,

| (100) |

which can be computed by

| (101) |

The approximation in Equation 101 uses of course the induction hypothesis. This induction, however, does not provide any sense of the errors being made, and whether these errors increase significantly with the depth of the networks. The error can be decomposed into two terms

| (102) |

Thus in what follows we study each term.

9.2 Sampling Distributions

In Section 8, we have shown that in general NWGM(O) provides a good approximation to E(O). To further understand the dropout approximation and its behavior in deep networks, we must look at the distribution of the difference α = E(O) – NWGM(O). Since both E and NWGM are deterministic functions of a set of O values, a distribution can only be defined if we look at different samples of O values taken from a more general distribution. These samples could correspond to dropout samples of the output of a given neuron. Note that, since the number of dropout subnetworks of a neuron is exponentially large, only a sample can be accessed during simulations of large networks. However, we can also consider that these samples are associated with a population of neurons, for instance the neurons in a given layer. While we cannot expect the neurons in a layer to behave homogeneously for a given input, they can in general be separated in a small number of populations, such as neurons that have low activity, medium activity, and high activity, and the analysis below can be applied to each one of these populations separately. Given a sample of m values O1, . . . , Om, we are going to show through simulations and more formal arguments that in general the difference α computed on the sample has a mean close to 0, a small standard deviation, and in many cases is approximately normally distributed. For instance, if the O originate from a uniform distribution over [0,1], it is easy to see that both E and NWGM are approximately normally distributed, with mean 0.5, and a small variance decreasing as 1/m.

9.3 Mean and Standard Deviation of the Normalized Weighted Geometric Mean

More generally, assume that the variables Oi are i.i.d. with mean μO and variance σO². Then the variables Si satisfying Oi = σ(Si) are also i.i.d. with mean μS and variance σS². Densities for S when O has a Beta distribution, or for O when S has a Gaussian distribution, are derived in the Appendix. These could be used to model in more detail non uniform distributions, and distributions corresponding to low or high activity. For m sufficiently large, by the central limit theorem2 the means of these quantities are approximately normal with:

| (103) |

If these standard deviations are small enough, which is the case for instance when m is large, then σ can be well approximated by a linear function with slope t over the corresponding small range. In this case, is also approximately normal with

| (104) |

Note that |t| ≤ λ/4 since σ′ = λσ(1 – σ). Very often, σ(μS) ≈ μO. This is particularly true if μO = 0.5. Away from 0.5, a bias can appear—for instance we know that if all the Oi < 0.5 then NWGM < E—but this bias is relatively small. This is confirmed by simulations, as shown in Figure 9.1 using Gaussian or uniform distributions to generate the values Oi. Finally, note that the variance of and are of the same order and behave like C1/m and C2/m respectively as m → ∞. Furthermore if is small.

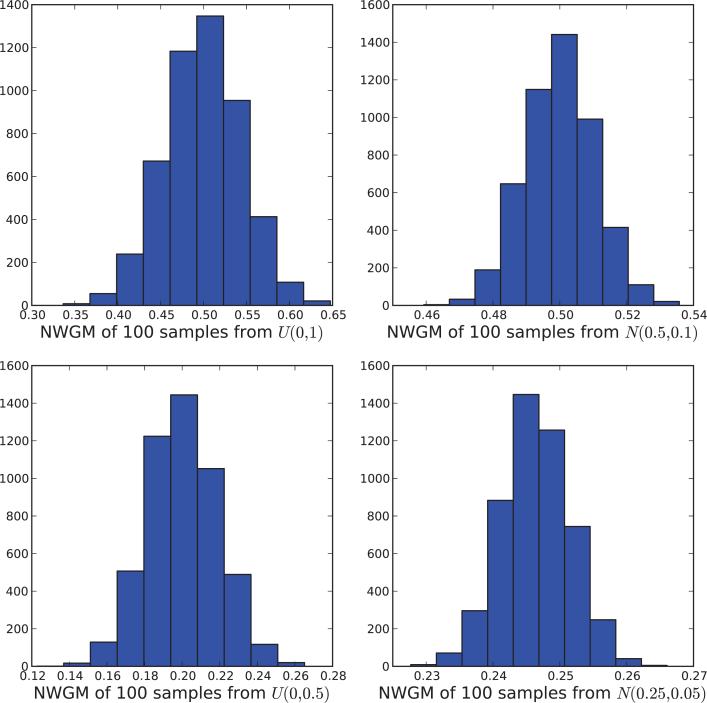

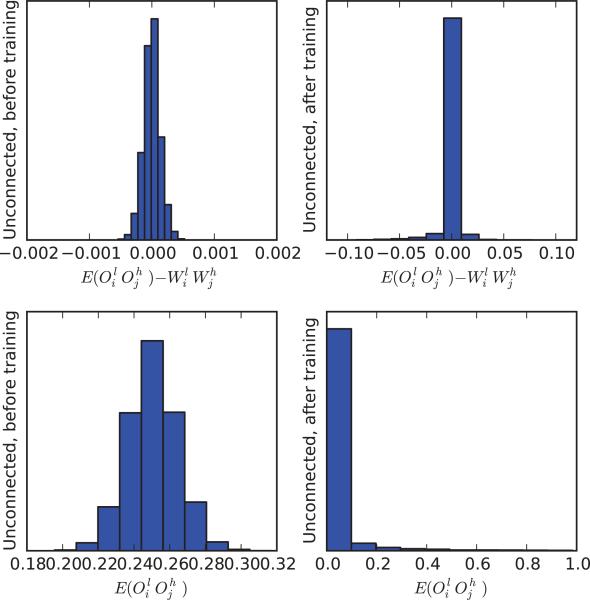

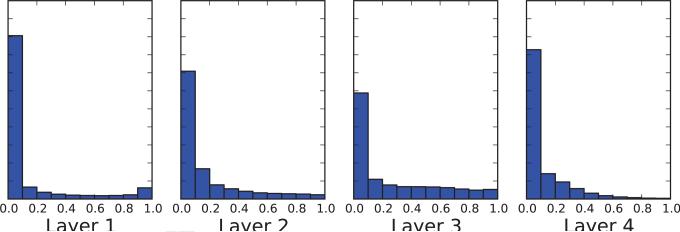

Figure 9.1.

Histogram of NWGM values for a random sample of 100 values O taken from: (1) the uniform distribution over [0,1] (upper left); (2) the uniform distribution over [0,0.5] (lower left); (3) the normal distribution with mean 0.5 and standard deviation 0.1 (upper right); and (4) the normal distribution with mean 0.25 and standard deviation 0.05 (lower right). All probability weights are equal to 1/100. Each sampling experiment is repeated 5,000 times to build the histogram.
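A sampling experiment of this kind can be sketched with the standard library alone. The code below follows the set-up of the caption (samples of 100 values, equal weights 1/100, the same four distributions) but uses 1,000 repetitions instead of 5,000 to keep the run short, and it records the difference between the sample mean and the sample NWGM rather than a histogram. Clipping sampled values into (0, 1) is an assumption added here so that the logarithms are always defined.

```python
import math
import random
import statistics


def clip(o, eps=1e-9):
    """Keep a sampled output strictly inside (0, 1)."""
    return min(max(o, eps), 1.0 - eps)


def mean_minus_nwgm(sample):
    """E - NWGM for equal weights; here NWGM is the logistic of the mean logit."""
    m = len(sample)
    mean = sum(sample) / m
    mean_logit = sum(math.log(o / (1.0 - o)) for o in sample) / m
    nwgm = 1.0 / (1.0 + math.exp(-mean_logit))
    return mean - nwgm


def experiment(draw, m=100, repeats=1000, seed=0):
    """Mean and standard deviation of E - NWGM over repeated samples of size m."""
    rng = random.Random(seed)
    diffs = [
        mean_minus_nwgm([clip(draw(rng)) for _ in range(m)])
        for _ in range(repeats)
    ]
    return statistics.fmean(diffs), statistics.pstdev(diffs)


distributions = {
    "uniform[0,1]": lambda rng: rng.random(),
    "uniform[0,0.5]": lambda rng: rng.uniform(0.0, 0.5),
    "normal(0.5,0.1)": lambda rng: rng.gauss(0.5, 0.1),
    "normal(0.25,0.05)": lambda rng: rng.gauss(0.25, 0.05),
}

results = {name: experiment(draw) for name, draw in distributions.items()}
for name, (mean_diff, std_diff) in results.items():
    print(f"{name:>18s}: mean(E - NWGM) = {mean_diff:+.5f}, std = {std_diff:.5f}")
```

For the two distributions centered at 0.5 the mean difference is close to zero, while for the two consistently low distributions it is positive, in line with the bias described in the text.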

If necessary, it is also possible to derive better and more general estimates of E(O), under the assumption that S is Gaussian by approximating the logistic function with the cumulative distribution of a Gaussian, as described in the Appendix (see also [41]).

If we sample from many neurons whose activities come from the same distribution, the sample mean and the sample NWGM will be normally distributed and have roughly the same mean. The difference will have approximately zero mean. To show that the difference is approximately normal we need to show that E and NWGM are uncorrelated.

9.4 Correlation between the Mean and the Normalized Weighted Geometric Mean

We have

| (105) |

Thus to estimate the variance of the difference, we must estimate the covariance between and . As we shall see, this covariance is close to null.

In this section, we assume again samples of size m from a distribution on O with mean E = μO and variance . To simplify the notation, we use , , and to denote the random variables corresponding to the mean, variance, and normalized weighted geometric mean of the sample. We have seen, by doing a Taylor expansion around 0.5, that .

We first consider the case where E = NWGM = 0.5. In this case, the covariance of and can be estimated as

| (106) |

We have and . Thus in short the covariance is of order V/m and goes to 0 as the sample size m goes to infinity. For the Pearson correlation, the denominator is the product of two similar standard deviations and scales also like V/m. Thus the correlation should be roughly constant and close to 1. More generally, even when the mean E is not equal to 0.5, we still have the approximations

| (107) |

And the leading term is still of order V/m [Similar results are also obtained by using the expansions around 0 or 1 given in the Appendix to model populations of neurons with low or high activity]. Thus again the covariance between NWGM and E goes to 0, and the Pearson correlation is constant and close to 1. These results are confirmed by simulations in Figure 9.2.

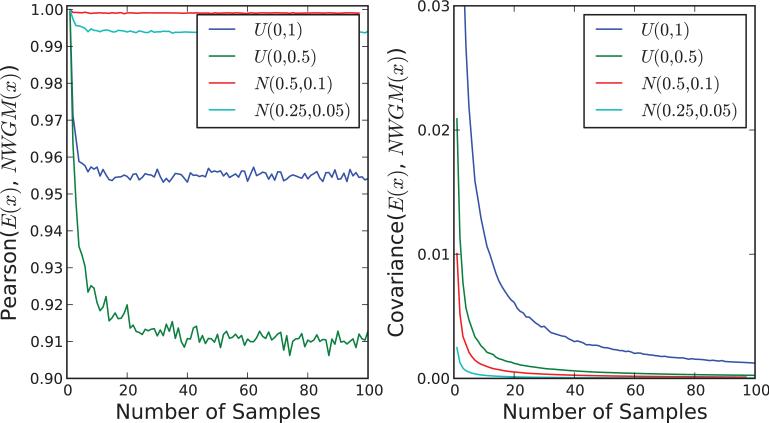

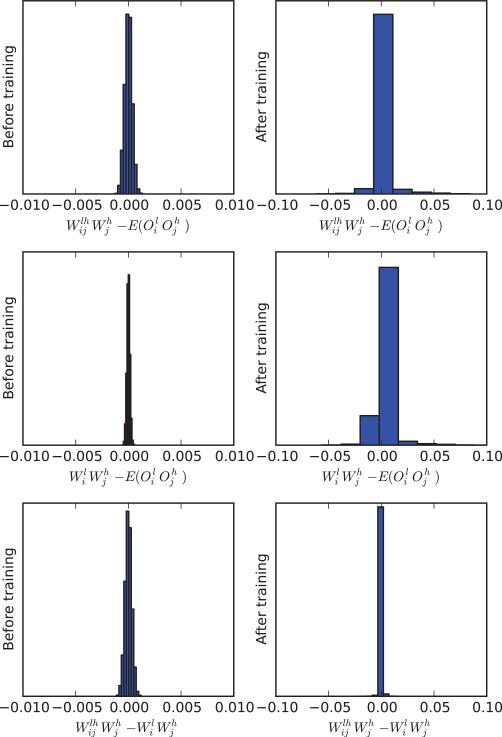

Figure 9.2.

Behavior of the Pearson correlation coefficient (left) and the covariance (right) between the empirical expectation E and the empirical NWGM as a function of the number of samples and sample distribution. For each number of samples, the sampling procedure is repeated 10,000 times to estimate the Pearson correlation and covariance. The distributions are the uniform distribution over [0,1], the uniform distribution over [0,0.5], the normal distribution with mean 0.5 and standard deviation 0.1, and the normal distribution with mean 0.25 and standard deviation 0.05.
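The qualitative behavior described in this caption can be reproduced with a small simulation. The sketch below uses only the uniform distribution over [0,1], three sample sizes, and 2,000 repetitions per size; all of these are choices made here to keep the run short. Values are clipped into (0, 1) so that the logarithms are defined.

```python
import math
import random


def sample_mean_and_nwgm(m, rng):
    """Draw m outputs uniformly in (0, 1); return their mean and equal-weight NWGM."""
    sample = [min(max(rng.random(), 1e-9), 1.0 - 1e-9) for _ in range(m)]
    mean = sum(sample) / m
    mean_logit = sum(math.log(o / (1.0 - o)) for o in sample) / m
    return mean, 1.0 / (1.0 + math.exp(-mean_logit))


def cov_and_corr(m, repeats=2000, seed=0):
    """Empirical covariance and Pearson correlation between sample mean and NWGM."""
    rng = random.Random(seed)
    pairs = [sample_mean_and_nwgm(m, rng) for _ in range(repeats)]
    mean_e = sum(e for e, _ in pairs) / repeats
    mean_n = sum(n for _, n in pairs) / repeats
    cov = sum((e - mean_e) * (n - mean_n) for e, n in pairs) / repeats
    var_e = sum((e - mean_e) ** 2 for e, _ in pairs) / repeats
    var_n = sum((n - mean_n) ** 2 for _, n in pairs) / repeats
    return cov, cov / math.sqrt(var_e * var_n)


results = {m: cov_and_corr(m) for m in (10, 50, 250)}
for m, (cov, corr) in results.items():
    print(f"m = {m:4d}: covariance = {cov:.6f}, Pearson correlation = {corr:.3f}")
```

The covariance shrinks roughly like 1/m while the Pearson correlation stays roughly constant and high.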

Combining the previous results we have

| (108) |

Thus in general and are random variables with: (1) similar, if not identical, means; (2) variances and covariance that decrease to 0 inversely to the sample size; (3) approximately normal distributions. Thus E – NWGM is approximately normally distributed around zero. The NWGM behaves like a random variable with small fluctuations above and below the mean. [Of course contrived examples can be constructed (for instance with small m or small networks) which deviate from this general behavior.]

9.5 Dropout Approximations: the Cancellation Effects

To complete the analysis of the dropout approximation of by , we show by induction over the layers that where in general the error term is small and approximately normally distributed with mean 0. Furthermore the error is uncorrelated with the error for l > 1.

First, the property is true for l = 1 since and the results of the previous sections apply immediately to this case. For the induction step, we assume that the property is true up to layer l. At the following layer, we have

| (109) |

Using a first order Taylor expansion

| (110) |

or more compactly

| (111) |

thus

| (112) |

As a sum of many linear small terms, is approximately normally distributed. By linearity of the expectation

| (113) |

By linearity of the variance with respect to sums of independent random variables

| (114) |

This variance is small since for the standard logistic function (and much smaller than 1/16 at the end of learning, , and is small by induction. The weights are small at the beginning of learning and as we shall see in Section 11 dropout performs weight regularization automatically. While this is not observed in the simulations used here, one concern is that with very large layers the sum could become large. We leave a more detailed study of this issue for future work. Finally, we need to show that and are uncorrelated. Since both terms have approximately mean 0, we compute the mean of their product

| (115) |

By linearity of the expectation

| (116) |

since

In summary, in general both and can be viewed as good approximations to with small deviations that are approximately Gaussians with mean zero and small standard deviations. These deviations act like noise and cancel each other to some extent preventing the accumulation of errors across layers.

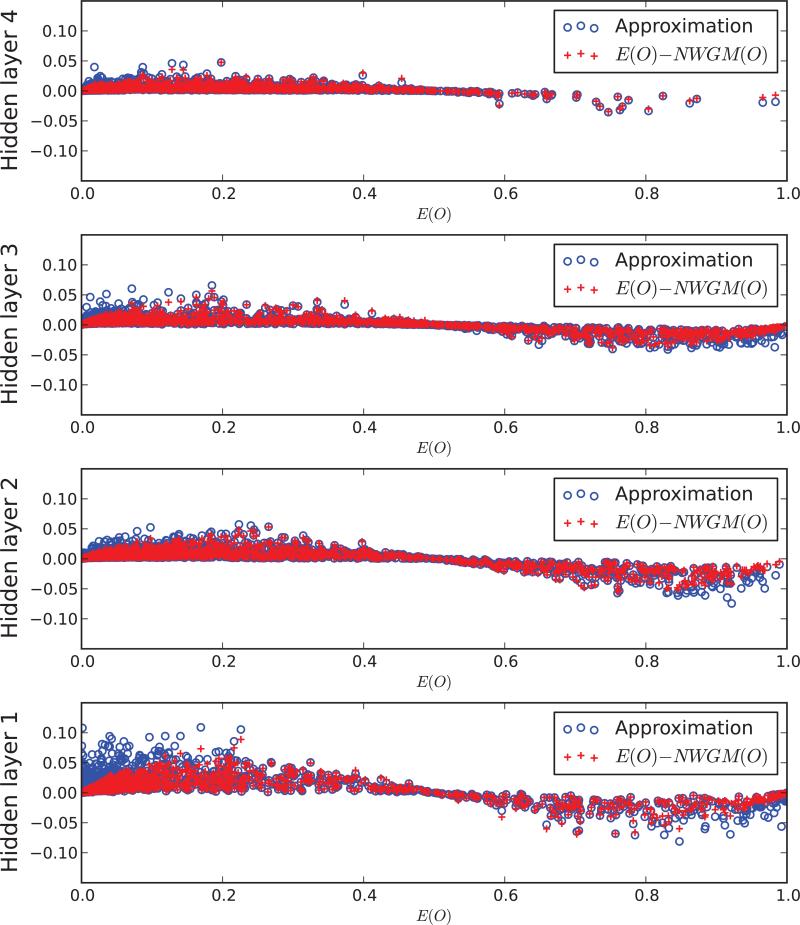

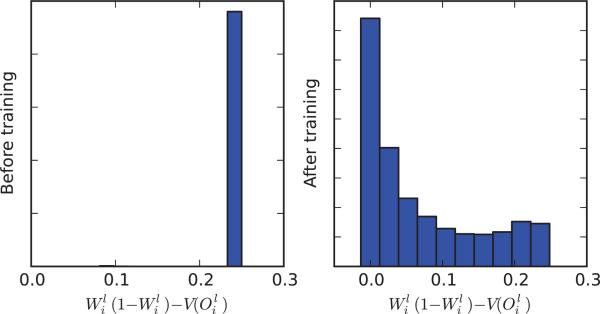

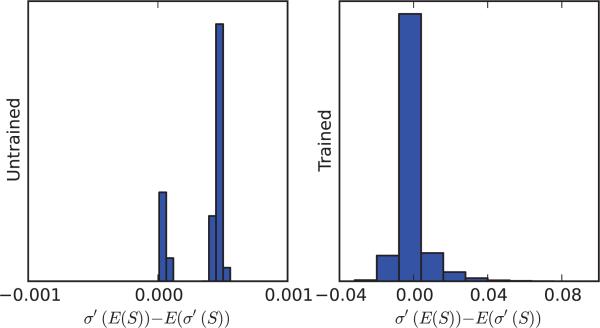

These results and those of the previous section are confirmed by simulation results given by Figures 9.3, 9.4, 9.5, 9.6, and 9.7. The simulations are based on training a deep neural network classifier on the MNIST handwritten characters dataset with layers of size 784-1200-1200-1200-1200-10 replicating the results described in [27], using p = 0.8 for the input layer and p = 0.5 for the hidden layers. The raster plots accumulate the results obtained for 10 randomly selected input vectors. For fixed weights and a fixed input vector, 10,000 Monte Carlo simulations are used to sample the dropout subnetworks and estimate the distribution of activities O of each neuron in each layer. These simulations use the weights obtained at the end of learning, except in the cases where the beginning and end of learning are compared (Figures 9.6 and 9.7). In general, the results show how well the NWGMs and the deterministic values W approximate the true expectation E in each layer, both at the beginning and the end of learning, and how the deviations can roughly be viewed as small, approximately Gaussian, fluctuations well within the bounds derived in Section 8.
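The same kind of Monte Carlo comparison can be sketched on a toy network. The code below does not reproduce the MNIST experiment: it uses a small, untrained network with random Gaussian weights, no biases, and layer sizes 10-15-15, all chosen here for illustration. It assumes that p is the probability of keeping a unit and that the deterministic ensemble pass scales each unit's output by its keep probability before it enters the next layer.

```python
import math
import random


def logistic(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, weights, keep_probs, rng=None):
    """Return the activations of every layer.

    rng is None: deterministic ensemble pass (inputs to each layer are
    scaled by their keep probability). Otherwise: one sampled dropout
    subnetwork, where each unit feeding a layer is kept with probability p.
    """
    acts, layers = x, []
    for w, p in zip(weights, keep_probs):
        if rng is None:
            gated = [p * a for a in acts]
        else:
            gated = [a if rng.random() < p else 0.0 for a in acts]
        acts = [logistic(sum(wij * g for wij, g in zip(row, gated))) for row in w]
        layers.append(acts)
    return layers


rng = random.Random(0)
sizes = [10, 15, 15]      # hypothetical toy architecture
keep_probs = [0.8, 0.5]   # keep probability for inputs, then for hidden units
weights = [
    [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]
x = [rng.random() for _ in range(sizes[0])]

# Deterministic values W from a single forward pass.
w_det = forward(x, weights, keep_probs)

# Monte Carlo estimate of E(O) over sampled dropout subnetworks.
n_samples = 2000
sums = [[0.0] * n for n in sizes[1:]]
for _ in range(n_samples):
    for layer_sum, layer in zip(sums, forward(x, weights, keep_probs, rng)):
        for i, o in enumerate(layer):
            layer_sum[i] += o
e_mc = [[s / n_samples for s in layer_sum] for layer_sum in sums]

max_dev = [
    max(abs(w - e) for w, e in zip(w_layer, e_layer))
    for w_layer, e_layer in zip(w_det, e_mc)
]
print("max |W - E(O)| per layer:", max_dev)
```

Part of the reported deviation is Monte Carlo noise from the finite number of sampled subnetworks, so increasing `n_samples` gives a cleaner view of the approximation error itself.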

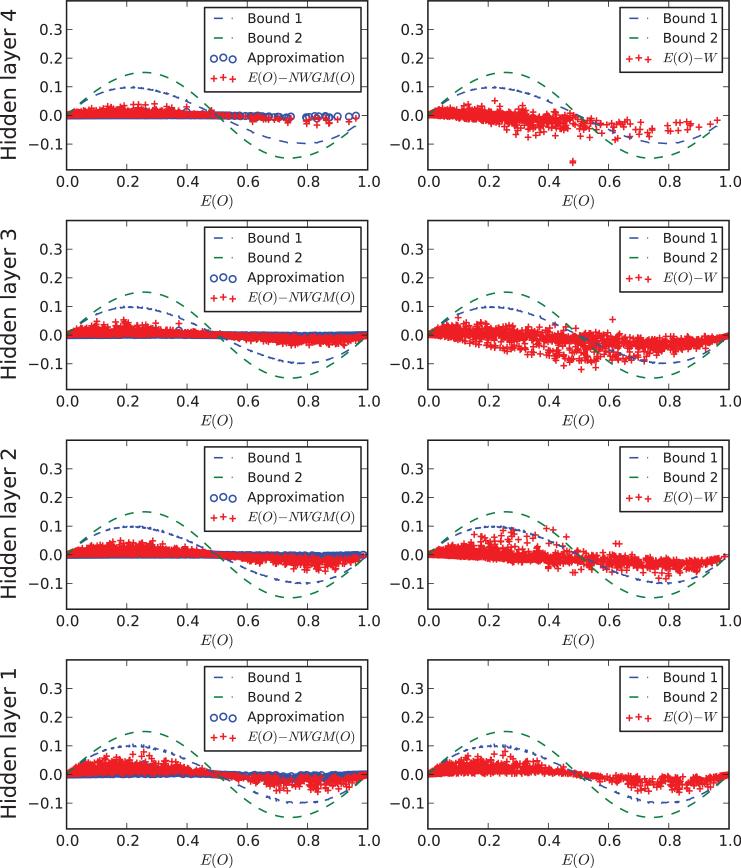

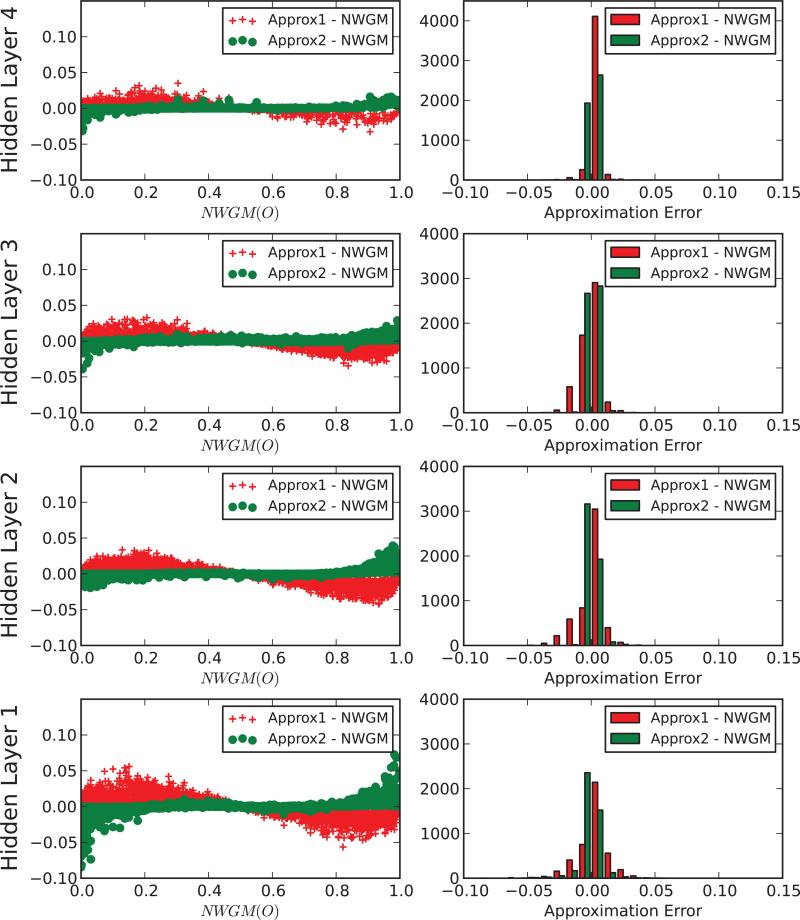

Figure 9.3.

Each row corresponds to a scatter plot for all the neurons in each one of the four hidden layers of a deep classifier trained on the MNIST dataset (see text) after learning. Scatter plots are derived by cumulating the results for 10 randomly chosen inputs. Dropout expectations are estimated using 10,000 dropout samples. The second order approximation in the left column (blue dots) corresponds to |E – NWGM| ≈ V|1 – 2E|/(1 – 2V) (Equation 87). Bound 1 is the variance-dependent bound given by E(1 – E)|1 – 2E|/(1 – 2V) (Equation 87). Bound 2 is the variance-independent bound given by E(1 – E)|1 – 2E|/(1 – 2E(1 – E)) (Equation 87). In the right column, W represents the neuron activations in the deterministic ensemble network with the weights scaled appropriately and corresponding to the “propagated” NWGMs.

Figure 9.4.

Similar to Figure 9.3, using the sharper but potentially more restricted second order approximation to the NWGM obtained by using a Taylor expansion around the mean (see Appendix B, Equation 202).

Figure 9.5.

Similar to Figures 9.3 and 9.4. Approximation 1 corresponds to the second order Taylor approximation around 0.5: |E – NWGM| ≈ V|1 – 2E|/(1 – 2V) (Equation 87). Approximation 2 is the sharper but more restrictive second order Taylor approximation around the mean E (see Appendix B, Equation 202). Histograms for the two approximations are interleaved in each figure of the right column.

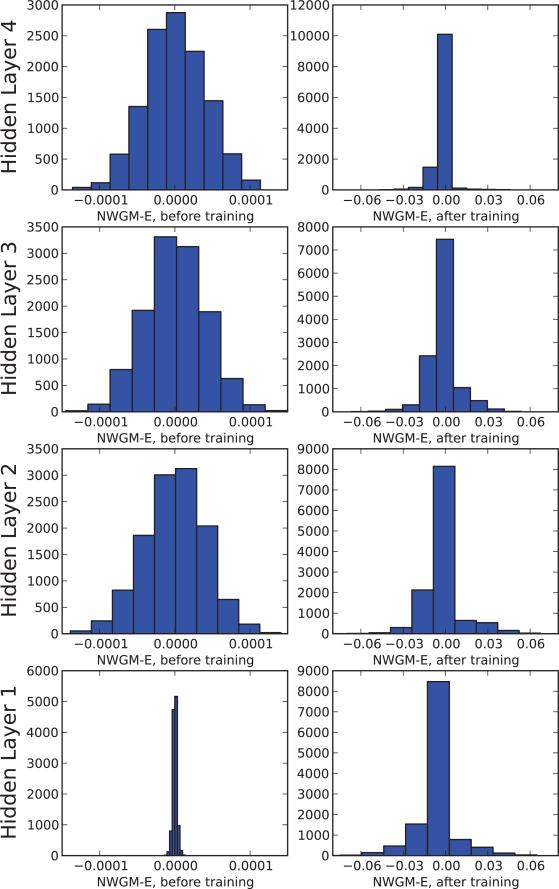

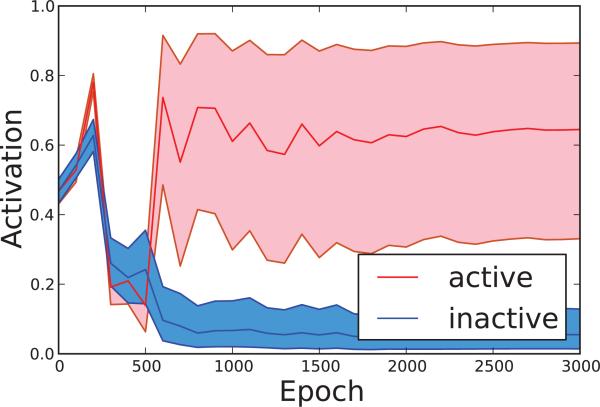

Figure 9.6.

Empirical distribution of NWGM – E is approximately Gaussian at each layer, both before and after training. This was performed with Monte Carlo simulations over dropout subnetworks with 10,000 samples for each of 10 fixed inputs. After training, the distribution is slightly asymmetric because the activation of the neurons is asymmetric. The distribution in layer one before training is particularly tight simply because the input to the network (MNIST data) is relatively sparse.

Figure 9.7.

Empirical distribution of W – E is approximately Gaussian at each layer, both before and after training. This was performed with Monte Carlo simulations over dropout subnetworks with 10,000 samples for each of 10 fixed inputs. After training, the distribution is slightly asymmetric because the activation of the neurons is asymmetric. The distribution in layer one before training is particularly tight simply because the input to the network (MNIST data) is relatively sparse.

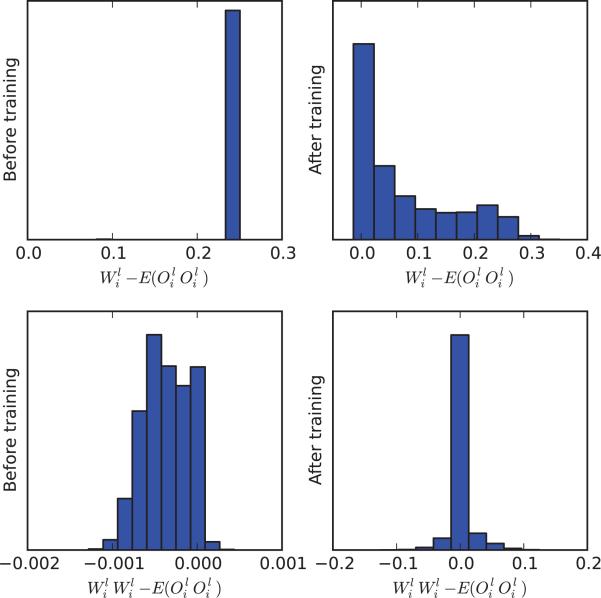

9.6 Dropout Approximations: Estimation of Variances and Covariances

We have seen that the deterministic values Ws can be used to provide very simple but effective estimates of the values E(O)s across an entire network under dropout. Perhaps surprisingly, the Ws can also be used to derive approximations of the variances and covariances of the units as follows.

First, for the dropout variance of a neuron, we can use

| (117) |

or

| (118) |

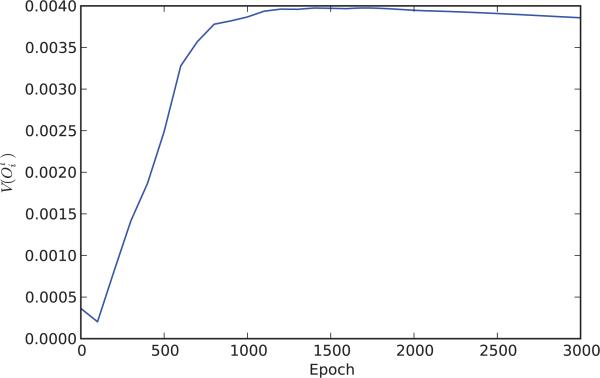

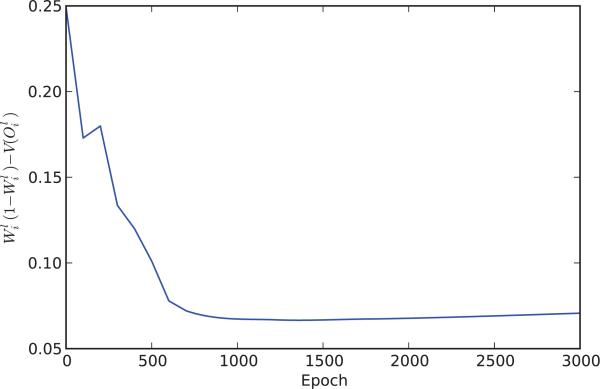

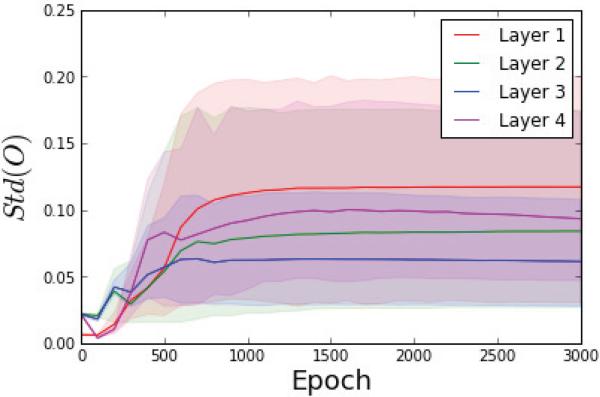

These two approximations can be viewed respectively as rough upper bounds and lower bounds to the variance. For neurons whose activities are close to 0 or 1, and thus in general for neurons towards the end of learning, these two bounds are similar to each other. This is not the case at the beginning of learning when, with very small weights and a standard logistic transfer function, W ≈ 0.5 and W(1 – W) ≈ 0.25 (Figures 9.8 and 9.9). At the beginning and the end of learning, the variances are small and so “0” is the better approximation. However, during learning, variances can be expected to be larger and closer to their approximate upper bound W(1 – W) (Figures 9.10 and 9.11).

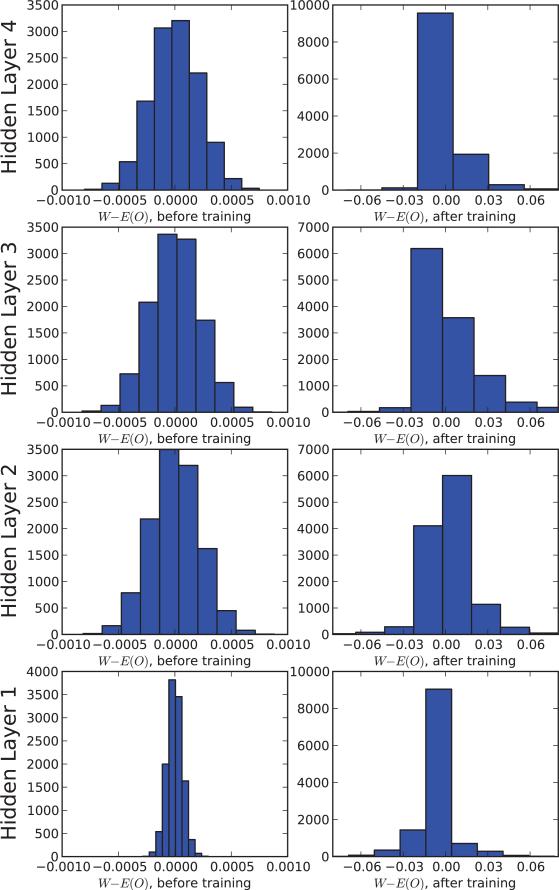

Figure 9.8.

Approximation of by and by corresponding respectively to the estimates and for the variance for neurons in a MNIST classifier network before and after training. Histograms are obtained by taking all non-input neurons and aggregating the results over 10 random input vectors.

Figure 9.9.

Histogram of the difference between the dropout variance of O and its approximate upper bound W(1 – W) in a MNIST classifier network before and after training. Histograms are obtained by taking all non-input neurons and aggregating the results over 10 random input vectors. Note that at the beginning of learning, with random small weights, E(O) ≈ W ≈ 0.5, and thus V(O) ≈ 0 whereas W(1 – W) ≈ 0.25.

Figure 9.10.

Temporal evolution of the dropout variance V(O) during training averaged over all hidden units.

Figure 9.11.

Temporal evolution of the difference W(1 – W ) – V during training averaged over all hidden units.

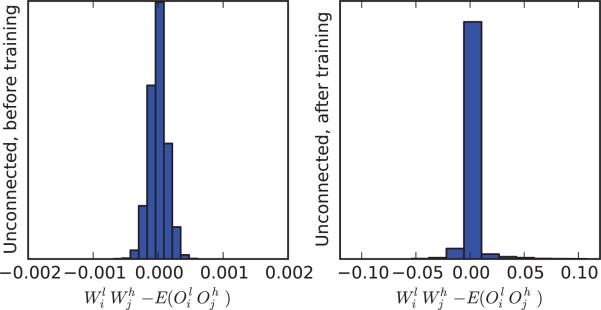

For the covariances of two different neurons, we use

| (119) |

This independence approximation is accurate for neurons that are truly independent of each other, such as pairs of neurons in the first layer. However it can be expected to remain approximately true for pairs of neurons that are only loosely coupled, i.e. for most pairs of neurons in large neural networks at all times during learning. This is confirmed by simulations (Figure 9.12) conducted using the same network trained on the MNIST dataset. The approximation is much better than simply using 0 (Figure 9.13).

Figure 9.12.

Approximation of $E(O_i^l O_j^h)$ by $E(O_i^l)E(O_j^h)$ for pairs of non-input neurons that are not directly connected to each other in a MNIST classifier network, before and after training. Histograms are obtained by taking 100,000 pairs of unconnected neurons, uniformly at random, and aggregating the results over 10 random input vectors.

Figure 9.13.

Comparison of $E(O_i^l O_j^h)$ to 0 for pairs of non-input neurons that are not directly connected to each other in a MNIST classifier network, before and after training. As shown in the previous figure, $E(O_i^l)E(O_j^h)$ provides a better approximation. Histograms are obtained by taking 100,000 pairs of unconnected neurons, uniformly at random, and aggregating the results over 10 random input vectors.

For neurons that are directly connected to each other, this approximation still holds but one can try to improve it by introducing a slight correction. Consider the case of a neuron with output $O_j^h$ feeding directly into the neuron with output $O_i^l$ through a weight $w_{ij}^{lh}$. By isolating the contribution of $O_j^h$, we have

| (120) |

with a first-order Taylor approximation, which is more accurate when $w_{ij}^{lh}$ or $O_j^h$ are small (conditions that are particularly well satisfied at the beginning of learning or with sparse coding). In this expansion, the first term is independent of $O_j^h$ and its expectation can easily be computed as

| (121) |

Thus the resulting quantity is simply the deterministic activation of neuron i in layer l in the ensemble network when neuron j in layer h is removed from its inputs. It can therefore easily be computed by forward propagation in the deterministic network. Using a first-order Taylor expansion, it can be estimated by

| (122) |

In any case,

| (123) |

Towards the end of learning, σ′ ≈ 0 and so the second term can be neglected. A slightly more precise estimate can be obtained by writing σ′ ≈ λσ when σ is close to 0, and σ′ ≈ λ(1 – σ) when σ is close to 1, replacing the corresponding expectation by or . In any case, to a leading term approximation, we have

| (124) |

The accuracy of these formulas for pairs of connected neurons is demonstrated in Figure 9.14 at the beginning and end of learning, where it is also compared to the simpler independence approximation used for unconnected pairs. The correction provides a small improvement at the end of learning but not at the beginning. This is because it neglects a term in σ′ which presumably is close to 0 at the end of learning. The improvement is small enough that for most purposes the simpler approximation may be used in all cases, connected or unconnected.

Figure 9.14.

Approximation of $E(O_i^l O_j^h)$ by the corrected estimate and by the independence approximation for pairs of connected non-input neurons, with a directed connection from j to i, in a MNIST classifier network, before and after training. Histograms are obtained by taking 100,000 pairs of connected neurons, uniformly at random, and aggregating the results over 10 random input vectors.

10 The Duality with Spiking Neurons and With Backpropagation

10.1 Spiking Neurons

There is a long-standing debate on the importance of spikes in biological neurons, and also in artificial neural networks, in particular as to whether the precise timing of spikes is used to carry information or not. In biological systems, there are many examples, for instance in the visual and motor systems, where information seems to be carried by the short-term average firing rate of neurons rather than the exact timing of their spikes. However, other experiments have shown that in some cases the timing of the spikes is highly reproducible, and there are also known examples where the timing of the spikes is crucial, for instance in the auditory localization systems of bats and barn owls, where brain regions can detect very small interaural differences, considerably smaller than 1 ms [26, 19, 18]. However, these seem to be relatively rare and specialized cases. On the engineering side, the question of course is whether having spiking neurons is helpful for learning or any other purpose, and if so whether the precise timing of the spikes matters or not. There is a connection between dropout and spiking neurons which might shed some light, at the moment faint, on these questions.

A sigmoidal neuron with output O = σ(S) can be converted into a stochastic spiking neuron by letting the neuron “flip a coin” and produce a spike with probability O. Thus in a network of spiking neurons, each neuron computes three random variables: an input sum S, a spiking probability O, and a stochastic output Δ (Figure 10.1). Two spiking mechanisms can be considered: (1) global: when a neuron spikes it sends the same quantity r along all its outgoing connections; and (2) local or connection-specific: when a neuron spikes with respect to a specific connection, it sends a quantity r along that connection. In the latter case, a different coin must be flipped for each connection. Intuitively, one can see that the first case corresponds to dropout on the units, and the second case to dropout on the connections. When a spike is not produced, the corresponding unit is dropped in the first case, and the corresponding connection is dropped in the second case.
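The two spiking mechanisms can be sketched in a few lines. This is a minimal illustration, assuming a standard logistic function with λ = 1; the function names `spike_global` and `spike_local`, the input sum, the spike size, and the number of outgoing connections are hypothetical choices.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def spike_global(S, r, n_out, rng):
    """Flip one coin: the same quantity (r or 0) is sent along all n_out connections."""
    fired = rng.random() < sigmoid(S)
    return np.full(n_out, r if fired else 0.0)

def spike_local(S, r, n_out, rng):
    """Flip one coin per connection: each connection carries r or 0 independently."""
    fired = rng.random(n_out) < sigmoid(S)
    return np.where(fired, r, 0.0)

rng = np.random.default_rng(0)
S, r, n_out = 0.3, 1.0, 3
global_samples = np.array([spike_global(S, r, n_out, rng) for _ in range(20000)])
local_samples = np.array([spike_local(S, r, n_out, rng) for _ in range(20000)])
print("r * O             :", r * sigmoid(S))
print("global mean output:", global_samples.mean(axis=0))
print("local mean output :", local_samples.mean(axis=0))
```

In both cases the empirical mean of the output along each connection should be close to r times O. The difference lies in the correlations: in the global case all outgoing connections carry the same value on each trial, while in the connection-specific case they vary independently.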

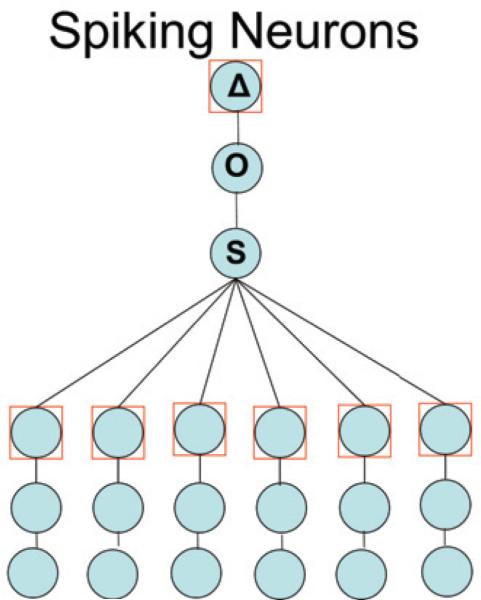

Figure 10.1.

A spiking neuron formally operates in 3 steps by computing first a linear sum S, then a probability O = σ(S), then a stochastic output Δ of size r with probability O (and 0 otherwise).

To be more precise, a multi-layer network is described by the following equations. First for the spiking of each unit:

| (125) |

in the global firing case, and

| (126) |

in the connection-specific case. Here we allow the “size” of the spikes to vary with the neurons or the connections, with fixed-size spikes being an easy special case. While the spike sizes could in principle be greater than one, the connection to dropout requires spike sizes of at most one. The spiking probability is computed as usual in the form

| (127) |

and the sum term is given by

| (128) |

in the global firing case, and

| (129) |

in the connection-specific case. The equations can be applied to all the layers, including the output layer and the input layer if these layers consist of spiking neurons. Obviously non-spiking neurons (e.g. in the input or output layers) can be combined with spiking neurons in the same network.

In this formalism, the issue of the exact timing of each spike is not really addressed. However, some information about the coin flips must be given in order to define the behavior of the network. Two common models are to assume complete asynchrony, or to assume synchrony within each layer. As spikes propagate through the network, the average output E(Δ) of a spiking neuron over all spiking configurations is equal to its spike size r times its average firing probability E(O). As we have seen, the average firing probability can be approximated by the NWGM over all possible inputs S, leading to the following recursive equations:

| (130) |

in the global firing case, or

| (131) |

in the connection-specific case. Then

| (132) |

with

| (133) |

in the global firing case, or

| (134) |

in the connection-specific case.

In short, the expectation of the stochastic outputs of the stochastic neurons in a feedforward stochastic network can be approximated by a dropout-like deterministic feedforward propagation, proceeding from the input layer to the output layer, and multiplying each weight by the corresponding spike size (which acts as a dropout probability parameter) of the corresponding presynaptic neuron. [Operating a neuron in stochastic mode is also equivalent to setting all its inputs to 1 and using dropout on its connections with different Bernoulli probabilities associated with the sigmoidal outputs of the previous layer.]

In particular, this shows that given any feedforward network of spiking neurons, with all spikes of size 1, we can approximate the average firing rate of any neuron simply by using deterministic forward propagation in the corresponding identical network of sigmoidal neurons. The quality of the approximation is determined by the quality of the approximations of the expectations by the NWGMs. More generally, consider three feedforward networks (Figure 10.2) with identical topology and almost identical weights. The first network is stochastic, has weights $w_{ij}^{lh}$, and consists of spiking neurons: a neuron with activity $O_i^h$ sends a spike of size $r_i^h$ with probability $O_i^h$, and 0 otherwise (a similar argument can be made with connection-specific spike sizes). Thus, in this network neuron i in layer h sends out a signal that has instantaneous mean and variance given by

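$$E(\Delta_i^h) = r_i^h O_i^h, \qquad \mathrm{Var}(\Delta_i^h) = (r_i^h)^2\, O_i^h (1 - O_i^h) \tag{135}$$

for fixed $O_i^h$, and short-term mean and variance given by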

| (136) |

when averaged over all spiking configurations, for a fixed input.

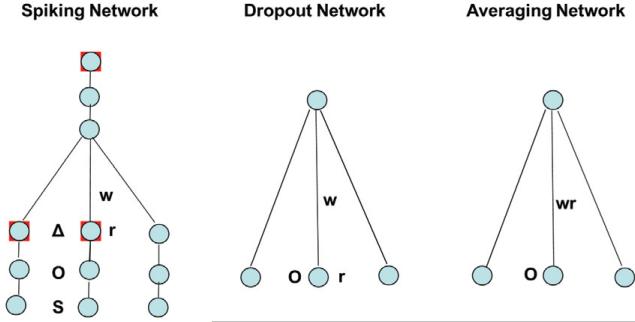

Figure 10.2.

Three closely related networks. The first network operates stochastically and consists of spiking neurons: a neuron sends a spike of size r with probability O. The second network operates stochastically and consists of logistic dropout neurons: a neuron sends an activation O with a dropout probability r. The connection weights in the first and second networks are identical. The third network operates in a deterministic way and consists of logistic neurons. Its weights are equal to the weights of the second network multiplied by the corresponding probability r.

The second network is also stochastic, has identical weights to the first network, and consists of dropout sigmoidal neurons: a neuron with activity $O_i^h$ sends a value $O_i^h$ with probability $r_i^h$, and 0 otherwise (a similar argument can be made with connection-specific dropout probabilities). Thus neuron i in layer h sends out a signal that has instantaneous expectation and variance given by

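$$E(O_i^h \delta_i^h) = r_i^h O_i^h, \qquad \mathrm{Var}(O_i^h \delta_i^h) = (O_i^h)^2\, r_i^h (1 - r_i^h) \tag{137}$$

for a fixed $O_i^h$, where $\delta_i^h$ is the Bernoulli gating variable with $P(\delta_i^h = 1) = r_i^h$, and short-term expectation and variance given by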

| (138) |

when averaged over all dropout configurations, for a fixed input.

The third network is deterministic and consists of logistic units. Its weights are identical to those of the previous two networks except they are rescaled in the form $w_{ij}^{lh} r_j^h$, i.e. each weight is multiplied by the probability r of its presynaptic neuron. Then, remarkably, feedforward deterministic propagation in the third network can be used to approximate both the average output of the neurons in the first network over all possible spiking configurations, and the average output of the neurons in the second network over all possible dropout configurations. In particular, this shows that using stochastic neurons in the forward pass of a neural network of sigmoidal units may be similar to using dropout.
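The correspondence between the three networks can be illustrated with a small Monte Carlo experiment. The following is only a sketch under assumed settings: the layer sizes, the Gaussian weights with standard deviation 0.3, the common value r = 0.5, and the sample count are hypothetical, and the stochastic behavior is restricted to a single hidden layer feeding a layer of logistic output units.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

rng = np.random.default_rng(0)
n_in, n_hid, n_out = 5, 8, 4
x = rng.uniform(0.0, 1.0, size=n_in)           # fixed, non-spiking input
W1 = rng.normal(0.0, 0.3, size=(n_hid, n_in))  # input -> hidden weights
W2 = rng.normal(0.0, 0.3, size=(n_out, n_hid)) # hidden -> output weights
r = 0.5                                        # spike size / dropout probability
n_samples = 20000

O1 = sigmoid(W1 @ x)                           # hidden activities for this input

# Network 1: each hidden neuron sends a spike of size r with probability O1.
spikes = r * (rng.random((n_samples, n_hid)) < O1)
out_spiking = sigmoid(spikes @ W2.T).mean(axis=0)

# Network 2: each hidden neuron sends its activity O1 with probability r.
kept = rng.random((n_samples, n_hid)) < r
out_dropout = sigmoid((kept * O1) @ W2.T).mean(axis=0)

# Network 3: deterministic propagation with the weights rescaled by r.
out_deterministic = sigmoid((r * W2) @ O1)

print("spiking average      :", out_spiking)
print("dropout average      :", out_dropout)
print("deterministic network:", out_deterministic)
```

The three output vectors should be close to each other, with the remaining differences reflecting the quality of the approximation of the expectations as well as sampling noise.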

Note that the first and second networks are quite different in their details. In particular, the variances of the signals sent by a neuron to the following layer are equal only when $O_i^h = r_i^h$. When $O_i^h > r_i^h$, the variance is greater in the dropout network. When $O_i^h < r_i^h$, which is the typical case with sparse encoding and $r_i^h \approx 0.5$, the variance is greater in the spiking network. This corresponds to the Poisson regime of relatively rare spikes.
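The comparison of the two variances follows directly from the definitions of the two networks, as the short sketch below shows. The function names are hypothetical, and the formulas are the variances of a Bernoulli variable scaled by the spike size r (spiking case) or by the activity O (dropout case), for a fixed activity O.

```python
def spiking_variance(O, r):
    """Signal is r with probability O and 0 otherwise."""
    return r ** 2 * O * (1.0 - O)

def dropout_variance(O, r):
    """Signal is O with probability r and 0 otherwise."""
    return O ** 2 * r * (1.0 - r)

r = 0.5
for O in (0.1, 0.5, 0.9):
    v_spike = spiking_variance(O, r)
    v_drop = dropout_variance(O, r)
    print(f"O={O:.1f}  spiking={v_spike:.4f}  dropout={v_drop:.4f}")
```

Setting the two expressions equal gives r(1 – O) = O(1 – r), that is O = r, and the sign of O – r determines which network has the larger variance.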

In summary, a simple deterministic feedforward propagation allows one to estimate the average firing rates in stochastic, even asynchronous, networks without the need for knowing the exact timing of the firing events. Stochastic neurons can be used instead of dropout during learning. Whether stochastic neurons are preferable to dropout, for instance because of the differences in variance described above, requires further investigations. There is however one more aspect to the connection between dropout, stochastic neurons, and backpropagation.

10.2 Backpropagation and Backpercolation

Another important observation is that the backward propagation used in the backpropagation algorithm can itself be viewed as closely related to dropout. Starting from the errors at the output layer, backpropagation uses an orderly alternating sequence of multiplications by the transpose of the forward weight matrices and by the derivatives of the activation functions. Thus backpropagation is essentially a form of linear propagation in the reverse linear network combined with multiplication by the derivatives of the activation functions at each node, and thus formally looks like the recursion of Equation 24. If these derivatives are between 0 and 1, they can be interpreted as probabilities. [In the case of logistic activation functions, σ′(x) = λσ(x)(1 – σ(x)) and thus σ′(x) ≤ 1 for every value of x when λ ≤ 4.] Thus backpropagation is computing the dropout ensemble average in the reverse linear network where the dropout probability p of each node is given by the derivative of the corresponding activation. This suggests the possibility of using dropout (or stochastic spikes, or addition of Gaussian noise) during the backward pass, with or without dropout (or stochastic spikes, or addition of Gaussian noise) in the forward pass, and with different amounts of coordination between the forward and backward pass when dropout is used in both.
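This interpretation can be checked on a toy example. The sketch below is an assumption-laden illustration rather than a proposed algorithm: the 3-4-2 network, its weights, the input, the target, the use of λ = 1, and the error O – t injected at the output layer are all hypothetical. It compares the usual backpropagated errors with the average of a stochastic backward pass in which each node is kept with probability σ′(S) instead of being multiplied by σ′(S).

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def sigmoid_prime(s):
    o = sigmoid(s)
    return o * (1.0 - o)  # lambda = 1, so values lie in (0, 0.25]

# Small fixed network 3 -> 4 -> 2 (hypothetical weights, input, and target).
W1 = np.array([[0.5, -0.3, 0.2], [-0.4, 0.1, 0.6], [0.3, 0.3, -0.5], [0.2, -0.6, 0.1]])
W2 = np.array([[0.7, -0.2, 0.4, -0.5], [-0.3, 0.6, 0.1, 0.2]])
x = np.array([1.0, 0.5, -1.0])
t = np.array([1.0, 0.0])

# Forward pass.
S1 = W1 @ x
O1 = sigmoid(S1)
S2 = W2 @ O1
O2 = sigmoid(S2)
e = O2 - t  # error injected at the output layer

# Standard backward pass: multiply by derivatives and transposed weights.
delta2 = sigmoid_prime(S2) * e
delta1 = sigmoid_prime(S1) * (W2.T @ delta2)

# Stochastic backward pass: linear propagation in the reverse network, where each
# node is kept with probability sigma'(S) (independent Bernoulli gates).
rng = np.random.default_rng(0)
n_samples = 200_000
g2 = rng.random((n_samples, 2)) < sigmoid_prime(S2)
g1 = rng.random((n_samples, 4)) < sigmoid_prime(S1)
d2 = g2 * e
d1 = g1 * (d2 @ W2)  # row-wise equivalent of W2.T @ d2
delta2_avg = d2.mean(axis=0)
delta1_avg = d1.mean(axis=0)

print("backprop delta1  :", delta1)
print("ensemble average :", delta1_avg)
```

Because the reverse network is linear and the gates are independent, the expectation of the stochastic backward pass equals the backpropagated error exactly, so the two printed vectors should differ only by sampling noise.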