Abstract

How objects are held determines how they are seen, and may thereby play an important developmental role in building visual object representations. Previous research suggests that toddlers, like adults, show themselves a disproportionate number of planar object views – that is, views in which the objects’ axes of elongation are perpendicular or parallel to the line of sight. Here, three experiments address three explanations of this bias: 1) that the locations of interesting features of objects determine how they are held and thus how they are viewed; 2) that ease of holding determines object views; and 3) that there is a visual bias for planar views that exists independent of holding and of interesting surface properties. Children 18 to 24 months of age manually and visually explored novel objects 1) with interesting features centered in planar or ¾ views; 2) positioned inside Plexiglas boxes so that holding biased either planar or non-planar views; and 3) positioned inside Plexiglas spheres, so that no object properties directly influenced holding. Results indicate a visual bias for planar views that is influenced by interesting surface properties and ease of holding, but that persists even when these factors favor alternative views.

Visual object recognition depends on the perceived views of objects. These views depend, in turn, on the perceiver’s actions. For humans, hands that can hold and rotate objects may play a critical developmental role in structuring visual experience and in building visual object representations (James, Swain, Smith, & Jones, in press; Pereira, James, Jones, & Smith, 2010; Soska, Adolph, & Johnson, 2010). Here we report new evidence on how object properties influence the manual behaviors of 18- to 24-month-olds, and the consequent object views that the children see. Three experiments were designed to test three explanations for biased viewing behavior in toddlers, which are not mutually exclusive: (1) that the locations of information-rich surface features determine manual and visual exploration, and thus object views; (2) that the ease with which an object can be held is the principal determinant of self-generated views; and (3) that there is a visual bias for views with particular geometric properties, and that this visual bias exists independent both of holding biases and of the surface properties of the object.

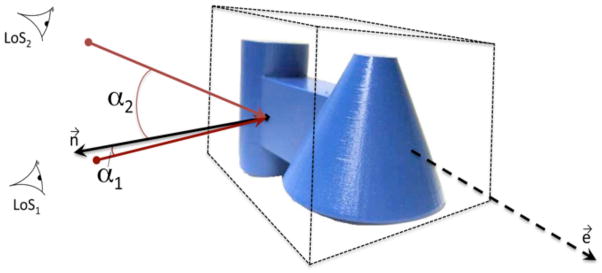

These proposals were motivated by well-documented findings of a viewing bias during object exploration in adults, and by a recent finding that toddlers’ self-generated object views show the same bias. Specifically, when adults are actively viewing 3-dimensional objects, they show a preference for “planar” views – views in which the major axis of the object is (approximately) either perpendicular or parallel to the line of sight (e.g., Harman, Humphrey, & Goodale, 1999; Harries, Perrett, & Lavender, 1991; James, T., Humphrey, Gati, Menon, & Goodale, 2002; James, K., Humphrey, & Goodale, 2001; Keehner, Hegarty, Cohen, Khooshabeh, & Montello, 2008; Locher, Vos, Stappers, & Overbeeke, 2000; Niemann, Lappe, & Hoffmann, 1996; Pereira et al., 2010). Planar views are those that primarily show the flat planes of objects, both when looking at the ‘side’ of an object, where the axis of elongation is perpendicular to the line of sight, and when looking at the front or back of the object, which renders a ‘foreshortened’ view in which the axis of elongation is parallel to the line of sight. In contrast, ¾ views show corners of objects, and the planes of the object are seen as extending from the corners. A graphical depiction of the determination of planar and ¾ views is presented in Figure 1. Adult studies also suggest that selective experience of the planar views of novel 3-dimensional objects leads to faster subsequent recognition of those objects than selective experience of other views (James et al., 2001), a result suggesting that planar views may support the building of better object representations. Recent evidence has shown that, in young children, a similar preference for planar views is positively related to object recognition measures and to vocabulary size (James et al., 2013).

Figure 1.

A graphical depiction of the determination of planar views and ¾ views of objects. Each image frame was coded as planar, ¾ or otherwise by calculating the angle between the Line of Sight (LoS) and the normal vectors of the front, top, and side faces of the object’s bounding box. The bounding box was oriented so it had one side parallel to the object’s main axis of elongation (e⃗) and its faces were parallel to the object’s sides. The figure shows an example of the calculation, for one side of the bounding box and for two orientations: LoS1 corresponds to a planar view and LoS2 corresponds to a ¾ view; n⃗ is the vector normal to the bounding box’s side.

The specific mechanisms that underlie dynamic view selection in adults are not well understood (see Pereira et al., 2010). However, across a variety of behavioral paradigms, the preference for planar views has been measured in terms of dwell times within a viewing space (Perrett & Harries, 1988). If an object were rotated through all possible orientations, the resulting viewing space could be represented as a viewing sphere (see also Bülthoff & Edelman, 1992). On this sphere, one can calculate the amount of time (the “dwell time”) that perceivers spend looking at any view of any type of object. The objects in many of the adult studies were computer-generated virtual objects that perceivers rotated with a trackball to reveal different views (Harman et al., 1999; James et al., 2001, 2002). This procedure allowed for precise measurement of preferred views within the viewing sphere and revealed a clear preference for planar views over ¾ views in adults. Similar biases, though by different measures, have been shown for both adults and toddlers in the views generated when manually holding 3-dimensional objects (Pereira et al., 2010).

In the Pereira et al. (2010) study, 12- to 36-month-old children held 3-dimensional objects and explored them manually, while a head-camera mounted on the children’s foreheads captured the resulting views. The objects were a set of toy versions of common things (e.g., crib, shoe, camera) each painted a solid color, and a set of novel variations on those same things. When the views that children showed to themselves were measured in terms of aggregated dwell times over the whole viewing sphere, planar views of objects were overrepresented relative to the proportion of such views expected if the object had been randomly rotated. This bias was found even at the youngest age level. It then increased substantially in strength between 12 and 36 months – although it never matched that of adults assessed by a similar method.

In the present study, we investigated the source of children’s biased selection of planar views. Understanding the origins of biased viewing in children may be critical to understanding the origins of the adult planar bias. It is also potentially necessary to a full explanation of the development of visual object representations, as even a small bias in the frequency of specific object views may build upon itself and thus bias children’s developing internal object representations.

One possibility is that infants’ views of objects are determined primarily by the objects’ surface properties – the textural properties and small part structures such as knobs and buttons – that may be the focus of manual and visual exploration. If these functional properties are typically located on the planar surfaces, as seems likely, and if children prefer to center such features in their visual fields, then those features might bias the holding of objects in ways that favor planar views. This hypothesis is consistent with previous developmental findings that infants manually explore the part structures of objects and finger small regions of texture on objects more than smooth regions (Bushnell, 1982, 1985, 1989; Ruff, 1984, 1986). No prior studies have examined the visual consequences of these activities and, in particular, how they may influence visual biases. In Experiment 1, we used a head-mounted camera (as in Pereira et al., 2010; Smith & Yoshida, 2008) to capture the first-person views of older infants as they explored familiar and novel objects. The hypothesis under test is that the planar versus non-planar locations of textural properties and small functional parts determine whether a planar bias in the children’s self-generated views is observed. That is, we explore whether or not interesting textures and functional parts pull toddlers towards the visual bias observed previously.

A second possibility is that the planar view preference is a consequence of the way in which an object is typically held. Grasping and holding behavior is constrained by the geometry of the whole object in relation to the arms and hands. No studies to our knowledge have examined the visual consequences of biases in how objects are grasped and held. Thus, it is possible that the planar viewing bias in young children reported by Pereira et al. (2010) is fundamentally an “object-holding” bias. The natural and easy way of holding many things – especially bimanual holding with two arms equally extended – may favor holding patterns in which the major axis of the object is perpendicular to the holder’s line of sight, and thus presents a planar view. If such a holding bias creates a biased sample of seen views, it may also create, over developmental time, a visual bias for planar views. A bias that begins with holding but strengthens with development would account for the fact that the planar view bias in adults does not depend on holding behavior, as it is observed with computer generated objects whose views are manipulated with a trackball (Perrett & Harries, 1988; James et al., 2002). In Experiments 2 and 3, we test the hypothesis that the way in which toddlers tend to hold objects creates the planar bias, using stimuli that dissociate the geometry of what is seen from the geometry of holding.

Finally, it is possible that the planar bias is principally a visual bias from the outset. Planar views have special visual properties that could be the source of the bias. First, these views may emphasize or highlight non-accidental properties (parallel sides, right angles, symmetry) that are critical within some theories of visual object recognition (e.g., Biederman, 1987; Marr, 1982; Farivar, 2009). Second, planar views are distinctive, in the sense that small changes in viewing direction around a planar view produce larger changes in the sides, angles and features of the object that can be seen than the same small changes around a ¾ view. This is illustrated in Figure 2, which shows how small variations in viewing angle around a planar view reveal different sides and properties of the object, whereas the same variations around a ¾ view do not reveal new information about the object’s structure (Niimi & Yokosawa, 2009; James et al., 2001; Harman et al., 1999). Both of these possibilities suggest that the preference for planar views could originate in the visual properties of those views in and of themselves. All three experiments provide evidence bearing on this explanation.

Figure 2.

Depiction of a 22.5-degree range of variation centered on planar and ¾ views. Middle column: 0-degree deviation from either (top row) a ¾ view or (middle and bottom rows) a planar view. Outer columns: 11.25-degree rotations in either direction from the 0-degree view.
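The geometric point behind Figure 2 can be checked with a small sketch. It assumes an idealized box-shaped bounding volume viewed from directions that vary only in azimuth within the horizontal plane, and it treats a face as visible when its outward normal points towards the viewer. The function names are hypothetical, and the code is an illustration of the argument, not the authors' stimulus geometry.

```python
import math

# Outward unit normals of an axis-aligned bounding box, labelled by face.
FACE_NORMALS = {
    "+x": (1.0, 0.0, 0.0), "-x": (-1.0, 0.0, 0.0),
    "+y": (0.0, 1.0, 0.0), "-y": (0.0, -1.0, 0.0),
    "+z": (0.0, 0.0, 1.0), "-z": (0.0, 0.0, -1.0),
}


def viewer_direction(azimuth_deg):
    """Unit vector from the object's centre towards the viewer (assumed model).

    The viewer moves in the horizontal (x-z) plane: azimuth 0 looks straight
    at the +z face (a planar view); azimuth 45 looks at the edge between the
    +z and +x faces (a 3/4 view).
    """
    a = math.radians(azimuth_deg)
    return (math.sin(a), 0.0, math.cos(a))


def visible_faces(azimuth_deg, eps=1e-9):
    """Faces whose outward normal points towards the viewer.

    Faces seen exactly edge-on (dot product of 0) are treated as hidden.
    """
    d = viewer_direction(azimuth_deg)
    return {
        name
        for name, n in FACE_NORMALS.items()
        if sum(ni * di for ni, di in zip(n, d)) > eps
    }


if __name__ == "__main__":
    half_window = 11.25  # half of the 22.5-degree range shown in Figure 2
    for centre in (0.0, 45.0):
        sets = [sorted(visible_faces(centre + off))
                for off in (-half_window, 0.0, half_window)]
        print(centre, sets)
```

Under these assumptions, within ±11.25 degrees of the planar view the set of visible faces changes ({+z, -x}, then {+z}, then {+z, +x}), whereas within the same window around the ¾ view it stays {+z, +x} throughout.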

To test the contributions of the three proposed mechanisms, we used a procedure similar to that of Pereira et al. (2010). We chose to focus on 18- to 24-month-old children because the planar bias in this age range is sufficiently coherent that the effects of manipulations can be detected (see Pereira et al., 2010, Figure 4). The toddlers were seated in a chair with no tray or table, so that they had to hold the stimulus objects in order to manually and visually explore them. The first-person views generated by these behaviors were recorded by a head camera placed low on the child’s forehead. In contrast to the objects used in Pereira et al. (2010), the objects used in the present experiments were novel. Thus, viewing preferences could not be based on past knowledge of, or functional experiences with, the objects.

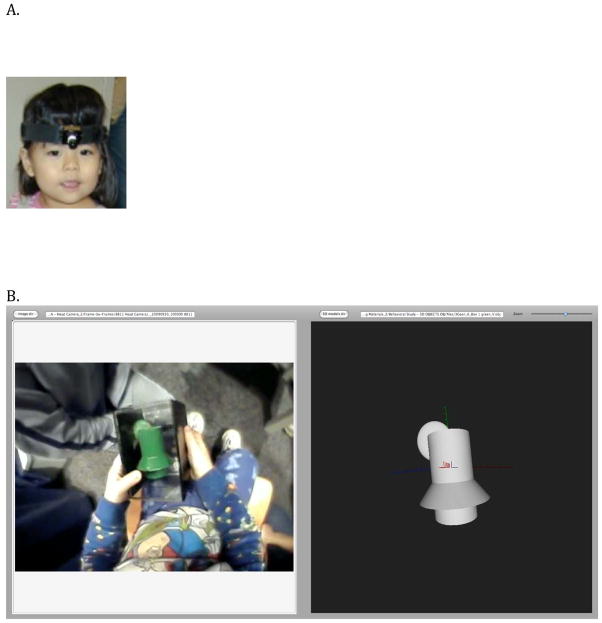

Figure 4.

On the left, a child wearing the head-mounted camera. On the right, a screenshot, taken from the head-mounted camera, of a child playing with an object. Beside this is a screenshot of the software used to determine the object’s orientation in frames from the head-mounted camera.

Experiment 1

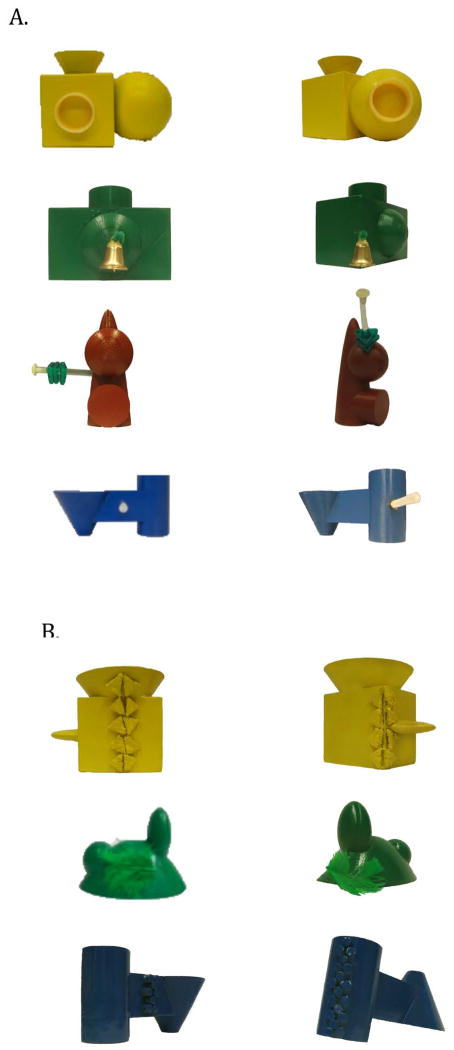

In this experiment we manipulated the surface properties of objects, placing potentially interesting properties either on the center of the planar faces of the objects or on the angular edges of the objects - that is, at the center of a ¾ view. These features, as shown in Figure 3, were sufficiently large that they did not require precision in object handling in order to be in view. However, if children held the objects so as to center the visually interesting feature, then one might expect interesting properties on the center of the planar faces to bias planar views, and interesting properties on the vertices connecting planar sides to bias non-planar “3/4” views. Figure 3 shows the stimulus manipulations. Experiment 1A consisted of the two Texture location conditions. Haptically interesting textures were placed at the center points of either the Planar (Figure 3A, left column) or ¾ views (Figure 3A, right column) of stimulus objects. Experiment 1B consisted of the two Functional Parts location conditions. Visually attractive and interactive features were placed at the center points of either Planar (Figure 3B left) or ¾ views (Figure 3B right) of stimulus objects.

Figure 3.

A. Stimuli included in Experiment 1A. The left column depicts objects with interesting features placed on planar sides. The right column depicts objects with features placed on ¾ angles. B. Stimuli included in Experiment 1B. As above, the left column depicts functional parts placed on planar sides, and the right column depicts functional parts placed on ¾ angles.

In short: in Experiment 1, we manipulated where information was added to the object: this factor is called ‘Feature Location’ (planar side or a non-planar angle). We then measured where children looked within the viewing sphere: this dependent measure is called “Preferred View” (planar or ¾).

Method

Participants

In Experiment 1A, the participants were 32 children (16 female) aged 18–24 months (M = 20.95, SD = 2.39). In Experiment 1B, the participants were 24 children (12 female) aged 18–24 months (M = 22.02, SD = 1.92). Participants were recruited from a predominantly English-speaking middle class community in the Midwest. No child participated in both sub-experiments. An additional 19 participants were recruited but data were not obtained due to experimental error or the child’s refusal to wear the head camera. In each sub-experiment, children were randomly assigned to a Planar Location or ¾ Location condition.

Stimuli

Seven unique objects, shown in Figure 3, were designed, and two copies of each were made in plastic using a 3D printer. Objects measured approximately 900–1000 cm³ and weighed 70–80 grams. Objects ranged in size from 4.5 to 9 cm in length, 5 to 12 cm in width and 4 to 6 cm in depth when measured from their gravitational upright (flat bottom of object placed on a surface). Each object consisted of three geometric volumes arranged to ensure that each object had a major axis of elongation. Both members of each pair of identical objects were painted the same single, bright color – red, yellow, green, blue, or purple. Both copies of three of the objects were used for Experiment 1A (Textures) and both copies of four of the objects were used for Experiment 1B (Functional parts). The two between-subject conditions within each sub-experiment, Planar Location or ¾ Location, differed only in the location of the added features.

For the two Texture Location conditions of Experiment 1A, pieces of surface texture were applied to two opposing planar views of one member of each pair of objects and to two opposing ¾ views of the other member of that pair. A different texture was used for each of the three unique objects: feathers, a piece of rough scouring cloth, or a grid of raised rubber dots. For the two Functional Parts Location conditions of Experiment 1B, the features added to the center of either the planar or ¾ view of each pair of objects were: 1) a small metal bell suspended from a green pipe cleaner; 2) a yellow plastic circular “slinky” (2.5 cm in diameter, 1 cm deep when stacked); 3) a clear plastic rod on which three green plastic triangles were threaded; and 4) a 1.5-inch-long white plastic spring (see Figure 3B). Each feature invited an action.

Apparatus

A miniature video camera worn low on the child’s forehead was used to capture the first-person views. The head camera was mounted on a rubber strip (58.4 cm × 2.54 cm) that could be snugly fitted onto each child’s head and fastened with Velcro (see Figure 4 left). The head camera could be rotated slightly in pitch to adjust viewing angle. Once fit and adjusted, the head camera moved with head movements but did not slip in its positioning on the head. The camera was a WATEC model WAT-230A with 512 × 492 effective image frame pixels, weighing 30 g and measuring 36 mm × 30 mm × 30 mm. The lens was a WATEC model 1920BC-5, with a focal length of f1.9 and an angle of view of 115.2 degrees on the horizontal and 83.7 degrees on the vertical. Lightweight power and video cables were attached to the outside of the headband and were long enough to allow the seated child to move freely. Camera, headband and cables together weighed 100 g. A second camera was situated across from the child to record the experimental session from a third-person view, so that coders could check for consistency in how the experimenter handed the objects to the infants and also confirm that the head-mounted camera stayed in the same position throughout the study.

Procedure

The head camera was placed on the child using the following procedure: The child and one experimenter sat at the table and played with interactive toys on which pressing buttons made events happen. While the child was so engaged, a second experimenter (from behind the child) placed the head camera on the child and then adjusted the camera’s angle so that when the child pushed a button on a toy, that button was in the center of the camera’s view. The head camera was centered between the child’s eyes, as close as possible to a center point between the eyebrows. The images from the camera were checked while the camera was adjusted to ensure that the camera captured images of the objects that the child held.

The table and toys used for head camera placement were then removed. Then the experimenter handed the stimulus objects to the child one at a time. The order in which the objects were presented and their orientation with respect to the child when handed to the child were randomly determined. The experimenter encouraged the child to look at the object with phrases such as “What is that? Look at that!” Children examined each object for as long as they wished. If an object was retained for less than 10 s, that object was given to the child again at the end of the experiment. The experimenter spoke only to make general statements encouraging exploration without mentioning any specific object features or parts.

Coding

The time spent by each child in handling each object was recorded, beginning with the first head-camera frame in which the object appeared and ending at the moment the object was dropped or handed to the experimenter. Image frames were sampled for coding at 1 Hz. Coders recorded whether the object was held with one hand or two hands, and whether children were touching the added textures or functional parts with one or more fingertips in each coded frame.

Object views were coded using a custom software application that permitted side-by-side comparisons of the head camera image and a rotatable 3-dimensional model of each object (Pereira et al., 2010). Coders rotated the 3-dimensional model until its orientation matched the orientation of the object in the video frame (see Figure 4 right). The software then recorded this orientation in terms of Euler angles, using the standard YXZ (heading-attitude-bank) convention (Kuipers, 2002). Following the approach of Pereira et al. (2010), two categories of views, Planar and ¾, were defined from these codings by calculating the angle between the Line of Sight (LoS) and the normal vectors of the front, top, and side faces of the object’s bounding box, as shown in Figure 1. An object’s orientation in the head-camera view was categorized as a planar view if one of the three signed angles between the LoS and the normals of the front, top or side faces of the bounding box was within one of these intervals: 0 ± 11.25 degrees or 180 ± 11.25 degrees. A viewpoint was categorized as a ¾ view if at least two sides of the bounding box were in view and the angle of the LoS with both of them was inside the interval 45 ± 11.25 degrees or a 90-degree rotation of this interval (i.e., centered at 135, 225 or 315 degrees). Note that the planar and ¾ categories have the same angular width, 11.25 degrees on either side of the central point, so that each spans 22.5 degrees. Such broadly defined categories are appropriate because prior research indicates that even adult participants do not present themselves with perfectly flat planar views (Blanz, Tarr, Bülthoff, & Vetter, 1999; James et al., 2001; Perrett & Harries, 1992), and because prior developmental studies indicate that young children do not do so either (James et al., 2013; Pereira et al., 2010).
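The frame-level classification rule described above can be sketched as follows. This is a minimal sketch, not the authors' custom software: the rotation order R = Ry(heading) Rx(attitude) Rz(bank) mapping object axes into camera axes, the camera looking along +z, and all function names are assumptions. The ¾ branch implements only one literal reading of the criterion (at least two face normals within 11.25 degrees of 45 or 135 degrees from the LoS), which may not match the authors' implementation.

```python
import math

TOL = 11.25  # half-width (deg) of the planar and 3/4 windows, from the text


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def los_in_object_frame(heading, attitude, bank, los_camera=(0.0, 0.0, 1.0)):
    """Express the camera's line of sight in the object's own axes.

    Assumed convention: R = Ry(heading) Rx(attitude) Rz(bank) maps object
    axes into camera axes, and the camera looks along +z.
    """
    r = matmul(rot_y(math.radians(heading)),
               matmul(rot_x(math.radians(attitude)), rot_z(math.radians(bank))))
    # R is orthonormal, so its transpose is its inverse: v_obj = R^T v_cam.
    return [sum(r[k][i] * los_camera[k] for k in range(3)) for i in range(3)]


def face_angles(los):
    """Unsigned angles (deg) between the LoS and the x, y, z face normals."""
    norm = math.sqrt(sum(c * c for c in los))
    # For unit axis normals, the cosine is just the matching LoS component.
    return [math.degrees(math.acos(max(-1.0, min(1.0, c / norm)))) for c in los]


def classify_view(heading, attitude, bank, tol=TOL):
    """Return 'planar', 'three_quarter' or 'other' for one coded frame."""
    angles = face_angles(los_in_object_frame(heading, attitude, bank))
    if any(a <= tol or a >= 180.0 - tol for a in angles):
        return "planar"
    near_oblique = sum(1 for a in angles
                       if abs(a - 45.0) <= tol or abs(a - 135.0) <= tol)
    return "three_quarter" if near_oblique >= 2 else "other"
```

For example, under these assumptions a frame coded with a heading of 10 degrees and zero attitude and bank is classified as planar, and one with a heading of 45 degrees as a ¾ view.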

Data analyses

Although planar and ¾ views span the same number of degrees, their baseline likelihoods given random rotations of the objects are not the same. Monte Carlo simulations of unbiased viewing yield expected values of 5.6% planar views and 33.1% ¾ views. These rates were estimated by generating a large number of points (N = 10,000) distributed uniformly over a sphere of radius one and checking whether the azimuth and elevation of each point made that point a planar or ¾ view, according to the definitions above. By this formulation, if children’s views were randomly selected with no constraints from holding or object structure, one would expect only 5–6% of those views to fall within the range defined as planar, and about one third to fall within the range defined as ¾ views. Accordingly, our dependent measures are the differences between the percentage of views of each type produced by each child in each condition and the percentage expected by chance, calculated separately for planar views and ¾ views. All statistics are performed on these difference scores. Results for planar view preference are reported separately from those for ¾ view preference, given that these two dependent measures are not entirely independent. (Note, however, that a majority of views in the viewing sphere, more than 60%, are neither planar nor ¾ views.)
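The chance baseline for planar views can be sketched as a Monte Carlo estimate under the same 11.25-degree rule, with a closed-form check. This sketch covers only the planar category, assumes the rule is applied to the three face normals of the bounding box, and uses hypothetical function names. Under that reading the expected rate is 3(1 − cos 11.25°) ≈ 5.8%, close to the reported 5.6% for a 10,000-point simulation. The ¾ baseline is not reproduced here, since it depends on details of the ¾ rule that the text does not fully specify.

```python
import math
import random

PLANAR_TOL = 11.25  # half-width (deg) of the planar window, from the text


def random_unit_vector(rng):
    """Draw a direction uniformly on the unit sphere (normalised Gaussian)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-12:
            return [c / norm for c in v]


def is_planar(los, tol=PLANAR_TOL):
    """True if the unit LoS is within `tol` deg of +/- any face normal."""
    # For the unit axes x, y, z, the cosine of the angle to each normal is
    # the matching component of the LoS; |cos| >= cos(tol) covers 0 and 180.
    cos_limit = math.cos(math.radians(tol))
    return any(abs(c) >= cos_limit for c in los)


def planar_chance_rate(n_samples=10_000, seed=0):
    """Monte Carlo estimate of the planar proportion under random viewing."""
    rng = random.Random(seed)
    hits = sum(is_planar(random_unit_vector(rng)) for _ in range(n_samples))
    return hits / n_samples


def planar_analytic_rate(tol=PLANAR_TOL):
    """3 axes x 2 polar caps, each cap covering (1 - cos tol) / 2 of the sphere."""
    return 3.0 * (1.0 - math.cos(math.radians(tol)))


if __name__ == "__main__":
    print(f"Monte Carlo: {planar_chance_rate():.4f}")
    print(f"Analytic:    {planar_analytic_rate():.4f}")
```

Normalising a vector of three independent Gaussians is a standard way to sample directions uniformly on the sphere; sampling azimuth and elevation uniformly instead would over-represent the poles.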

Results and Discussion

Children explored the objects for 58.3 seconds on average (SD = 30 s). A 2 (Features: Textures, Functional Parts) X 2 (Location: Planar, ¾) analysis of variance indicated a reliable difference between the two sub-experiments in exploration time per object (F (1,52) = 6.02, p <0.02), such that the time spent exploring the objects with added patches of texture (M = 50.03 sec, SD = 27.0) was shorter than the time spent exploring objects with added functional parts (M = 68.9 sec, SD = 30.4). There was no difference in the mean times spent exploring objects with textural or functional properties applied to the two different Locations (F (1,52) = 1.15, p> .25).

The main experimental question was whether the locations of the features influenced the views of the objects that children chose. The first set of analyses considers only the difference scores reflecting the frequencies of planar views relative to random viewing. If the placement of interesting elements on a planar view caused the child to attend to that view, then the results should show greater than chance proportions of planar views in the Planar Location conditions but not in the ¾ Location conditions. The second set of analyses considers only the difference scores reflecting the frequencies of ¾ views relative to random viewing. If the placement of interesting elements on a ¾ view caused the child to attend to that view, then the results should show greater than chance proportions of ¾ views in the ¾ Location conditions but not in the Planar Location conditions. All post hoc multiple comparison analyses are Bonferroni corrected.

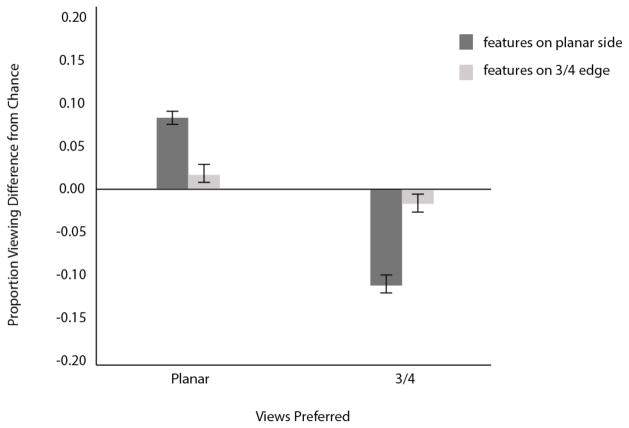

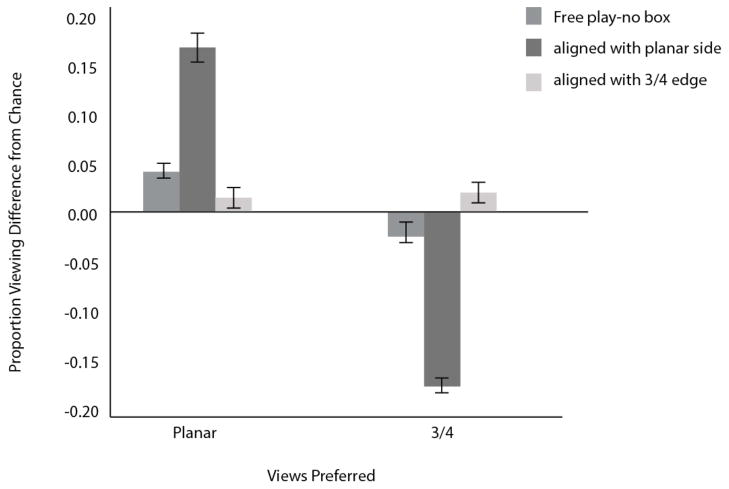

Frequencies of Planar Views

Subjects’ difference scores for planar views were submitted to a 2 (Features: Textures or Parts) X 2 (Location of those features: Planar or ¾) ANOVA. The analysis revealed a significant effect of Location (F(1,52)=5.37, p<.05; MSe=.006) in that participants generated planar views more often when features were placed on planar sides (M=.06, SD=.09) than when those features were placed on non-planar edges (M=.02, SD=.05). There was no difference between the feature types; textures and functional parts produced similar viewing preferences. Collapsing across feature type, the left-side bars of Figure 5 show difference scores for proportions of planar views relative to chance as a function of feature location. The proportion of planar views that children showed themselves was above chance (i.e., the mean difference score was significantly different from zero) when the features were placed on the planar sides (t(15)=5.29, p<.01), but not when features were placed on the non-planar angles (t(15)=1.9, ns). In sum, the locations of features on objects affected whether or not children showed a planar bias in viewing the objects.
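The per-child dependent measure and the test of its mean against zero can be sketched as follows. This is an illustration under assumptions: each coded frame carries a string label such as "planar", the chance value is the 5.6% planar baseline given in the Data analyses section, and the function names are hypothetical. It does not reproduce the ANOVA or the Bonferroni correction.

```python
import math
import statistics

PLANAR_CHANCE = 0.056  # expected planar proportion under random viewing (from the text)


def planar_difference_score(frame_labels, chance=PLANAR_CHANCE):
    """Proportion of a child's coded frames labelled planar, minus chance."""
    if not frame_labels:
        raise ValueError("child has no coded frames")
    observed = sum(1 for label in frame_labels if label == "planar") / len(frame_labels)
    return observed - chance


def one_sample_t(scores, mu=0.0):
    """t statistic and degrees of freedom for H0: mean(scores) == mu."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    se = statistics.stdev(scores) / math.sqrt(n)  # sample SD / sqrt(n)
    return (statistics.mean(scores) - mu) / se, n - 1
```

In practice, `scipy.stats.ttest_1samp(scores, 0.0)` returns the same statistic together with a p-value.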

Figure 5.

Difference between observed and chance proportions of planar and ¾ views in Experiment 1, as a function of feature location. Bars depict the difference from chance (0.00); error bars show the standard error of the mean.

Frequencies of 3/4 Views

The mean difference scores for the proportions of observed ¾ views relative to chance are also shown in Figure 5. A 2 (Features: textures or functional parts) X 2 (Location: planar or ¾) ANOVA was performed on the difference scores for ¾ views (frequency of subject-generated ¾ views minus the frequency of those views expected by chance). A significant difference between location conditions was obtained (F(1,52)=4.74, p<.05, MSe=.01), but neither location condition was associated with ¾ view frequencies greater than the frequencies predicted by chance (see Figure 5, right bars). Instead, when the features were placed on planar views, children looked at ¾ views significantly less often than predicted by chance (t(15) = 5.7, p < .01), and when the features were placed on ¾ views, children looked at ¾ views at chance levels (t(15) = 1.9, ns). In sum, when features were placed on ¾ angles, the views that children showed themselves appeared to be chosen at random: no planar view preference was observed, and no preference for ¾ views, where the interesting features were located, was observed.

All in all, these findings indicate that the location of features on objects can influence the views that children show themselves. However, whereas features located at the center of planar views are associated with children’s oversampling of planar views in comparison to chance level viewing of such views, features located at the center of ¾ views do not yield a ¾ bias. This asymmetry indicates that the location of interesting features is insufficient by itself to provide a complete explanation of young children’s bias for planar views when visually exploring objects.

Experiment 2

Experiment 2 examined the possibility that an object-holding bias affects the object views that children generate. The global shapes of objects in relation to the biomechanical properties of hands are strong constraints on how objects can be held, and therefore on object views. A fairly large (for toddler hands) oblong object affords holding the object at the ends of the elongated side. Thus, the planar bias could reflect in part the child’s history of holding behavior. If many objects are frequently held in ways that incidentally yield planar views, then over time, the visual system could become biased to prefer those views even for non-held objects - as is evident in adults (James et al., 2001, 2002).

To test this possibility, Experiment 2 used stimuli designed to dissociate potential holding and viewing preferences. There were two main stimulus conditions, as shown in Figure 6. In the Planar Biasing condition, each object was encased in a transparent rectangular box such that the planar faces of the object were aligned with the planar faces of the box. In the ¾ Biasing condition, each object was encased in the same kind of transparent rectangular box, but now the ¾ views of the object were aligned with the planar faces of the box. In the Planar Biasing condition, a bias to hold the transparent box in two equally extended hands, so that the major axis of elongation of the box was perpendicular to the line of sight (LoS), would result in seeing a planar view (where the axis is perpendicular to the LoS) of the object inside the box. If ease of holding is relevant to the strength of a planar viewing bias, then encasing the object in the Planar Biasing box should facilitate viewing the object’s planar side. In the ¾ Biasing condition, the same bias to hold the transparent box with its axis of elongation perpendicular to the LoS should result in fewer planar views and more ¾ views of the object, relative to the Planar Biasing condition. The ¾ Biasing condition presented a strong challenge to a planar viewing bias, because in order to see a planar view of the object inside, children in this condition had to turn the transparent box until a corner of the box was centered on their LoS. In short, the ¾ Biasing condition put a potential holding bias (bilateral holding by the elongated ends) and a potential viewing bias (seeing the planar view) into competition.
Because we had not previously observed infants freely exploring the specific objects being put into these boxes, we also included a No Biasing, free-play condition, in which children freely explored the novel objects outside of the boxes as in our previous work (Pereira et al., 2010; James et al., 2013).

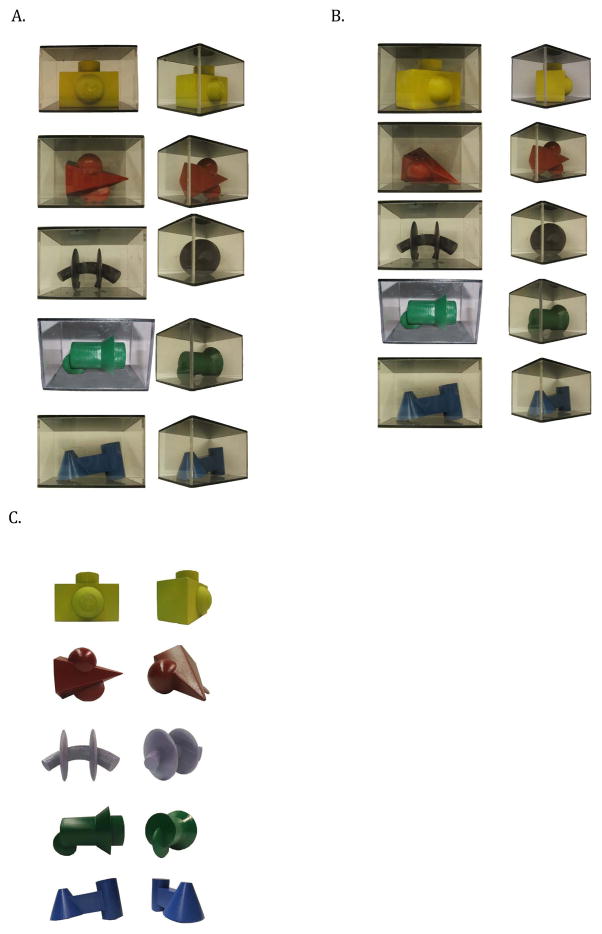

Figure 6.

A. Planar Biasing stimuli used in Experiment 2. Left column: planar view of the object aligned with the planar view of the box. Right column: the same objects pictured from a ¾ viewing angle. B. ¾ Biasing stimuli used in Experiment 2. The planar view of the object is misaligned with the planar view of the box, such that a planar view of the box shows the object from a ¾ view. Left column: boxes seen from a planar view; right column: the same objects seen from a ¾ view of the box. C. Objects used for the No Biasing, free-play portion of the experiment.

Method

Participants

Participants in the main study were 42 children (20 female) aged 18–24 months (M = 21.71, SD = 1.80) recruited from a predominantly English-speaking middle class community in the Midwest. Participants were randomly assigned to either the Planar Biasing condition or the ¾ Biasing condition. We also included a group of 14 participants (8 female) in a No Biasing, free play condition. An additional three participants were recruited but would not wear the head camera.

Stimuli

There were 5 novel stimulus objects, designed according to the same principles and in the same size and weight ranges as the objects in Experiment 1. Three copies of each object (one for each condition) were printed in plastic using a 3-D printer (see Figure 6). For the Planar Biasing and ¾ Biasing conditions, a copy of each of the five objects was encased in a rectangular box made of Plexiglas and measuring 11.5 cm × 9 cm × 7.5 cm (see examples in Figure 6). The sides of each box were transparent, but the top and bottom were black and opaque and hid the clear plastic rods that fixed the object’s position in the box. For the Planar Biasing condition, each stimulus object was positioned in the box such that its axis of elongation, and therefore two of its planar views, were aligned with the axis of elongation of the box (see Figure 6a). For the ¾ Biasing condition, each object was positioned such that its axis of elongation formed a 45-degree angle with the axis of elongation of the box, so that planar views of the object were aligned with ¾ views of the box (see Figure 6b). Placing the objects in the Plexiglas boxes increased the weight of each stimulus from 100 g for the object alone to 200 g.
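The geometry of the two box arrangements can be checked with a short sketch. It is a minimal illustration under stated assumptions, not the procedure used to build the stimuli: it idealizes each object as a box-shaped solid with six face normals, assumes the child looks along a fixed line of sight, and models the Planar Biasing and ¾ Biasing arrangements as an object yaw of 0 or 45 degrees inside the box. The function names (`offset_from_planar` and its helpers) are hypothetical. The returned angle is 0 degrees for a planar view of the object and 45 degrees for a ¾ view.

```python
import math

def rotate_z(v, deg):
    """Rotate a 3-D vector v about the vertical (z) axis by deg degrees."""
    t = math.radians(deg)
    x, y, z = v
    return (x * math.cos(t) - y * math.sin(t),
            x * math.sin(t) + y * math.cos(t),
            z)

def angle_between(u, v):
    """Unsigned angle in degrees between two 3-D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / (nu * nv)))))

# Face normals of an idealized box-shaped object, in object coordinates (assumption).
FACE_NORMALS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def offset_from_planar(object_yaw_in_box, box_yaw, line_of_sight=(0, 1, 0)):
    """Angle (deg) between the line of sight and the nearest object face normal.

    object_yaw_in_box: rotation of the object inside the box
                       (0 = Planar Biasing, 45 = 3/4 Biasing, in this sketch).
    box_yaw: how far the child has turned the box away from holding it with
             a box face perpendicular to the line of sight.
    """
    total = object_yaw_in_box + box_yaw
    # A face is seen head-on when its normal points straight back at the viewer.
    toward_viewer = tuple(-c for c in line_of_sight)
    return min(angle_between(rotate_z(n, total), toward_viewer) for n in FACE_NORMALS)

if __name__ == "__main__":
    for label, inner, turn in [("Planar box, held face-on", 0, 0),
                               ("3/4 box, held face-on", 45, 0),
                               ("3/4 box, turned 45 deg", 45, -45)]:
        print(f"{label}: {offset_from_planar(inner, turn):.1f} deg from planar")
```

Under these assumptions, `offset_from_planar(45, 0)` returns about 45 degrees (holding the ¾ Biasing box face-on shows a ¾ view of the object), while `offset_from_planar(45, -45)` returns 0, which corresponds to turning the box 45 degrees so that an edge of the box faces the child.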

Procedure

The procedure for Experiment 2 was essentially the same as for Experiment 1. For Experiment 2, though, the boxes were handed to the children in a randomly chosen orientation such that no one angle was seen initially for all objects or children. The child grasped one end of each box with each hand, a grasp that facilitated holding the box with two hands with the elongated plane perpendicular to the line of sight. Thus, the child’s initial grasp of the boxes increased the likelihood of views consistent with the geometric structure of the box (not of the object inside).

Results and Discussion

Overall, children held the objects significantly longer in the No Biasing condition (M = 97.8 sec, SD = 31.4) than in either of the Biasing box conditions (Planar Biasing box: M = 66.4 sec, SD = 30.9; ¾ Biasing box: M = 67.2 sec, SD = 29.5; F(2, 54) = 6.39, p = .003; Tukey’s HSD (.01) = 25.62), suggesting that the boxes may have reduced children’s interest in the objects. However, children in the two Biasing conditions held the boxes in two hands, one hand on each end of the major axis of elongation, for a significantly greater proportion of the total holding time than children in the No Biasing condition held the unencased objects with two hands (89% in the Planar Biasing box condition and 87.7% in the ¾ Biasing box condition, vs. 62.3% in the No Biasing condition; F(2, 54) = 12.33, p < .001; Tukey’s HSD (.01) = 15.09). Thus, our expectation that the elongated boxes would bias holding patterns was borne out. The crucial question, then, is whether this holding bias also biased the views children saw.
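The Tukey comparisons above can be re-checked from the reported summary values. The sketch below only compares each pairwise difference between condition means with the single HSD value given in the text; it does not compute the HSD itself, which would require the studentized range distribution and the ANOVA error term. The helper name `hsd_pairs` is hypothetical.

```python
from itertools import combinations

def hsd_pairs(means, hsd):
    """Return (pair, difference, exceeds_hsd) for every pair of condition means.

    A pairwise difference larger than Tukey's honestly significant difference
    (HSD) is treated as significant at the alpha level the HSD was computed for.
    """
    results = []
    for a, b in combinations(means, 2):
        diff = abs(means[a] - means[b])
        results.append(((a, b), round(diff, 2), diff > hsd))
    return results

# Mean holding times (sec), as reported in the text.
holding_time = {"No Biasing": 97.8, "Planar Biasing": 66.4, "3/4 Biasing": 67.2}
# Mean percentage of holding time spent in two-handed, end-to-end grasps.
two_handed = {"No Biasing": 62.3, "Planar Biasing": 89.0, "3/4 Biasing": 87.7}

for label, means, hsd in [("holding time", holding_time, 25.62),
                          ("two-handed holding", two_handed, 15.09)]:
    for (a, b), diff, sig in hsd_pairs(means, hsd):
        print(f"{label}: {a} vs {b}: diff = {diff}, exceeds HSD = {sig}")
```

With the reported values, both box conditions differ from the No Biasing condition by more than the HSD on each measure, while the two box conditions differ from each other by less than 2 units, consistent with the pattern described above.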

Planar viewing frequencies

The left side of Figure 7 shows the mean difference scores reflecting the proportions of planar views (relative to chance) generated by the children in the three stimulus conditions. A one-way between-subjects ANOVA compared these scores across the three biasing conditions: No Bias (free play), Planar Bias, and ¾ Bias. If the preference for planar views previously observed in children was a byproduct of the way in which the children held the objects, then in the present experiment children should hold the boxes the same way in both box conditions, and thus look at planar views in the Planar Bias condition and at ¾ views in the ¾ Bias condition. If, however, the planar preference is driven by visual preferences and not by a holding preference, then children should rotate the outer box to view the planar sides of the internal object, resulting in above-chance sampling of planar views when the object was in either box, as well as when it was not encased.

Figure 7.

Proportions of planar and ¾ views in each condition of Experiment 2, expressed as the difference from chance. Bars depict the difference from chance (0.00); error bars show the standard error of the mean.

The ANOVA revealed a significant effect of condition (F (2,56)=23.0, p< .0001, MSe=.01). Planar viewing of objects inside the Planar Bias boxes was significantly greater than planar viewing of the objects inside the ¾ Bias boxes (t (40)=6.48, p< .0001). The Planar Bias condition also resulted in a greater proportion of planar views than did the No Bias condition (Welch’s t (33)=2.98, p<.05). The No Bias and ¾ Bias conditions did not differ significantly from one another in the proportion of planar views (Welch’s t (33)=.79, ns.).
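Several of the comparisons above use Welch's t-test, which does not assume equal variances in the two groups (here, groups of different sizes). A minimal sketch of the statistic and its Welch–Satterthwaite degrees of freedom follows; the degrees of freedom are generally non-integer and are often reported rounded. The function name and the example proportions are hypothetical and are not data from this study.

```python
import math
from statistics import mean, variance

def welch_t(x, y):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    nx, ny = len(x), len(y)
    # Squared standard errors of each group mean (sample variance / n).
    vx, vy = variance(x) / nx, variance(y) / ny
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (nx - 1) + vy ** 2 / (ny - 1))
    return t, df

if __name__ == "__main__":
    # Hypothetical per-child planar-view proportions in two groups.
    planar_box = [0.31, 0.22, 0.27, 0.35, 0.19]
    no_bias = [0.18, 0.25, 0.12, 0.20]
    t, df = welch_t(planar_box, no_bias)
    print(f"Welch's t({df:.1f}) = {t:.2f}")
```

Because each group contributes its own variance term, a group with a larger spread or fewer children pulls the degrees of freedom below the pooled value of nx + ny - 2.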

When compared directly to chance, the No Bias (free play) condition produced a significant planar viewing bias (t (13)=3.2, p< .01), as did the Planar Bias box condition (t (20)=5.7, p< .001, M=.17). The ¾ Bias condition did not (t (20)=.87, ns). These results indicate that ease of holding supported the biased viewing of planar views. That is, there was already a reliable planar bias in the No Bias condition, but the bias was strengthened when ease of holding favored viewing the planar sides. Conversely, when the planar views of the object were aligned with the ¾ angles of the box, the planar bias disappeared, because children did not consistently rotate the outer box to see the planar sides of the internal object. In short, regularities in holding behavior support the planar bias when holding preferences result in planar views, and holding behavior is a stronger force on viewing than the visual planar bias when holding preferences result in views other than planar views.
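The comparisons against chance can be sketched as a one-sample t-test on per-child difference scores. In this sketch, each child's score is assumed to be the proportion of coded views that were planar minus a chance proportion taken from simulation; the proportions and the chance value of 0.10 below are hypothetical, not data from this study, and the function names are illustrative.

```python
import math
from statistics import mean, stdev

def difference_scores(planar_props, chance):
    """Per-child proportion of planar views minus the chance proportion."""
    return [p - chance for p in planar_props]

def one_sample_t(diffs):
    """t statistic and df for testing whether the mean difference from chance is 0."""
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

if __name__ == "__main__":
    # Hypothetical per-child planar-view proportions and a hypothetical chance level.
    diffs = difference_scores([0.2, 0.4, 0.3], chance=0.10)
    t, df = one_sample_t(diffs)
    print(f"t({df}) = {t:.2f}")  # prints: t(2) = 3.46
```

A positive t indicates oversampling of planar views relative to chance, and a negative t (as for ¾ views in the Planar Bias box condition) indicates undersampling.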

Frequencies of 3/4 views

The right side of Figure 7 shows the difference scores relative to chance for the ¾ views in the three Biasing conditions. A one-way analysis of variance on these difference scores revealed a reliable effect of Biasing condition on the frequency of ¾ views (F (2,56) = 31.56, p < .001). Three-quarter views of objects were significantly less frequent in the Planar Bias box condition than in either the ¾ Bias box condition (t (40)=7.31, p< .0001) or the No Bias condition (Welch’s t (33)=3.98, p< .01). The No Bias and ¾ Bias conditions did not differ from one another in the proportions of ¾ views (Welch’s t (33)=1.94, ns).

Children chose ¾ views in the Planar Bias box condition significantly less often than expected by chance (t (20)=−4.7, p< .001). The ¾ Bias boxes did not produce a proportion of ¾ views above chance (t (20)=1.5, ns), and in the No Bias condition the mean proportion of ¾ views also did not differ from chance (t (13)=−1.77, ns).

To summarize: children in this experiment, as in Experiment 1, chose planar views more often than expected by chance when freely exploring novel objects. Placing the objects in a rectangular box so that the planar sides of the object and the box were aligned increased the proportion of planar views, suggesting that symmetrical two-handed holding may contribute to the strength of the planar bias. In contrast, placing the object in a box so that the planes of the box were aligned with ¾ views of the object did not increase the proportion of ¾ views above the proportion obtained with the unboxed object. Thus, ease of holding that favored planar views increased the frequency of planar views, but ease of holding that favored ¾ views did not increase the frequency of ¾ views. This asymmetry between the effects of the Planar Biasing and ¾ Biasing boxes could reflect a disruption of viewing patterns caused by the misalignment per se, rather than by holding. However, the pattern as a whole is also consistent with the proposal that children have a visual preference for planar views. That is, it is possible that in all conditions in Experiment 2, children were trying to select and maintain planar views, and so were not easily moved to other views, but were helped when the shape of the held object encouraged holding behavior that supported the bias. The same proposal, of a visual planar bias, is consistent with the asymmetry between the effects of the biasing conditions of Experiment 1: functional parts on the planar sides of objects led to increased planar views, but functional parts on the ¾ sides did not lead to increased ¾ views.

Overall, then, the results are consistent with the suggestion that there is a visual planar bias that can be enhanced by the locations of surface properties, and even more so by holding behaviors that promote planar viewing. However, the evidence for the visual planar bias in this experiment and in Experiment 1 is indirect. Therefore, in Experiment 3, we look for more direct evidence of the existence of a visual bias for planar views of objects in the absence of any influence either from interesting features in the planar views of the objects or from children’s preferred ways of holding the objects.

Experiment 3

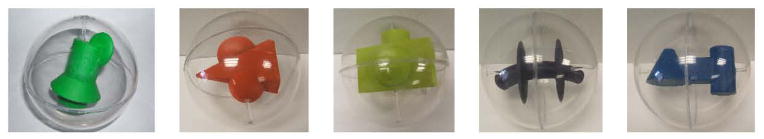

In Experiment 3, we removed the possible influences of holding biases by putting the objects used in Experiment 2 into transparent spheres (shown in Figure 8). For an object inside a sphere, the same arm and hand postures and movements would be used to obtain any view. Thus, the way in which the child grasps and holds the sphere in Experiment 3 should neither promote nor hinder planar viewing, so that, if there is a visual bias for planar views, it should be evident in this experiment. However, if children’s preference for planar views is to some degree a product of physical constraints on object holding, then children exploring objects inside spheres may show a reduced planar bias, or even no bias at all for any subset of views, because the holding behavior will be the same for all possible views.

Figure 8.

The stimuli used in Experiment 3. Objects are secured in Plexiglas spheres.

Method

Participants

Participants were 32 children (16 female) aged 18–24 months (M = 21.29 months, SD = 1.71) recruited from a predominantly English-speaking, middle-class community in the Midwest. None had taken part in Experiments 1 or 2. Four additional children were recruited but were not included because they would not wear the head camera.

Stimuli and procedure

The stimulus objects were identical in shape and color to the 5 objects used in Experiment 2. Each object was encased in a Plexiglas sphere (10 cm in diameter) as shown in Figure 8. The objects were attached to the inside walls of the spheres with clear plastic rods. Each object together with the sphere weighed approximately 205 g.

Experimental and coding procedures were identical to those described for Experiment 1.

Results and Discussion

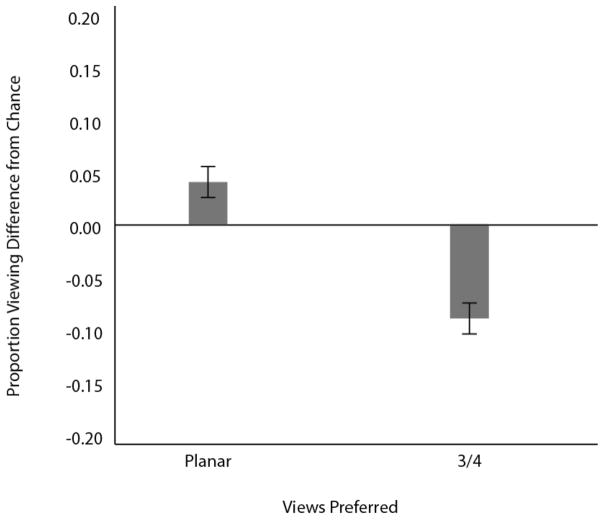

Children held the objects in spheres for an average of 57.9 sec (SD = 29.8 sec). Figure 9 shows the mean proportions of planar and ¾ views self-generated by the children. The proportion of planar views was reliably greater than expected from the Monte Carlo simulations (t (31) = 4.86, p < .0001). The proportion of planar views of objects in spheres did not differ from the proportion of planar views of the same objects, not encased in boxes, generated by a different group of children in the No Biasing condition of Experiment 2 (Welch’s t (26) < 1.00, ns). This result strongly indicates that the visual structure of the object is sufficient to yield a reliable planar viewing bias in young children. Interestingly, when the objects were in spheres and holding ease was equal for all views, ¾ views were reliably less frequent than expected by chance (t (31) = −4.11, p < .001), while planar views were selected more often than chance, as reported above. This result suggests that in the dynamic viewing of three-dimensional objects, when holding pattern is not an issue, young children avoid ¾ views and select planar views.

Figure 9.

Proportions of planar and ¾ views in Experiment 3, expressed as the difference from chance. Bars depict the difference from chance (0.00); error bars show the standard error of the mean.
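The chance baseline for these comparisons came from Monte Carlo simulations. The sketch below shows one way such a baseline could be estimated; it is not the authors' simulation, whose details are given with Experiment 1 rather than here. It assumes that view directions are sampled uniformly over the viewing sphere and that a view counts as planar when the line of sight lies within a hypothetical angular tolerance of one of the object's six face normals. The function names and the tolerance are illustrative assumptions.

```python
import math
import random

# Face normals of an idealized object: its six planar views (assumption).
AXES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def random_direction(rng):
    """Uniformly distributed unit vector on the viewing sphere."""
    while True:
        # Normalizing an isotropic Gaussian vector gives a uniform direction.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 0:
            return [c / norm for c in v]

def is_planar(direction, tolerance_deg):
    """True if the line of sight lies within tolerance_deg of any face normal."""
    cos_tol = math.cos(math.radians(tolerance_deg))
    return any(sum(d * a for d, a in zip(direction, axis)) >= cos_tol
               for axis in AXES)

def chance_planar_proportion(tolerance_deg, n_samples=200_000, seed=0):
    """Monte Carlo estimate of how often a random view counts as planar."""
    rng = random.Random(seed)
    hits = sum(is_planar(random_direction(rng), tolerance_deg)
               for _ in range(n_samples))
    return hits / n_samples

if __name__ == "__main__":
    print(round(chance_planar_proportion(10), 3))
```

With a 10-degree tolerance, the estimate comes out close to 0.046, so under these assumptions fewer than 5% of randomly sampled views would be coded as planar.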

In sum, the results of Experiment 3 indicate that toddlers’ self-generated views are visually biased toward object views with a certain geometric structure, in which the planar faces of the objects are perpendicular to the line of sight rather than rotated in depth relative to the child’s viewing angle. In addition, the results of Experiments 1 and 2 tell us that visually interesting and manipulable features centered in a planar view, as well as object holding biases, can increase the strength of the observed planar bias in young children’s self-generated object views.

General Discussion

When visually examining objects for the purpose of later recognition, adults are strongly biased to view the planar sides of the objects (Harman et al., 1999; James, Humphrey & Goodale, 2001; Pereira et al., 2010). When visually and manually exploring familiar objects as well as novel variants of those objects, 18 to 24 month olds hold and handle objects in ways that also reliably, though much more weakly, lead to an oversampling of planar views relative to the numbers of such views that infants might be expected to happen upon by chance (Pereira et al., 2010). It is important to note that when we refer to a planar bias, we do not mean to suggest that children show themselves a preponderance of planar views. In this study and in others, children looked at planar views significantly more often than expected by chance, but still saw more ¾ views than planar views overall, because the amount of the viewing sphere that consists of possible planar views is so much smaller than the amount that consists of ¾ views.
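The size difference can be made concrete with a worked equation. Assume, purely for illustration, that a view counts as planar when the line of sight falls within an angle $\theta$ of one of the object's six face normals (the coding criterion actually used is defined with Experiment 1, not here). Each such region is a spherical cap with area $2\pi(1-\cos\theta)$ on a unit viewing sphere of area $4\pi$, and the six caps do not overlap as long as $\theta \le 45^\circ$, because adjacent face normals are $90^\circ$ apart:

$$
P_{\text{planar}}(\theta) \;=\; 6 \cdot \frac{2\pi\,(1-\cos\theta)}{4\pi} \;=\; 3\,(1-\cos\theta), \qquad \theta \le 45^\circ .
$$

For $\theta = 10^\circ$, $P_{\text{planar}} = 3(1 - 0.985) \approx 0.046$, and for $\theta = 15^\circ$, $P_{\text{planar}} \approx 0.102$. Under this assumption, roughly 90% to 95% of the viewing sphere lies outside the planar regions, so even a reliable oversampling of planar views can leave non-planar views in the majority.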

The large difference between the numbers of possible planar views and possible ¾ views actually makes children’s planar preference more impressive, as that preference appears to reflect children’s deliberate efforts to see planar views. As the results of the present experiments show, interesting object properties located on the planar surfaces increase the number of planar views that 18 to 24 month olds show themselves, and arranging that the easiest way of holding the object presents the child with planar views also increases the frequency of those views. However, the planar bias is still reliable even when these supports are removed. These findings support the proposal that the oversampling of planar views observed in young children is, at least in part, a reflection of preferences for certain visual inputs: in effect, toddlers are biased to hold objects in ways that yield specific visual properties of those objects.

Toddlers, of course, are old enough to have been handling objects for some time, and thus the present data cannot tell us whether the visual bias observed here originated in earlier experiences constrained by the ease of holding or by the likely location of interesting features. Addressing that question requires studying these potential biases in even younger infants. In the rest of the discussion, we set these possible origins aside to consider the visual properties that might underlie a visual bias, how that bias might influence and be influenced by holding and object properties, and how experiences of planar views might support the development of visual object recognition.

A developing visual bias

Planar views have a number of (inter-related) visual properties that set them apart from other views. First, several theories of visual object recognition (e.g., Biederman, 1987; Lowe, 1987; Hoffman & Richards, 1985; Gibson, Lazareva, Gosselin, Schyns, & Wasserman, 2007) suggest that the non-accidental and nonmetric (i.e., entirely present or entirely absent) properties of edges, such as symmetry, linearity, co-curvilinearity, and cotermination, may be more stable and easier to see in planar views (e.g., Blais, Arguin & Marleau, 2009). This is particularly true for those planar views, and small variations around planar views, in which the major axis is perpendicular to the line of sight (Cutzu & Tarr, 2007; Rosch, Simpson, & Miller, 1976). Therefore, infants could be biased towards visual images that reveal key shape properties, and thus toward planar views. Second, planar views are more distinct than other views (Niimi & Yokosawa, 2008): adults discriminate changes more precisely in views that are close to planar views, but are largely insensitive to similar variation around nonplanar views. Thus, infants could be attracted to the uniqueness of the planar views per se. Third, and again reflecting the greater distinctness of planar views, the visual bias could be related to the amount of visual information available in dynamic viewing centered around planar views. As shown in Figure 2, small variations (with small head or body movements) around planar views yield new information about object structure, which similar variations around ¾ views do not. A critical question for future research is how these three interrelated properties of planar views influence infants’ choices of object views.
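The third property, that small movements around a planar view reveal new information while similar movements around a ¾ view do not, can be illustrated with a toy model. The sketch assumes an idealized box-shaped object rotated about its vertical axis and treats "new information" narrowly as a change in which side faces are visible. It illustrates the geometric point made above rather than any model used in this research, and `visible_faces` is a hypothetical name.

```python
import math

# Outward normals (x, y) of the four side faces of an idealized box-shaped
# object; top and bottom faces are ignored in this sketch.
SIDE_FACES = {"front": (0.0, -1.0), "right": (1.0, 0.0),
              "back": (0.0, 1.0), "left": (-1.0, 0.0)}

def visible_faces(yaw_deg, eps=1e-9):
    """Side faces visible to a viewer looking along +y after the object yaws.

    A face is visible when its rotated normal points back toward the viewer,
    i.e. has a positive component along -y. Faces seen exactly edge-on
    (component within eps of zero) are counted as not visible.
    """
    t = math.radians(yaw_deg)
    seen = set()
    for name, (x, y) in SIDE_FACES.items():
        ry = x * math.sin(t) + y * math.cos(t)  # y-component after rotation
        if -ry > eps:
            seen.add(name)
    return frozenset(seen)

if __name__ == "__main__":
    for yaw in (-5, 0, 5, 40, 45, 50):
        print(yaw, sorted(visible_faces(yaw)))
```

Under these assumptions, yaws of -5, 0, and 5 degrees around the planar view each expose a different set of faces, whereas yaws of 40, 45, and 50 degrees around the ¾ view all expose the same two faces.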

Pereira et al. (2010) found that the planar bias in manual exploration increased substantially from 12 months of age to 36 months and from 36 months to adulthood. One possible reason for this increase might be that children have to learn how to hold objects in ways that stabilize or optimize planar views. The properties of objects – where interesting features are placed, how easy they are to hold in certain orientations – might support this learning. If this is so, then the visual bias itself might strengthen with development as experience in manually generating planar views accumulates over time. Additional goals for future work are to examine the planar bias in purely visual tasks that assess its strength and its relation to the distinct visual properties of planar views as a function of age, and to examine the role of manual activities in strengthening that bias.

Building visual object representations

The importance of understanding the planar view bias and its development derives from the potential role of such a bias in building more view-independent object representations. Accumulating evidence and theory suggest that human visual object recognition depends on several different kinds of representations (Hayward, 2003; Peissig & Tarr, 2007). The extant evidence suggests a protracted developmental course leading to less view-dependent and more object-centered representations (Smith, 2009; Juttner, Muller & Rentschler, 2006). Recent work shows that infants who have more experience in the manual exploration of objects have more robust expectations about unseen views of novel objects (Soska et al., 2010). Other research suggests that more view-independent representations increase in middle childhood and into adolescence (e.g., Juttner et al., 2006; Juttner, Wakui, Petters, Kaur, & Davidson, in press). The planar viewing bias observed in the present study might be expected to play a role in this developmental trend. Several contemporary theories suggest that dynamic views, and perhaps self-generated views in particular (whether produced through manual actions or other means), may be especially critical to the development of object-centered representations (Graf, 2006; Xu & Kuipers, 2009). Graf (2006; see also Farivar, 2009; Harman & Humphrey, 1999) proposed that dynamic viewing and alignment of views around the major axis of an object may be the mechanism through which separate views are integrated into unified object representations. An early visual bias for planar views may encourage sampling of views around one axis of rotation and thus play a role in the early development of structural representations of three-dimensional shape.

There is some preliminary evidence consistent with this view. Several studies (Pereira & Smith, 2009; Smith, 2003) suggest that between 18 and 24 months, there is a major shift in children’s ability to recognize common objects (ice cream cone, car) from sparse representations of 3-dimensional shape – the kinds of representations that have been proposed to underlie view-independent object recognition (e.g., Biederman, 1987). A recent correlational study shows that children who show stronger biases for planar views when manually and visually exploring objects are also more likely to recognize common objects from abstract representations of 3-dimensional shape (James et al., 2013).

In summary, visual object recognition depends on the specific views of objects experienced by the perceiver. Active perceivers play a strong role in choosing just what views they see. The work presented here serves to highlight the importance of active learning, in the form of visual-motor interactions early in development, in generating visual information and thus aiding children’s learning about objects in the world. Past research has indicated that toddlers as well as adults are biased to select some object views over others. The main contribution of the present experiments is evidence that this bias in toddlers is primarily visual, although it is also influenced by the location of interesting surface properties and by whether the object is easily held in a position that yields planar views. Understanding the origins and nature of these early self-generated object views is fundamental to understanding how and why the mature object recognition system has the properties that it does, and the developmental pathway to that mature system.

Acknowledgments

This research was supported by funding from the NIH National Institute of Child Health and Human Development, HD057077 and HD28675. Thanks are due to JeanneMarie Heeb for management of the data collection and coding.

References

- Biederman I. Recognition-by-components: a theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Blais C, Arguin M, Marleau I. Orientation invariance in visual shape perception. Journal of Vision. 2009;9(2). doi: 10.1167/9.2.14.

- Blanz V, Tarr MJ, Bülthoff HH, Vetter T. What object attributes determine canonical views? Perception. 1999;28(5):575–600. doi: 10.1068/p2897.

- Bulthoff HH, Edelman S. Psychophysical support for a two-dimensional view interpolation theory of object recognition. Proceedings of the National Academy of Sciences. 1992;89(1):60. doi: 10.1073/pnas.89.1.60.

- Bushnell EW. Visual-tactual knowledge in 8-, 9½-, and 11-month-old infants. Infant Behavior and Development. 1982;5:63–75.

- Cutzu F, Tarr MJ. Representation of three-dimensional object similarity in human vision. Paper presented at the SPIE Electronic Imaging: Human Vision and Electronic Imaging II; San Jose, CA. 2007.

- Farivar R. Dorsal-ventral integration in object recognition. Brain Research Reviews. 2009;61(2):144–153. doi: 10.1016/j.brainresrev.2009.05.006.

- Graf M. Coordinate transformations in object recognition. Psychological Bulletin. 2006;132(6):920–945. doi: 10.1037/0033-2909.132.6.920.

- Harman KL, Humphrey GK. Encoding regular and random sequences of views of novel, 3-D objects. Perception. 1999;28:601–615. doi: 10.1068/p2924.

- Harman KL, Humphrey GK, Goodale MA. Active manual control of object views facilitates visual recognition. Current Biology. 1999;9:1315–1318. doi: 10.1016/s0960-9822(00)80053-6.

- Harries MH, Perrett DI, Lavender A. Preferential inspection of views of 3-D model heads. Perception. 1991;20:669–680. doi: 10.1068/p200669.

- Hayward WG. After the viewpoint debate: where next in object recognition? Trends in Cognitive Sciences. 2003;7(10):425–427. doi: 10.1016/j.tics.2003.08.004.

- Hoffman DD, Richards WA. Parts of recognition. Cognition. 1985;18:65–96. doi: 10.1016/0010-0277(84)90022-2.

- James KH, Humphrey GK, Goodale MA. Manipulating and recognizing virtual objects: where the action is. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale. 2001;55(2):111–120. doi: 10.1037/h0087358.

- James KH, Humphrey GK, Vilis T, Corrie B, Baddour R, Goodale MA. “Active” and “passive” learning of three-dimensional object structure within an immersive virtual reality environment. Behavior Research Methods, Instruments & Computers. 2002;34:383–390. doi: 10.3758/bf03195466.

- James KH, Swain S, Smith LB, Jones SS. Young children’s self-generated object views and object recognition. Journal of Cognition and Development. 2013. doi: 10.1080/15248372.2012.749481. Accepted, published online.

- James TW, Humphrey GK, Gati JS, Menon RS, Goodale MA. Differential effects of viewpoint on object-driven activation in dorsal and ventral streams. Neuron. 2002;35:793–801. doi: 10.1016/s0896-6273(02)00803-6.

- Juttner M, Muller A, Rentschler I. A developmental dissociation of view-dependent and view-invariant object recognition in adolescence. Behavioural Brain Research. 2006;175:420–424. doi: 10.1016/j.bbr.2006.09.005.

- Juttner M, Wakui E, Petters D, Kaur S, Davidson J. Developmental trajectories of part-based and configural object recognition in adolescence. Developmental Psychology. In press. doi: 10.1037/a0027707.

- Keehner M, Hegarty M, Cohen C, Khooshabeh P, Montello DR. Spatial reasoning with external visualizations: What matters is what you see, not whether you interact. Cognitive Science. 2008;32(7):1099–1132. doi: 10.1080/03640210801898177.

- Kuipers JB. Quaternions and rotation sequences. Princeton, NJ, USA: Princeton University Press; 1999. p. 21.

- Locher, Vos, Stappers, Overbeeke. 2000.

- Lowe DG. Three-dimensional object recognition from single two-dimensional images. Artificial Intelligence. 1987;31:355–395.

- Marr D. Vision. San Francisco: Freeman; 1982.

- Niemann T, Lappe M, Hoffmann KP. Visual inspection of three-dimensional objects by human observers. Perception. 1996;25:1027–1042. doi: 10.1068/p251027.

- Niimi R, Yokosawa K. Determining the orientation of depth-rotated familiar objects. Psychonomic Bulletin & Review. 2008;15(1):208–214. doi: 10.3758/pbr.15.1.208.

- Palmeri TJ, Gauthier I. Visual object understanding. Nature Reviews Neuroscience. 2004;5:291–303. doi: 10.1038/nrn1364.

- Peissig J, Tarr M. Visual object recognition: Do we know more now than we did 20 years ago? Annual Review of Psychology. 2007;58:75–96. doi: 10.1146/annurev.psych.58.102904.190114.

- Pereira A, Smith LB. Developmental changes in visual object recognition between 18 and 24 months of age. Developmental Science. 2009;12:57–80. doi: 10.1111/j.1467-7687.2008.00747.x.

- Pereira A, James K, Jones S, Smith LB. Early biases and developmental changes in self-generated object views. Journal of Vision. 2010;10(11):1–13. doi: 10.1167/10.11.22.

- Perrett DI, Harries MH. Characteristic views and the visual inspection of simple faceted and smooth objects: “Tetrahedra and potatoes.” Perception. 1988;17:703–720. doi: 10.1068/p170703.

- Rosch E, Simpson C, Miller RS. Structural bases of typicality effects. Journal of Experimental Psychology: Human Perception and Performance. 1976;2(4):491.

- Ruff HA. Infants’ manipulative exploration of objects: Effects of age and object characteristics. Developmental Psychology. 1984;20:9–20.

- Ruff HA. Components of attention during infants’ manipulative exploration. Child Development. 1986;57:105–114. doi: 10.1111/j.1467-8624.1986.tb00011.x.

- Savarese S, Fei-Fei L. 3D generic object categorization, localization and pose estimation. In: IEEE 11th International Conference on Computer Vision (ICCV 2007). IEEE; 2007 Oct. pp. 1–8.

- Smith LB. Learning to recognize objects. Psychological Science. 2003;14(3):244–250. doi: 10.1111/1467-9280.03439.

- Smith LB. From fragments to geometric shape: Changes in visual object recognition between 18 and 24 months. Current Directions in Psychological Science. 2009;18(5):290–294. doi: 10.1111/j.1467-8721.2009.01654.x.

- Smith LB, Pereira A. Shape, action, symbolic play and words: Overlapping loops of cause and consequence in developmental process. In: Johnson S, editor. Neo-constructivism: The new science of cognitive development. Oxford University Press; 2009.

- Soska KC, Adolph KE, Johnson SP. Systems in development: Motor skill acquisition facilitates three-dimensional object completion. Developmental Psychology. 2010;46(1):129–138. doi: 10.1037/a0014618.

- Xu C, Kuipers B. Construction of the object semantic hierarchy. Sensors. 2010;24:26.

- Yoshida H, Smith LB. What’s in view for toddlers? Using a head camera to study visual experience. Infancy. 2008;13(3):229–248. doi: 10.1080/15250000802004437.