Abstract

This study examined unisensory and multisensory speech perception in 8–17 year old children with autism spectrum disorders (ASD) and typically developing controls matched on chronological age, sex, and IQ. Consonant–vowel syllables were presented in visual only, auditory only, matched audiovisual, and mismatched audiovisual (“McGurk”) conditions. Participants with ASD displayed deficits in visual only and matched audiovisual speech perception. Additionally, children with ASD reported a visual influence on heard speech in response to mismatched audiovisual syllables over a wider window of time relative to controls. Correlational analyses revealed associations between multisensory speech perception, communicative characteristics, and responses to sensory stimuli in ASD. Results suggest atypical speech perception is linked to broader behavioral characteristics of ASD.

Keywords: Autism spectrum disorders, Speech perception, Multisensory integration, Auditory, Visual, McGurk effect, Sensory, Communication

Introduction

The literature associated with autism spectrum disorders (ASD) is replete with anecdotal and empirical accounts of atypical responses to sights, sounds, and other sensory stimuli (refer to Iarocci and McDonald 2006 for an in-depth review). Although not included in the present diagnostic criteria (American Psychiatric Association 2000), the prevalence and persistence of these problems have led researchers to propose that disruptions in sensation and perception be considered a core deficit in ASD (Billstedt et al. 2007), and sensory abnormalities are included in the proposed revision of the diagnostic criteria (American Psychiatric Association 2012). Some theories suggest that children with autism have a bias for processing the local features of sensory stimuli and difficulty integrating discrete featural information to derive higher-level meaning from their experiences, possibly due to atypical “binding” of the individual elements that make up a real world environmental stimulus (Brock et al. 2002; Frith and Happé 1994; Happé 2005; Happé and Frith 2006). Integral to this idea is multisensory binding, wherein an individual binds sensory inputs from different modalities into a single, unified percept. Recent work has demonstrated that children with ASD bind low-level (i.e., simple, non-social) auditory and visual stimuli (such as flashes and pure tone beeps) over a wider window of time than their typically developing peers (Foss-Feig et al. 2010; Kwakye et al. 2011). Despite this emerging evidence of altered low-level multisensory integration, it remains unclear if higher-level (i.e., complex, social) multisensory processes are impacted in ASD.

Speech perception is a higher-level multisensory task in which visual perception of a dynamic social stimulus (i.e., the moving face and neck) shapes the perception of a complex acoustic stream (Massaro 1998). To examine multisensory speech perception in ASD, several previous studies have utilized the McGurk paradigm, a multisensory-mediated perceptual illusion in which observers frequently fuse incongruent or “mismatched” auditory and visual speech tokens (i.e., “ba” and “ga”) and report the percept of an illusory syllable (e.g., “da” or “tha”) (de Gelder et al. 1991; Iarocci et al. 2010; Irwin et al. 2011; Magnée et al. 2008; Massaro and Bosseler 2003; Mongillo et al. 2008; Williams et al. 2004). This “McGurk effect” is thought to reflect integration or synthesis of information from the auditory and visual channels (Calvert and Thesen 2004). Some prior studies have also presented the stimuli associated with the McGurk task in auditory only and visual only conditions to simultaneously assess unisensory speech perception in ASD (de Gelder et al. 1991; Iarocci et al. 2010; Williams et al. 2004). However, despite using this common tool to examine audiovisual speech perception in ASD, researchers have come to conflicting conclusions.

Prior work has alternately concluded that children with ASD have deficits in audiovisual integration in the face of intact unisensory abilities (de Gelder et al. 1991), deficits in audiovisual integration due to deficient unisensory abilities (Williams et al. 2004), and differences, but not deficits, in audiovisual integration due to a specific visual deficit (Iarocci et al. 2010). Most likely, differences in experimental design have contributed to these discrepant findings. For example, several of the past studies have instructed participants to report what they heard, introducing an auditory response bias. In addition, multisensory integration has been operationalized in different ways across studies. For example, in the initial description of the McGurk effect, McGurk and MacDonald (1976, 1978) assessed multisensory integration by the rate of fused responses, defined as those responses “where information from the two modalities is transformed into something new with an element not presented in either modality.” Subsequent work has not always conformed to this convention. In addition, previous studies have imposed verbal response demands on participants (i.e., asked participants to repeat or imitate the speaker’s production), a potential confound in children with ASD, who have highly variable verbal skills and documented deficits in motor imitation (Rogers et al. 2003; Stone et al. 1997; Tager-Flusberg et al. 2005; Williams et al. 2004).

In addition to these conflicting findings, several critical aspects of multisensory speech perception in ASD warrant further investigation. For example, there is limited information regarding speech perception in individuals with ASD as it occurs in everyday settings (that is, in a congruent and synchronous audiovisual condition, as opposed to the incongruent condition used in the McGurk task). Although a number of prior studies have included matched audiovisual (i.e., normal) speech conditions, these stimuli are typically not the focus of analyses. Additionally, the temporal window for integration of audiovisual speech information (the period of time over which auditory and visual speech input is likely to be bound together and perceived as arising from the same event) has not been exhaustively explored in children with ASD (though a few recent studies, including Bebko et al. 2006; Grossman et al. 2009; Irwin et al. 2011, have examined detection of asynchrony for audiovisual speech stimuli at a limited number of temporal offsets). This is particularly germane given recent work by Foss-Feig et al. (2010) reporting that children with ASD integrate non-speech visual and auditory cues (flashes and beeps) over a temporal window approximately twice as wide as that of their typically developing peers. As speech perception depends on accurate detection of dynamic temporal cues (Summerfield 1987), it is reasonable to predict that speech perception in the ASD population may be significantly impacted if there is a tendency to combine visual and auditory cues over a longer period of time. Finally, more information is needed regarding the possible relationships between multisensory speech perception, severity of autism symptomatology, and the tendency to respond atypically to sensory stimuli across environmental settings in ASD.

In the current study, our objective was to examine how children with ASD perceive unisensory (i.e., auditory only, visual only) and multisensory (i.e., audiovisual) speech stimuli, and to relate these processes to symptoms commonly associated with ASD. Towards this aim, we used four consonant–vowel (CV) syllables associated with the McGurk effect (visually-compatible “ga”, auditory-compatible “ba”, and the syllables associated with the audiovisual fusion, “da” and “tha”). Syllables were presented in visual only, auditory only, matched audiovisual, and mismatched audiovisual (“McGurk”) conditions. Mismatched stimuli were presented with varying degrees of asynchrony to examine the temporal constraints of the multisensory binding process (additional detail on stimulus types is presented in the “Methods” section). To avoid potential confounds noted in prior work (de Gelder et al. 1991; Iarocci et al. 2010; Williams et al. 2004), unbiased instructions were presented, scoring standards consistent with the seminal studies of the McGurk effect were employed (Macdonald and McGurk 1978; McGurk and Macdonald 1976), and response demands were reduced by providing a multiple-choice button press response set. Clinical measures were used to explore associations between speech perception, symptom severity, and responses to sensory stimuli in everyday settings.

Specific research questions included: (a) Do children with ASD present with a specific multisensory speech perception deficit in comparison with typically developing peers in response to matched audiovisual stimuli?; (b) Do children with ASD present with deficits in unisensory (i.e., auditory only, visual only) speech perception relative to typically developing peers on a task that does not require a verbal response?; (c) Do children with ASD bind mismatched audiovisual speech information differently (i.e., display a visual influence on heard speech at a different magnitude or over a wider window of time) relative to typically developing peers when presented with a McGurk choice set that does not bias responses towards a single modality or require a verbal response?; (d) Does multisensory speech perception vary according to ASD symptom severity and sensory processing abnormalities as indexed by standard clinical measures?

Methods

Participants

Children with ASD and typically developing controls (TD) were recruited for a large-scale study of multisensory perception. Results of low-level multisensory tasks from this project have previously been published (Foss-Feig et al. 2010; Kwakye et al. 2011). A subset of participants also completed tasks examining multisensory speech perception. These children comprise the study sample in the present report.

Eligibility criteria for inclusion in this study were as follows: (a) chronological age between 8 and 17 years; (b) full scale and verbal IQ standard scores greater than or equal to 70 on the Wechsler Abbreviated Scale of Intelligence (WASI; Wechsler 1999); (c) normal or corrected-to-normal hearing and vision per parent report; (d) completion of 80 % of trials on multisensory speech perception tasks; and (e) susceptibility to the McGurk illusion as demonstrated by report of at least one perceived fusion on the McGurk task.

Additional eligibility criteria for children with ASD included: (a) diagnosis of Autistic Disorder, Asperger’s Disorder, or Pervasive Developmental Disorder-Not Otherwise Specified confirmed by research-reliable administrations of the Autism Diagnostic Observation Schedule Module 3 (ADOS; Lord et al. 2000) and Autism Diagnostic Interview-Revised (ADI-R; Lord et al. 1994), as well as the clinical judgment of a licensed psychologist; (b) no history of seizure disorders; and (c) no diagnosed genetic disorders, such as Fragile X or tuberous sclerosis.

Additional eligibility criteria for TD participants included: (a) confirmation of TD status according to parent report of ASD symptoms below the suggested screening threshold on the Lifetime Version of the Social Communication Questionnaire (SCQ; Rutter et al. 2003); (b) no immediate family members with diagnoses of ASD; (c) no history of neurological conditions or seizure disorders; (d) no prior history or present indicators of psychiatric conditions; and (e) no diagnosed learning disorders.

The resulting final sample for the current study comprised 18 children with ASD and 18 TD controls. The experimental groups did not differ significantly in sex, chronological age, full scale IQ, verbal IQ, or performance IQ (p>.05). A significant group difference was observed in the number of symptoms associated with ASD as reported by parents on the SCQ (t(34) = 10.076, p<.001). See Table 1 for a summary of descriptive group information.

Table 1.

Group demographics

| Variable | ASD | TD | Group difference |
|---|---|---|---|
| Sex | 16 male; 2 female | 15 male; 3 female | ns |
| Age | 12 years; 4 mos (31.0 mos) | 11 years; 5 mos (23.8 mos) | ns |
| Verbal IQ | 109.2 (15.9) | 113.8 (9.4) | ns |
| Performance IQ | 110.4 (14.5) | 105.4 (9.2) | ns |
| Full scale IQ | 111.2 (16.3) | 111.0 (9.2) | ns |
| SCQ total score | 21.3 (6.9) | 3.4 (3.0) | * |

ASD autism spectrum disorders group, TD typically developing control group, SCQ Social Communication Questionnaire (Rutter et al. 2003). Groups did not significantly differ in sex, chronological age, verbal IQ, performance IQ, or full scale IQ (ns non-significant, p>.05). Groups did significantly differ in total score obtained on the SCQ (* p<.001)

Recruitment and study procedures were conducted in accordance with the approval of the Vanderbilt University Institutional Review Board. Parents provided written informed consent, and participants provided written assent prior to participation in the study. All participants were compensated for their participation.

Setting and Equipment

Experimental tasks were completed in a sound- and light-attenuated room (WhisperRoom Inc., Morristown, TN, USA). Visual stimuli were presented on a 22-inch flat-screen NEC Multisync FE992 PC monitor. Auditory stimuli were presented binaurally via Philips SBC HN110 noise-cancelling, supra-aural headphones (peak sound level 90 dB SPL). Stimulus presentation was managed by E-prime software, and responses were recorded by a 5-button serial response box (Psychology Software Tools Inc., Pittsburgh, PA, USA). Buttons of the response box were labeled for the CV syllables “ba”, “ga”, “da”, “tha”, and “other”, respectively.

Stimuli

Video recordings of a young woman speaking four CV syllables (“ba”, “ga”, “da”, and “tha”) at a natural rate and volume with a neutral facial expression were acquired with a digital video camera. The speaker’s full face and neck were visible against a white background. Visual and auditory tracks for each CV syllable were separated with the video processing program, Ulead (Video Studio). Visual only, auditory only, matched audiovisual, and mismatched audiovisual (“McGurk”) stimuli were developed from these videos for the multisensory speech perception task.

Unisensory Stimuli

In the visual only condition, children viewed the speaker’s face and neck movements without the auditory track. In the auditory only condition, participants viewed the speaker’s static face as the auditory stimulus was presented.

Matched Audiovisual Stimuli

In the matched audiovisual condition, the corresponding auditory and visual stimuli were presented as naturally spoken for the syllables “ba”, “ga”, “da”, and “tha”.

Mismatched Audiovisual (“McGurk”) Stimuli

In the mismatched audiovisual condition, the auditory stimulus “ba” was presented together with the discrepant visual stimulus “ga”. This combination has been shown to best facilitate a “fused” percept and thus served as the focus of analysis for the McGurk task (Macdonald and McGurk 1978; McGurk and Macdonald 1976). To evaluate the effects of temporal asynchrony on binding of multisensory speech information, the timing of the auditory “ba” and visual “ga” stimuli was shifted so that the visual stimulus preceded the auditory stimulus by the following stimulus onset asynchronies (SOAs): 0 ms (i.e., at the natural audiovisual offset observed for the matched syllable “ga”), 33, 66, 100, 166, 233, and 300 ms.

Procedure

Participants were prompted to attend to a fixation cross positioned at the center of the computer screen and were given the following instructions: “You will watch a video of a person speaking syllables. Please pay attention to the person’s mouth. After the video, you will push a button to tell us which syllable she said.” Participants indicated their perceptions of the stimuli by pushing the appropriate button on the response box following termination of the video clip for each trial.

Prior to initiating the experimental task, participants completed one or more practice sessions, each of which consisted of an abbreviated computer task comprising 8 matched CV syllable trials (2 each of “ba”, “ga”, “da”, and “tha”). Practice was terminated once the children confirmed that they understood the task instructions and were comfortable with the procedures. In the experimental task, 10 trials of each stimulus type were presented in random order.

Throughout the task, participants were continuously monitored by a member of the research team via closed circuit video cameras to ensure attention to the task and compliance with instructions. Proactive strategies to promote on-task behavior and maintain participant motivation included a visual schedule, scheduled breaks, and positive reinforcement. In isolated instances of off-task or inattentive behavior, the task was interrupted temporarily and strategies such as increased verbal prompting and reinforcement were implemented to increase the child’s engagement. If necessary, a parent or member of the research team accompanied the participant in the testing booth. All participants completed the speech perception task within a single testing session.

Clinical Measures

Symptom Severity

Participant scores on the Communication and Reciprocal Social Interaction domains of the ADOS Module 3 (Lord et al. 2000) were used as indicators of symptom severity.

Sensory Profiles

The Sensory Profile Caregiver Questionnaire (SP) was used to evaluate participants’ responses to sensory stimuli in everyday settings (Dunn 1999). On the SP, parents or caregivers assign a qualitative frequency rating (i.e., ranging from “always” to “never”) to 125 statements regarding how their child responds to various sensory experiences (e.g., “holds hands over ears to protect ears from sound”, “is bothered by bright lights after others have adapted to the light”). These ratings yield quantitative summary scores for sections, including Auditory Processing, Visual Processing, and Multisensory Processing, and for factors, such as Inattention. The Inattention factor is of interest as it represents an aggregate of items from the Auditory Processing and Multisensory Processing sections (e.g., “can’t work with background noise”) that may be sensitive to atypical processing in the modalities of interest in the present study. Scores index how the child’s behavior compares to typically developing peers. The rating scale is offset in either direction of “typical” performance, indicating whether atypical performance reflects hyposensitivity (i.e., Less Than Others) or hypersensitivity (i.e., More than Others). Scores in the aforementioned sections may thus provide particularly valuable information regarding participants’ attention or “sensitivity” to environmental stimuli believed to tap auditory, visual, or multisensory processing.

Statistical Analysis Plan

Unisensory and Matched Audiovisual Stimuli

For the unisensory and matched audiovisual conditions, percent identification accuracy was calculated for each participant. Syllable type (“ba”, “ga,” “tha”, and “da”) was collapsed within modalities (auditory only, visual only, and matched audiovisual) to simplify the model and to focus on effects of interest between diagnostic groups.
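The scoring step described above can be illustrated with a minimal sketch. It is not the authors' analysis code; the trial-log layout, the modality labels, and the function name `accuracy_by_modality` are assumptions introduced here for illustration. Syllables are pooled within each modality, as in the text.

```python
from collections import defaultdict


def accuracy_by_modality(trials):
    """Percent correct per modality, pooling all syllables within a modality."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for modality, presented, response in trials:
        total[modality] += 1
        correct[modality] += int(presented == response)
    return {m: 100.0 * correct[m] / total[m] for m in total}


# Hypothetical trial log for one participant:
# (modality, presented syllable, button pressed)
trials = [
    ("visual_only", "ba", "ba"),
    ("visual_only", "ga", "da"),
    ("auditory_only", "tha", "tha"),
    ("auditory_only", "da", "da"),
    ("matched_av", "ga", "ga"),
    ("matched_av", "ba", "ba"),
]
scores = accuracy_by_modality(trials)
```

Each participant then contributes one accuracy value per modality to the group comparison.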

Mismatched Audiovisual Stimuli

The proportion of trials in which participants perceived the McGurk illusion was calculated as the percentage of “da” and/or “tha” responses provided to mismatched audiovisual stimuli (auditory “ba” + visual “ga”). Mean rates of fusion reported at the synchronous (i.e., 0 ms) SOA served as indices of integration magnitude for group comparisons. In addition, individual rates of reported fusion on the McGurk task at the series of temporal offsets (i.e., SOAs) were subsequently used to calculate temporal binding windows (TBWs) for each participant (Powers et al. 2009; Stevenson and Wallace, in progress). For each child, mean rates of fusion across SOAs were normalized to his or her maximum value of perceived fusion. A psychometric sigmoid function was then fit to the rates of perceived fusion across SOAs using the glmfit function in MATLAB (Natick, MA). Individual TBWs were estimated as the width of the stimulus offset range at which participants fused the visual “ga” and auditory “ba” stimulus pair on 75 % or more of the trials.
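The window estimate can be sketched as follows. This is an illustration under stated assumptions, not the authors' MATLAB code: the fit is taken to be a binomial model with a logit link (one common way of calling `glmfit`, though the text does not state the options used), it is implemented here by a hand-written Newton iteration rather than by any library call, and the window is read off as the SOA at which the fitted curve falls to 75 % of the participant's maximum. The fusion rates are hypothetical.

```python
import math


def fit_logistic(x, y, iterations=50):
    """Fit p = 1 / (1 + exp(-(b0 + b1 * x))) by Newton's method (logit link)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p             # gradient of the log-likelihood
            g1 += (yi - p) * xi
            h00 += w                 # entries of the (negated) Hessian
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if abs(det) < 1e-12:
            break
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < 1e-10:
            break
    return b0, b1


def temporal_binding_window(soas_ms, fusion_rates, criterion=0.75):
    """SOA (ms) at which the fitted, normalized fusion curve equals `criterion`."""
    peak = max(fusion_rates)
    normalized = [r / peak for r in fusion_rates]  # normalize to own maximum
    x = [s / 100.0 for s in soas_ms]               # rescale for stable fitting
    b0, b1 = fit_logistic(x, normalized)
    crossing = (math.log(criterion / (1.0 - criterion)) - b0) / b1
    return 100.0 * crossing


# Hypothetical fusion rates for one participant at the SOAs used in the study.
soas = [0, 33, 66, 100, 166, 233, 300]
rates = [0.8, 0.8, 0.7, 0.5, 0.3, 0.1, 0.1]
tbw = temporal_binding_window(soas, rates)
```

Because only visual-leading asynchronies were tested, the sketch treats the window as one-sided, extending from 0 ms to the crossing point. A participant whose fusion rates do not decline with SOA would not yield a usable fit, which is one plausible reason curves could be fit for only a subset of participants.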

Clinical Measures

Additional analyses explored the associations between multisensory speech perception, ASD symptom severity, and reported responses to sensory stimuli in participants with ASD. Bivariate correlations were conducted to evaluate the strength and direction of the associations between two aspects of multisensory speech perception (performance accuracy in response to matched audiovisual stimuli and magnitude of reported fusion in response to synchronous mismatched audiovisual stimuli) and scores of interest derived from clinical measures (Reciprocal Social Interaction and Communication domain scores of the ADOS Module 3 and Auditory Processing, Visual Processing, Multisensory Processing, and Inattention scores of the SP).

Results

Responses to Unisensory and Matched Audiovisual Stimuli

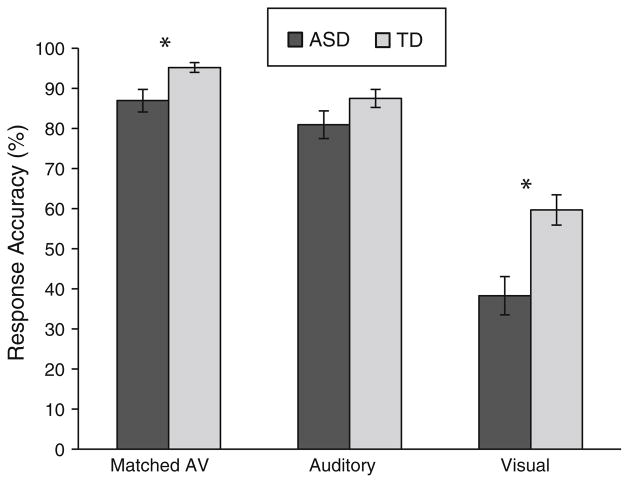

A mixed-model ANOVA with group (ASD, TD) as the between-subjects factor and modality (visual only, auditory only, and matched audiovisual) as the within-subjects factor revealed a main effect of modality (F(1.398,47.516) = 152.914, p<.001, partial η² = .818). Figure 1 shows that overall identification accuracy was highest for matched audiovisual cues (M = 91.04 %, SD = 10.02 %), was slightly reduced for auditory only cues (M = 84.19 %, SD = 12.65 %) and was substantially reduced for visual only cues (M = 48.97 %, SD = 20.97 %). The main effect of group was also significant (F(1,34) = 11.909, p<.005; partial η² = .259). On average across all conditions, children with ASD displayed reduced accuracy in identification of speech stimuli (M = 68.69 %, SD = 12.2 %) in comparison to TD controls (M = 80.77 %, SD = 7.69 %).

Fig. 1.

Identification accuracy according to stimulus modality and diagnostic group. ASD autism spectrum disorders group, TD typically developing control group. This graph represents the mean identification accuracy of children with ASD (black bars) and TD controls (gray bars) across stimulus modalities. Error bars represent standard error of the mean (SEM). Children with ASD show significantly poorer performance in visual only and matched audiovisual conditions in comparison with TD peers
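As a consistency check on the effect sizes reported for the two main effects, partial eta squared can be recovered from each F ratio and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below is illustrative only; the function name is an assumption.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values as reported for the main effects of modality and group.
modality_effect = partial_eta_squared(152.914, 1.398, 47.516)
group_effect = partial_eta_squared(11.909, 1, 34)
```

Rounded to three decimals, both values agree with the effect sizes given in the text (.818 and .259).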

The results also revealed a significant interaction between group and modality (F(1.398,47.516) = 4.922, p<.05, partial η² = .126), suggesting that the difference in speech perception between children with ASD and TD depends upon the modality in which the cues are presented. Independent samples t-tests were performed for each stimulus modality to clarify the nature of this interaction. Mean accuracy in response to matched audiovisual stimuli was significantly poorer for participants with ASD (M = 86.91 %, SD = 11.99 %) in comparison with TD peers (M = 95.17 %, SD = 5.19 %), t(23.164) = −2.681, p<.05. Children with ASD (M = 38.28 %, SD = 20.23 %) also showed significantly reduced identification accuracy in relation to TD controls (M = 59.65 %, SD = 15.94 %) for visual only stimuli, t(34) = −3.521, p<.001. However, children with ASD (M = 80.9 %, SD = 14.63 %) and TD controls (M = 87.48 %, SD = 9.61 %) did not differ significantly in identification accuracy for auditory only stimuli, t(34) = −1.595, p = .12.
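The fractional degrees of freedom reported for the matched audiovisual comparison, t(23.164), suggest an unequal-variance (Welch) test, although the text does not name the procedure. Under that assumption, and taking n = 18 per group from the Participants section, the statistic can be approximately reproduced from the reported means and standard deviations. The function name is hypothetical.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1
    v2 = sd2 ** 2 / n2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Matched audiovisual accuracy, ASD versus TD, from the reported summary values.
t_stat, df = welch_t(86.91, 11.99, 18, 95.17, 5.19, 18)
```

The result is close to the reported values; small discrepancies are expected because the inputs are rounded summary statistics rather than raw data.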

Responses to Mismatched Audiovisual (“McGurk”) Stimuli

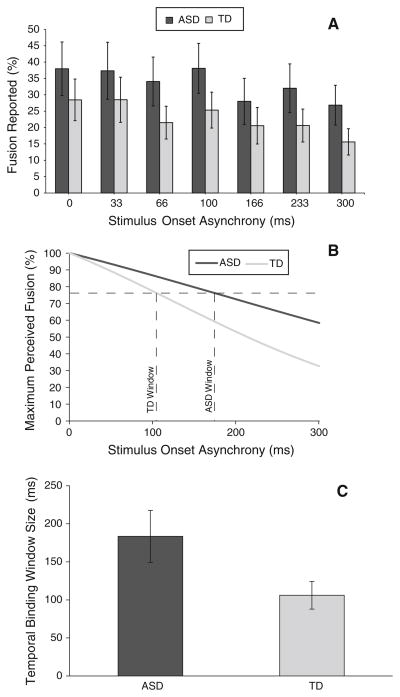

There was not a significant difference in the mean percentage (i.e., magnitude) of fusions reported by children with ASD (M = 37.94 %, SD = 34.71 %) and TD controls (M = 28.44 %, SD = 27.04 %) for the mismatched audiovisual stimuli presented simultaneously (i.e., at the 0 ms SOA), t(34) = .916, p>.05. However, when analyses were structured to look across the range of tested SOAs (sigmoid curves were successfully fit to 13 participants in each group), there was a marginally significant difference in the width of the TBWs for children with ASD and TD, t(18.362) = 2.083, p = .051. In terms of mean values, children with ASD had larger TBWs when compared with TD controls (ASD M = 183.46 ms, SD = 117.96 ms versus TD M = 106.15 ms, SD = 63.18 ms) (Fig. 2).

Fig. 2.

Temporal binding of audiovisual speech according to diagnosis. ASD autism spectrum disorders group, TD typically developing control group. a Represents the raw values of reported fusion for children with ASD (black) and TD (gray) at each stimulus onset asynchrony. b Depicts the sigmoid curves for representative ASD and TD participants derived from normalized individual data. c Presents the mean temporal binding windows of children with ASD and TD estimated as the width of the stimulus offset range at which participants fused the visual “ga” and auditory “ba” stimulus pair on 75 % or more of the trials. Error bars represent SEM. Children with ASD integrate information over a marginally wider window of time than controls (p = .051). Note: There was not a significant difference in the mean percentage (i.e., magnitude) of fusions reported by children with ASD and TD controls at the 0 ms SOA

Associations with Symptom Severity and Sensory Profiles

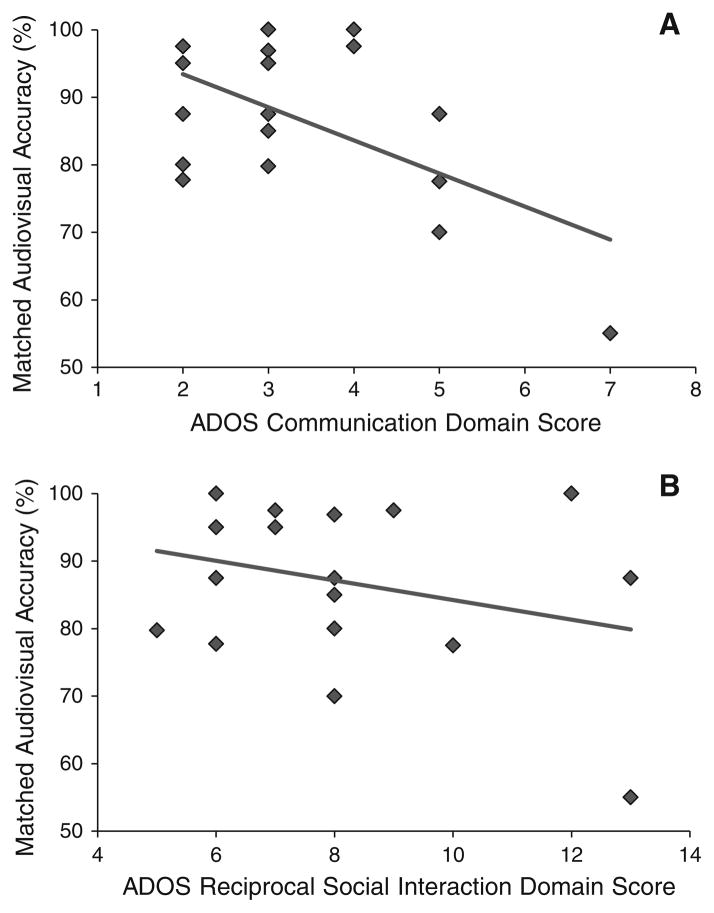

In children with ASD, symptom severity was significantly correlated with speech perception in response to matched audiovisual syllables, but not with perception in response to mismatched audiovisual (McGurk) syllables. More specifically, accuracy in identification of matched audiovisual syllables was negatively correlated with the degree of communication deficit as indexed by ADOS Module 3 Communication domain score (r = −.578, p<.05; Fig. 3a). In contrast, matched audiovisual speech perception was not significantly associated with the severity of social impairment as measured by the ADOS Module 3 Reciprocal Social Interaction domain score (r = −.291, p = .24; Fig. 3b). These results suggest that decreased multisensory speech perception for matched audiovisual stimuli tends to coincide with increased communication dysfunction in ASD (e.g., idiosyncratic use of language such as delayed echolalia or unusual intonation patterns, inability to maintain a conversational exchange, difficulty providing a verbal account of events). However, poor multisensory speech perception does not reliably relate to impairments in social characteristics associated with ASD as indexed by the ADOS Module 3 Reciprocal Social Interaction domain scale. Integration of mismatched speech cues as measured by the McGurk task at no delay (0 ms SOA) was not significantly correlated with either communication (r = .07, p>.05) or social (r = .25, p>.05) symptoms as indexed by the ADOS Module 3.

Fig. 3.

Associations between multisensory speech perception and symptom severity. ADOS Autism Diagnostic Observation Schedule (Lord et al. 2000). Multimodal identification accuracy is significantly correlated with ADOS Module 3 Communication score (r = −.578, p<.05; a), but not significantly associated with ADOS Module 3 Reciprocal Social Interaction score (r = −.291, p = .24; b) in participants with autism spectrum disorders
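The significance of a Pearson correlation can be checked by converting r to a t statistic with n − 2 degrees of freedom, t = r√(n − 2) / √(1 − r²). The sketch below applies this to the two ADOS correlations for matched audiovisual accuracy. It assumes that all 18 participants with ASD contributed to both correlations, which the text does not state explicitly; the critical value 2.120 is the two-tailed .05 cutoff for 16 degrees of freedom.

```python
import math


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing a Pearson correlation against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


N_ASD = 18            # assumed sample size for both correlations
T_CRIT_DF16 = 2.120   # two-tailed critical t, alpha = .05, df = 16

t_communication = t_from_r(-0.578, N_ASD)
t_social = t_from_r(-0.291, N_ASD)

communication_significant = abs(t_communication) > T_CRIT_DF16
social_significant = abs(t_social) > T_CRIT_DF16
```

Under these assumptions, only the Communication domain correlation exceeds the critical value.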

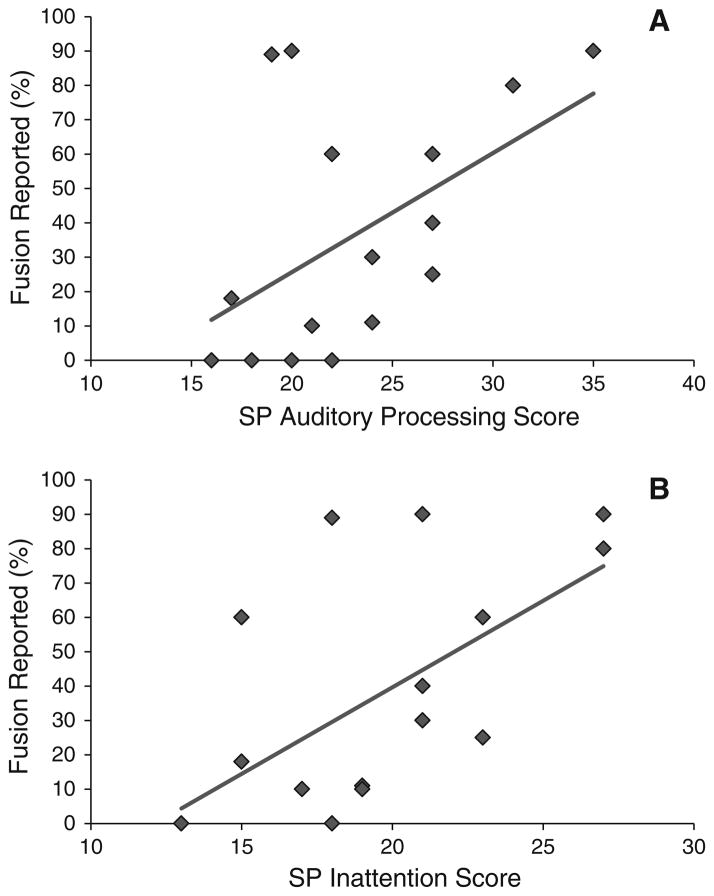

The opposite pattern of results was observed when correlations between multisensory speech perception and sensory scores were evaluated for participants with ASD. Reports of atypical responses to sensory stimuli were associated with responses to mismatched, but not matched audiovisual stimuli. More specifically, reduced multisensory binding, as indexed by reported fusion in response to mismatched (McGurk) stimuli at 0 ms, was significantly associated with Auditory Processing (r = .51, p<.05; Fig. 4a) and Inattention ratings (r = .61, p = .01; Fig. 4b), but not with Visual or Multisensory Processing scores, on the SP (ps>.05). Thus, children with ASD who reported fewer fusions on the McGurk task tended to display more unusual responses to sound and have greater difficulty attending or functioning in the face of environmental distractions, such as noise, according to parent reports. Correlations between matched audiovisual, auditory only, and visual only identification accuracy and the SP scores of interest were all non-significant (ps>.05).

Fig. 4.

Association between fusion of mismatched audiovisual information and sensory profile. SP Sensory Profile Caregiver Questionnaire. Percent reported fusion at 0 ms stimulus onset asynchrony significantly correlated with SP Auditory Processing score (r = .51, p<.05; a) and the SP Inattention score (r = .61, p = .01; b) in participants with autism spectrum disorders

Evaluation of Possible Age-Related Effects

Age did not significantly correlate with identification accuracy for unisensory (visual only or auditory only) or matched audiovisual syllables, fusion for synchronously presented mismatched audiovisual syllables, or size of multisensory TBWs for the age range represented by the present participant sample (all p-values>.10).

Discussion

This study examined audiovisual speech perception in children with ASD and a group of TD controls who did not significantly differ in chronological age or generalized cognitive ability as indexed by a common IQ test. The results make several key contributions to the extant literature on speech perception in ASD. First, by presenting participants with a greater number of unisensory (visual only and auditory only) and matched audiovisual stimuli than prior studies, we demonstrated that children with ASD display deficits in their ability to identify both visual only and matched audiovisual speech stimuli. Second, by capitalizing on previously identified temporal constraints of the McGurk effect, we discovered that children with ASD demonstrate atypical temporal binding of audiovisual speech stimuli. Finally, by exploring associations between speech perception, symptom severity, and sensory profiles, we unveiled larger links between multisensory speech perception and characteristics associated with ASD.

Identification of Unisensory and Multisensory Speech Signals

Presentation of syllables in matched audiovisual and unisensory conditions revealed that children with ASD display impairments in visual only and multisensory speech perception in response to naturalistic speech stimuli relative to children with TD histories. Children with ASD demonstrated a small, but statistically significant deficit in processing of matched audiovisual speech even under controlled listening conditions. Additionally, the ASD group showed a substantial deficit in the identification of visual only stimuli even with a non-verbal response mode, confirming prior work showing impairments in visual speech perception in ASD (Iarocci et al. 2010; Irwin et al. 2011; Smith and Bennetto 2007; Williams et al. 2004). These results for visual speech stimuli stand in contrast to evidence suggesting spared (or even enhanced) visual skills for lower-level, non-social stimuli in individuals with ASD (Bertone et al. 2005; Kwakye et al. 2011; Simmons et al. 2009). The findings are, however, consistent with evidence of specific deficits in visual perception of more complex social stimuli, including faces and biological motion, in ASD (e.g., Blake et al. 2003; Dawson et al. 2005).

Auditory only speech perception was comparable for children with ASD and controls in the current study. This is somewhat surprising as prior work has noted differences in detection, processing, and preference of auditory speech stimuli in ASD (Kuhl et al. 2005; Gervais et al. 2004; Klin 1991). However, this outcome is compatible with results from several similar studies of speech perception in older children with ASD that have evaluated perception of naturally spoken stimuli that are brief in duration and bereft of semantic content (de Gelder et al. 1991; Iarocci et al. 2010). Findings regarding auditory perception in ASD appear to vary according to stimulus complexity, task demands, and the specific perceptual feature of interest (e.g., pitch versus duration) (Kellerman et al. 2005; Samson et al. 2006). Although investigations involving higher-level sociolinguistic stimuli (likely confounded by larger language and social concerns) tend to report perceptual impairments in children with ASD, no consistent pattern has emerged for less linguistically-laden auditory stimuli, such as the syllables employed in this study (Kellerman et al. 2005). Nevertheless, the present findings seem to suggest that the auditory system may be a relative strength in multisensory speech perception among high-functioning children and adolescents with ASD. This result stresses the need for future studies to consider speech perception in a broader multisensory framework, rather than focusing solely on auditory processing and perception in children with ASD.

Evidence of Extended Temporal Binding of Multisensory Speech Signals

This study found that the temporal offset between auditory and visual speech cues differentially affected performance in children with ASD and TD controls. An extended multisensory temporal binding window has been previously observed for a partially overlapping participant pool of children with ASD on multisensory psychophysical tasks involving simple audiovisual stimuli, such as flashes and beeps (flash-beep task as reported by Foss-Feig et al. 2010; multisensory temporal order judgment task as reported by Kwakye et al. 2011). The current results suggest that the expanded time window within which auditory and visual stimuli interact in individuals with autism extends to the integration of more complex speech stimuli as well. Taken together, this set of results indicates that at least a subset of cognitively-able children with ASD tends to bind both simple and complex audiovisual information over a wider window of time than their typically developing peers—a finding likely to have important implications for the construction and maintenance of language representations.

Inconsistency in Responses to Mismatched “McGurk” Stimuli Across Studies

Our finding of a similar magnitude of multisensory synthesis across ASD and TD groups, as measured by the mean percentage of fusions reported in the simultaneous (i.e., 0 ms SOA) condition, is inconsistent with prior studies that reported reduced susceptibility to the McGurk effect in children with ASD (de Gelder et al. 1991; Irwin et al. 2011; Mongillo et al. 2008; Williams et al. 2004a). However, it is largely consistent with a more recent investigation that found no difference between ASD and TD groups in the reports of fusion for mismatched multisensory trials (Iarocci et al. 2010). Reports of fusion in response to the McGurk task are highly variable even within the TD literature (Gentilucci and Cattaneo 2005; Macdonald and McGurk 1978; McGurk and Macdonald 1976; Nath and Beauchamp 2012). Though much of the individual variability has yet to be explained, the degree of fusion appears to be influenced by stimulus parameters (e.g., speaker identity, presence of noise, level of presentation), task instructions (e.g., report what you heard versus report what was said), and participant characteristics (e.g., age) (Gentilucci and Cattaneo 2005; Macdonald and McGurk 1978; McGurk and Macdonald 1976; Nath and Beauchamp 2012).

Given our preliminary evidence of variation in McGurk-related fusion within the ASD group as a function of sensory symptoms as reported on a standard clinical test, it is possible that the tendency to perceive the illusion may vary systematically according to additional factors associated with autism that have not yet been identified. As such, our failure to find a significant group difference in the degree of fusion reported in response to the synchronous mismatched stimuli may be due in part to our sample: on average, the children with ASD in this study may show less aberrant responses to sensory stimuli (specifically the types of stimuli indexed by the Auditory Processing Section and Inattention factor of the SP) than the samples of studies yielding different results. Characterizing the sensory profile of participant samples will facilitate future comparisons across studies.

Associations Between Speech Perception, Symptom Severity, and Sensory Profiles

Exploratory analyses revealed relationships between multisensory speech perception, symptom severity, and sensory abnormalities in participants with ASD. Prior work has linked abnormal responses to auditory speech with linguistic and social impairments in young children with ASD (Kuhl et al. 2005). Another recent study observed an association between the degree of fusion reported in response to mismatched syllables and social symptom severity in older children with ASD (Mongillo et al. 2008). Our results extend this work by demonstrating a correlation between multisensory speech perception for matched audiovisual syllables and communication-related symptoms in older, high-functioning children with ASD. Future studies should seek to further elucidate the relations between speech perception and broader communication, language, and literacy skills in ASD across the lifespan.

The significant correlation between participants’ probability of reporting fusion in perceiving mismatched audiovisual speech stimuli and caregiver ratings of their sensory processing symptoms further suggests that multisensory integration may be linked with the unusual reactions children with ASD sometimes exhibit in response to sound and other stimuli in everyday settings. Based on our findings, it appears that children with ASD who are less likely to integrate discrete pieces of complex, socially-relevant information (i.e., auditory and visual speech) into a unified percept are more likely than their peers to respond atypically to environmental sounds and distractions. Replications conducted with a priori hypotheses are necessary to confirm these correlations and to investigate possible associations between sensory profiles and responses to lower-level multisensory stimuli in ASD. Furthermore, as sensory problems are particularly prevalent and persistent in ASD (Billstedt et al. 2007), these relationships warrant investigation across samples that represent a wider range of ages and functioning levels.

Limitations and Future Directions

There are several notable limitations to the present study. First, the participant pool was restricted to a subset of older, cognitively-able children with ASD. Though this was necessary to ensure valid and reliable results with a psychophysical paradigm that demanded comprehension of an instruction set, as well as attention and active responding over an extended period of time, it does limit the generalization of results to the broader population of individuals with ASD. At the same time, it is important to note that a restricted cognitive functioning range does not necessarily translate to a restricted range of ASD symptoms, as intellectual ability is not actually included in ASD criteria. Exclusion of individuals with co-morbid cognitive impairments did not appear to result in an extremely truncated range of ASD symptom severity in the present study (ADOS Module 3 total scores ranged from 8 to 20, and SCQ scores ranged from 8 to 32). Nonetheless, additional information is needed regarding multisensory speech perception in children with ASD across a wider range of ages, symptom severities, and cognitive skill levels.

A second limitation involves the stimuli presented. Though the readily-available and heavily-researched McGurk model was an ideal method for measuring multisensory integration and its temporal constraints, incongruent speech stimuli are rarely encountered in more naturalistic settings. As such, these stimuli are admittedly of limited ecological validity. Even the more ecologically valid matched audiovisual syllables remain weak in ethological salience, as they were presented in isolation and lacked meaning. However, use of short, meaningless syllables allowed us to avoid confounds related to stimulus duration and semantics that were anticipated in ASD. Future endeavors should explore perceptual abilities across a broader range of speech stimuli.

Additionally, the closed choice set could be considered a limitation. Though children with autism always had the option of selecting “other”, the multiple-choice format could arguably produce a task that taps discrimination rather than identification skill. We believed that this format was necessary to avoid potential confounds related to verbal ability and motor imitation deficits in children with autism. Children’s selection of the “other” option suggests that they did not default to discrimination between the four CV syllable options explicitly provided. However, future studies may explore other methods to account for confounds encountered in ASD research.

Finally, the present study did not monitor participants’ gaze during the experimental task. Some previous investigations have shown that the location of gaze fixation (within the speaker’s face) does not predict visual accuracy (Lansing and McConkie 2003) or the McGurk effect (Pare et al. 2003) in TD individuals. However, it is unclear whether such findings generalize to individuals with ASD. Furthermore, we cannot ensure that participants’ gaze was within the speaker’s face during stimulus presentation. Several past studies have found reduced attention to faces in children with ASD (e.g., Hutt and Ounsted 1966; Osterling and Dawson 1994; Volkmar and Mayes 1990), and one recent report specifically observed that children with ASD were less likely to fixate within the speaker’s face during audiovisual speech perception tasks (Irwin et al. 2011). We cannot rule out the possibility that gaze to the speaker’s face differed between children with ASD and TD controls, or that such a difference in gaze impacted the results. Thus, it is important for future studies of audiovisual speech perception in ASD to incorporate gaze tracking technology.

Further work is also necessary to determine how clinicians can support optimal speech perception in ASD. For example, limiting environmental noise or providing amplification devices such as FM systems to improve signal-to-noise ratios may provide a means of compensation for poor multisensory speech perception in the classroom and community. Alternatively, interventions may ultimately facilitate normalization of speech perception. Previous studies utilizing computer-based training protocols have demonstrated that multisensory integration in general and speech perception in particular may be malleable, with meaningful improvements following focused training (Massaro and Bosseler 2003; Powers et al. 2009, 2012; Williams et al. 2004a). A recent study in young children with language impairments has additionally demonstrated that more conventional speech-language interventions may impact speech processing skills (Yoder et al. in press). Systematic investigation is necessary to determine which type of intervention will produce the most favorable results for children with ASD.

Conclusion and Implications

In conclusion, this study found that children with ASD show reduced multisensory speech perception for matched audiovisual stimuli, even under controlled listening conditions with a closed choice set. Specific deficits in visual skill may lead to a reliance on auditory information in speech perception for at least a subset of this population. Furthermore, in response to mismatched syllables, cognitively-able children and adolescents with ASD display an atypical pattern of multisensory integration in comparison to TD controls, characterized by a wider window of temporal binding. Finally, preliminary links between multisensory speech perception and communication and sensory processing symptoms suggest that perception of speech cues may be centrally related to core deficits associated with ASD. We hope that these findings will generate further inquiry into: (a) why children with ASD display atypical patterns of speech perception and multisensory integration; (b) how speech perception and temporal binding relate to traits associated with ASD; and (c) how clinicians may best target multisensory perception to improve outcomes of individuals with ASD.

Contributor Information

Tiffany G. Woynaroski, Email: tiffany.g.woynaroski@vanderbilt.edu, Department of Hearing and Speech Sciences, Vanderbilt University, 1211 Medical Center Drive, Nashville, TN 37232, USA

Leslie D. Kwakye, Email: lkwakye@oberlin.edu, Neuroscience Department, Oberlin College, 119 Woodland St, Oberlin, OH 44074, USA

Jennifer H. Foss-Feig, Department of Psychology and Human Development, Vanderbilt University, Nashville, TN, USA

Ryan A. Stevenson, Department of Hearing and Speech Sciences, Psychology and Psychiatry, Vanderbilt Kennedy Center, Vanderbilt Brain Institute, Vanderbilt University, Nashville, TN, USA

Wendy L. Stone, Department of Psychology and UW Autism Center, University of Washington, Seattle, WA, USA

Mark T. Wallace, Department of Hearing and Speech Sciences, Psychology and Psychiatry, Vanderbilt Kennedy Center, Vanderbilt Brain Institute, Vanderbilt University, Nashville, TN, USA

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders-IV-TR. Washington, DC: APA; 2000.

- American Psychiatric Association. DSM-5 development: autism spectrum disorder. 2012 Retrieved August 8, 2012, from http://www.dsm5.org/ProposedRevisions/Pages/proposedrevision.aspx?rid=94.

- Bebko JM, Weiss JA, Demark JL, Gomez P. Discrimination of temporal synchrony in intermodal events by children with autism and children with developmental disabilities without autism. Journal of Child Psychology and Psychiatry. 2006;47(1):88–98. doi: 10.1111/j.1469-7610.2005.01443.x.

- Bertone A, Mottron L, Jelenic P, Faubert J. Enhanced and diminished visuo-spatial information processing in autism depends on stimulus complexity. Brain. 2005;128(10):2430–2441. doi: 10.1093/brain/awh561.

- Billstedt E, Carina Gillberg I, Gillberg C. Autism in adults symptom patterns and early childhood predictors. Use of the DISCO in a community sample followed from childhood. Journal of Child Psychology and Psychiatry. 2007;48(11):1102–1110. doi: 10.1111/j.1469-7610.2007.01774.x.

- Blake R, Turner LM, Smoski MJ, Pozdol SL, Stone WL. Visual recognition of biological motion is impaired in children with autism. Psychological Science. 2003;14(2):151–157. doi: 10.1111/1467-9280.01434.

- Brock J, Brown C, Boucher J, Rippon G. The temporal binding deficit hypothesis of autism. Development and Psychopathology. 2002;14(2):209–224. doi: 10.1017/S0954579402002018.

- Calvert GA, Thesen T. Multisensory integration: methodological approaches and emerging principles in the human brain. Journal of Physiology Paris. 2004;98:191–205. doi: 10.1016/j.jphysparis.2004.03.018.

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27(3):403–424. doi: 10.1207/s15326942dn2703_6.

- de Gelder B, Vroomen J, Van der Heide L. Face recognition and lip-reading in autism. European Journal of Cognitive Psychology. 1991;3(1):69–86.

- Dunn W. Sensory profile. San Antonio, TX: Psychological Corporation; 1999.

- Foss-Feig JH, Kwakye LD, Cascio CJ, Burnette CP, Kadivar H, Stone WL, et al. An extended multisensory temporal binding window in autism spectrum disorders. Experimental Brain Research. 2010;203(2):381–389. doi: 10.1007/s00221-010-2240-4.

- Frith U, Happé F. Autism: beyond “theory of mind”. Cognition. 1994;50(1–3):115–132. doi: 10.1016/0010-0277(94)90024-8.

- Gentilucci M, Cattaneo L. Automatic audiovisual integration in speech perception. Experimental Brain Research. 2005;167(1):66–75. doi: 10.1007/s00221-005-0008-z.

- Gervais H, Belin P, Boddaert N, Leboyer M, Coez A, Sfaello I, et al. Abnormal cortical voice processing in autism. Nature Neuroscience. 2004;7(8):801–802. doi: 10.1038/nn1291.

- Grossman RB, Schneps MH, Tager-Flusberg H. Slipped lips: onset asynchrony detection of auditory-visual language in autism. Journal of Child Psychology and Psychiatry. 2009;50(4):491–497. doi: 10.1111/j.1469-7610.2008.02002.x.

- Happe F. The weak central coherence account of autism. In: Volkmar FR, Paul R, Klin A, Cohen D, editors. Handbook of autism and pervasive developmental disorders, Vol 1: Diagnosis, development, neurobiology, and behavior. 3. New York: John Wiley & Sons; 2005. pp. 640–649.

- Happé F, Frith U. The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. Journal of Autism and Developmental Disorders. 2006;36(1):5–25. doi: 10.1007/s10803-005-0039-0.

- Hutt C, Ounsted C. The biological significance of gaze aversion with particular reference to the syndrome of infantile autism. Behavioral Science. 1966;11(5):346–356. doi: 10.1002/bs.3830110504.

- Iarocci G, McDonald J. Sensory integration and the perceptual experience of persons with autism. Journal of Autism and Developmental Disorders. 2006;36(1):77–90. doi: 10.1007/s10803-005-0044-3.

- Iarocci G, Rombough A, Yager J, Weeks DJ, Chua R. Visual influences on speech perception in children with autism. Autism. 2010;14(4):305–320. doi: 10.1177/1362361309353615.

- Irwin JR, Tornatore LA, Brancazio L, Whalen DH. Can children with autism spectrum disorders “hear” a speaking face? Child Development. 2011;82(5):1397–1403. doi: 10.1111/j.1467-8624.2011.01619.x.

- Kellerman GR, Fan J, Gorman JM. Auditory abnormalities in autism: Toward functional distinctions among findings. CNS Spectrums. 2005;10(9):748–756. doi: 10.1017/s1092852900019738.

- Klin A. Young autistic children’s listening preferences in regard to speech: A possible characterization of the symptom of social withdrawal. Journal of Autism and Developmental Disorders. 1991;21(1):29–42. doi: 10.1007/BF02206995.

- Kuhl P, Coffey-Corina S, Padden D, Dawson G. Links between social and linguistic processing of speech in preschool children with autism: Behavioral and electrophysiological measures. Developmental Science. 2005;8(1):F1–F12. doi: 10.1111/j.1467-7687.2004.00384.x.

- Kwakye LD, Foss-Feig JH, Cascio CJ, Stone WL, Wallace MT. Altered auditory and multisensory temporal processing in autism spectrum disorders. Frontiers in Integrative Neuroscience. 2011;4:1–11. doi: 10.3389/fnint.2010.00129.

- Lansing CR, McConkie GW. Word identification and eye fixation locations in visual and visual-plus-auditory presentations of spoken sentences. Attention, Perception, and Psychophysics. 2003;65(4):536–552. doi: 10.3758/bf03194581.

- Lord C, Risi S, Lambrecht L, Cook EH, Jr, Leventhal BL, DiLavore PC, et al. The autism diagnostic observation schedule-generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30(3):205–223.

- Lord C, Rutter M, Le Couteur A. Autism diagnostic interview-revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders. 1994;24(5):659–685. doi: 10.1007/BF02172145.

- Macdonald J, McGurk H. Visual influences on speech perception processes. Attention, Perception, & Psychophysics. 1978;24(3):253–257. doi: 10.3758/bf03206096.

- Magnée M, De Gelder B, Van Engeland H, Kemner C. Audiovisual speech integration in pervasive developmental disorder: Evidence from event-related potentials. Journal of Child Psychology and Psychiatry. 2008;49(9):995–1000. doi: 10.1111/j.1469-7610.2008.01902.x.

- Massaro D. Perceiving talking faces: From speech perception to a behavioral principle. Cambridge, Massachusetts: MIT Press; 1998.

- Massaro D, Bosseler A. Perceiving speech by ear and eye: Multimodal integration by children with autism. Journal of Developmental and Learning Disorders. 2003;7:111–144.

- McGurk H, Macdonald J. Hearing lips and seeing voices. Nature. 1976;264(5588):746–748. doi: 10.1038/264746a0.

- Mongillo E, Irwin J, Whalen D, Klaiman C, Carter A, Schultz R. Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38(7):1349–1358. doi: 10.1007/s10803-007-0521-y.

- Nath AR, Beauchamp MS. A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. Neuroimage. 2012;59(1):781–787. doi: 10.1016/j.neuroimage.2011.07.024.

- Osterling J, Dawson G. Early recognition of children with autism: A study of first birthday home videotapes. Journal of Autism and Developmental Disorders. 1994;24(3):247–257. doi: 10.1007/bf02172225.

- Pare M, Richler RC, ten Hove M, Munhall KG. Gaze behavior in audiovisual speech perception: The influence of ocular fixations on the McGurk effect. Attention, Perception, & Psychophysics. 2003;65(4):553–567. doi: 10.3758/bf03194582.

- Powers AR, III, Hevey MA, Wallace MT. Neural correlates of multisensory perceptual learning. Journal of Neuroscience. 2012;32(18):6263–6274. doi: 10.1523/JNEUROSCI.6138-11.2012.

- Powers AR, III, Hillock AR, Wallace MT. Perceptual training narrows the temporal window of multisensory binding. The Journal of Neuroscience. 2009;29(39):12265–12274. doi: 10.1523/JNEUROSCI.3501-09.2009.

- Rogers SJ, Hepburn SL, Stackhouse T, Wehner E. Imitation performance in toddlers with autism and those with other developmental disorders. Journal of Child Psychology and Psychiatry. 2003;44(5):763–781. doi: 10.1111/1469-7610.00162.

- Rutter M, Bailey A, Lord C. Social communication questionnaire. Los Angeles, CA: Western Psychological Services; 2003.

- Samson F, Mottron L, Jemel B, Belin P, Ciocca V. Can spectro-temporal complexity explain the autistic pattern of performance on auditory tasks? Journal of Autism and Developmental Disorders. 2006;36(1):65–76. doi: 10.1007/s10803-005-0043-4.

- Simmons DR, Robertson AE, McKay LS, Toal E, McAleer P, Pollick FE. Vision in autism spectrum disorders. Vision Research. 2009;49(22):2705–2739. doi: 10.1016/j.visres.2009.08.005.

- Smith EG, Bennetto L. Audiovisual speech integration and lipreading in autism. Journal of Child Psychology and Psychiatry. 2007;48(8):813–821. doi: 10.1111/j.1469-7610.2007.01766.x.

- Stone WL, Ousley OY, Littleford CD. Motor imitation in young children with autism: What’s the object? Journal of Abnormal Child Psychology. 1997;25(6):475–485. doi: 10.1023/a:1022685731726.

- Summerfield Q. Some preliminaries to a comprehensive account of audio-visual speech perception. In: Dodd B, Campbell R, editors. Hearing by eye: The psychology of lip-reading. Hillsdale, NJ, England: Lawrence Erlbaum Associates, Inc; 1987. pp. 3–51.

- Tager-Flusberg H, Paul R, Lord C. Language and communication in autism. In: Volkmar FR, Paul R, Klin A, Cohen D, editors. Handbook of autism and pervasive developmental disorders, Vol. 1: Diagnosis, development, neurobiology, and behavior. 3. John Wiley & Sons Inc; Hoboken, NJ, US: 2005. pp. 335–364.

- Volkmar FR, Mayes LC. Gaze behavior in autism. Development and Psychopathology. 1990;2(01):61–69. doi: 10.1017/S0954579400000596.

- Wechsler D. Wechsler abbreviated scale of intelligence. San Antonio, TX: Psychological Corporation; 1999.

- Williams J, Massaro DW, Peel NJ, Bosseler A, Suddendorf T. Visual-auditory integration during speech imitation in autism. Research in Developmental Disabilities. 2004a;25(6):559–575. doi: 10.1016/j.ridd.2004.01.008.

- Williams J, Whiten A, Singh T. A systematic review of action imitation in autistic spectrum disorder. Journal of Autism and Developmental Disorders. 2004b;34(3):285–299. doi: 10.1023/B:JADD.0000029551.56735.3a.

- Stevenson R, Wallace M. Multisensory temporal integration: task and stimulus dependencies. Experimental Brain Research. doi: 10.1007/s00221-013-3507-3. (in progress)

- Yoder P, Molfese D, Murray M, Key A. Normative topographic ERP analyses of speed of speech processing and grammar before and after grammatical treatment. Journal of Developmental Neuropsychology. doi: 10.1080/87565641.2011.637589. (in press)