Abstract

This paper considers the fully complex backpropagation algorithm (FCBPA) for training fully complex-valued neural networks. We prove both the weak convergence and the strong convergence of FCBPA under mild conditions. The monotonic decrease of the error function during the training process is also established. The derivation and analysis of the algorithm are carried out in the framework of Wirtinger calculus, which greatly reduces the complexity of the description. The theoretical results are substantiated by a simulation example.

Keywords: Complex-valued neural networks, Fully complex backpropagation algorithm, Wirtinger calculus, Convergence

Introduction

The theoretical study and practical implementation of complex-valued neural networks (CVNNs) have attracted considerable attention in signal processing, pattern recognition, and medical information processing (Fink et al. 2014; Hirose 2012; Nitta 2013). Based on different choices of the activation function, there are two main CVNN models: the split CVNN (Nitta 1997) and the fully CVNN (Kim and Adali 2003). The split CVNN uses a pair of real-valued functions to separately process the real part and the imaginary part of the neuron's input signal. This strategy can effectively overcome the singularity problem during the training procedure. In contrast, the activation functions of the fully CVNN are fully complex-valued, which helps the network make full use of the phase information and thus achieve better performance in some applications. As one of the most popular training methods for neural networks, the backpropagation algorithm (BPA) has been extended from the real domain to the complex domain in order to train CVNNs. Accordingly, there are two types of complex BPA: the split-complex BPA (SCBPA) (Nitta 1997) for the split CVNN, and the fully complex BPA (FCBPA) (Li and Adali 2008) for the fully CVNN.

Convergence is a precondition for any iterative algorithm to be used in real applications (Wei et al. 2013; Osborn 2010). The convergence of the BPA has been extensively studied in the literature (Wu et al. 2005, 2011; Zhang et al. 2007, 2008, 2009; Shao and Zheng 2011), where the boundedness and differentiability of the activation function are usually two necessary conditions for the convergence analysis. However, by Liouville's theorem (an entire and bounded function in the complex domain is a constant), a nonconstant complex activation function cannot be both bounded and analytic on the whole complex plane. This conflict between the boundedness and differentiability of the activation function makes the theoretical convergence analysis for the complex BPA more difficult than that for the real BPA. Fortunately, as the activation functions of the split CVNN can be split into two bounded and differentiable real-valued functions, the convergence analysis of SCBPA can be conducted in the real domain. For the corresponding convergence results, we refer to (Nitta 1997; Zhang et al. 2013; Xu et al. 2010). However, although FCBPA has been widely used in many applications and has been experimentally shown to be convergent for some kinds of activation functions (Kim and Adali 2003), its theoretical convergence analysis remains challenging.

Besides the challenge posed by Liouville's theorem, another challenge for the theoretical convergence analysis of FCBPA is that the traditional mean value theorem, which is vital for the convergence analysis of BPA, does not hold in the complex domain. (For example, take $f(z) = e^z$, $z_1 = 0$, and $z_2 = 2\pi i$. We have $f(z_2) - f(z_1) = 0$ but $f'(w)(z_2 - z_1) = 2\pi i\, e^{w} \neq 0$ for all $w$.) By expanding the analytic function in a Taylor series and omitting the high order terms, some local stability results for complex ICA have been obtained (Adali et al. 2008). Under the assumption that the activation function is a contraction, the convergence of complex nonlinear adaptive filters has been proved (Mandic and Goh 2009). However, to the best of our knowledge, theoretical convergence results for the FCBPA have not yet been established. This is the main concern of this paper. Specifically, we make the following contributions:

By introducing a mean value theorem for a holomorphic function (Mcleod 1965), we will prove both the weak convergence and the strong convergence of FCBPA.

Instead of dropping the high order terms of the Taylor series (Adali et al. 2008), we give an accurate estimation for the difference of the error function between two iterations using the mean value theorem. As a result, our results are of global nature in that they are valid for arbitrarily given initial values of the weights.

The restrictive condition that the activation function is a contraction is not needed in our analysis.

The derivation and analysis of the algorithm are under the framework of Wirtinger calculus, which greatly reduces the description complexity.
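The failure of the classical mean value theorem in the complex domain, mentioned before the list of contributions, can be checked numerically. The sketch below uses the standard counterexample $f(z) = e^z$ with $z_1 = 0$ and $z_2 = 2\pi i$; this choice of function and points, and the helper name `mvt_residual`, are assumptions made for illustration only.

```python
import cmath

def f(z):
    """Illustrative entire function: f(z) = exp(z)."""
    return cmath.exp(z)

def f_prime(z):
    """Derivative of exp is exp itself."""
    return cmath.exp(z)

z1 = 0j
z2 = 2j * cmath.pi          # exp has period 2*pi*i, so f(z2) == f(z1) up to rounding

difference = f(z2) - f(z1)  # essentially zero

def mvt_residual(w):
    """|f(z2) - f(z1) - f'(w) (z2 - z1)|.

    A classical mean value theorem would require this to vanish for some w.
    """
    return abs(difference - f_prime(w) * (z2 - z1))

# Sample candidate points w on the segment joining z1 and z2.
samples = [z1 + (t / 10) * (z2 - z1) for t in range(11)]
residuals = [mvt_residual(w) for w in samples]

print("f(z2) - f(z1) =", difference)
print("smallest residual on the segment =", min(residuals))
```

Since $|e^{w}| = 1$ everywhere on this segment, the residual stays close to $2\pi$ at every sampled point, so no point of the segment can play the role of the intermediate point.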

The remainder of this paper is organized as follows. The network structure and the derivation of the FCBPA based on Wirtinger calculus are described in the next section. The “Main results” section presents the main convergence theorem of the paper. The detailed proof of the theorem is given in the “Proofs” section. In the “Simulation result” section we use a simulation example to support our theoretical results. The paper is concluded in the “Conclusion” section.

Network structure and FCBPA based on Wirtinger calculus

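We consider a single hidden layer feedforward network consisting of p input nodes, q hidden nodes, and 1 output node. Let $w_0 = (w_{01}, w_{02}, \ldots, w_{0q})^T \in \mathbb{C}^q$ be the weight vector between all the hidden units and the output unit, and $w_l = (w_{l1}, w_{l2}, \ldots, w_{lp})^T \in \mathbb{C}^p$ be the weight vector between all the input units and the hidden unit l ($l = 1, 2, \ldots, q$). To simplify the presentation, we write all the weight parameters in a compact form, i.e., $w = (w_0^T, w_1^T, \ldots, w_q^T)^T \in \mathbb{C}^{q + pq}$, and we define a matrix $V = (w_1, w_2, \ldots, w_q)^T \in \mathbb{C}^{q \times p}$.

Given activation functions $f, g: \mathbb{C} \to \mathbb{C}$ for the hidden layer and output layer, respectively, we define a vector function $F(u) = (f(u_1), f(u_2), \ldots, f(u_q))^T$ for $u = (u_1, u_2, \ldots, u_q)^T \in \mathbb{C}^q$. For an input $x \in \mathbb{C}^p$, the output vector of the hidden layer can be written as $F(Vx)$ and the final output of the network can be written as

$$y = g(w_0 \cdot F(Vx)), \tag{1}$$

where $w_0 \cdot F(Vx) = \sum_{l=1}^{q} w_{0l} f(w_l \cdot x)$ represents the inner product between the two vectors $w_0$ and $F(Vx)$.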

Suppose that $\{x^k, d^k\}_{k=1}^{K}$ is a given set of training samples, where $x^k \in \mathbb{C}^p$ is the input, and $d^k \in \mathbb{C}$ is the desired output. The aim of the network training is to find the appropriate network weights that minimize the error function

| 2 |

where

| 3 |

and the overbar denotes complex conjugation.

As noted by Adali et al. (2008), any function h(z) that is analytic in a bounded region |z| < R with a Taylor series expansion with all real coefficients in |z| < R satisfies the property $\overline{h(z)} = h(\bar z)$. Examples of such functions include polynomials with real coefficients and most trigonometric functions and their hyperbolic counterparts, which are qualified activation functions of CVNNs (Kim and Adali 2003). As a result, we suppose that both activation functions f and g satisfy $\overline{f(z)} = f(\bar z)$ and $\overline{g(z)} = g(\bar z)$. Therefore,

| 4 |
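The conjugate-symmetry property of functions with real Taylor coefficients can be checked numerically. The following is a minimal sketch, assuming tanh, sin, and sinh as representative activation functions and using $h(z) = iz$ (a non-real coefficient) as a counterexample; the helper name `conjugate_symmetry_gap` is hypothetical.

```python
import numpy as np

def conjugate_symmetry_gap(h, z):
    """Largest value of |conj(h(z)) - h(conj(z))| over the sample points z."""
    return float(np.max(np.abs(np.conj(h(z)) - h(np.conj(z)))))

rng = np.random.default_rng(0)
# Sample points in the square [-1, 1] x [-1, 1], away from the poles of tanh.
z = rng.uniform(-1, 1, 200) + 1j * rng.uniform(-1, 1, 200)

# Functions whose Taylor series at 0 have only real coefficients.
gaps = {
    "tanh": conjugate_symmetry_gap(np.tanh, z),
    "sin": conjugate_symmetry_gap(np.sin, z),
    "sinh": conjugate_symmetry_gap(np.sinh, z),
}

# A function with a non-real Taylor coefficient breaks the property.
gap_counterexample = conjugate_symmetry_gap(lambda t: 1j * t, z)

for name, gap in gaps.items():
    print(f"{name}: max gap = {gap:.2e}")
print(f"h(z) = i z: max gap = {gap_counterexample:.2e}")
```

The gaps for the three real-coefficient functions are at rounding level, whereas for $h(z) = iz$ the gap equals $2|z|$ and is clearly nonzero.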

E(w) can be viewed as a function of the complex variable vector $w$ and its conjugate $\bar w$. According to the Wirtinger calculus (Brandwood 1983; Bos 1994), we can define two gradient vectors: $\nabla_w E$ (obtained by taking partial derivatives with respect to $w$ while treating $\bar w$ as a constant vector in E) and $\nabla_{\bar w} E$ (obtained by taking partial derivatives with respect to $\bar w$ while treating $w$ as a constant vector). Then the gradient $\nabla_{\bar w} E$ defines the direction of the maximum rate of change in E(w) with respect to $w$. As the output $y^k$ does not explicitly contain the variable vector $\bar w$, we can conclude that $\nabla_{\bar w}\, y^k = 0$. Thus, by the chain rule of the Wirtinger calculus, we have

| 5 |

| 6 |

Obviously,

| 7 |
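To make the two Wirtinger derivatives concrete, the sketch below approximates them by central differences for a scalar variable, using the standard definitions $\partial/\partial z = \tfrac12(\partial/\partial x - i\,\partial/\partial y)$ and $\partial/\partial \bar z = \tfrac12(\partial/\partial x + i\,\partial/\partial y)$. The test function $E(z) = |z - c|^2$, the constant `c`, and the step size are assumptions chosen for illustration.

```python
def wirtinger_derivatives(E, z, h=1e-6):
    """Central-difference estimates of dE/dz and dE/d(conj z) for real-valued E."""
    dE_dx = (E(z + h) - E(z - h)) / (2 * h)            # derivative along the real axis
    dE_dy = (E(z + 1j * h) - E(z - 1j * h)) / (2 * h)  # derivative along the imaginary axis
    d_dz = 0.5 * (dE_dx - 1j * dE_dy)
    d_dzbar = 0.5 * (dE_dx + 1j * dE_dy)
    return d_dz, d_dzbar

c = 1 + 2j  # hypothetical target value

def E(z):
    """Real-valued error E(z) = (z - c) * conj(z - c)."""
    return abs(z - c) ** 2

z0 = 0.5 - 0.5j
d_dz, d_dzbar = wirtinger_derivatives(E, z0)

# Treating z as a constant, dE/d(conj z) = z - c; the other derivative is its conjugate.
print("numerical dE/dzbar:", d_dzbar, " analytic:", z0 - c)

# One descent step along -dE/d(conj z) lowers the error.
z_next = z0 - 0.1 * d_dzbar
print("E before:", E(z0), " E after:", E(z_next))
```

For a real-valued error the two derivatives are complex conjugates of each other, and a step against the conjugate derivative is a step of ordinary steepest descent in the real and imaginary parts.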

Starting from an arbitrary initial value w0, the BPA based on Wirtinger calculus updates the weights {wn} iteratively by

$$w^{n+1} = w^{n} - \eta\, \nabla_{\bar w} E(w^{n}), \quad n = 0, 1, 2, \ldots, \tag{8}$$

where $\eta > 0$ is the learning rate.
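The training loop described in this section can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: it assumes the error $E = \tfrac12 \sum_k |d^k - y^k|^2$, tanh for both activation functions, an unconjugated inner product, and the update $w \leftarrow w - \eta \nabla_{\bar w} E$. All function names and the toy data generator are hypothetical.

```python
import numpy as np

def dtanh(z):
    """Complex derivative of tanh."""
    return 1.0 - np.tanh(z) ** 2

def forward(w0, V, x):
    """Return hidden input u, hidden output h, output input s, and output y."""
    u = V @ x              # q hidden-layer inputs
    h = np.tanh(u)         # hidden-layer outputs F(Vx)
    s = w0 @ h             # unconjugated inner product w0 . F(Vx)
    return u, h, s, np.tanh(s)

def error(w0, V, X, d):
    """Assumed error: half the sum of squared moduli of the output errors."""
    return sum(0.5 * abs(dk - forward(w0, V, x)[3]) ** 2 for x, dk in zip(X, d))

def conj_gradient(w0, V, X, d):
    """Gradient of E with respect to the conjugated weights."""
    g_w0 = np.zeros_like(w0)
    g_V = np.zeros_like(V)
    for x, dk in zip(X, d):
        u, h, s, y = forward(w0, V, x)
        e = dk - y
        dy_dw0 = dtanh(s) * h                          # dy/dw0 (y is holomorphic in w)
        dy_dV = dtanh(s) * np.outer(w0 * dtanh(u), x)  # dy/dV
        g_w0 += -0.5 * e * np.conj(dy_dw0)
        g_V += -0.5 * e * np.conj(dy_dV)
    return g_w0, g_V

def train(w0, V, X, d, eta=0.1, steps=20):
    """Batch gradient descent; returns final weights and the error history."""
    errors = [error(w0, V, X, d)]
    for _ in range(steps):
        g_w0, g_V = conj_gradient(w0, V, X, d)
        w0 = w0 - eta * g_w0
        V = V - eta * g_V
        errors.append(error(w0, V, X, d))
    return w0, V, errors

def make_toy_problem(p=2, q=3, K=4, seed=0):
    """Small random complex data set and small random initial weights."""
    rng = np.random.default_rng(seed)
    def crandn(*shape, scale):
        return scale * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape))
    return crandn(q, scale=0.1), crandn(q, p, scale=0.1), crandn(K, p, scale=0.5), crandn(K, scale=0.3)

w0, V, X, d = make_toy_problem()
_, _, errors = train(w0, V, X, d)
print("initial error:", errors[0], " final error:", errors[-1])
```

The conjugate gradient is accumulated sample by sample, and only the conjugate of $\partial y / \partial w$ appears because the output is holomorphic in the weights under the assumptions above.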

Main results

The following assumptions are needed in our convergence analysis.

(A1) There exists a constant $c_1 > 0$ such that $\|w^n\| \le c_1$ for all $n = 0, 1, 2, \ldots$;

(A2) The functions f(z) and g(z) are analytic in a bounded zone |z| < R with a Taylor series expansion with all real coefficients in |z| < R, where R > max{c2,c3} (c2 and c3 are defined in (17) below).

(A3) The set $\{w : \nabla_{\bar w} E(w) = 0\}$ of stationary points of the error function contains only finitely many points.

Remark 1

Assumption (A1) is the usual condition for the convergence analysis of the gradient method for both the real-valued neural networks (Zhang et al. 2007, 2008) and the CVNNs (Xu et al. 2010) in literature. As noted by Adali et al. (2008), Assumption (A2) is satisfied by quite a number of functions which are qualified as activation functions of the fully CVNNs. Assumption (A3) is used to establish a strong convergence result.

Now we present our convergence results.

Theorem 1

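Suppose that the error function is given by (2), that the weight sequence $\{w^n\}$ is generated by the algorithm (8) for any initial value $w^0$, that the learning rate η satisfies condition (27) below (which also defines the constant L), and that Assumptions (A1) and (A2) are valid. Then we have

$$E(w^{n+1}) \le E(w^{n}), \quad n = 0, 1, 2, \ldots, \tag{9}$$

$$\lim_{n\to\infty} E(w^{n}) = E^{*} \ \text{for some constant } E^{*} \ge 0, \tag{10}$$

$$\lim_{n\to\infty} \|\nabla_{\bar w} E(w^{n})\| = 0. \tag{11}$$

Moreover, if Assumption (A3) is valid, then there holds the strong convergence: there exists a point $w^{*}$ such that

$$\lim_{n\to\infty} w^{n} = w^{*}. \tag{12}$$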

Proofs

Lemma 1

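[see Theorem 10 in Mcleod (1965)] Suppose h is a holomorphic function defined on a connected open set G in the complex plane. If $z_1$ and $z_2$ are points in G such that the segment joining them is also in G, then

$$h(z_2) - h(z_1) = (z_2 - z_1)\left(\lambda_1 h'(\xi_1) + \lambda_2 h'(\xi_2)\right) \tag{13}$$

for some $\xi_1$ and $\xi_2$ on the segment joining $z_1$ and $z_2$ and some nonnegative numbers $\lambda_1$ and $\lambda_2$ such that $\lambda_1 + \lambda_2 = 1$.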
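A mean value theorem of this kind writes $h(z_2) - h(z_1)$ as $(z_2 - z_1)$ times a convex combination of derivative values on the segment, which implies the bound $|h(z_2) - h(z_1)| \le \max_{\xi} |h'(\xi)|\,|z_2 - z_1|$ with the maximum taken over the segment. The sketch below checks this consequence numerically for $h = \tanh$; the sampling grid, the region, and the helper name `segment_bound` are assumptions for illustration.

```python
import numpy as np

def dtanh(z):
    """Complex derivative of tanh."""
    return 1.0 - np.tanh(z) ** 2

def segment_bound(h_prime, z1, z2, num=2001):
    """Approximate max |h'| on the segment [z1, z2], times the segment length."""
    t = np.linspace(0.0, 1.0, num)
    segment = z1 + t * (z2 - z1)
    return float(np.max(np.abs(h_prime(segment))) * abs(z2 - z1))

rng = np.random.default_rng(1)
# Random endpoints in the square [-1, 1] x [-1, 1], where tanh is holomorphic.
endpoints = rng.uniform(-1, 1, (100, 4))

checks = []
for a, b, c, d in endpoints:
    z1, z2 = complex(a, b), complex(c, d)
    lhs = abs(np.tanh(z2) - np.tanh(z1))
    # Small relative tolerance absorbs the discretisation of the maximum.
    checks.append(lhs <= segment_bound(dtanh, z1, z2) * (1 + 1e-9))

print("bound holds for all sampled pairs:", all(checks))
```

This Lipschitz-type estimate is the form in which a complex mean value theorem typically replaces the real one when differences of activation values are bounded.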

Lemma 2

Suppose Assumptions (A1) and (A2) are valid. Then the gradient of the error function with respect to the weight vector satisfies a Lipschitz condition, that is, there exists a positive constant $L_1$ such that

| 14 |

Similarly, there exists a positive constant $L_2$ such that

| 15 |

Proof

For simplicity, we introduce the following notations:

| 16 |

for .

By Assumption (A2), f and g have derivatives of any order in the region {z: |z| < R}. In addition, recalling that the set of training samples is finite and {wn} is bounded, we can define c2 and c3 such that

| 17 |

| 18 |

Using (18), Lemma 1, and the Cauchy-Schwarz inequality, for any 1 ≤ k ≤ K and , we have that

| 19 |

where , ξl1 and ξl2 lie on the segment joining and , .

Similarly, we have

| 20 |

By (18), (19), Lemma 1, and the Cauchy-Schwarz inequality, we have that for any 1 ≤ k ≤ K and

| 21 |

where , , and lie on the segment joining and .

In the same way, we can prove that

| 22 |

With (18), (20), (22), and the Cauchy-Schwarz inequality, we obtain

| 23 |

Combining (18), (21), (23), and the Cauchy-Schwarz inequality, we can conclude

| 24 |

where .

Similarly, there exists a Lipschitz constant L4 such that for

| 25 |

Hence, (7), (24), and (25) validate (14) by setting L1 = L3 + qL4.

Equation (15) can be proved in a similar way to (14). □

Now, we proceed to the proof of Theorem 1 by dealing with Equations (9)-(12) separately.

Proof of (9)

By the differential mean value theorem, there exists a constant $\theta \in (0, 1)$ such that

| 26 |

To make (9) valid, we only require the learning rate η to satisfy

| 27 |

where . □

Proof of (10)

Proof of (11)

Let β = 2η − (L1 + L2)θη2. By (26), we have

| 28 |

Considering E(wn+1) > 0, let , then we have

| 29 |

This immediately gives

| 30 |

□
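The step from a descent inequality to a vanishing gradient follows a standard telescoping argument. The LaTeX sketch below spells it out under the assumption that the descent inequality takes the form $E(w^{n+1}) \le E(w^{n}) - \beta \|\nabla_{\bar w} E(w^{n})\|^2$ with $\beta > 0$; this form and the summation index $N$ are assumptions for illustration and may differ in detail from the equations used in the proof.

```latex
% Assumed descent inequality, with \beta = 2\eta - (L_1 + L_2)\theta\eta^2 > 0:
E(w^{n+1}) \le E(w^{n}) - \beta\, \|\nabla_{\bar w} E(w^{n})\|^{2}.

% Summing over n = 0, 1, \ldots, N and using E(w^{N+1}) \ge 0:
\beta \sum_{n=0}^{N} \|\nabla_{\bar w} E(w^{n})\|^{2}
  \le E(w^{0}) - E(w^{N+1}) \le E(w^{0}).

% Letting N \to \infty, the series converges, so its terms tend to zero:
\sum_{n=0}^{\infty} \|\nabla_{\bar w} E(w^{n})\|^{2} \le \frac{E(w^{0})}{\beta} < \infty
\quad\Longrightarrow\quad
\lim_{n\to\infty} \|\nabla_{\bar w} E(w^{n})\| = 0.
```

The argument uses only that the error is bounded below and that each step decreases it by at least a fixed multiple of the squared gradient norm.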

The following lemma, which will be used in the proof of (12), is a generalization of Theorem 14.1.5 by Ortega and Rheinboldt (1970) from the real domain to the complex domain. The proof of this lemma follows the same route as (Ortega and Rheinboldt 1970) and we omit it here.

Lemma 3

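Let $F: \Omega \subset \mathbb{C}^m \to \mathbb{C}^m$ be continuous for a bounded closed region $\Omega$, and $\Omega_0 = \{z \in \Omega : F(z) = 0\}$. Suppose the set $\Omega_0$ contains only finitely many points and the sequence $\{z^n\} \subset \Omega$ satisfies:

(i) $\lim_{n\to\infty} F(z^n) = 0$;

(ii) $\lim_{n\to\infty} \|z^{n+1} - z^{n}\| = 0$.

Then, there exists a unique $z^{*} \in \Omega_0$ such that $\lim_{n\to\infty} z^{n} = z^{*}$.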

Proof of (12)

Obviously, the gradient of the error function is continuous under Assumption (A2). Using (8) and (11), we have

| 31 |

Furthermore, Assumption (A3) is valid. Thus, applying Lemma 3, there exists a unique $w^{*}$ such that $\lim_{n\to\infty} w^{n} = w^{*}$. □

Simulation result

In this section we illustrate the convergence behavior of the FCBPA by the problem of one-step-ahead prediction of the complex-valued nonlinear signals. The nonlinear benchmark input signal is given by (Mandic and Goh 2009)

| 32 |

where n(t) is a complex white Gaussian noise with zero mean and unit variance.

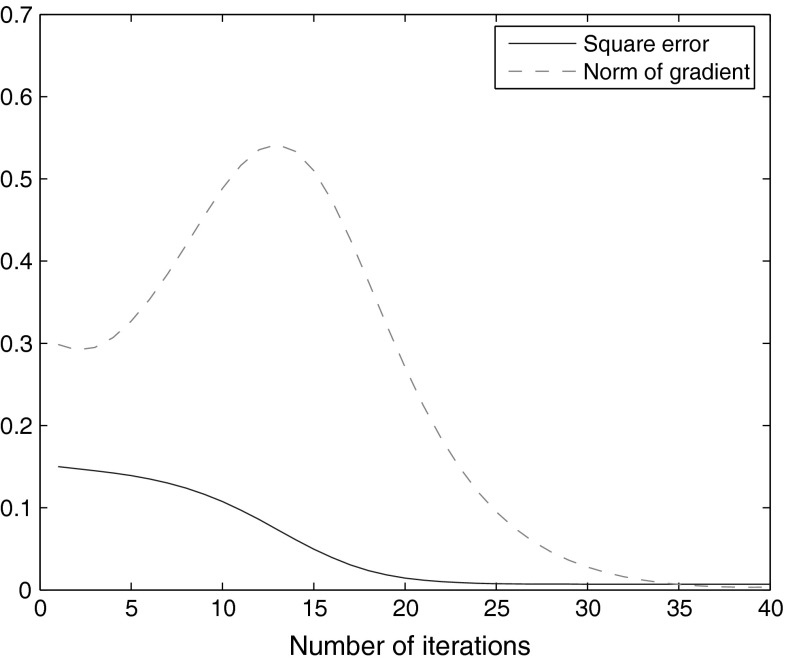

This example uses a network with one input node, five hidden nodes, and one output node. We set the activation function for both the hidden layer and output layer to be , which is analytic in the complex domain. The learning rate η is set to 0.1. The initial weights (both the real part and the imaginary part) are taken as random numbers from the interval [−0.1, 0.1]. The simulation results are presented in Fig. 1, which shows that, as the number of iterations increases, the gradient tends to zero and the squared error decreases monotonically and eventually tends to a constant. This supports our theoretical findings.

Fig. 1. Convergence behavior of FCBPA

Conclusion

In this paper, under the framework of Wirtinger calculus, we investigate the FCBPA for fully CVNN. Using a mean value theorem for holomorphic functions, under mild conditions we prove the gradient of the error function with respect to the network weight vector satisfies the Lipschitz condition. Based on this conclusion, both the weak convergence and strong convergence of the algorithm are proved. Simulation results substantiate the theoretical findings.

Acknowledgments

This research is supported by the National Natural Science Foundation of China (61101228, 10871220), the China Postdoctoral Science Foundation (No. 2012M520623), the Research Fund for the Doctoral Program of Higher Education of China (No. 20122304120028), and the Fundamental Research Funds for the Central Universities.

References

- Adali T, Li H, Novey M, et al. Complex ICA using nonlinear functions. IEEE Trans Signal Process. 2008;56(9):4356–544.

- Bos AVD. Complex gradient and Hessian. Proc Inst Elec Eng Vision Image Signal Process. 1994;141:380–382. doi: 10.1049/ip-vis:19941555.

- Brandwood D. Complex gradient operator and its application in adaptive array theory. Proc Inst Electr Eng. 1983;130:11–16.

- Fink O, Zio E, Weidmann U. Predicting component reliability and level of degradation with complex-valued neural networks. Reliab Eng Syst Safe. 2014;121:198–206. doi: 10.1016/j.ress.2013.08.004.

- Hirose A. Complex-valued neural networks. Berlin Heidelberg: Springer-Verlag; 2012.

- Kim T, Adali T. Approximation by fully complex multilayer perceptrons. Neural Comput. 2003;15:1641–666. doi: 10.1162/089976603321891846.

- Li H, Adali T. Complex-valued adaptive signal processing using nonlinear functions. EURASIP J Adv Signal Process. 2008;2008:122.

- Mandic DP, Goh SL. Complex valued nonlinear adaptive filters. Chichester: Wiley; 2009.

- Mcleod RM. Mean value theorems for vector valued functions. Proc Edinburgh Math Soc. 1965;14(2):197–209. doi: 10.1017/S0013091500008786.

- Nitta T. An extension of the back-propagation algorithm to complex numbers. Neural Netw. 1997;10(8):1391–1415. doi: 10.1016/S0893-6080(97)00036-1.

- Nitta T. Local minima in hierarchical structures of complex-valued neural networks. Neural Netw. 2013;43:1–7. doi: 10.1016/j.neunet.2013.02.002.

- Osborn GW. A Kalman filtering approach to the representation of kinematic quantities by the hippocampal-entorhinal complex. Cogn Neurodyn. 2010;4:C315–C335. doi: 10.1007/s11571-010-9115-z.

- Shao HM, Zheng GF. Boundedness and convergence of online gradient method with penalty and momentum. Neurocomputing. 2011;74:765–770. doi: 10.1016/j.neucom.2010.10.005.

- Wei H, Ren Y, Wang ZY. A computational neural model of orientation detection based on multiple guesses: comparison of geometrical and algebraic models. Cogn Neurodyn. 2013;7:C361–C379. doi: 10.1007/s11571-012-9235-8.

- Wu W, Feng GR, Li ZX, et al. Deterministic convergence of an online gradient method for BP neural networks. IEEE Trans Neural Netw. 2005;16:533–540. doi: 10.1109/TNN.2005.844903.

- Wang J, Wu W, Zurada J. Deterministic convergence of conjugate gradient method for feedforward neural networks. Neurocomputing. 2011;74:2368–2376. doi: 10.1016/j.neucom.2011.03.016.

- Xu DP, Zhang HS, Liu L. Convergence analysis of three classes of split-complex gradient algorithms for complex-valued recurrent neural networks. Neural Comput. 2010;22(10):2655–2677. doi: 10.1162/NECO_a_00021.

- Zhang C, Wu W, Xiong Y. Convergence analysis of batch gradient algorithm for three classes of sigma-pi neural networks. Neural Process Lett. 2007;26:177–180. doi: 10.1007/s11063-007-9050-0.

- Zhang C, Wu W, Chen XH, et al. Convergence of BP algorithm for product unit neural networks with exponential weights. Neurocomputing. 2008;72:513–520. doi: 10.1016/j.neucom.2007.12.004.

- Zhang HS, Wu W, Liu F, Yao MC. Boundedness and convergence of online gradient method with penalty for feedforward neural networks. IEEE Trans Neural Netw. 2009;20(6):1050–1054. doi: 10.1109/TNN.2009.2020848.

- Zhang HS, Xu DP, Zhang Y. Boundedness and convergence of split-complex back-propagation algorithm with momentum and penalty. Neural Process Lett. 2013. doi: 10.1007/s11063-013-9305-x.

- Ortega JM, Rheinboldt WC. Iterative solution of nonlinear equations in several variables. New York: Academic Press; 1970.