Summary

We consider the problem of estimating multiple related Gaussian graphical models from a high-dimensional data set with observations belonging to distinct classes. We propose the joint graphical lasso, which borrows strength across the classes in order to estimate multiple graphical models that share certain characteristics, such as the locations or weights of nonzero edges. Our approach is based upon maximizing a penalized log likelihood. We employ generalized fused lasso or group lasso penalties, and implement a fast ADMM algorithm to solve the corresponding convex optimization problems. The performance of the proposed method is illustrated through simulated and real data examples.

Keywords: alternating directions method of multipliers, generalized fused lasso, group lasso, graphical lasso, network estimation, Gaussian graphical model, high-dimensional

1. Introduction

In recent years, much interest has focused upon estimating an undirected graphical model on the basis of an n × p data matrix X, where n is the number of observations and p is the number of features. Suppose that the observations x1, …, xn ∈ ℝp are independent and identically distributed N(μ, Σ), where μ ∈ ℝp and Σ is a positive definite p × p matrix. Then zeros in the inverse covariance matrix Σ−1 correspond to pairs of features that are conditionally independent, that is, pairs of variables that are independent of each other given all of the other variables in the data set. These conditional dependence relationships can be represented by a graph in which nodes represent features and edges connect conditionally dependent pairs of features (Lauritzen 1996).

A natural way to estimate the precision (or concentration) matrix Σ−1 is via maximum likelihood. Letting S denote the empirical covariance matrix of X, the Gaussian log likelihood takes the form (up to a constant)

$$\frac{n}{2}\left(\log\det\Sigma^{-1} - \operatorname{trace}\left(S\Sigma^{-1}\right)\right). \tag{1.1}$$

Maximizing (1.1) with respect to Σ−1 yields the maximum likelihood estimate S−1.

However, two problems can arise in using this maximum likelihood approach to estimate Σ−1. First, in the high-dimensional setting where the number of features p is larger than the number of observations n, the empirical covariance matrix S is singular and so cannot be inverted to yield an estimate of Σ−1. If p ≈ n, then even if S is not singular, the maximum likelihood estimate for Σ−1 will suffer from very high variance. Second, one often is interested in identifying pairs of variables that are unconnected in the graphical model, i.e. that are conditionally independent; these correspond to zeros in Σ−1. But maximizing the log likelihood (1.1) will in general yield an estimate of Σ−1 with no elements that are exactly equal to zero.

In recent years, a number of proposals have been made for estimating Σ−1 in the high-dimensional setting in such a way that the resulting estimate is sparse. Meinshausen & Bühlmann (2006) proposed doing this via a penalized regression approach, which was extended by Peng et al. (2009). A number of authors have instead taken a penalized log likelihood approach (among others, Yuan & Lin 2007b, Friedman, Hastie & Tibshirani 2007, Rothman et al. 2008): rather than maximizing (1.1), one can instead solve the problem

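$$\underset{\Theta}{\operatorname{maximize}}\ \left\{\log\det\Theta - \operatorname{trace}(S\Theta) - \lambda\|\Theta\|_1\right\}, \tag{1.2}$$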

where λ is a nonnegative tuning parameter, and ||Θ||1 denotes the sum of the absolute values of the elements of Θ. This is a convex optimization problem, and its solution provides an estimate for Σ−1. The use of an ℓ1 or lasso (Tibshirani 1996) penalty on the elements of Θ has the effect that when the tuning parameter λ is large, some elements of the resulting precision matrix estimate will be exactly equal to zero. Moreover, (1.2) can be solved even if p ≫ n. The solution to the problem (1.2) is referred to as the graphical lasso. Some authors have proposed applying the ℓ1 penalty in (1.2) only to the off-diagonal elements of Θ.

Graphical models are especially of interest in the analysis of gene expression data, since it is believed that genes operate in pathways, or networks. Graphical models based on gene expression data can provide a useful tool for visualizing the relationships among genes and for generating biological hypotheses. The standard formulation for estimating a Gaussian graphical model assumes that each observation is drawn from the same distribution. However, in many datasets the observations may correspond to several distinct classes, so the assumption that all observations are drawn from the same distribution is inappropriate. For instance, suppose that a cancer researcher collects gene expression measurements for a set of cancer tissue samples and a set of normal tissue samples. In this case, one might want to estimate a graphical model for the cancer samples and a graphical model for the normal samples. One would expect the two graphical models to be similar to each other, since both are based upon the same type of tissue, but also to have important differences stemming from the fact that gene networks are often dysregulated in cancer. Estimating separate graphical models for the cancer and normal samples does not exploit the similarity between the true graphical models. And estimating a single graphical model for the cancer and normal samples ignores the fact that we do not expect the true graphical models to be identical, and that the differences between the graphical models may be of interest.

In this paper, we propose the joint graphical lasso, a technique for jointly estimating multiple graphical models corresponding to distinct but related conditions, such as cancer and normal tissue. Our approach is an extension of the graphical lasso (1.2) to the case of multiple data sets. It is based upon a penalized log likelihood approach, where the choice of penalty depends on the characteristics of the graphical models that we expect to be shared across conditions.

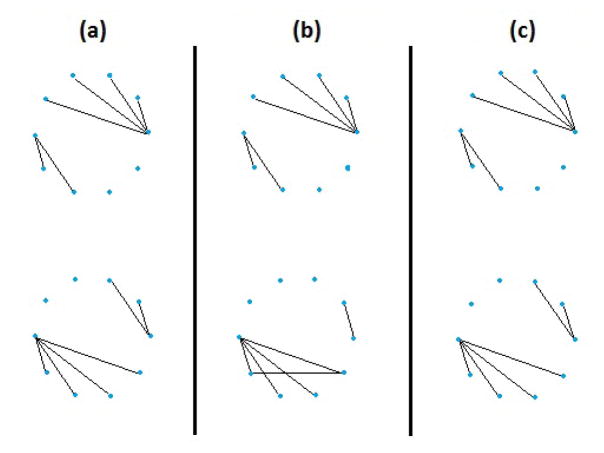

We illustrate our method with a small toy example that consists of observations from two classes. Within each class, the observations are independent and identically distributed according to a normal distribution. The two classes have distinct covariance matrices. When we apply the graphical lasso separately to the observations in each class, the resulting graphical model estimates are less accurate than when we use our joint graphical lasso approach. Results are shown in Figure 1.

Fig. 1.

Comparison of the graphical lasso with our joint graphical lasso in a toy example with two conditions, p = 10 variables, and n=200 observations per condition. (a): True networks. (b): Networks estimated by applying the graphical lasso separately to each class. (c): Networks estimated by applying our joint graphical lasso proposal.

The rest of this paper is organized as follows. In Section 2, we present the joint graphical lasso optimization problem. Section 3 contains an alternating directions method of multipliers algorithm for its solution. In Section 4, we present theoretical results that lead to massive gains in the algorithm’s computational efficiency. Section 5 contains a discussion of related approaches from the literature, and in Section 6 we discuss tuning parameter selection. In Section 7, we illustrate the performance of our proposal in a simulation study. Section 8 contains an application to a lung cancer gene expression dataset. The discussion is in Section 9.

2. The joint graphical lasso

We briefly introduce some notation that will be used throughout this paper.

We let K denote the number of classes in our data, and let $\Sigma_1^{-1}, \dots, \Sigma_K^{-1}$ denote the true precision matrices, so that $\Sigma_k^{-1}$ is the true precision matrix for the kth class. We will seek to estimate $\{\Sigma^{-1}\} = \Sigma_1^{-1}, \dots, \Sigma_K^{-1}$ by formulating convex optimization problems with arguments {Θ} = Θ(1), …, Θ(K). The solutions {Θ̂} = Θ̂(1), …, Θ̂(K) to these optimization problems will constitute estimates of $\{\Sigma^{-1}\}$.

We will index matrix elements using i = 1, …, p, j = 1, …, p, and we will index classes using k = 1, …, K. ||A||F will denote the Frobenius norm of matrix A, i.e. $\|A\|_F = \sqrt{\sum_i\sum_j A_{ij}^2}$.

2.1. The general formulation for the joint graphical lasso

Suppose that we are given K data sets, Y(1), …, Y(K), with K ≥ 2. Y(k) is an nk × p matrix consisting of nk observations with measurements on a set of p features, which are common to all K data sets. Furthermore, we assume that the observations are independent, and that the observations within each data set are identically distributed: $y_1^{(k)}, \dots, y_{n_k}^{(k)} \sim N(\mu_k, \Sigma_k)$. Without loss of generality, we assume that the features within each data set are centered such that μk = 0. We let $S^{(k)} = \frac{1}{n_k}(Y^{(k)})^T Y^{(k)}$, the empirical covariance matrix for Y(k). The log likelihood for the data takes the form (up to a constant)

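$$\ell(\{\Theta\}) = \frac{1}{2}\sum_{k=1}^{K} n_k\left(\log\det\Theta^{(k)} - \operatorname{trace}\left(S^{(k)}\Theta^{(k)}\right)\right). \tag{2.3}$$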

Maximizing (2.3) with respect to Θ(1), …, Θ(K) yields the maximum likelihood estimate (S(1))−1, …, (S(K))−1.

However, depending on the application, the maximum likelihood estimates that result from (2.3) may not be satisfactory. When p is smaller than but close to nk, the maximum likelihood estimates can have very high variance, and no elements of (S(1))−1, …, (S(K))−1 will be zero, leading to difficulties in interpretation. In addition, when p > nk, the maximum likelihood estimates become ill-defined. Moreover, if the K data sets correspond to observations collected from K distinct but related classes, then one might wish to borrow strength across the K classes to estimate the K precision matrices, rather than estimating each precision matrix separately.

Therefore, instead of estimating $\Sigma_1^{-1}, \dots, \Sigma_K^{-1}$ by maximizing (2.3), we take a penalized log likelihood approach and seek {Θ̂} solving

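$$\underset{\{\Theta\}}{\operatorname{maximize}}\ \left\{\sum_{k=1}^{K} n_k\left(\log\det\Theta^{(k)} - \operatorname{trace}\left(S^{(k)}\Theta^{(k)}\right)\right) - P(\{\Theta\})\right\} \tag{2.4}$$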

subject to the constraint that Θ(1), …, Θ(K) are positive definite. Here P ({Θ}) denotes a convex penalty function, so that the objective in (2.4) is strictly concave in {Θ}. We propose to choose a penalty function P that will encourage Θ̂(1), …, Θ̂(K) to share certain characteristics, such as the locations or values of the nonzero elements; moreover, we would like the estimated precision matrices to be sparse. In particular, we will consider penalty functions that take the form $P(\{\Theta\}) = \tilde{P}(\{\Theta\}) + \lambda_1\sum_{k}\sum_{i \neq j}|\theta_{ij}^{(k)}|$, where $\theta_{ij}^{(k)}$ denotes the (i, j) element of Θ(k), λ1 is a nonnegative tuning parameter, and P̃ is a convex function. When P̃ ({Θ}) = 0, (2.4) amounts to performing K uncoupled graphical lasso optimization problems (1.2). The P̃ penalty is chosen to encourage similarity across the K estimated precision matrices; therefore, we refer to the solution to (2.4) as the joint graphical lasso (JGL). We discuss specific forms of the penalty function in (2.4) in the next section.

2.2. Two useful penalty functions

In this subsection, we introduce two particular choices of the convex penalty function P in (2.4) that lead to useful graphical model estimates. In Appendix 1, we further extend these proposals to work on the scale of partial correlations.

2.2.1. The fused graphical lasso

The fused graphical lasso (FGL) is the solution to the problem (2.4) with the penalty

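$$P(\{\Theta\}) = \lambda_1\sum_{k=1}^{K}\sum_{i \neq j}\left|\theta_{ij}^{(k)}\right| + \lambda_2\sum_{k < k'}\sum_{i,j}\left|\theta_{ij}^{(k)} - \theta_{ij}^{(k')}\right|, \tag{2.5}$$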

where λ1 and λ2 are nonnegative tuning parameters. This is a generalized fused lasso penalty (Hoefling 2010b), and results from applying ℓ1 penalties to (1) each off-diagonal element of the K precision matrices, and (2) differences between corresponding elements of each pair of precision matrices. Like the graphical lasso, FGL results in sparse estimates Θ̂(1), …, Θ̂(K) when the tuning parameter λ1 is large. In addition, many elements of Θ̂(1), …, Θ̂(K) will be identical across classes when the tuning parameter λ2 is large (Tibshirani et al. 2005). Thus FGL borrows information aggressively across classes, encouraging not only similar network structure but also similar edge values.

2.2.2. The group graphical lasso

We define the group graphical lasso (GGL) to be the solution to (2.4) with

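$$P(\{\Theta\}) = \lambda_1\sum_{k=1}^{K}\sum_{i \neq j}\left|\theta_{ij}^{(k)}\right| + \lambda_2\sum_{i \neq j}\sqrt{\sum_{k=1}^{K}\left(\theta_{ij}^{(k)}\right)^2}. \tag{2.6}$$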

Again, λ1 and λ2 are nonnegative tuning parameters. A lasso penalty is applied to the elements of the precision matrices and a group lasso penalty is applied to the (i, j) element across all K precision matrices (Yuan & Lin 2007a). This group lasso penalty encourages a similar pattern of sparsity across all of the precision matrices –that is, there will be a tendency for the zeros in the K estimated precision matrices to occur in the same places. Specifically, when λ1 = 0 and λ2 > 0, each Θ̂(k) will have an identical pattern of non-zero elements. On the other hand, the lasso penalty encourages further sparsity within each Θ̂(k).

GGL encourages a weaker form of similarity across the K precision matrices than does FGL: the latter encourages shared edge values across the K matrices, whereas the former encourages only a shared pattern of sparsity.
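The difference between the two penalties can be checked numerically. The following is a minimal pure-Python sketch, assuming each precision matrix is stored as a nested list, that the lasso and group terms run over off-diagonal elements only, and that the fusion term runs over all elements. The function names `fgl_penalty` and `ggl_penalty` are hypothetical and are not taken from any package.

```python
import math

def _offdiag_l1(thetas):
    # Sum of absolute values of off-diagonal elements over all K matrices.
    p = len(thetas[0])
    return sum(abs(T[i][j]) for T in thetas
               for i in range(p) for j in range(p) if i != j)

def fgl_penalty(thetas, lam1, lam2):
    """Fused penalty: lasso term plus l1 norm of all pairwise differences."""
    K, p = len(thetas), len(thetas[0])
    fusion = sum(abs(thetas[k][i][j] - thetas[l][i][j])
                 for k in range(K) for l in range(k + 1, K)
                 for i in range(p) for j in range(p))
    return lam1 * _offdiag_l1(thetas) + lam2 * fusion

def ggl_penalty(thetas, lam1, lam2):
    """Group penalty: lasso term plus l2 norm of each (i, j) element across classes."""
    p = len(thetas[0])
    group = sum(math.sqrt(sum(T[i][j] ** 2 for T in thetas))
                for i in range(p) for j in range(p) if i != j)
    return lam1 * _offdiag_l1(thetas) + lam2 * group

# Two small 2 x 2 matrices that differ in both edge value and diagonal.
thetas = [[[1.0, 0.5], [0.5, 1.0]],
          [[2.0, 0.0], [0.0, 2.0]]]
print(fgl_penalty(thetas, 1.0, 1.0), ggl_penalty(thetas, 1.0, 1.0))
```

In this toy input the fused penalty charges for the differing edge values and diagonals, whereas the group penalty depends only on the magnitudes of the off-diagonal elements.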

3. Algorithm for the joint graphical lasso problem

3.1. An ADMM algorithm

We solve the problem (2.4) using an alternating directions method of multipliers (ADMM) algorithm. We refer the reader to Boyd et al. (2010) for a thorough exposition of ADMM algorithms as well as their convergence properties, and to Simon & Tibshirani (2011) and Mohan et al. (2012) for recent applications of ADMM to related problems.

To solve the problem (2.4) subject to the constraint that Θ(k) is positive definite for k = 1, …, K using ADMM, we note that the problem can be rewritten as

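$$\underset{\{\Theta\},\{Z\}}{\operatorname{minimize}}\ \left\{-\sum_{k=1}^{K} n_k\left(\log\det\Theta^{(k)} - \operatorname{trace}\left(S^{(k)}\Theta^{(k)}\right)\right) + P(\{Z\})\right\}, \tag{3.7}$$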

subject to the positive-definiteness constraint as well as the constraint that Z(k) = Θ(k) for k = 1, …, K, where {Z} = {Z(1), …, Z(K)}. The scaled augmented Lagrangian (Boyd et al. 2010) for this problem is given by

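$$L_\rho(\{\Theta\},\{Z\},\{U\}) = -\sum_{k=1}^{K} n_k\left(\log\det\Theta^{(k)} - \operatorname{trace}\left(S^{(k)}\Theta^{(k)}\right)\right) + P(\{Z\}) + \frac{\rho}{2}\sum_{k=1}^{K}\left\|\Theta^{(k)} - Z^{(k)} + U^{(k)}\right\|_F^2, \tag{3.8}$$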

where {U} = {U(1), …, U(K)} are dual variables and ρ serves as a “penalty parameter.” Roughly speaking, an ADMM algorithm corresponding to (3.8) results from iterating three simple steps. At the ith iteration, they are as follows:

{Θ(i)} ← arg min{Θ} {Lρ ({Θ}, {Z(i−1)}, {U(i−1)})}.

{Z(i)} ← arg min{Z} {Lρ ({Θ(i)}, {Z}, {U(i−1)})}.

{U(i)} ← {U(i−1)} + ({Θ(i)} − {Z(i)}).

We now present the ADMM algorithm in greater detail.

ADMM algorithm for solving the joint graphical lasso problem

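(a) Initialize the variables: Θ(k) = I, U(k) = 0, Z(k) = 0 for k = 1, …, K.

(b) Select a scalar ρ > 0.

(c) For i = 1, 2, 3, … until convergence:

(i) For k = 1, …, K, update $\Theta^{(k)}_{(i)}$ as the minimizer (with respect to Θ(k)) of

$$-n_k\left(\log\det\Theta^{(k)} - \operatorname{trace}\left(S^{(k)}\Theta^{(k)}\right)\right) + \frac{\rho}{2}\left\|\Theta^{(k)} - Z^{(k)}_{(i-1)} + U^{(k)}_{(i-1)}\right\|_F^2.$$

Letting VDVT denote the eigendecomposition of $S^{(k)} - \rho Z^{(k)}_{(i-1)}/n_k + \rho U^{(k)}_{(i-1)}/n_k$, the solution is given (Witten & Tibshirani 2009) by VD̃VT, where D̃ is the diagonal matrix with jth diagonal element

$$\frac{n_k}{2\rho}\left(-D_{jj} + \sqrt{D_{jj}^2 + 4\rho/n_k}\right).$$

(ii) Update {Z(i)} as the minimizer (with respect to {Z}) of

$$\frac{\rho}{2}\sum_{k=1}^{K}\left\|Z^{(k)} - \left(\Theta^{(k)}_{(i)} + U^{(k)}_{(i-1)}\right)\right\|_F^2 + P(\{Z\}). \tag{3.9}$$

(iii) For k = 1, …, K, update $U^{(k)}_{(i)}$ as $U^{(k)}_{(i-1)} + \left(\Theta^{(k)}_{(i)} - Z^{(k)}_{(i)}\right)$.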

The final Θ̂(1), …, Θ̂(K) that result from this algorithm are the JGL estimates of $\Sigma_1^{-1}, \dots, \Sigma_K^{-1}$. This algorithm is guaranteed to converge to the global optimum (Boyd et al. 2010). We note that the positive-definiteness constraint on the estimated precision matrices is naturally enforced by the update in Step (c)(i).

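This algorithm requires specification of a penalty ρ controlling the step size and a convergence criterion. In the examples throughout this paper, we use ρ = 1 and declare convergence when $\sum_{k=1}^{K}\left\|\Theta^{(k)}_{(i)} - \Theta^{(k)}_{(i-1)}\right\|_1 \Big/ \sum_{k=1}^{K}\left\|\Theta^{(k)}_{(i-1)}\right\|_1 < 10^{-5}$.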
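The eigendecomposition-based update for Θ(k) can be sketched as follows. This is a minimal NumPy illustration rather than the authors' implementation. It assumes symmetric inputs and uses the stationarity condition of the Θ(k) subproblem, Θ⁻¹ − (ρ/nk)Θ = S(k) − ρ(Z(k) − U(k))/nk, which gives each eigenvalue of the solution as the positive root of a quadratic. The function name `theta_update` is hypothetical.

```python
import numpy as np

def theta_update(S, Z, U, n_k, rho=1.0):
    """One Theta^(k) update of the ADMM iteration (sketch).

    S, Z, U are symmetric p x p arrays; n_k is the class sample size.
    """
    M = S - rho * (Z - U) / n_k
    d, V = np.linalg.eigh(M)  # M = V diag(d) V^T
    # Positive root of (rho/n_k) * x^2 + d * x - 1 = 0 for each eigenvalue d.
    d_tilde = n_k / (2.0 * rho) * (-d + np.sqrt(d ** 2 + 4.0 * rho / n_k))
    return (V * d_tilde) @ V.T

S = np.array([[2.0, 0.3], [0.3, 1.0]])
Theta = theta_update(S, Z=np.eye(2), U=np.zeros((2, 2)), n_k=50)
print(np.linalg.eigvalsh(Theta))  # all eigenvalues are strictly positive
```

Because every updated eigenvalue is a positive root, the result is positive definite by construction, which is why no explicit projection onto the positive definite cone is needed.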

Details of the minimization of (3.9) will depend on the form of the convex penalty function P. We note that the task of minimizing (3.9) can be re-written as

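$$\underset{\{Z\}}{\operatorname{minimize}}\ \left\{\frac{\rho}{2}\sum_{k=1}^{K}\left\|Z^{(k)} - A^{(k)}\right\|_F^2 + P(\{Z\})\right\}, \tag{3.10}$$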

where

$$A^{(k)} = \Theta^{(k)}_{(i)} + U^{(k)}_{(i-1)}. \tag{3.11}$$

We will see in Section 3.2 that for the FGL and GGL penalties, solving (3.10) is a simple task, regardless of the value of K.

The algorithm given above involves computing the eigen decomposition of a p × p matrix, which can be computationally demanding when p is large. However, in Section 4, we will present two theorems that reveal that when the values of the tuning parameters λ1 and λ2 are large, one can obtain the exact solution to the JGL optimization problem without ever computing the eigen decomposition of a p × p matrix. Therefore, solving the JGL problem is fast even when p is quite large. In Section 8, we will see that one can perform FGL with K = 2 classes and almost 18,000 features in under 2 minutes.

3.2. Solving (3.10) for the joint graphical lasso

We now consider the problem of solving (3.10) if P is a generalized fused lasso or group lasso penalty.

3.2.1. Solving (3.10) for FGL

If P is the penalty given in (2.5), then (3.10) takes the form

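$$\underset{\{Z\}}{\operatorname{minimize}}\ \left\{\frac{\rho}{2}\sum_{k=1}^{K}\left\|Z^{(k)} - A^{(k)}\right\|_F^2 + \lambda_1\sum_{k=1}^{K}\sum_{i \neq j}\left|Z_{ij}^{(k)}\right| + \lambda_2\sum_{k < k'}\sum_{i,j}\left|Z_{ij}^{(k)} - Z_{ij}^{(k')}\right|\right\}. \tag{3.12}$$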

Now (3.12) is completely separable with respect to each pair of matrix elements (i, j): that is, one can simply solve, for each (i, j),

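$$\underset{Z_{ij}^{(1)},\dots,Z_{ij}^{(K)}}{\operatorname{minimize}}\ \left\{\frac{\rho}{2}\sum_{k=1}^{K}\left(Z_{ij}^{(k)} - A_{ij}^{(k)}\right)^2 + \lambda_1 1_{i \neq j}\sum_{k=1}^{K}\left|Z_{ij}^{(k)}\right| + \lambda_2\sum_{k < k'}\left|Z_{ij}^{(k)} - Z_{ij}^{(k')}\right|\right\}. \tag{3.13}$$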

This is a special case of the fused lasso signal approximator (Hoefling 2010b) in which there is a fusion between each pair of variables. A very efficient algorithm for this special case, which can be performed in O(K log K) operations, is available (Hocking et al. 2011, Hoefling 2010a, Tibshirani 2012).

In fact, when K = 2, (3.13) has a very simple closed form solution. When λ1 = 0, it is easy to verify that the solution to (3.13) takes the form

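$$\left(\hat{Z}_{ij}^{(1)}, \hat{Z}_{ij}^{(2)}\right) = \begin{cases} \left(A_{ij}^{(1)} - \lambda_2/\rho,\ A_{ij}^{(2)} + \lambda_2/\rho\right) & \text{if } A_{ij}^{(1)} > A_{ij}^{(2)} + 2\lambda_2/\rho, \\ \left(A_{ij}^{(1)} + \lambda_2/\rho,\ A_{ij}^{(2)} - \lambda_2/\rho\right) & \text{if } A_{ij}^{(2)} > A_{ij}^{(1)} + 2\lambda_2/\rho, \\ \left(\frac{A_{ij}^{(1)} + A_{ij}^{(2)}}{2},\ \frac{A_{ij}^{(1)} + A_{ij}^{(2)}}{2}\right) & \text{if } \left|A_{ij}^{(1)} - A_{ij}^{(2)}\right| \leq 2\lambda_2/\rho. \end{cases} \tag{3.14}$$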

And when λ1 > 0, the solution to (3.13) can be obtained through soft-thresholding (3.14) by λ1/ρ (see Friedman, Hastie, Hoefling & Tibshirani 2007). Here the soft-thresholding operator is defined as S(x, c) = sgn(x)(|x|−c)+, where a+ = max(a, 0).
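For K = 2 the elementwise update is short enough to write out. The sketch below is an illustration under stated assumptions, not the authors' code: it first fuses the pair (the λ1 = 0 solution, obtained from the first-order conditions of the two-variable problem) and then soft-thresholds by λ1/ρ for off-diagonal elements only. The names `soft_threshold` and `fgl_update_k2` are hypothetical.

```python
import math

def soft_threshold(x, c):
    """S(x, c) = sgn(x) * max(|x| - c, 0)."""
    return math.copysign(max(abs(x) - c, 0.0), x)

def fgl_update_k2(a1, a2, lam1, lam2, rho=1.0, off_diagonal=True):
    """Z update for one (i, j) element with K = 2 classes (sketch).

    a1, a2 are the (i, j) elements of A^(1) and A^(2).
    """
    t = lam2 / rho
    if a1 > a2 + 2.0 * t:
        z1, z2 = a1 - t, a2 + t      # pulled toward each other, not fused
    elif a2 > a1 + 2.0 * t:
        z1, z2 = a1 + t, a2 - t
    else:
        z1 = z2 = 0.5 * (a1 + a2)    # close enough to be fused exactly
    if off_diagonal:                 # the lasso term skips the diagonal
        z1 = soft_threshold(z1, lam1 / rho)
        z2 = soft_threshold(z2, lam1 / rho)
    return z1, z2

print(fgl_update_k2(1.0, 0.0, lam1=0.1, lam2=0.2))
print(fgl_update_k2(0.5, 0.3, lam1=0.0, lam2=0.2))
```

In the first call the two values are too far apart to fuse, so each moves by λ2/ρ before thresholding; in the second they are fused to their average.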

3.2.2. Solving (3.10) for GGL

If P is the group lasso penalty (2.6), then (3.10) takes the form

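$$\underset{\{Z\}}{\operatorname{minimize}}\ \left\{\frac{\rho}{2}\sum_{k=1}^{K}\left\|Z^{(k)} - A^{(k)}\right\|_F^2 + \lambda_1\sum_{k=1}^{K}\sum_{i \neq j}\left|Z_{ij}^{(k)}\right| + \lambda_2\sum_{i \neq j}\sqrt{\sum_{k=1}^{K}\left(Z_{ij}^{(k)}\right)^2}\right\}. \tag{3.15}$$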

First, for all j = 1, …, p and k = 1, …, K, it is easy to see that the solution to (3.15) has $\hat{Z}_{jj}^{(k)} = A_{jj}^{(k)}$, since the diagonal elements are not penalized. And one can show that the off-diagonal elements take the form (Friedman et al. 2010)

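$$\hat{Z}_{ij}^{(k)} = S\left(A_{ij}^{(k)}, \lambda_1/\rho\right)\left(1 - \frac{\lambda_2}{\rho\sqrt{\sum_{k=1}^{K} S\left(A_{ij}^{(k)}, \lambda_1/\rho\right)^2}}\right)_+, \tag{3.16}$$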

where S denotes the soft-thresholding operator.
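The off-diagonal GGL update combines elementwise soft-thresholding with a groupwise shrinkage across the K classes. A minimal pure-Python sketch, assuming the standard sparse group lasso form of the update (soft-threshold each element by λ1/ρ, then scale the resulting K-vector by a factor that is zero when its Euclidean norm is at most λ2/ρ); the name `ggl_update` is hypothetical:

```python
import math

def soft_threshold(x, c):
    """S(x, c) = sgn(x) * max(|x| - c, 0)."""
    return math.copysign(max(abs(x) - c, 0.0), x)

def ggl_update(a, lam1, lam2, rho=1.0):
    """Z update for one off-diagonal (i, j) element across K classes (sketch).

    a is the list [A^(1)_ij, ..., A^(K)_ij].
    """
    s = [soft_threshold(x, lam1 / rho) for x in a]
    norm = math.sqrt(sum(v * v for v in s))
    if norm == 0.0:
        return [0.0] * len(a)        # everything was thresholded away
    scale = max(1.0 - lam2 / (rho * norm), 0.0)
    return [v * scale for v in s]

print(ggl_update([3.0, 4.0], lam1=0.0, lam2=1.0))
print(ggl_update([0.1, -0.1], lam1=0.2, lam2=1.0))
```

The group factor is shared by all K classes, so an edge is either removed in every class at once or kept (possibly with some classes already zeroed by the lasso step).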

4. Faster computations for FGL and GGL

We now present two theorems that lead to substantial computational improvements to the JGL algorithm presented in Section 3. Using these theorems, one can inspect the empirical covariance matrices S(1), …, S(K) in order to determine whether the solution to the JGL optimization problem is block diagonal after some permutation of the features. Then one can simply perform the JGL algorithm on the features within each block separately, in order to obtain exactly the same solution that would have been obtained by applying the algorithm to all p features. This leads to huge speed improvements since it obviates the need to ever compute the eigen decomposition of a p × p matrix. Our results mirror recent improvements in algorithms for solving the graphical lasso problem (Witten et al. 2011, Mazumder & Hastie 2012).

For instance, suppose that for a given choice of λ1 and λ2, we determine that the estimated inverse covariance matrices Θ̂(1), …, Θ̂(K) are block diagonal, each with the same R blocks, the rth of which contains pr features, with $\sum_{r=1}^{R} p_r = p$. Then in each iteration of the JGL algorithm, rather than having to compute the eigen decomposition of K p × p matrices, we need only compute eigen decompositions of matrices of dimension p1 × p1, …, pR × pR. This leads to a potentially massive reduction in computational complexity from $O(p^3)$ to $\sum_{r=1}^{R} O(p_r^3)$.

We begin with a very simple lemma for which the proof follows by inspection of (2.4). The lemma can be extended by induction to any number of blocks.

Lemma 4.1

Suppose that the solution to the FGL or GGL optimization problem is block diagonal with known blocks. That is, the features can be reordered in such a way that each estimated inverse covariance matrix takes the form

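$$\hat{\Theta}^{(k)} = \begin{pmatrix} \hat{\Theta}_1^{(k)} & 0 \\ 0 & \hat{\Theta}_2^{(k)} \end{pmatrix}, \tag{4.17}$$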

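where each of $\hat{\Theta}_1^{(1)}, \dots, \hat{\Theta}_1^{(K)}$ has the same dimension. Then $\hat{\Theta}_1^{(1)}, \dots, \hat{\Theta}_1^{(K)}$ and $\hat{\Theta}_2^{(1)}, \dots, \hat{\Theta}_2^{(K)}$ can be obtained by solving the FGL or GGL optimization problem on just the corresponding set of features.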

We now present the key results. Theorems 1 and 2 outline necessary and sufficient conditions for the presence of block diagonal structure in the FGL and GGL optimization problems, and are proven in Appendix 2.

Theorem 1

Consider the FGL optimization problem with K = 2 classes. Let C1 and C2 be a non-overlapping partition of the p variables such that C1 ∩ C2 = ∅, C1 ∪ C2 = {1, …, p}. The following conditions are necessary and sufficient for the variables in C1 to be completely disconnected from those in C2 in each of the resulting network estimates:

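(a) $\left|n_1 S_{ij}^{(1)}\right| \leq \lambda_1 + \lambda_2$ for all i ∈ C1 and j ∈ C2,

(b) $\left|n_2 S_{ij}^{(2)}\right| \leq \lambda_1 + \lambda_2$ for all i ∈ C1 and j ∈ C2, and

(c) $\left|n_1 S_{ij}^{(1)} + n_2 S_{ij}^{(2)}\right| \leq 2\lambda_1$ for all i ∈ C1 and j ∈ C2.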

Furthermore, if K > 2, then

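$$\left|n_k S_{ij}^{(k)}\right| \leq \lambda_1 \quad \text{for all } i \in C_1,\ j \in C_2,\ k = 1, \dots, K \tag{4.18}$$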

is a sufficient condition for the variables in C1 to be completely disconnected from those in C2.

Theorem 2

Consider the GGL optimization problem with K ≥ 2 classes. Let C1 and C2 be a non-overlapping partition of the p variables, such that C1 ∩ C2 = ∅, C1 ∪ C2 = {1, …, p}. The following condition is necessary and sufficient for the variables in C1 to be completely disconnected from those in C2 in each of the resulting network estimates:

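$$\sum_{k=1}^{K}\left(\left|n_k S_{ij}^{(k)}\right| - \lambda_1\right)_+^2 \leq \lambda_2^2 \quad \text{for all } i \in C_1,\ j \in C_2. \tag{4.19}$$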

Theorems 1 and 2 allow us to quickly check if, given a partition of the features C1 and C2, the solution to the JGL optimization problem is block diagonal with one block corresponding to features in C1 and one block corresponding to features in C2. In practice, for any given (λ1, λ2), we can quickly perform the following two-step procedure to identify any block structure in the FGL or GGL solution:

(a) Create M, a p × p matrix with Mjj = 1 for j = 1, …, p. For i ≠ j, let Mij = 0 if the conditions specified in Theorem 1 are met for that pair of variables and the FGL penalty is used, or if the condition of Theorem 2 is met for that pair of variables and the GGL penalty is used. Otherwise, set Mij = 1.

(b) Identify the connected components of the undirected graph whose adjacency matrix is given by M. Note that this can be performed in O(|M|) operations, where |M| is the number of nonzero elements in M (Tarjan 1972).

Theorems 1 and 2 guarantee that the connected components identified in Step (b) correspond to distinct blocks in the FGL or GGL solutions. Therefore, one can quickly obtain these solutions by solving the FGL or GGL optimization problems on the submatrices of these K p × p empirical covariance matrices that correspond to that block diagonal structure. Consequently, we can obtain the exact solution to the JGL optimization problem on extremely high-dimensional data sets that would otherwise be computationally intractable. For instance, in Section 8 we performed FGL on a gene expression data set with almost 18,000 features in under two minutes.
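The two-step screening procedure can be sketched in a few lines for the GGL case. This is a minimal pure-Python illustration, assuming that a pair (i, j) may be disconnected when Σk (|nk S(k)ij| − λ1)₊² ≤ λ2², which is the condition that follows from the optimality conditions of the GGL problem at a block diagonal solution. The function names `ggl_pair_disconnected` and `find_blocks` are hypothetical, and the connected components are found with a plain depth-first search.

```python
def ggl_pair_disconnected(s_ij, n, lam1, lam2):
    """Screening check for one pair: s_ij[k] = S^(k)_ij, n[k] = n_k."""
    total = sum(max(abs(nk * s) - lam1, 0.0) ** 2 for nk, s in zip(n, s_ij))
    return total <= lam2 ** 2

def find_blocks(S_list, n, lam1, lam2):
    """Return the feature blocks implied by the screening rule (sketch)."""
    p = len(S_list[0])
    # Step (a): adjacency lists of M (off-diagonal entries equal to 1).
    adj = [[] for _ in range(p)]
    for i in range(p):
        for j in range(i + 1, p):
            pair = [S[i][j] for S in S_list]
            if not ggl_pair_disconnected(pair, n, lam1, lam2):
                adj[i].append(j)
                adj[j].append(i)
    # Step (b): connected components by depth-first search.
    seen, blocks = [False] * p, []
    for start in range(p):
        if seen[start]:
            continue
        seen[start], stack, comp = True, [start], []
        while stack:
            v = stack.pop()
            comp.append(v)
            for w in adj[v]:
                if not seen[w]:
                    seen[w] = True
                    stack.append(w)
        blocks.append(sorted(comp))
    return blocks

S = [[1.0, 0.5, 0.0], [0.5, 1.0, 0.001], [0.0, 0.001, 1.0]]
print(find_blocks([S, S], n=[10, 10], lam1=1.0, lam2=1.0))
```

Each returned block can then be passed to the JGL solver on its own, so that eigendecompositions are only ever computed on matrices of the block sizes.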

As pointed out by a reviewer, Theorems 1 and 2 lead to speed improvements only if the tuning parameters λ1 and λ2 are sufficiently large. We argue that this will in fact be the case in most practical applications of JGL. When network estimation is performed for the sake of data exploration and when p is large, only a very sparse network estimate will be useful; otherwise, interpretation of the estimate will be impossible. Even when data exploration is not the end goal of the analysis, large values of λ1 and λ2 will generally be used, since most data sets cannot reasonably support estimation of Kp(p + 1)/2 nonzero parameters when n ≪ p.

5. Relationship to previous proposals

Several past proposals have been made to jointly estimate graphical models on the basis of observations drawn from distinct conditions. Some proposals have used time-series data to define time-varying networks in the context of continuous or binary data (Zhou et al. 2008, Song et al. 2009a, Ahmed & Xing 2009, Kolar & Xing 2009, Song et al. 2009b, Kolar et al. 2010). Guo et al. (2011) instead describe a likelihood-based method for estimating precision matrices across multiple related classes simultaneously. They employ a hierarchical penalty that forces similar patterns of sparsity across classes, an approach that is similar in spirit to GGL.

Our FGL and GGL proposals have a number of advantages over these existing approaches. Methods for estimating time-varying networks cannot be easily extended to the setting where the classes lack a natural ordering. Guo et al. (2011)’s proposal is a closer precursor to our method, and can in fact be stated as an instance of the problem (2.4) with a hierarchical group lasso penalty

$$P(\{\Theta\}) = \lambda\sum_{i \neq j}\sqrt{\sum_{k=1}^{K}\left|\theta_{ij}^{(k)}\right|} \tag{5.20}$$

that encourages a shared pattern of sparsity across the K classes. But the approach of Guo et al. (2011) has a number of disadvantages relative to FGL and GGL. (1) The penalty (5.20) is not convex, so algorithmic convergence to the global optimum is not guaranteed. (2) Because (5.20) is not convex, it is not possible to achieve the speed improvements described in Section 4. Consequently, the Guo et al. (2011) proposal is quite slow relative to our approach, as seen in Figures 2(e), 4(e), and 5(e), and essentially cannot be applied to very high-dimensional data sets. (3) Unlike FGL and GGL, it uses just one tuning parameter, and is unable to control separately the sparsity level and the extent of network similarity. (4) In cases where we expect edge values as well as network structure to be similar between classes, FGL is much better suited than GGL and Guo et al. (2011)’s proposal, both of which encourage shared patterns of sparsity but do not encourage similarity in the signs and values of the nonzero edges.

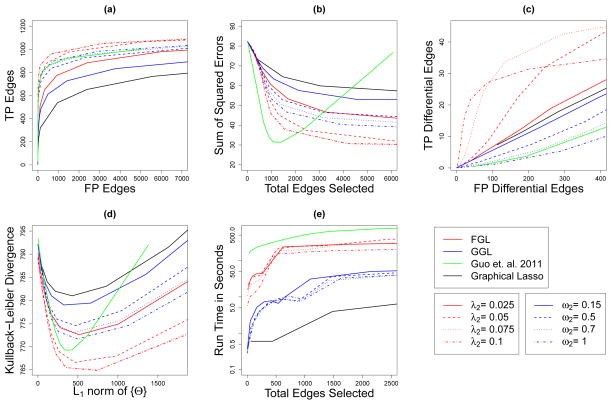

Fig. 2.

Performance of FGL, GGL, Guo et al. (2011)’s method, and the graphical lasso on simulated data with 150 observations in each of 3 classes, and 500 features. Black lines display models derived using the graphical lasso, green lines display the proposal of Guo et al. (2011), red lines display FGL, and blue lines display GGL. (a): The number of edges correctly identified to be nonzero (TP Edges) is plotted against the number of edges incorrectly identified to be nonzero (FP edges). (b): The sum of squared errors in edge values is plotted against the total number of edges estimated to be nonzero. (c): The number of edges correctly found to have values differing between classes (TP Differential Edges) is plotted against the number of edges incorrectly found to have values differing between classes (FP Differential Edges). (d): The dKL of the estimated models from the true models is plotted against the ℓ1 norm of the off-diagonal entries of the estimated precision matrices. (e): Running time (in seconds) is plotted against the number of non-zero edges estimated. Note the use of a log scale on the vertical axis.

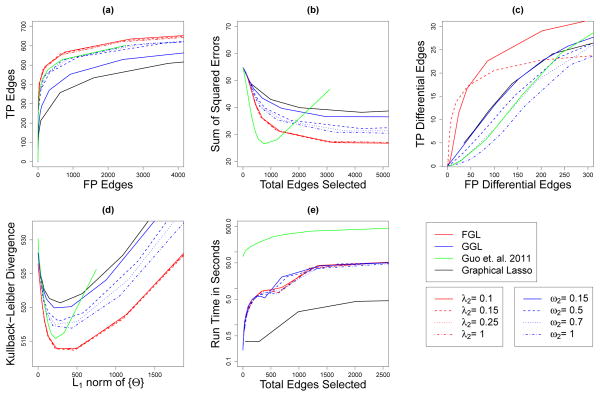

Fig. 4.

Performance of FGL, GGL, Guo et al. (2011)’s method, and the graphical lasso on simulated data with 150 observations in each of 2 classes, and 500 features corresponding to ten equally sized, unconnected subnetworks drawn from a power law distribution. Details are as given in Figure 2.

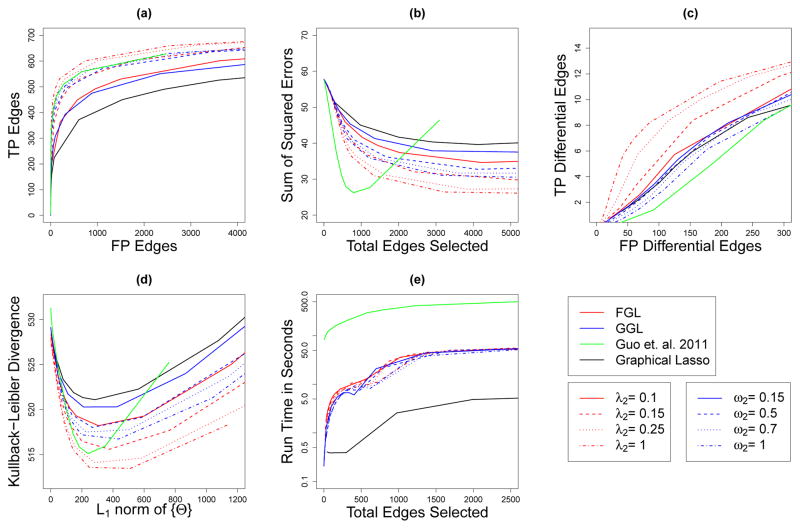

Fig. 5.

Performance of FGL, GGL, Guo et al. (2011)’s method, and the graphical lasso on simulated data with 150 observations in each of 2 classes, and 500 features corresponding to a single large power law network. Details are as given in Figure 2.

Guo et al. (2011)’s proposal is included in the simulation study in Section 7.

6. Tuning parameter selection

Network estimation is usually performed to aid exploratory data analysis and hypothesis generation. For these purposes, approaches such as AIC, BIC, and cross-validation may tend to choose models that are too large to be useful, and model selection is better guided by practical considerations, such as network interpretability and stability and the desire for an edge set with a low false discovery rate (Meinshausen & Buhlmann 2010, Li et al. 2013). Thus in most cases, we recommend an application-driven selection of tuning parameters in order to achieve a model that is biologically plausible, sufficiently complex to be interesting, and sufficiently sparse to be interpretable and extremely well-supported by the data. In fact, network estimation methods will often prove most descriptively useful when run over a variety of tuning parameters, giving the researcher a sense of how easily various edges overcome increasing values of the sparsity penalty and how readily they become shared across networks as the similarity penalty increases. Ideally, the final model would be accompanied by a p-value on each edge or an overall estimate of edge FDR, a difficult problem addressed by Li et al. (2013) in the partial correlation-based network estimation framework and an important goal for research in likelihood-based network estimation methods.

When an objective method of selecting tuning parameters is desirable, one can select tuning parameters for JGL using an approximation of the Akaike Information Criterion (AIC),

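$$\mathrm{AIC}(\lambda_1, \lambda_2) = \sum_{k=1}^{K}\left[n_k\operatorname{trace}\left(S^{(k)}\hat{\Theta}^{(k)}_{\lambda_1,\lambda_2}\right) - n_k\log\det\hat{\Theta}^{(k)}_{\lambda_1,\lambda_2} + 2E_k\right], \tag{6.21}$$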

where $\hat{\Theta}^{(k)}_{\lambda_1,\lambda_2}$ is the inverse covariance matrix estimated on the kth class of data using the tuning parameters λ1 and λ2, and Ek is the number of non-zero elements in $\hat{\Theta}^{(k)}_{\lambda_1,\lambda_2}$. A grid search can then be performed to select λ1 and λ2 that minimize the AIC(λ1, λ2) score. The simulation study in Section 7 suggests that this criterion tends to select models whose Kullback-Leibler divergence (dKL) from the true model is low. When the number of variables p is very large, computing AIC(λ1, λ2) over a range of values for λ1 and λ2 may prove computationally onerous. If this is the case, we suggest a dense search over λ1 while holding λ2 at a fixed, low value, followed by a quick search over λ2, holding λ1 at the selected value.
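Given fitted precision matrices, the AIC approximation is inexpensive to evaluate. The sketch below is a minimal NumPy illustration, assuming the score is minus twice the Gaussian log likelihood of each class plus twice the number of non-zero elements of its estimate; the function name `jgl_aic` is hypothetical.

```python
import numpy as np

def jgl_aic(S_list, Theta_list, n):
    """Approximate AIC for one (lambda1, lambda2) fit (sketch).

    S_list: empirical covariance matrices, Theta_list: estimated
    precision matrices, n: per-class sample sizes.
    """
    total = 0.0
    for S, Theta, n_k in zip(S_list, Theta_list, n):
        _, logdet = np.linalg.slogdet(Theta)   # stable log-determinant
        E_k = np.count_nonzero(Theta)          # non-zero elements of the estimate
        total += n_k * np.trace(S @ Theta) - n_k * logdet + 2 * E_k
    return total

S = np.eye(2)
print(jgl_aic([S], [np.eye(2)], n=[10]))
```

In a grid search one would call this once per (λ1, λ2) pair on the corresponding fitted matrices and keep the pair with the smallest score.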

7. Simulation study

We compare the performances of FGL and GGL to two existing methods, the graphical lasso and the proposal of Guo et al. (2011), in Section 7.1. When applying the graphical lasso, networks are fitted for each class separately. We investigate the effects of n and p on FGL and GGL’s performances in Section 7.2. Additional simulation results are presented in Appendix 3.

The effects of the FGL and GGL penalties vary with the sample size. For ease of presentation of the simulation study results, we multiply the reported tuning parameters λ1 and λ2 by the sample size of each class before performing JGL.

To ease interpretation, we reparameterize the GGL penalties in our simulation study. The motivation is to summarize the regularization for “sparsity” and for “similarity” separately. In FGL, this is nicely achieved by just using λ1 and λ2, as the former drives network sparsity and the latter drives network similarity. In contrast, in GGL, both tuning parameters contribute to sparsity: λ1 drives individual network edges to zero whereas λ2 simultaneously drives network edges to zero across all K network estimates. We reparameterize our simulation results for GGL in terms of $\omega_1 = \lambda_1 + \frac{1}{\sqrt{2}}\lambda_2$ and $\omega_2 = \frac{1}{\sqrt{2}}\lambda_2 \big/ \left(\lambda_1 + \frac{1}{\sqrt{2}}\lambda_2\right)$, which we found to reasonably reflect the levels of “sparsity” and “similarity” regularization, respectively.

7.1. Performance as a function of tuning parameters

7.1.1. Simulation set-up

In this simulation, we consider a three-class problem. We first generate three networks with p = 500 features belonging to ten equally sized unconnected subnetworks, each with a power law degree distribution. Power law degree distributions are thought to mimic the structure of biological networks (Chen & Sharp 2004) and are generally harder to estimate than simpler structures (Peng et al. 2009). Of the ten subnetworks, eight have the same structure and edge values in all three classes, one is identical between the first two classes and missing in the third (i.e. the corresponding features are singletons in the third network), and one is present in only the first class. The topology of the networks generated is shown in Figure 6 in Appendix 4.

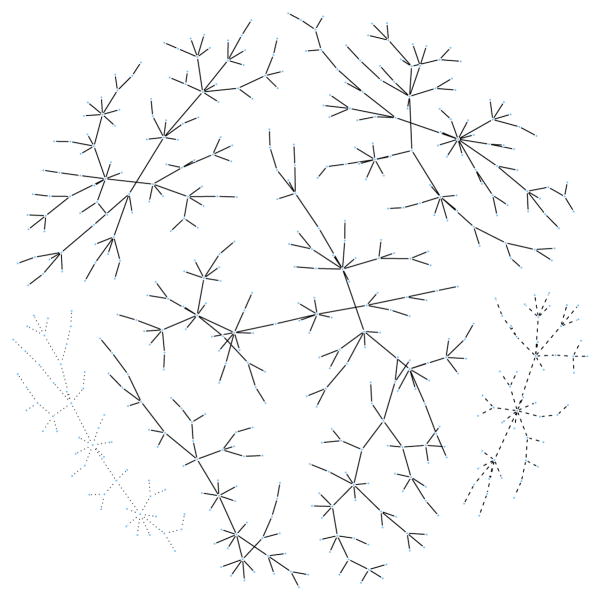

Fig. 6.

Network used to generate simulated datasets for Figure 2 in Section 7.1.2. Solid edges are common to all three classes, dashed edges are present only in classes 1 and 2, and dotted edges are present only in class 1.

Given a network structure, we generate a covariance matrix for the first class as follows (Peng et al. 2009). We create a p × p matrix with ones on the diagonal, zeroes on elements not corresponding to network edges, and values from a uniform distribution with support on [−.4, −.1] ∪ [.1, .4] on elements corresponding to edges. To ensure positive definiteness, we divide each off-diagonal element by 1.5 times the sum of the absolute values of off-diagonal elements in its row. Finally, we average the matrix with its transpose, achieving a symmetric, positive-definite matrix A. We then create the (i, j) element of Σ1 as

$$d_{ij}\left(A^{-1}\right)_{ij} \Big/ \sqrt{\left(A^{-1}\right)_{ii}\left(A^{-1}\right)_{jj}},$$

where dij = 0.6 if i ≠ j and dij = 1 if i = j. We create Σ2 equal to Σ1, then reset one of its ten subnetwork blocks to the identity. We create Σ3 equal to Σ2, and reset an additional subnetwork block to the identity. Finally, for each class we generate independent, identically distributed samples from a N(0, Σk) distribution.

We present two additional simulations studies involving two-class datasets in Appendix 3. The first additional simulation uses the same network structure described above, and the second uses a single power law network with no block structure.

7.1.2. Simulation results

Our first set of simulations illustrates the effect of varying tuning parameters on the performances of FGL and GGL. We generated 100 three-class data sets with p = 500 features and n = 150 observations per class, as described in Section 7.1.1. Class 1’s network has 490 edges, class 2’s network is missing 49 of those edges, and class 3’s network is missing an additional 49 edges. Figure 2 characterizes the average performance of the methods over the 100 data sets. In each plot, the lines for FGL and for GGL indicate the results obtained with a single value of the similarity tuning parameters λ2 and ω2. The graphical lasso and the proposal of Guo et al. (2011) are included in the comparisons.

Figure 2(a) displays the number of true edges selected against the number of false edges selected. We say an edge (i, j) in the kth network is selected if $\hat\theta^{(k)}_{ij} \neq 0$, and we say that the edge is true if $(\Sigma_k^{-1})_{ij} \neq 0$ and false if $(\Sigma_k^{-1})_{ij} = 0$. As the sparsity tuning parameters λ1 and ω1 decrease, the number of edges selected increases. At many values of the similarity tuning parameter λ2, FGL dominates the other methods. At some choices of the similarity tuning parameter ω2, GGL performs as well as Guo et al. (2011). FGL, GGL, and Guo et al. (2011)’s proposal dominate the graphical lasso.
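As a rough illustration of how the counts in Figure 2(a) could be tallied for one class, the sketch below assumes that an edge is "selected" when the estimated precision entry is nonzero and "true" when the corresponding entry of the true precision matrix is nonzero. The function name `edge_counts` and the toy matrices are hypothetical.

```python
import numpy as np

def edge_counts(theta_hat, theta_true):
    """Return (true positive edges, false positive edges) over pairs i < j."""
    upper = np.triu_indices(theta_hat.shape[0], k=1)
    selected = theta_hat[upper] != 0   # edges chosen by the estimator
    is_true = theta_true[upper] != 0   # edges present in the true network
    tp = int(np.sum(selected & is_true))
    fp = int(np.sum(selected & ~is_true))
    return tp, fp

# Toy example: one true edge (0, 1); the estimate also selects (0, 2).
theta_true = np.array([[1.0, 0.3, 0.0], [0.3, 1.0, 0.0], [0.0, 0.0, 1.0]])
theta_hat = np.array([[1.0, 0.2, 0.1], [0.2, 1.0, 0.0], [0.1, 0.0, 1.0]])
tp, fp = edge_counts(theta_hat, theta_true)
```

Summing these counts over the K classes gives totals of the kind plotted against each other.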

Figure 2(b) displays the sum of squared errors (SSE) between estimated edge values and true edge values: $\sum_{k=1}^{K}\sum_{i \neq j}\bigl(\hat\theta^{(k)}_{ij} - (\Sigma_k^{-1})_{ij}\bigr)^2$. Unlike the proposal of Guo et al. (2011), FGL, GGL, and the graphical lasso tend to overshrink edge values towards zero due to the use of convex penalty functions. Thus, while FGL and GGL attain SSE values that are as low as those of Guo et al. (2011), they do so when estimating much larger networks. When simultaneous edge selection and estimation are desired, it may be useful to run FGL or GGL once and then to re-run them with smaller penalties on the selected edges, as in Meinshausen (2007).

Figure 2(c) evaluates each method’s success in detecting differential edges, or edges that differ between classes. We say that the (i, j)th edge is estimated to be differential between the kth and k′th networks if $\hat\theta^{(k)}_{ij} \neq \hat\theta^{(k')}_{ij}$, and we say it is truly differential if $(\Sigma_k^{-1})_{ij} \neq (\Sigma_{k'}^{-1})_{ij}$ and falsely differential if $(\Sigma_k^{-1})_{ij} = (\Sigma_{k'}^{-1})_{ij}$. For FGL, the number of differential edges is computed as the number of pairs k < k′, i < j such that $\hat\theta^{(k)}_{ij} \neq \hat\theta^{(k')}_{ij}$. Since GGL, the proposal of Guo et al. (2011), and the graphical lasso cannot yield edges that are exactly identical across classes, for those approaches the number of differential edges is computed as the number of pairs k < k′, i < j such that $|\hat\theta^{(k)}_{ij} - \hat\theta^{(k')}_{ij}|$ exceeds a small positive threshold. The number of true positive differential edges is plotted against the number of false positive differential edges. Note that by controlling the total number of non-zero edges, the sparsity tuning parameters λ1 and ω1 have a large effect on the number of edges that are estimated to differ between networks. FGL yields fewer false positives than the competing methods, since it shrinks between-class differences in edge values to zero. Since neither GGL nor Guo et al. (2011)’s method is designed to shrink edge values towards each other, by this measure neither method outperforms even the graphical lasso.
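The differential-edge tally can be sketched as follows. This is an illustration under stated assumptions: the function name `differential_edge_counts` and the tolerance argument `tol` are hypothetical, with `tol=0.0` corresponding to exact inequality (as for FGL) and a small positive `tol` standing in for the thresholded comparison used for the other methods.

```python
import numpy as np
from itertools import combinations

def differential_edge_counts(est, truth, tol=0.0):
    """Count true/false positive differential edges over class pairs k < k'.

    est, truth: lists of K precision matrices (estimated and true).
    tol: differences with absolute value <= tol are treated as zero.
    """
    upper = np.triu_indices(est[0].shape[0], k=1)
    tp = fp = 0
    for k, k2 in combinations(range(len(est)), 2):
        est_diff = np.abs(est[k][upper] - est[k2][upper]) > tol
        true_diff = truth[k][upper] != truth[k2][upper]
        tp += int(np.sum(est_diff & true_diff))
        fp += int(np.sum(est_diff & ~true_diff))
    return tp, fp

# Toy example with K = 2: edge (0, 1) truly differs between the classes.
truth = [np.array([[1.0, 0.3, 0.0], [0.3, 1.0, 0.0], [0.0, 0.0, 1.0]]), np.eye(3)]
est = [np.array([[1.0, 0.2, 0.1], [0.2, 1.0, 0.0], [0.1, 0.0, 1.0]]),
       np.array([[1.0, 0.0, 0.105], [0.0, 1.0, 0.0], [0.105, 0.0, 1.0]])]
exact = differential_edge_counts(est, truth)
thresholded = differential_edge_counts(est, truth, tol=0.01)
```

In the toy example the tiny estimated difference on edge (0, 2) counts as a false positive under exact comparison but not under the thresholded one.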

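Figure 2(d) displays the sum of the dKL’s of the estimated distributions from the true distributions, as a function of the ℓ1 norm of the off-diagonal elements of the estimated precision matrices, i.e. $\sum_{k=1}^{K}\sum_{i \neq j}|\hat\theta^{(k)}_{ij}|$. The dKL from the multivariate normal model with inverse covariance estimates Θ̂(1), …, Θ̂(K) to the multivariate normal model with the true precision matrices is

$$\frac{1}{2}\sum_{k=1}^{K}\Bigl(-\log\det\bigl(\hat\Theta^{(k)}\Sigma_k\bigr) + \operatorname{trace}\bigl(\hat\Theta^{(k)}\Sigma_k\bigr)\Bigr).$$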

At most values of λ2, FGL attains a lower dKL than the other methods, followed by Guo et al. (2011)’s method, then by GGL. The graphical lasso has the worst performance, since it estimates each network separately.
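For readers who wish to compute a divergence of this kind, the following sketch assumes the Gaussian form ½ Σk [trace(Θ̂(k)Σk) − log det(Θ̂(k)Σk)], optionally minus p per class (the textbook Kullback-Leibler divergence, which is zero when the estimate equals the truth). The function name `gaussian_dkl` and the `subtract_p` flag are hypothetical.

```python
import numpy as np

def gaussian_dkl(theta_hats, sigmas, subtract_p=False):
    """Sum over classes of 0.5 * (trace(Theta_hat Sigma) - log det(Theta_hat Sigma))."""
    total = 0.0
    for theta_hat, sigma in zip(theta_hats, sigmas):
        M = theta_hat @ sigma
        sign, logdet = np.linalg.slogdet(M)  # numerically stable log-determinant
        if sign <= 0:
            raise ValueError("Theta_hat @ Sigma must have positive determinant")
        term = np.trace(M) - logdet
        if subtract_p:
            term -= M.shape[0]               # constant that makes the minimum zero
        total += 0.5 * term
    return total

# Toy check: when the estimate equals the true precision matrix, M is the identity.
sigma = np.array([[1.0, 0.5], [0.5, 1.0]])
theta = np.linalg.inv(sigma)
raw = gaussian_dkl([theta, theta], [sigma, sigma])
centered = gaussian_dkl([theta, theta], [sigma, sigma], subtract_p=True)
```

Without the constant, a perfect estimate yields Kp/2 rather than zero, so the two conventions differ only by a fixed offset and rank methods identically.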

Figure 2(e) compares the methods’ running times. Computation time (in seconds) is plotted against the total number of non-zero edges estimated. The graphical lasso is fastest, but FGL and GGL are much faster than the proposal of Guo et al. (2011), due to the results from Section 4. Timing comparisons were performed on an Intel Xeon x5680 3.3 GHz processor. It is worth mentioning that the FGL algorithm is much faster in problems with only two classes, since in that case there is a closed-form solution to the generalized fused lasso problem (Section 3.2). This can be seen in Figures 4(e) and 5(e) in Appendix 3.

We examined the details of the models from FGL, GGL, the method of Guo et al. (2011) and the graphical lasso with tuning parameters selected as described in Section 6. The performance of these models is detailed in Table 1. The AIC-selected FGL and GGL models outperform the AIC-selected models from the earlier methods. AIC selects a larger model for the method of Guo et al. (2011) than it does for FGL and GGL. For all methods, AIC appears to select models with low Kullback-Leibler divergences from the truth but with greater numbers of edges than would be ideal for accurate hypothesis generation. AIC selected a much smaller model for GGL than for the other methods, achieving by far the best edge estimation performance.

Table 1.

Performance of models selected by AIC. For each method, the tuning parameters selected by AIC are displayed, as are the average values over 100 iterations of the following performance metrics: AIC, Kullback-Leibler divergence from the true model, numbers of true and false positive edges (TPE and FPE) and numbers of true and false positive differential edges (TPDE and FPDE).

| Method | Tuning parameters | AIC | dKL | TPE | FPE | TPDE | FPDE |
|---|---|---|---|---|---|---|---|
| FGL | λ1 = 0.175, λ2 = 0.025 | 1465 | 774 | 884 | 2406 | 77 | 4977 |
| GGL | ω1 = 0.225, ω2 = 1 | 1470 | 776 | 898 | 736 | 53 | 1456 |
| Graphical lasso | λ = 0.2 | 1471 | 781 | 766 | 5578 | 85 | 11609 |
| Guo et al. (2011) | λ = 0.4 | 1338 | 791 | 1003 | 5080 | 89 | 8992 |

7.2. Performance as a function of n and p

We now evaluate the effect of sample size n and dimension p on the performances of FGL and GGL.

7.2.1. Simulation set-up

We generate a pair of networks with p = 500 much as described in Section 7.1.1, but with K = 2 instead of K = 3. The first network has 10 equal-sized components with power law degree distributions, and the second network is identical to the first in both edge identity and value, but with two components removed.

In addition to the 500-feature network pair, we generate a pair of networks with p = 1000 features, each of which is block diagonal with 500 × 500 blocks corresponding to two copies of the 500-feature networks just described. We generate covariance matrices from the networks exactly as described in Section 7.1.1.

7.2.2. Simulation results

For both the p = 500 and the p = 1000 networks, we simulate 100 datasets with n = 50, n = 200, and n = 500 samples in each class. We run FGL with λ1 = 0.2, λ2 = 0.1 and GGL with λ1 = 0.05, λ2 = 0.25. These tuning parameter values were chosen because they were in the range of successful values in the similar simulation setup of Section 7.1.2 (see Figure 2), and therefore provide a good setting under which to evaluate the effects of n and p on FGL and GGL. We record in Table 2 dKL as well as the sensitivity and false discovery rate associated with detecting nonzero edges and detecting differential edges. In this simulation setting, accuracy of covariance estimation (as measured by dKL) improves significantly from n = 50 to n = 200, and improves only marginally with a further increase to n = 500. Detection of edges improves throughout the range of n’s sampled: for both FGL and GGL, sensitivity improves slightly with increased sample size, and FDR decreases dramatically. Accurate detection of edge differences is a more difficult goal, though FGL succeeds in it at higher sample sizes.

Table 2.

Performances as a function of n and p. Means over 100 replicates are shown for dKL, and for sensitivity (Sens.) and false discovery rate (FDR) of detection of edges (DE) and differential edge detection (DED).

| Method | p | n | dKL | DE Sens. | DE FDR | DED Sens. | DED FDR |
|---|---|---|---|---|---|---|---|
| FGL | 500 | 50 | 545.1 | 0.502 | 0.966 | 0.262 | 0.996 |
| FGL | 500 | 200 | 517.5 | 0.570 | 0.053 | 0.228 | 0.485 |
| FGL | 500 | 500 | 516.6 | 0.590 | 0.001 | 0.192 | 0.036 |
| FGL | 1000 | 50 | 1119.3 | 0.600 | 0.970 | 0.245 | 0.998 |
| FGL | 1000 | 200 | 1035.0 | 0.666 | 0.063 | 0.223 | 0.557 |
| FGL | 1000 | 500 | 1033.3 | 0.681 | 0.000 | 0.194 | 0.025 |
| GGL | 500 | 50 | 549.8 | 0.490 | 0.973 | 0.337 | 0.996 |
| GGL | 500 | 200 | 520.8 | 0.505 | 0.060 | 0.244 | 0.903 |
| GGL | 500 | 500 | 519.7 | 0.524 | 0.010 | 0.194 | 0.921 |
| GGL | 1000 | 50 | 1127.9 | 0.587 | 0.976 | 0.316 | 0.998 |
| GGL | 1000 | 200 | 1041.7 | 0.615 | 0.061 | 0.239 | 0.908 |
| GGL | 1000 | 500 | 1039.4 | 0.629 | 0.007 | 0.197 | 0.920 |

8. Analysis of lung cancer microarray data

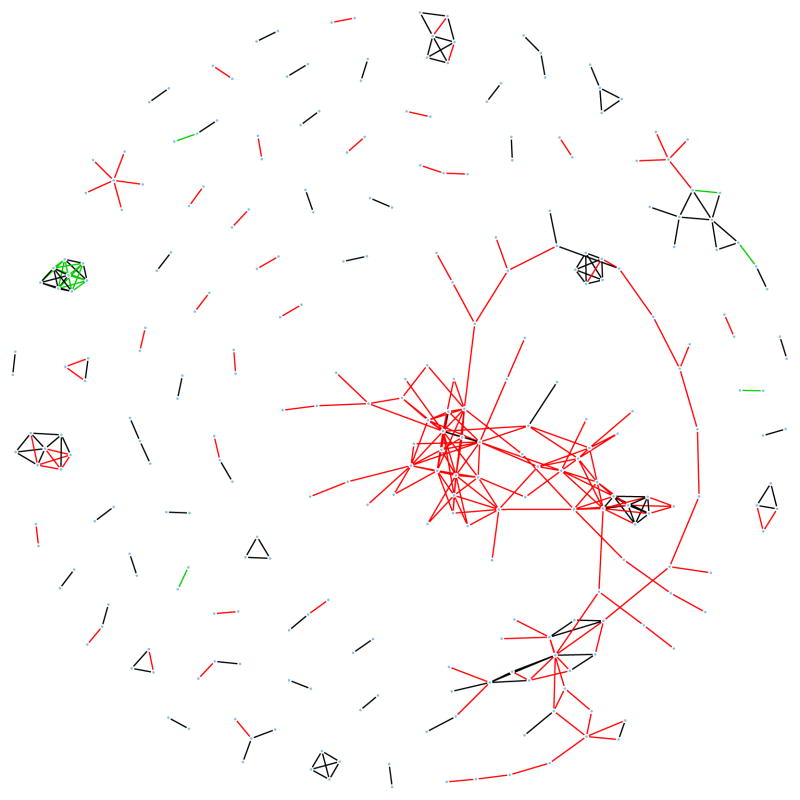

We applied FGL to a dataset containing 22,283 microarray-derived gene expression measurements from large airway epithelial cells sampled from 97 patients with lung cancer and 90 controls (Spira et al. 2007). The data are publicly available from the Gene Expression Omnibus (Barrett et al. 2005) at accession number GDS2771. We omitted genes with standard deviations in the bottom 20% since a greater share of their variance is likely attributable to non-biological noise. The remaining genes were standardized to have mean zero and standard deviation one within each class. To avoid disparate levels of sparsity between the classes and to prevent the larger class from dominating the estimated networks, we weighted each class equally instead of by sample size in (2.4). Since our goal was data visualization and hypothesis generation, we chose a high value for the sparsity tuning parameter, λ1 = 0.95, to yield very sparse network estimates. We ran FGL with a range of λ2 values in order to identify the edges that differed most strongly, and settled on λ2 = 0.005 as providing the most interpretable results. This application-driven choice of tuning parameters is appropriate when the goal of network estimation is description and hypothesis generation. A full analysis of this dataset would involve examination of network estimates across a range of tuning parameters. Application of Theorem 1 revealed that only 278 genes were connected to any other gene using the chosen tuning parameters. Identification of block diagonal structure using Theorem 1 and application of the FGL algorithm took less than two minutes. (Note that this data set is so large that it would be computationally prohibitive to apply the proposal of Guo et al. (2011)!) FGL estimated 134 edges shared between the two networks, 202 edges present only in the cancer network, and 18 edges present only in the normal tissue network. The results are displayed in Figure 3.

Fig. 3.

Conditional dependency networks inferred from 17,772 genes in normal and cancerous lung cells. 278 genes have nonzero edges in at least one of the two networks. Black lines denote edges common to both classes. Red and green lines denote tumor-specific and normal-specific edges, respectively.
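The two preprocessing steps described for the lung cancer data (dropping low-variance genes, then standardizing within each class) could look roughly like the sketch below. It is an illustration only: the function name `preprocess_expression` is hypothetical, the standard deviation used for filtering is assumed to be computed over all samples pooled, and the data here are simulated rather than taken from GDS2771.

```python
import numpy as np

def preprocess_expression(X, labels, drop_frac=0.2):
    """Drop the lowest-SD features, then standardize the rest within each class.

    X: (samples x genes) array; labels: class label per sample.
    """
    sd = X.std(axis=0, ddof=1)                 # pooled SD per gene (assumption)
    keep = sd > np.quantile(sd, drop_frac)     # discard the bottom `drop_frac`
    kept = X[:, keep].astype(float)
    out = np.empty_like(kept)
    for c in np.unique(labels):
        rows = labels == c
        block = kept[rows]
        # Mean zero and standard deviation one within the class.
        out[rows] = (block - block.mean(axis=0)) / block.std(axis=0, ddof=1)
    return out, keep

# Simulated stand-in data: 40 samples, 20 genes, two classes of 20 samples.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 20)) * rng.uniform(0.5, 3.0, size=20)
labels = np.array([0] * 20 + [1] * 20)
Z, keep = preprocess_expression(X, labels)
```

Standardizing within class removes between-class differences in mean and scale, so that the estimated networks reflect differences in dependence structure only.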

The estimated networks contain many two-gene subnetworks common to both classes, a few small subnetworks, and one large subnetwork specific to tumor cells. Reassuringly, 45% of edges, including almost all of the two-gene subnetworks, connect multiple probes for the same gene. Many other edges connect genes that are obviously related, that are involved in the same biological process, or that even code for components of the same enzyme. Examples include TUBA1B and TUBA1C, PABPC1 and PABPC3, HLA-B and HLA-G, and SERPINB3 and SERPINB4. Recovery of these pairs suggests that FGL (and other network analysis tools) can generate high-quality hypotheses about gene co-regulation and functional interactions. This increases our confidence that some of the non-obvious two-gene subnetworks detected in this analysis may merit further investigation. Examples include DAZAP2 and TCP1, PRKAR1A and CALM3, and BCLAF1 and SERPB1. A complete list of subnetworks detected is available in the Supplementary Materials.

The small black and green network in Figure 3 suggests an interesting phenomenon. It contains multiple probes for two hemoglobin genes, HBA2 and HBB. In the normal tissue network, the probes for these genes are heavily interconnected both within and between the genes. In the tumor cells, while edges between HBA2 probes and between HBB probes are preserved, no edges connect the two genes. The abundance of connections between the two genes in healthy cells and the absence of connections in tumor cells may indicate a possible direction of future investigation.

The most promising results of this analysis arise from the large subnetwork (104 nodes for 84 unique genes) unique to tumor cells. Many of the subnetwork’s genes are involved in constructing ribosomes, including RPS8, RPS23, RPS24, RPS7p11, RPL3, RPL5, RPL10A, RPL14P1, RPL15, RPL17, RPL30 and RPL31. Other genes in the subnetwork further involve ribosome functioning: SRP14 and SRP9L1 are involved in recruiting proteins from ribosomes into the ER, and NACA inhibits the SRP pathway. Thus this subnetwork portrays a detailed web of relationships consistent with known biology. More interestingly, this network also contains two genes in the RAS oncogene family: RAB1A and RAB11A. Genes in this family have been linked to many types of cancer, and are considered promising targets for therapeutics (Adjei 2008). These genes’ connections with ribosome activity in the tumor samples may indicate a relationship common to an important subset of cancers. Many other genes belong to this network, each indicating a potentially novel interaction in cancer biology.

9. Discussion

We have introduced the joint graphical lasso, a method for estimating sparse inverse covariance matrices on the basis of observations drawn from distinct but related classes. We employ an ADMM algorithm to solve the joint graphical lasso problem with any convex penalty function, and we provide explicit and efficient solutions for two useful penalty functions. Our algorithm is tractable on very large datasets (> 20,000 features), and usually converges in seconds for smaller problems (500 features). Our joint estimation methods outperform competing approaches on a range of simulated datasets.

In the JGL optimization problem (2.4), the contribution of each class to the penalized log likelihood is weighted by its size; consequently, the largest class can have outsize influence on the estimated networks. By omitting the nk term in (2.4), it is possible to weight the classes equally to prevent a single class from dominating estimation.

We note that FGL and GGL’s reliance on two tuning parameters is a strength rather than a drawback: unlike the proposal of Guo et al. (2011), which involves a single tuning parameter that controls both sparsity and similarity, in performing FGL and GGL one can vary separately the amount of similarity and sparsity to enforce in the network estimates.

The joint graphical lasso has potential applications beyond those discussed in this paper. For instance, one could use it to shrink multiple classes’ precision matrices towards each other in order to define a classifier intermediate between quadratic discriminant analysis (QDA) and linear discriminant analysis (LDA) (Hastie et al. 2009). In fact, a similar approach has been taken in recent work (Simon & Tibshirani 2011). In the unsupervised setting, it can be used in the maximization step of Gaussian model-based clustering in order to reduce the variance associated with estimating a separate covariance matrix for each cluster.

FGL and GGL are implemented in the R package JGL, available on CRAN, http://cran.r-project.org/.

Acknowledgments

We thank two anonymous reviewers, an associate editor, and an editor for useful comments that substantially improved this paper; Noah Simon, Holger Hoefling, Jacob Bien, and Ryan Tibshirani for helpful conversations and for sharing with us unpublished results; and Jian Guo and Ji Zhu for providing software for the proposal in Guo et al. (2011). We thank Karthik Mohan, Mike Chung, Su-In Lee, Maryam Fazel, and Seungyeop Han for helpful comments. PD and PW are supported by NIH grant 1R01GM082802. PW is also supported by NIH grants P01CA53996 and U24CA086368. DW is supported by NIH grant DP5OD009145.

Appendix 1: Modifying JGL to work on the scale of partial correlations

A reviewer suggested that under some circumstances, it may be preferable to encourage the K networks to have shared partial correlations, rather than shared precision matrices. Below, we describe a simple approach for extending our FGL proposal to work on the scale of partial correlations. A similar approach can be taken to extend GGL. The extension relies on two insights.

$\rho_{ij} = -\sigma^{ij}/\sqrt{\sigma^{ii}\sigma^{jj}}$, where $\rho_{ij}$ is the true partial correlation between the ith and jth features, and where $\sigma^{ij}$ is the (i, j)th entry of the true precision matrix.

The algorithm for solving the FGL optimization problem can easily be modified to make use of the following penalty function:

| (9.22) |

where , and where σ̂ii is an estimate of the ith diagonal element of the K precision matrices. (Here, we assume the K precision matrices have shared diagonal elements.) The estimate {σ̂ii} can be obtained in a number of ways, for instance by performing the graphical lasso on the samples from all K data sets together. Then this approach will effectively result in applying a generalized fused lasso penalty to the partial correlations for the K classes.
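The relationship between a precision matrix and partial correlations that underlies this appendix can be illustrated directly. The sketch below uses the standard identity that the partial correlation between features i and j equals minus the (i, j) precision entry divided by the square root of the product of the ith and jth diagonal precision entries; the function name `partial_correlations` is hypothetical.

```python
import numpy as np

def partial_correlations(theta):
    """Convert a precision matrix into a matrix of partial correlations."""
    d = np.sqrt(np.diag(theta))
    rho = -theta / np.outer(d, d)   # rho_ij = -theta_ij / sqrt(theta_ii * theta_jj)
    np.fill_diagonal(rho, 1.0)      # convention: unit diagonal
    return rho

theta = np.array([[2.0, -1.0], [-1.0, 2.0]])
rho = partial_correlations(theta)
```

Because the rescaling depends only on the diagonal entries, fusing rescaled off-diagonal entries across classes amounts to fusing partial correlations when the classes share (estimated) diagonal elements.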

Appendix 2: Proofs of Theorems 1 and 2

Preliminaries to Proofs of Theorems 1 and 2

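We begin with a few comments on subgradients. The subgradient of $|\theta^{(k)}_{ij}|$ with respect to $\theta^{(k)}_{ij}$ equals

$$\begin{cases} \operatorname{sign}\bigl(\theta^{(k)}_{ij}\bigr) & \text{if } \theta^{(k)}_{ij} \neq 0 \\ a & \text{if } \theta^{(k)}_{ij} = 0 \end{cases}$$

for some a ∈ [−1, 1]. The subgradient of $|\theta^{(k)}_{ij} - \theta^{(k')}_{ij}|$ with respect to $(\theta^{(k)}_{ij}, \theta^{(k')}_{ij})$ for k ≠ k′ equals (d, − d), where

$$d = \begin{cases} \operatorname{sign}\bigl(\theta^{(k)}_{ij} - \theta^{(k')}_{ij}\bigr) & \text{if } \theta^{(k)}_{ij} \neq \theta^{(k')}_{ij} \\ a & \text{if } \theta^{(k)}_{ij} = \theta^{(k')}_{ij} \end{cases}$$

for some a ∈ [−1, 1]. Finally, the subgradient of $\sqrt{\sum_{k=1}^{K}\bigl(\theta^{(k)}_{ij}\bigr)^2}$ with respect to $(\theta^{(1)}_{ij}, \ldots, \theta^{(K)}_{ij})$ is given by

$$\begin{cases} \Bigl(\theta^{(1)}_{ij}, \ldots, \theta^{(K)}_{ij}\Bigr) \Big/ \sqrt{\sum_{k=1}^{K}\bigl(\theta^{(k)}_{ij}\bigr)^2} & \text{if } \sum_{k=1}^{K}\bigl(\theta^{(k)}_{ij}\bigr)^2 > 0 \\ \bigl(\Upsilon_{1,ij}, \ldots, \Upsilon_{K,ij}\bigr) & \text{if } \theta^{(1)}_{ij} = \cdots = \theta^{(K)}_{ij} = 0 \end{cases}$$

for some ϒ1,ij, …, ϒK,ij such that $\sum_{k=1}^{K}\Upsilon_{k,ij}^2 \le 1$.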

To prove Theorem 1, we will use the following lemma.

Lemma 9.1

The following two sets of conditions are equivalent:

(A) |n1S1| ≤ λ1 + λ2, |n2S2| ≤ λ1 + λ2, and |n1S1 + n2S2| ≤ 2λ1.

(B) There exist Γ1, Γ2, ϒ ∈ [−1, 1] such that −n1S1 − λ1Γ1 − λ2ϒ = 0, and −n2S2 − λ1Γ2 + λ2ϒ = 0.

Proof

We will begin by proving that (B) implies (A), and will then prove that (A) implies (B).

Proof that (B)⇒(A)

First of all, −n1S1 − λ1Γ1 − λ2ϒ = 0 implies that |n1S1| ≤ λ1 + λ2, since Γ1, ϒ ∈ [−1, 1]. Similarly, −n2S2 − λ1Γ2 + λ2ϒ = 0 implies that |n2S2| ≤ λ1 + λ2. Finally, summing the two equations in (B) reveals that n1S1 + n2S2 = −λ1(Γ1 + Γ2), which implies that |n1S1 + n2S2| ≤ 2λ1.

Proof that (A)⇒(B)

Without loss of generality, assume that n1S1 ≥ n2S2. We split the proof into two cases.

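Case 1: n1S1 − n2S2 < 2λ2.

Let $\Gamma_1 = \Gamma_2 = -\dfrac{n_1S_1 + n_2S_2}{2\lambda_1}$, and $\Upsilon = -\dfrac{n_1S_1 - n_2S_2}{2\lambda_2}$.

First, note that by (A), we know that |n1S1 + n2S2| ≤ 2λ1. Therefore, Γ1, Γ2 ∈ [−1, 1]. Second, note that Case 1’s assumption that n1S1 − n2S2 < 2λ2, together with n1S1 ≥ n2S2, implies that ϒ ∈ [−1, 1]. Finally, we see by inspection that −n1S1 − λ1Γ1 − λ2ϒ = 0, and −n2S2 − λ1Γ2 + λ2ϒ = 0.

Case 2: n1S1 − n2S2 ≥ 2λ2.

Let $\Gamma_1 = \dfrac{\lambda_2 - n_1S_1}{\lambda_1}$, $\Gamma_2 = -\dfrac{\lambda_2 + n_2S_2}{\lambda_1}$, and ϒ = −1. Then, by inspection, −n1S1 − λ1Γ1 − λ2ϒ = 0, and −n2S2 − λ1Γ2 + λ2ϒ = 0.

It remains to show that Γ1, Γ2, ϒ ∈ [−1, 1]. Trivially, ϒ = −1 ∈ [−1, 1]. From our assumption that |n1S1| ≤ λ1 + λ2, we know that $\Gamma_1 = (\lambda_2 - n_1S_1)/\lambda_1 \ge -1$. Moreover, by the assumptions that n1S1 − n2S2 ≥ 2λ2 and |n1S1 + n2S2| ≤ 2λ1, we have that

$$\Gamma_1 = \frac{2\lambda_2 - (n_1S_1 - n_2S_2) - (n_1S_1 + n_2S_2)}{2\lambda_1} \le \frac{2\lambda_2 - 2\lambda_2 + 2\lambda_1}{2\lambda_1} = 1. \tag{9.23}$$

Therefore Γ1 ∈ [−1, 1].

By the assumption that |n2S2| ≤ λ1 + λ2, we know that $\Gamma_2 = -(\lambda_2 + n_2S_2)/\lambda_1 \le 1$. From the assumptions that n1S1 − n2S2 ≥ 2λ2 and |n1S1 + n2S2| ≤ 2λ1, we have that

$$\Gamma_2 = -\frac{2\lambda_2 + (n_1S_1 + n_2S_2) - (n_1S_1 - n_2S_2)}{2\lambda_1} \ge -\frac{2\lambda_2 + 2\lambda_1 - 2\lambda_2}{2\lambda_1} = -1. \tag{9.24}$$

Therefore Γ2 ∈ [−1, 1].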

Thus we conclude (A)⇒(B), and our proof of Lemma 9.1 is complete.
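The (A)⇒(B) direction of Lemma 9.1 is constructive, so it can be checked numerically. The sketch below builds one valid choice of Γ1, Γ2 and ϒ following the two-case argument, handling the case n1S1 < n2S2 by swapping roles; the function name `lemma91_witness` and the specific closed forms are assumptions chosen to satisfy the two equations in (B), not necessarily the exact expressions of the original proof.

```python
import numpy as np

def lemma91_witness(n1S1, n2S2, lam1, lam2):
    """Given scalars satisfying (A), return (Gamma1, Gamma2, Upsilon) satisfying (B)."""
    swap = n1S1 < n2S2
    a, b = (n2S2, n1S1) if swap else (n1S1, n2S2)   # ensure a >= b
    if a - b < 2 * lam2:                            # Case 1
        g1 = g2 = -(a + b) / (2 * lam1)
        u = -(a - b) / (2 * lam2)
    else:                                           # Case 2
        g1 = (lam2 - a) / lam1
        g2 = -(lam2 + b) / lam1
        u = -1.0
    if swap:                                        # undo the relabelling
        g1, g2, u = g2, g1, -u
    return g1, g2, u

# Randomized check over inputs that satisfy condition (A).
rng = np.random.default_rng(1)
checked = 0
for _ in range(2000):
    lam1, lam2 = rng.uniform(0.1, 2.0, size=2)
    x, y = rng.uniform(-3.0, 3.0, size=2)
    if max(abs(x), abs(y)) <= lam1 + lam2 and abs(x + y) <= 2 * lam1:
        g1, g2, u = lemma91_witness(x, y, lam1, lam2)
        assert all(-1 - 1e-9 <= v <= 1 + 1e-9 for v in (g1, g2, u))
        assert abs(-x - lam1 * g1 - lam2 * u) < 1e-9
        assert abs(-y - lam1 * g2 + lam2 * u) < 1e-9
        checked += 1
```

A check of this kind does not replace the proof, but it is a quick way to catch sign errors in the case analysis.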

We will make use of the following lemma in order to prove Theorem 2.

Lemma 9.2

The following two conditions are equivalent:

(A) There exist scalars a1, …, aK such that $\sum_{k=1}^{K} a_k^2 \le 1$ and nk|Sk| ≤ λ1 + λ2ak for all k = 1, …, K.

(B) There exist scalars Γ1, …, ΓK ∈ [−1, 1] and ϒ1, …, ϒK such that $\sum_{k=1}^{K} \Upsilon_k^2 \le 1$ and nkSk + λ1Γk + λ2ϒk = 0 for k = 1, …, K.

Proof

We will begin by proving that (B) implies (A), and will then show that (A) implies (B).

Proof that (B)⇒(A)

By (B), nk|Sk| = |λ1Γk + λ2 ϒk| ≤ λ1|Γk|+ λ2|ϒk| ≤ λ1 + λ2|ϒk |. Letting ak = |ϒk|, the result holds.

Proof that (A)⇒(B)

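Let Γk and ϒk take the following forms, for k = 1, …, K:

$$\Gamma_k = \begin{cases} -n_kS_k/\lambda_1 & \text{if } |n_kS_k| \le \lambda_1 \\ -\operatorname{sign}(S_k) & \text{otherwise} \end{cases} \tag{9.25}$$

$$\Upsilon_k = \begin{cases} 0 & \text{if } |n_kS_k| \le \lambda_1 \\ -\bigl(n_kS_k - \lambda_1\operatorname{sign}(S_k)\bigr)/\lambda_2 & \text{otherwise} \end{cases} \tag{9.26}$$

First of all, we note by inspection that Γk ∈ [−1, 1] and that nkSk + λ1Γk + λ2ϒk = 0 for k = 1, …, K. It remains to show that $\sum_{k=1}^{K}\Upsilon_k^2 \le 1$. Specifically, we will show that $|\Upsilon_k| \le |a_k|$ for k = 1, …, K, so that $\sum_k \Upsilon_k^2 \le \sum_k a_k^2 \le 1$. To see why this is the case, note that if −λ1 ≤ nkSk ≤ λ1 then $|\Upsilon_k| = 0 \le |a_k|$. And if nkSk > λ1 or nkSk < −λ1, then $|\Upsilon_k| = (n_k|S_k| - \lambda_1)/\lambda_2 \le a_k$ by (A).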
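The construction in the (A)⇒(B) direction of Lemma 9.2 can also be written out concretely. The sketch below assumes the soft-thresholding form Γk = −nkSk/λ1 and ϒk = 0 when |nkSk| ≤ λ1, and Γk = −sign(Sk), ϒk = −(nkSk − λ1 sign(Sk))/λ2 otherwise; the function name `lemma92_witness` and the toy inputs are hypothetical.

```python
import numpy as np

def lemma92_witness(nS, lam1, lam2):
    """Return (Gamma, Upsilon) with nS + lam1 * Gamma + lam2 * Upsilon = 0 elementwise."""
    nS = np.asarray(nS, dtype=float)
    inside = np.abs(nS) <= lam1
    gamma = np.where(inside, -nS / lam1, -np.sign(nS))
    upsilon = np.where(inside, 0.0, -(nS - lam1 * np.sign(nS)) / lam2)
    return gamma, upsilon

# Toy example with K = 3 classes, lam1 = 1 and lam2 = 0.5.
nS = np.array([0.5, 1.3, -1.3])
gamma, upsilon = lemma92_witness(nS, lam1=1.0, lam2=0.5)
residual = nS + 1.0 * gamma + 0.5 * upsilon
```

Here the sum of squares of `upsilon` is 0.72, so the constraint of condition (B) holds; increasing any |nkSk| far enough beyond λ1 would eventually violate it, which is exactly when (A) fails.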

Proof of Theorem 1

We first consider the claim for the case K = 2. By the Karush-Kuhn-Tucker (KKT; see e.g. Boyd & Vandenberghe 2004) conditions, a necessary and sufficient set of conditions for {Θ} to be the solution to the JGL problem is that

| (9.27) |

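where Γ1,ij is the subgradient of $|\theta^{(1)}_{ij}|$ with respect to $\theta^{(1)}_{ij}$, Γ2,ij is the subgradient of $|\theta^{(2)}_{ij}|$ with respect to $\theta^{(2)}_{ij}$, and ϒij is the subgradient of $|\theta^{(1)}_{ij} - \theta^{(2)}_{ij}|$ with respect to $\theta^{(1)}_{ij}$.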

Let C1 and C2 be a partition of the p variables into two nonoverlapping sets, with C1 ∩ C2 =∅, C1 ∪ C2 = {1, …, p}. Consider the matrices

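$$\Theta^{(1)} = \begin{pmatrix} \Theta^{(1)}_1 & 0 \\ 0 & \Theta^{(1)}_2 \end{pmatrix}, \qquad \Theta^{(2)} = \begin{pmatrix} \Theta^{(2)}_1 & 0 \\ 0 & \Theta^{(2)}_2 \end{pmatrix}, \tag{9.28}$$

where $\Theta^{(1)}_1$ and $\Theta^{(2)}_1$ solve the JGL problem on the features in C1, and $\Theta^{(1)}_2$ and $\Theta^{(2)}_2$ solve the JGL problem on the features in C2. By inspection of (9.27), Θ(1) and Θ(2) solve the entire JGL optimization problem if and only if for all i ∈ C1, j ∈ C2, there exist Γ1,ij, Γ2,ij, ϒij ∈ [−1, 1] such that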

| (9.29) |

Therefore, by Lemma 9.1, the proof of the claim for the case K = 2 is complete.
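In practice, a condition of this kind can be used to screen for block diagonal structure before any optimization is run. The sketch below applies condition (A) of Lemma 9.1 entrywise to two empirical covariance matrices and takes connected components of the graph of violating pairs. The function name `fgl_blocks_two_class` and the toy matrices are hypothetical, and the scaling by n1 and n2 simply follows the lemma as stated.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.sparse.csgraph import connected_components

def fgl_blocks_two_class(S1, S2, n1, n2, lam1, lam2):
    """Partition features into blocks that the two-class FGL condition separates."""
    a, b = n1 * S1, n2 * S2
    ok = ((np.abs(a) <= lam1 + lam2)
          & (np.abs(b) <= lam1 + lam2)
          & (np.abs(a + b) <= 2 * lam1))
    adjacency = ~ok                      # pairs that must stay in the same block
    np.fill_diagonal(adjacency, False)
    n_blocks, labels = connected_components(csr_matrix(adjacency), directed=False)
    return n_blocks, labels

# Toy example: features {0, 1} and {2, 3} are strongly correlated within pairs only.
S = np.full((4, 4), 0.01)
S[0, 1] = S[1, 0] = 0.9
S[2, 3] = S[3, 2] = 0.8
np.fill_diagonal(S, 1.0)
n_blocks, labels = fgl_blocks_two_class(S, S, n1=1, n2=1, lam1=0.2, lam2=0.1)
```

Each resulting block can then be solved as a separate, much smaller problem, and features that form singleton blocks need no iterative optimization at all.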

The derivation of the sufficient condition for the case K > 2 is simple and we omit it here.

Proof of Theorem 2

We note that Theorem 2’s condition (4.19) is equivalent to the following:

| (9.30) |

where aij,1, …, aij,K are scalars that satisfy $\sum_{k=1}^{K} a_{ij,k}^2 \le 1$. We will prove that (9.30) is necessary and sufficient for the variables in C1 to be completely disconnected from those in C2 in each of the resulting network estimates.

By the KKT conditions, a necessary and sufficient set of conditions for {Θ} to be the solution to the JGL problem is that

| (9.31) |

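for k = 1, …, K. In (9.31), Γk,ij is the subgradient of $|\theta^{(k)}_{ij}|$ with respect to $\theta^{(k)}_{ij}$, and (ϒ1,ij, …, ϒK,ij) is the subgradient of $\sqrt{\sum_{k=1}^{K}\bigl(\theta^{(k)}_{ij}\bigr)^2}$ with respect to $(\theta^{(1)}_{ij}, \ldots, \theta^{(K)}_{ij})$.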

Let C1 and C2 be a partition of the p variables into two nonoverlapping sets, with C1 ∩ C2 = ∅, C1 ∪ C2 = {1, …, p}. Consider the matrices of the form

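$$\Theta^{(k)} = \begin{pmatrix} \Theta^{(k)}_1 & 0 \\ 0 & \Theta^{(k)}_2 \end{pmatrix} \tag{9.32}$$

for k = 1, …, K, where $\Theta^{(1)}_1, \ldots, \Theta^{(K)}_1$ solve the JGL problem on the features in C1, and $\Theta^{(1)}_2, \ldots, \Theta^{(K)}_2$ solve the JGL problem on the features in C2. By inspection of (9.31), Θ(1), …, Θ(K) solve the entire JGL optimization problem if and only if for all i ∈ C1, j ∈ C2, there exist Γ1,ij, …, ΓK,ij ∈ [−1, 1] and ϒ1,ij, …, ϒK,ij satisfying $\sum_{k=1}^{K}\Upsilon_{k,ij}^2 \le 1$ such that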

| (9.33) |

Therefore, by Lemma 9.2, the proof is complete.
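The analogous screening step for GGL can be sketched in the same way. Condition (A) of Lemma 9.2 asks for scalars ak with squared sum at most one and nk|Sk| ≤ λ1 + λ2ak; the smallest workable |ak| is the positive part of (nk|Sk| − λ1)/λ2, so the sketch assumes the condition reduces to Σk (nk|Sk| − λ1)₊² ≤ λ2². The function name `ggl_blocks` and the toy data are hypothetical.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.sparse.csgraph import connected_components

def ggl_blocks(S_list, n_list, lam1, lam2):
    """Partition features into blocks separated by the group-lasso screening rule."""
    # Sum over classes of squared soft-thresholded |n_k * S_k| entries.
    excess = sum(np.maximum(n * np.abs(S) - lam1, 0.0) ** 2
                 for S, n in zip(S_list, n_list))
    adjacency = excess > lam2 ** 2       # pairs that violate the condition
    np.fill_diagonal(adjacency, False)
    n_blocks, labels = connected_components(csr_matrix(adjacency), directed=False)
    return n_blocks, labels

# Toy example with K = 3 identical classes and two well-separated feature pairs.
S = np.full((4, 4), 0.01)
S[0, 1] = S[1, 0] = 0.9
S[2, 3] = S[3, 2] = 0.8
np.fill_diagonal(S, 1.0)
n_blocks, labels = ggl_blocks([S, S, S], [1, 1, 1], lam1=0.2, lam2=0.1)
```

Unlike the fused lasso case, this rule is stated for any number of classes K, so a single thresholding pass suffices.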

Appendix 3: Additional simulations for two-class datasets

We first present results for a simulation study similar to the one in Section 7.1, but with only two classes. Taking an approach similar to the one described in Section 7.1, we defined two networks with p = 500 features belonging to ten equally sized unconnected subnetworks, each with a power law degree distribution. Of the ten subnetworks, eight have the same structure and edge values in both classes, and two are present in only one class. Class 1’s network has 490 edges, 94 of which are not present in class 2. We generated covariance matrices as described in Section 7.1.1. Again, we simulated 100 datasets with n = 150 observations per class. The results shown in Figure 4 are similar to the results in Section 7.1.2.

We also simulated data with an entirely different network structure. Instead of the block-diagonal network structure used in the previous simulations, in this simulation we generated data drawn from a single large power law network. We defined class 1’s network to be a single power law network with only one component and generated Σ1 as described in Section 7.1.1. We then identified a branch in this network connected to the rest of the network through only one edge. We then let $\Sigma_2^{-1}$ equal $\Sigma_1^{-1}$, except for the elements corresponding to the edges in the selected branch, which were set to be zero instead. Finally, we defined Σ2 by inverting $\Sigma_2^{-1}$, and generated the two classes’ data using Σ1 and Σ2. This yielded distributions based on two power law networks that were identical except for a missing branch in class 2. Class 1’s network has 499 edges, 104 of which are not present in class 2. We simulated 100 datasets with n = 150 observations per class. Figure 5 shows the results, averaged over the 100 data sets. Again, FGL and GGL were superior to or competitive with the other methods.

Appendix 4: Network structure used in simulations

The network structure for the simulations described in Section 7.1 is displayed in Figure 6. Solid edges are shared between all three classes’ networks, dashed edges are present only in classes 1 and 2, and dotted edges are present only in class 1.

Contributor Information

Patrick Danaher, Department of Biostatistics, University of Washington, USA.

Pei Wang, Public Health Sciences Division, Fred Hutchinson Cancer Research Center, USA.

Daniela M. Witten, Department of Biostatistics, University of Washington, USA

References

- Adjei A. K-ras as a target for lung cancer therapy. Journal of Thoracic Oncology. 2008;3(6):S160–S163. doi: 10.1097/JTO.0b013e318174dbf9.

- Ahmed A, Xing E. Tesla: Recovering time-varying networks of dependencies in social and biological studies. Proc Natl Acad Sci. 2009;29:11878–11883. doi: 10.1073/pnas.0901910106.

- Barrett T, Suzek T, Troup D, Wilhite S, Ngau W, Ledoux P, Rudnev D, Lash A, Fujibuchi W, Edgar R. NCBI GEO: mining millions of expression profiles–database and tools. Nucleic Acids Research. 2005;33:D562–D566. doi: 10.1093/nar/gki022.

- Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends in Machine Learning. 2010;3(1):1–122.

- Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004.

- Chen H, Sharp B. Content-rich biological network constructed by mining pubmed abstracts. BMC Bioinformatics. 2004;5:147. doi: 10.1186/1471-2105-5-147.

- Friedman J, Hastie T, Hoefling H, Tibshirani R. Pathwise coordinate optimization. Annals of Applied Statistics. 2007;1:302–332.

- Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2007;9:432–441. doi: 10.1093/biostatistics/kxm045.

- Friedman J, Hastie T, Tibshirani R. A note on the group lasso and a sparse group lasso. Technical report. Department of Statistics, Stanford University; 2010.

- Guo J, Levina E, Michailidis G, Zhu J. Joint estimation of multiple graphical models. Biometrika. 2011;98(1):1–15. doi: 10.1093/biomet/asq060.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning; Data Mining, Inference and Prediction. Springer Verlag; New York: 2009.

- Hocking L, Joulin A, Bach F. Clusterpath: An algorithm for clustering using convex fusion penalties. Proceedings of the 28th International Conference on Machine Learning. 2011.

- Hoefling H. Personal communication. 2010a.

- Hoefling H. A path algorithm for the fused lasso signal approximator. Journal of Computational and Graphical Statistics. 2010b;19(4):984–1006.

- Kolar M, Song L, Ahmed A, Xing E. Estimating time-varying networks. Annals of Applied Statistics. 2010;4(1):94–123.

- Kolar M, Xing E. Sparsistent estimation of time-varying discrete markov random fields. Manuscript. 2009. arXiv:0907.2337.

- Lauritzen S. Graphical Models. Oxford Science Publications; 1996.

- Li S, Hsu L, Peng J, Wang P. Bootstrap inference for network construction. Annals of Applied Statistics. 2013. doi: 10.1214/12-AOAS589. Forthcoming.

- Mazumder R, Hastie T. Exact covariance-thresholding into connected components for large-scale graphical lasso. The Journal of Machine Learning Research. 2012;13:781–794.

- Meinshausen N, Bühlmann P. Stability selection (with discussion). Journal of the Royal Statistical Society, Series B. 2010;72:417–473.

- Meinshausen N. Relaxed lasso. Computational Statistics and Data Analysis. 2007;52:374–393.

- Meinshausen N, Bühlmann P. High dimensional graphs and variable selection with the lasso. Annals of Statistics. 2006;34:1436–1462.

- Mohan K, Chung M, Han S, Witten D, Lee S, Fazel M. Structured learning of Gaussian graphical models. Advances in Neural Information Processing Systems 25. 2012; pp. 629–637.

- Peng J, Wang P, Zhou N, Zhu J. Partial correlation estimation by joint sparse regression model. Journal of the American Statistical Association. 2009;104(486):735–746. doi: 10.1198/jasa.2009.0126.

- Rothman A, Levina E, Zhu J. Sparse permutation invariant covariance estimation. Electronic Journal of Statistics. 2008;2:494–515.

- Simon N, Tibshirani R. Discriminant analysis with adaptively pooled covariance. Manuscript. 2011. arXiv:1111.1687.

- Song L, Kolar M, Xing E. Keller: Estimating time-evolving interactions between genes. Bioinformatics. 2009a;25(12):i128–i136. doi: 10.1093/bioinformatics/btp192.

- Song L, Kolar M, Xing E. Time-varying dynamic Bayesian networks. Proceeding of the 23rd Neural Information Processing Systems. 2009b.

- Spira A, Beane J, Shah V, Steiling K, Liu G, Schembri F, Gilman S, Dumas Y, Calner P, Sebastiani P, Sridhar S, Beamis J, Lamb C, Anderson T, Gerry N, Keane J, Lenburg M, Brody J. Airway epithelial gene expression in the diagnostic evaluation of smokers with suspect lung cancer. Nature Medicine. 2007;13(3):361–366. doi: 10.1038/nm1556.

- Tarjan R. Depth-first search and linear graph algorithms. SIAM Journal on Computing. 1972;1(2):146–160.

- Tibshirani R. Regression shrinkage and selection via the lasso. J Royal Statist Soc B. 1996;58:267–288.

- Tibshirani R. Personal communication. 2012.

- Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. J Royal Statist Soc B. 2005;67:91–108.

- Witten D, Friedman J, Simon N. New insights and faster computations for the graphical lasso. Journal of Computational and Graphical Statistics. 2011;20(4):892–900.

- Witten D, Tibshirani R. Covariance-regularized regression and classification for high-dimensional problems. J Royal Stat Soc B. 2009;71(3):615–636. doi: 10.1111/j.1467-9868.2009.00699.x. PMCID: PMC2806603.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society, Series B. 2007a;68:49–67.

- Yuan M, Lin Y. Model selection and estimation in the Gaussian graphical model. Biometrika. 2007b;94(10):19–35.

- Zhou S, Lafferty J, Wasserman L. Time varying undirected graphs. The 21st Annual Conference on Learning Theory (COLT 2008); Helsinki, Finland. 2008.
