Abstract

The University of Washington (UW) Institute for Translational Health Sciences (ITHS), funded by a Clinical and Translational Sciences Award program, has supplemented its initial Kellogg Logic Model–based program evaluation with the eight judgment-based evaluative elements of the World Health Organization’s (WHO) Health Services Assessment Model. This article describes the relationship between the two models, the rationale for the decision to supplement the evaluation with WHO evaluative elements, the value-added results of the WHO evaluative elements, and plans for further developing the WHO assessments.

Keywords: translational science, logic models, health research, CTSA program evaluation, WHO evaluative elements, WHO Health Services Assessment Model

The University of Washington’s Institute for Translational Health Sciences (ITHS) was established in 2007 as a Clinical and Translational Science Award (CTSA) site. The mission of the ITHS (https://www.iths.org/) was to enhance translational research capabilities at the University of Washington (UW), the Fred Hutchinson Cancer Research Center, Seattle Children’s Hospital, Group Health Research Institute, and the Benaroya Research Institute at Virginia Mason Hospital. During the initial funding period, a regional ITHS infrastructure was established to support translational research. This catalyzed collaborations among the partners and provided needed translational services and resources, including biostatistics, bioinformatics, regulatory and ethics, education and mentoring, scientific analytic resources, pilot grants, and support to preclinical, clinical, and community-based areas.

Evaluating the activities and resources of CTSAs, such as clinical trials and research databases, multidisciplinary training programs, and regional community outreach efforts, is a key function across the national awardees (National Institutes of Health, 2005). Diverse methods have been applied to evaluations of these complex new organizations (Anderson, Silet, & Fleming, 2012; Frechtling, Raue, Michie, Miyaoka, & Spiegelman, 2012; Iribarne, Easterwood, Russo, & Wang, 2011; Kane, Rubio, & Trochim, 2013; Lee et al., 2012; Nagarajan, Kalinka, & Hogan, 2012; Nagarajan, Lowery, & Hogan, 2011).

Results of initial ITHS evaluations suggest that the ITHS has strengthened research partnerships between its regional and academic institutions, and its communities and tribal groups located throughout the Pacific Northwest states of Washington, Wyoming, Alaska, Montana, and Idaho (the 5-state WWAMI Region). For over 5 years, these entities have collaborated to develop more effective methods for enhancing translational research to improve the health of individuals and communities by evaluating electronic data capture for regional clinical trials (Franklin, Guidry, & Brinkley, 2011), implementing a clinical research data warehouse framework with a national consortium of research organizations (Anderson et al., 2012; Murphy et al., 2010), and coordinating research policy development among stakeholders (Melvin, Edwards, Malone, Hassell, & Wilfond, 2013).

Logic models provide guided evaluation frameworks to identify and track relationships between parts of a program or a system and its functions and outcomes (Frechtling, 2007). The Kellogg Logic Model (KLM) served as the initial roadmap for the ITHS to explore relationships between inputs, outputs, outcomes, and impacts of ITHS resources and services. The KLM employs a systems approach wherein resources and needs generate activities and outputs, which in turn produce measurable outcomes and impacts. It was designed to help create shared understandings of program goals and methods, to focus evaluations on them, and then to relate them to expected outcomes (Kellogg Foundation, 2004; Renger & Hurley, 2006).

The KLM helped the ITHS develop metrics similar to those recently amplified by Rubio and associates (Lee et al., 2012; Rubio et al., 2011). Such trackable metrics are needed to evaluate, monitor, and improve translational efforts within the areas of education, biostatistics, epidemiology, and research. Examples of these metrics include number of investigators, type and levels of interactions and engagements, number of proposals, number/type of grants submitted, and number of publications, including the quality of journals in which they appear. Because service was a primary focus of the ITHS, the first 2 years of evaluations established service baselines and assessed researchers’ satisfaction with their initial interactions with the ITHS.

Although the KLM assessments are informative, findings from logic models and other evaluation models are sometimes met with resistance (Carter, 1971; Kaplan & Garrett, 2005). After the first several ITHS years, KLM-type metrics alone, such as activities, levels of engagement and publications, were not widely perceived within the organization as providing the necessary depth of understanding about how well it was nurturing and supporting multidisciplinary team science and translational research. ITHS leadership concluded that results lacked needed breadth; KLM metrics alone were not adequately capturing operational aspects of evolving services and resources. In short, leadership wanted more illumination of the “value added” by the ITHS to translational environments within its partner institutions and to the development of translational capabilities of investigators. In particular, ITHS leadership sought more actionable information regarding the relevance and adequacy of its burgeoning resources and services.

The ITHS identified the World Health Organization’s (WHO) Health Services Program Evaluation Model as a promising source of additional data on the ITHS and its activities. Subsequently, the eight WHO evaluative elements provided the framework for a more value-based approach. This article’s purpose is to report the results of supplementing the KLM with the WHO evaluative elements, as well as reactions to that supplementation from within the ITHS. The article is a case study of this new hybrid KLM/WHO approach to CTSA evaluation. It aligns with the CTSA’s National Evaluation Final Report (Frechtling et al., 2012), which strongly recommends that evaluations better assess the extent to which CTSAs are adding value to translational research. The approach remains a work in progress.

The WHO Model in the Context of the ITHS

Program evaluation in the context of multisite, multidisciplinary organizations is complex. One feature that distinguishes CTSA recipient organizations from other multidisciplinary organizations is their focus on translational health research and support processes. Pertinent to the evaluation of health systems, Drew and associates (Drew, Duivenboden, & Bonnefoy, 2000) described the WHO model as an essential component in “developing, implementing and improving policies designed to protect health and the environment and enhance the quality of life.” The unique evaluative elements of the WHO model complemented the KLM approach and motivated ITHS leadership to move ahead with exploring the utility of the WHO model’s evaluative elements as a framework to generate a more value-based supplementation.

The Combined KLM/WHO Model

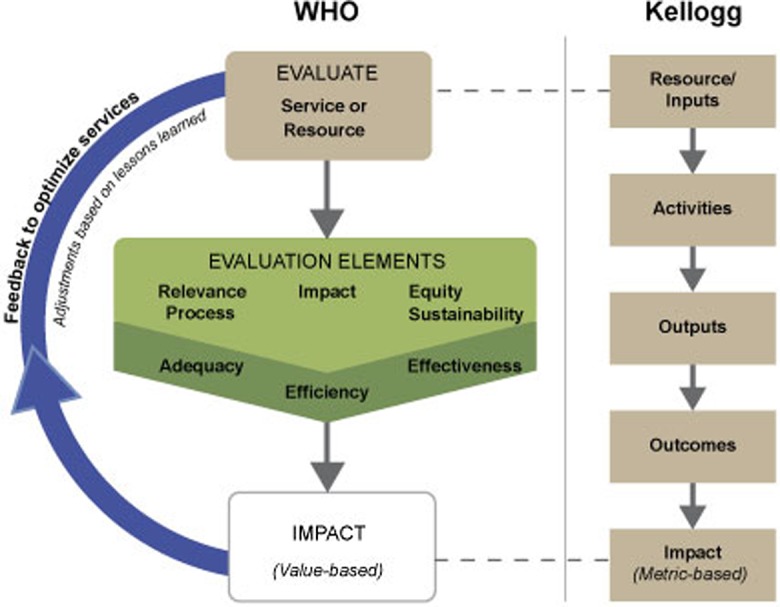

Figure 1 illustrates how the KLM and WHO models relate to one another. The Kellogg model proceeds from resources and inputs, to activities, outputs, outcomes, and impacts. It is predominately metric based. The WHO approach adds focus on eight evaluative focal points: relevance, adequacy, efficiency, effectiveness, process, impact, equity, and sustainability (WHO, 1981). Addition of the WHO model provides a more value-based approach. The model interested ITHS leadership because it offered potential for more in-depth data on users’ judgments about the eight important attributes of its activities, resources, and services.

Figure 1. The combined Kellogg/World Health Organization Model.

Figure 1 also illustrates how the combined KLM/WHO approach builds on the unique and complementary strengths of each model to produce broader and deeper views of ITHS resources and services. The intent was to develop new perspectives on KLM inputs, activities, outputs, outcomes, and impacts. With the combined KLM/WHO approach, the outputs of an activity would remain of paramount importance. The value judgments elicited by the additional WHO elements would indicate how relevant resources and services are and, for example, the extent to which they are efficient, adequate, effective, and sustainable. Such metrics were viewed as crucial for the overall ITHS evaluation.

Method

Evolution of ITHS Assessment

In its first two operational years (2008 and 2009), the ITHS relied on results from membership surveys (MSs) and high-impact stories to characterize major ITHS interactions. The MS was designed to assess member and nonmember users’ perceptions of (1) how the macro-level infrastructure was working; (2) how the micro-level individual and team collaborations were viewed; (3) satisfaction with ITHS efforts to support various core services (using a 6-point Likert-type satisfaction scale); and (4) satisfaction with each core.

Initial Experience With the Supplemental WHO Model

In 2010, the member survey satisfaction scales were modified to incorporate an ITHS impact scale that ranged from “no impact” to “major impact.” At the same time, in order to provide more robust information about translational progress, the MS was modified to include a focus on the eight WHO evaluative elements. Items were written for each WHO element to elicit value judgments from service and resource users about the extent to which the ITHS added value to their research. The eight WHO-based questions were:

Relevance. Are ITHS services relevant to your translational research and development needs?

Efficiency. Has the ITHS made your research processes more efficient?

Adequacy. Has the ITHS enabled you to design research that is more likely to be capable of positively impacting the health of the public?

Effectiveness. Has the ITHS made you a more effective, mission-driven researcher?

Process. Has the ITHS helped improve your research endeavors—the way research is conducted?

Impact. Is ITHS involvement helping you increase the impact of your work on population health within communities?

Equity. As a translational researcher, do you feel your results are more applicable to multiple cultural groups?

Sustainability. Is the ITHS helping to make your work sustainable beyond the terms of your current support?

Since 2010, the aforementioned WHO-based questions have been administered annually in a hybrid KLM/WHO MS of ITHS members and other service/resource users. Respondents rate the extent to which their experiences with the ITHS have impacted their work, in WHO element terms, using the following 4-point scale: 1 = Yes, absolutely; 2 = Yes, to some degree; 3 = Not yet, but I see potential; and 4 = No and I don’t see doing so.
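As an illustration of how responses on this 4-point scale might be tabulated for a single WHO element, the following is a minimal Python sketch. The function name `summarize_element` and the example responses are hypothetical and are not drawn from the ITHS survey data; the article does not describe the software used for its analyses.

```python
from collections import Counter

# Scale labels as given in the survey description above.
SCALE = {
    1: "Yes, absolutely",
    2: "Yes, to some degree",
    3: "Not yet, but I see potential",
    4: "No and I don't see doing so",
}


def summarize_element(ratings):
    """Return the percentage of respondents at each scale point (1-4).

    Blank or out-of-range codes are dropped before percentages are computed.
    """
    valid = [r for r in ratings if r in SCALE]
    if not valid:
        return {point: 0.0 for point in SCALE}
    counts = Counter(valid)
    return {point: 100.0 * counts[point] / len(valid) for point in SCALE}


# Hypothetical responses for one element (illustration only, not ITHS data).
example_relevance = [1, 2, 2, 3, 1, 4, 2, 3, 2, 1]
summary = summarize_element(example_relevance)

for point, pct in summary.items():
    print(f"{point} = {SCALE[point]}: {pct:.0f}%")
```

Running the same summary for each of the eight elements, and for each administration year, would yield the kind of per-element percentage distributions that can be compared across years.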

Results

In 2008 and 2009 (pre-WHO model), ITHS members and users reported relatively high levels of satisfaction with ITHS functions (all functions were ranked above average on satisfaction and 12 of 14 increased over time). These results informed ITHS leaders about the relative satisfaction rankings of its service areas. However, over the 2-year period, statistically significant improvements (p < .05) were noted only for Institutional Review Board and Regulatory Support.

Although the results did shed light on levels of satisfaction with ITHS services, they did not provide sufficient understandings of either their strengths and weaknesses or of the value they added to translational research. Results did not provide sufficient direction about which aspects of core offerings needed attention nor did they provide enough insight into how services and resources could be improved. The problem was that it was not possible to ascertain whether the KLM-assessed ITHS activities, outputs, and outcomes, for example, were the right ones, and if so whether they needed to be more relevant, adequate, or sustainable.

A second pre-WHO analytic perspective was obtained by looking at satisfaction trends between 2008 and 2010. Linear regressions demonstrated significant upward trends (p < .05) on offerings related to preclinical research, community affiliations, and cross-institutional collaborations. Of the 14 ITHS service areas, 12 showed monotonic improvement in satisfaction/impact. The result of a sign test was significant (p < .001) for 12 of 14 matched pairs showing positive results. Even with these results, however, it was difficult to ascertain the extent to which the ITHS was contributing (adding value) to researchers’ translational work. Were ITHS offerings relevant enough? Were they adequate for impacting population health? Was the ITHS efficiently and effectively impacting translational research?
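For readers unfamiliar with the sign test mentioned above, the following Python sketch shows one way an exact sign test can be computed from counts of positive and negative matched pairs. It is an illustration under stated assumptions, not a reconstruction of the authors' analysis: the function names are hypothetical, and the article does not state whether the test was one- or two-sided or how the two service areas without improvement were handled (counted as negative pairs, or dropped as ties).

```python
from math import comb


def upper_tail(n, k):
    """P(X >= k) for X ~ Binomial(n, 0.5)."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n


def sign_test_p(n_positive, n_negative, two_sided=True):
    """Exact sign test p-value under H0: positive and negative changes are equally likely.

    Tied pairs (no change) must be excluded by the caller before counting.
    """
    n = n_positive + n_negative
    if n == 0:
        raise ValueError("at least one non-tied pair is required")
    if two_sided:
        # Double the tail of the more frequent sign, capped at 1.
        return min(1.0, 2 * upper_tail(n, max(n_positive, n_negative)))
    # One-sided test for an excess of positive changes.
    return upper_tail(n, n_positive)


# Assumption A: the two non-improving areas count as negative pairs.
p_with_negatives = sign_test_p(12, 2)

# Assumption B: the two non-improving areas are ties and are dropped.
p_ties_dropped = sign_test_p(12, 0)

print(f"12 positive, 2 negative: p = {p_with_negatives:.4f}")
print(f"12 positive, 0 negative: p = {p_ties_dropped:.4f}")
```

Under these assumptions the two-sided p-value is roughly .013 when the two remaining pairs are counted as negative and roughly .0005 when they are dropped as ties, which shows how much the result depends on the treatment of ties.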

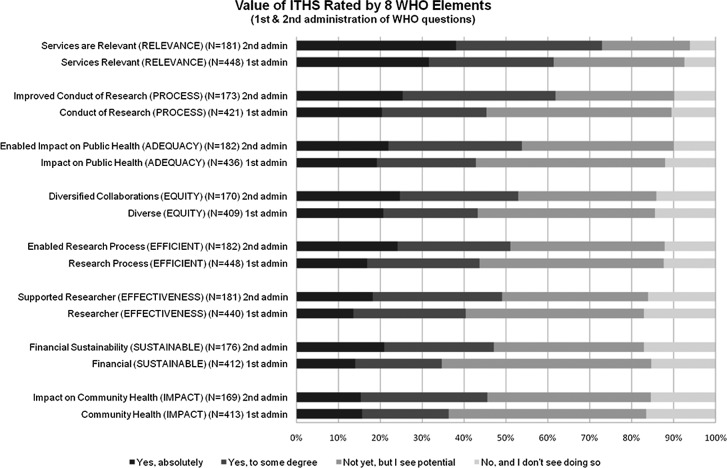

The WHO items were first administered in 2010 and 2011. Figure 2 indicates that during this period ratings of the ITHS overall on the eight WHO elements trended upward. In the second administration, a smaller percentage of respondents indicated that they saw only potential for the WHO qualities to be met, which represents an improvement. Similar charts were generated for each ITHS core area.

Figure 2. Members’ and users’ service ratings of the Institute for Translational Health Sciences overall in 2010 (first administration) versus 2011 (second administration), based on the eight World Health Organization evaluative elements.

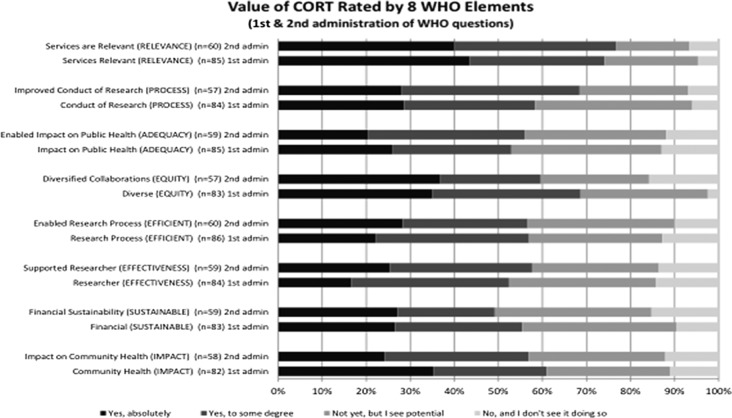

Figure 3 shows WHO element ratings for one ITHS core, namely, Community Outreach and Research Translation (CORT). The CORT ratings were high. More than 80% of CORT users reported either positive views of or saw potential value for CORT services. The WHO elements of relevance and research process were rated the highest. Even the lower rated WHO elements, process and equity, were viewed as having potential by 24% and 25%, respectively. In the second WHO administration, all elements declined but remained high; equity showed the largest decrease.

Figure 3. Members’ and users’ service ratings of Community Outreach and Research Translation (CORT) overall in 2010 (first administration) versus 2011 (second administration), based on the eight World Health Organization evaluative elements.

Review of Leadership Assessment of the KLM/WHO Approach

Beyond leadership buy-in, it was essential to understand the extent to which ITHS leaders adequately understood the WHO framework and the extent to which they valued the new information. In 2012, after 2 years of using the hybrid model, an internal review was conducted. The purpose was to assess ITHS leaders’ (1) perceptions of the utility of the combined KLM/WHO model; (2) views as to whether the WHO elements had been sufficiently integrated into evaluations of core-specific work; and (3) overall receptivity to the WHO supplementation.

Of the 34 ITHS leaders, 16 (47%) participated in this retrospective WHO review (13 core leaders and 3 critical staff). Thirty-seven percent of responding leaders either were neutral about the WHO approach or indicated they did not fully understand its elements. Yet 71% indicated the WHO elements had been valuable for informing them about their core; 86% indicated that the combined approach resulted in a good overall assessment, and 83% rated it as an improvement over the KLM alone.

Discussion

The ITHS seems to be convincing large percentages of users that its services are supportive and relevant to the conduct of translational research. Prior to the 2010 implementation of the combined KLM/WHO approach, the ITHS was receiving encouraging evaluations from its members and users with respect to their satisfaction with evolving services and resources. However, there remained a dearth of actionable information for providing developmental guidance to ITHS leadership. That is, knowledge of the KLM’s activities, outputs, and outcomes alone did not indicate whether services and resources were relevant, adequate, efficient, and/or effective. Results were not providing enough direction about which aspects of core offerings needed attention and what needed to be done. Assessments of satisfaction were simply not sufficiently informative.

Our results suggest that the WHO elements were viewed as valuable for assessing cores’ services overall. However, only half of the leadership indicated that the existing eight WHO evaluation questions did an adequate job of assessing functionality of their core. This may have been because only 63% rated their understanding of the WHO elements as “full” or “fair” (the scale ranged from 1 = fully understand, 3 = neutral to 5 = do not understand at all). It may also have been because the WHO questions had not yet focused on specific ITHS resources and services.

In the 2012 internal WHO review, the majority of responding leaders indicated that the WHO supplementation provided more illuminating views of the ITHS overall. Results of the WHO-based assessments were viewed as more informative with respect to cores’ specific resources and services, but they were only slightly more actionable. Some ITHS leaders pointed to areas needing improvement. These were (1) improve common understandings of how the WHO framework serves the ITHS and its functional groups; (2) develop better definitions of and questions related to the WHO elements; and (3) promote integration of the WHO elements into individual core evaluations.

The WHO supplementation that began in 2010 has helped some, but the consensus is that impacts to date have been somewhat limited because the original eight WHO questions were too general; they provided only broad evaluative strokes. For that reason the ITHS has begun developing additional, hopefully more actionable questions related to each WHO element. The following new questions should aid in the evaluations of specific resources and services:

Relevance: How directly are current CTSA resources and services focused on the translational needs of researchers? What modifications and/or actions would make CTSA resources and services more relevant?

Adequacy: To what extent are CTSA services and resources meeting known translational needs? How are CTSA services improving the process of biomedical research? Which CTSA offerings merit additional or fewer resources?

Efficiency: What are the relationships between results obtained and the amounts of effort and resources expended? How are CTSA services and resources evaluated for efficiency? What would make them more efficient?

Effectiveness: How is the CTSA facilitating movement of projects from discovery to application? How are service and resource impacts assessed for effectiveness at attaining predetermined translational goals and needs? What would make the services and resources more effective?

Equity: To what extent do researchers and stakeholders have comparable opportunities to access CTSA resources and services? To what extent do underserved users merit priority and/or more economical access to CTSA resources and services and, if they do, are they getting it? How could CTSA offerings be made more equitably accessible?

Process: How is the CTSA improving the process of translational research? How directly are inputs and activities related to intended outputs? Are important daily activities tracked? Are tracked activities consistent with preplanned protocols and processes? Are tracked activities relevant to translational goals?

Impact: How are CTSA education and training improving the next generation of translational researchers? How are CTSA resources and services contributing to desired translational outcomes and impacts? How do resources and services directly or indirectly improve the health of people in the region?

Sustainability: To what extent are services and resources sustainable in terms of financing, personnel, and political will? Are translational researchers sufficiently supported to become more financially sustainable in their work?

Leaders will be encouraged by the evaluation team to incorporate these questions into upcoming evaluations of each major ITHS service and resource. We foresee future conversations based on these questions that will encourage reflection on how to optimize services and resources to maximize their translational value to individuals, institutions, organizations, and to the health of the public.

Conclusion

This case study represents an effort to better assess the value added by the ITHS through the use of the eight WHO health systems evaluation elements. This work builds upon the CTSA’s National Evaluation Final Report (Frechtling et al., 2012) recommendation that calls for increased efforts to assess the value added to translational research by CTSAs.

More work is needed for the combined KLM/WHO framework to reach its full potential. The approach is beginning to sharpen leaders’ focus on some of the most important attributes of services and resources. Most importantly, the additional WHO focus is beginning to provide improved perspectives on relevance, adequacy, efficiency, effectiveness, process, impact, equity, and sustainability, all critical attributes of any service and/or resource in which CTSAs invest.

This work is enabling ITHS leadership to think more clearly about its resources and services. The goal is to determine which ITHS offerings are most relevant, where service and resource improvements are needed, and how to optimally allocate ITHS resources for maximal translational impact. With this in mind, evaluations of specific services and resources are being reoriented to focus more on the WHO evaluative elements.

The ITHS will continue to develop this combined KLM/WHO approach to optimally evaluate translational research capabilities within its participating institutions and throughout the five-state WWAMI Region. The ITHS leadership is committed to more fully incorporating WHO evaluative elements into assessments of organizational operations and activities. By supplementing the KLM-focused evaluation with the WHO elements, the ITHS has repositioned itself to conduct formative and summative assessments that are more credible and convincing to leaders and stakeholders alike.

Acknowledgments

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Footnotes

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health Grant No. UL1TR000423 awarded to Mary L. Disis.

References

- Anderson N., Abend A., Mandel A., Geraghty E., Gabriel D., Wynden R.…Tarczy-Hornoch P. (2012). Implementation of a deidentified federated data network for population-based cohort discovery. Journal of the American Medical Informatics Association, 19, e60–67

- Anderson L., Silet K., Fleming M. (2012). Evaluating and giving feedback to mentors: New evidence-based approaches. Clinical and Translational Science, 5, 71–77 doi:10.1111/j.1752-8062.2011.00361.x

- Carter R. K. (1971). Clients' resistance to negative findings and the latent conservative function of evaluation studies. The American Sociologist, 6, 118–124

- Drew C. H., Duivenboden J. V., Bonnefoy X. (2000). Environmental health services in Europe No. 5: Guidelines for evaluation of environmental health services (European Series, No. 90, 2000) Retrieved from http://www.euro.who.int/__data/assets/pdf_file/0003/98292/E71502.pdf

- Franklin J. D., Guidry A., Brinkley J. F. (2011). A partnership approach for electronic data capture in small-scale clinical trials. Journal of Biomedical Informatics, 44, S103–108

- Frechtling J. A. (2007). Logic modeling methods in program evaluation. San Francisco, CA: Wiley, Jossey-Bass

- Frechtling J., Raue K., Michie J., Miyaoka A., Spiegelman M. (2012). The CTSA national evaluation final report. Retrieved from https://www.ctsacentral.org/sites/default/files/files/CTSANationalEval_FinalReport_20120416.pdf

- Iribarne A., Easterwood M. R., Russo M. J., Wang Y. C. (2011). Integrating economic evaluation methods into clinical and translational science award consortium comparative effectiveness educational goals. Academic Medicine: Journal of the Association of American Medical Colleges, 86, 701–705

- Kane C., Rubio D., Trochim W. (2013). Evaluating Translational Research. In Alving B., Dia K., Chan S. H. H. (Eds.), Translational medicine – What, why and how: An international perspective (pp. 110–119). Basel, Switzerland: Karger

- Kaplan S. A., Garrett K. E. (2005). The use of logic models by community-based initiatives. Evaluation and Program Planning, 28, 167–172

- Kellogg Foundation. (2004). Using logic models to bring together planning, evaluation and action: Logic model development guide. Retrieved from www.wisconsin.edu/edi/grants/Kellogg_Logic_Model.pdf

- Lee L. S., Pusek S. N., McCormack W. T., Helitzer D. L., Martina C. A.…Rubio D. M. (2012). Clinical and translational scientist career success: Metrics for evaluation. Clinical and Translational Science, 5, 400–407 doi:10.1111/j.1752-8062.2012.00422.x

- Melvin A. J., Edwards K., Malone J., Hassell L., Wilfond B. S. (2013). Role for CTSAs in leveraging a distributed research infrastructure to engage diverse stakeholders in emergent research policy development. Clinical and Translational Science, 6, 57–59

- Murphy S. N., Weber G., Mendis M., Gainer V., Chueh H. C., Churchill S., Kohane I. (2010). Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2). Journal of the American Medical Informatics Association, 17, 124–130

- Nagarajan R., Kalinka A. T., Hogan W. R. (2012). Evidence of community structure in biomedical research grant collaborations. Journal of Biomedical Informatics, 46, 40–46

- Nagarajan R., Lowery C. L., Hogan W. R. (2011). Temporal evolution of biomedical research grant collaborations across multiple scales: A CTSA baseline study. In AMIA annual symposium proceedings (Vol. 2011, p. 987). American Medical Informatics Association

- National Institutes of Health. (2005). Institutional clinical and translational science award (RFA-RM-06-002). Retrieved from http://grants.nih.gov/grants/guide/rfa-files/RFA-RM-06-002.html

- Renger R., Hurley C. (2006). From theory to practice: Lessons learned in the application of the ATM approach to developing logic models. Evaluation and Program Planning, 29, 106–119

- Rubio D. M., del Junco D. J., Bhore R., Lindsell C. J., Oster R. A.…DeMets D. (2011). Evaluation metrics for biostatistical and epidemiological collaborations. Statistics in Medicine, 30, 2767–2777

- World Health Organization. (1981). Health programme evaluation: Guiding principles for its application in the managerial process for national health development. Retrieved from http://whqlibdoc.who.int/publications/9241800062.pdf