Abstract

A key step in the analysis of circadian data is to make an accurate estimate of the underlying period. There are many different techniques and algorithms for determining period, all with different assumptions and with differing levels of complexity. Choosing which algorithm, which implementation and which measures of accuracy to use can offer many pitfalls, especially for the non-expert. We have developed the BioDare system, an online service allowing data-sharing (including public dissemination), data-processing and analysis. Circadian experiments are the main focus of BioDare hence performing period analysis is a major feature of the system. Six methods have been incorporated into BioDare: Enright and Lomb-Scargle periodograms, FFT-NLLS, mFourfit, MESA and Spectrum Resampling. Here we review those six techniques, explain the principles behind each algorithm and evaluate their performance. In order to quantify the methods' accuracy, we examine the algorithms against artificial mathematical test signals and model-generated mRNA data. Our re-implementation of each method in Java allows meaningful comparisons of the computational complexity and computing time associated with each algorithm. Finally, we provide guidelines on which algorithms are most appropriate for which data types, and recommendations on experimental design to extract optimal data for analysis.

Introduction

Circadian biology has been studied since the 18th Century and has been an area of increasingly active research across an ever wider range of organisms since the 1950's. Circadian clocks have now been identified across the whole Tree of Life in organisms ranging from cyanobacteria [1], [2] through to mammals [3], [4]. In order to improve understanding of the various clock mechanisms and of their significance, models of the circadian clock have been developed for many organisms. These models vary from simple 3 protein post-translational oscillators, for instance the Kai A, Kai B, Kai C clock of Synechococcus elongatus [5] to the more complex integrated feedback and feed-forward loops of the mammalian circadian clock [3], [4]. The various models can be tested through simulation, experimentation or both.

One of the key steps in identifying the molecular components of the various clocks is to examine the rhythmicity, or arhythmicity, of time course data obtained either from simulations or experiments. If the data are found to be rhythmic then it is vital to be able to make an accurate estimate of the underlying period. Key components in the clocks of all species have been discovered by forward genetic approaches, starting from the identification of a single individual with an altered period among a large population (for example [6]). Reverse genetic or drug screens for RNAi probes or chemical compounds that alter circadian properties use very similar, large-scale period assays [7], [8]. There are many different techniques and algorithms for doing the analysis required, all with different assumptions and with differing levels of complexity. Many of the algorithms are available as parts of software packages which can be either proprietary or freely available; some of these will be discussed later. Choosing which algorithm, which implementation and which measures of accuracy to use can offer many pitfalls, especially for the non-expert.

Advances in experimental techniques have facilitated the execution of long, circadian timeseries experiments. This creates new challenges in the form of data processing and data management. BioDare (Biological Data Repository) was developed under the multi-site ROBuST project (http://hallidaylab.bio.ed.ac.uk/ROBuST.html) to address such issues, and continued under the TiMet project (http://www.timing-metabolism.eu). BioDare is an online service which allows data-sharing (including public dissemination), data-processing and analysis, with the main focus on time-series data produced in circadian experiments from model species. One of the most important aspects of the data processing capability is its period analysis facility. Rhythmic data analysis was initially performed using the FFT-NLLS algorithm [9]. We have since added five other analysis methods: Enright and Lomb-Scargle periodograms [10], [11], mFourfit [12], MESA [13] and Spectrum Resampling [14], which we introduce below.

Having provided six different analysis methods, several broadly-relevant questions arose, including: which method is the best? Which method should I use for our data? How often do I need to sample my data? Can I analyse only X days of data [where X is a small number]? Here we report a detailed assessment of the selected period estimation algorithms, to address these and related questions. The results have broad relevance, as the algorithms tested represent a spectrum of popular period analysis techniques. The direct motivation was an objective evaluation of the methods available in BioDare. The guidelines are particularly relevant to BioDare users but do not include any BioDare-specific protocols, which are published elsewhere [15].

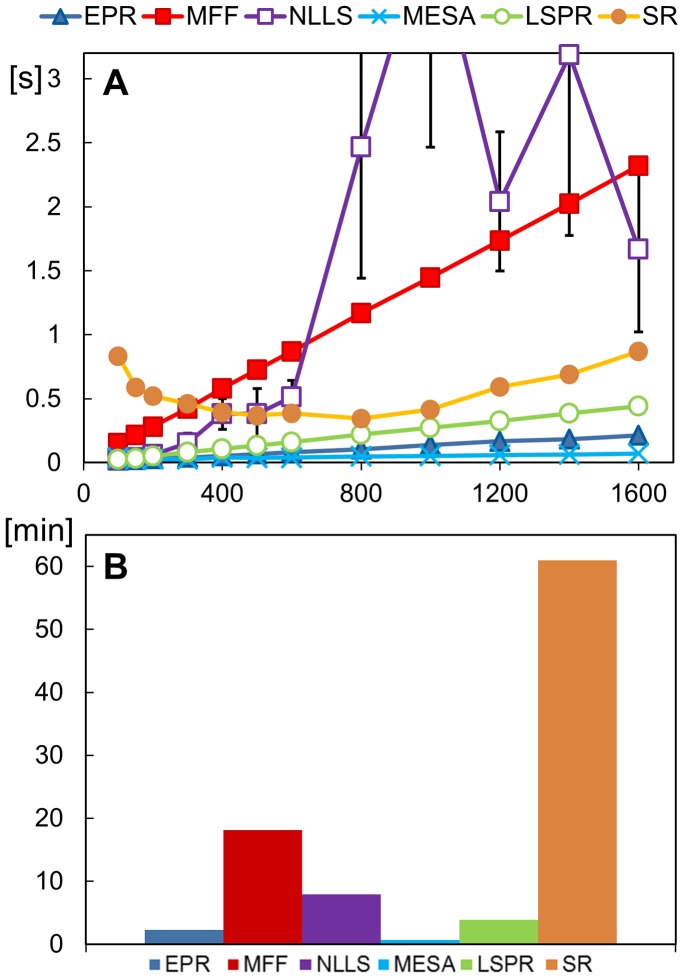

This paper starts with a brief review of the six methods and some of the alternatives. The work presented here used our own implementations of the six algorithms, which were re-factored into Java. This allowed us not only to make minor adjustments to the code but also allowed meaningful comparisons of the computational complexity and computing time associated with each algorithm.

Many previous studies have measured the performance of algorithms by evaluating them on real data. In such an approach, the underlying period is not known a priori. To avoid this problem we generated synthetic data sets that allow us to quantify an algorithm's performance. We focus on stationary data (with constant period), which is the most common analytical approach, although period is not always stable in free-running biological systems [16]. We examined the six methods not only in terms of the accuracy of the period estimate, but also in terms of speed. This latter factor is becoming increasingly important as recent advances in assay technology and computing power have led to an almost exponential growth in the complexity of experiments and the size of the resulting data sets. For example, molecular genetic experiments using reporter genes such as Luciferase can now routinely generate total volumes of data that were once the exclusive preserve of animal activity monitors or electrophysiological readings.

Having assessed the six algorithms under a variety of conditions, we offer some guidelines as to their use, to extract the maximum useful information from experimental data.

Algorithms

There is a plethora of different techniques available for the analysis of the periodicity of time-series data, e.g. [17], [18], and these techniques can be categorized in a variety of different ways: parametric vs. non-parametric; Fourier-transform based techniques vs. non Fourier-transform based etc. In deciding which algorithms to evaluate we tried to include algorithms from a variety of different categories and took as our starting point several of the key algorithms already used in the analysis of circadian data. In the following sections we describe the chosen algorithms, focusing on the overall concepts behind each method rather than mathematical/technical details, which can be found in the original papers.

Enright developed conceptually the simplest method for analysis of rhythmic biological data, referred to as the Enright Periodogram [10]. Periodic data of known period could be split into sections with the length of the sections matching the underlying period. Each section should contain similar portions of data, as the rhythmic data must contain a repeating pattern. Overlaying the sections will produce a clear waveform (with peak and trough), in which the trough time-points coincide and give a low sum across sections, peak time-points coincide and give a large sum, and the resulting waveform will have large amplitude. However, if the data were split in sections where the length does not correspond to the underlying period, then the peaks and troughs will not coincide and summing the sections together will result in a small-amplitude signal. This observation lies at the heart of the method. To analyse data with unknown period, the algorithm steps through a series of test period values, for each of them performs the procedure described above, and selects the period that gave the averaged waveform of the highest amplitude. To test the statistical significance of the period, Sokolove and Bushell [19] modified the calculation of the amplitude of the resulting waveform. This method, known as the Chi-square Periodogram, was implemented in BioDare and is here referred to as EPR.

EPR has the advantage of being intuitively straightforward and computationally simple. Its main limitation is that the step size between periods that can effectively be tested is constrained by the duration and sampling frequency of the input data.

Another general approach to period estimation is based on the idea of curve fitting. If the measured time series can be represented by a function (curve) of known period, the period of the data can be assumed to be equal to the period of that function. The challenge lies in finding such a function. Typically a model-based approach is used, i.e. a function is chosen that depends on parameters that determine not only its period but also its shape. In a ‘naive’ approach, all possible combinations of the function's parameters can be tested; for each combination, the function's time-series values can be calculated. The set of parameters which gives a time-series closest to the original data is chosen. In general, there is an untenably large number of parameter combinations, so such a naive approach is not feasible. Fortunately, there are known mathematical techniques to find optimal parameters, for example linear- and non-linear least-squares fitting [20]. Many methods of period analysis adopt this scheme, though they differ in the model functions and the selection procedures.

mFourfit [12] is one of the curve-fitting methods. It was developed for use with data obtained under entrained conditions, where the phase of entrainment was of particular interest. Stable entrainment implies that the underlying signal will have a single period, namely the period of the entraining cycle T. The waveform may be complicated but it is assumed to be the same in each entrained cycle (like the sections into which EPR splits data).

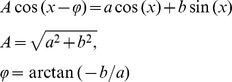

mFourfit's model function consists of a main cosine component of phase φ1, amplitude A1 and period τ. Up to 4 additional cosine components may be included, each with its own phase and amplitude but with a period that is a simple fraction of the main period τ, from τ/2 to τ/5:

(where Ai is the amplitude of each cosine, φi its phase and τ/i its period)

Using the sum of 5 cosines allows for the construction of quite complicated shapes, while the constraint that all components have period τ or a fraction guarantees that the resulting shape has exactly the length of τ. Rather than trying to estimate the period directly, upper and lower boundaries for the period are set by the user and the algorithm then steps through the range of periods in pre-defined increments. For given period τ, mFourfit finds all the parameters for each cosine using ordinary least-squares covariance. This step establishes ‘the best shape’ of length τ that can represent the given data (Note 1). Then, for each period, mFourfit calculates a sum of squared differences between the input data and the theoretical time series generated using the calculated parameters. After iterating through all periods within the boundaries, the period that fits the data with the lowest sum of differences can be determined. This method combines the ‘naive’ approach, checking all potential period values one by one, with selecting model parameters using the least square scheme.

mFourFit also tries to minimise the number of cosine components necessary to reproduce the data shape, in order to reduce the model complexity for each tested period. During period selection, both the fitting error and the model complexity are taken into account, with preference given to periods that yield simpler models.

The main advantage of mFourfit is that it provides the same best-fit waveform for each cycle, which better reflects the underlying biology of an entrained system. The major disadvantage is that the mFourfit algorithm is designed always to return a period (without any significance measure), even if the input time series is arrhythmic. We use the abbreviation MFF to refer to mFourfit method.

Another method that is based on curve fitting is FFT-NLLS [9], [21]. This was originally developed at the NSF Centre for Biological Timing in Virginia, to analyse circadian data obtained in free-running conditions (i.e. without entrainment), particularly in genetic screens to identify mutant organisms with altered period. Here, the data are also modelled by a sum of cosine functions, in the form of:

(where: τi, φ i, αi are period, phase and amplitude of each cosine component, c is the offset)

The main differences between FFT-NLLS and MFF are that the periods τi of each cosine are independent of each other in FFT-NLLS and the number of cosines N can be up to 25. The unconstrained periods and the large number of components mean that almost any curve can be represented by this model. For example, a long period cosine could model a data trend, a mid range cosine would match the ‘main’ oscillation in the data, and very short period cosines could represent sudden changes in the data or even noise. In reality, 5 components are sufficient to model correctly most biological data.

FFT NLLS starts with a model with a single cosine and determines the parameters (τ1, φ 1, α1, c) using a non-linear least squares fitting algorithm. This procedure is repeated using models with additional cosine components (increased N), until adding an additional cosine term does not improve significantly the resulting fit. The precise details of the algorithm can be found in [9]. Once the best model and its parameters have been found, the period is taken to be the period of the cosine component lying within a user-defined range of likely circadian periods (typically 15–35 h). If more than one cosine component belongs to the circadian range, the user has to decide which to select. Conventionally the component associated with the smallest relative amplitude error (defined as the value of the amplitude error estimate divided by the amplitude value) is chosen.

FFT NLLS performs an additional operation, namely finding confidence levels for period, phase and amplitude for all of the cosine components of the best model. This is done by determining the maximum size of perturbation which can be introduced into individual parameters before the resulting fit significantly deviates from the original.

The non-linear least squares procedure that calculates the parameters only works well if sensible initial values are provided. In order to obtain the initial values of the period and phase, a Fast Fourier Transform (FFT) is performed on the input time series [22]. The underlying principle of this common method is explained under MESA, and one of its limitations under Spectrum Resampling, below. Its relevance here is only to learn initial period values from the data, rather than using default or user-defined values. The initial values are then improved by the NLLS iterative numerical search. Hence the full name of this technique: the Fast Fourier Transform Non-Linear Least Squares algorithm, abbreviated NLLS in the Results.

The main advantages of FFT-NLLS are reported to be that the algorithm works well on relatively short and/or noisy data series; it gives confidence levels for period, phase and amplitude; and that the algorithm can identify (and report) arrhythmic data if no period can be identified with sufficiently high confidence. The postulated disadvantage of the algorithm is that it potentially has a limited ability to fit rhythmic data with non-sinusoidal waveforms.

Maximum Entropy Spectral Analysis (MESA) [13] uses a completely different approach based on stochastic modelling. The algorithm was championed for use in biological data analysis by Dowse [23] and has been used subsequently in marine biology to investigate swimming rhythms and vertical migration [24], [25].

MESA first fits an autoregressive model to the data. This model assumes that the value at a given time point is the combination of a number of previous values plus some stochastic process (noise):

(where: ai are model coefficients, X[t-i] is the data value at previous time point t-i, η is noise, N is the length of the model)

Model coefficients can also be considered as the coefficients of a prediction filter (PF) of length N, where the next value can be predicted using the previous values. Such equations can be written for each data point and filter coefficients that minimise the difference between the predicted and original values can be found using a least-squares approach.

It is possible to obtain a frequency spectrum for the data by using the prediction coefficients. In general, a frequency spectrum characterizes the presence (contribution) of each frequency within the signal, with the most common example being the Fourier Transform power spectrum [22]. Since frequency is the inverse of the period, finding the maximum in a frequency spectrum also identifies the strongest period of the data. Determination of a spectrum and finding its associated peak lie at the foundation of all frequency spectrum-based methods, such as the FFT.

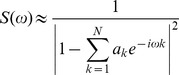

In the MESA approach, the spectrum is constructed using the formula (the scaling constant is removed):

|

(where ak are the PF coefficients and ω is circular frequency: ω = 2π/τ, τ is period)

The PF length (N) is crucial to the output of the analysis; if the filter length is too low, resolution and important detail can be lost. However, if N is too high, artificial peaks may appear in the spectrum. Although there are procedures to determine the optimal value of the model length, usually manual selection of the minimum value of N is necessary. Once the model length, N, is established, corresponding PF coefficients can be found, the spectrum S is then calculated using the formula above and the period corresponding to the highest value of S is selected.

The main advantages of MESA are that it does not model data assuming any a priori shape of waveform, and it has much better precision than Fourier transform based methods. The drawbacks are its dependence on the correct choice of model length and the lack of a significance/confidence measure.

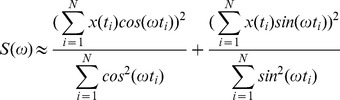

Another spectral analysis technique is the Lomb-Scargle periodogram [11]. This method also creates a spectrum representing the significance of each frequency in the analysed data. As before, the period corresponding to the peak is chosen as the output of the method. The very simplified formula for the spectrum is:

|

(where: x(ti) is the value at time ti, N number of data points, ω is the circular frequency: ω = 2π/τ, τ is the period).

It can be instructive to examine this formula to determine why it reflects the presence of a given periodicity in the data. In the first term the measured data are convolved with the cosine function (for each time point, the recorded value in data is multiplied by the corresponding value of a cosine at this time point). Assume a long data series of period T and phase 0, and consider the result of convolving the data with the equivalent cosine, cos(2π/T). Each time the data has its maximum value so does the cosine and the result of repeated multiplication followed by summation will be high, whereas each time the cosine has a negative value the data has its lowest value, and a small value will be subtracted from the sum. Thus the overall sum in the numerator will have a relatively high value. If the same data is convolved with a cosine of period other than T, the cosine no longer peaks at times nT and hence, the elements in the nominator sum will start to cancel each other, leading to a smaller summation than the previous case. The denominator value is a scaling factor which reflects how much ‘value’ the cosine contributed at the given times. The second sine-based term will behave similarly but with rhythmic data of phase T/4. The combination of both cosine and sine terms can measure contributions to the frequency regardless of the data phase (see MFF note 1).

The main advantage of this method is that, unlike most of the spectral methods (for example MESA), it can handle non-evenly spaced data. We refer to this method as LSPR.

Spectrum resampling [14], abbreviated as SR in this manuscript, was developed to improve period estimation when the data are non-sinusoidal. The approach uses a power spectrum created by carrying out a Fourier Transform on the time series.

The Fourier Transform and its discrete implementation, the Fast Fourier Transform (FFT), are standards in timeseries processing [22]. Similar to the LSPR, the FFT finds frequency contributions by convolving cosine and sine functions with the analysed signal. However, the spectrum obtained using the FFT cannot be directly used for period estimation of circadian data, due to its poor frequency precision. The precision varies across the spectrum, and is directly correlated with the input data length: to obtain a precision of less than an hour around 24 h periods, there must be over 1000, hourly-sampled time points, i.e. more than one month of measurement. In most cases, biological data must be padded to artificially extend the length of the time series prior to FFT analysis.

The main idea behind spectrum resampling is to find a period “between the cracks” of the original FFT spectrum. The algorithm starts by calculating an ordinary FFT power spectrum. The initial spectrum is smoothed using kernel smoothing. Kernel smoothing can be explained as a more sophisticated form of moving average. Each data point is averaged with scaled values of its neighbours. The kernel method ensures that more distant neighbours contribute less to the average.

The smoothed spectrum forms the basis for the algorithm. Noise is added to the base spectrum, this creates a new sample spectrum, which is successively smoothed, and the frequency value corresponding to the maximum peak in the smoothed spectrum is recorded. This procedure of adding noise to the base spectrum, smoothing, and recording the peak is repeated 1000 times (a process known as boot-strapping).

The recorded peak frequencies are averaged and the mean value is converted to the corresponding period and reported as the data period. For example, if the data has a true period of 24.5 hours, but the precision in the FFT spectrum is limited to about 1 hour around this period due to the data length, each bootstrap iteration could produce period values of 23, 24, 25 or 26 h. The average bootstrap period can be 24.5 (for example 500 peaks at 24 and 500 at 25). The distribution of period values recorded during the bootstrap iterations provides a confidence interval for the period estimates.

The main advantage reported for this algorithm is that it was designed to be more robust to noise and non-sinusoidal time series. The disadvantage is that, because it uses boot-strapping, it is very computationally intensive.

These six methods represent a broad range of the many published approaches to period estimation. EPR is distinct; LSPR, MESA and SR represent spectrum-based methods, while MFF and NLLS are representative examples of curve-fitting methods (for example, the popular Halberg's cosinor procedure [26] is equivalent to MFF with only one cosine). Autocorrelation has not been considered as MESA has been recommended as its replacement, especially for the short data series investigated here.

We omitted two classes of methods that deserve a longer comment: wavelet-based and Bayesian methods. Simple wavelet methods use wavelet transforms as low/high pass filters, which smooth the data and remove trends: such signal pre-processing is not our focus here. The processed data are then analysed with a standard method, for example a simple peak finding algorithm [27]. Alternatively, a continuous wavelet transform may be performed and then changes of period over time can be extracted from the transformation results [28]. Those methods are well suited for non-stationary periods which, although common in biological systems, are not our current focus. Taking into account the lack of evidence that wavelet methods are superior in analysis of stationary periods and the poor support for wavelets currently available in Java, we judged that at this stage, the extra effort necessary to implement such methods for BioDare was not proportional to the potential gain.

Bayesian methods are attractive as they provide well defined confidence levels for period estimates [29], [30]. However, due to their computational complexity we deemed them unsuitable for general use in BioDare. If we consider the typical number of sampling iterations performed by the algorithms (typically above 10,000), we would expect Bayesian methods to be 100 times slower than SR, already the slowest algorithm considered. Furthermore, the documentation of the published algorithms did not allow easy re-implementation.

Implementations

Hand in hand with the choice of algorithm goes the choice of implementation. This paper uses our implementations of the six algorithms in Java, which have been incorporated into the online BioDare repository. As mentioned briefly in the introduction, period analysis is only one of many BioDare features. The main features of BioDare are:

an online repository for experimental data accompanied by extensive metadata including details about environmental conditions and biological material used

rhythm analysis and period estimation using the mentioned algorithms

generation of secondary data (normalized, detrended, averaged …)

graphical output of data, secondary data and rhythm analysis

simple text-based search throughout metadata

search for data based on biological sample, assay and conditions

data aggregation and export

group-based privacy settings for collaborative research followed by public dissemination.

BioDare was designed to offer flexibility and adaptability to new techniques, data types, experimental designs and use cases. Thus all experimental metadata and system data is stored in XML documents, allowing easy conversion between formats using XSLT. Furthermore, as XML is human readable it speeds up debugging and testing. Java was chosen as the programming language for its self-documenting character, simple error tracing and large number of supported standards and technologies.

The user enters the experiment description in Pedro (a tool that can generate forms which are then populated using the information required by XML document definition) and exports them to XML format. The XML metadata are transformed with XLST to BioDare internal representation and mapped to Java objects for further manipulations using JAXB. The numerical time series are read from Excel files using the Apache POI library. Subsets of the metadata and the time series are stored in a MySQL database using JPA for Java to DB mapping.

Period analysis is controlled by separate subsystem called JobCenter, which allows simultaneous analysis submission by multiple users for multiple data sets. Its main functions are: queuing and housekeeping of the submitted jobs, dispatching analysis to the correct implementation (which can be local or remote using a WebService interface) and sending back completed results. In order to increase the overall performance, JobCenter takes advantage of multiprocessor servers and processes time series in parallel (in the current set-up, 4 time series are analysed in parallel).

BioDare can be found at http://www.biodare.ed.ac.uk, and its source code can be accessed from http://sourceforge.net/projects/biodare. Publicly-accessible data are available to browse and download using the “public” account, while the “demo” account allows users to test the analysis methods.

There are also alternative software packages that offer access to period analysis methods (typically EPR, LSPR, and FFT-based), some of which are listed here. Clocklab is a commercial package produced by Actimetrics (www.actimetrics.com/ClockLab/ ), Circadian Rhythm software is a non-commercial suite of programs developed by Refinetti (http://www.circadian.org/softwar.html), Circwave and Chronoshop are available at (http://webpage2.woelmuis.nl/downloads.htm). MFF and NLLS are provided in BRASS developed by Paul E. Brown with the Millar group [31], [32], which is available from (www.amillar.org) but is superseded by BioDare for almost all applications.

Materials and Methods

In order to evaluate the performance of any period estimation algorithms it is, of course, necessary to know the period a priori. This means that it is not practical to use real biological data for any initial algorithm evaluation. Thus different artificial data sets were generated and used to compare the different algorithms. In all cases the time series were stationary so that the underlying period is constant.

The first group of data sets comprised so-called mathematical test signals which, whilst not biologically meaningful, allow the algorithms to be tested against artefacts such as sudden changes in amplitude.

The mathematical test signals comprise:

a pure cosine of known frequency, and hence known period;

a pulsed waveform which comprises pulses of a Gaussian waveform with a standard deviation of (period/7);

a double pulsed waveform which comprises two periodic Gaussian waveforms, the first is as in the single pulse waveform and the second is a Gaussian waveform of a quarter of the amplitude of the first Gaussian waveform, shifted by period/3 relative to the original Gaussian and with a narrower standard deviation of (period/9);

The second group of time series were designed to be more representative of biological systems whilst still allowing exact knowledge of the underlying period. This group comprised simulated clock data generated using a delayed negative feedback loop (DNFL) model. This model, which is similar to the clock model used by Goldbeter [33] and which was developed by Monk and Heron [34], [35], generates synthetic mRNA and protein data. By varying the parameters of the model it is possible to produce a range of periodic but non-sinusoidal waveforms including asymmetric cycles similar to those found in biological systems. Here we use parameter sets identical to those used in [14] to produce two sets of time series. The first comprised time series with a moderate level of asymmetry and the second comprised time series with a moderate shoulder. Examples of both the mathematical test signals and the DFNL time series are shown in Figures 1A, 1B and full details of the parameters can be found in [14].

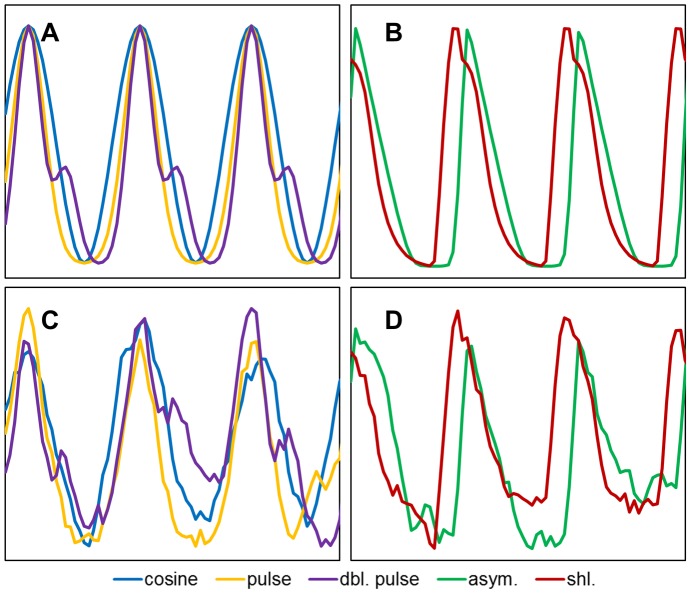

Figure 1. Examples of artificial time series used in the analysis.

A) the three mathematical test signals: cosine, pulse and double pulse (dbl. pulse). B) the two model-derived (DNFL) time series: moderate shoulder (shl.) and moderate asymmetry (asym.). C, D) the same test signals with 80% walking noise added.

Once the basic time series had been generated, noise was added. The noise was additive and was either uniform noise or walking noise. Uniform noise is drawn from a uniform distribution and the amplitude of the noise is defined as a percentage of the amplitude of the original time-series. This would be characteristic of the noise in a measurement system. Walking noise is additive and uniform, but this time the distribution of the noise is restricted so that the current data point lies within a limited range of the previous data point (Figures 1C, 1D). This would be more representative of noisy signals in nature, where noise affects an underlying biological system with a characteristic timescale greater than the sampling interval. The level of noise added is defined as the percentage of the amplitude of the original test signal (typically 30%, 80% or 160%), and is referred to in the text as, for example, 80% walking noise.

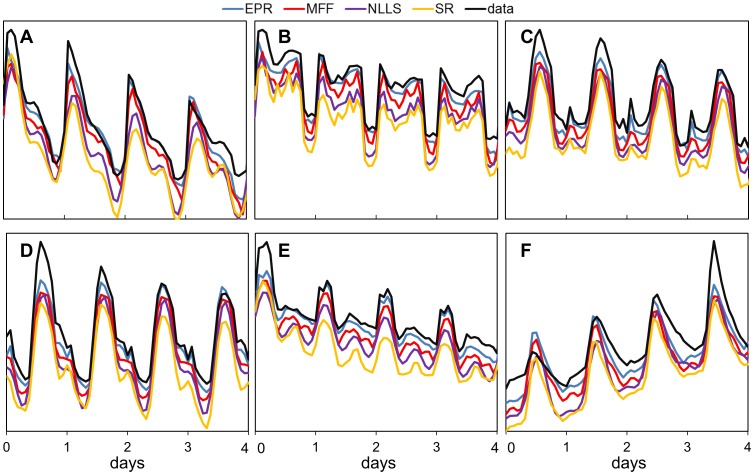

To assess applicability of the methods to the analysis of real biological systems, we also tested data sets obtained by in vivo imaging of transgenic Arabidopsis thaliana plants. Each transgenic line carried a luciferase reporter gene, in which a promoter of a gene of interest (termed the marker) is fused to the luciferase protein sequence. The expression of the luciferase protein was monitored by low-light imaging of seedlings, as described by Gould et al [36]. We analysed data acquired from transgenic plants having: CAB, CAT3, CCA1, CCR2 and TOC1 constructs [12]. During the whole measurement the plants were exposed to 24 h light/dark cycles, however some experienced 6 hours of light (short days: SD) while others received 18 hours (long days: LD). Plants in these conditions are expected to be stably entrained, with a period close to 24 h. Combining the 5 markers and 2 experimental conditions yielded 10 data sets with distinctive waveforms.

We report two metrics: the mean period and the mean absolute error, which is defined as absolute difference between the calculated value and the expected period (the means are calculated over the replicates in the tests data sets). The expected period value is 24.08 h for moderate asymmetry signal and 24.0 h in all the other cases. The mean period is abbreviated as MP in the tables and figures; absolute error (AE) refers to the mean absolute error. When discussed, statistical significance was determined by performing t-tests with alpha = 0.05.

All the algorithms were implemented in Java using the Apache Math library for matrix operations, least-squares solving and FFT transform. The implementations are wrapped into web services using JAX-WS so they can be deployed on remote servers and linked with BioDare using the SOAP protocol. We introduced small modifications to the original algorithms, described below. All our implementations conduct detrending prior to analysis (cubic polynomial for SR, linear for the other methods).

Our implementation of EPR always uses spline interpolation to transform input data to time series with 0.1 hour data interval. This data step matches the 0.1 h period scanning step size. This approach has advantages over the original method when determining the spectrum power for a fractional period (for example 24.1), see SI (Doc S1) for more details.

For the MESA method, we initially followed Dowse [37] in using the Andersen algorithm [38] but it gave us poor period estimates probably due to its known numerical instability. The Barrodale implementation of MESA [39] addressed those issues, so this approach was selected, with a bi-directional prediction filter. However, that implementation underestimated the length of the internal prediction model. The minimal model lengths that gave good period estimates for our test data were therefore determined empirically and found to be specific for each sampling frequency.

The LSPR was implemented using the algorithm described by Glynn et al. [40].

We introduced two modifications to the SR algorithm. Firstly, when performing kernel smoothing only the close neighbourhood of the current point is taken into account instead of the whole time series. The size of the neighbourhood is determined by the bandwidth under consideration, and it is equal to the distance at which the ‘kernel’ value is smaller than 10−14. This reduces the asymptotic computational cost from O(N2) to O(N). The second modification concerns the selection of the optimal bandwidth, as we sample a smaller range of candidates than in the original paper. We compared the results obtained with the modified code against the estimates obtained using the original and found that neither modification influenced the period estimates, but both substantially reduced the computation time.

Results

To evaluate the algorithms supported in BioDare, and to suggest guidelines for their use in circadian research, we compared the performance of Chi-square Periodogram (EPR), mFourfit (MFF), FFT-NLLS (NLLS), Maximum Entropy Spectral Analysis (MESA), Lomb-Scargle periodogram (LSPR) and Spectrum Resampling (SR).

1. Impact of increasing levels of noise

We first examined the impact on rhythm analysis of a limited number of data points and increasing noise levels. Period estimation was carried out using only 3 days of data with sampling every hour, i.e. only 72 data points. The six algorithms were compared for different levels of noise (30%, 80%, 160% and 300%) and both uniform and walking noise were used. Figures 2,3 and Tables 1, 2 (full data are in SI Tables S1, S2) show the results for 5 different input signals. 3 mathematical test signals: cosine; pulse and double pulse: and two sets of artificial mRNA data, one with moderate asymmetry and one with a shoulder in the data, accompanied by the aggregated results from all the waveforms. 128 replicates with different noise samples were analysed by each algorithm. We report two metrics: the mean period MP (over the 128 replicates) and the mean absolute error AE, which is defined as absolute difference between the calculated value and the expected period (24.08 h for simulated mRNA data with moderate asymmetry and 24 h for the rest).

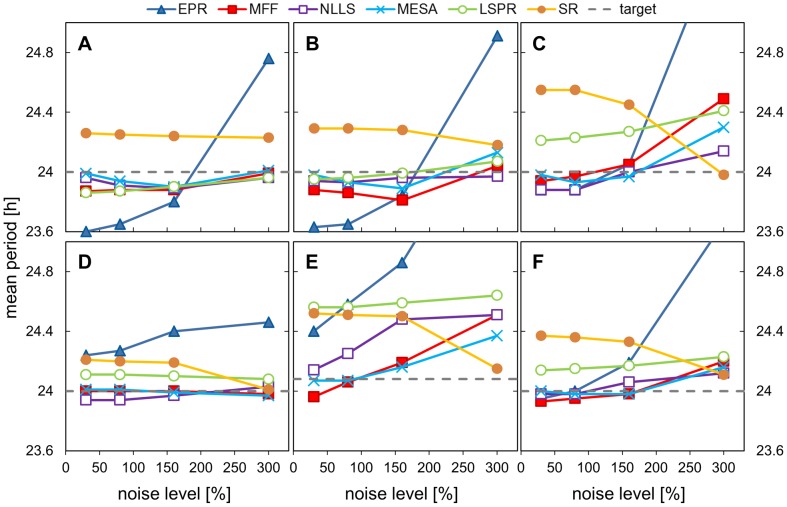

Figure 2. Impact of increasing levels of uniform noise on period estimation.

Data sets with different noise levels (30%, 80%, 160%, 300%) were analysed using all the methods and the mean period was plotted. Data sets were created by adding noise at the level indicated to the hourly-sampled template of 3 days duration. The templates were: A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data (expected period is 24.08 h), F) aggregated results from all the shapes.

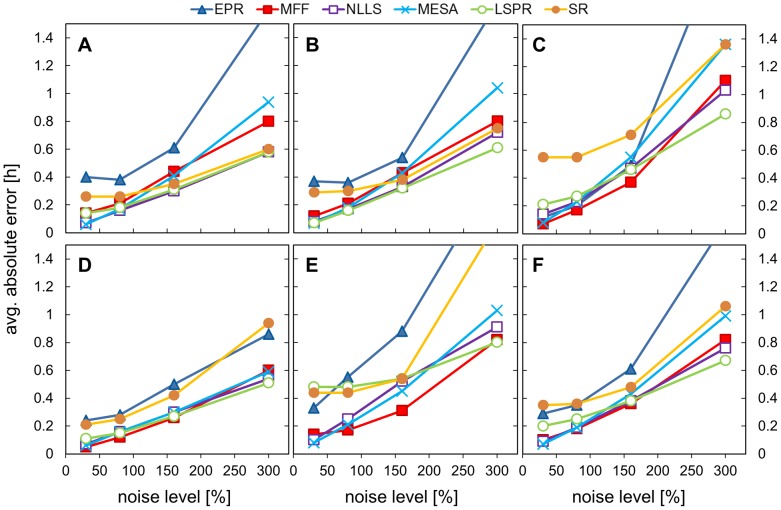

Figure 3. Impact of increasing levels of uniform noise on absolute error.

Data sets with different noise levels (30%, 80%, 160%, 300%) were analysed using all the methods and the absolute error is plotted. The absolute error is defined as the absolute value of the difference between calculated period and the expected value (24.08 for asym. signal and 24 h for the others). Data sets were created by adding noise of specific level to the hourly-sampled template of 3 days duration. The templates were: A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data, F) aggregated results from all the shapes.

Table 1. Impact of uniform noise level on period estimates.

| Shape1 | Method | NL 30%2 | NL 80%2 | NL 160%2 | NL 300%2 |

| pul | EPR | 23.63 (0.07) | 23.65 (0.21) | 23.84 (0.72) | 24.91 (2.21) |

| pul | MFF | 23.88 (0.07) | 23.86 (0.22) | 23.81 (0.46) | 24.04 (1.11)+ |

| pul | NLLS | 23.94 (0.08) | 23.93 (0.19) | 23.96 (0.41)+ | 23.97 (1.37)+ |

| pul | MESA | 23.98 (0.08) | 23.93 (0.22) | 23.89 (0.55) | 24.13 (1.52)+ |

| pul | LSPR | 23.95 (0.07) | 23.96 (0.19) | 23.99 (0.4)+ | 24.07 (0.81)+ |

| pul | SR | 24.29 (0.08) | 24.29 (0.2) | 24.28 (0.4) | 24.18 (1.35)+ |

| dblp | EPR | 23.88 (0.07) | 23.88 (0.22) | 24.05 (0.69)+ | 25.63 (2.73) |

| dblp | MFF | 23.94 (0.06) | 23.97 (0.21) | 24.05 (0.45)+ | 24.49 (1.78) |

| dblp | NLLS | 23.88 (0.13) | 23.88 (0.26) | 24.0 (0.58)+ | 24.14 (1.9)+ |

| dblp | MESA | 23.98 (0.1) | 23.93 (0.27) | 23.97 (0.71)+ | 24.3 (1.95) |

| dblp | LSPR | 24.21 (0.09) | 24.23 (0.25) | 24.27 (0.51) | 24.41 (1.1) |

| dblp | SR | 24.55 (0.1) | 24.55 (0.25) | 24.45 (1.2) | 23.98 (2.73)+ |

| all | EPR | 23.95 (0.36) | 24.0 (0.49)+ | 24.19 (0.82) | 25.13 (2.16) |

| all | MFF | 23.93 (0.08) | 23.95 (0.22) | 23.98 (0.45)+ | 24.2 (1.24) |

| all | NLLS | 23.98 (0.13) | 23.98 (0.26) | 24.06 (0.5) | 24.12 (1.33) |

| all | MESA | 24.0 (0.1)+ | 23.98 (0.24) | 23.98 (0.57)+ | 24.16 (1.42) |

| all | LSPR | 24.14 (0.25) | 24.15 (0.32) | 24.17 (0.48) | 24.23 (0.89) |

| all | SR | 24.37 (0.17) | 24.36 (0.27) | 24.33 (0.69) | 24.11 (2.39)+ |

Data sets with different noise level were analysed using all the methods. The mean period value is reported in the table (standard deviation is given in brackets). Data sets were created by adding noise of specific level to the hourly-sampled template of 3 days duration. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the signals. 2) NL- noise level as the percentage of the original signal amplitude. +) Means which are accurate, not statistically different from the expected period value, are marked with +. The underlying period was 24.08 h for asym data and 24.00 h for the other signals. See SI Table S1 for the full results.

Table 2. Impact of uniform noise level on absolute error.

| Shape1 | Method | NL 30%2 | NL 80%2 | NL 160%2 | NL 300%2 |

| pul | EPR | 0.37 | 0.36 | 0.54 | 1.65 |

| pul | MFF | 0.12 | 0.21 | 0.43 | 0.8 |

| pul | NLLS | 0.08 | 0.17 | 0.33 | 0.72 |

| pul | MESA | 0.07 | 0.18 | 0.43 | 1.04 |

| pul | LSPR | 0.07 | 0.16 | 0.32 | 0.61 |

| pul | SR | 0.29 | 0.3 | 0.38 | 0.75 |

| dblp | EPR | 0.12 | 0.2 | 0.49 | 2.16 |

| dblp | MFF | 0.07 | 0.17 | 0.37 | 1.1 |

| dblp | NLLS | 0.14 | 0.23 | 0.47 | 1.03 |

| dblp | MESA | 0.08 | 0.23 | 0.55 | 1.36 |

| dblp | LSPR | 0.21 | 0.27 | 0.46 | 0.86 |

| dblp | SR | 0.55 | 0.55 | 0.71 | 1.36 |

| all | EPR | 0.29 | 0.35 | 0.61 | 1.66 |

| all | MFF | 0.1 | 0.18 | 0.36 | 0.82 |

| all | NLLS | 0.09 | 0.19 | 0.38 | 0.76 |

| all | MESA | 0.07 | 0.19 | 0.43 | 0.99 |

| all | LSPR | 0.2 | 0.25 | 0.38 | 0.67 |

| all | SR | 0.35 | 0.36 | 0.48 | 1.06 |

Data sets with noise level were analysed using all the methods and the average absolute error is reported in the table. The absolute error is defined as the absolute value of the difference between calculated period and the expected value (24.08 for asym signal and 24 h for the others). Data sets were created by adding noise of given level to the hourly-sampled templates of 3 days duration. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the sets2) NL- noise level as the percentage of the original signal amplitude. See SI Table S2 for the full table.

Period estimates depended upon the shape of the input signals and noise levels, with considerable differences among the algorithms. Analysis of method accuracy (how close the mean period was to 24 h) showed that MESA, MFF, NLLS gave the best period estimates for both low and high noise levels. EPR could either over- or under-estimate the mean period depending on the shape (Fig 2A,D), giving this method the largest estimation errors for high noise (Fig. 2C,E), whereas SR and LSPR (for double pulse, shoulder and moderate asymmetric shapes) tended to overestimate the period values (Fig. 2C,D,E). Double pulse and moderate asymmetry data were the most challenging, with the largest estimation errors (Fig. 2C,E). As expected, the average error increased with the level of noise, as individual input data traces were more severely distorted. However, the mean period reported by each of the methods was quite resilient to the amount of uniform noise added. There was little difference between the mean periods for 30% to 160% noise levels (relatively flat lines for the first 3 points in Fig. 2 with exception of 2E). EPR was the most sensitive to the amount of noise, and SR responded strongly to the highest 1.5 level. The MESA method gave the best results compared to the other algorithms for the highest noise level (MP in range 23.97-24.30), whereas EPR and SR performed poorly in this test (MP for EPR between 24.5 and 25.9, AE >1). Changes in period estimates due to the addition of the walking noise were more pronounced, but similar trends were observed (see SI, Figures S1, S2).

These results highlight the importance of measuring biological replicates when dealing with noisy data, as the mean period over the ‘population’ properly matched the underlying period even for substantially distorted signals. In general, MESA, MFF and NLLS offered comparable accuracy.

2. Impact of different signal durations

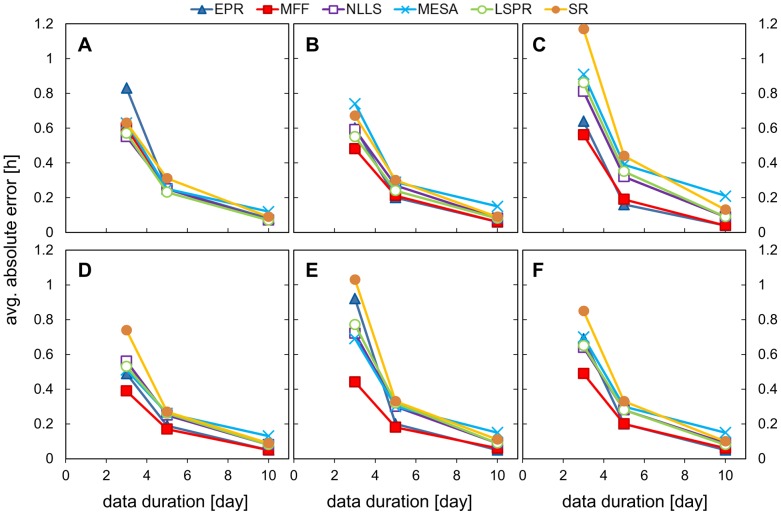

The next analysis examined the effect of different signal durations on period estimation. Here the results of period estimation using 3, 5 and 10 days of data, all sampled every hour and with 160% walking noise added. The same input signal shapes were used, each with 128 replicates with different noise samples. Figures 4, 5 and Tables 3, 4 show the results of the analysis (full results set in SI Tables S3, S4).

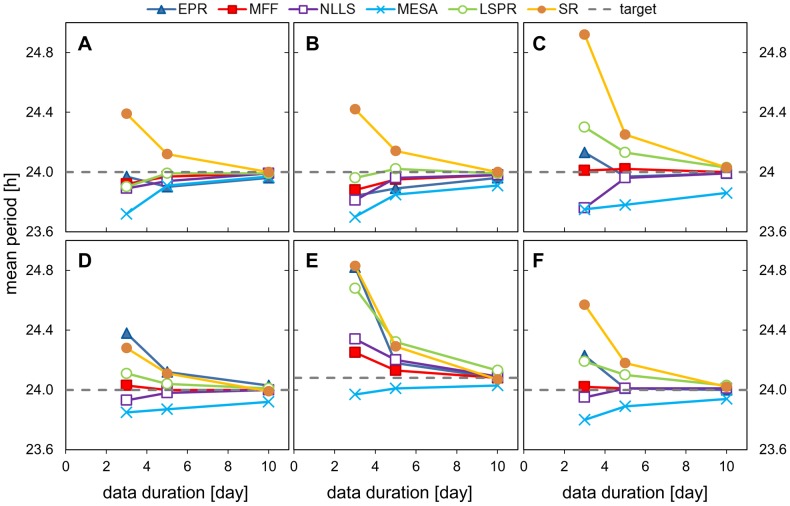

Figure 4. Impact of different signal durations on period estimation.

Data sets with different signal duration were analysed using all the methods and the mean period value is presented for each test signal shape. Data sets were created by adding 160% walking noise to the hourly-sampled templates of different duration. A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data (expected period is 24.08 h), F) aggregated results from all the shapes.

Figure 5. Impact of different signal durations on absolute error.

Data sets with different signal durations were analysed using all the methods and the average absolute error is presented for each test signal shapes. Data sets were created by adding 160% walking noise to the hourly-sampled templates of different duration. A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data, F) aggregated results from all the shapes.

Table 3. Impact of data duration on period estimates (data sets with walking noise).

| Shape1 | Method | 3 days | 5 days | 10 days |

| shl | EPR | 24.38 (0.52) | 24.12 (0.23) | 24.03 (0.07) |

| shl | MFF | 24.03 (0.51)+ | 24.0 (0.21)+ | 24.0 (0.07)+ |

| shl | NLLS | 23.93 (0.7)+ | 23.98 (0.33)+ | 24.0 (0.11)+ |

| shl | MESA | 23.85 (0.65) | 23.87 (0.33) | 23.92 (0.15) |

| shl | LSPR | 24.11 (0.65) | 24.04 (0.32)+ | 24.01 (0.1)+ |

| shl | SR | 24.28 (0.91) | 24.11 (0.33) | 23.99 (0.11)+ |

| asym | EPR | 24.82 (1.08) | 24.18 (0.24) | 24.08 (0.06)+ |

| asym | MFF | 24.25 (0.57) | 24.13 (0.21) | 24.08 (0.07)+ |

| asym | NLLS | 24.34 (0.85) | 24.2 (0.37) | 24.09 (0.11)+ |

| asym | MESA | 23.97 (0.86)+ | 24.01 (0.38) | 24.03 (0.18) |

| asym | LSPR | 24.68 (0.78) | 24.32 (0.34) | 24.13 (0.1) |

| asym | SR | 24.83 (1.14) | 24.29 (0.39) | 24.07 (0.13)+ |

| all | EPR | 24.23 (1.08) | 24.01 (0.27)+ | 24.0 (0.09)+ |

| all | MFF | 24.02 (0.74)+ | 24.01 (0.25)+ | 24.01 (0.08) |

| all | NLLS | 23.95 (0.82)+ | 24.01 (0.36)+ | 24.01 (0.12) |

| all | MESA | 23.8 (1.07) | 23.89 (0.37) | 23.94 (0.19) |

| all | LSPR | 24.19 (0.88) | 24.1 (0.35) | 24.03 (0.11) |

| all | SR | 24.57 (1.1) | 24.18 (0.4) | 24.02 (0.17) |

Data sets with different signal duration were analysed using all the methods. The mean period value is reported in the table (standard deviation is given in brackets). Data sets were created by adding walking noise of 160% of the original signal amplitude to the hourly-sampled templates of different length. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the signals. +) Means which are accurate, not statistically different from the expected period value, are marked with +. The underlying period was 24.08 h for asym data and 24.00 h for the other signals. See SI Table S3 for the full table.

Table 4. Impact of data duration on absolute error (data sets with walking noise).

| Shape1 | Method | 3 days | 5 days | 10 days |

| shl | EPR | 0.49 | 0.19 | 0.05 |

| shl | MFF | 0.39 | 0.17 | 0.05 |

| shl | NLLS | 0.56 | 0.25 | 0.08 |

| shl | MESA | 0.51 | 0.26 | 0.13 |

| shl | LSPR | 0.53 | 0.26 | 0.08 |

| shl | SR | 0.74 | 0.27 | 0.09 |

| asym | EPR | 0.92 | 0.2 | 0.05 |

| asym | MFF | 0.44 | 0.18 | 0.06 |

| asym | NLLS | 0.72 | 0.3 | 0.09 |

| asym | MESA | 0.69 | 0.3 | 0.15 |

| asym | LSPR | 0.77 | 0.32 | 0.09 |

| asym | SR | 1.03 | 0.33 | 0.11 |

| all | EPR | 0.69 | 0.2 | 0.05 |

| all | MFF | 0.49 | 0.2 | 0.06 |

| all | NLLS | 0.64 | 0.28 | 0.09 |

| all | MESA | 0.7 | 0.3 | 0.15 |

| all | LSPR | 0.65 | 0.28 | 0.08 |

| all | SR | 0.85 | 0.33 | 0.1 |

Data sets with different signal duration were analysed using all the methods and the average absolute error is reported in the table. The absolute error is defined as the absolute value of the difference between calculated period and the expected value (24.08 for asym signal and 24 h for the others). Data sets were created by adding walking noise of 160% of the original signal amplitude to the hourly-sampled templates of different duration. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the sets. See SI Table S4 for the full table.

As expected, accuracy of the period estimate improved and the error decreased as the duration of the time series, and hence the number of samples and cycles, increased. All methods gave almost perfect period estimates for 10 days of data (discrepancies below 0.1 h are insignificant in circadian applications as the typical biological variation is larger than 6 minutes). MESA tended to underestimate periods values (Fig. 4C, F) and its results for long data were statistically different from the other methods for all signal shapes apart from the simple cosine. Detailed examination of 3 days data with walking noise added revealed that MFF was the most accurate method for short timeseries (Fig. 5B–F), followed by NLLS and MESA, and the difference between those methods often was not statistically significant. The SR and EPR were the least suitable for analysis of such short data series, their absolute error values were in the range (0.6–1.2 and 0.5–0.9 respectively). In general SR overestimated period value (MP in the range of 24.3–24.9 h).

The acquired data suggests 5 days as a reasonable duration for circadian experiments aiming to estimate period. Compared to 10 days of data, 5 days data length is more technically feasible and should limit the impact of physiological changes in the studied samples. At the same time, the calculated mean period values lay close enough to the expected 24 hours and individual errors were below 0.2 h, which should be sufficient for typical applications. For 5 days data duration, MFF was generally the most accurate method (MP 24.0±0.05 for all shapes apart from asym., AE≈0.2), followed by NLLS, LSPR and MESA.

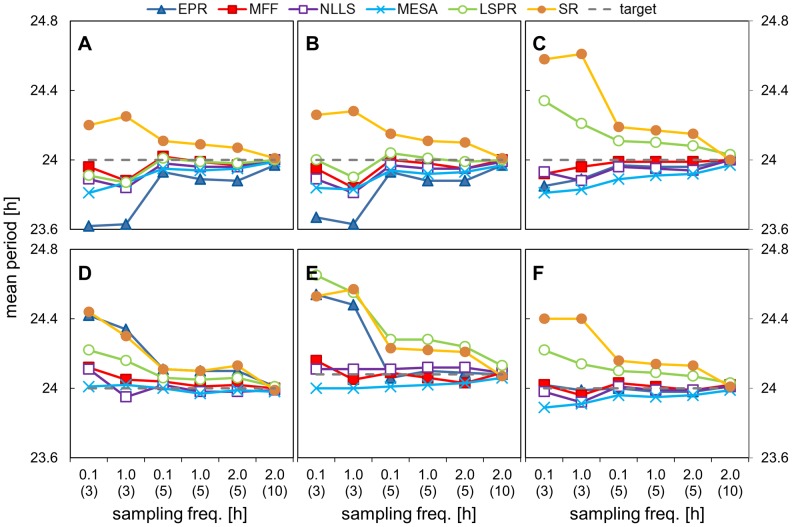

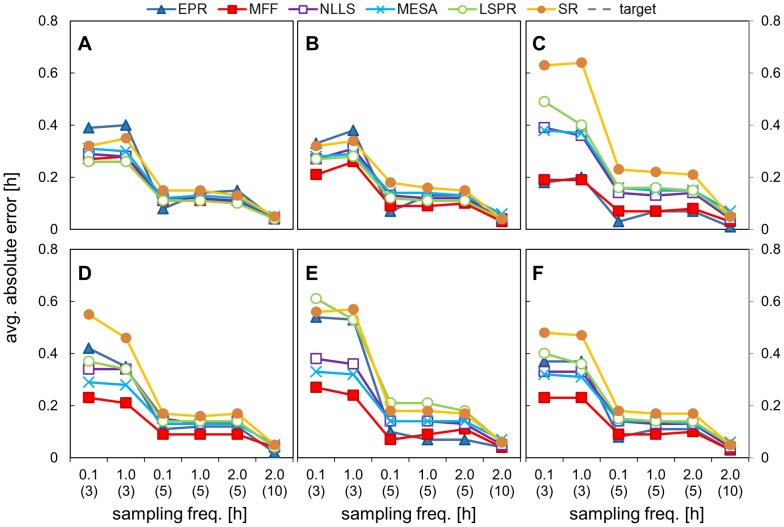

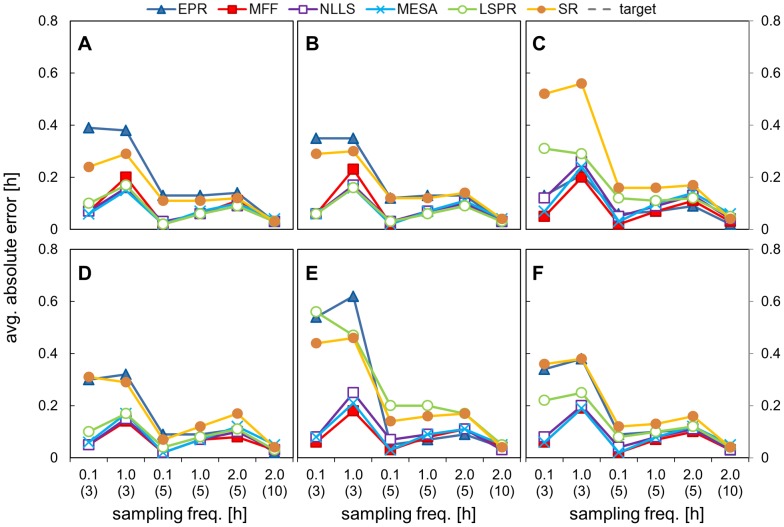

3. Impact on period estimation of varying the sampling frequency

In the third investigation we tested the influence of sampling frequency on the accuracy of period estimation. We used data with a noise level kept at 80% and we varied the sampling frequency between every 6 minutes, every hour and every 2 hours. The results are shown in Figures 6, 7, 8 and Tables 5, 6 (full results set in SI Tables S5, S6).

Figure 6. Impact of sampling frequency on period estimation.

Data sets with different time intervals and selected durations were analysed using all the methods and the mean period value is plotted. Data sets were created by adding 80% walking noise to the templates of different duration and time interval between points. The X axis represents time intervals with data duration in brackets. The underlying period was 24.08 h for asym. data and 24.00 h for the other signals. A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data, F) aggregated results from all the shapes.

Figure 7. Impact of sampling frequency on absolute error (walking noise data set).

Data sets with different time intervals and selected durations were analysed using all the methods and the mean absolute error value is plotted. Data sets were created by adding 80% walking noise to the templates of different duration and time interval between points. The X axis represents time intervals with data duration in brackets. The underlying period was 24.08 h for asym data and 24.00 h for the other signals. A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data, F) aggregated results from all the shapes.

Figure 8. Impact of sampling frequency on absolute error (uniform noise data set).

Data sets with different time intervals and selected durations were analysed using all the methods and the mean absolute error value is plotted. Data sets were created by adding 80% uniform noise to the templates of different duration and time interval between points. The X axis represents time intervals with data duration in brackets. The underlying period was 24.08 h for asym. data and 24.00 h for the other signals. A) cosine data, B) pulse data, C) double pulse data, D) DNFL shoulder data, E) DNFL asymmetry data, F) aggregated results from all the shapes.

Table 5. Impact of sampling frequency on period estimates (data sets with walking noise).

| Shape1 | Method | 0.1 (3.0)2 | 1.0 (3.0)2 | 0.1 (5.0)2 | 1.0 (5.0)2 | 2.0 (5.0)2 | 2.0 (10)2 |

| cos | EPR | 23.62 | 23.63 | 23.93 | 23.89 | 23.88 | 23.97 |

| cos | MFF | 23.96 | 23.88 | 24.02 | 23.99 | 23.97 | 24.00 |

| cos | NLLS | 23.89 | 23.84 | 23.98 | 23.96 | 23.96 | 24.00 |

| cos | MESA | 23.81 | 23.87 | 23.95 | 23.94 | 23.95 | 23.99 |

| cos | LSPR | 23.91 | 23.87 | 24.01 | 23.99 | 23.98 | 24.00 |

| cos | SR | 24.2 | 24.25 | 24.11 | 24.09 | 24.07 | 24.01 |

| asym | EPR | 24.54 | 24.48 | 24.06 | 24.1 | 24.09 | 24.08 |

| asym | MFF | 24.16 | 24.05 | 24.09 | 24.06 | 24.03 | 24.09 |

| asym | NLLS | 24.11 | 24.11 | 24.11 | 24.12 | 24.12 | 24.09 |

| asym | MESA | 24.00 | 24.00 | 24.01 | 24.02 | 24.03 | 24.06 |

| asym | LSPR | 24.65 | 24.55 | 24.28 | 24.28 | 24.24 | 24.13 |

| asym | SR | 24.53 | 24.57 | 24.23 | 24.22 | 24.21 | 24.07 |

| all | EPR | 24.02 | 23.99 | 24 | 23.98 | 23.98 | 24.01 |

| all | MFF | 24.02 | 23.96 | 24.03 | 24.01 | 23.99 | 24.02 |

| all | NLLS | 23.98 | 23.92 | 24.01 | 23.99 | 23.99 | 24.01 |

| all | MESA | 23.89 | 23.91 | 23.96 | 23.95 | 23.96 | 23.99 |

| all | LSPR | 24.22 | 24.14 | 24.1 | 24.09 | 24.07 | 24.03 |

| all | SR | 24.40 | 24.40 | 24.16 | 24.14 | 24.13 | 24.01 |

Data sets with different time intervals and selected durations were analysed using all the methods and the mean period value is reported in the table (standard deviations are omitted for clarity). Data sets were created by adding walking noise of 80% of the original signal amplitude to the templates of different duration and time interval between points. The underlying period was 24.08 h for asym data and 24.00 h for the other signals. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the sets. 2) the time interval (sampling frequency) in the data set and in brackets the data duration. See SI Table S5 for the full table.

Table 6. Impact of sampling frequency on on absolute error (data sets with walking noise).

| Shape1 | Method | 0.1 (3.0)2 | 1.0 (3.0)2 | 0.1 (5.0)2 | 1.0 (5.0)2 | 2.0 (5.0)2 | 2.0 (10)2 |

| cos | EPR | 0.39 | 0.4 | 0.08 | 0.14 | 0.15 | 0.04 |

| cos | MFF | 0.27 | 0.28 | 0.11 | 0.11 | 0.11 | 0.04 |

| cos | NLLS | 0.29 | 0.28 | 0.12 | 0.12 | 0.11 | 0.04 |

| cos | MESA | 0.31 | 0.3 | 0.12 | 0.13 | 0.12 | 0.05 |

| cos | LSPR | 0.26 | 0.26 | 0.11 | 0.11 | 0.1 | 0.04 |

| cos | SR | 0.32 | 0.35 | 0.15 | 0.15 | 0.13 | 0.05 |

| asym | EPR | 0.54 | 0.53 | 0.1 | 0.07 | 0.07 | 0.04 |

| asym | MFF | 0.27 | 0.24 | 0.07 | 0.09 | 0.11 | 0.04 |

| asym | NLLS | 0.38 | 0.36 | 0.14 | 0.14 | 0.13 | 0.05 |

| asym | MESA | 0.33 | 0.32 | 0.14 | 0.14 | 0.14 | 0.07 |

| asym | LSPR | 0.61 | 0.53 | 0.21 | 0.21 | 0.18 | 0.06 |

| asym | SR | 0.56 | 0.57 | 0.18 | 0.18 | 0.17 | 0.06 |

| all | EPR | 0.37 | 0.37 | 0.08 | 0.11 | 0.11 | 0.03 |

| all | MFF | 0.23 | 0.23 | 0.09 | 0.09 | 0.1 | 0.03 |

| all | NLLS | 0.33 | 0.33 | 0.14 | 0.13 | 0.13 | 0.04 |

| all | MESA | 0.32 | 0.31 | 0.14 | 0.14 | 0.13 | 0.06 |

| all | LSPR | 0.4 | 0.36 | 0.15 | 0.14 | 0.14 | 0.05 |

| all | SR | 0.48 | 0.47 | 0.18 | 0.17 | 0.17 | 0.05 |

Data sets with different time interval and selected durations were analysed using all the methods and the average absolute error is reported in the table, the absolute error is defined as the absolute value of the difference between calculated period and the expected value (24.08 for asym signal and 24 h for the others). Data sets were created by adding walking noise of 80% of the original signal amplitude to the templates of different duration and time interval between points. 1) The base shape of the signal: cosine (cos), pulse (pul); double pulse (dpl); shoulder (shl) and moderate asymmetry (asym), (all) represents aggregated results from all the sets. 2) the time interval (sampling frequency) in the data set and in brackets the data duration in days. See SI Table S6 for the full table.

The calculated mean periods and average errors were almost identical for all 3 sampling frequencies, in the case of 5 days of data with walking noise added (Fig. 6,7); the max difference between the MP for each frequency was 0.08 (SR analysis of shl. data). The sampling frequency had considerably less impact on period estimates than data duration or level of noise. The results for the data sampled 2-hourly over 10 days highlighted the importance of the data duration. Their average error was lower than for hourly or 6 minutes sampled 5 day data, although the former consisted of exactly the same number of data points and the latter had 10 times more (Fig. 7). In similar fashion, the estimates for 3-day data with 6 minutes time interval (720 timepoints) were less precise than hourly-sampled, 5-day data with only 120 measurements (Fig 6A–C).

Different conclusions could be drawn from the results obtained for the data with uniform noise. In this case, frequent sampling reduced the value of average error (Fig. 8, first two points in each panel). This is the consequence of different impact of both forms of noise on the waveform shape (see SI, Figure S3). Uniform noise added to densely-sampled data preserves the underlying shape, as there is high probability that the changes to the each of 10 points per hour would cancel each other. In contrast, walking noise ‘propagates’ between the points, and the final waveform for the 0.1 and 1-hourly data were both similarly distorted. Nevertheless, the results for 10 days data with 2 hour sampling interval were generally equal or more accurate than for shorter timeseries sampled every 6 minutes, despite having an order of magnitude fewer data points.

MFF was the best method for the analysis of the most ‘sparse’ data (5 days, 2-hourly samples) with, followed by NLLS and MESA, all 3 having MP in the range of 24.00±0.05 h; the difference between those methods was statistically significant only for dbl. pulse and asymmetric data.

4. Impact of non-sinusoidal data

One of the reported advantages of techniques which do not try to fit the data as a series of sines and/or cosines is that such techniques are able to perform better when analysing non-sinusoidal data. Looking back at results presented above, we could not prove such claims. The more demanding time series were: the double pulse mathematical test signal; and both simulated DNFL RNA time series (asymmetric and shoulder data). Those signals were neither constructed using trigonometric functions nor were symmetrical. Nevertheless both MFF and NLLS typically offered the best accuracy, which could be matched only by MESA from the non-sinusoidal methods.

5. Impact of non-evenly sampled data

In a typical circadian experiment data are collected at constant time intervals, however, due to technical problems, occasionally some of the data points have to be omitted and the final time series are no longer evenly spaced (for example, measurements of luminescence often contain cosmic-ray-induced spikes which have to be removed prior to period analysis). EPR, MESA and our implementation of SR require evenly spaced input data. LSPR and MFF do not have such restrictions, while NLLS is intermediate, as its core cosine fitting is performed using arbitrary input data times, but the initial parameters are established under the assumption of a regular time interval.

In order to test influence of irregular time intervals on the methods performance, we took hourly-sampled, 5-day-long data sets and randomly removed 2, 12, 24 and 36 data points (1%, 10%, 20% and 30% of the original signals). We then analysed the resulting time series using all the methods; in the case of EPR, MESA and SR, a simple interpolation was performed to provide the algorithms with necessary data regularity.

Unexpectedly, even the removal of the 30% of the time points had no effect on either mean period or average error (Table 7), despite the fact that the altered timeseries typically contained 5-6-hour gaps in their data. In this scenario, simple interpolation was enough to rectify the limitations of EPR, MESA and SR methods, without influencing their period estimates.

Table 7. Impact of non-evenly sampled data on period estimates.

| Shape1 | del.2) | Metric3 | EPR | MFF | NLLS | MESA | LSPR | SR |

| pul | 0 | MP | 23.88 | 23.98 | 23.95 | 23.92 | 24.01 | 24.11 |

| pul | 30% | MP | 23.88 | 23.96 | 23.97 | 23.91 | 23.99 | 24.11 |

| all | 0 | MP | 23.99 | 24.01 | 23.99 | 23.95 | 24.09 | 24.14 |

| all | 30% | MP | 23.99 | 24.00 | 24.04 | 23.95 | 24.07 | 24.14 |

| pul | 0 | AE | 0.13 | 0.09 | 0.12 | 0.14 | 0.11 | 0.16 |

| pul | 30% | AE | 0.13 | 0.1 | 0.13 | 0.14 | 0.12 | 0.16 |

| all | 0 | AE | 0.11 | 0.09 | 0.13 | 0.14 | 0.14 | 0.17 |

| all | 30% | AE | 0.11 | 0.1 | 0.15 | 0.15 | 0.16 | 0.17 |

The reference data sets together with data sets from which 30% data points were randomly removed, were analysed with all the methods. The mean period and the absolute error are reported in the table. The reference sets consisted of 5 days of hourly-sampled data, to which walking noise was added at 80% level of the original amplitude. 1) The type of the base signal, (pul) pulse, (all) aggregated results from all the used shapes (cosine, pulse, double pulse, shoulder and moderate asymmetry). 2) Percentage of randomly removed points (time series with 30% points missing usually contained at least one 5-hour gap in the data). 3) Values of mean period (MP) and absolute error (AE)

6. Influence of baseline trends

Period analysis methods usually assume only small variations in signal level and amplitude. To meet this assumption, most implementations have some form of detrending built into the data pre-processing steps. However in our experience, biological data from imaging experiments typically have significant baseline trends as well as changes in amplitude (for example, dampening due to the individual cellular rhythms losing synchrony). Thus, the next investigation considered the influence of baseline and amplitude trends on period estimation.

To examine the influence of baseline trends, 5 envelope shapes were applied to the pulse waveform used in the previous analyses. The envelopes comprised: linear increase; exponential increase; inverse parabola; 2/3 inverse parabola; and 1/3 parabola, see supplementary materials Figure S4 for more details and example time series. The maximum amplitude of each of the envelopes was varied from 0 (no baseline trend) to 100. In all cases the true underlying period was 24 hours and the data were sampled every hour for 5 days. 128 replicates were different noise samples (80% walking noise) were used and the average period for the 128 replicates was calculated. The results are shown in Table 8 and SI Table S7. In each case, the period estimates were accurate for baseline trends of equal amplitude to the 24 h rhythm, but were wildly overestimated when the algorithm confounded the trend with the 24 h rhythmic signal.

Table 8. Impact of baseline trend on periods estimates.

| Trend1 | Method | Level 02 | Level 12 | Level 52 | Level 102 | Level 202 |

| exp | EPR | 23.88 (0.11) | 23.87 (0.11) | 23.65 (0.26) | 23.05 (1.16) | 35.1 (0) |

| exp | MFF | 23.98 (0.11) | 23.94 (0.13) | 23.38 (0.1) | 22.95 (0.08) | 31.87 (5.31) |

| exp | NLLS | 23.95 (0.15) | 23.92 (0.14) | 23.66 (0.28) | 612.09 (657) | 705.89 (227.48) |

| exp | MESA | 23.92 (0.15) | 23.92 (0.15) | 23.92 (0.15) | 23.9 (0.16) | 23.88 (0.17) |

| exp | LSPR | 24.01 (0.13) | 23.92 (0.13) | 23.45 (0.14) | 22.88 (0.09) | 35.63 (1.66) |

| exp | SR | 24.11 (0.15) | 24.11 (0.15) | 24.1 (0.15) | 24.1 (0.15) | 24.09 (0.15) |

| ipar | EPR | 23.88 (0.11) | 23.8 (0.17) | 34.61 (0.73) | 34.62 (0.09) | 34.6 (0.05) |

| ipar | MFF | 23.98 (0.11) | 23.76 (0.04) | 34.62 (1.65) | 34.9 (0.03) | 34.85 (0.02) |

| ipar | NLLS | 23.95 (0.15) | 24.15 (0.25) | 269.56 (77.77) | 280.77 (23.87) | 173.73 (120.04) |

| ipar | MESA | 23.92 (0.15) | 23.98 (0.15) | 23.98 (0.18) | 24.19 (0.22) | 24.69 (0.54) |

| ipar | LSPR | 24.01 (0.13) | 24.31 (0.2) | 34.88 (1.77) | 35.3 (0.04) | 35.31 (0.02) |

| ipar | SR | 24.11 (0.15) | 24.1 (0.15) | 24.07 (0.15) | 29.57 (6.6) | 46.88 (0.5) |

Data sets with different levels of baseline trend and different trend forms were analysed using all the methods and the mean period value is reported in the table (standard deviation is given in brackets). Data sets were created by taking a standard pulse signal data set (5 days data, hourly sampled, 80% walking noise level, 24 h underlying period) and adding to it 5 different envelope shapes with increasing amplitude. 1) The trend/envelope shapes: linear increase (lin); exponential increase (exp); inverse parabola (ipar); 2/3 inverse parabola (2/3ipar) and 1/3 parabola (1/3par). 2) The baseline level is defined as ration between trend total amplitude and the original signal amplitude (0 no trend, 20 trend is 20 times higher than signal). See SI Table S7 for the full table.

The inverse parabola was the most challenging form of the baseline trend for all the methods, while linear trend was correctly removed by all the implementations, because they have a linear detrending step built into the data pre-processing. The MESA method was the most resilient to the presence of large-scale baseline trends, with amplitudes up to 100-fold greater than the 24 h rhythmic signal (MP was in the range 24.0±0.2 for all trends apart from parabola). The other spectral-based method, SR, coped well with trends up to 20 times larger than the signal amplitude. However, SR incorporates third-order polynomial detrending in its data pre-processing, which the other methods lack. The results suggested that the other algorithms attempted to fit/report a large period component which corresponded to the envelope of the baseline trend. The implementations of EPR, LSPR, MFF were restricted to search for periods of up to 35 hours; for the large-amplitude trends, this value was reported instead of the underlying 24 h oscillations. NLLS was the most susceptible to the presence of trends. NLLS usually stopped after fitting only one, long-period cosine component that represented the trend, as the contribution of extra components with low amplitude to the fit was rejected as insignificant.

In summary, it would appear that large-amplitude trends in the baseline are challenging to all algorithms. It is worth noting that such time series are representative of real biological data. As a rule of thumb, if the trend in the data obscures the presence of the oscillations to the human eye, it also distorts period estimates. Our recommendation, therefore, would be to examine the input time series and apply an appropriate baseline detrending algorithm before attempting to use any of the period estimation methods.

7. Influence of amplitude trends

Having examined the performance of the algorithms when subject to baseline trends, the influence of amplitude trends was then examined. Similar to the investigation into baseline trends, 5 envelope shapes were applied to the amplitude of the pulse waveform. The amplitude envelopes comprised: linear decrease; exponential decrease; parabola; 2/3 parabola; and 1/3 inverse parabola. The slope of each amplitude envelope was varied from 0 (no trend) to 1 (the amplitude has decayed to zero after 5 days; see supplementary materials Figure S5 for more details and example time series). In all cases the true underlying period was 24 hours and the data were sampled every hour for 5 days. 128 replicates with different noise samples (all walking noise, 80% amplitude) were used and the averaged period for the 128 replicates was calculated. The results are shown in Figure 9 and Table 9 (SI Table S8).

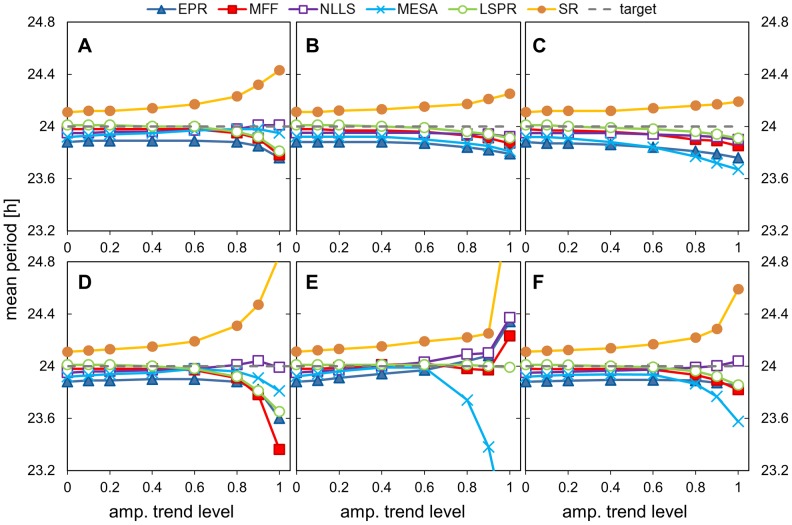

Figure 9. Impact of amplitude trends on period estimation.

Data sets with different levels of amplitude trend and different trend forms were analysed using all the methods and the mean period value is plotted. The amplitude trends were obtained by dampening the test data sets to the stated level using different trend shapes/envelopes. Dampening was applied to a standard pulse data set (5 days data, hourly sampled, 80% walking noise level, 24 h underlying period), using 5 different envelope shapes with increasing amplitude. The level of amplitude trend, i.e. the maximal reduction of the original signal, is denoted as: 0, no dampening; and for example 0.6 for lin. trend means that at the end of 5th day, the signal is reduced to 40% of its original value. The envelope shapes: A) exponential, B) linear, C) 1/3 parabola, D) 2/3 parabola, E) parabola, F) aggregated results from all the shapes.

Table 9. Impact of amplitude trend on periods estimates.

| Trend1 | Method | 02 | 0.12 | 0.22 | 0.42 | 0.62 | 0.82 | 0.92 | 12 |

| exp | NLLS | 23.95 | 23.95 | 23.96 | 23.96 | 23.97 | 23.98 | 24.01 | 24.01 |

| exp | LSPR | 24.01 | 24.01 | 24.01 | 24.00 | 24.00 | 23.96 | 23.92 | 23.81 |

| exp | MESA | 23.92 | 23.93 | 23.94 | 23.95 | 23.97 | 23.98 | 23.98 | 23.95 |

| exp | MFF | 23.98 | 23.98 | 23.98 | 23.98 | 23.98 | 23.95 | 23.91 | 23.78 |

| exp | EPR | 23.88 | 23.89 | 23.89 | 23.89 | 23.89 | 23.88 | 23.85 | 23.76 |

| exp | SR | 24.11 | 24.12 | 24.12 | 24.14 | 24.17 | 24.23 | 24.32 | 24.43 |

| lin | NLLS | 23.95 | 23.95 | 23.95 | 23.95 | 23.95 | 23.94 | 23.94 | 23.92 |

| lin | LSPR | 24.01 | 24.01 | 24.01 | 24.00 | 23.99 | 23.96 | 23.94 | 23.91 |

| lin | MESA | 23.92 | 23.92 | 23.92 | 23.92 | 23.9 | 23.87 | 23.85 | 23.81 |

| lin | MFF | 23.98 | 23.98 | 23.97 | 23.97 | 23.96 | 23.93 | 23.91 | 23.87 |

| lin | EPR | 23.88 | 23.88 | 23.88 | 23.88 | 23.87 | 23.84 | 23.82 | 23.79 |

| lin | SR | 24.11 | 24.11 | 24.12 | 24.13 | 24.15 | 24.17 | 24.21 | 24.25 |

| 2/3par | NLLS | 23.95 | 23.96 | 23.96 | 23.97 | 23.98 | 24.01 | 24.04 | 23.99 |

| 2/3par | LSPR | 24.01 | 24.01 | 24.01 | 24.00 | 23.98 | 23.92 | 23.81 | 23.65 |

| 2/3par | MESA | 23.92 | 23.93 | 23.94 | 23.95 | 23.98 | 23.96 | 23.91 | 23.81 |

| 2/3par | MFF | 23.98 | 23.98 | 23.98 | 23.98 | 23.97 | 23.91 | 23.78 | 23.36 |

| 2/3par | EPR | 23.88 | 23.89 | 23.89 | 23.9 | 23.9 | 23.88 | 23.82 | 23.6 |

| 2/3par | SR | 24.11 | 24.12 | 24.13 | 24.15 | 24.19 | 24.31 | 24.47 | 24.85 |

Data sets with different levels of amplitude trend and different trend forms were analysed using all the methods and the mean period value is reported in the table (standard deviations omitted for clarity). The amplitude trends were obtained by dampening the test data sets to the stated level at the last day using different trend shapes/envelopes. Dampening was applied to a standard pulse data set (5 days data, hourly sampled, 80% walking noise level, 24 h underlying period) and adding to it 5 different envelope shapes with increasing amplitude. 1) The trend/envelope shapes: linear decrease (lin); exponential decrease; parabola (par); 2/3 parabola (2/3par) and 1/parabola (1/3par) 2) The level of amplitude trend, ie, the maximal level of original signal deduction. 0 means no dampening, and for example 0.6 for lin. trend means that at the end of 5th day, the signal is reduced to 40% of its original vale. See SI Table S8 for the full table.

Overall, the most striking result is that even substantial, non-monotonic amplitude trends do not pose a major challenge for these algorithms. Dampening of the signal by 40% of its initial strength (trend 0.4 in our notation) affected period estimates by any of the methods by <0.1 h, and <0.5 h for trend 0.8. Unlike in the case of baseline trends, SR and MESA had the largest sensitivity to the amplitude trends, while MFF and NLLS were usually the least sensitive.

These results suggest that no amplitude detrending is necessary to estimate period, even if the recorded signal loses half of its amplitude during the measurement interval. This is reassuring, because pre-processing for amplitude detrending typically distorts the shape of the data waveforms and is best avoided if possible.

8. Performance of the algorithms in classifying arrhythmic signals

Another aspect of period analysis is distinguishing between arrhythmic and rhythmic signals. However, the problem starts even with defining the arrhythmic signal. Even randomly created time series, can have some ‘structure’ due to its finite length, furthermore any noise will demonstrate itself as a high frequency component while a trend in the data will manifest itself in the low frequencies. For that reason, rather than expecting a positive identification of arrhythmic data, it is more reasonable to expect the absence of a period in the range of interest: we term this the weak arrhythmicity criterion. In this study, we define this range as 16–32 h; BioDare includes a similar, user-specified period selection range (18–30 h by default), and period values outside this range are ignored in summary statistics.

In the first experiment, we generated 100 time series with uniform noise and analysed them with the six methods (Table S9 in SI). NLLS reported 21 series as arrhythmic, LSPR dismissed 99 results for being below the significance threshold, while EPR dismissed only 6 of them (see SI). The reported period values for NLLS and SR were all (SR) or almost all (77/79, NLLS) outside the circadian range, so both methods passed our weak arrhythmicity criterion. EPR identified 32% circadian periods, failing to identify arrhythmia by the weak criterion. As MESA and MFF do not have any significance test, we used them over a wider period range (15–35 h), in case spurious periods were returned at one of the boundaries. However, most of the periods found by MESA (62%) and MFF (78%) were inside our range of interest (16–32 h), so those methods also returned false positives by our weak criterion.

There are two problems with this test. Firstly it is not realistic, as biologists would not usually analyse obviously arrhythmic data but signals with at least some oscillating pattern. Secondly, we cannot objectively specify how many of the data series should fail, as we cannot estimate their arrhythmicity independently: the reported period values might correctly match structure in the data that was introduced by the noise.

We addressed those problems by examining the performance of all algorithms in classifying a class of arrhythmic signals that is common in circadian experiments, where rhythmic amplitude collapses within the time series. Clock-regulated molecular rhythms often also respond to environmental light and/or temperature signals. These responses are usually retained even if the clock is otherwise disabled. Such molecular components exhibit driven rhythms in a rhythmic environment, but become arrhythmic in a constant environment. A transition from rhythmic to constant conditions is therefore a common part of circadian protocols, and a relevant case for analysis.

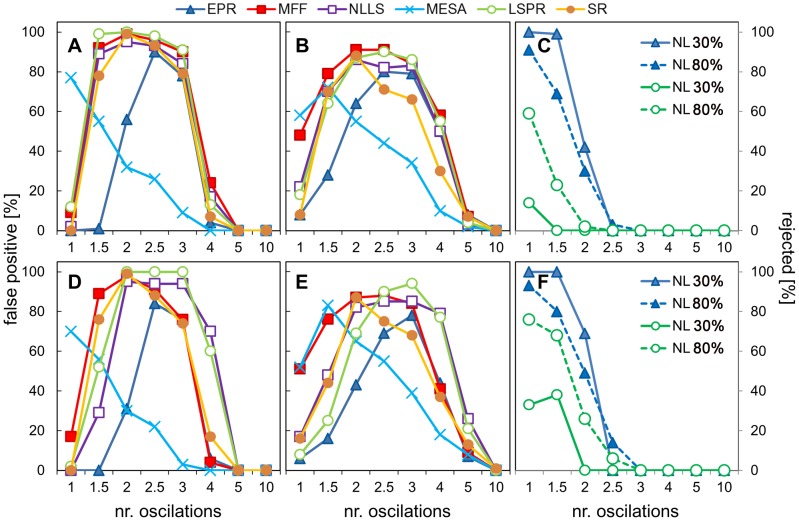

To simulate this we took two mathematical test signals of known, 24 hour period, and we applied a linear dampening filter to them, such that the amplitude of the signal was reduced to zero after 1 day, 1.5 days, 2 days, 2.5 days, 3, 4, 5, or 10 days. Noise was then added at 30% or 80% amplitude of the original time series (see supplementary material Figure S6 for example time series). The rationale for this was that there need to be at least 2 cycles (so in this case 2 days) of data for the data to be identifiably periodic. Hence those time series which are reduced to zero after 1 day or 1.5 days should be classified as arrhythmic. For the data with slower dampening, if a period is identified then this period should be close to the known 24 hour period of the underlying signal within the time series as it should dominate over the noise factor. We define such a period as being “accurate” (defined as ±0.5 hours from the expected 24 hour period). Any period values which are in the circadian range but not ‘accurate’ can be treated as false positives, because unlike in the previous experiment, we know the underlying ‘structure’ of our signal. In this test we assumed circadian range to be (18–30 h), while the methods were used over the wider range of (15–35), again the reason for that was the expectation of spurious periods returned at one of the boundaries. The results are shown in Figure 10. When discussing these results, we refer to data with the rhythmic signal reduced to 0 after the 2nd day as ‘2 days of data’, for example, though the full time series spanned 5 days.

Figure 10. Performance of the algorithms in classifying arrhythmic signals.