Abstract

Study Objectives:

Memory reactivation appears to be a fundamental process in memory consolidation. In this study we tested the influence of memory reactivation during rapid eye movement (REM) sleep on memory performance and brain responses at retrieval in healthy human participants.

Participants:

Fifty-six healthy subjects (28 women and 28 men, age [mean ± standard deviation]: 21.6 ± 2.2 y) participated in this functional magnetic resonance imaging (fMRI) study.

Methods and Results:

Auditory cues were associated with pictures of faces during their encoding. These memory cues delivered during REM sleep enhanced subsequent accurate recollections but also false recognitions. These results suggest that reactivated memories interacted with semantically related representations, and induced new creative associations, which subsequently reduced the distinction between new and previously encoded exemplars. Cues had no effect if presented during stage 2 sleep, or if they were not associated with faces during encoding. Functional magnetic resonance imaging revealed that following exposure to conditioned cues during REM sleep, responses to faces during retrieval were enhanced both in a visual area and in a cortical region of multisensory (auditory-visual) convergence.

Conclusions:

These results show that reactivating memories during REM sleep enhances cortical responses during retrieval, suggesting the integration of recent memories within cortical circuits, favoring the generalization and schematization of the information.

Citation:

Sterpenich V, Schmidt C, Albouy G, Matarazzo L, Vanhaudenhuyse A, Boveroux P, Degueldre C, Leclercq Y, Balteau E, Collette F, Luxen A, Phillips C, Maquet P. Memory reactivation during rapid eye movement sleep promotes its generalization and integration in cortical stores. SLEEP 2014;37(6):1061-1075.

Keywords: brain plasticity, conditioning, fMRI, memory consolidation, REM sleep

INTRODUCTION

A growing body of data indicates that patterns of neural activity prevailing during sleep support offline processing of newly acquired information, thereby promoting memory consolidation. Recent research has emphasized the importance of nonrapid eye movement (NREM) sleep oscillations, such as spindles1 or slow waves,2 in the consolidation of recent memories. In contrast, the role of rapid eye movement (REM) sleep in memory consolidation has been questioned.3–5 Yet, in humans, REM sleep is thought to be involved in the consolidation of motor6 and perceptual skills.7,8 Moreover, regional brain activity during REM sleep can be modified by prior practice during a motor sequence learning task, suggesting that REM sleep participates in the offline processing of motor memory traces.9–11 In contrast, the implication of REM sleep in declarative memory is debated.12–16 For example, it has been shown that theta power during REM sleep is enhanced after paired associative learning,17 and that REM sleep deprivation is associated with significantly lower recollection compared to slow wave sleep (SWS) deprivation in an episodic memory task.18 Recent studies suggest, however, that sleep,19–22 and particularly REM sleep, plays an active role in the consolidation of emotional declarative memories.23–25 This emotional memory enhancement was correlated with the quantity of REM sleep during a nap.25 Selective REM sleep deprivation also disrupts the consolidation of emotional texts.23

The causal influence of sleep on memory processing can arguably be tested by delivering a sensory cue during sleep that has been previously associated with learned material at encoding. Conditioned cues reactivate the associated neural circuits recruited during learning, while they are being transformed during sleep, thereby leading to detectable changes in subsequent behavior or regional brain activity. In humans, presentation of conditioned cues during NREM sleep has been associated with specific electroencephalograph (EEG) responses26 and improved retention of hippocampus-dependent declarative memories.26,27 In contrast, conditioned cues presented during wakefulness jeopardize memory consolidation because of interference with ongoing information processed while awake.28 Likewise, conditioned cues presented during REM sleep improved aversive conditioning in rodents29 and the retention of complex tasks in humans.30,31 However, their potential influence on declarative memory remains unknown.

SWS is often associated with consolidation of veridical memory through a hippocampo-cortical dialogue. A few recent studies have shown that sleep can also favor the generalization of information and the extraction of the gist, leading to false and illusory recognition.32–34 Emerging data suggest that REM sleep plays an important part in the integration and assimilation of new items into existing associative networks.35 REM sleep seems to favor the extraction of generalized information schemas, mainly in corticocortical networks.36

In this study we explored the behavioral and neurofunctional consequences of emotional and neutral memory reactivation during phasic REM sleep. Results indicate that experimental memory reactivation during REM sleep leads to a different outcome compared to reactivation during other sleep stages, promoting plastic changes in thalamocortical circuits and the interaction of new memories with existing semantically related representations.

METHODS

Population

Fifty-six healthy subjects (28 women and 28 men, age [mean ± standard deviation (SD)]: 21.6 ± 2.2 y) participated in this functional magnetic resonance imaging (fMRI) study. A semi-structured interview established the absence of medical, traumatic, psychiatric, or sleep disorders. All participants were nonsmokers and moderate caffeine consumers, and were not on medication. Their scores on a variety of self-assessed questionnaires (depression, anxiety, sleepiness, sleep quality, and circadian rhythms) fell within the normal range. All participants were right-handed as indicated by the Edinburgh Handedness Inventory.37 Subjects were categorized into four groups (see next paragraphs) of 14 volunteers (seven women and seven men in each group). The self-assessed questionnaire scores (depression, anxiety, sleepiness, sleep quality, and circadian rhythms) and age did not differ significantly among the four groups (F(4.59) = 1.6, P > 0.5) (see Table S1, supplemental material). Two volunteers of the group “New” were excluded before analysis because they rated the pictures as significantly less emotional during the valence ratings of the encoding session than the rest of the sample (less than two times the SD below the mean: 32.2% and 33.3%, respectively, for emotional pictures, compared with a population mean of 51.3 ± 9.7% [mean ± SD]). These low emotional scores hindered the analysis of fMRI data in these volunteers. Fifty-four volunteers were eventually included in the analysis. Participants gave their written informed consent to take part in the study and received financial compensation for their participation. The study was approved by the Ethics Committee of the Faculty of Medicine of the University of Liège.

Experimental Task and Material

Visual stimuli consisted of 120 negative and 120 neutral pictures of faces, with an equal proportion of men and women in each category. Part of the stimuli were sampled from the International Affective Picture System.38 Neutral and negative pictures were balanced in terms of contextual features around the face. In a pilot study, 12 unrelated subjects rated the pictures for emotional valence on a four-point scale (0: neutral, 4: very negative). The mean emotional valence was 2.6 ± 0.4 (SD) for the negative faces and 1.3 ± 0.2 (SD) for the neutral ones. Auditory stimuli consisted of three different sounds with a duration of 1 sec each, consisting of chirps with the frequency increasing between 220 Hz and 440 Hz for sound 1, decreasing from 440 Hz to 220 Hz for sound 2, and with crescendo/decrescendo frequency between 220 Hz and 440 Hz for sound 3. A gaussian window was applied to all sounds to attenuate edge effects.
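The three auditory cues described above can be illustrated with a minimal sketch. It assumes linear frequency sweeps, an 8 kHz sampling rate, a Gaussian window whose width is 20% of the sound duration, and that the "crescendo/decrescendo" sound rises for 0.5 sec and then falls for 0.5 sec. None of these parameters are reported in the text, and the function names are hypothetical.

```python
import math

def chirp(f_start, f_end, duration=1.0, rate=8000):
    """Linear chirp whose instantaneous frequency moves from f_start to f_end (Hz)."""
    n = int(duration * rate)
    sweep = (f_end - f_start) / duration  # sweep rate in Hz per second
    samples = []
    for i in range(n):
        t = i / rate
        # Phase is the integral of the instantaneous frequency f_start + sweep * t
        phase = 2.0 * math.pi * (f_start * t + 0.5 * sweep * t * t)
        samples.append(math.sin(phase))
    return samples

def gaussian_window(n, sigma_frac=0.2):
    """Gaussian taper centred on the sound; sigma_frac is an assumed width."""
    centre = (n - 1) / 2.0
    sigma = sigma_frac * n
    return [math.exp(-0.5 * ((i - centre) / sigma) ** 2) for i in range(n)]

def windowed(samples):
    """Apply the Gaussian window to attenuate onset and offset edge effects."""
    return [s * w for s, w in zip(samples, gaussian_window(len(samples)))]

sound1 = windowed(chirp(220.0, 440.0))  # rising sweep
sound2 = windowed(chirp(440.0, 220.0))  # falling sweep
# Sound 3: rise then fall, one assumed reading of "crescendo/decrescendo frequency"
sound3 = windowed(chirp(220.0, 440.0, duration=0.5) + chirp(440.0, 220.0, duration=0.5))
```

With these assumed parameters the window reduces the amplitude at both edges to less than 5% of the peak, which is the purpose of the windowing step.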

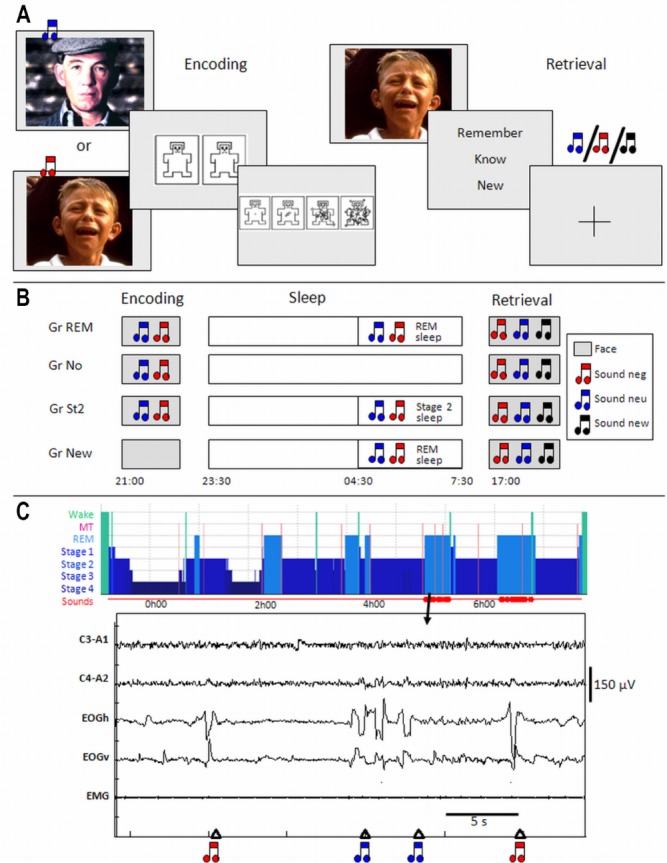

Each participant underwent scanning during an encoding and a retrieval session (Figure 1A). During a preliminary fMRI scanning session before encoding and retrieval, volunteers set the sound volume to ensure an optimal auditory perception.

Figure 1.

Protocol. (A) Encoding and retrieval trials. (B) General study design. (C) Example of a hypnogram and 30-sec recording of a Gr REM participant during the postencoding night (C3-A1, C4-A2: electroencephalogram; EOGV and EOGH: vertical and horizontal electro-oculograms; EMG: chin electromyogram; MT: movement time). Gr, group; Neg, negative; Neu, neutral; REM, rapid eye movement.

During the incidental encoding, 90 pictures of each emotional valence were randomly chosen and displayed in groups of six consecutive items with the same valence, in order to enhance the emotional effect. The order of the pictures was otherwise randomized. Each face was displayed (16° × 22°) for 3 sec. Once the stimulus disappeared, the subjects were asked to rate the valence and the arousal of the face using a rating system inspired by the Self-Assessment Manikin.39 For valence, subjects had a maximum of 10 sec to choose between two icons, one representing negative emotion and the second representing neutral emotion, by pressing a keypad held in the right hand. The forced-choice method was selected for convenience of analysis and to ensure a robust design. For arousal, subjects had a maximum of 10 sec to choose from four icons representing different emotion intensities (from low to very high intensity), by pressing on a keypad (Figure 1A). Each trial ended with a fixation cross (3.75° × 3.75°) displayed on a light background for 1.5-sec duration. Trials ended as soon as participants responded on the keypad, resulting in a jitter between trials (range 0.24-4.3 sec for valence and 0.29-3.3 sec for intensity). Between the groups of pictures with the same emotional valence, the fixation cross was displayed for 10 to 15 sec. No indication was made; thus, participants were unaware that their memory for the pictures would be subsequently probed.

Although irrelevant to the ongoing task, two of the three sounds were randomly chosen for the encoding session for each subject: one sound was associated with emotional pictures and the second with neutral faces. None of the subjects noticed this systematic contingency, as revealed by posttesting debriefing. The association between sounds with neutral or negative pictures was randomized across subjects. In all groups, except group New (see next paragraphs), sounds were delivered during encoding, 500 ms after the onset of the presentation of the pictures. The third sound was presented during retrieval as a new sound.

During the retrieval session, the 180 previously presented faces (90 negative and 90 neutral) were considered as “old” pictures and were mixed with 60 new faces (30 negative and 30 neutral), which had not been presented during encoding. The 240 pictures were presented in a randomized order. Each picture was displayed for 2 sec (16° × 22°), then participants had a maximum of 10 sec to choose one of three possible responses: “Remember”, “Know” or “New”. A “Remember” (R) response indicated that the recognition of the item was associated with retrieval of specific contextual details during encoding (for example, the rating of emotional valence). A “Know” (K) response was associated with the feeling of having encoded the item but without being able to retrieve any additional specific details. A “New” response was given when the participant thought the item had not been presented during encoding. Participants responded on a keypad that was held in their right hand. Trials ended as soon as the response was made, inducing a jitter in the presentation of the pictures (range 0.20 to 3.8 sec). In this paper, recollection specifically pertains to correct “Remember” responses.

At the end of the session, subjects rated the 60 new pictures for emotional valence with the scale used during encoding. Note that emotional ratings were requested during the first presentation of “old” pictures (during encoding) but during the second presentation for “new” pictures (during the debriefing session after the test session). Thus, the ratings of “new” pictures might be confounded by a habituation to experimental images.

During encoding, we could not assess whether conditioning actually occurred because sounds and pictures were presented simultaneously and the corresponding brain responses could not be teased apart. For this reason, during retrieval, to substantiate sound conditioning, the extinction of responses to conditioned cues was determined. Three sounds (negative, neutral, and new) were randomly presented without any fixed temporal contingency with the visual stimuli. This means that the sounds were presented alone, and they never coincided with the presentation of a picture to avoid potential influence on retrieval. Each sound was presented 30 times. We expected that extinction would manifest itself as a negative modulation by time, selectively for responses to conditioned sounds. Note that in these conditions, extinction does not have any effect on the experimental manipulation (i.e., presenting conditioned cues during sleep). A random jitter from 1 to 3 sec was added before and after each sound to decorrelate sound and picture onset vectors. In addition, the full randomization of both sounds and faces further decreased the potential detrimental effect of conditioned cues on face retrieval.

Note that we used the term “conditioning” because pictures of faces were either emotional or neutral. The pictures have intrinsic emotional valence and could be considered as unconditioned stimuli. All sounds were initially neutral and were considered as conditioned stimuli. Referring to conditioning also evokes the concept of extinction, which here corresponds to a time-dependent decrease in responses to conditioned sounds during retrieval that are determined in fMRI analysis.

However, it also could be considered that the visual stimuli were associated with the auditory stimuli. Because of reinforcement of this association during REM sleep, responses to visual stimuli during subsequent testing should be detected in brain areas processing both visual and auditory information. In this case, the experiment is best described as testing association memory.

Experimental Design

Volunteers followed a 4-day constant sleep schedule (23:00–07:00 or 24:00–08:00 ± 30 min) before the experiment. Compliance with the schedule was assessed using both sleep diaries and wrist actigraphy (Actiwatch, Cambridge Neuroscience, Cambridge, UK). According to the sleep diaries, mean sleep duration for the 2 nights preceding the experiment did not differ between groups (F(4.57) = 0.86, P > 0.05). Approximately 1 week before the experiment, volunteers spent a habituation night at the laboratory. This night served to adapt the volunteers to sleep polygraphic recordings in the laboratory and to verify that they had normal sleep. On the experimental night, subjects reported to the laboratory between 20:30 and 22:00 to perform the encoding session in the fMRI scanner. After the encoding session, electrodes were attached for sleep recording and subjects went to bed in a soundproof room between 23:00 and 24:00. Sleep was monitored by standard polysomnography (V-Amp, Brain Products, Gilching, Germany) including six EEG channels (Fz, Cz, Pz, Oz, C3, C4, reference: mastoids), chin electromyograph (EMG), and vertical and horizontal electrooculograph (EOG) recordings (sampling rate: 500 Hz, bandwidth: DC to Nyquist frequency). Before sleep, the sound detection threshold for each individual subject was determined. To avoid awakenings, the sound volume was set 40% above the individual detection threshold estimated before sleep onset (about 1.5 dB HL). Sounds were presented binaurally via small headphones that remained in the ears of the volunteer throughout the night. Sounds were presented during the second part of the night, between 04:30 and wake time. We chose to present sounds during the second part of the night because, in humans, this part is characterized by a large proportion of REM sleep. “Phasic” REM sleep (i.e., the periods of REM sleep associated with transient events such as REMs) was specifically targeted because it seems particularly involved in memory processing.
In animals, phasic REM sleep is associated with pontine waves, which are readily recorded in the pons, thalamus, hippocampus, and cortex.40 Following active avoidance learning, their density changes during REM sleep episodes in proportion to behavioral improvements that occur between initial training and postsleep testing.41 Their selective suppression deteriorates subsequent avoidance behavior,42 whereas their pharmacological enhancement prevents the detrimental behavioral effects of posttraining REM sleep deprivation.43 Indirect evidence suggests that brain activity corresponding to pontine waves exists in humans during REM sleep, in close temporal association with eye movements.44 Finally, tonic REM sleep was not taken as a control condition because it is not devoid of subclinical phasic pontine activity.45

During the night, vigilance state was classified online by the experimenters according to the criteria described by Rechtschaffen and Kales46 adapted on a 20-sec epoch basis. Participants were left undisturbed until 04:30. After this time, and depending on their experimental group, subjects received auditory stimulation during sleep. Participants were categorized into four groups according to whether sounds would be delivered during sleep, and/or during which stage of sleep the sounds were delivered (Figure 1B). In the first group (“Gr REM”), two sounds were associated with faces during encoding. Both sounds were presented during ocular movements of REM sleep (phasic REM sleep, Figure 1C). In the second group (“Gr No”), two sounds were presented during encoding but not during sleep. These subjects did not know whether sounds would be presented during the night and also wore the headphones during sleep. In a third group (“Gr St2”), two sounds were conditioned during encoding and presented during stage 2 of NREM sleep in the second part of the night, as for Gr REM. Note that a control group in which cues would have been delivered during deep NREM sleep (SWS) was not possible because this sleep stage predominates in the first half of the night. In the fourth group (“Gr New”), the sounds were not present during encoding but were delivered during ocular movements of REM sleep, as in Gr REM. The retest session took place the next evening between 16:30 and 20:00.

After the end of the retrieval session, subjects were debriefed and asked to justify their “Remember” and “Know” responses to ensure that they had understood the instructions clearly. Participants were also asked whether they had been aware of the temporal contingency between pictures and sounds and if they had perceived the auditory cues during sleep.

Analysis of Behavioral Data

During encoding, subjects rated the negative and neutral faces for emotional valence (1: neu, 2: neg) and intensity (1: very low, 2: low, 3: high, 4: very high). Two separate analyses of variance (ANOVAs) were performed to test for differences in emotional valence and intensity between negative and neutral faces for the different sleep groups.

During the retest, memory performance was assessed by the proportion of old items correctly recollected (R), old items correctly recognized by familiarity (K), old items identified as new (misses), and new items identified as old (false alarms). Two analyses were performed to test the effect of sleep on the different measures of memory (independently of emotion) and the effect of sleep on emotional memory respectively. Post hoc comparisons were conducted using the least significant difference Fisher test.

Finally, the discrimination index (d') and the criterion (C) were computed according to the procedure of Snodgrass and Corwin47 for each group. They were calculated using hits (R + K), Miss, Correct rejection, and False alarm (R + K). A negative (resp. positive) criterion indicates that participants use a permissive (resp. conservative) decision strategy. A criterion close to zero suggests that volunteers are unbiased in their decision.
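As an illustration of these two indices, the sketch below computes d' and C from response counts using the standard signal detection formulas, with the 0.5 correction of hit and false alarm rates recommended by Snodgrass and Corwin. Whether this correction was applied in the present analysis is an assumption, and the function name and the counts in the example are hypothetical.

```python
from statistics import NormalDist

def dprime_and_criterion(hits, misses, false_alarms, correct_rejections):
    """Return (d', C) from raw response counts.

    Hits and false alarms pool "Remember" and "Know" responses (R + K).
    Rates are corrected as (count + 0.5) / (n + 1) so that rates of 0 or 1
    never produce infinite z scores (assumed correction).
    """
    n_old = hits + misses
    n_new = false_alarms + correct_rejections
    hit_rate = (hits + 0.5) / (n_old + 1)
    fa_rate = (false_alarms + 0.5) / (n_new + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion

# Hypothetical participant: 180 old items and 60 new items, as in the retrieval session
d_prime, criterion = dprime_and_criterion(
    hits=120, misses=60, false_alarms=12, correct_rejections=48
)
```

A negative C arises when both corrected rates are high (permissive responding), and a positive C when both are low (conservative responding), which matches the interpretation given above.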

Analysis of EEG Data

After the night, sleep stages were visually scored according to standard criteria by Rechtschaffen and Kales46 adapted on a 20-sec epoch basis using the FASST toolbox.48 EEG artifacts were visually detected and excluded from further analysis. The number of arousals from 04:30 to 07:30 was also computed to ensure that sounds presented during sleep did not induce arousals. An arousal corresponded to an abrupt shift in EEG frequency that lasted at least 3 sec, with at least 10 sec of stable sleep preceding the change. Arousal scoring during REM sleep additionally required a concurrent increase in submental EMG of at least 1 sec. A spectral analysis and a time-frequency analysis were conducted to detect any change in EEG power suggestive of an arousal. See the supplemental material for more information about the spectral analysis method.

Functional MRI Acquisition

Data were acquired with a 3T head-only magnetic resonance scanner (Allegra, Siemens, Erlangen) using a gradient echo/echo planar imaging (EPI) sequence (32 transverse slices with 30% gap, voxel size: 3.4 × 3.4 × 3.4 mm, repetition time (TR): 2460 ms, echo time (TE): 40 ms, flip angle (FA): 90°, field of view (FOV): 220 mm, delay: 0 ms). Between 605 and 800 functional volumes were acquired during encoding and between 565 and 730 functional volumes during retest. In all sessions, the first three volumes were discarded to account for magnetic saturation effects. A structural magnetic resonance scan was acquired at the end of the experimental session (T1-weighted three-dimensional magnetization prepared rapid acquisition gradient echo [MPRAGE] sequence, TR: 1960 ms, TE: 4.43 ms, TI: 1100 ms, FOV: 230 × 173 mm², matrix size 256 × 192 × 176, voxel size: 0.9 × 0.9 × 0.9 mm).

Pictures were displayed on a screen positioned at the rear of the scanner, which the subject could comfortably see through a mirror mounted on the standard head coil. Sounds were transmitted using MR CONTROL amplifier and headphones (MR Confon, Magdeburg, Germany).

Functional MRI Analysis

fMRI data were analyzed using Statistical Parametric Mapping, SPM5 (http://www.fil.ion.ucl.ac.uk) implemented in MATLAB 6.7 (Mathworks Inc., Sherborn, MA). Functional scans were realigned using iterative rigid body transformations that minimize the residual sum of squares between the first and subsequent images. They were normalized to the MNI EPI template (B-spline interpolation, voxel size: 2 × 2 × 2 mm³) and spatially smoothed with a gaussian kernel with full-width at half maximum (FWHM) of 8 mm.

Data were processed using a two-step analysis, taking into account intraindividual and inter-individual variance, respectively. For each subject, brain responses were modeled at each voxel, using a general linear model. The trials of both sessions were classified post hoc, according to the memory performance (hits, misses, correct rejections, and false alarms). For the encoding session, four trial types were modeled: negative or neutral images subsequently correctly remembered (“Neg-R”, “Neu-R”), and negative or neutral images subsequently correctly identified as known (“Neg-K”, “Neu-K”). An additional trial type consisted of subsequently forgotten images. Sounds were not modeled separately because they were delivered together with the faces. For the retrieval session, six trial types were modeled for the faces: old negative or neutral images correctly remembered (“Neg-R”, “Neu-R”), old negative or neutral images correctly identified as known (“Neg-K”, “Neu-K”), and new negative or neutral images correctly identified as new (“Neg-N”, “Neu-N”). Additional trial types consisted of forgotten images (misses) and false alarms. The three sounds were also modeled because they appeared independently from the faces: the old sound previously associated with emotional faces (“sound_neg”), the old sound previously associated with neutral faces (“sound_neu”), and the new sound (“sound_new”). Three further regressors were included, representing linear modulation by time of the activity elicited by the three sounds. The contrasts pertaining to the sounds were not conducted in the Gr New group because these participants were not conditioned during encoding. For this group, the three types of sounds were modeled in the design matrix but were not used in the contrasts. Each picture was categorized as neutral or negative based on the individual subjective ratings of the valence. 
On average for all 54 subjects, 87.8 ± 9.4% (mean ± SD) of the negative pictures were scored as negative, and 81.2 ± 13.9% of the neutral images were scored as neutral.

For each trial type, a given item was modeled as a delta function representing its onset. The ensuing vector was convolved with the canonical hemodynamic response function, and used as a regressor in the individual design matrix. Movement parameters estimated during realignment (translations in x, y, and z directions and rotations around x, y, and z axes) and a constant vector were also included in the matrix as regressors of no interest. A high-pass filter was implemented using a cutoff period of 128 sec to remove low-frequency drifts from the time series. Serial autocorrelations were estimated with a restricted maximum likelihood algorithm using an autoregressive model of order 1 (+ white noise).
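The construction of one such regressor can be sketched as follows. This is an illustration only, not the SPM implementation: it assumes a double-gamma response shape (a peak gamma density of shape 6 minus one-sixth of an undershoot gamma density of shape 16), a 0.1-sec working time grid, and hypothetical onset times. Only the TR of 2.46 sec is taken from the acquisition parameters.

```python
import math

TR = 2.46  # repetition time in seconds, from the acquisition parameters

def gamma_pdf(t, shape):
    """Gamma density with unit scale, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1.0 / 6.0):
    """Assumed canonical-like response: early peak minus a scaled late undershoot."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)

def build_regressor(onsets, n_scans, tr=TR, dt=0.1, hrf_len=32.0):
    """Convolve delta functions at the onsets with the HRF, then sample once per scan."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = [0.0] * n_fine
    for onset in onsets:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0  # delta function marking the trial onset
    kernel = [double_gamma_hrf(i * dt) for i in range(int(round(hrf_len / dt)))]
    conv = [0.0] * n_fine
    for i, s in enumerate(sticks):
        if s == 0.0:
            continue
        for j, h in enumerate(kernel):
            if i + j < n_fine:
                conv[i + j] += s * h
    # One value per acquired volume, taken at the start of each scan
    return [conv[min(int(round(k * tr / dt)), n_fine - 1)] for k in range(n_scans)]

# Hypothetical onsets (in seconds) for one trial type
regressor = build_regressor(onsets=[10.0, 40.0, 75.0], n_scans=50)
```

Each trial type yields one such column of the individual design matrix, alongside the movement parameters and the constant term.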

For the retest session, linear contrasts estimated the main effect of memory (remember versus know) and more interestingly, the emotion by memory interaction ([Neg-R > Neu-R] – [Neg-K > Neu-K]). Linear contrasts also estimated the main effect of the three sounds during retest (sound_neg + sound_neu + sound_new), the effect of conditioning between sounds and faces modulated by time ([sound_neg + sound_neu > sound_new] × decreasing modulation by time), and the effect of the emotional conditioning modulated by time ([sound_neg > sound_neu] × decreasing modulation by time).
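To make the contrast definitions concrete, the following sketch expresses the main effect of memory and the emotion by memory interaction as weights over the four face regressors. The weights follow directly from the contrast formulas above; the parameter estimates (betas) and the helper name are hypothetical.

```python
CONDITIONS = ["Neg-R", "Neu-R", "Neg-K", "Neu-K"]

# Main effect of memory: remember versus know, pooled over emotion
recollection = {"Neg-R": 1, "Neu-R": 1, "Neg-K": -1, "Neu-K": -1}

# Emotion by memory interaction: (Neg-R - Neu-R) - (Neg-K - Neu-K)
interaction = {"Neg-R": 1, "Neu-R": -1, "Neg-K": -1, "Neu-K": 1}

def contrast_value(weights, betas):
    """Weighted sum of parameter estimates at one voxel."""
    return sum(weights[c] * betas[c] for c in CONDITIONS)

# Hypothetical parameter estimates at a single voxel
betas = {"Neg-R": 2.0, "Neu-R": 1.0, "Neg-K": 0.5, "Neu-K": 0.5}

memory_effect = contrast_value(recollection, betas)      # 2.0
interaction_effect = contrast_value(interaction, betas)  # 1.0
```

Both weight vectors sum to zero, so each contrast tests a difference between conditions rather than the overall response level.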

The resulting set of voxel values constituted a map of t statistics. The individual contrast images were spatially smoothed with a gaussian kernel with FWHM of 6 mm and used in a second-level analysis, corresponding to a random-effects analysis. The second-level analysis consisted of an ANOVA, which tested for the effects of interest for all subjects and compared Gr REM to the control groups (Gr REM > Gr No + Gr St2 + Gr New). This contrast corresponded to a memory by sleep group interaction or a memory by emotion by sleep group interaction. The resulting set of voxel values was thresholded at P < 0.001 (uncorrected). Statistical inferences were corrected for multiple comparisons using gaussian random field theory at the voxel level in a small spherical volume (radius 10 mm) around a priori locations of interest, selected from the literature (see Tables 1, 2, and 3). Coordinates were chosen from articles exploring recognition memory (for hippocampus and medial prefrontal cortex [MPFC]), detection of emotion (for amygdala, locus coeruleus, and insula), vision (for occipital cortex), and audition (for temporal cortex and geniculate body).
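Because the functional images were smoothed with an 8-mm kernel during preprocessing and the contrast images with a further 6-mm kernel, the overall smoothing applied to the second-level data can be estimated. The sketch below assumes that both kernels are Gaussian and applied in sequence, in which case their variances add. It ignores the intrinsic smoothness of the data, and the function names are hypothetical.

```python
import math

# For a Gaussian, FWHM = 2 * sqrt(2 * ln 2) * sigma
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def fwhm_to_sigma(fwhm_mm):
    """Standard deviation (mm) of a Gaussian kernel with the given FWHM (mm)."""
    return fwhm_mm * FWHM_TO_SIGMA

def combined_fwhm(*fwhms_mm):
    """FWHM of successive Gaussian smoothings: squared widths add."""
    return math.sqrt(sum(f * f for f in fwhms_mm))

sigma_first_level = fwhm_to_sigma(8.0)  # about 3.4 mm
effective_fwhm = combined_fwhm(8.0, 6.0)  # 10.0 mm
```

Under these assumptions the applied smoothing is equivalent to a single 10-mm FWHM kernel, the same size as the 10-mm radius of the spheres used for small-volume correction.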

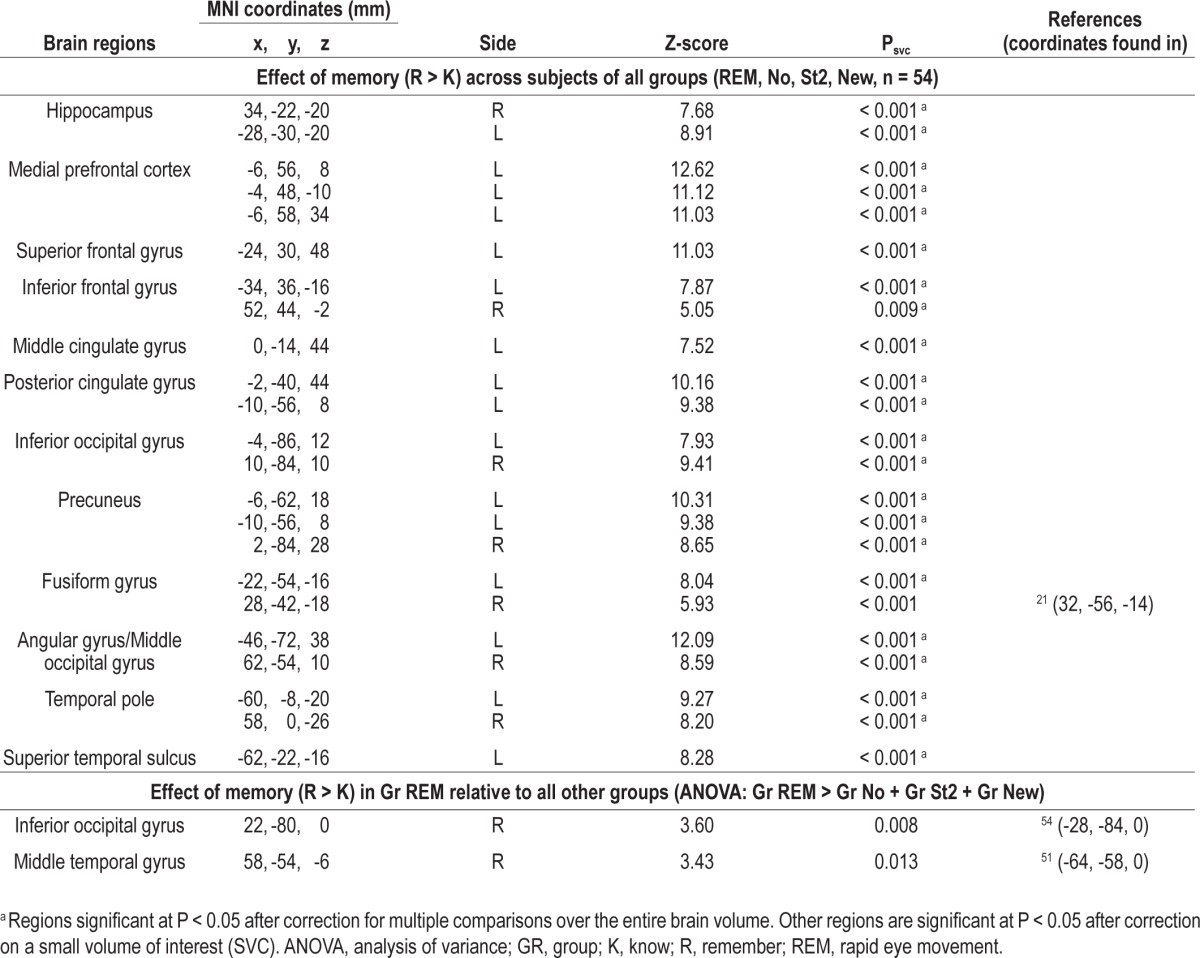

Table 1.

Response to sounds (Neg, Neu, New) during retest for all subjects (except Gr New) (n = 42)

Table 2.

Functional magnetic resonance imaging result for the retrieval session. Main effect of recollection (R > K)

Table 3.

Interaction between emotion and memory [(Neg > Neu) × (R > K)] during retrieval

RESULTS

Normal volunteers participated in two fMRI sessions, 20 h apart (Figure 1A). During an evening incidental encoding session, participants encoded neutral (Neu) and emotionally negative (Neg) pictures of faces. Critically, the presentation of each picture was associated with one auditory cue.

Four experimental groups were examined. The participants in the first group slept undisturbed during the entire night (Gr No, N = 14). In the second group (Gr REM, N = 14), the two conditioned auditory cues were presented in a randomized order during the bursts of eye movements characteristic of REM sleep (Figures 1B and 1C). The third group of participants followed the same experimental protocol with the two auditory cues presented during the second half of the night, although exclusively in stage 2 of NREM sleep (Gr St2, N = 14, Figure 1B). In the fourth group (Gr New, N = 12), subjects were presented with two sounds during phasic REM sleep, which had not been delivered during encoding (Figure 1B). Participants in the groups REM, St2, and No received the same sound-picture contingencies during encoding, and those in the groups REM, St2, and New received an equivalent number of sounds (mean ± standard error of the mean [SEM]: Gr REM: 148.3 ± 6.6, Gr St2: 136.9 ± 7.4, P = 0.26; Gr New: 138.0 ± 7.3, P = 0.31), during the same period of the night (clock time range: 04:30 to 07:30). Moreover, the mean interstimulus interval (ISI) for the presentation of the sounds during sleep did not differ among the stimulated groups (mean ISI ± SD: Gr REM = 18.8 ± 7.8 s, Gr St2 = 22.4 ± 10.8 s, Gr New = 22.7 ± 7.9 s, one-way ANOVA: F(2,36) = 0.76, P = 0.47).

The following day during a second evening fMRI session, participants had to make recognition memory judgments about previously studied pictures and new pictures.

Behavioral Results During the Encoding Session

During encoding, subjects rated the negative and neutral faces for emotional valence (1: neu, 2: neg) and intensity (1: very low, 2: low, 3: high, 4: very high). For the 54 subjects included in the analysis, the mean score (± SEM) for the valence was 1.19 ± 0.02 for neutral faces and 1.88 ± 0.01 for negative faces on the two-point scale. The mean score for the intensity was 1.40 ± 0.05 for neutral faces and 2.37 ± 0.06 for negative faces on the four-point scale. Statistical tests demonstrated that negative pictures were subjectively more emotional than neutral pictures, and that mean valence and intensity during the encoding session were not significantly different among groups (see supplemental material).

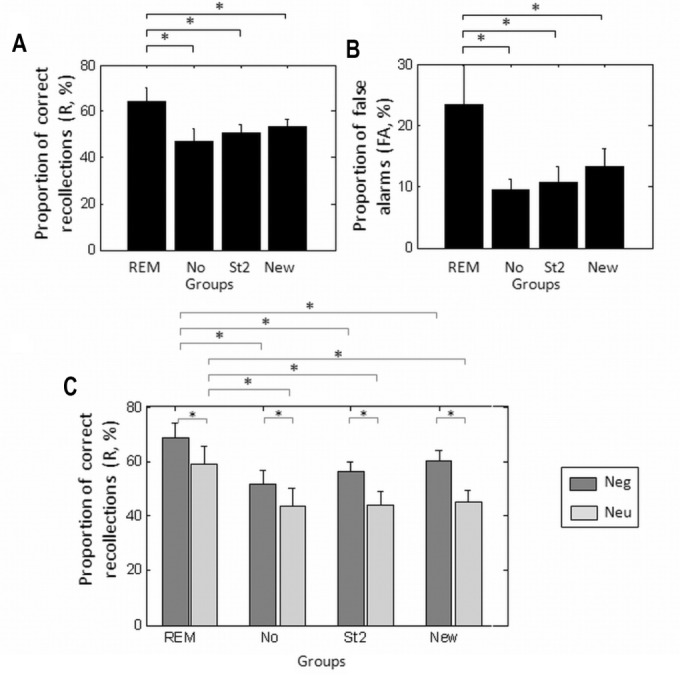

Behavioral Results During Retrieval: Enhancement of Both Correct and Incorrect Recollection After Stimulation During REM Sleep

We performed an ANOVA on memory performance with memory type (R, K, Miss, FA) as a within-subject factor and sleep group (Gr REM, No, St2, New) as a between-subject factor. There was a significant effect of group (F(3,50) = 2.85, P = 0.047), a significant effect of memory (F(3,150) = 81.74, P < 0.001) and a significant interaction between memory and sleep group (F(9,150) = 2.31, P = 0.018). Post hoc analyses revealed that the presentation of auditory cues during REM sleep significantly enhanced recollection by 16.6% relative to unstimulated sleep (P < 0.001, Figure 2A, Table 4). This selective enhancement of recollection (R responses) cannot be attributed to a shift from “familiarity” to “recollection” judgments because volunteers followed specific instructions concerning Remember and Know responses, were trained to differentiate these response types, and were debriefed concerning the strategy they actually followed during the retest session.

Figure 2.

Behavioral results. (A) Proportion of overall recollection (%). (B) Proportion of false alarms (%). (C) Proportion of recollection for negative and neutral faces (%). Error bars represent standard error of the mean. FA, false alarm; Neg, negative; Neu, neutral; R, recollection; REM, rapid eye movement.

Table 4.

Memory performance in percentage (mean ± standard error of the mean)

Recollection rates were also larger after presenting the conditioned cues during REM sleep than after stimulation during stage 2 sleep (P < 0.007, Figure 2A, Table 4). These results indicate that the enhancement of recollection is selectively associated with cues presented during phasic REM sleep. In contrast to Gr REM, and compared to unstimulated sleep, presenting the auditory cues during stage 2 sleep did not affect any memory measures.

Similarly, recollection rates achieved by Gr New participants did not significantly differ from those of subjects who did not receive any stimulation during sleep (P = 0.27) and were significantly worse than rates achieved by those in the Gr REM group (P = 0.04, Figure 2A, Table 4). In addition, hit rates (percentage of recollection) during retest tended to increase with the number of sounds delivered during REM sleep in the Gr REM group (r = 0.45, P = 0.11). This correlation was significant (P = 0.02) when discarding one volunteer who received significantly fewer sounds than the rest of the participants in Gr REM (fewer than the mean minus two times the SD, i.e., 92 sounds). In Gr New, hit rates significantly decreased with the number of sounds delivered during REM sleep (r = -0.58, P = 0.048), and the difference in regression between groups was significant (P = 0.018). These results indicate that only sounds previously associated with faces during wakefulness and delivered during REM sleep were likely to maintain subsequent recollection, whereas unconditioned cues significantly deteriorated subsequent recollection. The correlation between hit rates and number of sounds during sleep was not significant in Gr St2 (r = 0.27, P = 0.35). We also checked that the proportions of sounds presented during an arousal, followed by an arousal within the 3-sec poststimulus period, or presented during a wake period did not differ among groups. Results from ANOVAs indicated that the percentage of sounds presented during an arousal (F(2,35) = 0.77, P = 0.47) and the percentage followed by an arousal within the 3-sec poststimulus period (F(2,35) = 0.08, P = 0.92) were not significantly different among groups (Table 5). Moreover, a third ANOVA demonstrated that the percentage of sounds presented during a period determined post hoc as wake and not as sleep also did not differ significantly among the three groups (F(2,35) = 2.12, P = 0.13). These results suggest that (1) the prevalence of stimulus-related arousals did not differ between groups and that (2) the reactivation process did not occur during wake but mainly during REM sleep or stage 2 sleep.
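The exclusion rule and the correlation described above can be illustrated with a minimal sketch. It assumes a plain Pearson correlation and a cutoff of the group mean minus two sample SDs; the function names and the use of the sample (n - 1) SD are illustrative assumptions, not details taken from the study, and no P value is computed here.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def drop_low_outliers(n_sounds, hit_rates, k=2.0):
    """Discard participants whose number of sounds is below mean - k * SD.

    Hypothetical helper: k = 2 mirrors the two-SD rule in the text; the
    sample SD (n - 1 denominator) is an assumption.
    """
    cutoff = mean(n_sounds) - k * stdev(n_sounds)
    kept = [(n, h) for n, h in zip(n_sounds, hit_rates) if n >= cutoff]
    return [n for n, _ in kept], [h for _, h in kept]
```

In use, `pearson_r` would be computed once on all participants of a group and once on the output of `drop_low_outliers`, which corresponds to the two correlations reported for Gr REM.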

Table 5.

Sleep parameters (mean ± standard deviation)

Recognition by familiarity (K response) was not significantly different in Gr REM compared to the control groups (K responses: Gr REM versus Gr No: P = 0.11, Gr REM versus Gr St2: P = 0.18, Gr REM versus Gr New: P = 0.11, Figure S1), indicating that no significant effect of cue presentation during REM sleep could be detected for familiarity responses.

Interestingly, false alarms, i.e., new stimuli incorrectly identified as old (R or K), significantly differed between Gr REM and control groups (Gr REM versus Gr No: P = 0.007; versus Gr St2: P = 0.01; Figure 2B; Table 4) and showed a trend relative to Gr New (P = 0.06), because subjects in the Gr REM group identified a greater number of new pictures as old than did the control groups. These results indicate that conditioned cues delivered during phasic REM sleep did not selectively consolidate memories for the learned items but seemed to have established and reinforced associations between representations of the learned material and other face-related representations, thereby subsequently leading participants to more readily consider new items as previously encoded.

Finally, to quantify overall memory performance, irrespective of recognition strategy, i.e., recollection (R responses) or familiarity (K responses), we computed d' and C. Presentation of auditory cues during REM sleep did not globally improve recognition memory, because d'47 was not significantly different among groups (F(3,50) = 1.9, P = 0.14). In contrast, the decision criterion differed across groups (F(3,50) = 3.1, P = 0.03). It was more lenient in Gr REM than in the other groups (Gr No: P = 0.01; Gr St2: P = 0.02; although this effect fell short of significance relative to Gr New: P = 0.07; Table 4). The modification of criterion was due to the enhancement of both recollection and false alarm rates, which were higher in Gr REM than in the control groups.
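For readers unfamiliar with these indices, the sketch below shows the standard signal detection definitions, d' = z(H) - z(FA) and C = -(z(H) + z(FA))/2, where H is the proportion of old items judged old (R or K) and FA the proportion of new items judged old. The function name is hypothetical, and any correction applied for rates of exactly 0 or 1 is not specified in this section, so none is implemented.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate, fa_rate):
    """Return (d', C) from hit and false alarm proportions.

    Rates must lie strictly between 0 and 1; the inverse normal CDF is
    undefined at the boundaries and will raise an error there.
    A negative C indicates a lenient criterion (bias toward "old").
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion
```

Under these definitions, raising hits and false alarms together shifts C toward more lenient values while leaving d' largely unchanged, which is the pattern described for Gr REM.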

By Design, Auditory Cues Presented During REM Sleep Favor Both Emotional and Neutral Recollection

Another ANOVA was performed to test the effect of emotion on memory performance. The ANOVA used memory (R versus K versus Miss versus FA) and emotion (Negative versus Neutral) as within-subject factors and sleep as the between-subject factor (Gr REM, No, St2, New). We observed a significant effect of memory (F(3,150) = 80.9, P < 0.001) and a significant interaction between emotion (emo) and memory (F(3,150) = 25.9, P < 0.001) because the beneficial effect of emotion on memory was larger for recollection than for familiarity (emo versus neu, for R responses: F(1,50) = 42.8, P < 0.001, emo versus neu for K responses: F(1,50) = 26.9, P < 0.001). There was also a trend for a significant effect of sleep group (F(3,50) = 2.57, P = 0.06). Finally, the three-way interaction between emotion, memory, and sleep was not significant (F(9,150) = 0.72, P = 0.69). Exploratory post hoc analyses revealed that emotional faces were better remembered than neutral ones for each group (Neg_R > Neu_R: Gr REM: P = 0.002, Gr No: P = 0.008, Gr St2: P < 0.001, Gr New: P < 0.001, Figure 2C, Table 4). However, presentation of auditory cues during REM sleep was not associated with a selective improvement in recollection of negative items because recollection rates were also enhanced for neutral pictures (Gr REM > Gr No: Neg_R: P < 0.001, Neu_R: P < 0.001, Gr REM > Gr St2: Neg_R: P < 0.001, Neu_R: P < 0.001, Gr REM > Gr New: Neg_R: P = 0.016, Neu_R: P < 0.001). This nonselective effect was expected, as by design, both sounds associated with neutral and emotional faces were presented during REM sleep.

Nevertheless, for familiar (K) responses the effect of sleep differed between negative and neutral items. The percentage of familiar responses for negative items was significantly smaller in Gr REM than in the control groups (Neg_K: Gr REM < Gr No: P = 0.002, Gr REM < Gr St2: P = 0.03, Gr REM < Gr New: P = 0.015), but the percentage of familiar responses for neutral items did not differ between Gr REM and the control groups (Neu_K: Gr REM < Gr No: P = 0.11, Gr REM < Gr St2: P = 0.051), except for Gr New (Neu_K: Gr REM < Gr New: P = 0.014). These results suggest that cues presented during REM sleep modified the familiarity of the items, according to their emotional valence.

EEG Results During Sleep

We analyzed the EEG data during sleep to ensure that any modification of memory performance observed within each group could not result from sleep modifications or arousals elicited by stimulation. First, no difference was observed among groups in terms of total sleep time, time spent in various sleep stages, or number of arousals (Table 5). ANOVA with the time spent in each sleep stage (wake, S1, S2, S3, S4, REM, MT) as a within-subject factor and the sleep groups as the between-subjects factor revealed no significant effect of group (F(3,44) = 1.03, P = 0.39) or interaction between sleep stage and groups (F(18,264) = 0.73, P = 0.77). Fisher tests confirmed the absence of any significant difference in the duration of different sleep stages between Gr REM and the control groups (P > 0.09). Another one-way ANOVA demonstrated that the number of arousals was not significantly different among sleep groups (F(3,44) = 0.81, P = 0.50). These results demonstrate that the auditory cues presented during the different sleep stages did not affect general sleep architecture. Second, we tested whether the auditory cues could induce localized arousal. During debriefing, none of the subjects mentioned having perceived auditory stimulation during the experimental night. We also tested whether theta, alpha, or beta power, suggestive of arousals, differed between Gr REM and Gr New during the 3 sec following sound presentation. No significant difference was found (see Table S2, supplemental material). Likewise, the REMS EEG power spectrum did not differ between Gr REM and Gr New (Table S2). Collectively, these results demonstrate that any modifications of memory performance could not be attributed to the modification of sleep architecture or micro-arousal induced by the sounds during sleep.

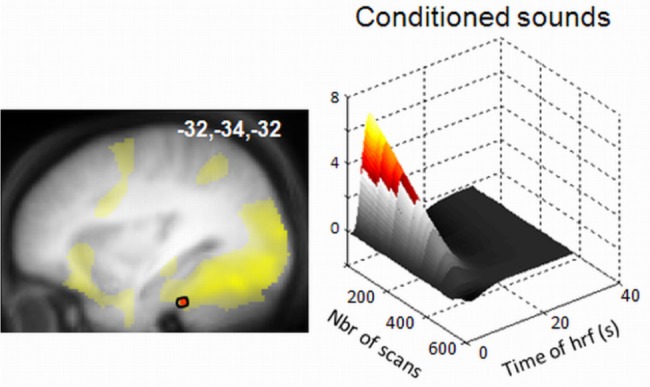

Functional MRI Results for the Presentation of the Sounds

During the retest session, conditioned and unconditioned sounds as well as faces were randomly presented without any fixed temporal contingency, therefore allowing the assessment of sound conditioning. Auditory conditioning at encoding modified responses to sounds during retest in all relevant groups (Gr REM, Gr No, and Gr St2). Whereas all sounds elicited responses in bilateral auditory cortices (Table 1), the two conditioned cues, relative to a new unconditioned sound, were further associated with time-adapting responses in the anterior part of the fusiform gyrus, an area of the ventral stream implicated in processing complex visual stimuli (Figure 3 in red), that had been significantly recruited during encoding (Figure 3 in yellow). This result indicates that conditioned sounds were successfully associated with face representations during encoding and shows the expected extinction of conditioning during retest. Finally, the conditioned sound related to emotional faces induced a time-adaptive brain response in a region of the brainstem and in the insula49, significantly more than the conditioned sound related to neutral faces.

Figure 3.

Functional magnetic resonance imaging responses to sounds during the retrieval session. Adaptation of conditioned responses (relative to responses to new sounds) in the fusiform gyrus (in red). This activation overlaps with the brain regions involved in the perception of neutral faces during encoding (in yellow). Left panel: functional result displayed at P < 0.001 over the mean structural image of the participants, normalized to the MNI stereotactic space. Right panel: modulation of the response to conditioned sounds by time in a representative subject. MNI, Montreal Neurological Institute.

Effects of Auditory Cues Delivered During REM Sleep on Brain Responses During Subsequent Memory Test

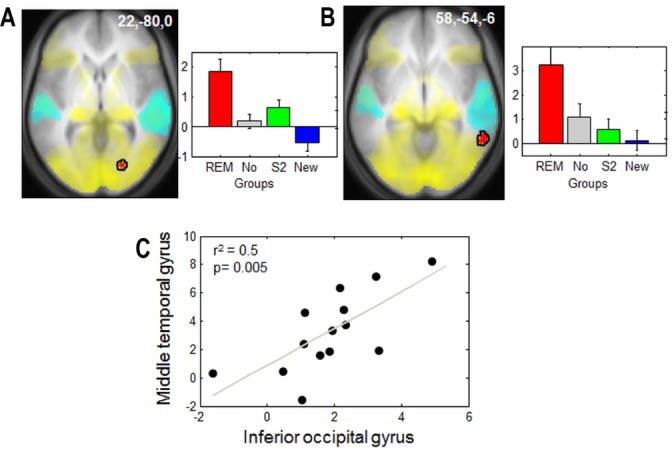

The main contrast of interest consisted of the comparison between recollections and familiar responses (R > K), isolating the mechanisms underpinning episodic memory. This contrast was independent from any change in criterion (which was based on the relative recognition of old and new items). Recollection of faces (R > K contrast) for all subjects was associated with the activation of a large set of brain areas involved in memory retrieval (hippocampus, medial prefrontal cortex, lateral superior prefrontal cortex, precuneus). This response pattern suggests that all subjects were correctly engaged in the declarative memory retrieval task (Table 2). Recollection of faces elicited significantly larger responses in Gr REM than in any other group in the inferior occipital gyrus, an area involved in face perception,50 and in the middle temporal gyrus, a region of multi-sensory convergence involved in the perception of synchronous visual and auditory stimuli51,52 (Figure 4A and B, Table 2). Inclusive masking (threshold P < 0.001 uncorrected) confirmed that these areas had been recruited during encoding. Moreover, we computed correlations between the parameter estimates of these two regions for R versus K responses for each group separately. We observed that activity of the inferior occipital gyrus was significantly correlated with activity of the middle temporal gyrus in the Gr REM group (r² = 0.5, P = 0.002, Figure 4C) but not in the control groups (Gr No: r² = 0.003, P = 0.8, Gr St2: r² = 0.18, P = 0.13, Gr New: r² = 0.006, P = 0.8). These results suggest that presentation of conditioned cues during REM sleep modified memory traces through the reactivation and the association of neural populations recruited during encoding, especially in cortical areas related to the learned material and the audiovisual associations achieved through conditioning.

Figure 4.

Functional magnetic resonance imaging responses to faces during the retrieval session. Responses associated with recollection (R > K) in Gr REM relative to the other control groups (in red). (A) Inferior occipital gyrus overlaps with brain regions involved in the perception of neutral faces during encoding (in yellow). (B) Middle temporal gyrus. Yellow: responses to neutral faces during encoding. Cyan: responses to the three sounds during the retrieval session. (C) Correlation between the parameter estimates (R > K) of the inferior occipital cortex and the middle temporal gyrus observed in A and B for Gr REM. Left panels: functional results are displayed on the mean structural magnetic resonance image of the participants, normalized to the MNI stereotactic space (display at P < 0.001, uncorrected). Right panels and correlation: parameter estimates of recollection minus familiarity (arbitrary units, error bars: standard error of the mean). Gr, group; REM, rapid eye movement.

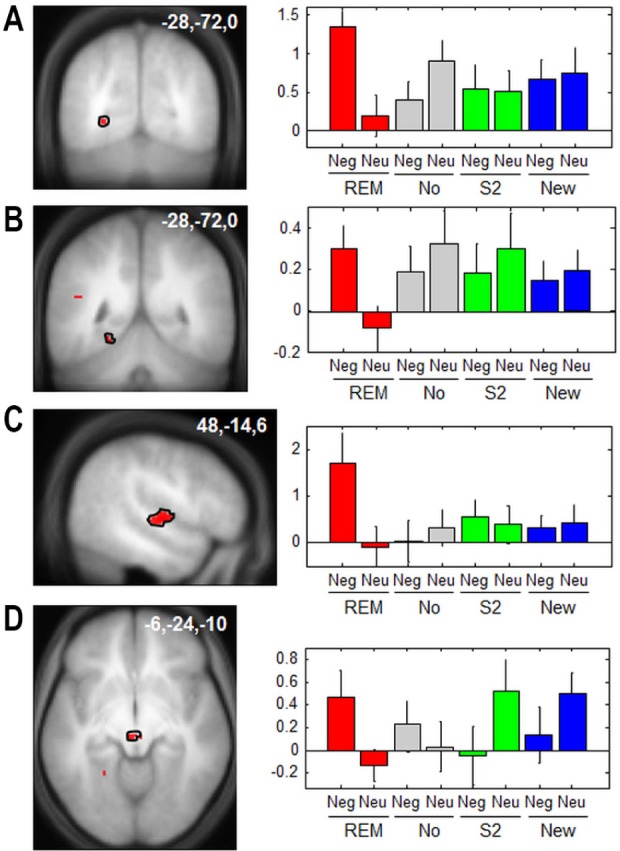

In addition, the interaction between emotion and memory ([neg > neu] versus [R > K]) during retrieval showed a significant activation in the amygdala, in the lateral occipital cortex (both regions involved in emotional perception53) and in the medial prefrontal cortex (involved in memory retrieval54, Table 3). Despite the absence of between-group differences in recollection between emotional and neutral faces, the comparison between the Gr REM and the control groups showed significantly larger responses for negative than neutral recollected pictures in both visual cortices (calcarine sulcus,55 fusiform gyrus55), and auditory areas (transverse temporal gyrus56 and a thalamic area compatible with the left medial geniculate body56,57 [Figure 5A-D, Table 3]). These results show that, at the neural level, the emotional significance of encoded stimuli further influenced the association between visual and auditory representations elicited by the presentation of conditioned cues during REM sleep. Moreover, we did not observe any significantly larger brain response in Gr St2 than Gr REM or Gr No.

Figure 5.

Functional magnetic resonance imaging responses to faces during the retrieval session for emotional versus neutral images. (A-D) Emotion by memory by group interaction (R > K) × (Neg > Neu) × (Gr REM relative to all other groups). (A) Calcarine sulcus. (B) Fusiform gyrus. (C) Transverse temporal gyrus. (D) Thalamus (in the vicinity of the medial geniculate body). Left panels: functional results are displayed on the mean structural magnetic resonance image of the participants, normalized to the MNI stereotactic space (display at P < 0.001, uncorrected). Right panels: parameter estimates of recollection minus familiarity (arbitrary units, error bars: standard error of the mean). Neg, negative; Neu, neutral; REM, rapid eye movement.

Although of interest, the neural correlates of false alarms could not be assessed because in most participants, their number was too small to allow for a reliable statistical analysis of the fMRI responses.

DISCUSSION

We assessed the causal effect of memory reactivation during phasic REM sleep on subsequent memory performance and brain responses elicited by episodic recognition (i.e., recollection). During an initial encoding session, auditory cues were incidentally associated with pictures of faces. The presentation of these conditioned auditory cues during post-encoding phasic REM sleep (the average cumulative duration of which amounted to approximately 140 sec only) induced significant changes in both memory performance and brain responses during later retrieval. These effects are indicative, at the neural level, of the integration of recent memories within cortical circuits, and behaviorally, of their interaction with semantically-related representations.

Sounds Were Effectively Conditioned With Faces

During retrieval, the three sounds were presented alone to measure the related brain response. We observed that the fusiform gyrus was significantly more activated for old than new sounds and that this activation decreased with time. The fusiform gyrus is one of the main regions specialized for facial recognition.58 Because our stimuli represented faces, this suggests that conditioning did in fact occur during encoding and was still present during retrieval. The assessment of this conditioning was not possible during encoding because the sounds and the pictures were presented simultaneously. A measure of this conditioning just after encoding was also difficult because it would induce extinction59 before the sounds could be used as a cue. During sleep, 140.6 sounds on average were delivered alone. This did not affect conditioning because the association between sounds and emotion was still observed during subsequent retrieval. This result suggests that sleep is a state favorable for reactivation of the encoded information, in contrast to wakefulness, which is involved in new learning, in particular the extinction of conditioning.

Conditioned Cues During REM Sleep Promote Multimodal Cortical Plasticity

Following auditory fear conditioning in rodents, conditioned auditory cues presented during REM sleep elicited enhanced responses in the relevant brain structures: the medial geniculate body, lateral amygdala60 and hippocampus,61 suggesting a reactivation of the cued memory traces. Similarly, in our study, by reactivating the relevant neural circuits, conditioned cues during REM sleep modified the offline processing of memories. As a result, during retrieval, the neural correlates of face recollection materialized the experimentally induced formation of novel auditory-visual associations selectively in Gr REM. Face recollection was associated with responses in brain areas known to decode visual stimuli but also in a region of multisensory convergence of the temporal cortex, which is regularly engaged in situations requiring visual and auditory association.51 This result implies that the association between visual and auditory aspects of memory was strengthened by the experimental induction of memory reactivation during REM sleep; a hypothesis confirmed by the significant correlation between the activity of these two cortical areas observed only for Gr REM. Likewise, correct recollection of negative face pictures, as compared with neutral pictures, was further associated with responses in both visual (calcarine sulcus and fusiform gyrus) and auditory (transverse temporal gyrus and medial geniculate body) brain regions. Importantly, these results (which contrasted Remember to Familiar responses selectively) reflect episodic memory processes and are thus immune from the modification of the criterion, as discussed in the next paragraphs.

Although the directionality of the interactions between these brain areas was not specified, these responses were only observed in Gr REM, suggesting that the reactivation of memory traces by the presentation of conditioned cues during REM sleep allowed for the multimodal integration of recent memories within thalamocortical circuits. The thalamocortical locus of memory changes induced at retrieval by memory reactivation during REM sleep differs from the responses to conditioned memory cues during deep NREM sleep, which primarily recruit the hippocampus and higher-order associative cortices.28 Although these dissimilarities might relate to differences in experimental design (probing responses to cues during sleep instead of assessing episodic recognition during subsequent retrieval), data suggest that memory reactivation during REM sleep primarily influences thalamocortical memory stores. As organisms are continuously exposed to novel pieces of information, they must flexibly retain new information while preserving the heretofore stored knowledge. To resolve this conflict,62–64 the hippocampus would sparsely but rapidly store novel information whereas the cortex would need multiple repetitions of this information to store it successfully. Spontaneous reinstatements of hippocampal and neocortical activity associated with newly encoded representations are believed to participate in this consolidation process, and to progressively reinforce corticocortical connections that eventually support long-term memories.62–64 A number of studies propose that cues presented during NREM sleep favor the hippocampocortical dialogue,27 whereas our results suggest that cues presented during REM sleep favor corticocortical reactivations. The transfer of information into cortical stores would support fundamental modifications of the memory trace, favoring the generalization and the creation of new associations and the integration with semantically related representations.36,65

Behavioral Consequences of Memory Reactivation During REM Sleep

Even if it seems unlikely, our data cannot rule out the possibility that a simple and nonspecific response bias toward all stimuli (old and new) during retest occurs in the REM group, independent of memory consolidation per se. However, we postulate that the behavioral results observed in the REM group are explained by memory consolidation processes. In keeping with previous human30,31 and animal behavioral studies,29 reactivating memory traces during REM sleep resulted in an enhanced ability to explicitly retrieve learned information. The small percentage of sounds delivered during wake can be seen as a potential confound. However, this percentage was not different between Gr REM and the other control groups (Gr St2 and Gr New), in which we observed no effect of cues. Moreover, the enhancement of performance observed with cues presented during REM sleep contrasts with the detrimental effect of memory reactivation during wakefulness, which renders memories labile and vulnerable to interference.28

Memory reactivation during REM sleep also differed from reactivations occurring during deep NREM sleep, which protect memories from later interference and result in an increased memory performance selectively for the learned exemplars.28 In contrast, reactivation during REM sleep does not selectively improve accurate recollection but also enhances illusory recognition of novel stimuli as learned items. Memory reactivation during REM sleep seems to promote the associations between recently learned representations and other semantically related representations, thereby extracting their generic features and subsequently leading participants to confuse new and previously encoded exemplars. In other words, cues delivered during REM sleep appear to promote feature extraction from individual learned exemplars and their integration with stored superordinate representations. This process would subsequently bias identification of any face as previously learned. This hypothesis is also in keeping with processing of memory gist during sleep, in that it accounts not only for how memory representations are consolidated but also how they can change over time due to their interaction with related semantic fields.33 Rather than remembering the specifics of every face, subjects seem to have generalized the stimuli. Whereas reactivation in SWS stabilizes memories, reactivation in REM sleep induces a transformation of the memory trace, which becomes more labile and allows flexibility in the recombination of the stored information. This flexible memory trace is less accurate but more adaptive in the long term.36,65 These findings are reminiscent of the increased ability during REM sleep to create or strengthen associations between weakly related material66,67 and to integrate unassociated information for creative problem solving.68

However, to test this latter hypothesis, participants should encode stimuli from separate semantic classes. Predictably, recollection and false alarms would be selectively enhanced for semantic categories in which cues were presented during REM sleep.

The Modifications of Memory are Specific to REM Sleep

The reported effects were specific to conditioned cues delivered during REM sleep. Conditioned cues delivered in a similar manner in stage 2 sleep during the same period of the night did not lead to any increment in recollection rates the next day, relative to the undisturbed control group. Moreover, we did not observe significantly larger brain responses in Gr St2 than in Gr REM or Gr No. These results contrast with the enhanced memory performance reported after presenting conditioned cues during deep NREM sleep in the first part of the night,27 suggesting that the effects of conditioned cues during NREM sleep depend on oscillations characteristic of deep NREM sleep or on a circadian influence. It is also plausible that memory traces processed earlier on during deep NREM sleep no longer respond to conditioned cues during NREM sleep in the second part of the night.

Auditory Stimulation During REM Sleep Cannot Explain the Memory Effects

Presenting unconditioned sounds during phasic REM sleep (Gr New) had no effect on subsequent recognition. Moreover, subsequent recollection rates decreased in proportion to the number of unconditioned cues delivered during REM sleep, whereas a different, mildly positive relationship was observed for conditioned sounds. The weakly positive relationship between conditioned cues and memory performance is remarkable. Indeed, the presentation of conditioned cues during wakefulness would invariably lead to extinction of the conditioned response.59 Accordingly, cues during wakefulness lead to an attenuation of the conditioned responses in the fusiform gyrus. This response pattern is suggestive of the extinction of conditioning during the retest session, during which there was no longer any fixed temporal contingency between sounds and faces. Similar adaptive responses have been reported in the amygdala during extinction of fear conditioning.69 It is remarkable that, in contrast, conditioned stimuli delivered during REM sleep maintain, and at the systems level arguably reinforce, the association between stimuli.

It is also unlikely that conditioned cues delivered during phasic REM sleep could modify offline plasticity through unspecific effects, for instance, by eliciting arousals. Quantitative analysis of polygraphic recordings further confirmed the absence of significant differences in the number of arousals among experimental groups (Gr REM, Gr New). Recollection was thus selectively enhanced by the presentation of conditioned cues which, by definition, were previously associated with specific memories. Conversely, unconditioned cues potentially disrupted local offline memory processes taking place in specific brain areas during REM sleep, by eliciting an auditory response unrelated to any previously formed association.

In the Current Protocol, Emotional Memories Were Not Further Consolidated During REM Sleep

Several studies have demonstrated that REM sleep enhances the consolidation of emotional stimuli.23–25 Initially, we hypothesized that emotional memories would benefit more from reactivation by cues during REM sleep than neutral memories. In this study, the recollection of both emotional and neutral pictures was significantly enhanced by cues presented during REM sleep. The equivalent effect on neutral and emotional memories was a consequence of our design in which both cues were presented during sleep, reactivating both types of memory traces. In any case, this equivalent effect arises despite clear differences between negative and neutral pictures. First, emotional pictures were rated significantly higher than neutral ones for valence and arousal during encoding by all subjects. Second, as expected70 for each group, emotional pictures were better recollected than neutral ones, suggesting that negative pictures have a larger emotional effect on memory than neutral pictures. Third, negative pictures (as compared to neutral ones) induced amygdala and insula activation during encoding, two regions involved in emotion perception.71,72 Moreover, we provide evidence that conditioning was obtained for both sounds. During retrieval, the sound conditioned with emotional pictures showed significant activation in the insula (involved in emotion regulation)72 and in a region of the brainstem compatible with the locus coeruleus (involved in emotional arousal),70,73 suggesting that the two neutral sounds, randomly attributed to negative or neutral faces across subjects, were correctly associated with the emotional valence of the faces.

Potential Neural Mechanisms

Collectively, the data demonstrate that substantial plasticity can be induced in thalamo-neocortical circuits during post-training REM sleep of adult human volunteers. Although the mechanisms of these effects are out of reach in the current study, potential factors contributing to this plasticity are identified in animals. First, at the cellular level, REM sleep rebound was recently shown to promote long-term potentiation in rat hippocampus.74 The experimental induction of long-term potentiation in the hippocampus enhances activity-dependent gene expression in the hippocampus and neocortical areas during subsequent REM sleep.75 Likewise, exposure to novel objects results in an increase in cortical expression of genes involved in synaptic plasticity during subsequent REM sleep.76 Second, at the systems level, the widespread synchronous forebrain activation elicited by pontine waves further provides optimal conditions for (Hebbian-type) brain plasticity.42 Third, the particular neuromodulatory context prevailing during REM sleep, characterized by low adrenergic and serotonergic drives but high cholinergic tone, could potentially favor brain plasticity by allowing for the feed-forward spread of activity within thalamo-neocortical circuits.35

CONCLUSION

In the framework of memory consolidation during sleep, although NREM sleep has been shown to be associated with both a synaptic downscaling77,78 and a reorganization of memories at the systems level,28,79–81 phasic REM sleep has been shown to strengthen cortical representations freshly restructured during NREM sleep,35,82 promote their generalization and association with available memories in semantically related domains, and potentially foster their progressive integration into existing semantic knowledge stored in cortical circuits.

DISCLOSURE STATEMENT

This was not an industry supported study. This research was supported by the Belgian Fonds National de la Recherche Scientifique (FNRS), the Fondation Médicale Reine Elisabeth (FMRE), the Research Fund of the University of Liège and “Interuniversity Attraction Poles Programme – Belgian State – Belgian Science Policy.” The authors have indicated no financial conflicts of interest.

ACKNOWLEDGMENTS

The authors thank Pierre and Christian Berthomier (PHYSIP SA, Paris, France) for the automatic scoring of sleep recordings and Rebekah Blakemore for the wording correction.

Footnotes

A commentary on this article appears in this issue on page 1029.

SUPPLEMENTAL MATERIAL

METHOD

Analysis of EEG data

Quantitative EEG Analysis

For the comparison of Gr REM, Gr New, and Gr St2, in which sounds were delivered in REM sleep or stage 2 sleep, EEGs were subjected to spectral analysis on Cz using a fast Fourier transform (4-sec Welch window, 2-sec steps, Hanning window) resulting in a 0.25 Hz spectral resolution. EEG power spectra were calculated during REM sleep and during stage 2 sleep in the frequency range between 0.5 and 25 Hz. Results were averaged over REM sleep epochs or stage 2 sleep epochs.1
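The 0.25 Hz resolution follows directly from the 4-sec window length (1 / 4 sec = 0.25 Hz). The following is a minimal sketch of a Welch-type estimate with these settings, not the toolbox implementation used in the study. The function names, the 64 Hz sampling rate, the power-density scaling, and the synthetic 6 Hz test signal are all assumptions introduced for illustration only.

```python
import cmath
import math


def hann(n):
    """Symmetric Hann (Hanning) window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def welch_power(signal, fs, win_sec=4.0, step_sec=2.0, fmin=0.5, fmax=25.0):
    """Average Hann-windowed periodograms over overlapping segments.

    Returns (freqs, power) for bins between fmin and fmax (inclusive).
    The bin spacing is fs / n = 1 / win_sec, i.e. 0.25 Hz for a 4-sec window.
    """
    n = int(round(win_sec * fs))        # samples per window
    step = int(round(step_sec * fs))    # hop between successive windows
    if len(signal) < n:
        raise ValueError("signal is shorter than one window")
    df = fs / n
    window = hann(n)
    scale = fs * sum(w * w for w in window)   # assumed density scaling
    twiddle = [cmath.exp(-2j * math.pi * m / n) for m in range(n)]
    bins = range(math.ceil(fmin / df), math.floor(fmax / df) + 1)
    acc = [0.0] * len(bins)
    n_seg = 0
    for start in range(0, len(signal) - n + 1, step):
        seg = signal[start:start + n]
        mean = sum(seg) / n
        x = [(s - mean) * w for s, w in zip(seg, window)]
        for j, k in enumerate(bins):
            # Direct DFT of the windowed segment at bin k.
            coef = sum(xi * twiddle[(k * i) % n] for i, xi in enumerate(x))
            # Factor 2: one-sided spectrum (valid away from DC and Nyquist).
            acc[j] += 2.0 * abs(coef) ** 2 / scale
        n_seg += 1
    freqs = [k * df for k in bins]
    return freqs, [a / n_seg for a in acc]


# Synthetic example: 20 sec of a 6 Hz sine at a hypothetical 64 Hz sampling rate.
fs = 64
signal = [math.sin(2 * math.pi * 6.0 * i / fs) for i in range(20 * fs)]
freqs, power = welch_power(signal, fs)
resolution = freqs[1] - freqs[0]
peak_freq = freqs[power.index(max(power))]
```

In this sketch the spectrum of each epoch would then be averaged across all REM sleep (or stage 2 sleep) epochs of a recording, as described above.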

Evoked Response Analysis

Event-related potentials could not be adequately assessed because, by design, EEG recordings were heavily contaminated by eye movements during sound presentation. To detect EEG changes suggestive of arousals, a time-frequency analysis was conducted to detect any change in theta (4-7 Hz), alpha (8-12 Hz), or beta (15-25 Hz) EEG power during the 3 sec following sound presentation. This analysis was based on the definition of an arousal as “an abrupt shift in EEG frequency, which may include theta, alpha, and/or frequencies greater than 16 Hz but not spindles”; “The EEG frequency shift must be 3 sec or greater in duration.”2 To ensure maximal sensitivity, we relaxed the criterion that “arousals are scored in REM sleep only when accompanied by concurrent increase in submental EMG amplitude.”2 Ocular artifacts were first rejected by estimating the residuals of a regression model based on EOG recordings. Although the rejection of EOG artifacts was incomplete, it was reasoned that eye movements occurred randomly in the prestimulus and poststimulus periods and should not, on average, affect the estimation of induced oscillations. EEG recordings on Cz were epoched from -100 to +3000 ms relative to the sound trigger. Time-frequency analysis used Morlet wavelets [1-25 Hz; 1 Hz bins; Morlet wavelet factor: 7; no baseline correction; SPM8].3 From the time-frequency plane, average power was computed in the theta, alpha, and beta frequency bands, during both the prestimulus and poststimulus periods. These mean power values were subsequently averaged over trials. ANOVAs tested for a group effect in theta (resp. alpha, beta) power during the poststimulus period.
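The band-power computation described above can be sketched as follows. This is an illustrative stand-in, not the SPM8 routine: the interpretation of the wavelet factor as the ratio of center frequency to spectral standard deviation, the unit-gain normalization, the 100 Hz sampling rate, the function names, and the synthetic single-trial epoch (a 10 Hz burst from 0.5 to 2.5 sec) are all assumptions made for the example.

```python
import cmath
import math


def morlet_power(x, fs, freq, factor=7.0):
    """Power over time at `freq`, by convolution with a complex Morlet wavelet.

    Assumption: `factor` = freq / sigma_f, so sigma_t = factor / (2*pi*freq).
    """
    sigma_t = factor / (2 * math.pi * freq)   # temporal s.d. (sec)
    half = int(round(3 * sigma_t * fs))       # wavelet support: +/- 3 s.d.
    wav = [cmath.exp(2j * math.pi * freq * k / fs)
           * math.exp(-((k / fs) ** 2) / (2 * sigma_t ** 2))
           for k in range(-half, half + 1)]
    gain = sum(abs(w) for w in wav)           # unit-gain scaling (arbitrary choice)
    n = len(x)
    power = []
    for t in range(n):
        # Only wavelet taps that overlap the epoch (implicit zero padding).
        k_lo, k_hi = max(-half, t - n + 1), min(half, t)
        acc = sum(x[t - k] * wav[k + half] for k in range(k_lo, k_hi + 1))
        power.append(abs(acc / gain) ** 2)
    return power


def band_mean(tf, band, i_lo, i_hi):
    """Mean power over integer frequencies band[0]..band[1], samples i_lo..i_hi-1."""
    vals = [p for f in range(band[0], band[1] + 1) for p in tf[f][i_lo:i_hi]]
    return sum(vals) / len(vals)


# Synthetic epoch from -100 to +3000 ms at a hypothetical 100 Hz sampling rate.
fs = 100
t_start, t_end = -0.1, 3.0
n = int(round((t_end - t_start) * fs))
times = [t_start + i / fs for i in range(n)]
epoch = [math.sin(2 * math.pi * 10.0 * t) if 0.5 <= t < 2.5 else 0.0
         for t in times]

# Time-frequency plane: 1 to 25 Hz in 1 Hz bins, no baseline correction.
tf = {f: morlet_power(epoch, fs, f) for f in range(1, 26)}

onset = int(round(-t_start * fs))             # sample index of the sound trigger
bands = {"theta": (4, 7), "alpha": (8, 12), "beta": (15, 25)}
pre = {name: band_mean(tf, b, 0, onset) for name, b in bands.items()}
post = {name: band_mean(tf, b, onset, n) for name, b in bands.items()}
```

With real data, `pre` and `post` would be computed per trial and then averaged over trials before entering the group comparison.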

RESULTS

Behavioral Results During the Encoding Session

Two separate ANOVAs were performed on the scores for valence (resp. intensity), with emotion (valence_emo, valence_neu, resp. intensity_emo, intensity_neu) as within-subject factor and sleep group (Gr REM, No, St2, New) as between-subjects factor. For the first ANOVA on valence, we observed no main effect of group (F(3,50) = 0.53, P = 0.66), an effect of emotion (F(1,50) = 1015, P < 0.001) and no significant interaction between emotion and sleep group during encoding (F(3,50) = 0.57, P = 0.64). The second ANOVA on intensity showed no main effect of group (F(1,50) = 0.7, P = 0.53), an effect of emotion (F(1,50) = 248, P < 0.001) and no significant interaction between emotion and sleep group during encoding (F(3,50) = 1.57, P = 0.21). Planned comparisons revealed that both valence (P < 0.001) and intensity (P < 0.001) ratings were higher for emotional pictures than for neutral pictures. These results suggest that negative pictures were subjectively more emotional than neutral ones and that the groups did not differ significantly during the encoding session. After the retrieval session, subjects rated the emotional valence and intensity of the new pictures. For “new” items, mean valence on a two-point scale was 1.16 ± 0.02 for neutral images and 1.89 ± 0.02 for negative images. The intensity on a four-point scale was 1.34 ± 0.06 for neutral images and 2.26 ± 0.07 for negative images (mean ± SEM). We performed two separate ANOVAs with emotion (valence [neg-neu] or intensity [low-high arousal]) and status (old or new images) as within-subject factors and sleep group as between-subjects factor.
We observed no main effect of group (ANOVA on valence: F(3,49) = 0.236, P = 0.87; ANOVA on intensity: F(3,49) = 0.49, P = 0.68), no main effect of status for valence (old versus new, F(1,49) = 0.46, P = 0.50), but a significant effect of status for the intensity rating (F(1,49) = 5.62, P = 0.02), because new images were scored as less arousing than those rated during encoding. Finally, the interaction between status (old or new) and sleep group was not significant (ANOVA on valence: F(3,49) = 1.44, P = 0.24; ANOVA on intensity: F(3,49) = 0.01, P = 0.99). These results do not show any difference between sleep groups (for valence or intensity of emotion), suggesting that cues presented during sleep do not modify emotional perception the next day.

Behavioral results. Proportion of familiar responses (% of Know).

Demographic data for each group of participants (mean ± standard deviation)

Electroencephalograph power in theta (4-7 Hz), alpha (8-12 Hz), and beta (15-25 Hz) frequency bands, during prestimulus and poststimulus periods (μV²). Power is computed on Cz (ref: mastoids)

EEG Results During Sleep

EEG Evoked Responses

One-way ANOVAs showed that for both the prestimulus and poststimulus periods, there was no significant effect of group (Gr REM, Gr New, Gr St2) on theta (PRE: F(2) = 0.09; P = 0.91; POST: F(2) = 0.47; P = 0.63) or alpha power (PRE: F(2) = 1.37; P = 0.27; POST: F(2) = 1.51; P = 0.24). There was a significant effect of group on beta power (PRE: F(2) = 15.32; P < 0.001; POST: F(2) = 22.25; P < 0.001). Post hoc Fisher tests showed that this was because of larger beta power in Gr St2, relative to Gr REM and Gr New (both PRE and POST: P < 0.001), potentially in relation to the presence of high-frequency spindles. Likewise, ANOVAs with EEG power spectra from 0.5 Hz to 25 Hz as within-subject factor and sleep group as between-subjects factor, for REM sleep and stage 2 sleep respectively, did not show any significant difference between groups (P > 0.05), and particularly between Gr REM and Gr New (P > 0.05). The results of the ERP (event-related potential) analysis were not interpretable because, according to the protocol, the EEG recordings during sound presentation were contaminated by the rapid eye movements of phasic REM sleep.
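For readers unfamiliar with the notation, F(2) above reports the between-group degrees of freedom (three groups minus one). The following minimal sketch shows how a one-way ANOVA F statistic is obtained from between-group and within-group variability. The function name and the toy values are hypothetical and are not data from this study.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of observations."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variability of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variability of observations around their own group mean.
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy values for three hypothetical groups (not study data).
toy = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_anova(toy)
```

The P value is then read from the F distribution with (df_between, df_within) degrees of freedom, which this sketch leaves to a statistics package.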

REFERENCE

- 1. Leclercq Y, Schrouff J, Noirhomme Q, Maquet P, Phillips C. fMRI artefact rejection and sleep scoring toolbox. Comput Intell Neurosci. 2011;2011:598206. doi: 10.1155/2011/598206.

- 2. Bonnet M, Carley D, Carskadon M, et al. EEG arousals: scoring rules and examples: a preliminary report from the Sleep Disorders Atlas Task Force of the American Sleep Disorders Association. Sleep. 1992;15:173–84.

- 3. Kiebel SJ, Tallon-Baudry C, Friston KJ. Parametric analysis of oscillatory activity as measured with EEG/MEG. Hum Brain Mapp. 2005;26:170–7. doi: 10.1002/hbm.20153.

- 4. Johns MW. A new method for measuring daytime sleepiness: the Epworth sleepiness scale. Sleep. 1991;14:540–5. doi: 10.1093/sleep/14.6.540.

- 5. Buysse DJ, Reynolds CF 3rd, Monk TH, Berman SR, Kupfer DJ. The Pittsburgh Sleep Quality Index: a new instrument for psychiatric practice and research. Psychiatry Res. 1989;28:193–213. doi: 10.1016/0165-1781(89)90047-4.

- 6. Horne JA, Ostberg O. A self-assessment questionnaire to determine morningness-eveningness in human circadian rhythms. Int J Chronobiol. 1976;4:97–110.

- 7. Steer RA, Ball R, Ranieri WF, Beck AT. Further evidence for the construct validity of the Beck Depression Inventory-II with psychiatric outpatients. Psychol Rep. 1997;80:443–6. doi: 10.2466/pr0.1997.80.2.443.

- 8. Spielberger CD, Gorsuch RL, Lushene R, et al. Manual for the state-trait anxiety inventory. Palo Alto, CA: Consulting Psychologists Press; 1983.

REFERENCE

- 1. Gais S, Molle M, Helms K, Born J. Learning-dependent increases in sleep spindle density. J Neurosci. 2002;22:6830–4. doi: 10.1523/JNEUROSCI.22-15-06830.2002.

- 2. Huber R, Ghilardi MF, Massimini M, Tononi G. Local sleep and learning. Nature. 2004;430:78–81. doi: 10.1038/nature02663.

- 3. Siegel JM. The REM sleep-memory consolidation hypothesis. Science. 2001;294:1058–63. doi: 10.1126/science.1063049.

- 4. Vertes RP, Eastman KE. The case against memory consolidation in REM sleep. Behav Brain Sci. 2000;23:867–76; discussion 904–1121. doi: 10.1017/s0140525x00004003.

- 5. Buzsaki G, Carpi D, Csicsvari J, et al. Maintenance and modification of firing rates and sequences in the hippocampus: does sleep play a role? In: Maquet P, Smith C, Stickgold R, editors. Sleep and brain plasticity. Oxford University Press; 2003.

- 6. Plihal W, Born J. Effects of early and late nocturnal sleep on declarative and procedural memory. J Cogn Neurosci. 1997;9:534–47. doi: 10.1162/jocn.1997.9.4.534.

- 7. Karni A, Tanne D, Rubenstein BS, Askenasy JJ, Sagi D. Dependence on REM sleep of overnight improvement of a perceptual skill. Science. 1994;265:679–82. doi: 10.1126/science.8036518.

- 8. Mednick S, Nakayama K, Stickgold R. Sleep-dependent learning: a nap is as good as a night. Nat Neurosci. 2003;6:697–8. doi: 10.1038/nn1078.

- 9. Maquet P, Laureys S, Peigneux P, et al. Experience-dependent changes in cerebral activation during human REM sleep. Nat Neurosci. 2000;3:831–6. doi: 10.1038/77744.

- 10. Laureys S, Peigneux P, Phillips C, et al. Experience-dependent changes in cerebral functional connectivity during human rapid eye movement sleep. Neuroscience. 2001;105:521–5. doi: 10.1016/s0306-4522(01)00269-x.

- 11. Peigneux P, Laureys S, Fuchs S, et al. Learned material content and acquisition level modulate cerebral reactivation during posttraining rapid-eye-movements sleep. Neuroimage. 2003;20:125–34. doi: 10.1016/s1053-8119(03)00278-7.

- 12. Empson JA, Clarke PR. Rapid eye movements and remembering. Nature. 1970;227:287–8. doi: 10.1038/227287a0.

- 13. Ekstrand BR, Sullivan MJ, Parker DF, West JN. Spontaneous recovery and sleep. J Exp Psychol. 1971;88:142–4. doi: 10.1037/h0030642.

- 14. Yaroush R, Sullivan MJ, Ekstrand BR. Effect of sleep on memory. II. Differential effect of the first and second half of the night. J Exp Psychol. 1971;88:361–6. doi: 10.1037/h0030914.

- 15. Fowler MJ, Sullivan MJ, Ekstrand BR. Sleep and memory. Science. 1973;179:302–4. doi: 10.1126/science.179.4070.302.

- 16. Tilley AJ, Empson JA. REM sleep and memory consolidation. Biol Psychol. 1978;6:293–300. doi: 10.1016/0301-0511(78)90031-5.

- 17. Fogel SM, Smith CT, Cote KA. Dissociable learning-dependent changes in REM and non-REM sleep in declarative and procedural memory systems. Behav Brain Res. 2007;180:48–61. doi: 10.1016/j.bbr.2007.02.037.

- 18. Rauchs G, Bertran F, Guillery-Girard B, et al. Consolidation of strictly episodic memories mainly requires rapid eye movement sleep. Sleep. 2004;27:395–401. doi: 10.1093/sleep/27.3.395.

- 19. Payne JD, Stickgold R, Swanberg K, Kensinger EA. Sleep preferentially enhances memory for emotional components of scenes. Psychol Sci. 2008;19:781–8. doi: 10.1111/j.1467-9280.2008.02157.x.

- 20. Hu P, Stylos-Allan M, Walker MP. Sleep facilitates consolidation of emotional declarative memory. Psychol Sci. 2006;17:891–8. doi: 10.1111/j.1467-9280.2006.01799.x.

- 21. Sterpenich V, Albouy G, Boly M, et al. Sleep-related hippocampo-cortical interplay during emotional memory recollection. PLoS Biol. 2007;5:e282. doi: 10.1371/journal.pbio.0050282.

- 22. Sterpenich V, Albouy G, Darsaud A, et al. Sleep promotes the neural reorganization of remote emotional memory. J Neurosci. 2009;29:5143–52. doi: 10.1523/JNEUROSCI.0561-09.2009.

- 23. Wagner U, Gais S, Born J. Emotional memory formation is enhanced across sleep intervals with high amounts of rapid eye movement sleep. Learn Mem. 2001;8:112–9. doi: 10.1101/lm.36801.

- 24. Nishida M, Pearsall J, Buckner RL, Walker MP. REM sleep, prefrontal theta, and the consolidation of human emotional memory. Cereb Cortex. 2009;19:1158–66. doi: 10.1093/cercor/bhn155.

- 25. Baran B, Pace-Schott EF, Ericson C, Spencer MC. Processing of emotional reactivity and emotional memory over sleep. J Neurosci. 2012;32:1035–42. doi: 10.1523/JNEUROSCI.2532-11.2012.

- 26. Rudoy JD, Voss JL, Westerberg CE, Paller KA. Strengthening individual memories by reactivating them during sleep. Science. 2009;326:1079. doi: 10.1126/science.1179013.

- 27. Rasch B, Buchel C, Gais S, Born J. Odor cues during slow-wave sleep prompt declarative memory consolidation. Science. 2007;315:1426–9. doi: 10.1126/science.1138581.

- 28. Diekelmann S, Buchel C, Born J, Rasch B. Labile or stable: opposing consequences for memory when reactivated during waking and sleep. Nat Neurosci. 2011;14:381–6. doi: 10.1038/nn.2744.

- 29. Hars B, Hennevin E, Pasques P. Improvement of learning by cueing during postlearning paradoxical sleep. Behav Brain Res. 1985;18:241–50. doi: 10.1016/0166-4328(85)90032-4.

- 30. Smith C, Weeden K. Post training REMs coincident auditory stimulation enhances memory in humans. Psychiatr J Univ Ott. 1990;15:85–90.

- 31. Guerrien A, Dujardin K, Mandai O, Sockeel P, Leconte P. Enhancement of memory by auditory stimulation during postlearning REM sleep in humans. Physiol Behav. 1989;45:947–50. doi: 10.1016/0031-9384(89)90219-9.

- 32. Darsaud A, Dehon H, Lahl O, et al. Does sleep promote false memories? J Cogn Neurosci. 2011;23:26–40. doi: 10.1162/jocn.2010.21448.

- 33. Payne JD, Schacter DL, Propper RE, et al. The role of sleep in false memory formation. Neurobiol Learn Mem. 2009;92:327–34. doi: 10.1016/j.nlm.2009.03.007.

- 34. McKeon S, Pace-Schott EF, Spencer RM. Interaction of sleep and emotional content on the production of false memories. PLoS One. 7:e49353. doi: 10.1371/journal.pone.0049353.

- 35. Hasselmo ME. Neuromodulation: acetylcholine and memory consolidation. Trends Cogn Sci. 1999;3:351–9. doi: 10.1016/s1364-6613(99)01365-0.