Abstract

Background

One of the challenging tasks in systems biology is parameter estimation in nonlinear dynamic models. A biological model usually contains a large number of correlated parameters leading to non-identifiability problems. Although many approaches have been developed to address both structural and practical non-identifiability problems, very few studies have been made to systematically investigate parameter correlations.

Results

In this study we present an approach that is able to identify both pairwise parameter correlations and higher order interrelationships among parameters in nonlinear dynamic models. Correlations are interpreted as surfaces in the subspaces of correlated parameters. Based on the correlation information obtained in this way both structural and practical non-identifiability can be clarified. Moreover, it can be concluded from the correlation analysis that a minimum number of data sets with different inputs for experimental design are needed to relieve the parameter correlations, which corresponds to the maximum number of correlated parameters among the correlation groups.

Conclusions

The information of pairwise and higher order interrelationships among parameters in biological models gives a deeper insight into the cause of non-identifiability problems. The result of our correlation analysis provides a necessary condition for experimental design in order to acquire suitable measurement data for unique parameter estimation.

Keywords: Parameter estimation, Identifiability, Parameter correlation, Data sets with different inputs, Zero residual surfaces, Experimental design

Background

Parameter estimation of dynamic biological models described by nonlinear ordinary differential equations (ODEs) poses a critical challenge. A special feature of biological models is that they usually contain a large number of parameters among which correlations exist [1,2]. In general, the quality of estimation results depends on the quality of data acquisition, the quality of the fitting method, and the quality of the model. Good experiment design can lead to highly informative data which will enhance the accuracy and identifiability of model parameters. Therefore, the task of parameter estimation demands an interactive endeavour of experiment and computation [3,4].

To fit parameters to measured data a numerical method for solving an optimization problem is required. Available methods for carrying out this task can be classified into deterministic approaches (e.g., multiple shooting [5,6], collocation on finite elements [7], global approaches [8,9]) and stochastic approaches (e.g. simulated annealing [10], genetic algorithms [11], and scatter search [12]). Using these approaches, model parameters can be well fitted to measured time courses provided from either experiment (in vivo) or simulation (in silico), i.e. high quality fits with minimal residual values can be obtained by global optimization methods.

However, even such a good fitting cannot guarantee unique parameter estimation, due to correlations among the parameters. The correlation phenomenon can be explained by the biological background, e.g. genes or proteins which are expressed in a correlated manner, correlations of expression levels between cells. As a consequence, certain regions in the parameter space correspond to good model predictions. It means that the residual value (quadratic error) remains low even if the parameters vary in certain regions. By testing 17 biological models, Gutenkunst et al. [13] concluded that collective fits to large amounts of ideal time-series data lead to the fact that some eigenvectors are orders of magnitudes better constrained than others.

Correlated parameters are non-identifiable. If the non-identifiability does not change for any data, these parameters are called structurally non-identifiable. On the contrary, if the non-identifiability can be remedied by data improvement, they are practically non-identifiable [14,15]. Identifiability analysis represents an important ongoing topic in the literature which can be in general categorized into two major groups: a priori and a posteriori methods [1,16]. Without any requirement of measurement data, global (structural) identifiability can be determined by a priori methods [17-19]. Since these methods are normally based on differential algebra, their application to high dimensional complex models can be limited.

The a posteriori methods reveal practical identifiability properties based on results from fitting parameters to available data sets. In most studies, correlations are detected by analysing the sensitivity matrix and the Fisher information matrix (FIM) [1,16,20-23], from which local confidence regions of parameter solutions can be obtained. Sensitivity analysis is well suitable to linear models but will have limitations for highly nonlinear models [14,24].

Recently, Raue et al. [15] proposed to use profile likelihood to detect non-identifiability for partially observable models. The parameter space is explored for each parameter by repeatedly fitting the model to a given data set, which then provides a likelihood-based confidence region for each parameter. Results from this method show that the number of practically non-identifiable parameters will decrease when more data sets are used [25].

An aim of identifiability analysis is to determine if the parameters of a model are identifiable or not, i.e. whether its parameters can be uniquely estimated. The profile likelihood approach can also offer information on the correlated relations among the parameters [15,25-27]. The information on parameter correlations (e.g. correlated groups, correlated forms in a group etc.) is important for experimental design, so that a series of experimental runs with determined conditions can be carried out to acquire proper measurement data sets for improving the quality of parameter estimation.

Very few studies have been made to investigate parameter correlations in biological models. Yao et al. [21] used the rank of the sensitivity matrix to determine the number of estimable parameters. However, the subsets of correlated parameters cannot be identified based on this result. Chu and Hahn [28] proposed to check the parallel columns in the sensitivity matrix to determine parameter subsets in which the parameters are pairwise correlated. Quaiser and Mönnigmann [29] proposed a method to rank the parameters from least estimable to most estimable. These methods, however, cannot identify parameter groups in which more than two parameters are correlated together but not in pairwise, i.e. the corresponding columns in the sensitivity matrix are linearly dependent but not parallel. Such correlations are called higher order interrelationships among parameters [16].

In this paper, “parameter correlations” means a group of parameters in the model equations which are mathematically related to each other through some implicit functions, i.e. among the parameters there is a functional relationship [15,26,27]. Correlated parameters will be structurally non-identifiable, if the functional relationship does not depend on the control variables which determine experimental conditions and thus measured data. On the other hand, they will be practically non-identifiable, if the functional relationship depends on the control variables.

In this paper, we present an approach which is able to identify both pairwise and higher order parameter correlations. Our approach is based on analysis of linear dependences of the first order partial derivative functions of model equations. In a given model there may be a number of groups with different number of correlated parameters. We propose to identify these groups by analysing the correlations of the columns of the state sensitivity matrix which can be derived directly from the right-hand side of the ODEs. Therefore, the method proposed in this paper is a priori in nature, which means that the parameter correlations considered in this paper are not from the results of data-based estimation. A geometric interpretation of parameter correlations is also presented. Using this approach, groups of correlated parameters and the types of correlations can be identified and, hence, the parameter identifiability issue can be addressed. Moreover, the relationship between parameter correlations and the control inputs can be derived. As a result, both structural and practical non-identifiabilities can be identified by the proposed approach.

In the case of practical non-identifiability, the parameter correlations can be relieved by specifying the values of control inputs for experimental design. Based on the correlation analysis, the maximum number of parameters among the correlation groups can be determined, which corresponds to the minimum number of data sets with different inputs required for uniquely estimating the parameters of the model under consideration. Numerical results of parameter estimation of a three-step-pathway model clearly demonstrate the efficacy of the proposed approach.

Methods

Identification of parameter correlations

We consider nonlinear model systems described by

| (1) |

| (2) |

where x(t) ∈ Rn is the state vector, u(t) ∈ Rm the control vector, and y(t) ∈ Rr the output vector, respectively. In this study, two different sets of parameters, i.e. p ∈ RNP in the state equations and q ∈ RNQ in the output equations, are considered. In most cases the number of parameters in the state equations is much larger than that in the output equations. Since the correlations of the parameters in the output Equation (2) are easier to identify, we concentrate on the analysis and identification of correlations of the parameters in the state Equation (1).

The equation of the state sensitivity matrix can be derived by taking the first order partial derivative of Eq. (1) with respect to parameters p

| (3) |

where is the state sensitivity matrix. By solving this equation (see Additional file 1 for details) the state sensitivity matrix can be written as

| (4) |

where V(τ) is a matrix computed at time τ. It means that S has a linear integral relation with the matrix from t0 to t. If at any time has the same linearly dependent columns, the corresponding columns in S will also be linearly dependent, i.e. the corresponding parameters are correlated. Therefore, we can identify parameter correlations by checking the linear dependences of the column in the matrix which is composed of the first order partial derivatives of the right-hand side of the ODEs. Based on Eq. (2), the output sensitivity matrices are, respectively, given by

| (5) |

| (6) |

To ensure unique estimation of the parameters (i.e. all parameters to be identifiable), based on the measured data of y, it is necessary that the columns in the output sensitivity matrices are linearly independent. From Eq. (6), relations of the columns in can be easily detected. The difficulty comes from Eq. (5), since the sensitivity functions in cannot be analytically expressed. However, from Eq. (5), the output sensitivity matrix is a linear transformation of . Consequently, there will be linearly dependent columns in , if there are linearly dependent columns in . It means the necessary condition for unique estimation of p is that, at least, the matrix must have a full rank. Based on Eq. (1), is expressed as vectors of the first order partial derivative functions

| (7) |

Now we analyse relations between the partial derivative functions in Eq. (7). If there is no correlation among the parameters, the columns in Eq. (7) will be linearly independent, i.e. if

| (8) |

there must be αi = 0, i = 1, ⋯, NP. Otherwise, there will be some groups of vectors in which lead to the following cases of linear dependences due to parameter correlations. Let us consider a subset of the parameters with k correlated parameters denoted as psub = [ps+1, ps+2, ⋯, ps+k]T with s + k ≤ NP.

Case 1:

| (9) |

where αi ≠ 0, i = 1, ⋯, k. Notice that the coefficient αi may be a function of the parameters (i.e. αi(p)) and/or of control inputs (i.e. αi(u(t), p)). It should be also noted that the control inputs u(t) are considered as constants in these coefficients, since they will be specified in experimental design. The linear dependences described by Eq. (9) lead to pairwise correlations among the k parameters, i.e. any pair of the parameters in psub are correlated. Moreover, the correlations mean a functional relationship between the parameters, i.e. the relationship between the parameters can be expressed by an algebraic equation

| (10) |

where γ(ps+1, ps+2, ⋯, ps+k) denotes a function of the parameters with one set of pairwise correlated parameters. The parameters in this function are compensated each other in an algebraic relationship, e.g. γ(ps+1 + ps+2 + ⋯ + ps+k) or γ(ps+1ps+2⋯ps+k). Eq. (10) describes the functional relationship between the parameters, e.g. ϕsub(γ( ⋅ )) = 1 + γ( ⋅ ) − (γ( ⋅ ))2 = 0. Due to the complexity of biological models, an explicit expression of this equation is not available in most cases.

If the coefficients in Eq. (9) are functions of only the parameters, i.e. αi(p), the parameters are structurally non-identifiable. In this case, the correlation relations in Eq. (9) will remain unchanged by specifying any values of control inputs. It means that the non-identifiability cannot be remedied through experimental design.

If the coefficients in Eq. (9) are functions of both the parameters and control inputs, i.e. αi(u, p), the parameters are practically non-identifiable. Different values for u can be specified which lead to different αi(u, p), such that Eq. (9) will not hold and therefore the parameter correlations will be relieved. Since k parameters are correlated, k different values of the control inputs u(j), (j = 1, ⋯, k) are required, such that the matrix

| (11) |

has a full rank. Notice that the columns in Eq. (11) are only linearly dependent for the same input, but the columns of the whole matrix are linearly independent. In this way, the non-identifiability is remedied. Moreover, a suggestion for experimental design is provided for the specification of u(j), (j = 1, ⋯, k) to obtain k distinct data sets which will be used for parameter estimation.

If all state variables are measurable, according to Eq. (4), this subset of parameters can be uniquely estimated based on the k data sets. If the outputs y are measured and used for the parameter estimation, it can be concluded from Eq. (5) that at least k data sets are required for unique parameter estimation.

Case 2:

| (12) |

and

| (13) |

where αi ≠ 0, i = 1, ⋯, s + k + d. Similarly, the coefficients may be functions of the parameters and/or of the control inputs. In this case, there are d sets of pairwise correlated parameters (Eq. (12)). A set is not correlated with another set, but all sets are correlated together (Eq. (13)). The functional relationship in this case can be expressed by

| (14) |

Based on Eq. (12), the group with the maximum number of parameters max (l1, l2, ⋯, ld) is of importance for data acquisition. From Eq. (13), in the case of practical non-identifiability, data for at least d different inputs is required. The combination of Eqs. (12) and (13) leads to the conclusion that we need a number of max (l1, l2, ⋯, ld, d) data sets with different inputs to eliminate parameter correlations in this case.

Case 3:

| (15) |

where αi ≠ 0, i = 1, ⋯, k. In this case, all k parameters are not pairwise correlated but they are correlated together in one group. The correlation equation in this case is expressed by

| (16) |

which means there is no set of correlated parameters in this case. The approach described above is able to identify pairwise and higher order parameter correlations in the state equations (Eq. (1)). In the same way, correlations among parameters in q in the output equations (Eq. (2)) can also be detected based on the first order partial derivative functions in Eq. (6).

From the results of this correlation analysis, the maximum number of correlated parameters of the correlation groups can be detected. This corresponds to the minimum number of data sets required for unique estimation of all parameters in the model. Furthermore, it is noted that the initial state of the model has no impact on the parameter correlations, which means that any initial state can be used for the experimental runs for the data acquisition.

For complex models, the correlation equations (Eqs. (10), (14), (16)) cannot be analytically expressed. A numerical method has to be used to illustrate the relationships of correlated parameters of a given model, which is discussed in the next section.

Interpretation of parameter correlations

Here we give an interpretation of parameter correlations in a biological model. Geometrically, for NP parameters, i.e. p = [p1, p2, ⋯, pNP]T, the estimation task can be considered as searching for true parameter values p* in the NP-dimensional parameter space. To do this, we need NP linearly independent surfaces in the parameter space which should pass through p*. Mathematically, such surfaces are described by linearly independent equations with the unknown parameters. We define such equations based on the results of fitting model Equations (1) to a data set (j) by minimizing the following cost function

| (17) |

where M is the number of sampling points, n is the number of state variables and denotes the measured data while x(p) the state variables predicted by the model. wi,l are weighting factors. The fitting results will depend on the data set resulted from the control inputs u(j), the values of wi,l, and the noise level of the measured data. For a geometric interpretation of parameter correlations, we assume to use idealized measurement data, i.e. data without any noises. Based on this assumption, the residual function (17) should be zero, when the true parameter set p* is applied, i.e.

| (18) |

It is noted that Eq. (18) is true for any noise-free data set employed for the fitting and independent of wi,q,. Now we define a zero residual equation (ZRE) as

| (19) |

This equation contains the parameters as unknowns and corresponds to a zero residual surface passing through the true parameter point p*. It means that a zero residual surface is built by parameter values which lead to a zero residual value. This suggests that we can find p* by solving NP linearly independent ZREs. From the first order Taylor expansion of Eq. (19), the linear dependences of ZREs can be detected by the columns in the following matrix

| (20) |

where x(j) = [x1,1(j), x1,2(j), ⋯, x1,M(j), ⋯, xn,1(j), xn,2(j), ⋯, xn,M(j)]T. Eq. (20) is exactly the state sensitivity matrix calculated by fitting to the given data set (j). This means, under the idealized data assumption, a zero residual value delivered after the fitting is associated to surfaces passing through the true parameter point. When there are no parameter correlations, the number of linearly independent ZREs will be greater than NP and thus the true parameter point can be found by fitting the current data set.

If there are parameter correlations, the fitting will lead to zero residual surfaces in the subspace of the correlated parameters. For instance, for a group of k correlated parameters, the zero residual surfaces (Eq. (19)) will be reduced to a single ZRE represented by Eq. (10), Eq. (14), or Eq. (16). Therefore, in the case of practical non-identifiability, k data sets are needed to generate k linearly independent ZREs so that the k parameters can be uniquely estimated. In the case of structural non-identifiability, the correlated relations are independent of data sets. It means fitting different data sets will lead to the same ZRE and thus the same surfaces in the parameter subspace.

If the measured data are with noises, the fitting results will lead to a nonzero residual value and nonzero residual surfaces, i.e.

| (21) |

where ϵi,l ≠ 0. Thus the nonzero residual surfaces will not pass through the true parameter point. However, based on Eq. (20) and Eq. (21) the first order partial derivatives remain unchanged. It means that parameter correlations do not depend on the quality of the measured data. Moreover, it can be seen from Eq. (19) and Eq. (21) that the zero residual surfaces and the nonzero residual surfaces will be parallel in the subspace of the correlated parameters.

Results and discussion

We consider a three-step pathway modelled by 8 nonlinear ordinary differential equations (ODEs) containing 8 metabolic concentrations (state variables) and 36 parameters [30-32], as given in Eqs. (22-29). The P and S values in the ODEs are considered as two control inputs specified by experimental design. No output equations were considered for this model in the previous studies.

| (22) |

| (23) |

| (24) |

| (25) |

| (26) |

| (27) |

| (28) |

| (29) |

This pathway model was studied by Moles et al. [31] using 16 noise-free data sets and Rodriguez-Fernandez et al. [32] using 16 both noise-free and noisy data sets, respectively. They showed some strong parameter correlations in several groups. Accurate parameter values were identified in [32]. However, a clear correlation analysis of the parameters and the relationship between the parameter correlations and the numbers of data sets with different inputs required for the parameter estimation were not given in the previous studies.

Identification of correlations

Now we identify parameter correlations in this model using our approach. Given the model represented by Eqs. (22-29), the first order partial derivative functions can be readily derived leading to the following linear dependences (see Additional file 1 for detailed derivation).

From Eq. (22),

| (30) |

From Eq. (23),

| (31) |

From Eq. (24),

| (32) |

From Eq. (28),

| (33) |

From Eq. (29),

| (34) |

The coefficients in Eqs. (30) – (34), αi, (i = 1, ⋯, 14), are functions of the corresponding parameters and controls in the individual state equations (see Additional file 1). Based on these results, correlated parameters in this model can be described in 5 groups:

Group 1: G1(p1, p2, p3, p4, p5), among which any pair of parameters are pairwise correlated;

Group 2: G2(p7, p8, p9, p10), among which p8, p9 are pairwise correlated and p7, p8, p10 as well as p7, p9, p10 are correlated, respectively.

Group 3: G3(p13, p14, p15, p16), among which p14, p15 are pairwise correlated and p13, p14, p16 as well as p13, p15, p16 are correlated, respectively.

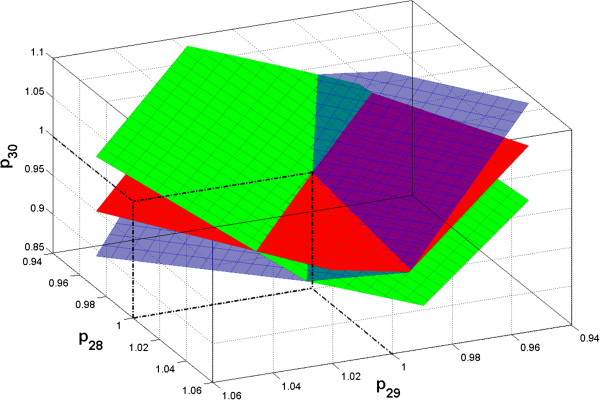

Group 4: G4(p28, p29, p30), the parameters are correlated together but not pairwise;

Group 5: G5(p35, p36), they are pairwise correlated.

Since the coefficients are functions of both of the parameters and the control inputs, these correlated parameters are practically non-identifiable for a single set of data. It is noted that, in G2 and G3, the maximum number of correlated parameters is three. Among the 5 correlated parameter groups the maximum number of correlated parameters is 5 (from G1). It means at least 5 data sets with different control values are required to uniquely estimate the 36 parameters of this model.

Verification of the correlations by fitting the model

To verify the proposed approach and check the correlations in this model, we carried out numerical experiments by fitting the parameters to a certain number of simulated data sets with different inputs. The fitting method used is a modified sequential approach suitable for handling multiple data sets [33,34].

We used the nominal parameter values given in [31], initial state values as well as P and S values (see Additional file 1) given in [32] to generate 5 noise-free data sets with different inputs containing the time courses of the 8 state variables. For each data set 120 data points were taken with 1 minute as sampling time.

For fitting the parameters we used random values for all 36 parameters to initialize the computation and all weights in Eqn. (17) were set to 1.0. The results were taken by a threshold of the total residual value in the order of 10-9 when using noise-free data sets (see Table 1).

Table 1.

Fitted parameter values based on different data sets

| No. | P * | P (1) | P (1)+(2) | P (1)+(2)+(3) | P (1)+…+(4) | P (1)+…+(5) |

P

(1)+…+(5)

(w) |

|---|---|---|---|---|---|---|---|

| 1(G1) |

1.0 |

1.06763 |

1.07763 |

1.60486 |

1.73180 |

1.00000 |

0.97145 |

| 2(G1) |

1.0 |

1.40146 |

0.91495 |

0.82116 |

0.75989 |

0.99998 |

1.05917 |

| 3(G1) |

2.0 |

1.47116 |

1.16323 |

2.39189 |

2.00001 |

2.00006 |

1.86755 |

| 4(G1) |

1.0 |

1.55173 |

1.01042 |

2.30123 |

3.19504 |

1.00000 |

0.98664 |

| 5(G1) |

2.0 |

1.40069 |

1.24912 |

0.32136 |

0.25317 |

2.00000 |

2.01339 |

| 6 |

1.0 |

1.00000 |

1.00002 |

1.00000 |

1.00000 |

1.00000 |

0.98154 |

| 7(G2) |

1.0 |

1.00927 |

1.02815 |

1.00000 |

1.00000 |

1.00000 |

0.99124 |

| 8(G2) |

1.0 |

1.32173 |

0.95504 |

1.00000 |

1.00000 |

1.00000 |

0.99919 |

| 9(G2) |

2.0 |

1.34185 |

1.18286 |

2.00000 |

2.00000 |

2.00000 |

1.93527 |

| 10(G2) |

1.0 |

1.00477 |

1.01393 |

1.00000 |

1.00000 |

1.00000 |

0.98693 |

| 11 |

2.0 |

1.99973 |

2.00007 |

2.00000 |

2.00000 |

2.00000 |

2.03582 |

| 12 |

1.0 |

0.99944 |

1.00019 |

1.00000 |

1.00000 |

1.00000 |

1.00435 |

| 13(G3) |

1.0 |

1.00572 |

1.05126 |

1.00001 |

1.00001 |

1.00001 |

1.03448 |

| 14(G3) |

1.0 |

1.39147 |

0.90768 |

1.00000 |

1.00000 |

1.00000 |

0.99558 |

| 15(G3) |

2.0 |

1.45117 |

1.00760 |

2.00003 |

2.00002 |

2.00001 |

1.98699 |

| 16(G3) |

1.0 |

1.00280 |

1.02531 |

1.00001 |

1.00000 |

1.00001 |

0.99786 |

| 17 |

2.0 |

1.99987 |

1.99999 |

1.99999 |

1.99999 |

1.99999 |

1.99586 |

| 18 |

1.0 |

1.00016 |

1.00000 |

1.00000 |

1.00000 |

1.00000 |

1.03924 |

| 19 |

0.1 |

0.10016 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

| 20 |

1.0 |

1.00263 |

1.00000 |

1.00000 |

1.00000 |

1.00001 |

0.99469 |

| 21 |

0.1 |

0.10003 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10007 |

| 22 |

0.1 |

0.10010 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

| 23 |

1.0 |

1.00127 |

1.00000 |

1.00000 |

1.00000 |

1.00000 |

0.99581 |

| 24 |

0.1 |

0.10003 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10025 |

| 25 |

0.1 |

0.10003 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10492 |

| 26 |

1.0 |

1.00023 |

1.00002 |

1.00001 |

1.00000 |

1.00001 |

1.05077 |

| 27 |

0.1 |

0.10001 |

0.10000 |

0.10000 |

0.10000 |

0.10000 |

0.10120 |

| 28(G4) |

1.0 |

0.96519 |

0.99594 |

1.00000 |

1.00000 |

1.00000 |

1.01865 |

| 29(G4) |

1.0 |

1.62390 |

1.04672 |

1.00000 |

1.00000 |

1.00001 |

0.90507 |

| 30(G4) |

1.0 |

1.56817 |

1.04245 |

1.00000 |

0.99999 |

1.00000 |

0.85521 |

| 31 |

1.0 |

0.99997 |

1.00000 |

1.00000 |

1.00000 |

1.00000 |

1.11984 |

| 32 |

1.0 |

1.00110 |

1.00000 |

1.00000 |

1.00000 |

1.00000 |

0.97161 |

| 33 |

1.0 |

1.00207 |

0.99998 |

1.00000 |

0.99998 |

0.99998 |

1.33808 |

| 34 |

1.0 |

0.99956 |

1.00000 |

1.00000 |

1.00000 |

1.00000 |

1.01811 |

| 35(G5) |

1.0 |

1.05000 |

1.00001 |

1.00000 |

1.00000 |

1.00000 |

1.05077 |

| 36(G5) |

1.0 |

2.03075 |

0.99999 |

1.00000 |

1.00000 |

1.00000 |

1.20947 |

| Residual value | 3.62E-9 | 4.26E-9 | 5.31E-9 | 6.49E-9 | 5.35E-9 | 1.12E-0 | |

P* are the nominal (true) values, P(1) the values based on the 1st data set, P(1)+(2) based on the 1st, 2nd data sets together, P(1)+(2)+(3) based on the 1st, 2nd, and 3rd data sets, P(1)+…+(4) based on the 1st to 4th data sets, and P(1)+…+(5) based on the 5 data sets, respectively. (w) means results from 10% noises on the data. Correlated parameter groups are highlighted separately.

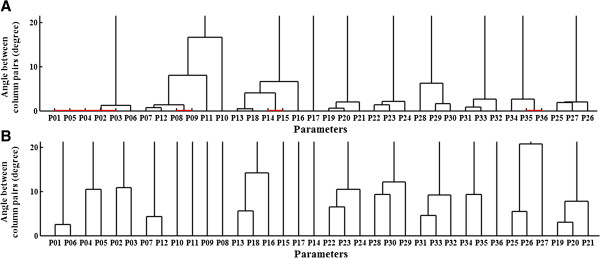

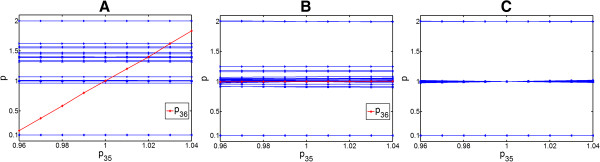

Figure 1A (upper panel) shows the angles between the columns of the state sensitivity matrix by fitting to the 1st data set. The zero angles (red lines) mean that the corresponding columns are pairwise parallel. According to Figure 1A, 4 pairwise correlated parameter groups (i.e. (p1, p2, p3, p4, p5), (p8, p9), (p14, p15), (p35, p36)) can be detected. However, these are not the same results as identified by the analysis of the model equations. This is because a dendrogram only shows pairwise correlations; it cannot detect higher order interrelationships among the parameters.

Figure 1.

Dendrogram. (A) Results from fitting to the 1st data set, where pairwise correlations in different groups exist (red lines). (B) Results from fitting to the 5 data sets together, where the pairwise correlations disappear.

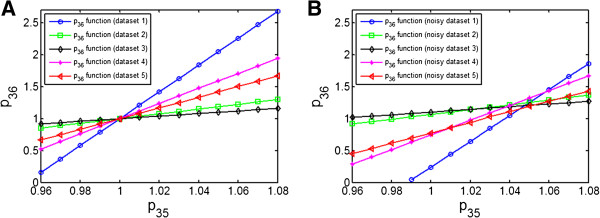

To illustrate the geometric interpretation, we first take the group of G5(p35, p36) as an example to construct ZREs, i.e. to plot the correlated relations between p35 and p36. This was done by repeatedly fitting the model to the 5 individual data sets with different inputs, respectively, with fixed values of p35. The resulting 5 zero residual surfaces (lines) in the subspace of p35 and p36 are shown in Figure 2A. As expected, the zero residual surfaces resulted from different data sets cross indeed at the true parameter point in the parameter subspace. Figure 2B shows the relations between p35 and p36 by fitting the parameters separately to the same 5 data sets on which a Gaussian distributed error of 10% was added. It can be seen that, due to the measurement noises, the crossing points of the nonzero residual surfaces are at different positions but near the true parameter point. Moreover, by comparing the lines in Figure 2A with Figure 2B, it can be seen that the corresponding zero residual surfaces and nonzero residual surfaces are indeed parallel, when fitting the same data set without noises or with noises, respectively.

Figure 2.

Correlated relations between p35 and p36 based on fitting the model to 5 individual data sets with different inputs. (A) Fitting to noise-free data sets. The 5 individual zero residual surfaces cross exactly at the true parameter point. It demonstrates that a zero residual surface from any data set will pass through the true parameter point and two data sets will be enough to determine p35 and p36. (B) Fitting to the data sets with 10% noise. The 5 individual nonzero residual surfaces cross near the true parameter point.

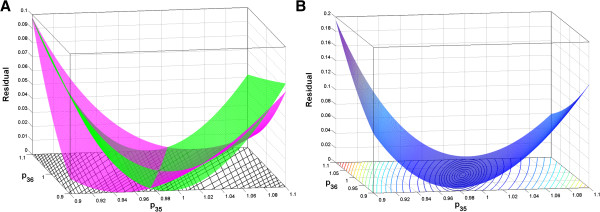

Figure 3 shows the residual surfaces based on fitting to 2 individual noise-free data sets (Figure 3A) and to the same 2 data sets together (Figure 3B). It is shown from Figure 3A that, due to the correlation, two hyperbolic cylinders are built by separately fitting to individual data sets. The bottom minimum lines of the two cylinders corresponding to the zero residual value cross at the true parameter point. Fitting to the two data sets together leads to an elliptic paraboloid (Figure 3B) which has only one minimum point with the zero residual value. This point is the true parameter point, which means the remedy of the correlation between p35 and p36.

Figure 3.

Residual surfaces of residual values as functions of p35 and p36. (A) Fitting to 2 individual noise-free data sets. (B) Fitting to the same 2 data sets together. The true parameter point corresponds to the crossing point in (A) and the minimum point in (B).

Since the maximum number of parameters among the correlation groups is 5, according to our approach, at least 5 data sets with different inputs are needed to uniquely determine the parameter set. The last column in Table 1 (P(1)+…+(5)) shows the parameter values from fitting the model to the 5 data sets together. It can be seen that all of the 36 parameter values fitted are almost at their true values. According our geometric interpretation, this means that the 5 zero residual surfaces expanded by together fitting to the 5 data sets cross at the true parameter point in the parameter subspace. Figure 1B (lower panel) shows these correlated relations indeed disappear based on the results of fitting to the 5 data sets together.

Moreover, it is shown in Table 1 (P(1)+(2)) that the correlation between p35 and p36 can be remedied by fitting two data sets together. As expected, it can be seen that in P(1)+(2) the parameters in G1 are not well fitted (i.e. 5 correlated parameters cannot be uniquely determined by two data sets). It is also interesting to see in P(1)+(2) the parameter values in G2, G3 and G4 are also not well estimated. This is because the degree of freedom of G2(p7, p8, p9, p10), G3(p13, p14, p15, p16), and G4(p28, p29, p30) is 3. Indeed, as shown in Table 1 (P(1)+(2)+(3)), these parameters are exactly determined based on fitting the model to 3 data sets together. However, it is shown in Table 1 from the parameter values of P(1)+(2)+(3) and P(1)+…+(4) that a number of data sets less than 5 is not enough to remedy the correlations of the parameters in G1.

To test the sensitivity of the parameter results to measurement errors, we also fitted the model to the same 5 data sets with different inputs and with 10% noise level together. As shown in the last column in Table 1 (P(1)+…+(5)(w)), to some extent, the parameter values identified are deviated from the true values due to an increased residual value. But the overall parameter quality is quite good. It means the crossing points of the 5 nonzero residual surfaces expanded by the 5 noisy data sets are quite close to the true parameter point.

Figure 4 shows profiles of all parameters as a function of p35, based on different number of data sets used for fitting. It is seen from Figure 4A that only p36 is correlated with p35 (red line). Moreover, it can be seen that, by fitting to one data set, the other parameters which have higher order interrelationships in other groups cannot be well determined. As shown in Figure 4B, the correlation between p35 and p36 is remedied by fitting to two data sets together and, moreover, the parameters tend to approach their true values (i.e. 0.1, 1.0 and 2.0, see Table 1). Finally, all parameters are uniquely determined (i.e. clearly at the three true values), when 5 data sets were used together for fitting the model, as shown in Figure 4C.

Figure 4.

Relationships of p35 with other parameters by fitting to different numbers of noise-free data sets with different inputs. (A) Relations between p35 and other parameters based on fitting to the 1st data set. (B) Relations between p35 and other parameters based on fitting to 1st and 2nd data sets together. (C) Relations between p35 and other parameters based on fitting to 5 data sets together.

These results clearly demonstrate the scope of our approach to identifying parameter correlations. Moreover, it is clearly seen that adding more data sets with different inputs can remedy the parameter non-identifiability problem in some complex models, but a necessary number of data sets with different inputs (5 for this example) is enough.

To illustrate a higher order interrelationship among parameters, estimations were made by separately fitting the model to 3 individual data sets to plot the relations of the parameters in G4(p28, p29, p30), as shown in Figure 5. It can be seen that the three zero residual surfaces (planes) resulted from the three individual data sets cross exactly at the true parameter point in the subspace of the 3 parameters. This demonstrates our geometric interpretation of parameter correlations, i.e. to estimate a group of three correlated parameters at least three distinct data sets with different inputs are needed.

Figure 5.

Relations between p28, p29 and p30 based on fitting the model to 3 individual noise-free data sets with different inputs. The fittings for p30 to each data set were made by fixed p28 and p29 with different values. Three zero residual surfaces are shown: the green plane is based on 1st data set, the red plane 2nd data set, and the blue plane 3rd data set. The three planes cross exactly at the true parameter point.

Since parameter correlations determined from the proposed approach are based on the structure of the state equations, our result provides a minimum number of different data sets with different inputs necessary for unique parameter estimation (5 in this example). This is definitely true, if all state variables (8 in this example) are measurable and included in the 5 data sets.

The results shown above are from the solutions of the parameter estimation problem based on the data sets composed of all 8 state variables. It is demonstrated that at least 5 data sets with different inputs will be needed to uniquely estimate the 36 parameters. However, our method does not give information on how many state variables which may be fewer than 8 but sufficient to identify the 36 parameters. To achieve this information, we tried to estimate the parameters based on the generated 5 data sets which include fewer measured state variables (as output variables). We checked the identifiability when the 5 data sets consist of data profiles of only a part of the state variables. Computational tests were carried out based on different combinations of the state variables included in the data sets. Table 2 shows the minimum sets of state variables which should be included in the data sets so as to achieve a successful fitting. It can be seen, for instance, the 36 parameters can be uniquely estimated in the case that only the first three state variables (i.e. x1, x2, x3) are included in the 5 data sets. Moreover, the generated data profiles of x7 and x8 are also enough for identifying the 36 parameters. Due to insufficient data, estimation runs with fewer numbers of the state variables than listed in Table 2 could not converge, i.e. the parameters will be non-identifiable.

Table 2.

Measurable variable sets for a successful fitting

| No. | Measured variables |

|---|---|

|

y1 |

(x1, x2, x3) |

|

y2 |

(x1, x2, x6) |

|

y3 |

(x1, x3, x5) |

|

y4 |

(x1, x5, x6) |

|

y5 |

(x2, x4, x6) |

|

y6 |

(x4, x5, x6) |

| y7 | (x7, x8) |

Different sets of state variables were used as measurable output variables included in the 5 data sets, respectively. This table shows the groups of a minimum number of state variables used as outputs for the parameter estimation which leads to the convergence to the true parameter point.

Conclusions

It is well recognized that parameters in many biological models are correlated. Finding the true parameter point remains as a challenge since it is hidden in these correlated relations. In many cases, a direct analysis of parameter correlations based on the output sensitivity matrix depends on experimental design, and the analytical relationship cannot be seen. Instead, we presented a method to analyse parameter correlations based on the matrix of the first order partial derivative functions of state equations which can be analytically derived. In this way, pairwise correlations and higher order interrelationships among the parameters can be detected. The result gives the information about parameter correlations and thus about the identifiability of parameters when all state variables are measurable for fitting the parameters. Since the output sensitivity matrix is a linear transformation of the matrix of first order partial derivative functions, our correlation analysis approach provides a necessary (but not sufficient) condition of parameter identifiability. That is, if there exist parameter correlations, the corresponding parameters are non-identifiable.

In addition, we introduced residual surfaces in the parameter subspace to interpret parameter correlations. Any point on a zero residual surface will result in a zero residual value. The crossing point of multiple zero residual surfaces leads to the true parameter point. Zero residual surfaces correspond to ZREs resulted from noise-free data sets used for fitting the parameters. If the ZREs are linearly independent (i.e. there are no correlations), the model parameters are identifiable, and otherwise they are non-identifiable. If more linearly independent ZREs can be constructed by adding new data sets with different inputs, the parameters are practically non-identifiable, otherwise they are structurally non-identifiable. In the case of practical non-identifiability the true parameter values can be found by together fitting the model to a necessary number of data sets which is the maximum number of parameters among the correlation groups. If the available measured data are from output variables, this should be regarded as the minimum number of data sets with different inputs required for unique parameter estimation. The results of the case study demonstrate the feasibility of our approach.

Moreover, an interesting result of our approach is that parameter correlations are not affected by the initial state. This means that, experimental runs can be conducted with any initial state to obtain the required data sets with different inputs. More interestingly, according to this result, different data sets with different inputs can be gained in one experimental run by changing the values of the control inputs. It is noted that the proposed approach does not address the identifiability issue of the initial states which would be a future research aspect.

The result of identifiable parameters determined by the proposed approach is theoretical. This means that the quality of the available data (the noise level, the length of sampling time, etc.) has an important impact on the identifiability issue. Parameters which are theoretically identifiable may not be identifiable by an estimator due to low quality of the data. Non-identifiability issues caused by relative data are not considered in this paper. In addition, the identification of parameter correlations based on the output equations is not considered in this paper.

Competing interests

The authors declare that they have no competing interests.

Author’s contributions

PL developed the methodology, wrote the manuscript and supervised the study. QDV wrote the software and designed the study. Both authors read and approved the final manuscript.

Supplementary Material

Derivation of the sensitivity matrix, partial derivative functions of the case study, and the values of control inputs for generating data sets in the case study.

Contributor Information

Pu Li, Email: pu.li@tu-ilmenau.de.

Quoc Dong Vu, Email: quoc-dong.vu@tu-ilmenau.de.

Acknowledgements

We thank M. Bartl, C. Kaleta, R. Guthke and H. M. La for carefully reading the manuscript and their suggestions for improving the description. This work has been supported by the PhD scholarship from Vietnamese government to QDV. In addition, we thank the anonymous reviewers for their comments that improved the manuscript.

References

- Ashyraliyev M, Fomekong-Nanfack Y, Kaandorp JA, Blom JG. Systems biology: parameter estimation for biochemical models. FEBS J. 2009;7:886–902. doi: 10.1111/j.1742-4658.2008.06844.x. [DOI] [PubMed] [Google Scholar]

- Chou IC, Voit EO. Recent developments in parameter estimation and structure identification of biochemical and genomic systems. Math Biosci. 2009;7:57–83. doi: 10.1016/j.mbs.2009.03.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jacquez JA. Design of experiments. J Franklin Inst. 1998;7:259–279. [Google Scholar]

- Kreutz C, Timmer J. Systems biology: experimental design. FEBS J. 2009;7:923–942. doi: 10.1111/j.1742-4658.2008.06843.x. [DOI] [PubMed] [Google Scholar]

- Bauer I, Bock HG, Körkel S, Schlöder JP. Numerical methods for optimum experimental design in DAE systems. J Comp Appl Math. 2000;7:1–25. doi: 10.1016/S0377-0427(00)00300-9. [DOI] [Google Scholar]

- Peifer M, Timmer J. Parameter estimation in ordinary differential equations for biological processes using the method of multiple shooting. IET Syst Biol. 2007;7:78–88. doi: 10.1049/iet-syb:20060067. [DOI] [PubMed] [Google Scholar]

- Zavala VM, Laird CD, Biegler LT. Interior-point decomposition approaches for parallel solution of large-scale nonlinear parameter estimation problems. Chem Eng Sci. 2008;7:4834–4845. doi: 10.1016/j.ces.2007.05.022. [DOI] [Google Scholar]

- Esposito WR, Floudas CA. Global optimization for the parameter estimation of differential-algebraic systems. Ind Eng Chem Res. 2000;7:1291–1310. doi: 10.1021/ie990486w. [DOI] [Google Scholar]

- Miró A, Pozo C, Guillén-Gosálbez G, Egea JA, Jiménez L. Deterministic global optimization algorithm based on outer approximation for the parameter estimation of nonlinear dynamic biological systems. BMC Bioinformatics. 2012;7:90. doi: 10.1186/1471-2105-13-90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gonzalez OR, Küper C, Jung K, Naval PC Jr, Mendoza E. Parameter estimation using simulated annealing for S-system models of biological networks. Bioinformatics. 2007;7:480–486. doi: 10.1093/bioinformatics/btl522. [DOI] [PubMed] [Google Scholar]

- Kikuchi S, Tominaga D, Arita M, Takahashi K, Tomita M. Dynamic modelling of genetic networks using genetic algorithm and S-system. Bioinformatics. 2003;7:643–650. doi: 10.1093/bioinformatics/btg027. [DOI] [PubMed] [Google Scholar]

- Rodriguez-Fernandez M, Egea JA, Banga JR. Novel metaheuristic for parameter estimation in nonlinear dynamic biological systems. BMC Bioinformatics. 2006;7:483. doi: 10.1186/1471-2105-7-483. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gutenkunst RN, Waterfall JJ, Casey FP, Brown KS, Myers CR, Sethna JP. Universally sloppy parameter sensitivities in systems biology models. PLoS Comp Biol. 2007;7:1871–1878. doi: 10.1371/journal.pcbi.0030189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Raue A, Kreutz C, Maiwald T, Klingmüller U, Timmer J. Addressing parameter identifiability by model-based experimentation. IET Syst Biol. 2011;7:120–130. doi: 10.1049/iet-syb.2010.0061. [DOI] [PubMed] [Google Scholar]

- Raue A, Kreutz C, Maiwald T, Bachmann J, Schilling M, Klingmüller U, Timmer J. Structural and practical identifiability analysis of partially observable dynamical models by exploiting the profile likelihood. Bioinformatics. 2009;7:1923–1929. doi: 10.1093/bioinformatics/btp358. [DOI] [PubMed] [Google Scholar]

- McLean KAP, McAuley KB. Mathematical modelling of chemical processes-obtaining the best model predictions and parameter estimates using identifiability and estimability procedures. Can J Chem Eng. 2012;7:351–365. doi: 10.1002/cjce.20660. [DOI] [Google Scholar]

- Chappel MJ, Godfrey KR, Vajda S. Global identifiability of the parameters of nonlinear systems with specified inputs: a comparison of methods. Math Biosci. 1990;7:41–73. doi: 10.1016/0025-5564(90)90055-4. [DOI] [PubMed] [Google Scholar]

- Meshkat N, Eisenberg M, DiStefano JJ III. An algorithm for finding globally identifiable parameter combinations of nonlinear ODE models using Gröbner bases. Math Biosci. 2009;7:61–72. doi: 10.1016/j.mbs.2009.08.010. [DOI] [PubMed] [Google Scholar]

- Chis OT, Banga JR, Balsa-Canto E. Structural identifiability of systems biology models: a critical comparison of methods. PLoS ONE. 2011;7:e27755. doi: 10.1371/journal.pone.0027755. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vajda S, Rabitz H, Walter E, Lecourtier Y. Qualitative and quantitative identifiability analysis of nonlinear chemical kinetic models. Chem Eng Comm. 1989;7:191–219. doi: 10.1080/00986448908940662. [DOI] [Google Scholar]

- Yao KZ, Shaw BM, Kou B, McAuley KB, Bacon DW. Modeling ethylene/butene copolymerization with multi-site catalysts: parameter estimability and experimental design. Polym React Eng. 2003;7:563–588. doi: 10.1081/PRE-120024426. [DOI] [Google Scholar]

- Chu Y, Jayaraman A, Hahn J. Parameter sensitivity analysis of IL-6 signalling pathways. IET Syst Biol. 2007;7:342–352. doi: 10.1049/iet-syb:20060053. [DOI] [PubMed] [Google Scholar]

- Cintrón-Arias A, Banks HT, Capaldi A, Lloyd AL. A sensitivity matrix based methodology for inverse problem formulation. J Inv Ill-Posed Problems. 2009;7:545–564. [Google Scholar]

- Dobre S, Bastogne T, Profeta C, Barberi-Heyob M, Richard A. Limits of variance-based sensitivity analysis for non-identifiability testing in high dimensional dynamic models. Automatica. 2012;7:2740–2749. doi: 10.1016/j.automatica.2012.05.004. [DOI] [Google Scholar]

- Steiert B, Raue A, Timmer J, Kreutz C. Experimental design for parameter estimation of gene regulatory networks. PLoS ONE. 2012;7:e40052. doi: 10.1371/journal.pone.0040052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hengl S, Kreutz C, Timmer J, Maiwald T. Data-based identifiability analysis of nonlinear dynamical models. Bioinformatics. 2007;7:2612–2618. doi: 10.1093/bioinformatics/btm382. [DOI] [PubMed] [Google Scholar]

- Bachmann J, Raue A, Schilling M, Böhm ME, Kreutz C, Kaschek D, Busch H, Gretz N, Lehmann WD, Timmer J, Klingmüller U. Division of labor by dual feedback regulators controls JAK2/STAT5 signaling over broad ligand range. Mol Sys Bio. 2011;7:516. doi: 10.1038/msb.2011.50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chu Y, Hahn J. Parameter set selection for estimation of nonlinear dynamic systems. AIChE J. 2007;7(11):2858–2870. doi: 10.1002/aic.11295. [DOI] [Google Scholar]

- Quaiser T, Mönnigmann M. Systematic identifiability testing for nambiguous mechanistic modeling – application to JAK-STAT, MAP kinase, and NF-κB signaling pathway models. BMC Syst Biol. 2009;7:50. doi: 10.1186/1752-0509-3-50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mendes P. Foundations of Systems Biology. Cambridge MA: MIT Press; 2001. Modeling large biological systems from functional genomic data: parameter estimation; pp. 163–186. [Google Scholar]

- Moles CG, Mendes P, Banga JR. Parameter estimation in biochemical pathways: a comparison of global optimization methods. Genome Res. 2003;7:2467–2474. doi: 10.1101/gr.1262503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rodriguez-Fernandez M, Mendes P, Banga JR. A hybrid approach for efficient and robust estimation in biochemical pathways. Biosystems. 2006;7:248–265. doi: 10.1016/j.biosystems.2005.06.016. [DOI] [PubMed] [Google Scholar]

- Faber R, Li P, Wozny G. Sequential parameter estimation for large-scale systems with multiple data sets. I: computational framework. Ind Eng Chem Res. 2003;7:5850–5860. doi: 10.1021/ie030296s. [DOI] [Google Scholar]

- Zhao C, Vu QD, Li P. A quasi-sequential parameter estimation for nonlinear dynamic systems based on multiple data profiles. Korean J Chem Eng. 2013;7:269–277. doi: 10.1007/s11814-012-0158-1. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Derivation of the sensitivity matrix, partial derivative functions of the case study, and the values of control inputs for generating data sets in the case study.