Abstract

Historically, there has been considerable variability in how formative evaluation has been conceptualized and practiced. FORmative Evaluation Consultation And Systems Technique (FORECAST) is a formative evaluation approach that develops a set of models and processes that can be used across settings and times, while allowing for local adaptations and innovations. FORECAST integrates specific models and tools to address limitations in program theory, implementation, and evaluation. Since FORECAST was first used in a federally funded community prevention project in the early 1990s, evaluators have incorporated important formative evaluation innovations into it, including the integration of feedback loops and proximal outcome evaluation. In addition, FORECAST has been applied in a randomized community research trial. In this article, we describe updates to FORECAST and the implications of FORECAST for ameliorating failures in program theory, implementation, and evaluation.

Keywords: Formative evaluation, Logic model, Implementation science

Much has changed in the epistemology of evaluation since Scriven’s (1967) seminal work in which he introduced formative and summative evaluation. According to Scriven (1991), the proper function of evaluation is making a judgment of a program’s value or effectiveness, and formative evaluation is regarded as a strategy for providing a preview, or “early-warning,” of summative evaluation results. Today, we know that many community-based programs are continuously forming and constantly shifting and adapting to dynamic needs and unforeseen challenges.

Rossi, Lipsey, and Freeman (2004) emphasize the use of formative evaluation for program improvement. We build on this and suggest that formative evaluation is primarily oriented to ameliorating limitations or failures in program theory (idea), implementation, and evaluation (Wandersman, 2009). Theory, implementation, and evaluation are everywhere – e.g., in architecture, sports, politics, and in health and human services. Regardless of the specific content application, there is a need for quality in theory, implementation, and evaluation to ameliorate limitations that may reduce the accomplishment of desired outcomes.

Theory failure occurs when a planned program, process, or set of strategies is not well articulated or insufficient for reaching desired outcomes (Anderson, 2005; Shapiro, 1982). Implementation failure occurs when a planned process or set of strategies is not properly put into practice (e.g., low implementation fidelity or adherence) (Durlak & DuPre, 2008; Fixsen, 2005; Meyers, Durlak, & Wandersman, 2012). There is a risk of evaluation failure when the collection and use of data does not adequately meet stakeholders’ needs for accurately measuring what actually did occur in an intervention (Chelimsky, 1997; Scheirer et al., 2012; Wandersman, 2009).

FORmative Evaluation Consultation And Systems Technique (FORECAST) is a systematic approach to formative evaluation that offers specific models and tools for ameliorating limitations in program theory, implementation, and evaluation. There are surprisingly few systematic (standardized) formative evaluation approaches (Stetler et al., 2006). For example, a large number of models and tools have been designed and adopted as part of the theory-driven evaluation approach, including those developed by the University of Wisconsin Cooperative Extension (Taylor-Powell, Steele, & Douglah, 1996), the W.K. Kellogg Foundation (2001), the Centers for Disease Control and Prevention (1999), and a variety of other organizations and agencies; yet there is minimal consistency and uniformity in their selection, use, and integration.

Below we outline how FORECAST is used. Because the original article appeared in a journal supplement (Goodman & Wandersman, 1994) and is not easily accessible, we present the basics of FORECAST here.

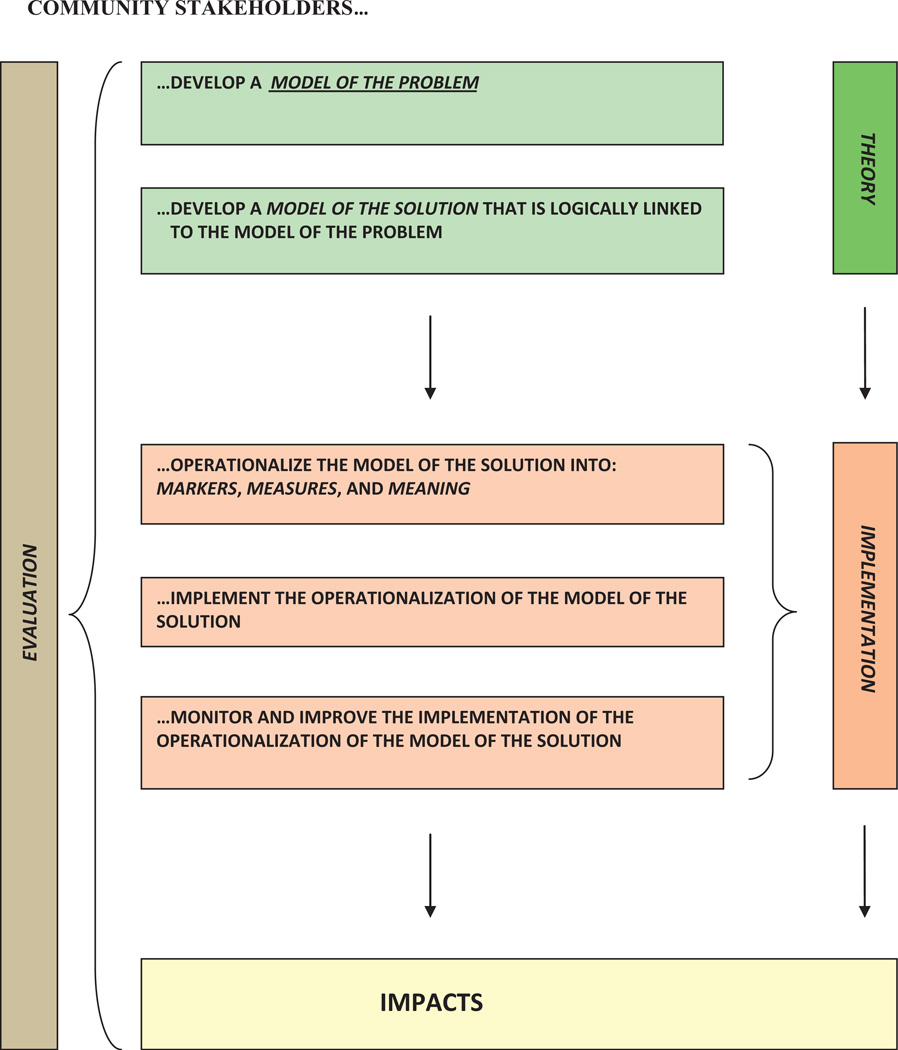

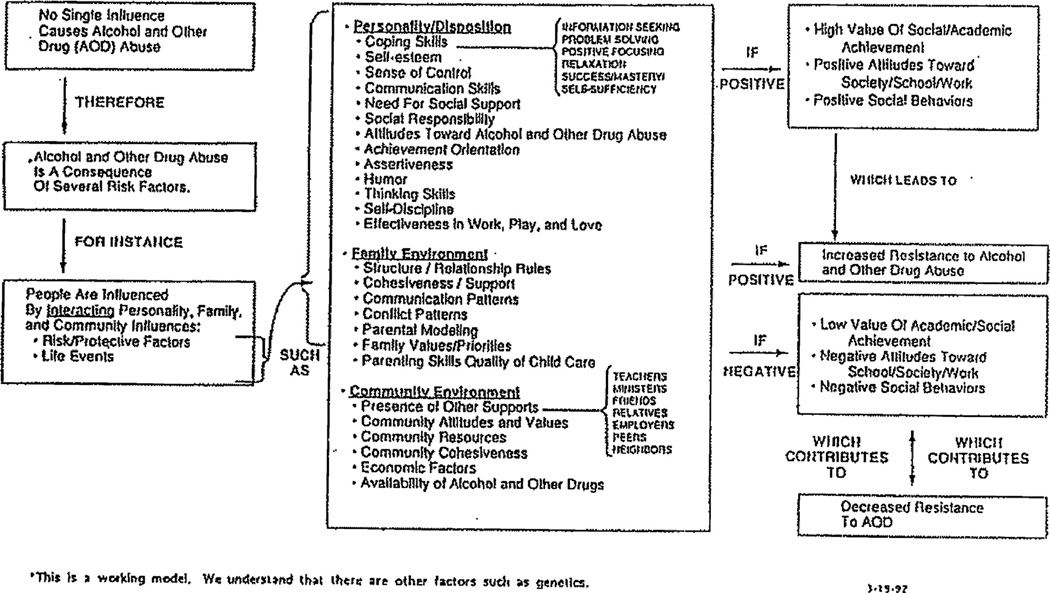

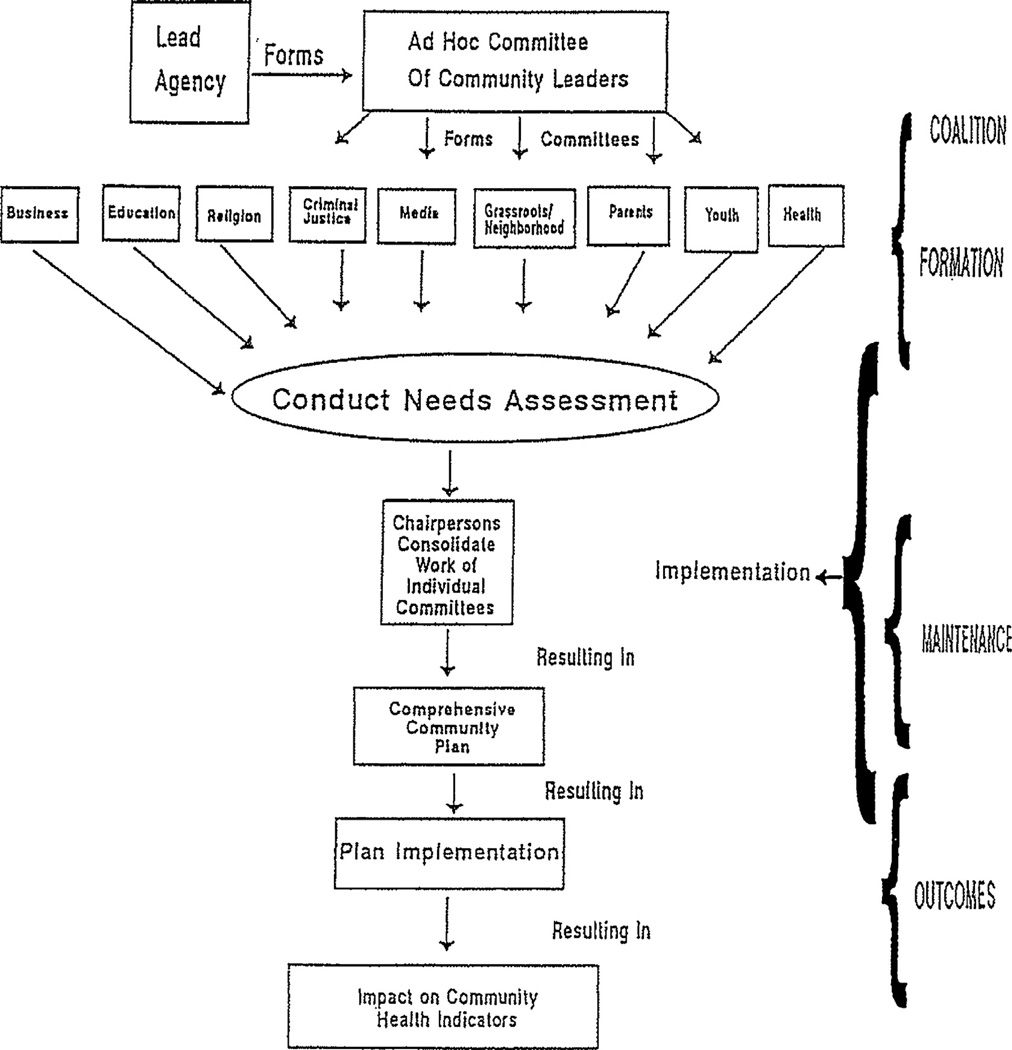

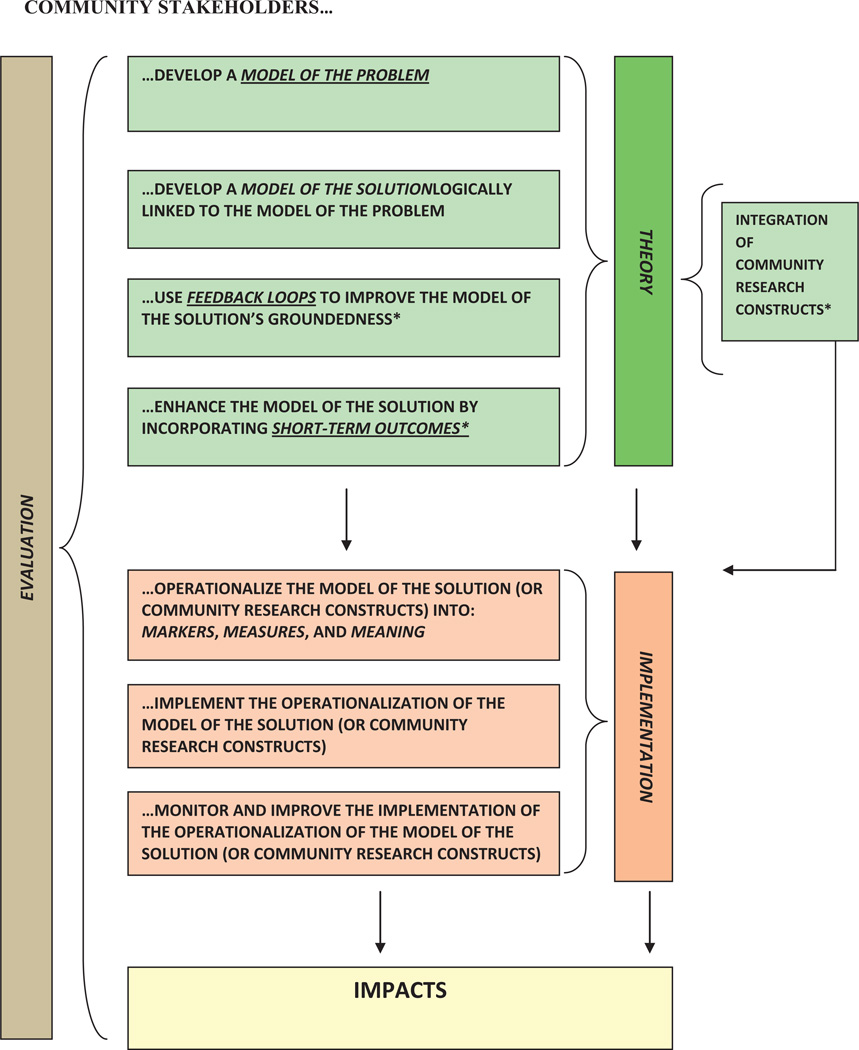

As illustrated in Fig. 1, FORECAST helps to enhance program theory by integrating the use of two logic models. First, evaluators and program members develop a model of the problem that diagrams the perceived causes and effects of a health or social problem of interest. In the initial application of FORECAST (Goodman & Wandersman, 1994), risk and protective factors for substance abuse were identified at multiple levels of analysis (e.g., individual differences, family influences, and community influences) (see Fig. 2). Second, evaluators and program members develop a model of the solution to depict how the program or intervention will address the problem (see Fig. 3). In this application of FORECAST, the model of the solution consisted of the development of a coalition and of activities that were logically linked to risk factors and intended to disrupt pathways leading to negative substance abuse outcomes. The model of the problem and the model of the solution are logically linked; in concert, the two models promote a strategic approach to developing a program’s theory of change.

FORECAST also helps to enhance program implementation through markers, measures, and meaning, which are used in concert to translate the model of the solution into actionable activities and a timeline. Markers are short-term steps that can assess incremental achievement in implementing the model of the solution; they serve as reference points for real-time process monitoring and mid-course corrections during implementation. Measures assess whether markers are accomplished by a certain timeline, and meaning provides quality standards or benchmarks for implementation of markers. Throughout implementation, program stakeholders use markers, measures, and meaning to identify planned and unplanned deviations from the model of the solution.

Fig. 1.

FORECAST logic model.

Fig. 2.

Community prevention model for alcohol and other drug (AOD) abuse.

Fig. 3.

Overview of the development of a community coalition.

Feedback by evaluators about unplanned deviations can prevent implementation failures by facilitating mid-course corrections. When deviations are planned and strategic, the evaluators can document why and how these shifts occurred. By documenting such alterations, FORECAST facilitates planned modifications that reflect realities confronted during implementation that were not anticipated during the initial planning phase (Goodman & Wandersman, 1994). Markers, measures, and meaning allow for input from stakeholders and the community to improve implementation over time (Table 1).

Table 1.

Selected markers and measures in the formation and planning stage of a partnership.

| Timeline | SADAC and Steering Committee Check List, February, 1992 | SADAC and Steering Committee Measures for Markers, February, 1992 |
|---|---|---|
| | How effective is the S.T.O.P. Drugs Now Steering Committee in collaboration with SADAC in initiating the project? | |
| | Staffing | Staffing |
| (4/15) | 1 – timely hiring of a qualified (masters prepared) project director | 1 – dates of hire for Sheryl, credentials, resume |
| (4/15) | 2 – timely hiring of qualified program coordinators | 2 – dates of hire for program coordinators, resume |
| (4/15+) | 3 – timely hiring of other project staff | 3 – dates of hire for other staff, resumes |
| (4/15+) | 4 – staff oriented to the project/additional training | 4 – Perc, OSAP, and SCCADA trainings, list of who attended, dates attended, agendas, OSAP meetings in Washington DC, other training |
| (4/15+) | 5 – assure that staff has certain qualifications as specified in the models of action | 5 – credentials of staff, resumes |
| (4/15) | 6 – assure that recruitment strategies are consistent with the models of action | 6 – type of recruitment, resumes of staff, copies of letters |
| | Project structure | Project structure |
| (2/15 and 4/15) | 7 – developing the organizational structure consistent with the project’s mission and operations | 7 – development of logic model and checklist. Following these documents will assure that the process is implemented according to the grant. |
| | Project functions | Project functions |
| (4/15) | 8 – identifying project leader for planning and development | 8 – job description for Sheryl |
| (4/15+) | 9 – providing oversight for project operations | 9 – Sheryl, minutes, staff meetings, copies of correspondence |
| (4/15+) | 10 – holding meetings with staff every two weeks | 10 – meetings with Sheryl, staff minutes, agenda, list of who attended |
| (4/15+) | 11 – coordinating with SCCADA | 11 – frequency of exchange of information, copies of correspondence, phone log |
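Table 1 pairs each marker with a timeline date and a measure, while meaning supplies the benchmark against which the measure is judged. The bookkeeping this implies can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not part of the original FORECAST materials: the `Marker` class, the `review` function, the evidence keys, and the numeric benchmarks (90% of expected biweekly meetings documented, all planned coordinators hired) are hypothetical, and the year 1992 is taken from the table heading.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List

Evidence = Dict[str, float]  # hypothetical: documentation tallied as numbers


@dataclass
class Marker:
    """A short-term implementation step plus its measure and meaning."""
    label: str                            # the checklist item (marker)
    due: date                             # timeline: when it should be accomplished
    measure: Callable[[Evidence], float]  # turns documentation into a score
    meaning: float                        # hypothetical benchmark the score must reach


def review(markers: List[Marker], evidence: Evidence, today: date) -> List[str]:
    """Return labels of markers that are due but fall short of their benchmark."""
    return [
        m.label
        for m in markers
        if today >= m.due and m.measure(evidence) < m.meaning
    ]


markers = [
    Marker(
        "10 - holding meetings with staff every two weeks",
        date(1992, 4, 15),
        # hypothetical measure: share of expected meetings documented in minutes
        lambda ev: ev["meetings_documented"] / ev["meetings_expected"],
        0.9,
    ),
    Marker(
        "2 - timely hiring of qualified program coordinators",
        date(1992, 4, 15),
        # hypothetical measure: share of planned coordinators actually hired
        lambda ev: ev["coordinators_hired"] / ev["coordinators_planned"],
        1.0,
    ),
]

# Hypothetical evidence gathered at a mid-course review.
evidence = {"meetings_documented": 5, "meetings_expected": 6,
            "coordinators_hired": 2, "coordinators_planned": 2}

print(review(markers, evidence, date(1992, 7, 1)))
# ['10 - holding meetings with staff every two weeks']
```

A flagged marker is a prompt for discussion rather than a verdict: stakeholders still decide whether the shortfall is an unplanned deviation that calls for a mid-course correction or a planned adaptation to be documented.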

Finally, the FORECAST approach as a whole helps to enhance evaluation accuracy and utilization; it serves as a vehicle for data-informed decision making about program formation, implementation, and the production of outcomes along the lifespan of a community project.

The goal for FORECAST evaluators is to assure that programs are successfully planned, implemented, and evaluated so that they may produce the desired outcomes. FORECAST is designed to be a highly participatory approach. Multiple stakeholders are involved in FORECAST (e.g., program staff, steering committees, evaluators); FORECAST encourages collaboration between evaluators and other program stakeholders in using the previously described models and tools (Goodman & Wandersman, 1994).

In summary, FORECAST is a collaborative formative evaluation approach that consists of specific models and tools designed to improve programs. The FORECAST models (model of the problem, model of the solution) are linked through a strategic problem-solving logic that articulates how a program intends to bridge needs and desired outcomes (Rossi et al., 2004). Markers, measures, and meaning work in concert to operationalize a program’s theory of change into action tasks to be carried out during implementation, allowing for ongoing monitoring, feedback, and mid-course corrections when appropriate. The overall FORECAST system includes specialized components and techniques (including the development and integration of models, markers, measures, and meaning) that are made available to stakeholders in communities.

In this article, we first describe recent applications of FORECAST and innovations that move toward a new and improved FORECAST. We then describe how these innovations, which build on the original (1994) model, can ameliorate failures in program theory, implementation, and evaluation.

1. Updating FORECAST

Two innovations made to FORECAST, using feedback loops in logic models and incorporating proximal outcomes into a theory of change, improve upon several limitations of the original 1994 FORECAST. Below we describe these innovations and how they help address those limitations.

2. Using feedback loops in logic models

In designing logic models, it is important to account for social/ecological factors that can affect program functioning. This is strikingly apparent in community prevention projects that address challenging problems like sexual violence. The Centers for Disease Control and Prevention funded several community-based demonstration projects of promising programs to reduce the prevalence of sexual violence among teenagers (Goodman & Noonan, 2009). Evaluators were hired to work with community stakeholders to develop a logic model for each demonstration project as part of a FORECAST evaluation.

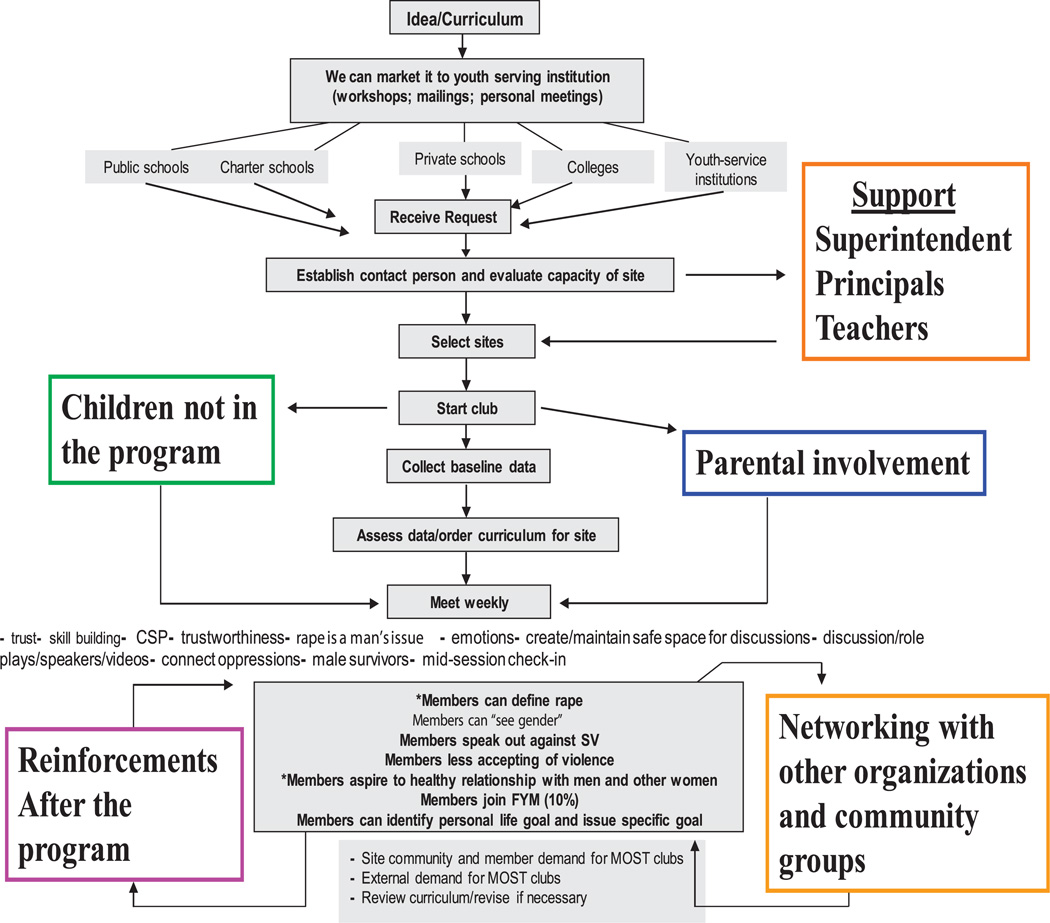

The logic model for one urban area project called Men of Strength Club consisted of a set of linear action steps that should occur if the initiative developed according to plan, e.g., if a valid curriculum is identified, then the curriculum should be marketed to public schools, charter schools, private schools, colleges and youth-serving institutions; if such marketing takes place, then public schools, charter schools, private schools, colleges and youth-serving institutions should be reached; if such institutions are effectively reached with a valid curriculum, then the institutions should request more information about the curriculum. These “if … then” statements continued vertically down the model until impacts were accomplished.

It became apparent that the logic model’s linear action steps were not commensurate with challenging contextual factors, including lack of support by school personnel, the untoward influence of students not in the program on those who are in the program, lack of active parental involvement and support, minimal involvement of other local organizations as support systems for the students, and an appreciable lack of mechanisms to sustain desirable outcomes once the program ends.

In light of these challenging contextual factors, a different type of logic model was needed for the Men of Strength Club, one that was grounded in the community and could incorporate a less linear and more recursive way of depicting program formation. Feedback loops were added to the program’s logic model to allow for a stronger fit with the implementation context. In Fig. 4 we illustrate how FORECAST was used in this project to accommodate contextual factors that would otherwise interfere with the program logic model’s trajectory toward desired outcomes.

Fig. 4.

Model of the solution for the Men of Strength Club.

Feedback loops help to bridge the gap between program theory and implementation context, as the latter is anticipated and incorporated into the program theory. A feedback loop curves up and out from an “if” statement to address a barrier and then curves back to a “then” statement. For example, “lack of support for a violence prevention curriculum by school personnel” can disrupt the logical proposition “if educational institutions are effectively reached with a valid curriculum, then the institutions should request more information about the curriculum.” The model of the solution would then include an intervention to increase support for the curriculum.

Since communities are multifaceted, complex, and non-linear, it is important for stakeholders to develop program models that are consistent with the complexity of the surrounding context. The use of feedback loops in FORECAST modifies a strictly linear model to address barriers to implementation and thereby improves the likelihood of positive program outcomes.
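The feedback loop described above can be sketched as data attached to a single “if … then” link of an otherwise linear chain. In this minimal Python sketch, which is our illustration rather than a FORECAST tool, the step wording is abridged from the Men of Strength Club chain, and the names `links`, `unaddressed_barriers`, `BARRIER`, and the dictionary layout are hypothetical. A barrier without an intervention marks a link where the linear model is likely to break down during implementation.

```python
from typing import Dict, List, Optional, Tuple

Link = Tuple[str, str]  # (if_step, then_step)

# Abridged linear "if ... then" chain from the Men of Strength Club logic model.
steps = [
    "valid curriculum identified",
    "curriculum marketed to schools, colleges, and youth-serving institutions",
    "institutions effectively reached with a valid curriculum",
    "institutions request more information about the curriculum",
]


def links(chain: List[str]) -> List[Link]:
    """Pair each 'if' step with the 'then' step that follows it."""
    return list(zip(chain, chain[1:]))


def unaddressed_barriers(
    chain: List[str], loops: Dict[Link, Dict[str, Optional[str]]]
) -> List[Tuple[str, str, str]]:
    """List (if_step, then_step, barrier) for barriers with no intervention yet."""
    gaps = []
    for link in links(chain):
        for barrier, intervention in loops.get(link, {}).items():
            if intervention is None:
                gaps.append((link[0], link[1], barrier))
    return gaps


BARRIER = "lack of support for a violence prevention curriculum by school personnel"
reach = (steps[2], steps[3])

# Feedback loop identified, but no intervention in the model of the solution yet.
loops = {reach: {BARRIER: None}}
print(len(unaddressed_barriers(steps, loops)))  # 1

# The loop closes once the model of the solution includes an intervention.
loops[reach][BARRIER] = "intervention to increase support for the curriculum"
print(unaddressed_barriers(steps, loops))  # []
```

Keeping barriers keyed to specific links, rather than listing them separately, preserves the point that each contextual factor threatens a particular step in the theory of change.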

3. Incorporating proximal outcomes into a theory of change

STEPs to STEM evaluators have recently used FORECAST not only to plan, monitor, and improve procedural/process-based steps, but also to account for substantive proximal (short-term) accomplishments derived through project activities. STEPs to STEM is a University of South Carolina project sponsored by the National Science Foundation’s (NSF) Science, Technology, Engineering, and Mathematics Talent Expansion Program (STEP). The primary goals of the project are to increase the enrollment, retention, and graduation of transfer students in science, technology, engineering, and mathematics (STEM) majors.

Evaluators recommended to the program’s coordinators and staff that proximal outcomes be integrated into modeling and data collection activities. The rationale for this innovation was twofold: feedback about proximal outcome evaluation results was expected to help build momentum for later program success (e.g., “small wins”; Weick, 1984), and feedback about proximal outcomes not being accomplished would suggest the need for improvements in either theory or implementation.

Sense of community, a “feeling that members have of belonging, a feeling that members matter to one another and to the group, and a shared faith that members’ needs will be met through their commitment to one another” (McMillan & Chavis, 1986), was selected as a short-term outcome in light of research on college students’ sense of community as a predictor of improved retention and graduation (Kuh, Kinzie, Schuh, Whitt, et al., 2005; Tinto, 1993). Interventions to address sense of community may be particularly appropriate for transfer students who are struggling to acclimate to a large university (Townsend & Wilson, 2006). Several ongoing activities in STEPs to STEM’s model of the solution, including monthly social events and summer research internships, were expected to increase students’ sense of community as a proximal outcome.

At the beginning of the program, FORECAST models and tools were used to develop a program theory that incorporated sense of community as a proximal outcome. After an initial period of implementation (and in accordance with the timeline reflected in the theory of change), stakeholders collected proximal outcome evaluation data. We will highlight one of the findings.

STEPs to STEM students have significantly higher sense of community when they participate in a greater number of internship experiences, but there are a finite number of internship slots per semester and hence not everyone can participate. Therefore, it appears that permitting students to participate in repeated internship experiences allows for a subgroup of students to be more intensively targeted for cumulative enhancements in sense of community. While limiting students to a single internship may be beneficial from a scalability perspective, it may attenuate the noted impact of repeated internships on a subset of students’ sense of community.

STEPs to STEM evaluators and other program stakeholders subsequently revisited the theory of change and had a focused dialog about the role and purpose of internships in the program’s theory of change. On a broader level, conducting proximal outcome evaluation in FORECAST can help stakeholders in identifying ambiguities, inconsistencies, or inaccurate assumptions within a theory of change to inform strategic adaptations and/or strengths to be sustained.

4. Using formative evaluation in community-based randomized trial research

There are clear limitations to top-down approaches that rely first on randomized controlled efficacy trials and then on effectiveness trials to show the feasibility of dissemination into community settings (Wandersman et al., 2008). In general, these approaches may be artificial and impractical for real-world stakeholders to adopt, and they may leave stakeholders questioning the usefulness and feasibility of implementing the approach over long periods (Chen & Garbe, 2011). In the PATH trial described below, the FORECAST approach provided a tool for implementing a bidirectional approach to intervention. The researchers provided an ecological model to identify both access and safety issues relevant to the intervention; the community provided context and local knowledge that helped operationalize the theory and expand ideas about how to enhance the intervention. The approach also allowed researchers to adapt to the changing needs of the communities to increase reach and sustainability.

Positive Action for Today’s Health (PATH) is a multi-community randomized trial with participatory evaluation features, funded by the National Institutes of Health (Wilson et al., 2010). The trial examined the efficacy and cost-effectiveness of an environmental intervention for improving safety and access for physical activity in three underserved African American communities. Three matched communities (based on poverty, ethnic minority percentage, crime rate, and rate of physical activity) were randomized to receive one of three PATH programs: (1) a police-patrolled walking program plus social marketing strategies intervention to promote walking (full intervention), (2) a police-patrolled walking program only, or (3) general health education only.

PATH is based on a social ecological model (Booth et al., 2001; Cochrane & Davey, 2008; Coulon et al., 2012; Kumanyika et al., 2007; Wilson et al., 2010), which addresses the interactive relationships between the individual, interpersonal, community organization, societal, and physical environment levels of influence. The intervention programs were developed based on ecological theory and targeted essential elements within intrapersonal, interpersonal, social and physical environmental, and community levels.

FORECAST was used in the intervention communities for ongoing program development, with systematic and ongoing feedback communicated between intervention staff and local Steering Committees. It helped to define difficult problems on multiple levels of analysis and to develop locally grounded solutions (a community-based feedback approach) (Coulon et al., 2012).

The PATH trial applied FORECAST components to tailor evaluation methods and to facilitate the ongoing program adaptation needed to maintain cultural and contextual relevance with community input. For example, the local steering committee in the full intervention community used FORECAST models and tools, along with data from community focus groups, as they worked with a social marketing firm to ensure that social marketing messages, materials, and distribution strategies were appropriate for the community context and culture. Within the PATH program, goals related to interpersonal-level elements such as social norms for walking changed over time as Walking Leaders and the Steering Committee developed their efforts to engage community residents (Wilson et al., 2012). In collaboration with a social marketing firm, the Steering Committee organized Pride Strides, walks led by trained neighborhood residents, community leaders, or local organizations. Initial program goals for Pride Strides included recruiting 1–2 program leaders each month, with approximately 8–16 Pride Strides completed monthly. As the program developed, Walking Leaders and walkers noted that 10 monthly Pride Strides were feasible, and that engaging local organizations to lead Pride Strides would help to promote the program (initially, only individual Leaders were targeted). Program goals and delivery were modified accordingly. A final adaptation based on participatory feedback and FORECAST data was to build an infrastructure for formally and consistently including churches in the program and its Pride Strides. “Church Challenge” walks were planned to encourage local churches to compete and demonstrate a strong commitment to health by walking frequently with the program. As a result, participation and engagement of new walkers increased dramatically, and the program maintained high levels of participation during the summer months despite peak temperatures (Coulon et al., 2012).

Both the researchers and the community stakeholders benefited from the use of FORECAST. Community stakeholders developed and implemented markers, measures, and meaning in light of their needs and resources. As a result, interventions were more likely to have greater reach and sustainability. From the research team’s perspective, the community-based participatory process of program development and implementation helped to operationally define the randomized trial’s interventions in ways that enhanced the trial’s transferable validity, i.e., the ability of a program to transfer effectively from the research setting to the real-world setting (Chen & Garbe, 2011). In brief, FORECAST helped to operationalize the theory in PATH and to monitor and influence implementation. By facilitating community-researcher changes in the plan and helping the community and researchers monitor implementation, FORECAST enhanced the theory, the implementation, and the accuracy of the evaluation of PATH.

5. Summary of FORECAST innovations

Below we briefly summarize the use of FORECAST in these three projects, each of which represents a new context for FORECAST’s original logic model (see Fig. 1). Each project developed promising formative evaluation innovations that are included in an updated FORECAST system.

The linear nature of FORECAST modeling was an initial limitation. By incorporating feedback loops, we have started to address this limitation. Feedback loops promote a less discursive theory that takes into account interactions between a simpler linear theory of change and its implementation context. Understanding how feedback loops improve program theory also anticipates how they improve implementation: a program theory that incorporates feedback loops proactively accounts for many challenges that would otherwise arise during implementation, thereby constructing a more social-ecologically valid picture of a community program’s circumstances.

Another initial limitation of FORECAST was an overreliance on process evaluation. When used in formative evaluation, process evaluation can help raise implementation quality. There is an important caveat, however: process evaluation is oriented to revealing flaws in implementation but not in theory, and if the program theory is flawed, outcomes will suffer no matter how well the program is implemented. Integrating proximal outcomes into the theory of change that guides evaluation can point stakeholders to areas of program theory that require clarification and improvement, providing information beyond what process evaluation alone would yield.

The participatory aspect of FORECAST is especially conducive to a breadth of applications, including formative evaluation in community-based randomized trial research. As seen in PATH, using FORECAST in a structured program – even a randomized community trial – can assist with the translation of research constructs into locally grounded problem definitions and practical solutions. The experience of PATH demonstrates how participants in a community-based intervention trial can benefit from the FORECAST process in shaping and redirecting efforts to meet local needs within the parameters of a trial.

6. Implications for improving program theory, implementation, and evaluation

Innovations to FORECAST from the projects described in this article are reflected in an updated logic model for a “new and improved” FORECAST, or “FORECAST 2.0” (see Fig. 5), which builds these key innovations on the foundation of the original (1994) FORECAST approach. The FORECAST 2.0 logic model integrates formative evaluation contributions derived from practice for improving program theory, implementation, and evaluation, and for decreasing failures. Below we describe some of these innovations.

Fig. 5.

FORECAST 2.0 logic model. An asterisk (*) indicates a component that was not included in the original FORECAST logic model.

6.1. Innovations for improving program theory

FORECAST 2.0 integrates two logic models (model of the problem, model of the solution) that were used in the original (1994) FORECAST model along with several additions to enhance program theory. We highlight benefits derived via the inclusion of feedback loops and proximal outcomes in logic models.

Using feedback loops in logic models promotes the development of an ecologically grounded theory of change: stakeholders build into the theory of change the contextual factors that, if left unaccounted for, would interfere with implementation and act as “noise.”

A focus on proximal outcomes in FORECAST 2.0 helps stakeholders to be more rigorous and logic-driven in developing theories of change. By virtue of identifying and integrating proximal outcomes that are logically linked to longer-term impacts, stakeholders are better able to articulate the underlying logic and temporal linkages occurring between a program’s process trajectory (e.g., strategies, activities) and its long range desired impacts (Coie et al., 1993; Glasgow & Emmons, 2007).

6.2. Innovations for improving implementation

FORECAST 2.0 integrates the planning tools and process evaluation elements (markers, measures, and meaning) included in the original FORECAST model, along with an innovative community-based research application. Markers, measures, and meaning can be used in randomized community research projects to assist stakeholders in operationalizing research constructs based on their indigenous needs and resources. Although we focus specifically on the PATH project in this article, we expect that other community-based research projects could benefit from using FORECAST in a similar way.

Feedback loops help to improve not only theory but also implementation. When feedback loops are used to develop an ecologically grounded theory of change, the ecological context for operationalization is accounted for prospectively, which promotes a smoother transition from a theory on paper to its operationalization and implementation within a community. Feedback loops can ease the translation of theory into practice and make it more commensurate with the setting, thus helping stakeholders to avoid a troublesome gap between theory and practice (as occurs when a theory of change is highly logical but impractical to implement).

6.3. Innovations for improving evaluation

In the original FORECAST logic model, the data collected and used during implementation were derived primarily from process evaluation. FORECAST 2.0 widens the breadth of evaluation data. As described in the STEPs to STEM project, using proximal outcome evaluation in tandem with process evaluation data can be informative and beneficial for accountability. For example, if process evaluation indicates sufficient implementation quality but proximal outcomes are not reached within the expected time frame, the program theory likely needs to be strategically enhanced so that it is more logical and practically sound.
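The reasoning above, together with the point that process evaluation alone cannot reveal theory failure, can be expressed as a simple decision rule. The Python sketch below is illustrative only: the function `diagnose`, its parameters, the 0-to-1 quality score, and the ordering of checks (implementation shortfalls are flagged first, because process data are available before proximal outcomes are due) are our assumptions, not a published FORECAST 2.0 procedure.

```python
from enum import Enum


class Signal(Enum):
    IMPROVE_IMPLEMENTATION = "implementation below benchmark: strengthen implementation"
    TOO_EARLY = "too early to judge proximal outcomes"
    ON_TRACK = "implementation adequate and proximal outcomes met"
    REVISIT_THEORY = "implementation adequate but proximal outcomes unmet: revisit theory"


def diagnose(quality: float, benchmark: float, proximal_met: bool,
             months_elapsed: int, expected_months: int) -> Signal:
    """Combine process and proximal outcome evaluation into one formative signal.

    quality and benchmark are hypothetical 0-to-1 implementation-quality scores
    from process evaluation; expected_months is the time frame in the theory of
    change by which the proximal outcome should be observed.
    """
    # Process evaluation can flag implementation problems at any point.
    if quality < benchmark:
        return Signal.IMPROVE_IMPLEMENTATION
    # Proximal outcomes are judged only after the expected time frame.
    if months_elapsed < expected_months:
        return Signal.TOO_EARLY
    # Adequate implementation plus unmet outcomes points to the program theory.
    return Signal.ON_TRACK if proximal_met else Signal.REVISIT_THEORY


print(diagnose(quality=0.9, benchmark=0.8, proximal_met=False,
               months_elapsed=12, expected_months=6))  # Signal.REVISIT_THEORY
```

When implementation is weak, unmet proximal outcomes cannot distinguish theory failure from implementation failure, which is why the sketch checks implementation quality before drawing any conclusion about the theory.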

Program evaluation enhancements are also derived from the community research application of FORECAST (e.g., in PATH). Community stakeholders can use markers, measures, and meaning to “locally” operationalize and realistically ground research-driven program elements. This contrasts with the “parachute” approach to bringing research-driven interventions into communities, which has a number of limitations including a record of disappointing outcomes (e.g., Merzel & D’Afflitti, 2003; Stewart-Brown, 2006). Coupled with the “parachute” approach is a tendency to overemphasize the importance of implementation fidelity, on the assumption that sufficient fidelity will result in the same or similar outcomes observed in previous (e.g., efficacy) trials. In contrast, the innovative use of FORECAST in the PATH project lends a more grounded, robust, and integrative approach to evaluation in community-based research that emphasizes both internal and external validity (Chen & Garbe, 2011).

7. Conclusion

It is important to narrow the gap between high hopes for innovations and the common occurrence of disappointing results. Contributions made possible by collaborative partnerships between practitioners, evaluators, and participants in the projects above helped to build the FORECAST 2.0 logic model. Using the initial FORECAST model in new program contexts (Men of Strength Club, STEPs to STEM, and PATH) proved instructive in helping each project improve theory, implementation, and evaluation. Additionally, in each project, collaborative partnerships promoted the development of new formative evaluation practices. We expect that many communities are in a position to benefit from these innovative formative evaluation practices when they use FORECAST 2.0. We believe that this would lead to strengthened theory, implementation, and evaluation, which in turn should increase interest among communities and funders in having evaluators work with them.

An approach like FORECAST spurs innovations to increase the probability of success in theory, implementation, and evaluation. Theory, implementation, and evaluation are universal. Even the biblical creation story suggests a theory for the world, its implementation, and evaluation! Since community stakeholders and evaluators are neither omnipotent nor omniscient, we think that formative evaluation should include a strong focus on improving theory, implementation, and evaluation.

Biographies

Jason Katz, M.A., is an advanced graduate student in the clinical-community psychology program at the University of South Carolina (Columbia, SC). His interests include the application of industry-derived continuous quality improvement strategies in community health services (e.g., community-based health centers, clinics, and hospitals); the use of formative evaluation methods and tools for supporting data-informed decision-making and improvement; and proactive technical assistance systems for building capacity in community organizations and services.

Abraham Wandersman, Ph.D., is a Professor of Psychology at the University of South Carolina (Columbia, SC). He is an international authority in community psychology and program evaluation. He performs research and program evaluation on citizen participation in community organizations and coalitions and on interagency collaboration, and specializes in the areas of community psychology, environmental and ecological psychology, citizen participation, community coalitions and program evaluation.

Robert M. Goodman, Ph.D., is a Professor and former Dean of the School of Health, Physical Education and Recreation at Indiana University. Dr. Goodman has written extensively on issues concerning community health development, community capacity, community coalitions, evaluation methods, organizational development, and the institutionalization of health programs. He has been the principal investigator and evaluator on projects for the CDC, the National Cancer Institute, the Center for Substance Abuse Prevention, the Children’s Defense Fund, and several state health departments.

Sarah F. Griffin, Ph.D., received her doctorate from the Arnold School of Public Health in Columbia, South Carolina. Dr. Griffin is currently an Assistant Professor in the Department of Public Health Sciences in the College of Health, Education and Human Development at Clemson University. Dr. Griffin’s research and evaluation interests focus primarily on eliminating health disparities related to obesity through community- and school-based interventions.

Dawn K. Wilson, Ph.D., is a Professor of Psychology at the University of South Carolina. Dr. Wilson received her Ph.D. from Vanderbilt University in 1988. Her areas of research interest include: (1) understanding family dynamics/interactions in promoting healthy diet and physical activity in underserved adolescents, (2) ecological and social cognitive theoretical models for understanding family connectedness, social support and role modeling in promoting health behavior change in youth, and (3) family-based interventions for promoting healthy diet and physical activity among underserved adolescents.

Michael Schillaci, Ph.D., is the Assistant Director of the Center for Science Education at the University of South Carolina. He earned his Ph.D. in Physics in 1999 from the University of Arkansas. He has an avid interest in problems in cognition, and his research is directed toward the development of a cognitive theory of deception. He is currently developing software to analyze high-density event-related potential (HDERP) data recorded from subjects performing a visual, two-stimulus paradigm under varying workloads.

Contributor Information

Jason Katz, Email: katzj@mailbox.sc.edu.

Abraham Wandersman, Email: wanderah@mailbox.sc.edu.

Robert M. Goodman, Email: rmg@indiana.edu.

Sarah Griffin, Email: sgriffi@clemson.edu.

Dawn K. Wilson, Email: wilsondk@mailbox.sc.edu.

Michael Schillaci, Email: schillam@mailbox.sc.edu.

References

- Anderson A. An introduction to theory of change. The Evaluation Exchange. 2005 Summer;11(2). http://www.hfrp.org/evaluation/the-evaluation-exchange/issue-archive/evaluation-methodology/an-introduction-to-theory-of-change.

- Booth SL, Mayer J, Sallis JF, Ritenbaugh C, Hill JO, Birch L, et al. Environmental and societal factors affect food choice and physical activity: Rationale, influences and leverage points. Nutrition Reviews. 2001;59:S21–S39. doi: 10.1111/j.1753-4887.2001.tb06983.x.

- Centers for Disease Control and Prevention. Logic model magic: Using logic models for DASH program planning and evaluation. n.d. Available from: http://apps.nccd.cdc.gov/dashoet/logic_model.

- Chelimsky E. The coming transformations in evaluation. Evaluation for the 21st Century. 1997:1–26.

- Chen HT, Garbe P. Assessing program outcomes from the bottom-up approach: An innovative perspective to outcome evaluation. In: Chen HT, Donaldson SI, Mark MM, editors. Advancing validity in outcome evaluation: Theory and practice. New Directions for Evaluation. 2011;130:93–106.

- Cochrane T, Davey RC. Increasing uptake of physical activity: A social ecological approach. Perspectives in Public Health. 2008;128(1):31–40. doi: 10.1177/1466424007085223.

- Coie J, Watt N, West S, Hawkins JD, Asarnow J, Markman H, et al. The science of prevention: A conceptual framework and some directions for a national research program. American Psychologist. 1993;48:1013–1022. doi: 10.1037//0003-066x.48.10.1013.

- Coulon SM, Wilson DK, Griffin S, St George SM, Trumpeter NN, Wandersman A, et al. Formative process evaluation for implementing a social marketing intervention to increase walking in African Americans in the PATH trial. American Journal of Public Health. 2012;102(12):2315–2321. doi: 10.2105/AJPH.2012.300758.

- Durlak JA, DuPre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Fixsen DL. Implementation research: A synthesis of the literature. National Implementation Research Network; 2005.

- Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annual Review of Public Health. 2007;28:413–433. doi: 10.1146/annurev.publhealth.28.021406.144145.

- Goodman RM, Noonan RK. Empowerment evaluation for violence prevention. Health Promotion Practice. 2009;10(1):11S–18S. doi: 10.1177/1524839908317646.

- Goodman RM, Wandersman A. FORECAST: A formative approach to evaluating community coalitions and community-based initiatives. Journal of Community Psychology, CSAP Special Issue. 1994:6–25.

- Kuh GD, Kinzie J, Schuh JH, Whitt EJ. Never let it rest: Lessons about student success from high-performing colleges and universities. Change: The Magazine of Higher Learning. 2005;37(4):44–51.

- Kumanyika SK, Whitt-Glover MC, Gary TL, Prewitt TE, Odoms-Young AM, Banks-Wallace J, et al. Expanding the obesity research paradigm to reach African American communities. Preventing Chronic Disease. 2007;4(4):A112.

- McMillan D, Chavis D. Sense of community: A definition and theory. Journal of Community Psychology. 1986;14:6–23.

- Merzel C, D’Afflitti J. Reconsidering community-based health promotion: Promise, performance, and potential. American Journal of Public Health. 2003;93:557–574. doi: 10.2105/ajph.93.4.557.

- Meyers DC, Durlak J, Wandersman A. What are important components of quality implementation? A synthesis of implementation frameworks. American Journal of Community Psychology. 2012;50(3–4):481–496. doi: 10.1007/s10464-012-9521-y.

- Rossi PH, Lipsey MW, Freeman HE. Evaluation: A systematic approach. 7th ed. Thousand Oaks, CA: Sage; 2004.

- Scheirer MA, Mark MM, Brooks A, Grob GF, Chapel TJ, Geisz M, Leviton L. Planning evaluation through the program life cycle. American Journal of Evaluation. 2012;33(2):263–294.

- Scriven M. The methodology of evaluation. In: Tyler R, Gagne R, Scriven M, editors. Perspectives on curriculum evaluation. AERA Monograph Series on Curriculum Evaluation, No. 1. Skokie, IL: Rand McNally; 1967. pp. 39–83.

- Scriven M. Beyond formative and summative evaluation. In: Gredler ME, editor. Program evaluation. New Jersey: Prentice Hall; 1991. p. 16–.

- Shapiro J. Evaluation as theory testing: An example from Head Start. Educational Evaluation and Policy Analysis. 1982;4:341–353.

- Stetler CB, Legro MW, Wallace CM, Bowman C, Guihan M, Hagedorn H, et al. The role of formative evaluation in implementation research and the QUERI experience. Journal of General Internal Medicine. 2006;21:S1–S8. doi: 10.1111/j.1525-1497.2006.00355.x.

- Stewart-Brown S. What is the evidence on school health promotion in improving health or preventing disease and, specifically, what is the effectiveness of the health promoting schools approach? Copenhagen: World Health Organization; 2006.

- Taylor-Powell E, Steele S, Douglah M. Planning a program evaluation. Program Development and Evaluation Series G3658-1. University of Wisconsin-Extension; 1996.

- Tinto V. Leaving college: Rethinking the causes and cures of student attrition. 2nd ed. Chicago: University of Chicago Press; 1993.

- Townsend BK, Wilson KB. A hand hold for a little bit: Factors facilitating the success of community college transfer students to a large university. Journal of College Student Development. 2006;47(4):439–456.

- Wandersman A. Four keys to success (theory, implementation, evaluation, and system/resource support): High hopes and challenges in participation. American Journal of Community Psychology. 2009;43(1–2):3–21. doi: 10.1007/s10464-008-9212-x.

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. American Journal of Community Psychology. 2008;41(3–4):171–181. doi: 10.1007/s10464-008-9174-z.

- Weick KE. Small wins: Redefining the scale of social problems. American Psychologist. 1984;39(1):40–49.

- Wilson DK, Ellerbe C, Lawson AB, Alia KA, Meyers DC, Coulon SM, et al. Imputational modeling of spatial context and social environmental predictors of walking in an underserved community: The PATH trial. Spatial and Spatio-temporal Epidemiology. 2012. doi: 10.1016/j.sste.2012.10.001.

- Wilson DK, Trumpeter N, St George SM, Griffin S, Van Horn ML, Wandersman A, et al. Positive Action for Today’s Health Trial (PATH): A randomized controlled trial for promoting walking in underserved communities. Contemporary Clinical Trials. 2010;31:624–633. doi: 10.1016/j.cct.2010.08.009.

- W.K. Kellogg Foundation. Logic model development guide: Using logic models to bring together planning, evaluation, & action. Battle Creek, MI: W.K. Kellogg Foundation; 2001.