Abstract

Purpose

Since 2000, Academic Emergency Medicine (AEM), the journal of the Society for Academic Emergency Medicine, has presented a one-day consensus conference to generate a research agenda for advancement of a scientific topic. One of the 12 annual issues of AEM is reserved for the proceedings of these conferences. The purpose of this study was to measure academic productivity of these conferences by evaluating subsequent federal research funding received by authors of conference manuscripts and calculating citation counts of conference papers.

Method

This was a cross-sectional study conducted during August and September 2012. NIH RePORTER was searched to identify subsequent federal funding obtained by authors of the consensus conference issues from 2000 to 2010. Funded projects were coded as related or unrelated to conference topic. Citation counts for all conference manuscripts were quantified using Scopus and Google Scholar. Simple descriptive statistics were reported.

Results

852 individual authors contributed to 280 papers published in the 11 consensus conference issues. 137 authors (16%) obtained funding for 318 projects. A median of 22 topic-related projects per conference (range 10–97 projects) accounted for a median of $20,488,331 per conference (range $7,779,512–$122,918,205). The average (±SD) number of citations per paper was 15.7 ±20.5 in Scopus and 23.7 ±32.6 in Google Scholar.

Conclusions

The authors of consensus conference manuscripts obtained significant federal grant support for follow-up research related to conference themes. In addition, the manuscripts generated by these conferences were frequently cited. Conferences devoted to research agenda development appear to be an academically worthwhile endeavor.

In 2000, Academic Emergency Medicine (AEM), the journal of the Society for Academic Emergency Medicine, hosted a one-day consensus conference to develop a research agenda on “Errors in Emergency Medicine.” The conference was organized in response to the 1999 Institute of Medicine report, “To Err is Human,” which evaluated the morbidity and mortality related to medical errors.1 The 2000 conference was presented as a pre-conference offering at the Society's annual meeting. The editors dedicated the entire November issue of the journal to publishing the proceedings of that consensus conference.2

Each year since 2000, the AEM editorial board has selected a topic of interest to the AEM readership for the following year's consensus conference. Unlike other academic consensus conferences, which typically generate expert opinion and consensus on a controversial clinical topic for which evidence is limited,3,4 the purpose of each AEM conference is to develop a consensus-based research agenda to advance understanding of that topic by inspiring studies to address current knowledge gaps.5 The goal of the consensus conferences is that these research agendas can serve as a guide for future funding proposals. Table 1 lists the topics of each year's conference, illustrating the breadth of topics selected. The journal has maintained this concept and format over the years to help advance the academic mission of the Society by helping define research needs in our specialty, and providing the Society's members with consensus-driven ideas for research and, hopefully, some leverage for obtaining extramural funding in the form of the recommendations set forth in the conference proceedings.

Table 1.

Topics of the Society for Academic Emergency Medicine's Annual Consensus Conferences, 2000–2014

| Year | Topic |
|---|---|
| 2000 | Errors in Emergency Medicine: A Call to Action |
| 2001 | The Endangered Safety Net: Establishing a Measure of Control |
| 2002 | Assuring Quality in Emergency Care |
| 2003 | Disparities in Emergency Health Care |
| 2004 | Developing Consensus in Emergency Medicine Information Technology |
| 2005 | Ethical Conduct of Resuscitation Research |
| 2006 | The Science of Surge |
| 2007 | Knowledge Translation in Emergency Medicine |
| 2008 | The Science of Simulation in Healthcare |
| 2009 | Public Health in the Emergency Department |
| 2010 | Beyond Regionalization: Integrated Networks of Emergency Care |
| 2011 | Interventions to Assure Quality in the Crowded Emergency Department |
| 2012 | Education Research in Emergency Medicine: Opportunities, Challenges, and Strategies for Success |
| 2013 | Global Health and Emergency Care: A Research Agenda |
| 2014 | Gender-Specific Research in Emergency Medicine: Investigate, Understand, and Translate how Gender Affects Patient Outcomes |

The proceedings of each consensus conference are presented in a full issue of AEM. Four types of conference-related manuscripts are published:

commentaries (including an executive summary by the conference chairs);

summaries of plenary and panel presentations;

proceedings of the conference “breakout sessions” where consensus is generated; and

original contributions on the conference topic.

Original contributions on the conference topic are solicited from the general readership through a “Call for Papers” approximately a year prior to the consensus conference. This category of manuscripts allows researchers whose research focus is in the area being discussed to publish their work, illustrating the types of studies that can be done on that topic, and suggesting a springboard for further work.

It is unusual (and perhaps unique) for a society-sponsored journal to dedicate a full issue each year to publishing consensus conference proceedings that are designed to generate a research agenda, rather than simply summarizing the state of knowledge of a topic at a single point in time. Moreover, revenue generated from registrants generally covers only a proportion of the conference expenses. These logistical and financial challenges need to be weighed against the potential benefits of the conferences. However, the potential downstream gains have not been quantified. The purpose of this study was therefore to evaluate the downstream academic productivity of the consensus conferences using two approaches: 1) evaluating subsequent federal grant funding for research projects conducted by the authors of the papers published in the dedicated issues of the journal, and 2) calculating citation counts of those conference papers.

This study may be of value and generalizable to other specialties for two reasons. The first is that, by quantitatively measuring academic productivity generated by these conferences, other specialties may be more inclined to organize similarly structured conferences. These conferences may serve as an incubator for innovative ideas and methods for particular topics where traditional clinical academic research may not work. In particular, the prototype of randomized controlled trials may not be feasible for health care delivery research or improving health care systems.6 They can also help focus the attention of a broad audience on a particular topic that members of the specialty feel needs attention; assessing the downstream academic productivity of these conferences can help determine whether the format is having the desired results in stimulating research on the topic. The second is that our methodology may serve as a template for evaluating comparable conferences that focus on setting research agendas.7–9 If and when other specialties conduct similar conferences, they can utilize these methods to assess their success.

Method

Design and study participants

We conducted a cross-sectional study during August and September 2012. The eleven consensus conference issues of the journal (November, 2000–2009, and December, 2010) were reviewed. A list of all conference-related papers and their authors was assembled. Each paper was categorized as commentary, plenary/panel presentation, breakout session, or original contribution, based on its heading in the table of contents. This list of papers and their authors formed the study population.

Data sources

The National Institutes of Health Research Portfolio Online Reporting Tools Expenditures and Results (NIH RePORTER) system was used to identify federal funding obtained by authors contributing to the consensus conference issues.10 The NIH RePORTER system is an electronic tool that allows users to search a repository of both intramural and extramural federally funded research from 1988 to present.10 The system includes research funded by the NIH, the Centers for Disease Control and Prevention, the Agency for Healthcare Research and Quality (AHRQ), the Health Resources and Services Administration, the Substance Abuse and Mental Health Services Administration, and the United States Department of Veterans Affairs. It excludes funding obtained from Canadian sources; thus, we could not evaluate non-U.S. funding obtained by Canadian authors of conference-related manuscripts. In addition, it does not include non-federal funding such as foundation or society grants.

Citation counts were generated through online review of Scopus (Elsevier BV, Amsterdam, The Netherlands) and Google Scholar (Google Inc., Mountain View CA). The Scopus database contains nearly 50 million records from just under 20,000 titles, including full coverage of Medline, and is currently the largest abstract and citation database of the peer-reviewed scientific literature. Google Scholar covers a broader range of sources, including theses, technical reports, and other documents found using web “crawlers.”

Study procedures

Between August 21 and August 29, 2012, two of us (TD and DKN) queried NIH RePORTER for subsequent funding by consensus conference issue authors. Search terms included each author's full name and funding cycles from the year of the consensus conference issue to present. Common names were cross-referenced with topic domain and author institution. We abstracted each project's activity code (e.g., R01, R03, K23), title of project, funding amount, funding institute or agency or center, and fiscal years funded. Activity codes were categorized into R01 equivalents (R01, R23, R29, R37, DP2), other R awards (R03, R15, R21), training awards (K01, K02, K23, K24, other K awards, F32, F31, other F awards, other T awards), cooperative agreements (all U awards), program awards (all P awards), small business innovation research (SBIR), small business technology transfer (SBTT) awards (R41, R42, R43, R44, U43, U44), and other awards. These categories were consistent with NIH RePORTER categories. Two of us (DKN and KY) independently coded each funded project as “related” or “unrelated” to the consensus conference topic domain using project information from NIH RePORTER, which included a description of the project (abstract text), narrative (public health relevance statement), and project terms to determine conference relatedness. Discrepancies in coding were adjudicated by a third member of our team (LM).
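The activity-code grouping described above can be expressed as a simple lookup. The following is a minimal sketch, not the authors' actual procedure (coding was done by hand in Excel and STATA); the function name `categorize_activity_code` and the category labels are hypothetical, and the sketch assumes that any R-series code not listed in the text falls into "other awards."

```python
# Hypothetical lookup built from the category lists given in the text.
R01_EQUIVALENTS = {"R01", "R23", "R29", "R37", "DP2"}
OTHER_R_AWARDS = {"R03", "R15", "R21"}
SMALL_BUSINESS = {"R41", "R42", "R43", "R44", "U43", "U44"}


def categorize_activity_code(code: str) -> str:
    """Map an NIH activity code (e.g. 'R01', 'K23') to a study category."""
    code = code.strip().upper()
    if code in R01_EQUIVALENTS:
        return "R01 equivalent"
    if code in OTHER_R_AWARDS:
        return "other R award"
    # Checked before the generic U rule because U43/U44 are small business awards.
    if code in SMALL_BUSINESS:
        return "small business award"
    if code.startswith(("K", "F", "T")):
        return "training award"
    if code.startswith("U"):
        return "cooperative agreement"
    if code.startswith("P"):
        return "program award"
    # Assumption: anything not named in the text is grouped as "other".
    return "other award"


if __name__ == "__main__":
    for example in ("R01", "R21", "K23", "U01", "U44", "P30", "R13"):
        print(example, "->", categorize_activity_code(example))
```

The order of the checks matters: the small business codes are tested before the generic "all U awards" rule so that U43 and U44 are not misclassified as cooperative agreements.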

Between August 29 and September 12, 2012, one of us (DCC) examined the Scopus and Google Scholar databases to determine the number of papers citing each consensus conference paper; this information was manually recorded on an Excel spreadsheet. To examine potential differences in citation counts between papers of the four types, means were calculated for each of the four types of papers.

Data analysis

Data formatting and coding of variables were conducted using Microsoft Excel 2010 (Microsoft Corporation, Redmond, WA) and STATA 11.0 statistical software (STATA Corporation, College Station, TX). Means were used as the primary measure of central tendency for the citation counts, because journal impact factor, the most common journal citation metric, is expressed using means. However, the data for some years in particular, and thus the overall data, were somewhat right-skewed, so medians (with interquartile ranges [IQR]) are also reported. Inter-rater reliability of coding for related projects was measured with Cohen's kappa coefficient with 95% confidence intervals (CI), with substantial agreement defined as a kappa >0.6.11
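For readers unfamiliar with the kappa statistic, the sketch below shows how Cohen's kappa is computed for two raters from observed and chance-expected agreement. It is an illustration only: the function `cohens_kappa` and the ten example ratings are hypothetical and are not the study data, and the confidence interval calculation is omitted.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over labels.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical ratings for ten projects (R = related, U = unrelated).
    rater_1 = ["R", "R", "R", "R", "R", "R", "U", "U", "U", "U"]
    rater_2 = ["R", "R", "R", "R", "R", "U", "U", "U", "U", "R"]
    print(f"kappa = {cohens_kappa(rater_1, rater_2):.2f}")
```

In this made-up example the raters agree on 8 of 10 items (observed agreement 0.80), chance agreement is 0.52, and kappa is therefore (0.80 − 0.52) / (1 − 0.52), or about 0.58.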

Results

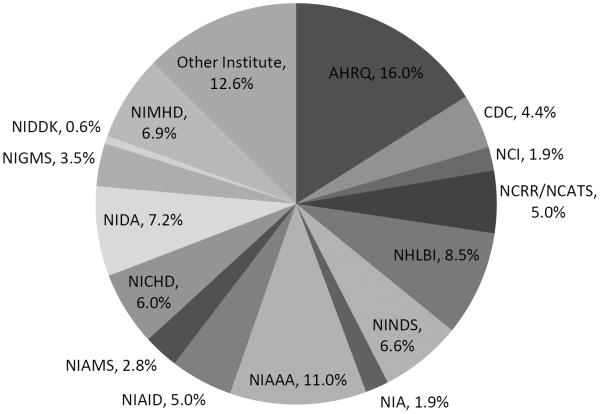

The eleven consensus conference issues of the journal included 280 manuscripts with 994 contributing authors; there were 852 unique authors, as some authors contributed to multiple papers and multiple journal issues. One hundred thirty-seven of the 852 unique authors (16.1%) were identified in NIH RePORTER as having received subsequent federal funding. These 137 authors obtained funding for 318 individual projects accounting for $329,492,017. The most common activity codes for funded projects were other R awards (91 awards, 29%) and R01 equivalents (82 awards, 26%) (Figure 1). AHRQ (51 awards, 16%), the National Institute on Alcohol Abuse and Alcoholism (35 awards, 11%), and the National Heart, Lung, and Blood Institute (27 awards, 8.5%) were the most common funding agencies (Figure 2).

Figure 1.

Activity codes for all 318 funded projects that arose from issues presented at Academic Emergency Medicine's annual consensus conference, 2000–2010. Activity codes, abstracted in NIH RePORTER, were categorized into R01 equivalents (R01, R23, R29, R37, DP2), other R awards (R03, R15, R21), training awards (K01, K02, K23, K24, other K awards, F32, F31, other F awards, other T awards), cooperative agreements (all U awards), program awards (all P awards), small business innovation research (SBIR), small business technology transfer (SBTT) awards (R41, R42, R43, R44, U43, U44), and other awards. These categories were consistent with NIH RePORTER categories.

Figure 2.

Funding institution/agency/center for all 318 funded projects that arose from issues presented at Academic Emergency Medicine's annual consensus conference, 2000–2010. Abbreviations: AHRQ, Agency for Healthcare Research and Quality; CDC, Centers for Disease Control and Prevention; NCI, National Cancer Institute; NCRR, National Center for Research Resources; NCATS, National Center for Advancing Translational Sciences; NHLBI, National Heart, Lung, and Blood Institute; NINDS, National Institute of Neurological Disorders and Stroke; NIA, National Institute on Aging; NIAAA, National Institute on Alcohol Abuse and Alcoholism; NIAID, National Institute of Allergy and Infectious Diseases; NIAMS, National Institute of Arthritis and Musculoskeletal and Skin Diseases; NICHD, National Institute of Child Health and Human Development; NIDA, National Institute on Drug Abuse; NIGMS, National Institute of General Medical Sciences; NIDDK, National Institute of Diabetes and Digestive and Kidney Diseases; NIMHD, National Institute on Minority Health and Health Disparities.

Funded projects and their amounts (related and total) were tabulated individually for each conference (Table 2). The median number of related funded projects per year over the eleven years was 22 projects (range 10 to 97 projects). The median amount of total related funding per year was $20,488,331 (range $7,779,512 to $122,918,205). Fifty projects with discrepancies of coding for related versus unrelated required adjudication by a third author, with 32 judged through adjudication to be conference-related. Inter-rater reliability of coding for related projects was fair (kappa 0.6; 95% confidence intervals [CI] 0.5–0.7).

Table 2.

Overview of Funding Received by Authors of Articles Printed in the Proceedings of the Society for Academic Emergency Medicine's Annual Consensus Conferences, 2000–2010*

| Year | No. of authors with funded projects/No. of all authors (%) | No. of related funded projects | No. of all funded projects | Related funding amount, $ | All funding amount, $ |
|---|---|---|---|---|---|
| 2000 | 9/87 (10) | 14 | 22 | 13,210,121 | 20,781,285 |
| 2001 | 8/41 (20) | 12 | 15 | 8,294,998 | 9,259,788 |
| 2002 | 18/110 (16) | 29 | 36 | 44,823,703 | 49,317,981 |
| 2003 | 17/71 (24) | 36 | 52 | 33,912,010 | 42,512,879 |
| 2004 | 17/67 (25) | 33 | 36 | 12,901,118 | 17,134,756 |
| 2005 | 6/59 (10) | 10 | 11 | 8,673,353 | 9,523,023 |
| 2006 | 14/71 (20) | 15 | 20 | 22,419,923 | 25,654,600 |
| 2007 | 22/130 (17) | 39 | 49 | 59,994,822 | 71,435,074 |
| 2008 | 12/125 (9.6) | 14 | 18 | 7,779,512 | 11,393,523 |
| 2009 | 44/128 (34) | 97 | 106 | 122,918,205 | 139,710,657 |
| 2010 | 18/105 (17) | 22 | 26 | 20,488,331 | 23,474,086 |

*Funding was tabulated for each conference independently. Since authors may have contributed to more than one conference, some projects were counted in multiple years. For example, if an author from the 2006 ("Science of Surge") and 2007 ("Knowledge Translation") consensus conference issues obtained funding in 2009 for a project evaluating dissemination and implementation of an emergency department crowding computer model, the project would count towards both years of conferences.
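The per-conference medians and ranges reported in the text can be re-derived from the "related" columns of Table 2. The short sketch below does this with Python's standard library; the variable and function names are illustrative, and the values are transcribed from Table 2 rather than taken from the authors' dataset.

```python
from statistics import median

# Related funded projects per conference year, transcribed from Table 2.
RELATED_PROJECTS = {
    2000: 14, 2001: 12, 2002: 29, 2003: 36, 2004: 33, 2005: 10,
    2006: 15, 2007: 39, 2008: 14, 2009: 97, 2010: 22,
}

# Related funding amounts in US dollars, transcribed from Table 2.
RELATED_FUNDING_USD = {
    2000: 13_210_121, 2001: 8_294_998, 2002: 44_823_703, 2003: 33_912_010,
    2004: 12_901_118, 2005: 8_673_353, 2006: 22_419_923, 2007: 59_994_822,
    2008: 7_779_512, 2009: 122_918_205, 2010: 20_488_331,
}


def summarize(values):
    """Return (median, minimum, maximum) for a collection of numbers."""
    values = list(values)
    return median(values), min(values), max(values)


projects_summary = summarize(RELATED_PROJECTS.values())
funding_summary = summarize(RELATED_FUNDING_USD.values())

if __name__ == "__main__":
    print("Related projects (median, min, max):", projects_summary)
    print("Related funding, $ (median, min, max):", funding_summary)
```

With eleven conferences the median is simply the sixth value after sorting, which is why both medians coincide with a single table row (2010).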

Table 3 shows the numbers of citations to the conference papers from each year, and summary totals. There were 4,403 total citations in Scopus, and 6,633 in Google Scholar. Citations per paper were 15.73 ± 20.45 in Scopus, and 23.69 ± 32.57 in Google Scholar. The overall medians were 9 (IQR 4–20) in Scopus and 13 (IQR 6–29) in Google Scholar. Commentaries were cited an average of 6.23 (SD ±4.93) times each, plenary presentations an average of 14.18 (SD ±18.75) times each, breakout session proceedings an average of 21.59 (SD ±71.61) times each, and original contributions an average of 19.96 (SD ±24.97) times each.

Table 3.

Citation Counts by Year of Articles Printed in the Proceedings of the Society for Academic Emergency Medicine's Annual Consensus Conferences, 2000–2010

| Year | No. of articles | Scopus citations: total | Scopus citations: mean (±SD) | Google Scholar citations: total | Google Scholar citations: mean (±SD) |
|---|---|---|---|---|---|
| 2000 | 27 | 710 | 26.3 (±26.34) | 1129 | 41.81 (±45.53) |
| 2001 | 20 | 632 | 31.6 (±24.85) | 877 | 43.85 (±35.17) |
| 2002 | 19 | 626 | 32.95 (±41.60) | 926 | 48.74 (±60.05) |
| 2003 | 22 | 402 | 18.27 (±14.33) | 549 | 24.95 (±18.58) |
| 2004 | 21 | 457 | 21.76 (±23.29) | 780 | 37.14 (±41.85) |
| 2005 | 22 | 210 | 9.55 (±6.99) | 242 | 11 (±7.73) |
| 2006 | 31 | 399 | 12.87 (±10.57) | 665 | 21.45 (±16.44) |
| 2007 | 34 | 319 | 9.38 (±10.45) | 469 | 13.79 (±13.41) |
| 2008 | 32 | 460 | 14.38 (±15.3) | 747 | 23.34 (±34.23) |
| 2009 | 31 | 158 | 5.10 (±3.90) | 210 | 6.77 (±5.98) |
| 2010 | 21 | 30 | 1.43 (±1.63) | 39 | 1.86 (±2.03) |
| Total | 280 | 4,403 | 15.73 (±20.45) | 6,633 | 23.69 (±32.57) |
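The overall citations-per-paper figures follow directly from the yearly totals in Table 3: sum the articles and citations across years, then divide. The sketch below reproduces that arithmetic; the names are illustrative, the values are transcribed from Table 3, and the standard deviations cannot be recomputed this way because they require the paper-level counts, which are not reported.

```python
# (number of articles, Scopus citations, Google Scholar citations) per year,
# transcribed from Table 3.
CITATIONS_BY_YEAR = {
    2000: (27, 710, 1129),
    2001: (20, 632, 877),
    2002: (19, 626, 926),
    2003: (22, 402, 549),
    2004: (21, 457, 780),
    2005: (22, 210, 242),
    2006: (31, 399, 665),
    2007: (34, 319, 469),
    2008: (32, 460, 747),
    2009: (31, 158, 210),
    2010: (21, 30, 39),
}


def mean_citations(total_citations: int, n_articles: int) -> float:
    """Mean citations per paper; raises if there are no articles."""
    if n_articles <= 0:
        raise ValueError("n_articles must be positive")
    return total_citations / n_articles


total_articles = sum(row[0] for row in CITATIONS_BY_YEAR.values())
total_scopus = sum(row[1] for row in CITATIONS_BY_YEAR.values())
total_scholar = sum(row[2] for row in CITATIONS_BY_YEAR.values())

scopus_mean = mean_citations(total_scopus, total_articles)
scholar_mean = mean_citations(total_scholar, total_articles)

if __name__ == "__main__":
    print(f"Articles: {total_articles}")
    print(f"Scopus: {total_scopus} citations, {scopus_mean:.1f} per paper")
    print(f"Google Scholar: {total_scholar} citations, {scholar_mean:.1f} per paper")
```

The same division applied to a single row, for example 30 citations over 21 papers for 2010, shows how much lower the counts are for the most recent issues, which had less time to accumulate citations before the 2012 search.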

Discussion

There is a paucity of literature describing consensus conferences with the primary goal of setting a research agenda. Most consensus conferences are focused on clinical guideline development and practice recommendations, especially for topics for which significant controversy between learned experts exists. There appear to be only three published society consensus conferences that have focused on setting research agendas.7–9 While studies have evaluated subsequent publication of abstracts presented at society meetings,12–15 there are limited data on research productivity (defined by funding success and subsequent publication) based upon prior participation in a national consensus conference. One relevant model, which is somewhat complementary to the design of our conference and this manuscript, is a recent study describing a conference platform as a successful career stimulation strategy. Using career tracking data, its authors found that nearly half of conference participants published their work and one third subsequently obtained research funding.16

Our results suggest that the consensus conference platform as developed by AEM has met the tests of “proof of concept.” We have demonstrated that a sizable body of related research and funding has subsequently been completed. Moreover, the conference manuscripts are among the most highly cited manuscripts published in AEM, increasing the impact factor of the journal. With recent impact factors for the journal ranging from 1.861 to 2.197 and five-year impact factors from 2.474 to 2.536, showing that the typical AEM article is cited roughly twice in the two to five years following its publication, the citation figures shown in Table 3 suggest higher citation rates for the consensus conference papers than for the average AEM paper. The data presented here should be helpful to leaders of future conferences across specialties. The leaders of our conferences have varied degrees of experience at approaching federal and other health care related entities with requests to help fund consensus conferences. Going forward, rather than approaching potential sources of funding simply with an idea, our conference leaders can now approach these potential funding sources with documentation of significant scholarly output related to the conference topic in post-conference years, suggesting a high “return on investment” for funders.

While the results of this study suggest that subsequent related scholarly output has transpired after the consensus conference, we are unable to definitively establish a causal relationship between the conference and subsequent scholarly output. Moreover, it is difficult to quantify the impact of the consensus conferences on future funding. In addition, post-conference funding is just one measure of consensus conference “success.” Less quantifiable measures of success include individual participants' education and career development. Local practice improvements that result from consensus conferences, such as implementing interventions to improve quality in crowded emergency departments, would also be an important contribution, though also difficult to measure. The board of directors of the Society for Academic Emergency Medicine has recently questioned whether AEM should continue producing the consensus conferences, citing the expenses to produce each conference and publish its proceedings. To examine the financial aspects of the conferences, we queried the Society's executive office records for financial information regarding the conferences. Records from 2007 to 2011 were available. Expenses for each conference are approximately $60,000–$100,000, including site-related and featured speaker-related expenses, plus the cost of publishing the proceedings in AEM, and registration fees have generated only approximately 15%–30% of the revenue needed to produce recent conferences (see Supplemental Digital Table 1, which describes revenues and expenses for consensus conferences 2007–2011). We believe that the data we have compiled demonstrate the value of the conferences to the Society and its members, and will aid in securing extramural funding for future consensus conferences. It is our hope that other societies may find these data compelling and useful as well.

The question of “impact factor” is somewhat controversial,17,18 and a number of other publication metrics have been generated to overcome some of the shortcomings of the impact factor. Regardless of whether these citation metrics are considered valid, it is clearly in the best interests of both the Society and AEM to publish articles that become highly cited in the future. These consensus conferences have assisted in the achievement of this goal; other journals may be interested in sponsoring conferences given the high citation rate of our conference proceedings papers.

There are certain limitations inherent to this study. First, other measures of conference scholarly output likely exist of which we are unaware or which were not measured. For example, we did not examine publications resulting from the projects we found through our NIH RePORTER search. In addition, the authors of the consensus conference manuscripts represented a proportion (typically 25%–50%) of all conference attendees. Due to the large number of participants, we did not evaluate the scholarly output of non-author conference participants.

Second, a number of Canadian presenters and attendees were present at these conferences, and Canadians were particularly prominent among the leadership of the 2007 conference, “Knowledge Translation in Emergency Medicine.” The addition of the as-yet unquantified contributions of Canadians to the sum of external funding related to these conferences would increase the funding total estimated herein. In addition, we were unable to ascertain which consensus conference authors were non-U.S. citizens (and thus generally ineligible to receive U.S. federal funding) and thus did not exclude these authors from the denominator. The funding totals reported are therefore likely an underestimate.

Third, non-federal funding from pharmaceutical, intramural, and foundation sources provides crucial support for emergency care researchers. However, we did not include these sources of funding because obtaining these data accurately and comprehensively was logistically prohibitive.

Fourth, some subjectivity exists in assessing whether funded projects are or are not related to the prior consensus conference. The total number of projects and total funding represent the maximum possible; the data we provide for related projects represent our best approximation of funding that may have benefitted from the prior conferences. When disagreements regarding “relatedness” existed between the two primary adjudicators, a tie was broken by a third adjudicator. The Cohen's kappa for these adjudications was 0.6, demonstrating some degree of disagreement.

In conclusion, the authors of consensus conference manuscripts have obtained significant federal grant support for follow-up research related to conference themes. In addition, the manuscripts generated by these consensus conferences are frequently cited. Consensus conferences devoted to research agenda development appear to be an academically worthwhile endeavor.

Supplementary Material

Acknowledgments

The authors thank Michelle H. Biros, MD, MS, founder of the Academic Emergency Medicine consensus conferences, for her review of this manuscript; and Melissa McMillian, SAEM Grants Coordinator, for her assistance with the study.

Funding/Support: This project was supported by the National Center for Advancing Translational Sciences (NCATS) through grant numbers UL1TR000002 and UL1TR000075 and linked awards KL2TR000134 and KL2TR000076, a component of the National Institutes of Health (NIH) and the NIH Roadmap for Medical Research. NCATS and NIH had no role in the design and conduct of the study, in the analysis or interpretation of the data, or in the preparation of the data. The views expressed in this article are solely the responsibility of the authors and do not necessarily represent the official view of NCATS or the NIH. Information on NCATS is available at http://www.ncats.nih.gov/. Information on Re-engineering the Clinical Research Enterprise can be obtained from http://nihroadmap.nih.gov/clinicalresearch/overview-translational.asp.

Ethical approval: The study was deemed by the University of California Davis institutional review board not to be human subjects research and thus exempt from review.

Footnotes

Other disclosures: Dr. Nishijima is chair and Drs. May and Yadav are members of a subcommittee of the Society for Academic Emergency Medicine Research Committee. Dr. Cone is editor-in-chief and Dr. Gaddis is senior associate editor of Academic Emergency Medicine. The authors have no funding sources or conflicts of interest to report.

Previous presentations: Accepted for presentation at the Society for Academic Emergency Medicine annual meeting, May 2013, Atlanta, GA.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS. To Err is Human: Building a Safer Health System. Report of the Institute of Medicine. Washington, DC: National Academy Press; 1999.

- 2. Biros MH, Adams JG, Wears RL. Errors in emergency medicine: a call to action. Acad Emerg Med. 2000;7:1173–4. doi: 10.1111/j.1553-2712.2000.tb00456.x.

- 3. Morris MI, Daly JS, Blumberg E, et al. Diagnosis and Management of Tuberculosis in Transplant Donors: A Donor-Derived Infections Consensus Conference Report. Am J Transplant. 2012;12:2288–300. doi: 10.1111/j.1600-6143.2012.04205.x.

- 4. Lutz MP, Zalcberg JR, Ducreux M, et al. Highlights of the EORTC St. Gallen International Expert Consensus on the primary therapy of gastric, gastroesophageal and oesophageal cancer - Differential treatment strategies for subtypes of early gastroesophageal cancer. Eur J Cancer. 2012;48:2941–53. doi: 10.1016/j.ejca.2012.07.029.

- 5. Biros MH, Adams JG. What is consensus? Acad Emerg Med. 2002;9:1063.

- 6. Zuckerman B, Margolis PA, Mate KS. Health services innovation: the time is now. JAMA. 2013;309:1113–4. doi: 10.1001/jama.2013.2007.

- 7. Kraft GH, Johnson KL, Yorkston K, et al. Setting the agenda for multiple sclerosis rehabilitation research. Mult Scler. 2008;14:1292–7. doi: 10.1177/1352458508093891.

- 8. Murray PT, Devarajan P, Levey AS, et al. A framework and key research questions in AKI diagnosis and staging in different environments. Clin J Am Soc Nephrol. 2008;3:864–8. doi: 10.2215/CJN.04851107.

- 9. Walston J, Hadley EC, Ferrucci L, et al. Research agenda for frailty in older adults: toward a better understanding of physiology and etiology: summary from the American Geriatrics Society/National Institute on Aging Research Conference on Frailty in Older Adults. J Am Geriatr Soc. 2006;54:991–1001. doi: 10.1111/j.1532-5415.2006.00745.x.

- 10. Research Portfolio Online Reporting Tools (RePORT). National Institutes of Health. http://projectreporter.nih.gov/reporter.cfm. [Accessed February 22, 2013].

- 11. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37:360–3.

- 12. Amirhamzeh D, Moor MA, Baldwin K, Hosalkar HS. Publication rates of abstracts presented at pediatric orthopaedic society of North America meetings between 2002 and 2006. J Pediatr Orthop. 2012;32:e6–e10. doi: 10.1097/BPO.0b013e3182468c6b.

- 13. Drury NE, Maniakis-Grivas G, Rogers VJ, Williams LK, Pagano D, Martin-Ucar AE. The fate of abstracts presented at annual meetings of the Society for Cardiothoracic Surgery in Great Britain and Ireland from 1993 to 2007. Eur J Cardiothorac Surg. 2012;42:885–9. doi: 10.1093/ejcts/ezs138.

- 14. Harel Z, Wald R, Juda A, Bell CM. Frequency and factors influencing publication of abstracts presented at three major nephrology meetings. Int Arch Med. 2011;4:40. doi: 10.1186/1755-7682-4-40.

- 15. Papagikos MA, Rossi PJ, Lee WR. Publication rate of abstracts from the annual ASTRO meeting: comparison with other organizations. J Am Coll Radiol. 2005;2:72–5. doi: 10.1016/j.jacr.2004.06.025.

- 16. Interian A, Escobar JI. The use of a mentoring-based conference as a research career stimulation strategy. Acad Med. 2009;84:1389–94. doi: 10.1097/ACM.0b013e3181b6b2a8.

- 17. Cone DC, Gerson LG. Measuring the measurable: a commentary on impact factor. Acad Emerg Med. 2012;19:1297–9. doi: 10.1111/acem.12003.

- 18. Reynolds JC, Menegazzi JJ, Yealy DM. Emergency medicine journal impact factor and change compared to other medical and surgical specialties. Acad Emerg Med. 2012;19:1248–54. doi: 10.1111/acem.12017.
