Abstract

Biomedical research data sharing is becoming increasingly important for researchers to reuse experiments, pool expertise and validate approaches. However, there are many hurdles for data sharing, including the unwillingness to share, the lack of a flexible data model for providing context information, the difficulty of sharing syntactically and semantically consistent data across distributed institutions, and the high cost of providing tools to share the data. SciPort is a web-based collaborative biomedical data sharing platform to support data sharing across distributed organisations. SciPort provides a generic metadata model to flexibly customise and organise the data. To enable convenient data sharing, SciPort provides a central server based data sharing architecture with one-click data sharing from a local server. To enable syntactic consistency, SciPort provides collaborative schema management across distributed sites. To enable semantic consistency, SciPort provides semantic tagging through controlled vocabularies. SciPort is lightweight and can be easily deployed for building data sharing communities.

Keywords: metadata, scientific data management, data sharing, data integration, computer supported collaborative work

1 Introduction

With the increased complexity of scientific problems, biomedical research is increasingly a collaborative effort across multiple institutions and disciplines. Data sharing is becoming critical for validating approaches and ensuring that future research can build on previous efforts and discoveries. As a result, scientific funding agencies often require that the data produced in grant projects be shared. For example, the National Institutes of Health (NIH) of the USA requires data sharing for NIH funded projects of $500,000 or more in direct costs in any one year.

To support large-scale collaborative biomedical research, NIH provides large-scale collaborative project awards for a team of independently funded investigators to synergise and integrate their efforts, and the awards mandate that research results and data be shared (NIH Statement on Sharing Scientific Research Data; http://grants.nih.gov/grants/policy/datasharing/; Policy for sharing of data obtained in NIH supported or conducted genome-wide association studies, 2008). The Network for Translational Research (NTR): Optical Imaging in Multimodality Platforms (http://imaging.cancer.gov/programsandresources/specializedinitiatives/ntroi) is one such collaborative project on the development, optimisation, and validation of imaging methods and protocols for rapid translation to clinical environments. It requires not only managing the complex scientific research results, but also sharing the data across hundreds of research collaborators. As another example, Siemens Healthcare has research collaborations with hundreds of research sites distributed across the USA, each providing Siemens marketing support by periodically delivering white papers, case reports, clinic methods, clinic protocols, state-of-the-art images, etc. In the past, there were no convenient methods for research partners to share data with Siemens, and data were mostly delivered through media such as emails, CDs and hard copies. This made it very difficult to organise, query and integrate the shared data.

Sharing biomedical research data is important but difficult (Piwowar et al., 2008; Birnholtz et al., 2003). One major difficulty is the unwillingness to share, as investigators may restrict access to data to maximise professional development and economic benefit (Bekelman et al., 2003). With increased awareness of this issue, the government and funding agencies are developing strategies and policies to promote and enforce the sharing of data (Piwowar et al., 2008; Data Sharing & Intellectual Capital (DSIC) Workspace: https://cabig.nci.nih.gov/workinggroups/DSICSLWG; caBIG: cancer Biomedical Informatics Grid: http://caBIG.nci.nih.gov/). While the social issue of data sharing is challenging, this paper mainly focuses on the development of a biomedical data sharing system that makes data sharing flexible and easy for the people involved in collaborative research.

1.1 Complexity of biomedical data

Biomedical data can be in heterogeneous formats such as structured data, standard-based medical images such as DICOM (Digital Imaging and Communications in Medicine; http://medical.nema.org/), non-standard medical images such as optical images, raw equipment data, spreadsheets, PDF files, XML documents, and many others. Thus, the data are a mix of structured data (often deeply hierarchical) and files. Meanwhile, the data structures of biomedical research data can be very complex, ranging from different primitive data types to lists and tables, and from flat structures to deeply nested structures. It is often difficult for investigators to create a sophisticated schema that can capture enough context information or metadata, if the data types do not have a public, centralised, and well-recognised database. During our interviews with more than a dozen biomedical research investigators, we found that the majority of investigators use spreadsheet-like tools and manage their data as files organised in operating system folders. To reduce the cost and complexity of data sharing, a data sharing system needs to provide an extensible architecture that can be easily customised by researchers with their own metadata models, even without programming skills.

1.2 Management of data sharing

Even with willingness to share data, in many cases an investigator would like to control when to share data, and who can access the data. An investigator may want to keep unpublished data only to his or her own group or collaborative partners, and selectively publish a subset of data for sharing. An investigator may also want to stop sharing certain data at any time, for example, when he or she finds a quality problem of the data. An investigator may also have new results added on existing shared data and want to keep the data current. A manageable data sharing system with convenient operations can potentially increase the willingness for users to share, as the effort for data sharing can be much reduced.

1.3 Data sharing architecture

There are three major types of data sharing architectures: (a) Centralised, with multiple datasets hosted at a single location in a common schema. For example, the Cancer Genome Atlas (TCGA) Data Portal (http://cancergenome.nih.gov/dataportal) manages genome related data, and the National Biomedical Imaging Archive (http://ncia.nci.nih.gov/ncia) manages DICOM based medical research data. (b) Federated, with a virtual view of physically separate datasets. For example, the Cancer Biomedical Informatics Grid (caBIG®) is a grid-based data federation infrastructure that supports a CQL query language across distributed data sources integrated with caGrid middleware (http://caBIG.nci.nih.gov/). (c) Distributed, with physically and virtually separate datasets. The centralised approach is often limited to standardised data types such as DICOM images. Biomedical research, however, generates complex data and often new data types. The distributed approach often makes it difficult to retrieve, interpret and aggregate results, and lacks data consistency between research sites. While caGrid is used in the biomedical research community, caGrid itself has a complex infrastructure and the effort it requires is significant.

Considering the highly dynamic nature of biomedical research and the need for cost-effective data sharing, we develop a hybrid architecture which combines the benefits of the centralised approach and the distributed approach. In this approach, a data sharing central server is provided for multiple distributed data sources, and stores only published metadata (not raw data or images with large sizes) from distributed data sources. Users can flexibly manage data sharing by publishing or unpublishing data with a simple operation such as a publish or unpublish button. The metadata contain context information of the original data sources (including raw data), which are still managed at distributed research sites. The central server provides an integrated view of all shared data, and shared data can be easily aggregated and retrieved from the central server. This architecture is not only lightweight, but also supports consistent data sharing through collaborative management of schemas and semantic tagging of data.

1.4 Inconsistency of shared data

Another difficulty of sharing data is the incompatible representation of data between sharing partners. Each site may use different schemas (e.g., data templates) and represent data in different formats; and even if multiple partners choose to use the same schema, the schema may evolve as research progresses. For example, a field name may be changed or additional constraints may be added in a schema. Data generated from different versions of a schema may not match when searched together. For example, if a field named ‘age’ is changed to ‘patient age’, these two field names will lead to structurally different fields that cannot be searched together as a single field. Semantic consistency concerns using the same vocabulary to represent knowledge. For example, one investigator may use ‘Malignant Tumour’ to describe a lesion, and another investigator may use ‘cancer’. While semantic annotations can be added to data to enhance semantic interoperability between shared data, there is a lack of integrated tools and architecture that can support semantic annotations from controlled vocabularies.

1.5 Our contributions

To meet the requirements of a data sharing system and overcome the challenges, we develop SciPort, a web-based collaborative biomedical data sharing platform to support collaborative biomedical research. SciPort brings together the following essential components to support biomedical data sharing:

Generic biomedical experiment metadata model and XML-based biomedical data management for research sites to flexibly customise and organise their data (Section 2);

Hybrid, lightweight data sharing architecture through a Central Server, and data sharing can be conveniently managed by investigators (Section 3);

Easy sharing of schemas between distributed research partners (Section 4);

Collaborative management of schemas and their evolution between distributed research sites to maintain syntactic data consistency (Section 5);

Semantic tagging of data to achieve semantically consistent data sharing (Section 6).

This paper has been significantly extended from previous work (Wang and Vergara-Niedermayr, 2008), which discussed preliminary work and the technical aspects of distributed scientific data management. The present work represents the latest results and software development, and has a major new focus on the human and sharing aspects of biomedical data. The paper also covers new work on metadata modelling for data sharing, collaborative tagging for data sharing, user experience from real-world deployment, and so on.

2 Generic biomedical experiment metadata modelling and management

As discussed in Birnholtz et al. (2003), metadata models serve as the abstraction of data, and are essential for understanding shared data. However, metadata are often incomplete, and important context information of data (Chin and Lansing, 2004) is often ignored. Moreover, many domain-specific applications or data models are specific to certain types of data and limited to a small set of context information. To capture as much metadata and context information as possible for biomedical data sharing, researchers themselves should also be able to customise their own metadata models easily to meet their research and data sharing needs.

SciPort was first developed as a biomedical data management system with metadata modelling, authoring, management, viewing, searching and exchange.

2.1 Generic metadata modelling for biomedical data

To meaningfully represent each dataset, we develop a SciPort document model to represent the metadata and context information of the data. A SciPort document can represent (nested) structured data, files and images. A SciPort document includes several types of objects:

Primitive Data Types/Fields: Primitive data types are used to represent structural data, including integer, float, date, text, and web-based data types such as textarea, radiobutton, checkbox, URL, etc.

File: Files can be linked to a document through the file object.

Reference: A reference type links to another SciPort document.

Group: A group is similar to a table, which aggregates a collection of fields or nested groups. There can be multiple instances for a group, like rows of a table.

Category: A category relates a list of fields, e.g., ‘patient data’ category, ‘experiment data’ category, etc. Categories are used only at the top level of the content, and categories are not nested.

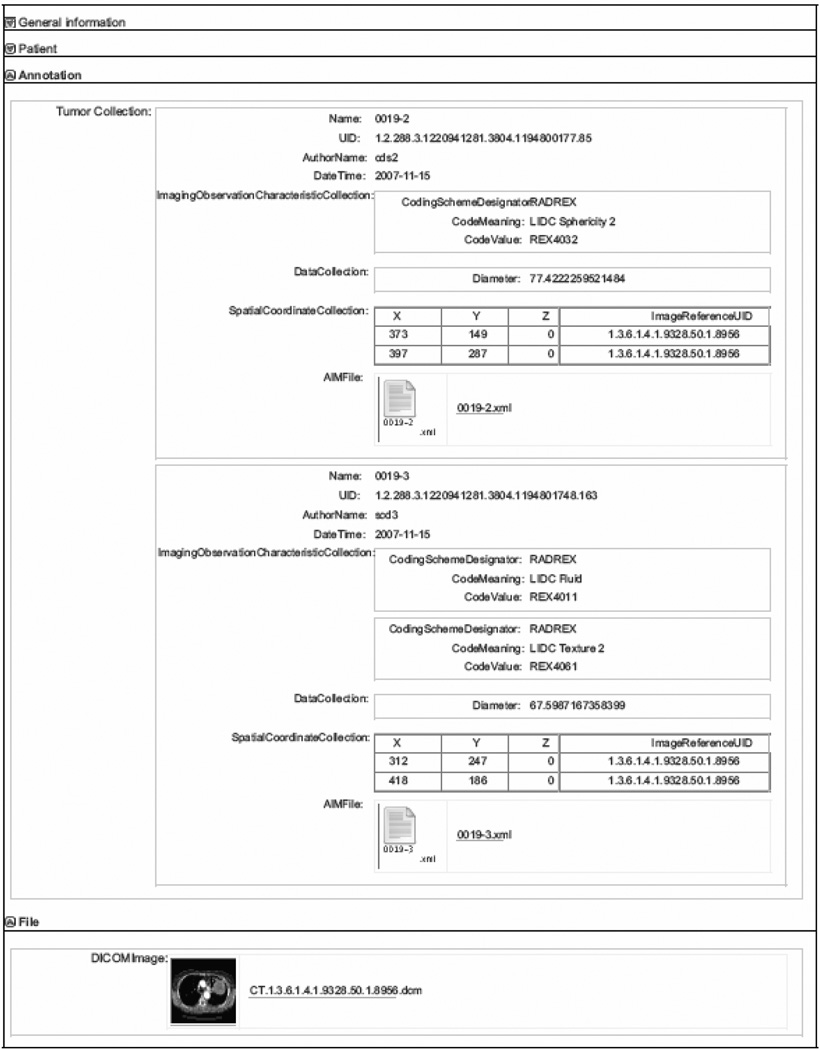

Figure 1 shows an example SciPort document that describes image annotations on top of medical images. This document captures generic information such as the title, description, author, creation date and modification date; patient information such as the age and gender; annotation data such as a number of annotated tumours marked up on top of the image; and the link to the image file from which annotations were generated. Note that in the example, we can easily represent nested complex information such as multiple tumours, multiple spatial coordinates and different data types such as images and files.

Figure 1.

A sample SciPort document
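The object types listed above can be sketched as a small set of Python data classes. This is only an illustration of the model's shape: the class names, attribute names and the sample values are assumptions made for this sketch and are not taken from the SciPort implementation.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Field:
    """A primitive value such as an integer, float, date or text."""
    name: str
    value: object


@dataclass
class FileLink:
    """A file or image linked to the document by its location."""
    name: str
    path: str


@dataclass
class Reference:
    """A link to another document."""
    name: str
    document_id: str


@dataclass
class Group:
    """A table-like object; each instance is one 'row' of members."""
    name: str
    instances: List[List["Member"]] = field(default_factory=list)


Member = Union[Field, FileLink, Reference, Group]


@dataclass
class Category:
    """A top-level section of a document; categories are not nested."""
    name: str
    members: List[Member] = field(default_factory=list)


@dataclass
class Document:
    title: str
    categories: List[Category] = field(default_factory=list)


def count_fields(members: List[Member]) -> int:
    """Count primitive fields, descending into nested groups."""
    total = 0
    for member in members:
        if isinstance(member, Field):
            total += 1
        elif isinstance(member, Group):
            total += sum(count_fields(row) for row in member.instances)
    return total


# A document loosely modelled on the annotation example (values invented).
tumours = Group("tumour", instances=[
    [Field("label", "T1"), Group("coordinate", instances=[
        [Field("x", 10), Field("y", 20)],
        [Field("x", 15), Field("y", 25)],
    ])],
    [Field("label", "T2")],
])
doc = Document("Image annotation", categories=[
    Category("patient data", [Field("age", 54), Field("gender", "F")]),
    Category("annotation data", [tumours, FileLink("image", "scan-001.dcm")]),
])
```

Because a group holds a list of instances and each instance may itself contain groups, repeated and nested information such as several tumours with several coordinates each needs no special handling.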

2.2 XML based implementation and the benefits

The hierarchical nature of the data model fits naturally with the tree-based XML data model, and we take an XML-based approach to implement the data model, which we call the SciPort Exchange Document. Users can also easily define their own schemas, which are internally represented with an XML-based schema definition language. In addition, to provide an intuitive way to present data, we develop a hierarchical model based on XML to organise biomedical data, so that documents can be quickly browsed and identified through the hierarchy. For example, we can define a hierarchy with levels of ‘site’ → ‘patient’ → ‘measurement’, and attach documents at different folder levels. SciPort also provides fine grained access control at folder level for the data in the database (Wang et al., 2009).

The self-describing and rich structure of XML makes it possible to represent arbitrary complex biomedical research data. By modelling the data as XML documents, we can take advantage of native XML database technologies to manage biomedical data, thus we can avoid complex data model and query translation between XML and RDBMS. This is especially beneficial since users can define arbitrary structured and nested data formats for their data. Moreover, powerful queries can be supported directly on XML databases with the standard XML query language XQuery (http://www.w3.org/XML/Query).
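To make the idea of querying nested documents directly concrete, the following sketch parses a small XML document and runs two path queries. It uses the limited XPath subset of Python's standard `xml.etree.ElementTree` module as a stand-in for XQuery on a native XML database, and the element and attribute names are hypothetical, since the SciPort Exchange Document format is not reproduced here.

```python
import xml.etree.ElementTree as ET

# Hypothetical serialisation of a document; element names are assumptions.
XML = """
<document title="Image annotation">
  <category name="patient data">
    <field name="age" type="integer">54</field>
    <field name="gender" type="text">F</field>
  </category>
  <category name="annotation data">
    <group name="tumour">
      <instance><field name="label" type="text">T1</field></instance>
      <instance><field name="label" type="text">T2</field></instance>
    </group>
    <file name="image" href="scan-001.dcm"/>
  </category>
</document>
"""

root = ET.fromstring(XML)

# Path query: every tumour label, however many instances the group has.
label_path = ".//group[@name='tumour']/instance/field[@name='label']"
labels = [node.text for node in root.findall(label_path)]

# Value lookup on a top-level field, converted to its declared type.
age_path = "./category[@name='patient data']/field[@name='age']"
age = int(root.find(age_path).text)

# Linked files are referenced by location rather than embedded.
image_href = root.find(".//file[@name='image']").get("href")
```

The queries address fields by their position in the tree, so no mapping of the nested structure onto relational tables is needed.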

A salient characteristic of SciPort is that the system is highly adaptable. SciPort provides a web-based schema authoring tool to easily create complex hierarchical metadata schema model without any need of programming. The organisational hierarchy is also customisable with its hierarchy authoring tool. This allows users to configure their own biomedical data repository without requiring expensive and time-consuming services or software development effort.

3 Sharing distributed biomedical data

While investigators can share their data simply through giving login information to collaborators, a more systematic approach for sharing data across research consortia or networks can provide more flexibility and benefits. SciPort comes with comprehensive data sharing capabilities:

Convenience: data sharing is performed by a single action and data can be selectively shared.

Ownership of data: researchers own and manage their data on their own.

Flexible sharing control: data sharing can also be revoked by researchers at any time.

Up-to-date shared data: As data are updated or removed, the corresponding shared data are synchronised accordingly to stay current.

Consistently aggregated shared data from distributed sites.

Lightweight: Sharing operates on metadata; no copying of large volumes of data is needed.

These sharing capabilities are implemented through a lightweight, central server based approach, as discussed next.

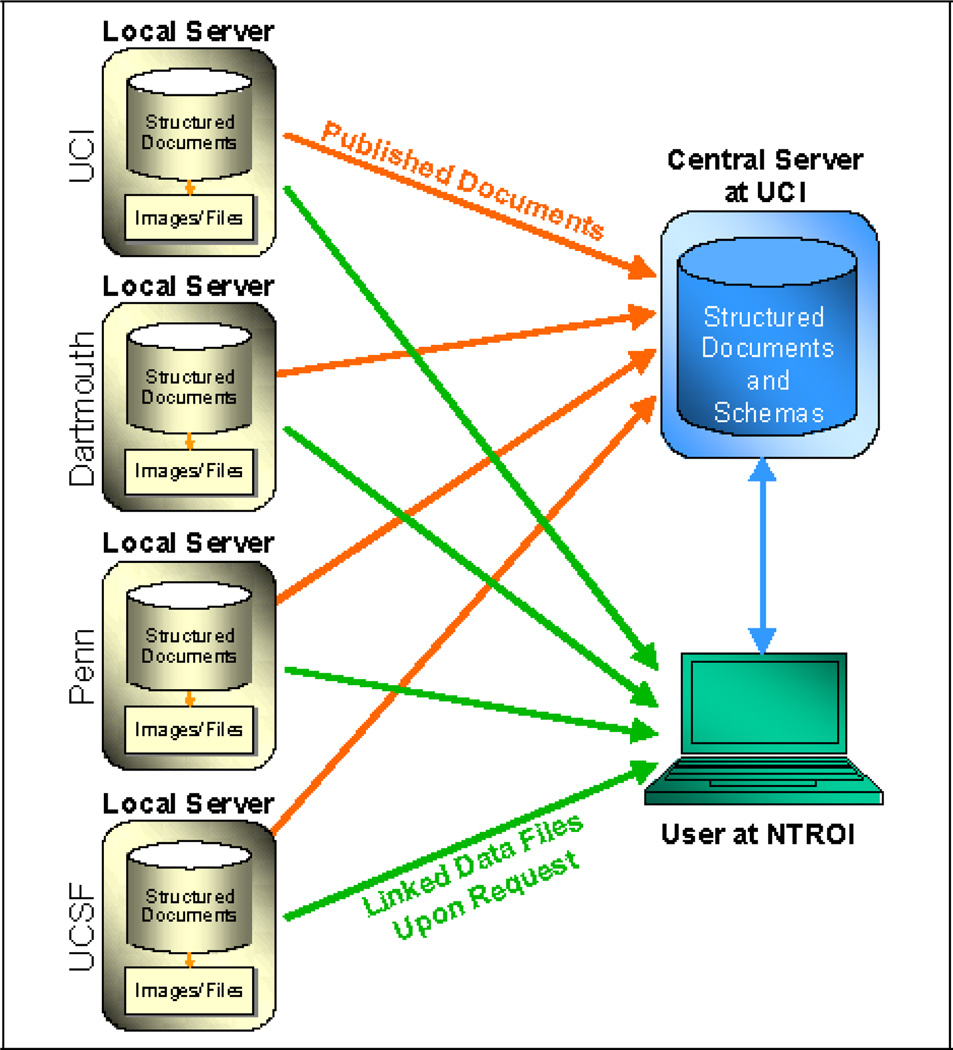

3.1 Sharing data through a central server

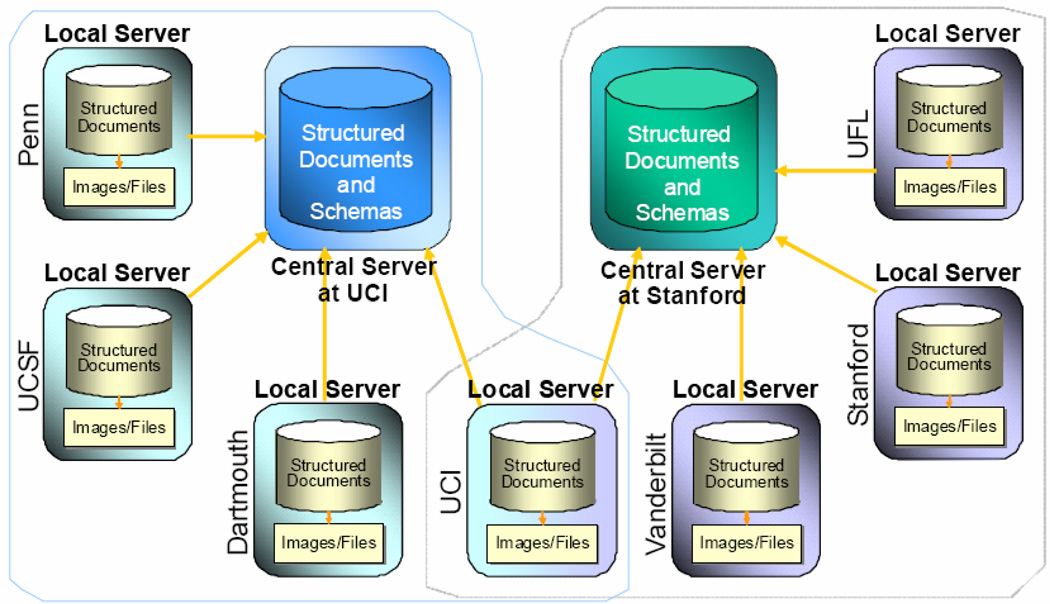

SciPort provides a distributed architecture to share and integrate data through a Central Server (Figure 2). In this architecture, each research site will have its own Local SciPort Server which itself functions as an independent server for data collection and management. In addition, there will be an additional Central Server upon which Local Servers are able to selectively publish their data (structured documents) (Figure 3). Images/files, which are often the major source of data volume, are still stored on corresponding Local Servers but are linked from the published documents on the Central Server. Once a user on the Central Server begins to download a document from the Central Server, actual data files are downloaded from the corresponding Local Server that holds the data.

Figure 2.

The central server based architecture for data sharing (see online version for colours)

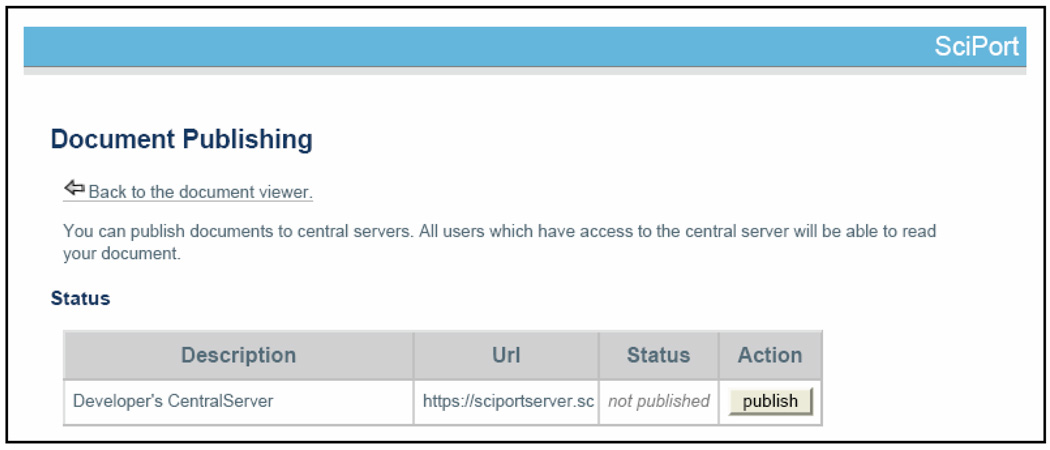

Figure 3.

An example of publishing an existing document (see online version for colours)

Thus, the Central Server provides a global view of shared data across all distributed sites, and can also be used as a hub for sharing schemas among multiple sites. Since data are shared through the metadata (SciPort Documents), the integration is lightweight. Users on the Central Server will only have read access to the data.

Figure 2 illustrates an example SciPort sharing architecture formed by four Local Servers at four universities: UCI, UCSF, Dartmouth and Penn. Each Local Server is used for data collection and management of clinical trial data at its local institution. Since these clinical trials are under the same research consortium, they would share their data together by publishing their data (documents) to the Central Server located at UCI. Members at NTR research consortia are granted read access on such shared data through the Central Server. Once the user identifies a data set from the Central Server and wants to download the data, the user will be redirected to the corresponding hosting Local Server to download the data to the client.
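The division of labour between the two kinds of server can be sketched as follows. This is a minimal in-memory model under stated assumptions: the class and method names, the `file_url` key and the `example` host names are all invented for illustration and do not describe SciPort's actual interfaces.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class CentralServer:
    # (site name, document id) -> published metadata; no file content here.
    index: Dict[Tuple[str, str], dict] = field(default_factory=dict)

    def receive(self, site: str, doc_id: str, metadata: dict) -> None:
        self.index[(site, doc_id)] = metadata

    def search(self, **criteria) -> list:
        """Keys of shared documents whose metadata match every criterion."""
        return sorted(key for key, meta in self.index.items()
                      if all(meta.get(k) == v for k, v in criteria.items()))

    def download_location(self, site: str, doc_id: str) -> str:
        """Files stay on the Local Server; return where to fetch them."""
        return self.index[(site, doc_id)]["file_url"]


@dataclass
class LocalServer:
    name: str
    base_url: str
    documents: Dict[str, dict] = field(default_factory=dict)
    files: Dict[str, bytes] = field(default_factory=dict)

    def add(self, doc_id: str, metadata: dict, payload: bytes) -> None:
        self.documents[doc_id] = metadata
        self.files[doc_id] = payload

    def publish(self, doc_id: str, central: CentralServer) -> None:
        """Send metadata plus a link back to this server, never the file."""
        metadata = dict(self.documents[doc_id])
        metadata["file_url"] = f"{self.base_url}/files/{doc_id}"
        central.receive(self.name, doc_id, metadata)


central = CentralServer()
uci = LocalServer("UCI", "https://uci.example")
ucsf = LocalServer("UCSF", "https://ucsf.example")
uci.add("d1", {"modality": "optical", "title": "Trial A"}, b"raw image bytes")
ucsf.add("d7", {"modality": "optical", "title": "Trial B"}, b"raw image bytes")
ucsf.add("d8", {"modality": "MRI", "title": "Trial C"}, b"raw image bytes")
uci.publish("d1", central)
ucsf.publish("d7", central)   # d8 is deliberately left unpublished

hits = central.search(modality="optical")
where = central.download_location("UCSF", "d7")
```

A search on the central index spans both sites, while the download location always points back to the site that owns the file.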

3.2 Data synchronisation

Shared data may become outdated as new results or analyses are generated on the data. SciPort provides automated data synchronisation on shared data through synchronisation enforcement in the following operations:

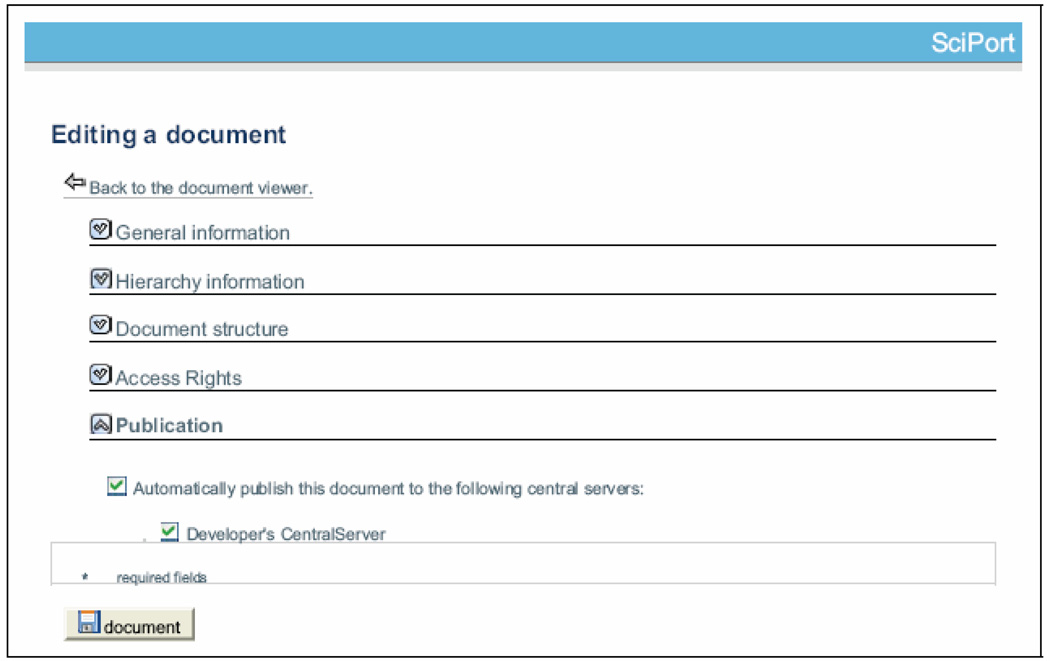

Create: When a document is created, the author or publisher has the option to publish this document (Figure 4). Once the document is published, a ‘published’ status is added to the document. A user can also set up an automatic publishing flag so all new documents will be automatically published.

Update: When an update is performed on a published document, the document will automatically be republished to the Central Server.

Delete: When a published document is deleted, it will also be automatically removed from the Central Server.

Unpublish: A user can stop sharing a document by selecting the ‘unpublish’ operation. Unpublished documents will be removed from the Central Server.

Figure 4.

An example of publishing a new document (see online version for colours)
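The four operations above amount to a small set of hooks on the local repository. The sketch below shows one way such synchronisation enforcement could look; the class names and the in-memory dictionaries are assumptions for illustration only.

```python
class CentralIndex:
    """Stand-in for the Central Server's store of shared documents."""

    def __init__(self):
        self.shared = {}

    def put(self, doc_id, metadata):
        self.shared[doc_id] = dict(metadata)

    def remove(self, doc_id):
        self.shared.pop(doc_id, None)


class LocalRepository:
    def __init__(self, central, auto_publish=False):
        self.central = central
        self.auto_publish = auto_publish   # publish every new document
        self.documents = {}
        self.published = set()             # ids carrying 'published' status

    def create(self, doc_id, metadata, publish=False):
        self.documents[doc_id] = dict(metadata)
        if publish or self.auto_publish:
            self.publish(doc_id)

    def publish(self, doc_id):
        self.published.add(doc_id)
        self.central.put(doc_id, self.documents[doc_id])

    def update(self, doc_id, **changes):
        self.documents[doc_id].update(changes)
        if doc_id in self.published:       # republish automatically
            self.central.put(doc_id, self.documents[doc_id])

    def delete(self, doc_id):
        del self.documents[doc_id]
        if doc_id in self.published:       # remove the shared copy too
            self.unpublish(doc_id)

    def unpublish(self, doc_id):
        self.published.discard(doc_id)
        self.central.remove(doc_id)


central = CentralIndex()
repo = LocalRepository(central)
repo.create("d1", {"title": "draft"})              # stays private
repo.create("d2", {"title": "v1"}, publish=True)
repo.update("d2", title="v2")                      # republished
after_update = dict(central.shared)
repo.unpublish("d2")                               # sharing revoked
after_unpublish = dict(central.shared)
repo.publish("d1")
repo.delete("d1")                                  # shared copy removed
```

Unpublishing removes only the shared copy, so the document remains available on the Local Server.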

3.3 Security and trust between local servers and the central server

Server Verification: The trust between Local Servers and the Central Server is implemented through security tokens. For a Local Server to be accepted into the network, it will be granted a security token to access the Central Server services. The token will be imported at the set-up step. When a Local Server tries to connect to the Central Server, the Central Server verifies if the token matches.

Single Sign-on and Security: One issue for sharing data from distributed databases is that it is not feasible for Central Server users to log in to every distributed database. When a user publishes a document, the user already grants read access to the document (including the files linked to the document) to the users on the Central Server, so another authentication is unnecessary. Therefore, users on the Central Server should be able to automatically access shared data from a Local Server in a transparent way. To support this, the Local Server Document Access Control Manager has to make sure that remote download requests really come from Central Server users who are currently logged in. We develop a single sign-on method to guarantee the security of the data sharing, by verifying that an incoming data request to a Local Server comes from the Central Server and that the requesting user is currently logged in on the Central Server.
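The mechanism behind the single sign-on method is not detailed here, so the following is only one possible realisation and should not be read as SciPort's design. It assumes that the Central Server signs a short-lived download ticket for a logged-in user with the security token shared at set-up, and that the Local Server checks the signature, the document and the expiry time. The function names and the ticket layout are hypothetical.

```python
import hashlib
import hmac


def issue_ticket(shared_token: bytes, user: str, doc_id: str,
                 expires_at: int) -> str:
    """Central Server side: sign a download ticket for a logged-in user."""
    message = f"{user}|{doc_id}|{expires_at}".encode()
    signature = hmac.new(shared_token, message, hashlib.sha256).hexdigest()
    return f"{user}|{doc_id}|{expires_at}|{signature}"


def verify_ticket(shared_token: bytes, ticket: str, doc_id: str,
                  now: int) -> bool:
    """Local Server side: accept only valid, unexpired tickets for doc_id."""
    try:
        user, ticket_doc, expires_at, signature = ticket.split("|")
        expiry = int(expires_at)
    except ValueError:
        return False                       # malformed ticket
    message = f"{user}|{ticket_doc}|{expires_at}".encode()
    expected = hmac.new(shared_token, message, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the digest matched.
    signed_by_central = hmac.compare_digest(signature.encode(),
                                            expected.encode())
    return signed_by_central and ticket_doc == doc_id and now <= expiry


token = b"token-imported-at-setup"         # hypothetical shared secret
ticket = issue_ticket(token, "alice", "d7", expires_at=1000)
accepted = verify_ticket(token, ticket, "d7", now=900)
expired = verify_ticket(token, ticket, "d7", now=1001)
```

A signed ticket lets the Local Server check both conditions without contacting the Central Server on every request; a callback to the Central Server to confirm the session would be an equally plausible alternative.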

3.4 Sharing data in multiple data networks

Data can be shared not only in a single data network through one Central Server, but also in multiple data networks through multiple Central Servers. One organisation may want to share the same data in multiple networks, as demonstrated in an example (Figure 5). There are two networks, one centred at UCI and another centred at Stanford. UCI is collaborating with both networks and needs to share data with both. UCI will be registered as a partner site in each network, and its Local Server will be configured for both networks. A document can then be published to either of the Central Servers or to both. This sharing architecture enables very flexible data sharing across different research networks.

Figure 5.

Sharing data in multiple data networks (see online version for colours)

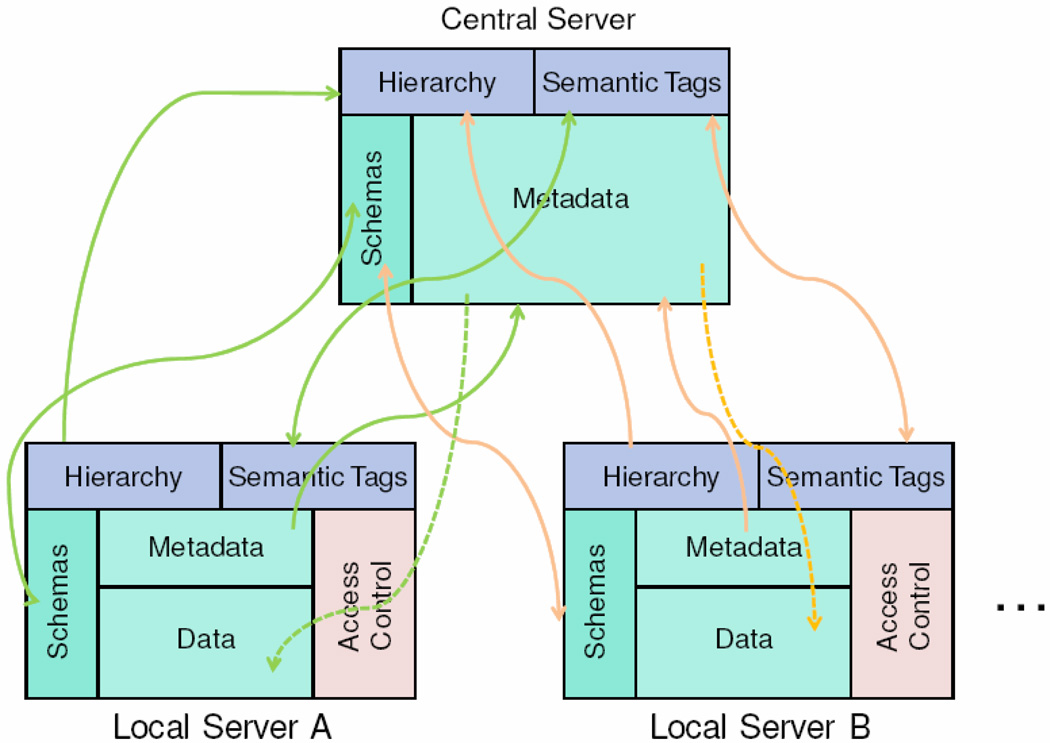

Besides data sharing, SciPort also provides sharing of schemas between distributed research sites (Figure 6), discussed in Section 4.

Figure 6.

Types of information sharing in SciPort (see online version for colours)

3.5 The benefits of our hybrid data sharing architecture

The architecture of SciPort is a hybrid of the centralised approach and the distributed approach. The centralised approach can provide high visibility, easy retrieval and easy aggregation within the repository, but suffers from limited data types and limited flexibility for customising new data types. The distributed approach allows users to flexibly manage, customise and control their data and data sharing, but often suffers from low visibility, difficulty of retrieval, interpretation and aggregation, and a lack of data consistency. Our hybrid approach benefits from both. The Central Server provides high data visibility, easy retrieval and aggregation of all shared data from distributed sites. SciPort allows users to conveniently customise their data types by creating and updating schemas. Users can flexibly manage their data sharing through simple publishing or unpublishing operations. Data consistency is maintained through collaborative schema sharing and management (discussed in the next two sections). The hybrid approach is also lightweight, as it does not directly store original data such as raw data or medical images, which can have high data volumes, but only metadata or structured documents that describe and abstract the data.

4 Sharing schemas

SciPort uses an XML-based approach for defining schemas: a SciPort Schema Document defines structures and constraints in XML. It defines structures as a mix of (possibly nested) object types defined in the data model, and defines the constraints as (a) constraints on the number of instances for file and group types, (b) minimal and maximal value constraints, and (c) controlled values. A schema defines not only the metadata, but also references to original data such as images or raw experimental files. Schemas can be used for (a) data validation, (b) document authoring form generation, (c) data presentation, where templates are defined based on schemas, and (d) search form generation. A web search form can be automatically created based on a schema, with pre-filled options such as a dropdown list of constrained values.
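The three kinds of constraint and their use for data validation can be illustrated with a short sketch. The schema is written as a plain Python dictionary rather than as a SciPort Schema Document, and the keys (`kind`, `min`, `max`, `allowed`, `min_instances`, `max_instances`) are names chosen for this illustration.

```python
def validate(document: dict, schema: dict) -> list:
    """Return a list of constraint violations; empty means valid."""
    errors = []
    for name, rule in schema.items():
        value = document.get(name)
        if rule.get("kind") in ("group", "file"):
            # (a) number-of-instances constraint for group and file types
            count = len(value or [])
            lowest = rule.get("min_instances", 0)
            highest = rule.get("max_instances")
            if count < lowest or (highest is not None and count > highest):
                errors.append(f"{name}: {count} instances not allowed")
            continue
        if value is None:
            continue                       # optional field left empty
        # (b) minimal and maximal value constraints
        if "min" in rule and value < rule["min"]:
            errors.append(f"{name}: {value} is below {rule['min']}")
        if "max" in rule and value > rule["max"]:
            errors.append(f"{name}: {value} is above {rule['max']}")
        # (c) controlled values
        if "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{name}: {value!r} is not a controlled value")
    return errors


schema = {
    "age": {"kind": "field", "min": 0, "max": 120},
    "gender": {"kind": "field", "allowed": ["F", "M"]},
    "image": {"kind": "file", "min_instances": 1, "max_instances": 3},
}
good = validate({"age": 54, "gender": "F", "image": ["scan-001.dcm"]}, schema)
bad = validate({"age": 130, "gender": "X", "image": []}, schema)
```

The same `allowed` list that drives validation could also populate the dropdown of a generated search form.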

Sharing schemas is critical for sharing data, since the Central Server glues data together using shared schemas to present and search data. Keeping data from multiple Local Servers coherent also depends on the level at which, and how consistently, schemas are shared.

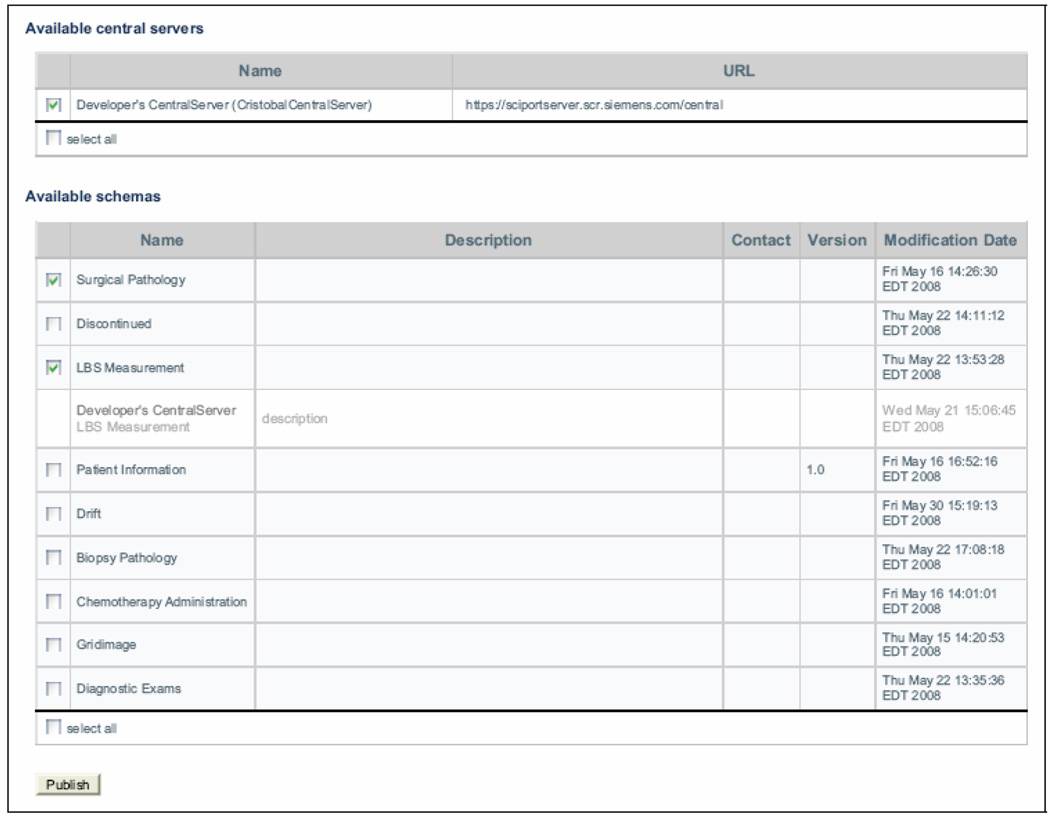

4.1 Publishing schemas

Schemas can be shared by publishing them to the Central Server. From a Local Server Schema Management menu, schemas created on the Local Server can be selectively published to target Central Servers, as shown in the example in Figure 7. When a new document is being published to the Central Server, the availability of the corresponding schema on the Central Server will be checked. If the schema is not present, then the schema will also be published together with the document.

Figure 7.

An example of publishing schemas from a local server (see online version for colours)

The owner of a schema is defined as the Local Server on which the schema is first created. A schema is identified by its owner and schema ID. SciPort also provides comprehensive access control management (Wang et al., 2009), and two roles are related to schema management: (a) organiser role with privileges to author and update schemas and (b) publisher role with privileges to publish documents and schemas.

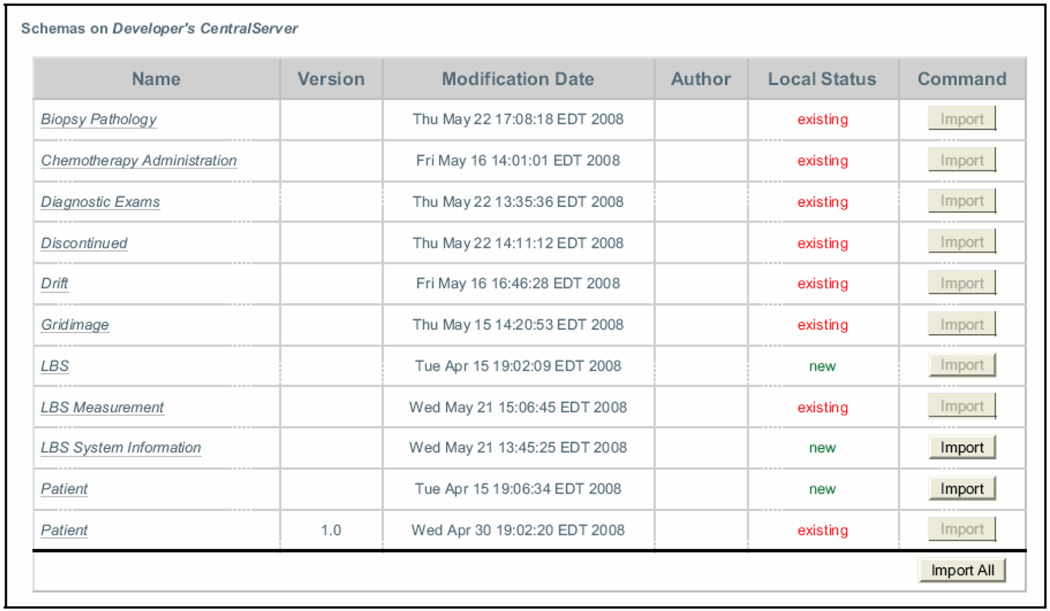

Once a schema is published, it can be shared through the Central Server. Other Local Servers can reuse schemas by importing them from the Central Server, as shown in Figure 8. A schema can be unpublished from a Central Server by a Local Server, after which the schema is no longer available on the Central Server for further sharing. Users on the Central Server with an ‘organiser’ role can remove a schema from the Central Server if no document on the Central Server depends on this schema. This can be used to clean up unused schemas.

Figure 8.

An example of importing schemas to a local server (see online version for colours)
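The publishing, importing and clean-up behaviour described in this section can be sketched with a small registry keyed by owner and schema ID. The class and method names are hypothetical, and roles are modelled as a simple set of strings.

```python
class CentralSchemaHub:
    """Stand-in for schema storage on a Central Server."""

    def __init__(self):
        self.schemas = {}          # (owner, schema_id) -> definition
        self.documents_using = {}  # (owner, schema_id) -> shared doc count

    def publish(self, owner, schema_id, definition):
        key = (owner, schema_id)
        self.schemas[key] = definition
        self.documents_using.setdefault(key, 0)

    def publish_document(self, owner, schema_id, definition):
        """Publishing a document also publishes its schema if missing."""
        key = (owner, schema_id)
        if key not in self.schemas:
            self.publish(owner, schema_id, definition)
        self.documents_using[key] += 1

    def import_schema(self, owner, schema_id):
        """Copy for reuse on another Local Server; ownership is unchanged."""
        return dict(self.schemas[(owner, schema_id)])

    def remove(self, owner, schema_id, user_roles):
        """Organiser-only clean-up of schemas no shared document uses."""
        key = (owner, schema_id)
        if "organiser" not in user_roles:
            return False
        if self.documents_using.get(key, 0) > 0:
            return False
        self.schemas.pop(key, None)
        self.documents_using.pop(key, None)
        return True


hub = CentralSchemaHub()
hub.publish("UCI", "annotation", {"age": "integer"})
hub.publish_document("UCSF", "trial", {"dose": "float"})

imported = hub.import_schema("UCI", "annotation")
in_use = hub.remove("UCSF", "trial", {"organiser"})       # a document uses it
wrong_role = hub.remove("UCI", "annotation", {"publisher"})
cleaned = hub.remove("UCI", "annotation", {"organiser"})
```

Identifying a schema by the pair of owner and schema ID keeps two sites from colliding when they happen to choose the same schema ID.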

4.2 Three scenarios of schemas sharing

Based on the use cases, there are three typical scenarios of schema sharing:

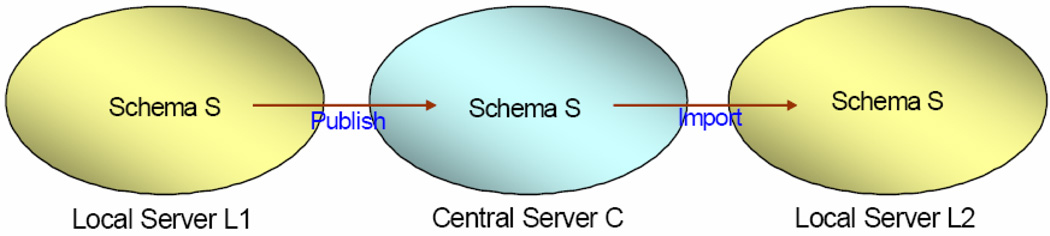

Static Schema: A schema is fixed and will not be changed. For example, some common standard based schemas are not likely to change. Once a schema is authored and changed to the final status, it can be published to the Central Server to be shared by every Local Server (Figure 9). This is the simplest scenario, as schemas can be created once and shared directly through the Central Server.

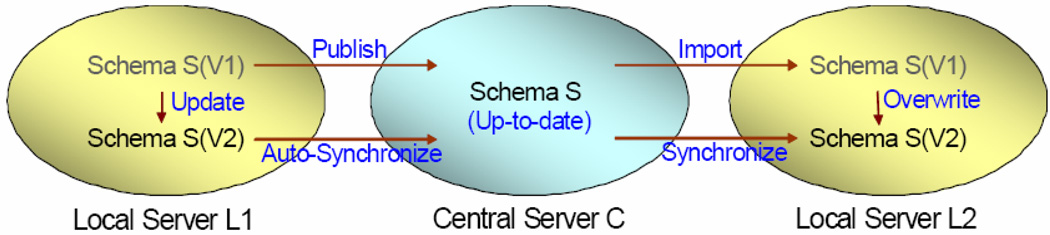

Uniform Evolving Schema: A schema can be changed and a uniform version is shared by all Local Servers, thus data consistency is maintained across all sites. The schema is owned by its original creating Local Server, and the owner can make certain changes. This means that all servers see the same schema, but the schema can evolve.

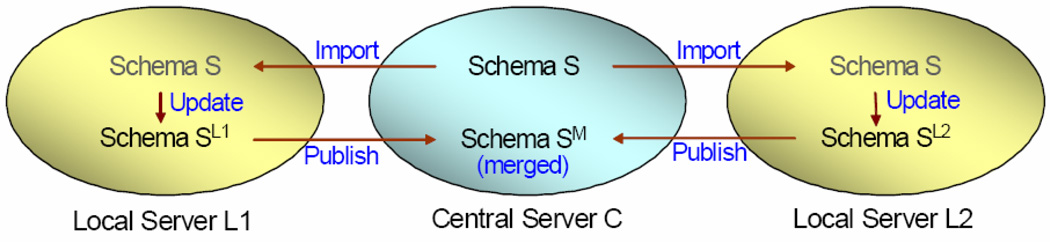

Multiform Evolving Schema: A ‘seed’ schema is first created and shared as public, so that every Local Server becomes an owner and can update the schema. The Central Server maintains a version of the schema that conditionally merges updates from Local Servers, thus all documents published on the Central Server will be compatible under this schema. This means that while the Central Server maintains a copy of the schema that is compatible with all Local Servers, each Local Server may have its own schema with additional updates.

Figure 9.

Static schemas sharing: a fixed schema is shared by all servers (see online version for colours)

Next, we discuss how to manage schemas and their evolution for the last two scenarios (static schema management is straightforward and is omitted here).

5 Collaborative management of schemas for consistent data sharing

Since schemas can be shared and used across multiple distributed data sources, one challenging issue is how to manage schema evolution while keeping shared documents consistent and compatible with their schemas in a distributed environment. To solve this problem, we first define the following favourable rules for schema management and sharing in a distributed environment:

Minimal administration: Human based manual schema management can be difficult, especially for schemas that may be updated by different sites. To minimise human effort for managing schemas across distributed sites, it would be ideal if the data sharing system can facilitate the management of schemas, for example, relying on an information exchange hub based on the Central Server.

Data consistency: Schema evolution has to be backward compatible; otherwise the integrity of documents will be broken.

Control of schemas: To prevent arbitrary update of schemas, by default, only the owner (the user who creates the initial schema on a Local Server) of a schema can update a schema.

Currency of Schemas: The Central Server always has the up-to-date version of a schema if that schema is shared by the owner, to guarantee that no outdated schemas are shared across sites.

Sharing Maximisation: Since shared schemas on the Central Server can be unpublished by local database users and removed from the Central Server, to promote sharing, only the last publisher of a schema can unpublish it.

These rules will help to automate schema management in a distributed environment, minimise conflicts of updates, maintain coherent shared data on the Central Server, and reduce the effort from humans. To achieve this, we enforce the rules for schema operations on both Local Servers and Central Servers. Schema operations on Local Servers include create, update, delete, import, publish, and unpublish, and schema operations on Central Servers include remove.

5.1 Uniform schema management

In this scenario, a schema can be changed and a uniform version is shared by all Local Servers, thus data consistency is maintained across all sites and the Central Server. Next we discuss how schema management rules are enforced in each schema operation.

5.1.1 Create and delete

A schema can be created on a Local Server if the user has the organiser role. This Local Server will become the owner of this schema. A schema can be deleted if the user has the organiser role, and there are no documents using this schema on this Local Server. If the Local Server is the owner of the schema, and the schema is never published, deleting a schema will eliminate the schema forever. If the schema was once published, it may still be alive and used on other Local Servers.

5.1.2 Update

An incompatible update of a schema is one that can lead to inconsistency between the new schema version and existing documents. Incompatible updates are not allowed unless all of the following conditions are met: (a) the update is made by the owner of the schema; (b) there are no existing documents created based on this schema on this Local Server; and (c) the schema was never published, i.e., there will be no other Local Servers using this schema to create documents.

Compatible update of a schema will not lead to inconsistency between the new schema version and existing documents. The following conditions are required for compatible update:

Ownership: The Local Server is the owner of the schema, and the user is the organiser on this Local Server.

Field Containment: All the fields in the last schema are present in the new schema and belong to the same category or group; new categories and fields may be added.

Type Compatibility: All the fields in the new schema have the same type or a compatible type, i.e., a more general type.

Relaxed Value Range Constraints: No new value constraints are permitted for existing fields which do not have any constraint. Value constraints can be updated with more relaxed ranges. Constraints on new fields are permitted.

Relaxed Controlled Values: For field with controlled values, the extent is enlarged with more options.

Relaxed Constraints on Number of Instances: For group field or file field, there can be a constraint on the minimal and/or maximal number of instances. No instance number constraint is permitted on existing fields, and instance number constraint can be updated with more relaxed range. Change of a field’s order within its sibling is not considered incompatible.
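The compatibility conditions above can be sketched as a simple check. This is only an illustration and not SciPort's implementation: the `Field` structure, the `MORE_GENERAL` type ordering and the function names are assumptions introduced here, and the ownership and role checks are omitted.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical generality order: a field may keep its type or move to a more general one.
MORE_GENERAL = {
    "integer": {"integer", "float", "string"},
    "float": {"float", "string"},
    "string": {"string"},
}

@dataclass(frozen=True)
class Field:
    name: str
    group: str                                   # category or group the field belongs to
    type: str
    value_range: Optional[tuple] = None          # (low, high), or None if unconstrained
    controlled_values: Optional[frozenset] = None
    instances: Optional[tuple] = None            # (min, max) number of instances, or None

def range_relaxed(old, new):
    """True if the new range is at least as permissive as the old one."""
    if old is None:
        return new is None        # no new constraint on an unconstrained existing field
    if new is None:
        return True               # dropping a constraint is a relaxation
    return new[0] <= old[0] and new[1] >= old[1]

def is_compatible_update(old: dict, new: dict) -> bool:
    """Check a new schema (name -> Field) against the previous version."""
    for name, o in old.items():
        n = new.get(name)
        # Field containment: the field must survive in the same category or group.
        if n is None or n.group != o.group:
            return False
        # Type compatibility: same type or a more general one.
        if n.type not in MORE_GENERAL.get(o.type, {o.type}):
            return False
        # Relaxed value range and instance-number constraints.
        if not range_relaxed(o.value_range, n.value_range):
            return False
        if not range_relaxed(o.instances, n.instances):
            return False
        # Relaxed controlled values: the option set may only grow.
        if o.controlled_values is None:
            if n.controlled_values is not None:
                return False
        elif n.controlled_values is not None and not o.controlled_values <= n.controlled_values:
            return False
    return True   # fields that exist only in the new schema are unrestricted
```

For example, widening an `age` range from (0, 100) to (0, 120) and adding a new field passes the check, while narrowing the range to (10, 100) or dropping the field does not.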

Once a schema is updated on the Local Server, it will be automatically republished to the Central Server (if any) onto which the schema has been published. This will ensure the Central Server always maintains up-to-date versions of schemas.

5.1.3 Publish and unpublish

A schema can be published to one or multiple Central Servers if the user has the publisher role and there is no newer version of this schema on the Central Server. There are three scenarios of schema publishing: (a) schemas can be manually published from the schema publishing interfaces; (b) the schema of a document is automatically published when the document is published: the Local Server checks whether the schema is available and up-to-date on the Central Server, and republishes the schema if it is not; (c) if a published schema is later updated compatibly, the new version is automatically republished to the Central Server and replaces the previous version. This keeps the schemas on the Central Server up-to-date.

A schema can be unpublished by a Local Server under the following conditions: (a) the user has the publisher role on the Local Server; (b) there is no document associated with the schema on the Central Server; and (c) the Local Server is the last publisher of the schema. The last condition is necessary because, if the schema is used at multiple Local Servers, any Local Server could otherwise stop the publishing, which may go against the sharing goal of the last publisher.
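The publish and unpublish preconditions can be expressed as two small predicates. This is a minimal sketch under stated assumptions: the `CentralSchema` record, the integer version numbers and the role string `"publisher"` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CentralSchema:
    """Hypothetical record the Central Server keeps for one published schema."""
    version: int
    last_publisher: str        # identifier of the Local Server that published last
    document_count: int = 0    # documents on the Central Server using this schema

def can_publish(user_roles: set, local_version: int,
                central: Optional[CentralSchema]) -> bool:
    """Publisher role required, and no newer version may exist on the Central Server."""
    if "publisher" not in user_roles:
        return False
    return central is None or central.version <= local_version

def can_unpublish(user_roles: set, local_server_id: str,
                  central: CentralSchema) -> bool:
    """Conditions (a) to (c): role, no dependent documents, last publisher."""
    return ("publisher" in user_roles
            and central.document_count == 0
            and central.last_publisher == local_server_id)
```

Under this sketch, a Local Server that is not the last publisher can never unpublish, which mirrors condition (c).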

5.1.4 Import

A Local Server can import a shared schema from the Central Server if the user has the organiser role. A new version of a schema on the Central Server is automatically detected by a Local Server when a document is published from that Local Server.

5.1.5 Remove from central server

Users on the Central Server with the organiser role can remove a schema from the Central Server if no document on the Central Server is using this schema. This can be used to clean up unused schemas.

Update, or create? As a schema keeps evolving, it may accumulate many updates. Beyond a certain point, the latest schema may be very different from the original schema, the data created under the two versions may be very different, and data consistency between them is no longer meaningful. In this case, it may be desirable to create a new schema. SciPort provides a function to view the change history of a schema, and users can create a new schema from an existing schema in the Schema Editor tool.

5.1.6 An example of uniform schema management

A user with organiser privilege on a Local Server L1 creates a schema S(V1) (Figure 10). The user may later find that the schema is not accurate and make changes to it. Since no document has been created and the schema has not yet been shared, the user may make arbitrary changes, including incompatible updates. Once the user has a stable, usable version of the schema, the user begins to author documents based on it and publishes documents to the Central Server C. Schema S(V1) is automatically published to the Central Server. After some time, the user may need more information for the data or need to adjust existing fields, and therefore needs to update schema S. Since there are existing documents using the schema, and the schema has also been published, the user can make only compatible updates to schema S(V1). (If a compatible update is not sufficient, the user has to create a new schema.) Once schema S(V1) is updated to S(V2), it is automatically propagated to the Central Server to replace the previous version S(V1). Meanwhile, a user with organiser privilege on a Local Server L2 imports schema S(V1) after S(V1) is published on the Central Server, and documents are then created on L2 based on this schema. Later S(V2) replaces S(V1) on the Central Server, which is detected when a document of schema S(V1) is published from L2 to C. The user at L2 is prompted to synchronise the schema, and S(V1) is replaced by S(V2) on L2.

Figure 10.

Uniform schema sharing: a schema is shared by all servers and updatable by the owning local server (see online version for colours)
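The walk-through above can be replayed with a toy model. This is a sketch under simplifying assumptions, not SciPort code: the `Central` and `Local` classes are hypothetical, schemas are reduced to integer version numbers, and the prompt shown to the user at L2 is replaced by a direct synchronisation.

```python
class Central:
    def __init__(self):
        self.schemas = {}                 # schema name -> latest published version

class Local:
    def __init__(self, name):
        self.name = name
        self.schemas = {}                 # schema name -> local version
        self.owned = set()                # schemas created on this server

    def create(self, schema):
        self.schemas[schema] = 1
        self.owned.add(schema)

    def update(self, schema, central):
        """Compatible update by the owner; republish if already published."""
        if schema not in self.owned:
            raise PermissionError("only the owning Local Server may update")
        self.schemas[schema] += 1
        if schema in central.schemas:
            central.schemas[schema] = self.schemas[schema]

    def import_schema(self, schema, central):
        self.schemas[schema] = central.schemas[schema]

    def publish_document(self, schema, central):
        """Publish a document; return True if the local schema had to be synchronised."""
        local_v = self.schemas[schema]
        central_v = central.schemas.get(schema, 0)
        if central_v < local_v:
            central.schemas[schema] = local_v      # schema published with the document
            return False
        if central_v > local_v:
            self.schemas[schema] = central_v       # newer version detected: synchronise
            return True
        return False

c, l1, l2 = Central(), Local("L1"), Local("L2")
l1.create("S")                      # S(V1) on L1
l1.publish_document("S", c)         # S(V1) auto-published to C
l2.import_schema("S", c)            # L2 imports S(V1)
l1.update("S", c)                   # S(V2) replaces S(V1) on C
synced = l2.publish_document("S", c)
```

After the last call, `synced` is true and L2 holds version 2 of the schema, matching the final step of the example.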

5.2 Distributed multiform schema sharing

Uniform schema sharing provides data compatibility through a single uniform version of each schema across all servers, maintains the ownership of schemas, and provides controlled schema evolution. There can also be cases in which multiple sites need to adapt a schema to their own needs, so that a uniform schema is not feasible. To provide flexible schema evolution at each Local Server while supporting data compatibility on the Central Server, we provide a multiform schema sharing approach.

As shown in Figure 11, a ‘seed’ schema S is first created, made publicly owned, and then published onto the Central Server. Each Local Server is able to import this schema and adjust it for local use (SL1, SL2, etc.). Schema updates from each site are conditionally merged on the Central Server as SM. The goal of the merging is to keep the schema version on the Central Server as relaxed as possible, through the following merging conditions:

If the changes are incremental structural changes, they will be merged. These include adding of new fields or new categories;

If the constraints on the Central Server are more permissive than the new or updated constraints that the modification suggests, then the suggested changes are ignored by the Central Server;

Structural removal changes such as field removal or category removal will be ignored and not merged.

Figure 11.

Multiform schema sharing: a schema is updatable by each local server independently and a compatible version is maintained by the central server (see online version for colours)

In this way, each Local Server will maintain its local schema evolution while the Central Server will maintain a merged schema for shared data compatibility.
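The merging conditions can be illustrated with a small function. This is a sketch under assumptions: a schema is modelled as a mapping from field name to a numeric range constraint (or `None` for no constraint), and adopting the more relaxed of two constraints is our reading of keeping the central version relaxed, since the text only states that stricter suggestions are ignored.

```python
def merge_constraint(central, local):
    """Keep whichever bounds are more permissive; None means unconstrained."""
    if central is None or local is None:
        return None
    return (min(central[0], local[0]), max(central[1], local[1]))

def merge_schema(central: dict, local: dict) -> dict:
    """Merge a Local Server's schema into the Central Server's merged schema."""
    merged = dict(central)            # removals on the Local Server are ignored
    for name, constraint in local.items():
        if name not in merged:
            merged[name] = constraint             # incremental structural change
        else:
            merged[name] = merge_constraint(merged[name], constraint)
    return merged

central = {"age": (0, 100), "dose": None}
local = {"age": (18, 65), "site": (1, 5)}          # stricter age, dose removed, site added
merged = merge_schema(central, local)
```

Here the stricter `age` range and the removal of `dose` are ignored, while the new `site` field is merged.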

6 Collaborative semantic tagging of data

Recently tagging has become a popular method to enable users to add keywords to internet resources, thus improving search and personal organisation (Marlow et al., 2006). In SciPort, data are hierarchically organised in a tree-based organisational structure. In practice, it is very helpful for users to be able to provide additional classification of data by assigning tags from a controlled vocabulary to documents. Each document can be flexibly annotated with one or more semantic tags, and tags themselves can be shared by users at both Local Servers and the Central Server.

Semantic tagging adds additional semantics to documents, which not only provides semantics-based query support, but also enhances semantic interoperability of shared data from multiple distributed sites.

6.1 Tagging from controlled vocabulary

Free tagging is used by most Web-based tagging systems, where users can define arbitrary tags. One issue for free tagging is that since there is no common vocabulary among these tags, there can be semantic mismatch between tags.

Instead, SciPort chooses to annotate data using semantic tags from a predefined ontology or controlled vocabulary, such as NCI Thesaurus (http://ncit.nci.nih.gov). By standardising tags using such a controlled vocabulary, the system can provide a controlled set of semantic tags. Data can thus be categorised into multiple semantic groups, which makes it possible to express queries based on common semantics. For instance, when a user authors a document of a study on breast cancer, he assigns a tag ‘Stage II Breast Cancer’ (ID: 18077), and another tag ‘Cancer Risk’ (ID: 7768). One problem is that an expensive lookup through the vocabulary may simply discourage users from using it.

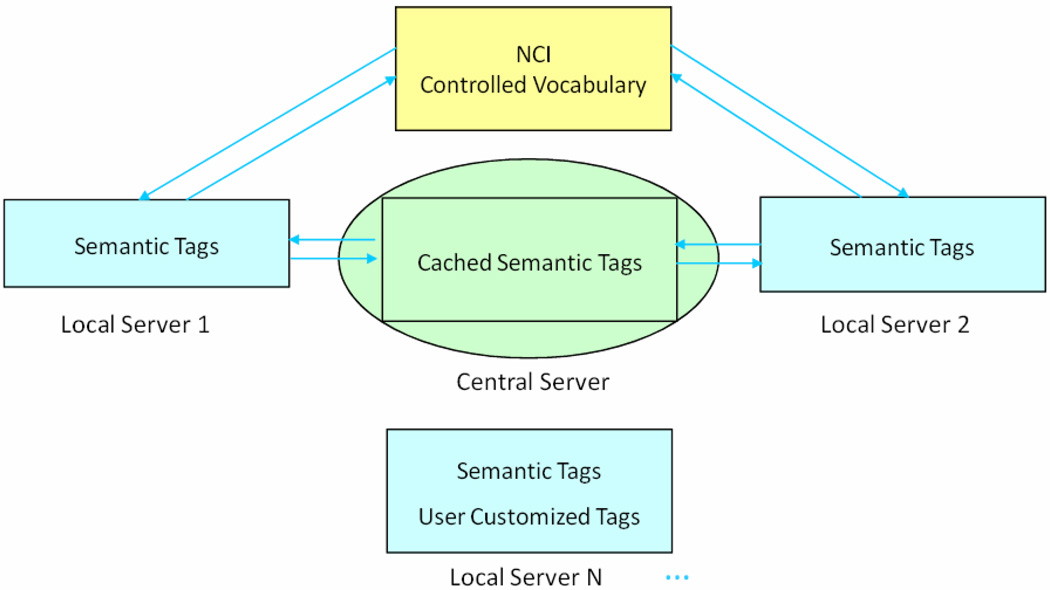

Next we show that with a collaborative tag management system, we can provide automatic tag lookup in a controlled vocabulary repository by caching previously retrieved tags in the Central Server with Ajax technology.

6.2 Collaborative semantic tag management

Caching tags exploits the locality inherent in the subset of the vocabulary that is used within a group of researchers. Since a collaborative research consortium often focuses on solving a single significant problem, the vocabulary it uses is much smaller than the standardised vocabulary. For instance, the NCI Thesaurus (http://ncit.nci.nih.gov) is 77 MB in size. In SciPort, we provide a cached vocabulary repository on the Central Server, so that tags previously retrieved from the standardised vocabulary are shared among all users. The cached vocabulary on the Central Server makes it very efficient to search for a tag: instead of always searching a large remote vocabulary, previously used tags in the tag cache repository can be searched first.

Figure 12 shows the architecture of collaborative tag management. At each Local Server, there is a tag repository that manages all tags on this Local Server; there is also a remote NCI controlled vocabulary database from which users can dynamically search and retrieve tags. Once a tag is defined on the Local Server, it is automatically cached in the Central Server tag repository. When a user wants to associate a tag with a document, the user can dynamically search for and select the tag through automatic tag lookup, as discussed next.

Figure 12.

Collaborative tag management (see online version for colours)

6.3 Semantic annotation of documents with tags

By taking advantage of Ajax technology, we provide automatic tag lookup when a user wants to add a tag. As the user types a keyword on a web page to search for a tag, an automatic tag lookup is performed against three resources: the local tag repository, the cached/shared tag repository on the Central Server, and the NCI controlled vocabulary. Implemented through Ajax, the lookup is performed in the background, and retrieved tags are displayed in the order of local tags, cached tags, and remote tags. The lookup dynamically loads a dropdown list of tags from which the user can pick (Figure 13). Because asynchronous tag queries are sent dynamically to the tag repositories, users can immediately select a desired tag to label their data, instead of opening multiple browser windows to do separate searches. With this technology, we are able to support the use of tags from a controlled vocabulary or shared repository through a convenient interface.

Figure 13.

An example of automatic tag lookup (see online version for colours)
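The three-source lookup described in this section can be sketched as follows. This is an illustration only: in-memory dictionaries stand in for the local repository, the Central Server cache and the remote vocabulary, `asyncio` stands in for the browser's asynchronous Ajax requests, and all function names are hypothetical. The two tag IDs are the ones quoted in Section 6.1.

```python
import asyncio

async def search(repo: dict, keyword: str) -> list:
    """Return (tag_id, label) pairs whose label contains the keyword."""
    await asyncio.sleep(0)                 # placeholder for network latency
    kw = keyword.lower()
    return [(tid, label) for tid, label in repo.items() if kw in label.lower()]

async def lookup_tags(keyword: str, local: dict, cached: dict, remote: dict) -> list:
    """Query all three sources concurrently; list local, then cached, then remote hits."""
    results = await asyncio.gather(
        search(local, keyword), search(cached, keyword), search(remote, keyword)
    )
    seen, ordered = set(), []
    for source, hits in zip(("local", "cached", "remote"), results):
        for tid, label in hits:
            if tid not in seen:            # show each tag once, at its nearest source
                seen.add(tid)
                ordered.append((source, tid, label))
    return ordered

def select_tag(tag_id: str, label: str, local: dict, cached: dict) -> None:
    """Defining a tag locally also caches it on the Central Server."""
    local[tag_id] = label
    cached[tag_id] = label

local = {}
cached = {"18077": "Stage II Breast Cancer"}
remote = {"18077": "Stage II Breast Cancer", "7768": "Cancer Risk"}
hits = asyncio.run(lookup_tags("cancer", local, cached, remote))
```

Once a user selects a remote tag through `select_tag`, later lookups by any user can be answered from the local or cached repository without contacting the remote vocabulary.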

6.4 Semantically querying and browsing data through semantic tags

Once documents are annotated with semantic tags, users can semantically browse data by clicking on a semantic tag, or use tagged fields as additional constraints when specifying queries. For example, a user might want to search all documents tagged with ‘Stage_II_Breast_Cancer’; typing ‘Breast Cancer’ generates a dropdown list of semantic tags, from which the user can quickly pick the right tag to specify the query. Semantic annotation can also help to classify data. Since semantic tags coming from an ontology are hierarchically organised in the ontology, it is possible to generate an ontology-tree-based view of all documents from the ontology concepts annotated on the documents.
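An ontology-tree-based view of this kind can be sketched by propagating each document to the ancestors of its tags. The parent relation below is a made-up fragment for illustration and is not taken from the NCI Thesaurus; the function names are likewise hypothetical.

```python
# Hypothetical fragment of a concept hierarchy: child -> parent.
PARENT = {
    "Stage II Breast Cancer": "Breast Cancer",
    "Breast Cancer": "Cancer",
}

def ancestors(concept: str) -> list:
    """Return the concept followed by all of its ancestors, nearest first."""
    chain = [concept]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain

def build_tree_view(doc_tags: dict) -> dict:
    """Map every concept to the documents tagged with it or with a descendant."""
    view = {}
    for doc, tags in doc_tags.items():
        for tag in tags:
            for concept in ancestors(tag):
                view.setdefault(concept, set()).add(doc)
    return view

view = build_tree_view({
    "doc1": ["Stage II Breast Cancer", "Cancer Risk"],
    "doc2": ["Breast Cancer"],
})
```

Browsing the ‘Breast Cancer’ node then lists both documents, while the more specific ‘Stage II Breast Cancer’ node lists only `doc1`.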

7 Implementation

7.1 Software

SciPort is built with J2EE and XML, running on Apache Tomcat servers and Oracle Berkeley DB XML database server (open source) or IBM DB2 XML database. The system is OS neutral and has been tested on Windows, Linux, and MacOS. It uses standard protocols, including XML, XSLT, XPath and XQuery, and Web Services. SciPort is a lightweight application and can be easily deployed and customised by users.

SciPort is a rich web-based application, thus it is possible for users to use it at any place and at any time. SciPort supports major web browsers including Internet Explorer, Firefox, Opera and Safari. Taking advantage of Web 2.0 technologies such as Ajax, SciPort provides rich application capabilities with smooth user experience.

A salient characteristic of SciPort is that the system is highly adaptable. Through customisable metadata schemas, hierarchy organisation, and sharing architecture, SciPort can be easily set up for managing biomedical research data and providing a data sharing network.

8 Discussion

The design and development of SciPort has been an iterative process and driven by numerous discussions with biomedical researchers and users. During the process, we have learned many lessons.

8.1 Usability

A critical requirement that biomedical researchers place on software tools is usability. This includes intuitive user interfaces and workflows for data authoring, sharing and querying, as well as ease of system setup. When the initial version of SciPort was released, the pilot users complained about the multiple clicks needed to navigate through multiple web pages, and showed a strong desire for simpler interfaces and faster response. In particular, single-click data publishing is desired; otherwise users gradually become too frustrated to share their data. The web-based user interface therefore evolved from an interface based on static web pages (jumping from one page to another) to an Ajax-based interface, and a Flex-based interface was then developed to provide an even smoother user experience. Our conclusion is that a smooth user experience in data sharing tools could substantially increase researchers’ interest in sharing their data.

8.2 Generalisation and customisation

Although SciPort is currently a software platform and is not tied to specific applications, the initial version of SciPort (Version 1.0) was designed and developed for a single project with static data forms. We soon realised that even for a single project, the schemas were dynamic, and new data forms were occasionally required. Researchers from other projects were also using totally different data forms. In addition, the data are heterogeneous, ranging from structured data to medical images and files. This motivated us to redesign SciPort as a platform that could provide easy and flexible customisation. This is challenging, as schema evolution is very difficult to support in traditional table-based relational database management systems. The emergence of native XML database technologies makes it possible to support customisable schemas, as native XML DBMSs have a significant advantage in supporting schema evolution. Our data models, queries and data management are fully based on XML.

8.3 Integration with other systems

In the past, we also worked on extending SciPort so that data managed in SciPort could be integrated into other infrastructures such as caBIG. We developed a SciPort-NBIA (National Biomedical Imaging Archive; http://ncia.nci.nih.gov/ncia) bridge to support such integration.

9 Related work

Computer supported collaborative work has been increasingly used to support research collaborations. A review of collaborative systems is presented in Bafoutsou and Mentzas (2002), and a taxonomy of collaboratory types is discussed in Bos et al. (2007). SciPort belongs to the Community Data Systems type in this classification. Lee et al. (2009) review collaboratory concepts relevant to collaborative biomedical research and analyse the major challenges. In Myneni and Patel (2010), a collaborative information management system is discussed. A context-based sharing system is proposed in Chin and Lansing (2004) to support data-centric rather than tool-oriented collaboration, and this motivates our metadata-oriented approach to data modelling and sharing in SciPort.

With the increasing collaboration in scientific research, collaborative cyberinfrastructures have been researched and developed. Grid-based systems such as caBIG® (cancer Biomedical Informatics Grid; http://caBIG.nci.nih.gov/) and the Biomedical Informatics Research Network (BIRN) (Birnholtz et al., 2003) provide infrastructures to integrate existing computing and data resources. They rely on top-down common data structures. The iPlant Collaborative (iPC) (Cyberinfrastructure for the Biological Sciences: Plant Science Cyberinfrastructure Collaborative (PSCIC); http://www.nsf.gov/pubs/2006/nsf06594/nsf06594.htm) is a cyberinfrastructure project recently funded by the US NSF. iPlant focuses more on the human side of the infrastructure. myGrid (http://www.mygrid.org.uk/) is a suite of tools for e-Science, with a focus on workflows. A review of cyberinfrastructure systems for the biological sciences is given in Stein (2008). Grid-based systems are used more for sharing computing and storage resources, while P2P is used more for sharing data (Foster and Iamnitchi, 2003). MIRC (http://mirc.rsna.org) is an example of a P2P-based data system for authoring and sharing radiology teaching files. ckan (http://ckan.org) is open source data portal software for publishing and sharing data, with a centralised approach. Recent work on collaborative e-health systems includes Balatsoukas et al. (2012), Couch et al. (2011) and Mehmood et al. (2009).

A publish and subscribe architecture for distributed metadata management is discussed in Keidl et al. (2002), which focuses on synchronisation problems. In Taylor and Ives (2006), a bottom-up approach to collaborative data sharing is proposed, where each group independently manages and extends its data, and the groups compare and reconcile their changes eventually while tolerating disagreement. Our approach lies in between the bottom-up and top-down approaches: each group manages its own data, but as much agreement on schemas as possible is achieved through controlled schema evolution.

In Rader and Wash (2008), influences on tag choices in del.icio.us are analysed. Our approach focuses on semantic tagging from a controlled vocabulary instead of free tags. In Pike and Gahegan (2007), different approaches for representing scientific knowledge are discussed.

Extensive work has been done in data integration and schema integration (Halevy et al., 2006; Doan and Halevy, 2005). In Beynon-Davies et al. (1997), a collaborative schema integration system is discussed for database design. Our system takes a proactive approach where schema and data consistency is enforced during data authoring and schema authoring.

10 Conclusion

Contemporary biomedical research is moving towards multi-disciplinary, multi-institutional collaboration. This leads to a strong demand for tools and systems to share biomedical data, which drives the development of SciPort, a web-based data sharing platform for collaborative biomedical research. To support meaningful abstraction of data, SciPort provides a generic metadata model for users to conveniently define their own metadata schemas. SciPort provides an innovative lightweight hybrid data sharing architecture which combines the benefits of the centralised approach and the distributed approach. Investigators are able to flexibly manage their data and schema sharing, and quickly build data sharing networks. To enable data consistency for data sharing, SciPort provides comprehensive collaborative distributed schema management. Through semantic tagging, SciPort further enhances semantic interoperability of shared data from distributed sites.

Acknowledgments

The project is funded in part by the National Institutes of Health, under Grant No. 1U54CA105480-05.

Biographies

Fusheng Wang is an Assistant Professor at Department of Biomedical Informatics, and a senior research scientist at Center for Comprehensive Informatics, Emory University. He is also an adjunct faculty member of Department of Mathematics and Computer Science at Emory University. He received his PhD in Computer Science from University of California, Los Angeles in 2004. Prior to joining Emory University, he was a research scientist at Siemens Corporate Research from 2004 to 2009. His research interests include big data management and analytics, medical imaging informatics, spatial and temporal data management, heterogeneous data management and integration, clinical natural language processing, and biomedical data standardisation.

Cristobal Vergara-Niedermayr received his BS and MS degrees in Computer Science from the Freie Universität Berlin, Germany. He has worked at Siemens Corporate Research, Center for Comprehensive Informatics at Emory University, University of Medicine and Dentistry of New Jersey, and recently at Oracle.

Peiya Liu was a senior research scientist at Siemens Corporate Research. He has many years of experience in applications of multimedia documents in industrial environments. His primary interests are in the areas of multimedia document authoring/processing/management, multimedia tools, innovative industrial applications and standards. He received his PhD degree in Computer Science from University of Texas at Austin.

Contributor Information

Fusheng Wang, Department of Biomedical Informatics, Emory University, Atlanta, Georgia, USA, fusheng.wang@emory.edu.

Cristobal Vergara-Niedermayr, Oracle, California, USA, cristobal.vergara.niedermayr@gmail.com.

Peiya Liu, Department of Integrated Data Systems, Siemens Corporate Research, Princeton, New Jersey, USA, pliu412@gmail.com.

References

- Bafoutsou G, Mentzas G. Review and functional classification of collaborative systems. International Journal of Information Management. 2002;Vol. 22(No. 4):281–305.

- Balatsoukas P, Williams R, Carruthers E, Ainsworth J, Buchan I. The use of metadata objects in the analysis and representation of clinical knowledge. Metadata and Semantics Research. 2012;Vol. 343:107–112.

- Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;Vol. 289(No. 4):454–465. doi: 10.1001/jama.289.4.454.

- Beynon-Davies P, Bonde L, McPhee D, Jones CB. A collaborative schema integration system. Computer Supported Cooperative Work. 1997;Vol. 6(No. 1):1–18.

- Birnholtz JP, Bietz MJ. Data at work: supporting sharing in science and engineering. GROUP. 2003:339–348.

- Bos N, Zimmerman A, Olson J, Yew J, Yerkie J, Dahl E. From shared databases to communities of practice: a taxonomy of collaboratories. Journal of Computer-Mediated Communication. 2007;Vol. 12(No. 2):652–672.

- Chin G, Jr, Lansing CS. Capturing and supporting contexts for scientific data sharing via the biological sciences collaboratory. CSCW. 2004. ISBN 1-58113-810-5.

- Couch PA, Ainsworth J, Buchan I. Sharable simulations of public health for evidence based policy making. Computer-Based Medical Systems. 2011:1–6.

- Doan A, Halevy AY. Semantic integration research in the database community: a brief survey. AI Magazine. 2005;Vol. 26(No. 1):83–94.

- Foster I, Iamnitchi A. On death, taxes, and the convergence of peer-to-peer and grid computing. IPTPS’03. 2003:118–128.

- Halevy A, Rajaraman A, Ordille J. Data integration: the teenage years. VLDB. 2006. Available online at: http://portal.acm.org/citation.cfm?id=1182635.1164130.

- Keidl M, Kreutz A, Kemper A, Kossmann D. A publish and subscribe architecture for distributed metadata management; 18th International Conference on Data Engineering; 2002. pp. 309–320.

- Lee ES, McDonald DW, Anderson N, Tarczy-Hornoch P. Incorporating collaboratory concepts into informatics in support of translational interdisciplinary biomedical research. International Journal of Medical Informatics. 2009;Vol. 78(No. 1):10–21. doi: 10.1016/j.ijmedinf.2008.06.011.

- Marlow C, Naaman M, Boyd D, Davis M. Position paper, tagging, taxonomy, Flickr, article, ToRead. Collaborative Web Tagging Workshop; Edinburgh, Scotland; 2006.

- Mehmood R, Cerqueira E, Piesiewicz R, Chlamtac I. e-Labs and work objects: towards digital health economies. Communications Infrastructure. Systems and Applications in Europe. 2009;Vol. 16:205–216.

- Myneni S, Patel VL. Organization of biomedical data for collaborative scientific research: a research information management system. International Journal of Information Management. 2010;Vol. 30(No. 3):256–264. doi: 10.1016/j.ijinfomgt.2009.09.005.

- Pike W, Gahegan M. Beyond ontologies: toward situated representations of scientific knowledge. International Journal of Human-Computer Studies. 2007;Vol. 65(No. 7):674–688.

- Piwowar H, Becich M, Bilofsky H, Crowley R. Towards a data sharing culture: recommendations for leadership from academic health centers. PLoS Medicine. 2008;Vol. 5 doi: 10.1371/journal.pmed.0050183.

- Rader EJ, Wash R. Influences on tag choices in del.icio.us; Proceedings of the ACM Conference on Computer Supported Cooperative Work (CSCW); 2008. pp. 239–248.

- Stein LD. Towards a cyberinfrastructure for the biological sciences: progress, visions and challenges. Nature Reviews Genetics. 2008;Vol. 9(No. 9):678–688. doi: 10.1038/nrg2414.

- Taylor NE, Ives ZG. Reconciling while tolerating disagreement in collaborative data sharing. SIGMOD’2006; 2006 Jun 27–29.

- Wang F, Vergara-Niedermayr C. Collaboratively sharing scientific data. CollaborateCom. 2008:805–823.

- Wang F, Hussels P, Liu P. Securely and flexibly sharing a biomedical data management system. Proceedings of SPIE Medical Imaging 2009; Orlando, Florida, USA; 2009 Feb. pp. 7–12.

- caBIG. cancer Biomedical Informatics Grid. Available online at: http://caBIG.nci.nih.gov/

- ckan. Available online at: http://ckan.org.

- Cyberinfrastructure for the Biological Sciences: Plant Science Cyberinfrastructure Collaborative (PSCIC) Available online at: http://www.nsf.gov/pubs/2006/nsf06594/nsf06594.htm.

- Data Sharing & Intellectual Capital (DSIC) Workspace. Available online at: https://cabig.nci.nih.gov/workinggroups/DSICSLWG.

- Digital Imaging and Communications in Medicine (DICOM). Available online at: http://medical.nema.org/

- MIRC. Available online at: http://mirc.rsna.org.

- myGrid. Available online at: http://www.mygrid.org.uk/

- National Biomedical Imaging Archive. Available online at: http://ncia.nci.nih.gov/ncia.

- NCI Thesaurus. Available online at: http://ncit.nci.nih.gov.

- Network for Translational Research (NTR): Optical Imaging in Multimodality Platforms. Available online at: http://imaging.cancer.gov/programsandresources/specializedinitiatives/ntroi.

- NIH Statement on Sharing Scientific Research Data. Available online at: http://grants.nih.gov/grants/policy/datasharing/

- Policy for sharing of data obtained in NIH supported or conducted genome-wide association studies. Available online at: http://grants.nih.gov/grants/guide/notice-files/not-od-07-088.html.

- The Cancer Genome Atlas (TCGA) Data Portal. Available online at: http://cancergenome.nih.gov/dataportal.

- W3C XML Query (XQuery). Available online at: http://www.w3.org/XML/Query.