Abstract

A hallmark of working memory is the ability to maintain graded representations of both the spatial location and amplitude of a memorized stimulus. Previous work has identified a neural correlate of spatial working memory in the persistent maintenance of spatially specific patterns of neural activity. How such activity is maintained by neocortical circuits remains unknown. Traditional models of working memory maintain analog representations of either the spatial location or the amplitude of a stimulus, but not both. Furthermore, although most previous models require local excitation and lateral inhibition to maintain spatially localized persistent activity stably, the substrate for lateral inhibitory feedback pathways is unclear. Here, we suggest an alternative model for spatial working memory that is capable of maintaining analog representations of both the spatial location and amplitude of a stimulus, and that does not rely on long-range feedback inhibition. The model consists of a functionally columnar network of recurrently connected excitatory and inhibitory neural populations. When excitation and inhibition are balanced in strength but offset in time, drifts in activity trigger spatially specific negative feedback that corrects memory decay. The resulting networks can temporally integrate inputs at any spatial location, are robust against many commonly considered perturbations in network parameters, and, when implemented in a spiking model, generate irregular neural firing characteristic of that observed experimentally during persistent activity. This work suggests balanced excitatory–inhibitory memory circuits implementing corrective negative feedback as a substrate for spatial working memory.

Keywords: balanced networks, computational model, decision making, derivative feedback, integration, working memory

Introduction

Working memory refers to an ability to hold information “on-line” in the absence of sensory inputs. In spatial working memory, the item held in memory is the spatial location of an object that must be recalled after a delay period of up to several seconds. Electrophysiological recordings have revealed neurons in the parietal and frontal cortices that encode the remembered location of a cue through spatially tuned patterns of persistent neural firing (Funahashi et al., 1989; Constantinidis and Steinmetz, 1996; Chafee and Goldman-Rakic, 1998), but the circuit mechanisms maintaining this sustained neural activity remain poorly understood.

Computational modeling has been useful in suggesting possible mechanisms for the generation and storage of spatially specific patterns of persistent neural activity. The vast majority of models consist of networks of excitatory and inhibitory neuronal populations connected by short-range excitation and longer range inhibition (for review, see Ermentrout, 1998; Compte, 2006). Local recurrent excitation between neurons having similar preferred features provides positive feedback that supports long-lasting reverberation of activity, while long-range inhibition stabilizes and shapes the spatially localized patterns of activity. However, although long-range inhibition could be achieved through disynaptic pathways (Melchitzky et al., 2001) or large basket cells (Markram et al., 2004), the neural substrate for widespread inhibition in memory circuits remains unclear because inhibitory projections are typically shorter ranged than excitatory projections (Braitenberg and Schüz, 1998; Douglas and Martin, 2004).

Recent studies of frontal cortical microcircuitry suggest that an alternative mechanism, based on negative-derivative feedback rather than positive feedback, may play a critical part in maintaining persistent neural activity. The key experimental observations motivating this hypothesis are that, first, inhibitory and excitatory inputs have been suggested to be balanced in strength in frontal cortical neurons (Shu et al., 2003; Haider et al., 2006) or more generally positively covary in other cortical neurons (Rudolph et al., 2007; Haider and McCormick, 2009), and, second, the kinetics of excitatory-to-excitatory synaptic connections are slower than those of excitatory-to-inhibitory connections (Wang et al., 2008; Wang and Gao, 2009; Rotaru et al., 2011). Recent modeling work (Lim and Goldman, 2013) has shown how these two conditions provide a mechanism for maintaining persistent activity through negative-derivative feedback that opposes drifts in firing rate: changes in firing rate trigger fast negative feedback that opposes the drift, followed by slower excitatory feedback that rebalances the net synaptic input. Here, we show how such negative-derivative feedback can operate in a spatially specific manner to maintain spatial working memory. Unlike traditional spatial working memory networks, which have stereotyped spatial profiles of activity and thus lose information about stimulus amplitude, negative-derivative feedback models can temporally integrate their inputs and store analog values of stimulus amplitudes as well as spatial locations. Furthermore, by examining the relationship between the structure of the synaptic connectivity and the spatial profiles of persistent activity, we show that derivative-feedback memory networks do not require widespread lateral inhibition. Finally, we show that the balance of inhibition and excitation that underlies persistent activity is robustly maintained across a range of common perturbations and leads to irregular neuronal firing similar to that observed experimentally (Compte et al., 2003).

Materials and Methods

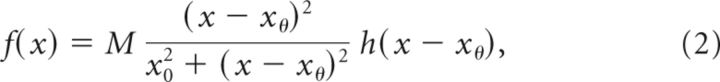

Here we describe how our firing rate and spiking networks are structured to maintain spatially tuned patterns of persistent firing through a negative-derivative feedback mechanism. Consistent with experimental observations in prefrontal cortex (Goldman-Rakic, 1995), the model networks are organized in a functionally columnar architecture of excitatory and inhibitory neurons (Fig. 1) with each column defined by having a similar preferred spatial feature of the stimulus. Following previous work (Ermentrout, 1998; Wang, 2001; Compte, 2006), we assume that these preferred spatial features are uniformly distributed along a ring and can be characterized by an angular variable θ. Below, we first describe the network structure and equations governing the dynamics of both the firing rate and spiking models. Then, we analytically derive conditions for producing spatially localized persistent activity in networks with either linear or nonlinear dynamics, and with or without translation-invariant symmetry.

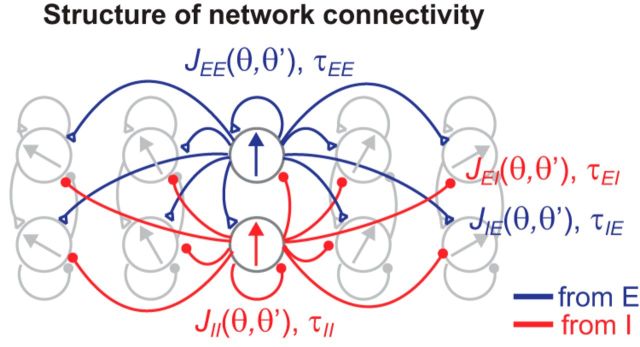

Figure 1.

Structure of network model for spatial working memory. We consider a columnar architecture of excitatory and inhibitory neurons such that neurons in the same column have similar preferred feature θ. The connectivity strength J is dependent only on the preferred features θ and θ′ of the presynaptic and postsynaptic neurons and τ represents the decay time of synaptic currents at the shown connections. Blue and red curves represent excitatory and inhibitory connections, respectively.

Firing rate model of spatial memory network.

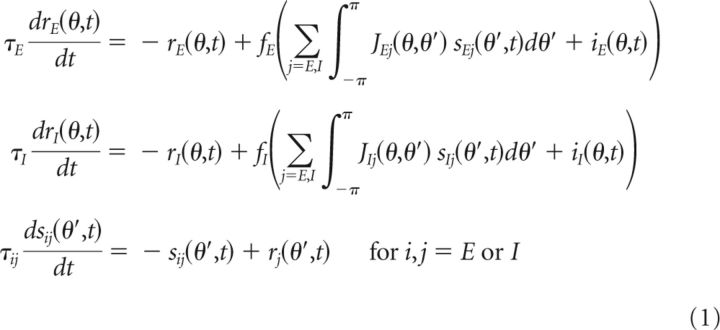

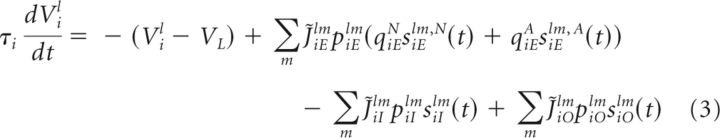

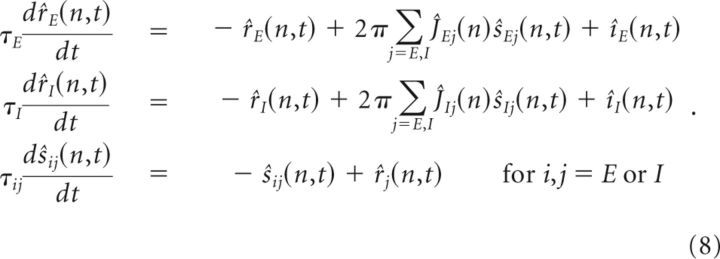

In the firing rate models, the activities of, and synaptic interactions between, the neurons are parameterized by their preferred spatial feature θ, which ranges from –π to π. The dynamics of the firing rates and synaptic state variables are governed by the equations:

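$$
\begin{aligned}
\tau_i \frac{\partial r_i(\theta,t)}{\partial t} &= -r_i(\theta,t) + f_i\big(x_i(\theta,t)\big), \\
x_i(\theta,t) &= \int_{-\pi}^{\pi} \big[ J_{iE}(\theta,\theta')\, s_{iE}(\theta',t) - J_{iI}(\theta,\theta')\, s_{iI}(\theta',t) \big]\, d\theta' + i_i(\theta,t), \\
\tau_{ij} \frac{\partial s_{ij}(\theta,t)}{\partial t} &= -s_{ij}(\theta,t) + r_j(\theta,t), \qquad i,j \in \{E, I\},
\end{aligned}
\tag{1}
$$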

where ri(θ,t) represents the mean firing rate of the excitatory (E) or inhibitory (I) population i with preferred feature θ. sij(θ′,t) denotes the synaptic state variable for the connections from population j with preferred feature θ′ onto population i for i,j = E or I, and approaches the presynaptic firing rate rj(θ′,t) with time constant τij.

The mean firing rate ri(θ,t) approaches fi(xi(θ,t)) with intrinsic time constant τi, where fi(x) represents the steady-state neuronal response to input current x. We consider two types of neuronal response functions: linear f(x) = x (Figs. 4, 5A–D, top, 6–10) and a nonlinear neuronal response function (Fig. 5A–D, bottom) having the Naka–Rushton (Wilson, 1999) form

|

where M represents the maximal neuronal response, xθ represents the input threshold, x0 defines the value of (x − xθ) at which f(x) reaches its half-maximal value, and h(x) denotes the step function h(x) = 1 for x ≥ 0 and h(x) = 0 for x < 0.
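As a minimal sketch of the nonlinear response function described above, the following Python snippet implements a Naka–Rushton-type saturating function with an input threshold. The exponent `n = 2` is an assumption (a common choice for this functional form), the function and argument names are illustrative, and the default values are the ones quoted for the nonlinear networks in this section (M = 100, x0 = 40, xθ = 10).

```python
def naka_rushton(x, M=100.0, x0=40.0, x_theta=10.0, n=2):
    """Saturating response with threshold; the exponent n is an assumed value."""
    d = x - x_theta
    if d < 0:
        # Step function h: no response below the input threshold.
        return 0.0
    return M * d**n / (x0**n + d**n)


# The response is half-maximal when (x - x_theta) equals x0, for any n.
half_max_input = 10.0 + 40.0
print(naka_rushton(half_max_input))
```

Whatever exponent is used, the half-maximal point stays at x − xθ = x0, which matches the role of x0 described in the text.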

Figure 4.

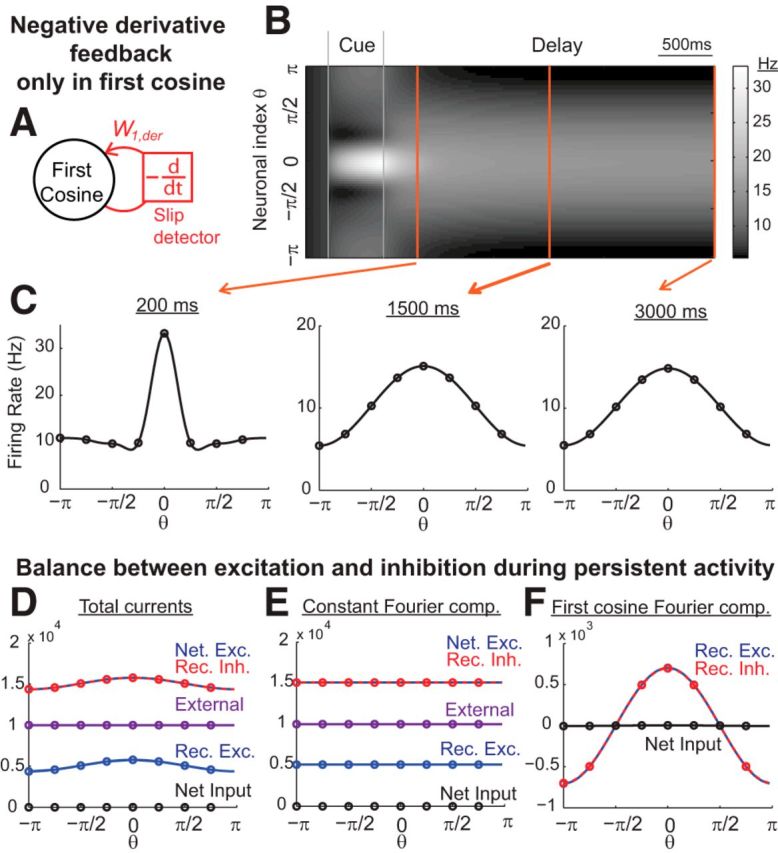

Temporal evolution of activity and balance between excitation and inhibition during memory performance. A, Example network with negative-derivative feedback only in the first cosine component. B, Grayscale-coded spatiotemporal activity pattern. The x-axis represents time and the y-axis represents the neuronal index parameterized by its preferred cue direction θ. C, Spatial profiles of firing activity at different times corresponding to the orange vertical slices in B. D–F, Close balance between excitation and inhibition during the delay period. The shown neuron receives strong net excitation and inhibition that are balanced in strength. The first cosine Fourier component, but not the constant Fourier component, is maintained by negative-derivative feedback, as indicated in F by the balance of recurrent excitation with recurrent inhibition within this first cosine component. Values in D–F are illustrated for the inputs onto an excitatory neuron at 3 s into the delay period.

Figure 5.

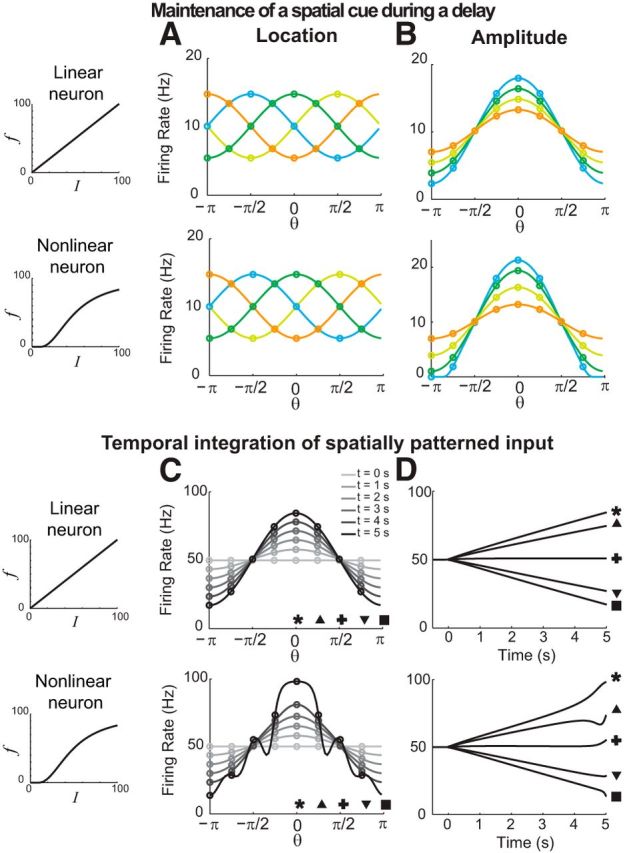

Location codes, amplitude codes, and temporal integration of negative-derivative feedback networks with linear (top) and nonlinear (bottom) firing rate (f) versus input current (I) relationships. A, Maintenance during the delay period of spatial patterns of activity centered at different locations in the network, corresponding to different spatial cues (shown as different colors). B, Maintenance of spatial patterns of activity of different amplitudes during a delay period for inputs at the same spatial location but with different strengths. Input amplitudes for the linear case were smaller than those for the nonlinear case to avoid the negative firing rates that are permitted in linear networks. C, D, Temporal integration of spatially structured input. After time 0, a spatially structured input is continuously present and the amplitude of the spatial pattern of activity linearly increases in time (C), resulting in ramp-like changes (D). Patterns in A and B are shown at 3 s into the delay period. In C and D (bottom), the ripply activity pattern at t = 5 s reflects the effect of reaching the extreme, saturating limit of the neuronal response function, when the neuron with preferred location θ = 0 has approached its limiting firing rate of 100 Hz and becomes insensitive to additional input.

Figure 6.

Networks receiving negative-derivative feedback in multiple Fourier components and maintenance of multiple bumps of activity. A, B, Example networks with negative-derivative feedback in multiple Fourier components (A). The synaptic connectivity profiles for this example were Gaussian shaped (B). C, D, The presence of negative-derivative feedback in higher order Fourier components permits the maintenance of narrowly tuned patterns of activity (C) and multiple bumps of activity (D). Data in C and D are shown at 3 s into the delay period.

Figure 7.

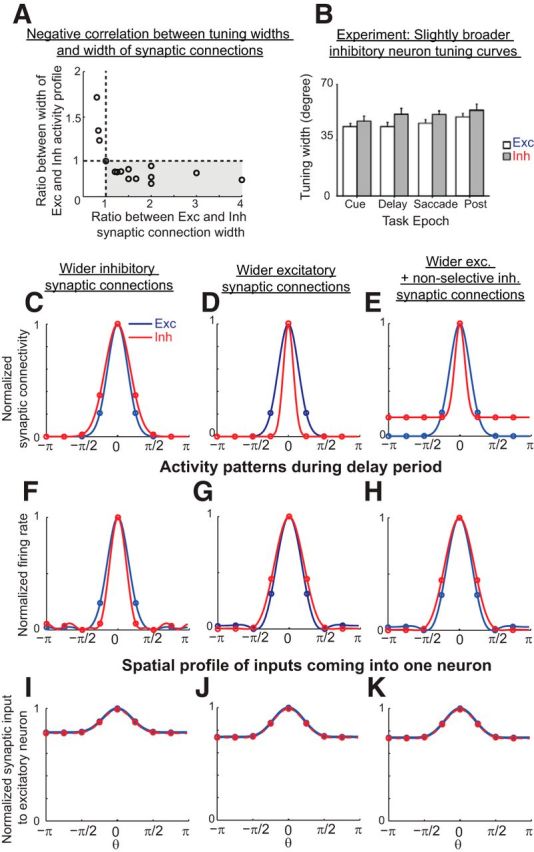

Maintenance of spatially localized, persistent activity with short-range inhibition and long-range excitation and relation between the profile of synaptic connectivity and the tuning widths of activity. A, Negative correlation between tuning widths and width of synaptic connections in negative-derivative feedback networks. The spatial profiles of the excitatory and inhibitory connections in this example were Gaussian, and the widths of both the excitatory and inhibitory connections were varied. To obtain the tuning widths of the activity of the excitatory and inhibitory populations, the activity was fit by the sum of constant and Gaussian functions and the tuning width was defined as the width of the Gaussian function during the delay period as in Constantinidis and Goldman-Rakic, (2002). B, Experimentally observed broader tuning of inhibitory neurons than of excitatory neurons during the different task epochs of a delayed saccade task. (Adapted from Constantinidis and Goldman-Rakic, 2002). C–E, Spatial profile of excitatory (blue; same for the E-to-E and E-to-I connections) and inhibitory (red; same for the I-to-E and I-to-I connections) connectivity with broader inhibitory connections (C), broader excitatory connections (D), or broader tuned component of excitatory than inhibitory connections but with inhibitory connections additionally having a nonselective (constant) component (E). Spatial profiles of synaptic connectivity are normalized by their maximum height. F–H, Activity patterns of excitatory (blue) and inhibitory (red) populations in response to a stimulus centered at θ = 0, illustrated 3 s into the delay period. Firing rates were normalized for comparison by subtracting off the minimum activity and scaling the resulting firing rates to unit amplitude. A broader tuned component of the inhibitory connections (C) results in a narrower profile of the inhibitory population activity (F). 
A broader tuned component of excitatory connections, with (E) or without (D) an additional nonselective component of inhibitory projections, results in a narrower profile of sustained excitatory activity (G, H). I–K, Spatial profiles of balanced excitatory (blue) and inhibitory (red) synaptic inputs coming into an excitatory neuron. Inputs are normalized by the maximum of the excitatory inputs.

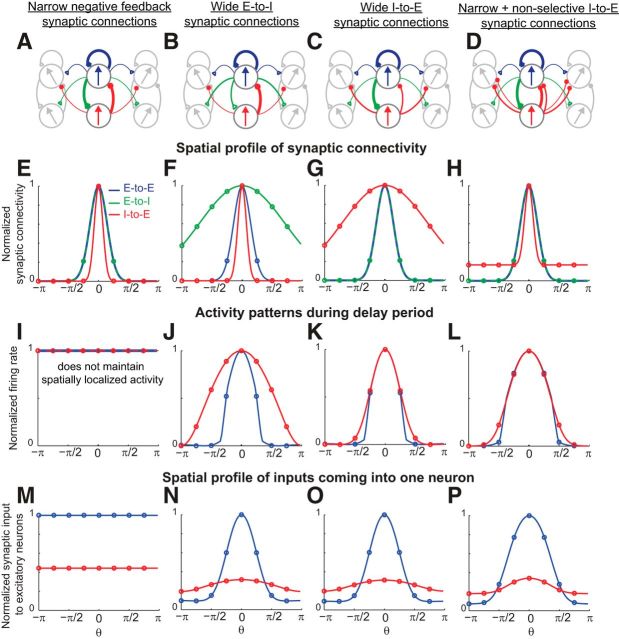

Figure 8.

Comparison of activity patterns and synaptic inputs with those of spatial memory networks without negative-derivative feedback, that is, networks that have equal timescales for the positive and negative feedback pathways. A–H, Network structures and spatial profile of synaptic connectivity. For simplicity, only the E-to-E (blue), E-to-I (green), and I-to-E (red) connections are included, without the I-to-I connection, and the spatial profile of synaptic connectivity is normalized by its maximum height (E–H). I–L, Activity patterns of excitatory (blue) and inhibitory (red) populations in response to a stimulus centered at θ = 0 (3 s into the delay period). When both the E-to-I and I-to-E connections are narrow, the network cannot sustain spatially localized activity, resulting in spatially uniform activity. Firing rates were normalized for comparison by subtracting off the minimum activity and scaling the resulting firing rates to unit amplitude except for the case showing spatially uniform patterns of activity (I). With broader negative feedback (B–D, F–H), spatially localized activity can be stabilized, with broader tuning of the inhibitory population. M–P, Spatial profiles of balanced excitatory (blue) and inhibitory (red) synaptic inputs coming into an excitatory neuron. Inputs are normalized by the maximum of the excitatory inputs. The networks receive broader inhibitory than excitatory inputs for spatially localized activity, resulting in an overall Mexican-hat (i.e., center-surround) type input (N–P).

Figure 9.

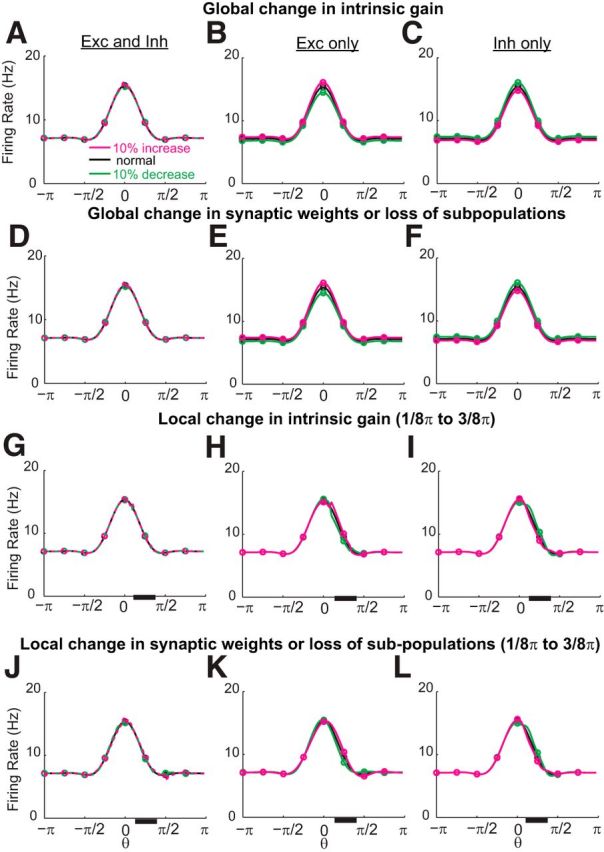

Robustness to common perturbations in memory networks with negative-derivative feedback. The networks can maintain spatially localized, persistent activity robustly against either global (A–F) or local (G–L) perturbations that preserve the balance between excitation and inhibition such as changes in intrinsic gain (A–C, G–I) or changes in synaptic weights or loss of a fraction of the neurons in a given subpopulation (D–F, J–L). The local perturbations were applied to neurons whose preferred directions lie between π/8 and 3π/8, represented by the black bar along the x-axis. Activity profiles are shown for 3 s into the delay period.

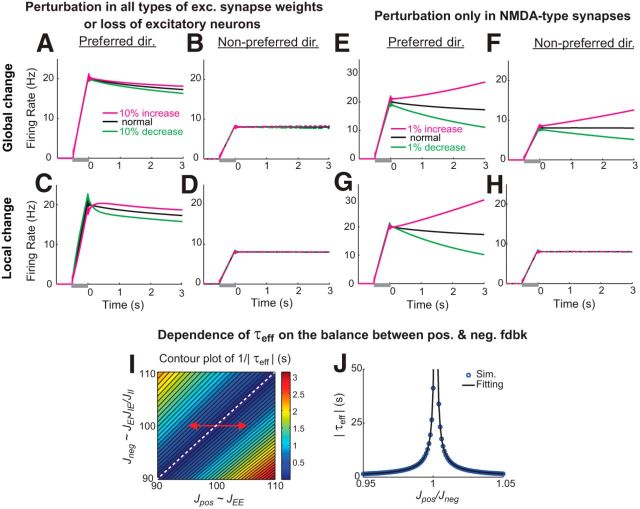

Figure 10.

Memory performance following perturbations under which the E-I balance is maintained (A–D) or disrupted (E–H). A–D, Time course of activity under global (A, B) or local (C, D) perturbations in all excitatory synaptic weights, or equivalently under loss of a corresponding fraction of the excitatory subpopulation. Since loss of a fraction of the cells or of all excitatory synapses affects both the positive and negative feedback pathways equally, the balance between positive and negative feedback is maintained and persistent firing is minimally affected. The time course of activity is shown for an example neuron receiving an external input stimulus centered on its preferred direction and for an example neuron receiving external input in its nonpreferred directions. E–H, Disruption of persistent firing under global (E, F) or local (G, H) perturbations in the E-to-E connections, mimicking perturbations in slow NR2B subunit-containing NMDA receptors located predominantly in this connection (Wang et al., 2013). Global perturbations led to similar changes in activity throughout the entire network (E, F), while local perturbations disrupted activity most severely in the perturbed neurons (G, H). I, J, Time constant of activity decay τeff in a neuron receiving an external input stimulus centered on its preferred direction as a function of the strengths of positive and negative feedback pathways. τeff is estimated by fitting the time course of delay activity between 500 and 2000 ms with an exponential function. As the overall strengths Jpos and Jneg of the positive and negative feedback pathways change, τeff decays approximately in inverse proportion to 1 − Jpos/Jneg (linear change in 1/|τeff| in I). Local perturbations (data not shown) provided similar results. In J, Jneg was fixed and only Jpos changed, corresponding to the red arrow in I. The black fit curve is given by 0.075 sec/|1 − Jpos/Jneg|∼(τpos − τneg)/(1 − Jpos/Jneg).

The input xi(θ,t) to population i with the preferred feature θ is a sum of the recurrent synaptic currents Jij(θ,θ′) sij(θ′,t) from population j with the preferred feature θ′ and the external current ii(θ,t) (not to be confused with the subscript i). Jij(θ,θ′) represents the synaptic connectivity strength and, except for the nontranslationally invariant model described in the final section of the Materials and Methods, we assume that it depends only on the distance between θ and θ′ and can be rewritten as Jij(θ − θ′). In Figures 6–10, we consider networks with Gaussian-shaped profiles of synaptic connectivity Jij(θ − θ′) = Jij exp[−(θ − θ′)²/σij²], where σij here and below denotes √2 times the standard deviation of the Gaussian. In Figures 4 and 5, in which only the first cosine Fourier component is tuned to provide negative-derivative feedback, we consider networks that have a (different) Gaussian-shaped profile, plus additional constant and cosine components: Jij(θ − θ′) = Jij,const + Jij,cos cos(θ − θ′) + Jij,gaus exp[−(θ − θ′)²/σij²].
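To make the Gaussian connectivity profile and its constant and cosine content concrete, here is a small Python sketch. It evaluates the profile J exp[−Δθ²/σ²] and estimates numerically how much constant and first-cosine content a unit-height Gaussian contributes. The decomposition convention (profile = c0 + c1 cos θ + ...), the function names, and the use of a midpoint rule are assumptions made for illustration, and the resulting numbers are not claimed to equal the factors a0 and a1 used in the paper.

```python
import math


def gaussian_profile(dtheta, J=1.0, sigma=0.2 * math.pi):
    """J * exp(-dtheta^2 / sigma^2); sigma is sqrt(2) times the standard deviation."""
    return J * math.exp(-(dtheta ** 2) / sigma ** 2)


def cosine_component(profile, n, num=4000):
    """Amplitude of cos(n*theta) in profile(theta) on [-pi, pi), by the midpoint rule.

    Assumed convention: profile = c0 + c1*cos(theta) + c2*cos(2*theta) + ...
    """
    h = 2.0 * math.pi / num
    total = 0.0
    for k in range(num):
        theta = -math.pi + (k + 0.5) * h
        total += profile(theta) * math.cos(n * theta)
    norm = 2.0 * math.pi if n == 0 else math.pi
    return total * h / norm


c0 = cosine_component(gaussian_profile, 0)  # constant content of the Gaussian
c1 = cosine_component(gaussian_profile, 1)  # first-cosine content of the Gaussian
print(c0, c1)
```

Subtracting such contributions from target constant and cosine amplitudes is one way to tune only selected Fourier components of a mixed profile.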

We assume that the external input ii(θ,t) is the sum of constant background input ii,c and time-varying input, where the time-varying component can be expressed separably as the product of a spatial component ii,s(θ) and a temporal component ii,t(t), so that ii(θ,t) = ii,c + ii,s(θ) ii,t(t). The temporal component ii,t(t) represents an external pulse of input that has undergone smoothing before its arrival at the memory network, and is modeled as a pulse of duration twindow = 500 ms that is exponentially filtered with time constant τext = 100 ms. The spatial component ii,s(θ) is a Gaussian function centered at θ0. For the unimodal activity described in most of the paper, ii,s(θ) = ii,s,0 + ii,s,1 exp[−(θ − θ0)²/σiO²]. For the multi-modal activity in Figure 6D, it is the sum of Gaussian functions ii,s(θ) = ii,s,0 + ∑ₖ₌₁³ ii,s,1ᵏ exp[−(θ − θ0ᵏ)²/(σiOᵏ)²], where the superscript k denotes the Gaussian component and is not an exponent. For the temporal integration of spatially localized input in Figure 5, ii,s(θ) is given by ii,s(θ) = ii,s,0 + ii,s,1 cos(θ − θ0).
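The separable external input described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code: the pulse has unit amplitude and starts at t = 0, the exponential filter is a normalized first-order low-pass filter (so the closed-form response can be used), and all function and argument names are hypothetical.

```python
import math


def filtered_pulse(t_ms, t_window=500.0, tau_ext=100.0):
    """Unit-amplitude pulse on [0, t_window] passed through a first-order exponential filter."""
    if t_ms <= 0.0:
        return 0.0
    if t_ms <= t_window:
        return 1.0 - math.exp(-t_ms / tau_ext)
    peak = 1.0 - math.exp(-t_window / tau_ext)
    return peak * math.exp(-(t_ms - t_window) / tau_ext)


def external_input(theta, t_ms, i_c, i_s0, i_s1, theta0, sigma_o):
    """Background input plus (constant + Gaussian spatial profile) times the filtered pulse."""
    spatial = i_s0 + i_s1 * math.exp(-((theta - theta0) ** 2) / sigma_o ** 2)
    return i_c + spatial * filtered_pulse(t_ms)


# Input to the excitatory column at theta = 0 at the end of the 500 ms pulse,
# using excitatory parameter values of the kind listed for Figure 4.
print(external_input(0.0, 500.0, 10000.0, 500.0, 300.0, 0.0, 0.25 * math.pi))
```

After the pulse ends, the transient part of the input decays with time constant τext, so only the constant background remains during most of the delay period.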

Throughout the paper except in Figure 8, the intrinsic time constants of excitatory and inhibitory neurons, τE and τI, are 20 and 10 ms, respectively (McCormick et al., 1985). The time constants of GABAA-type inhibitory synapses, τEI and τII, are each 10 ms (Salin and Prince, 1996; Xiang et al., 1998). Based upon experimental measurements of excitatory synaptic currents in prefrontal cortex (Rotaru et al., 2011), the time constants of excitatory synaptic currents, τEE and τIE, were set to 100 and 25 ms, respectively. Note that these time constants reflect the kinetics of postsynaptic potentials triggered by activation of NMDA- and AMPA-type receptors, but likely include the effects of additional intrinsic ionic conductances since these experiments were performed without blocking intrinsic ionic currents (Rotaru et al., 2011).

For the nonlinear function of Naka–Rushton form in Equation 2, the maximal response M = 100, the half-activation parameter x0 = 40, and the input threshold xθ = 10.

The parameters for the spatial components of the synaptic connectivity and external input were assigned as follows. In Figures 4 and 5, the parameters for the Gaussian component of the connectivity are JEE,gaus = 50/π, JIE,gaus = JEI,gaus = JII,gaus = 100/π, and σEE = σIE = σEI = σII = 0.2π. The parameters for the amplitudes of the constant and cosine terms of the connectivity were defined as JEE,const = 250/π − JEE,gaus a0, JIE,const = JEI,const = JII,const = 300/π − JIE,gaus a0, JEE,cos = 150/π − JEE,gaus a1, JIE,cos = 300/π − JIE,gaus a1, JEI,cos = 100/π − JEI,gaus a1, and JII,cos = 200/π − JII,gaus a1, where a0 and a1 are multiplicative factors deriving from the constant and first cosine components of the Gaussian portion of the connectivity. With these definitions, the overall first cosine component of the connectivity satisfied the balance condition of Equation 9 below. The external input parameters are σEO = 0.25π, iEc = 10,000, iIc = 9000, iE0 = 500, iI0 = 0, and iI1 = 0 in Figures 4 and 5A,B; iE1 = 300 in Figures 4 and 5A, varies between 200 and 500 in Figure 5B, top, and varies between 200 and 800 in Figure 5B, bottom. In Figure 5C,D, iEc = 5000, iIc = 0, iI0 = iI1 = 0, and iE0 = iE1 = 80.

The parameters in Figures 6–9 are the following: JEE,1 = 100, JIE,1 = 200, JEI,1 = 100, JII,1 = 200, σEE = σIE = 0.1π, σEI = σII = 0.2π, σEO = 0.4π, iEc = iIc = 0, iE0 = 100, iI0 = 0, iE1 = 135, and iI1 = 0, except in Figure 6D, where iE0 = 100, iI0 = 0, iI1 = 0, iE,1¹ = 150, iE,1² = iE,1³ = 100, θ0¹ = 0, θ0²,³ = ±2π/3, and σEO¹,²,³ = π/6.

In the spatial working memory networks without negative-derivative feedback (Fig. 8), τEE and τIE equal 100 ms, and the remaining time constants are the same as the corresponding ones for the negative-derivative feedback networks. The neuronal response (input current–output firing rate) functions in this figure were chosen to be linear for the inhibitory neurons and, for the excitatory neurons, a piecewise linear function given by f(x) = 1.4(x − 1) + 3.5 for x < 1, f(x) = 14(x − 1) + 3.5 for 1 ≤ x < 2, and f(x) = 7(x − 2) + 17.5 for 2 ≤ x. The spatial component of the synaptic connectivity is a Gaussian function Jij(θ − θ′) = Jij exp[−(θ − θ′)²/σij²] with no I-to-I connection (and, for Fig. 8D,H,L,P only, with the addition of a constant function). The corresponding parameters are as follows: JEE,gaus = JIE,gaus = 0.5/π, JEI,gaus = 2.5/π, σEE = σIE = 0.2π, σEI = 0.1π for Figure 8A, E, I, and M; JEE,gaus = 0.5/π, JIE,gaus = 1/π, JEI,gaus = 0.5/π, σEE = 0.2π, σIE = π, σEI = 0.1π for Figure 8B, F, J, and N; JEE,gaus = JIE,gaus = JEI,gaus = 0.5/π, σEE = σIE = 0.2π, σEI = π for Figure 8C, G, K, and O; and JEE,gaus = JEI,gaus = 0.5/π, JIE,gaus = 1/π, σEE = σIE = 0.2π, σEI = 0.1π, and with the addition of a constant value 0.1/π to the I-to-E connection for Figure 8D, H, L, and P. The spatial profile of the transient external input is the same for all networks and is given by ii,s(θ) = 0.5 + 0.5 cos(θ).
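The piecewise linear response function of the excitatory neurons in these comparison networks can be written directly from the three branches given above. The function name is illustrative; the sketch applies no clipping at zero, since the text does not mention any.

```python
def piecewise_linear_rate(x):
    """Piecewise linear input-output function with three slopes (1.4, 14, and 7)."""
    if x < 1.0:
        return 1.4 * (x - 1.0) + 3.5
    if x < 2.0:
        return 14.0 * (x - 1.0) + 3.5
    return 7.0 * (x - 2.0) + 17.5


# The branches meet at the breakpoints x = 1 and x = 2, so the function is continuous.
for x in (0.0, 1.0, 1.5, 2.0, 3.0):
    print(x, piecewise_linear_rate(x))
```

The steep middle segment gives the function a sigmoid-like shape: a shallow slope at low input, high gain between 1 and 2, and a reduced slope above 2.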

All the simulations of the firing rate models were run with a fourth-order explicit Runge–Kutta method in MATLAB.
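For readers unfamiliar with the integration scheme, the following is a generic sketch of one classical fourth-order explicit Runge–Kutta step, written in Python rather than MATLAB and applied to a single synaptic state variable that relaxes toward a fixed presynaptic rate. The test equation, step size, and names are illustrative assumptions and do not reproduce the authors' simulation code.

```python
import math


def rk4_step(f, t, y, dt):
    """Advance y by one classical fourth-order Runge-Kutta step of size dt."""
    k1 = f(t, y)
    k2 = f(t + 0.5 * dt, y + 0.5 * dt * k1)
    k3 = f(t + 0.5 * dt, y + 0.5 * dt * k2)
    k4 = f(t + dt, y + dt * k3)
    return y + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


tau = 100.0  # ms, a synaptic time constant of the size used for E-to-E connections
rate = 1.0   # presynaptic rate held fixed for this illustration


def ds_dt(t, s):
    """Synaptic variable relaxing toward the presynaptic rate."""
    return (-s + rate) / tau


s, t, dt = 0.0, 0.0, 1.0
for _ in range(100):
    s = rk4_step(ds_dt, t, s, dt)
    t += dt

# After one time constant the exact solution is 1 - exp(-1).
print(s, 1.0 - math.exp(-1.0))
```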

Spiking network of leaky integrate-and-fire neurons.

In Figure 11, we constructed a recurrent network of excitatory and inhibitory populations of spiking neurons with balanced excitation and inhibition. The activities of, and synaptic interactions between, the neurons are parameterized by their preferred spatial feature θ, which ranges from –π to π, as in the firing rate models. Here, we describe the intrinsic dynamics of the individual neurons and the synaptic currents connecting the neurons.

Figure 11.

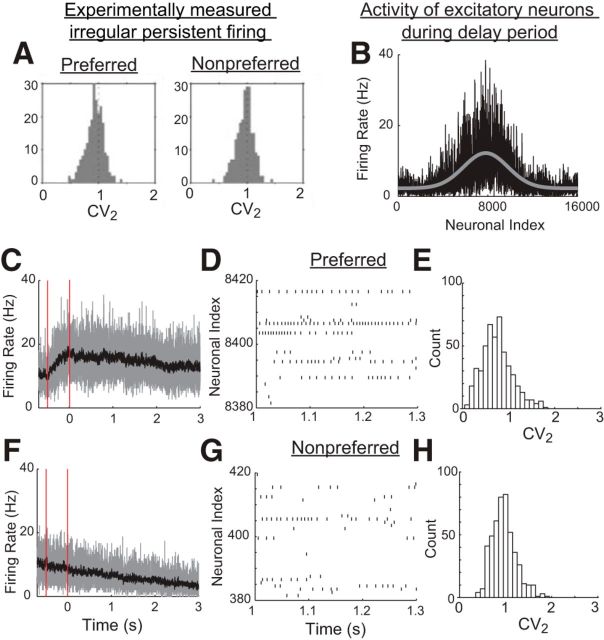

Irregular firing in spiking networks. A, Experimentally measured irregular firing, as captured by the local coefficient of variation CV2 during persistent activity in a delayed-saccade task. (Adapted from Compte et al., 2003). B–H, Simulation of spiking network models with negative-derivative feedback. B, Activity of excitatory neurons during the delay period in response to a transient, Gaussian-shaped stimulus. The firing rate distribution shown represents averages of neuronal activity between 2500 and 3000 ms after the stimulus offset (black curve) and is fit with a sum of constant and Gaussian functions (gray curve). C–H, Activity of neurons receiving a stimulus in their preferred directions (C–E, computed for neurons 7601–8400 in B) or neurons receiving stimuli in their nonpreferred directions (F–H, computed for neurons 1–400 and 15601–16000). C, F, Instantaneous, population-averaged activity, computed within time bins of 1 ms (gray) or 10 ms (black). Vertical red lines indicate the times of the start and end of the stimulus. D, G, Raster plots illustrating the irregular persistent firing of 40 example neurons. E, H, Histogram of CV2 values of active neurons during the persistent firing. Note that, for the activity of neurons receiving preferred stimuli, a small set of neurons fired regularly at high firing rate and exhibited low CV2 values. This is due to the heterogeneity of our completely randomly connected networks, resulting in excess positive feedback in some clusters of neurons.

The spiking network consists of NE excitatory and NI inhibitory current-based leaky integrate-and-fire neurons that emit a spike when a threshold is reached and then return to a reset potential after a brief refractory period. The neurons are recurrently connected to each other and receive transient stimuli from an external population of NO neurons. The connectivity between neurons is sparse and random with constant connection probability ρi so that, on average, each neuron receives NEρE, NIρI, and NOρO synaptic inputs from the excitatory, inhibitory, and external populations, respectively. The strengths of the recurrent connections and connections from the external population are dependent on the difference between the preferred feature θ of the postsynaptic neuron and the preferred feature θ′ of the presynaptic neuron.
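The sparse random connectivity described above can be illustrated with a short Python sketch that draws, for one postsynaptic neuron, which presynaptic excitatory cells connect to it. The values NE = 16000 and ρE = 0.2 are the ones reported for the spiking simulations; the function name, the fixed seed, and the use of Python's `random` module are assumptions for illustration only.

```python
import random


def sample_presynaptic(n_pre, rho, rng):
    """Indices of presynaptic cells, each connected independently with probability rho."""
    return [m for m in range(n_pre) if rng.random() < rho]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
N_E, rho_E = 16000, 0.2
inputs_from_E = sample_presynaptic(N_E, rho_E, rng)

# The number of inputs fluctuates around the expected value N_E * rho_E.
print(len(inputs_from_E), N_E * rho_E)
```

Because each connection is drawn independently, the in-degree varies from neuron to neuron, which is one source of the heterogeneity in firing noted for the spiking networks.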

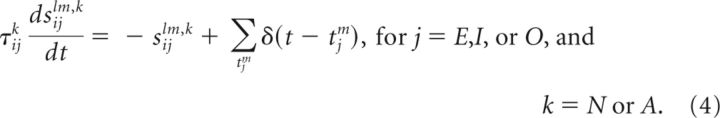

The dynamics of the subthreshold membrane potential Vil of the lth neuron in population i and the dynamics of the synaptic input variables sijlm onto this neuron from the mth neuron in population j are given as follows:

|

|

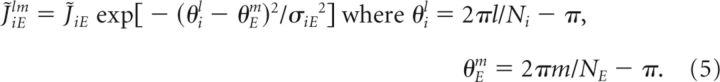

The first term on the right-hand side of Equation 3 corresponds to a neuronal intrinsic leak process such that, without the input, the voltage decays to the resting potential VL with time constant τi. The second term is the sum of the recurrent NMDA- and AMPA-mediated excitatory synaptic currents. The dynamic variables siElm,N and siElm,A represent NMDA- and AMPA-mediated synaptic currents from cell m of the excitatory population. The fractions of NMDA- and AMPA-mediated currents are assumed to be uniform across the population and are denoted by qiEN and qiEA = 1 − qiEN, respectively. piElm is a binary random variable with probability ρE and represents the random connectivity between neurons. The sum of the strengths of the NMDA- and AMPA-mediated synaptic currents is a Gaussian function given by the following:

|

Similarly, the third and fourth terms represent the total synaptic inputs from the inhibitory population and the external population. The dynamic variables siIlm and siOlm denote inhibitory and external synaptic currents of strengths J̃iIlm and J̃iOlm, respectively, and piIlm and piOlm are binary random variables with probability ρI and ρO, respectively.

In the dynamics of sijlm,k in Equation 4, a presynaptic spike at time tjm from neuron m in population j causes a discrete jump in synaptic current followed by an exponential decay with time constant τijk. The spikes arriving from the external population represent stimulus-driven inputs to be remembered and are generated by a Poisson process with rate rO during a time window twindow (rO = 0 during the memory period). Note that the strength of sijlm,j, denoted by J̃ijlm in Equation 3, corresponds to the integrated area under a single postsynaptic potential, not the height of a single postsynaptic potential. Furthermore, the connectivity strengths J̃ijlm were scaled as follows:

This scaling enabled the fluctuations in the input to remain of the same order of magnitude as the mean input as the network size varied (van Vreeswijk and Sompolinsky, 1996, 1998).

In Figure 11, E and H, the coefficients of variation of the interspike intervals were computed for 3 s from time 300 to 3300 ms using all excitatory neurons that exhibited >5 spikes during this period. CV2 measures the variability of the interspike intervals locally when the activity is not stationary, and is defined as CV2 = 2|ISIₙ₊₁ − ISIₙ|/(ISIₙ₊₁ + ISIₙ), where ISIₙ denotes the nth interspike interval (Holt et al., 1996).
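A minimal Python sketch of the CV2 measure of Holt et al. (1996) follows. For each pair of adjacent interspike intervals it computes twice their absolute difference divided by their sum. Averaging these local values over the spike train to get one number per neuron is the usual convention and is assumed here; the function name and the error handling are illustrative.

```python
def cv2(spike_times):
    """Mean local coefficient of variation CV2 of a sorted list of spike times."""
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    if len(isis) < 2:
        raise ValueError("CV2 needs at least three spikes (two interspike intervals)")
    local = [2.0 * abs(nxt - cur) / (nxt + cur) for cur, nxt in zip(isis, isis[1:])]
    return sum(local) / len(local)


regular_train = [0.0, 10.0, 20.0, 30.0, 40.0]  # identical intervals
uneven_train = [0.0, 1.0, 4.0]                  # intervals of 1 and 3
print(cv2(regular_train), cv2(uneven_train))
```

Because only adjacent intervals are compared, slow changes in firing rate contribute little to CV2, which is why it suits nonstationary activity.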

In all spiking simulations, NE = 16000, NI = 4000, NO = 20000, ρE = ρO = 0.2, and ρI = 0.4. The time constants and the fractions of NMDA-mediated currents were τE = 20 ms, τI = 10 ms, τEI = τII = 10 ms, τEEN = 150 ms, τEEA = 50 ms, τIEN = 45 ms, τIEA = 20 ms, qEEN = 0.5, and qIEN = 0.2 (Rotaru et al., 2011). Note that, as in the rate models, these time constants reflect the kinetics of postsynaptic potentials triggered by activation of NMDA- and AMPA-type receptors, but likely include the effects of additional intrinsic ionic conductances since these experiments were performed without blocking intrinsic ionic currents (Rotaru et al., 2011). The remaining parameters of the integrate-and-fire neuron, which were the same for both excitatory and inhibitory neurons, were VL = −60 mV, Vθ = −40 mV, and Vreset = −52 mV, with a refractory period τref = 2 ms. The parameters for the synaptic strengths were tuned to achieve a balance, on average, between the excitatory and inhibitory inputs arriving onto each population during sustained activity (Eq. 9), and were set as follows: JEE = JIE = 29.70, JEI = JII = 42.43, JEO,0 = 2.1, JIO,0 = 0, JEO,1 = 2.1, JIO,1 = 0, σEE = σIE = 0.25π, and σEI = σII = 0.2π. The external rate rO was 40 Hz for excitatory external input neurons with indices from 0.45NE (7200) to 0.55NE (8800) and was zero otherwise.

The numerical integration of the network simulations was performed using the second-order Runge–Kutta algorithm. Spike times were approximated by linear interpolation, which maintains the second-order nature of the algorithm (Hansel et al., 1998).
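The following Python sketch illustrates the idea of second-order Runge–Kutta integration with linearly interpolated spike times for a single leaky integrate-and-fire neuron. It is a simplified illustration, not the network code: the neuron receives a constant drive (expressed in mV, an assumption) instead of synaptic input, Heun's variant of the second-order scheme is used, and all names are hypothetical. The membrane parameters are those listed for the integrate-and-fire neurons.

```python
import math

V_L, V_TH, V_RESET = -60.0, -40.0, -52.0  # resting, threshold, reset potentials (mV)
TAU, TAU_REF = 20.0, 2.0                   # membrane time constant, refractory period (ms)


def dv_dt(v, drive):
    """Leaky integration toward V_L + drive."""
    return (-(v - V_L) + drive) / TAU


def simulate_lif(drive, t_end=200.0, dt=0.1):
    """Return interpolated spike times of one neuron under constant drive."""
    v, t_free, spikes = V_RESET, 0.0, []
    for n in range(int(round(t_end / dt))):
        t0, t1 = n * dt, (n + 1) * dt
        if t_free >= t1:
            continue  # the whole step lies inside the refractory period
        start = max(t0, t_free)  # integrate only the non-refractory part of the step
        h = t1 - start
        k1 = dv_dt(v, drive)
        k2 = dv_dt(v + h * k1, drive)
        v_new = v + 0.5 * h * (k1 + k2)
        if v_new >= V_TH:
            # Linear interpolation of the threshold-crossing time within the step.
            t_spike = start + h * (V_TH - v) / (v_new - v)
            spikes.append(t_spike)
            v = V_RESET
            t_free = t_spike + TAU_REF  # TAU_REF exceeds dt, so the step's remainder is refractory
        else:
            v = v_new
    return spikes


spikes = simulate_lif(drive=30.0)
isis = [b - a for a, b in zip(spikes, spikes[1:])]
exact = TAU_REF + TAU * math.log((V_L + 30.0 - V_RESET) / (V_L + 30.0 - V_TH))
print(isis[:3], exact)
```

Without the interpolation, spike times would be locked to the time grid and the timing error would be first order in the step size regardless of the integration scheme.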

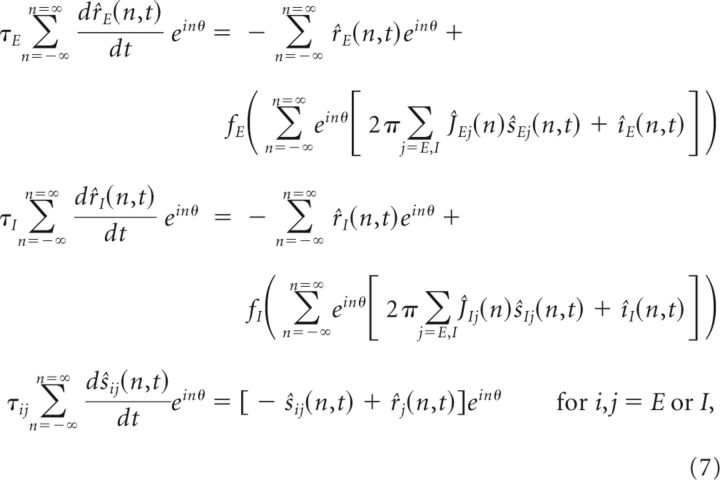

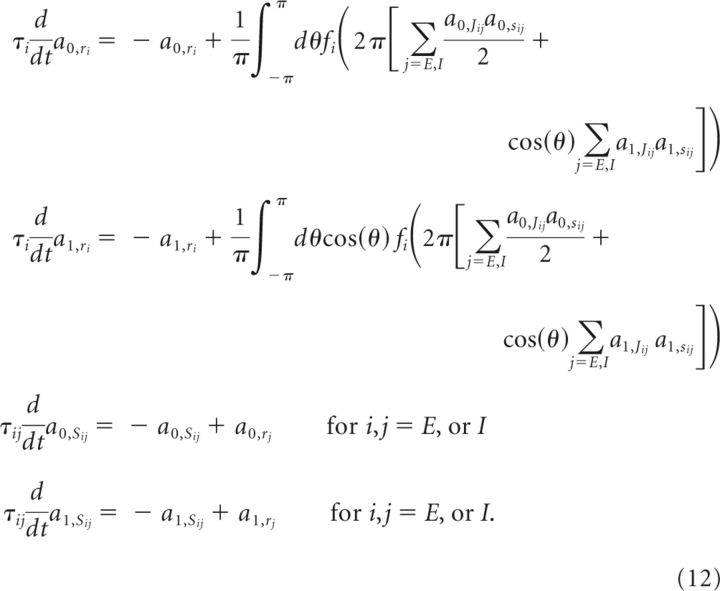

Derivation of conditions for negative-derivative feedback using Fourier analysis: linear dynamics.

Here, we analytically derive conditions for maintaining persistent spatial patterns of activity in firing rate models based on negative-derivative feedback control. First, to illustrate the conditions for negative-derivative feedback control in a simple manner, we assume that the network dynamics are linear and the connectivity pattern is translation invariant. In such a case, Fourier analysis can be used to obtain the conditions for negative-derivative feedback in terms of the Fourier coefficients of the synaptic strengths.

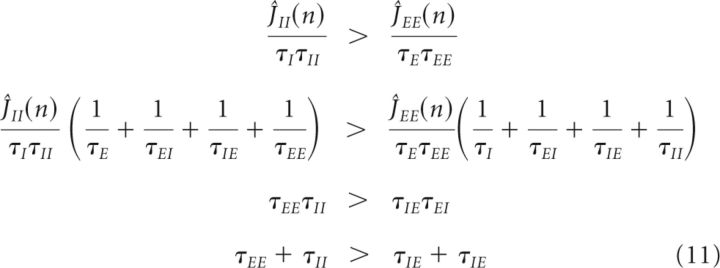

Under the assumption that the connectivity is translationally invariant, that is, the connectivity strength depends only on the difference θ − θ′ between the preferred features of the presynaptic and postsynaptic neurons so that Jij(θ,θ′) = Jij(θ − θ′), all variables and functions of θ in Equation 1 can be rewritten in terms of their Fourier series so that

|

where the x̂(n) are the Fourier coefficients of the function x(θ), and are defined by $\hat{x}(n) = \frac{1}{2\pi}\int_{-\pi}^{\pi} x(\theta)\,e^{-in\theta}\,d\theta$ (Folland, 2009). The expression for the recurrent input is obtained by using the convolution theorem, which states that the Fourier coefficient of the convolution of Jij(θ) and sij(θ) around the ring is the product of the Fourier coefficients of Jij(θ) and sij(θ). Furthermore, if we assume linear dynamics with fE,I(x) = x, the Fourier components of the different spatial frequencies do not interact with each other and the equation governing the dynamics of each Fourier coefficient is given by the following:

|

Thus, we obtain a 6D linear system for each Fourier component, obeying $d\vec{y}/dt = \mathbf{A}\vec{y}$, where y⃗ = (r̂E(n),r̂I(n),ŝEE(n),ŝIE(n),ŝEI(n),ŝII(n)), and the matrix $\mathbf{A}$ is defined in terms of the time constants τE, τI, and τij and the Fourier components Ĵij(n).
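The convolution theorem invoked above can be checked numerically on a discretized ring. The sketch below is illustrative only: the 1/N normalization of both the Fourier coefficients and the convolution (the discrete analogue of a 1/2π prefactor) is an assumption, as are the example profiles and the helper names `fourier_coeff` and `ring_convolve`.

```python
import cmath
import math

def fourier_coeff(x, n):
    """x_hat(n) = (1/N) * sum_k x[k] * exp(-i n theta_k), with theta_k = 2 pi k / N."""
    N = len(x)
    return sum(x[k] * cmath.exp(-1j * n * 2.0 * math.pi * k / N) for k in range(N)) / N

def ring_convolve(J, s):
    """(1/N) * sum_l J[(k - l) mod N] * s[l]: translation-invariant recurrent input."""
    N = len(s)
    return [sum(J[(k - l) % N] * s[l] for l in range(N)) / N for k in range(N)]

N = 64
theta = [2.0 * math.pi * k / N for k in range(N)]
J = [1.0 + 2.0 * math.cos(t) + 0.5 * math.cos(2.0 * t) for t in theta]  # example connectivity profile
s = [math.exp(math.cos(t - 1.0)) for t in theta]                        # example synaptic activity
u = ring_convolve(J, s)

# Each Fourier coefficient of the recurrent input equals J_hat(n) * s_hat(n).
max_err = max(abs(fourier_coeff(u, n) - fourier_coeff(J, n) * fourier_coeff(s, n))
              for n in range(-3, 4))
```

Because the recurrent input factorizes mode by mode, each spatial frequency n can be analyzed as its own low-dimensional system, which is what makes the per-mode conditions possible.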

The conditions for negative-derivative feedback control within each Fourier mode of this spatially structured network are analogous to those found previously for spatially uniform networks (Lim and Goldman, 2013). Here, we summarize the approach taken in the previous work, and refer the reader to that work for more extensive analysis. To analyze the linear networks, we used the eigenvector decomposition to decompose the coupled 6D system into noninteracting eigenmodes. For a linear system obeying $d\vec{y}/dt = \mathbf{A}\vec{y}$, the right eigenvectors q⃗ir and corresponding eigenvalues λi satisfy the equation $\mathbf{A}\vec{q}_i^{\,r} = \lambda_i\vec{q}_i^{\,r}$, and the decay of each mode is exponential with time constant τi,eff = −1/Re(λi), where Re denotes the real part. To obtain persistent firing (large τi,eff), the system should have at least one eigenvector with its corresponding eigenvalue equal to or close to zero. Also, to maintain persistent activity without unbounded growth of activity in the nonpersistent modes requires that all eigenvalues except those close to 0 have a negative real part (Lim and Goldman, 2013, their Supplementary information 1.2 and 1.3).

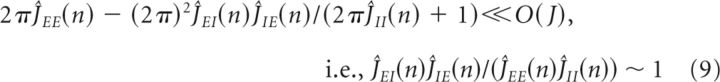

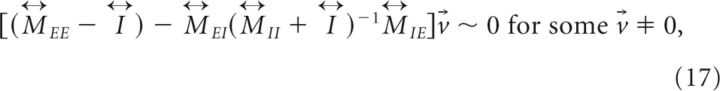

Applying this analysis to the system in Equation 8, we found conditions for the maintenance of persistent activity in each Fourier component by negative-derivative feedback. The conditions for each Fourier mode n are given by the following:

$$\hat{J}_{EE}(n) \sim \frac{\hat{J}_{EI}(n)\,\hat{J}_{IE}(n)}{\hat{J}_{II}(n)}, \tag{9}$$

|

where we have assumed that the magnitudes of the Ĵij(n) are large so that lower order terms in Ĵij(n) can be neglected. Equation 9 represents the balance between the strengths of positive feedback ĴEE(n) and negative feedback ĴEI(n)ĴIE(n)/ĴII(n) in each mode, and we thus refer to it as the balance condition (Fig. 3B). Equation 10 constrains the time constants of the positive and negative feedback. The time constants multiplying the feedback strengths correspond to the timescales for the positive and negative feedback, that is, τ+ = τEE + τII and τ− = τIE + τEI, where we note that τII acts as a time constant for positive feedback since the I-to-I connection inhibits the negative feedback pathway. From Equation 10, these time constants must be unequal, τ+ ≠ τ−. Under these conditions, the recurrent input approximates derivative feedback and thus defines the derivative-feedback models.
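The effect of the balance condition can be illustrated by integrating a single Fourier mode. The sketch below assumes a linear rate model of the form τE dr̂E/dt = −r̂E + ĴEE ŝEE − ĴEI ŝEI, τI dr̂I/dt = −r̂I + ĴIE ŝIE − ĴII ŝII, and τij dŝij/dt = −ŝij + r̂j; this form may differ from Equation 1 in normalization. The function name `simulate_mode`, the coupling strength of 100, and the single time constants τEE = 100 ms and τIE = 25 ms are illustrative assumptions.

```python
def simulate_mode(J_EE, J_EI, J_IE, J_II, t_end_ms=1000.0, dt_ms=0.02,
                  tau_E=20.0, tau_I=10.0, tau_EE=100.0, tau_IE=25.0,
                  tau_EI=10.0, tau_II=10.0):
    """Forward-Euler integration of one Fourier mode; returns r_E at t_end_ms."""
    # Start near the slowly decaying pattern: synaptic variables equilibrated to the rates.
    r_E = 1.0
    r_I = J_IE * r_E / (1.0 + J_II)
    s_EE = s_IE = r_E
    s_EI = s_II = r_I
    for _ in range(int(round(t_end_ms / dt_ms))):
        d_rE = (-r_E + J_EE * s_EE - J_EI * s_EI) / tau_E
        d_rI = (-r_I + J_IE * s_IE - J_II * s_II) / tau_I
        d_sEE = (-s_EE + r_E) / tau_EE
        d_sIE = (-s_IE + r_E) / tau_IE
        d_sEI = (-s_EI + r_I) / tau_EI
        d_sII = (-s_II + r_I) / tau_II
        r_E += dt_ms * d_rE
        r_I += dt_ms * d_rI
        s_EE += dt_ms * d_sEE
        s_IE += dt_ms * d_sIE
        s_EI += dt_ms * d_sEI
        s_II += dt_ms * d_sII
    return r_E

# Balanced strengths with slower positive feedback (tau+ = 110 ms > tau- = 35 ms).
balanced = simulate_mode(100.0, 100.0, 100.0, 100.0)
# Positive feedback 10% too weak: the balance condition is violated.
weak_excitation = simulate_mode(90.0, 100.0, 100.0, 100.0)
# No recurrent feedback: activity decays with the intrinsic time constant tau_E.
no_feedback = simulate_mode(0.0, 0.0, 0.0, 0.0)
```

Under these assumed parameters the balanced mode retains almost all of its amplitude after a 1 s delay, the mistuned mode decays substantially, and the uncoupled mode is gone within a few tens of milliseconds.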

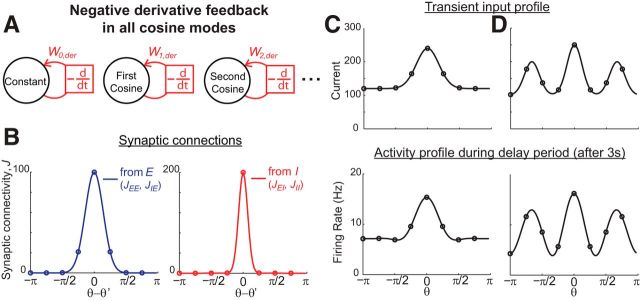

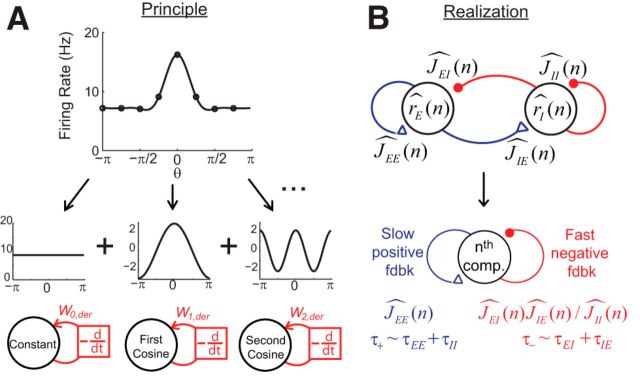

Figure 3.

Negative-derivative feedback in linear networks can be analyzed through the Fourier decomposition. A, Example spatial pattern of persistent activity (top) and its Fourier decomposition (middle). Negative-derivative feedback can occur within any or all of the Fourier components (bottom). B, Conditions for negative-derivative feedback in each Fourier component. Top, Illustration of the projection onto the nth Fourier component of the network's activity r̂i and connectivity Ĵij. To have negative-derivative feedback in each Fourier component, the positive feedback within this component must have equal strength, but slower kinetics, than the negative feedback within the component.

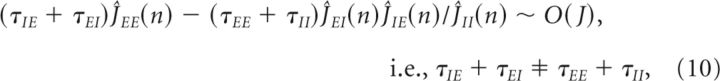

Additionally, we found the stability conditions on the network parameters for a system in which all eigenvalues except those close to 0 have a negative real part. Using the Routh–Hurwitz criterion (Nise, 2004), we found necessary conditions for stability given by the following:

|

The last condition is similar to Equation 10, which showed that the timescales for the positive and negative feedback must be different to have stable persistent firing. The stability condition above additionally specifies that the positive feedback should be slower than the negative feedback. The third condition is similar to the last condition, except that it constrains the product of the time constants, and the first two conditions require that the excitatory time constants be slower than the inhibitory ones.
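A Routh–Hurwitz check of this kind can be sketched numerically. The code below builds the first column of the Routh array for a polynomial and counts sign changes, which equals the number of roots with positive real part in the regular case. The characteristic polynomial used here is that of an assumed single-mode linear rate model with equal coupling strength J on all four connections and illustrative time constants; it is not taken from Equation 11, and the helper names are hypothetical.

```python
def routh_first_column(coeffs):
    """First column of the Routh array; coeffs are in descending powers.

    Assumes the regular case (no zero appears in the first column).
    """
    n = len(coeffs)
    width = (n + 1) // 2
    rows = [list(coeffs[0::2]), list(coeffs[1::2])]
    rows = [row + [0.0] * (width - len(row)) for row in rows]
    for _ in range(n - 2):
        up2, up1 = rows[-2], rows[-1]
        new = [(up1[0] * up2[j + 1] - up2[0] * up1[j + 1]) / up1[0]
               for j in range(width - 1)]
        rows.append(new + [0.0])
    return [row[0] for row in rows]

def count_rhp_roots(coeffs):
    """Number of sign changes in the first column = number of unstable roots."""
    col = routh_first_column(coeffs)
    return sum(1 for a, b in zip(col, col[1:]) if a * b < 0)

def poly_mul(p, q):
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def mode_characteristic_poly(J, tau_E=20.0, tau_I=10.0, tau_EE=100.0,
                             tau_IE=25.0, tau_EI=10.0, tau_II=10.0):
    """Degree-6 characteristic polynomial of the assumed single-mode model."""
    A = [tau_E * tau_EE, tau_E + tau_EE, 1.0 - J]   # (1 + tau_E s)(1 + tau_EE s) - J
    B = [tau_I * tau_II, tau_I + tau_II, 1.0 + J]   # (1 + tau_I s)(1 + tau_II s) + J
    C = [tau_EI * tau_IE, tau_EI + tau_IE, 1.0]     # (1 + tau_EI s)(1 + tau_IE s)
    D = [tau_EE * tau_II, tau_EE + tau_II, 1.0]     # (1 + tau_EE s)(1 + tau_II s)
    abc = poly_mul(poly_mul(A, B), C)
    padded = [0.0] * (len(abc) - len(D)) + [J * J * d for d in D]
    return [x + y for x, y in zip(abc, padded)]

stable_cubic = count_rhp_roots([1.0, 6.0, 11.0, 6.0])     # (s+1)(s+2)(s+3)
unstable_cubic = count_rhp_roots([1.0, 4.0, 1.0, -6.0])   # (s-1)(s+2)(s+3)
model_rhp = count_rhp_roots(mode_characteristic_poly(100.0))
```

For the assumed parameters, in which the excitatory time constants are slower than the inhibitory ones and positive feedback is slower than negative feedback, the first column of the array has no sign changes.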

Derivation of conditions for negative-derivative feedback using Fourier analysis: nonlinear dynamics.

In this section, we consider a network model in which the individual neurons have a nonlinear firing rate versus input current relationship. In the presence of such nonlinearity, the Fourier components of the firing rates and synaptic variables are no longer independent for the different Fourier modes. However, as shown below, the core principles for the conditions on the network parameters are similar to those for the linear networks, that is, negative-derivative feedback requires, first, a balance between the strengths of positive and negative feedback and, second, that positive feedback is slower than negative feedback.

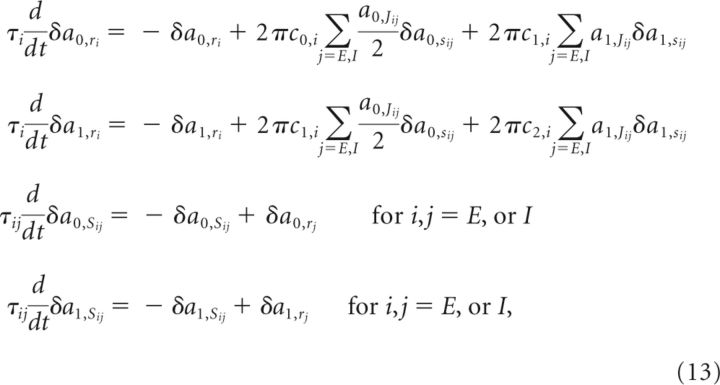

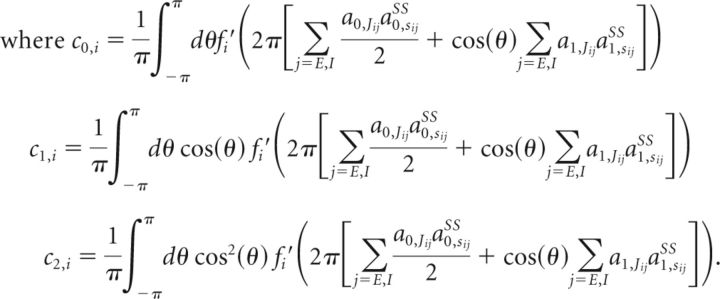

To find analytically the conditions on negative-derivative feedback in nonlinear networks, we consider a simple model in which the connection strengths and the external input are described by their first two Fourier components, a constant mode and a cosine mode (Ben-Yishai et al., 1995). The first two Fourier coefficients of the quantity x, denoted a0,x and a1,x, are given by $a_{l,x} = \frac{1}{\pi}\int_{-\pi}^{\pi} x(\theta)\cos(l\theta)\,d\theta$ for l = 0 or 1 (note that a0,x = 2x̂(0) and a1,x = x̂(1) + x̂(−1) = 2Re{x̂(1)} in Eq. 8). Then, by projecting the system of Equation 1 onto the first two Fourier components, we obtain the following equations governing the dynamics of a0,x and a1,x, for x = rE, rI, sEE, sIE, sEI, or sII, during the memory period (when the external input is zero):

|

In the presence of nonlinearity, global analysis of the network dynamics through the eigenvector decomposition is not possible. Instead, we find the conditions by locally linearizing the system around possible steady states and note that the conditions obtained must hold for all steady states that can be maintained persistently. For the steady state to belong to a continuous attractor, there should be at least one eigenvalue equal to or close to 0 in the local linearization. If we assume that there exists a steady state and denote it by the superscript SS as a0,xSS and a1,xSS for x = rE, rI, sEE, sIE, sEI, or sII, then, after linearization about this steady state, Equation 12 becomes

|

|

In the above, fi′(xi) denotes the derivative of fi(x) evaluated at xi, δa0,x = a0,x − a0,xSS, and δa1,x = a1,x − a1,xSS for x = rE, rI, sEE, sIE, sEI, or sII. Thus, these equations describe a 12D linear system (two coupled 6D systems, one for the constant mode and the other for the cosine mode). As shown in the previous section, we obtain the conditions for negative-derivative feedback by examining the conditions for the system given by Equation 13 to have an eigenvalue close to 0. These conditions are given by the following:

Equation 15 is the condition for slower positive feedback, which is the same as Equation 11 for the linear networks. Equation 14 can be achieved either when a0,JEE a0,JII − a0,JEI a0,JIE ≪ O(J²) or a1,JEE a1,JII − a1,JEI a1,JIE ≪ O(J²), that is, when either the constant mode or the first cosine mode satisfies a balance condition identical to Equation 9 for the linear networks. Additional inequality conditions for the stability of the system can likewise be obtained by analogy to the analysis underlying Equation 11 for the linear networks.

We note that, for both the linear and nonlinear networks, the condition that positive and negative feedback are balanced leads to a corresponding requirement that the excitatory and inhibitory inputs onto at least the excitatory cells (and, unless JEI and JIE are very different, also the inhibitory cells) are closely balanced as well. The reason for this is that achieving large negative-derivative feedback requires correspondingly large excitatory and inhibitory recurrent inputs. If these inputs were unbalanced, then the total current driving the neural response functions fE and fI would be very large. This would cause very large synaptic input to the neurons that would drive strong changes in firing rates rather than maintaining persistent activity. Thus, even in the presence of higher Fourier components of the connection strengths or nonlinear response functions, the balance condition remains the same (derivation not shown) and the core principles for negative-derivative feedback remain the same as in the linear networks.

Derivation of conditions for negative-derivative feedback to maintain arbitrary patterns of activity in nontranslationally invariant networks.

In the previous sections, we found the conditions necessary for negative-derivative feedback when the connection strengths are translationally invariant. In this section, we extend our analysis to networks without translation invariance and generalize the conditions for negative-derivative feedback control to such networks.

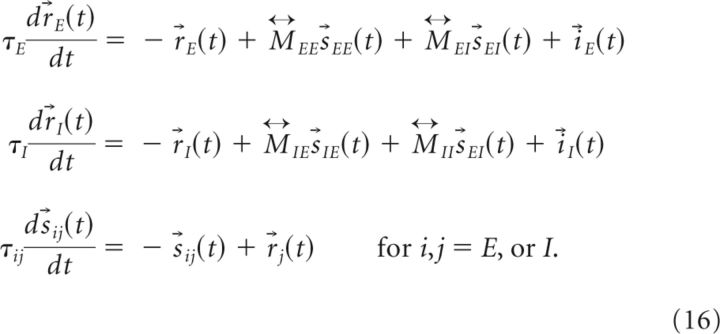

For simplicity, we assume the network obeys linear dynamics and assume that the neuronal index θ is discrete and uniformly spaced along the ring, with the total number of neurons in either the excitatory or inhibitory population equal to Nθ. Then, in Equation 1, the firing activities and synaptic variables are vectors of length Nθ, the connection strengths are Nθ × Nθ matrices that we denote as Jij for i, j = E or I, and Equation 1 can be rewritten as follows:

|

In this case, the requirement that positive feedback be slower than negative feedback is met under the same conditions (bottom two conditions of Equation 11) found for the translationally invariant networks. On the other hand, the balance condition is now expressed as a relation between the connectivity matrices

|

and the persistent pattern of activity under this condition is

|

For example, if the Jij's commute with each other and have a common eigenvector v⃗ such that Jij v⃗ = λij v⃗, then the balance condition becomes λEE ∼ λEIλIE/λII for large λ, and r⃗E ∼ v⃗ and r⃗I ∼ (λEE/λEI) r⃗E ∼ (λIE/λII) r⃗E. Note that if Jij is translationally invariant, the common eigenvectors of the Jij are the Fourier components discussed previously.
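The common-eigenvector case can be made concrete with small ring matrices. The sketch below is illustrative only: it assumes connectivity matrices of the form strength × (1 + cos(θk − θl))/N, for which a cosine pattern is an eigenvector with eigenvalue strength/2, and the strengths are chosen by hand so that λEE = λEIλIE/λII. The helper names are hypothetical.

```python
import math

N = 60
theta = [2.0 * math.pi * k / N for k in range(N)]

def ring_matrix(strength):
    """Translation-invariant matrix with entries strength * (1 + cos(theta_k - theta_l)) / N."""
    return [[strength * (1.0 + math.cos(tk - tl)) / N for tl in theta] for tk in theta]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def eigen_check(M, v):
    """Rayleigh quotient of v and the largest deviation of M v from lambda * v."""
    Mv = matvec(M, v)
    lam = sum(a * b for a, b in zip(Mv, v)) / sum(b * b for b in v)
    residual = max(abs(a - lam * b) for a, b in zip(Mv, v))
    return lam, residual

v = [math.cos(t - 0.5) for t in theta]       # first cosine mode, arbitrary phase
J_EE, J_EI = ring_matrix(200.0), ring_matrix(400.0)
J_IE, J_II = ring_matrix(100.0), ring_matrix(200.0)

lam_EE, res_EE = eigen_check(J_EE, v)
lam_EI, _ = eigen_check(J_EI, v)
lam_IE, _ = eigen_check(J_IE, v)
lam_II, _ = eigen_check(J_II, v)

# Under balance, r_I = (lam_IE / lam_II) * r_E and the net recurrent input to E cancels.
r_E = v
r_I = [lam_IE / lam_II * x for x in r_E]
net_input_E = [a - b for a, b in zip(matvec(J_EE, r_E), matvec(J_EI, r_I))]
max_net_input = max(abs(x) for x in net_input_E)
```

The vanishing net input shows the sense in which large excitatory and inhibitory recurrent currents cancel during persistent activity when the eigenvalues satisfy the balance relation.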

Results

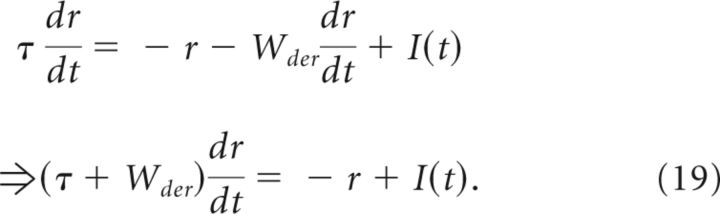

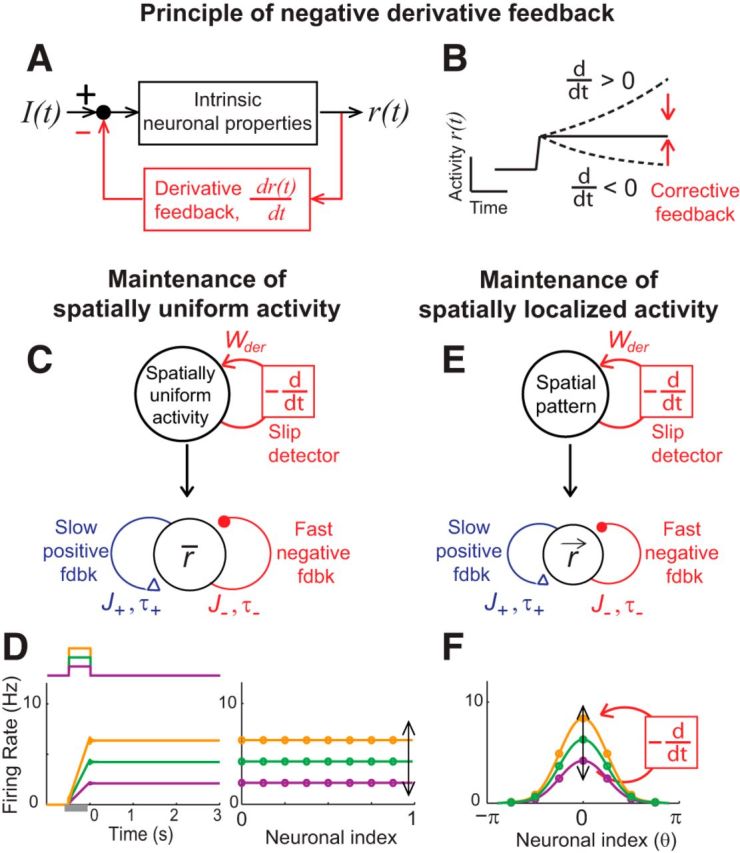

Principle of negative-derivative feedback control for spatial working memory

We consider a spatial working memory model that maintains persistent activity through a negative-derivative feedback mechanism that counteracts drift in memory representations. In this section, we review recent work (Lim and Goldman, 2013) showing how a negative-derivative feedback mechanism can maintain spatially uniform patterns of persistent activity in networks with no spatial structure. In the following sections, we show how this framework can be extended to networks whose spatial structure allows them to maintain stimulus-dependent spatial patterns of activity, and we describe salient properties of these networks.

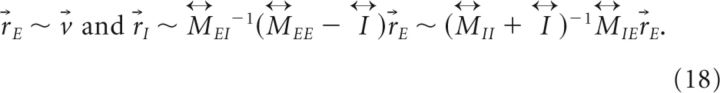

To illustrate how negative-derivative feedback networks slow memory decay and maintain a graded range of spatially uniform persistent activity, we consider a simple mathematical model of a memory cell with mean firing rate r(t), which receives transient input I(t) to be integrated and maintained during a delay period (Fig. 2A):

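$$
\begin{aligned}
\tau \frac{dr}{dt} &= -r - W_{\mathrm{der}}\frac{dr}{dt} + I(t),\\
\tau_{\mathrm{eff}} \frac{dr}{dt} &= -r + I(t), \qquad \tau_{\mathrm{eff}} = \tau + W_{\mathrm{der}}.
\end{aligned}
\tag{19}
$$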

The first term on the right side of the top equation, −r, represents intrinsic leak processes that lead to activity decay with time constant τ in the absence of feedback. The second term, −Wder dr/dt, represents negative-derivative feedback that resists changes in activity such that increases (decreases) in firing rates result in a feedback signal of negative (positive) sign (Fig. 2B). For strong derivative feedback, Wder ≫ τ, the effective time constant of activity decay τeff = τ + Wder is dominated by this derivative feedback, so that the system becomes proportionately more resistant to memory decay as the strength of derivative feedback increases.
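The behavior of this memory cell can be sketched by integrating the equivalent form (τ + Wder) dr/dt = −r + I(t), which follows from moving the derivative-feedback term to the left side. The values of Wder, the pulse amplitudes (arbitrary units), and the pulse and delay durations below are illustrative assumptions, as is the function name `simulate_memory_cell`.

```python
import math

def simulate_memory_cell(amplitude, tau=20.0, w_der=1.0e5,
                         pulse_ms=100.0, delay_ms=3000.0, dt_ms=1.0):
    """Euler integration of (tau + w_der) dr/dt = -r + I(t).

    I(t) equals `amplitude` during the pulse and zero during the delay.
    Returns the rate at the end of the pulse and at the end of the delay.
    """
    tau_eff = tau + w_der
    r = 0.0
    for _ in range(int(round(pulse_ms / dt_ms))):
        r += dt_ms * (-r + amplitude) / tau_eff
    r_cue_end = r
    for _ in range(int(round(delay_ms / dt_ms))):
        r += dt_ms * (-r) / tau_eff
    return r_cue_end, r

cue_small, end_small = simulate_memory_cell(1.0e4)
cue_large, end_large = simulate_memory_cell(2.0e4)

# Fraction of activity remaining after the delay versus the prediction exp(-T / tau_eff).
retained = end_small / cue_small
predicted = math.exp(-3000.0 / (20.0 + 1.0e5))
```

Doubling the pulse amplitude doubles the stored rate, so the cell holds a graded level rather than one of two fixed states, and the slow decay over the delay follows the effective time constant τ + Wder.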

Figure 2.

Memory networks with negative-derivative feedback. A, Block diagram illustrating the principle of negative-derivative feedback control for a system with transient external input I(t) and output firing rate r(t). B, A negative-derivative feedback mechanism maintains persistent activity by providing corrective feedback that opposes upward or downward changes in activity. C, Simple black-box model of a neural population with negative-derivative feedback that maintains spatially uniform patterns of persistent activity (top) and simplified network illustrating key components underlying the conditions on the positive and negative feedback pathways for negative-derivative feedback (bottom). D, Time course of average firing rates in spatially uniform negative-derivative feedback networks in response to three example amplitudes of transient stimuli (left) and corresponding maintenance of spatially uniform patterns of activity at different amplitudes (right) during the delay period. E, F, Extension of the mechanism of negative-derivative feedback control to maintaining spatially localized patterns of activity. The basic principles are the same as for the negative-derivative feedback networks for spatially uniform patterns of activity (E), but what negative-derivative feedback detects and corrects is the amplitude of particular spatial patterns of activity (F). In D, the neurons were rank ordered and the neuronal index is the neuron's order number divided by the network size. In F, the neuronal index is the neuron's preferred spatial feature θ.

Mechanistically, this negative-derivative feedback can arise from recurrent network interactions in memory-storing circuits that contain positive and negative feedback pathways (Fig. 2C). When positive feedback mediated by recurrent excitation and negative feedback mediated by recurrent inhibition have equal strength, but positive feedback has slower kinetics, a neuron receives derivative-like recurrent input: the equal-strength positive and negative feedback lead to nearly zero net input during persistent activity, but the faster negative feedback leads to large input that opposes changes in activity whenever activity fluctuates. In spatially uniform networks, the strength of negative-derivative feedback has been shown (Lim and Goldman, 2013) to be proportional to the strength of the balanced positive and negative feedback and the difference in their timescales, so that

$$W_{\mathrm{der}} \sim J(\tau_+ - \tau_-), \tag{20}$$

where J denotes the strength of the balanced positive and negative feedback pathways, and τ+ and τ− denote the timescales of positive and negative feedback, respectively (Fig. 2C). Thus, when the recurrent synaptic interactions contain strong positive and negative feedback that are balanced in strength (large J) but with slower positive feedback (τ+ > τ−), the network temporally integrates its input with long integration time constant τeff ≈ Wder, showing step-like activity in response to spatially uniform transient input (Fig. 2D). We note that, although the derivative-feedback mechanism maintains persistent activity by resisting changes in firing rate, this does not keep the system from responding to external inputs as long as these inputs are of the same scale as the recurrent synaptic inputs, which would be expected if the strengths of recurrent and external inputs both scale with population size. Furthermore, external input can transiently imbalance the recurrent excitatory and inhibitory feedback, allowing for more rapid response to external inputs (Lim and Goldman, 2013).

Requirements for negative-derivative feedback in circuits with functionally columnar architecture

Here we describe how the mechanism of negative-derivative feedback described above can be extended to networks that maintain spatially localized patterns of persistent neural activity characteristic of those observed during spatial working memory tasks (Fig. 2E). The basic concept is the same as above, but for spatial working memory, the feature that negative-derivative feedback detects and corrects is a deviation in the amplitude of a particular spatial pattern of activity r⃑ = (r1,r2,…,rn), where ri is the firing rate of the ith neuron in the network (Fig. 2F). That is, for any maintained spatial pattern r⃑, we require that this activity drives recurrent synaptic interactions containing positive and negative feedback signals of equal strength but with slower kinetics for the positive feedback (Fig. 2E). Below, we show mathematically how these conditions can be met in a spatially structured network and find the conditions on the spatial profile and kinetics of the synaptic connectivity for negative-derivative feedback control.

We consider networks of excitatory and inhibitory populations that store the angular location of a transiently presented spatial cue that must be remembered during a subsequent delay period. Recordings of the persistent activity of spatially selective memory cells identified in such tasks suggest a functionally columnar architecture in which neurons in the same column have similar preferred features of the stimulus (Goldman-Rakic, 1995; Wimmer et al., 2014). To capture this functional organization, we parameterize the activities of the excitatory and inhibitory neurons by their preferred feature θ, which we assume to be uniformly distributed along a ring (Fig. 1). The connection strength between a presynaptic neuron from the jth population with preferred feature θ′ and a postsynaptic neuron from the ith population with preferred feature θ is denoted by Jij(θ,θ′), where i, j = E or I denotes whether the postsynaptic and presynaptic neurons, respectively, are part of the excitatory (E) or inhibitory (I) populations. Time constants for these connections similarly are denoted as τij, which are assumed to be independent of θ and θ′ for given population types i and j (Fig. 1).

The core requirements for negative-derivative feedback, a balance between the strengths of the positive and negative feedback pathways and slower positive than negative feedback, impose a tuning condition on the connection strengths Jij(θ,θ′) and a constraint on the time constants of the connections, τij. To derive the tuning condition on Jij(θ,θ′), we assume as in most previous models of orientation-selective spatial working memory that the connectivity Jij(θ,θ′) is translationally invariant, that is, independent of the absolute values of θ and θ′ but dependent on the difference between θ and θ′ as Jij(θ − θ′) (Ermentrout, 1998; Wang, 2001; Compte, 2006). Furthermore, if the dynamics of the system are linear, Fourier analysis can be used to decompose the spatial activity and recurrent interactions into cosine and sine functions of θ that do not interact with each other (Fig. 3A). In this case, the strengths of the recurrent connections within each Fourier component are denoted by Ĵij(n) and their timescales are given by τij (Fig. 3B, top; see Materials and Methods). However, we note that, although translation invariance and linear dynamics are helpful in building intuition and providing a simple illustration of conditions for negative-derivative feedback, neither of these features is a necessary requirement for negative-derivative feedback (see Materials and Methods and Fig. 5 for networks with nonlinear dynamics and Materials and Methods for linear networks without translationally invariant connectivity).

Since the dynamics of each Fourier component are independent, negative-derivative feedback can be achieved independently for each component (Fig. 3A). Specifically, for the nth Fourier component to be governed by negative-derivative feedback, the positive feedback and negative feedback pathways onto this Fourier component should have equal strength, and the positive feedback pathway should have slower kinetics than the negative feedback pathway. This can be accomplished when two conditions are met:

$$\hat{J}_{EE}(n) \sim \frac{\hat{J}_{EI}(n)\,\hat{J}_{IE}(n)}{\hat{J}_{II}(n)} \quad \text{for large } \hat{J}_{ij}(n), \tag{21}$$

$$\tau_{EE} + \tau_{II} > \tau_{EI} + \tau_{IE}. \tag{22}$$

Equation 21 is the condition for balancing positive feedback and negative feedback for the nth Fourier component. The left side of this condition represents the strength of positive feedback in this Fourier component, which is mediated by the E-to-E connection. The right side represents the strength of negative feedback and is mediated by the E-to-I-to-E feedback loop, with normalization of the strength of this loop provided by the I-to-I connection (Fig. 3B, bottom). Equation 22 is the condition for slower positive than negative feedback. The sum τ+ = τEE + τII represents the sum of the positive feedback contributions, where τII plays the role of a positive feedback time constant because the I-to-I connection inhibits the negative feedback pathway, and this feedback must be slower than the time constant associated with the traversal time around the negative feedback loop τ− = τEI + τIE (Fig. 3B, bottom; see Materials and Methods).

Throughout this paper, we assume that τEE is longer than the time constants of the other connections. This assumption is based upon recent experimental observations in prefrontal cortex that found that E-to-E connections are much slower than E-to-I connections, due to a relative prominence of slow NMDA-type synapses (Wang et al., 2008; Wang and Gao, 2009; Rotaru et al., 2011). Thus, because the time constants are independent of the particular Fourier component, Equation 22 is satisfied for all Fourier components.

In contrast, the balance condition, given by Equation 21, can be satisfied independently for each Fourier component. To maintain spatially nonuniform persistent activity across the population, this condition must be satisfied by at least one of the nonconstant Fourier components, and the specific spatial profile of persistent activity observed during the delay period reflects the relative balance of the different components satisfying the balance condition.

Maintenance of spatially modulated activity based on a balance between excitation and inhibition

To illustrate the dynamics of the negative-derivative feedback networks and how they maintain spatially localized patterns of persistent activity, we first consider a simple network that has been structured to receive negative-derivative feedback only in its first cosine component (Fig. 4A). This network's synaptic connectivity profile contained three components, an untuned uniform component of the connectivity, an untuned component with Gaussian connectivity profile, and a tuned component with cosine profile (see Materials and Methods). The network received a spatially localized input of narrow Gaussian profile centered at 0 degrees during a brief cue period, plus a constant background input that was present during both the cue and delay periods (Fig. 4B,C). During the cue presentation and shortly after the offset of the cue, the spatial profile of the network activity had a narrow width that directly followed the spatial profile of the transient input (Fig. 4B, bright horizontal band centered at 0 degrees during the cue period; C, left). However, during the delay period, the activity profile quickly broadened so that only the activity pattern of the first cosine component was maintained (Fig. 4B,C, middle and right). This is because all Fourier components except the first cosine component decayed quickly back to their baseline activity, which was zero for the higher Fourier components and a constant level driven by the tonic background input for the constant component. In contrast, the first cosine component was maintained throughout the delay period by the negative-derivative feedback (Fig. 4B, broad brighter region during delay period; C, middle and right). More generally, this example illustrates that the profile of activity maintained by the network reflects only those components that receive negative-derivative feedback.

A feature of the derivative-feedback networks is that, during the delay period, neurons in the network receive strong excitatory and inhibitory inputs that are closely balanced with each other (Fig. 4D; see Materials and Methods). The cosine component receives a balance of recurrent excitatory and inhibitory synaptic inputs, as required by the balance condition (Fig. 4F). The constant component likewise receives a balance of excitation and inhibition (Fig. 4E). However, this balance is achieved through inclusion of the external background input; the recurrent inputs, in contrast, are dominated by inhibition so that the network does not contain negative-derivative feedback in this component and cannot maintain spatially uniform activity in the absence of background input (data not shown). This reflects that both the excitatory and inhibitory inputs to each neuronal population (but not necessarily the excitatory and inhibitory tuning curves or connectivity, as shown in Fig. 7) are spatially localized and have the same spatial tuning widths.

A close balance between excitatory and inhibitory inputs in memory cells is a distinct feature of negative-derivative feedback. In most previous studies, it has been suggested that spatially localized activity patterns result from excess excitation in high-firing rate neurons and widespread lateral inhibition that stabilizes the bump of activity during the delay period (Ermentrout, 1998; Wang, 2001; Compte, 2006). This leads to inhibitory synaptic inputs onto a postsynaptic cell being more broadly tuned than excitatory inputs in such networks, whereas the spatial tuning of excitatory and inhibitory inputs is similar in negative-derivative feedback networks. Thus, a balance between excitation and inhibition is one prediction of the negative-derivative feedback mechanism that can be tested experimentally (see Discussion).

Location codes, amplitude codes, and neural integration in negative-derivative feedback networks

Traditional spatial working memory models maintain the analog spatial location of a stimulus through stereotyped patterns of network activity centered on the maintained stimulus location, as observed experimentally (Goldman-Rakic, 1995; Wang, 2001). However, a fundamental feature of these models is that the amplitude of the pattern of neuronal activity during the delay period is bistable, either exhibiting untuned background activity or participating in a fixed-amplitude pattern of activity corresponding to the location of the maintained stimulus (Ermentrout, 1998; Wang, 2001; Compte, 2006). Because of this bistability, only the location of the cue can be stored in such networks and, for example, the amplitude or value of the cue cannot be distinguished beyond a binary discrimination.

Negative-derivative feedback networks likewise can maintain an analog spatial location in memory (Fig. 5A) and, as in traditional memory models, this can be achieved by having a translation-invariant network connectivity profile that permits the network to maintain a given spatial pattern of activity centered at any location in the network. However, because the negative-derivative feedback models operate by resisting changes in activity, without regard for the absolute level of activity, they can also maintain analog amplitudes of activity at a given location (Fig. 5B). Thus, these networks can convey information simultaneously about the amplitude and location of a spatial cue (for related examples in the context of optimal Bayesian cue combination and storage and efficient spike-based coding, see Boerlin and Denève, 2011; Boerlin et al., 2013).
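One way to see how a single activity profile can carry both quantities is to read them out from the first Fourier coefficient of the population activity. The readout below is a hypothetical illustration, not a decoder described in the paper: it assumes a cosine-shaped bump on top of a uniform baseline, and the names `decode` and `bump` are introduced only for this sketch.

```python
import cmath
import math

N = 128
thetas = [-math.pi + 2.0 * math.pi * k / N for k in range(N)]

def bump(theta0, amplitude, baseline=20.0):
    """Cosine-shaped activity profile centered at theta0 (rates in Hz)."""
    return [baseline + amplitude * math.cos(t - theta0) for t in thetas]

def decode(rates):
    """Read out location and amplitude from the first Fourier coefficient.

    For a profile b + a*cos(theta - theta0), the coefficient is (a/2)*exp(-i*theta0).
    """
    c = sum(r * cmath.exp(-1j * t) for r, t in zip(rates, thetas)) / len(rates)
    return -cmath.phase(c), 2.0 * abs(c)

loc_a, amp_a = decode(bump(0.7, 10.0))
loc_b, amp_b = decode(bump(0.7, 20.0))
loc_c, amp_c = decode(bump(-1.2, 10.0))
```

The phase of the coefficient recovers where the bump is centered and its magnitude recovers how large the bump is, so the two stored variables can be varied and read out independently.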

A related feature of the negative-derivative feedback networks is that they can temporally integrate their inputs. Temporal integration is the defining property of neural accumulators that integrate evidence over time (in the sense of calculus) during decision-making processes (Gold and Shadlen, 2007). However, most previous work modeling evidence accumulation has focused primarily upon temporal aspects of this facility, without considering that the accumulated evidence could occur across an analog range of spatial locations. A hallmark of feedback control theory is that the input–output transformation performed by systems with strong negative feedback is approximately equal to the inverse of the function that was fed back. In the case of the negative-derivative feedback networks, the signal that is negatively fed back is the derivative of the activity pattern. Thus, since the functional inverse of a temporal derivative is a temporal integral, these networks output a temporal integral of their inputs. For example, if the inputs are spatially structured, but constant in time, the negative-derivative feedback networks accumulate these signals into a uniformly increasing spatial pattern of activity (Fig. 5C,D). Thus, negative-derivative feedback networks can maintain in memory both the spatial identity of accumulated evidence as well as its running total.
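The inverse-of-the-feedback argument can be made explicit for the single-cell model with leak time constant τ and derivative-feedback strength Wder. The following is a sketch in the Laplace domain, where s is the Laplace variable and tildes denote transforms; zero initial activity is assumed.

$$
\tau \frac{dr}{dt} = -r - W_{\mathrm{der}}\frac{dr}{dt} + I(t)
\;\;\Longrightarrow\;\;
\tilde{r}(s) = \frac{\tilde{I}(s)}{1 + (\tau + W_{\mathrm{der}})\,s}
\;\approx\; \frac{\tilde{I}(s)}{W_{\mathrm{der}}\,s}
\quad \text{for } W_{\mathrm{der}} \gg \tau \text{ and } |s|\,W_{\mathrm{der}} \gg 1 .
$$

Since division by s corresponds to integration in time, the output is approximately $r(t) \approx \frac{1}{W_{\mathrm{der}}}\int_0^t I(t')\,dt'$ on timescales short compared with Wder: the fed-back operation is $W_{\mathrm{der}}\,d/dt$, and the closed loop approximates its inverse.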

Notably, even in the presence of nonlinearities in intrinsic neuronal dynamics such as thresholds and saturation, negative-derivative feedback networks accumulate and maintain spatially localized activity under the same conditions as in linear networks: a balance between positive and negative feedback, with slower positive feedback than negative feedback, leads to negative-derivative feedback. This occurs even though the Fourier components in a nonlinear network are no longer decoupled and cannot easily be decomposed into independent components (see Materials and Methods). Furthermore, the features of negative-derivative feedback discussed for linear dynamics are maintained under nonlinear dynamics, that is, the networks receive balanced excitation and inhibition during persistent activity (data not shown), and can accumulate and maintain spatially localized patterns of activity at different locations (Fig. 5A, bottom) or at different amplitudes (Fig. 5B–D, bottom; note that at t = 5 s, the neuron with preferred location θ = 0 has approached its absolute maximum firing rate of 100 Hz, demonstrating that in this extreme case the profile does become significantly affected by the nonlinearity).

Maintaining multiple bumps of activity in negative-derivative feedback networks

In the previous sections, we considered networks receiving negative-derivative feedback only in the first cosine component and used this example to illustrate important features of the negative-derivative feedback mechanism: a close balance between excitation and inhibition during persistent activity (Fig. 4D–F) and the ability to encode information both in the location and in the amplitude of spatial patterns of activity (Fig. 5). While these features are hallmarks of negative-derivative feedback networks, the specific activity profile that is maintained during persistent activity is not constrained to simple sinusoids and ultimately is determined by which Fourier components receive negative-derivative feedback. Here, we consider more general networks that receive negative-derivative feedback in all Fourier components and show that such networks can be obtained by a condition analogous to the tuning condition used for the simple cosine example discussed above.

To construct more general networks receiving negative-derivative feedback (Fig. 6A), we consider networks with the same spatial profiles of the excitatory E-to-E and E-to-I connections (Fig. 6B, left) and the same spatial profiles of the inhibitory I-to-E and I-to-I connections (Fig. 6B, right), so that Jij(θ) = J̃ijwj(θ) for i, j = E or I (note that this assumption leads to a simple form of the balance condition, but is not essential to tuning negative-derivative networks more generally). In this case, the Fourier components of the synaptic connectivity profiles are given by Ĵij(n) = J̃ijŵj(n), and the condition for having a balance in strength of positive and negative feedback in a given Fourier mode is given by ĴEE(n)ĴII(n) = J̃EEJ̃IIŵE(n)ŵI(n) ∼ J̃EIJ̃IEŵE(n)ŵI(n) = ĴEI(n)ĴIE(n), so that J̃EEJ̃II ∼ J̃EIJ̃IE for large values of J̃ij for all n. When, in addition, positive feedback is slower than negative feedback (due to a relatively slow combination of self-excitatory and self-inhibitory time constants τEE + τII > τEI + τIE), the network interactions provide negative-derivative feedback to all Fourier components.

Unlike the network of Figure 4, which could maintain only broad patterns of activity corresponding to its tuned, first cosine component (Fig. 6C), networks that receive negative-derivative feedback in multiple Fourier components can maintain spatially localized activity with narrower tuning widths that reflect higher-order Fourier components. Furthermore, these networks can maintain more general spatial patterns of activity composed of these different Fourier components, such as activity profiles with multiple bumps (Fig. 6D), which have been suggested as a neural correlate of the storage of multiple items (Laing et al., 2002; Edin et al., 2009; Wei et al., 2012). Thus, networks receiving negative-derivative feedback in multiple Fourier components have a higher memory capacity than those that receive negative-derivative feedback only in a single cosine component. Note, however, that the strength of negative-derivative feedback in each Fourier component, and thus the integration time constant associated with this component, in general will not be the same for all Fourier components, because this strength depends linearly upon the amount of the frequency component that is present within the synaptic connectivity profile. For this reason, the network capacity over a given timescale will in general depend upon both the specific form of the connectivity and the shape of the profile to be maintained so that, for example, networks with broad synaptic connectivity profiles would not be expected to maintain very long-lasting activity for high-frequency components that are minimally represented in their synaptic connectivity. This feature may explain why the long-lasting profiles observed experimentally during spatial working memory tend to be of relatively broad width, which likely reflects features of the underlying connectivity profile.
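To illustrate how little high-frequency content a broad connectivity profile carries, the sketch below compares the Fourier mode amplitudes of a broad and a narrow profile on the ring. The Gaussian shape and the two widths are assumptions made for illustration only; the point is the relative size of the higher modes, which in the text above sets the relative strength of derivative feedback in each component.

```python
import numpy as np

N = 256
theta = np.linspace(-np.pi, np.pi, N, endpoint=False)

def mode_amplitudes(sigma, n_max=8):
    """Magnitudes of Fourier modes 0..n_max of a unit-sum Gaussian-shaped ring profile."""
    w = np.exp(-0.5 * (theta / sigma) ** 2)
    w /= w.sum()
    return np.abs(np.fft.rfft(w))[: n_max + 1]

broad = mode_amplitudes(sigma=0.8)     # hypothetical broad connectivity (radians)
narrow = mode_amplitudes(sigma=0.25)   # hypothetical narrow connectivity (radians)

for n in (1, 2, 4, 8):
    print(f"mode {n}: broad = {broad[n]:.2e}, narrow = {narrow[n]:.2e}")
```

In this example the broad profile's amplitude falls off much faster with mode number than the narrow profile's, so its higher modes would receive correspondingly weaker feedback.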

Relation between the profile of synaptic connectivity and tuning widths of activity

Traditional spatial working memory networks require long-range inhibition to maintain the stability of localized patterns of activity in memory (Ermentrout, 1998; Wang, 2001; Compte, 2006). Such long-range inhibition is not prevalent anatomically in cortical networks, although it might be achieved functionally through disynaptic connections (Melchitzky et al., 2001) or through the broadly projecting basket cell subclass of inhibitory interneurons (Markram et al., 2004). In any case, an interesting question is whether long-range inhibition is critical for storing spatial working memory, and what constraints experimental observations may place upon the form of synaptic connectivity.

Unlike traditional models, negative-derivative feedback networks are capable of maintaining spatially localized patterns of activity regardless of the relative widths of excitatory and inhibitory connections (Fig. 7A,C–E). In fact, narrower inhibitory connections are required for our models to generate the experimental observation (Rao et al., 1999, 2000; Constantinidis and Goldman-Rakic, 2002) that inhibitory neurons have broader tuning of activity (after subtracting off any constant baseline) than excitatory neurons (Fig. 7B). When we define “widths” of the activity or connectivity as the spatial spread of the tuned portion after subtracting off any constant, untuned baseline (Constantinidis and Goldman-Rakic, 2002), short-range excitation and long-range inhibition lead to a spatially localized activity profile with the excitatory neurons having broader tuning of activity than the inhibitory neurons (Fig. 7C,F). On the other hand, the reverse arrangement of the excitatory and inhibitory synaptic projections, that is, long-range excitation and short-range inhibition (Fig. 7D, or with the addition of nonselective inhibitory projections, Fig. 7E), leads to stable persistent activity with broader tuning of the inhibitory neurons than of the excitatory neurons (Fig. 7G,H), as seen experimentally (Rao et al., 1999, 2000; Constantinidis and Goldman-Rakic, 2002). In all cases, neurons receive closely balanced excitation and inhibition, and thus the excitatory and inhibitory inputs show the same tuning widths (Fig. 7I–K). This balance of excitation and inhibition with the same spatial tuning is a general feature of negative-derivative feedback networks, since the large amounts of excitation and inhibition required for strong derivative feedback must cancel to avoid saturation or total silencing of firing rates.

Thus, in the negative-derivative feedback networks, the relative tuning widths of the excitatory and inhibitory neurons are inversely correlated with the widths of the excitatory and inhibitory synaptic connections (Fig. 7A). This reciprocal relationship between the tuning widths of the neurons and the widths of synaptic projections is a consequence of the balance of excitatory and inhibitory inputs (Fig. 7I–K): because the tuning width of the total excitatory or inhibitory synaptic input onto a neuron is given by a convolution of the synaptic connectivity onto this neuron and the width of the presynaptic neurons' tuning curves, achieving balanced inhibitory and excitatory inputs requires that the experimentally observed broader inhibitory (compared with excitatory) tuning curves be offset by relatively narrower inhibitory synaptic connectivity profiles. This is different from most previous models for spatial working memory, which require broader negative feedback and show no reciprocal relationship between tuning widths of synaptic connectivity and activity profiles. Without different timescales for positive and negative feedback pathways (and, thus, without negative-derivative feedback), narrower negative feedback cannot sustain spatially localized activity (Fig. 8A,E,I,M; see Ermentrout and Cowan, 1980 for a mathematical proof). To stabilize spatially localized activity in such traditional lateral inhibitory models, broader negative feedback than positive feedback is required. This can be achieved either by long-range E-to-I connections (Fig. 8B,F) or long-range I-to-E synaptic connections (Fig. 8C,D,G,H). With broader negative feedback, the excitatory neurons receive broader inhibitory inputs than excitatory inputs (Fig. 8N–P). With no requirement of a close balance between excitation and inhibition, the reciprocal relationship between tuning widths and widths of synaptic projections is not observed in these previous models (Fig. 8J–L). 
Thus, this reciprocal relationship is a distinct feature of the negative-derivative feedback networks that highlights the mechanism underlying spatial working memory based on balanced excitatory and inhibitory inputs.
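The convolution argument can be checked numerically under simplifying assumptions. The sketch below treats both the connectivity profiles and the presynaptic tuning curves as Gaussians on the ring (an assumption made for illustration, as are all the widths), for which variances add under convolution. Given a broader inhibitory than excitatory tuning curve, it solves for the inhibitory connectivity width that makes the excitatory and inhibitory input profiles equally wide.

```python
import numpy as np

N = 512
theta = np.linspace(-np.pi, np.pi, N, endpoint=False)

def gauss(sigma):
    """Unit-sum Gaussian-shaped profile centered at theta = 0."""
    g = np.exp(-0.5 * (theta / sigma) ** 2)
    return g / g.sum()

def circ_conv(a, b):
    """Circular convolution on the ring of two profiles centered at theta = 0."""
    fa = np.fft.rfft(np.fft.ifftshift(a))
    fb = np.fft.rfft(np.fft.ifftshift(b))
    return np.fft.fftshift(np.fft.irfft(fa * fb, n=N))

def width(p):
    """Standard deviation of a unit-sum profile centered at theta = 0."""
    return np.sqrt(np.sum(p * theta ** 2))

# Hypothetical widths (radians): inhibitory activity is tuned more broadly.
sigma_act = {"E": 0.3, "I": 0.5}
sigma_conn_E = 0.5

# Gaussian variances add under convolution, so equal input widths require
# sigma_conn_I^2 + sigma_act_I^2 = sigma_conn_E^2 + sigma_act_E^2.
sigma_conn_I = np.sqrt(sigma_conn_E**2 + sigma_act["E"]**2 - sigma_act["I"]**2)

exc_input = circ_conv(gauss(sigma_conn_E), gauss(sigma_act["E"]))
inh_input = circ_conv(gauss(sigma_conn_I), gauss(sigma_act["I"]))

print(f"inhibitory connectivity width: {sigma_conn_I:.3f} (excitatory: {sigma_conn_E:.3f})")
print(f"input widths: E = {width(exc_input):.3f}, I = {width(inh_input):.3f}")
```

With these example numbers, matching the input widths forces the inhibitory connectivity to be narrower than the excitatory connectivity, which is the reciprocal relationship described above.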

Robust memory performance against common perturbations to synaptic weights

A major challenge in short-term memory networks is stably maintaining analog memory representations in the face of perturbations. Many types of memory networks, including the negative-derivative feedback networks, are quite robust against random noise in synaptic weights, which largely can be averaged out across the network, and against random noise inputs, which are filtered out by the slow network dynamics underlying persistent activity. Resisting systematic perturbations in weights or intrinsic neuronal response properties has proven to be more challenging. An advantage of negative-derivative networks is that the balance condition that defines these networks is robust against many types of such naturally occurring perturbations. For example, global increases in the intrinsic gains of all neurons, which are equivalent to multiplicatively scaling the strengths of all synaptic connections, do not affect the balance of excitation and inhibition upon which negative-derivative feedback depends. As a result, such perturbations have minimal effect upon the ability of the network to maintain spatially localized persistent activity (Fig. 9A). Conceptually, this is because each neuronal population participates in both positive (through the E-to-E and, effectively, the I-to-I connections) and negative (through E-to-I and I-to-E connections) feedback loops so that such perturbations produce offsetting changes in positive and negative feedback. Quantitatively, this result reflects that the balance condition for derivative-feedback networks is ratiometric, depending only upon the ratio of the synaptic strengths, [ĴEE(n)ĴII(n)]/[ĴEI(n)ĴIE(n)] ∼ 1 (see Eq. 21). Similarly, examination of this ratiometric condition shows that maintenance of persistent activity with negative-derivative feedback is also robust against global changes in the intrinsic gain of excitatory neurons alone (changes in ĴEE(n) and ĴEI(n); Fig. 9B) or inhibitory neurons alone (changes in ĴIE(n) and ĴII(n); Fig. 9C). 
Likewise, global changes in excitatory synaptic inputs (Figs. 9E, 10A,B; changes in ĴEE(n) and ĴIE(n)), inhibitory synaptic inputs (Fig. 9F; changes in ĴEI(n) and ĴII(n)), or all synaptic inputs (Fig. 9D) have minimal effect upon the maintenance of persistent activity, as does loss of a fraction of a subpopulation of neurons, which is equivalent to loss of a fraction of the corresponding excitatory or inhibitory synaptic inputs as in Figure 9D–F.
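The ratiometric argument can be made concrete with a few lines of arithmetic. In the sketch below, the strengths in `J0` are hypothetical values chosen to satisfy the balance condition in a single Fourier mode, and the 20% gain change is likewise an arbitrary example. Each perturbation scales the pair of connections named in the text, and the ratio of positive to negative feedback is recomputed; an E-to-E-only change is included as a contrasting case that does alter the ratio.

```python
def balance_ratio(J):
    """Ratio of positive- to negative-feedback strength: J_EE*J_II / (J_EI*J_IE)."""
    return (J["EE"] * J["II"]) / (J["EI"] * J["IE"])

def scale(J, keys, g):
    """Return a copy of J with the listed connections multiplied by gain g."""
    return {k: (v * g if k in keys else v) for k, v in J.items()}

# Hypothetical balanced strengths; the first index is the postsynaptic population.
J0 = {"EE": 100.0, "II": 80.0, "EI": 160.0, "IE": 50.0}

perturbations = {
    "all connections":     ("EE", "EI", "IE", "II"),
    "E-neuron gain":       ("EE", "EI"),   # inputs onto excitatory cells
    "I-neuron gain":       ("IE", "II"),   # inputs onto inhibitory cells
    "excitatory synapses": ("EE", "IE"),   # outputs of excitatory cells
    "inhibitory synapses": ("EI", "II"),   # outputs of inhibitory cells
    "E-to-E only":         ("EE",),        # contrasting case: breaks the balance
}

ratios = {name: balance_ratio(scale(J0, keys, 1.2))
          for name, keys in perturbations.items()}

for name, r in ratios.items():
    print(f"{name:20s} ratio = {r:.3f}")
```

Every perturbation that scales one factor in the numerator together with one in the denominator leaves the ratio unchanged, whereas scaling a single connection type shifts it by the full gain factor.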

Furthermore, persistent neural activity in negative-derivative feedback networks is quite robust even against perturbations that occur locally in clusters of neurons with similar preferred spatial locations. To test how well the networks responded to local perturbations, we presented a transient input centered at a location θ = 0 (Fig. 9G–L) and asked how well this item could be maintained in memory following a local perturbation that affected 1/8 of the network. When the perturbation was centered on the preferred location (possibly modeling, for example, effects of attention that changed the gains of neurons triggered by the stimulus), the amplitude of activity increased or decreased mildly for neuronal gain or synaptic weight increases or decreases, respectively, but the time course of persistent activity was only mildly affected (Fig. 10C,D), with the change in time constant approximately linearly related to the perturbation size (data not shown). When the perturbation was located on the flanks of the presented stimulus location (Fig. 9G–L; black bar along x-axis), activity was again maintained persistently in time (data not shown), although there was a small warping of the Gaussian-shaped bump that reflected that the perturbation disrupted the translation-invariant form of the network's structure. Thus, in this case, the perturbation would slightly bias the observation of the cue location if the readout of the network activity remained the same as before the perturbation. However, because the local perturbation does not affect the balance of positive and negative feedback that maintains persistent activity, the cue would remain in memory and, if the perturbation were continually present, a change in network readout could in principle learn to compensate for the changes in shape of the maintained activity profile.

The negative-derivative feedback networks are not robust against all forms of perturbations, in particular those that break the balance between excitation and inhibition that underlies the balance in strength of the positive and negative feedback components of negative-derivative feedback. For example, global or local perturbations in specific excitatory pathways, such as the E-to-E pathways that are dominated by NMDA-type synapses, do disrupt persistent activity (Fig. 10E–H). This is because NMDA-mediated currents are stronger at E-to-E than at E-to-I connections (Wang et al., 2008; Wang and Gao, 2009; Rotaru et al., 2011); their disruption therefore unbalances the positive and negative feedback pathways, consistent with recent experimental observations that working memory is not robust to pharmacological blockade of NMDA receptors (Wang et al., 2013). The disruption of persistent activity under such perturbations can be quantified by changes in the time constant of decay of activity at the perturbed location, τeff. As the ratio between the strengths of the positive and negative feedback, Jpos/Jneg, deviates from 1, τeff decreases in inverse proportion to 1 − Jpos/Jneg (Fig. 10I,J). Thus, while many common perturbations such as loss of neurons, changes in intrinsic neuronal gains, or uniform changes in synaptic strengths maintain Jpos/Jneg close to 1 (Fig. 10I, dashed diagonal line), the negative-derivative feedback networks are susceptible to perturbations that break the tuning of Jpos/Jneg ∼ 1 (Fig. 10I, off-diagonal portions).
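As a rough illustration of this scaling, the snippet below assumes the simplest form consistent with the statement above, τeff = τscale/|1 − Jpos/Jneg|. The proportionality constant `tau_scale` and the example mismatch values are hypothetical and are not taken from the model; only the inverse dependence on the mismatch is drawn from the text.

```python
def tau_eff(j_pos, j_neg, tau_scale=0.1):
    """Effective decay time constant (s), assuming tau_scale / |1 - j_pos/j_neg|.

    tau_scale is a hypothetical proportionality constant, not a fitted value.
    """
    mismatch = abs(1.0 - j_pos / j_neg)
    return float("inf") if mismatch == 0.0 else tau_scale / mismatch

# Example: progressively weaken positive feedback relative to negative feedback.
for drop in (0.0, 0.01, 0.05, 0.20):
    print(f"Jpos/Jneg = {1.0 - drop:.2f} -> tau_eff = {tau_eff(1.0 - drop, 1.0):.2f} s")
```

Under this assumed form, halving the mismatch doubles the decay time constant, so small departures from Jpos/Jneg = 1 already shorten the memory substantially compared with the perfectly tuned case.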

We note that the lack of robustness to perturbations that disrupt the excitatory–inhibitory balance in our model differs from the behavior of previous lateral inhibition models, which require a rough but not exact balance between excitation and inhibition and therefore exhibit robust memory performance across a wider range of perturbations in connectivity. For example, mild perturbations of the strength of the E-to-E connection alone or the E-to-I or I-to-E connections alone do not affect the memory performance of lateral inhibition models (Camperi and Wang, 1998; Hansel and Sompolinsky, 1998). However, in these models, the spatial patterns of activity can be maintained only at a fixed amplitude, rather than over the graded range of amplitudes that can be sustained in models based upon derivative feedback. Thus, the more stringent tuning conditions on synaptic connections in the negative-derivative feedback networks reflect a trade-off between robustness to excitatory–inhibitory imbalance and the ability to encode the amplitude of spatial patterns of activity and temporally integrate the strength of inputs.

Irregular firing activity during persistent activity