Summary

Decision-making studies across different domains suggest that decisions can arise from multiple, parallel systems in the brain: a flexible system utilizing action-outcome expectancies, and a more rigid system based on situation-action associations. The hippocampus, ventral striatum and dorsal striatum make unique contributions to each system, but how information processing in each of these structures supports these systems is unknown. Recent work has shown covert representations of future paths in hippocampus, and of future rewards in ventral striatum. We developed new analyses in order to use a comparative methodology and apply the same analyses to all three structures. Covert representations of future paths and reward were both absent from the dorsal striatum. In contrast, dorsal striatum slowly developed situation representations that selectively represented action-rich parts of the task. This triple dissociation suggests that the different roles these structures play are due to differences in information processing mechanisms.

Keywords: dorsal striatum, ventral striatum, nucleus accumbens, hippocampus, neural ensembles, decoding, T-maze, learning, decision-making

Introduction

A key insight from decision-making studies across different domains is that decisions can arise from multiple, parallel systems in the brain (O'Keefe and Nadel, 1978; Schacter and Tulving, 1994; Poldrack and Packard, 2003; Daw et al., 2005; Redish et al., 2008). One system, broadly characterizable as “model-based”, relies on internally generated expectations of action outcomes, while the other, “model-free” system uses learned (cached) values of situation-action associations. This distinction between different decision-making systems has been demonstrated behaviorally (e.g. stimulus-response vs. response-outcome learning; Balleine and Dickinson, 1998, as well as response learning vs. place learning; Packard and McGaugh, 1996), has been articulated computationally (Daw et al., 2005; Niv et al., 2006), and maps onto dissociable brain structures (Packard and McGaugh, 1996; Yin et al., 2004). In rodent navigation studies, lesion and inactivation studies have shown that the model-based system (as engaged by place navigation) depends on hippocampal integrity, while the model-free system (as engaged by response navigation) depends on dorsal striatal integrity (Packard and McGaugh, 1996); ventral striatum may play a role in both systems (Atallah et al., 2007).

Computationally, the model-based system is thought to rely on world knowledge in order to generate specific expectations about the outcomes of actions, which may range from anticipating the outcome of a simple lever press to mental simulation or planning over extended spatial maps or Tower of London puzzles (Shallice, 1982). While this process may be computationally expensive, it allows for adaptive behavior in novel situations and under changing goals. In contrast, a typical model-free system associates actions with values, reflecting how well each action has turned out in the past. This system is efficient but also inflexible because cached action values reflect past experience rather than current goals (Daw et al., 2005; Niv et al., 2006; Redish et al., 2008). Thus, computational theories of decision-making have suggested potential information processing differences that capture the behavioral and anatomical distinctions between model-based and model-free decision-making systems. However, in order to reveal the mechanisms actually used in the brain to specifically support these different decision-making algorithms, it is necessary to compare neural activity between structures, on a task that engages both systems.

The Multiple-T task is a spatial decision task that engages different decision-making strategies (Schmitzer-Torbert and Redish, 2002). On this task, Johnson and Redish (2007) found that ensembles of hippocampal neurons transiently represented locations ahead of the animal, sweeping down one arm of the maze, then another, before the animal made its choice. Such “lookahead” operations are a critical element of model-based decision making. However, given that dorsal striatum can represent locations as well (Wiener, 1993; Yeshenko et al., 2004), an important question is whether this property is in fact unique to the hippocampus. Similarly, slow changes in dorsal striatal firing patterns (Barnes et al., 2005) demonstrate reorganization that could support gradual model-free learning. However, slow changes have also been observed in the hippocampus (Mehta et al., 1997; Lever et al., 2002), so in the absence of direct comparison it is not clear if such effects are specific to how dorsal striatum operates. Finally, van der Meer and Redish (2009) found ventral striatal firing patterns relevant to roles in both model-free and model-based decision-making, such as anticipatory “ramping” and covert activation of reward-responsive neurons at decision points. However, it is not known if dorsal striatal neurons show ramping, or reward activation at decision points.

Thus, in order to determine which of these information processing mechanisms are unique to these areas – a requirement if we are to understand the neural basis of their distinct behavioral roles – we compared the firing properties of dorsal striatal, ventral striatal, and hippocampal neurons on the Multiple-T task. Because several of these analyses require large neural ensembles, we used three different groups of animals, one for each structure. The data sets used here include data used in previously published work (dorsal striatum: Schmitzer-Torbert and Redish 2004, ventral striatum: van der Meer and Redish 2009, hippocampus: Johnson and Redish 2007). However, here we use a comparative approach applying the same, new analyses to each structure, allowing direct comparisons and the identification of a triple dissociation in information processing mechanisms.

Results

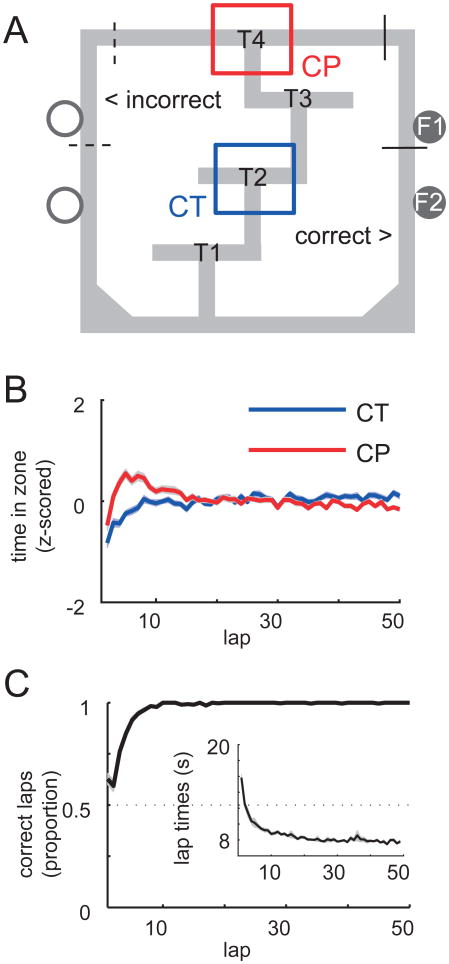

We recorded 1646, 2323 and 1473 spike trains from 98, 96, and 31 recording sessions from dorsal striatum, ventral striatum, and hippocampus respectively, as rats (n = 5 each for dorsal and ventral striatum, n = 6 for hippocampus) performed the Multiple-T task (Figure 1a). On this task, three low-cost choice points (T1-T3) with dead ends on one side were followed by a high-cost choice (T4) between the left or right “return rail”, with only one side rewarded during any given session. Although rats were trained on the task prior to electrode implant surgery, both the rewarded side (left or right choice at T4) as well as the correct sequence of preceding turns (T1-3) could be varied from day to day, such that the rats started out uncertain about the correct choices at the beginning of each session. Rats rapidly learned to choose the rewarded side, reaching asymptotic performance (>90%) within 10 laps (Figure 1c) with each group improving at a comparable rate (Figure S1a). Coincident with this rapid performance increase, rats exhibited pausing behavior at the high cost choice point (T4) during early laps, looking back and forth between left and right before making their choice (a hippocampus-dependent behavior known as vicarious trial and error or VTE, Tolman 1938; Hu and Amsel 1995). Pausing was absent at a control choice point (T2; Figures 1b and S1b). Following this initial VTE phase, choice performance reached asymptote, yet lap times continued to decrease (Figure 1c, inset), indicating a change in behavior beyond choice performance (Schmitzer-Torbert and Redish, 2002). These behavioral characteristics indicate the engagement of different decision-making strategies within single recording sessions.

Figure 1.

Differential coding of task structure in dorsal striatum, ventral striatum, and hippocampus

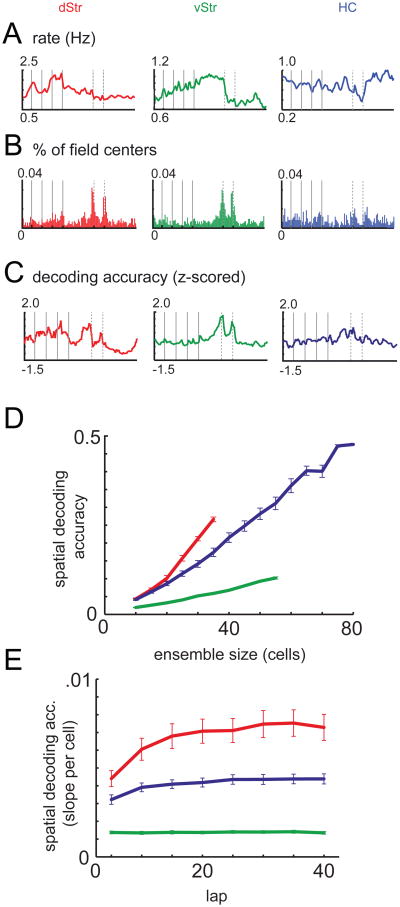

As both striatum and hippocampus are known to contain different cell types (Ranck, 1973; Kawaguchi, 1993), we separated putative projection neurons and interneurons based on firing statistics (Barnes et al., 2005; Schmitzer-Torbert and Redish, 2008). Consistent with previous reports, putative hippocampal pyramidal neurons tended to show spatially focused firing fields (“place fields”, O'Keefe and Dostrovsky 1971) while phasically firing neurons (PFNs; putative medium spiny neurons) in both striatal subregions exhibited a wider range of firing correlates, including maze-related activity and responsiveness to reward (Lavoie and Mizumori 1994; Schmitzer-Torbert and Redish 2004; Barnes et al. 2005; Berke et al. 2009). In order to examine differences between the three structures at the population level, we plotted the average firing rates for putative pyramidal neurons or PFNs in each of the three structures over the track (Figure 2a; interneurons, Figure S2b). Dorsal striatal PFNs were most active on the sequence of turns (S-T4), especially between T3 and T4, and least active on the bottom return rail (F2-S). Ventral striatal PFNs showed a ramping up of activity through the turn sequence, dropping off sharply at the first reward site. Hippocampal pyramidal neuron firing rates were relatively uniform over the track (Levene's test for uniformity, see Table S1) although a decrease between the two reward sites was visible, which may reflect effects of low running speed (pyramidal cells in hippocampus are sensitive to running speed; McNaughton et al. 1983). Because increases in population firing rate at a given location can result from (1) an increase in the number of cells that have fields there, and (2) increased firing rates at that location, we also plotted the distribution of peak firing locations (Figure 2b). 
Both striatal subregions showed a clear increase in the number of active cells at the reward sites, reflecting a population of reward-responsive neurons absent from hippocampus. In dorsal striatum, a decline in the number of firing fields was visible after the navigation sequence, while hippocampal firing fields were more uniformly distributed (Chi-square test, see Table S1). These characteristics support a distinction in information processing in which dorsal striatum emphasizes the turn sequence (consistent with situation-action encoding), ventral striatum shows a ramp (consistent with representation of motivationally relevant information), and hippocampus represents the track relatively uniformly (consistent with a spatial, map-like representation).

Figure 2.

The above comparison suggests underlying differences in neural coding, but does not reveal how informative these codes are. To address this, we measured the extent to which neural ensembles in the three structures contained information about location on the track. A Bayesian ensemble decoding algorithm was applied, which computes a probability distribution over the track given the numbers of spikes fired by each neuron within a given time window (Zhang et al. 1998; Figure S3). The average probability (over all time windows) at the rat's actual location was used as a measure of decoding accuracy: it indicates how good the ensemble is at representing the rat's actual location. Given the spatial modulation of firing rates on the track, we asked whether at the ensemble level, certain parts of the track could be decoded more accurately than others. To account for differences in decoding accuracy between recording sessions and structures (explored in the next section), we normalized the decoding accuracy distribution over the track (Figure 2c). Dorsal striatal decoding accuracy was best on the sequence of choice points (T1-T4), as well as the reward sites, but poor on the return segment (F2-T1). For hippocampus, the relatively uniform decoding accuracy was in similar agreement with its spatial firing rate distribution (Levene's test for uniformity, see Table S3). In contrast, ventral striatal decoding accuracy did not change over the sequence of turns as the firing rate distribution did. Thus, on the ensemble level, dorsal striatal decoding accuracy focused on the turn sequence on the track as well as the reward sites, while hippocampal decoding accuracy was most uniform, and ventral striatum highlighted the reward sites only.

Dorsal striatum, but not ventral striatum, shows a strong increase in coding efficiency within sessions

The preceding analysis normalized differences in decoding accuracy between sessions and structures. However, such differences can be informative when comparing neural coding properties between structures: we would like to ask “how well” each structure represents location on the track. In distributed representations, decoding accuracy depends on ensemble size (Zhang et al., 1998; Stanley et al., 1999; Wessberg et al., 2000). Thus, comparing decoding accuracy between sessions or structures with different ensemble sizes centers on the extent to which accuracy increases as a function of ensemble size (“coding efficiency”). We therefore used a neuron dropping procedure (Wessberg et al., 2000; Narayanan et al., 2005) to sample random subsets of ensembles (see Experimental Procedures) in order to plot overall decoding accuracy as a function of number of cells for the three structures (Figure 2d). Between structures, spatial decoding accuracy changed differentially as a function of the number of cells in the ensemble (2-factor ANOVA, structure × ensemble size interaction, F(2,1) = 200.05, p < 10⁻¹⁰). Dorsal striatal ensembles were the most efficient (steepest slope; 2-factor ANOVA for dorsal striatum and hippocampus, structure × ensemble size interaction, F(1,1) = 7.72, p = 0.0058) while in ventral striatum, spatial decoding accuracy increased the least with ensemble size, and hippocampus fell in between.
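The neuron dropping procedure and the resulting “coding efficiency” measure can be illustrated with a minimal sketch. It assumes a hypothetical `accuracy_fn` that returns decoding accuracy for a given subset of cell indices, and it assumes that efficiency is summarized as the slope of a straight-line fit; the function names, the number of repeats, and the linear fit are illustrative choices, not details taken from the analysis reported here.

```python
import numpy as np

def neuron_dropping_curve(n_cells, sizes, accuracy_fn, n_repeats=20, seed=0):
    """Mean decoding accuracy for random cell subsets of each requested size.

    accuracy_fn is a hypothetical callable: it takes an array of cell indices
    and returns a single decoding-accuracy value for that sub-ensemble.
    """
    rng = np.random.default_rng(seed)
    curve = []
    for k in sizes:
        # Draw n_repeats random subsets of k cells, without replacement.
        accs = [accuracy_fn(rng.choice(n_cells, size=k, replace=False))
                for _ in range(n_repeats)]
        curve.append(np.mean(accs))
    return np.array(curve)

def coding_efficiency(sizes, curve):
    """Slope of accuracy versus ensemble size (assumed linear fit)."""
    slope, _intercept = np.polyfit(sizes, curve, 1)
    return slope
```

With a toy `accuracy_fn` such as `lambda idx: 0.01 * len(idx)`, the fitted slope recovers the per-cell gain in accuracy, which is the quantity compared between structures.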

Previous studies have found slow changes (across days) in the distribution of dorsal striatal firing rates on a different T-maze task (Barnes et al., 2005). While within single sessions, we did not find evidence for systematic changes in dorsal striatal firing rates (Figure S2a), spatial decoding accuracy can vary independently of firing rate (compare Figures 2a,c) raising the possibility of reorganization with experience at the ensemble level. Thus, we asked how spatial coding efficiency changed over laps for the three structures. Decoding efficiency changed differentially between the three structures (Figure 2e; overall structure × lap interaction, F(2,1) = 11.84, p < 10⁻¹⁰): in ventral striatum, there was no evidence of a change over laps, while dorsal striatum showed the strongest increase. This analysis relies on accurate estimation of the slope of decoding accuracy as a function of the number of cells (verified in Figure S2c). To control for the possibility that behavioral differences between the groups of animals influenced these results, we used a multiple regression analysis to identify behavioral variables which explained a significant amount of variance in decoding efficiency, and subtracted the best fits based on these variables from the data (running speed, distance from an idealized path through the maze, and proportion of correct choices; see Figure S3b); this did not affect the pattern of results. Thus, with experience, dorsal striatum showed the strongest increase in coding efficiency, while hippocampus showed a modest amount, and ventral striatum showed none. These results suggest the presence of a dynamic reorganization process in dorsal striatum which comes to reflect the structure of the task with experience (Nakahara et al., 2002; Barnes et al., 2005; Schmitzer-Torbert and Redish, 2008; Berke et al., 2009).

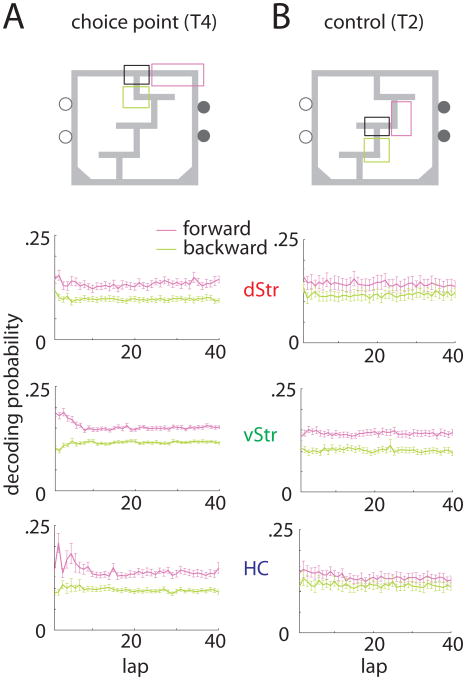

Hippocampus and ventral striatum, but not dorsal striatum, show forward representations at the choice point

Decoding provides access to representational content, allowing analysis of not just how much information a given ensemble contains, but also of what that information actually is (Schneidman et al., 2003). Johnson and Redish (2007) found that as rats paused at the final choice point, hippocampal representations of space swept ahead of the animal, down one arm of the maze and then the other, before the rat made its choice. It is presently not known if other areas in which spatial information is present, such as dorsal striatum, exhibit a similar effect. To investigate this, we applied the decoding algorithm to data from all three structures, using a 20 ms time window. Note that unlike the analysis in Johnson and Redish (2007), this method is “memoryless”, treating each time window as independent. For all passes through the final choice point, the proportion of the decoded probability distribution that fell either ahead of or behind the choice point (Figure 3a) was plotted as a function of lap. While for all three structures the decoding probability to the choice point itself increased over the first 10 laps (data not shown), for dorsal striatum (top panel) decoding to both the behind and ahead zones was marginally increased in this same period, indicating a nonspecific improvement in decoding accuracy. In contrast, ventral striatum and hippocampus showed a different pattern, where decoding ahead of, but not behind, the animal was high during early laps (2-factor ANOVA, lap-decoding zone interaction, smallest F(1,1) = 4.69, p = 0.031). This is not compatible with a nonspecific decoding improvement: instead, it suggests that during early laps there is increased representation of locations ahead of the animal. We did not find evidence for such events in dorsal striatum, either when averaged across sessions (2-factor ANOVA, lap-decoding zone interaction, F(1,1) = 1.78, p = 0.18) or upon visual inspection of decoding during individual passes through the choice point.
Thus, even though dorsal striatal position encoding is at least as good as that in hippocampus (Figure 2d), it did not selectively represent locations ahead of the animal at the choice point.
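As an illustration of the ahead/behind measure, the following sketch sums the decoded probability that falls in two zones of a linearized track for each time window, then averages over the windows of one pass. The zone boundaries (`behind_bins`, `ahead_bins`) and the function names are hypothetical; how the zones were actually defined is shown in Figure 3a and is not reproduced here.

```python
import numpy as np

def zone_mass(posterior, behind_bins, ahead_bins):
    """Decoded probability mass behind and ahead of the choice point.

    posterior: 1-D array over linearized position bins (sums to 1).
    behind_bins, ahead_bins: hypothetical lists of bin indices for each zone.
    """
    posterior = np.asarray(posterior, dtype=float)
    return posterior[behind_bins].sum(), posterior[ahead_bins].sum()

def pass_average(posteriors, behind_bins, ahead_bins):
    """Mean behind/ahead mass over all time windows of one choice-point pass."""
    masses = np.array([zone_mass(p, behind_bins, ahead_bins) for p in posteriors])
    behind, ahead = masses.mean(axis=0)
    return behind, ahead
```

Because each window is treated independently, this matches the “memoryless” character of the analysis: no smoothing or continuity constraint links one window's posterior to the next.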

Figure 3.

To assess whether lookahead in hippocampus (and ventral striatum) was specific to the final choice point, we repeated the same analysis for passes through turn 2, a low-cost choice point (Figure 3b). At this point, decoding ahead of the animal during early laps was much diminished, no longer reaching significance for either hippocampus or ventral striatum (2-factor ANOVA, lap-decoding zone interaction, largest F(1,1) = 0.25, p =0.62). Thus, lookahead occurred specifically at the final choice point, further supporting the notion that such processes are not permanently-on epiphenomena but can be dynamically engaged depending on task demands.

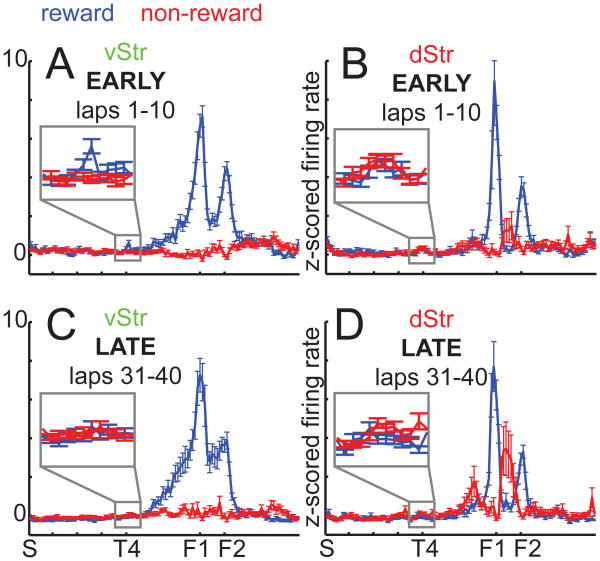

Covert representation of reward in ventral striatum, but not dorsal striatum

van der Meer and Redish (2009) showed that ventral striatal reward-responsive neurons tended to be active at the choice point during early laps, suggesting covert expectation of reward congruent with model-based decision-making. It is not known if dorsal striatal representations of reward also show this effect. To address this, we applied the same analysis to dorsal striatum, plotting the average (z-scored) firing rate of reward-responsive cells and non-reward-responsive cells over the maze (Figure 4). Note that, because this analysis is designed to address firing rates of reward and non-reward cells on the track in the absence of reward, the normalization and analysis were restricted to firing rates on the part of the track from the turn sequence start (S) to past the final choice point (T4). For completeness, we have included this analysis for hippocampal neurons in Figure S4b, even though these did not show the characteristic reward response of the striatal neurons (Figure 2b).
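A minimal sketch of this normalization, assuming each cell's firing rate has already been binned over the track segment from S to past T4: each cell is z-scored across those bins only, and the z-scored profiles are then averaged within the reward-responsive and non-reward-responsive groups. The function names and the handling of flat cells are illustrative assumptions.

```python
import numpy as np

def zscore_by_cell(rates):
    """Z-score each cell's binned firing rate across track bins.

    rates: (n_cells, n_bins) array, restricted to the S-to-past-T4 segment.
    """
    rates = np.asarray(rates, dtype=float)
    mu = rates.mean(axis=1, keepdims=True)
    sd = rates.std(axis=1, keepdims=True)
    sd[sd == 0] = 1.0  # flat cells map to zero instead of dividing by zero
    return (rates - mu) / sd

def group_profiles(rates, is_reward_cell):
    """Average z-scored profile for reward and non-reward cells."""
    z = zscore_by_cell(rates)
    mask = np.asarray(is_reward_cell, dtype=bool)
    return z[mask].mean(axis=0), z[~mask].mean(axis=0)
```

Restricting the normalization to the segment before the feeders keeps the large responses at the reward sites from dominating each cell's mean and standard deviation.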

Figure 4.

As reported in van der Meer and Redish (2009) for ventral striatum, a two-way ANOVA with location on the maze (nine bins, from the start of the first T to one-third of the way between T4 and F1) and cell type (reward or non-reward) as factors showed a significant interaction for early laps (1-10, F(8,1) =4.0, p < 0.001) with the reward cells having significantly higher activity in the T4 bin (F(1,1) = 12.56, p < 0.001; see Figure S4a for full firing rate distributions and additional statistics). During late laps (31-40) there was no such difference (F(1,1) =0.22, p =0.64). Thus, ventral striatal reward neurons showed a relative increase in activity specifically at the final choice point during early laps. In contrast, dorsal striatal reward neurons showed no difference in activity at T4 during early laps (F(1,1) =2.47, p =0.116); for late laps, there was a difference (F(1,1) =5.77, p =0.0163), but the non-reward cells were the more active group. Thus, even though we found similarly prominent reward-responsive activity in dorsal striatum compared to ventral striatum, only ventral striatal reward cells showed covert representation of reward at the choice point during early laps.

Discussion

Hippocampus, dorsal striatum and ventral striatum processed information differently on this task, consistent with their different roles in decision-making.

Hippocampus as a cognitive map with online search

Hippocampal “place cells” are classically thought of as providing a cognitive map that supports flexible route planning (O'Keefe and Nadel, 1978). Such a map is an example of a world model that could be used for internal generation of potential outcomes during decision-making. In support of this idea, Johnson and Redish (2007) found that hippocampal ensembles transiently represented possible outcomes at the final choice point of the Multiple-T task. We extend this result in several important ways. First, we found that this “lookahead” is not a permanently enabled property of hippocampus, as would be expected from effects like theta phase precession (Maurer and McNaughton, 2007). Instead, lookahead was specific to the final choice point (T4) and absent from a control choice point (T2). This supports the idea that hippocampal lookahead can be dynamically engaged during decision-making. Critically, using the same analysis on the same task, we found no evidence for lookahead in dorsal striatum, even though dorsal striatal ensembles could represent location as well as or better than hippocampal ensembles on this task (Figure 2d). This demonstrates that lookahead is not a general, brain-wide phenomenon shared by all task-relevant representations but in the current dataset is restricted to brain systems known to play a role in “model-based” decision-making.

Dorsal striatum as a situation-action associator

We found that dorsal striatal firing, field, and decoding distributions were skewed towards the turn sequence of the task, as well as reward locations and cues predicting reward delivery (Figure 2). The turn sequence and reward cues together determine the structure of the task, i.e. how the actions the rat takes map onto motivationally relevant outcomes. Models that learn what action to take in what situation in order to maximize reward (such as temporal-difference reinforcement learning), need to represent this information (Sutton and Barto, 1998). Thus, dorsal striatum selectively represents those task aspects which computational accounts suggest are important for gradual, model-free learning. This is congruent with previous suggestions about the role of dorsal striatum as indicated by inactivation, recording, and imaging studies (Poldrack and Packard, 2003; Balleine et al., 2007; Redish et al., 2008). However, in our comparative approach, we can additionally show what dorsal striatum does not represent. It does not represent locations ahead of the animal at decision points, as hippocampus does; neither does it covertly represent rewards, as ventral striatum does. Although a population of dorsal striatal neurons responded to reward-predictive cues, these were not neurons that were activated by the rewards themselves (Figure 3b), consistent with a developing representation of cue-action value associations. Thus, dorsal striatum does not appear to employ model-based internal generation of possible outcomes.

Dorsal striatum did not represent all locations equally, even though animals executed similar actions at those locations, suggesting that dorsal striatum learns to disregard task-irrelevant aspects with experience (such as the maze segment from the reward sites to the start of the turn sequence, which is constant and does not contain decision points). The gradual increase in coding efficiency further supports such reorganization with experience, consistent with reports from Graybiel and colleagues (e.g. Barnes et al., 2005), although we show this effect within session (instead of across days) and using an ensemble measure (which addresses spatial information content rather than firing rates alone). Taken together, these results support the notion that dorsal striatum learns to represent situation-action associations as proposed by computational accounts of model-free, habitual, or response-driven decision-making. It explicitly does not share properties important for model-based decision-making, even though the same analysis reveals such properties in other structures on the same task.

It may be surprising that dorsal striatum appears to contain more information about location on the track than hippocampus, whose relatively uniform distribution of place cells is well suited to spatial representation. However, on this task, spatial location is an important element of task structure (whether to turn left or right depends on location; reward locations are fixed). As such, dorsal striatum would be expected to represent spatial information on this task. Others have also observed that dorsal striatal firing patterns contain information about spatial location (Wiener, 1993; Yeshenko et al., 2004). However, this does not mean that dorsal striatal representations are intrinsically spatial. In fact, studies on tasks where reward locations were explicitly dissociated from space (Schmitzer-Torbert and Redish, 2008) or where multiple locations were equivalently associated with rewards (Berke et al., 2009) found that dorsal striatum did not represent space well. More generally, these considerations serve as a reminder that it is important to consider both task structure and ensemble-level properties when making inferences about representational content.

Ventral striatum as an evaluator of actual and expected situations

As shown by van der Meer and Redish (2009), ventral striatal reward neurons tended to re-activate at the final choice point during early laps. We show here that even though dorsal striatum has a similarly sized population of reward-responsive neurons, by the same analysis on the same task dorsal striatal neurons do not show this effect. This serves, first, as a particularly informative control that strengthens the original finding by illustrating that it is not due to non-specific behavioral features such as simply pausing at the choice point. More importantly, this difference in information processing mechanisms in dorsal and ventral striatum maps onto the conceptual difference between situation-action representations and action-outcome representations: while dorsal striatal neurons learned to respond to reward-predictive cues, these neurons did not respond to actual rewards. This suggests a potential role for ventral striatum in model-based decision-making.

Ventral striatum is generally acknowledged as an important structure in mediating motivated or goal-directed behaviors. A popular suggestion is that it acts as the “critic” component of a reinforcement learning system (Atallah et al., 2007). Anticipatory ramp cells seen in primate and rodent studies could be interpreted as an instantiation of a critic-like value signal (Schultz et al., 1992; Lavoie and Mizumori, 1994; Miyazaki et al., 1998). The ramp nature of this signal suggests a certain motivational relevance. On our task, it is clear that ventral and dorsal striatum have very different information processing properties. Dorsal striatum lacks the population firing rate ramp of ventral striatum, while ventral striatal decoding accuracy was poor compared to dorsal striatum and hippocampus. This suggests that ventral striatum represents global quantities related to value or motivation, which may fluctuate throughout a session, resulting in poor decoding accuracy. Thus, our results imply that ventral striatum may carry multiple motivationally relevant signals: a global ramp that may serve as the value signal in model-free learning systems, but also the covert representation of reward important for model-based systems.

Synthesis

In conclusion, we observed multiple dissociations in information processing between dorsal striatum, ventral striatum and hippocampus: While hippocampal neural ensembles encoded future paths during pauses at the choice point, dorsal striatal ensembles did not. While ventral striatal reward-related cells showed activity during pauses at the choice point, dorsal striatal reward-related cells did not. In contrast, dorsal striatal ensembles slowly developed a more accurate spatial representation than hippocampal ensembles on the action-rich navigation sequence of the task, and dorsal striatal non-reward-related cells slowly developed responses to high-value cues.

These differences reveal the different computations these structures are performing to accommodate their roles in model-based and model-free decision-making: Hippocampus provides a cognitive map of the environment, which can dynamically represent potential future paths. During pauses at choice points, ventral striatal reward representations are reactivated, as an expectation of future reward outcome. Ventral striatum also develops an activity ramp through the task, which may provide a motivationally relevant signal. Dorsal striatum does not represent expectancies or show a firing rate ramp, but instead develops stimulus-action representations with experience. These data bridge the behavioral and lesion data with computational/theoretical models of decision-making, directly linking distinct behavioral roles with unique information processing mechanisms at the neural level.

Experimental Procedures

Subjects

16 male Brown Norway-Fisher 344 hybrid rats (Harlan, IA), aged 8–12 months, were food deprived to no less than 85% of their free-feeding body weight during behavioral training; water was available ad libitum in the home cage at all times. All procedures were conducted in accordance with National Institutes of Health guidelines for animal care and approved by the IACUC at the University of Minnesota. Care was taken to minimize the number of animals used in these experiments and to minimize suffering.

Multiple-T task

The Multiple-T task apparatus, a carpet-lined track elevated 15 cm above the floor, consisted of a turn sequence of 3-5 T-choices, a top and a bottom rail, and two return rails leading back to the start of the turn sequence (Figure 1a). The specific configuration of the turn sequence could be varied from day to day. Two feeder sites at each of the return rails could deliver two 45 mg food pellets each (Research Diets, New Brunswick, NJ) through computer-controlled pellet dispensers (Med-Associates, St. Albans, VT), released when a ceiling-mounted camera and a position tracking system (Cheetah, Neuralynx, Bozeman, MT, and custom software written in MATLAB, Natick, MA) detected the rat crossing the active feeder trigger lines. Only one set of feeder sites (either on the left or the right return rail) was active in any given session. For pre-surgery training, rats ran 3-T and 5-T mazes with the turn sequence changed every day; once rats were running proficiently after surgery, recording sessions were run on 4-T mazes in a sequence of 7 novel/7 unchanged/7 novel configurations, for a total of 21 sessions per rat. Novel sequences consisted of session-unique sequences (Figure 1a shows the “RRLR” sequence). 98, 96, and 31 recording sessions from dorsal striatum, ventral striatum, and hippocampus respectively were accepted for analysis: for the hippocampal recordings we obtained good ensemble sizes only for a few days out of the 21-day protocol. However, the proportions of Novel/Familiar sessions were comparable to those in the other data sets (20 novel/11 familiar, compared to 68/30 for dorsal and 68/28 for ventral striatum). Furthermore, all analyses reported are within-session only, so the number of sessions should not affect the results. Rats were allowed to run as many laps as desired in each 40-minute recording session. Data collection was as described previously (Schmitzer-Torbert and Redish, 2004; Johnson and Redish, 2007; van der Meer and Redish, 2009).

Surgery

Following pre-training, rats were chronically implanted with an electrode array consisting of 12 tetrodes and 2 reference electrodes, each of which could be moved along the dorsal-ventral axis (“hyperdrive”, Kopf, Tujunga, CA). Structures were targeted by centering the hyperdrive on stereotactic coordinates relative to bregma: AP +1.2 mm, ML ±2.3–2.5 mm for ventral striatum; AP +0.5 mm, ML ±3.0 mm for dorsal striatum; and AP -3.8 mm, ML ±4.0 mm for hippocampus (subfield CA3), as described previously (Schmitzer-Torbert and Redish, 2004; Johnson and Redish, 2007; van der Meer and Redish, 2009).

Spatial decoding

We used a Bayesian spatial decoding algorithm, designed to provide an estimate of the animal's location given ensemble spiking activity at any given time in the session (Zhang et al., 1998). For each time window, this method takes the spike count s_i from each cell i and computes the posterior probability of the rat being at location x given the vector of spike counts s, p(x|s). We used a time window of 200 ms (for the analysis in Figure 2) or 20 ms (for the analysis in Figure 3) and a uniform spatial prior. To obtain the decoding accuracy measure, for each time window, the probability of decoding to the animal's actual location was taken from the decoded probability distribution for that time window; the pattern of results did not change when slightly wider windows of 3 and 5 bins around the animal's actual location were used. This “local probability” was then averaged for each actual location on the track; averaging within locations, rather than over time windows alone, minimizes the contribution of long periods of inactivity at the reward sites. (This method is identical to that used in Schmitzer-Torbert and Redish, 2008, and in van der Meer and Redish, 2009.) Only recording sessions with at least 20 simultaneously recorded cells were used, and only cells that fired at least 25 spikes in the session were included. For the slow timescale analysis, using a time window of 50, 100, or 150 ms instead did not change the pattern observed (data not shown).
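The decoding and accuracy steps described above can be illustrated with a minimal sketch. It assumes independent Poisson spiking, as in the one-step reconstruction of Zhang et al. (1998), and is not the analysis code used for this study. The function names, the `eps` rate floor, and the toy tuning curves are hypothetical choices made for illustration only.

```python
import math


def decode_posterior(counts, tuning, tau, prior=None):
    """Posterior p(x|s) over spatial bins for one time window.

    Assumes independent Poisson cells (an assumption of this sketch).
    counts: spike count s_i of each cell i in the window
    tuning: tuning[i][x] = mean firing rate (Hz) of cell i in bin x
    tau:    window length in seconds (e.g. 0.2 or 0.02)
    prior:  probability of each bin; uniform when None
    """
    n_bins = len(tuning[0])
    if prior is None:
        prior = [1.0 / n_bins] * n_bins  # uniform spatial prior
    eps = 1e-9  # hypothetical floor so that log(0) never occurs
    log_post = []
    for x in range(n_bins):
        lp = math.log(prior[x])
        for s_i, f_i in zip(counts, tuning):
            rate = max(f_i[x], eps)
            # Poisson log-likelihood; the log(s_i!) term is constant in x
            lp += s_i * math.log(tau * rate) - tau * rate
        log_post.append(lp)
    peak = max(log_post)  # subtract the peak for numerical stability
    unnorm = [math.exp(lp - peak) for lp in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def local_probability_by_location(posteriors, actual_bins, n_bins):
    """Mean decoded probability at the actual location, per actual bin.

    Averaging within each bin first keeps long stationary periods
    (e.g. at the reward sites) from dominating the measure.
    Bins that were never visited yield None.
    """
    sums = [0.0] * n_bins
    visits = [0] * n_bins
    for post, x in zip(posteriors, actual_bins):
        sums[x] += post[x]
        visits[x] += 1
    return [s / v if v else None for s, v in zip(sums, visits)]


# Toy example: two cells, three spatial bins, one 200 ms window.
toy_tuning = [[10.0, 1.0, 1.0], [1.0, 1.0, 10.0]]
toy_posterior = decode_posterior([3, 0], toy_tuning, tau=0.2)
```

In this toy case the first cell fires three spikes and the second is silent, so the posterior concentrates on the first bin, where the first cell's rate is highest. Working in log space and normalizing at the end avoids numerical underflow when many cells are combined.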

Further experimental procedures, including cell classification, track linearization, and spatial distribution analyses, can be found in the Supplementary Online Material.

Acknowledgments

We thank Anoopum Gupta, Jadin Jackson, and Paul Schrater for discussion, and David Crowe and Geoffrey Schoenbaum for comments on a previous version of the manuscript. Supported by NIH grants MH068029, MH080318, T32HD007151 (Center for Cognitive Sciences, University of Minnesota), IGERT training grant 9870633, graduate student NSF fellowships, Dissertation Fellowships, and the Land Grant Professorship program at the University of Minnesota. This work has been published in abstract form (Frontiers in Systems Neuroscience, conference abstract: CoSyNe 2009); we are grateful to the conference participants for their suggestions.

References

- Atallah HE, Lopez-Paniagua D, Rudy JW, O'Reilly RC. Separate neural substrates for skill learning and performance in the ventral and dorsal striatum. Nat Neurosci. 2007;10(1):126–131. doi: 10.1038/nn1817.

- Balleine BW, Delgado MR, Hikosaka O. The role of the dorsal striatum in reward and decision-making. J Neurosci. 2007;27(31):8161–8165. doi: 10.1523/JNEUROSCI.1554-07.2007.

- Barnes TD, Kubota Y, Hu D, Jin DZ, Graybiel AM. Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature. 2005;437(7062):1158–1161. doi: 10.1038/nature04053.

- Berke JD, Breck JT, Eichenbaum H. Striatal versus hippocampal representations during win-stay maze performance. J Neurophysiol. 2009;101:1575–1587. doi: 10.1152/jn.91106.2008.

- Berke JD, Okatan M, Skurski J, Eichenbaum HB. Oscillatory entrainment of striatal neurons in freely moving rats. Neuron. 2004;43(6):883–896. doi: 10.1016/j.neuron.2004.08.035.

- Csicsvari J, Hirase H, Czurkó A, Mamiya A, Buzsáki G. Oscillatory coupling of hippocampal pyramidal cells and interneurons in the behaving rat. J Neurosci. 1999;19(1):274–287. doi: 10.1523/JNEUROSCI.19-01-00274.1999.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8(12):1704–1711. doi: 10.1038/nn1560.

- Hu D, Amsel A. A simple test of the vicarious trial-and-error hypothesis of hippocampal function. Proc Natl Acad Sci U S A. 1995;92(12):5506–5509. doi: 10.1073/pnas.92.12.5506.

- Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. J Neurosci. 2007;27(45):12176–12189. doi: 10.1523/JNEUROSCI.3761-07.2007.

- Kawaguchi Y. Physiological, morphological, and histochemical characterization of three classes of interneurons in rat neostriatum. J Neurosci. 1993;13(11):4908–4923. doi: 10.1523/JNEUROSCI.13-11-04908.1993.

- Lavoie AM, Mizumori SJ. Spatial, movement- and reward-sensitive discharge by medial ventral striatum neurons of rats. Brain Res. 1994;638(1-2):157–168. doi: 10.1016/0006-8993(94)90645-9.

- Lever C, Wills T, Cacucci F, Burgess N, O'Keefe J. Long-term plasticity in hippocampal place-cell representation of environmental geometry. Nature. 2002;416(6876):90–94. doi: 10.1038/416090a.

- Mallet N, Le Moine C, Charpier S, Gonon F. Feedforward inhibition of projection neurons by fast-spiking GABA interneurons in the rat striatum in vivo. J Neurosci. 2005;25(15):3857–3869. doi: 10.1523/JNEUROSCI.5027-04.2005.

- Markus EJ, Barnes CA, McNaughton BL, Gladden VL, Skaggs WE. Spatial information content and reliability of hippocampal CA1 neurons: Effects of visual input. Hippocampus. 1994;4:410–421. doi: 10.1002/hipo.450040404.

- Maurer AP, McNaughton BL. Network and intrinsic cellular mechanisms underlying theta phase precession of hippocampal neurons. Trends Neurosci. 2007;30(7):325–333. doi: 10.1016/j.tins.2007.05.002.

- McNaughton BL, Barnes CA, O'Keefe J. The contributions of position, direction, and velocity to single unit activity in the hippocampus of freely-moving rats. Exp Brain Res. 1983;52(1):41–49. doi: 10.1007/BF00237147.

- Mehta MR, Barnes CA, McNaughton BL. Experience-dependent, asymmetric expansion of hippocampal place fields. Proc Natl Acad Sci U S A. 1997;94(16):8918–8921. doi: 10.1073/pnas.94.16.8918.

- Miyazaki K, Mogi E, Araki N, Matsumoto G. Reward-quality dependent anticipation in rat nucleus accumbens. Neuroreport. 1998;9(17):3943–3948. doi: 10.1097/00001756-199812010-00032.

- Nakahara H, Amari Si, Hikosaka O. Self-organization in the basal ganglia with modulation of reinforcement signals. Neural Comput. 2002;14(4):819–844. doi: 10.1162/089976602317318974.

- Narayanan NS, Kimchi EY, Laubach M. Redundancy and synergy of neuronal ensembles in motor cortex. J Neurosci. 2005;25(17):4207–4216. doi: 10.1523/JNEUROSCI.4697-04.2005.

- Niv Y, Joel D, Dayan P. A normative perspective on motivation. Trends Cogn Sci. 2006;10(8):375–381. doi: 10.1016/j.tics.2006.06.010.

- O'Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 1971;34(1):171–175. doi: 10.1016/0006-8993(71)90358-1.

- O'Keefe J, Nadel L. The hippocampus as a cognitive map. Clarendon Press; 1978.

- Packard MG, McGaugh JL. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiol Learn Mem. 1996;65(1):65–72. doi: 10.1006/nlme.1996.0007.

- Poldrack RA, Packard MG. Competition among multiple memory systems: converging evidence from animal and human brain studies. Neuropsychologia. 2003;41(3):245–251. doi: 10.1016/s0028-3932(02)00157-4.

- Ranck JB. Studies on single neurons in dorsal hippocampal formation and septum in unrestrained rats. I. Behavioral correlates and firing repertoires. Exp Neurol. 1973;41(2):461–531. doi: 10.1016/0014-4886(73)90290-2.

- Redish AD, Jensen S, Johnson A. A unified framework for addiction: vulnerabilities in the decision process. Behav Brain Sci. 2008;31(4):415–437; discussion 437–487. doi: 10.1017/S0140525X0800472X.

- Schacter DL, Tulving E, editors. Memory Systems 1994. MIT Press; 1994.

- Schmitzer-Torbert N, Redish AD. Development of path stereotypy in a single day in rats on a multiple-T maze. Arch Ital Biol. 2002;140(4):295–301.

- Schmitzer-Torbert N, Redish AD. Neuronal activity in the rodent dorsal striatum in sequential navigation: separation of spatial and reward responses on the multiple T task. J Neurophysiol. 2004;91(5):2259–2272. doi: 10.1152/jn.00687.2003.

- Schmitzer-Torbert NC, Redish AD. Task-dependent encoding of space and events by striatal neurons is dependent on neural subtype. Neuroscience. 2008;153(2):349–360. doi: 10.1016/j.neuroscience.2008.01.081.

- Schneidman E, Bialek W, Berry MJ. Synergy, redundancy, and independence in population codes. J Neurosci. 2003;23(37):11539–11553. doi: 10.1523/JNEUROSCI.23-37-11539.2003.

- Schultz W, Apicella P, Scarnati E, Ljungberg T. Neuronal activity in monkey ventral striatum related to the expectation of reward. J Neurosci. 1992;12(12):4595–4610. doi: 10.1523/JNEUROSCI.12-12-04595.1992.

- Shallice T. Specific impairments of planning. Philos Trans R Soc Lond B Biol Sci. 1982;298(1089):199–209. doi: 10.1098/rstb.1982.0082.

- Stanley G, Li F, Dan Y. Reconstruction of natural scenes from ensemble responses in the lateral geniculate nucleus. J Neurosci. 1999;19(18):8036–8042. doi: 10.1523/JNEUROSCI.19-18-08036.1999.

- Sutton R, Barto A. Reinforcement Learning: An Introduction. MIT Press; Cambridge, MA: 1998.

- Tolman E. The determiners of behavior at a choice point. Psychol Rev. 1938;45(1):1–35.

- van der Meer MAA, Redish AD. Covert expectation-of-reward in rat ventral striatum at decision points. Front Integr Neurosci. 2009;3(1):1–15. doi: 10.3389/neuro.07.001.2009.

- Wessberg J, Stambaugh C, Kralik J, Beck P, Laubach M, Chapin J, Kim J, Biggs S, Srinivasan M, Nicolelis M. Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature. 2000;408(6810):361–365. doi: 10.1038/35042582.

- Wiener SI. Spatial and behavioral correlates of striatal neurons in rats performing a self-initiated navigation task. J Neurosci. 1993;13(9):3802–3817. doi: 10.1523/JNEUROSCI.13-09-03802.1993.

- Yeshenko O, Guazzelli A, Mizumori SJY. Context-dependent reorganization of spatial and movement representations by simultaneously recorded hippocampal and striatal neurons during performance of allocentric and egocentric tasks. Behav Neurosci. 2004;118(4):751–769. doi: 10.1037/0735-7044.118.4.751.

- Yin HH, Knowlton BJ, Balleine BW. Lesions of dorsolateral striatum preserve outcome expectancy but disrupt habit formation in instrumental learning. Eur J Neurosci. 2004;19(1):181–189. doi: 10.1111/j.1460-9568.2004.03095.x.

- Zhang K, Ginzburg I, McNaughton BL, Sejnowski TJ. Interpreting neuronal population activity by reconstruction: unified framework with application to hippocampal place cells. J Neurophysiol. 1998;79(2):1017–1044. doi: 10.1152/jn.1998.79.2.1017.
