Abstract

In 1999, the Institute of Medicine (IOM) published Ensuring Quality Cancer Care, an influential report that described an ideal cancer care system and issued ten recommendations to address pervasive gaps in the understanding and delivery of quality cancer care. Despite generating much fervor, the report’s recommendations—including two recommendations related to quality measurement—remain largely unfulfilled.

Amidst continuing concerns regarding increasing costs and questionable quality of care, the IOM charged a new committee with revisiting the 1999 report and with reassessing national cancer care, with a focus on the aging US population. The committee identified high-quality patient-clinician relationships and interactions as central drivers of quality and attributed existing quality gaps, in part, to the nation’s inability to measure and improve cancer care delivery in a systematic way. In 2013, the committee published its findings in Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis, which included two recommendations that emphasize coordinated, patient-centered quality measurement and information technology enhancements:

• Develop a national quality reporting program for cancer care as part of a learning health care system; and,
• Develop an ethically sound learning health care information technology system for cancer that enables real-time analysis of data from cancer patients in a variety of care settings.

These recommendations underscore the need for independent national oversight, public-private collaboration, and substantial funding to create robust, patient-centered quality measurement and learning enterprises to improve the quality, accessibility, and affordability of cancer care in America.

Background of the Report

Cancer patients deserve the best care possible, yet many obstacles render timely, efficient, safe, and affordable cancer care an elusive goal, even in the 21st century. Since the 1990s, the Institute of Medicine (IOM) has directed attention to these quality issues. Most recently, the IOM assembled the Committee on Improving the Quality of Cancer Care: Addressing the Challenges of an Aging Population (“Committee”) in 2012 to revisit prior analyses and recommendations for the nation’s cancer care delivery system, examining what had changed, what challenges remained, whether new problems had arisen, and how health care reform might affect quality of care, with a specific focus on the aging US population. Examining the current delivery system through the six IOM aims for improvement (safety, timeliness, efficiency, effectiveness, patient-centeredness, and equity),2 the Committee identified many quality gaps and published its findings in Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis.1 This report takes a fresh look at today’s obstacles to high-quality cancer care and recommends solutions for major stakeholders. Patient-clinician relationships and interactions, universal elements of the health care system, are a central focus of its recommendations and conceptual framework, since high-quality interactions are critical to delivering high-quality cancer care.

This report builds upon a 1999 IOM report, Ensuring Quality Cancer Care, which outlined ten recommendations to improve the quality of cancer care, including two recommendations to develop, collect, and disseminate a core set of cancer quality measures.3 Unfortunately, many objectives outlined in Ensuring Quality Cancer Care remain unfulfilled, and the status of quality measures and data systems remains remarkably unchanged. Then and now, the IOM associated many quality gaps with an inability to measure performance and improve it in a systematic fashion. Thus, Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis highlights the key role that quality measurement plays in improving the quality of cancer care. This review describes deficiencies in the nation’s quality measurement system and a path forward to improve the quality of cancer care in America.

The Conceptual Framework

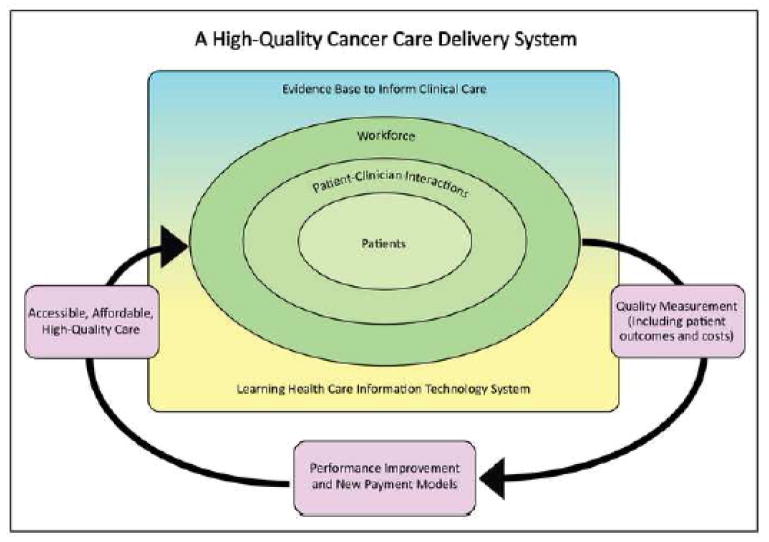

The report outlines six components of a high-quality cancer care delivery system: 1) engaged patients; 2) an adequately staffed, trained, and coordinated workforce; 3) evidence-based cancer care; 4) a learning health care information technology (IT) system; 5) translation of evidence into clinical practice, quality measurement, and performance improvement; and, 6) accessible, affordable cancer care.1

Quality measures are integral to this conceptual framework, providing an objective means for patients and their families to identify high-quality cancer care, for providers to standardize care practices, and for payers to incentivize higher quality care through alternative reimbursement mechanisms. Additionally, quality measures allow providers and payers to determine whether performance improvement initiatives and new payment models improve the quality, accessibility, and affordability of cancer care. The act of measuring performance can motivate clinicians to improve care delivery, either out of the desire for self-improvement or to provide comparable or better care than their colleagues.4 Thus, to build and sustain a high-quality cancer care delivery system, its members must be able to measure and assess progress in improving cancer care delivery, to report that information publicly, and to develop innovative strategies for further performance improvement. In the Committee’s conceptual framework for this system (Figure 1), quality measurement is part of a cyclical process. The system measures the outcomes of patient-clinician interactions (including health care outcomes and costs), which inform development of performance improvement initiatives and implementation of new payment models. These, in turn, lead to improvements in the quality, accessibility, and affordability of cancer care.

Figure 1. A High-Quality Cancer Care Delivery System.

Figure 1 represents the Committee’s conceptual framework for improving the quality of cancer care. Quality is achieved through the cyclical process of measurement, improvement, and integration into the system. The system should be accessible and affordable to all patients with a cancer diagnosis. Reprinted with permission from Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis, 2013 by the National Academy of Sciences, Courtesy of the National Academies Press, Washington, D.C.

Quality Measurement Challenges

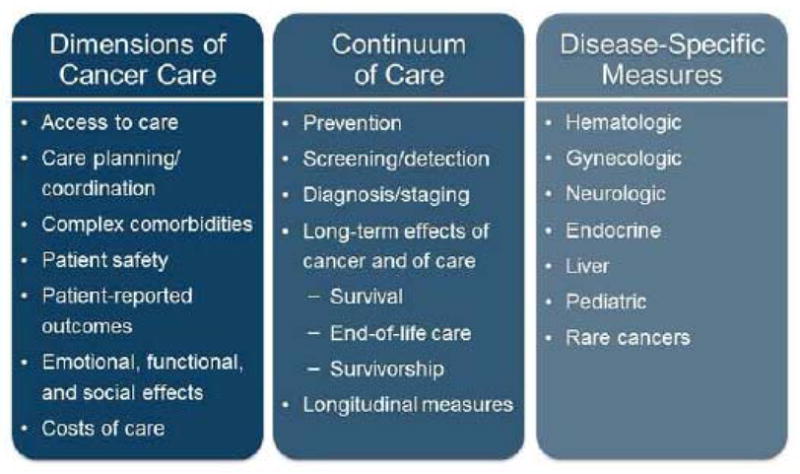

The Committee’s conceptual framework provides a solid basis to improve the quality of cancer care. Foundational measures evaluate the quality of care across the treatment cycle—prevention and early detection; diagnosis and treatment; and, survivorship or end of life—and along important dimensions of care, including access to care and quality of life.5,6 Disease-specific measures assess adherence to screening and treatment guidelines and complement broader, cross-cutting cancer measures. Several specialty-focused quality measurement registries facilitate standardized reporting of these types of measures, as summarized in Table 1. Despite these efforts, continued deficiencies in cancer-focused quality measures, including measurement gaps and overlapping, duplicative, or competing measures, undermine efforts to measure and improve performance systematically. Figure 2 summarizes key measurement gaps, and factors contributing to these deficiencies are described below.

Table 1. Specialty-Focused Quality Measurement Registries.

Table 1 provides an overview of three specialty-focused quality measurement registries for cancer care.

| Registry | Element | Description |
| --- | --- | --- |
| American Society of Clinical Oncology Quality Oncology Practice Initiative Program | Background | Administered by the American Society of Clinical Oncology (ASCO) as a voluntary practice-based quality program since 2006, the Quality Oncology Practice Initiative (QOPI) provides tools and resources for quality measurement, benchmarking, and performance improvement in the outpatient setting.41,42 QOPI is based on retrospective, manual chart review and evaluation with specific practice-level feedback.43 |
| | Benefits | Participation is associated with measurable improvement, with the greatest improvement observed among practices with low baseline performance and in measures associated with new clinical guidelines.41,43 Though voluntary, its membership increased when the QOPI certification program was introduced and when the program was adopted by third-party payers.44 |
| | Limitations | QOPI measures are process-based and do not evaluate the outcomes of care. Data abstraction and submission are time-consuming, and program costs are absorbed by participating practices (except for an innovative program in Michigan 45). QOPI’s voluntary nature limits widespread participation. |
| | Recent Developments | ASCO is developing eQOPI to automate QOPI data abstraction, as part of its CancerLinQ rapid health learning system. With CancerLinQ, ASCO seeks to: 1) capture, aggregate, and use complete longitudinal patient records from any source; 2) provide real-time, guideline-based clinical decision support; 3) measure clinical performance of eQOPI performance measures; and, 4) explore hypotheses from clinical data sets. Following a successful 2012 prototype, ASCO is implementing CancerLinQ system wide.46,47 |
| American College of Surgeons National Surgical Quality Improvement Program | Background | The National Surgical Quality Improvement Program (NSQIP) is a voluntary outcomes-focused program that started in the Veterans Administration (VA) system.48 Since 2005, NSQIP has been available to non-VA hospitals through the American College of Surgeons (ACoS). Independent, trained reviewers prospectively collect NSQIP data via manual chart review and real-time patient follow-up. Participants receive semi-annual reports with risk-adjusted surgical outcomes; non-adjusted data are available via a web-based portal. |
| | Benefits | Through standardized collection of pre-operative risk factors and 30-day post-operative outcomes, NSQIP supports robust, data-driven quality improvement and benchmarking. Extensive peer-reviewed, published literature demonstrates that participation is associated with fewer deaths and complications and with lower costs.48–51 |
| | Limitations | Currently, NSQIP does not collect cancer-specific risk factors, such as cancer stage, or longer-term outcomes (beyond 30 days after surgery). There is a significant time delay between data collection and receipt of reports containing risk-adjusted outcomes. Data abstraction and submission are time-consuming, and program costs are absorbed by participating practices. NSQIP’s voluntary nature limits widespread penetration. |
| | Recent Developments | NSQIP is piloting an oncology-focused module for use in solid tumor patients. Researchers are utilizing NSQIP to study risk factors associated with morbidity and mortality for certain cancer treatments. This research may guide treatment planning and patient counseling in the future.52–56 |
| National Radiation Oncology Registry | Background | In 2012, the Radiation Oncology Institute (ROI) and the American Society for Radiation Oncology (ASTRO) began collaborating on the National Radiation Oncology Registry (NROR), the nation’s first radiation oncology-specific national registry. The developers’ goals include creating a system with automated data collection and near real-time benchmarking of structural, treatment delivery, and short- and long-term outcomes. NROR began information technology (IT) infrastructure testing for prostate cancer in December 2012.57 |
| | Benefits | The beta system has strong foundational elements, including: |
| | Limitations | Due to its pilot status, it is unclear how successful NROR will be at automating data collection and reporting of the proposed measures. Additionally, it will take several years to assess NROR’s impact on health care delivery and treatment outcomes. |
| | Recent Developments | Patient enrollment and site expansions are planned for 2013 and 2014, respectively. Expansion to additional disease sites is planned for 2015. |

Figure 2. Key Gaps in Cancer-Specific Quality Measures.

Figure 2 lists significant gaps in existing cancer-specific quality measures, including gaps in measures that assess important dimensions of cancer care, gaps throughout the continuum of cancer care, and gaps in disease-specific measures.

Inadequate Consideration of Patient Perspectives

Historically, public reporting has focused on clinical quality measures from institutional administrative data (e.g., readmissions), which lack meaning for patients and are misinterpreted frequently,7 contributing to erroneous conclusions about health care quality. Patients use these data minimally when choosing providers,8–10 despite their strong interest in health care quality information.11 This non-consumer-oriented approach ignores patient information needs and preferences, despite well-intentioned efforts to increase transparency in health care.

Additionally, most cancer quality measures evaluate provider adherence to evidence-based guidelines, giving minimal consideration to patient preferences regarding care, and, in particular, to the patient-reported outcomes associated with particular treatment plans. Clinical and psychosocial factors influence patient treatment preferences and should inform providers’ treatment recommendations. In certain cases, a personalized approach may yield better outcomes than strict guideline adherence.12,13 Thus, quality measures should allow providers to respect individual patient preferences, needs, and values in delivering cancer care without penalty.

Measure Fragmentation

Cancer patients move between multiple oncology and non-oncology care settings throughout their care. Accordingly, health care outcomes, costs, and patient experience are influenced by multiple providers and should be measured using an integrated approach that traverses care settings. Instead, most cancer quality measures mirror existing fragmentation in health care delivery and focus on the clinician, medical subspecialty, or care setting, rather than the patient. Several problems contribute to this fragmented state: independent measure development and reporting efforts; lack of national oversight; failure to develop appropriate collective attribution methodologies for health care outcomes and costs; and, reporting programs that operate without clear goals and a strong measurement framework.

Thus, overlapping quality measures with slight, but meaningful, differences co-exist with pervasive gaps in cancer measures. This dichotomy weakens efforts toward shared accountability, care coordination, and patient-centered care. Organizations, such as the National Quality Forum (NQF), encourage measure developers to harmonize similar measures to reduce measure fragmentation. However, their efforts have yielded minimal success due to the non-binding nature of their recommendations.

Challenging Measure Criteria

Measure developers face significant challenges in translating measure concepts into validated quality measures. Measures that support broader comparisons across diseases or practice settings often fail the rigorous technical standards established by organizations, including the NQF. Thus, many validated measures are highly specific—incorporating complex inclusion/exclusion criteria and applying to small patient populations. Some measures are so specific that they produce small sample sizes that cannot be validated through traditional statistical testing methods. Thus far, measure developers and endorsing organizations have failed to strike a balance between satisfying the technical criteria that are important for validating measures, the need to fill critical measurement gaps, and the need to measure health care in a broader, more coordinated fashion.

Scientific Evidence Gaps

Since clinical research provides the foundation for measure development, many cancer measurement gaps derive from associated clinical research gaps. For example, elderly cancer patients are underrepresented in quality measurement for cancer care due primarily to their underrepresentation in clinical trials.14–17 Hence, existing quality measures may not apply directly to older adults, even though most new cancer diagnoses occur in this population.18 This omission is reflected in treatment- and screening-related disparities among the elderly.19–25 Additionally, because most clinical research evaluates the technical aspects of anti-cancer therapies, there are significant gaps in necessary research for oncology-focused measures of care coordination, quality of life, patient/family engagement, and costs of care. Inadequate national funding and direction for this research are contributing factors.

Inadequate Data Systems

In contrast to major advances in health care technology, minimal progress has occurred in producing timely and actionable performance data to support quality measurement and performance improvement. Many measures utilize administrative claims data. However, these data were not intended for this purpose and, therefore, have limited ability to provide meaningful insights into care. Health care legislation and regulations have triggered increased use of electronic health records (EHRs), but these systems require further enhancements and are limited by the completeness and accuracy of the data that providers capture routinely. Unsuccessful efforts to implement EHR-based quality measurement through the Meaningful Use program have frustrated some providers, suggesting the need for additional strategic and technical enhancements for the program.26 Presently, manual chart abstraction is the most reliable data collection method for quality measurement, but its significant resource requirements and time delay make it a poor choice to support long-term quality measurement efforts.

A Path Forward – Two Major Policy Recommendations

The Committee proposed major changes—both in the IT systems used to collect patient information and in the kind of measures that are collected and reported—for quality measurement to yield significant improvements in the quality of cancer care for Americans. Two of the ten recommendations in the report target the quality measurement issues described previously. The first recommendation addresses IT issues, and the second recommendation addresses quality measures. These recommendations are discussed below.

A Learning Health Care IT System in Cancer Care

Recommendation 7: A Learning Health Care IT System in Cancer Care.

Goal: Develop an ethically sound learning health care information technology system for cancer that enables real-time analysis of data from cancer patients in a variety of care settings. To accomplish this:

• Professional organizations should design and implement the digital infrastructure and analytics necessary to enable continuous learning in cancer care.
• The Department of Health and Human Services should support the development and integration of a learning health care IT system for cancer.
• The Centers for Medicare & Medicaid Services and other payers should create incentives for clinicians to participate in this learning health care system for cancer, as it develops.1

Implementing a learning health care IT system would enhance existing cancer quality measurement and reporting efforts. This system would streamline provider collection of essential data for performance improvement and public reporting on a real-time basis by:

• Integrating provider-collected data with patient-reported outcomes gathered from online surveys and tablet-based applications;
• Using facile data repositories for collecting, storing, analyzing, and reporting large datasets; and,
• Aggregating and reporting designated measures to various agencies and registries for purposes of public reporting and for more generalized performance improvement.

Existing reporting programs may be leveraged in this effort, but not without challenges. For example, as Table 1 describes, the American Society of Clinical Oncology (ASCO) is migrating its Quality Oncology Practice Initiative (QOPI) to an automated data abstraction system (eQOPI) as part of its CancerLinQ rapid health learning system. Recent experience suggests that several QOPI measures are not readily computable and that nontrivial interpretation differences occur during manual chart review, raising concerns regarding measure validation in an electronic environment.27 These technical hurdles, coupled with the voluntary and independent nature of existing quality reporting programs, highlight the need for continued IT enhancements to advance the implementation of this recommendation.

Quality Measurement

Recommendation 8: Quality Measurement.

Goal: Develop a national quality reporting program for cancer care as part of a learning health care system.

To accomplish this, the Department of Health and Human Services should work with professional societies to:

• Create and implement a formal long-term strategy for publicly reporting quality measures for cancer care that leverages existing efforts.
• Prioritize, fund, and direct the development of meaningful quality measures for cancer care with a focus on outcome measures and with performance targets for use in publicly reporting the performance of institutions, practices, and individual clinicians.
• Implement a coordinated, transparent reporting infrastructure that meets the needs of all stakeholders, including patients, and is integrated into a learning health care system.1

The complexity and costs of cancer care, along with its prevalence, necessitate a unified strategy and a well-developed framework for measuring and improving the quality of cancer care. This is reflected in the Committee’s recommendation to develop a national quality reporting program for cancer care as part of a learning health care system. A broad stakeholder group, including professional societies and patient advocates, must be engaged in this effort. Their familiarity with complex cancer care delivery systems provides an essential perspective for creating and implementing a formal long-term strategy for public reporting. Additionally, measure development should focus on measures that are meaningful to patients and their families—measures that focus on important outcomes (e.g., survival and treatment toxicities) and costs—and on reporting these measures in an understandable way for patients and other stakeholders. This outcomes-focused reporting program may influence the choice and type of therapy while simultaneously informing quality improvement efforts in various care settings, thereby improving the quality of care throughout the health care system.

The IOM recommends implementation of a coordinated, transparent reporting infrastructure that meets the needs of all stakeholders and that is integrated with a learning health care system. This reporting program could accelerate progress toward a high-quality cancer care delivery system by making the outcomes, processes, and costs of cancer care more transparent to promote patient engagement and delivery of evidence-based care and to discourage wasteful and potentially harmful treatments.

Implementing the Recommendations

The current landscape contains the necessary foundational knowledge, support, and technology to construct the learning health care system and national reporting program described in the report. Together, these factors constitute a solid platform on which to develop a national reporting program. However, additional transformation and leadership, in the form of funding, coordination, and oversight, are necessary to implement the Committee’s recommendations. Additionally, providers and other stakeholders will have to adjust to a more patient-centered cancer care delivery model, where high-quality patient-clinician relationships and interactions are central drivers of quality and quality is measured and improved in a systematic way. Within this model, responsibility for the outcomes and costs of care is shared across providers, traditionally proprietary health care cost data are more transparent, and patients, as integral members of health care teams, share responsibility for their outcomes of care. Specific recommendations for leadership, key tasks, and reducing disparities are described below.

Leadership

The Committee identified the Department of Health and Human Services (HHS) as the logical convener to lead implementation. The success of the Office of the National Coordinator for Health Information Technology (ONC) in accelerating nationwide adoption of health IT through the Meaningful Use program has direct relevance for creating a learning health care system for cancer. HHS could leverage the ONC model to create an Office of the National Coordinator for Health Care Quality to design and implement a robust national reporting program for cancer care, including initiating and funding projects to develop patient-centered measures to fill critical measurement gaps. With proper funding and authority and with multi-stakeholder collaboration, the national quality coordinator could reinvigorate cancer quality measurement and reorient existing activities in a more coordinated, patient-centered direction. Numerous payers, professional organizations, providers, patient advocacy groups, and public agencies share a vested interest in improving the quality and transparency of cancer care and should be involved. Designating the Centers for Medicare & Medicaid Services (CMS) and the NQF as key partners would further enhance implementation of the IOM’s recommendations, as described below:

CMS: As the principal payer for most newly diagnosed cancer patients and cancer survivors, CMS has a substantial interest in the quality of cancer care in America. CMS has the regulatory and legislative authority to enforce a mandatory quality measurement program, but its timelines are too brief and its focus on the Medicare population is too narrow to implement an effective and influential national reporting program for cancer care. CMS could support implementation of the IOM’s recommendations by leading payers in linking reimbursement to outcomes and by offering financial incentives to providers that participate in a learning health care system and in a national quality reporting program for cancer care. CMS could adopt a simplified version of the pay-for-reporting incentive/penalty model of the Physician Quality Reporting System (PQRS) for this purpose. The Center for Medicare & Medicaid Innovation (CMMI) should fund demonstration projects to test appropriate pay-for-performance models for this program.

NQF: The NQF holds the federal contract to endorse health care quality measures for use in public reporting 28 and has expended significant effort to expand cancer quality measurement.29–33 However, it lacks the authority to enforce its recommendations and the resources to fund measure development. The NQF is exploring opportunities to expedite filling key measurement gaps, such as through a virtual “measure incubator” that connects measure developers with potential funders, EHR vendors, and other key stakeholders.34 A mechanism like this could accelerate the timeline and reduce the cost of developing patient-centered oncology measures. Similarly, ongoing efforts to streamline the NQF’s measure endorsement process could hasten implementation of these measures.35

Key Tasks

Specific tasks for implementing the IOM’s recommendations are described below:

Strategy Development: Create and enforce a formal long-term strategy (with shorter-term milestones) for developing and publicly reporting cancer measures, aligned with the aims and priorities of the National Quality Strategy 36 and informed by the work of organizations, such as the IOM, the RAND Corporation, the NQF, and the NQF-convened Measure Applications Partnership.

Research Funding: Fund health services research and clinical trials focused on the non-technical dimensions of cancer care, including: access and care coordination; patient and family engagement; managing complex comorbidities; quality-of-life issues during and after treatment; reintegration into society (i.e., return to work and managing personal obligations); and, the costs of care. Older cancer patients should be well-represented in this research.

IT Enhancements: Enhance EHRs to address known workflow, usability, interoperability, and data management issues.26 Modify data structures to capture and report patient-reported data, to integrate structured, unstructured, and semi-structured data, to support robust data analytics and real-time decision-making support, and to support data sharing, public reporting, and care coordination.37 The ONC should integrate the principles of a learning health care system for cancer into the criteria for future Meaningful Use stages to ensure that the IOM’s recommendation is implemented. Table 2 compares the IOM’s ten recommendations to accelerate progress toward a learning health care system with Meaningful Use Stage 2 objectives.

Measure Development: Fund and direct the development of cancer-focused measures that are meaningful for all stakeholders, focusing on: gaps in outcome measures, in cross-cutting, non-technical measures, and in measures for rarer cancers; measures that promote shared accountability (across providers and including patients) and that reduce disparities in coverage and access to care; and, measures with a well-defined cost-benefit relationship. Engage multidisciplinary provider teams with clinical and quality expertise and patient representatives in all stages of measure development. Develop a formal tool to assist with prioritizing measure development,38 with highest priority given to patient-centered outcome measures.

Public Reporting: Implement a reporting infrastructure (including IT and reporting methodologies) that meets the information needs of all stakeholders (patients and caregivers, providers, payers, and state and federal agencies), that emphasizes transparency, and that presents data in a way that guides patients and caregivers in health care decision-making.

Table 2. Recommendations for a Learning Health Care System—Relevant Meaningful Use Stage 2 Objectives, Cancer-Specific Gaps, and Potential Management Strategies.

Table 2 represents the authors’ view of relevant Meaningful Use Stage 2 objectives (for eligible hospitals and critical access hospitals, together “EH”, and for eligible professionals, or “EP”) associated with the IOM’s ten recommendations to accelerate progress toward a learning health care system, cancer-specific gaps, and potential management strategies.40,58

| Category | Recommendation | Relevant Meaningful Use Stage 2 Objectives | Cancer-Specific Gaps | Potential Management Strategies |
| --- | --- | --- | --- | --- |
| Foundational Elements | Recommendation 1: The Digital Infrastructure. Improve the capacity to capture clinical, care delivery process, and financial data for better care, system improvement, and the generation of new knowledge. | | | |
| Foundational Elements | Recommendation 2: The Data Utility. Streamline and revise research regulations to improve care, promote the capture of clinical data, and generate knowledge. | | | |
| Care Improvement Targets | Recommendation 3: Clinical Decision Support. Accelerate integration of the best clinical knowledge into care decisions. | | | |
| Care Improvement Targets | Recommendation 4: Patient-Centered Care. Involve patients and families in decisions regarding health and health care, tailored to fit their preferences. | | | |
| Care Improvement Targets | Recommendation 5: Community Links. Promote community-clinical partnerships and services aimed at managing and improving health at the community level. | | | |
| Care Improvement Targets | Recommendation 6: Care Continuity. Improve coordination and communication within and across organizations. | | | |
| Care Improvement Targets | Recommendation 7: Optimized Operations. Continuously improve health care operations to reduce waste, streamline care delivery, and focus on activities that improve patient health. | | | |
| Supportive Policy Environment | Recommendation 8: Financial Incentives. Structure payment to reward continuous learning and improvement in the provision of best care at lower cost. | | | |
| Supportive Policy Environment | Recommendation 9: Performance Transparency. Increase transparency on health care system performance. | | | |
| Supportive Policy Environment | Recommendation 10: Broad Leadership. Expand commitment to the goals of a continuously learning health care system. | | | |

Reducing Disparities

Accessible, affordable cancer care is a key component of high-quality cancer care delivery in the Committee’s conceptual framework. A central goal of the framework is that quality of care should not depend on the care setting (e.g., academic cancer centers vs. community cancer centers).1 Several ongoing activities are designed to reduce disparities in patients’ access to cancer care. The Affordable Care Act of 2010 (ACA) is expected to provide 25 million people with insurance coverage by 2023.39 It also includes several provisions designed to improve care for vulnerable and underserved populations through improved primary care, preventive services, and patient navigators. Additionally, several promising public and private efforts are under way to address disparities in cancer care, such as patient navigation programs sponsored by the National Cancer Institute (NCI) and C-Change. A national quality reporting program for cancer care should measure the effectiveness of these efforts in improving access and reducing disparities at the provider, community, state, and federal levels.

Significant financial resources are needed to ensure that institutions that care for vulnerable and underserved populations have the infrastructure and staffing to participate in a national quality reporting program and a learning health care system for cancer. A recent IOM report estimated that more than $750 billion in health care spending is wasted,40 through inefficient care delivery, overuse of care, and excessive administrative costs, among other sources. By eliminating waste, the cancer care delivery system could redirect the savings to attenuate disparities in access and care delivery. Additional grant funding is necessary to ensure that providers that care for vulnerable and underserved populations can take full advantage of EHRs and other health IT. Over time, cost savings associated with a healthier American population should offset a portion of the public’s investment in this technology.

Conclusion

For more than a decade, the IOM has directed public attention to significant quality gaps in national cancer care delivery. Throughout, the IOM has advocated creating a national quality reporting program for cancer care, along with the technical infrastructure needed to support it, as an effective means of assessing and improving cancer care delivery. Several organizations have attempted to fill these voids, but their efforts lacked the breadth, magnitude, coordination, and sustainability to transform cancer care across the nation. Thus, despite public and private efforts to develop cancer quality measures and the IT systems to capture and report these measures, this national reporting program does not exist today.

In Delivering High-Quality Cancer Care: Charting a New Course for a System in Crisis, the IOM took a fresh look at cancer care in America and, again, identified unified, patient-centered cancer quality measurement as a necessary element of a well-functioning cancer care delivery system. The report includes two recommendations targeting the nation’s inability to measure and improve cancer care delivery in a systematic way:

Develop a national quality reporting program for cancer care as part of a learning health care system; and,

Develop an ethically sound learning health care information technology system for cancer that enables real-time analysis of data from cancer patients in a variety of care settings.

National oversight, public-private collaboration, and a patient-centered approach are fundamental to creating the robust learning system and national cancer quality measurement program described in the report. By providing specific performance goals and measurements of care, by incorporating the perspectives of all stakeholder groups, by unifying existing, independent measure development activities, and by reporting this information in a way that supports patient decision-making, this patient-oriented reporting program for cancer care would transform the way that patients, providers, payers, and regulators evaluate cancer care and health care in general. With proper national oversight, funding, and coordination, it will accelerate improvements in cancer care where other programs have failed.

Acknowledgments

This project was supported by AARP, American Cancer Society, American College of Surgeons’ Commission on Cancer, American Society for Radiation Oncology, American Society of Clinical Oncology, American Society of Hematology, California HealthCare Foundation, Centers for Disease Control and Prevention, LIVESTRONG, National Cancer Institute, National Coalition for Cancer Survivorship, Oncology Nursing Society, and Susan G. Komen for the Cure.

We thank the members of the IOM Committee on Improving the Quality of Cancer Care: Addressing the Challenges of an Aging Population and the project staff.

Footnotes

The authors are responsible for the content of this article, which does not necessarily represent the views of the Institute of Medicine.

Contributor Information

Tracy Spinks, Email: tespinks@mdanderson.org, Clinical Operations, The University of Texas MD Anderson Cancer Center, 1400 Pressler St., Unit 1486, Houston, Texas 77030, 713-563-2198.

Patricia A. Ganz, Email: pganz@mednet.ucla.edu, Division of Cancer Prevention & Control Research, UCLA Schools of Medicine and Public Health, Jonsson Comprehensive Cancer Center, 650 Charles Young Drive South, Room A2-125 CHS, Los Angeles, CA 90095-6900, 310-206-1404.

George W. Sledge, Jr., Email: gsledge@stanford.edu, Division of Oncology, Stanford University Medical Center, 269 Campus Drive, CCSR 1115, MC:5151, Stanford, CA 94305, 650-724-4397.

Laura Levit, Email: llevit@nas.edu, Institute of Medicine, 500 5th St NW, Washington, DC 20001, 202-334-1343.

James A. Hayman, Email: hayman@umich.edu, Department of Radiation Oncology, University of Michigan, 1500 East Medical Center Drive, SPC 5010 - UH B2C490, Ann Arbor, MI 48109-5010, 734-647-9956.

Timothy J. Eberlein, Email: eberleint@wudosis.wustl.edu, Department of Surgery, Washington University School of Medicine, 660 South Euclid Avenue - Box 8109, St. Louis, MO 63110, 314-362-8020, 314-454-1898.

Thomas W. Feeley, Email: tfeeley@mdanderson.org, Anesthesiology & Critical Care, The University of Texas MD Anderson Cancer Center, 1515 Holcombe Boulevard, Unit 409, Houston, TX 77030, 713-792-7115.

References

- 1. IOM (Institute of Medicine). Delivering high-quality cancer care: Charting a new course for a system in crisis. Washington, DC: The National Academies Press; 2013.

- 2. Committee on Quality of Health Care in America, Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: The National Academies Press; 2001.

- 3. Hewitt M, Simone JV, editors. Ensuring Quality Cancer Care. Washington, DC: The National Academy Press; 1999.

- 4. Lamb GC, Smith MA, Weeks WB, Queram C. Publicly reported quality-of-care measures influenced Wisconsin physician groups to improve performance. Health Aff (Millwood). 2013 Mar;32(3):536–543. doi: 10.1377/hlthaff.2012.1275.

- 5. Spinks TE, Walters R, Feeley TW, et al. Improving Cancer Care through Public Reporting of Meaningful Quality Measures. Health Aff (Millwood). 2011 Apr;30(4):664–672. doi: 10.1377/hlthaff.2011.0089.

- 6. Spinks T, Albright HW, Feeley TW, et al. Ensuring Quality Cancer Care: A Follow-Up Review of the Institute of Medicine’s 10 Recommendations for Improving the Quality of Cancer Care in America. Cancer. 2012 May 15;118(10):2571–2582. doi: 10.1002/cncr.26536.

- 7. Hibbard J, Sofaer S. Best Practices in Public Reporting No. 1: How To Effectively Present Health Care Performance Data To Consumers. 2010. Available at: http://www.ahrq.gov/legacy/qual/pubrptguide1.htm. Accessed August 15, 2012.

- 8. Faber M, Bosch M, Wollersheim H, Leatherman S, Grol R. Public reporting in health care: how do consumers use quality-of-care information? A systematic review. Med Care. 2009 Jan;47(1):1–8. doi: 10.1097/MLR.0b013e3181808bb5.

- 9. Totten AM, Wagner J, Tiwari A, O’Haire C, Griffin J, MW Public Reporting as a Quality Improvement Strategy. Closing the Quality Gap: Revisiting the State of the Science. Evidence Report No. 208. (Prepared by the Oregon Evidence-based Practice Center under Contract No. 290-2007-10057-I.) AHRQ Publication No. 12-E011-EF. Accessed August 15, 2012.

- 10. Kaiser Family Foundation, Agency for Healthcare Research and Quality. Update on Consumers’ Views on Patient Safety and Quality Information, 2006. 2006. Available at: www.kff.org/kaiserpolls/pomr092706pkg.cfm. Accessed August 15, 2012.

- 11. Harris KM, Beeuwkes Buntin M. Choosing a health care provider. Synth Proj Res Synth Rep. 2008 May;(14).

- 12. Kahn KL, Malin JL, Adams J, Ganz PA. Developing a reliable, valid, and feasible plan for quality-of-care measurement for cancer: how should we measure? Med Care. 2002 Jun;40(6 Suppl):III73–85. doi: 10.1097/00005650-200206001-00011.

- 13. Measure Applications Partnership (MAP), National Quality Forum (NQF). Performance Measurement Coordination Strategy for PPS-Exempt Cancer Hospitals. 2012. Available at: http://www.qualityforum.org/WorkArea/linkit.aspx?LinkIdentifier=id&ItemID=71217. Accessed August 15, 2012.

- 14. Kemeny MM, Peterson BL, Kornblith AB, et al. Barriers to clinical trial participation by older women with breast cancer. J Clin Oncol. 2003 Jun 15;21(12):2268–2275. doi: 10.1200/JCO.2003.09.124.

- 15. Johnson M. Chemotherapy treatment decision making by professionals and older patients with cancer: a narrative review of the literature. Eur J Cancer Care (Engl). 2012 Jan;21(1):3–9. doi: 10.1111/j.1365-2354.2011.01294.x.

- 16. National Cancer Policy Forum (U.S.). Cancer in elderly people: workshop proceedings. Washington, D.C: National Academies Press; 2007.

- 17. Fentiman IS, Tirelli U, Monfardini S, et al. Cancer in the elderly: why so badly treated? Lancet. 1990 Apr 28;335(8696):1020–1022. doi: 10.1016/0140-6736(90)91075-l.

- 18. Mariotto AB, Yabroff KR, Shao Y, Feuer EJ, Brown ML. Projections of the cost of cancer care in the United States: 2010–2020. J Natl Cancer Inst. 2011 Jan 19;103(2):117–128. doi: 10.1093/jnci/djq495.

- 19. Uyar D, Frasure HE, Markman M, von Gruenigen VE. Treatment patterns by decade of life in elderly women (> or =70 years of age) with ovarian cancer. Gynecol Oncol. 2005 Sep;98(3):403–408. doi: 10.1016/j.ygyno.2005.04.037.

- 20. Lemmens VE, van Halteren AH, Janssen-Heijnen ML, Vreugdenhil G, Repelaer van Driel OJ, Coebergh JW. Adjuvant treatment for elderly patients with stage III colon cancer in the southern Netherlands is affected by socioeconomic status, gender, and comorbidity. Ann Oncol. 2005 May;16(5):767–772. doi: 10.1093/annonc/mdi159.

- 21. Janssen-Heijnen ML, Houterman S, Lemmens VE, Louwman MW, Maas HA, Coebergh JW. Prognostic impact of increasing age and co-morbidity in cancer patients: a population-based approach. Crit Rev Oncol Hematol. 2005 Sep;55(3):231–240. doi: 10.1016/j.critrevonc.2005.04.008.

- 22. Janssen-Heijnen ML, Maas HA, Houterman S, Lemmens VE, Rutten HJ, Coebergh JW. Comorbidity in older surgical cancer patients: influence on patient care and outcome. Eur J Cancer. 2007 Oct;43(15):2179–2193. doi: 10.1016/j.ejca.2007.06.008.

- 23. Wang S, Wong ML, Hamilton N, Davoren JB, Jahan TM, Walter LC. Impact of age and comorbidity on non-small-cell lung cancer treatment in older veterans. J Clin Oncol. 2012 May 1;30(13):1447–1455. doi: 10.1200/JCO.2011.39.5269.

- 24. Berger NA, Savvides P, Koroukian SM, et al. Cancer in the elderly. Trans Am Clin Climatol Assoc. 2006;117:147–155. discussion 155–146.

- 25. Walter LC, Lindquist K, Nugent S, et al. Impact of age and comorbidity on colorectal cancer screening among older veterans. Ann Intern Med. 2009 Apr 7;150(7):465–473. doi: 10.7326/0003-4819-150-7-200904070-00006.

- 26. Eisenberg F, Lasome C, Advani A, Martins R, Craig PA, Sprenger S. A Study Of The Impact Of Meaningful Use Clinical Quality Measures. 2013. Available at: http://www.aha.org/content/13/13ehrchallenges-report.pdf. Accessed August 28, 2013.

- 27. Warner JL, Anick P, Drews RE. Physician inter-annotator agreement in the quality oncology practice initiative manual abstraction task. J Oncol Pract. 2013 May 1;9(3):e96–e102. doi: 10.1200/JOP.2013.000931.

- 28. National Quality Forum (NQF). Funding. 2012. Available at: http://www.qualityforum.org/About_NQF/Funding.aspx. Accessed August 15, 2012.

- 29. National Quality Forum (NQF). Cancer Endorsement Maintenance 2011. 2012. Available at: http://www.qualityforum.org/Projects/Cancer_Endorsement_Maintenance_2011.aspx. Accessed August 15, 2012.

- 30. National Quality Forum (NQF). NQF Steering Committee Meeting, standardizing quality measures for cancer care summary report. 2003. Available at: http://www.qualityforum.org/uploadedFiles/Quality_Forum/Projects/2009/Cancer_Care_Phase_I/cancer_meeting.pdf?n=3287. Accessed August 15, 2012.

- 31. National Quality Forum (NQF). National voluntary consensus standards for quality of cancer care. 2009. Available at: http://www.qualityforum.org/WorkArea/linkit.aspx?LinkIdentifier=id&ItemID=22020. Accessed August 15, 2012.

- 32. National Quality Forum (NQF). Cancer Endorsement Maintenance 2011 Final Report: December 2012. 2012. Available at: http://www.qualityforum.org/Publications/2012/12/Cancer_Endorsement_Maintenance_2011.aspx. Accessed May 27, 2013.

- 33. National Quality Forum (NQF). Performance Measurement Coordination Strategy for PPS-Exempt Cancer Hospitals Final Report: June 2012. 2012. Available at: http://www.qualityforum.org/WorkArea/linkit.aspx?LinkIdentifier=id&ItemID=71217. Accessed May 31, 2013.

- 34. National Quality Forum (NQF). MAP Pre-Rulemaking Report: 2013 Recommendations on Measures Under Consideration by HHS, February 2013. 2013. Available at: http://www.qualityforum.org/WorkArea/linkit.aspx?LinkIdentifier=id&ItemID=72746. Accessed June 4, 2013.

- 35. National Quality Forum (NQF). Kaizen Improvement Event. 2013. Available at: http://www.qualityforum.org/Calendar/2013/09/Kaizen_Improvement_Event.aspx. Accessed October 18, 2013.

- 36. Agency for Healthcare Research and Quality (AHRQ). About the National Quality Strategy (NQS). 2013. Available at: http://www.ahrq.gov/workingforquality/about.htm. Accessed October 18, 2013.

- 37. Anderson KM, Marsh CA, Flemming AC, Isenstein H, Reynolds J. An Environmental Snapshot, Quality Measurement enabled by Health IT: Overview, Possibilities, and Challenges. 2012. Available at: http://healthit.ahrq.gov/sites/default/files/docs/page/final-hit-enabled-quality-measurement-snapshot.pdf. Accessed August 15, 2012.

- 38. Pronovost PJ, Lilford R. Analysis & commentary: A road map for improving the performance of performance measures. Health Aff (Millwood). 2011 Apr;30(4):569–573. doi: 10.1377/hlthaff.2011.0049.

- 39. Congressional Budget Office (CBO). CBO’s Estimate of the Net Budgetary Impact of the Affordable Care Act’s Health Insurance Coverage Provisions Has Not Changed Much Over Time. 2013. Available at: http://www.cbo.gov/publication/44176. Accessed October 18, 2013.

- 40. Smith MD, Institute of Medicine (U.S.). Committee on the Learning Health Care System in America. Best care at lower cost: the path to continuously learning health care in America. Washington, D.C: National Academies Press; 2012.

- 41. Jacobson JO, Neuss MN, McNiff KK, et al. Improvement in oncology practice performance through voluntary participation in the Quality Oncology Practice Initiative. J Clin Oncol. 2008 Apr 10;26(11):1893–1898. doi: 10.1200/JCO.2007.14.2992.

- 42. McNiff K. The quality oncology practice initiative: assessing and improving care within the medical oncology practice. J Oncol Pract. 2006 Jan;2(1):26–30. doi: 10.1200/jop.2006.2.1.26.

- 43. Neuss MN, Malin JL, Chan S, et al. Measuring the improving quality of outpatient care in medical oncology practices in the United States. J Clin Oncol. 2013 Apr 10;31(11):1471–1477. doi: 10.1200/JCO.2012.43.3300.

- 44. Blayney DW, Severson J, Martin CJ, Kadlubek P, Ruane T, Harrison K. Michigan Oncology Practices Showed Varying Adherence Rates To Practice Guidelines, But Quality Interventions Improved Care. Health Aff (Millwood). 2012 Apr;31(4):718–728. doi: 10.1377/hlthaff.2011.1295.

- 45. Blayney DW, Stella PJ, Ruane T, et al. Partnering with payers for success: quality oncology practice initiative, blue cross blue shield of michigan, and the michigan oncology quality consortium. J Oncol Pract. 2009 Nov;5(6):281–284. doi: 10.1200/JOP.091043.

- 46. Sledge GW, Hudis CA, Swain SM, et al. ASCO’s Approach to a Learning Health Care System in Oncology. J Oncol Pract. 2013 May 1;9(3):145–148. doi: 10.1200/JOP.2013.000957.

- 47. Sledge GW, Miller RS, Hauser R. CancerLinQ and the Future of Cancer Care. Am Soc Clin Oncol Educ Book. 2013;2013:430–434. doi: 10.14694/EdBook_AM.2013.33.430.

- 48. Khuri SF, Henderson WG, Daley J, et al. Successful implementation of the Department of Veterans Affairs’ National Surgical Quality Improvement Program in the private sector: the Patient Safety in Surgery study. Ann Surg. 2008 Aug;248(2):329–336. doi: 10.1097/SLA.0b013e3181823485.

- 49. Wick EC, Hobson DB, Bennett JL, et al. Implementation of a surgical comprehensive unit-based safety program to reduce surgical site infections. J Am Coll Surg. 2012 Aug;215(2):193–200. doi: 10.1016/j.jamcollsurg.2012.03.017.

- 50. Hall BL, Hamilton BH, Richards K, Bilimoria KY, Cohen ME, Ko CY. Does surgical quality improve in the American College of Surgeons National Surgical Quality Improvement Program: an evaluation of all participating hospitals. Ann Surg. 2009 Sep;250(3):363–376. doi: 10.1097/SLA.0b013e3181b4148f.

- 51. Ingraham AM, Richards KE, Hall BL, Ko CY. Quality improvement in surgery: the American College of Surgeons National Surgical Quality Improvement Program approach. Adv Surg. 2010;44:251–267. doi: 10.1016/j.yasu.2010.05.003.

- 52. Bartlett EK, Meise C, Roses RE, Fraker DL, Kelz RR, Karakousis GC. Morbidity and Mortality of Cytoreduction with Intraperitoneal Chemotherapy: Outcomes from the ACS NSQIP Database. Ann Surg Oncol. 2013 Aug 29; doi: 10.1245/s10434-013-3223-z.

- 53. Fischer JP, Nelson JA, Kovach SJ, Serletti JM, Wu LC, Kanchwala S. Impact of Obesity on Outcomes in Breast Reconstruction: Analysis of 15,937 Patients from the ACS-NSQIP Datasets. J Am Coll Surg. 2013 Jul 25; doi: 10.1016/j.jamcollsurg.2013.03.031.

- 54. Fischer JP, Wes AM, Tuggle CT, 3rd, Serletti JM, Wu LC. Risk Analysis of Early Implant Loss after Immediate Breast Reconstruction: A Review of 14,585 Patients. J Am Coll Surg. 2013 Aug 21; doi: 10.1016/j.jamcollsurg.2013.07.389.

- 55. Liu JJ, Maxwell BG, Panousis P, Chung BI. Perioperative Outcomes for Laparoscopic and Robotic Compared With Open Prostatectomy Using the National Surgical Quality Improvement Program (NSQIP) Database. Urology. 2013 Sep;82(3):579–583. doi: 10.1016/j.urology.2013.03.080.

- 56. Uppal S, Al-Niaimi A, Rice LW, et al. Preoperative hypoalbuminemia is an independent predictor of poor perioperative outcomes in women undergoing open surgery for gynecologic malignancies. Gynecol Oncol. 2013 Aug 17; doi: 10.1016/j.ygyno.2013.08.011.

- 57. Efstathiou JA, Nassif DS, McNutt TR, et al. Practice-based evidence to evidence-based practice: building the National Radiation Oncology Registry. J Oncol Pract. 2013 May;9(3):e90–95. doi: 10.1200/JOP.2013.001003.

- 58. Office of the National Coordinator for Health Information Technology (ONC). Stage 2 Overview Tipsheet. 2012. Available at: http://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/Downloads/Stage2Overview_Tipsheet.pdf. Accessed October 19, 2013.

- 59. Davenport TH, Patil DJ. Data scientist: the sexiest job of the 21st century. Harv Bus Rev. 2012 Oct;90(10):70–76. 128.

- 60. RAND. Payment reform: Analysis of models and performance measurement implications. Santa Monica, CA: RAND Corporation; Jul 15, 2013 2011.