Abstract

Background:

Autoverification is a process of using computer-based rules to verify clinical laboratory test results without manual intervention. To date, there is little published data on the use of autoverification over the course of years in a clinical laboratory. We describe the evolution and application of autoverification in an academic medical center clinical chemistry core laboratory.

Subjects and Methods:

At the institution of the study, autoverification developed from rudimentary rules in the laboratory information system (LIS) to extensive and sophisticated rules mostly in middleware software. Rules incorporated decisions based on instrument error flags, interference indices, analytical measurement ranges (AMRs), delta checks, dilution protocols, results suggestive of compromised or contaminated specimens, and ‘absurd’ (physiologically improbable) values.

Results:

The autoverification rate for tests performed in the core clinical chemistry laboratory has increased over the course of 13 years from 40% to the current overall rate of 99.5%. A high percentage of critical values now autoverify. The highest rates of autoverification occurred with the most frequently ordered tests such as the basic metabolic panel (sodium, potassium, chloride, carbon dioxide, creatinine, blood urea nitrogen, calcium, glucose; 99.6%), albumin (99.8%), and alanine aminotransferase (99.7%). The lowest rates of autoverification occurred with some therapeutic drug levels (gentamicin, lithium, and methotrexate) and with serum free light chains (kappa/lambda), mostly due to need for offline dilution and manual filing of results. Rules also caught very rare occurrences such as plasma albumin exceeding total protein (usually indicative of an error such as short sample or bubble that evaded detection) and marked discrepancy between total bilirubin and the spectrophotometric icteric index (usually due to interference of the bilirubin assay by immunoglobulin (Ig) M monoclonal gammopathy).

Conclusions:

Our results suggest that a high rate of autoverification is possible with modern clinical chemistry analyzers. The ability to autoverify a high percentage of results increases productivity and allows clinical laboratory staff to focus attention on the small number of specimens and results that require manual review and investigation.

Keywords: Algorithms, clinical chemistry, clinical laboratory information system, informatics

INTRODUCTION

Autoverification is a process whereby clinical laboratory results are released without manual human intervention.[1,2,3,4,5,6] Autoverification uses predefined computer rules to govern release of results.[1] Autoverification rules may include decisions based on instrument error flags (e.g. short sample, possible bubbles, or clot), interference indices (e.g. hemolysis, icterus, and lipemia),[7] reference ranges, analytical measurement range (AMR), critical values, and delta checks (comparison of current value to previous values, if available, from the same patient).[8,9,10,11,12,13] Rules may also define potentially absurd (physiologically improbable) values for some analytes and additionally may control automated dilutions and conditions for repeat analysis of specimens. More sophisticated application of autoverification rules can generate customized interpretive text based on patterns of laboratory values.[3,14] Autoverification is commonly performed using the laboratory information system (LIS) and/or middleware software that resides between the laboratory instruments and the LIS.[1,2,3,4,5,6]
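A delta check can be sketched as a small function. This is a minimal illustration, not the rule syntax of the middleware described later. The `DeltaRule` fields, the 72-hour look-back window, and the potassium threshold in the usage lines are hypothetical values chosen for the example. Actual per-assay limits would come from a parameter table such as Table 1.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DeltaRule:
    """Hypothetical delta-check limits for one analyte (illustrative only)."""
    max_abs_change: Optional[float] = None  # same units as the result
    max_pct_change: Optional[float] = None  # percent of the previous value
    window_hours: float = 72.0              # ignore prior results older than this


def delta_check_fails(current: float,
                      previous: Optional[float],
                      hours_since_previous: Optional[float],
                      rule: DeltaRule) -> bool:
    """Return True if the change from the patient's prior result exceeds the rule.

    With no prior result, or one outside the look-back window, the check
    cannot be applied and therefore does not fail.
    """
    if previous is None or hours_since_previous is None:
        return False
    if hours_since_previous > rule.window_hours:
        return False
    change = abs(current - previous)
    if rule.max_abs_change is not None and change > rule.max_abs_change:
        return True
    if rule.max_pct_change is not None and previous != 0:
        if 100.0 * change / abs(previous) > rule.max_pct_change:
            return True
    return False


# Hypothetical potassium rule: flag an absolute change greater than 1.5 mmol/L.
potassium = DeltaRule(max_abs_change=1.5)
print(delta_check_fails(6.2, 4.1, 12.0, potassium))   # True  (change of about 2.1)
print(delta_check_fails(4.5, 4.1, 12.0, potassium))   # False (change of about 0.4)
print(delta_check_fails(6.2, None, None, potassium))  # False (no prior result)
```

The sketch supports both absolute and percent limits as a design choice. Which form, if either, a given assay uses is a laboratory decision that this example does not attempt to reproduce.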

Autoverification can greatly reduce manual review time and effort by laboratory staff, limiting staff screen fatigue caused by reviewing and verifying hundreds to thousands of results per shift. Ideally, autoverification allows laboratory staff to focus manual review on a small portion of potentially problematic specimens and test results.[6] However, improperly designed autoverification can lead to release of results that should have been held, potentially negatively impacting patient management.

There is relatively little published literature on the practical use of autoverification. The Clinical and Laboratory Standards Institute (CLSI) has produced a guideline on autoverification of clinical laboratory test results, which focuses on the process for validating and implementing autoverification protocols.[15] In this study, we present data on autoverification in a clinical chemistry core laboratory at an academic medical center. The rules in this laboratory evolved over more than a decade and now yield a high autoverification rate for clinical chemistry tests.

SUBJECTS AND METHODS

The institution of this study is a 734-bed tertiary care academic medical center that includes an emergency room with level one trauma capability, adult and pediatric inpatient floors, and multiple intensive care units (neonatal, pediatric, cardiovascular, medical, and surgical/neurologic). Primary care and specialty outpatient services are provided at the main medical center campus as well as a multispecialty outpatient facility located 3 miles away. Pneumatic tube transportation of specimens is available throughout the medical center. Smaller primary care clinics affiliated with the academic health system are dispersed throughout the local region. A core laboratory within the Department of Pathology provides clinical chemistry and hematopathology testing for both outpatient and inpatient services. This study focuses on the clinical chemistry division from 1/1/2000 to 9/21/2013. This study was approved by the University of Iowa Institutional Review Board as a retrospective study.

Throughout the period of retrospective analysis, the main chemistry instrumentation in the core laboratory was from Roche Diagnostics (Indianapolis, IN, USA). By 2010, the chemistry automation line included five Modular P and four Modular E170 analyzers, with front-end automation provided by a Modular Pre-Analytic (MPA)-7 unit. In 2013, the chemistry instrumentation was replaced with a Cobas 8000 system with two c702, three c502, and five e602 analyzers, still using the MPA-7 for front-end automation. This chemistry automation system currently supports 131 Roche assays and 14 non-Roche assays run as either open or partner channels. Other instrumentation in the core chemistry laboratory includes the Abbott Diagnostics (Abbott Park, IL, USA) Architect i1000 (running cyclosporine, sirolimus, and tacrolimus drug levels, along with human immunodeficiency virus (HIV) testing); the Bio-Rad (Hercules, CA, USA) BioPlex 2200 (a variety of serologic assays); and the Advanced Instruments (Norwood, MA, USA) A2O automated osmometer for serum/plasma and urine osmolality measurements.

The LIS throughout the period of retrospective analysis has been Cerner (Kansas City, MO, USA) “Classic”, currently version 015. The LIS is managed by University of Iowa Hospital Computing and Information Services. Roche instruments are interfaced to the LIS via Data Innovations (South Burlington, VT, USA) Instrument Manager (“Middleware”) version 8.10. Instruments other than Roche are interfaced to the LIS via Data Innovations Instrument Manager version 8.12. The majority of autoverification rules are in Middleware. A small number of rules are in the LIS. The Roche and non-Roche Middleware production servers each have “shadow” backups in a separate location that can be used if the corresponding main production server fails. There are also separate “test” servers for each system that allow for initial testing and validation of rules without affecting the production servers. Service agreements for instrumentation and middleware are at a level that provides rapid response to problems.

The core laboratory has a supervisor who primarily focuses on middleware and three clinical laboratory scientists who are tasked with implementing, validating, and maintaining autoverification rules. The core laboratory also employs a full-time medical technologist for quality control and improvement. A process improvement team within the chemistry division meets regularly with the medical director to review and discuss laboratory issues. Many of the core laboratory staff are cross-trained on both chemistry and hematology automated instrumentation, which provides important cross-coverage, particularly during periods of instrument, LIS, or middleware downtime.

All autoverification rules require approval by the medical director. Core laboratory staff can suspend autoverification if necessary. The goal of the core laboratory is to keep specimens flowing continuously onto the automated line as much as possible, regardless of whether they originate from inpatient or outpatient units. Specimens that are not suitable for the automated line (e.g. small pediatric tubes or tubes requiring manual aliquoting) are handled at a manual exception bench. There is no routine versus stat designation when ordering laboratory tests. By 2010, the option to order any chemistry assay on a stat basis had been removed from both the electronic medical record provider ordering system and paper requisitions. This change was based on consistent turnaround times and was approved by the hospital subcommittee overseeing laboratory testing.

The autoverification rules currently in use were developed mainly over the course of 8 years, although rudimentary autoverification rules within the LIS were first used starting in 2000. Manual review and absurd limits were developed based on analysis of patient data and consultation with clinical services. More extensive use of autoverification followed the introduction of middleware.

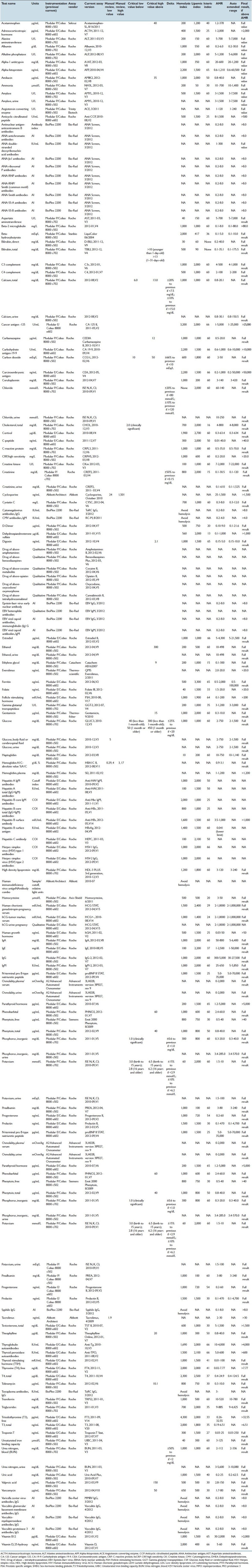

Validation of autoverification rules followed CLSI guideline AUTO10-A.[15] Key elements of the validation protocol are pretesting, simulated patient testing, testing using clinical specimens, approval of documentation, and finally, implementation and maintenance of rules. Where possible, clinical specimens with unusual properties (e.g. extremely low or high concentrations of a specific analyte, or very icteric or lipemic specimens) were saved so that rules could be tested with real specimens. Validation included thorough testing of the upper and lower limits of ranges (e.g. reference ranges, absurd ranges), including values at the boundaries of these ranges. Rules were tested individually and in combination. Lastly, the integrity of results transmitted to the LIS and on to the hospital electronic medical record was tested. Common pitfalls encountered during the evolution of autoverification rules were unexpected instrument errors and difficulty finding specimens with the values needed for testing. When specimens with certain properties could not be found, simulated testing had to suffice. Table 1 contains parameters for all the chemistry assays, including manual review limits, critical values, delta checks, interference indices (hemolysis, icterus, lipemia), AMR, and auto-extended range (for automated dilution protocols).

Table 1.

Parameters for all the chemistry assays, including manual review limits, critical values, delta checks, interference indices (hemolysis, icterus, lipemia), AMR, and auto-extended range (for automated dilution protocols)
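The boundary testing described in the validation protocol can be made systematic by generating test values just below, at, and just above each limit, then checking a rule against the expected outcome. The sketch below is hypothetical. `boundary_cases`, `failing_cases`, the reporting resolution, and the assumption that limits are inclusive are illustrative choices. The 2.5 to 7.5 range in the example is not taken from Table 1.

```python
from decimal import Decimal
from typing import Callable, List, Tuple

Case = Tuple[Decimal, bool]  # (test value, expected "inside range")


def boundary_cases(low: str, high: str, resolution: str) -> List[Case]:
    """Test values one reporting step below, at, and above each limit.

    Decimal strings avoid binary floating-point surprises right at a
    limit. Limits are treated as inclusive, which is an assumption.
    """
    lo, hi, step = Decimal(low), Decimal(high), Decimal(resolution)
    values = [lo - step, lo, lo + step, hi - step, hi, hi + step]
    return [(v, lo <= v <= hi) for v in values]


def failing_cases(rule: Callable[[Decimal], bool], cases: List[Case]) -> List[Case]:
    """Cases where the rule under test disagrees with the expected outcome."""
    return [(v, expected) for v, expected in cases if rule(v) != expected]


def correct_rule(v: Decimal) -> bool:
    return Decimal("2.5") <= v <= Decimal("7.5")


def off_by_one_rule(v: Decimal) -> bool:
    # Deliberate bug for illustration: the lower limit itself is excluded.
    return Decimal("2.5") < v <= Decimal("7.5")


cases = boundary_cases("2.5", "7.5", "0.1")
print(failing_cases(correct_rule, cases))     # []
print(failing_cases(off_by_one_rule, cases))  # [(Decimal('2.5'), True)]
```

A rule that is wrong only at the exact limit passes every test on typical patient values. That is why explicit boundary cases catch it.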

The protocols permit autoverification of critical values provided all other rules are met. Whether or not a critical value autoverifies, a printout is generated that directs laboratory staff to notify the ordering provider by telephone and to document this notification (including read-back and verification of the result by the recipient of the call). A call center within the core laboratory handles the majority of critical value reporting during business hours; when the call center is closed, technologists within the laboratory handle critical value reporting. Critical value reporting and appropriate documentation of the provider call are monitored as a quality metric, and late reports identify critical values for which provider notification was not documented.

RESULTS

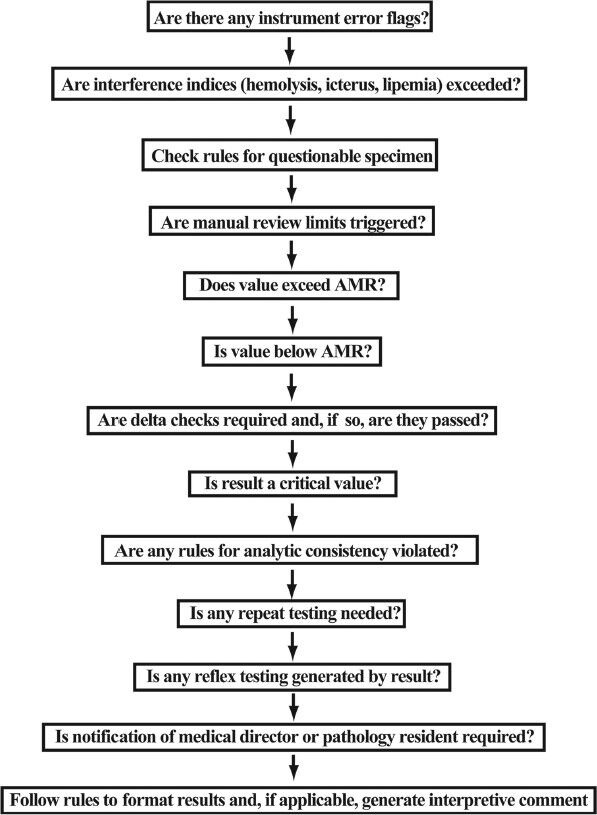

Figure 1 shows a schematic diagram of the autoverification rules used for the chemistry assays, and Table 2 specifies the consequences of each step in greater detail. Some conditions, such as instrument error flags or violations of manual review limits or delta checks, prevent autoverification outright. Note that a critical value does not by itself preclude autoverification.

Figure 1.

Diagram of main rules impacting autoverification
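The decision logic summarized in Figure 1 can be approximated in a few lines of code. This is a simplified sketch, not the laboratory's middleware rules. The check order, the `Limits`, `Result`, and `Decision` structures, the action strings, and the potassium-like numbers in the example are all assumptions for illustration. It does capture the two behaviors stated in the text. Instrument flags, manual review limits, and failed delta checks block release, whereas a critical value only triggers a notification.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Range = Tuple[float, float]  # inclusive (low, high)


@dataclass
class Limits:
    """Hypothetical per-assay limits; real values would come from Table 1."""
    amr: Range            # analytical measurement range
    manual_review: Range  # outside this range -> hold for a technologist
    critical: Range       # outside this range -> phone notification


@dataclass
class Result:
    value: float
    instrument_flags: List[str] = field(default_factory=list)
    delta_failed: bool = False


@dataclass
class Decision:
    autoverify: bool
    actions: List[str]


def inside(value: float, r: Range) -> bool:
    return r[0] <= value <= r[1]


def evaluate(result: Result, lim: Limits) -> Decision:
    # Instrument error flags (e.g. short sample, bubble, clot) block release.
    if result.instrument_flags:
        return Decision(False, ["hold: instrument flag"])
    # Outside the AMR the measured number is not reportable as is.
    if not inside(result.value, lim.amr):
        return Decision(False, ["hold: outside AMR (dilute or review)"])
    actions: List[str] = []
    if not inside(result.value, lim.manual_review):
        actions.append("hold: manual review limit")
    if result.delta_failed:
        actions.append("hold: delta check")
    # A critical value does not block autoverification by itself, but a
    # notification prints whether or not the result autoverifies.
    if not inside(result.value, lim.critical):
        actions.append("print: call provider with critical value")
    held = any(a.startswith("hold") for a in actions)
    return Decision(not held, actions)


# Illustrative numbers only, not the laboratory's Table 1 values.
k = Limits(amr=(1.0, 10.0), manual_review=(2.0, 7.5), critical=(2.8, 6.2))
print(evaluate(Result(4.0), k))  # autoverifies, no actions
print(evaluate(Result(6.5), k))  # autoverifies, critical-value printout
print(evaluate(Result(8.0), k))  # held: manual review limit (and printout)
```

Because manual review limits sit outside the critical limits in this example, a moderately critical value still autoverifies, while an extreme one is held for a person to examine.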

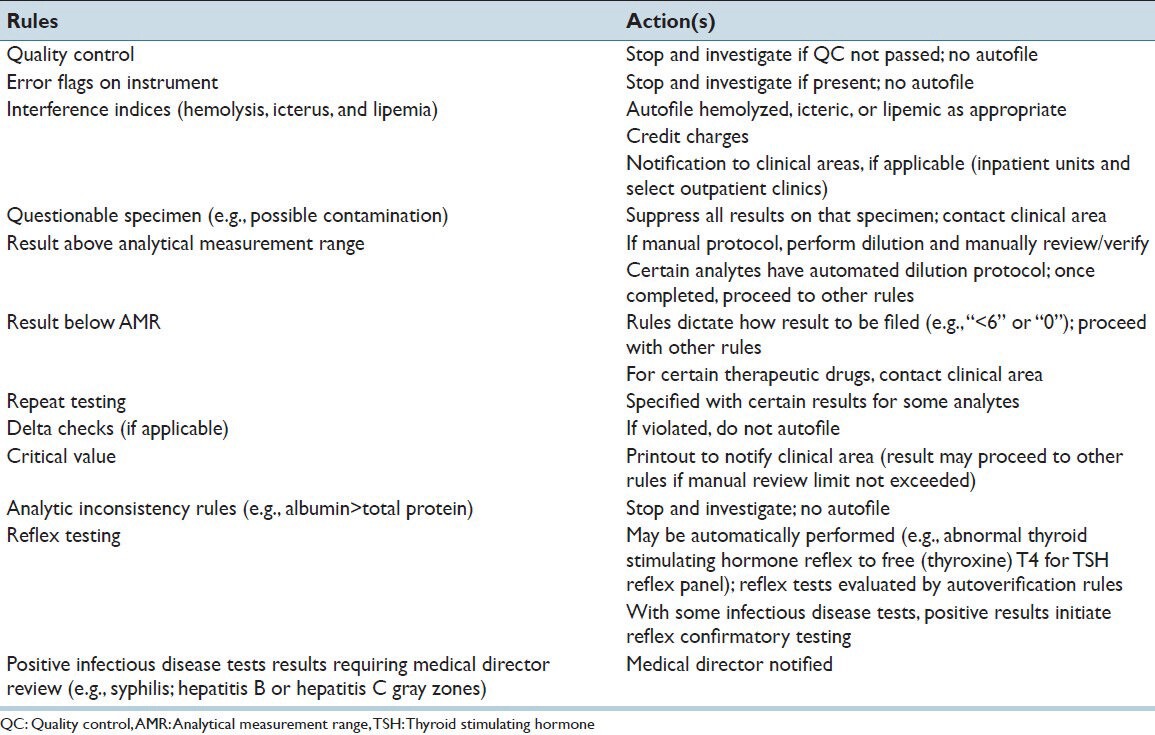

Table 2.

Autoverification rules and actions

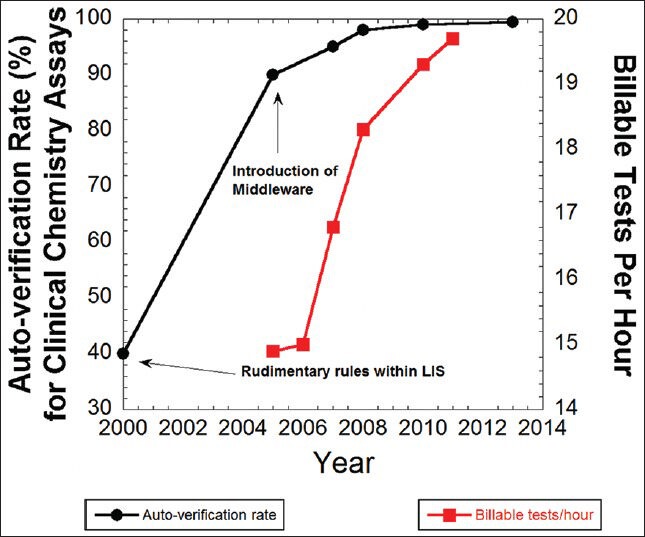

Figure 2 shows the increasing rate of autoverification over the years in the core chemistry laboratory. Starting from an autoverification rate of 40% in 2000 using rudimentary rules within the LIS, the rate increased to 95% by 2007 with the initial implementation of middleware rules and then to 99.0% by 2010. The period from 2005 to 2010 also saw an increase in staff productivity, from 14.9 to 19.7 billable tests per hour [Figure 2]. During this period, annual testing volume increased from 2.7 million tests/year (2005) to 3.2 million tests/year (2010). Annual test volumes have remained relatively steady since 2010.

Figure 2.

Increases in autoverification rate (black circles) and staff productivity (red squares)
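The practical effect of each increase in autoverification rate is easiest to see as the number of results left for manual review. The calculation below is illustrative only. It applies a single assumed annual volume of 3.2 million tests (the 2010 figure, which the text reports as steady since then) to every rate, although the rates were actually measured in different years with different volumes.

```python
def held_for_review(annual_tests: int, autoverify_pct: float) -> int:
    """Results per year that would not autoverify at a given rate."""
    return round(annual_tests * (100.0 - autoverify_pct) / 100.0)


ANNUAL_TESTS = 3_200_000  # assumption: 2010 volume applied to every rate
for pct in (40.0, 95.0, 99.0, 99.5):
    held = held_for_review(ANNUAL_TESTS, pct)
    print(f"{pct:5.1f}% autoverified -> {held:>9,} held per year, ~{held / 365:,.0f} per day")

# Productivity change reported for 2005 to 2010: 14.9 -> 19.7 billable tests per hour.
print(f"{100 * (19.7 - 14.9) / 14.9:.0f}% increase")  # 32% increase
```

Under this assumption, moving from 99.0% to 99.5% halves the manual review workload (about 32,000 versus 16,000 results per year). The last half percentage point therefore matters more than its size suggests.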

The further increase in autoverification rate from 99.0% in 2010 to the current rate of 99.5% in 2013 was achieved by allowing autoverification of critical values (provided manual review limits and absurd ranges are not violated) and by auto-release of results affected by hemolysis, lipemia, or icterus. Exceeding an interference limit produces a text result of “hemolyzed”, “lipemic”, or “icteric” (i.e. no numeric result is provided), and the charge for that assay is credited. Tests cancelled due to interference can be overridden only at clinician request, in which case a disclaimer is appended. For critical values that autoverify, the clinical service is notified after autoverification (i.e. as long as autoverification conditions are met, filing of critical value results does not wait for clinician notification).
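The interference handling described above replaces the numeric result with text and credits the charge. A minimal sketch follows. The index limits, the order in which the three indices are checked, and the `Reported` structure are assumptions. The clinician-requested override, with its appended disclaimer, is not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class SerumIndices:
    hemolysis: float
    icterus: float
    lipemia: float


@dataclass
class IndexLimits:
    """Hypothetical per-assay limits; None means the assay is not affected."""
    hemolysis: Optional[float] = None
    icterus: Optional[float] = None
    lipemia: Optional[float] = None


@dataclass
class Reported:
    value: Union[float, str]
    credit_charge: bool = False


def apply_interference(value: float, idx: SerumIndices, lim: IndexLimits) -> Reported:
    """Return text instead of a number when any index exceeds its limit."""
    # Checking hemolysis first, then icterus, then lipemia is an assumption.
    checks = (
        ("hemolyzed", idx.hemolysis, lim.hemolysis),
        ("icteric", idx.icterus, lim.icterus),
        ("lipemic", idx.lipemia, lim.lipemia),
    )
    for text, measured, limit in checks:
        if limit is not None and measured > limit:
            return Reported(text, credit_charge=True)  # no numeric result
    return Reported(value)


limits = IndexLimits(hemolysis=15.0)  # illustrative limit only
print(apply_interference(250.0, SerumIndices(40.0, 1.0, 10.0), limits))
print(apply_interference(250.0, SerumIndices(5.0, 1.0, 10.0), limits))
```

Returning a text result, rather than simply holding the specimen, is what allows these results to be auto-released without exposing an unreliable number.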

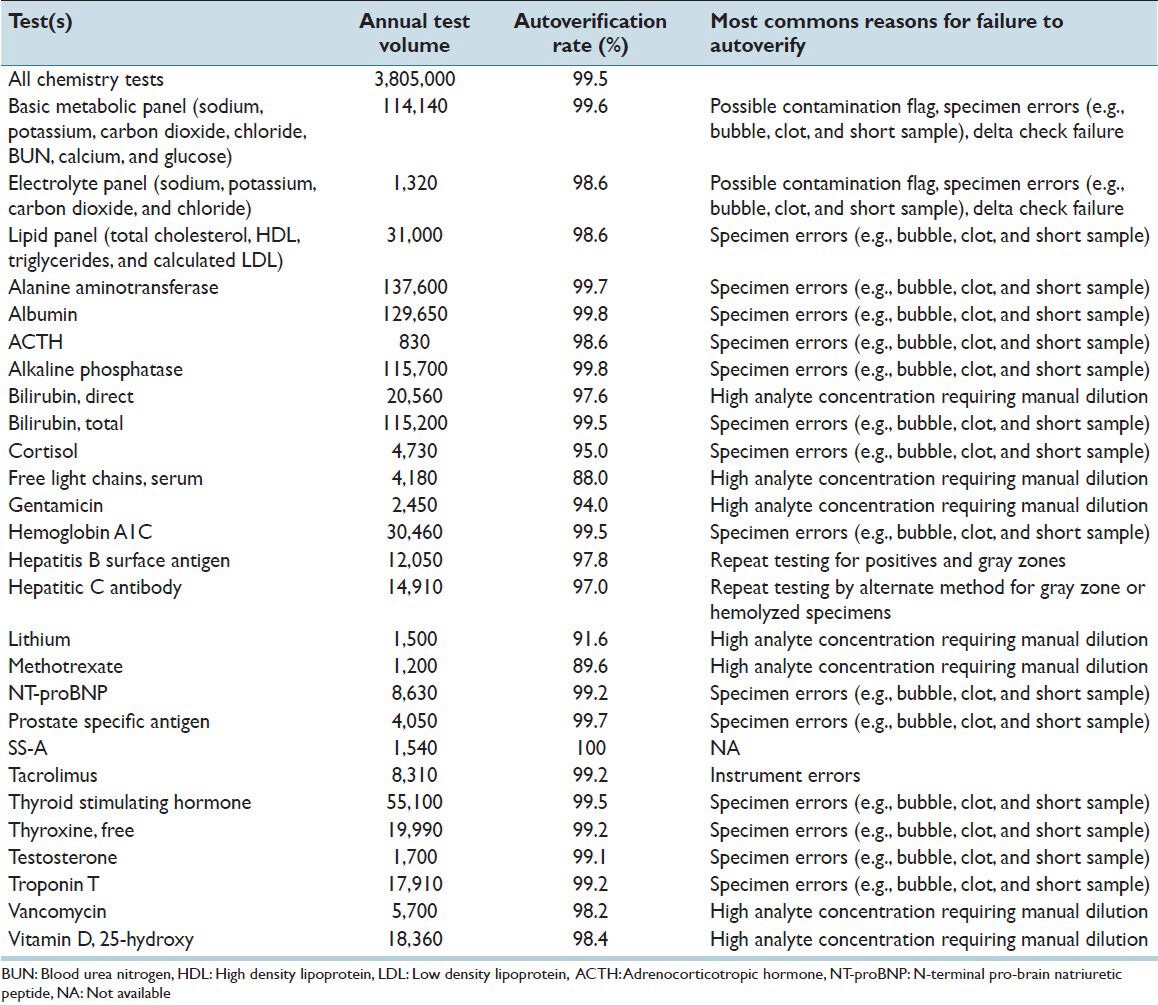

Table 3 shows current rates of autoverification for a variety of panels and individual assays. The overall rate of autoverification for all chemistry tests was 99.5%, measured over a 4-month period in 2013. The highest rates of autoverification occurred with the most frequently ordered tests such as the basic metabolic panel (sodium, potassium, chloride, carbon dioxide, creatinine, blood urea nitrogen (BUN), calcium, glucose; 99.6%), albumin (99.8%), and alanine aminotransferase (99.7%). The lowest rates of autoverification occurred with some therapeutic drug levels (e.g. gentamicin, lithium, and methotrexate) and with serum free light chains (kappa/lambda), mostly due to the need for offline dilution and manual filing of results. Table 3 also lists the most common reason that certain tests or test panels failed to autoverify. For the highest volume tests such as albumin or alanine aminotransferase, problems with the specimen (e.g. short sample, possible bubble, or clot) were the most common reason for failure to autoverify.

Table 3.

Autoverification rate

Several rules were put in place to address specific issues with assays or protocols. The total bilirubin assay in use shows rare interference from monoclonal gammopathy (most commonly those with IgM as the heavy chain).[16] This interference can be inferred when the total bilirubin (in mg/dL) and the numeric icteric index, which usually track closely, differ by more than 4. This interference has occurred approximately three to four times per year over the course of 3 years. Another rare problem is the hook effect in the myoglobin assay at very high myoglobin concentrations; dilution protocols were modified after this problem was identified.[17] A protocol for evaluating possible toxic alcohol or glycol exposure was developed based on the osmolal gap.[18,19]
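Both rules in this paragraph reduce to simple arithmetic on results from the same specimen. In the sketch below, the threshold of 4 comes from the text, but comparing the absolute difference (in either direction) is an assumption. The paper does not give its osmolal gap formula or cutoff. The function therefore uses one widely used calculated-osmolality formula in US conventional units (2 × Na + glucose/18 + BUN/2.8), omits any ethanol term, and leaves the cutoff to the caller.

```python
def bilirubin_icteric_discrepant(total_bili_mg_dl: float, icteric_index: float,
                                 max_difference: float = 4.0) -> bool:
    """True when total bilirubin and the icteric index differ by more than 4.

    The index normally tracks total bilirubin closely, so a large gap
    suggests assay interference (e.g. from a monoclonal protein).
    Using the absolute difference is an assumption.
    """
    return abs(total_bili_mg_dl - icteric_index) > max_difference


def osmolal_gap(measured_mosm_kg: float, sodium_mmol_l: float,
                glucose_mg_dl: float, bun_mg_dl: float) -> float:
    """Measured minus calculated osmolality (ethanol term omitted)."""
    calculated = 2 * sodium_mmol_l + glucose_mg_dl / 18 + bun_mg_dl / 2.8
    return measured_mosm_kg - calculated


print(bilirubin_icteric_discrepant(12.0, 3.0))  # True: differ by 9
print(bilirubin_icteric_discrepant(5.2, 4.8))   # False
print(round(osmolal_gap(320, 140, 90, 14), 1))  # 30.0 (calculated osmolality is 290)
```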

Another rule was put in place to detect weakly positive (gray zone) hepatitis B surface antigen assay results. This assay was originally reported as reactive or nonreactive, but a retrospective analysis determined that a high fraction of reactive specimens with quantitative results barely above the cutoff index were due either to recent hepatitis B vaccination (the vaccine contains surface antigen) or to false positives that were not confirmed by hepatitis B surface antigen neutralization assay.[20] Middleware rules print a notification directing staff to send the specimen out for confirmation by neutralization assay and to notify the pathology resident or attending pathologist. Following clinician notification, a gray zone result attributable to recent vaccination can lead to cancellation of the test to avoid falsely labeling the patient as hepatitis B positive. This avoids downstream problems such as notification of public health authorities.
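The gray-zone rule can be sketched as a three-way decision on the assay's quantitative cutoff index. The reactivity cutoff of 1.0 and the upper bound of the gray zone are hypothetical parameters here. The text says only that problem results were barely above the cutoff index. The action strings stand in for the middleware printout.

```python
NONREACTIVE = "report nonreactive"
GRAY_ZONE = "hold: send out for neutralization confirmation; notify pathologist"
REACTIVE = "report reactive"


def hbsag_action(cutoff_index: float, gray_zone_upper: float,
                 reactive_cutoff: float = 1.0) -> str:
    """Route a hepatitis B surface antigen result by its cutoff index.

    Results at or above the (assumed) reactivity cutoff but below the
    hypothetical gray-zone upper bound are held rather than released.
    """
    if cutoff_index < reactive_cutoff:
        return NONREACTIVE
    if cutoff_index < gray_zone_upper:
        return GRAY_ZONE
    return REACTIVE


for coi in (0.4, 1.8, 250.0):
    print(coi, "->", hbsag_action(coi, gray_zone_upper=5.0))  # 5.0 is illustrative
```

Holding these results, rather than auto-releasing them as reactive, gives the laboratory time to check for recent vaccination before a patient is labeled hepatitis B positive.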

DISCUSSION

Autoverification of laboratory test results is an essential component of increasing efficiency within the clinical laboratory.[1,2,3,4,5,6] Despite the importance of autoverification, there is relatively scant published literature on its practical application within a clinical laboratory over the course of years.

In this report, we present our experience with autoverification in a busy automated core chemistry laboratory in an academic medical center. The autoverification rules evolved over more than a decade, with a steady increase in autoverification rate to the current rate of 99.5%. The high rate of autoverification is driven in large part by the highest volume tests or test panels (e.g. basic metabolic panel, albumin, alanine aminotransferase, and troponin T), which all have autoverification rates exceeding 99.0%. This frees up staff time to deal with assays such as certain drug levels or endocrinology tests that require offline steps such as manual dilutions, or to investigate questionable test results. Some tests in our study currently have autoverification rates under 90%; however, these tests comprise a small fraction of the total test volume.

To our knowledge, there is very little published data on autoverification of critical values. Our workflows allow for critical value autoverification, provided no other rules are violated. Critical values still require provider notification and subsequent documentation; however, communication of these results by laboratory staff is facilitated by the provider often seeing the autoverified value prior to the call. For example, the emergency treatment center and intensive care units in our medical center use electronic displays or dashboards that continuously display patient data in restricted staff areas. Phone calls to document the critical value proceed more quickly when the laboratory test result has already been seen.

Despite the advantages of autoverification, potential drawbacks can arise. The validation of autoverification is time-consuming, and attention to detail is paramount. Even the most thorough validation plan can miss unexpected instrument error flags or other rare events. It is also not possible to test every conceivable combination of rules.

Informatics support is critical to successful implementation and maintenance of autoverification. The most common problems interfering with autoverification are interruptions of the network, LIS, middleware, and/or the interfaces between these systems. In our institution, we have maintained both production and shadow middleware servers in different geographic locations, in addition to separate test servers for initial testing of middleware rules without compromising the production system. This has reduced system downtime. The other risk with autoverification, and indeed with increased automation in general, is reduction of staff (both in number and in the mix of training and experience) to such a degree that staff cannot handle downtimes or other challenges without severely compromising turnaround time.

One benefit of computer rules is their ability to catch rare events that could elude manual verification. Two examples of such rules in our laboratory are plasma albumin exceeding total protein concentration (suggesting an instrument error on one test) and marked discrepancy between total bilirubin and icteric index (possibly caused by monoclonal gammopathy interference).[16] Each of these situations occurs fewer than 10 times per year in our laboratory, meaning that any particular laboratory staff member may see such an event only once a year or less. Even experienced personnel can miss such combinations of results, especially for patients who have multiple tests ordered on a specimen.
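A specimen-level consistency rule, such as albumin exceeding total protein, needs both results from the same specimen before it can fire. The sketch below is an assumption-laden illustration. The test codes `ALB` and `TP` are hypothetical. Holding both results, because the rule cannot tell which one is wrong, is a design choice, not a documented behavior of this laboratory's rules.

```python
from typing import Dict, List


def albumin_protein_holds(specimen_results: Dict[str, float]) -> List[str]:
    """Return the (hypothetical) test codes to hold when albumin exceeds total protein.

    The rule applies only when both tests were run on the same specimen.
    Both results are held because either one could be the erroneous value
    (e.g. a short sample or undetected bubble on one test).
    """
    albumin = specimen_results.get("ALB")
    total_protein = specimen_results.get("TP")
    if albumin is None or total_protein is None:
        return []
    return ["ALB", "TP"] if albumin > total_protein else []


print(albumin_protein_holds({"ALB": 4.1, "TP": 7.0, "NA": 139}))  # []
print(albumin_protein_holds({"ALB": 4.1, "TP": 2.9}))             # ['ALB', 'TP']
print(albumin_protein_holds({"ALB": 4.1}))                        # [] (no TP ordered)
```

A person reviewing dozens of results on one specimen can easily miss this relationship. The rule checks it every time.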

Our experience suggests that successful and continued use of autoverification requires investment in personnel and training over the course of years. Validation of autoverification rules requires high attention to detail. Rules should be based on published evidence and analysis of assays. The use of autoverification also does not obviate the need for careful quality control. Lastly, close collaboration between the clinical laboratory and computing services is key to ongoing success.

ACKNOWLEDGEMENTS

The autoverification rules presented in this manuscript, along with the instrument interfaces to middleware and the LIS on which these rules depend, were developed over the course of a number of years and would not have been possible without the hard work of many pathology and hospital computing staff. The authors gratefully acknowledge support from the Department of Pathology Informatics team (especially Sue Dane) and Hospital Computing Information Systems (especially Nick Dreyer, Karmen Dillon, Kathy Eyres, Rick Dyson, Kurt Wendel, and Jason Smith). The authors also express gratitude to Dr Ronald Feld (emeritus faculty member of University of Iowa Department of Pathology, previous medical director of the clinical chemistry laboratory) and Sue Zaleski (former manager of core laboratory) for supporting and encouraging early efforts to develop autoverification within the core laboratory.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2014/5/1/13/129450

REFERENCES

- 1. Crolla LJ, Westgard JO. Evaluation of rule-based autoverification protocols. Clin Leadersh Manag Rev. 2003;17:268–72.

- 2. Duca DJ. Autoverification in a laboratory information system. Lab Med. 2002;33:21–5.

- 3. Guidi GC, Poli G, Bassi A, Giobelli L, Benetollo PP, Lippi G. Development and implementation of an automatic system for verification, validation and delivery of laboratory test results. Clin Chem Lab Med. 2009;47:1355–60. doi: 10.1515/CCLM.2009.316.

- 4. Lehman CM, Burgener R, Munoz O. Autoverification and laboratory quality. Crit Values. 2009;2:24–7.

- 5. Pearlman ES, Bilello L, Stauffer J, Kamarinos A, Miele R, Wolfert MS. Implications of autoverification for the clinical laboratory. Clin Leadersh Manag Rev. 2002;16:237–9.

- 6. Torke N, Boral L, Nguyen T, Perri A, Chakrin A. Process improvement and operational efficiency through test result autoverification. Clin Chem. 2005;51:2406–8. doi: 10.1373/clinchem.2005.054395.

- 7. Vermeer HJ, Thomassen E, de Jonge N. Automated processing of serum indices used for interference detection by the laboratory information system. Clin Chem. 2005;51:244–7. doi: 10.1373/clinchem.2004.036301.

- 8. Kim JW, Kim JQ, Kim SI. Differential application of rate and delta check on selected clinical chemistry tests. J Korean Med Sci. 1990;5:189–95. doi: 10.3346/jkms.1990.5.4.189.

- 9. Lacher DA, Connelly DP. Rate and delta checks compared for selected chemistry tests. Clin Chem. 1988;34:1966–70.

- 10. Ovens K, Naugler C. How useful are delta checks in the 21st century? A stochastic-dynamic model of specimen mix-up and detection. J Pathol Inform. 2012;3:5. doi: 10.4103/2153-3539.93402.

- 11. Park SH, Kim SY, Lee W, Chun S, Min WK. New decision criteria for selecting delta check methods based on the ratio of the delta difference to the width of the reference range can be generally applicable for each clinical chemistry test item. Ann Lab Med. 2012;32:345–54. doi: 10.3343/alm.2012.32.5.345.

- 12. Sheiner LB, Wheeler LA, Moore JK. The performance of delta check methods. Clin Chem. 1979;25:2034–7.

- 13. Wheeler LA, Sheiner LB. A clinical evaluation of various delta check methods. Clin Chem. 1981;27:5–9.

- 14. Jones JB. A strategic informatics approach to autoverification. Clin Lab Med. 2013;33:161–81. doi: 10.1016/j.cll.2012.11.004.

- 15. CLSI. Autoverification of Clinical Laboratory Test Results; Approved Guideline. CLSI document AUTO10-A. Wayne, PA, USA: Clinical and Laboratory Standards Institute; 2006.

- 16. Bakker AJ, Mucke M. Gammopathy interference in clinical chemistry assays: Mechanisms, detection and prevention. Clin Chem Lab Med. 2007;45:1240–3. doi: 10.1515/CCLM.2007.254.

- 17. Kurt-Mangold M, Drees D, Krasowski MD. Extremely high myoglobin plasma concentrations producing hook effect in a critically ill patient. Clin Chim Acta. 2012;414:179–81. doi: 10.1016/j.cca.2012.08.024.

- 18. Ehlers A, Morris C, Krasowski MD. A rapid analysis of plasma/serum ethylene and propylene glycol by headspace gas chromatography. Springerplus. 2013;2:203. doi: 10.1186/2193-1801-2-203.

- 19. Krasowski MD, Wilcoxon RM, Miron J. A retrospective analysis of glycol and toxic alcohol ingestion: Utility of anion and osmolal gaps. BMC Clin Pathol. 2012;12:1. doi: 10.1186/1472-6890-12-1.

- 20. Rysgaard CD, Morris CS, Drees D, Bebber T, Davis SR, Kulhavy J, et al. Positive hepatitis B surface antigen tests due to recent vaccination: A persistent problem. BMC Clin Pathol. 2012;12:15. doi: 10.1186/1472-6890-12-15.