Abstract

Comparative effectiveness research (CER) can provide valuable information for patients, providers and payers. These stakeholders differ in their incentives to invest in CER. To maximize benefits from public investments in CER, it is important to understand the value of CER from the perspectives of these stakeholders and how that affects their incentives to invest in CER. This article provides a conceptual framework for valuing CER, and illustrates the potential benefits of such studies from a number of perspectives using several case studies. We examine cases in which CER provides value by identifying when one treatment is consistently better than others, when different treatments are preferred for different subgroups, and when differences are small enough that decisions can be made based on price. We illustrate these findings using value-of-information techniques to assess the value of research, and by examining changes in pharmaceutical prices following publication of a comparative effectiveness study. Our results suggest that CER may have high societal value but limited private return to providers or payers. This suggests the importance of public efforts to promote the production of CER. We also conclude that value-of-information tools may help inform policy decisions about how much public funds to invest in CER and how to prioritize the use of available public funds for CER, in particular targeting public CER spending to areas where private incentives are low relative to social benefits.

Comparative effectiveness research (CER) seeks to provide data on the relative merits of different strategies to prevent, diagnose or treat illness.[1] As such, CER aims to generate or synthesize information on the health outcomes, and possibly the costs, of alternative strategies that are valuable for patients, for healthcare practitioners and firms that provide care for those patients, and for payers.1 CER studies can vary greatly in cost, ranging from relatively inexpensive literature syntheses or retrospective analyses of data collected routinely in ‘real-world’ settings to costly clinical trials. The recent and expected future large investments of public funds in CER in the US have created great interest in understanding when and how CER may produce value and, accordingly, how best to prioritize investments in CER.[3]

In this article, we develop a conceptual approach to understanding the value of CER from the perspectives of multiple potential producers and beneficiaries of CER and to understanding how to prioritize public investments in CER given those potential benefits and incentives to invest. We argue that, in general, CER can be valuable when it can (i) identify when one intervention is consistently superior to another; (ii) identify subsets of patients in whom interventions with heterogeneous treatment effects are superior to others; or (iii) identify when interventions are equally effective, or at least sufficiently close in effectiveness, that decisions can be made based on price.

To explore these issues, this article examines two examples of the application of value-of-information (VOI) techniques to assess the value of CER based on models derived from cost-effectiveness methods, and examines a case study of a change in pharmaceutical prices with the publication of a comparative effectiveness study. A key suggestion of these examples is that CER may have high societal value but limited private returns. This sense in which CER can be a ‘public good’ – something valuable for society but with insufficient private incentives for its production – suggests the importance of public efforts to promote the production of CER. The findings also suggest some strategies to assess the appropriate levels, and allocation, of public funds to support CER.

Although our discussion focuses on the use of CER to study pharmaceuticals, we also note that CER can be applied to a host of other medical interventions, including medical devices and alternative modes of providing and organizing care. Different market models may apply across and within these classes of healthcare interventions, so we also note that the roles of public and private CER may vary accordingly.

1. A Conceptual Approach to the Value of Comparative Effectiveness Research (CER)

Perspective is a key component in the analysis of the value of CER. Patients may care most about which strategy will produce the best outcomes for them, and the value of the improved health produced by CER may often be the greatest component of its value. Such improvements in health might be measured by changes in mortality or morbidity, and these might be aggregated into summative measures of the value of health such as QALYs.[4] Individuals and organizations that contribute to the provision of care will also care about the interests of the patients they serve, but may also have an economic stake in the outcomes of comparative effectiveness studies because these may affect the sales of the products or services they provide. This can be true when the intervention being studied is a drug and the interested provider is a pharmaceutical company that sells it or when the intervention is a procedure or other service offered by a health professional.

Finally, payers may also have an interest in the outcomes of CER because of its potential influence on both costs and patient outcomes. Of course, to the extent that the results of such studies are not proprietary, even findings that improve outcomes or reduce costs may have little long-term effect on the profitability of payers if their competitors adopt the same practices and competition drives down prices. In this case, patients may be the long-term beneficiaries of comparative effectiveness studies. These forces affecting the distribution of the benefits of CER suggest that those benefits may not be readily captured by any single stakeholder group, which weakens each group's incentive to perform CER. This may cause insufficient investment in CER by private entities, creating a case for public investment in CER. To assess the case for such public investments, it is useful to assess the public and private returns from such studies.

One aspect of CER that is particularly important in assessing its value and the incentives to perform it is the simple fact that the outcome of research is uncertain. Broadly speaking, three outcomes are possible when a set of interventions (say, A and B) is compared. First, either A or B can be found to be better than the other. Second, A and B can each be found to be better than the other for defined subgroups. Third, A and B can be found to be identical in effectiveness, or at least close enough that interventions may be selected based on price.

These three basic outcomes have different implications for the interests of patients, providers (including for example, physicians and pharmaceutical companies) and payers.

1.1 Patients

Patients may benefit from the results of comparative effectiveness studies in all of these three cases. If A is better than B, or B better than A, patients can choose the better alternative, assuming cost is not a concern. If B and A are each found to be better under specific circumstances, the patient can select the better treatment for their specific circumstance, and if A and B are found to be identical or sufficiently close in efficacy, the patient may benefit from knowing that they can choose the cheaper of the two. In a competitive market, this will tend to drive down the cost of both, especially when A and B are pharmaceuticals for which the marginal cost is close to zero.[5]

1.2 Providers

Providers may benefit from CER when the intervention they provide is found to be superior to an alternative. Sometimes this may be apparent to them before any research is begun. If so, the incentive to perform a comparative effectiveness study may be strong because the provider may be able to increase the price and/or quantity of the intervention that they provide if the results of the study are in their favour. More often, however, there is some uncertainty about whether a treatment will be superior. As a result, a comparative effectiveness study may well produce an outcome that does not benefit the provider group. Even when a favourable result appears more likely, the study still carries risk, and risk-averse firms or specialty groups, or individual decision makers within them, might well be reluctant to bear that risk.

It is interesting to note that the adverse effect of such risk on the private incentives to perform research runs precisely opposite to the social benefits of research, in the sense that research is most valuable from the social perspective when there is the greatest uncertainty about the relative benefits of the alternatives being considered. Similarly, provider groups would fail to gain the full benefits of research when the intervention they provide is found to benefit only a subgroup of patients, as this would reduce the market size. Furthermore, if the treatment were found to be as effective as the alternatives, providers would likely be harmed by downward pressure on prices, unless the marginal cost of one alternative was sufficiently lower than the marginal cost of the others. However, this is unlikely to be the case for most pharmaceuticals, where marginal cost is usually very low.

Finally, providers will generally only have incentives to invest in CER if they have some ability to increase their prices or the quantity sold if the results of the study are favourable. While this is likely to be the case for pharmaceuticals or devices protected by patents, it is unlikely to be the case for pharmaceuticals or devices without patent protection or for specific approaches to providing care (e.g. a surgical technique) or modes of organizing care. Therefore, the overall conclusion is that the incentives for providers to perform CER will generally be less than the benefits of such research for patients, and that provider incentives may be far less than patient benefits in many instances.

1.3 Payers

Payers may benefit from CER in the short run if it allows them to help their patients choose options that produce better outcomes or that decrease costs. If the results suggest that a given option is preferred and that option is controlled by a private entity (such as a pharmaceutical company or medical specialty) that limits entry by potential competitors, such information may simply raise the price charged for the intervention, providing no benefit to the payer. Even if this is not the case, the results of a comparative effectiveness trial would be very unlikely to benefit a payer in the long run. A payer's decision about which intervention to pursue would be impossible to keep private and could easily be copied by competitors, eventually just driving down prices in its market and producing benefits that accrue to patients and to other insured individuals, to whom reductions in premiums would eventually be passed on. Again, as with the incentives for providers to conduct CER, the overall conclusion is that the incentives for payers to do this research will generally be far less than the benefits to patients.

For all these reasons, it is likely that the value of CER to patients will generally far exceed its value to those providing the interventions or to payers. This suggests the importance of public policies that increase investment in comparative effectiveness studies. However, these new investments are likely to be most beneficial if they can be informed by estimates of the value of CER.

2. Examples of Estimates of the Value of CER

The value of CER can be considered from the perspective of either the value of its final outcome or the value one might expect to gain before the research has been carried out. As noted, the outcome of CER can be valuable when it can (i) identify that one intervention is consistently superior to alternatives; (ii) identify subgroups of patients in whom one or another intervention is superior; and (iii) identify that interventions are sufficiently close in efficacy that decisions can be made based on cost. While the value of any given study can be determined only after it is complete, the prospective value of a given study can be assessed before it is executed, based on the likelihood and value of the potential outcomes of the study. Such calculations are based on a conceptual framework called value of information (VOI) analysis, which calculates the expected (average) gain in welfare from research by multiplying the probability of each possible outcome of the research by the gain in welfare that would arise from that outcome, and summing across outcomes. This idea has its origins in work in statistical decision theory developed in the 1950s,[6,7] and the theory has more recently been extended, with increasing degrees of generality, to apply the VOI concept to assessing the value of research on the effectiveness of medical interventions.[8–10]

The basic mathematical structure of a VOI calculation is most easily illustrated by a case in which there is a current intervention (A) with known benefit ($\text{Ben}_A$) and we wish to consider a new intervention (B), where research comparing the two could have two outcomes: discovery that A provides greater benefits than B ($\text{Ben}_A > \text{Ben}_B$) or discovery that B provides greater benefits than A ($\text{Ben}_B > \text{Ben}_A$). Assume that, based on prior studies, the probability that $\text{Ben}_A > \text{Ben}_B$ is known to be $p_{A>B}$ and the probability that $\text{Ben}_B > \text{Ben}_A$ is $p_{B>A} = 1 - p_{A>B}$. If A is found to be better than B, then research does not change decisions from the current choice of A. However, if B is found to be better than A, then the decision changes from A to B and the difference in outcome between them is received as a benefit. Therefore, the expected value of the research is shown by equation 1:

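$$\text{Expected value of research} = p_{A>B} \times 0 + p_{B>A} \times (\text{Ben}_B - \text{Ben}_A) = p_{B>A}\,(\text{Ben}_B - \text{Ben}_A) \qquad (1)$$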
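The calculation in equation 1 generalizes to any number of study outcomes: weight the welfare gain under each outcome by its probability and sum. The minimal Python sketch below assumes the outcome probabilities and gains are already known; the function name and the example inputs are hypothetical and chosen only for illustration.

```python
# Minimal sketch of a discrete value-of-information calculation:
# expected gain = sum over study outcomes of probability * welfare gain.
# The function name and inputs are hypothetical, for illustration only.

def expected_value_of_research(outcomes):
    """outcomes: iterable of (probability, welfare_gain) pairs.

    Probabilities must sum to 1; the gain is 0 for any outcome that
    leaves the current decision unchanged.
    """
    outcomes = list(outcomes)
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * gain for p, gain in outcomes)


# Two-outcome case of equation 1 with hypothetical inputs:
# A stays best (no change, gain 0) or B is found better (gain Ben_B - Ben_A).
p_b_better = 0.3          # hypothetical p_{B>A}
ben_a, ben_b = 5.0, 7.0   # hypothetical benefits, e.g. in life-years
evi = expected_value_of_research([(1 - p_b_better, 0.0),
                                  (p_b_better, ben_b - ben_a)])
print(round(evi, 6))  # 0.6
```

Passing more than two (probability, gain) pairs covers studies with more than two possible outcomes without changing the function.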

This basic calculation ignores many complexities that are relevant to considering incentives to invest in CER, including, for example, what the decision should be in the absence of additional research. To explore some of these complexities that can affect the incentives to invest in CER, it is useful to consider concrete examples that address the three cases we have already discussed: (i) when one intervention is consistently superior; (ii) when effectiveness varies across subgroups; and (iii) when effectiveness is similar.

2.1 Example 1: The Case of Consistent Superiority

A simple example illustrates the principles behind VOI analysis in the case in which the aim is to determine whether one intervention or the other is superior for all relevant patients. Imagine that clinical interventions A and B are both considered in a population of 100 people with a given clinical condition, but it is not clear which intervention is better. Assume that life expectancy is the clinically relevant outcome for this condition. Specifically, imagine that there is a 20% chance that B offers a benefit of 8 years of life expectancy over A and an 80% chance that A offers a benefit of 2 years of increased life expectancy over B. Thus, the expected gain per person from choosing B over A is given by equation 2:

$$0.2 \times 8 + 0.8 \times (-2) = 1.6 - 1.6 = 0 \qquad (2)$$

where the result, 0, is the expected gain in life-years from choosing B over A. Therefore, if decision makers are risk neutral, they would be indifferent between these options without additional information.2 This is a very special case in that each choice is equally good in expected value, so that merely making decisions based on current information has no particular value; in general, one or another decision would produce greater expected value, and merely identifying that existing evidence already favours one option could be of value if not all people are currently choosing that option.

Our focus here is deciding when collecting additional information would be of value. The value of such information will partly depend on what decisions people are making given current information. Given the equal value of these expected outcomes in this case, assume that, without any additional information, 50 people choose A and 50 people choose B. Of course, other assumptions about the baseline choice in the absence of information could be made, but this baseline is useful because it illustrates how decisions can change under either outcome of the study (i.e. whether A or B is found to be superior) and how the value of research depends on the treatments chosen before the research is performed.

Consider now the possible gains in welfare that could come from different outcomes of the study. Assume B is found to be better. In this case, the 50 people already choosing B continue to choose B, but the 50 people who would have chosen A now choose B and gain 8 years of life each. This produces a gain of 8 × 50 = 400 life-years, and occurs with a probability of 0.2, given our assumptions above. In contrast, consider the case in which A is found to be better. In this case, the 50 people choosing A continue their choice, while the 50 choosing B now switch to A, and gain 2 life-years each, producing a gain of 50 × 2 = 100 life-years. This occurs with a probability of 0.8. Combining these two outcomes, we see that research would produce a gain of 400 life-years with a probability of 0.2, and 100 life-years with a probability of 0.8. Thus, the expected gain in life-years is shown by equation 3:

$$0.2 \times 400 + 0.8 \times 100 = 80 + 80 = 160 \text{ life-years} \qquad (3)$$
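The arithmetic of Example 1 can be reproduced directly from the stated assumptions. This short Python sketch uses only the numbers given in the text (the variable names are ours) and recovers the results of equations 2 and 3.

```python
# Example 1 recomputed from the numbers stated in the text:
# 100 patients, 50 on A and 50 on B before any research.
P_B_BETTER = 0.2   # probability that B is better, by 8 life-years
P_A_BETTER = 0.8   # probability that A is better, by 2 life-years
GAIN_B, GAIN_A = 8, 2
N_ON_A, N_ON_B = 50, 50

# Equation 2: expected gain per person from choosing B rather than A now.
expected_b_over_a = P_B_BETTER * GAIN_B - P_A_BETTER * GAIN_A

# Equation 3: if B wins, the 50 on A switch; if A wins, the 50 on B switch.
gain_if_b_wins = N_ON_A * GAIN_B   # 400 life-years
gain_if_a_wins = N_ON_B * GAIN_A   # 100 life-years
expected_gain = P_B_BETTER * gain_if_b_wins + P_A_BETTER * gain_if_a_wins

print(round(expected_b_over_a, 9), round(expected_gain, 9))  # 0.0 160.0
```

Changing `N_ON_A` and `N_ON_B` shows how the value of the same study depends on the baseline choices people make without it.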

2.1.1 Specific Example

While this simplified example is a useful illustration, it may be more instructive to consider a specific real-world example, namely the expected value of resolving residual uncertainty about the right choice of antipsychotic agents for patients with schizophrenia. This is a complex problem involving both differences in many dimensions of effectiveness and differences in costs among the alternative treatments. This question has recently been the subject of the large, National Institutes of Health-funded, randomized CATIE (Clinical Antipsychotic Trials of Intervention Effectiveness) trial examining the comparative effectiveness of typical and atypical antipsychotic agents in schizophrenia.[11] The results of the CATIE trial have been controversial, with some viewing them as strong evidence that atypical antipsychotics do not offer treatment benefits, and others highlighting a variety of limitations of the study, including that the primary outcome was continuation on a medication and that effects on quality of life (QOL) were estimated with considerable imprecision.

Meltzer et al.[12] re-examined the results of the CATIE trial from a VOI perspective. They first used data on the incidence, prevalence and mortality associated with schizophrenia in the US and found that approximately 3.9 million prevalent cases and approximately 50 000 annual incident cases of schizophrenia in the US would potentially benefit from better choices of antipsychotic agents. They then calculated the value of finding evidence of varying levels of difference in efficacy between the agents studied, assuming the commonly held estimate that a QALY is worth $US100 000. To do this, they used the means and 95% confidence intervals reported in the CATIE study for the incremental effects of an atypical antipsychotic (ziprasidone, used as the prototype) compared with a first-generation antipsychotic (perphenazine): 0.011 (−0.005, 0.0275) on QOL[12] and $US861 (−1829, 4742) in monthly costs. From these, they calculated the expected net dollar value (health benefits minus costs) of determining the best option among the drugs studied in CATIE, using a net benefit framework that identifies which therapy offers the greatest benefits minus costs.
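The net benefit framework is often operationalized as follows: with a willingness to pay of lam dollars per QALY, the incremental net benefit of the new option is INB = lam × ΔQALY − ΔC, and the per-patient expected value of perfect information is E[max(0, INB)] − max(0, E[INB]). The Python sketch below assumes, purely for illustration, that INB is normally distributed, and checks a simulation estimate against the closed-form normal result. The inputs are hypothetical; this is not the model used by Meltzer et al.[12] and it does not reproduce their figures.

```python
import random
from statistics import NormalDist

# Hypothetical sketch: per-patient expected value of perfect information
# (EVPI) for a two-option choice, assuming the incremental net benefit
# INB = lam * dQALY - dCost of the new option is normally distributed.
# Inputs below are illustrative, not estimates from CATIE.

def evpi_closed_form(mu, sd):
    """E[max(0, INB)] - max(0, E[INB]) for INB ~ Normal(mu, sd), sd > 0."""
    z = mu / sd
    std = NormalDist()  # standard normal
    expected_max = sd * std.pdf(z) + mu * std.cdf(z)
    return expected_max - max(0.0, mu)

def evpi_monte_carlo(mu, sd, n=200_000, seed=1):
    """Same quantity estimated by simulating INB draws."""
    rng = random.Random(seed)
    draws = [rng.gauss(mu, sd) for _ in range(n)]
    expected_max = sum(max(0.0, x) for x in draws) / n
    return expected_max - max(0.0, mu)

lam = 100_000            # dollars per QALY, as assumed in the text
mu = lam * 0.01 - 500    # hypothetical mean INB in dollars
sd = 2_000               # hypothetical standard deviation of INB
print(round(evpi_closed_form(mu, sd), 2), round(evpi_monte_carlo(mu, sd), 2))
```

Multiplying a per-patient EVPI by the number of patients affected, and discounting over time, gives population-level figures of the kind reported in the next paragraph.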

Discounting future benefits and costs at 3%, the VOI for each year's cohort of incident cases was estimated at $US6.6 billion over the cohort's lifetime; for annual cohorts continuing indefinitely into the future, this is worth $US6.6 billion/0.03 = $US220 billion. For the prevalent cases over their remaining lifetime, the VOI was $US207 billion, after discounting at 3%. Thus, the VOI of comparative effectiveness data to determine the true comparative effectiveness of atypical antipsychotics was $US220 billion + $US207 billion = $US427 billion. Even assuming that this information would remain valuable for only the next 20 years, comparative effectiveness studies that could definitively identify the preferred single therapy for schizophrenia would still be worth $US308 billion (year 2002 values).
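The discounting steps can be checked in a few lines of Python. The sketch below takes the reported figures as inputs (in billions of 2002 US dollars) and values the stream of incident cohorts both as a level perpetuity and as a 20-year annuity. The text does not state a timing convention; in this sketch the $US220 billion figure matches payments starting one year out, while the $US308 billion figure is matched most closely when the 20 annual payments start immediately. That reading is our inference, not the authors' stated method.

```python
# Sketch of the discounting arithmetic in the CATIE example.
# Inputs are the figures reported in the text (billions of 2002 US dollars).
RATE = 0.03
INCIDENT_PER_YEAR = 6.6   # lifetime VOI of each year's incident cohort
PREVALENT = 207.0         # VOI for current prevalent cases

def perpetuity(payment, rate):
    """Present value of a level payment forever, first payment in one year."""
    return payment / rate

def annuity(payment, rate, years, due=False):
    """Present value of a level payment for `years` years.

    due=False: payments at the end of each year; due=True: at the start.
    """
    pv = payment * (1 - (1 + rate) ** -years) / rate
    return pv * (1 + rate) if due else pv

incident_forever = perpetuity(INCIDENT_PER_YEAR, RATE)
total_forever = incident_forever + PREVALENT
total_20y_end = annuity(INCIDENT_PER_YEAR, RATE, 20) + PREVALENT
total_20y_start = annuity(INCIDENT_PER_YEAR, RATE, 20, due=True) + PREVALENT

print(round(incident_forever, 1), round(total_forever, 1),
      round(total_20y_end, 1), round(total_20y_start, 1))
# 220.0 427.0 305.2 308.1
```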

The CATIE study has been criticized on a number of grounds, but one of the most important is that specific drugs may have efficacy and adverse effects that vary across identifiable individuals or subgroups. This suggests that having more than one drug readily available for patients may have advantages. This is a case in which CER offers the benefit of identifying variable effectiveness across different subgroups, and is the subject of the next example.

2.2 Example 2: The Case of Variable Effectiveness across Subgroups

For illustration, it is useful to return to the earlier example of interventions A and B. As before, assume that the likelihood and magnitude of the benefits potentially arising from a comparative effectiveness study, if the result were to be the choice of a single therapy for the whole population, are as given previously. Now assume, in addition, that there are two types of patients, type 1 and type 2, each constituting half of the population of 100 individuals with the condition (50 people each). Assume that patients of both types are known to respond identically to treatment A, but there are reasons to suspect that types 1 and 2 respond differently to treatment B. Imagine, as before, that there is a 20% chance that treatment B is superior to treatment A on average across type 1s and type 2s, with an average benefit of 8 years, but that this results from a benefit of 20 years of life expectancy for type 1s and a harm of 4 years of life for type 2s. Now reconsider the value of CER. As before, if A is the superior single treatment overall (80% likelihood), the gain when a single therapy is chosen for all patients is the same (100 life-years). If B is the superior single treatment overall, the gain is as shown by equation 4:

$$50 \times 8 = 25 \times 20 + 25 \times (-4) = 500 - 100 = 400 \text{ life-years} \qquad (4)$$

reflecting the effects on the 50 patients who were receiving A previously, which is again the same average total benefit from B (400 life-years over half of the population) as in the earlier example. However, the difference in this case is that this 8-year average benefit is made up of a large benefit for the 25 type 1 patients and a harm for the 25 type 2 patients previously taking A.

Now assume that CER can reveal that these two groups differ in the benefits they receive from treatments A and B so that therapy can be targeted to them. In this case, for type 1s there is a 20% chance that B will turn out to be the best choice, with a gain of 20 years, and an 80% chance that A will turn out to be the best choice, with a gain of 2 years. For type 2s, A is always the best choice, but with a 20% chance that the gain will be 4 years and an 80% chance that the gain will be 2 years. Assume, moreover, that before CER was conducted, types 1 and 2 were evenly distributed at random across A and B, with 25 type 1s and 25 type 2s each receiving A and B. This creates some complexity in that we now have two types (1 and 2) and two initial treatments (A and B), but it is important for illustrating how the value of research can vary depending both on subgroups of patient benefit and on subgroups of treatment choice in the absence of additional research.

With this, we can now calculate the benefits of the comparative effectiveness information that allows targeting of therapy to the two types. For the type 1s, of whom 25 were originally receiving A and 25 were receiving B, the 20% chance that B is 20 years better than A would mean that the 25 type 1s receiving A would switch to B and gain 20 life-years, while the 80% chance that A is 2 years better than B would mean that the 25 type 1s receiving B would gain 2 years by switching to A. Thus, the expected gain for type 1s from this CER would be as shown in equation 5:

$$0.2 \times (25 \times 20) + 0.8 \times (25 \times 2) = 100 + 40 = 140 \text{ life-years} \qquad (5)$$

For the type 2s (25 receiving A and 25 receiving B), the 20% chance that B is 4 years worse than A would mean the 25 type 2s receiving B would switch to A and gain 4 life-years, while the 80% chance that A is 2 years better than B would mean the same 25 type 2s originally receiving B would gain 2 years by switching to A. The total expected gain for type 2s would be as shown by equation 6:

$$0.2 \times (25 \times 4) + 0.8 \times (25 \times 2) = 20 + 40 = 60 \text{ life-years} \qquad (6)$$

Adding the expected benefits of this comparative effectiveness information for types 1 and 2 produces a benefit of 140 + 60 = 200 life-years. This is larger than the 160 life-years noted previously for the same example if a single therapy had to be chosen for patients of all types, and illustrates that, for any given average treatment difference between two alternative treatments, the presence of heterogeneity in treatment effects will increase the value of CER that can identify these differences and guide treatment based on them.
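The comparison between a single pooled therapy and targeted therapy can be reproduced from the numbers in the text. The Python sketch below (variable names are ours) recomputes equations 3 to 6 and shows the 160 versus 200 life-year contrast.

```python
# Example 2 recomputed from the numbers in the text (names are ours).
N = 25                         # patients per (type, initial treatment) cell
P_B_WINS, P_A_WINS = 0.2, 0.8  # probabilities of the two study outcomes

# Equation 4: if B wins overall, the 50 patients on A switch to B;
# type 1s gain 20 life-years each, type 2s lose 4.
gain_b_wins_pooled = N * 20 + N * (-4)

# Single therapy for everyone: if A wins, the 50 on B gain 2 each.
pooled = P_B_WINS * gain_b_wins_pooled + P_A_WINS * (2 * N * 2)

# Targeted therapy, equations 5 and 6.
type1 = P_B_WINS * (N * 20) + P_A_WINS * (N * 2)  # 25 on A move to B, or 25 on B move to A
type2 = P_B_WINS * (N * 4) + P_A_WINS * (N * 2)   # in both cases the 25 on B move to A
targeted = type1 + type2

print(gain_b_wins_pooled, round(pooled, 6), round(type1, 6),
      round(type2, 6), round(targeted, 6))
# 400 160.0 140.0 60.0 200.0
```

The extra 40 life-years come entirely from no longer moving type 2s onto B in the scenario where B wins on average.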

2.2.1 Individualized Treatment

The idea that there are subgroups of patients for whom one therapy is preferred over another is taken to its extreme in the case of individualized therapy in which the best therapy is chosen for any given person based on their own attributes. In an earlier study,[13] we developed this idea of the expected value of individualized care and calculated it in the case of the choice of treatment for localized prostate cancer. Prostate cancer treatment is controversial because there can be great variability in how a cancer will progress, because the treatments (typically surgery, radiation or watchful waiting) can have significant adverse effects, and because patients’ preferences for these outcomes can vary.

We incorporated these factors into a complex decision-analytic model of the value of prostate cancer treatment in terms of both healthcare costs and gains in quality-adjusted life-expectancy.[13] To develop a single unified metric, we converted these gains into dollars. Interestingly, when compared with watchful waiting, we found that the expected value of determining the best single treatment that would be applied across all men is only about $US29 per 65-year-old prostate cancer patient, while the benefit of finding the best therapy for each man given his preferences and individual clinical attributes is about 100 times higher at $US2958 per 65-year-old patient (year 2003 values). Viewed at a population level in the US, this is worth over $US70 million annually and $US2.3 billion in discounted value (at 3% per year) for this stream of benefits occurring into the indefinite future.
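The two headline figures are consistent with simple arithmetic, on the assumption (ours) that the annual population benefit is treated as a level stream continuing indefinitely and discounted at 3%:

$$\frac{\$2958}{\$29} \approx 102, \qquad \frac{\$70 \text{ million per year}}{0.03} \approx \$2.33 \text{ billion}$$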

That the benefits of individualized therapy are so large compared with the benefits of finding a single best therapy may seem surprising at first, but is less surprising when one understands that heterogeneity in patient preferences and other clinical attributes among men with localized prostate cancer is such that the three major treatments are each chosen by approximately the same number of men. Thus, selecting a single treatment would inherently lead to substantial mismatching of treatments to patients. This is where CER shows its great potential for value in helping to identify subgroups of patients most likely to gain from specific treatments. Historically, such subgroups have often been identified using clinical attributes or diagnostic tests, but it is likely that in the future these will also be complemented increasingly by genetic testing, producing even larger potential gains from CER.
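Both quantities can be sketched as averages over patients: the single-best-treatment value is the highest of the treatments' average net benefits, while the individualized value averages each patient's own best option. The difference expresses, in simplified form, the idea of the expected value of individualized care. The toy Python sketch below assumes perfect knowledge of each patient's net benefits and uses hypothetical numbers in which each treatment is best for one third of patients; it illustrates the mechanism described in the text, not the model of ref [13].

```python
# Toy sketch (hypothetical numbers): why tailoring treatment to each patient
# can be worth far more than finding the single best treatment on average.
# Rows are patients (or patient types); values are net benefits in
# arbitrary units. These are NOT estimates from the prostate cancer model.
net_benefit = [
    {"surgery": 10.0, "radiation": 6.0, "watchful_waiting": 5.0},
    {"surgery": 5.0, "radiation": 10.0, "watchful_waiting": 6.0},
    {"surgery": 6.0, "radiation": 5.0, "watchful_waiting": 10.0},
]
treatments = list(net_benefit[0])

def mean(values):
    values = list(values)
    return sum(values) / len(values)

# Single best treatment: the option with the highest average net benefit.
single_best = max(mean(row[t] for row in net_benefit) for t in treatments)

# Individualized care: every patient receives the option best for them.
individualized = mean(max(row.values()) for row in net_benefit)

value_of_individualizing = individualized - single_best
print(single_best, individualized, value_of_individualizing)  # 7.0 10.0 3.0
```

Because each treatment is best for an equal share of patients, every single-treatment policy has the same average, and all of the available gain comes from matching treatments to patients.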

The large numbers reported here for the value of research illustrate the very high potential benefit of CER for patients in terms of health, but it is also possible that CER produces changes in prices that could affect the welfare of both patients and the entities that provide the products and services studied. For example, if an intervention that is of moderate benefit in the population as a whole is found to offer most or all of its benefits in a small subgroup, the price might be increased for the group with greater benefit, so that the total cost of the intervention over the population might not fall. This contrasts with the general intuition that data suggesting a lack of efficacy of an intervention in some group will predictably decrease total spending on that intervention. That general intuition seems most likely to hold when two alternatives are found to be equally efficacious. Such a case is the subject of the example in the next section.

2.3 Example 3: The Case of Similar Effectiveness with Effects on Price

As noted, another benefit of CER may be to determine when available treatments are sufficiently similar in their effectiveness in treating a particular condition that a decision can be made based on price. Economic theory suggests that, when effectiveness is similar among alternatives, price competition should be greater, ultimately leading to price decreases. Specific evidence in the pharmaceutical sector provides strong support for price being highly responsive to the availability of close substitutes, for example from generics.[14] To the extent that CER produces evidence of therapeutic equivalence, it might be expected to produce downward effects on price very similar to those of generic entry. However, this hypothesis has received relatively little attention.

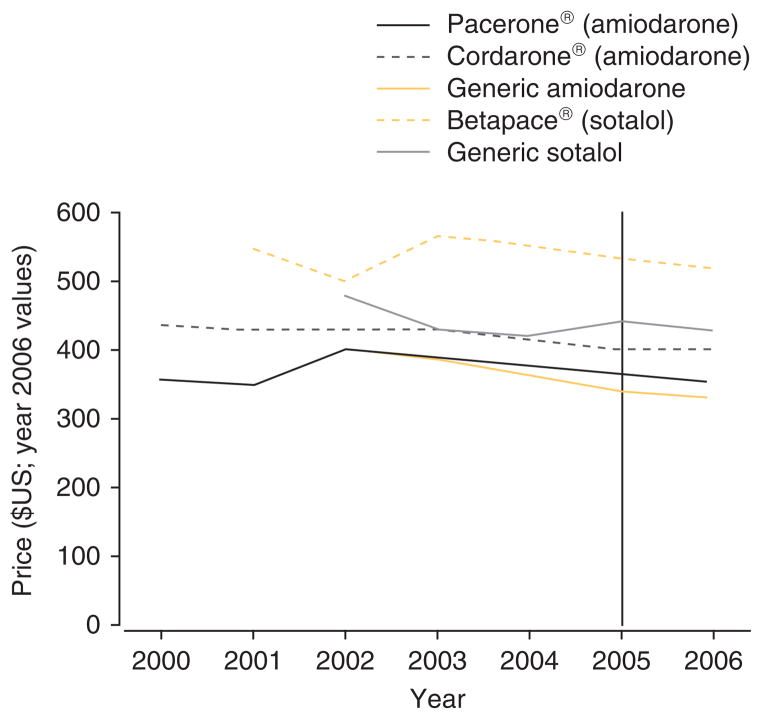

We performed a limited empirical test of this hypothesis using one recent and well publicized example, SAFE-T (Sotalol Amiodarone Atrial Fibrillation Efficacy Trial). SAFE-T examined the comparative effectiveness of the anti-arrhythmic drugs amiodarone and sotalol for the treatment of atrial fibrillation.[15] Atrial fibrillation is the most common arrhythmia requiring therapy. Conversion to and maintenance of sinus rhythm remain the cornerstones of therapy, but the optimal long-term strategy is controversial. The intention of the trial was to determine the best pharmacological method for maintaining sinus rhythm. The trial was double-blind and placebo-controlled: 665 patients who were receiving anticoagulants and had persistent atrial fibrillation were randomly assigned to receive amiodarone, sotalol or placebo and were monitored for 1–4.5 years. The primary endpoint was the time to recurrence of atrial fibrillation beginning on day 28. The results suggested that amiodarone and sotalol were equally efficacious in converting atrial fibrillation to sinus rhythm. Both drugs had similar efficacy in maintaining sinus rhythm among patients with ischaemic heart disease. Amiodarone appeared to be superior for maintaining sinus rhythm among other patients.

We examined prices of the anti-arrhythmic medications amiodarone and sotalol before and after the results of the trial were published in the New England Journal of Medicine in 2005.[15] Data were drawn from the Red Book™.[16] Price refers to the annual average wholesale price (AWP) of the compound, adjusted for inflation using the consumer price index calculator (year 2006 values).[17] We matched the compound and the dosage (amiodarone 200 mg and sotalol 160 mg) to those reported in the trial, and used the package of 100 pills per prescription available for purchase between 2000 and 2006. Generics were matched to the branded compounds in dosage and pill number. Amiodarone is available in two branded versions (Pacerone® [Upsher-Smith] and Cordarone® [Wyeth-Ayerst Laboratories]); sotalol is available as Betapace® (Berlex Laboratories). Prices of the generic drugs are reported for the manufacturer observed for the longest period of time in the Red Book™ (Par and Apotex for amiodarone and sotalol, respectively).
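Re-expressing each year's AWP in 2006 dollars amounts to scaling the nominal price by the ratio of the base-year price index to the index for the year in which the price was observed. The Python sketch below shows that step and a before/after percentage change; the function names, index values and prices are hypothetical placeholders, not Red Book™ or consumer price index data.

```python
# Hypothetical sketch of converting nominal prices into constant (base-year)
# dollars with a price index, as done for the AWP series. The CPI values
# and prices below are placeholders, not Red Book or CPI figures.

def to_constant_dollars(nominal_price, cpi_price_year, cpi_base_year):
    """Re-express a nominal price in base-year dollars."""
    return nominal_price * cpi_base_year / cpi_price_year

def percent_change(before, after):
    """Percentage change from `before` to `after`."""
    return 100.0 * (after - before) / before

# Placeholder inputs: a 100-pill package priced in an earlier year and in
# the base year (2006 in the text).
cpi = {"earlier_year": 200.0, "base_year": 220.0}
price_earlier = to_constant_dollars(100.0, cpi["earlier_year"], cpi["base_year"])
price_base = to_constant_dollars(99.0, cpi["base_year"], cpi["base_year"])
print(price_earlier, round(percent_change(price_earlier, price_base), 1))
# 110.0 -10.0
```

In this toy case a nominal price that barely moves (100 to 99) still corresponds to a 10% real decline, which is why the inflation adjustment matters when comparing prices across years.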

Figure 1 shows the prices of the drugs between 2000 and 2006. Before the trial results were released, prices were generally declining or stable, with the exception of generic sotalol, whose price was increasing. After the trial results were reported in 2005 (indicated by the vertical bar), the prices of all branded and generic medications decreased, except for Cordarone®, whose price stabilized.

Fig. 1. Average wholesale price of amiodarone and sotalol before and after SAFE-T results.

2.3.1 Implications

What conclusions may we draw about consumer and producer benefit from the equivalent results of a comparative effectiveness trial? Of course, it is not appropriate to make broad generalizations from any one example. Nevertheless, in this example, patients and payers benefit from the trial through better clinical knowledge of the care of a common and acute medical condition. They may also benefit from decreasing prices. The extent of price competition, and of any resulting price reduction to payers, cannot be determined from these results, because AWP does not capture the rebates and discounts that manufacturers typically give to purchasers. Still, the fact that the AWP for these compounds appears to stabilize or decrease after the trial results were reported suggests that, all else being equal, comparative effectiveness trials with equivalent findings may put downward pressure on prescription drug prices. The size of this pricing response is an empirical question for future work.

What about producers? Assuming the results hold, pharmaceutical manufacturers will lose revenue as a result of increased price competition. Even when one drug yields superior outcomes for a subgroup of patients, that subgroup may be too small a part of the market to justify raising the price for all patients, since those who do not benefit may switch to a competitor. Thus, manufacturers may have little incentive to initiate comparative effectiveness trials unless they have prior knowledge that their drug is superior on some dimension or for a sizable number of patients.
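The subgroup argument above can be made concrete with a toy profit comparison. The sketch assumes, hypothetically, a single uniform price, a constant unit cost, the firm's patients normalized to 1, and that at the higher price every patient outside the subgroup switches to a competitor. Under those assumptions the increase pays only if the subgroup share exceeds (base price − cost) / (high price − cost). The function names and numbers are illustrative.

```python
def uniform_increase_pays(subgroup_share: float, base_price: float,
                          high_price: float, unit_cost: float) -> bool:
    """Compare per-patient profit from keeping the whole market at base_price
    with keeping only the subgroup at high_price (hypothetical switching rule:
    everyone outside the subgroup leaves at the higher price)."""
    if not 0.0 <= subgroup_share <= 1.0:
        raise ValueError("subgroup_share must lie in [0, 1]")
    profit_keep_all = base_price - unit_cost
    profit_subgroup_only = subgroup_share * (high_price - unit_cost)
    return profit_subgroup_only > profit_keep_all


def break_even_share(base_price: float, high_price: float, unit_cost: float) -> float:
    """Subgroup share above which the uniform price increase becomes profitable."""
    return (base_price - unit_cost) / (high_price - unit_cost)


# Illustrative numbers only: base price 10, premium price 15, unit cost 2.
print(uniform_increase_pays(0.2, 10.0, 15.0, 2.0))   # False: small subgroup
print(uniform_increase_pays(0.8, 10.0, 15.0, 2.0))   # True: large subgroup
print(round(break_even_share(10.0, 15.0, 2.0), 3))   # 0.615
```

Price discrimination, partial switching or rebates would change the threshold; the all-or-nothing switching rule is chosen only to keep the trade-off visible.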

One exception may be a trial designed to obtain approval of a new drug for its first indication. In this case, it may be advantageous for the firm to identify differences in effectiveness in some patient populations, or on some outcomes, that may be only marginally relevant in medical practice, because approval would then make the drug available for off-label use. Finally, widespread use of such trials may ultimately push manufacturers to balkanize the market, producing medications with narrower indications so as not to be perceived as competing in the same therapeutic class for identical patient populations. Such strategies appear to have occurred in response to the Orphan Drug Act.[18] The implications of such practices for patient welfare are unclear.

3. Discussion

Comparative effectiveness studies can provide information of great value to patients and may increase price competition, which can lower prices. However, these same studies may generate no returns, or even negative returns, to private entities. This implies that private entities will be more likely to produce CER when it seems likely to serve their interests, which helps support the case for public investment in comparative effectiveness studies.

These arguments may also have implications for the value of publicly funded CER. For example, they suggest that private investments in CER may favour questions likely to provide evidence that one treatment is superior to another. If so, publicly funded CER may tend not to find differences in outcomes between treatments, and much of its value would then come from price reductions when differences in outcomes are found to be small. Alternatively, if private entities fail to invest in CER mainly because some treatment options are not privately controlled (e.g. generic drugs, approaches to treatment or organization of care), then public investments in CER might still often find differences in outcomes.

Whether CER primarily produces benefits by lowering prices or by finding evidence of differential effectiveness is ultimately an empirical question. However, considering why a private entity might be unwilling to fund CER on a topic may provide some insight into the likely results of CER. If so, public funders who prefer to focus on CER likely to produce evidence of differential effectiveness, or on CER likely to result in price reductions, may find it informative to analyse private incentives for CER on a given topic when considering public funding for that topic. Presumably, public investments in CER will have their greatest value if they do not focus solely on reducing costs or maximizing benefits, but instead consider the potential value of CER in terms of both costs and outcomes and recognize the importance of incentives for adequate investment in innovation as a condition of dynamic efficiency.

The type of VOI calculations described above could be useful in guiding public decisions about investment in CER. The data on the probability and potential value of study outcomes that such calculations require may seem daunting, but in some cases enough information has been available to apply VOI methods in practice, and such analyses have actually been used for policy making by the UK National Institute for Health and Clinical Excellence (NICE).[19] In addition, methods have been developed to place bounds on VOI estimates using more limited data. An upper bound on the value of a given type of comparative effectiveness study may be most useful when it shows that the expected value of the study is relatively small, which seems most likely when the condition being studied is very rare or when the potential change in outcomes is quite small. Conversely, when both the likelihood of finding information that could change practice and the value of such changes in practice are high, bounding exercises could show that even substantial overestimates of the value of research would still leave a strong argument for investing in that research.
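A standard upper bound of this kind is the expected value of perfect information (EVPI): no study can be worth more than resolving all uncertainty about the choice. The sketch below is one textbook version, not necessarily the bounding method the cited work uses. It assumes a two-treatment choice in which the incremental net benefit D of one treatment over the other is normally distributed, so that EVPI per patient = E[max(0, D)] − max(0, E[D]), and it scales that figure by the number of patients affected over a discounted horizon. All parameter values, including the 3% discount rate, are hypothetical.

```python
from statistics import NormalDist


def per_patient_evpi(mean_inb: float, sd_inb: float) -> float:
    """EVPI for a two-way choice when the incremental net benefit D of
    treatment B over A is believed to be Normal(mean_inb, sd_inb**2).

    EVPI = E[max(0, D)] - max(0, E[D]), and for normal D
    E[max(0, D)] = m * Phi(m / s) + s * phi(m / s).
    """
    if sd_inb <= 0:
        return 0.0  # no uncertainty: perfect information cannot change the decision
    z = mean_inb / sd_inb
    std_normal = NormalDist()
    expected_best = mean_inb * std_normal.cdf(z) + sd_inb * std_normal.pdf(z)
    return expected_best - max(0.0, mean_inb)


def population_evpi(per_patient: float, patients_per_year: float,
                    years: int, discount_rate: float = 0.03) -> float:
    """Scale per-patient EVPI to all patients affected over the decision horizon."""
    annuity = sum(1.0 / (1.0 + discount_rate) ** t for t in range(years))
    return per_patient * patients_per_year * annuity


# Hypothetical inputs: equal prior expected values for the two treatments and an
# SD of 1000 (monetary units per patient) on the incremental net benefit.
evpi_1 = per_patient_evpi(0.0, 1000.0)
rare = population_evpi(evpi_1, patients_per_year=500, years=10)
common = population_evpi(evpi_1, patients_per_year=500_000, years=10)
print(round(evpi_1, 1), round(rare), round(common))
```

Because population EVPI scales linearly with the number of patients affected, the same per-patient uncertainty yields a bound 1000 times smaller for the rare condition, which is the situation in which the text suggests a bound is most informative.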

Such calculations could also be performed from multiple perspectives, analysing both the private and the public expected returns from a particular study. This might provide further guidance on the conditions under which a public subsidy is most likely to be needed to produce socially valuable research. When a VOI analysis suggests that a given study would have high private value, it should presumably receive lower priority than a study with similar societal value but without high private value, since the former would likely be performed even in the absence of public spending. VOI studies can also suggest how to design studies to maximize value. For example, in the CATIE VOI study cited previously, the optimal sample size for a study that maximizes social benefit was found to be many times larger than the sample size used in the CATIE study itself. The ideal study design from a private perspective may also sometimes differ from the ideal design from a societal perspective. Such cases may be particularly promising settings in which to consider public-private partnerships for funding CER.
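The prioritization rule described above, favouring studies with high societal value but low private value, can be sketched as a filter and a ranking. The decision rule is an assumption made for illustration: a study is treated as likely to be funded privately if its expected private return exceeds its cost, and as a candidate for public funding if its societal value exceeds its cost while its private return does not. The class, function and study names are hypothetical, and the values would in practice come from VOI analyses run from each perspective.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateStudy:
    name: str
    societal_value: float  # e.g. population VOI from a societal perspective
    private_value: float   # expected return to a firm or payer that could fund it
    cost: float


def public_funding_queue(studies: List[CandidateStudy]) -> List[CandidateStudy]:
    """Studies worth doing socially that private funders are unlikely to pay for,
    ranked by expected net societal value (societal_value - cost)."""
    gap = [s for s in studies
           if s.societal_value > s.cost and s.private_value < s.cost]
    return sorted(gap, key=lambda s: s.societal_value - s.cost, reverse=True)


# Hypothetical portfolio (values in arbitrary monetary units).
portfolio = [
    CandidateStudy("A", societal_value=10.0, private_value=12.0, cost=5.0),
    CandidateStudy("B", societal_value=8.0, private_value=1.0, cost=5.0),
    CandidateStudy("C", societal_value=20.0, private_value=2.0, cost=5.0),
    CandidateStudy("D", societal_value=3.0, private_value=0.0, cost=5.0),
]
print([s.name for s in public_funding_queue(portfolio)])  # ['C', 'B']
```

Study A drops out because a private funder would be expected to pay for it, and study D because its societal value does not cover its cost; a fuller version might also consider co-funding when private value covers only part of the cost, in the spirit of the public-private partnerships mentioned above.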

4. Conclusions

Our results suggest that CER may have high societal value but limited private return to providers or payers. This suggests the importance of public efforts to promote the production of CER. We also conclude that VOI tools may help inform policy decisions about how much public funds to invest in CER and how to prioritize the use of available public funds for CER, in particular targeting public CER spending to areas where private incentives are low relative to social benefits.

Acknowledgments

This article was originally prepared at the request of the Institute of Medicine Roundtable on Evidence Based Medicine. The opinions expressed are those of the authors alone.

Dr Meltzer would like to acknowledge financial support from the Agency for Healthcare Research and Quality through the Hospital Medicine and Economics Center for Education and Research in Therapeutics (CERT) U18 HS016967-01 (Meltzer, PI), and a National Institute on Aging Midcareer Career Development Award K24-AG31326 (Meltzer, PI).

Footnotes

The Institute of Medicine (IOM) report[2] defined CER as “the generation and synthesis of evidence that compares the benefits and harms of alternative methods to prevent, diagnose, treat and monitor a clinical condition or to improve the delivery of care. The purpose of CER is to assist consumers, clinicians, purchasers, and policy makers to make informed decisions that will improve health care at both the individual and population levels.”

The value of research can of course be calculated even when one therapy is initially believed to be superior to another; assuming that the two alternatives are initially believed to have the same expected value of outcomes is simply a convenient simplification in this example.

References

- 1. Teutsch SM, Berger ML, Weinstein MC. Comparative effectiveness: asking the right questions, choosing the right method. Health Aff (Millwood). 2005 Jan-Feb;24(1):128–32. doi: 10.1377/hlthaff.24.1.128.

- 2. Ratner R, Eden J, Wolman D, et al., editors. Institute of Medicine. Initial national priorities for comparative effectiveness research. Washington, DC: National Academies Press; 2009.

- 3. Garber AM, Meltzer DO. Setting priorities for comparative effectiveness research. In: Implementing comparative effectiveness research: priorities, methods, and impact. Washington, DC: Brookings Institution; 2009. pp. 15–35 [online]. Available from URL: http://www.brookings.edu/~/media/Files/events/2009/0609_health_care_cer/0609_health_care_cer.pdf [Accessed 2010 Jul 5].

- 4. Gold MR, Siegel JE, Russell LB, et al. Cost-effectiveness in health and medicine. Oxford: Oxford University Press; 1996.

- 5. DiMasi JA. Price trends for prescription pharmaceuticals: 1995–1999. A background report prepared for the Department of Health and Human Services’ Conference on Pharmaceutical Pricing Practices, Utilization and Costs; 2000 Aug 8–9; Washington, DC [online]. Available from URL: http://aspe.hhs.gov/health/Reports/Drug-papers/dimassi/dimasi-final.htm [Accessed 2007 Mar 11].

- 6. Raiffa H, Schlaifer R. Applied statistical decision theory. Beverly (MA): Harvard Business School, Colonial Press; 1961.

- 7. Pratt JW, Raiffa H, Schlaifer R. Introduction to statistical decision theory. New York: McGraw-Hill; 1965.

- 8. Claxton K, Posnett J. An economic approach to clinical trial design and research priority-setting. Health Econ. 1996;5(6):513–24. doi: 10.1002/(SICI)1099-1050(199611)5:6<513::AID-HEC237>3.0.CO;2-9.

- 9. Hornberger J. A cost-benefit analysis of a cardiovascular disease prevention trial using folate supplementation as an example. Am J Public Health. 1998;88(1):61–7. doi: 10.2105/ajph.88.1.61.

- 10. Meltzer D. Addressing uncertainty in medical cost-effectiveness analysis: implications of expected utility maximization for methods to perform sensitivity analysis and the use of cost-effectiveness analysis to set priorities for medical research. J Health Econ. 2001;20(1):109–29. doi: 10.1016/s0167-6296(00)00071-0.

- 11. Lieberman JA, Stroup TS, McEvoy JP, et al. Effectiveness of antipsychotic drugs in patients with chronic schizophrenia. N Engl J Med. 2005;353:1209–23. doi: 10.1056/NEJMoa051688.

- 12. Meltzer DO, Basu A, Meltzer HY. Comparative effectiveness research for antipsychotic medications: how much is enough? Health Aff (Millwood). 2009;28(5):w794–808. doi: 10.1377/hlthaff.28.5.w794.

- 13. Basu A, Meltzer D. Value-of-information on preference heterogeneity and individualized care. Med Decis Making. 2007;27(2):112–27. doi: 10.1177/0272989X06297393.

- 14. Caves RE, Whinston MD, Hurwitz MA. Patent expiration, entry and competition in the US pharmaceutical industry: an exploratory analysis. Brookings Pap Econ Act: Microeconomics. 1991;1:1–48.

- 15. Singh BN, Singh SN, Reda DJ, et al. Amiodarone versus sotalol for atrial fibrillation. N Engl J Med. 2005;352(18):1861–72. doi: 10.1056/NEJMoa041705.

- 16. Thomson Healthcare. Red Book®. 2000–6. Montvale (NJ): Thomson PDR; 2007.

- 17. US Department of Labor. Bureau of Labor Statistics [online]. Available from URL: http://www.bls.gov [Accessed 2007 Feb 15].

- 18. Yin W. Do market incentives generate innovation or balkanization? Evidence from the market for rare disease drugs [working paper]. Chicago (IL): Irving B. Harris Graduate School of Public Policy Studies; 2006.

- 19. Claxton K, Cohen JT, Neumann PJ. When is evidence sufficient? Health Aff (Millwood). 2005 Jan-Feb;24(1):93–101. doi: 10.1377/hlthaff.24.1.93.