Abstract

This paper is concerned with the selection and estimation of fixed and random effects in linear mixed effects models. We propose a class of nonconcave penalized profile likelihood methods for selecting and estimating important fixed effects. To overcome the difficulty of unknown covariance matrix of random effects, we propose to use a proxy matrix in the penalized profile likelihood. We establish conditions on the choice of the proxy matrix and show that the proposed procedure enjoys the model selection consistency where the number of fixed effects is allowed to grow exponentially with the sample size. We further propose a group variable selection strategy to simultaneously select and estimate important random effects, where the unknown covariance matrix of random effects is replaced with a proxy matrix. We prove that, with the proxy matrix appropriately chosen, the proposed procedure can identify all true random effects with asymptotic probability one, where the dimension of random effects vector is allowed to increase exponentially with the sample size. Monte Carlo simulation studies are conducted to examine the finite-sample performance of the proposed procedures. We further illustrate the proposed procedures via a real data example.

Keywords: Adaptive Lasso, linear mixed effects models, group variable selection, oracle property, SCAD

1. Introduction

During the last two decades, linear mixed effects models (Laird and Ware, 1982; Longford, 1993) have been widely used to model longitudinal and repeated measurements data, and have received a considerable amount of attention in the fields of agriculture, biology, economics, medicine, and sociology. See Verbeke and Molenberghs (2000) and references therein. With advances in data collection technology, many variables can be collected easily in a scientific study, and it is typical to include many of them in the full model at the initial stage of modeling in order to reduce model approximation error. Due to the complexity of mixed effects models, inference for and interpretation of the estimated models become challenging as the dimension of the fixed or random components increases. Thus, the selection of important fixed effect covariates or random components becomes a fundamental problem in the analysis of longitudinal data or repeated measurements data using mixed effects models.

Variable selection for mixed effects models has become an active research topic in the literature. Lin (1997) considers testing a hypothesis on the variance component. The testing procedures can be used to detect whether an individual random component is significant or not. Based on these testing procedures, a stepwise procedure can be constructed for selecting important random effects. Vaida and Blanchard (2005) propose the conditional AIC, an extension of the AIC (Akaike, 1973), for mixed effects models with detailed discussion on how to define degrees of freedom in the presence of random effects. The conditional AIC has further been discussed in Liang et al. (2008). Chen and Dunson (2003) develop a Bayesian variable selection procedure for selecting important random effects in the linear mixed effects model using the Cholesky decomposition of the covariance matrix of random effects, and specify a prior distribution on the standard deviation of random effects with a positive mass at zero to achieve the sparsity of random components. Pu and Niu (2006) extend the generalized information criterion to select linear mixed effects models and study the asymptotic behavior of the proposed method for selecting fixed effects. Bondell et al. (2010) propose a joint variable selection method for fixed and random effects in the linear mixed effects model using a modified Cholesky decomposition in the setting of fixed dimensionality for both fixed effects and random effects. Ibrahim et al. (2011) propose to select fixed and random effects in a general class of mixed effects models with fixed dimensions of both fixed and random effects using maximum penalized likelihood method with the SCAD penalty and the adaptive least absolute shrinkage and selection operator penalty.

In this paper, we develop a class of variable selection procedures for both fixed effects and random effects in linear mixed effects models by incorporating the recent advances in variable selection for linear regression models. We propose to use the regularization methods to select and estimate fixed and random effects. As advocated by Fan and Li (2001), regularization methods can avoid the stochastic error of variable selection in stepwise procedures, and can significantly reduce computational cost compared with the best subset selection procedure and Bayesian procedures. Our proposal differs from existing ones in the literature mainly in two aspects. First, we consider the high-dimensional setting and allow dimensions of fixed and random effects to grow exponentially with the sample size. Second, our proposed procedures can estimate the fixed effects vector without knowing or estimating the random effects vector and vice versa.

We first propose a class of variable selection methods for the fixed effects using penalized profile likelihood method. To overcome the difficulty of unknown covariance matrix of random effects, we propose to replace it with a suitably chosen proxy matrix. The penalized profile likelihood is equivalent to a penalized quadratic loss function of the fixed effects. Thus, the proposed approach can take advantage of the recent developments in the computation of the penalized least-squares methods (Efron et al., 2004; Zou and Li, 2008). The optimization of the penalized likelihood can be solved by the LARS algorithm without extra effort. We further systematically study the sampling properties of the resulting estimate of fixed effects. We establish conditions on the proxy matrix and show that the resulting estimate enjoys model selection oracle property under such conditions. In our theoretical investigation, the number of fixed effects is allowed to grow exponentially with the total sample size, provided that the covariance matrix of random effects is nonsingular. In the case of singular covariance matrix for random effects, one can use our proposed method in Section 3 to first select important random effects and then conduct variable selection for fixed effects. In this case, the number of fixed effects needs to be smaller than the total sample size.

Since the random effects vector is random, our main interest is in the selection of true random effects. Observe that if a random effect covariate is a noise variable, then the corresponding realizations of this random effect should all be zero and thus the random effects vector is sparse. So we propose to first estimate the realization of the random effects vector using a group regularization method and then identify the important ones based on the estimated random effects vector. More specifically, under the Bayesian framework, we show that the restricted posterior distribution of the random effects vector is independent of the fixed effects coefficient vector. Thus, we propose a random effect selection procedure via penalizing the restricted posterior mode. The proposed procedure reduces the impact of error caused by the fixed effects selection and estimation. The unknown covariance matrix is replaced with a suitably chosen proxy matrix. In the proposed procedure, random effects selection is carried out with group variable selection techniques (Yuan and Lin, 2006). The optimization of the penalized restricted posterior mode is equivalent to the minimization of a penalized quadratic function of the random effects. In particular, the form of the penalized quadratic function is similar to that in the adaptive elastic net (Zou and Hastie, 2005; Zou and Zhang, 2009), which allows us to minimize the penalized quadratic function using existing algorithms. We further study the theoretical properties of the proposed procedure and establish conditions on the proxy matrix for ensuring the model selection consistency of the resulting estimate. We show that, with probability tending to one, the proposed procedure can select all true random effects. In our theoretical study, the dimensionality of the random effects vector is allowed to grow exponentially with the sample size as long as the number of fixed effects is less than the total sample size.

The rest of this paper is organized as follows. Section 2 introduces the penalized profile likelihood method for the estimation of fixed effects and establishes its oracle property. We consider the estimation of random effects and prove the model selection consistency of the resulting estimator in Section 3. Section 4 provides two simulation studies and a real data example. Some discussion is given in Section 5. All proofs are presented in Section 6.

2. Penalized profile likelihood for fixed effects

Suppose that we have a sample of N subjects. For the i-th subject, we collect the response variable yij, the d × 1 covariate vector xij, and the q × 1 covariate vector zij, for j = 1, … , ni, where ni is the number of observations on the i-th subject. Let , and . We consider the case where , i.e., the sample sizes for N subjects are balanced. For succinct presentation, we use matrix notation and write yi = (yi1, yi2, … , yini)T, Xi = (xi1, xi2, … , xini)T, and Zi = (zi1, zi2, … , zini)T. In linear mixed effects models, the vector of repeated measurements yi on the i-th subject is assumed to follow the linear regression model

$$y_i = X_i\beta + Z_i\gamma_i + \varepsilon_i, \qquad i = 1, \dots, N, \tag{1}$$

where β is the d × 1 population-specific fixed effects coefficient vector, γi represents the q × 1 subject-specific random effects with γi ~ N(0, G), εi is the random error vector with components independent and identically distributed as N(0, σ2), and γ1, …, γN, ε1, …, εN are independent. Here, G is the covariance matrix of random effects and may be different from the identity matrix. So the random effects can be correlated with each other.

Let vectors y, γ, and ε, and matrix X be obtained by stacking vectors yi, γi, and εi, and matrices Xi, respectively, underneath each other, and let Z = diag{Z1, …, ZN} and be block diagonal matrices. We further standardize the design matrix X such that each column has norm . The linear mixed effects model (1) can be rewritten as

$$y = X\beta + Z\gamma + \varepsilon. \tag{2}$$
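To make the stacked notation concrete, the following is a minimal simulation sketch of the model, assuming balanced subjects (every ni equal to a common `n_i`). The function name `simulate_lmm` and the uniform covariate distribution are illustrative choices, not part of the paper's method.

```python
import numpy as np

def simulate_lmm(N, n_i, d, q, beta, G, sigma, rng):
    """Draw one data set from y_i = X_i beta + Z_i gamma_i + eps_i and stack it.

    Hypothetical helper: balanced design, covariates uniform on [-2, 2].
    """
    n = N * n_i
    X = rng.uniform(-2.0, 2.0, size=(n, d))      # stacked X_1, ..., X_N
    Z = np.zeros((n, N * q))                     # Z = diag{Z_1, ..., Z_N}
    gamma = np.zeros(N * q)                      # stacked gamma_1, ..., gamma_N
    for i in range(N):
        rows = slice(i * n_i, (i + 1) * n_i)
        cols = slice(i * q, (i + 1) * q)
        Z[rows, cols] = rng.uniform(-2.0, 2.0, size=(n_i, q))
        gamma[cols] = rng.multivariate_normal(np.zeros(q), G)  # gamma_i ~ N(0, G)
    eps = rng.normal(0.0, sigma, size=n)         # i.i.d. N(0, sigma^2) errors
    y = X @ beta + Z @ gamma + eps               # stacked form of the model
    return y, X, Z, gamma
```

The block-diagonal layout of `Z` is what allows each subject to have its own random effects vector while sharing the fixed effects vector β.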

2.1. Selection of important fixed-effects

In this subsection, we assume that there are no noise random effects and is positive definite. In the case where noise random effects exist, one can use the method in Section 3 to select the true ones. The joint density is

| (3) |

Given β, the maximum likelihood estimate (MLE) for γ is

where . Plugging into f(y, γ) and dropping the constant term yield the following profile likelihood function

| (4) |

where with I being the identity matrix. By Lemma 3 in Section 6, Pz can be rewritten as . To select the important x-variables, we propose to maximize the following penalized profile log-likelihood function

| (5) |

where pλn(x) is a penalty function with regularization parameter λn ≥ 0. Here, the number of fixed effects dn may increase with sample size n.

Maximizing (5) is equivalent to minimizing

| (6) |

Since Pz depends on the unknown covariance matrix and σ2, we propose to use a proxy to replace Pz, where is a pre-specified matrix. Denote by the corresponding objective function when is used. We will discuss in the next section on how to choose .

We note that (6) does not depend on the inverse of . So although we started this section with the non-singularity assumption of , in practice our method can be directly applied even when noise random effects exist, as will be illustrated in simulation studies of Section 4.
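The remark that the objective does not require inverting the covariance matrix of random effects can be checked numerically. The sketch below assumes the two standard expressions that arise when γ is profiled out of a Gaussian mixed model, namely I − Z(ZᵀZ + σ²𝒢⁻¹)⁻¹Zᵀ and (I + σ⁻²Z𝒢Zᵀ)⁻¹, which agree by the Woodbury identity. These forms are an assumption here, since the displayed formulas are not reproduced in this section, and the function names are hypothetical.

```python
import numpy as np

def pz_profile(Z, G_big, sigma2):
    """Assumed profile form: I - Z (Z^T Z + sigma^2 G^{-1})^{-1} Z^T (needs G invertible)."""
    n = Z.shape[0]
    inner = Z.T @ Z + sigma2 * np.linalg.inv(G_big)
    return np.eye(n) - Z @ np.linalg.solve(inner, Z.T)

def pz_inverse_free(Z, G_big, sigma2):
    """Assumed equivalent form: (I + sigma^{-2} Z G Z^T)^{-1}, which never inverts G."""
    n = Z.shape[0]
    return np.linalg.inv(np.eye(n) + Z @ G_big @ Z.T / sigma2)
```

For a positive definite covariance the two functions return the same matrix up to rounding. Only the second remains well defined when the covariance is singular, which is the situation where noise random effects are present.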

Many authors have studied the selection of the penalty function to achieve the purpose of variable selection for the linear regression model. Tibshirani (1996) proposes the Lasso method using the L1 penalty. Fan and Li (2001) advocate the use of nonconvex penalties. In particular, they suggest the use of the SCAD penalty. Zou (2006) proposes the adaptive Lasso using an adaptive L1 penalty, Zhang (2010) proposes the minimax concave penalty (MCP), Liu and Wu (2007) propose to linearly combine L0 and L1 penalties, and Lv and Fan (2009) introduce a unified approach to sparse recovery and model selection using general concave penalties. In this paper, we use concave penalty functions to conduct variable selection. We make the following assumption on the penalty function.

Condition 1. For each λ > 0, the penalty function pλ(t) with t ∈ [0, ∞) is increasing and concave with pλ(0) = 0, its second order derivative exists and is continuous, and . Further, assume that as λ → 0.

Condition 1 is commonly assumed in studying regularization methods with concave penalties. Similar conditions can be found in Fan and Li (2001) and Lv and Fan (2009). Although it is assumed that exists and is continuous, it can be relaxed to the case where only exists and is continuous. All theoretical results presented in later sections can be generalized by imposing conditions on the local concavity of pλ(t), as done in Lv and Fan (2009).
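As a concrete instance of a concave penalty, the SCAD penalty of Fan and Li (2001) and its first derivative can be written down directly. The sketch below uses the conventional default a = 3.7; the function names are illustrative. Its second derivative is only piecewise continuous, so SCAD falls under the relaxed version of Condition 1 mentioned above.

```python
import numpy as np

def scad_derivative(t, lam, a=3.7):
    """First derivative p'_lam(|t|): lam on [0, lam], linear decay to 0 at a*lam."""
    t = np.abs(np.asarray(t, dtype=float))
    tail = np.maximum(a * lam - t, 0.0) / ((a - 1.0) * lam)
    return lam * np.where(t <= lam, 1.0, tail)

def scad_penalty(t, lam, a=3.7):
    """SCAD penalty p_lam(|t|): L1 near zero, quadratic blend, then flat."""
    t = np.abs(np.asarray(t, dtype=float))
    small = lam * t
    middle = (2.0 * a * lam * t - t**2 - lam**2) / (2.0 * (a - 1.0))
    flat = lam**2 * (a + 1.0) / 2.0
    return np.where(t <= lam, small, np.where(t <= a * lam, middle, flat))
```

The penalty is increasing and concave on [0, ∞) with pλ(0) = 0, and it is flat beyond aλ, so large coefficients are not shrunk.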

2.2. Model selection consistency

Although the proxy matrix may be different from the true one Pz, solving the regularization problem (6) may still yield correct model selection results at the cost of some additional bias. We next establish conditions on to ensure the model selection oracle property of the proposed method.

Let β0 be the true coefficient vector. Suppose that β0 is sparse and denote s1n = ||β0||0 i.e., the number of nonzero elements in β0. Write

where β1,0 is an s1n-vector and β2,0 is a (dn – s1n)-vector. Without loss of generality, we assume that β2,0 = 0, that is, the nonzero elements of β0 locate at the first s1n coordinates. With a slight abuse of notation, we write X = (X1,X2) with X1 being a submatrix formed by the first s1n columns of X and X2 being formed by the remaining columns. For a matrix B, let Λmin(B) and Λmax(B) be its minimum and maximum eigenvalues, respectively. We make a few assumptions on the penalty function pλn(t) and the design matrices X and Z.

Condition 2.

(A) Let an = min1≤j≤s1n |β0, j|. It holds that $a_n n^{\tau} (\log n)^{-3/2} \to \infty$ with τ ∈ (0, ) being some positive constant, and .

(B) There exists a constant c1 > 0 such that and

(C) The minimum and maximum eigenvalues of matrices and are both bounded from below and above by c0 and c0−1 respectively, where θ ∈ (2τ, 1] and c0 > 0 is a constant. Further, it holds that

| (7) |

| (8) |

where || · ||∞ denotes the matrix infinity norm.

Condition 2(A) is on the minimum signal strength an. We allow the minimum signal strength to decay with sample size n. When concave penalties such as SCAD (Fan and Li, 2001) or SICA (Lv and Fan, 2009) are used, this condition can be easily satisfied with λn appropriately chosen. Conditions 2(B) and (C) put constraints on the proxy . Condition 2(C) is about the design matrices X and Z. Inequality (8) requires noise variables and signal variables not highly correlated. The upper bound of (8) depends on the ratio . Thus, concave penalty functions relax this condition when compared to convex penalty functions. We will further discuss constraints (7) and (8) in Lemma 1.

If the above conditions on the proxy matrix are satisfied then the bias caused by using is small enough and the resulting estimate still enjoys the model selection oracle property described in the following theorem.

Theorem 1. Assume that as n → ∞ and . Then under Conditions 1 and 2, with probability tending to 1 as n → ∞, there exists a local minimizer which satisfies

| (9) |

Theorem 1 presents the weak oracle property in the sense of Lv and Fan (2009) on the local minimizer of . Due to the high dimensionality and the concavity of pλ(·), the characterization of the global minimizer of is a challenging open question. As will be shown in the simulation and real data analysis, the concave function will be iteratively minimized by the local linear approximation method (Zou and Li, 2008). Following the same idea as in Zou and Li (2008), it can be shown that the resulting estimate possesses the properties in Theorem 1 under some conditions.
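The local linear approximation idea can be sketched as follows. The code assumes an objective of the form ½(y − Xβ)ᵀP(y − Xβ) + n Σj pλ(|βj|), where `P` stands for whichever proxy matrix is used. The scaling of the penalty, the coordinate descent solver, and all function names are assumptions made for illustration. Each LLA step replaces the SCAD penalty by a weighted L1 penalty with weights given by the SCAD derivative at the current estimate.

```python
import numpy as np

def scad_derivative(t, lam, a=3.7):
    t = np.abs(np.asarray(t, dtype=float))
    tail = np.maximum(a * lam - t, 0.0) / ((a - 1.0) * lam)
    return lam * np.where(t <= lam, 1.0, tail)

def weighted_lasso_cd(A, b, w, beta0, n_sweeps=200, tol=1e-8):
    """Minimize 0.5*beta'A beta - b'beta + sum_j w_j|beta_j| by coordinate descent."""
    beta = beta0.copy()
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(len(b)):
            # Partial residual correlation with coordinate j held out.
            r = b[j] - A[j] @ beta + A[j, j] * beta[j]
            new = np.sign(r) * max(abs(r) - w[j], 0.0) / A[j, j]  # soft-threshold
            max_change = max(max_change, abs(new - beta[j]))
            beta[j] = new
        if max_change < tol:
            break
    return beta

def lla_scad(X, y, P, lam, n_lla=3):
    """Iterated LLA: start at zero (a Lasso step), then reweight with SCAD'."""
    n = X.shape[0]
    A = X.T @ P @ X
    b = X.T @ P @ y
    beta = np.zeros(X.shape[1])
    for _ in range(n_lla):
        w = n * scad_derivative(beta, lam)   # LLA weights at the current iterate
        beta = weighted_lasso_cd(A, b, w, beta)
    return beta
```

Starting from zero makes the first step an ordinary Lasso fit. In later steps, coefficients larger than aλ receive zero weight and are therefore estimated without shrinkage. This simple solver assumes XᵀPX has a strictly positive diagonal.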

2.3. Choice of proxy matrix

It is difficult to see from (7) and (8) how restrictive the conditions on the proxy matrix are. So we further discuss these conditions in the lemma below. We introduce the notation and with . Correspondingly, when the proxy matrix is used, define and . We use || · ||2 to denote the matrix 2-norm, that is, ||A||2 = {Λmax(AAT)}½.

Lemma 1. Assume that and

| (10) |

Then (7) holds.

Similarly, assume that , and there exists a constant c2 > 0 such that

| (11) |

| (12) |

then (8) holds.

Equations (10), (11) and (12) show conditions on the proxy matrix . Note that if penalty function used is flat outside of a neighborhood of zero, then with appropriately chosen regularization parameter λn, and conditions (10) and (12) respectively reduce to

| (13) |

Furthermore, since Z is a block diagonal matrix, if the maximum eigenvalue of ZT−1ZT is of the order o(n1−θ), then condition (11) reduces to

| (14) |

Conditions (13) and (14) are equivalent to assuming that and have eigenvalues bounded between 0 and 2. By linear algebra, they can further be reduced to and . It is seen from the definitions of T, , E and that if eigenvalues of ZPxZT and ZZT dominate those of by a larger order of magnitude, then these conditions are not difficult to be satisfied. In fact, note that both ZPxZT and ZZT have components with magnitudes increasing with n, while the eigenvalues of are independent of n. Thus as long as both matrices ZPxZT and ZZT are non-singular, these conditions should easily be satisfied with the choice when n is large enough.

3. Identifying important random effects

In this section, we allow the number of random effects q to increase with sample size n and write it as qn to emphasize its dependency on n. We focus on the case where the number of fixed effects dn is smaller than the total sample size . We discuss the dn ≥ n case in the discussion Section 5. The major goal of this section is to select important random effects.

3.1. Regularized posterior mode estimate

The estimation of random effects is different from the estimation of fixed effects, as the vector γ is random. The empirical Bayes method has been used to estimate the random effects vector γ in the literature. See, for example, Box and Tiao (1973), Gelman et al. (1995), and Verbeke and Molenberghs (2000). Although the empirical Bayes method is successful in estimating random effects in many situations, it cannot be used to select important random effects. Moreover, the performance of empirical Bayes estimate largely depends on the accuracy of estimated fixed effects. These difficulties call for a new proposal for random effects selection.

Patterson and Thompson (1971) propose the error contrast method to obtain the restricted maximum likelihood of a linear model. Following their notation, define the n×(n–d) matrix A by the conditions AAT = Px and ATA = I, where Px = I − X(XTX)−1XT. Then the vector AT ε provides a particular set of n – d linearly independent error contrasts. Let w1 = AT y. The following proposition characterizes the conditional distribution of w1:

Proposition 1. Given γ, the density function of w1 takes the form

| (15) |

The above conditional probability is independent of the fixed effects vector β and the error contrast matrix A, which allows us to obtain a posterior mode estimate of γ without estimating β and calculating A.
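One way to see why the error contrasts remove β is to build a matrix A with the two stated properties and check that ATX = 0. The sketch below obtains A from a complete QR decomposition of X, assuming X has full column rank; this is one convenient construction rather than the only one, and the function name is illustrative.

```python
import numpy as np

def error_contrast_matrix(X):
    """Return an n x (n - d) matrix A with A A^T = P_x and A^T A = I.

    Assumes X (n x d) has full column rank. The last n - d columns of the
    complete Q factor form an orthonormal basis of the orthogonal complement
    of the column space of X.
    """
    n, d = X.shape
    Q, _ = np.linalg.qr(X, mode="complete")
    return Q[:, d:]
```

Because ATX = 0, the transformed response w1 = ATy equals AT(Zγ + ε) whatever the value of β, which is the reason the conditional density in Proposition 1 is free of the fixed effects.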

Let be the index set of the true random effects. Define

and denote by . Then is the index set of nonzero random effects coefficients in the vector γ, and is the index set of the zero ones. Let be the number of true random effects. Then . We allow Ns2n to diverge with sample size n, which covers both the case where the number of subjects N diverges with n alone and the case where N and s2n diverge with n simultaneously.

For any we use to denote the (qnN)×|| submatrix of Z formed by columns with indices in and to denote the subvector of γ formed by components with indices in . Then with a submatrix formed by entries of with row and column indices in .

In view of (15), the restricted posterior density of can be derived as

Therefore, the restricted posterior mode estimate of is the solution to the following minimization problem:

| (16) |

In practice, since the true random effects are unknown, the formulation (16) does not help us estimate γ. To overcome this difficulty, note that and with the Moore-Penrose generalized inverse of . Thus, the objective function in (16) is rewritten as

which no longer depends on the unknown . Observe that if the k-th random effect is a noise one, then the corresponding standard deviation is 0 and the coefficients γik for all subjects i = 1, … ,N should all equal 0. This leads us to consider a group variable selection strategy to identify true random effects. Define and consider the following regularization problem

| (17) |

where pλn(·) is the penalty function with regularization parameter λn ≥ 0. The penalty function here may be different from the one in Section 2. However, to ease the presentation, we use the same notation.

There are several advantages to estimating the random effects vector γ using the above proposed method (17). First, this method does not require knowing or estimating the fixed effects vector β, so it is easy to implement, and the estimation error of β has no impact on the estimation of γ. In addition, by using the group variable selection technique, the true random effects can be simultaneously selected and estimated.
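The grouping behind this strategy can be illustrated with a small sketch. It assumes γ is stored in the stacked order (γ1ᵀ, … , γNᵀ)ᵀ used above, so that the k-th group collects γ1k, … , γNk; the function names and the numerical tolerance are hypothetical.

```python
import numpy as np

def random_effect_group_norms(gamma, N, q):
    """Euclidean norm of (gamma_1k, ..., gamma_Nk) for each random effect k."""
    by_subject = np.asarray(gamma, dtype=float).reshape(N, q)  # row i is gamma_i
    return np.linalg.norm(by_subject, axis=0)

def selected_random_effects(gamma, N, q, tol=1e-8):
    """Indices k whose whole group of coefficients is not numerically zero."""
    return np.flatnonzero(random_effect_group_norms(gamma, N, q) > tol)
```

A random effect is declared a noise effect only when its coefficients vanish for every subject, which is why the penalty acts on the group norm rather than on individual coefficients.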

In practice, the covariance matrix and the variance σ2 are both unknown. Thus, we replace with , where with M a proxy of G, yielding the following regularization problem

| (18) |

It is interesting to observe that the form of regularization in (18) includes the elastic net (Zou and Hastie, 2005) and the adaptive elastic net (Zou and Zhang, 2009) as special cases. Furthermore, the optimization algorithm for adaptive elastic net can be modified for minimizing (18).

3.2. Asymptotic properties

Minimizing (18) yields an estimate of γ, denoted by . In this subsection, we study the asymptotic property of . Because γ is random rather than a deterministic parameter vector, the existing formulation for the asymptotic analysis of a regularization problem is inapplicable to our setting. Thus, asymptotic analysis of is challenging.

Let and . Denote by , , and . Similarly, we can define submatrices , , and by replacing with . Then it is easy to see that . Notice that if the oracle information of set is available and and σ2 are known, then the Bayes estimate of the true random effects coefficient vector has the form . Define with for j = 1, … ,N as the oracle-assisted Bayes estimate of the random effects vector. Then and . Correspondingly, define as the oracle Bayes estimate with proxy matrix, i.e., and

| (19) |

For k = 1, … , qn, let . Throughout we condition on the event

| (20) |

with and . The above event Ω* is to ensure that the oracle-assisted estimator of σk is not too negatively biased. We will need the following assumptions.

Condition 3. (A) The maximum eigenvalues satisfy for all i = 1, … ,N, and the minimum and maximum eigenvalues of and are bounded from below and above by c3 and c3−1 respectively with mn = max1≤i≤N ni, where c3 is a positive constant. Further, assume that for some δ ∈ (0, ½),

| (21) |

| (22) |

where is the submatrix formed by the N columns of Z corresponding to the j-th random effect.

(B) It holds that .

(C) The proxy matrix satisfies that .

Condition 3(A) is about the design matrices X, Z, and covariance matrix . Since is a block diagonal matrix and , the components of have magnitude of the order mn = O(n/N). Thus, it is not very restrictive to assume that the minimum and maximum eigenvalues of are both of the order mn. Condition (22) puts an upper bound on the correlation between noise covariates and true covariates. The upper bound of (22) depends on the penalty function. Note that for concave penalty we have , whereas for L1 penalty . Thus, concave penalty relaxes (22) when compared with the L1 penalty. Condition 3(B) is satisfied by many commonly used penalties with appropriately chosen λn, for example, L1 penalty, SCAD penalty, and SICA penalty with small a. Condition 3(C) is a restriction on the proxy matrix , which will be further discussed in the next subsection.

Let with γj = (γj1, … , γjqn)T being an arbitrary (Nqn)-vector. Define for each k = 1, … , qn. Let

| (23) |

Theorem 2 below shows that there exists a local minimizer of defined in (18) whose support is the same as the true one and that this local minimizer is close to the estimator .

Theorem 2. Assume that Conditions 1 and 3 hold, , , and as n → ∞. Then, with probability tending to 1, there exists a strict local minimizer of such that

where δ is defined in (21).

Using an argument similar to that for Theorem 1, we can show that the dimensionality Nqn is also allowed to grow exponentially with sample size n under some growth conditions and with appropriately chosen λn. In fact, note that if the sample sizes n1 = ⋯ = nN ≡ mn/N, then the growth condition in Theorem 2 becomes . Since the lowest signal level in this case is , if is a constant, a reasonable choice of tuning parameter would be of the order with some κ ∈ (0, ½). For s2n = O(nν) with ν ∈ [0, ½) and Nn1–2κ–ν → ∞, we obtain that Nqn can grow with rate exp(Nn1−2κ−ν).

3.3. Choice of proxy matrix

As with the fixed effects selection and estimation, we discuss (21) and (22) in the following lemma.

Lemma 2. Assume that and

| (24) |

Then (21) holds.

Similarly, assume that with defined in (22) and

| (25) |

Then (22) holds.

Conditions (24) and (25) put restrictions on the proxy matrix . Similarly to the discussions after Lemma 1, if , then these conditions become . If dominates by a larger magnitude, then conditions (24) and (25) are not restrictive, and choosing should make these conditions as well as Condition 3(C) satisfied for large enough n.

We remark that using the proxy matrix is equivalent to ignoring correlations among random effects. The idea of using a diagonal matrix as a proxy of the covariance matrix has been proposed in other settings of high dimensional statistical inference. For instance, the naive Bayes rule (or independence rule), which replaces the full covariance matrix in Fisher’s discriminant analysis with a diagonal matrix, has been demonstrated to be advantageous for high dimensional classifications both theoretically (Bickel and Levina, 2004; Fan and Fan, 2008) and empirically (Dudoit et al., 2002). The intuition is that although ignoring correlations gives only a biased estimate of the covariance matrix, it avoids the errors caused by estimating a large number of parameters in the covariance matrix in high dimensions. Since the accumulated estimation error can be much larger than the bias, using a diagonal proxy matrix can produce better results.

4. Simulation and application

In this section, we investigate the finite-sample performance of the proposed procedures by Monte Carlo simulations and an empirical analysis of a real data set. Throughout, the SCAD penalty with α = 3.7 (Fan and Li, 2001) is used. For each simulation study, we randomly simulate 200 data sets. Tuning parameter selection plays an important role in regularized methods. For fixed effect selection, both AIC and BIC-selectors (Zhang, Li and Tsai, 2010) are used to select the regularization parameter λn in (6). Our simulation results clearly indicate that the BIC-selector performs better than the AIC-selector for both the SCAD and the LASSO penalties. This is consistent with the theoretical analysis in Wang, Li and Tsai (2007). To save space, we report the results with the BIC-selector. Furthermore the BIC-selector is used for fixed effect selection throughout this section. For random effect selection, both AIC- and BIC-selectors are also used to select the regularization parameter λn in (18). Our simulation results imply that the BIC-selector outperforms the AIC-selector for the LASSO penalty, while the SCAD with AIC-selector performs better than the SCAD with BIC-selector. As a result, we use AIC-selector for the SCAD and BIC-selector for the LASSO random effect selection throughout this section.

Example 1. We compare our method with some existing ones in the literature under the same model setting as that in Bondell et al. (2010), where a joint variable selection method for fixed and random effects in linear mixed effects models is proposed. The underlying true model takes the following form with q = 4 random effects and d = 9 fixed effects

| (26) |

where the true parameter vector β0 = (1, 1, 0, … , 0)T , the true covariance matrix for random effects

and the covariates xijk for k = 1, … , 9 and zijl for l = 1, 2, 3 are generated independently from a uniform distribution over the interval [−2, 2]. So there are three true random effects and two true fixed effects. Following Bondell et al. (2010), we consider two different sample sizes: N = 30 subjects with ni = 5 observations per subject, and N = 60 with ni = 10. Under this model setting, Bondell et al. (2010) compared their method with various methods in the literature, and simulations therein demonstrate that their method outperforms the competing ones. So we will only compare our methods with the one in Bondell et al. (2010).

In implementation, the proxy matrix is chosen as . We then estimate the fixed effects vector β by minimizing , and the random effects vector γ by minimizing (18). To understand the effects of using proxy matrix on the estimated random effects and fixed effects, we compare our estimates with the ones obtained by solving regularization problems (6) and (17) with the true value .

Table 1 summarizes the results by using our method with the proxy matrix and SCAD penalty (SCAD-P), our method with proxy matrix and Lasso penalty (Lasso-P), and our method with true and SCAD penalty (SCAD-T). When SCAD penalty is used, the local linear approximation (LLA) method proposed by Zou and Li (2008) is employed to solve these regularization problems. The rows “M-ALASSO” in Table 1 correspond to the joint estimation method by Bondell et al. (2010) using BIC to select the tuning parameter. As demonstrated in Bondell et al. (2010), the BIC-selector outperforms the AIC-selector for M-ALASSO. We compare these methods by calculating the percentage of times the correct fixed effects are selected (%CF), and the percentage of times the correct random effects are selected (%CR). Since these two measures were also used in Bondell et al. (2010), for simplicity and fairness of comparison, the results for M-ALASSO in Table 1 are copied from Bondell et al. (2010).

Table 1. Fixed and random effects selection in Example 1 when d = 9 and q = 4.

| Setting | Method | %CF | %CR |
|---|---|---|---|
| N = 30 | Lasso-P | 51 | 19.5 |
| ni = 5 | SCAD-P | 90 | 86 |
| | SCAD-T | 93.5 | 99 |
| | M-ALASSO | 73 | 79 |
| N = 60 | Lasso-P | 52 | 50.5 |
| ni = 10 | SCAD-P | 100 | 100 |
| | SCAD-T | 100 | 100 |
| | M-ALASSO | 83 | 89 |

It is seen from Table 1 that SCAD-P greatly outperforms the method with Lasso penalty as well as the M-ALASSO method. We also see that when the true covariance matrix is used, SCAD-T has almost perfect variable selection results. Using the proxy matrix makes the results slightly inferior, but the difference vanishes when the sample size is large, i.e., N = 60, ni = 10.
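The two selection measures reported in Table 1 are simple to compute from the supports selected across replications. The sketch below assumes each replication yields the set of selected indices; the function name is illustrative.

```python
def percent_correct(selected_sets, true_set):
    """Percentage of replications whose selected index set equals the true one."""
    true_set = frozenset(true_set)
    hits = sum(frozenset(s) == true_set for s in selected_sets)
    return 100.0 * hits / len(selected_sets)
```

Applied to the fixed effects supports this gives %CF, and applied to the random effects supports it gives %CR. A replication counts as correct only if it has no false positives and no false negatives.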

Example 2. In this example, we consider the case where the design matrices for fixed and random effects overlap. The sample size is fixed at ni = 8 and N = 30, and the numbers for fixed and random effects are chosen to be d = 100 and q = 10, respectively. To generate the fixed effects design matrix, we first independently generate from Nd(0, Σ), where Σ = (σst) with σst = ρ|s–t| and ρ ∈ (−1, 1). Then for the j-th observation of the i-th subject, we set for covariates k = 1 and d, and set for all other values of k. Thus, 2 out of d covariates are discrete ones and the rest are continuous ones. Moreover, all covariates are correlated with each other. The covariates for random effects are the same as the corresponding ones for fixed effects, i.e., for the j-th observation of the i-th subject, we set zijk = xijk for k = 1, … , q = 10. Then the random effect covariates form a subset of fixed effect covariates.
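A design of this kind can be generated along the following lines. The covariance Σ = (ρ^|s−t|) and the overlap zijk = xijk follow the description above. The rule used to discretize covariates 1 and d is not recoverable from the text, so the sketch assumes thresholding at zero, which is a hypothetical choice, as are the function names.

```python
import numpy as np

def ar1_covariance(d, rho):
    """Sigma with entries rho^{|s - t|}."""
    idx = np.arange(d)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def example2_design(N, n_i, d, q, rho, rng):
    """Rows are the N * n_i observations; returns (X, Z_cols)."""
    Sigma = ar1_covariance(d, rho)
    X = rng.multivariate_normal(np.zeros(d), Sigma, size=N * n_i)
    for k in (0, d - 1):                        # covariates k = 1 and k = d
        X[:, k] = (X[:, k] > 0).astype(float)   # ASSUMED rule: indicator of a positive draw
    Z_cols = X[:, :q].copy()                    # z_ijk = x_ijk for k = 1, ..., q
    return X, Z_cols
```

To obtain the block diagonal Z of the stacked model, the rows of `Z_cols` belonging to subject i would be placed in the i-th diagonal block.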

The first six elements of fixed effects vector β0 are (2, 0, 1.5, 0, 0, 1)T and the remaining elements are all zero. The random effects vector γ is generated in the same way as in Example 1. So the first covariate is discrete and has both fixed effect and random effect. We consider different values of correlation level ρ, as shown in Table 2.

Table 2. Fixed and random effects selection and estimation in Example 2 when m = 8, N = 30 and design matrices for fixed and random effects overlap.

| Setting | Method | Random effects: FNR (%) | Random effects: FPR (%) | Random effects: MRL2 | Random effects: MRL1 | Fixed effects: FNR (%) | Fixed effects: FPR (%) | Fixed effects: MRL2 | Fixed effects: MRL1 |
|---|---|---|---|---|---|---|---|---|---|
| d = 100 | Lasso-P | 11.83 | 9.50 | 0.532 | 0.619 | 62.67 | 0.41 | 0.841 | 0.758 |
| q = 10 | SCAD-P | 0.50 | 1.07 | 0.298 | 0.348 | 0.83 | 0.03 | 0.142 | 0.109 |
| ρ = 0.3 | SCAD-T | 3.83 | 0.00 | 0.522 | 0.141 | 0.33 | 0.02 | 0.102 | 0.082 |
| d = 100 | Lasso-P | 23.67 | 7.64 | 0.524 | 0.580 | 59.17 | 0.41 | 0.802 | 0.745 |
| q = 10 | SCAD-P | 1.83 | 0.71 | 0.308 | 0.352 | 0.67 | 0.05 | 0.141 | 0.109 |
| ρ = -0.3 | SCAD-T | 3.17 | 0.00 | 0.546 | 0.141 | 0.17 | 0.02 | 0.095 | 0.078 |
| d = 100 | Lasso-P | 9.83 | 10.07 | 0.548 | 0.631 | 60.33 | 0.48 | 0.844 | 0.751 |
| q = 10 | SCAD-P | 1.67 | 0.50 | 0.303 | 0.346 | 0.17 | 0.05 | 0.138 | 0.110 |
| ρ = 0.5 | SCAD-T | 5.00 | 0.00 | 0.532 | 0.149 | 0.50 | 0.02 | 0.113 | 0.091 |

Since the dimension of the random effects vector γ is much larger than the total sample size, as suggested at the beginning of Subsection 2.1, we start with the random effects selection by first choosing a relatively small tuning parameter λ and using our method in Section 3 to select important random effects. Then, with the selected random effects, we apply our method in Section 2 to select fixed effects. To improve the selection results for random effects, we further use our method in Section 3 with the newly selected fixed effects to reselect random effects. This iterative procedure is applied to both the Lasso-P and SCAD-P methods. For SCAD-T, since the true covariance matrix is used, the iterative procedure is unnecessary, and we apply our methods only once for both fixed and random effects selection and estimation.

We evaluate our new method by calculating the relative L2 estimation loss, which is defined as

$$\mathrm{RL2}(\hat{\beta}) = \frac{\|\hat{\beta} - \beta_0\|_2}{\|\beta_0\|_2},$$

where β̂ is an estimate of the fixed effects vector β0. Similarly, the relative L1 estimation error of β̂, denoted by RL1(β̂), can be calculated by replacing the L2-norm with the L1-norm. For the random effects estimation, we define RL2(γ̂) and RL1(γ̂) in a similar way by replacing β0 with the true γ in each simulation. We calculate the mean values of RL2 and RL1 in the simulations and denote them by MRL2 and MRL1 in Table 2. In addition to the mean relative losses, we also calculate the percentage of missed true covariates (FNR) and the percentage of falsely selected noise covariates (FPR) to evaluate the performance of the proposed methods.
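The evaluation measures can be illustrated with a short sketch. It assumes the usual form of a relative loss (norm of the estimation error divided by the norm of the true vector), and it assumes FNR and FPR are normalized by the numbers of true and noise covariates, respectively. The function names and the estimate `beta_hat` are hypothetical; only `beta0` is taken from Example 2.

```python
import numpy as np


def relative_loss(estimate, truth, order=2):
    """Norm of the estimation error relative to the norm of the truth."""
    estimate = np.asarray(estimate, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return np.linalg.norm(estimate - truth, ord=order) / np.linalg.norm(truth, ord=order)


def fnr_fpr(estimate, truth):
    """Percentages of missed true covariates and of selected noise covariates."""
    est_nz = np.asarray(estimate) != 0
    true_nz = np.asarray(truth) != 0
    fnr = 100.0 * np.sum(true_nz & ~est_nz) / np.sum(true_nz)
    fpr = 100.0 * np.sum(~true_nz & est_nz) / np.sum(~true_nz)
    return fnr, fpr


# First six true fixed effects from Example 2 and a made-up estimate.
beta0 = np.array([2.0, 0.0, 1.5, 0.0, 0.0, 1.0])
beta_hat = np.array([1.8, 0.0, 1.5, 0.2, 0.0, 0.0])

rl2 = relative_loss(beta_hat, beta0, order=2)
rl1 = relative_loss(beta_hat, beta0, order=1)
fnr, fpr = fnr_fpr(beta_hat, beta0)
```

Averaging `rl2` and `rl1` over simulation replications would give quantities of the MRL2 and MRL1 type.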

From Table 2 we see that SCAD with the true covariance matrix has almost perfect variable selection results for fixed effects, while SCAD-P has highly comparable performance, for all three values of the correlation level ρ. Both methods greatly outperform the Lasso-P method. For the random effects selection, both SCAD-P and SCAD-T perform very well, with SCAD-T having slightly larger false negative rates. We remark that the superior performance of SCAD-P is partially due to the iterative procedure. In these high-dimensional settings, directly applying our random effects selection method in Section 3 produces slightly inferior results to the ones for SCAD-T in Table 2, but iterating once improves the results. We also see that as the correlation level increases, the performance of all methods becomes worse, but SCAD-P is still comparable to SCAD-T and both perform very well in all settings.

Example 3. We illustrate our new procedures through an empirical analysis of a subset of data collected in the Multicenter AIDS Cohort Study. Details of the study design, methods, and medical implications have been given by Kaslow et al. (1987). This data set comprises the human immunodeficiency virus (HIV) status of 284 homosexual men who were infected with HIV during the follow-up period between 1984 and 1991. All patients were scheduled to have measurements taken semiannually. However, because of missed scheduled visits and the random occurrence of HIV infections, the patients have unequal numbers of measurements and different measurement times. The total number of observations is 1765.

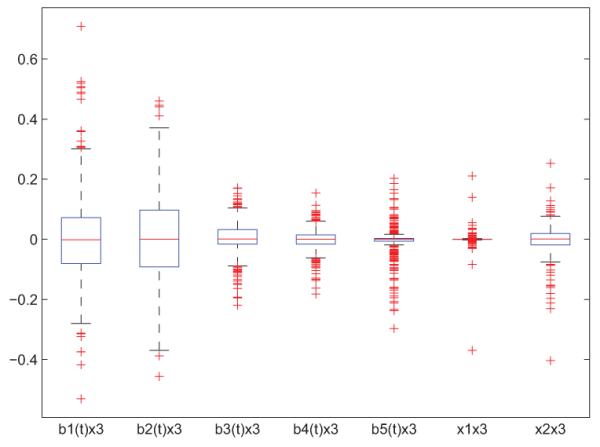

We are interested in the relation between the mean CD4 percentage after the infection (y) and the predictors smoking status (x1, 1 for smoker and 0 for non-smoker), age at infection (x2), and pre-HIV infection CD4 percentage (Pre-CD4 for short, x3). To account for the effect of time, we use a five-dimensional cubic spline b(t) = (b1(t), b2(t), … , b5(t))T. We take into account the two-way interactions b(tij)xi3, xi1xi2, xi1xi3, and xi2xi3. These eight interactions together with the variables b(tij), xi1, xi2, and xi3 give us 16 variables in total. We use these 16 variables together with an intercept to fit a mixed effects model with dimensions for fixed and random effects d = q = 17. The estimation results are listed in Table 3, with rows “Fixed” showing the estimated βj’s for fixed effects, and rows “Random” showing the estimates . The standard error for the null model is 11.45, and it reduces to 3.76 for the selected model. From Table 3, it can be seen that the baseline has a time-variant fixed effect and Pre-CD4 has a time-variant random effect. Smoking has a fixed effect, while age and Pre-CD4 have no fixed effects. The interactions smoking×Pre-CD4 and age×Pre-CD4 have random effects with the smallest standard deviations among the selected random effects. The boxplot of the selected random effects is shown in Figure 1.
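The count of 17 columns can be checked by assembling the design matrix explicitly. The sketch below takes the spline basis values as a given array `B` (how the cubic spline basis is computed is not specified here, so random placeholder values are used), and the function name `build_design` and all toy inputs are hypothetical.

```python
import numpy as np


def build_design(B, x1, x2, x3):
    """Stack intercept, spline basis, main effects and two-way interactions."""
    n = B.shape[0]
    columns = [np.ones(n)]                                 # intercept
    columns += [B[:, k] for k in range(B.shape[1])]        # b1(t), ..., b5(t)
    columns += [x1, x2, x3]                                # main effects
    columns += [B[:, k] * x3 for k in range(B.shape[1])]   # b_k(t) * x3
    columns += [x1 * x2, x1 * x3, x2 * x3]                 # remaining interactions
    return np.column_stack(columns)


# Placeholder inputs for six observations (not data from the study).
rng = np.random.default_rng(0)
B = rng.normal(size=(6, 5))
x1 = np.array([1.0, 0.0, 1.0, 0.0, 1.0, 0.0])
x2 = rng.normal(size=6)
x3 = rng.normal(size=6)

design = build_design(B, x1, x2, x3)
```

The column order follows the layout of Table 3: intercept, spline terms, x1 to x3, then the eight interactions.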

Table 3. The estimated coefficients of fixed and random effects in Example 3.

| | Intercept | b1(t) | b2(t) | b3(t) | b4(t) | b5(t) | x1 | x2 | x3 |
|---|---|---|---|---|---|---|---|---|---|
| Fixed | 29.28 | 9.56 | 5.75 | 0 | -8.32 | 0 | 4.95 | 0 | 0 |
| Random | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| | b1(t)x3 | b2(t)x3 | b3(t)x3 | b4(t)x3 | b5(t)x3 | x1x2 | x1x3 | x2x3 |
|---|---|---|---|---|---|---|---|---|
| Fixed | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Random | 0.163 | 0.153 | 0.057 | 0.043 | 0.059 | 0 | 0.028 | 0.055 |

Fig 1. Boxplots of selected random effects. From left to right: bi(t)x3, i = 1, 2, … , 5, x1x3, x2x3, where x1 is the smoking status, x2 is the age at infection, x3 is the Pre-CD4 level, and the bi(t)’s are cubic spline basis functions of time.

Our results have close connections with those in Huang et al. (2002) and Qu and Li (2006), where the former used a bootstrap approach to test the significance of variables and the latter proposed hypothesis tests based on penalized spline and quadratic inference function approaches, for varying-coefficient models. Both papers revealed significant evidence for a time-varying baseline, which is consistent with our discovery that the basis functions bj(t) have nonzero fixed effect coefficients. At the 5% level, Huang et al. (2002) failed to reject the hypothesis of a constant Pre-CD4 effect (p-value 0.059), while the test of Qu and Li (2006) was weakly significant with p-value 0.045. Our results show that Pre-CD4 has constant fixed effect and time-varying random effect, which may provide an explanation for the small difference in p-values between Huang et al. (2002) and Qu and Li (2006).

To further assess the significance of the selected fixed effects, we refit the linear mixed effects model with the selected fixed and random effects using the Matlab function “nlmefit”. Based on the t-statistics from the refitted model, the intercept and the baseline functions b1(t) and b2(t) are all highly significant with t-statistics much larger than 7, while the t-statistics for b4(t) and x1 (smoking) are −1.026 and 2.216, respectively. This indicates that b4(t) is insignificant and smoking is only weakly significant at the 5% significance level. This result is different from those in Huang et al. (2002) and Qu and Li (2006), where neither paper found significant evidence for smoking. A possible explanation is that by taking into account random effects and variable selection, our method has better discovery power.
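To see why these two t-statistics lead to different conclusions at the 5% level, they can be converted to approximate two-sided p-values. The sketch below uses a standard normal reference distribution, which is an assumption made here for illustration (the degrees of freedom of the refitted model are not reported); the function name is hypothetical.

```python
import math


def two_sided_p_normal(t_stat):
    """Two-sided p-value 2 * (1 - Phi(|t|)) under a standard normal reference."""
    return math.erfc(abs(t_stat) / math.sqrt(2.0))


# t-statistics reported for smoking (x1) and for b4(t).
p_smoking = two_sided_p_normal(2.216)
p_b4 = two_sided_p_normal(-1.026)

# Smoking falls below 0.05 while b4(t) does not.
smoking_significant = p_smoking < 0.05
b4_significant = p_b4 < 0.05
```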

5. Discussion

We have discussed the selection and estimation of fixed effects in Section 2, provided that the random effects vector has a nonsingular covariance matrix, and the selection and estimation of random effects in Section 3, provided that the dimension of the fixed effects vector is smaller than the sample size. We have also illustrated our methods with numerical studies. In practical implementation, the dimensions of the random effects vector and the fixed effects vector can both be much larger than the total sample size. In such a case, we suggest an iterative way to select and estimate the fixed and random effects. Specifically, we can first start with the fixed effects selection using penalized least squares, ignoring all random effects, to reduce the number of fixed effects to below the sample size. Then in the second step, with the selected fixed effects, we can apply our new method in Section 3 to select important random effects. Thirdly, with the selected random effects from the second step, we can use our method in Section 2 to further select important fixed effects. We can also iterate the second and third steps several times to improve the model selection and estimation results.
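The control flow of this three-step suggestion can be sketched as below. The three selection routines are passed in as callables and are purely hypothetical placeholders: the sketch shows only the order of the steps, not the penalized estimators of Sections 2 and 3.

```python
def iterate_selection(screen_fixed, select_random, select_fixed, n_iter=1):
    """Alternate random effects and fixed effects selection after an initial screen.

    screen_fixed():        step 1, fixed effects screening that ignores random effects
    select_random(fixed):  step 2, random effects selection given the fixed effects
    select_fixed(random):  step 3, fixed effects selection given the random effects
    """
    fixed = screen_fixed()
    random = None
    for _ in range(n_iter):
        random = select_random(fixed)
        fixed = select_fixed(random)
    return fixed, random


# Toy stand-ins that return index sets (hypothetical, for illustration only).
def toy_screen():
    return {0, 1, 2, 5}


def toy_select_random(fixed):
    return {0} if 0 in fixed else set()


def toy_select_fixed(random):
    return {0, 2} if random else {2}


fixed_sel, random_sel = iterate_selection(
    toy_screen, toy_select_random, toy_select_fixed, n_iter=2
)
```

Passing the routines as arguments keeps the loop independent of any particular penalty or solver, so steps two and three can be repeated as many times as `n_iter` specifies.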

6. Proofs

Lemma 3 is proved in the online supplemental file.

Lemma 3. It holds that

6.1. Proof of Theorem 1

Let . We are going to show that under conditions 1 and 2, there exists a local minimizer with asymptotic probability one.

For a vector be a vector of the same length whose j-th component is . By Lv and Fan (2009), the sufficient conditions for with being a strict local minimizer of are

| (27) |

| (28) |

| (29) |

where . So we only need to show that with probability tending to 1, there exists a satisfying conditions (27) – (29).

We first consider (27). Since y = X1β0,1 + Zγ + ε, equation (27) can be rewritten as

| (30) |

Define a vector-valued continuous function with β1 ∈ Rs1n. It suffices to show that with probability tending to 1, there exists such that . To this end, first note that

By Condition 2(B), the matrix , where A ≥ 0 means the matrix A is positive semi-definite. Therefore,

| (31) |

Thus, the j-th diagonal component of matrix V in (31) is bounded from above by the j-th diagonal component of . Further note that by Condition 2(B), . Recall that by linear algebra, if two positive definite matrices A and B satisfy A ≥ B, then it follows from the Woodbury formula that A^{−1} ≤ B^{−1}. Thus, and . So by Condition 2(C), the diagonal components of V in (31) are bounded from above by O(n^{−θ}(log n)). This indicates that the variance of each component of the normal random vector is bounded from above by O(n^{−θ}(log n)). Hence, by Condition 2(C),

| (32) |

Next, by Condition 2(A), for any and large enough n, we can obtain that

| (33) |

Since is a decreasing function in (0, ∞), we have . This together with Condition 2(C) ensures that

| (34) |

Combining (32) and (34) ensures that with probability tending to 1, if n is large enough,

Applying Miranda’s existence theorem (Vrahatis, 1989) to the function g(β1) ensures that there exists a vector satisfying such that .
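For reference, the classical form of Miranda’s existence theorem can be stated as follows. This is a standard statement rather than a quotation from the paper: the center c, the half-widths r_j, and the dimension s are notation assumed here, and Vrahatis (1989) proves a more general version.

$$
\begin{aligned}
&\text{Let } B=\{x\in\mathbb{R}^{s}: |x_j-c_j|\le r_j,\ j=1,\dots,s\} \text{ and let } g=(g_1,\dots,g_s)^{T}: B\to\mathbb{R}^{s} \text{ be continuous.}\\
&\text{If, for every } j,\quad g_j(x)\le 0 \text{ whenever } x_j=c_j-r_j \quad\text{and}\quad g_j(x)\ge 0 \text{ whenever } x_j=c_j+r_j,\\
&\text{then there exists } x^{*}\in B \text{ such that } g(x^{*})=0.
\end{aligned}
$$

The sign pattern may be reversed in any coordinate by replacing g_j with −g_j. In arguments of this type, the box is usually taken to be a small neighborhood of the true parameter, so the zero that the theorem produces is automatically close to the truth.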

Now we prove that the solution to (27) satisfies (28). Plugging y = X1β0,1 + Zγ + ε into v in (28) and by (30), we obtain that

where and . Since , it is easy to see that v2,1 has normal distribution with mean 0 and variance

| (35) |

Since , is a projection matrix, and has eigenvalues less than 1, it follows that for the unit vector ek,

where the last step is because each column of X is standardized to have L2-norm . Thus, the diagonal elements of the covariance matrix of v1,2 are bounded from above by c1n. Therefore, for some large enough constant C > 0,

Thus, it follows from the assumption that . Moreover, by Condition 2(B) and (C),

Therefore, the inequality (28) holds with probability tending to 1 as n → ∞.

Finally we prove that satisfying (27) and (28) also makes (29) hold with probability tending to 1. By (33) and Condition 2(A),

On the other hand, by Condition 2(C), . Since θ > 2τ, the inequality (29) holds with probability tending to 1 as n → ∞.

Combining the above results, we have shown that with probability tending to 1 as n → ∞, there exists which is a strict local minimizer of . This concludes the proof.

6.2. Proof of Theorem 2

Let with a RNqn-vector satisfying . Define with uj = (uj1, … , ujqn)T , where for j = 1, … ,N,

| (36) |

and λnujk = 0 if . Here, . Let be the Bayes estimate defined in (19). By Lv and Fan (2009), the sufficient conditions for γ ∈ RqnN with being a strict local minimizer of (18) are

| (37) |

| (38) |

| (39) |

where with wj = (wj1, … , wjqn)T , and

| (40) |

We will show that, under Conditions 1 and 3, the above (37) – (39) are satisfied with probability tending to 1 in a small neighborhood of .

In general, it is not always guaranteed that (37) has a solution. We first show that under Condition 3, there exists a vector with such that makes (37) hold. To this end, we constrain the objective function defined in (18) on the (Nsn2)-dimensional subspace . Next define

For any and each , we have

| (41) |

Note that by Condition 3(C), we have . Thus it can be derived using linear algebra and the definitions of and that . Since we condition on the event Ω* in (20), it is seen that for large enough n,

| (42) |

for and . Thus, in view of the definition of u(γ) in (36), for , we have

where the last step is because is decreasing in t ∈ (0, ∞) due to the concavity of pλn(t). This together with (21) in Condition 3 ensures

| (43) |

Now define the vector-valued continuous function , with ξ a RNs2n-vector. Combining (41) and (43) and applying Miranda’s existence theorem (Vrahatis, 1989) to the function Ψ(ξ), we conclude that there exists such that is a solution to equation (37).

We next show that defined above indeed satisfies (39). Since , by (42) we have for . Since pλn(t) is concave in t ∈ [0, ∞), we know that is negative and increasing in (0, ∞). Note that for any vector x,

| (44) |

Thus,

This together with (20) indicates that with probability tending to 1, the maximum eigenvalue of the matrix is less than

Further, by Condition 3 (A) and (B), . Thus, the maximum eigenvalue of the matrix is less than with asymptotic probability 1, and (39) holds for

It remains to show that satisfies (38). Let . Since is a solution to (37), we have . In view of (40), we have

| (45) |

Since , we obtain that with . Note that is a block diagonal matrix and the i-th block matrix has size ni×(qn–s2n). By Condition 3(A), it is easy to see that . Thus, . Further, it follows from and that

Thus, the i-th diagonal element of H is bounded from above by the i-th diagonal element of , and thus bounded by with some positive constant. Therefore by the normality of we have

Therefore, and

| (46) |

where is the ((j – 1)qn + k)-th element of Nqn-vector .

Now we consider . Define as the submatrix of Z formed by columns corresponding to the j-th random effect. Then, for each j = s2n +1, … , qn, by Condition 3(A) we obtain that

where is the ((j – 1)qn + k)-th element of Nqn-vector . Since , by (36), (42) and the decreasing property of we have . By (22) in Condition 3(A),

Combining the above result for with (45) and (46), we have shown that (38) holds with asymptotic probability one. This completes the proof.


Footnotes

AMS 2000 subject classifications: Primary 62J05, 62J07; secondary 62F10

Contributor Information

Yingying Fan, Information and Operations Management, Department Marshall School of Business, University of Southern California, Los Angeles, CA 90089, USA.

Runze Li, Department of Statistics and the Methodology Center, The Pennsylvania State University, University Park, PA 16802, USA.

References

- Akaike H. Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csaki F, editors. Proceedings of the 2nd International Symposium on Information Theory. Budapest: Akademia Kiado; 1973. pp. 267–281.

- Bickel PJ, Levina E. Some theory for Fisher’s linear discriminant function, “naive Bayes,” and some alternatives when there are many more variables than observations. Bernoulli. 2004;10:989–1010.

- Bondell HD, Krishna A, Ghosh SK. Joint variable selection for fixed and random effects in linear mixed-effects models. Biometrics. 2010;66:1069–1077. doi: 10.1111/j.1541-0420.2010.01391.x.

- Box GEP, Tiao GC. Bayesian Inference in Statistical Analysis. John Wiley; New York: 1973.

- Chen Z, Dunson DB. Random effects selection in linear mixed models. Biometrics. 2003;59:762–769. doi: 10.1111/j.0006-341x.2003.00089.x.

- Dudoit S, Fridlyand J, Speed TP. Comparison of discrimination methods for the classification of tumors using gene expression data. J. Amer. Statist. Assoc. 2002;97:77–87.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression (with discussion). Ann. Statist. 2004;32:407–451.

- Fan J, Fan Y. High-dimensional classification using features annealed independence rules. The Annals of Statistics. 2008;36:2605–2637. doi: 10.1214/07-AOS504.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Amer. Statist. Assoc. 2001;96:1348–1360.

- Fan J, Peng H. Nonconcave penalized likelihood with diverging number of parameters. Ann. Statist. 2004;32:928–961.

- Gelman A, Carlin JB, Stern H, Rubin DB. Bayesian Data Analysis. Chapman and Hall; London: 1995.

- Harville DA. Bayesian inference for variance components using only error contrasts. Biometrika. 1974;61:383–385.

- Horn RA, Johnson CR. Matrix Analysis. Cambridge University Press; 1990.

- Huang JZ, Wu CO, Zhou L. Varying coefficient models and basis function approximations for the analysis of repeated measurements. Biometrika. 2002;89:111–128.

- Ibrahim JG, Zhu H, Garcia RI, Guo R. Fixed and Random Effects Selection in Mixed Effects Models. Biometrics. 2011;67:495–503. doi: 10.1111/j.1541-0420.2010.01463.x.

- Kaslow RA, Ostrow DG, Detels R, Phair JP, Polk BF, Rinaldo CR. The Multicenter AIDS Cohort Study: Rationale, organization and selected characteristics of the participants. American Journal Epidemiology. 1987;126:310–318. doi: 10.1093/aje/126.2.310.

- Laird NM, Ware JH. Random-effects models for longitudinal data. Biometrics. 1982;38:963–974.

- Liang H, Wu HL, Zou GH. A note on conditional AIC for linear mixed-effects models. Biometrika. 2008;95:773–778. doi: 10.1093/biomet/asn023.

- Lin X. Variance component testing in generalised linear models with random effects. Biometrika. 1997;84:309–326.

- Liu Y, Wu Y. Variable selection via a combination of the L0 and L1 penalties. J. Comput. Graph. Stat. 2007;16:782–798.

- Longford NT. Random Coefficient Models. Clarendon; Oxford: 1993.

- Lv J, Fan Y. A unified approach to model selection and sparse recovery using regularized least squares. Ann. Statist. 2009;37:3498–3528.

- Patterson HD, Thompson R. Recovery of inter-block information when block sizes are unequal. Biometrika. 1971;58:545–554.

- Pu W, Niu X. Selecting mixed-effects models based on a generalized information criterion. Journal of Multivariate Analysis. 2006;97:733–758.

- Qu A, Li R. Nonparametric modeling and inference function for longitudinal data. Biometrics. 2006;62:379–391. doi: 10.1111/j.1541-0420.2005.00490.x.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B. 1996;58:267–288.

- Vaida F, Blanchard S. Conditional Akaike information for mixed effects models. Biometrika. 2005;92:351–370.

- Verbeke G, Molenberghs G. Linear Mixed Models for Longitudinal Data. Springer; New York: 2000.

- Vrahatis MN. A short proof and a generalization of Miranda’s existence theorem. Proceedings of the American Mathematical Society. 1989;107:701–703.

- Wang H, Li R, Tsai C-L. Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika. 2007;94:553–568. doi: 10.1093/biomet/asm053.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society Series B. 2006;68:49–67.

- Zhang C-H. Nearly unbiased variable selection under minimax concave penalty. Ann. Statist. 2010;38:894–942.

- Zhang D, Lin X, Sowers MF. Two-stage functional mixed models for evaluating the effect of longitudinal covariate profiles on a scalar outcome. Biometrics. 2007;63:351–362. doi: 10.1111/j.1541-0420.2006.00713.x.

- Zhang Y, Li R, Tsai C-L. Regularization parameter selections via generalized information criterion. Journal of American Statistical Association. 2010;105:312–323. doi: 10.1198/jasa.2009.tm08013.

- Zou H. The adaptive lasso and its oracle properties. J. Amer. Statist. Assoc. 2006;101:1418–1429.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society Series B. 2005;67:301–320.

- Zou H, Li R. One-step sparse estimates in nonconcave penalized likelihood models (with discussion). Ann. Statist. 2008;36:1509–1566. doi: 10.1214/009053607000000802.

- Zou H, Zhang H. On the adaptive elastic-net with a diverging number of parameters. Ann. Statist. 2009;37:1733–1751. doi: 10.1214/08-AOS625.
