Abstract

Owing to the large financial investments that accompany picture archiving and communication system (PACS) deployments and the inconsistent outcomes of PACS performance evaluations, there is a pressing need for a better understanding of the implications of PACS deployment in hospitals. We argue that the research field lacks both the theoretical and the empirical work needed to explain the success of PACS deployment and maturity in hospitals. Theoretical principles relevant to PACS performance, maturity and alignment are reviewed from a systems and complexity perspective. A conceptual model to explain PACS performance and a set of testable hypotheses are then developed. Next, structural equation modeling (SEM), i.e. causal modeling, is applied to validate the model and hypotheses based on a research sample of 64 hospitals that use PACS, i.e. 70 % of all hospitals in the Netherlands. The outcomes of the SEM analyses substantiate that the measurements of all constructs are reliable and valid. PACS alignment, modeled as a higher-order construct of five complementary organizational dimensions and their maturity levels, has a significant positive impact on PACS performance. This result is robust and stable across various sub-samples and segments. This paper presents a conceptual model that explains how alignment in deploying PACS in hospitals is positively related to the perceived performance of PACS. The conceptual model is extended with tools such as checklists to systematically identify improvement areas for hospitals in the PACS domain. The holistic approach towards PACS alignment and maturity provides a framework for clinical practice.

Keywords: Picture archiving and communication systems, PACS maturity model, Performance, Complexity theory, Strategic planning, Structural equation modeling

Introduction

After nearly 30 years of picture archiving and communication system (PACS) technology development and evolution, PACS has become an integral component of today’s health care delivery system [1]. Nowadays, more extensive, efficient, cost-effective and scalable PACS solutions built on vendor-independent infrastructure (e.g. using DICOM) are available, overcoming the inherent technical and practical limitations of earlier PACS deployments. Many hospitals are strategically planning and preparing for future radiology needs by re-evaluating their radiology systems and looking to replace (or upgrade) their original imaging networks with state-of-the-art equipment to improve overall system performance [2].

In this respect, evaluation methods have proven valuable for assessing the impact of PACS on (radiological) workflow, although it has been argued that the benefits of PACS for hospitals should be evaluated from different angles and that including clinical and not-for-profit goals makes such evaluations more relevant [3].

Still, little scientific knowledge is available about the mechanisms that govern PACS performance and deployment success in hospitals. Given the large financial expenses associated with PACS, there is a pressing need for models or frameworks that can rigorously assess and evaluate PACS performance, so that guidelines for strategic planning, optimization and future investments can be systematically derived.

As we will argue in this paper, the PACS maturity model (PMM), which departs from the notion that PACS deployment is a stepwise process from an immature stage of growth/maturity towards the next maturity level, can be enriched with other theories into a conceptual model that is both comprehensive and parsimonious enough to explain and understand variations in PACS performance across hospitals.

Therefore, the main goal of this study is to develop an integrative model to empirically assess, on the one hand, the maturity and organizational alignment of PACS and, on the other hand, their impact on PACS performance. This implies that performance is defined as having multifactorial impacts and benefits, as produced by the application of PACS in terms of hospital efficiency (and service) and clinical effectiveness. We depart from the notion that theories from the IS/IT field provide new perspectives to understand how key elements in clinical practice can be achieved using PACS [3].

The validation of the proposed conceptual model for PACS performance is essential given the intangible nature of PACS performance as the central explanandum at stake. We first present how the theoretical concepts of maturity and business alignment coincide with covariation (or co-alignment) [4] as an operationalized statistical scheme within structural equation modeling (SEM). The empirical part of this paper is dedicated to assessing the impact of PACS maturity and alignment on the multifactorial nature of PACS performance using primary data collected from 64 hospitals in the Netherlands. The main objective of this part of the paper is to empirically validate the proposed integrative PACS performance model. Based on these analyses, the third and final step is to derive improvement guidelines for strategic planning and optimization of PACS maturity and performance within hospitals.

Theoretical Background

Starting Point: The PACS Maturity Model

Maturity models have been developed to measure, plan and monitor the evolution of IS/IT in various organizations and markets. Within this field, Nolan and Gibson [5] are considered the founders of the IS/IT stage-based maturity perspective, although it has been further extended by others. For digital radiology and PACS, Van de Wetering and Batenburg developed the PMM [6]. In their study, they defined five levels of PACS maturity that hospitals can achieve.

These levels are as follows:

- PACS Infrastructure: This initial maturity level is concerned with the basic and unstructured implementation and usage of image acquisition, storage, distribution and display.

- PACS Process: At this level, most of the initial pitfalls have been addressed by so-called ‘second-generation’ (more advanced) PACS deployments. The general focus at this level is on effective process redesign/re-engineering, optimizing manual workflow in radiology and initiating transparent PACS processes outside radiology. This requires a high level of integration of the various imaging information systems with the hospital information system (HIS) and the radiology information system (RIS).

- Clinical Process Capability: This third level is represented by the evolution of PACS towards a system that can cope with operational workflow and patient management, hospital-wide PACS distribution, communication and image-based clinical action. Reaching this level requires substantial changes to PACS processes, extending their scope beyond imaging data, and a higher level of integration of health information systems such as HIS, RIS and PACS.

- Integrated Managed Innovation: This level can be characterized by the initial integration of PACS into the electronic patient record (ePR) (or electronic medical record (EMR)) and the cross-enterprise exchange of digital imaging data (XDS-i) and supporting material. Essentially, this level forms a bridge between the optimization of internal clinical PACS processes and wider adoption within an ePR/EMR and enterprise PACS chain(s).

- Optimized Enterprise PACS Chain: At this fifth and final level, with PACS fully integrated into the wider ePR, PACS can be optimized for efficiency and clinical effectiveness. The key process characteristics at this developmental stage thus include large system integrations, PACS and web-based technology, and image distribution through a web-based ePR.
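The stepwise logic of the PMM can be illustrated with a small Python sketch. The class and function names are hypothetical, and the rule that a level only counts once every lower level has been attained is an assumption derived from the PMM's notion of stepwise growth, not a scoring procedure defined by the model's authors.

```python
from __future__ import annotations

from enum import IntEnum


class PMMLevel(IntEnum):
    """The five PACS maturity levels of the PMM, in ascending order."""

    PACS_INFRASTRUCTURE = 1
    PACS_PROCESS = 2
    CLINICAL_PROCESS_CAPABILITY = 3
    INTEGRATED_MANAGED_INNOVATION = 4
    OPTIMIZED_ENTERPRISE_PACS_CHAIN = 5


def stepwise_maturity(attained: set[PMMLevel]) -> PMMLevel | None:
    """Return the highest level reached without skipping any lower level.

    Assumption (illustrative only): because PACS deployment is modeled as a
    stepwise process, a level counts only if all levels below it are attained.
    Returns None if not even level 1 is attained.
    """
    current = None
    for level in PMMLevel:  # Enum iteration follows definition order (1..5)
        if level not in attained:
            break
        current = level
    return current


# A hospital reporting levels 1, 2 and 4 (but not 3) is placed at level 2.
print(stepwise_maturity({PMMLevel(1), PMMLevel(2), PMMLevel(4)}).name)  # PACS_PROCESS
```

Under this assumption, isolated capabilities at a higher level (here level 4) do not raise the assessed maturity until the intermediate level is in place.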

As PACS evolves towards higher levels of maturity, (medical) workflow efficiency, IS/IT integration and effective, high-quality care increase. It should be noted, however, that high-quality service, efficiency and clinical effectiveness using PACS can only be achieved if PACS is integrated within the wider ePR of the hospital. This integration is one of the most expensive and time-consuming projects, but it yields many benefits. The integration of PACS within an ePR enables a consistent work environment within the hospital for radiologists, referring clinicians, nurses, staff and management. Furthermore, it provides opportunities for effective (e-)consultation, retrieval of more timely and accurate patient information, real-time diagnosis, decision support, (intelligent) inter-disciplinary processes, data mining activities, continuous clinical optimization and so on.

The PMM is descriptive and partly normative and was developed as a guideline for assessment and strategic planning, incorporating growth paths towards higher levels of PACS maturity. An important limitation of the model, however, is that it does not account for the possibility that development along the maturity levels differs by organizational domain, or that maximizing maturity is not effective or ‘optimal’ in all circumstances. For this reason, we draw on another theoretical perspective, as shown in the next section.

Complementarity and Alignment Theories

The theory of complementarity was introduced by Edgeworth, who defined activities as complements ‘if doing (more of) any one of them increases the returns to doing (more of) the others’ [7]. Complementarity theory assumes that the individual elements of a strategic planning process (i.e. the variables) cannot be optimized individually to achieve better performance [8]. In the business and strategic management literature, complementarity is often labeled ‘fit’ [4] or strategic alignment. Strategic alignment refers to applying IS/IT in a structural and stable way, in harmony with business strategies, goals and needs. The strategic alignment model (SAM) of Henderson and Venkatraman is the most cited concept within this field [9, 10]. Their model implies that a systematic process is required to govern continuous alignment between the business and IS/IT domains, i.e. to achieve both ‘strategic fit’ and ‘functional integration’. The SAM has been extended by theorists, industry and consultancies [10], who have defined ‘fit’ as the balance or equilibrium of different organizational dimensions and ‘external fit’ as strategic development based on environmental trends and changes.
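Edgeworth's definition is often formalized as ‘increasing differences’: raising one activity never lowers the marginal return to raising another. The following Python sketch checks this property for a payoff function of two activities on a discrete grid of levels. The function name is hypothetical, and the payoff functions in the example are invented for illustration; they do not model PACS data.

```python
from itertools import product
from typing import Callable, Sequence


def are_complements(payoff: Callable[[int, int], float], levels: Sequence[int]) -> bool:
    """Check increasing differences for two activities x and y on a grid.

    levels must be sorted in ascending order. x and y are complements if, for
    every step x_lo -> x_hi, the gain from that step is at least as large
    when y is higher:
        f(x_hi, y_hi) - f(x_lo, y_hi) >= f(x_hi, y_lo) - f(x_lo, y_lo).
    Checking adjacent grid steps suffices, since longer steps are sums of them.
    """
    steps = list(zip(levels, levels[1:]))  # adjacent (low, high) pairs
    for (x_lo, x_hi), (y_lo, y_hi) in product(steps, repeat=2):
        gain_with_more_y = payoff(x_hi, y_hi) - payoff(x_lo, y_hi)
        gain_with_less_y = payoff(x_hi, y_lo) - payoff(x_lo, y_lo)
        if gain_with_more_y < gain_with_less_y:
            return False
    return True


levels = [1, 2, 3, 4, 5]
print(are_complements(lambda x, y: x * y, levels))   # True: returns reinforce each other
print(are_complements(lambda x, y: -x * y, levels))  # False: the activities are substitutes
print(are_complements(lambda x, y: x + y, levels))   # True, with equality: independent activities
```

The additive payoff sits on the boundary: it passes the check only because its differences are equal, which is why complementarity implies that elements cannot simply be optimized one at a time.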

However, the SAM cannot monitor or measure maturity and/or performance. Scheper [11] addressed this by extending the SAM, combining it with the Massachusetts Institute of Technology (MIT) 1990s model [12], which was developed as part of a major research programme on business and IT, and defining five key organizational domains that need to be aligned: (1) strategy and policy (S&P), (2) organization and processes (O&P), (3) monitoring and control (M&C), (4) information technology (IT) and (5) people and culture (P&C). In contrast to the SAM, Scheper also defined levels of incremental maturity for each of the five domains. Hence, he claimed that alignment could be practically measured and assessed by the comparative levels of maturity on each of the five dimensions.
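To make the idea of comparing maturity across the five domains concrete, the following Python sketch summarizes a hospital's domain maturity scores by their mean and their spread. The spread-based summary is a hypothetical illustration of ‘comparative levels of maturity’, not Scheper's instrument and not the covariation-based operationalization used later in this paper; the function name and the scores in the example are invented.

```python
from __future__ import annotations

from statistics import mean, pstdev

# The five organizational domains distinguished by Scheper.
DOMAINS = ("S&P", "O&P", "M&C", "IT", "P&C")


def alignment_profile(maturity: dict[str, float]) -> dict[str, float]:
    """Summarize maturity scores per domain (hypothetical illustration).

    A low spread means the domains have matured in balance; a high spread
    points to domains lagging behind (or running ahead of) the others.
    """
    missing = set(DOMAINS) - set(maturity)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    scores = [maturity[d] for d in DOMAINS]
    return {
        "mean_maturity": mean(scores),
        "spread": pstdev(scores),  # population standard deviation
        "range": max(scores) - min(scores),
    }


profile = alignment_profile({"S&P": 4, "O&P": 4, "M&C": 3, "IT": 5, "P&C": 3})
print(profile)
```

Two hospitals with the same mean maturity can differ sharply in spread, which is the distinction an alignment perspective adds to a pure maturity score.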

Probably for reasons of complexity, the co-evolutionary and emergent nature of alignment has rarely been taken into consideration in IS/IT alignment research [13]. In the same vein, the SAM and other IS/IT alignment approaches perceive and operationalize alignment as a linear (static) mechanism. This neglects the fact that the underlying mechanisms are multidirectional and that change in one organizational domain has multilevel effects on other domains. Organizational performance is, in fact, a non-linear, emergent and partly unintended outcome, which cannot be approximated by any linear form [13].

To turn this perspective into a conceptual model, a systematic agenda is required that links theory development with mathematical or computational model development and does not follow equilibrium-based mathematical approaches (i.e. approaches that rely on linearity, attractors, fixed points and the like [14]). This is addressed in the next section.

An Integrative PACS Performance Model

Based on the previous analyses, we develop a model that combines three concepts: (1) PACS maturity as the concept to define PACS and its elements (i.e. classifying PACS systems according to their stage of development and evolutionary plateau of process improvement), (2) PACS alignment as the concept to complement the organizational dimensions of PACS (i.e. investments made in the organizational dimensions related to PACS should be balanced across the organization in order to obtain synergistic benefits) and (3) PACS performance as the added value of PACS within hospitals.

Using the PMM as a starting point, we suggest measuring maturity and alignment (as independent variables) by the degree to which hospitals score and differ on the five organizational dimensions (see ‘Complementarity and Alignment Theories’ section). For each of these five dimensions, distinct maturity levels have previously been defined by the PMM [11]. These maturity levels are labeled successively as S&P3, S&P4 and S&P5, O&P3, O&P4 and O&P5, and so on. Maturity levels 1 and 2, as defined by the PMM, are omitted for practical reasons, which are elaborated upon in the ‘Results’ section. In addition, we define PACS performance as a multifactorial (dependent) variable to be measured in terms of hospital efficiency (i.e. an organizational construct comprising the patient service, end-user service and organizational efficiency perspectives) and clinical performance (i.e. subdivided into diagnostic efficacy and communication efficacy) [3, 15].
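The labeling scheme can be sketched in Python to show how the five domains and the three retained maturity levels yield the first-order alignment constructs, alongside the five first-order performance dimensions. The variable names are hypothetical; the counts match the 15 maturity constructs and 5 performance dimensions listed in Table 3.

```python
from itertools import product

# Five organizational domains; PMM maturity levels 1 and 2 are omitted.
DOMAINS = ("S&P", "O&P", "M&C", "IT", "P&C")
LEVELS = (3, 4, 5)

# First-order performance dimensions grouped by the two performance perspectives.
PERFORMANCE = {
    "hospital efficiency": ("patient service", "end-user service", "organizational efficiency"),
    "clinical performance": ("diagnostic efficacy", "communication efficacy"),
}

maturity_constructs = [f"{domain}{level}" for domain, level in product(DOMAINS, LEVELS)]
performance_constructs = [dim for dims in PERFORMANCE.values() for dim in dims]

print(len(maturity_constructs), maturity_constructs[:3])  # 15 ['S&P3', 'S&P4', 'S&P5']
print(len(performance_constructs))                        # 5
```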

Our conceptual model contains higher-order (multidimensional) latent constructs within the context of simultaneous equation systems [16]. These latent constructs cannot be observed directly; their meaning is obtained by measuring manifest variables. In interconnecting the three key concepts of PACS maturity, PACS alignment and PACS performance, we propose a reflective construct model, in which the manifest variables are affected by the latent variables (in contrast to formative constructs, in which the manifest variables form the latent variable).
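In generic notation (the symbols below are illustrative and not taken from the paper's Appendix), a reflective block for a latent variable $\xi$ measured by $p$ manifest variables $x_i$ can be contrasted with a formative block as follows:

$$
\text{reflective:}\quad x_i = \lambda_i\,\xi + \varepsilon_i, \qquad i = 1,\dots,p
$$

$$
\text{formative:}\quad \xi = \sum_{i=1}^{p} w_i\,x_i + \zeta
$$

In the reflective case the loadings $\lambda_i$ run from the construct to its indicators, so indicators of the same construct are expected to correlate with one another; in the formative case the weights $w_i$ combine indicators that need not correlate.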

We apply a multistep approach using path modeling to hierarchically construct latent variables for the independent part (i.e. PACS alignment) and the dependent part (i.e. PACS performance) of the conceptual model (see Appendix for a detailed description of the constructs and their relations).

Based on the above, the main hypothesis to be empirically tested with the conceptual model can be formulated as follows:

‘The alignment of PACS, as represented by the multifactorial nature of five organizational domains and their related maturity levels, is positively related to PACS performance, as represented by its multifactorial nature in terms of hospital efficiency and clinical effectiveness and their related items.’

Alignment of PACS is defined as the pattern of internal consistency among the two sets of underlying constructs. More specifically, PACS alignment is modeled as a third-order latent construct, in which the second-order constructs represent the organizational domains to be co-aligned and the first-order constructs represent the maturity levels. Statistically, this conception of PACS alignment is captured by a pattern of covariation, which coincides with the concept of (co-)alignment [4].
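Under simplifying assumptions (disturbances uncorrelated with each other and with alignment; the symbols are illustrative, not the paper's notation), the hierarchy can be written with $\eta_{dk}$ for the first-order construct of domain $d$ at maturity level $k$, $\xi_d$ for the second-order domain construct, $A$ for third-order PACS alignment and $P$ for PACS performance:

$$
\eta_{dk} = \gamma_{dk}\,\xi_d + \zeta_{dk}, \qquad
\xi_d = \gamma_d\,A + \zeta_d, \qquad
P = \beta\,A + \zeta_P
$$

For first-order constructs in two different domains $d \neq d'$, this implies

$$
\operatorname{Cov}(\eta_{dk}, \eta_{d'k'}) = \gamma_{dk}\,\gamma_d\,\gamma_{d'}\,\gamma_{d'k'}\,\operatorname{Var}(A),
$$

so all covariation across domains runs through the common alignment factor $A$. This is how a pattern of covariation expresses co-alignment in the model.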

Our conceptual model follows the central concept of internal consistency among the various dimensions, in accordance with the theories of complexity and complex adaptive systems (CAS) outlined previously.

SEM techniques are specifically suited for the modeling of complex processes to serve both theory and practice. Therefore, SEM is the appropriate method to validate our conceptual model to capture the complex entanglement of PACS deployment and performance in hospitals. The application of SEM (and latent variable modeling) fits a mode of integrative thinking about theory construction, measurement problems and data analysis. It enables stating the theory more exactly, testing the theory more precisely and yielding a more thorough modeling/understanding of the empirical data about complex phenomena and relationships [17].

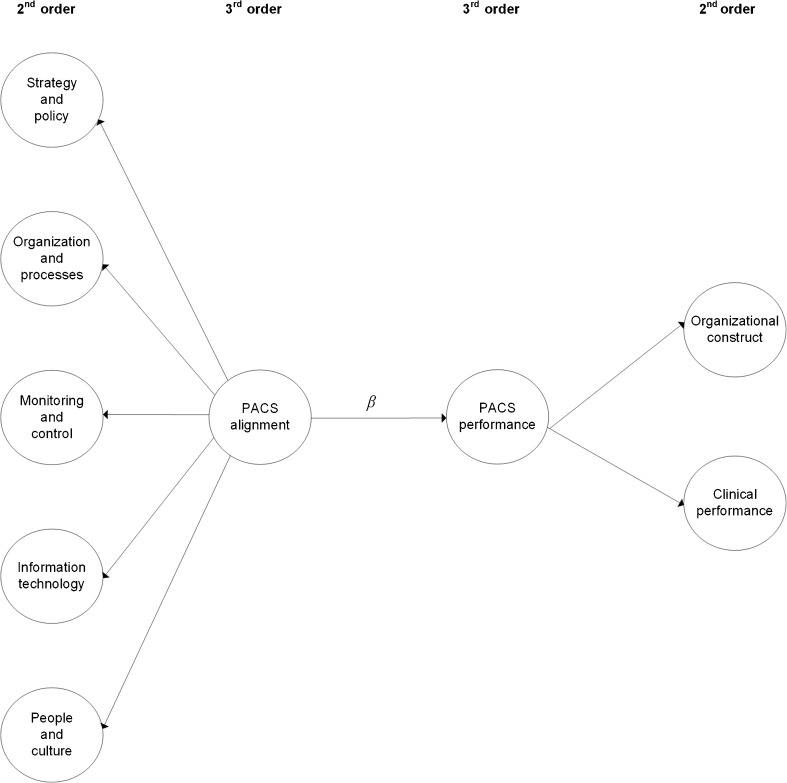

Figure 1 displays the SEM notation of our conceptual model, capturing the theorized relationships between the organizational domains (i.e. second-order constructs) and PACS alignment (i.e. third-order construct), on the one hand, and the impact of alignment on PACS performance (i.e. third-order construct), on the other.

Fig. 1. Theoretical SEM notation for the PACS alignment model (β = estimated value for the path relationship in the structural model)

Material and Methods

Instrument Development Process

An initial survey was developed based on the literature, field experience and valuable suggestions from two PACS experts (a professor of radiology and the head of a radiology department), who provided the project team with input and advice on key concepts in diagnostic imaging. This initial survey was then discussed with industry consultants and a PACS R&D manager during a focus group meeting, which led to some of the questions being redefined. The topics in the survey were subsequently validated in several individual validation sessions (using the ‘Delphi method’) with PACS experts (three radiologists, a neurologist, a technologist and a medical informatics researcher) representing four hospitals in four different geographical areas in the Netherlands. The outcomes were used to improve the validity, reliability and practical applicability of our survey statements (e.g. the length of the survey and the tooling).

Taking these comments into account, the initial survey was extended and piloted at two hospitals of different sizes and operating regions that were actively involved in optimizing their PACS deployments. At each hospital, two radiologists (including heads of department), the head of radiological technologists and a PACS administrator completed an online survey within a secure web environment. These informants were the most familiar with the subject of PACS maturity and performance, making intra-institutional validity likely. Including multiple stakeholders from the radiology department also reduces the common source variance associated with sampling from a single source [17] and helps exclude face validity issues. Respondents completed the survey separately to avoid systematic bias and any peer pressure to give particular answers.

The pilot offered good opportunities to improve the content of the survey and the clarity of the statements. Finally, the questionnaire was extended to 42 statements¹, covering most intersections of our framework.

For each organizational dimension, the items were formulated in a cumulative order. Our questionnaire explicitly addressed the hierarchical order (i.e. increasing complexity) of survey items along the maturity scale, as well as the communality and interrelationship of the stages of maturity, so that we avoided common pitfalls in survey instruments and case research. All statements were rated on a seven-point Likert scale ranging from ‘strongly disagree’ to ‘strongly agree’.
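If the items within a domain follow a cumulative order, a respondent would not be expected to agree more strongly with a higher-level statement than with a lower-level one. The Python sketch below flags such reversals for one respondent and one domain. This consistency check and its function name are hypothetical illustrations of what a cumulative ordering implies; the paper does not state that such a check was applied.

```python
from __future__ import annotations

LIKERT_MIN, LIKERT_MAX = 1, 7  # seven-point scale: strongly disagree .. strongly agree


def cumulative_reversals(ratings: dict[int, int]) -> list[tuple[int, int]]:
    """Return adjacent maturity-level pairs where agreement increases with level.

    ratings maps a maturity level (e.g. 3, 4, 5) to a Likert rating.
    """
    for level, rating in ratings.items():
        if not LIKERT_MIN <= rating <= LIKERT_MAX:
            raise ValueError(f"rating {rating} for level {level} is off the scale")
    levels = sorted(ratings)
    return [
        (lower, higher)
        for lower, higher in zip(levels, levels[1:])
        if ratings[higher] > ratings[lower]
    ]


print(cumulative_reversals({3: 6, 4: 5, 5: 2}))  # [] (consistent with a cumulative order)
print(cumulative_reversals({3: 4, 4: 6, 5: 3}))  # [(3, 4)]
```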

Furthermore, the statements were phrased in the present tense, but respondents were asked to provide answers for both the current and future/preferred situations of their hospitals.

The survey also contained some general questions (e.g. name, function and years of experience using PACS). Finally, PACS performance was measured using 12 performance statements on how well the system contributes to efficiency and effectiveness [3, 15].

Data and Sample Collection Procedure

A survey was conducted targeting all general and top clinical hospitals (i.e. non-university teaching hospitals) and university medical centres in the Netherlands (N = 91). The questionnaire was sent to (1) the heads of the radiology departments (and radiologists), (2) the heads of technologists and/or department managers and (3) the PACS/RIS administrators of all radiology departments. Contact details were obtained from the secretaries of each individual radiology department.

Respondents were asked to complete the survey either online or by returning the provided printed version to the university. In parallel, invitations to participate (followed by a reminder after 5 weeks) were sent by mail to all heads of radiology in the Netherlands by a recognized radiologist in the field.

Five weeks later, follow-up phone calls were made to all radiology departments that had not yet returned a single questionnaire.

In total, 82 questionnaires were either completed online or returned by post. Representatives from 12 hospitals filled in at least one questionnaire, resulting in an overall response from the 64 participating hospitals. This response rate of 70 % (64 of the 91 targeted hospitals) is remarkably high in comparison with common survey response rates. All questionnaires were included in the analysis subject to quality criteria (e.g. no missing answers). Table 1 provides the demographics of our sample. The participating hospitals, which all had their own radiology departments, can be divided into three categories: general hospitals, top clinical hospitals (large educational hospitals providing highly specialized medical care) and academic medical centres.

Table 1.

Sample demographics

| Hospital type | Hospitals in sample (total) | Percentage of total | AVG beds | FTE radiologists | AVG exams | Respondent type | Respondents (%) |
|---|---|---|---|---|---|---|---|
| General hospital | 37 (56) | 66 | 353 | 6.4 | 95,900 | Radiologist | 29 (35 %) |
| Top clinical | 21 (27) | 78 | 657 | 10 | 160,000 | Head technologists/manager | 26 (32 %) |
| Academic | 6 (8) | 75 | 944 | 27.2 | 193,500 | PACS administrator | 27 (33 %) |

AVG average, FTE full-time equivalent

As can be seen from Table 1, our sample contains 75 % of the academic hospitals, 78 % of the top clinical hospitals and 66 % of the general hospitals in the Netherlands, i.e. a total response rate of 70 % of the targeted hospitals. Since the response rates are similar across the three hospital types, the obtained sample can be considered representative of hospitals in the Netherlands with regard to size.
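The response rates reported above can be recomputed from Table 1 with a short Python check, reading the numbers in parentheses as the total number of hospitals of each type (they sum to the N = 91 targeted hospitals).

```python
# Hospitals that responded and total hospitals per type, as read from Table 1.
SAMPLE = {
    "General hospital": (37, 56),
    "Top clinical": (21, 27),
    "Academic": (6, 8),
}

for hospital_type, (responded, population) in SAMPLE.items():
    print(f"{hospital_type}: {responded}/{population} = {responded / population:.0%}")

responded_total = sum(r for r, _ in SAMPLE.values())
population_total = sum(p for _, p in SAMPLE.values())
print(f"Total: {responded_total}/{population_total} = {responded_total / population_total:.0%}")
```

This prints 66 %, 78 % and 75 % for the three hospital types and 64/91 = 70 % overall, matching the figures in the text.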

Table 2 shows the distribution of PACS vendors in our sample. Eight vendors are represented, all of which are currently active in the Dutch market. Many Dutch hospitals are currently re-evaluating their PACS systems and looking to replace their original imaging networks, and others are planning major upgrades; this may change the PACS vendor landscape considerably in the coming years.

Table 2.

PACS vendor distribution (in percentages)

| Vendor | Percentage in sample (%) |
|---|---|
| Agfa | 26 |
| Carestream | 9 |
| Delft Diagnostic Imaging (Rogan) | 10 |
| Fuji | 9 |
| GE | 5 |
| Philips | 31 |
| Sectra | 5 |
| Siemens | 5 |

Instrument Validation

The operationalization of our conceptual model can be performed most accurately using SEM [18]. SEM (or ‘causal modeling’) is typically used to simultaneously validate multifaceted phenomena in terms of tentative cause-and-effect relationships between variables. In doing so, it simultaneously examines the measurement model (factor model or outer model) and the structural model (inner model or path model).

Since the interpretation of parameter outcomes in SEM is not straightforward, we adopted the validation procedure outlined by Marcoulides and Saunders [19] to assess the ‘outer’ (measurement) and ‘inner’ (structural) models. This procedure comprises the following steps:

Propose a model that is consistent with all currently available theoretical knowledge and collect data to test that theory;

Perform data screening (checking the accuracy of inputs and identifying outliers). We first tested the normality of the distributions of all manifest variables (MV1–MV42) using SPSS version 18.0. As a general rule of thumb, the absolute values of the ratio of skewness to its standard error (SE) and of kurtosis to its SE should not exceed 2 (i.e. both ratios should lie between −2 and 2); higher values indicate greater asymmetry and deviation from normality;

Examine the ‘psychometric properties’ (i.e. measurement model) of all variables. To demonstrate that the measures had satisfactory levels of validity and reliability, the measurement model was assessed for the first-order constructs. Composite reliabilities² (CRs; [20]) and the average variance extracted (AVE; [20]), i.e. the average variance of the measures accounted for by the latent construct, were computed. As a general rule of thumb, indicators with a loading below 0.6 should be removed from the model [21]. Discriminant validity was assessed by verifying (1) that indicators loaded more strongly on their corresponding (first-order) constructs than on the other constructs and (2) that the square root of each construct’s AVE was larger than its correlations with the other constructs (see the entries in bold along the diagonal of Table 3). The off-diagonal elements are the correlations between latent variables as calculated by the partial least squares (PLS) algorithm. See the ‘Assessment of Discriminant Validity’ section for a brief explanation of the entries in Table 3.

Examine the magnitude of the relationships (i.e. structural model) and effects between the variables considered in the proposed model. We accounted for possible moderating effects (i.e. interaction effects) within our data through a multisample/multigroup approach [22]. To this end, we divided our research sample into two roughly equal groups based on the number of beds of each hospital: group 1 (≤450 beds) comprised 30 hospitals and group 2 (>450 beds) 34 hospitals. The model’s path coefficients were subsequently estimated separately for each group using the SEs obtained from bootstrapping. Likewise, we divided our sample of questionnaires into three disjoint groups based on respondent category (i.e. radiologist, PACS administrator and head technologists/manager). We also assessed various measures of model fit, including (a) the goodness-of-fit (GoF) index [20], defined as the geometric mean of the average communality of all constructs with multiple indicators and the average R² of the endogenous constructs, (b) R², the coefficient of determination, and (c) Q² of our endogenous constructs (using Stone–Geisser’s test [20]), to assess the quality of each structural equation as measured by the cross-validated redundancy and communality indices (using the blindfolding procedure in SmartPLS) and to evaluate the predictive relevance of the model constructs;

As a final step, assess and report the power of the study. We used G*Power [23], a general stand-alone power analysis program, for the statistical tests. The power (1 − β) of a statistical test is the probability of correctly rejecting a false H0, where β is the probability of falsely retaining an incorrect H0 [24].
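The study itself used G*Power. As a rough, self-contained illustration of what power means for a sample of 64, the Python sketch below approximates the power of a two-sided test of a correlation using the Fisher z transformation. This normal approximation is a different technique from G*Power's exact procedures, the function name is hypothetical, and the effect size ρ = 0.3 in the example is an assumption, not a value from the study.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_power(rho: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided test of H0: rho = 0 via Fisher's z.

    Under H1, atanh(r) is approximately normal with mean atanh(rho) and
    standard deviation 1 / sqrt(n - 3).
    """
    if n <= 3:
        raise ValueError("n must exceed 3")
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha / 2)
    shift = atanh(rho) * sqrt(n - 3)
    # Probability of landing in either rejection region when rho is the true value.
    return (1 - std_normal.cdf(critical - shift)) + std_normal.cdf(-critical - shift)


print(round(correlation_power(0.3, 64), 2))  # about 0.68 for the assumed rho = 0.3
```

When ρ = 0 the function returns α itself, as expected: with no true effect, the only rejections are Type I errors.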

Table 3.

Inter-correlations of first-order constructs (N = 64)

| First-order construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. IT3 | **0.86** |
| 2. IT4 | 0.38 | **0.81** |
| 3. IT5 | 0.24 | 0.32 | **0.72** |
| 4. MC3 | 0.13 | 0.34 | 0.21 | **0.79** |
| 5. MC4 | 0.14 | 0.30 | 0.27 | 0.50 | **0.77** |
| 6. MC5 | 0.31 | 0.28 | 0.25 | 0.43 | 0.59 | **0.81** |
| 7. OP3 | 0.17 | 0.19 | 0.38 | 0.40 | 0.32 | 0.39 | **0.81** |
| 8. OP4 | 0.06 | 0.21 | 0.13 | 0.23 | 0.52 | 0.52 | 0.24 | **0.79** |
| 9. OP5 | 0.19 | 0.06 | 0.24 | 0.07 | 0.27 | 0.19 | 0.03 | 0.42 | **0.81** |
| 10. PC3 | 0.42 | 0.46 | 0.22 | 0.40 | 0.23 | 0.37 | 0.23 | 0.17 | 0.19 | **0.78** |
| 11. PC4 | 0.28 | 0.10 | −0.04 | 0.29 | 0.15 | 0.28 | 0.05 | −0.05 | 0.14 | 0.51 | **0.90** |
| 12. PC5 | 0.23 | 0.42 | 0.21 | 0.38 | 0.45 | 0.31 | 0.24 | 0.21 | 0.33 | 0.33 | 0.28 | **0.82** |
| 13. SP3 | 0.07 | −0.03 | −0.02 | −0.20 | 0.15 | 0.30 | −0.11 | 0.19 | 0.04 | 0.09 | 0.05 | −0.07 | **0.73** |
| 14. SP4 | 0.17 | 0.23 | 0.47 | 0.31 | 0.32 | 0.35 | 0.22 | 0.24 | 0.36 | 0.23 | −0.11 | 0.18 | 0.25 | **0.77** |
| 15. SP5 | 0.03 | 0.20 | 0.26 | 0.39 | 0.32 | 0.42 | 0.44 | 0.18 | 0.21 | 0.27 | 0.23 | 0.23 | 0.19 | 0.43 | **0.83** |
| 16. Patient service | 0.27 | 0.15 | 0.31 | 0.11 | 0.09 | 0.34 | 0.19 | 0.16 | −0.08 | 0.14 | 0.23 | 0.00 | 0.20 | −0.01 | 0.14 | **0.74** |
| 17. End-user service | 0.44 | 0.36 | 0.34 | 0.46 | 0.45 | 0.53 | 0.40 | 0.20 | 0.07 | 0.39 | 0.16 | 0.39 | −0.02 | 0.27 | 0.10 | 0.20 | **0.76** |
| 18. Organizational efficiency | −0.13 | −0.21 | −0.08 | 0.02 | −0.05 | −0.02 | 0.23 | −0.18 | −0.28 | −0.10 | −0.18 | 0.09 | −0.18 | 0.00 | −0.04 | 0.04 | 0.20 | **0.79** |
| 19. Diagnostic efficacy | 0.08 | 0.13 | 0.28 | 0.19 | 0.32 | 0.30 | 0.23 | 0.21 | 0.08 | 0.01 | 0.07 | 0.17 | 0.01 | 0.08 | 0.17 | 0.12 | 0.30 | −0.02 | **0.81** |
| 20. Communication efficacy | 0.49 | 0.31 | 0.22 | 0.30 | 0.10 | 0.18 | 0.20 | 0.06 | 0.30 | 0.38 | 0.24 | 0.36 | −0.09 | 0.08 | 0.20 | 0.26 | 0.28 | −0.12 | 0.09 | **0.79** |

Entries in bold along the matrix diagonal are the square roots of the AVE.

To perform this multistep approach and estimate the parameters in the inner and outer models, we used SmartPLS version 2.0 M3, a SEM application based on PLS. We applied the path weighting scheme available within SmartPLS, as well as the centroid and factor schemes, knowing that the choice of scheme has only a minor impact on the final result [20]. In addition, we applied non-parametric bootstrapping [20], as implemented in SmartPLS, with 500 replications to assess the significance of the regression (path) coefficients and to obtain stable results.
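SmartPLS performs the bootstrapping internally. To illustrate the principle only, the Python sketch below resamples cases with replacement 500 times and divides the estimate by the bootstrap standard error to obtain a t-value. It covers the simplest case of one predictor, where the standardized path coefficient equals the Pearson correlation; the function names and data are hypothetical, and this is not SmartPLS's implementation.

```python
from __future__ import annotations

import random
from math import sqrt
from statistics import fmean, stdev


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; equals the standardized coefficient of y on x."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bootstrap_t(x: list[float], y: list[float], replications: int = 500, seed: int = 1):
    """Return (estimate, bootstrap SE, t-value) using case resampling."""
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson(x, y)
    draws: list[float] = []
    while len(draws) < replications:
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        bx, by = [x[i] for i in idx], [y[i] for i in idx]
        if len(set(bx)) < 2 or len(set(by)) < 2:
            continue  # skip degenerate resamples with zero variance
        draws.append(pearson(bx, by))
    se = stdev(draws)
    if se == 0:
        raise ValueError("bootstrap SE is zero; t-value undefined")
    return estimate, se, estimate / se


# Hypothetical data for 64 cases: a strong linear relation plus a small perturbation.
x = [float(v) for v in range(64)]
y = [v + (v * 7) % 5 for v in x]
estimate, se, t = bootstrap_t(x, y)
print(f"r = {estimate:.3f}, SE = {se:.4f}, t = {t:.1f}")
```

A fixed seed makes the resampling reproducible, which mirrors the aim of using enough replications to obtain stable results.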

The current study has a sample size of N = 64. Given the rationale above and the fact that our data are not normally distributed, we chose a PLS approach, which is robust for moderate sample sizes, over covariance-based SEM to validate our model. Moreover, our main focus was on explaining (and predicting) the endogenous construct ‘PACS performance’, for which R² and the significance of the relationships among constructs indicate how well our model performs. For such prediction-oriented goals, variance-based methods are preferred.
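The GoF index defined among the validation steps is the geometric mean of two averages, which a few lines of Python make explicit. The function name is hypothetical, and the communality and R² values in the example are placeholders, not results from this study.

```python
from __future__ import annotations

from math import sqrt
from statistics import fmean


def goodness_of_fit(communalities: list[float], r_squared: list[float]) -> float:
    """GoF = sqrt(mean communality x mean R^2).

    communalities: one value per construct with multiple indicators.
    r_squared: one value per endogenous construct.
    """
    if not communalities or not r_squared:
        raise ValueError("need at least one communality and one R^2 value")
    return sqrt(fmean(communalities) * fmean(r_squared))


# Placeholder inputs: mean communality 0.64, R^2 0.36 -> GoF = sqrt(0.2304) = 0.48
print(round(goodness_of_fit([0.60, 0.68], [0.36]), 2))
```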

Within PLS-SEM, higher-order constructs can be modeled using repeated indicators (i.e. the hierarchical component model). That is, all indicators of the first-order constructs are reassigned to the second-order construct; such second-order models are a special type of PLS path model in which manifest variables are used twice for model estimation. The same pattern applies to subsequent higher-order constructs. A prerequisite for this approach is that all manifest variables of the first-order and higher-order constructs are reflective [25]. As such, the indicators share a common theme and are manifestations of the key constructs, and changes in a construct cause changes in its indicators. Accordingly, all constructs in our PLS model were specified with reflective indicators.
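The repeated-indicator approach can be sketched as a mapping from constructs to indicator blocks. In the Python sketch below, the function name and the item assignment are hypothetical; it only illustrates that, in a third-order model, each manifest variable appears in its first-order block, its second-order domain block and the third-order alignment block.

```python
from __future__ import annotations

from collections import Counter


def repeated_indicator_blocks(first_order: dict[str, list[str]]) -> dict[str, list[str]]:
    """Build indicator blocks for a hierarchical component model.

    first_order maps construct names such as 'S&P3' (domain + maturity level)
    to their manifest variables. Each second-order domain block reuses the
    indicators of its first-order constructs, and the third-order
    'PACS alignment' block reuses all of them again.
    """
    blocks: dict[str, list[str]] = {name: list(mvs) for name, mvs in first_order.items()}
    for name, mvs in first_order.items():
        domain = name.rstrip("0123456789")  # 'S&P3' -> 'S&P'
        blocks.setdefault(domain, []).extend(mvs)
    blocks["PACS alignment"] = [mv for mvs in first_order.values() for mv in mvs]
    return blocks


# Hypothetical indicator assignment for two domains (not the paper's item mapping).
example = {"S&P3": ["MV1", "MV2"], "S&P4": ["MV3", "MV4"], "IT3": ["MV5", "MV6"]}
blocks = repeated_indicator_blocks(example)
print(blocks["S&P"])  # ['MV1', 'MV2', 'MV3', 'MV4']
print(Counter(mv for mvs in blocks.values() for mv in mvs)["MV1"])  # 3
```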

Results

Data Screening

Outcomes of the data screening suggest that our data slightly deviate from normality (average absolute skewness = 2.2; average absolute kurtosis = 1.4). Additional support for non-normality came from the Kolmogorov–Smirnov test with Lilliefors correction: all variables yielded significant results, so we rejected the null hypothesis that the data were normally distributed.
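The screening statistics can be sketched with the Python standard library. This is an illustration under stated assumptions, not the procedure the authors ran: skewness and kurtosis use simple moment estimators (statistical packages such as SPSS apply small-sample adjustments, so values differ slightly), and instead of a p-value the Lilliefors statistic is compared with Lilliefors' large-sample critical value 0.886/√n for α = 0.05. Library implementations (e.g. statsmodels' `lilliefors`) report p-values directly. The item scores are hypothetical.

```python
import math
import statistics
from statistics import NormalDist


def skewness(x):
    """Moment-based sample skewness (g1)."""
    n, m = len(x), statistics.fmean(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    return m3 / m2 ** 1.5


def excess_kurtosis(x):
    """Moment-based sample excess kurtosis (g2); 0 for a normal distribution."""
    n, m = len(x), statistics.fmean(x)
    m2 = sum((v - m) ** 2 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return m4 / m2 ** 2 - 3


def lilliefors_d(x):
    """KS distance between the sample and a normal with estimated mean and SD."""
    n = len(x)
    fitted = NormalDist(statistics.fmean(x), statistics.stdev(x))
    d = 0.0
    for i, v in enumerate(sorted(x)):
        f = fitted.cdf(v)
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


def lilliefors_critical_05(n):
    """Lilliefors' large-sample (n > 30) critical value for alpha = 0.05."""
    return 0.886 / math.sqrt(n)


# Hypothetical 5-point item scores for 64 respondents, heavily right-skewed.
item = [1] * 40 + [2] * 12 + [3] * 6 + [4] * 4 + [5] * 2
print(round(skewness(item), 2), round(excess_kurtosis(item), 2))
print(lilliefors_d(item) > lilliefors_critical_05(len(item)))  # True -> reject normality
```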

Assessment of the Measurement Model (the ‘outer’ model)

Table 4 reports the loadings (λ) of all items (MVi) for each organizational domain and maturity level; λ can be interpreted like factor loadings in factor analysis. All loadings exceeded 0.7 except those of MV1, MV16, MV24 and MV25. Because these values were close to the threshold, these items were retained in the model. All CR values reached or exceeded 0.7, and all AVE values exceeded the cut-off value of 0.5, indicating sufficient convergent validity. Table 5 lists the five performance dimensions, their measurements and indicators, and the psychometric properties (AVE, CR and λ) of the dependent (endogenous) construct. Manifest variables MV37 and MV38 had negative loadings and were removed from the PLS model to obtain reliable outcomes. MV33 and MV40 had loadings close to the threshold and were retained; all other loadings of the dependent construct exceeded 0.7. Again, all measures indicated that the dependent constructs were well defined and unidimensional.
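AVE and CR follow directly from the standardized loadings: AVE is the mean squared loading, and CR uses the composite reliability formula given in the footnotes, assuming standardized indicators so that each error variance is 1 − λ². A minimal sketch: recomputing from the rounded loadings of MV1 and MV2 reproduces the Table 4 values for that row (AVE 0.54, CR 0.70); other rows may differ by about 0.01 because the published loadings are rounded.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    with error variance 1 - loading^2 for standardized indicators."""
    s = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return s * s / (s * s + error)


# Strategy and policy, maturity level 3 (MV1, MV2) from Table 4.
sp_level3 = [0.67, 0.79]
print(round(ave(sp_level3), 2), round(composite_reliability(sp_level3), 2))  # 0.54 0.7
```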

Table 4.

Estimates for the psychometric properties of the first-order constructs for PACS alignment

| Domain | Maturity level | Indicator (shortened survey statement presented to respondents) | λ | AVE | CR |
|---|---|---|---|---|---|
| Strategy and policy | 3 | Primary interpretation by radiologists using uncompressed images (MV1) | 0.67 | 0.54 | 0.70 |
| | | Emphasis is on the direct display of images from the archive (MV2) | 0.79 | | |
| | 4 | PACS integration with the ePR is an important strategic objective (MV3) | 0.76 | 0.59 | 0.77 |
| | | Alignment of investment plans between radiology and other departments/wards (MV4) | 0.77 | | |
| | 5 | Inquiry of the external environment for new developments and products to optimize PACS functionality (MV5) | 0.84 | 0.69 | 0.83 |
| | | Strategic and operational (multiyear) plans contain impact and opportunities for chain partners (MV6) | 0.82 | | |
| Organization and processes | 3 | Active improvement of service levels using quality standards and measures for digital PACS workflow (MV7) | 0.86 | 0.66 | 0.79 |
| | | Every image is instantly available on any workstation in the hospital for every user at any time (MV8) | 0.76 | | |
| | 4 | All diagnostic images from other departments are stored in one central PACS archive (MV9) | 0.81 | 0.62 | 0.77 |
| | | Dedicated workspace has all required patient information and integrated 2D/3D reconstruction tools (MV10) | 0.77 | | |
| | 5 | Sharing of real-time PACS data with chain partners using standard exchange protocols (XDS-i) if necessary (MV11) | 0.80 | 0.66 | 0.80 |
| | | Hospital-wide requesting and planning of radiology exams using an electronic order-entry system (MV12) | 0.83 | | |
| Monitoring and control | 3 | Recurrent prognosis of the number of radiology exams and the required storage capacity (MV13) | 0.84 | 0.62 | 0.77 |
| | | Measurement and monitoring of financial and non-financial PACS data (MV14) | 0.73 | | |
| | 4 | Service level agreements with PACS vendors are periodically evaluated (MV15) | 0.90 | 0.60 | 0.74 |
| | | PACS generates comprehensive management information that is always on time (MV16) | 0.61 | | |
| | 5 | The hospital confronts PACS vendors if service level agreements are not (or only partially) achieved (MV17) | 0.86 | 0.64 | 0.78 |
| | | An accurate overview of the contribution of PACS to overall cost prices per radiology exam (MV18) | 0.73 | | |
| Information technology | 3 | PACS is compatible with current international standards and classifications (HL7 and DICOM) (MV19) | 0.85 | 0.73 | 0.85 |
| | | PACS exchanges information with the RIS and HIS without any complications (MV20) | 0.86 | | |
| | 4 | Adoption of standard ‘off-the-shelf’ (vendor-independent) hardware and software (MV21) | 0.78 | 0.66 | 0.79 |
| | | Impact prognosis on storage capacity because of modality upgrades or newly acquired devices (MV22) | 0.83 | | |
| | 5 | Application of reagent (security) protocols in preserving the privacy of patient data, PACS data security and backup (MV23) | 0.77 | 0.52 | 0.70 |
| | | PACS is an integral part of the hospital’s ePR (MV24) | 0.67 | | |
| People and culture | 3 | The hospital actively involves the users of PACS in the development of customizable user interfaces (MV25) | 0.64 | 0.60 | 0.75 |
| | | PACS process and procedure knowledge is extensively applied by clinicians and technologists (MV26) | 0.89 | | |
| | 4 | End-users of PACS affect the decision-making process in selecting a specific PACS vendor (MV27) | 0.90 | 0.81 | 0.89 |
| | | End-users affect digital PACS workflow and functionality improvements (MV28) | 0.90 | | |
| | 5 | Radiologists’ awareness that PACS has the potential to influence the competitive position of the hospital and service delivery (MV29) | 0.77 | 0.67 | 0.80 |
| | | Innovative solutions with PACS are discussed during clinico-radiological meetings (MV30) | 0.87 | | |

Table 5.

Estimates for the psychometric properties of the first-order constructs for PACS performance

| Measurement construct | Indicator | λ | AVE | CR |
|---|---|---|---|---|
| 1. Patient service | | | | |
| Patient waiting time | Elapsed time between a patient’s arrival at radiology (on appointment) and the subsequent exam (MV31) | 0.71 | 0.55 | 0.71 |
| Patient satisfaction | Satisfaction of patients with service delivery (MV32) | 0.77 | | |
| 2. End-user service | | | | |
| Physician satisfaction | Satisfaction of referring clinicians with the availability of imaging data and associated reports (MV33) | 0.62 | 0.58 | 0.73 |
| User satisfaction | User satisfaction with the current user interface and functionality of PACS (MV34) | 0.88 | | |
| 3. Organizational efficiency | | | | |
| Report turnaround time | Total time from execution of CT exams through reporting to the availability of the finalized report (MV35) | 0.81 | 0.62 | 0.77 |
| Radiologist productivity | Number of radiology exams per year per FTE (MV36) | 0.77 | | |
| Budget ratio | Percentage (over)expenditure of allocated PACS budgets (MV37) | −0.62 | | |
| 4. Diagnostic efficacy | | | | |
| Interpretation time | Time to process a series of CT exams (MV38) | −0.14 | 0.65 | 0.79 |
| Diagnostic accuracy | Sufficiency rate of current radiology workspaces for image interpretation (MV39) | 0.93 | | |
| Clinical capability | Capability of workstations to display uncompressed CT studies (avg. 1,500–2,000 images) without delay (MV40) | 0.67 | | |
| 5. Communication efficacy | | | | |
| Patient management | Contribution of PACS to decision-making in diagnostic processes or treatment plans of patients (MV41) | 0.82 | 0.62 | 0.76 |
| Communication efficacy | Contribution of PACS to the communication of critical findings and interdepartmental collaboration (MV42) | 0.76 | | |

In addition to the first-order constructs, the higher-order constructs also met the threshold for CR (CR ≥ 0.7). Table 6 reports the loadings (factor loading coefficients, γi) of both the second-order and the third-order reflective constructs, exogenous and endogenous (see also the Appendix for a detailed description of the constructs and their relations).

Table 6.

Factor loadings for higher-order constructs

Second-order constructs (exogenous): loadings (γi) of the maturity levels on the five organizational domains

| First-order construct | S&P | O&P | M&C | IT | P&C |
|---|---|---|---|---|---|
| Maturity level 3 | 0.48 | 0.55 | 0.80 | 0.78 | 0.79 |
| Maturity level 4 | 0.80 | 0.83 | 0.82 | 0.85 | 0.80 |
| Maturity level 5 | 0.84 | 0.71 | 0.67 | 0.83 | 0.61 |

Second-order constructs (endogenous): loadings (γi) of the first-order performance constructs

| First-order construct | γi |
|---|---|
| Patient service | 0.62 |
| End-user service | 0.82 |
| Organizational efficiency | 0.50 |
| Diagnostic efficacy | 0.73 |
| Communication efficacy | 0.74 |

Third-order constructs: loadings (γi) of the second-order constructs

| Third-order construct | S&P | O&P | M&C | IT | P&C | OC | CP |
|---|---|---|---|---|---|---|---|
| PACS alignment (exogenous) | 0.64 | 0.70 | 0.70 | 0.85 | 0.70 | | |
| PACS performance (endogenous) | | | | | | 0.84 | 0.85 |

S&P, strategy and policy; O&P, organization and processes; M&C, monitoring and control; IT, information technology; P&C, people and culture; OC, organizational construct; CP, clinical performance construct.

All higher-order ‘factor’ loadings of the independent part of the model provided a satisfactory fit to the data, meeting stipulated thresholds and, thereby, supported the third-order hierarchical model of PACS alignment and its measurement model.

Thus, in the exogenous part of the model, the loadings of the first-order latent variables (maturity levels 3, 4 and 5) on the second-order factors (the five organizational domains S&P, O&P, M&C, IT and P&C) exceed the threshold values.

Likewise, the loadings of the endogenous higher-order constructs (PACS performance) were significant, indicating a strong fit and supporting the reflective PACS performance construct and its indicators.

Assessment of Discriminant Validity

As Table 3 shows, the square root of each construct’s AVE (the bold entries on the diagonal of Table 3) exceeds the correlations of that construct with all other constructs in the proposed model. Together with the AVE and CR values reported above, this provides evidence of adequate convergent and discriminant validity for all constructs in the proposed conceptual framework. Further evidence of discriminant validity was obtained using cross loadings as a quality criterion [26]: each indicator loaded more highly on its own latent variable than on any other latent variable in the model.
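Both discriminant-validity checks are simple comparisons, sketched below with hypothetical AVEs, correlations and loadings (not the values of Table 3). The Fornell–Larcker check compares √AVE of each construct with its absolute correlations with all other constructs; the cross-loading check verifies that each indicator loads highest on its own construct. Keying correlations by `frozenset` pairs is an implementation choice of this sketch.

```python
import math


def fornell_larcker(ave, corr):
    """Return {construct: bool}: True when sqrt(AVE) of the construct exceeds its
    absolute correlation with every other construct (Fornell-Larcker criterion)."""
    return {
        c: all(math.sqrt(a) > abs(corr[frozenset((c, o))]) for o in ave if o != c)
        for c, a in ave.items()
    }


def cross_loadings_ok(loadings, own):
    """True when every indicator loads highest (in absolute value) on its own construct.
    loadings: {indicator: {construct: loading}}; own: {indicator: construct}."""
    return all(
        max(row, key=lambda k: abs(row[k])) == own[ind] for ind, row in loadings.items()
    )


# Hypothetical AVEs and inter-construct correlations.
ave = {"A": 0.55, "B": 0.60, "C": 0.50}
corr = {
    frozenset(("A", "B")): 0.62,
    frozenset(("A", "C")): 0.40,
    frozenset(("B", "C")): 0.45,
}
print(fornell_larcker(ave, corr))  # {'A': True, 'B': True, 'C': True}

loadings = {"MV1": {"A": 0.81, "B": 0.35}, "MV2": {"A": 0.28, "B": 0.77}}
print(cross_loadings_ok(loadings, {"MV1": "A", "MV2": "B"}))  # True
```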

In summary, the outcomes of the measurement model indicate that the PLS model is valid and reliable, so the estimates of the structural model (the inner path model) can be evaluated.

Assessment of the Structural Model (the ‘Inner’ Model)

After validating the outer model, we found support for our main hypothesis: PACS alignment had a significant positive impact on PACS performance (β = 0.62; t = 4.01; p < 0.0001).

As outlined, to account for moderating effects we divided our research sample into two groups based on the number of hospital beds: group 1 (≤450 beds) comprised 30 hospitals and group 2 (>450 beds) 34 hospitals.

The model’s path coefficients were then estimated separately for each group, using the standard errors obtained from bootstrapping, and no significant difference between the structural models was detected (t = 0.36, p < 0.72). The model and its path coefficients were also estimated for three disjoint groups based on respondent category (radiologist, PACS administrator and head technologist/manager). The radiologist group contains 29 cases (the 29 questionnaires completed by radiologists), the PACS administrator group 27 cases and the head technologist/manager group 26 cases.

No significant difference between the structural models was found for any of the pairwise group comparisons (radiologist vs administrator: t = 0.01, p < 0.99; head technologist vs administrator: t = 0.55, p < 0.60; head technologist vs radiologist: t = 0.46, p < 0.61). These outcomes imply that the impact of PACS alignment on PACS performance (the hypothesized relationship) is stable across subsamples, i.e. the different respondent groups.
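The exact test statistic for the group comparisons is not given here; a commonly used parametric approach (often attributed to Chin) pools the bootstrap standard errors of the two groups, and the sketch below assumes that formula. Because the Python standard library has no t distribution, the p-value uses a normal approximation, which is close to the t distribution at df = 62. The path estimates and standard errors in the example are hypothetical.

```python
import math
from statistics import NormalDist


def multigroup_t(beta1, se1, m, beta2, se2, n):
    """Parametric comparison of one path coefficient between two groups of
    sizes m and n, using bootstrap standard errors (pooled-SE formula).
    Returns (t, df, two-sided p from a normal approximation)."""
    df = m + n - 2
    pooled = math.sqrt(((m - 1) ** 2 / df) * se1 ** 2 + ((n - 1) ** 2 / df) * se2 ** 2)
    t = (beta1 - beta2) / (pooled * math.sqrt(1 / m + 1 / n))
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p


# Hypothetical estimates for the bed-size split (30 vs 34 hospitals).
t, df, p = multigroup_t(0.60, 0.10, 30, 0.50, 0.10, 34)
print(f"t = {t:.2f}, df = {df}, p = {p:.2f}")
```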

Goodness-of-Fit Measurement

Although PLS path modeling does not provide a proper single goodness-of-fit measure, the coefficient of determination (R²) of the endogenous constructs, i.e. the variance explained by the model, can be used to assess model fit. The R² of PACS performance was 0.37. Based on the effect size categories for R² (0.02, 0.13 and 0.26 for small, medium and large effects [24]), we concluded that the explanatory power of the model was large.

We also calculated the Q² of our endogenous constructs. The Stone–Geisser Q² measures, through cross-validation, how well the observed values are reproduced by the model and its parameter estimates [20]. Q² values larger than 0 indicate that the model has predictive relevance; values below 0 indicate a lack of predictive relevance. In this study, all Q² values were above zero, indicating the predictive relevance of the overall model.
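Q² is one minus the ratio of prediction errors to the total variation of the observations. In blindfolding, data points are omitted in rounds and predicted from a model estimated without them; this simplified sketch assumes those out-of-sample predictions are already available and only computes Q² = 1 − SSE/SSO from them.

```python
import statistics


def q_squared(observed, predicted):
    """Stone-Geisser Q^2 = 1 - SSE/SSO, where SSE uses predictions made for
    data points omitted during estimation and SSO is the sum of squared
    deviations of the observations from their mean."""
    mean = statistics.fmean(observed)
    sse = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    sso = sum((o - mean) ** 2 for o in observed)
    return 1 - sse / sso


print(q_squared([1, 2, 3], [1, 2, 3]))  # 1.0: perfect reconstruction
print(q_squared([1, 2, 3], [2, 2, 2]))  # 0.0: no better than the mean
```

A negative Q² therefore means that the model predicts the omitted values worse than simply using the mean.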

A global measure of fit, the goodness-of-fit index (GoF), has also recently been suggested [20]. The GoF is defined as the geometric mean of the average communality of all constructs with multiple indicators and the average R² of the endogenous constructs, and it offers an operational way to validate the PLS model globally. Since communality equals AVE in PLS [26], the cut-off for the average communality is set to 0.5. Combining this cut-off with the small, medium and large effect sizes for R² (0.02, 0.13 and 0.26) yields GoF criteria for small, medium and large effects of 0.10, 0.25 and 0.36. Our model obtained a GoF of 0.45, exceeding the cut-off for large effects (GoF = 0.36) [24], which indicates that the model performs well compared with these baseline values and fits the data well.
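The GoF benchmarks can be reproduced in a few lines. The R² benchmarks correspond to Cohen's f² benchmarks (0.02, 0.15, 0.35) via R² = f²/(1 + f²); the GoF criteria then follow from the 0.5 communality cut-off and the rounded R² values used in the text. This is a sketch of the arithmetic only; the study's GoF of 0.45 cannot be recomputed here because the average communality and average R² are not reported in this section.

```python
import math


def gof(avg_communality, avg_r2):
    """Goodness-of-fit index: geometric mean of average communality and average R^2."""
    return math.sqrt(avg_communality * avg_r2)


def r2_from_f2(f2):
    """Convert a Cohen's f^2 effect size into the equivalent R^2."""
    return f2 / (1 + f2)


print([round(r2_from_f2(f2), 2) for f2 in (0.02, 0.15, 0.35)])  # [0.02, 0.13, 0.26]

R2_BENCHMARKS = {"small": 0.02, "medium": 0.13, "large": 0.26}
for label, r2 in R2_BENCHMARKS.items():
    print(label, round(gof(0.5, r2), 2))  # small 0.1, medium 0.25, large 0.36
```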

Estimation of Power

Power calculations indicated that the statistical power for all parameters in our model exceeded 0.96. This level of power means that, if the hypothesized effects truly exist, the tests have a high probability of yielding statistically significant results, i.e. of correctly rejecting H0 [24].
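The power procedure itself is not described here. As a rough, swapped-in illustration (not the authors' calculation), the sketch below approximates the two-sided power for testing a single standardized path, treated as a correlation, with Fisher's z transformation and a normal approximation, using the reported β = 0.62 and N = 64.

```python
import math
from statistics import NormalDist


def power_correlation(r, n, alpha=0.05):
    """Approximate two-sided power for H0: rho = 0 via Fisher's z transform."""
    z = math.atanh(r) * math.sqrt(n - 3)
    crit = NormalDist().inv_cdf(1 - alpha / 2)
    cdf = NormalDist().cdf
    return cdf(z - crit) + cdf(-z - crit)


# Reported path coefficient (beta = 0.62) and sample size (N = 64).
print(round(power_correlation(0.62, 64), 3))
```

With r = 0 the function returns α itself, which is a quick sanity check of the approximation.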

Discussion and Conclusion

Principal Findings and Conclusions

This study presented and validated an integrative model of PACS alignment and performance in hospitals, drawing on theories and perspectives from information systems research and complexity theory. Our aim was to overcome a limitation of most approaches in the field, which do not address the synergistic, complementary and integrative effects of PACS in relation to performance.

Using reliable and valid data collected in Dutch hospitals, we showed empirically that PACS alignment has a significant impact on the performance of PACS in terms of efficiency and effectiveness. This implies that hospital-specific resources, capabilities and the use of PACS are strongly interrelated, and that integrative management is essential to optimize the added value of PACS.

From a practical point of view, operational and technical improvement opportunities can be identified based on our model and its alignment perspective on the PACS domain. It should be recognized, however, that improvements often imply changes to existing processes and organizational structures and affect the interests of stakeholders. As with any (IT) change project, this cannot be prepared or executed in a vacuum; internal stakeholders and potential (and current) vendors should therefore collaborate when replacing (or upgrading) the original imaging networks.

To the best of our knowledge, this study is the first to empirically apply the concepts of alignment, maturity and complexity theory to the research domain of PACS and medical informatics. We believe that its outcomes will support hospital decision-makers.

Strategic Guidelines for PACS Deployment

Based on these findings, we believe that hospitals should follow a dual strategic PACS maturity planning perspective that drives both a continuous process of change and adaptation and the co-evolution and alignment of PACS. Adaptability and changeability should be integral properties of PACS planning, alongside traditional, deliberate PACS strategic planning.

To this end, we suggest that hospitals that want to take their PACS to the next maturity level and improve PACS performance should explicitly identify and execute improvement activities in each of the five organizational dimensions of the PMM. To guide decision-makers in maturing PACS in a chosen direction (for any hospital, large or small, public or private, that wants to integrate its strategic objectives for growth and maturity in terms of PACS, including ePR and other IS/IT), we suggest the following three steps:

First, starting from the PMM (which includes a checklist for evolving to the next maturity level), assess the current maturity state of PACS (‘as is’) and determine the desired (‘to be’) situation, involving multiple stakeholders to obtain well-balanced and objective perspectives.

Second, perform a fit–gap analysis to assess whether the current PACS maturity level is a precursor of the ‘to be’ situation or whether the desired maturity level ‘leaps’ over intermediary stages. Decision-makers then need to decide which route and enhancement plan are most suitable for the hospital; importantly, the plan needs to be aligned with the context of the hospital’s strategies.

As a final step, organize improvement projects that take into account the risks involved, investment costs, critical success factors and benefits. The alignment perspective outlined in this paper should be applied to manage similarities, overlap and synergy between the improvement projects in order to realize strategic objectives and optimal deployment of PACS.

In practice, hospitals often follow evolutionary improvement routes (which develop logically through subsequent stages), revolutionary routes (which take a more radical approach, making strategic ‘leaps’ towards higher levels of PACS maturity), or a combination of both. For each enhancement path, critical success factors are the involvement of multidisciplinary teams of physicians, technicians and engineers, and project commitment at all levels within the hospital.
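The fit–gap step can be illustrated with a small, hypothetical sketch. It assumes that the ‘as is’ and ‘to be’ states have already been scored as a PMM level (1 to 5) for each of the five organizational domains; the example scores are invented, and labeling a gap of more than one level as a revolutionary ‘leap’ is a simplification of the routes described above, not part of the PMM checklist itself.

```python
DOMAINS = [
    "Strategy and policy",
    "Organization and processes",
    "Monitoring and control",
    "Information technology",
    "People and culture",
]


def fit_gap(as_is, to_be):
    """Compare current and target PMM levels (1-5) per organizational domain."""
    report = {}
    for domain in DOMAINS:
        gap = to_be[domain] - as_is[domain]
        if gap <= 0:
            route = "fit: target level already reached"
        elif gap == 1:
            route = "evolutionary: next maturity level"
        else:
            route = f"revolutionary: leaps over {gap - 1} intermediate level(s)"
        report[domain] = (gap, route)
    return report


# Hypothetical assessment of one hospital.
as_is = dict(zip(DOMAINS, [3, 3, 2, 4, 3]))
to_be = dict(zip(DOMAINS, [4, 5, 2, 4, 4]))
for domain, (gap, route) in fit_gap(as_is, to_be).items():
    print(f"{domain}: gap {gap} -> {route}")
```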

These steps follow the logic of an ‘intended’ PACS strategy. Complementary to this deliberate (i.e. conscious) planning process, hospitals also need to plan PACS maturity, alignment and performance as goals that ‘co-evolve’ within hospitals. This complex task can only be achieved by mobilizing the diversity of interactions among all organizational agents involved in the deployment of PACS in clinical and IT practice.

Limitations and Avenues for Future Research

Despite its attractiveness, our study and integrative framework have several limitations, most of which relate to our research sample. First, although the sample is large enough to achieve acceptable levels of statistical significance for all quality criteria of the inner and outer models, it is limited to hospitals in the Netherlands, which limits generalizability.

Nevertheless, we believe that our framework offers hospitals worldwide a means to evaluate the triangular construct of PACS maturity, alignment and performance, and we expect that our model can be used to describe and reconstruct any hospital PACS case. We intend to extend the application of the proposed model, also longitudinally. The model can then be reassessed for its robustness, and its higher-order constructs can be validated with larger samples. Comparing results across countries and groups could further contribute to the generalizability of our findings.

Second, our obtained data included various demographic variables (e.g. type, size and region), but our empirical analysis did not consider in depth the possible differences among group segments.

Using finite mixture PLS (FIMIX-PLS) procedures, segmentation can be applied to the empirical data. This approach estimates the model parameters and segments the observations simultaneously [27]. Compared with an a priori segmentation scheme, it has the advantage that the derived segments are homogeneous in terms of the structural model relationships, based on all available information on both manifest and latent variable scores. Initial results indicate that by segmenting the data (using an extended ex post analysis), higher levels of explained variance can be achieved for various homogeneous subgroups. These findings provide a basis for further differentiated PLS path modeling conclusions based on segment-specific estimations.

Third, the primary focus of our study was on PACS within hospitals. In that respect, it would be a logical step to extend our concepts on alignment, maturity and complexity theory to medical IS/IT in general (e.g. including ePR, clinical decision support and computerized provider order entry).

A final remark is that our study may be affected by common method variance (CMV, a subset of method bias; see footnote 3), a common phenomenon in survey research that can cause problems, for example with construct validity. CMV can occur specifically when respondents rate survey items at the same point in time and when both exogenous and endogenous constructs are self-perceived by the same respondents. There is still little consensus about the extent of common method bias and variance or about the seriousness of these effects.
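The check described in footnote 3 resembles what is often called Harman's single-factor test: if one principal component accounts for most of the variance (a common rule of thumb is more than 50%), CMV is a concern. The sketch below computes the share of the first component from a correlation matrix by power iteration rather than a full PCA; it assumes the leading eigenvector is not orthogonal to the all-ones start vector (which holds for positive correlation matrices), and the correlations are hypothetical.

```python
import math


def first_component_share(corr, iterations=200):
    """Share of total variance captured by the first principal component of a
    correlation matrix, via power iteration (trace of a correlation matrix = p)."""
    p = len(corr)
    v = [1.0] * p
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(p)) for i in range(p))
    return eigenvalue / p


# Hypothetical correlations among three manifest variables.
corr = [
    [1.0, 0.3, 0.1],
    [0.3, 1.0, 0.2],
    [0.1, 0.2, 1.0],
]
share = first_component_share(corr)
print(f"first component explains {share:.0%} of the variance")
print("single dominant factor (CMV concern)" if share > 0.5 else "no single dominant factor")
```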

In summary, the current study validated a theorized PACS performance framework. We argue that the adopted complexity perspectives are crucial for explaining PACS performance, for systematically identifying improvement areas within hospital operations and for aligning these improvements with the situational context of hospital strategies. In practice, the validated PACS performance framework is a useful checklist to systematically identify improvement areas for hospitals in the PACS domain.

Acknowledgments

The authors wish to thank participants from all hospitals for their kind cooperation, which made this study possible. In particular, we thank Dr. P.M. Algra of the radiology department of the Alkmaar Medical Centre, The Netherlands, for his enthusiasm for our research and for his support and assistance in stimulating higher response rates.

Appendix: A Multistep Model Development Approach

In developing our conceptual model, we applied a multistep approach using path modeling to hierarchically construct latent variables as the independent part (i.e. PACS alignment) of the conceptual model and latent variables as the dependent part (i.e. PACS performance) of the conceptual model.

As in any type of modeling, we had to balance recognizing the details of practice against the need for overview and parsimony.

With regard to the independent part of the conceptual model, we define the following:

- The second-order constructs: the five organizational domains, each related to its underlying first-order exogenous constructs, i.e. the maturity levels;

- The third-order construct, labeled PACS alignment, as related to the underlying second-order constructs (i.e. step 1).

With regard to the dependent part of the conceptual model, we define the following:

- The second-order constructs (organizational construct and clinical performance construct), as related to the block of the underlying first-order latent constructs, i.e. patient service, end-user service, organizational efficiency, diagnostic efficacy and communication efficacy. For the sake of simplicity, these constructs were left out;

- The third-order construct, labeled PACS performance, as related to the underlying second-order constructs (i.e. step 3).

Footnotes

Statements for maturity levels 1 and 2 were omitted for practical reasons and because all Dutch hospitals have implemented the initial maturity level. Level 2 could be deduced from the scores assigned to level 3 statements.

Composite reliability is similar to Cronbach’s alpha but does not assume equal weighting of the indicators. Assuming standardized indicators (factor variance = 1), it is computed as $\rho_c = \frac{(\sum_i \lambda_i)^2}{(\sum_i \lambda_i)^2 + \sum_i (1 - \lambda_i^2)}$.

Principal component analysis (using SPSS v18) of all manifest variables in the model showed that multiple components/factors were present, making CMV unlikely.

Contributor Information

Rogier van de Wetering, Phone: +31-6-20789870, Email: rogiervandewetering@gmail.com, Email: rvandewetering@deloitte.nl.

Ronald Batenburg, Email: R.Batenburg@nivel.nl.

References

- 1. Huang HK. Some historical remarks on picture archiving and communication systems. Comput Med Imaging Graph. 2003;27:93–99. doi: 10.1016/S0895-6111(02)00082-4.

- 2. Andriole KP, Khorasani R. Implementing a replacement PACS: issues to consider. J Am Coll Radiol. 2007;4(6):416–418. doi: 10.1016/j.jacr.2007.03.009.

- 3. Van de Wetering R, et al. A balanced evaluation perspective: picture archiving and communication system impacts on hospital workflow. J Digit Imaging. 2006;19(Suppl. 1):10–17. doi: 10.1007/s10278-006-0628-2.

- 4. Venkatraman N. The concept of fit in strategy research: towards verbal and statistical correspondence. Acad Manage Rev. 1989;14(3):423–444.

- 5. Gibson CF, Nolan RL. Managing the four stages of EDP growth. Harv Bus Rev. 1974;52(1):76–88.

- 6. Van de Wetering R, Batenburg RS. A PACS maturity model: a systematic meta-analytic review on maturation and evolvability of PACS in the hospital enterprise. Int J Med Inform. 2009;78(2):127–140. doi: 10.1016/j.ijmedinf.2008.06.010.

- 7. Edgeworth F. Mathematical psychics: an essay on the application of mathematics to the moral sciences. London: C. Kegan Paul & Co.; 1881.

- 8. Milgrom P, Roberts J. Complementarities and fit: strategy, structure, and organizational change in manufacturing. J Account Econ. 1995;19(2–3):179–208. doi: 10.1016/0165-4101(94)00382-F.

- 9. Henderson JC, Venkatraman N. Strategic alignment: leveraging information technology for transforming organisations. IBM Syst J. 1993;32(1):4–16. doi: 10.1147/sj.382.0472.

- 10. Chan Y, Reich B. IT alignment: an annotated bibliography. J Inform Technol. 2007;22(4):316–396. doi: 10.1057/palgrave.jit.2000111.

- 11. Scheper WJ. Business IT Alignment: solution for the productivity paradox (in Dutch). The Netherlands: Deloitte & Touche; 2002.

- 12. Scott Morton MS. The corporation of the 1990s: information technology and organizational transformation. London: Oxford Press; 1991.

- 13. Benbya H, McKelvey B. Using coevolutionary and complexity theories to improve IS alignment: a multi-level approach. J Inform Technol. 2006;21(4):284–298. doi: 10.1057/palgrave.jit.2000080.

- 14. McKelvey B. Complexity theory in organization science: seizing the promise or becoming a fad? Emergence. 1999;1(1):5–32. doi: 10.1207/s15327000em0101_2.

- 15. Van de Wetering R, Batenburg R. Defining and formalizing: a synthesized review on the multifactorial nature of PACS performance. Int J Comput Assist Radiol Surg. 2010;5(Suppl. 1):170.

- 16. Bagozzi R. Expectancy-value attitude models: an analysis of critical theoretical issues. Int J Res Mark. 1985;2(1):43–60. doi: 10.1016/0167-8116(85)90021-7.

- 17. Hughes M, Price R, Marrs D. Linking theory construction and theory testing: models with multiple indicators of latent variables. Acad Manage Rev. 1986;11(1):128–144.

- 18. Jöreskog K. Structural analysis of covariance and correlation matrices. Psychometrika. 1978;43(4):443–477. doi: 10.1007/BF02293808.

- 19. Marcoulides GA, Saunders C. PLS: a silver bullet? MIS Quart. 2006;30(2):3–9.

- 20. Tenenhaus M, et al. PLS path modeling. Comput Stat Data An. 2005;48(1):159–205. doi: 10.1016/j.csda.2004.03.005.

- 21. Fornell C, Bookstein F. Two structural equation models: LISREL and PLS applied to consumer exit-voice theory. J Marketing Res. 1982;19(4):440–452. doi: 10.2307/3151718.

- 22. Henseler J, Fassott G. Testing moderating effects in PLS path models: an illustration of available procedures. In: Handbook of Partial Least Squares. New York: Springer-Verlag; 2010. pp. 713–735.

- 23. Faul F, et al. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 2007;39(2):175–191. doi: 10.3758/BF03193146.

- 24. Cohen J. Statistical power analysis for the behavioral sciences. New Jersey: Lawrence Erlbaum; 1988.

- 25. Jarvis C, MacKenzie S, Podsakoff P. A critical review of construct indicators and measurement model misspecification in marketing and consumer research. J Consum Res. 2003;30(2):199–218. doi: 10.1086/376806.

- 26. Wetzels M, Odekerken-Schröder G, Van Oppen C. Using PLS path modeling for assessing hierarchical construct models: guidelines and empirical illustration. MIS Quart. 2009;33(1):177–195.

- 27. Sarstedt M, Ringle C. Treating unobserved heterogeneity in PLS path modeling: a comparison of FIMIX-PLS with different data analysis strategies. J Appl Stat. 2010;37(8):1299–1318. doi: 10.1080/02664760903030213.