Abstract

The quantitative, multiparametric assessment of brain lesions requires coregistering different parameters derived from MRI sequences. This is followed by analysis of the voxel values of the ROI within the sequences and calculated parametric maps, and by derivation of multiparametric models to classify imaging data. There is a need for an intuitive, automated quantitative processing framework that is generalized and adaptable to different clinical and research questions. As such flexible frameworks have not been previously described, we constructed a quantitative post-processing framework from commonly available software components. Matlab was chosen as the programming/integration environment, and SPM was chosen as the coregistration component. Matlab routines were created to extract and concatenate the coregistration transforms, take the coregistered MRI sequences as inputs to the process, allow specification of the ROI, and store the voxel values in a database for statistical analysis. The functionality of the framework was validated using brain tumor MRI cases. The implementation of this quantitative post-processing framework enables intuitive creation of multiple parameters for each voxel, facilitating near real-time in-depth voxel-wise analysis. Our initial empirical evaluation of the framework has shown increased use of analyses requiring post-processing and an increased number of simultaneous research activities by clinicians and researchers with non-technical backgrounds. We show that common software components can be used to implement an intuitive real-time quantitative post-processing framework, resulting in improved scalability and increased adoption of the post-processing needed to answer important diagnostic questions.

Keywords: Brain imaging, Computer-Aided Diagnoses (CAD), User interface, Algorithms, Biomedical Image Analysis, Brain Morphology, Digital Image Processing, Digital Imaging and Communications in Medicine (DICOM), Image analysis, MR imaging, Segmentation, Software design, Systems integration

Background

A more standardized system for the quantitative assessment of brain lesions is required. This empirical observation is based on seeing neuroradiologists and technologists at the authors’ institution apply custom techniques whenever such quantitative assessment is needed. The result is a non-standardized process, decreased adoption in the clinical arena, and fewer research projects requiring such quantitative assessments. Therefore, there is a need for an intuitive, quantitative processing framework that can be built from available components and can efficiently complete the post-processing and coregistration steps. Many neuroimaging laboratories around the world provide open-source solutions and components for many post-processing and coregistration tasks, and the effort presented here provides a comprehensive framework built from such components. Such a framework should be generic and modular enough to adapt to different clinical and research questions without significant reworking. These approaches should scale to busy neuroradiology practices and ultimately become widely adopted among clinical neuroradiologists.

Often, the assessment of brain lesions requires post-processing steps that involve coregistering different parameters derived from differing MRI sequences. Examples include T1-weighted dynamic contrast-enhanced (DCE), T2*-weighted dynamic susceptibility contrast (DSC), diffusion-weighted (DWI), and diffusion tensor (DTI) MRI, among others. Once coregistration is done, several analyses can be efficiently implemented: analysis of the voxel values of the ROI within the sequences and calculated parametric maps, and multiparametric models derived to classify imaging data.

Image coregistration is the process of geometrically aligning two or more images so that corresponding pixels representing the same objects may be integrated or fused. This process is an absolute prerequisite for any subsequent quantitative image analysis so that different matrices containing voxel values from different sequences and post-processing steps can be indexed and accessed identically, being able to rely on the fact that the same index in voxel matrix A and voxel matrix B represents the same voxel of the object in question.

Inter-subject coregistration is most widely used to standardize images and measurements to brain atlases [1–8]. Similarly, intra-subject coregistration can be useful to correct for motion, differences in sequence measurements, and sequence localization disagreements when selecting regions of interest [9]. The utility of intra-subject sequence coregistration has led to extensive descriptions in the literature for PET [10–21], fMRI [17, 22], and SPECT [23–26]. Clinically, coregistration has been shown to improve the performance of diagnostic imaging, treatment follow-up imaging, and stereotactic biopsies [27–32]. However, few pipelines have been described that provide the flexibility necessary to coregister a variety of advanced MR sequences, specifically DSC, DCE, and diffusion-weighted imaging.

In general, the optimal coregistration method is largely influenced by the clinical scenario. Since the skull of an individual person is a rigid body and the brain moves relatively little within the skull, rigid-body registration is appropriate for single time-point intra-subject alignment. More sophisticated models, such as non-linear registration, are needed for inter-subject registration due to population variation [33]. In addition, Ellingson et al. recently demonstrated that non-linear registration is superior when measuring ADC within the same patient over time, presumably because of the geometric distortion that occurs longitudinally with glioma patients [28].
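A rigid-body transform is fully described by six parameters: three translations and three rotations. The Python sketch below builds the corresponding 4×4 homogeneous matrix to show why rigid-body alignment is so well constrained compared with non-linear warping. Two things here are our own assumptions: the function name `rigid_body_matrix` is hypothetical, and the rotation order and sign convention (T · Rx · Ry · Rz) are chosen only for illustration. SPM and other toolkits define their own conventions, and the framework described here is Matlab-based.

```python
import numpy as np


def rigid_body_matrix(tx, ty, tz, pitch, roll, yaw):
    """Build a 4x4 homogeneous rigid-body transform (hypothetical helper).

    Translations are in mm; rotations are in radians about the x (pitch),
    y (roll) and z (yaw) axes. The composition order T @ Rx @ Ry @ Rz is an
    assumed convention for illustration only.
    """
    cx, sx = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(roll), np.sin(roll)
    cz, sz = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, 3] = [tx, ty, tz]
    Rx = np.array([[1, 0, 0, 0], [0, cx, sx, 0], [0, -sx, cx, 0], [0, 0, 0, 1]])
    Ry = np.array([[cy, 0, sy, 0], [0, 1, 0, 0], [-sy, 0, cy, 0], [0, 0, 0, 1]])
    Rz = np.array([[cz, sz, 0, 0], [-sz, cz, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]])
    # Rotations are orthonormal with determinant 1, so distances are preserved.
    return T @ Rx @ Ry @ Rz
```

Because the upper-left 3×3 block is a pure rotation, the transform cannot stretch or shear the brain. This is exactly the assumption that suits single time-point intra-subject alignment, and exactly what non-linear registration relaxes.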

Mass-univariate approaches, such as that employed by the statistical parametric mapping (SPM) toolkit (Leopold Muller Functional Imaging Laboratory, London, UK, http://www.fil.ion.ucl.ac.uk/spm/), have been the most common method of combining intra-subject series data, specifically data that incorporate metabolic or hemodynamic time series. More recently, multivariate approaches have been developed, which consider each image as a single observation rather than as a collection of independent single-voxel observations [34]. When comparing the two, it has been suggested that mass-univariate methods better correct for non-sphericity with regionally specific hypotheses and are more sensitive to focal effects, as are common in neuroimaging [17, 35]. A full discussion of the merits of mass-univariate and multivariate approaches is beyond the scope of this paper.

By implementing the quantitative post-processing framework with integrated coregistration proposed in this paper, the post-processing steps are automated and integrated as much as possible via Matlab (MathWorks, Natick, MA, USA) routines. These routines include freely available ones as well as ones developed specifically for this framework, facilitating quick and efficient image analysis. Many toolkits for image coregistration are publicly available. We use the newest version of statistical parametric mapping (Wellcome Trust Centre for Neuroimaging at UCL, London, UK), SPM8, as it integrates well with Matlab and is widely used in the literature [36]. This toolkit is available for download from http://www.fil.ion.ucl.ac.uk/spm/. Because it uses the NIfTI format for both input and output, SPM-based coregistration is easy to integrate as a step in the overall quantitative post-processing pipeline.

Alternative coregistration toolkits that are available for download include FSL (FMRIB, University of Oxford, Oxford, UK), available from http://fsl.fmrib.ox.ac.uk/fsl/fslwiki/.

Methods

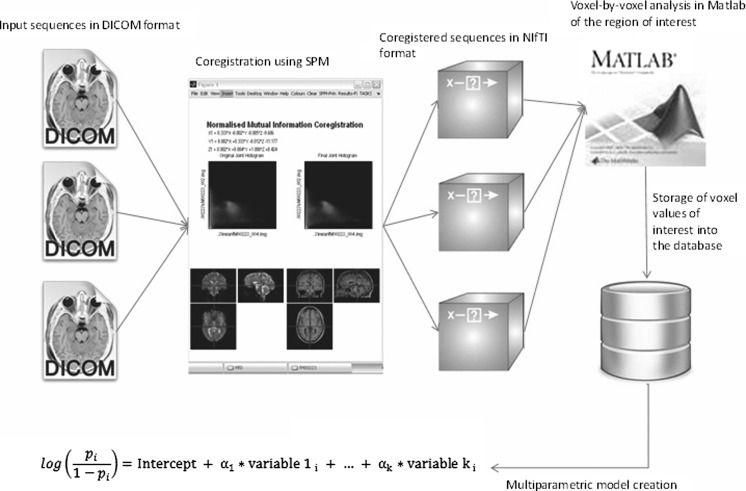

In order to perform a multiparametric assessment of brain lesions, post-processing steps are required, which include coregistering the different parametric maps and other sequences. This paper describes a framework for a pipeline that performs these post-processing steps in an appropriate order. The pipeline uses Matlab as the integration platform to combine several freely available and commonly used software components. The authors have noticed in their clinical and research practice a need for an intuitive, automated quantitative post-processing framework to perform the required tasks efficiently and consistently. Such frameworks should be general enough to adapt to different clinical and research questions. Because they do not appear to be readily available, the authors proceeded to implement one, the description of which is presented here (Fig. 1). The following is an example of a DCE post-processing workflow using the proposed framework. The framework consists of five core modules: (1) post-processing, (2) file format conversion, (3) region of interest creation, (4) coregistration, and (5) output for statistical analysis. Each of these five core modules is described separately below. Two design principles are key to enabling the creation of this framework: (1) choosing components/modules that provide interfaces amenable to integration into a pipeline with an integration platform such as Matlab and (2) standardizing on a file format (NIfTI) that each component/module is able to read, process, and output.

Fig. 1.

A summary of the post-processing and coregistration steps implemented. SPM does the coregistration, the files are converted to NIfTI format, and Matlab performs a voxel-by-voxel analysis of the ROI
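The two design principles can be made concrete with a small orchestration sketch. Each of the five modules is treated as a function from input files to output files, and every module after conversion must emit NIfTI. This is written in Python for illustration only; the actual framework integrates its modules in Matlab. The names `run_pipeline`, `is_nifti`, `demo_stages`, and the file names are hypothetical stand-ins, not part of the authors' implementation.

```python
from pathlib import Path
from typing import Callable, List, Tuple

# (module name, function from input paths to output paths, must emit NIfTI?)
Stage = Tuple[str, Callable[[List[Path]], List[Path]], bool]


def is_nifti(path: Path) -> bool:
    """True for single-file NIfTI, compressed or uncompressed."""
    name = path.name.lower()
    return name.endswith(".nii") or name.endswith(".nii.gz")


def run_pipeline(stages: List[Stage], inputs: List[Path]) -> List[Path]:
    """Run the modules in order, enforcing the NIfTI contract between them."""
    files = list(inputs)
    for name, func, must_emit_nifti in stages:
        files = func(files)
        if must_emit_nifti:
            bad = [str(f) for f in files if not is_nifti(f)]
            if bad:
                raise ValueError(f"module '{name}' produced non-NIfTI output: {bad}")
    return files


# Hypothetical stand-ins for the five modules, for illustration only.
demo_stages: List[Stage] = [
    ("post-processing", lambda fs: [Path("Ktrans.dcm"), Path("Ve.dcm")], False),
    ("format conversion", lambda fs: [f.with_suffix(".nii") for f in fs], True),
    ("ROI creation", lambda fs: fs + [Path("roi.nii")], True),
    ("coregistration", lambda fs: [f.with_name("r" + f.name) for f in fs], True),
    ("statistical output", lambda fs: [Path("roi_values.csv")], False),
]
```

Checking the format at each module boundary is one way to make the "standardize on NIfTI" principle enforceable rather than merely conventional. A component that silently emits DICOM fails immediately instead of corrupting a later step.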

Post-Processing

The first step in the DCE post-processing is the use of a post-processing toolkit, such as CADvue (iCAD, Nashua, NH, USA, http://www.icadmed.com/), to obtain specific parametric maps that characterize contrast enhancement. In addition to CADvue, alternative post-processing toolkits include TOPPCAT, which is available for download from the Daniel P. Barboriak Laboratory (Duke University School of Medicine, Durham, NC, https://dblab.duhs.duke.edu/modules/dblabs_topcat/index.php?id=1).

In our application, the following parametric maps are created within the post-processing environment: Ktrans, Ve, Vp, QiAUC, and Kep. In addition, preprocessed coronal SPGR, DTI, and T1 maps may be exported for further analysis.
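Several of these maps are related. In the standard Tofts model, kep = Ktrans / ve, so a voxel-wise ratio of the exported Ktrans and Ve maps should reproduce Kep. This can serve as a quick consistency check after export. The sketch below is a Python illustration, not CADvue's computation. The function name `kep_map` and the `ve_min` cut-off (used to avoid dividing by near-zero ve) are hypothetical choices.

```python
import numpy as np


def kep_map(ktrans: np.ndarray, ve: np.ndarray, ve_min: float = 1e-3) -> np.ndarray:
    """Voxel-wise kep = Ktrans / ve (standard Tofts relation).

    Voxels with ve below ve_min (an arbitrary, illustrative cut-off) are set
    to NaN instead of producing inf or numerically unstable values.
    """
    ktrans = np.asarray(ktrans, dtype=float)
    ve = np.asarray(ve, dtype=float)
    if ktrans.shape != ve.shape:
        raise ValueError("Ktrans and Ve maps must be on the same voxel grid")
    kep = np.full(ktrans.shape, np.nan)
    ok = ve >= ve_min
    kep[ok] = ktrans[ok] / ve[ok]  # same time units as Ktrans, e.g. 1/min
    return kep
```

With Ktrans in min⁻¹ and ve dimensionless, the result is also in min⁻¹.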

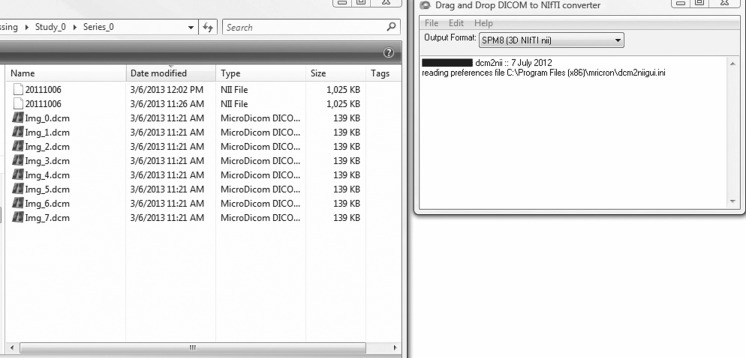

File Format Conversion

Once the parametric maps have been created in a post-processing toolkit such as CADvue or TOPPCAT, they are converted from the DICOM format to the NIfTI format using a freely available toolkit such as the dcm2nii GUI (Fig. 2). dcm2nii is available for download from the McCausland Center for Brain Imaging (Columbia, SC, http://www.mccauslandcenter.sc.edu/mricro/mricron/dcm2nii.html). The NIfTI format is the result of the NIH Neuroimaging Informatics Technology Initiative, which was created specifically to facilitate interoperability and cooperation among informatics tools related to neuroimaging. Using this format enables the use of several additional publicly available neuroimaging analysis components in the construction of the overall post-processing framework, as detailed here. For example, a NIfTI-compatible viewer such as the freely available ImageJ may be used to review the parametric maps and coregistration results. Beyond these input requirements, NIfTI is also generally recommended because it is widely accepted and its header information is read correctly and uniformly across tools [1].

Fig. 2.

Converting the necessary sequences from DICOM to NIfTI using the dcm2nii GUI
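Much of NIfTI's interoperability comes from its fixed-layout header. The image dimensions, voxel sizes, data type, and voxel-to-world (sform) matrix sit at fixed byte offsets in a 348-byte NIfTI-1 header. The Python sketch below decodes a few of those fields with `struct`, using the offsets defined in the NIfTI-1 specification. It is illustrative only. It handles NIfTI-1 (not NIfTI-2) and ignores header extensions. It assumes `pixdim` is in millimetres, although the real header records units in `xyzt_units`. The helper `make_demo_header` is a hypothetical test fixture. For a real uncompressed `.nii` file, one would pass the first 348 bytes of the file.

```python
import struct

NIFTI1_HDR_SIZE = 348  # sizeof_hdr for NIfTI-1


def read_nifti1_header(buf: bytes) -> dict:
    """Decode selected NIfTI-1 header fields (offsets per the NIfTI-1 spec)."""
    if len(buf) < NIFTI1_HDR_SIZE:
        raise ValueError("buffer shorter than a NIfTI-1 header")
    # sizeof_hdr must be 348; reading it both ways reveals the byte order.
    if struct.unpack_from("<i", buf, 0)[0] == NIFTI1_HDR_SIZE:
        e = "<"
    elif struct.unpack_from(">i", buf, 0)[0] == NIFTI1_HDR_SIZE:
        e = ">"
    else:
        raise ValueError("not a NIfTI-1 header")
    dim = struct.unpack_from(e + "8h", buf, 40)  # dim[0] = number of dimensions
    pixdim = struct.unpack_from(e + "8f", buf, 76)
    ndim = dim[0]
    return {
        "shape": dim[1:1 + ndim],
        "datatype": struct.unpack_from(e + "h", buf, 70)[0],
        "voxel_size_mm": pixdim[1:1 + min(ndim, 3)],  # assumes mm units
        "vox_offset": struct.unpack_from(e + "f", buf, 108)[0],
        "sform_code": struct.unpack_from(e + "h", buf, 254)[0],
        "srow": [struct.unpack_from(e + "4f", buf, off) for off in (280, 296, 312)],
        "magic": buf[344:348],
    }


def make_demo_header(shape, voxel_size_mm) -> bytes:
    """Build a minimal little-endian single-file header (FLOAT32 data)."""
    buf = bytearray(NIFTI1_HDR_SIZE)
    struct.pack_into("<i", buf, 0, NIFTI1_HDR_SIZE)
    dim = [len(shape), *shape] + [1] * (7 - len(shape))
    struct.pack_into("<8h", buf, 40, *dim)
    struct.pack_into("<hh", buf, 70, 16, 32)  # datatype 16 (FLOAT32), bitpix 32
    pixdim = [1.0, *voxel_size_mm] + [1.0] * (7 - len(voxel_size_mm))
    struct.pack_into("<8f", buf, 76, *pixdim)
    struct.pack_into("<f", buf, 108, 352.0)  # image data start in a .nii file
    struct.pack_into("<h", buf, 254, 1)  # sform_code 1: scanner anatomical
    for row, off in enumerate((280, 296, 312)):
        srow = [0.0, 0.0, 0.0, 0.0]
        srow[row] = voxel_size_mm[row]  # simple diagonal voxel-to-mm scaling
        struct.pack_into("<4f", buf, off, *srow)
    buf[344:348] = b"n+1\x00"  # magic string for single-file NIfTI-1
    return bytes(buf)
```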

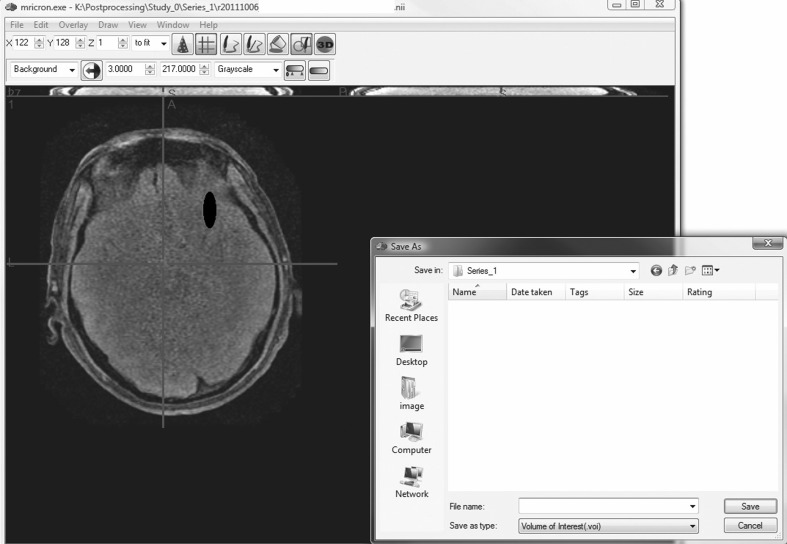

Region of Interest (ROI) Creation

Next, marking the ROI, such as the tumor volume on an MRI brain study, is the step in our pipeline that requires human interaction. For some applications, it may be possible to partially automate the segmentation of the lesions, depending on their contrast with the surrounding tissue, but a human quality assurance step is still required to validate the results.

Several partially automated drawing tools are available for the radiologist or the technologist to mark the region of interest in the coregistered source image. For this work, the publicly available MRIcron viewer was used, available for download from the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC, http://www.nitrc.org/projects/mricron).

MRIcron is a cross-platform NIfTI image viewer that supports single-slice ROIs as well as volumes of interest. For the most accurate ROIs, the original, non-coregistered sequence, such as the original axial T2 FLAIR, should be used. After the ROI has been created and saved as a NIfTI file, the ROI and the sequence used to draw it are coregistered together to the high-resolution reference sequence, such as the coronal SPGR, as discussed below (Fig. 3). This allows ROIs to be delineated on the images that best show differences between tissues. It also allows users to define ROIs once per subject rather than redrawing the ROI on multiple images, as is common in clinical practice.

Fig. 3.

Specification of the region of interest (ROI) using a drawing tool such as MRIcron. The ROI is created and saved as a NIfTI file
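When the ROI is coregistered and resliced together with the sequence used to draw it, an interpolating method such as trilinear gives voxels on the ROI boundary fractional values between 0 and 1. Before the ROI is used as an index, it is typically converted back to a binary mask. The Python sketch below thresholds at 0.5 and reports the ROI volume. The 0.5 threshold is a common convention that we assume here; reslicing the ROI with nearest-neighbour interpolation is an alternative. The helper names are hypothetical.

```python
import numpy as np


def binarize_resliced_roi(roi: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Turn an interpolated (fractional) ROI back into a boolean mask."""
    return np.asarray(roi) >= threshold


def roi_volume_ml(mask: np.ndarray, voxel_size_mm) -> float:
    """Volume of a boolean mask in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = float(np.prod(voxel_size_mm))
    return int(np.count_nonzero(mask)) * voxel_mm3 / 1000.0
```

The voxel size passed to `roi_volume_ml` must be that of the grid the ROI was resliced onto (for example, the reference SPGR), not that of the sequence it was drawn on.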

The resulting NIfTI files can be read back into Matlab using a publicly available Matlab NIfTI toolkit, Tools for NIfTI and ANALYZE image, available for download from http://www.mathworks.com/matlabcentral/fileexchange/8797-tools-for-nifti-and-analyze-image, which contains various NIfTI utility functions such as load_nii() to load N-dimensional NIfTI files.

Coregistration

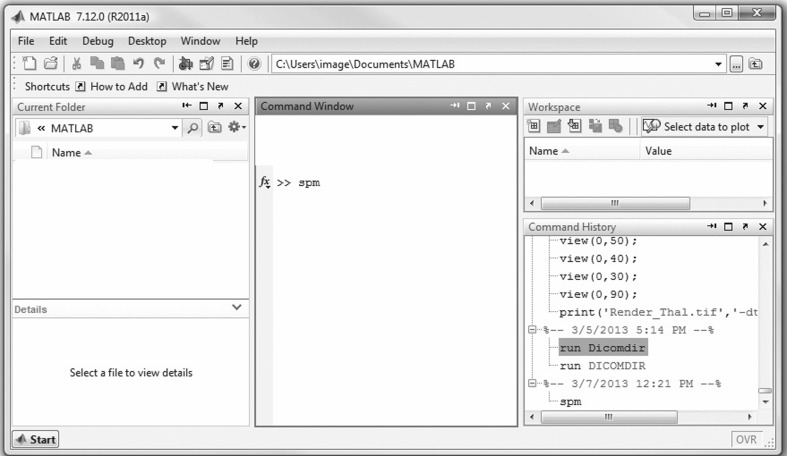

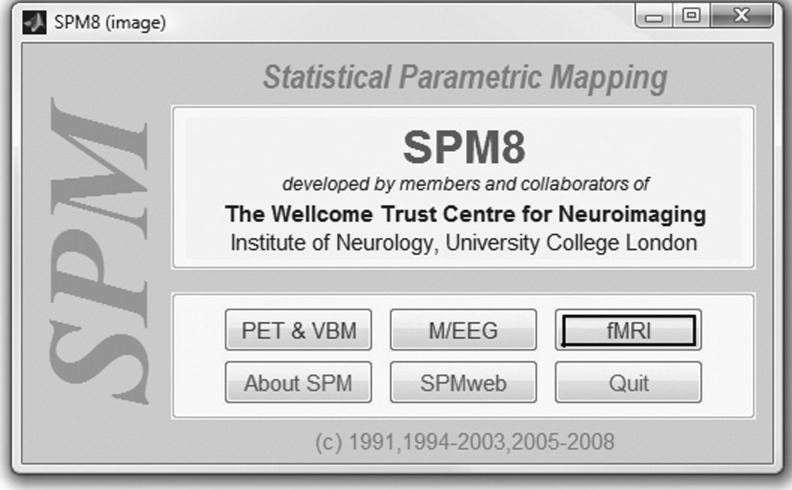

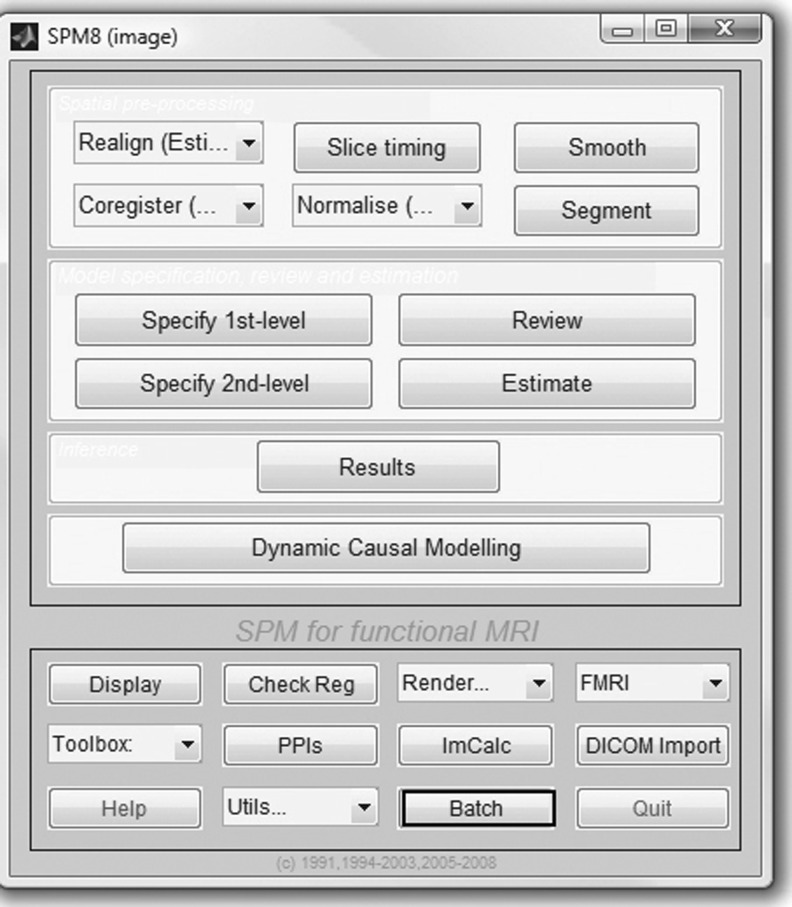

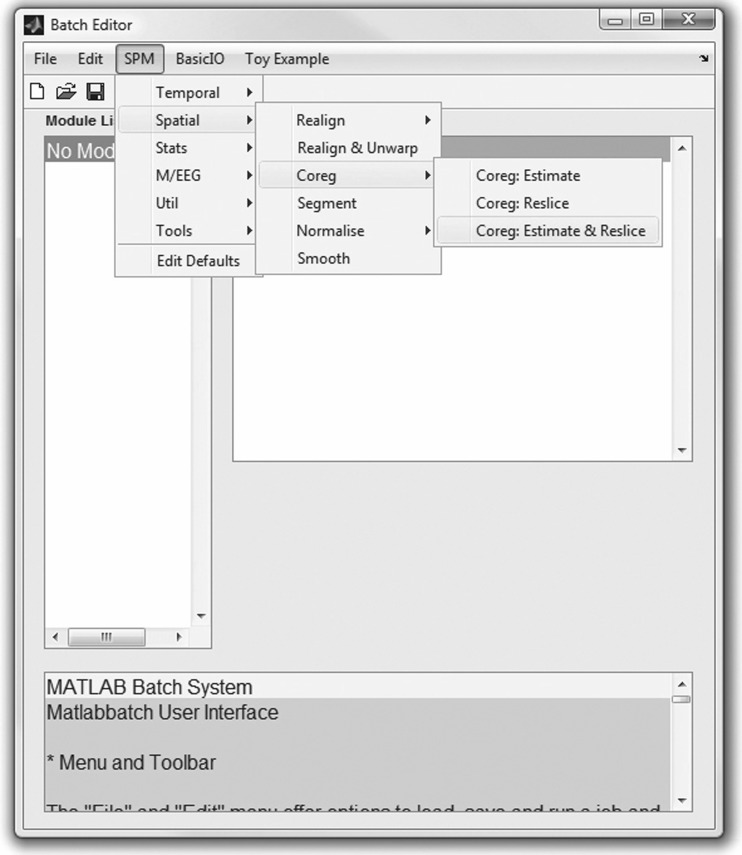

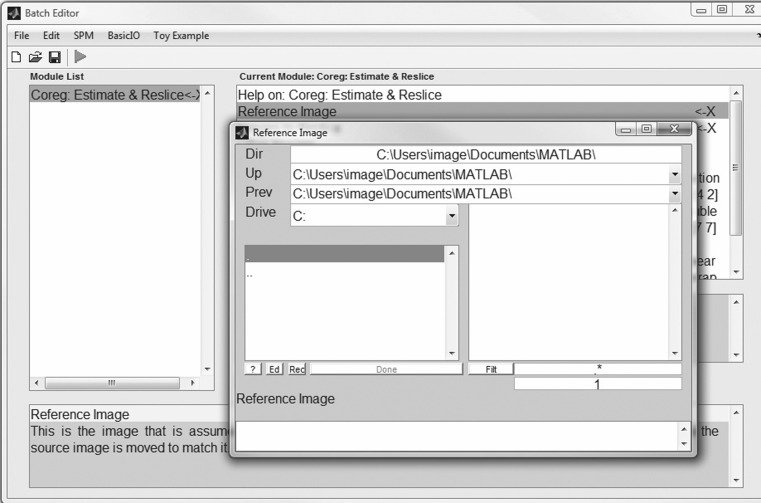

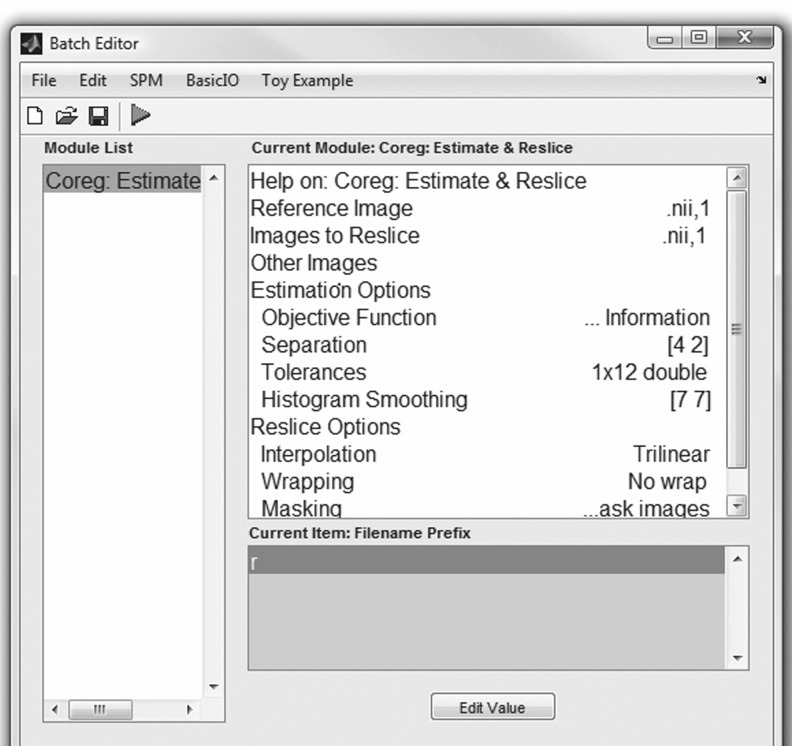

The next step in our workflow uses SPM within Matlab to perform the coregistration (Fig. 4). For this MRI-based assessment of brain tumors, the fMRI processing option is used (Fig. 5) in batch mode (Fig. 6). Within the SPM Batch GUI, the Coregister: Estimate and Reslice module (Fig. 7) is used. The module requires a Reference Image (the image that other images will be warped to match) and one or more Source Images (the images to be warped to match the Reference Image) (Fig. 8). In addition to aligning inter-series images, Estimate and Reslice also corrects for patient movement by aligning intra-series images. This is particularly important for modalities that have extended acquisition times and rely on temporal resolution, such as DCE MRI [35, 37]. The potential for subject movement is one reason why an interpolation of higher degree than nearest-neighbor, such as trilinear, is used.

Fig. 4.

Opening Matlab and typing “spm”

Fig. 5.

SPM toolkit provides coregistration for various modalities. For our particular MRI application illustrated here, the fMRI module is chosen

Fig. 6.

Using the batch mode of SPM to do the coregistration

Fig. 7.

The SPM Batch GUI for coregistration; selecting SPM, Spatial, Coregister, and Coregister: Estimate and Reslice

Fig. 8.

Specification of the reference image (file) and the source image (file) using SPM. The source image will be coregistered to the reference image. For best results, the reference image should be the highest resolution image available in the study
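Reslicing resamples each source voxel grid at positions that generally fall between the original voxel centers. Trilinear interpolation, mentioned above, takes a distance-weighted average of the eight surrounding voxels. SPM's reslicing options also include nearest-neighbour and higher-degree B-spline interpolation. The Python sketch below implements trilinear sampling at one fractional voxel coordinate to show what "first-degree" interpolation means. The function name and the edge-clamping behaviour are our own illustrative choices.

```python
import numpy as np


def trilinear(vol: np.ndarray, x: float, y: float, z: float) -> float:
    """Sample a 3-D volume at a fractional, 0-based voxel coordinate.

    Coordinates are clamped to the volume; a real reslicer would more likely
    return NaN or 0 outside the field of view.
    """
    nx, ny, nz = vol.shape
    x = min(max(x, 0.0), nx - 1.0)
    y = min(max(y, 0.0), ny - 1.0)
    z = min(max(z, 0.0), nz - 1.0)
    x0, y0, z0 = int(np.floor(x)), int(np.floor(y)), int(np.floor(z))
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0
    # Interpolate along x on the four edges of the surrounding cube...
    c00 = vol[x0, y0, z0] * (1 - fx) + vol[x1, y0, z0] * fx
    c10 = vol[x0, y1, z0] * (1 - fx) + vol[x1, y1, z0] * fx
    c01 = vol[x0, y0, z1] * (1 - fx) + vol[x1, y0, z1] * fx
    c11 = vol[x0, y1, z1] * (1 - fx) + vol[x1, y1, z1] * fx
    # ...then along y, then along z.
    c0 = c00 * (1 - fy) + c10 * fy
    c1 = c01 * (1 - fy) + c11 * fy
    return float(c0 * (1 - fz) + c1 * fz)
```

Trilinear interpolation reproduces linear intensity gradients exactly but slightly smooths sharp edges. This is one reason higher-degree B-splines are often preferred for final reslicing, and nearest-neighbour for label images.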

The objective (cost) function is the quantity that is either minimized or maximized during estimation. The most commonly used cost functions are cross-correlation, mutual information (MI), normalized mutual information (NMI), and correlation ratio (CR). NMI has consistently been shown to be either superior or non-inferior to the alternatives [26, 38]. However, more recently developed cost functions show promise. One such example is boundary-based registration (BBR), which is reported to be superior to NMI when the entire brain is not imaged or the images have large intensity biases [39]. Nevertheless, given the widespread adoption of NMI and its availability among the cost functions included in the SPM8 package, we recommend setting the objective function to NMI.
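NMI, as defined by Studholme et al. [38], is computed from the joint intensity histogram of the two images: NMI = (H(A) + H(B)) / H(A, B), where H is Shannon entropy. It ranges from 1, when the intensities are statistically independent, to 2, when one image's histogram bins map one-to-one onto the other's. The Python sketch below computes this quantity for two already-sampled intensity arrays. It is not SPM's implementation, which likely differs in sampling and histogram handling. The function name and the 32-bin default are illustrative assumptions.

```python
import numpy as np


def normalized_mutual_information(a, b, bins: int = 32) -> float:
    """NMI = (H(A) + H(B)) / H(A, B) from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1)  # marginal distribution of image A
    p_b = p_ab.sum(axis=0)  # marginal distribution of image B

    def entropy(p):
        p = p[p > 0]  # 0 * log(0) is taken as 0
        return float(-np.sum(p * np.log(p)))

    h_ab = entropy(p_ab)
    if h_ab == 0.0:
        raise ValueError("joint entropy is zero (constant images)")
    return (entropy(p_a) + entropy(p_b)) / h_ab
```

During coregistration, the optimizer repeatedly transforms the source image, resamples it onto the reference grid, and keeps the rigid-body parameters that maximize this value. Because NMI depends only on how intensities co-occur, not on their absolute values, it suits inter-sequence alignment such as DCE or FLAIR to SPGR.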

For best coregistration results, the image with the highest resolution covering the entire brain should be chosen as the reference image if possible. In our case of MR assessment of brain tumors, coronal SPGR offers the highest resolution (Fig. 9), and it was therefore chosen as the reference sequence.

Fig. 9.

Execution of coregistration using SPM

Some sequences are inherently aligned with one another; for example, the ADC map is aligned with the b0 EPI from the DTI acquisition. In this case, only the “parent” sequence is coregistered using SPM coregistration. SPM calculates the coregistration transformation matrix for the “parent” and automatically copies it into the header (the beginning of the NIfTI file) of every other sequence sharing that same original alignment. This reduces the number of distinct coregistrations that must be performed. It also ensures that sequences that were initially aligned remain aligned after coregistration, as they use an identical transformation matrix.
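Copying the transform works because a NIfTI header stores a 4×4 voxel-to-world affine, and coregistration (before reslicing) only needs to change that affine, not the voxel data. Applying the same correction matrix to the affines of the parent and all its dependents therefore keeps them mutually aligned. The Python sketch below uses the convention that the correction `M` maps source world coordinates to reference world coordinates and is left-multiplied onto each affine. That direction convention is our assumption for illustration, and SPM's internal convention may be the inverse. The helper names and sequence keys are hypothetical.

```python
import numpy as np


def apply_to_headers(M: np.ndarray, affines: dict) -> dict:
    """Left-multiply each voxel-to-world affine by the same 4x4 correction M.

    Only the header matrices change; voxel data are untouched until reslicing.
    """
    M = np.asarray(M, dtype=float)
    if M.shape != (4, 4):
        raise ValueError("M must be a 4x4 homogeneous matrix")
    return {name: M @ np.asarray(A, dtype=float) for name, A in affines.items()}


def voxel_to_world(affine: np.ndarray, ijk) -> np.ndarray:
    """World (mm) coordinate of a 0-based voxel index under an affine."""
    return (affine @ np.append(np.asarray(ijk, dtype=float), 1.0))[:3]
```

For example, passing the b0 → SPGR correction together with the shared b0/ADC/FA/MD affine produces four identical updated affines. Any voxel index then maps to the same reference-space point in all four maps.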

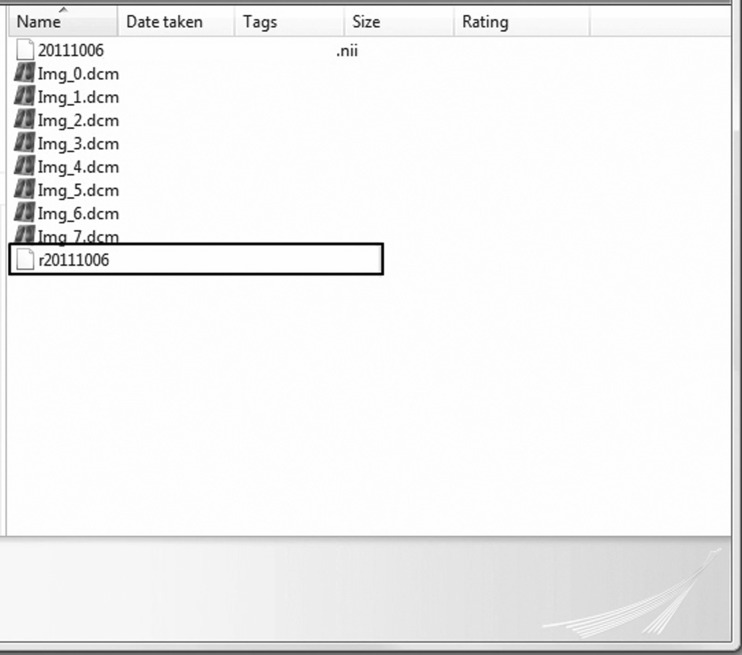

As an example, assuming that the axial T2 FLAIR was used to draw the region of interest (ROI), typically only three actual coregistrations are needed: (1) T1 DCE coregistered to the coronal SPGR, (2) b0 EPI from the DTI coregistered to the coronal SPGR, and (3) axial T2 FLAIR coregistered to the coronal SPGR. SPM then automatically copies the transformation matrix from the second coregistration (b0 → coronal SPGR) into the headers of the NIfTI files of the ADC, FA, and MD maps. It does the same for any other DTI metric map, as all of these share the original alignment of the b0 EPI. In the case of SPM coregistration, the resulting coregistered and resliced NIfTI volume will have the prefix “r” (Fig. 10) and will be located in the folder of the source image.

Fig. 10.

Using SPM, the source image coregistered to the reference image is named “r<date>… .nii” and is located in the folder for the source image
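The grouping in this example can be expressed as a small planning step. Each "parent" sequence is mapped to the dependent files that share its original alignment, and the planner derives the distinct coregistrations together with the "r"-prefixed resliced outputs to expect. This is a hypothetical Python sketch. The file names are invented, the FLAIR-drawn ROI is listed as a dependent of the FLAIR because the two are coregistered together, and it assumes every file in a group is resliced.

```python
from typing import Dict, List, Tuple

# (parent, reference, files that receive the parent's transform, resliced outputs)
Plan = List[Tuple[str, str, List[str], List[str]]]


def coregistration_plan(reference: str, groups: Dict[str, List[str]]) -> Plan:
    """One coregistration per parent; dependents reuse the parent's transform."""
    plan: Plan = []
    for parent, dependents in groups.items():
        files = [parent, *dependents]
        resliced = ["r" + f for f in files]  # SPM's default reslice prefix
        plan.append((parent, reference, files, resliced))
    return plan


# Hypothetical file names mirroring the example in the text.
example = coregistration_plan(
    "coronal_SPGR.nii",
    {
        "T1_DCE.nii": [],
        "b0_EPI.nii": ["ADC.nii", "FA.nii", "MD.nii"],
        "axial_T2_FLAIR.nii": ["roi.nii"],
    },
)
```

The length of the plan equals the number of registrations SPM actually has to estimate, which is three here, however many derived maps ride along with each parent.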

Output for Statistical Analysis

Once the files have been read into Matlab, the voxels corresponding to the ROI are extracted from each sequence of interest for further analysis. The Matlab NIfTI toolkit provides several functions that are helpful for constructing the post-processing pipeline for DCE imaging, such as reslice_nii() to reslice the NIfTI images. The Matlab environment can readily output any imaging data to either a database or a spreadsheet for further statistical analysis as needed (Fig. 11).

Fig. 11.

An example of a spreadsheet demonstrating the parametric mapping values for the region of interest (ROI), created by the framework illustrated in this paper
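Because every map has been resliced onto the same grid as the ROI, extraction reduces to using the binary ROI as an index into each array, as described in the Background. The Python sketch below does this and writes one spreadsheet row per ROI voxel, containing the voxel index and each map's value. It mirrors what the Matlab routines do, but it is not the authors' code. The function names, column names, and CSV layout are hypothetical.

```python
import csv
import io
from typing import Dict, List

import numpy as np


def roi_table(maps: Dict[str, np.ndarray], roi: np.ndarray) -> List[dict]:
    """One row per ROI voxel: its (i, j, k) index plus each map's value there.

    All maps must already be coregistered and resliced to the ROI's grid,
    so that the same index refers to the same anatomical voxel.
    """
    mask = np.asarray(roi) > 0
    for name, m in maps.items():
        if np.shape(m) != mask.shape:
            raise ValueError(f"{name} is not on the ROI grid")
    rows = []
    for i, j, k in np.argwhere(mask):  # C-order list of ROI voxel indices
        row = {"i": int(i), "j": int(j), "k": int(k)}
        row.update({name: float(m[i, j, k]) for name, m in maps.items()})
        rows.append(row)
    return rows


def write_csv(rows: List[dict], stream: io.TextIOBase) -> None:
    """Write the rows as CSV with a header line (no-op for an empty ROI)."""
    if not rows:
        return
    writer = csv.DictWriter(stream, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
```

Keeping the voxel index in each row lets later statistical models relate parameter values back to their spatial position, for example when checking outliers.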

Finally, a NIfTI-compatible viewer such as ImageJ, available for download from the NIH Research Services Branch (http://rsbweb.nih.gov/ij/), may be used to review the coregistration results. The reference and coregistered source images can be overlaid in ImageJ to assess the quality of the coregistration. This quality assessment is a visual process, because the coregistration algorithm has, by definition, already returned the best numerical match it could find under the chosen cost function.

Results/Discussion

The implementation of this quantitative post-processing framework enables technologists and neuroradiologists to intuitively create multiple parametric maps, coregister the sequences and the parametric maps, draw a region of interest, and then automate the analysis of the parameters and voxel values of different sequences within the region of interest, thus facilitating in-depth voxel-wise analysis.

The partially automated framework presented here also substantially reduces the overall post-processing time; most of the remaining time is spent drawing the region of interest. As mentioned before, the specification of the region of interest may be partially automated using segmentation tools, but a human quality control step is still required at this point. In our experience with this framework and its modules, the vast majority of ROIs, including volumetric ones, can be drawn within a few minutes, and many simpler cases take less than a minute.

Our initial empirical evaluation of the framework has shown increased use of analyses requiring post-processing and an increased number of simultaneous research activities by clinicians and researchers with non-technical backgrounds. Other institutions could evaluate the framework in the same way. They would observe whether the use of analyses requiring post-processing increases, in both clinical and research settings, once a standardized framework such as the one described here has been implemented.

Conclusion

This work supports the idea that common software components can be utilized to implement an intuitive, real-time quantitative post-processing framework. It can result in improved scalability and hopefully increased adoption of the post-processing steps needed to answer important neuroradiological diagnostic questions.

Acknowledgments

Grant support: NIH R21EB013456 and NIH UL1RR031986-01.

Abbreviations

- ADC

Apparent diffusion coefficient

- DCE

Dynamic contrast-enhanced

- DICOM

Digital Imaging and Communications in Medicine

- DSC

Dynamic susceptibility contrast

- DTI

Diffusion tensor imaging

- DWI

Diffusion-weighted imaging

- FA

Fractional anisotropy

- fMRI

Functional magnetic resonance imaging

- GUI

Graphical user interface

- iCAD

Interactive computer-assisted diagnosis

- Kep

Reverse transfer constant

- Ktrans

Forward transfer constant

- MD

Mean diffusivity

- MI

Mutual information

- NIfTI

Neuroimaging Informatics Technology Initiative

- NMI

Normalized mutual information

- QiAUC

Quick initial area under curve

- ROI

Region of interest

- SPGR

Spoiled gradient recalled

- SPM

Statistical parametric mapping

- Ve

Volume of extracellular extravascular space

- Vp

Plasma blood volume

Learning Objectives

Learn about publicly available software components for coregistration and for constructing a quantitative post-processing framework.

Understand the fundamentals of coregistration.

Understand where coregistration fits in the overall quantitative post-processing framework.

Understand the fundamentals of basic image file formats such as DICOM and NIfTI, and when and where these different formats are to be used.

CME Questions

Describe what MRIcron is and how it is used for post-processing.

A. Tool to coregister images.

B. Tool to calculate DCE parametric maps.

C. Tool to perform partially automated drawings, such as drawing the ROIs.

D. Tool to perform the statistical analysis to create a logistic regression model.

E. Tool to convert from DICOM to NIfTI.

Answer: C. Explanation: It is a partially automated drawing tool that can be used to mark the region of interest in the coregistered source image. It is a cross-platform NIfTI image viewer that supports single-slice ROIs as well as volumes of interest.

Why are NIfTI format files used for image post-processing?

A. NIfTI format is used by many PACS systems natively.

B. NIfTI format is faster to process than DICOM format.

C. NIfTI format is widely accepted and reads header information correctly.

D. NIfTI format is more familiar to radiologists than DICOM.

E. NIfTI format will replace DICOM format in the future.

Answer: C. Explanation: Using NIfTI files allows for the use of many publicly available neuroimaging toolkits and components in the post-processing framework (such as SPM and ImageJ). It is also generally recommended because of its wide acceptance and uniformity in correctly reading header information.

What sequence should be chosen as the reference sequence when coregistering?

A. Sequence in the same orientation as the image used to draw the ROIs.

B. Sequence that best demonstrates the lesion.

C. b0 sequence from EPI.

D. T1 DCE.

E. Sequence with the highest resolution.

Answer: E. Explanation: The highest resolution sequence should be chosen. In our case, coronal SPGR was the chosen sequence.

Some sequences are always aligned by default, such as the “dependent” sequence ADC being aligned to the “parent” sequence, b0 EPI, from DTI. Which sequences need to be coregistered in this case?

A. “Parent” sequence to the reference sequence.

B. “Dependent” sequence to the “parent”, and then “parent” to the reference sequence.

C. “Parent” and “dependent” sequence to the reference sequence.

D. “Dependent” sequence to the “parent” sequence.

E. “Dependent” sequence to the reference sequence.

Answer: A. Explanation: Only the “parent” sequence is coregistered to the reference sequence using SPM coregistration. The coregistration transformation matrix can be automatically copied by SPM to all other “dependent” sequences sharing the same original alignment as the “parent”.

Explain what image coregistration is and why it is important.

A. Registration in PACS of each sequence in both the NIfTI and DICOM format to ensure both formats are available for quantitative analysis.

B. Conversion of each sequence from DICOM to NIfTI, to enable use of commonly available neuroimaging toolkits.

C. Geometric alignment to ensure corresponding pixels may be fused, to enable quantitative analysis.

D. Extraction of the pixels of interest from the pertinent sequences using the ROI as the index, to enable further quantitative analysis.

E. Creation of a registry of voxels of interest for quantitative analysis.

Answer: C. Explanation: It is the process of geometrically aligning two or more images (sequences) so that corresponding pixels representing the same objects may be fused. It is necessary for any quantitative imaging analysis so that different voxel values can be indexed and accessed identically.

Footnotes

Presentation: ASNR 2013 May.

References

- 1. Klein A, Tourville J. 101 labeled brain images and a consistent human cortical labeling protocol. Front Neurosci. 2012;6:171. doi: 10.3389/fnins.2012.00171.

- 2. Thirion B, et al. Dealing with the shortcomings of spatial normalization: multi-subject parcellation of fMRI datasets. Hum Brain Mapp. 2006;27(8):678–693. doi: 10.1002/hbm.20210.

- 3. Van Hecke W, et al. On the construction of an inter-subject diffusion tensor magnetic resonance atlas of the healthy human brain. Neuroimage. 2008;43(1):69–80. doi: 10.1016/j.neuroimage.2008.07.006.

- 4. Thompson PM, et al. Mathematical/computational challenges in creating deformable and probabilistic atlases of the human brain. Hum Brain Mapp. 2000;9(2):81–92. doi: 10.1002/(SICI)1097-0193(200002)9:2<81::AID-HBM3>3.0.CO;2-8.

- 5. Yelnik J, et al. Localization of stimulating electrodes in patients with Parkinson disease by using a three-dimensional atlas-magnetic resonance imaging coregistration method. J Neurosurg. 2003;99(1):89–99. doi: 10.3171/jns.2003.99.1.0089.

- 6. Rorden C, et al. Age-specific CT and MRI templates for spatial normalization. Neuroimage. 2012;61(4):957–965. doi: 10.1016/j.neuroimage.2012.03.020.

- 7. Yoon HJ, et al. Correlated regions of cerebral blood flow with clinical parameters in Parkinson’s disease; comparison using ‘Anatomy’ and ‘Talairach Daemon’ software. Ann Nucl Med. 2012;26(2):164–174. doi: 10.1007/s12149-011-0547-2.

- 8. Wu M, et al. Quantitative comparison of AIR, SPM, and the fully deformable model for atlas-based segmentation of functional and structural MR images. Hum Brain Mapp. 2006;27(9):747–754. doi: 10.1002/hbm.20216.

- 9. Heiss WD, Raab P, Lanfermann H. Multimodality assessment of brain tumors and tumor recurrence. J Nucl Med. 2011;52(10):1585–1600. doi: 10.2967/jnumed.110.084210.

- 10. Pietrzyk U, Herzog H. Does PET/MR in human brain imaging provide optimal co-registration? A critical reflection. MAGMA. 2013;26(1):137–147. doi: 10.1007/s10334-012-0359-y.

- 11. Price JC. Molecular brain imaging in the multimodality era. J Cereb Blood Flow Metab. 2012;32(7):1377–1392. doi: 10.1038/jcbfm.2012.29.

- 12. Sauter AW, et al. Combined PET/MRI: one step further in multimodality imaging. Trends Mol Med. 2010;16(11):508–515. doi: 10.1016/j.molmed.2010.08.003.

- 13. Slomka PJ, Baum RP. Multimodality image registration with software: state-of-the-art. Eur J Nucl Med Mol Imaging. 2009;36(Suppl 1):S44–S55. doi: 10.1007/s00259-008-0941-8.

- 14. Townsend DW. Multimodality imaging of structure and function. Phys Med Biol. 2008;53(4):R1–R39. doi: 10.1088/0031-9155/53/4/R01.

- 15. Cizek J, et al. Fast and robust registration of PET and MR images of human brain. Neuroimage. 2004;22(1):434–442. doi: 10.1016/j.neuroimage.2004.01.016.

- 16. Andersson JL, Sundin A, Valind S. A method for coregistration of PET and MR brain images. J Nucl Med. 1995;36(7):1307–1315.

- 17. Kiebel SJ, Friston KJ. Statistical parametric mapping for event-related potentials (II): a hierarchical temporal model. Neuroimage. 2004;22(2):503–520. doi: 10.1016/j.neuroimage.2004.02.013.

- 18. Montgomery AJ, et al. Correction of head movement on PET studies: comparison of methods. J Nucl Med. 2006;47(12):1936–1944.

- 19. Quarantelli M, et al. Integrated software for the analysis of brain PET/SPECT studies with partial-volume-effect correction. J Nucl Med. 2004;45(2):192–201.

- 20. Woods RP, Mazziotta JC, Cherry SR. MRI-PET registration with automated algorithm. J Comput Assist Tomogr. 1993;17(4):536–546. doi: 10.1097/00004728-199307000-00004.

- 21. Gutierrez D, et al. Anatomically guided voxel-based partial volume effect correction in brain PET: impact of MRI segmentation. Comput Med Imaging Graph. 2012;36(8):610–619. doi: 10.1016/j.compmedimag.2012.09.001.

- 22. Eklund A, et al. Does parametric fMRI analysis with SPM yield valid results? An empirical study of 1484 rest datasets. Neuroimage. 2012;61(3):565–578. doi: 10.1016/j.neuroimage.2012.03.093.

- 23. Dey D, et al. Automatic three-dimensional multimodality registration using radionuclide transmission CT attenuation maps: a phantom study. J Nucl Med. 1999;40(3):448–455.

- 24. Grosu AL, et al. Validation of a method for automatic image fusion (BrainLAB System) of CT data and 11C-methionine-PET data for stereotactic radiotherapy using a LINAC: first clinical experience. Int J Radiat Oncol Biol Phys. 2003;56(5):1450–1463. doi: 10.1016/S0360-3016(03)00279-7.

- 25. Thurfjell L, et al. Improved efficiency for MRI-SPET registration based on mutual information. Eur J Nucl Med. 2000;27(7):847–856. doi: 10.1007/s002590000270.

- 26. Yokoi T, et al. Accuracy and reproducibility of co-registration techniques based on mutual information and normalized mutual information for MRI and SPECT brain images. Ann Nucl Med. 2004;18(8):659–667. doi: 10.1007/BF02985959.

- 27. Bar-Shalom R, et al. Clinical performance of PET/CT in evaluation of cancer: additional value for diagnostic imaging and patient management. J Nucl Med. 2003;44(8):1200–1209.

- 28. Ellingson BM, et al. Nonlinear registration of diffusion-weighted images improves clinical sensitivity of functional diffusion maps in recurrent glioblastoma treated with bevacizumab. Magn Reson Med. 2012;67(1):237–245. doi: 10.1002/mrm.23003.

- 29. Cohen DS, et al. Effects of coregistration of MR to CT images on MR stereotactic accuracy. J Neurosurg. 1995;82(5):772–779. doi: 10.3171/jns.1995.82.5.0772.

- 30. Daisne JF, et al. Evaluation of a multimodality image (CT, MRI and PET) coregistration procedure on phantom and head and neck cancer patients: accuracy, reproducibility and consistency. Radiother Oncol. 2003;69(3):237–245. doi: 10.1016/j.radonc.2003.10.009.

- 31. Grosu AL, et al. An interindividual comparison of O-(2-[18F]fluoroethyl)-L-tyrosine (FET)- and L-[methyl-11C]methionine (MET)-PET in patients with brain gliomas and metastases. Int J Radiat Oncol Biol Phys. 2011;81(4):1049–1058. doi: 10.1016/j.ijrobp.2010.07.002.

- 32. Thiel A, et al. Enhanced accuracy in differential diagnosis of radiation necrosis by positron emission tomography–magnetic resonance imaging coregistration: technical case report. Neurosurgery. 2000;46(1):232–234. doi: 10.1097/00006123-200001000-00051.

- 33. Ashburner J. Computational anatomy with the SPM software. Magn Reson Imaging. 2009;27(8):1163–1174. doi: 10.1016/j.mri.2009.01.006.

- 34. Sui J, et al. A review of multivariate methods for multimodal fusion of brain imaging data. J Neurosci Methods. 2012;204(1):68–81. doi: 10.1016/j.jneumeth.2011.10.031.

- 35. Friston K. Introduction: experimental design and statistical parametric mapping. In: Frackowiak RSJ, editor. Human brain function. Amsterdam; Boston: Elsevier Academic Press; 2004. xvi, 1144 p.

- 36. Wellcome Trust Centre for Neuroimaging, UCL, UK. SPM8. 4/1/2013. Available from: http://www.fil.ion.ucl.ac.uk/spm/software/spm8/

- 37. Grootoonk S, et al. Characterization and correction of interpolation effects in the realignment of fMRI time series. Neuroimage. 2000;11(1):49–57. doi: 10.1006/nimg.1999.0515.

- 38. Studholme C, Hill DLG, Hawkes DJ. An overlap invariant entropy measure of 3D medical image alignment. Pattern Recog. 1999;32(1):71–86. doi: 10.1016/S0031-3203(98)00091-0.

- 39. Greve DN, Fischl B. Accurate and robust brain image alignment using boundary-based registration. Neuroimage. 2009;48(1):63–72. doi: 10.1016/j.neuroimage.2009.06.060.