Abstract

In the present contribution we investigate the images of CW diffusely reflected light for a point-like source, registered by a CCD camera imaging a turbid medium containing an absorbing lesion. We show that detection of μa variations (absorption anomalies) is achieved if images are normalized to background intensity. A theoretical analysis based on the diffusion approximation is presented to investigate the sensitivity and the limitations of our proposal and a novel procedure to find the location of the inclusions in 3D is given and tested. An analysis of the noise and its influence on the detection capabilities of our proposal is provided. Experimental results on phantoms are also given, supporting the proposed approach.

OCIS codes: (110.7050) Turbid media, (110.0113) Imaging through turbid media, (170.3880) Medical and biological imaging

1. Introduction

Diffuse reflectance from optically turbid biological tissue has been exploited for near infrared spectroscopy (NIRS) and NIR diffuse optical imaging (DOI) relevant for several applications in biomedical optics, [1–4]. Because of the diffusive character of photon migration in thick (∼ cm) tissue, imaging of function, as e.g. revealed by local concentrations of biomarkers like oxy- and deoxy-hemoglobin, is generally more reasonable than structural imaging. Contrast based on tissue concentrations of these chromophores has been used e.g. to non-invasively detect and characterize tumors in breast tissue [5–7] as well as tissue oxygenation in muscles [8] or the brain [9–11].

An indispensable prerequisite for any quantitative diffuse optical tissue characterization and imaging is the ability to separate signal damping due to absorption from that caused by light scattering inside the turbid media. Optical methods allowing for that employ either spatially and/or temporally modulated light sources together with photon detection schemes of appropriate spatial and/or temporal resolution [12–14]. However, most of these techniques measure only a single, small area of tissue at a time, although such investigations would greatly benefit from imaging a larger region of interest. Scanning or multiplexing can be used to overcome such a disadvantage, but is typically slow and cumbersome to implement. To solve these problems, several non-contact wide-field diffuse optical imaging methods have been developed during the recent years to realize deep quantitative functional imaging of tissue based on spatially modulated light, i.e. structured illumination [14–18]. It has been shown that these methods may overcome several limitations of conventional spatially resolved diffuse imaging arrangements employing discrete multi-distance source-detector separations [12, 19]. In general, the reflection geometry is not suited for a tomographic reconstruction of tissue optical properties since light sources and detectors are available on only one side of the tissue [20]. In order to estimate optical properties of tumors under these conditions several authors have used prior knowledge about the location and size of the tumor obtained from other imaging modalities [20, 21].

In the present paper we introduce a novel non-contact wide-field continuous wave (CW) optical imaging technique to localize a tumor from diffuse reflectance measurements. For this purpose, a point-like light source is used [14], and a CCD camera images the spatial point spread function across the whole field of view. This procedure is equivalent to a measurement of the corresponding k-space modulation transfer function of the tissue [14]. It is shown that wide-field imaging of a few spatial point spread functions in the vicinity of a lesion with enhanced absorption inside a semi-infinite turbid medium allows localizing it in 3D if an appropriate normalization procedure similar to the Rytov approximation is applied [22,23]. We have validated our method with the help of theoretical calculations and experimental investigations on phantoms. Furthermore, the sensitivity of the imaging system in terms of detection limit is investigated by statistical analysis of raw data to decide if lesions of a certain contrast and size cause significant signals above background level taking into account experimental noise. The information obtained with the described method could be used as input for estimating tumor optical properties by reconstruction with a parameterized inversion model [20] or by utilizing solutions of the diffusion equation for a localized heterogeneity in a homogeneous medium [24–26].

The paper has been organized as follows: In the next Section the theoretical analysis to investigate the capabilities of the method as well as noise modelling are given. The results of Section 2.1 are compared in Section 3 with those of experiments in the NIR spectral range using a phantom with a tumor simulating object. Finally, we summarize the main conclusions of our work.

2. Theoretical analysis

2.1. Description of the method

Our proposal to localize absorbing inclusions in a semi-infinte turbid medium is based on (multiple) point like CW illumination and non - contact wide field imaging of the spatial point spread function in diffuse reflection. The method may be suitable for all kind of turbid media. However, we are going to present it for values of the optical parameters which are common for Biomedical Optics, involving radiation in the NIR range since this topic is of intense research and growing interest.

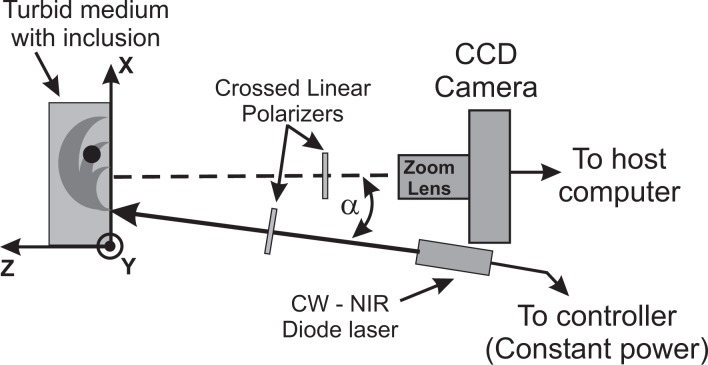

The experimental situation is schematically drawn in Fig. 1. Information is acquired by imaging the accessible face of the scattering medium by a CCD camera, resulting in a 2D picture of the light being diffusely reflected. Each pixel in this image can be interpreted as the end point of a photon banana which starts at the location of the light injection. An absorbing object in the scattering medium will affect light propagation along particular bananas, as illustrated in Fig. 1. As a consequence, the remitted light intensity is perturbed at the end point of the banana. In order to make the perturbation visible we normalize the measured image I(x, y) with absorbing object to the background image I 0(x, y) without object. Similar concepts have been applied by some of the authors to the case of CW diffuse transmittance [23].

Fig. 1.

Schema of the experimental setup. A CW NIR diode laser illuminates a semi-infinite turbid medium with inclusions. A small tilt angle, α, is used to avoid Fresnel reflections and a CCD camera images the medium.

When the depth (z) location of the object is changed, different bananas will be affected and the perturbed intensity will appear at other pixel positions in the camera image. Furthermore, when we shift the illumination point to another location on the turbid medium, the perturbation in the normalized image due to the absorber appears at a new position. As will be discussed in the next sections we use these dependencies to localize the absorber in the x–y plane and to derive its z coordinate.

2.2. The diffusion model

In order to obtain a theoretical expression for the normalized image

| (1) |

for typical values of optical properties and to analyze the limitations of the method we use a theoretical model based on diffusion theory [24, 27].

The turbid medium, of absorption coefficient μa and reduced scattering coefficient μ′s, is described by the semi-infinite medium geometry employing the extrapolated boundary condition. For a point source, and using the standard notation, the outward directed photon flux density when no inclusions are present is given by: [28, 29]

| (2) |

with r 1 = |r d − r s 1| and r 2 = |r d − r s 2|. Here is the attenuation coefficient, r s = (xs, ys, 0) is the surface position of the impinging light beam and r d = (xd, yd, 0) the detector position. r s 1 = (xs, ys, z 0) and r s 2 = (xs, ys, −2ze − z 0) denote the positions of the original and the image light source, ze is the extrapolated boundary position [30], A 0 is a scaling factor representing the number of photons per second in the incident beam, and z 0 = 1/μ′s. In the presence of an inclusion, centered at rInc, Eq. (2) is modified, resulting in

| (3) |

where JInc (r s, r d, r Inc) is the term corresponding to the perturbation due to the inclusion. In order to fulfill the extrapolated boundary condition an image of the inclusion has to be added at the location , and the interaction of both sources with the original and the image inclusion has to be taken into account. Thus, the photon flux density JInc (r s, r d, r Inc) can be constructed from four contributions:

| (4) |

In case of a spherical inclusion (radius a, attenuation coefficient κ′) the needed infinite medium fluence rate can be written as a multipole expansion in terms of the modified spherical Bessel functions of the second kind km and of Legendre polynomials Pm according to

| (5) |

where θ is the angle between the vectors joining the inclusion with the source and the detector [28].

The expansion coefficients Bm ≡ Bm(κ, κ′, rInc, a) are determined from the boundary conditions that require fluence rate and flux to be continuous across the surface of the sphere. The explicit expressions for these coefficients can be found in Refs. [25] and [28]. It is important to note that in many cases, including the experimental conditions presented in this work, the previous equation can be reduced to the form [28]

| (6) |

where |q| = |B 0/κ| and |p| = |B 1/κ 2|; r d − r Inc is the vector which aims from the center of the small sphere to the observation point.

Note that a solution of the diffusion equation is available also for cylindrical inclusions [24] which could be used for lesions strongly deviating from a spherical shape.

As discussed by Haskell et al. [30] the measurement of the remission from a turbid medium has to be described by a combination of the fluence rate and the flux density. However, since we consider source-detector separations larger than 1 cm the flux is a very good approximation for the relative decrease of the intensity with increasing source-detector separation. The deviations from the combined terms are smaller than 2%. Therefore, the flux (Eqs. (2) and (3)) is used in the following simulations.

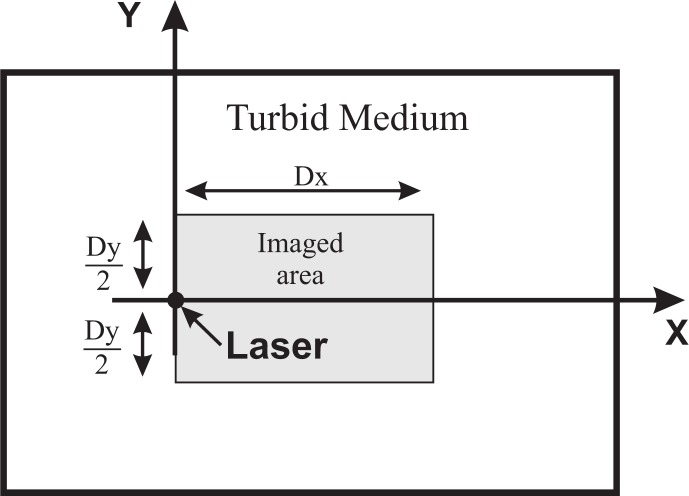

Figure 2 illustrates the geometry assumed for the calculations. The illumination point is taken at the coordinate rs = (0, 0, 0). The sphere is centered at y = 0 and both, its lateral position, xInc, and its depth, zInc, will be varied in the different calculations.

Fig. 2.

Geometry used (not to scale). We considered a thick slab, which can be taken as semi - infinite. This schema is a front view of the slab, as seen from the camera. The laser impinges at coordinates (x = 0, y = 0, z = 0). The camera is focused at the plane z = 0, which is the plane of the Figure, and a region of extension Dx × Dy is imaged onto the CCD.

2.3. Rationale

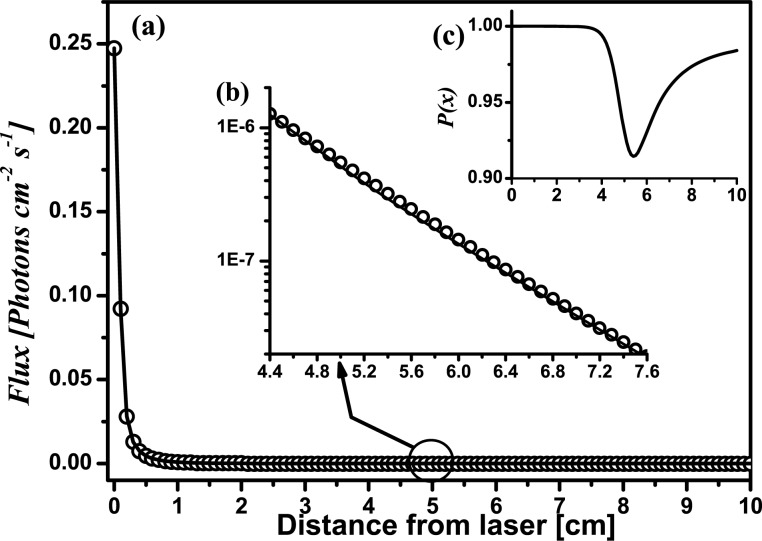

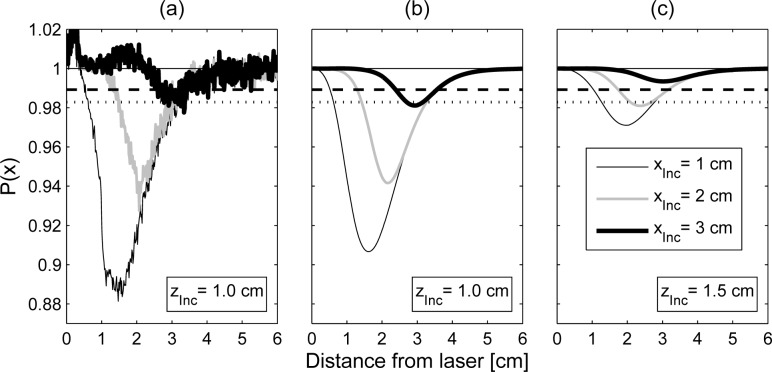

We illustrate how our proposal works in 1D with the help of Fig. 3. In terms of the previous Equations the 1D profile, P(x), of the normalized image along the x axis passing through the illumination point and the sphere is given by:

| (7) |

with rd = (x, 0, 0), rInc = (xInc, 0, zInc), rs = (0, 0, 0). Far from the inclusion the detected intensities with or without inclusion are (nearly) equal, and thus it is expected that P(x) → 1. All curves in Fig. 3 were obtained with the set of Eqs. (2) to (6). In this example, a host with the optical parameters μ a0 = 0.03 cm −1 and μ′ s0 = 10 cm −1 is assumed. An absorbing spherical inclusion with μ aInc. = 4μ a0, μ′ sInc = μ′ s0 and diameter ϕInc = 1.0 cm is immersed in the host placed at xInc = 5 cm from the laser, and at a depth zInc = 1.0 cm.

Fig. 3.

Illustration of the principle of the method by theoretical calculations. An absorbing (μaInc = 4μa 0) spherical inclusion, ϕInc = 1.0 cm was placed at a depth zInc = 1.0 cm and at xInc = 5 cm from the laser illumination point.

Figure 3(a) shows the light intensity emerging at different distances from the laser for both cases: the medium without the inclusion (open circles) and the medium with the inclusion (solid line). Please note that both curves cannot be distinguished by eye in a linear scale plot.

Figure 3(b) is a close up (log scale) of the region where the inclusion was placed. Subtle differences between both curves can be seen in this representation. It is noticeable that the intensity in this zone is several orders of magnitude lower than close to the laser.

Finally, the inset labeled as Fig. 3(c) shows the resulting profile after applying Eq. (7) to the intensities of Fig. 3(a). The presence of the absorbing inclusion is now clearly evident as a dip in the profile. The minimum of the profile does not occur exactly at xInc = 5 cm, but instead it is shifted away from the illumination point. We will return to this later.

2.4. Modulation profiles and detectability of inclusions

In this Section we discuss the general behavior of the profiles P(x) obtained for different sets of the parameters of the inclusion. In all cases, calculations for this Section were carried out considering a bulk medium with μ′s = 10cm −1 and μ a0 = 0.03cm −1, and using spherical inclusions which are more absorbing than the bulk (μ aInc > μ a0), but all having μ′sInc = μ′ s0 = 10cm −1. These values were chosen with the intention to represent a typical case for biomedical optics experiments in the NIR range.

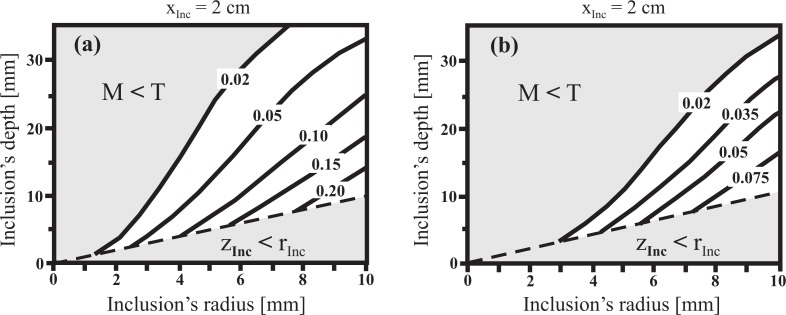

Due to the absorbing nature of the spheres a dip in the profiles is expected at those locations where the inclusion is detected. Additionally, since profiles are normalized, their maximum value is always unity. Thus we will refer to the modulation depth (or just the modulation) of the profile as M = 1 − P(x)min, being P(x)min the minimum value given by Eq. (1). Larger modulation values M correspond to better detectability of the inclusion according to the quantitative analysis of this concept given in Fig. 5.

Fig. 5.

Modulation (M) map obtained by the theory as a function of both, the inclusion’s radius (rInc) and the inclusion’s depth (zInc). The inclusion is assumed to be a sphere placed at xInc = 2.0 cm from the illumination point. (a) μaInc = 4μ a0; (b) μaInc = 2μ a0.

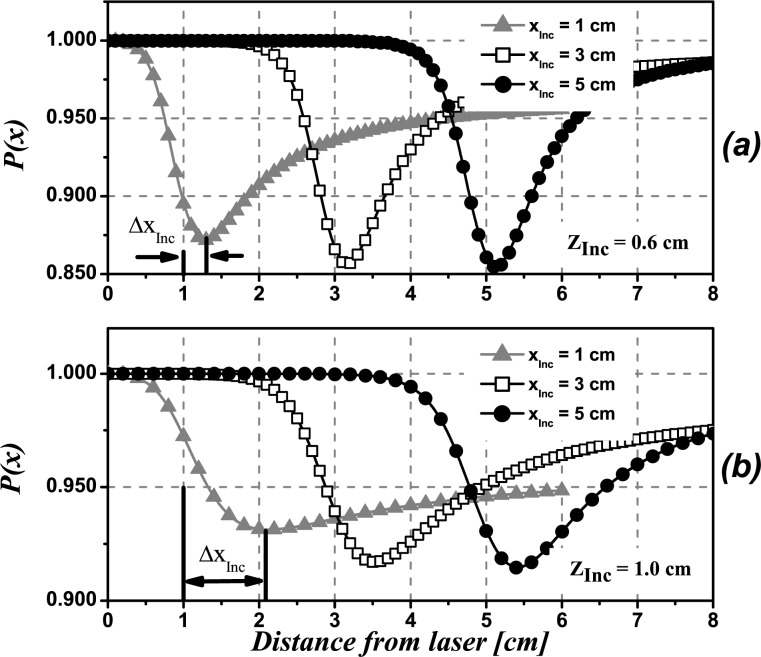

Figure 4 shows simulated profiles P(x) for inclusions at different lateral positions with respect to the laser, namely xInc = 1 cm (triangles), 3 cm (squares), and 5 cm (circles), leaving the depth as a parameter. The diameter of the sphere was set to ϕInc = 1.0 cm. The absorption of the inclusion is assumed to be μ aInc = 4μ a0 which is close to the clinically observed absorption contrast for breast tumors in healthy tissue [31, 32].

Fig. 4.

Theoretical profiles obtained using Eq. (1) for an absorbing spherical inclusion (μaInc = 4μa 0, ϕInc = 1.0 cm) located at different depths, zInc and at several distances from the laser, xInc. a) zInc = 0.6cm, b) zInc = 1.0cm.

The minimum in the intensity profile indicates the detected lateral location of the inclusion relative to the illumination point. It can be seen that the profiles corresponding to shallow inclusions (zInc = 0.6 cm) have their minimum slightly shifted away from the true position (Fig. 4(a)). For an inclusion placed at xInc we call this shift ΔxInc. The shift is seen to be always towards greater values of x, that is, away from the laser. Deeper inclusions give profiles with their minima also shifted towards greater values of x but by larger amounts (see Fig. 4(b)). As shown in Fig. 4(a), for a depth zInc = 0.6 cm this lateral shift does not exceed absolute values of 0.3 cm for the inclusion placed at xInc = 1 cm and decreases for xInc = 3 cm to 5 cm. When the depth of the inclusion is increased (Fig. 4(b)), ΔxInc increases to ΔxInc ≈ 1cm for xInc = 1cm and decreases to ΔxInc < 0.5 cm for xInc = 3 cm to 5 cm, which implies a large improvement in the relative percent error.

The modulation of the profiles is only slightly affected by the distance of the inclusion to the illumination point. For example, as shown in the first row of Fig. 4, for the case of zInc = 0.6 cm the modulation varies from M = 0.125 (12.5%) for xInc = 1.0 cm to M = 0.150 (15%) for xInc = 5.0 cm.

Besides, it is shown in Fig. 4, that keeping xInc fixed and increasing the depth, zInc, of the inclusion, produces noticeable changes in M. For example, M reaches values of M = 0.150 for an inclusion located at zInc = 0.6 cm depth (Fig. 4(a)) and drops to M < 0.10 for zInc = 1.0 cm (Fig. 4(b)). Clearly, in the case of noisy signals this modulation may be insufficient to detect the inclusion (See Section 2.6).

Figure 5 explores the behavior of the modulation, and thus the detectability of the inclusion, with respect to both, the depth and the size of the inclusion. For this example we have located the inclusion at xInc = 2 cm from the illumination point; the choice of xInc is not critical since we have already shown in Fig. 4 that the modulation depends only slightly on xInc. In Fig. 5(a) we assume a spherical inclusion with an absorption coefficient μ aInc = 4μ a0, whereas in Fig. 5(b) the absorption contrast is only twofold. The horizontal axis represents the sphere radius, the vertical one is the depth of its center and the modulation is shown by isolines. We have identified two particular invalid zones, shadowed in gray: i) the one for which the inclusion depth is less than its radius (the inclusion itself would cross the boundary of the bulk medium), and ii) the zone for which the inclusion is considered to be undetectable, corresponding to modulation values less than a given threshold, T (see Sections 2.6 and 3).

Figure 5(a) shows that relative large inclusions (rInc ≈ 10mm) can be detected even at depths of about zInc ≳ 30 mm. On the contrary, small inclusions rInc ≲ 3.0 mm are only detected if they are shallow (zInc ≲ 5 mm). Objects with twofold absorption contrast (Fig. 5(b)) have to be larger to be visible from the same depth as objects with fourfold contrast. Roughly, the decrease in absorption has to be compensated by a corresponding increase in the volume of the sphere to reach the same modulation depth. Since the absorption contrast for real biological cases is expected to be in the considered range, Fig. 5 establishes a quantitative criterion for the limits of detectability of the proposed technique. These results are in agreement with the criterion given by Ripoll et. al. [34] which, for the optical parameters considered in Fig. 5(a), estimates a maximum penetration depth of the photons of .

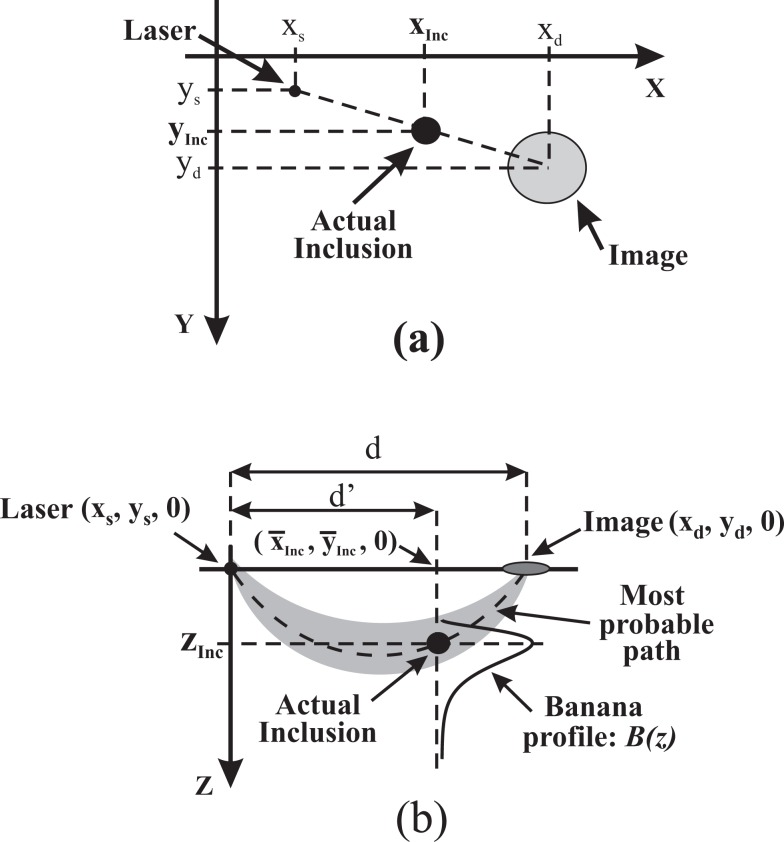

2.5. 3D location of the inclusion

The main idea for getting the in-plane location of the inclusion is based on taking a few images with varied illumination points. For a particular laser position L 1 the absorbing inclusion becomes visible in the normalized camera image as a blurred region centered at position P 1 = (x d1, y d1). If the laser is then moved to a new position, L 2 the dark zone related to the inclusion appears at another position I 2 = (x d2, y d2 , 0). The situation is sketched in Fig. 6(a) for a generic laser position. This experiment can be repeated for several, N, laser positions performing a coarse scanning of the surface. As the inclusion is fixed, all lines joining a laser position with the center of its corresponding image pass through the actual inclusion. Thus for each pair of laser-image lines, their intersection gives the in plane location of the inclusion (xInc, yInc). Taking several of these intersections and averaging the resulting coordinates provide a better in-plane location of the inclusion:

| (8) |

Fig. 6.

Illustration of the approach used to locate the inclusion in 3D. a) Illustrates the principle for finding the in plane location of the inclusion for the illumination point at coordinates (xs, ys). This produces at (xd, yd) a blurred image of the inclusion, which is actually located at (xInc, yInc). b) Shows how to retrieve the depth of the inclusion once the average values of the in plane coordinates, (x̄Inc, ȳInc), are known. The plane of this Figure is the one defined by the z axis and the line joining the illumination point and the image. See text for a detailed explanation.

Standard deviations in this expression vary as . We will present later, in Table 1, a quantitative example using 6 intersections.

Table 1.

Result of applying our algorithm to the solid phantom. Nominal values for the inclusion location are (xInc, yInc, zInc) = (9 cm, 9 cm, 1 cm). Errors are given as the standard deviation of the measured values.

| j 1 − j 2 | j 1 − j 3 | j 1 − j 4 | j 2 − j 3 | j 2 − j 4 | j 3 − j 4 | Averages [cm] | |

|---|---|---|---|---|---|---|---|

| xInc [cm] | 9.01 | 9.10 | 9.23 | 9.09 | 9.13 | 9.07 | x̄Inc = 9.10 ± 0.07 |

| yInc [cm] | 9.06 | 9.06 | 9.04 | 9.19 | 9.13 | 9.16 | ȳInc = 9.11 ± 0.06 |

The information about the xy-plane location of the inclusion can now be used to retrieve its depth, zInc. To this end we consider the photon banana connecting a selected illumination point rs with the related image position rd of the inclusion (see cross section in Fig. 6(b)). The object is located at the intersection of the banana with the vertical line in Fig. 6(b) drawn from the above derived position (x̄Inc, ȳInc) of the object on the surface of the turbid medium parallel to the z axis. Along this line we determine the profile B(z) = B(x̄Inc, ȳInc, z) of the banana. The first moment of this probability distribution is taken as the depth zInc of the inclusion. The procedure can be repeated for each of the N images to obtain an average depth value

| (9) |

To derive the required theoretical expression for the photon banana B(r, r s, r d) we follow the idea of Feng et al. [28]. Instead of the zero boundary condition employed by Feng we use the extrapolated boundary condition here. For the geometry of the semi-infinite medium we obtain (the normalization factor is omitted):

| (10) |

Vector r * = (x, y, −2ze − z) denotes the mirror position of the considered coordinate r = (x, y, z) of the banana with respect to the extrapolated boundary plane.

At this point it is important to mention that the attenuation coefficient κ of the turbid medium is needed to calculate the banana function (Eq. (10)) and to derive its first moment. Furthermore, the vectors rs 1, rs 2 and r * with the first two representing the positions of the original and the image point source depend on μ′s (cf. Eq. (2)). In general, κ and μ′s cannot be derived from our CW measurement simultaneously [33]. However, the influence of μ′s on the shape of the banana function is weak, i.e. we can use an approximate value μ′s,typ for the particular tissue type. Calculations of the first moments of B along the z axis for reduced scattering coefficients between 5cm −1 and 20cm −1 show that the error close to the source position scales approximately with |1/μ′s − 1/μ′s,typ|, i.e. the error in the determination of the z position of the object can reach 0.5mm to 1mm. When the in-plane (x,y) position of the object is at least 1cm apart from the source position, the error is always smaller than 0.5mm.

The needed attenuation coefficient can be determined from a fit by considering the decay of the measured intensity I(x, y) with increasing distance from the source in a region of the turbid medium which can be assumed to be homogeneous. Generally, the corresponding theoretical photon flux density given in Eq. (2) depends on both κ and μ′s. However, similar to the discussion for the banana function, for sufficiently long distances between source and detector position the lengths r 1 and r 2 in Eq. (2) become approximately equal to , and the photon flux density can be approximated as

| (11) |

In this term (ze + 1/μ′s) acts as a scaling factor only, i.e. Eq. (11) can be used to derive κ from the measured intensity profile.

2.6. Noise analysis

In this Section we will discuss the main effects of noise on the results presented. We will adopt an approach similar to that proposed by Ziegler et al. [36]. The intensity detected by the camera at pixel k can be written as

| (12) |

with Ik being the intensity without noise, the actual detected signal, including all contributions of noise, and Nk the noise component, all taken at pixel k. The quantity Ioff accounts for the baseline level of the camera obtained without any light. Commonly, this offset is compensated by subtracting a dark image measured separately. However, in our case it is advantageously to skip this correction for the following reason: Due to the point-like illumination we get images covering the full dynamic range of the camera. Far away from the illumination point the light level is too small to give a significant contribution and the pixel values scatter around the offset value, Ioff. After background subtraction these intensities will scatter around zero. Hence, calculating ratio values P(x, y) of two images according to Eq. (1) will result in very noisy data far away from the illumination point. In contrast, without background subtraction such ratio values approach to Ioff/Ioff = 1. This reduction in noise far away from the illumination point is paid by a slight reduction in the modulation depth which will hamper the detection of inclusions at larger distances from the illumination point. On the other hand, the modulation profile becomes sharper as will be shown in the next section

Investigations of the intensity dependence of the noise in our camera images have shown, that the standard deviation of the measured intensities can be described by the following equation:

| (13) |

The first contribution to the noise, na, is independent from the detected signal. It accounts mainly for the readout noise of the camera. The second term, describes the contribution of the photon noise (shot noise) which is significant at low intensities and weighted by np. The third contribution is a noise component proportional to the measured signal which is typical for linear signal calibration curves. It dominates at high intensities where nr describes a constant coefficient of variation.

To derive a detectability criterion we consider a medium with inclusion and note the position with the strongest modulation of the ratio signal P(x) as xI. We know that without an inclusion repeated measurements P 1,.., Pk at this position are normally distributed with mean θ 0 = 1 and variance σ 2, whereby the later is given by Eq. (13). When an absorbing inclusion is present, we expect to obtain a mean θI < θ 0. Since the modulation depth is small, we can assume that the variance of the distribution Pi with inclusion is nearly the same as for the homogeneous case.

As a criterion for object detection we use the Neyman - Pearson test [37] which yields the following relation between a given significance level αFP of false positive detection and the threshold value T (αFP) for the ratio signal P(x)

| (14) |

3. Phantom experiments

We present now an experiment to test our proposal under laboratory conditions using a solid phantom with a known absorbing inclusion. It is mainly intended to test the capability of our proposal to locate inclusions in 3D. Additionally, it will be used to compare the modulation profile with that produced by the theory. As a corollary, a fair estimation of the diameter of the inclusion is retrieved.

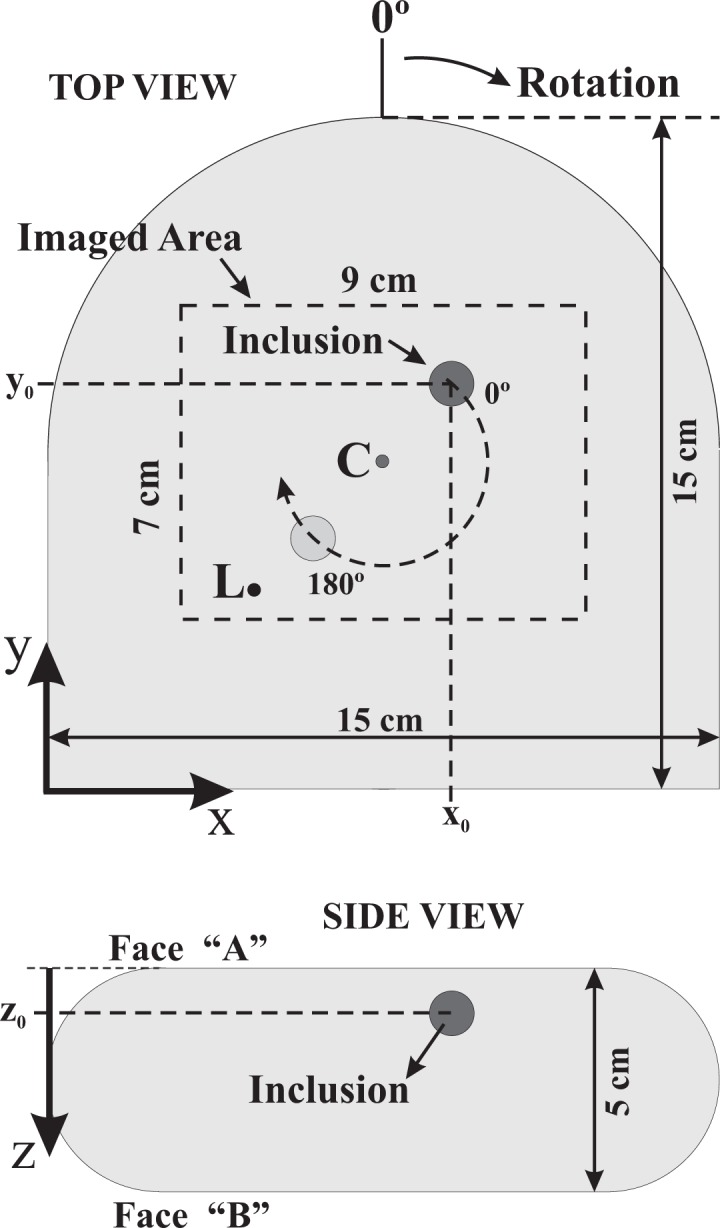

The phantom, which mimics a compressed breast, was designed and manufactured at PTB, Berlin to test and optimize different optical mammographs. It is made of Epoxy resin and contains one absorbing spherical inclusion of 1.0 cm diameter, also made of Epoxy [38, 39]. The phantom is 15 cm wide, 15 cm long and 5 cm thick. With reference to Fig. 7, the inclusion is located at coordinates (xInc, yInc, zInc) = (9 cm, 9 cm, 1 cm). Thus, when viewing the phantom from the face ”A”, the inclusion is at 1 cm depth from the surface.

Fig. 7.

Schematic representation (at scale) of the solid phantom used in the experiment. The inclusion is located at (xInc, yInc, zInc) = (9 cm, 9 cm, 1 cm). The dark spot indicated by ”L” is the illumination point and the phantom can be rotated around the point indicated by ”C”.

The optical properties of the phantom are known from measurements of time-resolved transmittance with a femtosecond Ti:Sapphire laser and time-correlated single photon counting. At 780 nm the properties for the homogeneous background amount to μ′ s0 = 9.46cm −1 and μ a0 = 0.047cm −1. The optical properties of the inclusion were measured from a block of the base material previous to the proper machining to obtain the sphere [39]. The results are μ′ sInc = 7.7cm −1 and μ aInc = 0.2cm −1. Thus, the absorption contrast between bulk and inclusion is 4.25. Accordingly to Figs. 4 and 5 this inclusion should be detected with a modulation depth of about 8% to 10%.

Images of the phantom were acquired with the setup shown in Fig. 1 using a 14 Bit EMCCD camera (Andor Luca, 658×496 pixels), equipped with a wide field lens (Fujinon 1 : 1.26 mm FL) imaging an area of approximate dimensions Dx = 9cm × Dy = 7cm of face ”A” of the phantom (Figs. 2 and 7). Illumination was provided by a pulsed (80 MHz) diode laser operating at λ = 783nm, with average power of 3 mW. The illumination point is indicated by ”L” in the Fig. 7. Crossed polarizers, one in front of the laser and the other in front of the camera, were used to suppress the detection of photons that exit the medium very close to the illumination point after only a few scattering events, i.e. before losing their original polarization. Additionally a small stop was placed in the field of view close to the lens to block out the over-exposure region at the illumination point. In this way the 14 Bit dynamic range of the camera enabled us to detect the spatial decrease of the diffusely scattered light up to a distance of about 3cm from the source. Possible blooming effects are also minimized with this stop. In order to calculate the ratio image according to Eq. (1) we have to generate a suitable background image, ”without” inclusion. In general, we do not know where a possible inclusion is located in the medium. Under these circumstances one can take a set of images with the scattering medium being shifted or rotated relative to the setup of the laser and the camera. When averaging these images the influence of the inclusion is suppressed due to blurring. We applied this procedure by rotating the phantom in steps of 20° resulting in 18 points of view after a complete rotation. The 18 images were averaged to obtain the (homogeneous) background image. Finally, each of the 18 measured images was normalized to this background image.

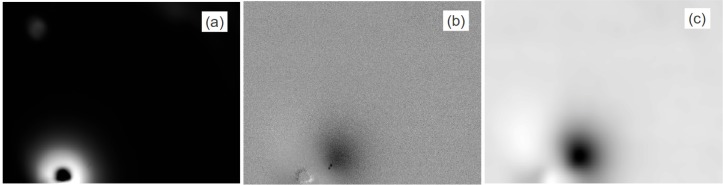

At those angular positions for which the inclusion is situated inside the zone reached by the laser light, an image of the inclusion can be seen. As an example, we show in Fig. 8 the raw camera image and the normalized image obtained for j = 180°. The center of rotation, the starting point of the rotation, i.e. 0°, the rotation direction as well as the illumination point are shown in Fig. 7. The dark round spot in Fig. 8(a) is the stop mentioned above. In Fig. 8(b) the darker blurred region reveals the presence of the inclusion. As illustrated in Fig. 8(c) the high frequency noise can be reduced by Fourier filtering.

Fig. 8.

a) Raw image of the phantom at position j = 180° as seen by the camera. b) Result of dividing the image in a) by the average of all 18 images. c) Same as in b) after Fourier filtering.

To measure the in - plane coordinates of the inclusion, after division by the background, the processed images obtained from Eq. (1) are counter rotated by the same angle used during acquisition to keep the inclusion stationary; the laser appears now at different positions, providing different points of view. As explained in Section 2.5, the intersection of two of these lines gives the in plane location of the inclusion. Using these pairs for four points of view, namely j 1 = 160°, j 2 = 180°, j 3 = 200° and j 4 = 220° we applied Eq. (8) for getting the x̄Inc and ȳInc of the inclusion. Table 1 summarizes the results of the entire procedure.

Finally, the depth of the inclusion, zInc was calculated, as explained above in Section 2.5. First, Eq. (11) was used to fit a radial intensity profile I 0(x) of the background image for values x between 1.5 cm and 4 cm with respect to the source position to derive the attenuation coefficient κ. The obtained value of 1.16 cm −1 is in excellent agreement with the value calculated from the known reduced scattering and absorption coefficient of the phantom (κ = 1.158 cm −1). Then, using this value together with the averaged coordinates x̄Inc, ȳInc, given in Table 1, we estimated the depth of the inclusion to be zInc = 0.81cm. In this step the reduced scattering coefficient of the phantom known from the time-resolved measurements (μ′s 0 = 9.46 cm −1) was used. As mentioned above, this value has little effect on the final result for zInc. In general, it is sufficient to use an approximate value representative for the particular type of tissue.

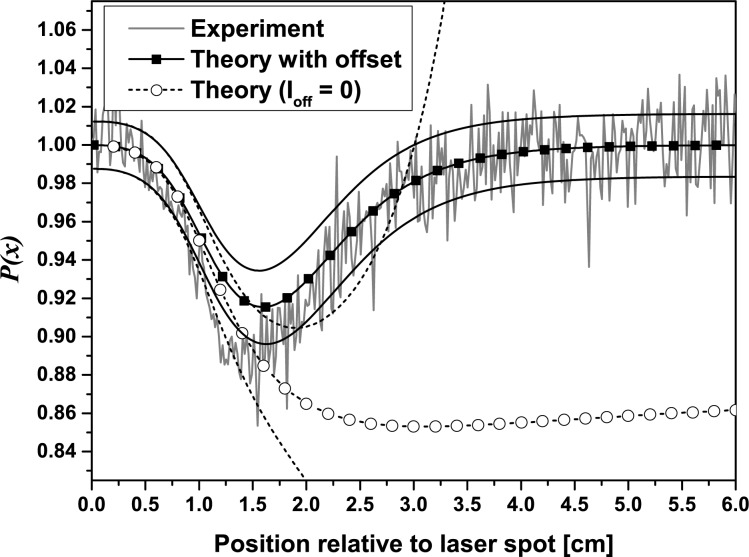

In order to determine the noise components introduced in Eq. (13) for the CCD Camera used in this work we measured the intensity dependence of the noise. The factors characterizing the three noise contributions were found to be na = 7.9, np = 0.88, and nr = 0.012. Accordingly, for the background image obtained from 18 single images, these values must be divided by . Additionally, the offset value estimated from a dark picture was Ioff = 497 ± 9.7. As expected, the standard deviation of this value meets approximately na given above. We used Eqs. (12) and (13) to study the influence of the dark offset and of the noise on the theoretical quotient P(x) (cf. Eq. (7)) for the experimental situation at j = 180°. Calculations were based on the true distance d = 1.1cm of the sphere from the laser. In order to add noise to the theoretical curves the standard deviation σP(x) of P(x) was derived from the noise values given above by error propagation:

| (15) |

Since σP(x) is intensity dependent, the photon flux densities J 0 and Jout calculated from Eqs. (2) and (3) were scaled by a factor A such that the integral along direction x fits to the integral of the measurement, whereby the region covered by the beam stop was excluded. Fig. 9 shows the theoretical profiles P(x) with and without background correction and the corresponding standard deviations from Eq. (15) together with the experimental profile.

Fig. 9.

Comparison of the experimental profile with theory using the camera offset value Ioff = 497. In addition, the theoretical profile is shown for the situation of background correction (Ioff = 0). For both simulations the standard deviation intervals are indicated by the black solid and dashed lines

As already mentioned in section 2.6, when adding a dark signal offset Ioff to the simulated intensities the modulation profile becomes smaller, and its depth is slightly reduced compared to the simulation without offset. In this way the experimental curve is well described by theory. The standard deviation of the simulated profile fits well to the experimental noise, too. When comparing the standard deviations plotted for both simulated profiles it is remarkable that the profile with offset yields a much better signal-to-noise ratio at larger distances from the illumination point than the calculation performed without any offset.

In order to apply the Neyman - Pearson criterion described previously, we must verify that the assumptions are correct, i.e. the obtained image must follow a normal distribution with mean 1 and unknown variance, and that the presence of an inclusion does not change the variance. Normality was confirmed by a Jarque-Bera test yielding a p value of 0.47. Then, a T-test showed that the sample comes from a normal distribution with mean 1 (p value of 0.43). And, finally, an F-test was run for variances, taken from 2 samples (one with inclusion and the other without it) to verify that both samples come from a normal distribution with same variance (p value of 0.79).

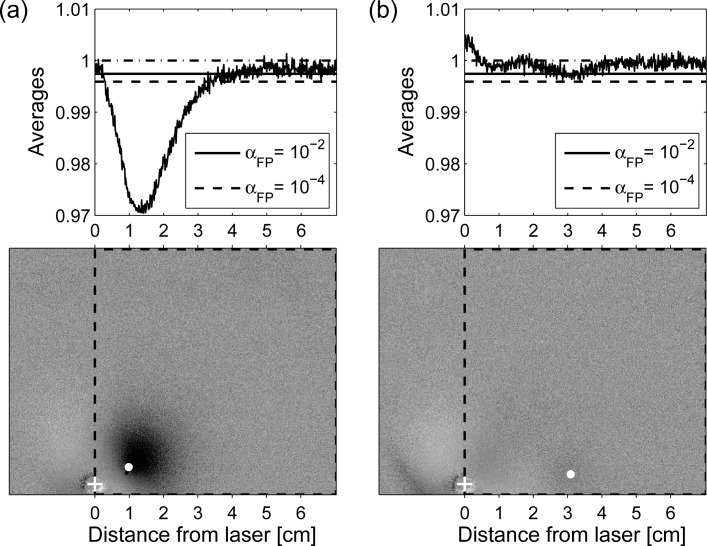

The Neyman-Pearson criterion can now be applied if we also approximate the deviation at each level of intensity by the maximum one found throughout the whole ratio image, i.e. we fix σP = max {σP(xk)}, where xk is the kth pixel of the image. We illustrate the application of the criterion to two selected ratio images shown in Fig. 10, namely for the inclusion at rotation position 180° and 140°. For both images we would like to decide about the presence of a lesion in the region of interest indicated by the dashed rectangle whereby we discard any previous knowledge about the true position of the lesion indicated by the white dots in the gray scale images. As a simple approach we have calculated the average horizontal profile from all pixel rows of the rectangular area for each case. These profiles are shown in the upper graphs of Fig. 10 together with the corresponding detection thresholds determined from Eq. (14). For both cases in Fig. 10 we show two significance levels, αFP, namely αFP = 10−2, resulting in a threshold value T (αFP = 10−2) = 0.998 and the very small level αFP = 10−4, resulting in a threshold value T (αFP = 10−4) = 0.996.

Fig. 10.

Result of applying the criterion of Section 2.6 to two ratio images. a) Corresponding to the case of the phantom rotated by 180° and b) corresponding to the phantom rotated by 140°. Values of the profiles are obtained by averaging all pixels in each column of the region delimited by the dashed contour. Threshold values, T, for different significance levels, are shown as horizontal lines. Inclusion is considered to be “detected” at positions at which the average values are below threshold. White crosses indicate the true position of the laser, and the white dots indicate the actual position of the inclusion inside the phantom.

In Fig. 10(a) the minimum of the profile is considerably smaller than both thresholds thus clearly indicating the presence of an inclusion in the selected rectangular region. The position of the dip corresponds to the horizontal coordinate of the inclusion. By analyzing the second dimension of the ratio image in a similar way, the inclusion can be localized along the vertical direction, too. In the right example of Fig. 10 the threshold for the 1% significance level is touched only by the small dip around xInc = 3cm. As can be seen from the white dot in the corresponding gray scale image, this dip fits to the true position of the inclusion in the experiment. Hence, detection of the inclusion which has a distance of 3cm from the laser in this case is at the limit.

By reducing the number of lines taken to calculate the average profile to a value which fits approximately to the extension of an expected inclusion the contrast in the resulting profile is optimized. Results of this type of analysis with the region for averaging chosen along the straight line between laser position and the known inclusion position are shown in Fig. 11. Besides the two situations discussed in Fig. 10 (180° and 140° rotation of the phantom), we also added the rotational position in between (160°). The distances between laser spot and true position of the inclusion amount to approximately 1cm, 2cm and 3cm. The experimental profiles in Fig. 11(a) were obtained by averaging 25 lines of the 2D ratio images. Since this number enters threshold calculation by Eq. (14) the threshold values for the two significance levels αFP = 10−2 and αFP = 10−4 are more far away from the baseline level P(x) = 1 than the thresholds plotted in Fig. 10. However, the detection of the most challenging inclusion at xInc = 3cm is improved now compared to Fig. 10. The signal dip is clearly below the threshold for αFP = 10−2. This result is confirmed by the theoretical profiles given in Fig. 11(b) which were calculated for the parameters of the experimental situation. When applying the smaller significance level of αFP = 10−4 the inclusion at xInc = 3cm is not detected even in the noise free simulation. In contrast, the inclusion is clearly visible at distances xInc = 1cm and xInc = 2cm in both the experiment (Fig. 11(a)) and the (noise-free) simulation (Fig. 11(b)).

Fig. 11.

Line profiles for three different distances (1cm, 2cm and 3cm) of the 1cm spherical inclusion from the illumination spot. a) Experimental result for a depth of zInc = 1cm obtained by averaging 25 lines of the ratio image. The dashed and the dotted line show the corresponding detection thresholds for significance levels of αFP = 10−2 and 10−4, respectively. b) Theoretical result for the parameters of the experiment in a). c) Theoretical result for an increased depth of the inclusion (zInc = 1.5cm).

For comparison, Fig. 11(c) represents the hypothetical situation of the same inclusion (that is, same size and same absorption contrast that the one in the experiments) and at the same three distances from the laser but at 1.5cm depth. Now the inclusion at 3cm distance from the laser is beyond the detection limit for both proposed thresholds, the inclusion at 2cm from the laser is only seen if T (αFP = 10−2) is taken, and the inclusion at 1cm from the laser is detected by both thresholds. Hence, lesions with a diameter of about 1cm and a 4-fold absorbing contrast should become visible in ratio images within a circular area of 2cm to 3cm radius around the laser illumination spot. In order to scan a larger tissue area, the grid of illumination points should have a step size not larger than 3cm to 4cm.

Note that if most of the profile, P(x), is clearly below the detection thresholds plotted in 10, this information can be used for a rough estimation of the maximum diameter of the inclusion. To this end, we determine the full width at half minimum of the dip in Fig. 10 taking the most conservative threshold level as a baseline. According to this estimate, we retrieve a diameter of about 1.5cm as an upper limit for the size of the inclusion having a true diameter of 1.0cm.

Our phantom experiment describes one particular configuration of a tumor in a homogeneous background. However, the threshold levels determined from the experiment can be used to derive the detection limits for objects of smaller or larger size as well as for other depth positions. From Fig. 11 we get a minimum modulation depth of 0.02 as a conservative estimate for object visibility. The corresponding contour line graphs in Fig. 5(a) and 5(b) permit us to determine the range of object size and depth position in which the object will be visible in the camera image with sufficient contrast, and, hence, the analysis to obtain its depth position can be successfully applied. From Fig. 5(a) we see, e.g., that a sphere of 4-fold absorption contrast with a radius of 2mm can be detected up to a depth of about 4mm, whereas an object with a radius of e.g. 8mm can be seen even for a depth of more than 35mm. The contour level 0.02 in Fig. 5(b) illustrates the corresponding detection limit for an object with a twofold increased absorption. It is shifted towards larger object size compared to the fourfold absorption contrast. At this point we should mention that we had performed another phantom experiment with a spherical inclusion of the same contrast and size as described above, but placed at a depth of 2.5cm. This sphere could not be detected, which is in accordance with the data shown in Fig. 5(a).

In general, the investigated phantom as well as the data in Fig. 5 present the situation of a single object hidden in a homogeneous background medium. For biological tissue the background can be heterogeneous which typically decreases the contrast of a lesion. In order to obtain a suitable background image one follows the same procedure as applied in the phantom experiment, i.e. recording a set of images by simultaneously moving the camera and the source position with respect to the tissue. When overlying these images the contribution of one or more lesions as well as of heterogeneities with large extensions will be averaged. The single images represent the coarse scanning of the tissue. In order to detect and localize a lesion it must have sufficient contrast with respect to background heterogeneities. This requirement is not a particular problem of the proposed method. It is present in planar scanning geometries and in tomographic geometries as well. Finally, when several lesions are present in the tissue, they can be analyzed as long as they appear as separate objects in the ratio image.

4. Conclusions

We developed a method that allows to locate absorbing inclusions in turbid media using the CW reflection geometry in a semi - infinite medium. The method is based on taking a set of 2D images of the diffuse reflection from the medium thereby varying the position of the point - like laser source and the camera with respect to the medium. Inclusions in the medium become visible after normalizing the images to a suited background image which is calculated as the average of all images recorded. In general, since 2D images of the surface are acquired, a coarse set of illumination points is sufficient, if compared to approaches that need a dense scanning grid on the medium surface. Using a theoretical model based on the diffusion approximation we showed that, for host media similar to biological tissues, tumor - like inclusions, having μ aInc ≲ 4μ a0 can be detected. Detection contrast depends on the inclusion’s depth and its diameter. Accordingly with data in the bibliography, [40–42] actual tumors may present absorptions coefficients of this order or less. However, for in-vivo investigations, absorption could be enhanced by adding a contrast agent like indocyanine green, thus improving the chances of detection [43].

To locate the inclusions in plane we proposed and tested an algorithm based on analyzing a subset of the acquired images. Having this information derived, the depth of the inclusion was also retrieved by taking the most probable paths of the photons (bananas) into account. To this end, the attenuation coefficient of the medium was derived from the CW measurements. To validate our approach we performed experiments on a solid phantom. The inclusion for this case was an absorbing sphere (μ aInc ≈ 4μ a0) with a diameter of 1.0 cm located at a depth of 1.0 cm. We showed that the approach works very well, finding the inclusion in 3D with errors about 10% for the in-plane coordinates and about 20% in the depth coordinate. We derived a detection criterion to determine the detection threshold taking the experimental noise into account. Additionally, using the detection criterion, we could set an upper bound for the diameter of the inclusion.

In conclusion, the proposed method is capable of both, locating a lesion in 3D and establishing its more absorbing nature. This information constitutes valuable prior knowledge, since it can be used to feed some inversion algorithms in order to retrieve the optical properties of the inclusion.

Acknowledgments

Authors thank for financial support from CONICET, PIP 2010–2012, N° 384 and ANPCyT, PICT 2008 N° 0570. Financial support from MinCyT - BMBF, Project AL/12/10 -01DN13022 NIRSBiT is also acknowledged. Helpful discussions with MSc. S. Torcida are greatly acknowledged.

References and links

- 1.Tromberg B. J., Pogue B. W., Paulsen K. D., Yodh A. G., Boas D. A., Cerussi A. E., “Assessing the future of diffuse optical imaging technologies for breast cancer management,” Med. Phys. 35(6), 2443–2451 (2008). 10.1118/1.2919078 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Choe R., Corlu A., Lee K., Durduran T., Konecky S. D., Grosicka-Koptyra M., Arridge S. R., Czerniecki B. J., Fraker D. L., DeMichele A., Chance B., Rosen M. A., Yodh A. G., “Diffuse optical tomography of breast cancer during neoadjuvant chemotherapy: a case study with comparison to MRI,” Med. Phys. 32(4), 1128–1139 (2005). 10.1118/1.1869612 [DOI] [PubMed] [Google Scholar]

- 3.Cerussi A., Shah N., Hsiang D., Durkin A., Butler J., Tromberg B. J., “In vivo absorption, scattering, and physiologic properties of 58 malignant breast tumors determined by broadband diffuse optical spectroscopy,” J. Biomed. Opt. 11(4), 044005 (2006). 10.1117/1.2337546 [DOI] [PubMed] [Google Scholar]

- 4.Gibson A. P., Hebden J. C., Arridge S. R., “Recent advances in diffuse optical imaging,” Phys. Med. Biol. 50, R1–R43, (2005). 10.1088/0031-9155/50/4/R01 [DOI] [PubMed] [Google Scholar]

- 5.Spinelli L., Torricelli A., Pifferi A., Taroni P., Danesini G. M., Cubeddu R., “Bulk optical properties and tissue components in the female breast from multiwavelength time-resolved optical mammography,” J. Biomed. Opt. 9(6), 1137–1142 (2004). 10.1117/1.1803546 [DOI] [PubMed] [Google Scholar]

- 6.Grosenick D., Wabnitz H., Rinneberg H., Moesta K. T., Schlag. P., “Development of a time-domain Optical mammograph and first in vivo applications,” Appl. Opt. 38(13), 2927–2943 (1999). 10.1364/AO.38.002927 [DOI] [PubMed] [Google Scholar]

- 7.Schmitz C. H., Klemer D. P., Hardin R., Katz M. S., Pei Y., Graber H. L., Levin M. B., Levina R. D., Franco N. A., Solomon W. B., Barbour. R. L, “Design and implementation of dynamic near-infrared optical tomographic imaging instrumentation for simultaneous dual-breast measurements,” Appl. Opt. 44(11), 2140–2153 (2005). 10.1364/AO.44.002140 [DOI] [PubMed] [Google Scholar]

- 8.Yu G., Durduran T., Lech G., Zhou C., Chance B., Mohler E. R., Yodh A. G., “Time-dependent blood flow and oxygenation in human skeletal muscles measured with noninvasive near-infrared diffuse optical spectroscopies,” J. Biomed. Opt. 10(2), 024027 (2005). 10.1117/1.1884603 [DOI] [PubMed] [Google Scholar]

- 9.Durduran T., Yu G., Burnett M. G., Detre J. A., Greenberg J. H., Wang J., Zhou C., Yodh A. G., “Diffuse optical measurement of blood flow, blood oxygenation, and metabolism in a human brain during sensorimotor cortex activation,” Opt. Lett. 29(15), 1766–1768 (2004). 10.1364/OL.29.001766 [DOI] [PubMed] [Google Scholar]

- 10.Riley J. D., Amyot F., Pohida T., Pursley R., Ardeshirpour Y., Kainerstorfer J. M., Najafizadeh L., Chernomordik V., Smith P., Smirniotopoulos J., Wassermann E. M., Gandjbakhche. A. H., “A hematoma detector -a practical application of instrumental motion as signal in near infra - red imaging,” Biomed. Opt. Express 3(1), 192–205 (2012). 10.1364/BOE.3.000192 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Hillman. E. M., “Optical brain imaging in vivo: techniques and applications from animal to man,” J. Biomed. Opt. 12(5), 051402 (2007). 10.1117/1.2789693 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Grosenick D., Hagen A., Steinkellner O., Poellinger A., Burock S., Schlag P., Rinneberg H., Macdonald. R., “A multichannel time-domain scanning fluorescence mammograph: performance assessment and first in vivo results,” Rev. Sci. Instrum. 82, 024302 (2011). 10.1063/1.3543820 [DOI] [PubMed] [Google Scholar]

- 13.Nziachristos V., Ma X., Chance. B., “Time correlated single photon counting imager for simultaneous magnetic resonance and near infrared mammography,” Rev. Sci. Instrum. 69, 4221–4233 (1998). 10.1063/1.1149235 [DOI] [Google Scholar]

- 14.O’ Sullivan T., Cerussi A., Cuccia D., Tromberg B., “Diffuse optical imaging using spatially and temporally modulated light,” J. Biomed. Opt. 17(7), 071311 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Gioux S., Mazhar A., Cuccia D. J., Durkin A. J., Tromberg B. J., Frangioni J. V., “Three-dimensional surface profile intensity correction for spatially modulated imaging,” J. Biomed. Opt. 14(3), 034045 (2009). 10.1117/1.3156840 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bassi A., Cuccia D. J., Durkin A. J., Tromberg. B. J., “Spatial shift of spatially modulated light projected on turbid media,” J. Opt. Soc. Am. A 25(11), 2833–2839 (2008). 10.1364/JOSAA.25.002833 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Gioux S., Mazhar A., Lee B. T., Lin S. J., Tobias A., Cuccia D. J., Stockdale A., Oketokoun R., Ashitate Y., Kelly E., Weinmann M., Durr N. J., Moffitt L. A., Durkin A. J., Tromberg B. J., Frangioni J. V., “First-in-human pilot study of a spatial frequency domain oxygenation imaging system,” J. Biomed. Opt. 16(8), 086015 (2011). 10.1117/1.3614566 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Weber J. R., Cuccia D. J., Johnson W. R., Bearman G. H., Durkin A. J., Hsu M., Lin A., Binder D. K., Wilson D., Tromberg B. J., “Multispectral imaging of tissue absorption and scattering using spatial frequency domain imaging and a computed-tomography imaging spectrometer,” J. Biomed. Opt. 16(1), 011015 (2011). 10.1117/1.3528628 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Dierkes T., Grosenick D., Moesta K. T., Möller M., Schlag P., Rinneberg H., Arridge S., “Reconstruction of optical properties of phantom and breast lesion in vivo from paraxial scanning data,” Phys. Med. Biol. 50(11), 2519–2542 (2005). 10.1088/0031-9155/50/11/006 [DOI] [PubMed] [Google Scholar]

- 20.Liu J., Li A., Cerussi A. E., Tromberg. B. J., “Parametric diffuse optical imaging in reflectance geometry,” IEEE J. Sel. Topics Quantum Electron 16(3), 555–564 (2010). 10.1109/JSTQE.2009.2034615 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Tavakoli B., Zhu. Q., “Two-step reconstruction method using global optimization and conjugate gradient for ultrasound-guided diffuse optical tomography,” J. Biomed. Opt. 18(1), 16006 (2013). 10.1117/1.JBO.18.1.016006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Born M., Wolf E., Principles of optics (Cambridge Unversity Press, 1999). 10.1017/CBO9781139644181 [DOI] [Google Scholar]

- 23.Carbone N., Di Rocco H., Iriarte D., Pomarico. J., “Solution of the direct problem in turbid media with inclusions using Monte Carlo simulations implemented on graphics processing units: new criterion for processing transmittance data,” J. Biomed. Opt. 15(3), 035002 (2010). 10.1117/1.3442750 [DOI] [PubMed] [Google Scholar]

- 24.Di Rocco H., Iriarte D., Lester M., Pomarico J., Ranea-Sandoval. H. F., “CW Laser transilluminance in turbid media with cylindrical inclusions,” Int. J. Light Electron Opt. 122, 577–581 (2011). 10.1016/j.ijleo.2010.04.013 [DOI] [Google Scholar]

- 25.Li X. D., OLeary M. A., Boas D. A., Chance B., Yodh. A. G., “Fluorescent diffuse photon density waves in homogeneous and heterogeneous turbid media: analytic solutions and applications,” Appl. Opt. 35(19), 3746–3758 (1996). 10.1364/AO.35.003746 [DOI] [PubMed] [Google Scholar]

- 26.Grosenick D., Kummrow A., Macdonald R., Schlag P. M., Rinneberg. H., “Evaluation of higher-order time-domain perturbation theory of photon diffusion on breast-equivalent phantoms and optical mammograms,” Phys. Rev. E 76(6), 061908 (2007). 10.1103/PhysRevE.76.061908 [DOI] [PubMed] [Google Scholar]

- 27.Ishimaru A., Wave propagation and scattering in Random Media (Oxford University Press, 1997). [Google Scholar]

- 28.Zhu X. D., Wei S., Feng S. C., Chance B., “Analysis of a diffuse-photon-density wave in multilpe-scattering media in the presence of a small spherical object,” J. Opt. Soc. Am. A 23(3), 494–499 (1996). 10.1364/JOSAA.13.000494 [DOI] [Google Scholar]

- 29.Contini D., Martelli F., Zaccanti G., “Photon migration through a turbid slab described by a model based on diffusion approximation. I. Theory,” Appl. Opt. 38(19), 4587–4599 (1997) 10.1364/AO.36.004587 [DOI] [PubMed] [Google Scholar]

- 30.Haskell R. C., Svaasand L. O., Tsay T. T., Feng T. C., McAdams M. S., Tromberg B. J., “Boundary conditions for the diffusion equation in radiative transfer,” J. Opt. Soc. Am. A 11(10), 2727–2741 (1994). 10.1364/JOSAA.11.002727 [DOI] [PubMed] [Google Scholar]

- 31.Feng S., Zeng Fan-An, Chance. B., “Photon migration in the presence of a single defect: a perturbation analysis,” Appl. Opt. 34, 3826–3837 (1995). 10.1364/AO.34.003826 [DOI] [PubMed] [Google Scholar]

- 32.Aksel E. B., Turkoglu A. N., Ercan A. E., Akin. A., “Localization of an absorber in turbid semi - infinite medium by spatially resolved continuous - wave diffuse reflectance measurements,” J. Biomed. Opt. 16(8), 086010 (2011). 10.1117/1.3615238 [DOI] [PubMed] [Google Scholar]

- 33.Arridge S. R., Lionheart W. R., “Nonuniqueness in diffusion-based optical tomography,” Opt. Lett. 23, 882–884 (1998). 10.1364/OL.23.000882 [DOI] [PubMed] [Google Scholar]

- 34.Ripoll Lorenzo J., “Difusión de luz en medios turbios con aplicación biomédica,”, Ph.D. Thesis, Universidad Autónoma de Madrid, Spain, (2000). [Google Scholar]

- 35.Colak S. B., Papaioannou D. G., ’t Hooft G. W., van der Mark M. B., Schomberg H., Paasschens J. C. J., Melissen J. B. M., van Asten N. A. A. J., “Tomographic image reconstruction from optical projections in light-diffusing media,” Appl. Opt. 36, 180–213 (1997). 10.1364/AO.36.000180 [DOI] [PubMed] [Google Scholar]

- 36.Ziegler R., Brendel B., Schiper A., Harbers R., van Beek M., Rinneberg H., Nielsen. T., “Investigation of detection limits for diffuse optical tomography systems: I. Theory and experiment,” Phys. Med. Biol. 54, 399–412 (2009). 10.1088/0031-9155/54/2/015 [DOI] [PubMed] [Google Scholar]

- 37.Kay S. M., “Fundamental of Statistical Signal Processing - Detection Theory,”, Prentice Hall Signal Processing Series, (1993). [Google Scholar]

- 38.Hagen A., Steinkellner O., Grosenick D., Möller M., Ziegler R., Nielsen T., Lauritzen K., Macdonald R., Rinneberg. H., “Development of a multi-channel time-domain fluorescence Mammograph,” Proc. SPIE 6434, 64340Z (2007). 10.1117/12.701010 [DOI] [Google Scholar]

- 39.Schieck. S., “Preparation and characterization of structured tissue - like phantoms for biomedical optics,” Diploma Thesis, Berlin, (2005) (In German). [Google Scholar]

- 40.Torricelli A., Pifferi A., Taroni P., Giambattistelli E., Cubeddu. R., “In vivo optical characterization of human tissue from 610 to 1010 nm by time resolved reflectance spectroscopy,” Phys. Med. Biol. 46(8), 2227–2237 (2001). 10.1088/0031-9155/46/8/313 [DOI] [PubMed] [Google Scholar]

- 41.Vo-Dinh T., Biomedical Photonics Handbook (CRC Press, 2003). 10.1201/9780203008997 [DOI] [Google Scholar]

- 42.Grosenick D., Wabnitz H., Moesta K. T., Mucke J., Schlag P. M., Rinneberg. H., “Time-domain scanning optical mammography: II. Optical properties and tissue parameters of 87 carcinomas,” Phys. Med. Biol. 50, 2451–2468 (2005). 10.1088/0031-9155/50/11/002 [DOI] [PubMed] [Google Scholar]

- 43.Intes X., Ripoll J., Chen Yu, Nioka. S., Yodh A.G., Chance. B., “In vivo continuous-wave optical breast imaging enhanced with indocyanine green,” Med. Phys., 30(6) 1039–1047, (2003). 10.1118/1.1573791 [DOI] [PubMed] [Google Scholar]