Abstract

Over the past 2 decades, e-learning has evolved as a new pedagogy within pharmacy education. As learners and teachers increasingly seek e-learning opportunities for an array of educational and individual benefits, it is important to evaluate the effectiveness of these programs. This systematic review of the literature examines the quality of e-learning effectiveness studies in pharmacy, describes effectiveness measures, and synthesizes the evidence for each measure. E-learning in pharmacy education effectively increases knowledge and is a highly acceptable instructional format for pharmacists and pharmacy students. However, there is limited evidence that e-learning effectively improves skills or professional practice. There is also no evidence that e-learning is effective at increasing knowledge long term; thus, long-term follow-up studies are required. Translational research is also needed to evaluate the benefits of e-learning at patient and organizational levels.

Keywords: pharmacy education, e-learning, knowledge assessment, computer instruction, Internet

INTRODUCTION

The fundamental purpose of pharmacy education is to provide pharmacy students with the knowledge and skills to become pharmacists, and then to enable pharmacists to remain competent in the profession. The traditional pedagogy involving face-to-face instruction has evolved alongside the maturation of the Internet. Increasingly, pharmacists, pharmacy students, and pharmacy educators encounter teaching and learning opportunities beyond the classroom, with more and more content delivered online.1-5 Historically, online learning (using information and communication technologies) represented one facet of e-learning, while computer-based learning (using standalone multimedia such as a CD-ROM) represented another. Now e-learning is defined as learning conducted through an Internet process.6,7

E-learning programs are truly ubiquitous, and for this reason they offer attractive solutions for educating large, geographically diverse populations. They allow standardized educational content to be easily distributed and updated. Learners gain control over time and place of learning, while programs provide automated real-time feedback for teachers and learners. Moreover, rather than move away from teacher-centered pedagogy, educators enhance and extend existing curricula with e-learning opportunities, and learners embrace this.4,7-10 However, as e-learning becomes a common feature in pharmacy education, the need to demonstrate its effectiveness increases.

Measuring and defining the effectiveness of complex interventions such as e-learning is difficult.11-13 In 1959, Donald Kirkpatrick proposed a 4-level model for evaluation of training programs.14 Further, in 2009, the Best Evidence Medical Education (BEME) Collaboration adopted the levels, which it termed “Kirkpatrick’s hierarchy,” as a grading standard for literature reviews.15 In both instances, the levels may be simply defined as (1) reaction, (2) learning, (3) behavior, and (4) results. Reaction is a measure of program satisfaction. Learning is a measure of attitudes, knowledge, or skills change as a result of the program. Behavior is represented by the transfer of learning to the workplace. Finally, results are a measure of how the learning has changed organizational practice or patient outcomes.

Several reviews have evaluated the effectiveness of e-learning in the health professions, some with and others without applying the concepts of Kirkpatrick’s hierarchy.8,9,16-22 However, there are no reviews of the effectiveness of e-learning in pharmacy education. We conducted a systematic review to identify and evaluate the literature on effectiveness of e-learning in pharmacy education. We used Kirkpatrick’s hierarchy to guide outcome measures. Our primary aim was to determine effectiveness in terms of learning, behavior, and results. Our secondary aim was to assess effectiveness as reactions to e-learning programs.

METHODS

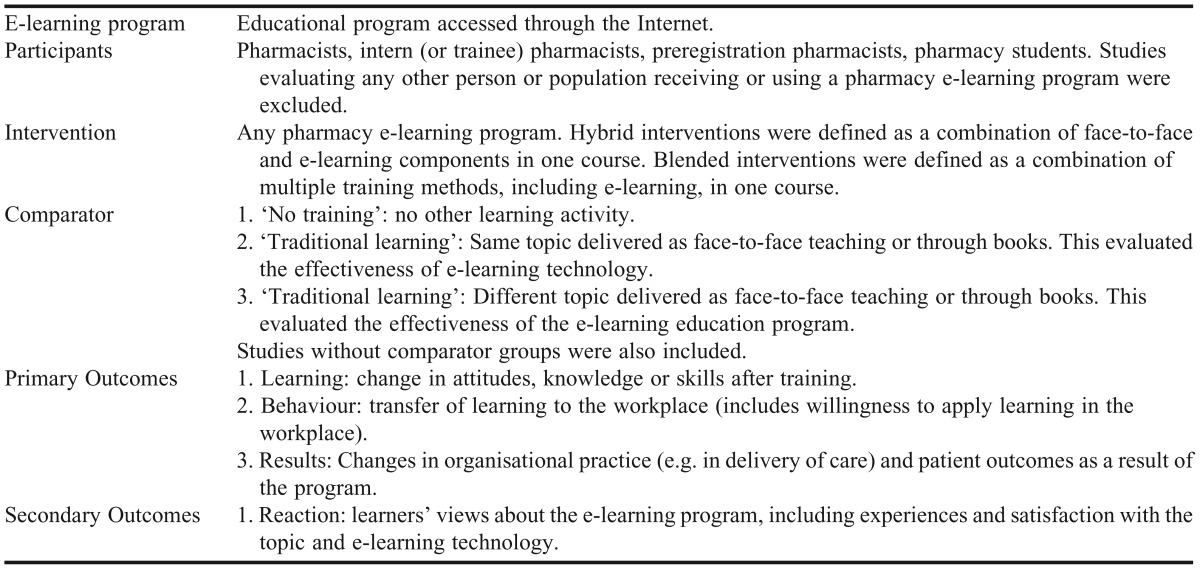

This systematic review was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Statement.23 The protocol for the review is published elsewhere.24 We defined specific criteria to allow a focused review of the effectiveness of e-learning in pharmacy education (Table 1). We included any effectiveness research that evaluated e-learning programs in undergraduate, postgraduate, and continuing professional development pharmacy education. We did not set limits on study design, language, or year of publication.

Table 1.

Definitions Used in Conducting a Systematic Review of eLearning in Pharmacy Education

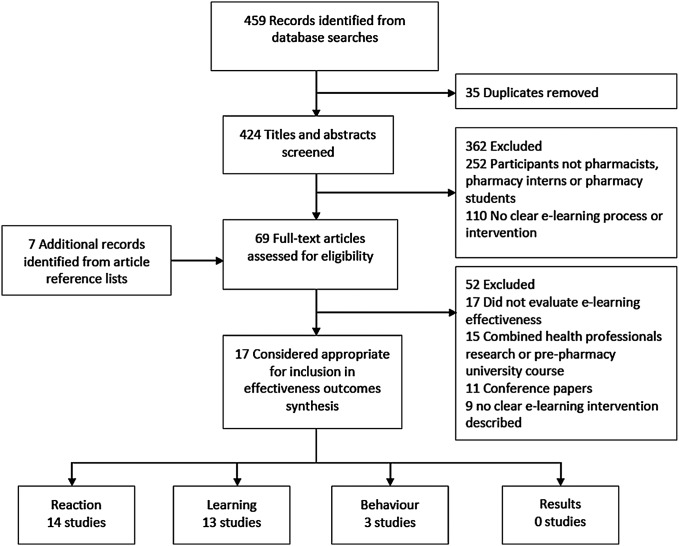

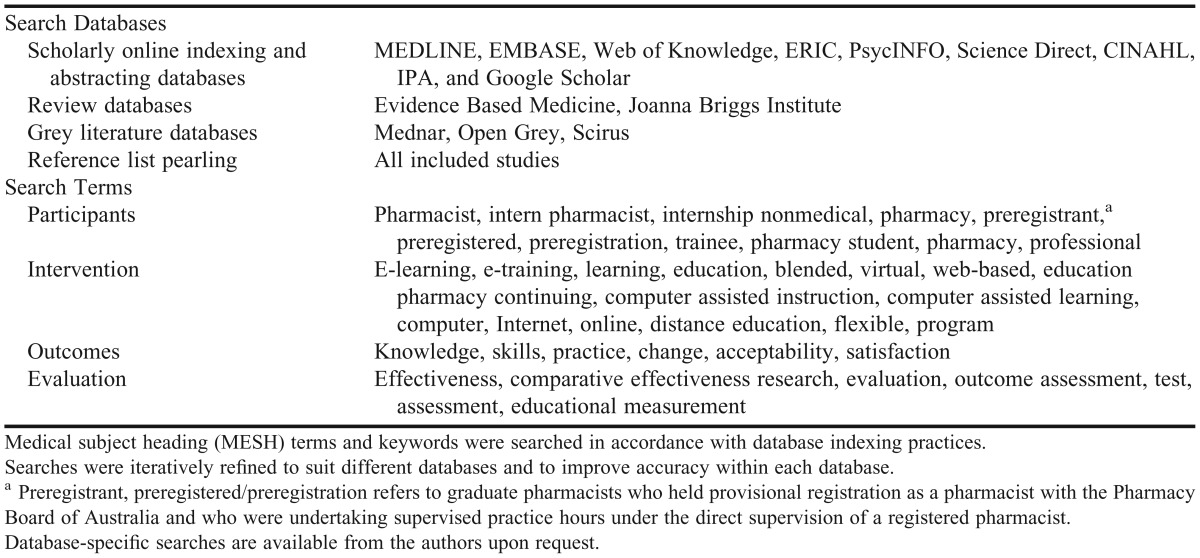

A senior reference librarian at The University of Western Australia’s Medical and Dental Library with expertise in conducting systematic literature reviews was consulted as part of the process to develop a comprehensive search strategy (Table 2). Databases were searched from inception to June 4, 2013. The review was conducted using the Web-based systematic review software, DistillerSR (Evidence Partners Incorporated, Ottawa, Canada). All identified citations were uploaded to DistillerSR and duplicates were removed. We developed forms for title/abstract and full-text screening according to the stated eligibility criteria, and pilot tested them before implementing them in study selection. Two reviewers independently and in duplicate screened all titles and abstracts. Potentially eligible abstracts, abstracts where reviewers disagreed, or abstracts with insufficient information were retrieved for full text review. Two reviewers then assessed the eligibility of each study in duplicate, and a final list of studies was determined. Agreement between reviewers was measured using Cohen’s kappa (weighted kappa for title/abstract screen was 0.75, and for full text screen, 0.88, estimated using DistillerSR). Conflicts were resolved by consensus. Reasons for exclusion were documented and are presented in Figure 1.
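The agreement statistic reported above can be illustrated with a minimal sketch of unweighted Cohen's kappa for two reviewers' include/exclude decisions. The function name and the screening decisions below are hypothetical and are not data from this review; how DistillerSR computes its weighted kappa is not described here, so this is only an illustration of the underlying idea (for a two-category decision, weighted and unweighted kappa coincide).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' categorical decisions."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: proportion of items given the same label.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical screening decisions for 10 abstracts (illustration only).
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the reviewers agree on 8 of 10 abstracts, but because agreement of 0.58 would be expected by chance alone, kappa is considerably lower than the raw agreement of 0.80.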

Table 2.

Search Strategy Used in Conducting a Systematic Review of eLearning in Pharmacy Education

Figure 1.

Systematic review flow. Studies may have contributed more than one effectiveness outcome measure. Reaction=satisfaction and course opinions. Learning=change in attitudes, knowledge or skills (including perceptions of these). Behavior=practice change (actual or willingness to change). Results=organizational change and patient benefit.

Two reviewers independently abstracted data using a series of dedicated forms we developed based on the Evidence for Policy and Practice Information and Coordinating Centre (EPPI-Centre) data extraction and coding tool for education studies.25 These forms were piloted and refined prior to data abstraction, and applied through DistillerSR. We assessed reviewer agreement in data abstraction using Cohen’s kappa, where 0=agreement no better than chance and 1=complete agreement. We abstracted data on study characteristics (study aims, location, participants, intervention topic, and assessment; kappa range 0.53-1); study design and methodology (sampling and recruitment, blinding, power, funding; kappa range 0.43-1); data collection and analysis (how data were collected, use and reliability of tools, statistical analysis; kappa range 0.48-1); and outcomes. As the focus of this review was on effectiveness, we sought information for outcomes that measured change after the e-learning intervention was delivered. The form for learning outcomes identified knowledge or skills change, and problem-solving ability (kappa range 0.55-1). The form for behavior and results outcomes identified willingness to change behavior or practice change, and organizational change or patient benefit (kappa range 0.64-1). The form for reaction outcomes identified satisfaction, attitudes, and opinions (kappa range 0.48-1). Finally, where relevant, we contacted authors by e-mail to request missing data.

We expected the studies to be diverse, to include both qualitative and quantitative designs, to be mostly noncomparative, and, by the very nature of e-learning interventions, to be limited in their ability to conceal the intervention from the participant. Further, acknowledging that quality assessment of education intervention studies is complex,12 we considered no single published quality assessment tool to be appropriate for this review. However, aspects of 3 published tools were considered relevant to quality: the Cochrane Risk of Bias Criteria for Effective Practice and Organisation of Care reviews tool,26 the NICE quality appraisal checklist,27 and the EPPI-Centre data extraction and coding tool for education studies.25 To obtain a more robust assessment of quality, we developed a quality assessment tool that included relevant aspects from each of the published tools as well as additional criteria, and embedded the assessment within the data abstraction forms in DistillerSR (Appendix 1).

We concurrently assessed the impact of each intervention in terms of Kirkpatrick’s hierarchy, and strength of findings for each study in terms of the BEME weight of evidence rating scale (strength 1=no clear conclusions can be drawn, not significant; 2=results ambiguous, but there appears to be a trend; 3=conclusions can probably be based on the results; 4=results are clear and very likely to be true; 5=results are unequivocal).15

There was significant variation between studies in terms of design, intervention, duration, assessment method, and outcome. There were few controlled studies, and every study assessed a different topic within pharmacy education. Few studies reported sufficient data to enable calculation of a combined effect size, and there was limited response to requests for data. Given the contextual limitations on methodology in education research (and the associated complication of interpreting education outcomes),13 and the risks associated with evidence from uncontrolled studies and from imputing data, it was neither possible nor appropriate to conduct a meta-analysis for any outcome.

We adopted a modified meta-narrative approach to synthesis.28,29 We considered how e-learning effectiveness was conceptualized, using key outcome measures and how they were assessed in each study. To start, outcomes were broadly themed according to the 4 levels of Kirkpatrick’s hierarchy. Depending on how the outcome was defined (eg, perceived confidence, actual knowledge) and measured (eg, rating scales, formal test), we then iteratively categorized the results of each study to yield a detailed map of e-learning effectiveness in pharmacy education.

RESULTS

Our search strategy identified 459 records from database searches. After adjusting for duplicates, we screened 424 records, and excluded 362 because they did not assess e-learning interventions or because the participants were not pharmacists or pharmacy students. We identified a further 7 citations from reference lists and examined the remaining 69 records in detail. Of these, 17 studies met the criteria for review.
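As a quick consistency check, the record counts reported above can be reconciled with a few lines of arithmetic. This is only an illustrative sketch: the variable names are ours, and the derived figures (duplicates removed, records excluded after detailed examination) are computed from the stated counts rather than quoted from the text.

```python
# Counts stated in the text.
records_identified = 459         # from database searches
records_screened = 424           # after adjusting for duplicates
excluded_at_screening = 362      # not e-learning, or not pharmacy participants
added_from_reference_lists = 7   # citations found in reference lists
studies_included = 17            # met the criteria for review

# Derived values (computed here, not quoted from the text).
duplicates_removed = records_identified - records_screened
examined_in_detail = (records_screened - excluded_at_screening
                      + added_from_reference_lists)
excluded_after_detailed_review = examined_in_detail - studies_included

print(duplicates_removed, examined_in_detail, excluded_after_detailed_review)
```

The computed number of records examined in detail matches the 69 reported in the text.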

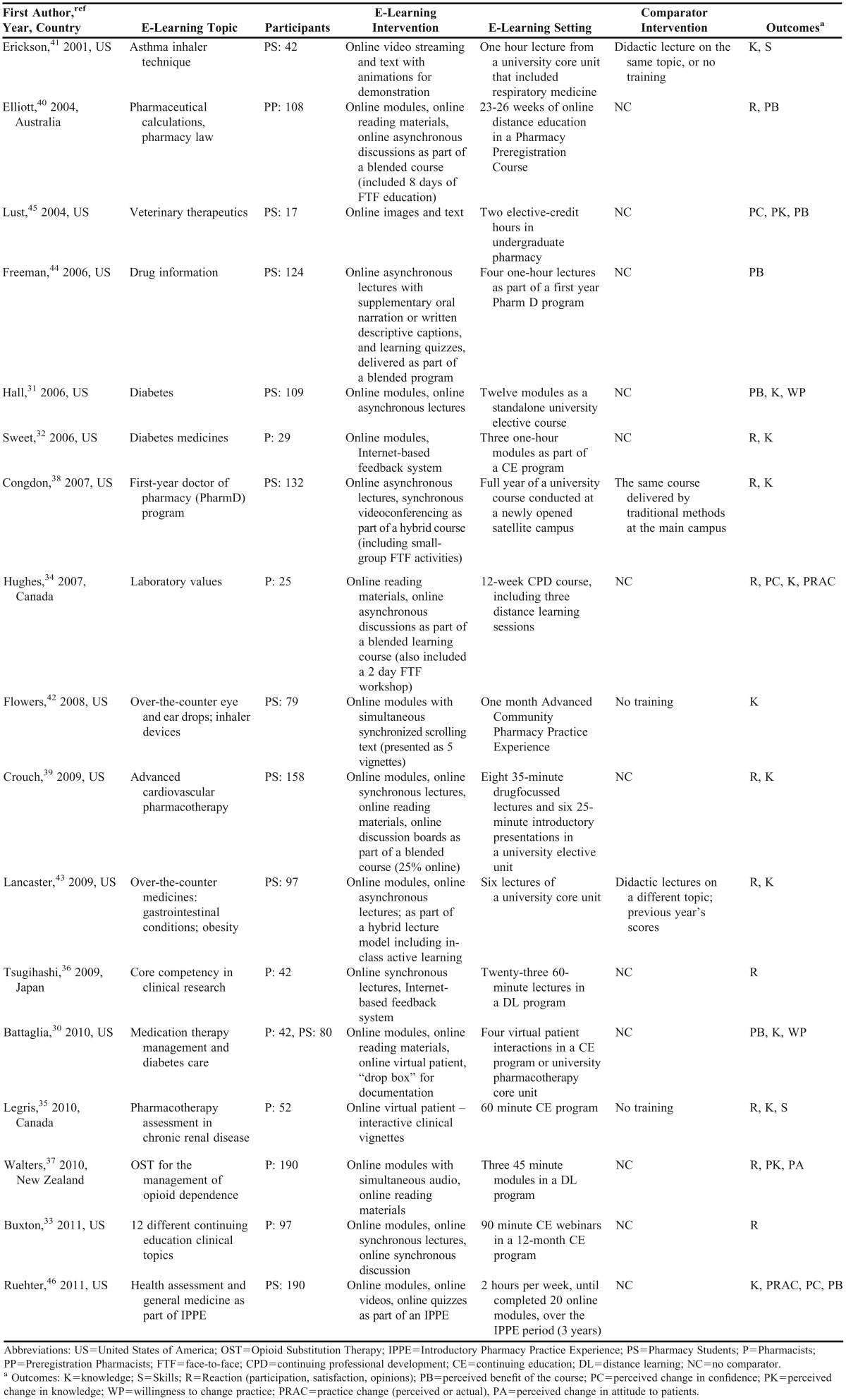

Table 3 summarizes the characteristics of pharmacy e-learning effectiveness studies. Every study assessed a different learning topic, although 3 studies included diabetes within their focus.30-32 Six studies (35%) assessed effectiveness of e-learning in pharmacists;32-37 10 studies (59%) assessed pharmacy students (of which 1 included preregistration pharmacists);31,38-46 and 1 study assessed both pharmacists and pharmacy students.30 The number of participants in each study ranged from 17 to 190.

Table 3.

Description of Studies Included in a Systematic Review of eLearning in Pharmacy Education

Fourteen studies (82%) delivered e-learning in more than 1 format. The most common interventions were online modules, with or without simultaneous audio. Online reading materials, synchronous and asynchronous lectures, virtual patients, compulsory discussions (with peers or teachers), online feedback systems, and multimedia vignettes were also presented. Six studies (35%) included traditional methods, such as face-to-face lectures, workshops or small-group activities, as part of a blended or hybrid approach.31,38-40,43 Five studies (29%) included a comparator group (non-Internet teaching on the same or different topics, or no training). There was significant variation in setting, including mode of delivery (continuing education, distance learning, university core and elective units, university courses, and pre-registration training), and duration of the intervention (range: 25 minutes to 1 academic year of education).

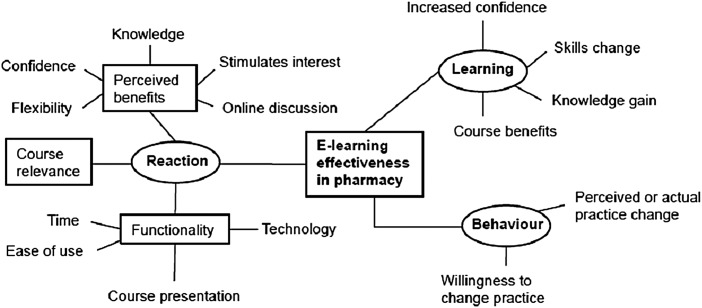

Effectiveness was measured using a variety of objective and subjective assessments, including pre-post knowledge tests, curriculum tests, mock patients, rating scales, semi-structured interviews, and written or online surveys. All objective assessments were analyzed quantitatively, while subjective assessments were analyzed qualitatively and/or quantitatively. We identified 3 effectiveness outcomes based on Kirkpatrick’s hierarchy (reaction, learning, and behavior), with 13 studies (76%) reporting more than 1 of these outcomes. A further 19 effectiveness themes emerged through the iterative process. These were refined and presented as a thematic map of e-learning effectiveness in pharmacy education (Figure 2).

Figure 2.

Thematic map of e-learning effectiveness concepts in pharmacy education.

Reaction was assessed subjectively, with different instruments and scales in each study. E-learning programs were considered beneficial in improving knowledge and confidence, and stimulating interest.30,31,35,37,39,40,44-46 Courses were evaluated in terms of their functionality, which was measured as time taken to complete the course,35,38,39 online navigation (programs were easy to use and user-friendly),32,33,35,37,39,44 course presentation (courses were acceptably designed and integrated),31,35,37,40,46 and technical issues (online access, and quality of recordings).31,33,43,44 The majority of pharmacists and pharmacy students considered their e-learning course to be relevant and practical.30,32,33,35,37,39,45,46 One study reported dissatisfaction with online lectures in pharmacy students.44

Learning was assessed objectively and subjectively. Of 11 studies assessing knowledge change, all reported a significant improvement in knowledge immediately after e-learning.30-32,34,35,38,39,41-43,46 However, the magnitude of the gain varied considerably from study to study (range 7% to 46%). Comparative studies assessing knowledge change demonstrated e-learning to be equivalent to lecture-based learning and superior to no training.35,38,41-43 One skills assessment reported significant gains (24% increase after training; adjusted compared to control),35 while another reported superior skills after e-learning in a posttest compared to control.41 Significant gains in self-perceived confidence or knowledge after e-learning varied in magnitude, depending on whether a 5- or 7-point rating scale was used. Most ratings improved by 1-2 points on each scale, representing a change between 14% and 40%.34,37,45,46
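The 14% to 40% range quoted for self-perceived ratings is consistent with expressing the points gained as a fraction of the number of scale points. The sketch below works through that arithmetic; the conversion formula and the function name are our assumption about how the percentages arise, not a method stated by the included studies.

```python
def scale_change_percent(points_gained, scale_points):
    """Express a rating gain as a percentage of the scale length.

    Assumed conversion (not stated in the source):
    percent = 100 * points gained / number of scale points.
    """
    if scale_points <= 0:
        raise ValueError("scale_points must be positive")
    return 100 * points_gained / scale_points


# Gains of 1 or 2 points on 5-point and 7-point scales.
for scale_points in (5, 7):
    for points_gained in (1, 2):
        pct = scale_change_percent(points_gained, scale_points)
        print(f"{points_gained} point(s) on a {scale_points}-point scale: {pct:.0f}%")
```

Under this assumption, the smallest change (1 point on a 7-point scale) is about 14% and the largest (2 points on a 5-point scale) is 40%, which illustrates why the same raw gain reads differently depending on the scale used.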

Behavior was assessed subjectively, as direct application of knowledge or skills to the workplace,34,46 or willingness to change practice.30,31 Although intended behavior change was reported, the intention varied across studies, depending on the educational topic.

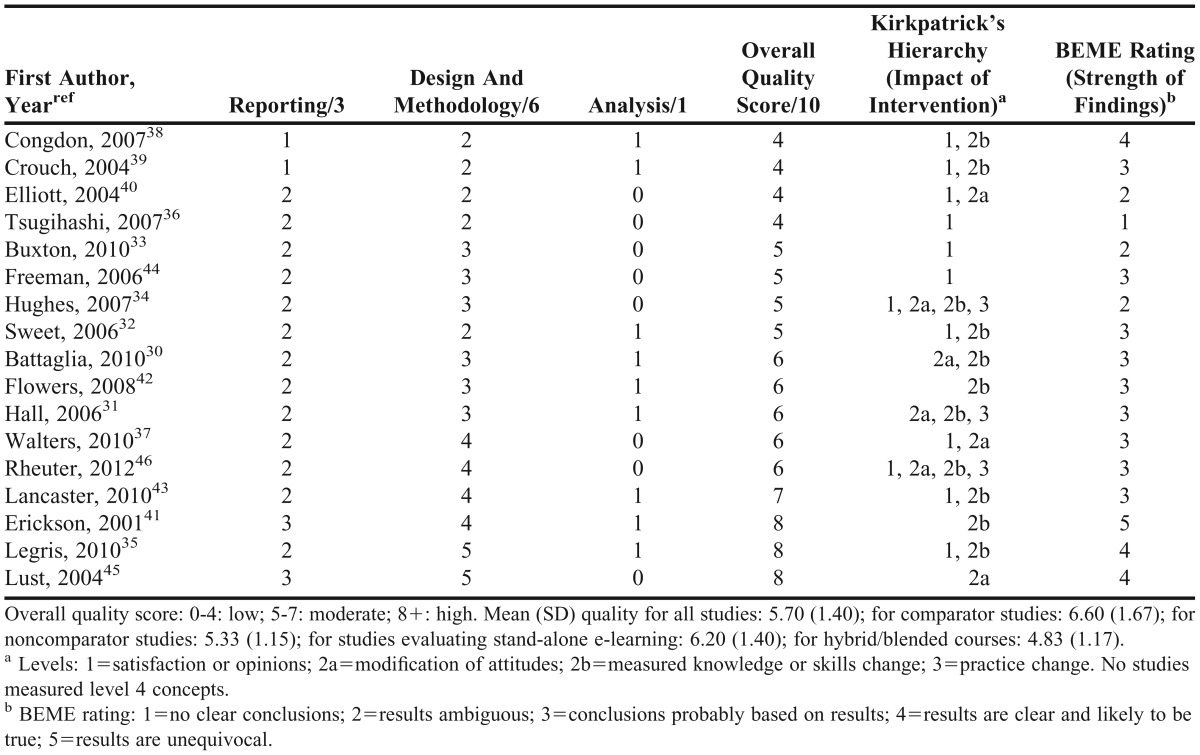

The quality of each study was rated as low (0-4), moderate (5-7), or high (8+), with a maximum score of 10 points. The mean quality for all included studies was 5.7 (Table 4).
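The banding described above can be written as a small helper. This is a sketch under the assumption that individual study scores are whole numbers from 0 to 10; the function name is hypothetical and the individual criteria that make up a score (Appendix 1) are not modeled here.

```python
def quality_category(score):
    """Map an integer quality score (0-10) to the bands used in this review."""
    if not isinstance(score, int) or not 0 <= score <= 10:
        raise ValueError("score must be an integer from 0 to 10")
    if score <= 4:
        return "low"        # 0-4
    if score <= 7:
        return "moderate"   # 5-7
    return "high"           # 8-10


# Band for every possible score.
bands = {score: quality_category(score) for score in range(11)}
print(bands)
```

The reported mean of 5.7 lies between the moderate-band limits of 5 and 7.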

Table 4.

Quality of Studies Included in a Systematic Review of eLearning in Pharmacy Education

For all studies, the most common flaws in methodology were selection bias and associated poor external validity (narrow sampling frame, convenience sampling, self-selection, use of financial incentives, lack of randomization). Lack of validated tools30,33,34,36,38,40,43 and/or no control group30-34,36,37,39,40 limited the quality of 11 of the 17 studies. Only 4 studies reported research questions or hypotheses.30,33,41,45 Two studies had significant loss (40% or greater) at follow-up (posttests).31,37 Almost all studies included self-report (subjective) data; in uncontrolled studies, confounders affecting opinions were not identified or considered in study design or analysis. Most studies did not clearly explain analyses or fully report results of analyses (eg, significant differences claimed based on pooled data, where pooled results were not reported).

There was no apparent relationship between study quality scores and the impact of e-learning interventions as graded on Kirkpatrick’s hierarchy. Conversely, BEME strength of findings showed a trend, with higher-quality studies receiving higher ratings on the BEME scale.

DISCUSSION

This review is the first to comprehensively examine the effectiveness of e-learning in pharmacy education. Effectiveness is a complex, theoretical construct; here we used Kirkpatrick’s hierarchy to guide the development of a detailed e-learning effectiveness map in pharmacy education. Our primary interest was effective learning. Eleven studies evaluated knowledge change. Ten studies conducted pre- and post-intervention tests only, and 1 study conducted an additional 2 follow-up tests.39 All reported a significant improvement in knowledge after e-learning, although the magnitude of the gain varied widely (7% to 46%). This confirms that e-learning in pharmacy education is effective at increasing knowledge immediately after training. Additionally, in comparisons, e-learning was as effective as traditional learning and superior to no training. These results concur with the breadth of literature demonstrating the effectiveness of e-learning in developing knowledge in other professions.8,9,16-22 However, long-term knowledge change as a result of e-learning remains unknown.

Attitudinal change (assessed as pre- and post-e-learning ratings) evaluated professional confidence in performing tasks and perceived knowledge. The evidence, while significant, was limited. In all cases assessment was subjective, gleaned through questionnaires with rating scales and survey instruments. Improvements in attitude were seen immediately after e-learning. However, the results need to be interpreted with caution: scale format data should not be analyzed on an item-by-item basis, and ordinal data are at risk of distortion when reported as mean scores, as occurred in 4 studies.30,33,34,37,47,48 There was no evidence of long-term change in attitude.

Our primary definition of effectiveness also included change in skills or practice. The evidence for effectiveness in these terms was limited and generally based on self-report data from small groups.30,31,34,41 Only 1 study employed sufficient methodological rigor to objectively report a positive change in skills after e-learning.35 Conducting objective skills or practice assessments is costly and time consuming, and requires greater dedication than objective knowledge assessments. However, the goal of quality education must be to improve skills and practice, and research should be directed to address this. There were no e-learning effectiveness studies for organizational change or patient benefit, the highest level in Kirkpatrick’s hierarchy. Translational research is required to determine the benefits of e-learning at this level.

Our secondary aim was to assess effectiveness as reactions to e-learning programs. Effectiveness measures for reactions included perceived benefits of e-learning, relevance of the specific e-learning course, and e-learning functionality. Most pharmacists agree that e-learning formats stimulate interest, provide flexible alternatives to traditional methods, and are easy to use. There is limited evidence for acceptance of technology used in e-learning, although technology is central to the process. This may be because the Internet is so inherently a part of everyday life that the details of technology are overlooked in research. Poor recordings or difficult access can lead to bad learning experiences. Further, as students (as part of the millennial generation) embrace other e-learning opportunities such as social media applications or massive open online courses (MOOCs), continued evaluation of e-learning technology will be essential. Finally, courses were presented in a myriad of formats, and satisfaction with course design and educational content was generally high.31,32,35,37,39,40,44-46

Overall, the findings of these studies show that learners consider e-learning a highly acceptable instructional format in pharmacy education. However, we acknowledge the risk that ratings may have been subject to response bias and that respondents’ impressions may have changed over time after completing the e-learning course. Opinions may be affected by external factors, especially in times of stress (eg, pharmacy students may score ratings differently after examinations compared to usual coursework); however, this is true for any instructional format. Finally, we observed that the impression of the educator is missing from e-learning satisfaction research.

Our study has several limitations. We limited the eligibility criteria for inclusion to studies that reported evaluations of the effectiveness of e-learning in pharmacy education. Other research evaluating effectiveness alongside different constructs may have been overlooked. Although 2 reviewers independently abstracted the data, differences in study interpretation may have affected the data obtained, as evidenced by the low to moderate agreement within some of the data extraction levels. Although overall quality was moderate, study methodological quality was generally low. Three particular flaws stood out: selection bias, lack of control groups, and lack of validated tools. Most studies were conducted within a narrow sampling frame, did not employ appropriate control groups, and used only partially validated or non-validated tools, thus limiting internal and external validity. We attempted to synthesize results for a group of studies that held only 2 commonalities: pharmacy and e-learning. Interventions, topics, duration, and setting were different for every study. However, while this may have affected combination of results, the fact that e-learning was effective in different environments may support generalizing these results. Further, we acknowledge that all included studies reported significant (and positive) effects, and that publication bias was likely to exist. Lastly, we synthesized the evidence for pharmacists and pharmacy students as one. We recognize that each group has distinct learning needs, motivations, and environments. As pharmacy students progress to pharmacists, learning styles may change. Future reviews should identify specific aspects of effective e-learning for each population.

In the context of the broader literature, our review adds pharmacy education to the evidence that e-learning is an effective instructional method in the health professions.7,9,19,22 Individual e-learning programs should continue to be evaluated for effectiveness, not to answer the question of whether e-learning works in pharmacy, but to inform educators and decision makers that the program itself is effective. There are 2 key reasons why this matters. First, e-learning programs are often developed for large-scale distribution; thus, confidence that the program will effectively teach (often complex) pharmacy topics is essential. Second, e-learning programs may not always be subject to the same scrutiny that traditional programs undergo, especially those developed by smaller organizations specifically for a target audience.

Finally, 13 of the 17 studies reviewed evaluated more than 1 effectiveness measure, in some cases using multiple methods. Problems with reporting, methodology, and thus quality may stem from this multiple-outcome approach, suggesting that effectiveness studies of e-learning in pharmacy education are trying to address too many questions at once. Now that we know e-learning is effective in the short term, it may be more useful to see well-conducted research that reports the long-term effectiveness of e-learning in pharmacy education (defined by 1 or 2 measures only) rather than broad snapshots of immediate impact.

CONCLUSIONS

E-learning has been studied as an instructional format across a range of pharmacy education topics and contexts for decades, yet until now there have been no reviews on the effectiveness of e-learning in pharmacy education. In this review, we found e-learning to be effective at increasing knowledge immediately after training for all topics and in all contexts. Therefore, we can generalize that e-learning in any context should improve knowledge. E-learning in pharmacy education was a highly acceptable instructional format for pharmacists and pharmacy students, although this measure of effectiveness, by its nature, was assessed subjectively and is open to criticism. There is little evidence that e-learning improved skills or professional practice and no evidence that e-learning is effective at increasing knowledge long term. There is room for improvement in the quality of e-learning effectiveness research in pharmacy. Properly validated tools, follow-up research, and translational research are required to answer new questions about the effectiveness of e-learning in pharmacy education.

ACKNOWLEDGEMENTS

Sandra Salter is the recipient of a University Postgraduate Award and UWA Top-Up Scholarship, provided by The University of Western Australia. There were no other funding arrangements for this study.

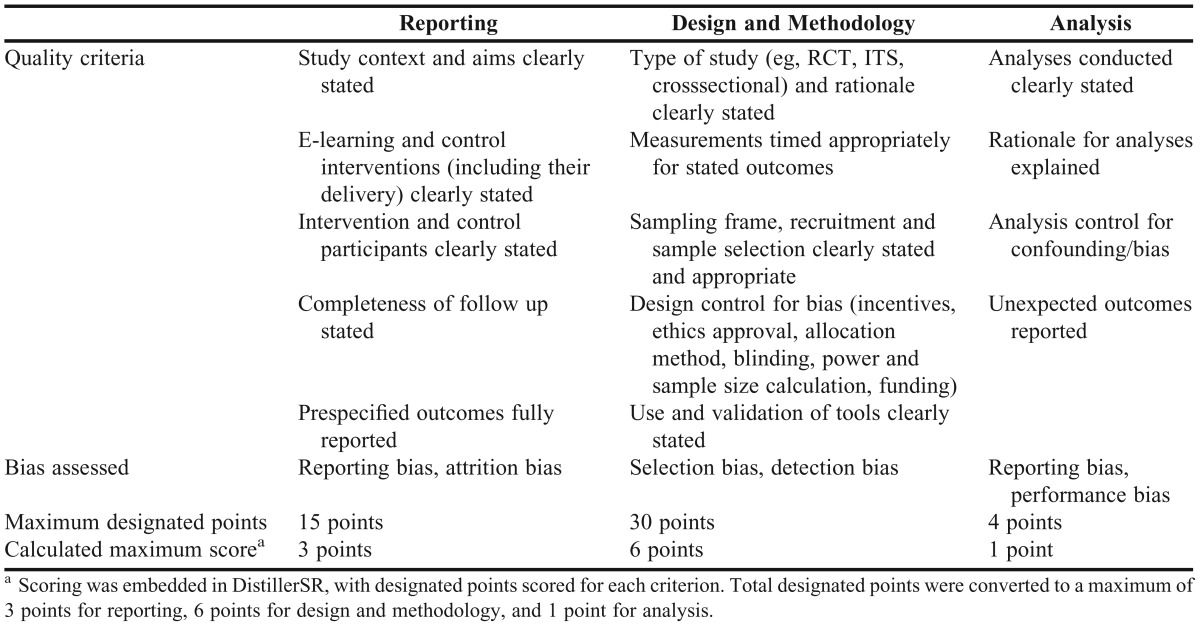

Appendix 1.

Criteria for Quality Assessment of Included Studies

REFERENCES

- 1.Cain J, Fox BI. Web 2.0 and pharmacy education. Am J Pharm Educ. 2009;73(7):Article 120. doi: 10.5688/aj7307120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Falcione BA, Joyner PU, Blouin RA, Mumper RJ, Burcher K, Unterwagner W. New directions in pharmacy education. J Am Pharm Assoc. 2011;51(6):678–679. doi: 10.1331/JAPhA.2011.11545. [DOI] [PubMed] [Google Scholar]

- 3.Monaghan MS, Cain JJ, Malone PM, et al. Educational technology use among US colleges and schools of pharmacy. Am J Pharm Educ. 2011;75(5):Article 87. doi: 10.5688/ajpe75587. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Driesen A, Verbeke K, Simoens S, Laekeman G. International trends in lifelong learning for pharmacists. Am J Pharm Educ. 2007;71(3):Article 52. doi: 10.5688/aj710352. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Malone PM, Glynn GE, Stohs SJ. The development and structure of a web-based entry-level doctor of pharmacy pathway at Creighton University Medical Center. Am J Pharm Educ. 2004;68(2):Article 46. [Google Scholar]

- 6.Martínez-Torres MR, Toral SL, Barrero F. Identification of the design variables of elearning tools. Interact Comput. 2011;23(3):279–288. [Google Scholar]

- 7.Ruiz JG, Mintzer MJ, Leipzig RM. The impact of e-learning in medical education. Acad Med. 2006;81(3):207–212. doi: 10.1097/00001888-200603000-00002. [DOI] [PubMed] [Google Scholar]

- 8.Childs S, Blenkinsopp E, Hall A, Walton G. Effective e-learning for health professionals and studentsbarriers and their solutions. A systematic review of the literature-findings from the HeXL project. Health Info Libr J. 2005;22:20–32. doi: 10.1111/j.1470-3327.2005.00614.x. [DOI] [PubMed] [Google Scholar]

- 9.Wong G, Greenhalgh T, Pawson R. Internet-based medical education: a realist review of what works, for whom and in what circumstances. BMC Med Educ. 2010;10:12. doi: 10.1186/1472-6920-10-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Mascolo MF. Beyond student-centered and teacher-centered pedagogy: teaching and learning as guided participation. Pedagog Human Sci. 2009;1(1):3–27. [Google Scholar]

- 11.Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Syst Rev. 2012;1:28. doi: 10.1186/2046-4053-1-28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Reed D, Price EG, Windish DM, et al. Challenges in systematic reviews of educational intervention studies. Ann Intern Med. 2005;142(12-Part-2):1080–1089. doi: 10.7326/0003-4819-142-12_part_2-200506211-00008. [DOI] [PubMed] [Google Scholar]

- 13.Scotland J. Exploring the philosophical underpinnings of research: relating ontology and epistemology to the methodology and methods of the scientific, interpretive, and critical research paradigms. Engl Lang Teach. 2012;5(9):9–16. [Google Scholar]

- 14.Kirkpatrick D. Great ideas revisited: revisiting Kirkpatrick's four-level model. Train Dev. 1996;50(1):54–60. [Google Scholar]

- 15.Yardley ST. Kirkpatrick's levels and education ‘evidence.’. Med Educ. 2012;46(1):97–106. doi: 10.1111/j.1365-2923.2011.04076.x. [DOI] [PubMed] [Google Scholar]

- 16.Booth A, Carroll C, Papaioannou D, Sutton A, Wong R. Applying findings from a systematic review of workplace-based e-learning: implications for health information professionals. Health Info Libr J. 2009;26(1):4–21. doi: 10.1111/j.1471-1842.2008.00834.x. [DOI] [PubMed] [Google Scholar]

- 17.Cook DA. Where are we with web-based learning in medical education? Med Teach. 2006;28(7):594–598. doi: 10.1080/01421590601028854. [DOI] [PubMed] [Google Scholar]

- 18.Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in Internet-based learning for health professions education: a systematic review and meta-analysis. Acad Med. 2010;85(5):909–922. doi: 10.1097/ACM.0b013e3181d6c319. [DOI] [PubMed] [Google Scholar]

- 19.Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181. doi: 10.1001/jama.300.10.1181. [DOI] [PubMed] [Google Scholar]

- 20.Curran VR, Fleet L. A review of evaluation outcomes of web-based continuing medical education. Med Educ. 2005;39(6):561–567. doi: 10.1111/j.1365-2929.2005.02173.x. [DOI] [PubMed] [Google Scholar]

- 21.Chumley-Jones HS, Dobbie A, Alford CL. Web-based learning: sound educational method or hype? A review of the evaluation literature. Acad Med. 2002;77(10S):S86–S93. doi: 10.1097/00001888-200210001-00028. [DOI] [PubMed] [Google Scholar]

- 22.Lahti M, Hatonen H, Valimaki M. Impact of e-learning on nurses' and student nurses knowledge, skills, and satisfaction: a systematic review and meta-analysis. Int J Nurs Stud. 2013;51(1):136–149. doi: 10.1016/j.ijnurstu.2012.12.017. [DOI] [PubMed] [Google Scholar]

- 23.Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. Br Med J. 2009;339:b2700. doi: 10.1136/bmj.b2700. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Salter SM, Clifford RM, Sanfilippo F, Loh R. Effectiveness of e learning for the pharmacy profession: protocol for a systematic review. PROSPERO. 2013;CRD42013004107. [Google Scholar]

- 25.EPPI-Centre data extraction and coding tool for education studies V2.0. London, UK: The UK Civil Service; 2007. http://www.civilservice.gov.uk/wp-content/uploads/2011/09/data_extraction_form_tcm6-7398.doc. Accessed June 12, 2013. [Google Scholar]

- 26.Cochrane Risk of Bias Criteria for EPOC reviews. Ottawa, Canada: Cochrane Effective Practice and Organisation of Care Group; 2013. http://epoc.cochrane.org/sites/epoc.cochrane.org/files/uploads/Suggested risk of bias criteria for EPOC reviews.pdf. Accessed June 20, 2013.

- 27.Methods for the Development of NICE Public Health Guidance. 3rd edition. Quality appraisal checklist – qualitative studies. London, UK: National Institute for Health and Clinical Excellence; 2012. http://publications.nice.org.uk/methods-for-the-development-of-nice-public-health-guidance-third-edition-pmg4/appendix-h-quality-appraisal-checklist-qualitative-studies. Accessed June 20, 2013. [PubMed] [Google Scholar]

- 28.Gough D. Meta-narrative and realist reviews: guidance, rules, publication standards and quality appraisal. BMC Med. 2013;11:22. doi: 10.1186/1741-7015-11-22.

- 29.Wong G, Greenhalgh T, Westhorp G, Buckingham J, Pawson R. RAMESES publication standards: metanarrative reviews. BMC Med. 2013;11:20. doi: 10.1186/1741-7015-11-20.

- 30.Battaglia JN, Kieser MA, Bruskiewitz RH, Pitterle ME, Thorpe JM. An online virtual-patient program to teach pharmacists and pharmacy students how to provide diabetes-specific medication therapy management. Am J Pharm Educ. 2012;76(7):Article 131. doi: 10.5688/ajpe767131.

- 31.Hall DL, Corman SL, Drab SR, Smith RB, Meyer SM. Application of a technology-based instructional resource in diabetes education at multiple schools of pharmacy: evaluation of student learning and satisfaction. Curr Pharm Teach Learn. 2010;2(2):108–113.

- 32.Sweet B, Welage L, Johnston J. Effect of a web-based continuing-education program on pharmacist learning. Am J Health-Syst Pharm. 2009;66(21):1902–1903. doi: 10.2146/ajhp080658.

- 33.Buxton EC, Burns EC, De Muth JE. Professional development webinars for pharmacists. Am J Pharm Educ. 2012;76(8):Article 155. doi: 10.5688/ajpe768155.

- 34.Hughes CA, Schindel TJ. Evaluation of a professional development course for pharmacists on laboratory values: can practice change? Int J Pharm Pract. 2010;18(3):174–179.

- 35.Legris M-E, Seguin NC, Desforges K, et al. Pharmacist web-based training program on medication use in chronic kidney disease patients: impact on knowledge, skills, and satisfaction. J Contin Educ Health Prof. 2011;31(3):140–150. doi: 10.1002/chp.20119.

- 36.Tsugihashi Y, Kakudate N, Yokoyama Y, et al. A novel Internet-based blended learning programme providing core competency in clinical research. J Eval Clin Pract. 2013;19(2):250–255. doi: 10.1111/j.1365-2753.2011.01808.x.

- 37.Walters C, Raymont A, Galea S, Wheeler A. Evaluation of online training for the provision of opioid substitution treatment by community pharmacists in New Zealand. Drug Alcohol Rev. 2012;31(7):903–910. doi: 10.1111/j.1465-3362.2012.00459.x.

- 38.Congdon HB, Nutter DA, Charneski L, Butko P. Impact of hybrid delivery of education on student academic performance and the student experience. Am J Pharm Educ. 2009;73(7):Article 121. doi: 10.5688/aj7307121.

- 39.Crouch MA. An advanced cardiovascular pharmacotherapy course blending online and face-to-face instruction. Am J Pharm Educ. 2009;73(3):Article 51. doi: 10.5688/aj730351.

- 40.Elliott RA, McDowell J, Marriott JL, Calandra A, Duncan G. A pharmacy preregistration course using online teaching and learning methods. Am J Pharm Educ. 2009;73(5):Article 77. doi: 10.5688/aj730577.

- 41.Erickson SR, Chang A, Johnson CE, Gruppen LD. Lecture versus web tutorial for pharmacy students' learning of MDI technique. Ann Pharmacother. 2003;37(4):500–505. doi: 10.1345/aph.1C374.

- 42.Flowers SK, Vanderbush RE, Hastings JK, West D. Web-based multimedia vignettes in advanced community pharmacy practice experiences. Am J Pharm Educ. 2010;74(3):Article 39. doi: 10.5688/aj740339.

- 43.Lancaster JW, McQueeney ML, Van Amburgh JA. Online lecture delivery paired with in class problem-based learning… does it enhance student learning? Curr Pharm Teach Learn. 2011;3(1):23–29.

- 44.Freeman MK, Schrimsher RH, Kendrach MG. Student perceptions of online lectures and webCT in an introductory drug information course. Am J Pharm Educ. 2006;70(6):Article 126. doi: 10.5688/aj7006126.

- 45.Lust E. An online course in veterinary therapeutics for pharmacy students. Am J Pharm Educ. 2004;68(5):Article 112.

- 46.Ruehter V, Lindsey C, Graham M, Garavalia L. Use of online modules to enhance knowledge and skills application during an introductory pharmacy practice experience. Am J Pharm Educ. 2012;76(4):Article 69. doi: 10.5688/ajpe76469.

- 47.Carifio J, Perla RJ. Ten common misunderstandings, misconceptions, persistent myths and urban legends about Likert scales and Likert response formats and their antidotes. J Soc Sci. 2007;3(3):106–116.

- 48.Jamieson S. Likert scales: how to (ab)use them. Med Educ. 2004;38(12):1217–1218. doi: 10.1111/j.1365-2929.2004.02012.x.