Abstract

For the development of Brain-Machine Interfaces it is crucial to understand which brain signals can be decoded from single trials with different recording techniques. A specific challenge for non-invasive recording methods is posed by activations confined to small spatial areas on the cortex, such as the finger representation of one hand. Here we study the information content of single trial brain activity in non-invasive MEG and EEG recordings elicited by finger movements of one hand. We investigate the feasibility of decoding which of four fingers of one hand performed a slight button press. With MEG we demonstrate reliable discrimination of single button presses performed with the thumb, the index, the middle or the little finger (average over all subjects and fingers 57%, best subject 70%, empirical guessing level: 25.1%). EEG decoding performance was less robust (average over all subjects and fingers 43%, best subject 54%, empirical guessing level 25.1%). Spatiotemporal patterns of amplitude variations in the time series provided the best information for discriminating finger movements. Non-phase-locked changes of mu and beta oscillations were less predictive. Movement related high gamma oscillations were observed in average induced oscillation amplitudes in the MEG but did not provide sufficient information about the finger's identity in single trials. Importantly, pre-movement neuronal activity provided information about the preparation of the movement of a specific finger. Our study demonstrates the potential of non-invasive MEG to provide informative features for individual finger control in a Brain-Machine Interface neuroprosthesis.

Keywords: Finger decoding, Brain-Machine Interface, Magnetoencephalography, Motor cortex, High-gamma oscillations, Electroencephalography

Introduction

One of the major efforts of current Brain-Machine Interface (BMI) research is the reliable control of an upper limb prosthesis. It remains an open question at what level of detail non-invasive recording techniques can provide information for movement decoding. For coarser movements, recent studies succeeded in decoding the kinematics and the position of hand trajectories and arm movements from single-unit recordings in monkeys (Carmena et al., 2003; Taylor et al., 2002) and in humans (Hochberg et al., 2006). Similarly, invasive subdural electrocorticography (ECoG; Leuthardt et al., 2004), non-invasive electroencephalography (EEG), and non-invasive magnetoencephalography (MEG) recordings (Georgopoulos et al., 2005; Jerbi et al., 2007; Waldert et al., 2008) allowed for decoding of arm movement directions. On a finer level of detail, ECoG recordings in humans (Acharya et al., 2010; Kubánek et al., 2009; Miller et al., 2009) and single unit recordings in monkeys (Aggarwal et al., 2009; Hamed et al., 2007) show the potential to detect movements of individual fingers.

It is unclear, however, whether it is possible to decode single finger movements of the same hand in single trials with non-invasive MEG or EEG. The limited spatiotemporal resolution and the low signal to noise ratio (SNR) of non-invasive recordings might not be sufficient to detect the weak signals generated by finger movements of one hand. The muscle mass involved in finger movements is smaller than in limb or hand movements, and neuronal discharges of motor cortex neurons are correspondingly smaller in finger movements than in arm or wrist movements (Pfurtscheller et al., 2003), which makes them difficult to detect. Importantly, in an MEG study Kauhanen et al. (2006) were able to discriminate left from right hand index finger movements, showing that single trial brain activity accompanying finger movements is indeed detectable. Another potential challenge is the spatially overlapping finger representation in somatosensory cortex. Penfield and Boldrey found that the distance along the Rolandic fissure that evoked finger movements after cortical stimulation was 55 mm (Penfield and Boldrey, 1937). The maximum spatial resolution of MEG for sources is thought to be around 2–3 mm, but only under optimal circumstances and with extensive averaging (Hämäläinen et al., 1993), leaving open whether the spatial resolution is sufficient for single trial decoding. In addition, Schieber (2001), reviewing somatotopic hand organization, reported substantial overlap of the territories in M1 controlling individual fingers and noted that the encoding of muscle activations and joint positions in the brain does not follow a strict somatotopic organization, which presents another obstacle for decoding.

A further question pertains to the observed event-related and oscillatory brain dynamics captured with non-invasive EEG and MEG. Crone et al. (1998a, 1998b) reported a decrease of oscillatory beta power (15 Hz–25 Hz) using ECoG over motor cortices during limb movements and a power increase in oscillatory high gamma (greater than 60 Hz). A similar pre-movement signal decrease (event related desynchronisation, ERDS) of oscillatory mu (7 Hz–12 Hz) and beta (20 Hz–24 Hz) bands has been described in EEG (Pfurtscheller and Lopes da Silva, 1999). Such signals proved to be reliable enough to control a non-invasive, EEG-based, human BMI based on imagined tongue and foot movements eliciting ERDS at relatively distant spatial sites along the Rolandic fissure (Pfurtscheller et al., 2006). Similarly, the low-pass filtered time series has been shown to provide useful information for movement decoding in invasive (Acharya et al., 2010; Kubánek et al., 2009) and non-invasive (Waldert et al., 2008) recordings. Although high frequency oscillations can be recorded non-invasively under some circumstances (Cheyne et al., 2008), it is currently unclear whether their signal to noise ratio is sufficient to discriminate movements in single trials.

Finally, the recording technique may also have an influence on the signals that can be obtained in non-invasive recordings from the skull. Most studies on motor decoding employ EEG for practical reasons. Although MEG can provide an advantage over EEG in spatial resolution (Hari et al., 1988), it has rarely been used for single trial decoding (Rieger et al., 2008; Waldert et al., 2008). Here, we simultaneously recorded EEG and MEG to decode changes of neuronal activity evoked by minimal finger movements. Our goal was to directly compare the feasibility of decoding single finger movements of the same hand with the two methods.

To our knowledge no classification of finger movements on the same hand has been shown with non-invasive recording techniques yet. In this study we aim to discriminate individual finger taps on a single trial basis with two non-invasive recording techniques (EEG and MEG). Importantly, whereas previous invasive studies focused on the repetitive movement of fingers (Kubánek et al., 2009; Miller et al., 2009) we concentrate on the decoding of single, minimal finger taps. Our subjects performed slight finger movements by pressing buttons on a button box with four fingers of the same hand. Beyond decoding our goal was to understand the dynamic and spatial features as well as the timing of brain networks controlling dexterous finger movements.

Material and methods

Recording systems and subjects

MEG and EEG were recorded simultaneously in an electromagnetically shielded room. The data was sampled at 1017.25 Hz, using a whole-head BTi Magnes system with 248 sensors (4D-Neuroimaging, San Diego, CA, USA) and a 32-channel EEG system (SENSORIUM INC.). EEG electrodes were positioned according to the 10–20 System of the American Electroencephalographic Society (Fp1, Fpz, Fp2, F7, F3, Fz, F4, F8, FC1, FC2, T7, C3, Cz, C4, T8, CP1, CP2, P7, P3, Pz, P4, P8, PO9, PO7, PO3, PO4, PO8, PO10, Oz, O9, Iz, O10). Eye movement artifacts were detected by measuring the vertical and horizontal electrooculogram (vEOG and hEOG). The impedance of the EEG electrodes was kept below 5 kΩ. A total of thirteen right-handed subjects participated in the experiment (9 females and 4 males, mean age: 23.6 years, range: 21–27 years), all of whom gave written informed consent. The study was approved by the ethics committee of the Medical Faculty of the Otto-von-Guericke University of Magdeburg.

Task

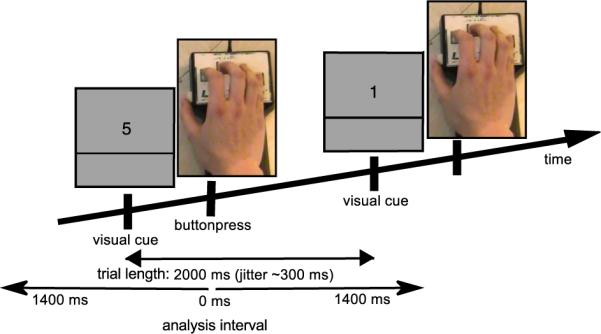

The subject was presented with a number on a screen (visual cue) that indicated which finger to move (1: thumb, 2: index, 3: middle, and 5: little finger). The visual cue was presented every 2 s and the participants were instructed to press and immediately release a button with the finger indicated by the visual cue using their dominant hand (Fig. 1). Trials with reaction times shorter than 200 ms were excluded from the later analysis. Moving the ring finger individually appeared to be difficult, and the ring finger was therefore excluded from the experiment. Subjects were seated comfortably in an upright position; the right elbow rested on a pillow and the hand was held in a prone position.

Fig. 1.

Task and trial structure. Each trial started with a numerical visual cue and instructed the subject to press a button with the corresponding finger (1: thumb, 2: index, 3: middle, 5: little, ring finger was not used). The inter-trial interval was 2 s with a temporal jitter of 0.3 s.

Data analysis

Preprocessing

Both datasets (MEG and EEG) were segmented in intervals of +/−1400 ms around the button press for further analysis (Fig. 1). This was necessary to fit the data into computer memory. The cut out segments were somewhat longer than the actually analyzed interval to avoid boundary effects in the following filtering and frequency analysis steps. Power line noise was removed with a 50 Hz notch-filter. The EEG data was band-pass-filtered from 0.15 Hz to 50 Hz and down-sampled to 256 Hz. Higher frequencies were excluded from the EEG because, given their small amplitudes, they are unlikely to provide sufficient information in single trials. In EEG the channel-averaged activity was subtracted from each channel at every point in time to obtain common average referenced signals. The MEG data was band-pass-filtered from 0.15 Hz to 128 Hz and down-sampled to 256 Hz. Trials with EOG contributions or artifactual amplitude steps were dismissed from the analysis (on average 24% of trials were dismissed). These data were used for the following frequency analysis.

Additional preprocessing was done for time series classification. First, a baseline (−1000 ms to −800 ms with respect to the button press) was subtracted from each trial. The baseline interval was chosen to minimize the influence from the cortical response evoked by the visual cue and to prevent leaking of the motor evoked response from the previous button press into the current baseline. The success is demonstrated by the flat baselines in Fig. 5a (gray rectangle). Second, the raw time series was digitally low-pass filtered at 16 Hz and down-sampled to 32 Hz by picking every 8th sample. Third, we initially restricted the interval length to 500 ms from −50 ms to 450 ms around the button press.
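The three time-series preprocessing steps can be illustrated with a minimal sketch. It assumes a trial that has already been low-pass filtered at 16 Hz (the filter itself is not shown, and picking every 8th sample without it would alias), a 256 Hz sampling rate, and a segment that starts 1400 ms before the button press. The function name `preprocess_trial` and the constants are hypothetical and are not taken from the original analysis code.

```python
import numpy as np

FS = 256        # sampling rate after the first down-sampling step (Hz)
STEP = 8        # keep every 8th sample: 256 Hz / 8 = 32 Hz
T_START = -1.4  # assumed segment start relative to the button press (s)


def preprocess_trial(trial, fs=FS, step=STEP, t_start=T_START):
    """Baseline-correct, decimate and crop one trial.

    trial: array of shape (n_sensors, n_samples), assumed to be
           low-pass filtered at 16 Hz beforehand.
    Returns the cropped data and the matching time axis in seconds.
    """
    n_samples = trial.shape[1]
    times = t_start + np.arange(n_samples) / fs

    # Step 1: subtract the mean of the baseline (-1000 ms to -800 ms).
    in_baseline = (times >= -1.0) & (times < -0.8)
    trial = trial - trial[:, in_baseline].mean(axis=1, keepdims=True)

    # Step 2: down-sample to 32 Hz by picking every 8th sample.
    trial_ds = trial[:, ::step]
    times_ds = times[::step]

    # Step 3: restrict to the 500 ms window from -50 ms to 450 ms.
    in_window = (times_ds >= -0.05) & (times_ds < 0.45)
    return trial_ds[:, in_window], times_ds[in_window]


# Example with random data standing in for one MEG trial (248 sensors).
rng = np.random.default_rng(0)
features, t = preprocess_trial(rng.standard_normal((248, 717)))
print(features.shape)  # 16 samples per sensor at 32 Hz in a 500 ms window
```

With these assumptions the window contains 16 samples per sensor, which for 248 MEG sensors gives 248 × 16 = 3968 values per trial.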

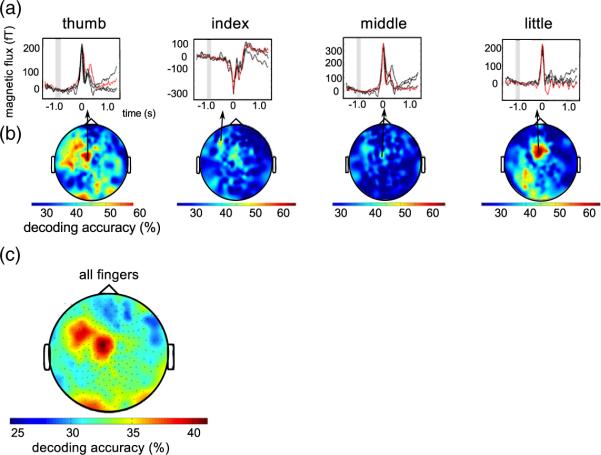

Fig. 5.

Single sensor decoding accuracies. (a) Average MEG time series of the most informative channels for one representative subject and all fingers (red: finger to be recognized, black: other fingers, gray rectangle: baseline interval). (b) Topographies of single sensor decoding accuracies of thumb, index, middle and little finger of the same subject. Classification was performed on the time series from −50 ms to 450 ms around movement. Even single sensors allow for decoding accuracies exceeding 60% correct. (c) Topography of the decoding accuracies averaged over all fingers and subjects. The 30 sensors with highest decoding accuracies are all located over contralateral sensorimotor areas.

The Fieldtrip toolbox (Donders Institute for Brain, Cognition and Behaviour, http://www.ru.nl/neuroimaging/fieldtrip, (Oostenveld et al., 2011)) and custom software were used for preprocessing.

Frequency analysis

Spectrograms were calculated from 1 Hz to 120 Hz (in steps of 2.5 Hz) for each trial of the MEG. The time resolved spectral analysis was computed with a short-time FFT-multitaper approach, using 5 Slepian tapers (Mitra and Pesaran, 1999). The center of the moving window was shifted in steps of 30 ms, with a window width of 500 ms.

Classification

For classification we tested different feature spaces: the time series of both MEG and EEG, and the oscillation amplitude modulation of MEG. To prevent classifier bias among fingers we balanced the numbers of trials among fingers by randomly eliminating trials from the larger sets (on average 136 trials per finger, minimum: 97, maximum: 184). To avoid an artificial increase of correlation between training and test sets, all trials were preprocessed independently and all classification steps were performed in a five-fold cross-validation loop in which the classifier was trained on 80% of the trials and generalization of the trained classifier was tested on the remaining 20% of the trials. The decoding accuracy (DA) was calculated as the average percentage of correctly classified trials over all five folds. All sampling points of the low-pass filtered time series were included as features for classification, without any further feature selection. Consequently, each of the 500 ms intervals used for single trial classification consists of 3968 features (248 sensors by 16 samples) in the MEG data and 464 features (29 sensors by 16 samples) in the EEG data. We chose a linear support vector machine (SVM) for classification of single finger movements because this technique has been shown to provide robust results even when the number of features exceeds the number of examples (Guyon et al., 2002; Rieger et al., 2008). The support vector machine estimates the linear discriminant function f(x) = w·x + b, with w being the weight vector and b being the bias.
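The trial balancing and the five-fold split described above can be sketched as follows. This is an illustration only: the helper names `balance_classes` and `five_fold_splits` are hypothetical, the folds are drawn at random without stratification (whether the original folds were stratified is not stated), and the classifier itself is left out.

```python
import numpy as np


def balance_classes(labels, rng):
    """Return sorted trial indices with equal trial counts per class.

    Trials are removed at random from the larger classes until every
    class has as many trials as the smallest one.
    """
    labels = np.asarray(labels)
    classes = np.unique(labels)
    n_keep = min(int(np.sum(labels == c)) for c in classes)
    keep = [
        rng.choice(np.flatnonzero(labels == c), size=n_keep, replace=False)
        for c in classes
    ]
    return np.sort(np.concatenate(keep))


def five_fold_splits(n_trials, rng, n_folds=5):
    """Yield (train, test) index arrays; each fold tests on about 20%."""
    folds = np.array_split(rng.permutation(n_trials), n_folds)
    for i in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        yield train, folds[i]


# Example: finger codes as used for the visual cue (1, 2, 3, 5),
# with made-up trial counts per finger.
rng = np.random.default_rng(0)
labels = np.array([1] * 120 + [2] * 100 + [3] * 97 + [5] * 130)
kept = balance_classes(labels, rng)
for train, test in five_fold_splits(len(kept), rng):
    print(len(train), len(test))
```

The decoding accuracy would then be the mean, over the five folds, of the fraction of correctly classified test trials.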

We trained SVMs in a winner-takes-all one-vs-all multi-class classification scheme using LIBSVM (http://www.csie.ntu.edu.tw/~cjlin/libsvm/) through the spider toolbox (http://people.kyb.tuebingen.mpg.de/spider/main.html). Four binary SVMs were trained (thumb vs. index, middle, and little finger; index vs. thumb, middle, and little finger; middle vs. thumb, index, and little finger; little vs. thumb, index, and middle finger). For classification we calculate the distance d of the trial to the separating hyperplane in each of the four binary classifiers. Then the trial is assigned to the class with the highest value of d. The probability for correct guessing in this classification scheme is 1/n, for n equally probable class assignments. With n=4 for four fingers, the guessing level is 25%.
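The winner-takes-all rule can be written in a few lines. The sketch below assumes that the four binary classifiers have already been trained and are summarized by a hypothetical weight matrix `W` (one row per finger) and bias vector `b`. It uses the raw decision value f(x) = w·x + b as d; dividing by the norm of w would give a geometric distance, and which of the two was used in the original analysis is not stated.

```python
import numpy as np

FINGERS = ("thumb", "index", "middle", "little")


def winner_takes_all(X, W, b):
    """Assign each trial to the one-vs-all classifier with the largest d.

    X: (n_trials, n_features) feature vectors
    W: (4, n_features) weight vectors, one per binary classifier
    b: (4,) biases
    Returns the winning class index per trial and all decision values.
    """
    d = X @ W.T + b           # decision value of every binary classifier
    return d.argmax(axis=1), d


# Toy example with 4 features so that the result is easy to follow.
W = np.eye(4)
b = np.zeros(4)
X = np.array([[2.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 3.0, 0.0],
              [0.0, 1.0, 0.0, 5.0]])
winners, d = winner_takes_all(X, W, b)
print([FINGERS[i] for i in winners])  # ['thumb', 'middle', 'little']
```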

Evaluation of classifier generalization

To test whether the DAs are significantly better than random, we compared them to an empirically estimated guessing level. The guessing level was determined in a permutation test in which each subject's class-labels were randomly shuffled among trials. Classification was repeated 500 times with different randomizations (for a detailed description see (Rieger et al., 2008)). The mean prediction accuracy over all repetitions and subjects equaled the empirical guessing level. The permutation tests provided 95% confidence intervals and DAs above the upper confidence interval were assumed to be significant. In addition to the EEG and MEG time series, we classified the EOG time series using the same approach to ensure that classification performance is not based on eye movement artifacts.
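The permutation procedure can be sketched as below. Two simplifications are made and should be read as assumptions: a nearest-centroid classifier stands in for the linear SVM to keep the example dependency-free, and the upper confidence bound is taken as the 97.5th percentile of the permutation distribution (the upper end of a two-sided 95% interval). All function names are hypothetical.

```python
import numpy as np


def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Stand-in classifier (not the SVM used in the study)."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    dists = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float(np.mean(classes[dists.argmin(axis=1)] == y_test))


def cross_validated_accuracy(X, y, rng, n_folds=5):
    """Mean accuracy over a random five-fold cross-validation."""
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    accs = []
    for i in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        test = folds[i]
        accs.append(
            nearest_centroid_accuracy(X[train], y[train], X[test], y[test])
        )
    return float(np.mean(accs))


def empirical_guessing_level(X, y, n_permutations=500, seed=0):
    """Shuffle labels among trials, re-run the classification each time.

    Returns the mean accuracy under shuffled labels (the empirical
    guessing level) and an upper confidence bound.
    """
    rng = np.random.default_rng(seed)
    null = np.array([
        cross_validated_accuracy(X, rng.permutation(y), rng)
        for _ in range(n_permutations)
    ])
    return float(null.mean()), float(np.percentile(null, 97.5))


# Example with random features and four balanced classes.
rng = np.random.default_rng(1)
X = rng.standard_normal((80, 10))
y = np.repeat(np.arange(4), 20)
level, upper = empirical_guessing_level(X, y, n_permutations=100)
print(level, upper)
```

A decoding accuracy obtained with the true labels would be called significant in this scheme if it exceeds `upper`.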

Results

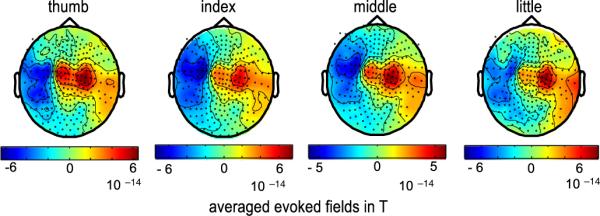

The mean reaction time (visual cue to button press) was 470 ms (standard deviation 50 ms). The highest power modulations are observed in the interval around the button press and in sensors above sensorimotor areas on the contralateral cortex. However, averaged over subjects, activation patterns of the individual fingers appeared relatively similar (Fig. 2).

Fig. 2.

Topography of subject averaged evoked magnetic fields. Evoked magnetic fields are shown for movements of the thumb, index, middle, and little finger of the right hand (interval −50 ms to 200 ms). The location of magnetic flux direction reversal (lateral blue to central red) indicates activity in contralateral, left somato-motor cortex. Black dots indicate sensor locations. Red signifies magnetic flux directed out of the skull and blue magnetic flux directed into it.

Classification results of the time series

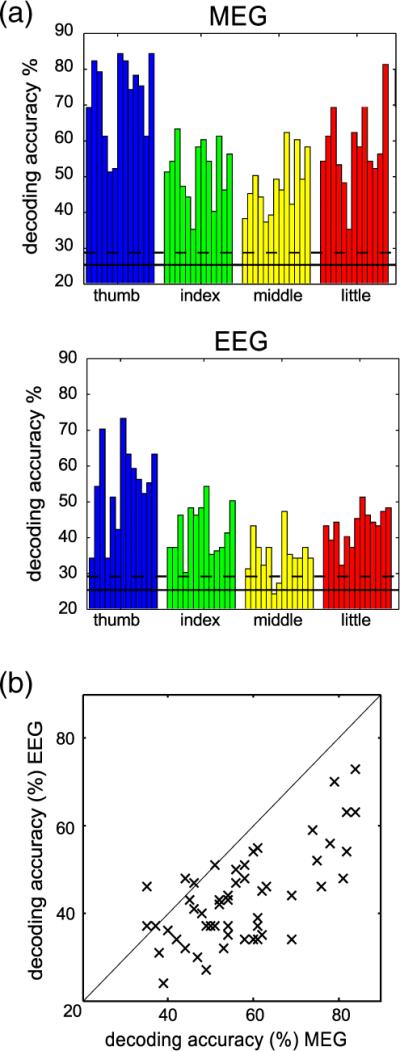

For time series classification we first chose the 500 ms time interval from −50 ms to 450 ms around the button press containing the maximum activation. The overall decoding accuracy obtained by the five-fold cross-validation of the multi-class SVM averaged over all subjects and fingers was 57% correct in the MEG recordings and 43% correct in the EEG recordings. Although we tested cut-off frequencies higher than 16 Hz, we found no change in discrimination rate. The theoretical guessing level is 25% in a four-class classification task. The empirically estimated guessing level, averaged over subjects, was 25.1% in both MEG and EEG recordings, with an upper bound of the 95% confidence interval of 28.5% (MEG) and 28.9% (EEG). The results for the different fingers are shown in Fig. 3a for each subject. On average, button presses using the thumb allowed for the highest DA, whereas index and middle finger were hardest to discriminate (Table 1).

Fig. 3.

Classification performance. (a) Decoding accuracies of the one-vs-all support vector machine obtained with MEG (top) and EEG (bottom) data. Each bar represents the decoding accuracy of one combination of subject and finger (thumb, index, middle, and little finger). The horizontal solid line depicts the empirically estimated guessing level and the dashed line the upper 95% confidence interval for guessing. From MEG data every finger can be decoded reliably in every subject (average 57% correct, range 40% to 70%). However, with EEG data average decoding accuracy is lower and for some subjects some fingers do not exceed the confidence interval for guessing (average 43% correct, range 32% to 54%). (b) For comparison MEG (abscissa) and EEG (ordinate) decoding accuracies are plotted against each other. Each data point represents the DA for one combination of finger and subject. The diagonal line represents the line of equal accuracy. Nearly all points fall below that line, clearly indicating that MEG allows for more reliable single trial finger decoding.

Table 1.

Average decoding accuracy in %. Decoding accuracies (%) for the four fingers averaged over subjects. On average, MEG and EEG allowed for discrimination of the finger moved. The average empirical guessing level was 25.1% in both MEG and EEG. The upper bound of the 95% confidence interval for guessing was 28.5% in MEG and 28.9% in EEG. The electrooculogram (EOG) never exceeded the 95% confidence interval for guessing. Hence, blinks or eye movements did not contribute to decoding accuracy.

| | MEG | EEG | EOG |
|---|---|---|---|
| Thumb | 72% (SE: 3.3%) | 54% (SE: 3.4%) | 27% (SE: 2.3%) |
| Index | 51% (SE: 2.4%) | 42% (SE: 2.0%) | 26% (SE: 3.3%) |
| Middle | 48% (SE: 2.3%) | 35% (SE: 1.7%) | 27% (SE: 2.1%) |
| Little | 58% (SE: 3.3%) | 43% (SE: 1.4%) | 27% (SE: 3.1%) |
| Mean | 57% (SE: 3.2%) | 43% (SE: 1.6%) | 27% (SE: 1.0%) |

MEG and EEG results confirm that the classification performance is better than random. However, the discrimination rate of the EEG is less robust and some subjects perform only slightly better than the upper confidence interval for guessing (Fig. 3a). A comparison of the classification accuracy between the two recording techniques revealed that the DA is higher in nearly every case in the MEG data (Fig. 3b) and that this difference is statistically significant (t(12)=5.0, p<0.005). Because we found MEG superior to EEG for decoding single finger movements we focused the further analysis on the MEG recordings.

As a control we also performed classification on the EOG time series. The DA obtained with the EOG was within the 95% confidence interval for guessing (average DA over all subjects: 27%, empirical guessing level: 25%, upper bound of the 95% confidence interval: 28.5%), indicating that eye movements do not contribute to the classification results.

Time course of decoding information in time series data

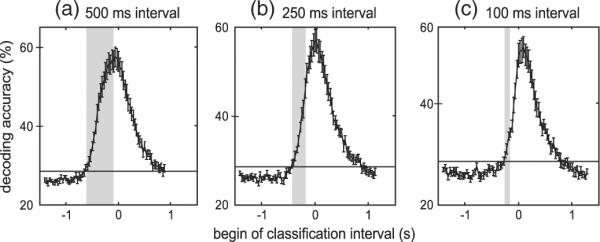

We further probed the temporal development of discriminative information around the finger movement by shifting classification intervals in steps of 30 ms. Fig. 4 shows the subject and finger averaged DA for windows with a length of 500 ms (16 sampling points per sensor in window, Fig. 4a), 250 ms (9 sampling points, Fig. 4b), and 100 ms (4 sampling points, Fig. 4c). The maximum DA was obtained with a 500 ms wide window starting 50 ms before the button press, the same window we used in the first analysis. Importantly, even shorter time intervals allow for accurate decoding and maximum DA drops only slightly with shorter intervals. To analyze whether pre-movement neuronal activity is discriminative for a specific finger, we tested when the decoding accuracy is above chance. We consider classification performance significant if the lower 95% confidence interval for the average DA exceeds the upper 95% confidence interval for the guessing level. With this criterion we found significant classification performance starting at −620 ms with 500 ms long intervals. With 250 ms long intervals significant classification started at −400 ms, and with 100 ms long intervals significant classification started at −240 ms. This indicates that neuronal activity before the button press contains decoding information that allows for significant discrimination of different finger movements.

Fig. 4.

Evolution of MEG decoding accuracies over time. Each plot depicts the temporal evolution of decoding accuracies averaged over subjects and fingers. Zero on the x-axis marks time of button press. Decoding accuracies were determined for three different classification interval lengths (a: 500 ms length, b: 250 ms length, and c: 100 ms length) and intervals were advanced in steps of 30 ms. Decoding accuracy is plotted at the first sample that was acquired in the interval. The gray rectangle indicates the first interval with decoding accuracy exceeding the empirical 95% confidence interval. Decoding accuracy exceeded the guessing level even when only pre-movement brain activity was used for decoding. Error bars indicate standard error of the decoding accuracies.

Information used for classification of the time series

We investigated the spatial distribution of the brain activity relevant for finger movement decoding by determining DA for every MEG sensor separately (500 ms interval, 16 time samples per sensor). The MEG time series for the best sensors for each finger are shown in Fig. 5a for one subject. The spatial distributions of informative sensors are shown in Fig. 5b for the same subject. Fig. 5c shows the average spatial distribution of DAs over all subjects and fingers. Even with single MEG sensors DAs are still above guessing level and classification performance often exceeds 60% correct. On average, the 30 highest-ranked sensors are all located over motor-related areas contralateral to the hand used to perform the button presses (Fig. 5c). When we retrained the SVM using only the best 30 sensors we found that DAs are nearly as good as when using all sensors (average over subjects: thumb: 67%, index: 47%, middle: 47%, little: 57%). These results indicate that the better DA obtained with MEG than with EEG is not simply due to the larger number of sensors because a) even single MEG sensors can outperform the average performance obtained with all EEG channels and b) the DA obtained with MEG exceeds that of EEG even when the same number of sensors is used in both modalities. Moreover, the stability of the results over feature spaces (all sensors and 30 best sensors) indicates that SVM training provided robust generalization even when the number of input features was much higher than the number of trials. Importantly, the finding that the empirical guessing level and EOG classification were close to the theoretical guessing level indicates that neither eye movements nor other unforeseen factors biased DA.

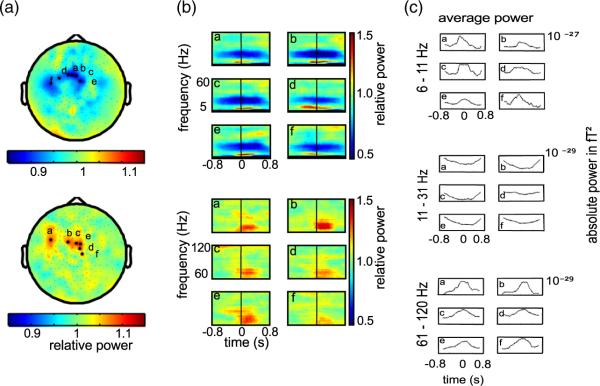

Time frequency analysis

For time frequency analysis we determined the spectrograms for each sensor. The relative change in power is estimated as the change of power compared to baseline (average from −1000 ms to −800 ms) and is calculated for each frequency separately. Fig. 6a shows the topographies of the power modulations relative to baseline averaged over all trials for the thumb movement of one representative subject. The top plot shows averaged low frequency modulations (1 Hz–60 Hz) and the bottom plot depicts averaged high frequency modulations (60 Hz–120 Hz) for the time interval from −50 ms to 450 ms around the finger movement. As in the time series, the most prominent changes are found in sensors over contralateral motor areas. The power of oscillations below 60 Hz decreases around the movement, whereas power in the high gamma band increases. This is in concordance with reports from previous studies using invasive electrocorticographic recordings (Crone et al., 1998b). In accord with Crone et al., the effects in the high gamma band appear to be more spatially focused than in lower frequency bands. Fig. 6b depicts the spectrograms of the single sensors with highest average modulations, marked with an asterisk in the topographies. The power decreases approximately 500 ms before the button press in the frequency range from 11 Hz to 31 Hz. This is in line with the formerly reported beta desynchronization during movement execution and imagination (Pfurtscheller and Lopes da Silva, 1999). Conversely, in an even lower frequency band, in the range from 6 Hz to 11 Hz, power increases, indicating a separate neural mechanism. In addition, we observe an increase of high gamma power from 60 Hz to 80 Hz, indicating neural synchronization time-locked to movement (see Supplementary Fig. 1 for the spectrograms of all subjects). Fig. 6c shows the dynamics of the absolute band power changes in the three frequency bands (6 Hz–11 Hz, 11 Hz–31 Hz, 61 Hz–120 Hz) in addition to the relative changes shown in the spectrograms.
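The baseline normalization of the spectrograms can be sketched as follows, under the assumption that relative power is the ratio of power to the mean baseline power at the same frequency, so that values above 1 indicate an increase and values below 1 a decrease. The function name `relative_power` and the time axis used in the example are hypothetical.

```python
import numpy as np


def relative_power(spec, times, baseline=(-1.0, -0.8)):
    """Express a spectrogram relative to its pre-movement baseline.

    spec:  (n_freqs, n_times) trial-averaged power of one sensor
    times: (n_times,) window centers in seconds relative to button press
    Each frequency is divided by its own mean power in the baseline.
    """
    in_baseline = (times >= baseline[0]) & (times <= baseline[1])
    base = spec[:, in_baseline].mean(axis=1, keepdims=True)
    return spec / base


# Toy spectrogram: power doubles outside the baseline interval.
times = np.arange(-1.15, 1.15, 0.03)       # window centers, 30 ms steps
in_baseline = (times >= -1.0) & (times <= -0.8)
spec = np.full((3, times.size), 4.0)
spec[:, in_baseline] = 2.0
rel = relative_power(spec, times)
print(rel[:, in_baseline].mean(), rel[:, ~in_baseline].mean())  # 1.0 2.0
```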

Fig. 6.

Average spectral power changes around finger movement. (a) Topographies of the trial averaged relative spectral power changes. Top: low frequencies average (5 Hz–60 Hz). Bottom: high gamma average (61 Hz–120 Hz). Relative power compares average spectral power from −50 ms to 450 ms around movement to pre-movement baseline power (−1000 ms to −800 ms). Values >1 indicate increase and <1 decrease compared to baseline. Power modulation maxima are located close to sensorimotor cortex. Data is shown for one representative subject and one finger (thumb). (b) Spectrograms for sensors marked in (a) by black asterisks. Letters in spectrograms correspond to letters in topographic maps. Spectrograms depict relative power changes around movement onset (0 ms). Top panels show relative power in frequencies from 2 Hz to 60 Hz. Bottom panels show power in high gamma ranging here from 60 Hz to 120 Hz. (c) The time course of the absolute power averaged over three bands (6 Hz–11 Hz, 11 Hz–31 Hz, and 61 Hz–120 Hz) for the same sensors. The low frequency band is split to better appreciate the low frequency power increase (roughly theta band) and the mid frequency power decrease (roughly mu and beta bands) before and after movement onset. Power in the high gamma band tends to increase at movement onset.

To further assess the reliability of these power modulations we performed pair-wise t-tests for each subject separately, comparing the average band power in the baseline interval to the average band power of the movement interval (−50 ms–450 ms) for the six channels with the highest average modulations (as displayed in the Supplementary Fig. 1). Power changes in the lower frequency bands (6 Hz–11 Hz and 11 Hz–31 Hz) were significant on a Bonferroni corrected level (with 6 comparisons at αcorr=0.05/6) for nearly all combinations of fingers and subjects (39 out of 52 combinations (6 Hz–11 Hz) and 46 out of 52 combinations (11 Hz–31 Hz)). The effects in high gamma power cross the significance level only in a few combinations (12 out of 52 combinations of fingers and subjects). This marginal effect suggests that high gamma band oscillations can be observed in non-invasive MEG recordings. However, these signals might not be relevant for classification of skeletal movements in MEG since the effect might not be strong enough in single trials and trial averaging might be necessary to reliably detect it.
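As a worked example of the numbers in this paragraph, the Bonferroni-corrected threshold and the reported proportions of significant finger-subject combinations can be computed directly. The helper names are hypothetical; only the values 0.05, 6, 39, 46, 12 and 52 are taken from the text.

```python
def bonferroni_alpha(alpha=0.05, n_comparisons=6):
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_comparisons


def fraction_significant(n_significant, n_total=52):
    """Share of finger-subject combinations that reached significance."""
    return n_significant / n_total


print(round(bonferroni_alpha(), 4))          # 0.0083
print(fraction_significant(39))              # 0.75   (6 Hz-11 Hz)
print(round(fraction_significant(46), 3))    # 0.885  (11 Hz-31 Hz)
print(round(fraction_significant(12), 3))    # 0.231  (high gamma)
```

The 52 combinations correspond to 13 subjects times 4 fingers.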

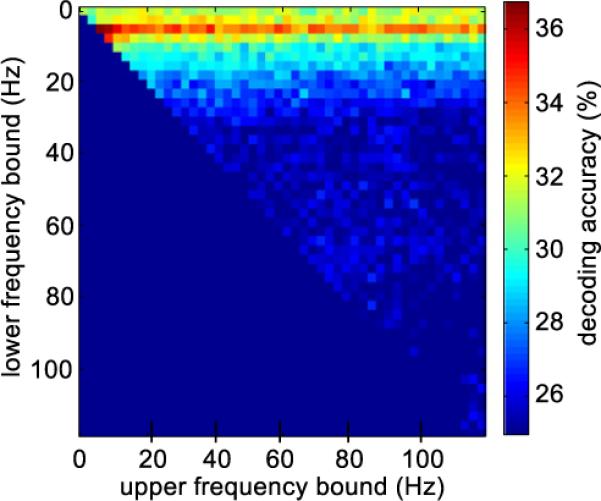

Classification results using the spectrograms

In an exploratory analysis we aimed to find the optimal frequency band for classification. We computed DAs for all frequency bands in the frequency range between 1 Hz and 120 Hz with bandwidth varied in steps of 2.5 Hz. Fig. 7 shows the DAs obtained with different frequency bands and all channels, averaged over all subjects. The averaged power in the band from 6 Hz to 11 Hz provided the best classification results. However, the average DA does not exceed 36%, and is specific to the inclusion of this narrow frequency band. This is indicated by the horizontal stripe of predictive frequency bands, which all include the 6 Hz–11 Hz band. Importantly, although DAs including the 6 Hz–11 Hz band exceed the guessing level and quite good DA was obtained in some subjects, overall DA performance with band power in any frequency band and bandwidth is far inferior to DA obtained with the entire time series (see Table 2 for a summary of DAs obtained with different features). This suggests that precise information about the dynamics of the brain activity (i.e. the phase), which is retained in the time series data but not in the oscillation band power, provides important information for decoding. Moreover, even though high gamma modulations were present in the MEG trial average, power in this band does not exceed the guessing level for single trial finger discrimination.
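The exhaustive search over frequency bands can be illustrated with a short sketch. It assumes a frequency grid that starts at 1 Hz and advances in 2.5 Hz steps (so that 6 Hz and 11 Hz fall on the grid), treats every pair of lower and upper cut-off as one candidate band, and averages power over the bins inside the band. The grid, the inclusion of single-bin bands, and all names are assumptions for illustration, not a description of the original code.

```python
import numpy as np

# Frequency bins: 1, 3.5, 6, ..., 118.5 Hz (assumed grid).
FREQS = np.arange(1.0, 120.0 + 1e-9, 2.5)


def enumerate_bands(freqs):
    """All (lower, upper) cut-off pairs with lower <= upper."""
    return [
        (float(lo), float(hi))
        for i, lo in enumerate(freqs)
        for hi in freqs[i:]
    ]


def band_power(power, freqs, lo, hi):
    """Average power over all frequency bins inside [lo, hi].

    power: (n_trials, n_sensors, n_freqs), already averaged over time
    Returns one feature per trial and sensor.
    """
    in_band = (freqs >= lo) & (freqs <= hi)
    return power[:, :, in_band].mean(axis=2)


bands = enumerate_bands(FREQS)
print(len(FREQS), len(bands))

# Features for one candidate band from random stand-in data.
rng = np.random.default_rng(0)
power = rng.random((5, 3, FREQS.size))
features = band_power(power, FREQS, 6.0, 11.0)
print(features.shape)
```

Each candidate band would then be passed through the same cross-validated classification as the time series, giving one decoding accuracy per (lower, upper) pair, which is the layout of the map in Fig. 7.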

Fig. 7.

Decoding accuracies for different frequency bands. The color code depicts subject averaged decoding accuracy obtained with power in frequency bands of different width and center frequency. The upper cut off frequency varies along the x-axis and the lower cut off frequency varies along the y-axis. The closer the point is to the diagonal, the narrower is the frequency band. The highest decoding accuracies were obtained with the frequency band from 6 Hz to 11 Hz. Independent of bandwidth high gamma oscillatory power did not allow for reliable decoding.

Table 2.

Summary of decoding accuracies (%) for different MEG-feature spaces. Summary of average decoding accuracies (%) over subjects and fingers for different approaches. The time series provided the best features for classification, also after reducing the number of sensors from 248 to 30.

| Feature space | Average decoding accuracy over subjects and fingers |
|---|---|
| Best interval for time series classification | |
| 500 ms interval: −50 ms to 450 ms | 57% |
| Classification of time series around button press | |
| 500 ms interval: −250 ms to 250 ms | 54% |
| 250 ms interval: −125 ms to 125 ms | 53% |
| 100 ms interval: −50 ms to 50 ms | 42% |
| Best frequency band: 6 Hz–11 Hz* | 36% |
| 30 best sensors* | 55% |

\* = time interval from −50 ms to 450 ms

Discussion

We show that neuronal activity originating from contralateral sensorimotor cortex conveys sufficient information to reliably distinguish slight movements of four different fingers of the same hand using non-invasive MEG recordings. Information on what finger a subject was about to move was available even before movement onset. The highest amount of information is captured in the dynamics of the movement related spatiotemporal brain activation patterns. We found movement related variations in average oscillatory brain activity ranging from slow variations (6 Hz–11 Hz) to the high gamma range (60 Hz–120 Hz). The oscillatory power changes followed the pattern previously described in invasive recordings (Crone et al., 1998a, 1998b): during movement power of high gamma oscillations increased whereas power in low frequency bands decreased. However, single trial finger movement discrimination in oscillatory power changes was limited to a narrow frequency band between 6 Hz and 11 Hz, and was inferior to the classification of movement related spatiotemporal patterns. This shows that the exact dynamics of the spatiotemporal brain activation patterns are the most important source of information about which finger is moved. Moreover, we show that these spatiotemporal patterns are better captured in magnetic flux changes measured in the MEG than in the electric potentials measured by EEG. The results of our study suggest that the signals available in non-invasive MEG recordings can provide highly detailed information for BMI control.

Magnetic field fluctuations time-locked to movement are most informative for finger discrimination

Relatively slow temporal modulations of magnetic fields caused by variation of neural activity around the button press allowed for better finger discrimination than any other signal we investigated. Power modulations of slow oscillatory magnetic fields, as opposed to magnetic field time series, neglect the exact phase relation between the movement dynamics and the underlying neural activation. Discarding this information leads to a severe drop in decoding accuracy, indicating that spatiotemporal neural activation patterns time-locked to movement might play an important role for accurate decoding. This is consistent with previous studies reporting that time-locked local motor potentials provide substantial information about arm movement kinematics in invasive and non-invasive recordings from humans and monkeys (Acharya et al., 2010; Bansal et al., 2011; Ganguly et al., 2009; Kubánek et al., 2009; Rickert et al., 2005; Schalk et al., 2007; Waldert et al., 2008). Thus, our study, together with others, implies that knowledge about the precise temporal sequence of neuronal activation may be needed for detailed BMI control of an upper-limb prosthesis including finger function.
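The loss of phase information in band power features can be illustrated with a toy example. The sketch below is not the analysis pipeline of this study; the sampling rate, the 8 Hz test oscillation, and the helper `band_power` are assumptions chosen for illustration. Two synthetic signals that differ only in their phase relative to the button press yield the same 6 Hz–11 Hz band power, while their time series remain clearly separable.

```python
import numpy as np

fs = 1000.0                      # sampling rate in Hz (assumed for illustration)
t = np.arange(500) / fs - 0.05   # 500 ms window from -50 ms to 450 ms
f0 = 8.0                         # toy oscillation inside the 6-11 Hz band

# Two hypothetical "fingers": same amplitude and frequency, opposite phase
# relative to the button press at t = 0.
finger_a = np.sin(2 * np.pi * f0 * t)
finger_b = np.sin(2 * np.pi * f0 * t + np.pi)

def band_power(x, fs, lo=6.0, hi=11.0):
    """Mean power of x between lo and hi Hz (plain FFT periodogram)."""
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / x.size
    mask = (freqs >= lo) & (freqs <= hi)
    return power[mask].mean()

power_a = band_power(finger_a, fs)
power_b = band_power(finger_b, fs)
ts_distance = np.linalg.norm(finger_a - finger_b)

print(f"band power A: {power_a:.3f}, band power B: {power_b:.3f}")
print(f"Euclidean distance between time series: {ts_distance:.3f}")
```

In this toy case a classifier operating on band power would see identical features for both signals, whereas a classifier operating on the time series would not.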

Precise spatial information is important for finger discrimination

The finding that MEG recordings outperform EEG recordings indicates that differences in the spatial pattern of brain activity provide important information about the identity of the finger that was moved. Although MEG and EEG technically offer the same temporal resolution, MEG is superior to EEG with respect to spatial resolution, at least in sensor space (Hämäläinen et al., 1993; Hari et al., 1988). The lower spatial resolution of the scalp EEG signal is due to spatial blurring at the interfaces of tissues with different conductance. This limitation cannot be overcome by increasing the density of EEG electrodes. Accordingly, our results show that the better performance using MEG is not simply due to the higher number of sensors. Moreover, we consider it unlikely that instrumentation-specific differences in noise floors or least significant bit resolution cause the DA differences between MEG and EEG in our experiment. The peak-to-peak modulation in the average MEG data was about 13.8 dB above the resolution-limiting noise floor of approximately 10 fT/√Hz and, similarly, the average EEG modulation was approximately 13 dB above the resolution-limiting least significant bit resolution of the system (0.5 µV).
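To give a feeling for these margins, the decibel values can be converted to linear ratios. The text does not state whether the decibel values refer to amplitude or power; the sketch below assumes the amplitude convention, dB = 20·log10(ratio), and the function name is hypothetical. Under that assumption both modalities show a signal roughly 4.5 to 5 times larger than their respective resolution limit, i.e. a comparable margin.

```python
def db_to_amplitude_ratio(db):
    """Convert decibels to a linear ratio, assuming dB = 20 * log10(ratio)."""
    return 10.0 ** (db / 20.0)

meg_ratio = db_to_amplitude_ratio(13.8)  # MEG modulation vs. noise floor
eeg_ratio = db_to_amplitude_ratio(13.0)  # EEG modulation vs. LSB resolution

print(f"MEG: {meg_ratio:.2f}x, EEG: {eeg_ratio:.2f}x")
```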

The question remains what spatial information MEG may provide in sensor space that is diagnostic of which finger of a hand was moved. In the past, small but systematic spatial differences between cortical representations of the fingers have been observed in fMRI (Dechent and Frahm, 2003), histological projections (Schieber, 2001) and electrical stimulation (Penfield and Boldrey, 1937). However, the distance between the finger representations of the same hand along the cortical sheet was reported to be in the order of a few millimeters, close to estimates of the theoretical lower limit of the localization error for MEG (Mosher et al., 1993). We speculate that factors other than the spatial position of the active brain area, e.g. the strong curvature of the cortical sheet in the finger knob, also contribute to the high decoding accuracy. In humans, finger representations are thought to be localized along an inverted omega shaped knob of the central sulcus (Cheyne et al., 2006; Yousry et al., 1997). Orientation changes in the active tissue could change spatial patterns of magnetic flux measured in sensor space even when the spatial localization of the active tissue remains indistinguishable (Scherg, 1992). However, further investigations of the neural substrate, e.g. by classification in source instead of sensor space, are required.

Oscillatory power changes

We observed reliable transient average power modulations in a wide range of frequency bands up to the high-gamma range around finger movement onset. In the frequency decomposition these power modulations started already prior to movement and temporally extended well into the movement interval. In the low frequency bands we reproduce the previously described beta- and mu-band desynchronization around movement onset (Pfurtscheller and Lopes da Silva, 1999). In addition, we found informative movement related power changes in an even lower frequency band between 6 Hz and 11 Hz. However, even DA derived from band power in the most informative frequency band, between 6 Hz and 11 Hz, was clearly inferior to DA derived from time series data. This indicates that slow movement related neural activation modulations are most informative about which finger of a hand moves. The inferior DA with oscillatory band power is most likely due to the lack of phase information.

Movement related power changes in high-gamma oscillations (>70 Hz) have been reported in intracranial ECoG (Crone et al., 1998b), in hemicraniectomy patients missing part of the skull (Voytek et al., 2010), and in MEG (Cheyne et al., 2008). In concordance with these studies, we find movement related modulations in the average induced high gamma band power of the MEG (Fig. 6 and Supplementary Fig. 1). Invasively recorded high-gamma oscillations are spatially more focused and have a clearer somatotopic organization than slow oscillations (<30 Hz, Miller et al., 2007) and can be used to discriminate the flexion of individual fingers (Kubánek et al., 2009). However, with non-invasively recorded high gamma modulations we were unable to distinguish the individual fingers in single trials. We speculate that phase shifts between oscillations in closely neighboring locations on the brain attenuate the high gamma signal in distant MEG and EEG sensors, which have larger spatial integration areas than ECoG electrodes placed directly on the cortex. As a result, high-gamma power changes are less informative for single trial finger decoding in non-invasive compared to invasive recordings.
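The proposed attenuation mechanism can be illustrated numerically. The following sketch is a toy model and not a simulation of cortical sources or sensor physics: the number of sources, the 80 Hz frequency, and the helper `summed_amplitude` are assumptions. A distant sensor is modeled as the plain average of many unit-amplitude oscillators; when their phases are aligned the average keeps its full amplitude, whereas random phase shifts between sources largely cancel it.

```python
import numpy as np

rng = np.random.default_rng(0)
fs = 1000.0                  # sampling rate in Hz (assumed)
t = np.arange(1000) / fs     # 1 s of data
f_gamma = 80.0               # toy high-gamma frequency in Hz
n_sources = 50               # hypothetical number of neighboring sources

def summed_amplitude(phases):
    """RMS amplitude of the average of unit-amplitude oscillators."""
    signals = np.sin(2 * np.pi * f_gamma * t[None, :] + phases[:, None])
    return float(np.sqrt(np.mean(signals.mean(axis=0) ** 2)))

# Sensor integrating over sources that oscillate in phase
aligned = summed_amplitude(np.zeros(n_sources))
# Sensor integrating over sources with random phase shifts
jittered = summed_amplitude(rng.uniform(0.0, 2.0 * np.pi, n_sources))

print(f"aligned phases: {aligned:.3f}, random phases: {jittered:.3f}")
```

An electrode that samples only a few neighboring sources would be less affected by this cancellation, which is in line with the better single trial performance of high-gamma features in ECoG.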

Pre-movement discrimination

An important question with regard to Brain–Machine Interfaces is when brain activity starts to provide information about the finger that will be moved. We found discriminative information well before the actual finger movement started (150 ms). It is unlikely that this discriminative MEG activation simply reflects a “Bereitschaftsmagnetfeld” similar to the readiness potential described by Deecke et al. (1982), since other EEG (Haggard and Eimer, 1999) and MEG (Herrmann et al., 2008) studies indicate that the “Bereitschaftspotential” provides information about a general movement preparation but not about the specific movement that will be performed. Rather, the pre-movement discriminative brain activation we found appears to represent preparation of a specific finger movement. Although we were able to decode which finger was moved based solely on brain activity prior to movement onset, we consider it likely that brain activity elicited by somatosensory feedback also played an important role at later points in time, since DA increased further when neuronal data from the interval following the button press were included.

Implications for Brain–Machine Interfaces

Even though the complex instrumentation currently prohibits the use of an MEG-based Brain–Machine Interface in daily life, it is important to gain insight into the potential of non-invasive recordings for BMI and to explore the limits of what functions can be decoded non-invasively despite the limited spatial resolution and signal to noise ratio (Bradberry et al., 2010; Jerbi et al., 2007; Waldert et al., 2008). We show that it is possible to reliably decode fine motor manipulations in single trials with non-invasive MEG recordings. In addition, in the challenging task of decoding movements of different fingers of the same hand, we find a clear advantage of MEG over EEG.

Most successfully implemented non-invasive BMIs rely on asynchronous decoding of neuronal activity (Birbaumer et al., 1990; Pfurtscheller and Neuper, 2001; Pfurtscheller et al., 2006). Here we employed a synchronous decoding paradigm and exploited precise spatiotemporal information in neuronal activation. Oscillation amplitude based decoding, which is often used in asynchronous BMI, neglects precise temporal information and, in our case, provided much lower DAs. Therefore, we think it will be necessary to develop an asynchronous decoding approach that is able to exploit spatiotemporal information (e.g. a state space approach) in order to realize non-invasive finger decoding in practical BMI applications. One possibility to further improve the already high DA would be to integrate over multiple movements. Here, we restricted decoding to only one movement because we were interested in potential limits of decoding finger movements. For more practical applications, such restrictions would not necessarily apply.
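The expected benefit of integrating over multiple movements can be sketched with a simple simulation. This is a toy model under stated assumptions and not the classifier used in this study: per-movement classifier scores are drawn as independent Gaussian noise with a fixed offset for the true finger, and the values of `separation` and `n_sessions` as well as the function `simulated_accuracy` are hypothetical. Summing the scores over repeated movements of the same finger before taking the decision raises the simulated accuracy.

```python
import numpy as np

rng = np.random.default_rng(1)
n_classes = 4        # four fingers
n_sessions = 5000    # simulated decoding attempts (hypothetical)
separation = 1.2     # score offset of the true class in noise SDs (hypothetical)

def simulated_accuracy(n_repeats):
    """Accuracy when classifier scores are summed over n_repeats movements."""
    true = rng.integers(n_classes, size=n_sessions)
    # scores[s, r, c]: evidence for class c on repetition r of attempt s
    scores = rng.normal(size=(n_sessions, n_repeats, n_classes))
    scores[np.arange(n_sessions), :, true] += separation
    # Integrate evidence over repetitions, then pick the best class
    decided = scores.sum(axis=1).argmax(axis=1)
    return float(np.mean(decided == true))

single = simulated_accuracy(1)
triple = simulated_accuracy(3)
print(f"1 movement: {single:.2f}, 3 movements: {triple:.2f}")
```

The gain in this sketch depends on the assumption that noise is independent across repetitions; correlated noise in real recordings would reduce it.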

Conclusion

The present study provides evidence that single finger movements of the same hand can be accurately decoded with non-invasive MEG recordings. Our work demonstrates that information available in concurrently recorded EEG is not sufficient for robust classification. Furthermore, we show that the slow amplitude modulations of the time series are more predictive than the power of the time-frequency representation of different frequency bands when decoding single finger taps. This indicates that important information is captured in the precise spatiotemporal pattern of movement related magnetic field dynamics.

Supplementary Material

Footnotes

Funding source: Land-Sachsen-Anhalt Grant MK48-2009/003, ECHORD 231143, NINDS grant NS21135.

Supplementary materials related to this article can be found online at doi:10.1016/j.neuroimage.2011.11.053.

References

- Acharya S, Fifer MS, Benz HL, Crone NE, Thakor NV. Electrocorticographic amplitude predicts finger positions during slow grasping motions of the hand. J. Neural. Eng. 2010;7:046002. doi: 10.1088/1741-2560/7/4/046002.

- Aggarwal V, Tenore F, Acharya S, Schieber MH, Thakor NV. Cortical decoding of individual finger and wrist kinematics for an upper-limb neuroprosthesis. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2009;2009:4535–4538. doi: 10.1109/IEMBS.2009.5334129.

- Bansal AK, Vargas-Irwin CE, Truccolo W, Donoghue JP. Relationships among low-frequency local field potentials, spiking activity, and 3-D reach and grasp kinematics in primary motor and ventral premotor cortices. J. Neurophysiol. 2011;105(4):1603–1619. doi: 10.1152/jn.00532.2010.

- Birbaumer N, Elbert T, Canavan AG, Rockstroh B. Slow potentials of the cerebral cortex and behavior. Physiol. Rev. 1990;70:1–41. doi: 10.1152/physrev.1990.70.1.1.

- Bradberry TJ, Gentili RJ, Contreras-Vidal JL. Reconstructing three-dimensional hand movements from noninvasive electroencephalographic signals. J. Neurosci. 2010;30:3432–3437. doi: 10.1523/JNEUROSCI.6107-09.2010.

- Carmena JM, Lebedev MA, Crist RE, O'Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS, Nicolelis MAL. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biol. 2003;1:E42. doi: 10.1371/journal.pbio.0000042.

- Cheyne D, Bakhtazad L, Gaetz W. Spatiotemporal mapping of cortical activity accompanying voluntary movements using an event-related beamforming approach. Hum. Brain Mapp. 2006;27:213–229. doi: 10.1002/hbm.20178.

- Cheyne D, Bells S, Ferrari P, Gaetz W, Bostan AC. Self-paced movements induce high-frequency gamma oscillations in primary motor cortex. NeuroImage. 2008;42:332–342. doi: 10.1016/j.neuroimage.2008.04.178.

- Crone NE, Miglioretti DL, Gordon B, Lesser RP. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain. 1998a;121(Pt 12):2301–2315. doi: 10.1093/brain/121.12.2301.

- Crone NE, Miglioretti DL, Gordon B, Sieracki JM, Wilson MT, Uematsu S, Lesser RP. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. I. Alpha and beta event-related desynchronization. Brain. 1998b;121(Pt 12):2271–2299. doi: 10.1093/brain/121.12.2271.

- Dechent P, Frahm J. Functional somatotopy of finger representations in human primary motor cortex. Hum. Brain Mapp. 2003;18:272–283. doi: 10.1002/hbm.10084.

- Deecke L, Weinberg H, Brickett P. Magnetic fields of the human brain accompanying voluntary movement: Bereitschaftsmagnetfeld. Exp. Brain Res. 1982;48:144–148. doi: 10.1007/BF00239582.

- Ganguly K, Secundo L, Ranade G, Orsborn A, Chang EF, Dimitrov DF, Wallis JD, Barbaro NM, Knight RT, Carmena JM. Cortical representation of ipsilateral arm movements in monkey and man. J. Neurosci. 2009;29:12948–12956. doi: 10.1523/JNEUROSCI.2471-09.2009.

- Georgopoulos AP, Langheim FJP, Leuthold AC, Merkle AN. Magnetoencephalographic signals predict movement trajectory in space. Exp. Brain Res. 2005;167:132–135. doi: 10.1007/s00221-005-0028-8.

- Guyon I, Weston J, Barnhill S, Vapnik V. Gene selection for cancer classification using support vector machines. Mach. Learn. 2002;46:389–422.

- Haggard P, Eimer M. On the relation between brain potentials and the awareness of voluntary movements. Exp. Brain Res. 1999;126:128–133. doi: 10.1007/s002210050722.

- Hämäläinen M, Hari R, Ilmoniemi RJ, Knuutila J, Lounasmaa OV. Magneto-encephalography—theory, instrumentation, and applications to noninvasive studies of the working human brain. Rev. Mod. Phys. 1993;65:413–497.

- Hamed SB, Schieber MH, Pouget A. Decoding M1 neurons during multiple finger movements. J. Neurophysiol. 2007;98:327–333. doi: 10.1152/jn.00760.2006.

- Hari R, Joutsiniemi SL, Sarvas J. Spatial resolution of neuromagnetic records: theoretical calculations in a spherical model. Electroencephalogr. Clin. Neurophysiol. 1988;71:64–72. doi: 10.1016/0168-5597(88)90020-2.

- Herrmann CS, Pauen M, Min B-K, Busch NA, Rieger JW. Analysis of a choice-reaction task yields a new interpretation of Libet's experiments. Int. J. Psychophysiol. 2008;67:151–157. doi: 10.1016/j.ijpsycho.2007.10.013.

- Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- Jerbi K, Lachaux J-P, N'Diaye K, Pantazis D, Leahy RM, Garnero L, Baillet S. Coherent neural representation of hand speed in humans revealed by MEG imaging. Proc. Natl. Acad. Sci. U. S. A. 2007;104:7676–7681. doi: 10.1073/pnas.0609632104.

- Kauhanen L, Nykopp T, Sams M. Classification of single MEG trials related to left and right index finger movements. Clin. Neurophysiol. 2006;117:430–439. doi: 10.1016/j.clinph.2005.10.024.

- Kubánek J, Miller KJ, Ojemann JG, Wolpaw JR, Schalk G. Decoding flexion of individual fingers using electrocorticographic signals in humans. J. Neural. Eng. 2009;6:066001. doi: 10.1088/1741-2560/6/6/066001.

- Leuthardt EC, Schalk G, Wolpaw JR, Ojemann JG, Moran DW. A brain-computer interface using electrocorticographic signals in humans. J. Neural. Eng. 2004;1:63–71. doi: 10.1088/1741-2560/1/2/001.

- Miller KJ, Leuthardt EC, Schalk G, Rao RPN, Anderson NR, Moran DW, Miller JW, Ojemann JG. Spectral changes in cortical surface potentials during motor movement. J. Neurosci. 2007;27:2424–2432. doi: 10.1523/JNEUROSCI.3886-06.2007.

- Miller KJ, Zanos S, Fetz EE, den Nijs M, Ojemann JG. Decoupling the cortical power spectrum reveals real-time representation of individual finger movements in humans. J. Neurosci. 2009;29:3132–3137. doi: 10.1523/JNEUROSCI.5506-08.2009.

- Mitra PP, Pesaran B. Analysis of dynamic brain imaging data. Biophys. J. 1999;76:691–708. doi: 10.1016/S0006-3495(99)77236-X.

- Mosher JC, Spencer ME, Leahy RM, Lewis PS. Error bounds for EEG and MEG dipole source localization. Electroencephalogr. Clin. Neurophysiol. 1993;86:303–321. doi: 10.1016/0013-4694(93)90043-u.

- Oostenveld R, Fries P, Maris E, Schoffelen J-M. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- Penfield W, Boldrey E. Somatic motor and sensory representation in the cerebral cortex of man as studied by electrical stimulation. Brain. 1937;60:389–443.

- Pfurtscheller G, Lopes da Silva FH. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

- Pfurtscheller G, Neuper C. Motor imagery and direct brain-computer communication. Proc. IEEE. 2001;89:1123–1134.

- Pfurtscheller G, Graimann B, Huggins JE, Levine SP, Schuh LA. Spatiotemporal patterns of beta desynchronization and gamma synchronization in corticographic data during self-paced movement. Clin. Neurophysiol. 2003;114:1226–1236. doi: 10.1016/s1388-2457(03)00067-1.

- Pfurtscheller G, Brunner C, Schlögl A, Lopes da Silva FH. Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage. 2006;31:153–159. doi: 10.1016/j.neuroimage.2005.12.003.

- Rickert J, de Oliveira SC, Vaadia E, Aertsen A, Rotter S, Mehring C. Encoding of movement direction in different frequency ranges of motor cortical local field potentials. J. Neurosci. 2005;25:8815–8824. doi: 10.1523/JNEUROSCI.0816-05.2005.

- Rieger JW, Reichert C, Gegenfurtner KR, Noesselt T, Braun C, Heinze H-J, Kruse R, Hinrichs H. Predicting the recognition of natural scenes from single trial MEG recordings of brain activity. NeuroImage. 2008;42:1056–1068. doi: 10.1016/j.neuroimage.2008.06.014.

- Schalk G, Kubánek J, Miller KJ, Anderson NR, Leuthardt EC, Ojemann JG, Limbrick D, Moran D, Gerhardt LA, Wolpaw JR. Decoding two-dimensional movement trajectories using electrocorticographic signals in humans. J. Neural. Eng. 2007;4:264–275. doi: 10.1088/1741-2560/4/3/012.

- Scherg M. Functional imaging and localization of electromagnetic brain activity. Brain Topogr. 1992;5:103–111. doi: 10.1007/BF01129037.

- Schieber MH. Constraints on somatotopic organization in the primary motor cortex. J. Neurophysiol. 2001;86:2125–2143. doi: 10.1152/jn.2001.86.5.2125.

- Taylor DM, Tillery SIH, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002;296:1829–1832. doi: 10.1126/science.1070291.

- Voytek B, Secundo L, Bidet-Caulet A, Scabini D, Stiver SI, Gean AD, Manley GT, Knight RT. Hemicraniectomy: a new model for human electrophysiology with high spatio-temporal resolution. J. Cogn. Neurosci. 2010;22:2491–2502. doi: 10.1162/jocn.2009.21384.

- Waldert S, Preissl H, Demandt E, Braun C, Birbaumer N, Aertsen A, Mehring C. Hand movement direction decoded from MEG and EEG. J. Neurosci. 2008;28:1000–1008. doi: 10.1523/JNEUROSCI.5171-07.2008.

- Yousry TA, Schmid UD, Alkadhi H, Schmidt D, Peraud A, Buettner A, Winkler P. Localization of the motor hand area to a knob on the precentral gyrus. A new landmark. Brain. 1997;120(Pt 1):141–157. doi: 10.1093/brain/120.1.141.
