Abstract

Bistability within a small neural circuit can arise through an appropriate strength of excitatory recurrent feedback. The stability of a state of neural activity, measured by the mean dwelling time before a noise-induced transition to another state, depends on the neural firing-rate curves, the net strength of excitatory feedback, and the statistics of spike times, and it increases exponentially with the number of equivalent neurons in the circuit. Here, we show that such stability is greatly enhanced by synaptic facilitation and reduced by synaptic depression. We take into account the alteration in times of synaptic vesicle release by calculating distributions of inter-release intervals of a synapse, which differ from the distribution of its incoming interspike intervals when the synapse is dynamic. In particular, release intervals produced by a Poisson spike train have a coefficient of variation greater than one when synapses are probabilistic and facilitating, whereas the coefficient of variation is less than one when synapses are depressing. However, in spite of the increased variability in postsynaptic input produced by facilitating synapses, their dominant effect is reduced synaptic efficacy at low input rates compared to high rates, which increases the curvature of neural input-output functions, leading to wider regions of bistability in parameter space and enhanced lifetimes of memory states. Our results are based on analytic methods with approximate formulae and are bolstered by simulations of both Poisson processes and circuits of noisy spiking model neurons.

Keywords: Dynamic synapses, Stochastic processes, Facilitation, Poisson process, Bistability, Persistent activity, Short-term memory, First-passage time

1 Introduction

Circuits of reciprocally connected neurons have long been considered as a basis for the maintenance of persistent activity [1]. Such persistent neuronal firing that continues for many seconds after a transient input can represent a short-term memory of prior stimuli [2]. Indeed, Hebb’s famous postulate [3], that causally correlated firing of connected neurons could lead to a strengthening of the connection, was based on the suggestion that the correlated firing would be maintained in a recurrently connected cell assembly beyond the time of a transient stimulus [3]. Since then, analytic and computational models have demonstrated the ability of such recurrent networks to produce multiple discrete attractor states [4], as in Hopfield networks [5,6], or to be capable of integration over time via a marginally stable network, often termed a line attractor [7,8]. Much of the work on these systems has either assumed static synapses or considered changes in synaptic strength via long-term plasticity occurring on a much slower timescale than the dynamics of neuronal responses. Here, we add some new results pertaining to the less well-studied effects of short-term plasticity (changes in synaptic strength that arise on a timescale of seconds, the same timescale as that of persistent activity) within recurrent discrete attractor networks.

The two forms of short-term synaptic plasticity, facilitation and depression, affect all synapses of the presynaptic cell according to its train of action potentials. Synaptic facilitation refers to a temporary enhancement of synaptic efficacy in the few hundreds of milliseconds following each spike, effectively strengthening connections to postsynaptic cells as presynaptic firing rate increases. Synaptic depression is the opposite effect: reduced synaptic efficacy in the few hundreds of milliseconds following a presynaptic spike, effectively weakening connection strengths as presynaptic firing rate increases. The dynamics of these processes (Table 1) also impact the variability in postsynaptic conductance, in particular when synaptic transmission is treated as a stochastic event. The variability affects information processing via the signal-to-noise ratio [9-11] and also determines the stability, or robustness, of discrete memory states [12,13].

Table 1.

Stochastic synapse model and parameters. (S) for single-synapse model; (M) for memory model calculations, where different

| (a) Presynaptic components | |
|---|---|
| Synapse | Determinant of release probability, |
| Static | |
| Facilitating | , where between spikes: and immediately following each spike: |
| Depressing | , where P(V = 1)=D(t); P(V = 0)=1 − D(t). Between spikes: and immediately following successful release, , while immediately following unsuccessful release, |

| (b) Postsynaptic components | |
|---|---|
| Synapse | Determinant of synaptic gating variable, s(t) |
| All | Following successful release: |
| | Between successful releases: |

| (c) Parameters | | | | | |
|---|---|---|---|---|---|
| Synapse | Presynaptic τ | Factors | | Postsynaptic | |
| Static | – | 0.5 (S), 0.5 (M) | – | 100 ms | 1 − exp(−0.25) |
| Facilitating | | 0.1 (S), 0.25 (M) | (S), (M) | 100 ms | 1 − exp(−0.25) |
| Depressing | | 0.5 | – | 100 ms | 1 − exp(−0.25) |

When analyzing the stability of discrete states, we focus on the mean value of and fluctuations within the postsynaptic feedback conductance, since that is the variable with a slow enough time constant to maintain persistent activity in standard models of network-produced memory states [14,15]. In our formalism, we rely on fluctuations in this NMDA receptor-mediated feedback conductance to be on a slower timescale (100 ms) than the membrane time constant, which is short (<10 ms), in part because each cell receives a barrage of balanced excitatory and inhibitory inputs. When synapses are dynamic, both the mean postsynaptic conductance and its fluctuations are altered from the case of static synapses.

Here, we show how a presynaptic Poisson spike train, which produces an exponential distribution of interspike intervals (ISIs), produces a distribution of inter-release intervals (IRIs) that is not exponential if synapses are either facilitating or depressing. We then consider how the nonexponential distribution of IRIs affects both the mean and standard deviation of the postsynaptic conductance differently from the exponential, Poisson, distribution of IRIs. These results affect the calculation of stability of memory states, yielding differences in the parameter ranges where bistability exists and producing large changes in the spontaneous transition times between states, which limit their stability.

A two-state memory system is limited by the lifetime of the less stable state [16]. For a given system, one can typically vary any parameter so as to enhance the lifetime of one state while reducing the lifetime of the other state. If we define the system’s stability as the lifetime of the less stable state, then the optimal stability of a system arises when the lifetimes of the two states are equal. In this paper, for a given system, defined by the neural firing-rate curve and type of synapse, we parametrically scale the total feedback connection strength to determine the system’s optimal stability. In so doing, we find that optimal stability of bistable neural circuits is enhanced by synaptic facilitation.

2 Statistics of Synaptic Transmission Through Probabilistic Dynamic Synapses

In the following, we assume that synaptic facilitation and depression operate by modifying the release probability of presynaptic vesicles. Following vesicle release, neurotransmitter binds to receptors in the postsynaptic terminal. The fraction of receptors bound at any one time determines the fraction of open channels, known as the gating variable, s, which is proportional to the conductance producing current flow into the postsynaptic cell. The dependence of s on presynaptic firing is affected by the dynamic properties of the intervening synapse. In particular, the distribution of intervals between vesicle release events is not identical to the interspike interval (ISI) distribution: facilitating synapses increase the likelihood of short inter-release intervals (IRIs) compared to long intervals, so increase the coefficient of variation (CV); whereas depressing synapses make short release intervals unlikely and produce a more regular sequence of release intervals, reducing the CV (Fig. 1). While the means of these distributions can be calculated by standard methods [17], it is valuable to know the full distribution, since changes in the CV of IRIs affect the variability of the postsynaptic conductance, and thus alter properties like signal-to-noise ratio and the stability of memory states to noise fluctuations.

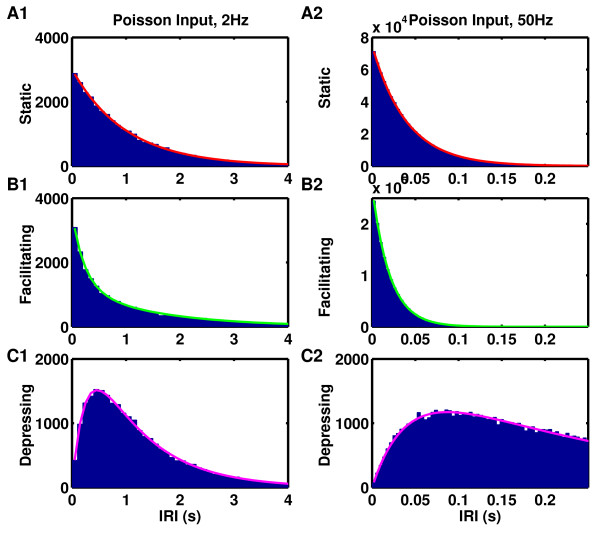

Fig. 1.

Dynamic synapses alter the distribution of interrelease intervals (IRI) of one vesicle produced by a Poisson spike train. a1–a2 Histogram of IRIs for static synapses is exponential (). b1–b2 Histogram of IRIs for a facilitating synapse is more sharply peaked than exponential (with a CV greater than one) (, , ). c1–c2 Histogram of IRIs for a probabilistic depressing synapse has a dip at low intervals (producing a CV less than one) (, ). a1, b1, c1 Presynaptic Poisson spike train of 2 Hz. a2, b2, c2 Presynaptic Poisson spike train of 50 Hz

2.1 Distribution of Release Times for a Poisson Spike Train Through Stochastic Depressing Synapses

The distribution of release times of a vesicle for depressing synapses with a single release site is simpler to calculate than that for facilitating synapses, because when considering synaptic depression alone, the probability of release from a single site simply depends on the time since last release of a vesicle from that site. Therefore, we will solve for depressing synapses before moving to the case of facilitating synapses, where the probability of release depends on the number of intervening spikes. The subsequent result for facilitating synapses will prove to be more biologically relevant, as synapses typically contain multiple releasable vesicles, so it is only in the case where the baseline release probability is low—in which case facilitation dominates—that failure of release is common enough to affect the distribution of release times. The case of probabilistic release in depressing synapses with multiple release sites is more complex, though the first two moments of the IRI probability distribution have been calculated by others [18].

Synaptic depression arises because of the time needed to recycle and replenish vesicles following release of neurotransmitter. Synaptic depression can be treated stochastically [19] by assuming vesicle recovery is a Poisson process, with the likelihood of a vesicle being release-ready, or “docked,” as , where T is the time since the prior vesicle release. Thus, the distribution of inter-release intervals (IRIs) can be calculated by requiring that a vesicle be docked within the interval and then adding the time for a spike to appear after the vesicle is docked. We assume a docked vesicle has a release probability of and incoming spikes arrive as a Poisson process of rate r. Since probability of docking between time and is evaluated at , or

| (1) |

and the probability of the first spike after time being at time T and causing release as , we have

| (2) |

so

| (3) |

which leads to a mean IRI of

| (4) |

The reduction in probability of small IRIs is a simple example of the temporal filtering of information presented by others [18]. The addition of the extra probabilistic process of vesicle recovery, which underlies synaptic depression, causes IRIs to be more regular, as evidenced in Eqs. (2)–(3) by a reduced coefficient of variation (CV) of IRIs from the Poisson value of 1:

| (5) |

which has a minimum value of at and a maximum value of 1 as or . For example, in the curves shown in Figs. 1c1–1c2, at 2 Hz and 0.87 at 50 Hz.
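As a numerical illustration of the depressing-synapse model described in this section, the following Python sketch simulates a single release site driven by a Poisson spike train and compares the simulated inter-release intervals with closed-form moments. The closed forms are not copied from Eqs. (4) and (5); they are derived here by treating an IRI as the sum of an exponential recovery time and an exponential wait for the first successful spike, which is what the text describes. The symbol names (`p0`, `tau_d`), the function names, and the value `tau_d = 0.25` s are assumptions made for illustration.

```python
import numpy as np


def simulate_depressing_iris(rate, p0, tau_d, n_spikes=200_000, seed=0):
    """Simulate inter-release intervals (s) for a single-site depressing synapse.

    Assumed model: after each release the vesicle re-docks after an
    exponentially distributed recovery time (mean tau_d); a docked vesicle
    is released by each presynaptic spike with probability p0.
    """
    rng = np.random.default_rng(seed)
    spike_times = np.cumsum(rng.exponential(1.0 / rate, n_spikes))
    uniforms = rng.random(n_spikes)
    release_times = []
    t_docked = 0.0  # the vesicle is assumed docked at time zero
    for t, u in zip(spike_times, uniforms):
        if t >= t_docked and u < p0:
            release_times.append(t)
            t_docked = t + rng.exponential(tau_d)  # start a new recovery
    return np.diff(release_times)


def predicted_mean_and_cv(rate, p0, tau_d):
    """Moments of a sum of two independent exponential waiting times."""
    wait = 1.0 / (p0 * rate)  # mean wait for a successful spike once docked
    mean = tau_d + wait
    cv = np.sqrt(tau_d**2 + wait**2) / mean
    return mean, cv


if __name__ == "__main__":
    for r in (2.0, 50.0):
        iris = simulate_depressing_iris(r, p0=0.5, tau_d=0.25)
        mean_pred, cv_pred = predicted_mean_and_cv(r, p0=0.5, tau_d=0.25)
        print(r, iris.mean(), mean_pred, iris.std() / iris.mean(), cv_pred)
```

Under these assumptions the predicted CV reaches its minimum of 1/√2 when `p0 * rate * tau_d = 1` and tends to 1 at very low or very high rates. With the assumed `tau_d = 0.25` s and `p0 = 0.5`, the predicted CV at 50 Hz is about 0.87, close to the value quoted above for Fig. 1c2.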

2.2 Distribution of Release Times for a Poisson Spike Train Through Stochastic Facilitating Synapses

For facilitating synapses, we take the following form for release probability, , where between presynaptic spikes:

| (6) |

Each spike produces an increase in F from (which determines the release probability of that spike) to (which is the new release probability for an immediate, subsequent spike) such that

| (7) |

where is the facilitation factor, taking a value between 0 and 1, indicating the fractional increase from the pre-spike release probability toward a saturating release probability of .

To calculate the distribution of interrelease intervals (IRIs) we need to calculate the probability of release as a function of time, following a prior release. Although presynaptic spikes arrive with constant probability per unit time in a Poisson process, vesicle release occurs more often when the facilitation variable is high. Thus, immediately after release, the likelihood of release is greater than on average, because the facilitation variable takes some time (on the order of ) to return to a baseline value. Furthermore, when calculating the IRI distribution, we must be aware that , which is the mean value approached by F conditioned on no intervening release event will be lower than the mean value, , since long IRIs are more associated with time windows of fewer intervening presynaptic spikes than chance.

To proceed, we first calculate , the mean of the facilitation variable immediately after vesicle release. To arrive at this quantity, we use the mean value of the facilitation variable averaged across all presynaptic spikes [17]:

| (8) |

and the variance of this quantity [9]:

| (9) |

Together, these can be used to calculate , which is the mean value of the facilitation variable just prior to firing when averaged across only those spikes that actually cause release, since release probability is proportional to . The latter averaging produces a higher value than , since higher instances of are more likely to result in release, so weight the average more than lower values:

| (10) |

where is the probability that F takes the value immediately prior to an incoming spike and the denominator normalizes the distribution. Hence,

| (11) |

From this, the mean value of the facilitation variable immediately following vesicle release can be calculated as

| (12) |

The above formula is exact and was matched by simulated data at all values of r simulated (data not shown).

To estimate the steady state value of F a long time from any prior release—a steady state that may never be reached if the product of firing rate and base release probability is much higher than —we solve a self-consistency equation for this value, and ignore fluctuations by assuming release probability is for each presynaptic spike. One can calculate then the probability of N spikes in a given interval, T, conditioned on the requirement that none of those spikes caused vesicle release, while the facilitation variable is at its mean steady state value of . The result is:

| (13) |

which is the result for a Poisson process of modified rate, . This allows us to self-consistently calculate by using the result for the mean value of the facilitation variable given such a modified spike rate, such that

| (14) |

which can be solved using the quadratic formula to give

| (15) |

a value which is always below and in close agreement with simulated data (not shown).

Finally, to fit the IRI distribution, we assumed exponential decay from to with a time constant such that the initial slope (when the probability of any intervening spikes is zero) matches that of an exponential decay to 1 with time constant (the initial rate of decrease of F in the absence of intervening spikes). That is, we take the release to follow an inhomogeneous Poisson process with a rate, which depends on time, T, since the prior release event, given by

| (16) |

where

| (17) |

The distribution of IRIs is then given by [17]

| (18) |

a function, which is plotted in Figs. 1b1–1b2, where it is indistinguishable from the simulated data. Similarly, indistinguishable is the cumulative IRI distribution plotted in Figs. 2b1–2b2, justifying the approximations that led to our results.

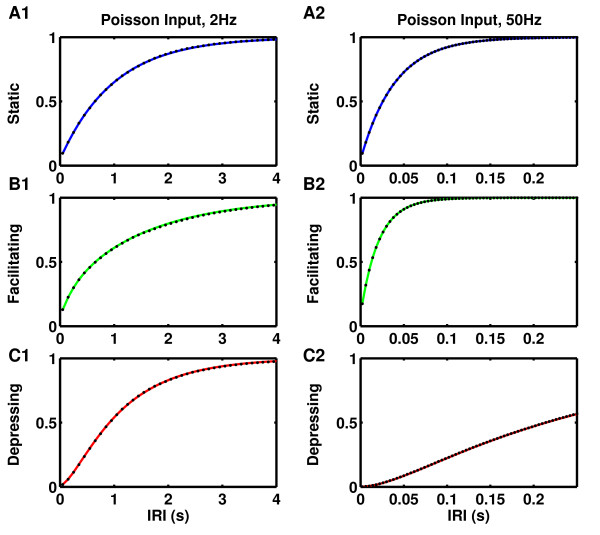

Fig. 2.

Cumulative distribution of inter-release intervals (IRIs) for the same curves shown in Fig. 1, verifying the remarkable agreement of the approximation used in Fig. 1b with the simulated data. a1–a2 Static synapses, . b1–b2 Facilitating synapses, , , . c1–c2 depressing synapses, , . a1, b1, c1 Presynaptic Poisson spike train of 2 Hz. a2, b2, c2 Presynaptic Poisson spike train of 50 Hz

Finally, it should be noted that when synapses are facilitating, consecutive IRIs are correlated. For example, when the presynaptic rate is 2 Hz in the simulation used to produce Figs. 1b1 and 2b1, the correlation between one IRI and the subsequent one is 0.028, while with a presynaptic rate of 50 Hz the correlation is 0.015. Such a correlation, which cannot be obtained from the IRI distribution alone, further increases any variability in postsynaptic conductance, above and beyond the increase due to the altered shape of the IRI distribution.

In summary, relative to the exponential distribution of interspike intervals (which is retrieved by setting either or to zero), facilitation enhances the probability of IRIs at low T and correspondingly reduces it at high T. These changes produce a CV of IRIs greater than 1 ( at 2 Hz and at 50 Hz in the examples shown in Figs. 1b1–1b2), enhancing the noise in any neural system.
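The qualitative claim of this section, that facilitation pushes the CV of IRIs above one, can be checked with a direct simulation. The sketch below is a minimal illustration rather than the code used for Figs. 1 and 2. It assumes that the facilitation variable `F` relaxes exponentially to 1 between spikes, that the release probability at a spike is `p0 * F`, and that each spike moves `F` a fraction `f_fac` of the way toward `F_max = 1 / p0`. The parameter values `f_fac = 0.5` and `tau_f = 0.5` s are hypothetical, since the values used in the figures are not recoverable from the text.

```python
import math
import numpy as np


def simulate_facilitating_iris(rate, p0, f_fac, tau_f, n_spikes=200_000, seed=0):
    """Simulate inter-release intervals (s) for a probabilistic facilitating synapse.

    Assumptions (illustrative): F decays to 1 with time constant tau_f,
    release probability is p0 * F just before the spike, and every spike
    (whether or not it causes release) increments F toward 1 / p0.
    """
    rng = np.random.default_rng(seed)
    isis = rng.exponential(1.0 / rate, n_spikes)
    uniforms = rng.random(n_spikes)
    f_max = 1.0 / p0  # keeps the release probability at or below one
    F = 1.0
    t = 0.0
    release_times = []
    for isi, u in zip(isis, uniforms):
        t += isi
        F = 1.0 + (F - 1.0) * math.exp(-isi / tau_f)  # relaxation between spikes
        if u < p0 * F:                                # stochastic release
            release_times.append(t)
        F += f_fac * (f_max - F)                      # facilitation step
    return np.diff(release_times)


def coefficient_of_variation(intervals):
    """Standard deviation divided by the mean."""
    return float(np.std(intervals) / np.mean(intervals))


if __name__ == "__main__":
    static = simulate_facilitating_iris(2.0, p0=0.1, f_fac=0.0, tau_f=0.5)
    facil = simulate_facilitating_iris(2.0, p0=0.1, f_fac=0.5, tau_f=0.5)
    print("CV static:", coefficient_of_variation(static))
    print("CV facilitating:", coefficient_of_variation(facil))
```

Setting `f_fac = 0` recovers a thinned Poisson process, so the first CV should be close to one, while the facilitating case should give a larger value because releases preferentially follow short interspike intervals.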

2.3 Mean Synaptic Transmission via Dynamic Synapses

We assume that at the time of vesicle release the postsynaptic conductance increases in a step-wise manner, with a fraction, , of previously closed channels becoming opened. This causes the synaptic gating variable, s, to increase from its prior value, to according to . It then decays between release events with time constant, , according to .

If one assumes that successive inter-release intervals (IRIs) are uncorrelated then one can calculate the mean, , and variance, , in the postsynaptic gating variable (and hence the postsynaptic conductance, which is proportional to s) via:

| (19a) |

| (19b) |

| (19c) |

where the averages of and are taken over the distribution of interrelease intervals, , as given in the prior section (Fig. 1, Eqs. (3), (18)) and we have used the solution at time t following the ith spike at time . Solution of the above equations leads to

| (20) |

which allows us to calculate the mean synaptic conductance through static and dynamic synapses (Fig. 3a).

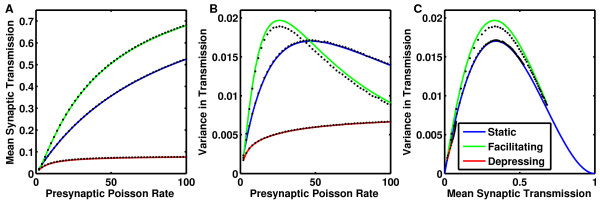

Fig. 3.

a Mean synaptic transmission, and b variance of synaptic transmission, , arising from presynaptic Poisson trains through probabilistic synapses. c Variance in synaptic transmission as a function of the mean transmission. Solid curves are analytic solutions (blue, middle curve for static, a= Eq. (23), b= Eq. (24), c= Eq. (25); green, upper curve for facilitating, a from Eqs. (20) and (31), b from Eqs. (22) and (31), c from a &b; and red, lower curve for depressing synapses, a= Eq. (26), b from Eqs. (4), (22), (25) and (27), c from a &b). Black dots are corresponding results from simulations produced by 30,000 sec of Poisson input spike trains through saturating synapses

Similarly, combining

| (21a) |

| (21b) |

| (21c) |

leads to

| (22) |

which allows us to calculate the variance in postsynaptic conductance (Fig. 3b).

When synapses are static, release times are distributed as a Poisson process of rate , where r is the presynaptic Poisson rate and is the static release probability. In this case, the mean value of the gating variable is calculated by standard methods [20] to give

| (23) |

a function plotted in Fig. 3a (blue curve), where it exactly matches the simulated data (black asterisks). A similar calculation leads to the variance in synaptic transmission for static synapses [12,20] as

| (24) |

a function plotted in Fig. 3b (blue curve), where it exactly matches the simulated data (black asterisks). The variance can be written as a function of the mean synaptic transmission by substituting for r into Eq. (24) with from Eq. (23) to produce the reduced formula:

| (25) |

which is plotted in Fig. 3c (blue curve).
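For static synapses, the mean and variance of the gating variable can be checked numerically. The sketch below is an illustration under stated assumptions, not a transcription of Eqs. (23) and (24): the closed forms are obtained here by balancing the first and second moments of s for Poisson releases at rate `p0 * rate`, with each release opening a fraction `alpha` of closed channels and s decaying with time constant `tau_s`. The names `alpha`, `tau_s`, and `p0`, and all function names, are chosen for this example.

```python
import math
import numpy as np


def static_mean_s(rate, p0, alpha, tau_s):
    """Stationary mean of s from the first-moment balance (assumed form)."""
    x = alpha * p0 * rate * tau_s
    return x / (1.0 + x)


def static_var_s(rate, p0, alpha, tau_s):
    """Stationary variance of s from the second-moment balance (assumed form)."""
    release_rate = p0 * rate
    mean = static_mean_s(rate, p0, alpha, tau_s)
    numerator = release_rate * (2.0 * alpha * (1.0 - alpha) * mean + alpha**2)
    denominator = 2.0 / tau_s + release_rate * alpha * (2.0 - alpha)
    return numerator / denominator - mean**2


def simulate_static_moments(rate, p0, alpha, tau_s, n_releases=200_000, seed=0):
    """Time-averaged mean and variance of s for Poisson release times."""
    rng = np.random.default_rng(seed)
    iris = rng.exponential(1.0 / (p0 * rate), n_releases)
    s = 0.0
    integral_s = 0.0
    integral_s2 = 0.0
    for dt in iris:
        s += alpha * (1.0 - s)                 # step increase at release
        decay = math.exp(-dt / tau_s)
        integral_s += s * tau_s * (1.0 - decay)                # integral of s over dt
        integral_s2 += s * s * 0.5 * tau_s * (1.0 - decay**2)  # integral of s^2 over dt
        s *= decay                             # exponential decay until next release
    total_time = iris.sum()
    mean = integral_s / total_time
    return mean, integral_s2 / total_time - mean**2


if __name__ == "__main__":
    a = 1.0 - math.exp(-0.25)
    print(simulate_static_moments(20.0, 0.5, a, 0.1))
    print(static_mean_s(20.0, 0.5, a, 0.1), static_var_s(20.0, 0.5, a, 0.1))
```

The same simulation loop can be driven by IRIs drawn from a dynamic synapse instead of `rng.exponential`, which is one way to produce the kind of simulated points shown in Fig. 3.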

For probabilistic depressing synapses with “all-or-none” release, the IRIs are independent as the synapse is always in the same state immediately post-release. The IRIs are distributed according to Eq. (3), which leads to

| (26) |

so that using Eq. (4) for the mean IRI, , we have

| (27) |

which, plotted as a red curve in Fig. 3a, precisely matches the simulated data (black circles). Similarly, making the substitution for probabilistic depressing synapses:

| (28) |

into Eq. (22), allows us to evaluate as plotted in Fig. 3b (red solid curve), where it precisely matches the simulated data (black points).

For probabilistic facilitating synapses, we use an approximate formula for to evaluate the expected value of the exponential decay —essentially a Laplace transform—since the full formula is intractable for these purposes. We found after testing many formulas against simulated quantities that so long as we correctly included the facilitation factor immediately after release as and the approximate release probability a long time after release as , the principal requirement was to use a probability density of IRIs, with the correct value for the mean IRI. For facilitating synapses we know the mean IRI, :

| (29) |

We fulfilled these three requirements by grossly simplifying the actual decay of the facilitation variable post-release, letting it switch from its immediate post-release value of to its steady state value, , at a time, into the IRI, where is chosen to produce the correct value of . That is, we approximated the probability distribution of IRIs, , as

| (30) |

where

| (31) |

From such a distribution we can easily calculate moments, and , of the postsynaptic conductance using the Laplace transforms where

| (32) |

The corresponding mean postsynaptic conductance, using Eq. (20), plotted in Fig. 3a (green curve) is indistinguishable from the simulated data (black points). This form of the mean synaptic transmission through facilitating synapses will be used in the next section when we assess the stability and robustness of memory states produced by such synaptic feedback. The variance in synaptic transmission of the simulated data (Fig. 3b, black points) is no longer precisely fit by the approximate formula, obtained from Eq. (22), Eq. (31) and using Eq. (31) with replaced by to calculate (Fig. 3b, green curve). However, since the approximate formula slightly overestimates the variance, it will tend to underestimate the stability of any memory state. Thus, a more precise fit would enhance stability (Fig. 4d). Figure 3c (green curve) indicates that for all values of mean synaptic transmission, the variance is greater when synapses are facilitating.

Fig. 4.

Synaptic facilitation enhances the stability of discrete memory states. a The firing rate curve (solid, blue) and synaptic feedback (dashed red) for a system with feedback strength optimized for bistability in a group of cells with static synapses. Firing rate curve follows Eq. (35) with , , , which is the best fit to the leaky integrate-and-fire neuron used in the simulations and described in Table 2d. Feedback strength is optimized for bistability with . b Same firing rate curve (solid, blue) as in a but synaptic feedback (dashed green) via facilitating synapses with feedback strength optimized for bistability with . a–b Solid circles indicate stable fixed points separated by an unstable fixed point (open circle). c Difference between firing rate and feedback curves in a and b determines the basis for the gradient of an effective potential. Note the enhanced areas between fixed points (zero crossings) producing a larger potential barrier when synapses are facilitating (green) compared to static (red). d The lifetime of both the low activity state and the high activity state increases exponentially with system size, but a given level of stability is achieved with far fewer cells when the synapses are facilitating (solid curves, analytic results; filled and open circles, simulated results for the high and low activity states, respectively)

3 Stability of Discrete States Enhanced by Short-Term Synaptic Facilitation

Groups of cells with sufficient recurrent excitatory feedback can become bistable, capable of remaining, in the absence of input, in a quiescent state of low-firing rate, or after transient excitation, in a persistent state of high-firing rate. Given the inherent stochastic noise in neural activity—spike trains are irregular, with the CV of ISIs often exceeding one—the activity states have an inherent average lifetime, which increases exponentially with the number of neurons in the cell-group. In this section, we show analytically that addition of synaptic facilitation to all recurrent synapses can increase the stability of such discrete memory states by many orders of magnitude. We follow the methods presented in a prior paper for static synapses [12] and extend them to a circuit with probabilistic facilitating synapses. Calculations of stability are based on the mean of first-passage times between two stable states [21]. We assume that neurons spike with Poisson statistics, while the variability in the postsynaptic conductance, which possesses a long time constant (100 ms) typical of NMDA receptors [15], determines the instability of states. Since synaptic facilitation of probabilistic synapses affects both the mean and variance of the postsynaptic conductance (Figs. 3a–3b), both must be calculated and taken into account when determining the lifetime of memory states. We describe the method briefly below, leaving a reproduction of the full details to the following sections.

Bistability arises when the deterministic dynamics of the network produces multiple fixed points—firing rates at which —at least two of which are stable. The deterministic mean firing rate depends on the total synaptic input to a group of cells. The total synaptic input includes a feedback component via recurrent connections as well as an independent external component. At a fixed point, the feedback produced by a given firing rate is such that the total synaptic input exactly maintains that given firing rate (intersections in Figs. 4a, 4b). For a network to possess multiple fixed points, the curve representing synaptic transmission as a function of firing rate and the curve representing firing rate as a function of synaptic input must intersect at multiple points (Figs. 4a, 4b). Between any two stable fixed points is an unstable fixed point, where the curves cross back in the opposite direction. The stability of any individual fixed point is strongly dependent on the area enclosed between the two curves from that fixed point to the unstable fixed point. This enclosed area acts as the height of an effective potential (Fig. 4c), which, for a given level of noise in the system determines the mean passage time from one stable fixed point to the basin of attraction of the other fixed point, i.e., the mean lifetime of the memory state. Importantly, the lifetime is approximately exponentially dependent on the effective barrier height, or the area between the two curves. Thus, changes in the curvature of synaptic feedback as a function of firing rate, which can have a strong impact on the area between the f-I curve and the feedback curve, can affect state lifetimes exponentially.
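The fixed-point construction described above can be sketched numerically. The example below is illustrative only: it uses a hypothetical logistic firing-rate curve (not the fitted function used in this paper), a mean gating curve for static synapses derived from a first-moment balance, and the values 0.5, 100 ms, and 1 − exp(−0.25) from Table 1c, interpreted here as the release probability, synaptic time constant, and step fraction. Fixed points are located as sign changes of the difference between the rate the feedback would evoke and the rate itself, and a fixed point is classed as stable when that difference goes from positive to negative.

```python
import numpy as np

ALPHA = 1.0 - np.exp(-0.25)  # assumed step fraction per release
TAU_S = 0.1                  # assumed synaptic time constant (s)
P0 = 0.5                     # assumed static release probability


def mean_gating(rate):
    """Mean gating variable for static synapses (assumed closed form)."""
    x = ALPHA * P0 * rate * TAU_S
    return x / (1.0 + x)


def firing_rate(total_input, r_max=100.0, theta=0.3, sigma=0.03):
    """Hypothetical logistic f-I curve, used only for illustration."""
    return r_max / (1.0 + np.exp(-(total_input - theta) / sigma))


def net_drive(rate, weight):
    """Rate evoked by the feedback minus the rate that produced it."""
    return firing_rate(weight * mean_gating(rate)) - rate


def find_fixed_points(weight, r_max_search=120.0, n_grid=12001):
    """Return a list of (rate, is_stable) pairs for the feedback loop."""
    grid = np.linspace(0.0, r_max_search, n_grid)
    g = net_drive(grid, weight)
    fixed_points = []
    for i in np.nonzero(np.sign(g[:-1]) != np.sign(g[1:]))[0]:
        lo, hi = grid[i], grid[i + 1]
        for _ in range(60):  # bisection refinement of the crossing
            mid = 0.5 * (lo + hi)
            if np.sign(net_drive(mid, weight)) == np.sign(g[i]):
                lo = mid
            else:
                hi = mid
        # Positive-to-negative crossing: small perturbations decay.
        fixed_points.append((0.5 * (lo + hi), bool(g[i] > 0)))
    return fixed_points


if __name__ == "__main__":
    for w in (0.2, 1.0):
        print(w, find_fixed_points(w))
```

With these hypothetical parameters, a weak feedback weight gives a single low-rate fixed point, while a stronger weight gives two stable fixed points separated by an unstable one, which is the bistable configuration discussed in the text.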

When we analyze the extent of this effect as wrought by synaptic facilitation, we find a greatly enhanced barrier in the effective potential (Fig. 4c), which demonstrates that the additional curvature in the neural feedback function outweighs any increase in noise in the system (which enters the denominator in the effective potential, Eq. (36)). Consequently, the lifetime of both persistent and spontaneous states in a discrete attractor system can be enhanced by several orders of magnitude when synapses are facilitating (Fig. 4d). Alternatively, one can obtain the same stability with far fewer cells. For example, to produce a mean lifetime of over a minute for both the low and high activity states with all-to-all connections, only eight cells are necessary in the example with facilitating synapses, whereas forty are necessary when synapses are static.

3.1 Analytic Calculation of Mean Transition Time Between Discrete Attractor States

To calculate transition times between discrete attractor states, and hence assess their stability to noise, we produce an effective potential for the postsynaptic conductance as the most slowly varying continuous variable of relevance. We use standard methods for transitions between stable states of Markov processes [21] but first must calculate the deterministic term, , and diffusive term, , for a group of cells with recurrent feedback. The calculations in the case of static synapses were produced and validated elsewhere [12] but we briefly reiterate them in the following paragraphs. When synapses are facilitating, the only alterations are the expression for mean synaptic conductance, (Fig. 3a) and its variance, (Fig. 3b), and a newly optimized strength of feedback connection to ensure both spontaneous and active states remain as stable as possible.

Our essential assumption is to treat the behavior of the postsynaptic variable, s, given a presynaptic Poisson spike train at rate r, as an Ornstein–Uhlenbeck process, which matches the mean and variance of s, while maintaining the same basic synaptic time constant for decay to zero in the absence of presynaptic input. Thus, we have

| (33) |

(by matching the mean of s) and

| (34) |

(by matching the variance of s), where the subscript “1” indicates the variance produced by a single presynaptic spike train. For a circuit with N presynaptic neurons producing feedback current, we scale down individual connection strengths so that the mean feedback current is independent of N, but the noise is reduced as , since s is the fraction of maximal conductance ().

We close the feedback loop by ensuring the presynaptic firing rate is equal to the postsynaptic firing rate, so use the firing rate function [22]:

| (35) |

with rate multiplier , threshold , and concavity all obtained by fitting to leaky integrate-and-fire simulations [12]. S is a scaled version of s, accounting for the total feedback conductance, , where W is the sum of connection strengths of all cells and held fixed when N is varied.

The effective potential, , for a group with N feedback inputs per cell is

| (36) |

which leads to a probability density, :

| (37) |

where C is a normalization constant. The mean transition time from a stable state centered at to a state centered at is [21]:

| (38) |

a function which is plotted for both static and facilitating synapses in Fig. 4d.
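The mean first-passage time of a one-dimensional diffusion can be evaluated by nested numerical integration. The sketch below uses the standard textbook expression for a process with drift A(x) and diffusion B(x), written for the convention dx = A dt + sqrt(B) dW, with a reflecting boundary below the starting state and an absorbing boundary at the target. That convention, the function names, and the grid size are assumptions of this example; the specific drift and diffusion terms of this paper are not reproduced here.

```python
import numpy as np


def cumulative_trapezoid(y, x):
    """Cumulative trapezoidal integral of y over x, starting at zero."""
    steps = 0.5 * (y[1:] + y[:-1]) * np.diff(x)
    return np.concatenate(([0.0], np.cumsum(steps)))


def mean_first_passage_time(drift, diffusion, x_reflect, x_start, x_absorb,
                            n_grid=20001):
    """Mean time to reach x_absorb from x_start, reflecting at x_reflect.

    Evaluates T = 2 * int_{x_start}^{x_absorb} dy / psi(y)
                    * int_{x_reflect}^{y} psi(z) / B(z) dz,
    with psi(x) = exp(int 2 A / B dx), i.e. exp(-effective potential).
    """
    x = np.linspace(x_reflect, x_absorb, n_grid)
    A = drift(x)
    B = diffusion(x)
    log_psi = cumulative_trapezoid(2.0 * A / B, x)
    log_psi -= log_psi.max()  # a constant shift cancels in the ratio below
    psi = np.exp(log_psi)
    inner = cumulative_trapezoid(psi / B, x)
    outer = cumulative_trapezoid(2.0 * inner / psi, x)
    return outer[-1] - np.interp(x_start, x, outer)


if __name__ == "__main__":
    # Sanity check on pure diffusion (A = 0, B = 1) on [0, 1]:
    # the textbook result is (x_absorb**2 - x_start**2) / B.
    zero_drift = lambda x: np.zeros_like(x)
    unit_diffusion = lambda x: np.ones_like(x)
    print(mean_first_passage_time(zero_drift, unit_diffusion, 0.0, 0.0, 1.0))
```

In the setting of this section, `drift` and `diffusion` would be built from the mean and variance of the synaptic gating variable, with the noise term scaled down by the number of cells. Because the effective potential appears in an exponent, very high barriers can overflow floating-point range in this simple implementation.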

3.2 Simulation of Mean Transition Time Between Discrete Attractor States

We compared the results of our approximate analysis (Fig. 4d, curves) with those of computer simulations of noisy leaky integrate-and-fire neurons. To do this, we simulated small circuits of excitatory neurons connected in an all-to-all manner, using the parameters given in Table 2. Each neuron received independent background Poisson inputs, both excitatory and inhibitory, such that interspike intervals had a CV of 1 at low firing rates, decreasing gradually to 0.8 by a firing rate of 100 Hz. We simulated for either 200,000 seconds or until 20,000 transitions between states were made, whichever was sooner. The mean transition times are plotted in Fig. 4d (open and closed circles), where they show good qualitative agreement with the analytic curves.
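State lifetimes can be extracted from a simulated trace with two thresholds, as in the measurement described in Table 2g. The sketch below is one plausible reading of that procedure, not the original analysis code: it assumes a state is entered when the monitored variable first crosses below 0.05 (low state) or above 0.45 (high state), and that its lifetime ends when the opposite threshold is next crossed. The function name and the choice of monitored variable are hypothetical.

```python
def state_lifetimes(times, values, low=0.05, high=0.45):
    """Return (low_state_lifetimes, high_state_lifetimes) from a time series.

    A lifetime is the time from entering a state (crossing its threshold)
    to the next crossing of the opposite threshold. Samples between the
    two thresholds do not change the current state.
    """
    low_lifetimes, high_lifetimes = [], []
    state, t_enter = None, None
    for t, v in zip(times, values):
        if v < low and state != "low":
            if state == "high":
                high_lifetimes.append(t - t_enter)
            state, t_enter = "low", t
        elif v > high and state != "high":
            if state == "low":
                low_lifetimes.append(t - t_enter)
            state, t_enter = "high", t
    return low_lifetimes, high_lifetimes


if __name__ == "__main__":
    t = [0, 1, 2, 3, 4, 5, 6]
    s = [0.0, 0.02, 0.5, 0.5, 0.3, 0.01, 0.6]
    print(state_lifetimes(t, s))
```

Using two well-separated thresholds rather than one avoids counting brief fluctuations around a single boundary as transitions.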

Table 2.

Details of network simulations producing memory activity

| (a) Model summary | |
|---|---|
| Populations | Single population, E |
| Connectivity | All-to-all |
| Neuron model | Leaky integrate-and-fire (LIF) with refractory period |
| Synapse model | Excitatory AMPA + voltage-dependent NMDA, inhibitory GABA conductances: step increase then exponential decay |
| Input | Independent fixed-rate Poisson spike trains from populations of Input cells |
| Measurements | State transition times via mean population firing rate |

| (b) Populations | | |
|---|---|---|
| Name | Elements | Size |
| E | LIF neurons | N = 8, 20, 30, 40 (static); N = 4, 8, 12, 16 (facilitating) |

| (c) Connectivity | | | |
|---|---|---|---|
| Name | Source | Target | Pattern |
| EE | E | E | All-to-all, weight W |

| (d) Neuron and synapse model | |

|---|---|

| Name |

LIF neuron |

| Type |

Leaky integrate-and-fire (LIF) with refractory period, and

noisy Poisson exponential conductance input |

| Subthreshold dynamics |

|

| EE synaptic conductance dynamics |

|

|

between spikes of cell i at times

and | |

| Spiking | If then (1) emit spike with time-stamp (2) |

| (f) Input | |

|---|---|

| Type | Description |

| Poisson generators X = AMPA,GABA |

|

| ; | |

| (g) Measurements | |

|---|---|

| Transition times | Time for to transition from below 0.05 to above 0.45 () and from above 0.45 to below 0.05 () |

| (h) LIF neuron parameters | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| −70 mV | −70 mV | −70 mV | −45 mV | −60 mV | 50 nS | 0.5 nF | 20 nS | 20 nS | 2 ms | 5 ms | |

| (i) Synaptic parameters (EE) | |||||

|---|---|---|---|---|---|

| Synapse | Presynaptic τ | Factors | Postsynaptic | ||

| Static |

– |

0.5 (M) |

– |

100 ms |

1 − exp(−0.25) |

| Facilitating | 0.25 (M) | (M) | 100 ms | 1 − exp(−0.25) | |

3.3 Results for Multiple Circuits

In the example shown, bistability in the control system with static synapses required fine-tuning of parameters and so was not very robust. One might wonder whether, for a different system (in particular, one with a different f-I curve), the system with static synapses would still be improved by the addition of synaptic facilitation. That is, does synaptic facilitation always enhance the robustness of such bistable neural circuits? To address this point, we parametrically varied the properties of the f-I curve (Eq. (34)) and, for each set of parameters, systematically varied the feedback connection strength, W, to test whether the system could be bistable.
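The test for bistability at a given W amounts to counting self-consistent solutions of the closed feedback loop: three fixed points (two stable, one unstable) indicate bistability. The sketch below illustrates that procedure under loudly stated assumptions. The functional forms of `firing_rate` and `static_synapse`, and all parameter values (`beta`, `theta`, `alpha`, `a`), are hypothetical choices made for illustration and are not taken from the paper's equations or fits.

```python
import numpy as np


def firing_rate(S, beta=50.0, theta=1.0, alpha=10.0):
    """Assumed smooth threshold-linear f-I curve (illustrative form and values)."""
    x = np.asarray(S, dtype=float) - theta
    x = np.where(np.abs(x) < 1e-9, 1e-9, x)   # avoid 0/0 exactly at threshold
    return beta * x / (-np.expm1(-alpha * x))


def static_synapse(rate, a=0.05):
    """Assumed saturating steady-state synaptic variable for a static synapse."""
    return a * rate / (1.0 + a * rate)


def count_fixed_points(W, n_grid=10001):
    """Count solutions of s = static_synapse(firing_rate(W * s)) on [0, 1]."""
    s = np.linspace(0.0, 1.0, n_grid)
    mismatch = static_synapse(firing_rate(W * s)) - s
    # Each sign change of the mismatch marks one crossing of the two curves.
    return int(np.count_nonzero(np.diff(np.sign(mismatch)) != 0))


if __name__ == "__main__":
    for W in np.linspace(0.0, 8.0, 17):
        n_fp = count_fixed_points(W)
        label = "bistable" if n_fp == 3 else "monostable"
        print(f"W = {W:4.1f}: {n_fp} fixed point(s), {label}")
```

Repeating the scan for each set of f-I curve parameters, and for each synapse type, would give the kind of bistability map summarized in Fig. 5.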

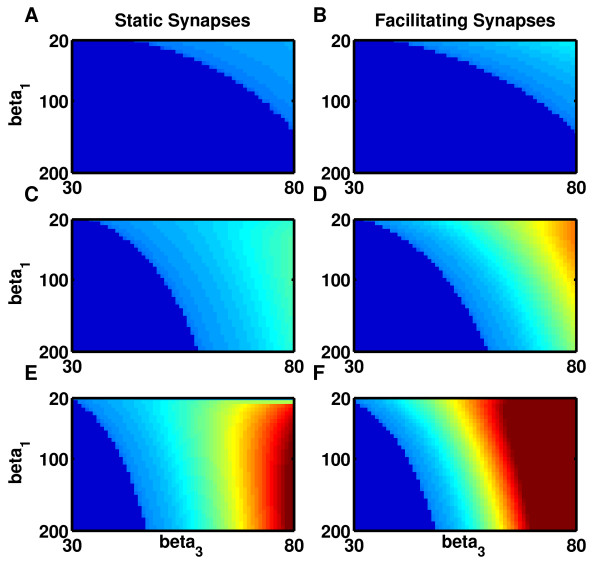

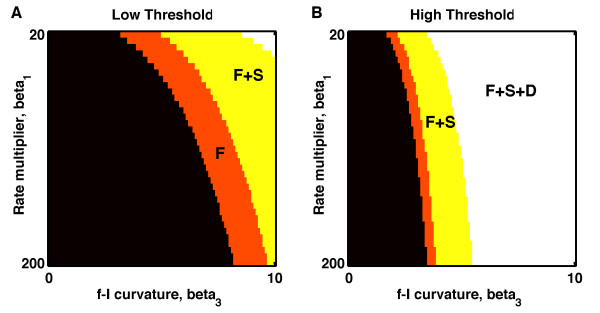

As a result (Fig. 5), we found that the set of parameters able to produce bistability when synapses are static is a subset of the set found when synapses are facilitating. Thus, synaptic facilitation can produce bistability when it is not possible with static synapses, but the reverse is not true. As a corollary, the set of parameters able to produce bistability when synapses are depressing is a subset of the set found when synapses are static.

Fig. 5.

Range of bistability is enhanced with facilitating synapses and reduced with depressing synapses. a Low threshold. b High threshold. a, b White region: all models (with static, facilitating and depressing synapses) are bistable; yellow region: models with static or facilitating synapses are bistable; orange region: only models with facilitating synapses are bistable; black region: no models are bistable

For all parameter sets able to produce bistability, we assessed the optimal stability of the memory system. As the excitatory feedback connection strength, W, increases, the mean lifetime of the high-activity state increases, while the mean lifetime of the low-activity state decreases. We define the optimal stability of the memory system as the lifetime at which the high-activity and low-activity states are equally durable. More specifically, we calculate the minimum of the two state lifetimes as a measure of the stability of memory and parametrically vary W to find the maximum stability for a given set of firing-rate curve parameters and a given type of synapse. In all cases where comparison was possible, stability is enhanced when synapses are facilitating and reduced when synapses are depressing, compared to the case of static synapses (Fig. 6).
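The max-min criterion described above can be sketched in a few lines. The lifetime curves used here are synthetic exponentials chosen only so that the two lifetimes cross; they are not results from the paper, and the names `optimal_stability`, `t_low`, and `t_high` are hypothetical.

```python
import numpy as np


def optimal_stability(W, t_low, t_high):
    """Return the W that maximizes min(t_low, t_high), and that maximal value."""
    stability = np.minimum(t_low, t_high)   # the less durable state limits memory
    i = int(np.argmax(stability))
    return W[i], stability[i]


if __name__ == "__main__":
    W = np.linspace(0.0, 4.0, 401)
    t_high = np.exp(W)          # synthetic: high-state lifetime grows with W
    t_low = np.exp(4.0 - W)     # synthetic: low-state lifetime shrinks with W
    best_W, best_T = optimal_stability(W, t_low, t_high)
    print(f"optimal W = {best_W:.2f}, stability = {best_T:.3f}")
```

With one lifetime increasing and the other decreasing in W, the optimum sits where the two curves cross, which matches the "equally durable" condition in the text.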

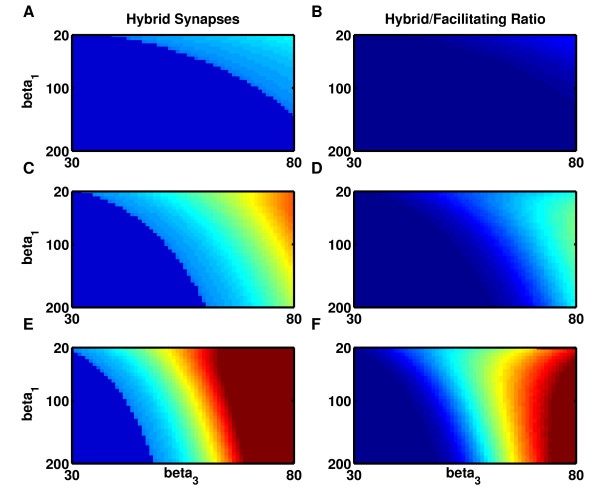

Fig. 6.

Maximum stability of memory states, for a given neural firing-rate curve, is always greater when synapses are facilitating rather than static. a–b Low threshold. c–d Medium threshold. e–f High threshold. a, c, e Synapses are static. b, d, f Synapses are facilitating. All panels: steepness of the single-neuron firing-rate curve increases along the y-axis, while its maximum curvature increases along the x-axis. Stability of a bistable system is determined by the minimum lifetime of either of the two activity states. Maximum stability is calculated for each firing-rate curve as a function of connection strength and plotted after logarithmic scaling in color code. Dark blue: no bistability exists. Light blue = low stability; orange-red = high stability; cyan-green boundary = optimal lifetime of one hour

It is worth emphasizing that the two effects of synaptic facilitation on synaptic transmission have opposing consequences for attractor state stability. While the increased curvature in the curve of mean synaptic transmission increases stability of discrete attractors, the increased variance (Fig. 3c, green curve) decreases stability. While our results demonstrate that the deterministic effect dominates (i.e., the net effect of facilitation is to enhance stability), it is instructive to assess the contribution of each of the two effects alone. Thus, for a given mean synaptic transmission calculated for facilitating synapses, we used the variance in synaptic transmission corresponding to static synapses (Fig. 3c, blue curve) and recalculated the lifetimes of memory states. While changing the noise does not significantly change the parameter range for bistability (i.e., Fig. 5 is, to first order, unaffected by changes in noise), it does have a considerable impact on the lifetimes of states. In particular, with the reduced noise of static synapses (a reduction of at most 20%), the optimal lifetime was typically a factor of e higher in a circuit with 20 neurons and a factor of e² higher in a circuit with 40 neurons (using the parameters of Fig. 4d). Figure 7 demonstrates the enhanced lifetime in the hybrid model across networks: the ratio is always greater than one and reached as high as 50 in the networks examined. Thus, the increased noise in the postsynaptic current produced by synaptic facilitation does considerably reduce state lifetimes, and the hybrid model of synaptic facilitation without such enhanced noise produces the greatest possible stability of discrete memory states.
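The dependence of the lifetime ratio on circuit size can be understood from a short scaling argument. The following is a sketch that assumes the lifetime grows exponentially with N with an N-independent prefactor, and that the prefactors of the two models are equal; the symbols $\tau_0$, $\Delta\Phi_1$, and $\delta$ are introduced here for illustration.

```latex
% Assumption: T \approx \tau_0 \exp(N\,\Delta\Phi_1), where \Delta\Phi_1 is the
% effective barrier height per neuron and \tau_0 is the same for both models.
\begin{align}
  R(N) &= \frac{T_{\text{hybrid}}(N)}{T_{\text{facilitating}}(N)}
        = \exp\!\bigl[N\,(\Delta\Phi_1^{\text{hybrid}} - \Delta\Phi_1^{\text{fac}})\bigr]
        \equiv e^{N\delta}, \\
  R(20) &= e \;\Rightarrow\; \delta = \tfrac{1}{20}, \\
  R(40) &= e^{40\delta} = e^{2}.
\end{align}
```

Under these assumptions, doubling the number of neurons squares the lifetime ratio, so even a modest reduction in noise has a rapidly growing effect on stability in larger circuits.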

Fig. 7.

A hybrid model demonstrates the reduction in lifetime attributable to the enhanced fluctuations in postsynaptic conductance produced by synaptic facilitation. a–b Low threshold. c–d Medium threshold. e–f High threshold. a, c, e Lifetime of states in the hybrid model with synaptic facilitation but with the noise due to static synapses. Dark blue: no bistability exists. Light blue = low stability; orange-red = high stability; cyan-green boundary = optimal lifetime of one hour. b, d, f Logarithm of the ratio of Figs. 7a, 7c, 7e to 6b, 6d, 6f, respectively, demonstrates the decrease in state lifetime attributable to enhanced noise when synapses are facilitating. Dark blue: ratio = 1. Cyan-yellow: ratio > e. Orange-light red and dark red: successively higher ratios. All panels: steepness of the single-neuron firing-rate curve increases along the y-axis, while its maximum curvature increases along the x-axis. Stability of a bistable system is determined by the minimum lifetime of either of the two activity states. Maximum stability is calculated for each firing-rate curve as a function of connection strength and plotted after logarithmic scaling in color code

4 Discussion

Bistability relies upon positive feedback, which can arise from cell-intrinsic currents or from network feedback. Synaptic facilitation is a positive feedback mechanism in circuits of reciprocally connected excitatory cells, since the greater the mean firing rate, the greater the effective connection strength, further amplifying the excitatory input beyond that produced by the increased spike rate alone. This property of synaptic facilitation enhances the stability of memory states and renders them more robust to distractors [23]. Other forms of positive feedback, such as depolarization-induced suppression of inhibition (DSI), which depends on activity in the postsynaptic cell, can similarly produce robustness in recurrent memory networks [24].

When the bistability necessary for discrete memory is produced through synaptic feedback in a circuit of neurons, the relative stability to noise fluctuations of each of the two stable fixed points depends exponentially on the area between the mean neural response curve and the synaptic feedback curve (Figs. 4a–4b). While the synaptic feedback curve is monotonic in firing rate, for static synapses it is either linear (in the absence of postsynaptic saturation) or of negative curvature (decreasing gradient), with the effectiveness of additional spikes decreasing at high rates when receptors become saturated. However, when the synapse is facilitating, the synaptic response curve has positive curvature when firing rates are low—the effect of each additional spike is greater as firing rate increases. Here, we showed how such an effect could increase the area between intersections of synaptic feedback and neural response curves, enhancing stability dramatically (Figs. 4–6).

We note that the addition of positive curvature at low rates to the negative curvature at high, saturating rates in the curve of synaptic transmission as a function of presynaptic firing rates (Fig. 3a) inevitably increases the areas between three points of intersection with any firing rate curve without such an “S”-shape (Figs. 4a–4b). Since the “S”-shape is a hallmark of synaptic facilitation, not present for synaptic transmission through static synapses, facilitation can always enhance stability of such bistable systems. Less mathematically, a facilitating synapse with the same effective strength as a static synapse at intermediate firing rates is stronger at high firing rates, enhancing the stability of a high-activity state (where a drop in synaptic transmission is detrimental), while at the same time is weaker at low firing rates, enhancing the stability of a low-activity state (where a rise of synaptic transmission is detrimental).

It is worth pointing out the converse—that short-term synaptic depression reduces the robustness of such discrete attractors. Indeed, in Fig. 5, we show that the range of parameters for which a bistable system exists is much narrower when synapses are depressing (D) versus static (S) or facilitating (F). Since synaptic depression contributes a negative curvature to the f-I curve, it tends to reduce the “S-shape” needed for bistability. Or, perhaps more intuitively, high synaptic strength is needed to maintain a high-firing rate state if synapses are depressing, but such high synaptic strength is more likely to render the low-firing rate spontaneous state unstable.

The changes in the shape of the distribution of inter-release intervals caused by dynamic synapses alter the fluctuations in post-synaptic conductance. In particular, facilitation enhances the variability and depression reduces the variability arising from a Poisson spike train. While the extra variability caused by facilitating synapses tends to destabilize a memory system, this effect was overwhelmed by the increase in stability due to the rate-dependent changes in mean synaptic transmission described above. However, the increase in conductance variability, in particular, being on a slower timescale than membrane potential fluctuations, can be a factor in explaining the high CV of neural spike trains.
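The effect of dynamic synapses on the variability of release intervals can be explored with a small Monte Carlo simulation. The sketch below uses two deliberately simple, assumed synapse models that are not the paper's exact formalism: a facilitating synapse whose release probability jumps by `f * (1 - p)` at each spike and decays back to `p0` with time constant `tau_f`, and a depressing synapse with a single release site that refills after an exponentially distributed time with mean `tau_d`. All parameter values are hypothetical.

```python
import math

import numpy as np


def cv(intervals):
    """Coefficient of variation of a set of intervals."""
    intervals = np.asarray(intervals)
    return intervals.std() / intervals.mean()


def release_times_facilitating(spike_times, p0, f, tau_f, rng):
    """Probabilistic release with a facilitating release probability (assumed model)."""
    p, t_prev, out = p0, 0.0, []
    draws = rng.random(len(spike_times))
    for t, u in zip(spike_times, draws):
        p = p0 + (p - p0) * math.exp(-(t - t_prev) / tau_f)  # decay since last spike
        if u < p:
            out.append(t)
        p += f * (1.0 - p)                                   # facilitation after the spike
        t_prev = t
    return np.array(out)


def release_times_depressing(spike_times, tau_d, rng):
    """Single release site that empties on release and refills stochastically."""
    ready_at, out = 0.0, []
    for t in spike_times:
        if t >= ready_at:
            out.append(t)
            ready_at = t + rng.exponential(tau_d)
    return np.array(out)


rng = np.random.default_rng(0)
rate, tau_d = 4.0, 0.5                                   # Hz, seconds (illustrative)
spikes = np.cumsum(rng.exponential(1.0 / rate, 200_000))  # Poisson spike train

cv_spikes = cv(np.diff(spikes))
cv_fac = cv(np.diff(release_times_facilitating(spikes, 0.05, 0.4, 0.5, rng)))
cv_dep = cv(np.diff(release_times_depressing(spikes, tau_d, rng)))
# For this depressing model each interval is a refill time plus a wait for the
# next spike, so its CV has a closed form.
cv_dep_theory = math.sqrt(tau_d**2 + rate**-2) / (tau_d + 1.0 / rate)

if __name__ == "__main__":
    print(f"CV of interspike intervals:             {cv_spikes:.3f}")
    print(f"CV of release intervals (facilitating): {cv_fac:.3f}")
    print(f"CV of release intervals (depressing):   {cv_dep:.3f} (theory {cv_dep_theory:.3f})")
```

In the depressing model each release interval is the sum of two independent exponential waiting times, which is why its CV falls below one; for the facilitating model, releases cluster after bursts of spikes, which is the mechanism the text identifies as raising the variability.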

Our calculations are based on a simplified formalism, in which the firing-rate curve (f-I curve) of a neuron is first assumed or fit (Eq. (34), [22]) under in vivo-like conditions, assuming a given level of noise in the membrane potential. Since the shape of the f-I curve depends on both the mean and variance of the input current [25,26], it might appear invalid to discuss changes in the variability of input current due to dynamic synapses in the context of a fixed f-I curve. However, the time constants for short-term synaptic plasticity and the NMDA receptor-mediated currents are more than an order of magnitude greater than the time constant of the membrane potential under the conditions of strong, fluctuating balanced input that produce the irregularity of spike trains seen in vivo. Since the neuron’s membrane potential can sample its probability distribution—which determines the likelihood of a spike per unit time—more rapidly than the timescale for changes in that probability distribution, our analytic methods provide a reasonable description of the circuit’s behavior (Fig. 4d).

In summary, we have demonstrated the ability of short-term synaptic facilitation to stabilize discrete attractor states of neural activity against noise. We have shown this by simulations and through analytic methods, which include a consideration of how stochastic dynamic synapses mold the distribution of inter-release intervals (IRIs) into a form that differs from the exponential distribution of incoming interspike intervals (ISIs). The altered IRI distribution affects both mean synaptic transmission and the variability of transmission due to a presynaptic Poisson spike train, both of which have a strong impact on the stability of memory states. The increased variability of synaptic transmission due to facilitation is more than countered by the effect of facilitation on mean synaptic transmission, which enhances the robustness of bistability, leading to stable memory states with fewer neurons.

Competing Interests

The author declares that he has no competing interest.

References

- Lorente de Nó R. Vestibulo-ocular reflex arc. Arch Neurol Psych. 1933;30:245–291. doi: 10.1001/archneurpsyc.1933.02240140009001.

- Funahashi S, Bruce CJ, Goldman-Rakic PS. Neuronal activity related to saccadic eye movements in the monkey’s dorsolateral prefrontal cortex. J Neurophysiol. 1991;65:1464–1483. doi: 10.1152/jn.1991.65.6.1464.

- Hebb DO. Organization of Behavior. Wiley, New York; 1949.

- Brunel N, Nadal JP. Modeling memory: what do we learn from attractor neural networks? C R Acad Sci, Sér 3 Sci Vie. 1998;321:249–252. doi: 10.1016/s0764-4469(97)89830-7.

- Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci USA. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- Hopfield JJ. Neurons with graded response have collective computational properties like those of two-state neurons. Proc Natl Acad Sci USA. 1984;81:3088–3092. doi: 10.1073/pnas.81.10.3088.

- Zhang K. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensembles: a theory. J Neurosci. 1996;16:2112–2126. doi: 10.1523/JNEUROSCI.16-06-02112.1996.

- Compte A, Brunel N, Goldman-Rakic PS, Wang XJ. Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb Cortex. 2000;10:910–923. doi: 10.1093/cercor/10.9.910.

- Bourjaily MA, Miller P. Dynamic afferent synapses to decision-making networks improve performance in tasks requiring stimulus associations and discriminations. J Neurophysiol. 2012;108:513–527. doi: 10.1152/jn.00806.2011.

- Lindner B, Gangloff D, Longtin A, Lewis JE. Broadband coding with dynamic synapses. J Neurosci. 2009;29:2076–2088. doi: 10.1523/JNEUROSCI.3702-08.2009.

- Rotman Z, Deng PY, Klyachko VA. Short-term plasticity optimizes synaptic information transmission. J Neurosci. 2011;31:14800–14809. doi: 10.1523/JNEUROSCI.3231-11.2011.

- Miller P, Wang XJ. Stability of discrete memory states to stochastic fluctuations in neuronal systems. Chaos. 2006;16. doi: 10.1063/1.2208923. Article ID 026110.

- Koulakov AA. Properties of synaptic transmission and the global stability of delayed activity states. Network. 2001;12:47–74.

- Wang XJ. Synaptic basis of cortical persistent activity: the importance of NMDA receptors to working memory. J Neurosci. 1999;19:9587–9603. doi: 10.1523/JNEUROSCI.19-21-09587.1999.

- Wang XJ. Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 2001;24:455–463. doi: 10.1016/S0166-2236(00)01868-3.

- Miller P, Zhabotinsky AM, Lisman JE, Wang XJ. The stability of a stochastic CaMKII switch: dependence on the number of enzyme molecules and protein turnover. PLoS Biol. 2005;3. doi: 10.1371/journal.pbio.0030107. Article ID e107.

- Dayan P, Abbott LF. Theoretical Neuroscience. MIT Press, Cambridge; 2001.

- Rosenbaum R, Rubin J, Doiron B. Short term synaptic depression imposes a frequency dependent filter on synaptic information transfer. PLoS Comput Biol. 2012;8. doi: 10.1371/journal.pcbi.1002557. Article ID e1002557.

- Kandaswamy U, Deng PY, Stevens CF, Klyachko VA. The role of presynaptic dynamics in processing of natural spike trains in hippocampal synapses. J Neurosci. 2010;30:15904–15914. doi: 10.1523/JNEUROSCI.4050-10.2010.

- Brunel N, Wang XJ. Effects of neuromodulation in a cortical network model of object working memory dominated by recurrent inhibition. J Comput Neurosci. 2001;11:63–85. doi: 10.1023/A:1011204814320.

- Gillespie DT. Markov Processes. Academic Press, San Diego; 1992.

- Abbott LF, Chance FS. Drivers and modulators from push-pull and balanced synaptic input. Prog Brain Res. 2005;149:147–155. doi: 10.1016/S0079-6123(05)49011-1.

- Itskov V, Hansel D, Tsodyks M. Short-term facilitation may stabilize parametric working memory trace. Front Comput Neurosci. 2011;5. doi: 10.3389/fncom.2011.00040. Article ID 40.

- Carter E, Wang XJ. Cannabinoid-mediated disinhibition and working memory: dynamical interplay of multiple feedback mechanisms in a continuous attractor model of prefrontal cortex. Cereb Cortex. 2007;17(Suppl 1):i16–i26. doi: 10.1093/cercor/bhm103.

- Hansel D, van Vreeswijk C. How noise contributes to contrast invariance of orientation tuning in cat visual cortex. J Neurosci. 2002;22:5118–5128. doi: 10.1523/JNEUROSCI.22-12-05118.2002.

- Murphy BK, Miller KD. Multiplicative gain changes are induced by excitation or inhibition alone. J Neurosci. 2003;23:10040–10051. doi: 10.1523/JNEUROSCI.23-31-10040.2003.