Abstract

Introduction

Hyperspectral imaging has been used in dermatology for many years. Combining hyperspectral imaging with image analysis considerably broadens the possibility of reproducible, quantitative evaluation of, for example, melanin and haemoglobin at any location in the patient's skin. The dedicated image analysis method proposed by the authors makes it possible to perform this type of measurement automatically.

Material and method

As part of the study, an algorithm was proposed for the analysis of hyperspectral images of healthy human skin acquired with a Specim camera. Images were collected from the dorsal side of the hand. The wavelength λ of the data obtained ranged from 397 to 1030 nm. A total of 4,000 2D images were obtained for 5 hyperspectral images. The proposed method uses dedicated image analysis based on human anthropometric data, mathematical morphology, median filtering, normalization and other operations. The algorithm was implemented in Matlab and C and is used in practice.

Results

The image analysis and processing algorithm proposed by the authors enables reproducible segmentation of any region of the hand (fingers, wrist). In addition, the method makes it possible to quantify the spectral content in different regions of interest, which are determined automatically. Owing to this, it is possible to perform analyses for melanin in the wavelength range λ_E ∈ (450, 600) nm and for haemoglobin in the range λ_H ∈ (397, 500) nm, extending into the ultraviolet for the type of camera used. In these ranges, there are 189 images for melanin and 126 images for haemoglobin. For six areas of the left and right sides of the little finger (digitus minimus manus), the mean values of melanin and haemoglobin content were 17% and 15% respectively compared to the pattern.

Conclusions

The obtained results confirmed the usefulness of the proposed new method of image analysis and processing in dermatology of the hand as it enables reproducible, quantitative assessment of any fragment of this body part. Each image in a sequence was analysed in this way in no more than 100 ms using Intel Core i5 CPU M460 @2.5 GHz 4 GB RAM.

Keywords: Hyperspectral imaging, Image processing, Measurement automation, Segmentation

Introduction

The use of imaging in dermatological diagnosis is currently a very rapidly growing branch of medicine and computer science. Computer-assisted medical diagnosis offers much wider possibilities than traditional evaluation performed by a medical diagnostician. The results obtained from computer analysis are automatic, reproducible, calibrated and independent of human factors related to both the patient and the medical diagnostician who performs the examination. The most common imaging methods use infrared and visible light. In this respect, methods of assessing various types of dermatological conditions based on simple image analysis and processing are extremely popular. In thermal imaging, temperature changes resulting from the imaged diseases, such as melanoma, as well as thermal effects of the performed dermatological treatments are analysed automatically. In visible-light imaging, methods which enable morphometric measurements or profiled methods of image analysis and processing are used, for example, to determine the brightness of the RGB components in a segmented skin area. Hyperspectral imaging, which is also used in dermatology, offers much wider capabilities. Multispectral images are acquired using profiled multispectral cameras working in different spectral ranges. Additionally, depending on the wavelength and spectral range, various types of illuminators are used. Matching these two elements (camera and illuminator) is extremely important because the illuminator (lamp) must have a flat spectrum in the range covered by the camera. Within this range of light, for example the visible one, it is possible to observe melanin or haemoglobin at a desired location on the skin. This observation is combined with image analysis enabling automatic and reproducible measurements independent of interindividual variability.

The methods of image analysis and processing used in hyperspectral imaging of any object (not necessarily a medical one) can be divided into several groups, namely morphological methods [1-6], statistical methods [7-9] and profiled algorithms for selected applications. Among the morphological methods, there are classical approaches [1,2,4] and those profiled to the analysis of image sequences [5,6]. The statistical methods are dominated by texture analysis [7-9], which provides a set of features for classification and recognition. So far, profiled algorithms have been applied to face recognition [10], analysis of skin areas [11], and others [12]. These methods are used mainly for the segmentation of specific objects [13]. On the basis of the segmented objects, morphometric measurements or texture analysis are performed [9]. These are, for example, methods which make it possible to assess the difference in absorption of radiation by skin chromophores such as haemoglobin or melanin.

The amount and distribution of melanin is in fact an important factor determining, inter alia, the efficacy and safety of treatments in aesthetic medicine with the use of lasers and IPL. In hyperspectral imaging of melanin and haemoglobin, only qualitative analyses of the results obtained are known [14-16]. Therefore, there is a need to objectify the results obtained and to propose fully automatic measurements of progression and changes of melanin or haemoglobin in the skin of the hand.

The aim of the analysis is to determine melanin and haemoglobin quantity in selected areas of the right hand by using hyperspectral imaging.

Material

As part of the study, an algorithm was proposed for the analysis of hyperspectral images of healthy human skin acquired with the Specim PFD-V10E camera. Images were derived from the human hand having 2 Fitzpatrick skin phototypes. Individual hands were illuminated with a typical lamp with flat spectral characteristics in the required range (based on HgAr emission for the VNIR spectral range). The images were obtained retrospectively during routine medical examinations carried out in accordance with the Declaration of Helsinki. No studies or experiments on humans were carried out for the purposes of the described algorithm. The resulting data were anonymized and stored in the source output format 'dat' (ENVI file). The wavelength λ of the data obtained ranged from 397 to 1030 nm. Images were recorded every 0.79 nm, which in total gave 800 2D images for each patient. The resolution M × N (number of rows and columns) of each image for the selected wavelength was 899 × 1312 pixels. For the fixed distance of the object from the camera and the set focusing parameters, each pixel covered a square area of 130 × 130 μm. A total of 4,000 2D images were obtained for 5 hyperspectral images. These images are subject to further analysis.
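The acquisition figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is only a consistency check, assuming the 800 bands are spread evenly over the stated spectral range; the function and constant names are illustrative and do not come from the authors' implementation.

```python
# Acquisition figures quoted in the text.
LAMBDA_MIN_NM = 397.0
LAMBDA_MAX_NM = 1030.0
BANDS_PER_CUBE = 800        # 2D images per hyperspectral image
CUBES = 5                   # hyperspectral images in the study
ROWS, COLS = 899, 1312      # resolution M x N
PIXEL_UM = 130.0            # side of the square area covered by one pixel


def mean_band_step_nm(lo, hi, bands):
    """Average spacing between adjacent bands spread evenly over [lo, hi]."""
    return (hi - lo) / (bands - 1)


def field_of_view_mm(rows, cols, pixel_um):
    """Physical extent covered by the image, in millimetres."""
    return rows * pixel_um / 1000.0, cols * pixel_um / 1000.0


total_images = BANDS_PER_CUBE * CUBES
step = mean_band_step_nm(LAMBDA_MIN_NM, LAMBDA_MAX_NM, BANDS_PER_CUBE)
fov = field_of_view_mm(ROWS, COLS, PIXEL_UM)
print(total_images, round(step, 2), fov)
```

Under these assumptions the total of 4,000 images and the spacing of about 0.79 nm agree with the text, and the imaged area is roughly 117 × 171 mm, which is enough to cover a hand.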

Method

Preprocessing

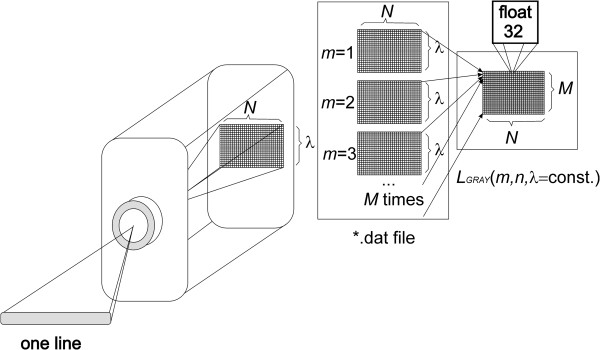

Input files with the extension *.dat containing a sequence of images are reorganized in the preliminary stage. This process (Figure 1) changes the organization of individual rows and columns obtained for different wavelengths into the sequence of images L_GRAY(m,n,λ), where m and n are the row and column coordinates and λ is the wavelength for which the image was acquired. The number of rows and columns and the wavelength for each image are automatically read from the file *.hdr. This file also contains information about the data format (e.g. data type 4 - float 32 bits) and other elements that need to be taken into account when reading such data (wavelength units, default bands, sensor type, interleave). For the analysed data, the image resolution M × N was 899 × 1312 pixels.

Figure 1.

Diagram of the acquisition and organization of data *.dat. The various stages of data analysis and reorganization are performed automatically. A hyperspectral camera saves each row sequentially for individual wavelengths λ. In subsequent stages of processing, they are converted to the image L_GRAY(m,n,λ). The resulting image is then subjected to further processing steps.
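The reorganization step can be sketched as follows. This is a minimal illustration, not the authors' Matlab/C code: it assumes the band-interleaved-by-line (BIL) layout, which matches the description of rows being saved sequentially for individual wavelengths, and it uses a simplified header parser. The function names `parse_envi_header` and `bil_to_cube` are hypothetical, and the list of integers stands in for decoded float32 samples.

```python
def parse_envi_header(text):
    """Parse 'key = value' pairs from ENVI .hdr text into a dict.

    Simplified: multi-line values enclosed in braces are joined, but nested
    braces and comments are not handled.
    """
    fields = {}
    key, buffer = None, []
    for line in text.splitlines():
        if key is None:
            if "=" not in line:
                continue
            key, value = (part.strip() for part in line.split("=", 1))
            key = key.lower()
            if value.startswith("{") and not value.endswith("}"):
                buffer = [value]          # value continues on the next lines
                continue
            fields[key] = value.strip("{}").strip()
            key = None
        else:
            buffer.append(line.strip())
            if line.strip().endswith("}"):
                fields[key] = " ".join(buffer).strip("{}").strip()
                key, buffer = None, []
    return fields


def bil_to_cube(flat, rows, cols, bands):
    """Reorder band-interleaved-by-line samples into cube[band][row][col]."""
    if len(flat) != rows * cols * bands:
        raise ValueError("sample count does not match header dimensions")
    cube = [[None] * rows for _ in range(bands)]
    for m in range(rows):
        for b in range(bands):
            start = (m * bands + b) * cols
            cube[b][m] = list(flat[start:start + cols])
    return cube


header = parse_envi_header("""ENVI
samples = 3
lines = 2
bands = 2
data type = 4
interleave = bil
wavelength = {397.0,
 397.79}
""")
rows, cols, bands = (int(header[k]) for k in ("lines", "samples", "bands"))
flat = list(range(rows * cols * bands))   # stand-in for decoded samples
cube = bil_to_cube(flat, rows, cols, bands)
print(cube[0], cube[1])
```

In the ENVI header, `samples` is the number of columns and `lines` the number of rows, so each 2D image `cube[b]` corresponds to one image L_GRAY(m,n,λ) for a single wavelength.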

Each of the images L_GRAY(m,n,λ) is calibrated using the reference rows (used for the calibration for a given range of the spectrum λ) [17-19], and then filtered, which forms a new sequence of images L_M(m,n,λ). The filter is a median filter with a mask h_M sized 3 × 3 pixels. The mask size was chosen arbitrarily, taking into account the possible contamination of the optical path as well as the image resolution and the size of the object [20-22]. A larger mask h_M removed not only noise but also small elements of the image which are substantively important. Consequently, the size of 3 × 3 pixels was retained. The final step of image pre-processing involves normalization and removal of uneven illumination. In the normalization of brightness levels, the range between the minimum and maximum values in the image L_M(m,n,λ) is extended to the full brightness range from 0 to 1, that is, the image after normalization L_P(m,n,λ) is equal to:

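$$L_P(m,n,\lambda) = \frac{L_M(m,n,\lambda) - \min_{m,n} L_M(m,n,\lambda)}{\max_{m,n} L_M(m,n,\lambda) - \min_{m,n} L_M(m,n,\lambda)} \tag{1}$$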

It should be noted that this normalization also covers the area to be calibrated. For this reason, the maximum brightness value equal to “1” after normalization corresponds to 100% emission for the wavelength λ. Removal of uneven illumination consists in subtracting from the image L_P(m,n,λ) the image resulting from its filtration, i.e.:

$$L_C(m,n,\lambda) = L_P(m,n,\lambda) - \left(L_P * h_2\right)(m,n,\lambda) \tag{2}$$

for m ∈ (M_h2/2, M_C − M_h2/2) and n ∈ (N_h2/2, N_C − N_h2/2), where:

M_h2 × N_h2 – resolution of the mask h2,

L_C(m,n,λ) – output image after the removal of uneven illumination.

To obtain the output image L_C(m,n,λ), the image L_P(m,n,λ) is convolved with the mask h2 of size M_h2 × N_h2 = 30 × 30 pixels. The mask size was selected as twice the maximum size of the elements (objects) visible in the image, which in this case is 15 × 15 pixels. Next, based on the images L_C(m,n,λ) and L_P(m,n,λ), the appropriate stages of image analysis and processing are carried out.
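The three pre-processing operations described above (median filtration, normalization and removal of uneven illumination) can be sketched on a small image as follows. This is an illustrative sketch rather than the authors' implementation: the calibration against reference rows is omitted, border pixels are left unfiltered, and the mask h2 is assumed to be a uniform averaging mask, which the text does not state explicitly.

```python
from statistics import median


def median3x3(img):
    """3 x 3 median filter; border pixels are left unchanged (an assumption)."""
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]
    for m in range(1, rows - 1):
        for n in range(1, cols - 1):
            window = [img[m + dm][n + dn]
                      for dm in (-1, 0, 1) for dn in (-1, 0, 1)]
            out[m][n] = median(window)
    return out


def normalize(img):
    """Stretch brightness to the full range 0..1 (min-max normalization)."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [[0.0] * len(row) for row in img]
    return [[(v - lo) / (hi - lo) for v in row] for row in img]


def remove_uneven_illumination(img, size):
    """Subtract a moving average from the interior of the image.

    The window spans 2 * (size // 2) + 1 pixels per side, so an even `size`
    is effectively rounded up by one. Pixels closer than size // 2 to the
    border are set to 0.
    """
    rows, cols = len(img), len(img[0])
    half = size // 2
    out = [[0.0] * cols for _ in range(rows)]
    for m in range(half, rows - half):
        for n in range(half, cols - half):
            window = [img[i][j]
                      for i in range(m - half, m + half + 1)
                      for j in range(n - half, n + half + 1)]
            out[m][n] = img[m][n] - sum(window) / len(window)
    return out


img = [[10.0] * 5 for _ in range(5)]
img[2][2] = 200.0          # isolated noisy pixel
img[0][0] = 0.0            # dark corner, kept because borders are not filtered
filtered = median3x3(img)
norm = normalize(filtered)
flat = remove_uneven_illumination(norm, 3)
print(filtered[2][2], norm[0][0], norm[4][4], flat[2][2])
```

The isolated bright pixel is removed by the median filter, and after subtraction of the local mean a uniformly lit interior region becomes zero, so only local deviations from the background remain.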

Image processing

The images L_C(m,n,λ) and L_P(m,n,λ) resulting from the initial stage of image processing are used in the further processing steps. The image L_C(m,n,λ) is used to isolate the region and the object of interest. The image L_P(m,n,λ) is the image on which measurements are made for each value of λ.

Since the aim of the analysis is to determine melanin and haemoglobin quantity in selected areas of the right hand, an important element is the location of characteristic areas. These areas include the individual fingers from V1 to V5 (pollex, index, digitus medius, digitus annularis, digitus minimus manus) and the metacarpus and wrist area V6. These places (points) from V1 to V6 are localized automatically based on the pattern shown in Figure 2. Automatic recognition of the individual locations (from V1 to V6) for each of the patients was carried out using a hierarchical approach. The image L_C(m,n,λ) is reduced to 10% of the original resolution, that is from 899 × 1312 pixels to 90 × 131 pixels, using the nearest neighbour method. The result is the image L_D(m,n,λ,α), where α is the angle of rotation relative to L_C(m,n,λ). Then, the angle of the hand inclination α is roughly determined, i.e.:

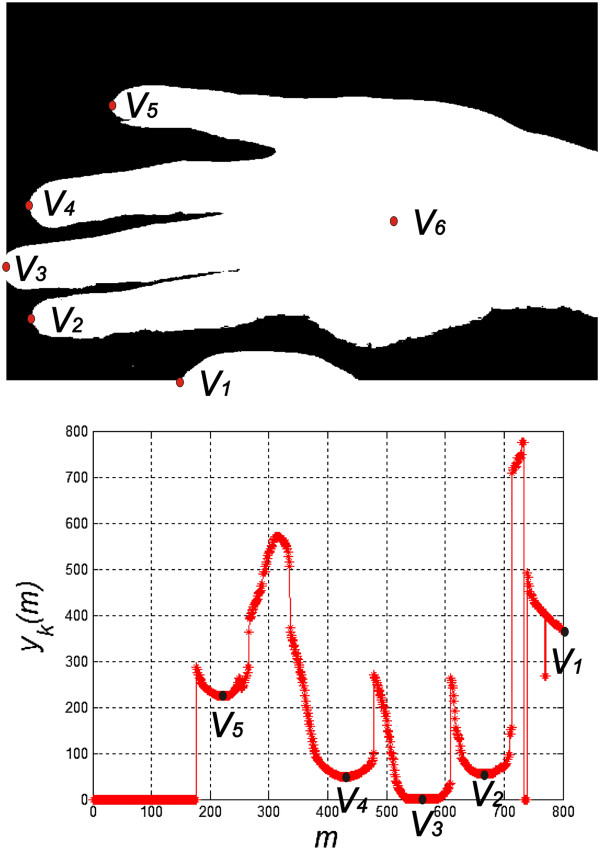

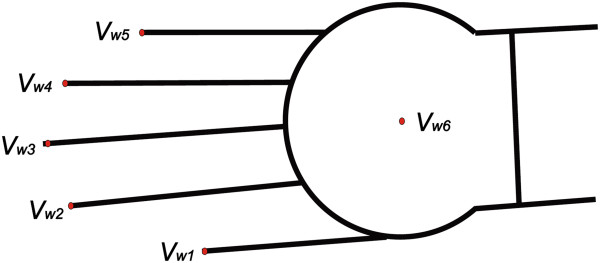

Figure 2.

Hand pattern used during matching to hyperspectral images. The division into individual areas (points) from V_w1 to V_w6 is performed automatically. Each area is assigned the number of a finger from V_w1 to V_w5 or the centre of mass of the wrist V_w6. In the upper part of the image, there is the brightness pattern sized 100 × 1321 pixels. The distribution and size of the fingers are determined based on anthropometric data.

| (3) |

and α∈(0°,180°).
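The reduction of the image to 10% of its resolution by the nearest neighbour method, used before the angle is estimated, can be sketched as below. The sampling convention (taking the source pixel nearest to the centre of each output pixel) is an assumption made for illustration; the function name `downsample_nearest` is hypothetical.

```python
def downsample_nearest(img, scale):
    """Resize a 2D image by `scale` using nearest-neighbour sampling."""
    rows, cols = len(img), len(img[0])
    out_rows = max(1, round(rows * scale))
    out_cols = max(1, round(cols * scale))
    out = []
    for i in range(out_rows):
        # Source row closest to the centre of output row i.
        src_m = min(rows - 1, int((i + 0.5) / scale))
        out.append([img[src_m][min(cols - 1, int((j + 0.5) / scale))]
                    for j in range(out_cols)])
    return out


img = [[0, 1, 2, 3],
       [4, 5, 6, 7],
       [8, 9, 10, 11],
       [12, 13, 14, 15]]
half = downsample_nearest(img, 0.5)
print(half)
```

With a scale of 0.1, an 899 × 1312 image is reduced to 90 × 131 pixels, which is the size quoted in the text.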

The obtained result is the rotation angle α* by which the hand image must be rotated for each wavelength λ so that it is placed in a horizontal position. The rotation angle is the same for each λ, so λ does not occur in formula (3). The image L_C*(m,n,λ) rotated by the angle α* is then searched for the characteristic points from V1 to V6. The position of the characteristic points is detected based on the local minima found on the contour of the hand - Figure 3. The contour curve y_k(m) is formed on the basis of the binary image L_B(m,n) resulting from binarization of the image L_D(m,n,λ,α*) with the threshold p_r determined automatically from Otsu’s formula [23], i.e.:

Figure 3.

Binary image of the hand L_B(m,n) and the course of the curve y_k(m). The binary image of the hand is acquired in a way which only makes it possible to determine the position of the points V1 to V6. These points are determined on the basis of the local minima in the course of y_k(m). They provide a further basis for matching the pattern.

| (4) |
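Otsu's method [23] selects the threshold that maximizes the between-class variance of the brightness histogram. The sketch below is a generic, textbook-style version for brightness values in the range 0 to 1; the number of histogram bins and the function names are assumptions and are not taken from the authors' code.

```python
def otsu_threshold(values, bins=256):
    """Return the threshold in (0, 1] that maximizes between-class variance."""
    hist = [0] * bins
    for v in values:
        hist[min(bins - 1, int(v * bins))] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_bin, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(bins):
        w0 += hist[t]              # pixels in bins 0..t (background class)
        sum0 += t * hist[t]
        w1 = total - w0            # pixels in bins t+1.. (object class)
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_bin = var_between, t
    # Upper edge of the last background bin.
    return (best_bin + 1) / bins


def binarize(img, threshold):
    """Binary image: 1 where brightness reaches the threshold, else 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in img]


img = [[0.1, 0.1, 0.9],
       [0.1, 0.9, 0.9]]
p_r = otsu_threshold([v for row in img for v in row])
binary = binarize(img, p_r)
print(p_r, binary)
```

For a clearly bimodal image such as this one, any threshold between the two brightness groups gives the same binary result; the sketch returns the lowest such value.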

The course of the curve y_k(m) was determined on the basis of the position of the first row with the pixel value “1” in the image L_B(m,n) for each column. Local minima, which are highlighted in Figure 3, were also determined on this basis. In the present case, these are the points V2 to V6 (the thumb is not fully visible). The point V6 is the centre of mass of the wrist calculated on the basis of the image L_O(m,n) resulting from the opening operation of the image L_B(m,n), i.e.:

$$L_O(m,n) = L_B(m,n) \circ SE = \left(L_B(m,n) \ominus SE\right) \oplus SE \tag{5}$$

where SE – square structural element sized 201 × 201 pixels.
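The construction of the contour curve from the binary image and the search for its local minima can be sketched as follows. The handling of flat segments (a plateau is reported once, at its centre) and of empty columns (the image height is used as a sentinel) are assumptions made for this illustration.

```python
def contour_curve(binary):
    """For every column, return the index of the first row containing a 1.

    Columns without any object pixel get the image height as a sentinel.
    """
    rows, cols = len(binary), len(binary[0])
    return [next((m for m in range(rows) if binary[m][n] == 1), rows)
            for n in range(cols)]


def local_minima(curve):
    """Indices of local minima; a flat minimum is reported at its centre."""
    minima = []
    i = 1
    last = len(curve) - 1
    while i < last:
        if curve[i] < curve[i - 1]:
            j = i
            while j < last and curve[j + 1] == curve[i]:
                j += 1                      # walk along the plateau
            if j < last and curve[j + 1] > curve[i]:
                minima.append((i + j) // 2)
            i = j + 1
        else:
            i += 1
    return minima


binary = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 1, 0, 1, 0, 1, 0],
    [1, 1, 1, 1, 1, 1, 1],
]
curve = contour_curve(binary)
print(curve, local_minima(curve))
```

In this toy image the three protruding columns play the role of fingers, and each of them produces one local minimum of the curve.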

The size of the structural element SE was selected on the basis of the maximum width of the fingers for the set distance of the camera and its focal length. In the tests performed, the width of the widest finger was no more than 200 pixels. On this basis, the centre of mass of the wrist was determined as the coordinates m_v6 and n_v6 of the point V6, i.e.:

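$$m_{v6} = \frac{\sum_{m}\sum_{n} m \cdot L_O(m,n)}{\sum_{m}\sum_{n} L_O(m,n)} \tag{6}$$

$$n_{v6} = \frac{\sum_{m}\sum_{n} n \cdot L_O(m,n)}{\sum_{m}\sum_{n} L_O(m,n)} \tag{7}$$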
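The opening with a square structural element followed by the centre-of-mass calculation can be illustrated on a toy image. The sketch below uses a 3 × 3 element instead of 201 × 201 so that the example stays small, treats pixels outside the image as background, and uses hypothetical function names; it is a plain reference version, not an optimized one.

```python
def erode(img, k):
    """Binary erosion with a k x k square element (k odd); the area outside
    the image is treated as background."""
    rows, cols, h = len(img), len(img[0]), k // 2
    out = [[0] * cols for _ in range(rows)]
    for m in range(h, rows - h):
        for n in range(h, cols - h):
            if all(img[i][j]
                   for i in range(m - h, m + h + 1)
                   for j in range(n - h, n + h + 1)):
                out[m][n] = 1
    return out


def dilate(img, k):
    """Binary dilation with a k x k square element (k odd)."""
    rows, cols, h = len(img), len(img[0]), k // 2
    out = [[0] * cols for _ in range(rows)]
    for m in range(rows):
        for n in range(cols):
            if img[m][n]:
                for i in range(max(0, m - h), min(rows, m + h + 1)):
                    for j in range(max(0, n - h), min(cols, n + h + 1)):
                        out[i][j] = 1
    return out


def opening(img, k):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(img, k), k)


def centre_of_mass(img):
    """Row and column coordinates of the centre of mass of a binary image."""
    total = sum(sum(row) for row in img)
    if total == 0:
        raise ValueError("image contains no object pixels")
    m_c = sum(m * sum(row) for m, row in enumerate(img)) / total
    n_c = sum(n * v for row in img for n, v in enumerate(row)) / total
    return m_c, n_c


# Toy "hand": a 5 x 5 palm with a one-pixel-thick finger attached.
hand = [[0] * 10 for _ in range(7)]
for m in range(1, 6):
    for n in range(1, 6):
        hand[m][n] = 1
for n in range(6, 9):
    hand[3][n] = 1

opened = opening(hand, 3)
print(centre_of_mass(opened))
```

Because the finger is narrower than the structural element, the opening removes it and leaves only the palm, whose centre of mass is then not biased by the fingers. This is the reason for choosing an element slightly wider than the widest finger.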

The centre of mass of the wrist is necessary to roughly detect the position of the hand relative to the pattern. After this stage, the positions of the individual points of fingers, i.e. V1, V2, V3, V4 and V5, are matched. Matching is performed on the basis of minimization of the distance between the individual points of the pattern and the analysed image. For the points of the pattern V_w1, V_w2, V_w3, V_w4 and V_w5, this is minimization of the criterion J:

| (8) |

where m_vi and m_wvi – coordinates of the points of the analysed image and of the pattern, i ∈ (1,5).

The value of the criterion J is calculated for displacements of the points V1, V2, V3, V4 and V5 in the range of ±100 pixels along the axes of rows and columns. This range is sufficient for finding the minimum value of J. Examples of the obtained values of the criterion J are shown in Figure 4. In this case, displacements of -18 and -3 pixels along the axes of rows and columns respectively were obtained. These values indicate how far the image of the patient needs to be moved so that it better matches the pattern (with respect to the criterion J). The final adjustment of the positions of the individual points of the fingers is performed on the basis of an analogous criterion, which, however, applies to only one vertex V. A block diagram of the proposed algorithm is shown in Figure 5, whereas matching the pattern to the patient's hand is shown in Figure 6. The image L_P*(m,n,λ) corrected in terms of affine transformations is further analysed in terms of the content of melanin and haemoglobin.
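The exhaustive search over displacements in the range of ±100 pixels can be sketched as follows. The exact form of the criterion J is not reproduced here; the sketch assumes, for illustration only, that J is the sum of Euclidean distances between the displaced image points and the pattern points. All coordinates in the example are hypothetical.

```python
from math import hypot


def criterion_j(points, pattern, dm, dn):
    """Assumed criterion: sum of Euclidean distances between the points
    shifted by (dm, dn) and the corresponding pattern points."""
    return sum(hypot(m + dm - mw, n + dn - nw)
               for (m, n), (mw, nw) in zip(points, pattern))


def best_displacement(points, pattern, search=100):
    """Exhaustive search for the shift within +/- `search` pixels that
    minimizes J. Returns (J, dm, dn)."""
    best = None
    for dm in range(-search, search + 1):
        for dn in range(-search, search + 1):
            j = criterion_j(points, pattern, dm, dn)
            if best is None or j < best[0]:
                best = (j, dm, dn)
    return best


# Hypothetical pattern points V_w1..V_w5 as (row, column) pairs.
pattern = [(100, 200), (60, 400), (50, 600), (60, 800), (90, 1000)]
# Image points V1..V5: here simply the pattern moved by +18 rows, +3 columns.
points = [(m + 18, n + 3) for m, n in pattern]

j_min, dm, dn = best_displacement(points, pattern)
print(j_min, dm, dn)
```

The search evaluates 201 × 201 candidate displacements, which is cheap for five points. In this synthetic case the minimum is reached for a displacement of -18 rows and -3 columns, the same kind of result as the example shown in Figure 4.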

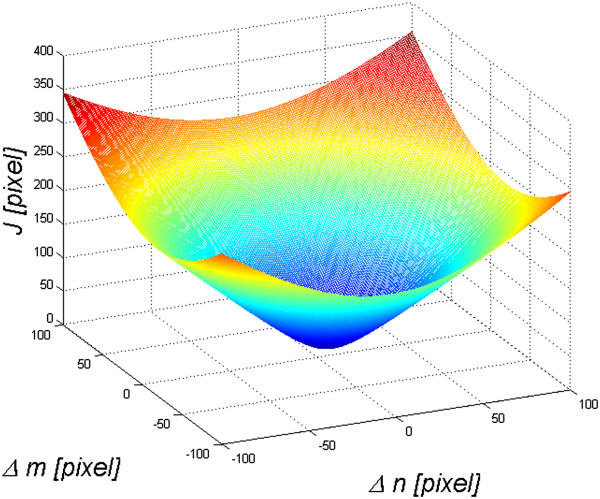

Figure 4.

Graph of changes in the value of the criterion J as a function of Δm and Δn. Depending on the displacements of the points V1 to V5 (Δm and Δn), the value of the criterion J is calculated. One global minimum occurring for the displacements -18 and -3 pixels in the axis of rows and columns respectively is visible. This value provides information about the need to move the image of the patient so that it would better (with respect to the criterion J) match the pattern.

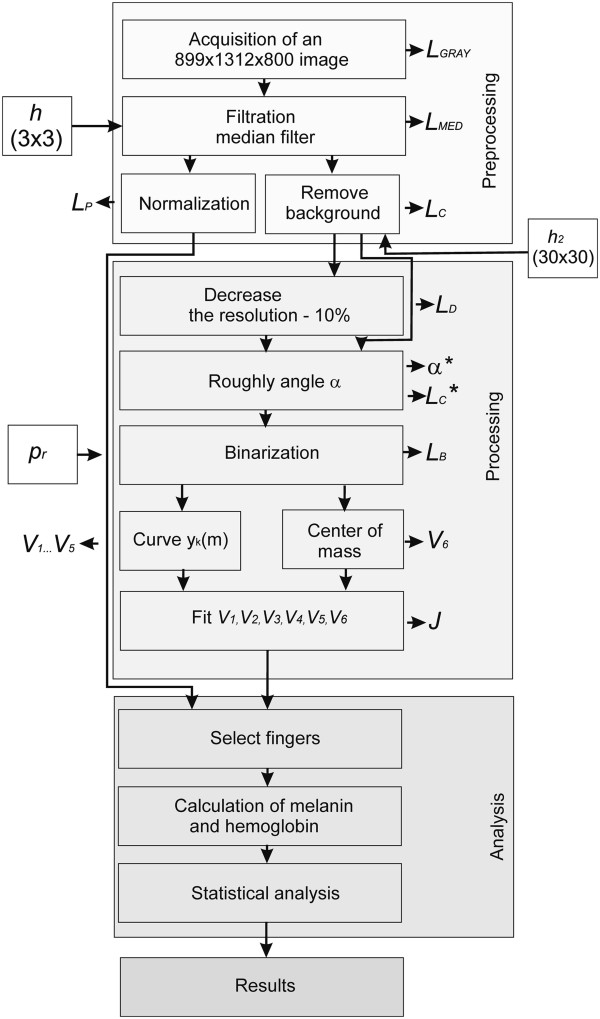

Figure 5.

Block diagram of the proposed algorithm for image analysis and processing. The subsequent algorithm blocks are profiled to the described issue. In the stage of pre-processing, interference is filtered out of the input image after it is reorganized from the file *.dat to the sequence of images. Next, the image is matched to the pattern for the subsequent frames. In the final stage, it is analysed in specified areas.

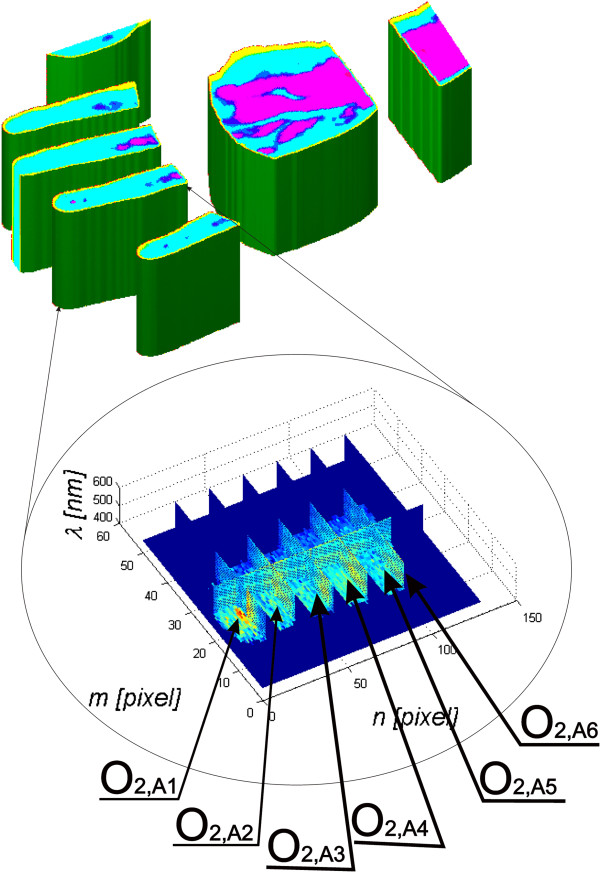

Figure 6.

Contours of melanin and haemoglobin images presented as components R-red and G-green respectively. For melanin, it is the contour formed as the sum of hyperspectral images in the wavelength range λ_E ∈ (450, 600) nm, whereas for haemoglobin this is the range λ_H ∈ (397, 500) nm. Within these ranges, there are 189 images L_P*(m,n,λ) for melanin and 126 images for haemoglobin. The image for melanin and the result of automatic segmentation into characteristic areas, described in the paper, are shown on top and separation into individual objects at the bottom.

The described analysis enables automatic and reproducible determination of the position of individual areas of the hand. This makes it possible to analyse further the brightness for specific wavelengths λ. These wavelengths correspond to the maximum emissivity of melanin and haemoglobin. For melanin, this is the wavelength range λ_E ∈ (450, 600) nm and for haemoglobin it is λ_H ∈ (397, 500) nm, extending into the ultraviolet. Within these ranges, there are 189 images L_P*(m,n,λ) for melanin and 126 images for haemoglobin. Accordingly, the image for melanin L_E(m,n) was determined as:

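$$L_E(m,n) = \sum_{\lambda \in \lambda_E} L_P^{*}(m,n,\lambda) \tag{9}$$

and analogously for the haemoglobin L_H(m,n):

$$L_H(m,n) = \sum_{\lambda \in \lambda_H} L_P^{*}(m,n,\lambda) \tag{10}$$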
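The formation of the melanin and haemoglobin images from the selected bands can be sketched as below. Following the caption of Figure 6, the images are formed here as a pixel-wise sum over the bands in each wavelength range; treating the range limits as inclusive is an assumption, and the wavelengths and pixel values in the example are made up for illustration.

```python
def band_indices(wavelengths, lo_nm, hi_nm):
    """Indices of bands whose wavelength lies in [lo_nm, hi_nm]."""
    return [k for k, w in enumerate(wavelengths) if lo_nm <= w <= hi_nm]


def integrate_bands(cube, indices):
    """Pixel-wise sum of the selected bands of cube[band][row][col]."""
    rows, cols = len(cube[0]), len(cube[0][0])
    return [[sum(cube[k][m][n] for k in indices) for n in range(cols)]
            for m in range(rows)]


# Toy data: six bands, each a 1 x 2 image.
wavelengths = [400, 450, 500, 550, 600, 650]
cube = [[[k + 1, 2 * (k + 1)]] for k in range(len(wavelengths))]

melanin_bands = band_indices(wavelengths, 450, 600)
haemoglobin_bands = band_indices(wavelengths, 397, 500)
L_E = integrate_bands(cube, melanin_bands)
L_H = integrate_bands(cube, haemoglobin_bands)
print(melanin_bands, L_E, haemoglobin_bands, L_H)
```

The two ranges overlap between 450 and 500 nm, so bands in that interval contribute to both images.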

The results obtained after brightness normalization are shown in Figure 6. The red component is the image L_E(m,n), while the green component is the image L_H(m,n). The blue component was extinguished. The images L_E(m,n) and L_H(m,n) are the basis for further analysis of the results. Additionally, owing to the applied matching to the pattern, the division into individual fingers and the wrist is further used - Figures 6 and 7.

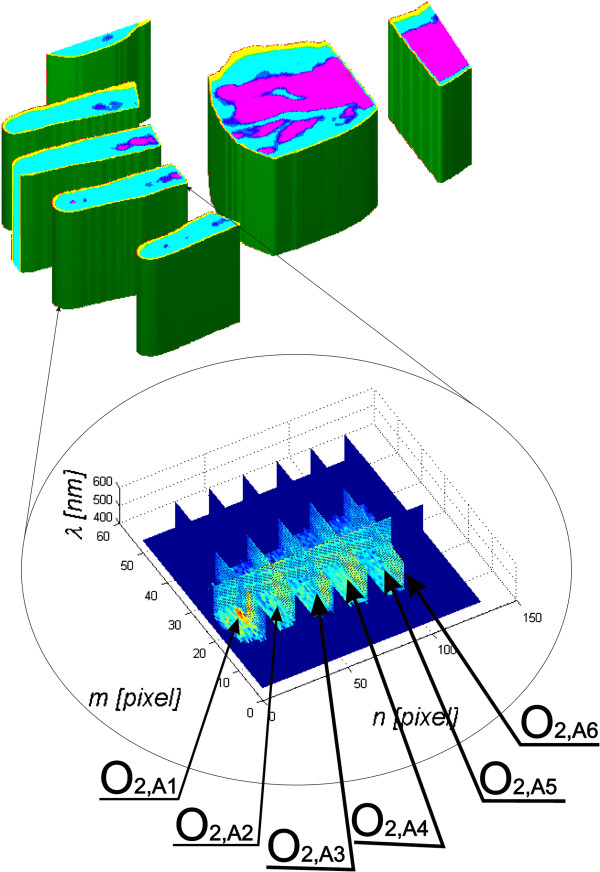

Figure 7.

Result of segmentation for individual wavelengths. The sample finger (V2) was divided into six areas from O_2,A1 to O_2,A6 for the left side and from O_2,B1 to O_2,B6 for the right side. Within these areas, the mean value of melanin and its standard deviation and proportions relative to the other areas are calculated.

Results

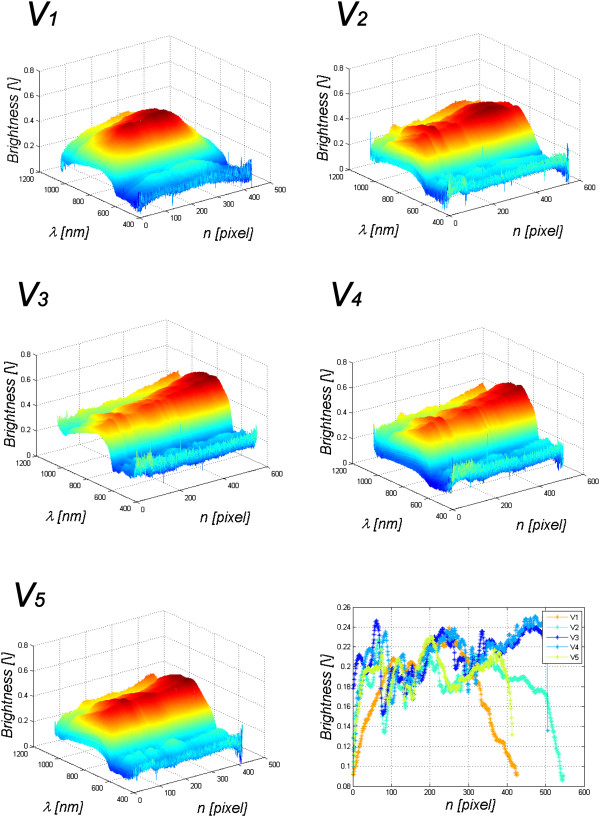

The results of automatic division into the fingers and wrist of the human hand (Figure 6) are further used in the tests. These analyses include automatic determination of the changes in the spectrum absorption for different wavelengths λ ∈ (397, 1030) nm for individual fingers. To this end, along each finger, the mean along its symmetry axis (for subsequent n) for a given wavelength λ was calculated. The obtained results for the sample calculations of melanin are shown in Figure 8. For subsequent fingers, characteristic changes in the intensity for each wavelength λ are visible. The last graph in Figure 8 shows changes in melanin along subsequent fingers. When approaching the wrist, an increase in the intensity by about 10% to 20% can be observed. The division of one finger (vertex V_2) into different areas is shown at the bottom in Figure 7. The finger was divided into 12 areas, 6 on the side “A” and 6 on the side “B” of the finger, that is from O_2,A1 to O_2,A6 for the side A and from O_2,B1 to O_2,B6 for the side B respectively - Figure 7. These areas are automatically scaled depending on the size of the finger and other camera settings which may affect the size of the object. The results of the average brightness intensity and standard deviation of the mean for each area are presented in Table 1. Between the sides A and B, brightness changes are not greater than 1% (O_2,A2, O_2,B2 and O_2,A3, O_2,B3). The standard deviation of the mean is the highest for the areas of distal phalanges, i.e. the areas O_2,A1 and O_2,B1. The situation is similar for haemoglobin - Table 2. The standard deviation of the mean is slightly smaller than in the case of melanin and oscillates around the brightness value of 0.02. Higher brightness values exist for other wavelengths not included in the calculation of melanin or haemoglobin - Figure 8.

Figure 8.

Graphs of changes in the value of spectrum for wavelengths λ for the patient’s subsequent fingers. The values on the axes are successive columns, wavelength λ and brightness for the selected wavelength. The last, sixth graph shows the change of melanin along all five fingers of the patient. The y-value is the mean value of brightness for λ_E ∈ (450, 600) nm. The colours of individual lines correspond to the colours of the fingers shown at the bottom in Figure 6.

Table 1.

The percentage of melanin for the sample finger (vertex V_2) and its standard deviation (STD) of the mean

| | O_2,A1 | O_2,A2 | O_2,A3 | O_2,A4 | O_2,A5 | O_2,A6 | O_2,B1 | O_2,B2 | O_2,B3 | O_2,B4 | O_2,B5 | O_2,B6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Brightness | 0.17 | 0.16 | 0.16 | 0.19 | 0.17 | 0.16 | 0.17 | 0.17 | 0.17 | 0.19 | 0.17 | 0.16 |
| STD | 0.06 | 0.02 | 0.03 | 0.02 | 0.01 | 0.02 | 0.05 | 0.02 | 0.03 | 0.02 | 0.01 | 0.02 |

Table 2.

The percentage of haemoglobin for the sample finger (vertex V_2) and its standard deviation (STD) of the mean

| | O_2,A1 | O_2,A2 | O_2,A3 | O_2,A4 | O_2,A5 | O_2,A6 | O_2,B1 | O_2,B2 | O_2,B3 | O_2,B4 | O_2,B5 | O_2,B6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Brightness | 0.17 | 0.14 | 0.15 | 0.16 | 0.15 | 0.15 | 0.17 | 0.14 | 0.15 | 0.16 | 0.15 | 0.15 |
| STD | 0.04 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 | 0.04 | 0.02 | 0.02 | 0.01 | 0.01 | 0.01 |
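The summary figures can be recomputed directly from the brightness rows of Tables 1 and 2. The short check below only reuses the tabulated values; the variable names are illustrative.

```python
from statistics import mean

# Brightness values from Tables 1 and 2, areas A1..A6 followed by B1..B6.
MELANIN = [0.17, 0.16, 0.16, 0.19, 0.17, 0.16,
           0.17, 0.17, 0.17, 0.19, 0.17, 0.16]
HAEMOGLOBIN = [0.17, 0.14, 0.15, 0.16, 0.15, 0.15,
               0.17, 0.14, 0.15, 0.16, 0.15, 0.15]


def side_differences(values):
    """Absolute brightness difference between matching areas on sides A and B."""
    return [round(abs(a - b), 2) for a, b in zip(values[:6], values[6:])]


melanin_mean = round(mean(MELANIN), 2)
haemoglobin_mean = round(mean(HAEMOGLOBIN), 2)
melanin_diff = side_differences(MELANIN)
haemoglobin_diff = side_differences(HAEMOGLOBIN)
print(melanin_mean, haemoglobin_mean, melanin_diff, haemoglobin_diff)
```

The means over the twelve areas are 0.17 for melanin and 0.15 for haemoglobin, which appear to correspond to the 17% and 15% quoted in the abstract. The largest difference between matching areas on the sides A and B is 0.01, found for the second and third areas in the melanin table.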

Comparison with other authors’ results

The known results and methods presented by other authors can be divided into:

• Issues related to hyperspectral imaging involving image analysis and processing and

• Methods of analysis and processing of hand images that can be used in hyperspectral imaging.

In the first case, hyperspectral imaging was used, inter alia, to analyse the saturation of HbO2 in people of different races (Zuzak [14,15]). Sampling from the hyposthenia region of African-American controls, the area within the square on the image, the percentage of skin HbO2 was 77.5 ± 0.2%, which is similar to the skin HbO2 percentage of 78.2 ± 0.2% in healthy Caucasian subjects. In contrast, the percentage of skin HbO2 in patients was significantly smaller and amounted to 61.0 ± 0.2% (p < 0.001). Percent renal parenchymal oxyhaemoglobin was also analysed by Liu [24]. In [13], there are also other results, but they relate only to the analysis of the whole ROI (region of interest) marked manually by an operator. The use of hyperspectral imaging to assess the time of bruise formation is also interesting – Stam [25]. The inaccuracy found is 2.3% for fresh bruises and 3 to 24% for bruises up to 3 days old. According to the authors of [25], colour inhomogeneity of bruises can be used to determine their age. The experimental results presented in [13], in turn, show that the hyperspectral based method has the potential to identify the spinal nerve more accurately than the traditional method, as the new method contains both the spectral and spatial information of nerve sections. There are many other areas of medicine where manual or semi-automatic marking of regions of interest is used - analysis of vibrational Filik J. [26], laparoscopic digital light processing – Olweny EO. [27], blood stains at the crime scene – Edelman G. [28], prostate cancer detection – Akbari H. [29], histopathological examination of excised tissue - Vasefi F. [30], diabetic foot ulcer - Yudovsky D. [31], cancer detection - Akbari H. [32], and others.

In the other case, these are morphological operations used in the classification of various types of artefacts visible in the image [1,2,5,6]. Other classification methods are also used, such as SVM (support vector machines) [4], the Gauss-Markov model [7] or the wavelet method [9]. In the study of Dicker et al. [16], a spectral library was generated with 12 unique spectra, which was used to classify specimens where sample preparation was varied. Using a CT of 0.99 left large areas of the tissues unclassified. Lowering the minimum correlation coefficient to 0.99 enabled all the samples to achieve >85% classification. The results referred mainly to the analysis of a hyperspectral image by analysing the histograms obtained. Liu Z. et al. [12] propose a novel tongue segmentation method that uses hyperspectral images and the SVM. The presented segmentation of the tongue makes it possible to obtain reproducible and quantitative results. Benediktsson J. et al. [2] present results for a sequential use of morphological opening and closing for an increasing size of the structural element. This methodology is similar to the use of conditional erosion and dilation as in [3]. In turn, Rellier G. et al. [7] propose a probabilistic vector texture model using a Gauss-Markov random field (MRF). The MRF parameters allow the characterization of different hyperspectral textures.

In conclusion, the well-known studies on applying image analysis and processing methods to hyperspectral images are dominated by morphological analysis. Segmentation into specific areas refers to simple objects such as the tongue. In each case, the methods described are profiled to a particular application. Therefore, the approach to the analysis of the hand proposed in this paper extends these methods into a new area with a new dedicated analysis methodology.

Summary

The paper presents a method for the analysis of melanin and haemoglobin in the area of the human hand. The characteristics of the described method are as follows:

• Repeatability of measurements owing to the limited participation of the operator in the study,

• Fully automatic operation of the algorithm - the arguments for each algorithm function are set once when the algorithm is first started, as they only depend on the type of the multispectral camera used,

• Possibility of quantitative (not qualitative) assessment of the amount of melanin or haemoglobin in any area of the hand,

• Possibility of automatic comparison of the results for any area of the finger with other areas or with another examination of the same person in therapy/disease monitoring,

• The analysis time for a single image in a sequence does not exceed 100 ms when using Intel Core i5 CPU M460 @2.5 GHz 4 GB RAM.

The discussed methodology of hyperspectral image analysis and processing does not fully cover the issue. The presented algorithm for image analysis and processing can also be created by using the techniques from spectral methods [33,34], optical image analysis [35], microscopic analyses [36,37] and others [38-41]. In future work, the authors intend to analyse reproducibility of results for a larger number of patients using different types of hyperspectral cameras operating in the same spectral range. The impact of lighting and calibration method is equally interesting, which will be the subject of the authors’ future work.

Abbreviations

THV: Thermal camera; STD: Standard deviation; SVM: Support vector machines; ROI: Region of interest.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

RK suggested the algorithm for image analysis and processing, implemented it and analysed the images. SW, ZW, SK, BBF performed the acquisition of the hyperspectral images and consulted the obtained results. All authors have read and approved the final manuscript.

Contributor Information

Robert Koprowski, Email: robert.koprowski@us.edu.pl.

Sławomir Wilczyński, Email: swilczynski@sum.edu.pl.

Zygmunt Wróbel, Email: zygmunt.wrobel@us.edu.pl.

Sławomir Kasperczyk, Email: kaslav@mp.pl.

Barbara Błońska-Fajfrowska, Email: bbf@sum.edu.pl.

Acknowledgements

This work was financially supported by the Medical University of Silesia in Katowice (Grant No. KNW – 2 – 019/N/3/N).

The authors wish to acknowledge the Katowice School of Economics for making the measurements possible, and Specim Company and LOT-Quantum Design Company for providing the hyperspectral camera.

References

- Plaza A, Martinez P, Plaza J, Perez R. Dimensionality reduction and classification of hyperspectral image data using sequences of extended morphological transformations. IEEE Trans Geosci Rem Sens. 2005;43(3):466–479. [Google Scholar]

- Benediktsson JA, Palmason JA, Sveinsson JR. Classification of hyperspectral data from urban areas based on extended morphological profiles. IEEE Trans Geosci Rem Sens. 2005;43(3):480–491. [Google Scholar]

- Koprowski R, Teper S, Wrobel Z, Wylegala E. Automatic analysis of selected choroidal diseases in OCT images of the eye fundus. Biomed Eng Online. 2013;12:117. doi: 10.1186/1475-925X-12-117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fauvel M, Benediktsson JA, Chanussot J, Sveinsson JR. Spectral and spatial classification of hyperspectral data using SVMs and morphological profiles. IEEE Trans Geosci Rem Sens. 2008;46(11):3804–3814. [Google Scholar]

- Noyel G, Angulo J, Jeulin D. Morphological segmentation of hyperspectral images. Image Anal Stereol. 2007;26(3):101–109. doi: 10.5566/ias.v26.p101-109. [DOI] [Google Scholar]

- Dalla MM, Villa A, Benediktsson JA, Chanussot J, Bruzzone L. Classification of hyperspectral images by using extended morphological attribute profiles and independent component analysis. IEEE Geosci Remote Sens Lett. 2011;8(3):542–546. [Google Scholar]

- Rellier G, Descombes X, Falzon F, Zerubia J. Texture feature analysis using a Gauss-Markov model in hyperspectral image classification. IEEE Trans Geosci Remote Sens. 2004;42(7):1543–1551. [Google Scholar]

- Camps-Valls G, Gomez-Chova L, Munoz-Mari J, Vila-Frances J, Calpe-Maravilla J. Composite kernels for hyperspectral image classification. IEEE Geosci Remote Sens Lett. 2006;3(1):93–97. doi: 10.1109/LGRS.2005.857031. [DOI] [Google Scholar]

- Zhang X, Younan NH, O'hara CG. Wavelet domain statistical hyperspectral soil texture classification. IEEE Trans Geosci Remote Sens. 2005;43(3):615–618. [Google Scholar]

- Pan Z, Member S, Healey G, Member S, Prasad M, Tromberg B. Face recognition in hyperspectral images. IEEE Trans Pattern Anal Mach Intell. 2003;25(12):1552–1560. doi: 10.1109/TPAMI.2003.1251148. [DOI] [Google Scholar]

- Stamatas GN, Balas CJ, Kollias N. Hyperspectral image acquisition and analysis of skin. Biomedical Optics 2003. International Society for Optics and Photonics. 2003. pp. 77–82.

- Liu Z, Yan JQ, Zhang D, Li QL. Automated tongue segmentation in hyperspectral images for medicine. Appl Optic. 2007;46(34):8328–8334. doi: 10.1364/AO.46.008328. [DOI] [PubMed] [Google Scholar]

- Li Q, Chen Z, He X, Wang Y, Liu H, Xu Q. Automatic identification and quantitative morphometry of unstained spinal nerve using molecular hyperspectral imaging technology. Neurochem Int. 2012;61(8):1375–1384. doi: 10.1016/j.neuint.2012.09.018. [DOI] [PubMed] [Google Scholar]

- Zuzak KJ, Francis RP, Wehner EF, Litorja M, Cadeddu JA, Livingston EH. Active DLP hyperspectral illumination: a noninvasive, in vivo, system characterization visualizing tissue oxygenation at near video rates. Anal Chem. 2011;83(19):7424–7430. doi: 10.1021/ac201467v. [DOI] [PubMed] [Google Scholar]

- Zuzak KJ, Gladwin MT, Cannon RO, Levin IW. Imaging haemoglobin oxygen saturation in sickle cell disease patients using noninvasive visible reflectance hyperspectral techniques: effects of nitric oxide. Am J Physiol Heart Circ Physiol. 2003;285(3):H1183–H1189. doi: 10.1152/ajpheart.00243.2003. [DOI] [PubMed] [Google Scholar]

- Dicker DT, Lerner J, Van Belle P, Barth SF, Guerry D, Herlyn M, Elder DE, El-Deiry WS. Differentiation of normal skin and melanoma using high resolution hyperspectral imaging. Cancer Biol Ther. 2006;5(8):1033–1038. doi: 10.4161/cbt.5.8.3261.

- Samarov DV, Clarke ML, Lee JY, Allen DW, Litorja M, Hwang J. Algorithm validation using multicolor phantoms. Biomed Opt Express. 2012;3(6):1300–1311. doi: 10.1364/BOE.3.001300.

- Rosas JG, Blanco M. A criterion for assessing homogeneity distribution in hyperspectral images. Part 1: homogeneity index bases and blending processes. J Pharm Biomed Anal. 2012;70:680–690. doi: 10.1016/j.jpba.2012.06.036.

- Rosas JG, Blanco M. A criterion for assessing homogeneity distribution in hyperspectral images. Part 2: application of homogeneity indices to solid pharmaceutical dosage forms. J Pharm Biomed Anal. 2012;70:691–699. doi: 10.1016/j.jpba.2012.06.037.

- Koprowski R, Wrobel Z. Identification of layers in a tomographic image of an eye based on the Canny edge detection. Inf Technol Biomed Adv Intell Soft Comput. 2008;47:232–239. doi: 10.1007/978-3-540-68168-7_26.

- Koprowski R, Teper S, Weglarz B, Wylęgała E, Krejca M, Wróbel Z. Fully automatic algorithm for the analysis of vessels in the angiographic image of the eye fundus. Biomed Eng Online. 2012;11:35. doi: 10.1186/1475-925X-11-35.

- Koprowski R, Wróbel Z. Layers recognition in tomographic eye image based on random contour analysis. Computer recognition systems 3. Adv Intell Soft Comput. 2009;57:471–478. doi: 10.1007/978-3-540-93905-4_56.

- Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9(1):62–66.

- Liu ZW, Faddegon S, Olweny EO, Best SL, Jackson N, Raj GV, Zuzak KJ, Cadeddu JA. Renal oxygenation during partial nephrectomy: a comparison between artery-only occlusion versus artery and vein occlusion. J Endourol. 2013;27(4):470–474. doi: 10.1089/end.2012.0466.

- Stam B, van Gemert MJ, van Leeuwen TG, Teeuw AH, van der Wal AC, Aalders MC. Can color inhomogeneity of bruises be used to establish their age? J Biophotonics. 2011;4(10):759–767. doi: 10.1002/jbio.201100021.

- Filik J, Rutter AV, Sulé-Suso J, Cinque G. Morphological analysis of vibrational hyperspectral imaging data. Analyst. 2012;137(24):5723–5729. doi: 10.1039/c2an35914f.

- Olweny EO, Faddegon S, Best SL, Jackson N, Wehner EF, Tan YK, Zuzak KJ, Cadeddu JA. Renal oxygenation during robot-assisted laparoscopic partial nephrectomy: characterization using laparoscopic digital light processing hyperspectral imaging. J Endourol. 2013;27(3):265–269. doi: 10.1089/end.2012.0207.

- Edelman G, van Leeuwen TG, Aalders MC. Hyperspectral imaging for the age estimation of blood stains at the crime scene. Forensic Sci Int. 2012;223(1–3):72–77. doi: 10.1016/j.forsciint.2012.08.003.

- Akbari H, Halig LV, Schuster DM, Osunkoya A, Master V, Nieh PT, Chen GZ, Fei B. Hyperspectral imaging and quantitative analysis for prostate cancer detection. J Biomed Opt. 2012;17(7):076005. doi: 10.1117/1.JBO.17.7.076005.

- Vasefi F, Najiminaini M, Ng E, Chamson-Reig A, Kaminska B, Brackstone M, Carson J. Transillumination hyperspectral imaging for histopathological examination of excised tissue. J Biomed Opt. 2011;16(8):086014. doi: 10.1117/1.3623410.

- Yudovsky D, Nouvong A, Schomacker K, Pilon L. Assessing diabetic foot ulcer development risk with hyperspectral tissue oximetry. J Biomed Opt. 2011;16(2):026009. doi: 10.1117/1.3535592.

- Akbari H, Uto K, Kosugi Y, Kojima K, Tanaka N. Cancer detection using infrared hyperspectral imaging. Cancer Sci. 2011;102(4):852–857. doi: 10.1111/j.1349-7006.2011.01849.x.

- Porwik P. Efficient spectral method of identification of linear Boolean function. Control Cybern. 2004;33(4):663–678.

- Sonka M, Fitzpatrick JM. Medical Image Processing and Analysis. Bellingham: SPIE; 2000. (Handbook of Medical Imaging).

- Siedlecki D, Nowak J, Zajac M. Placement of a crystalline lens and intraocular lens: retinal image quality. J Biomed Opt. 2006;11(5):054012. doi: 10.1117/1.2358959.

- Korzynska A, Hoppe A, Strojny W, Wertheim D. Investigation of a combined texture and contour method for segmentation of light microscopy cell images. In: Proceedings of the Second IASTED International Conference on Biomedical Engineering. Anaheim, Calgary, Zurich: ACTA Press; 2004. pp. 234–239.

- Korzynska A, Iwanowski M. Multistage morphological segmentation of bright-field and fluorescent microscopy images. Opt Electron Rev. 2012;20(2):87–99.

- Castro A, Siedlecki D, Borja D, Uhlhorn S, Parel JM, Manns F, Marcos S. Age-dependent variation of the gradient index profile in human crystalline lenses. J Mod Opt. 2011;58(19–20):1781–1787. doi: 10.1080/09500340.2011.565888.

- Korus P, Dziech A. Efficient method for content reconstruction with self-embedding. IEEE Trans Image Process. 2013;22(3):1134–1147. doi: 10.1109/TIP.2012.2227769.

- Tadeusiewicz R, Ogiela MR. Automatic understanding of medical images: new achievements in syntactic analysis of selected medical images. Biocybern Biomed Eng. 2002;22(4):17–29.

- Edelman GJ, Gaston E, van Leeuwen TG, Cullen PJ, Aalders MC. Hyperspectral imaging for non-contact analysis of forensic traces. Forensic Sci Int. 2012;223(1–3):28–39. doi: 10.1016/j.forsciint.2012.09.012.