Abstract

In resurgence, an extinguished instrumental behavior (R1) recovers when a behavior that replaced it (R2) is also extinguished. The phenomenon may be relevant to understanding relapse that can occur after the termination of “contingency management” treatments, in which unwanted behavior (e.g., substance abuse) is reduced by reinforcing alternative behavior. When reinforcement is discontinued, the unwanted behavior might resurge. However, unlike most resurgence experiments, contingency management treatments also introduce a negative contingency in which reinforcers are not delivered unless the client has abstained from the unwanted behavior. Two experiments with rats therefore examined the effects of adding a negative “abstinence” contingency to the resurgence design. During response elimination, R2 was not reinforced unless R1 had not been emitted for a minimum period of time (45, 90, or 135 s). In both experiments, adding such a contingency to simple R1 extinction reduced, but did not eliminate, resurgence. Experiment 2 found the same effect in a yoked group that could earn reinforcers for R2 at the same points in time, but without the requirement to abstain from R1. Thus, the negative contingency per se did not contribute. Results suggest that the contingency reduced resurgence by making reinforcers more difficult to earn and more widely spaced in time. This could have allowed the animal to learn that R1 was extinguished in the “context” of infrequent reinforcement—a context more like that of resurgence testing. The results are thus consistent with a contextual (renewal) account of resurgence. The method might provide a better model of relapse after termination of a contingency management treatment.

Keywords: Resurgence, renewal, negative contingency, relapse, contingency management

In resurgence, the performance of an extinguished instrumental behavior can return when an alternative behavior is put on extinction. In the animal laboratory, resurgence experiments involve three phases. In the first, a target instrumental response (R1, e.g., pressing a lever) is reinforced. In the second phase, an alternative behavior (R2, e.g., pressing a second lever) is reinforced while the first response is extinguished (no longer reinforced). By the end of Phase 2, R2 responding replaces R1 responding. However, in the final phase, when reinforcement for R2 is also discontinued, R1 responding can recover or “resurge” (e.g., Leitenberg, Rawson, & Bath, 1970; Sweeney & Shahan, 2013a,b; Winterbauer & Bouton, 2010, 2012; Winterbauer, Lucke, & Bouton, 2013). Resurgence is like several other post-extinction “relapse” effects, including renewal, spontaneous recovery, and reinstatement, which all suggest that extinguished instrumental responding can return as a consequence of any of several experimental manipulations (e.g., Bouton, 2004; Bouton, Winterbauer, & Vurbic, 2012; Bouton, Winterbauer, & Todd, 2012). Like the other forms of relapse, resurgence suggests that extinction is not erasure, and is instead a form of behavioral inhibition that leaves the original behavior susceptible to lapse and relapse.

Several explanations have been offered for why an extinguished instrumental behavior can resurge. Winterbauer and Bouton (2010; see also Bouton & Swartzentruber, 1991) suggested that resurgence is an example of the well-known renewal effect, in which extinguished responding recovers when the context is changed (e.g., Bouton, Todd, Vurbic, & Winterbauer, 2011). Extinction of either Pavlovian or instrumental learning is highly context-specific (e.g., Bouton, 2004; Bouton et al., 2012). According to this view, omitting reinforcers during the final test changes the context from the one that prevailed in extinction (when R2 was being reinforced), and responding therefore renews. The idea is consistent with evidence that a wide variety of stimuli, including the presence or absence of reinforcers, can function as contextual cues (e.g., Bouton, Rosengard, Achenbach, Peck, & Brooks, 1993; see Bouton, 1991, 2002, for discussion). Winterbauer and Bouton (2010) suggested that resurgence may be an “ABC” form of the renewal effect (e.g., Bouton et al., 2011; Todd, 2013; Todd, Winterbauer, & Bouton, 2012), in which R1 conditioning, extinction, and testing each occur in different contexts (A, B, and C, respectively). Other explanations of resurgence will be discussed in the General Discussion.

One finding that is consistent with the contextual view of resurgence is that resurgence can be reduced if the reinforcement schedule for R2 in Phase 2 is “thinned” so that the reinforcers are presented less and less frequently toward the end of the response elimination phase (Winterbauer & Bouton, 2012; see also Sweeney & Shahan, 2013b). According to the contextual view, the thinning procedure allows the animal to learn that R1 is extinguished in the context of infrequent reinforcers, a context that is like the one that is present during resurgence testing. Also consistent with this idea, resurgence is weak if a very lean schedule of reinforcement for R2 is used throughout Phase 2 (Leitenberg, Rawson, & Mulick, 1975; Sweeney & Shahan, 2013b). In either case, the animal is given the opportunity to learn not to perform R1 in the context of long intervals between reinforcers.

The starting point for the present research was the idea that resurgence may be relevant to understanding relapse after “Contingency Management” (CM) treatments that are used to eliminate undesirable behavior in the clinic (e.g., Higgins, Silverman, & Heil, 2008). In CM, patients earn reinforcers (e.g., money, vouchers, prizes, or lottery tickets) contingent on suppressing unwanted behaviors (e.g., drug taking or overeating) and performing more desirable ones (Higgins, Heil, & Lussier, 2004; Fisher, Green, Calvert, & Glasgow, 2011). Research suggests that CM is effective in treating several types of substance use disorders (e.g., cocaine, opiate, marijuana, tobacco, and alcohol use), both alone and in conjunction with other behavioral and pharmacological treatments (Higgins et al., 2008). During treatment of substance use disorders, reinforcement is typically provided only after abstinence is confirmed by a biological assay (e.g., urine analysis). In contrast, when an assay indicates recent drug use, reinforcement is typically withheld. CM may be an especially effective therapy for individuals who require immediate cessation, or cessation for a predetermined length of time during which reinforcement can be made available (e.g., during pregnancy or after a recent heart attack; see Fisher et al., 2011; Heil, Yoon, & Higgins, 2008). Evidence regarding its effectiveness in promoting abstinence after the conclusion of treatment, when reinforcers are no longer presented, is more mixed (Higgins et al., 2008).

Several authors have noted the possible relevance of resurgence to understanding potential relapse after a CM treatment (e.g., Bouton et al., 2012; Shahan & Sweeney, 2011; Sweeney & Shahan, 2013a,b; Winterbauer et al., 2013). The phenomenon suggests that the original behavior might resurge when reinforcement for desirable behavior is stopped. However, it is worth noting that the resurgence paradigm was never designed as a model of CM. Consequently, there are several differences between the contingencies introduced in CM and in the response elimination phase of a typical resurgence experiment. For one thing, the unwanted behaviors that are reduced by CM are never put on extinction; a return to drug taking (for example) would always be reinforced. Presumably, this would make relapse even stronger when reinforcement delivered during CM is discontinued. Second, the reinforcers used in CM are different from those that reinforced the original learning (e.g., vouchers vs. alcohol or cocaine), whereas the first- and second-phase reinforcers in resurgence experiments are usually the same. Exceptions include resurgence experiments in which alcohol-reinforced (Podlesnik, Jimenez-Gomez, & Shahan, 2006) and cocaine-reinforced (Quick, Pyszczynski, Colston, & Shahan, 2011) responses resurged in animals after they were replaced by responses reinforced by food. It is worth noting that Winterbauer et al. (2013, Experiment 3) also found no difference in the amount of resurgence when sucrose- or grain-based pellet reinforcers were either the same between phases or were switched from grain to sucrose or sucrose to grain.

A third difference between CM and the usual resurgence paradigm is that CM treatments impose a negative contingency such that the reinforcer is not delivered unless there has been abstinence from the undesired behavior. In contrast, in most resurgence experiments, there is no such negative contingency; reinforcers are typically earned for performing the second behavior (R2) regardless of whether or not the animal has recently performed the first response (R1). There are some exceptions to this rule. Several experiments have demonstrated resurgence when a modest change-over delay was imposed during response elimination such that reinforcers could not be earned on R2 unless 3 s had also elapsed since the last R1 (e.g., da Silva, Maxwell, & Lattal, 2008; Doughty, da Silva, & Lattal, 2007; Lieving & Lattal, 2003). Other experiments have demonstrated resurgence after a differential-reinforcement-of-other-behavior (DRO) contingency in which reinforcers were presented if R1 had not been made in the previous 20 s (da Silva et al., 2008; Doughty et al., 2007; Pacitti & Smith, 1977). However, in contrast to the typical resurgence procedure, there was no joint requirement to make an explicit alternative R2 response. And although resurgence can occur after DRO, comparisons of DRO's effectiveness against other response-elimination procedures have produced mixed results. Whereas Pacitti and Smith (1977) found that DRO yielded less response recovery than a procedure in which R1 was merely extinguished while an R2 was reinforced, Doughty et al. (2007, Experiment 1) reported evidence of the opposite result.

It is worth noting that a negative contingency between a response and a reinforcer also does not abolish the related renewal effect. Nakajima, Urushihara, and Masaki (2002) found that when rats were trained to lever press for food in Context A and then given omission training in Context B (where lever presses now delayed food pellets that were otherwise presented freely), lever pressing was renewed when the rats were returned to Context A. Kearns and Weiss (2007) also reported an ABA renewal effect in rats when a response reinforced by cocaine administration in Context A was eliminated by withholding cocaine and giving the response a new omission contingency with food in Context B. The fact that renewal and resurgence can still occur after a negative contingency is consistent with many other results reported in retroactive interference paradigms, in which organisms learn something in a second phase that conflicts with what was learned in a preceding phase (e.g., extinction, counterconditioning, discrimination reversal learning). In such paradigms, learning that occurs in the second phase does not destroy what was learned in the first, which remains available for expression in performance after a number of manipulations of context and time (Bouton, 1993).

The present experiments were designed to provide a more detailed analysis of resurgence after adding a negative contingency to a traditional resurgence procedure (e.g., Winterbauer & Bouton, 2010, 2012). In experimental groups, response elimination involved not only extinction of R1, but a new contingency in which R2 was not reinforced unless there had been a minimum interval (45 s, 90 s, or 135 s) since the last R1. The fact that an alternative response, R2, was reinforced contingent on no R1 makes this new procedure similar to CM treatments in which reinforcers are jointly contingent on both abstinence and performance of another behavior such as entering data into a computer in a work setting (e.g., DeFulio, Donlin, Wong, & Silverman, 2009). The present experiments asked whether adding an “abstinence” contingency reduced resurgence when R2 was no longer reinforced during a final test. The results of Experiment 1 suggested that adding a negative contingency to extinction reduces, but does not eliminate, resurgence of R1. Experiment 2 replicated the result and provided further insight into why resurgence was reduced. Instead of generating more “unlearning,” the negative contingency initially made it more difficult to earn reinforcers on R2 and therefore allowed the rats to learn to withhold R1 responding in a “context” provided by long intervals between reinforcers. Because that context was similar to the context that prevailed during final resurgence testing, extinction of R1 transferred better to the resurgence test.

Experiment 1

In the first experiment, four groups of rats first learned to press a lever (R1) on a variable-interval (VI) 30-s schedule of reinforcement. Then, in the response elimination phase, a control group (Group Extinction) received a standard treatment in which R1 was extinguished while presses on a second lever (R2) were reinforced on a VI 10-s schedule. In the final test, when R2 was then extinguished, we expected responding on R1 to resurge. In three other groups, extinction of R1 was supplemented with a negative contingency. For Group 45-s Negative Contingency, once a reinforcer became available on the VI 10-s schedule, an R2 response was reinforced only if at least 45 s had elapsed since the last R1 response (after four initial sessions with a 15-s requirement). Groups 90-s Negative Contingency and 135-s Negative Contingency received similar treatments, except that the minimum interval since the last R1 response was 90 s or 135 s, respectively. All groups eventually learned to stop pressing R1 and to press R2 at a high rate. The question was whether the additional “abstinence” contingency would reduce resurgence relative to Group Extinction when R2 was finally put on extinction.

Method

Subjects

The subjects were 32 female Wistar rats obtained from Charles River, Inc. (St. Constant, Quebec). The rats were approximately 85–95 days old at the start of the experiment and were individually housed in suspended stainless steel cages in a room maintained on a 16:8-h light:dark cycle. At the beginning of the experiment, all rats were food deprived to 80% of their free-feeding weight and maintained at that level throughout the experiment with a single feeding following each day's session.

Apparatus

Conditioning proceeded in two sets of four standard conditioning boxes (Med Associates, St. Albans, VT; model ENV-008-VP) that were housed in different rooms of the laboratory. Boxes from both sets measured 31.75 × 24.13 × 29.21 cm (l × w × h) and were housed in sound-attenuation chambers. The front and back walls were aluminum; the sidewalls and ceiling were clear acrylic plastic. There was a 5.08 × 5.08 cm recessed food cup centered in the front wall near floor level. Two 4.8-cm stainless steel operant levers (Med Associates model ENV-112CM) were located to the left and to the right of the food cup, 6.2 cm above the floor. Ventilation fans provided background noise of 60 dB, and illumination was provided throughout the experiment by two 7.5-W incandescent bulbs mounted on the ceiling of the sound-attenuation chamber. In one set of boxes, the floor consisted of 0.48-cm diameter stainless steel grids spaced 3.81 cm apart and mounted parallel to the front wall. The ceiling and a sidewall had black horizontal stripes (3.81 cm wide). In the other set of boxes (also model ENV-008-VP), the floor consisted of alternating stainless steel grids with different diameters (0.48 and 1.27 cm), spaced 1.59 cm apart. The ceiling and left sidewall were covered with dark dots (1.9 cm in diameter). The apparatus was controlled by computer equipment located in an adjacent room. Although the two sets of boxes can provide discriminably different contexts, they were not used in that capacity here. Food reward consisted of 45-mg MLab Rodent Tablets (TestDiet, Richmond, IN).

Procedure

All experimental sessions were 30 min in duration.

Magazine Training

On the first day, each rat was assigned to a box and then received a single session in which free pellets were delivered on average every 30 s. The levers were retracted and unavailable during this session.

R1 conditioning (Phase 1)

On each of the next 12 days, rats received one session in which R1 presses resulted in pellet delivery every 30 s on average (a VI 30-s reinforcement schedule). The schedule was programmed by initiating pellet availability in a given second with a 1 in 30 probability. A pellet then remained available until the next lever press, when it was delivered and the schedule mechanism was restarted. All sessions began with a 2-min delay in which the levers were retracted from the chamber. Following the delay, either the right lever or left lever (counterbalanced) was inserted. No special response shaping was necessary; early in training, when inter-response intervals were long, the VI 30-s schedule allowed pellets at a frequency that was sufficient to allow the rat to learn to respond. Sessions ended after 30 min, when the lever was retracted again.
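To make the schedule logic concrete, the Python sketch below simulates a random-interval schedule of the kind described above: in each 1-s tick a pellet becomes available with probability 1/30, and an available pellet is held until the next press, which delivers it and restarts the mechanism. The class and function names, the 1-s tick loop, and the simulated “rat” that presses with a fixed per-second probability are illustrative assumptions, not the laboratory's actual control program.

```python
import random


class RandomIntervalSchedule:
    """Random-interval schedule: each 1-s tick arms a pellet with probability
    1/mean_interval_s; an armed pellet is held until the next lever press."""

    def __init__(self, mean_interval_s: float, rng: random.Random) -> None:
        self.p_arm = 1.0 / mean_interval_s
        self.rng = rng
        self.armed = False

    def tick(self) -> None:
        # No new pellet is set up while one is already waiting to be collected.
        if not self.armed and self.rng.random() < self.p_arm:
            self.armed = True

    def press(self) -> bool:
        # Deliver the held pellet (if any) and restart the arming process.
        if self.armed:
            self.armed = False
            return True
        return False


def simulate_session(mean_interval_s: float, press_prob_per_s: float,
                     session_s: int = 1800, seed: int = 0) -> int:
    """Pellets earned by a hypothetical rat that presses with a fixed
    probability in each second of lever availability."""
    rng = random.Random(seed)
    schedule = RandomIntervalSchedule(mean_interval_s, rng)
    pellets = 0
    for _ in range(session_s):
        schedule.tick()
        if rng.random() < press_prob_per_s and schedule.press():
            pellets += 1
    return pellets


if __name__ == "__main__":
    print(simulate_session(30, 1.0))  # near 1800 / 30 = 60 on average
    print(simulate_session(30, 0.1))  # slower pressing: fewer pellets, each delayed until the next press
```

With one press every second, the count is on average about 60 pellets per 30 min, the nominal VI 30-s rate. Because an armed pellet waits for the next press, a slowly responding rat still collects pellets at a useful rate, which is consistent with the observation that no special shaping was needed.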

Response elimination (Phase 2)

On each of the next eight days, the rats received a single session that began with insertion of both the right and left levers after the usual 2-min delay following placement in the chambers. Rats were randomly assigned to one of four groups (n = 8), with the restriction that individual boxes and time of session were equally represented among groups. For all groups, R1 presses were recorded but never reinforced throughout the phase. In Group Extinction, R2 presses were reinforced on a VI 10-s schedule programmed in a manner analogous to the VI 30-s schedule in Phase 1. For the remaining three groups, presses on R2 were also reinforced on a VI 10-s schedule, provided the rat had not pressed R1 for a minimum interval. During Days 1-4, the minimum interval for all negative contingency groups was 15 s. During Days 5-8, the groups received differential treatments: Rats in the 45-s Negative Contingency group were reinforced for R2 responses on the VI 10-s schedule if at least 45 s had elapsed since the last R1 response; rats in the 90-s Negative Contingency group were similarly reinforced for R2 if at least 90 s had elapsed since the last R1 response; and rats in the 135-s Negative Contingency group were reinforced for R2 if at least 135 s had elapsed since the last R1 response. All sessions ended with retraction of the levers at the end of 30 min.
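The negative contingency adds a single check to the VI 10-s schedule: an armed pellet is delivered for an R2 press only if enough time has passed since the last R1. The Python sketch below expresses that rule under stated assumptions. The function names are hypothetical, the convention of timing from lever insertion when no R1 has yet occurred is an assumption, and because the text does not say whether a withheld pellet stays armed for a later R2 press, the sketch leaves that bookkeeping to the caller.

```python
def abstinence_requirement_s(day: int, terminal_s: float) -> float:
    """Minimum R1-free interval (s) for a negative contingency group on a Phase 2 day:
    15 s on Days 1-4, then the group's terminal value (45, 90, or 135 s) on Days 5-8."""
    if not 1 <= day <= 8:
        raise ValueError("Phase 2 lasted eight days")
    return 15.0 if day <= 4 else float(terminal_s)


def r2_press_reinforced(vi_armed: bool, now_s: float, last_r1_s: float,
                        min_abstinence_s: float) -> bool:
    """Whether an R2 press at now_s collects a pellet armed by the VI 10-s schedule.

    Group Extinction is the special case min_abstinence_s = 0 (no abstinence check).
    Hypothetical convention: pass last_r1_s = 0.0 (lever insertion) if R1 has not
    yet been pressed in the session."""
    return vi_armed and (now_s - last_r1_s) >= min_abstinence_s


if __name__ == "__main__":
    # A 90-s group rat on Day 6 whose last R1 was 60 s ago: the pellet is withheld.
    print(r2_press_reinforced(True, 500.0, 440.0, abstinence_requirement_s(6, 90)))
    # The same press on Day 2 (15-s requirement) would be reinforced.
    print(r2_press_reinforced(True, 500.0, 440.0, abstinence_requirement_s(2, 90)))
```

Treating Group Extinction as a zero-second requirement makes explicit that the groups differed only in this abstinence check, not in the VI 10-s schedule itself.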

Resurgence test (Phase 3)

On the final day, all rats received a single 30-min test session in which both levers were inserted but presses had no scheduled consequences. As usual, the session ended with the retraction of both levers.

Data treatment

The data were put through analysis of variance (ANOVA) with a rejection criterion of p < .05. One rat in Group 90-s Negative Contingency and one from Group 135-s Negative Contingency were excluded because they failed to learn R2 during Phase 2. The first earned only 7 reinforcers during the entire phase, while the second earned only 14. The mean numbers of pellets earned by the other rats in Groups 90-s Negative Contingency and 135-s Negative Contingency were 828.1 and 752.6, respectively.

Results

Lever Pressing

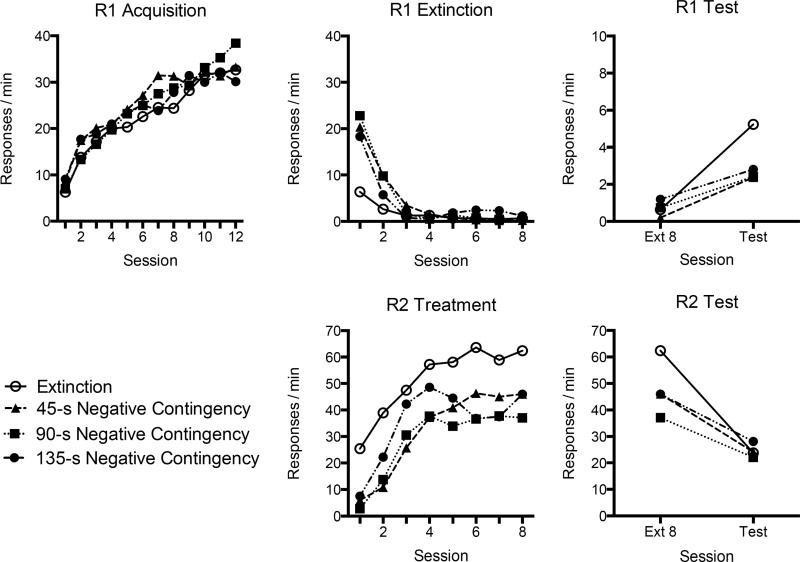

Lever press responding from all three phases is presented in the panels of Figure 1. R1 was acquired without difficulty in Phase 1 (left panel), and was replaced by R2 during Phase 2 (middle panels). During resurgence testing (upper right panel), all groups demonstrated a significant resurgence of R1 responding, although the negative contingency groups each exhibited less resurgence than the extinction control group.

Figure 1.

Results of Experiment 1. The upper panels summarize R1 responding during acquisition (left), response elimination (middle), and resurgence testing compared to the final extinction session (right). The lower panels summarize responding on R2 during response elimination (middle) and resurgence testing compared to the final extinction session (right).

During Phase 1, R1 responding increased reliably in all groups over 12 sessions, F(11, 286) = 61.01, MSE = 30.01, p < .01. Random assignment to groups was successful in the sense that the main effect of Group and the Group × Session interaction were not significant, Fs < 1.
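The degrees of freedom reported in these analyses follow from the design. A minimal sketch, assuming a standard Group (between-subjects) × Session (within-subjects) mixed ANOVA with uncorrected degrees of freedom, reproduces them from the number of retained rats (30 in four groups here). The function name is illustrative; the same arithmetic gives F(11, 319) and F(7, 203) for the 32 rats in three groups of Experiment 2.

```python
def mixed_anova_df(n_subjects: int, n_groups: int, n_levels: int) -> dict:
    """Uncorrected (numerator, denominator) df for a Group (between) x
    Level (within) mixed ANOVA with one observation per subject per level."""
    err_between = n_subjects - n_groups          # subjects within groups
    err_within = (n_levels - 1) * err_between    # levels x subjects within groups
    return {
        "group": (n_groups - 1, err_between),
        "level": (n_levels - 1, err_within),
        "interaction": ((n_groups - 1) * (n_levels - 1), err_within),
    }


if __name__ == "__main__":
    # Experiment 1: 32 rats minus 2 exclusions = 30, in four groups.
    print(mixed_anova_df(30, 4, 12))  # acquisition: level (11, 286)
    print(mixed_anova_df(30, 4, 8))   # Phase 2: level (7, 182), interaction (21, 182)
    print(mixed_anova_df(30, 4, 40))  # 3-min bins: level (39, 1014), interaction (117, 1014)
```

This kind of check is a quick way to catch a mistyped degree of freedom in a results section.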

During Phase 2, R1 responding quickly decreased. A 4 (Group) × 8 (Session) ANOVA demonstrated a main effect of Session, F(7, 182) = 85.46, MSE = 11.40, p < .001. A main effect of Group, F(3, 26) = 5.39, MSE = 22.46, p < .01, and a Group × Session interaction, F(21, 182) = 5.20, MSE = 11.40, p < .001, further indicated differences in R1 pressing between groups that depended on session. One-way ANOVAs were conducted to decompose the Group × Session interaction. They revealed group differences on Day 1, F(3, 26) = 5.93, MSE = 69.49, p < .01, and Day 2, F(3, 26) = 5.06, MSE = 18.67, p < .01. Fisher's Least Significant Difference (LSD) tests indicated that Group Extinction made fewer R1 responses than the 45-s (p < .01), 90-s (p < .01), and 135-s (p < .05) Negative Contingency groups. In addition, during Sessions 5-8, when negative contingency animals were receiving their terminal schedules (45-, 90-, or 135-s negative contingencies), differences in R1 pressing were noted during Session 6, F(3, 26) = 4.19, MSE = 1.57, p < .05, and Session 7, F(3, 26) = 4.38, MSE = 1.57, p < .05. LSD tests indicated that during Session 6, Group 135-s Negative Contingency pressed R1 at a higher rate than Group Extinction (p < .05), Group 45-s Negative Contingency (p < .05), and Group 90-s Negative Contingency (p < .05). Similarly, during Session 7, rats in the 135-s Negative Contingency group again responded at a greater rate than did Group Extinction (p < .05), Group 45-s Negative Contingency (p < .05), and Group 90-s Negative Contingency (p < .05). No other differences between groups were indicated, and terminal rates during the final session did not differ statistically between groups, F(3, 26) = 1.17.

Turning to R2, a 4 (Group) × 8 (Session) ANOVA indicated that responding on R2 increased reliably over the sessions of Phase 2, F(7, 182) = 44.87, MSE = 123.27, p < .01. There was a main effect of Group, F(3, 26) = 4.34, MSE = 1374.58, p < .05. LSD tests indicated that over Phase 2, Group Extinction made more responses on R2 than rats in Groups 45-s (p < .01), 90-s (p < .01), and 135-s Negative Contingency (p < .05). The Group × Session interaction was not significant, F(21, 182) = 1.17, MSE = 123.27.

During the resurgence test, when both R1 and R2 were placed on extinction, all groups showed an increase in R1 responding relative to Session 8 of Phase 2. A 4 (Group) × 2 (Session: Ext 8 vs. Resurgence Test) ANOVA indicated a reliable main effect of Session, F(1, 26) = 75.52, MSE = 1.25, p < .01. The main effect of Group, F(3, 26) = 3.58, MSE = 2.29, p < .05, and the Group × Session interaction, F(3, 26) = 6.18, MSE = 1.57, p < .01, were also reliable. LSD tests confirmed that all four groups increased R1 responding between the final session of Phase 2 and the resurgence test (ps < .01). One-way ANOVAs conducted on the data from each session to decompose the interaction indicated no differences between groups during the final session of Phase 2, F(3, 26) = 1.27. However, the groups differed in R1 pressing during the resurgence test, F(3, 26) = 5.93, MSE = 2.47, p < .01. LSD tests indicated more R1 responding in Group Extinction than in Groups 45-s (p < .01), 90-s (p < .01), and 135-s Negative Contingency (p < .01). There were no differences among the three groups receiving negative contingency treatments (ps ≥ .60).

A 4 (Group) × 2 (Session) ANOVA indicated that when put on extinction, R2 decreased relative to its rate during the final session of Phase 2, F(1, 26) = 52.60, MSE = 144.03, p < .01. The main effect of Group and the Group × Session interaction each failed to reach significance, largest F(3, 26) = 2.16, MSE = 144.03, p > .05, suggesting that R2 responding did not differ between the groups.

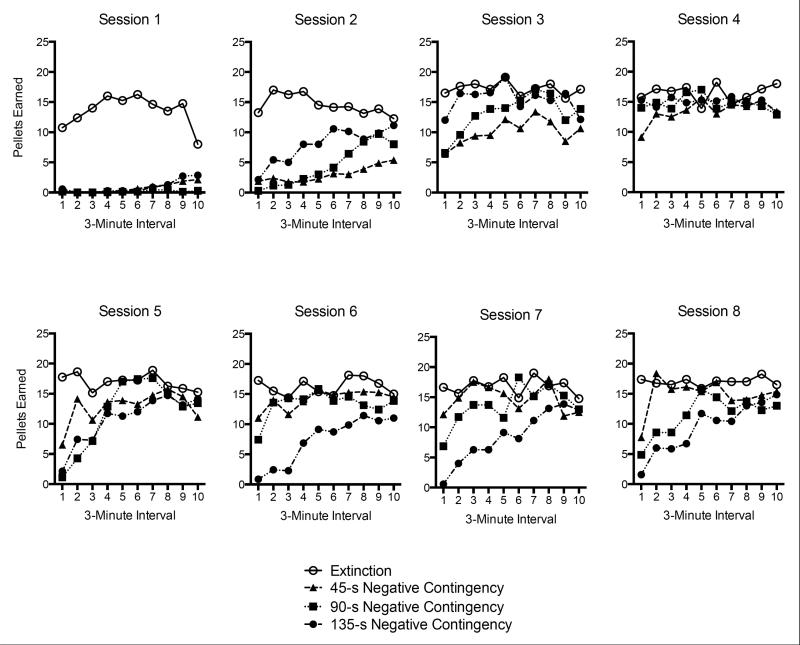

Reinforcers earned during Phase 2

One of the most important effects of introducing the negative contingencies was to reduce the rate at which the negative contingency groups earned pellets during Phase 2. This is shown in Figure 2, which depicts the pellets earned over 3-min intervals in each of the eight Phase 2 sessions. Across the first four sessions, when all negative contingency groups were receiving the initial 15-s negative contingency, a 4 (Group) × 40 (3-Min Interval) ANOVA indicated that the number of reinforcers earned changed over interval, F(39, 1014) = 37.63, MSE = 18.14, p < .001. A significant Group × Interval interaction, F(117, 1014) = 3.55, MSE = 18.14, p < .001, and a main effect of Group, F(3, 26) = 18.35, MSE = 249.03, p < .001, indicated group differences. LSD tests collapsing over bins indicated that Group Extinction earned more reinforcers than each of the groups receiving a negative contingency (all ps < .001). Although all negative contingency groups received identical treatment at this time, Group 45-s Negative Contingency also earned fewer reinforcers than Group 135-s Negative Contingency (p < .05) during the first four sessions. In Sessions 5-8, when the negative contingency groups were put on their final negative contingency schedules, a 4 (Group) × 40 (3-Min Interval) ANOVA indicated that the number of reinforcers earned changed over time bins, F(39, 1014) = 5.60, MSE = 25.29, p < .001. There was also a reliable Group × Interval interaction, F(117, 1014) = 2.00, MSE = 25.29, p < .001, and a main effect of Group, F(3, 26) = 7.35, MSE = 420.66, p < .01. LSD tests indicated that Group Extinction earned more reinforcers than Group 90-s Negative Contingency (p < .05) and Group 135-s Negative Contingency (p < .001). However, Group Extinction and Group 45-s Negative Contingency earned a similar number of reinforcers at this time (p = .12). In addition, Group 135-s Negative Contingency earned fewer reinforcers than Groups 45-s (p < .01) and 90-s Negative Contingency (p = .05). There were no differences between Group 45-s Negative Contingency and Group 90-s Negative Contingency (p = .36). The pattern was maintained during the final session of Phase 2, when the groups still differed in the number of reinforcers earned, F(3, 26) = 5.71, MSE = 1488.76, p < .01. Group Extinction earned more pellets than the 90-s and 135-s Negative Contingency groups, and Group 45-s Negative Contingency earned more pellets than Group 135-s Negative Contingency (ps ≤ .01). No other difference was reliable, smallest p > .12.
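The 40-level interval factor in these analyses corresponds to four sessions × ten 3-min bins. A minimal sketch of that binning step, assuming pellet delivery times are recorded in seconds from lever insertion (an assumed convention; the function names are illustrative), is shown below.

```python
def pellets_per_bin(pellet_times_s, session_s: int = 1800, bin_s: int = 180) -> list:
    """Count pellet deliveries in consecutive bins (ten 3-min bins per 30-min session).
    Times are seconds from lever insertion (assumed convention)."""
    counts = [0] * (session_s // bin_s)
    for t in pellet_times_s:
        if 0 <= t < session_s:
            counts[int(t // bin_s)] += 1
    return counts


def concatenate_sessions(sessions) -> list:
    """Join per-session bin counts in session order, e.g. Sessions 1-4 -> 40 bins."""
    bins = []
    for times in sessions:
        bins.extend(pellets_per_bin(times))
    return bins


if __name__ == "__main__":
    # Hypothetical timestamps, for illustration only.
    print(pellets_per_bin([5.0, 179.9, 180.0, 1799.0]))  # [2, 1, 0, 0, 0, 0, 0, 0, 0, 1]
    print(len(concatenate_sessions([[], [], [], []])))   # 40
```

Each rat then contributes one 40-element vector per block of sessions, which is the shape the Group × Interval ANOVA expects.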

Figure 2.

Number of pellets earned during each of the response elimination (Phase 2) sessions by the four groups in Experiment 1. Data are shown over ten 3-min intervals within each session.

Discussion

The results suggest that adding a negative contingency to the standard extinction treatment in the resurgence paradigm had two effects on R1 responding. First, it paradoxically slowed the rate at which R1 declined during response elimination compared to that in a group that received simple extinction. This result was presumably due to the fact that Group Extinction quickly learned to press R2 at a high rate, and either the incompatible R2 response or the high level of reinforcement for that behavior competed with the strength of R1 responding (e.g., Herrnstein, 1970; Shahan & Sweeney, 2011). The negative contingency groups were slower to learn R2 because the negative contingency meant that it was never reinforced until R1 had been suppressed. Second, and more important, the negative contingency on R1 also reduced the final resurgence effect. This result is consistent with the idea that a negative contingency provided a more effective “intervention” than simple extinction. However, it did not eliminate resurgence; all three negative contingency groups showed attenuated, but not abolished, resurgence during the final test. The fact that the negative contingency did not eliminate resurgence is consistent with prior results, mentioned earlier, which suggest that omission training does not prevent resurgence (e.g., da Silva et al., 2008; Doughty et al., 2007) or the theoretically related renewal effect (Kearns & Weiss, 2007; Nakajima et al., 2002). In the earlier experiments, making the response postponed a scheduled noncontingent reinforcer by 20 (da Silva et al., 2008; Doughty et al., 2007), 25 (Kearns & Weiss, 2007), or 30 s (Nakajima et al., 2002). The present results suggest that even stronger negative contingencies, including one that required abstinence from R1 for a minimum of 135 s to be reinforced for R2, do not destroy the first-phase instrumental learning either.

Another effect of adding the negative contingency was that it reduced the rate at which reinforcers were earned during the response elimination phase (Figure 2). This reduction was a consequence of the fact that the abstinence requirement, coupled with the tendency to continue to make R1 responses early in extinction, made it difficult to earn reinforcers at the start of Phase 2. The fact that reinforcers were so infrequent, particularly at the start of response elimination, could have allowed the Negative Contingency groups to learn that R1 was extinguished in the “context” of infrequent pellets. Thus, the animals could have learned to suppress (extinguish) R1 responding in that context. Since those conditions were similar to the conditions that were reintroduced during the resurgence test, there would be more generalization of response inhibition to the context of resurgence testing. An alternative, of course, is that the negative contingency is simply better at suppressing R1 performance in the long run than is extinction. Experiment 2 was designed to separate these possibilities.
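A simple calculation illustrates why persistent early R1 responding made reinforcers scarce. Purely as an illustrative assumption (not a model fitted to these data), suppose R1 presses occur as a Poisson process at a constant rate λ per second and ignore the start of the session. The time since the most recent R1 is then exponentially distributed with rate λ, so the proportion of time at which an abstinence requirement of T s is met is e^(−λT). The rates used below are hypothetical.

```python
import math


def fraction_time_abstinent(r1_rate_per_s: float, min_abstinence_s: float) -> float:
    """Long-run share of time at which at least min_abstinence_s have passed since
    the last R1, assuming (illustratively) that R1 presses form a Poisson process.
    For a stationary Poisson process the time since the last event is exponential
    with the same rate, so P(time since last R1 >= T) = exp(-rate * T)."""
    if r1_rate_per_s < 0 or min_abstinence_s < 0:
        raise ValueError("rate and interval must be non-negative")
    return math.exp(-r1_rate_per_s * min_abstinence_s)


if __name__ == "__main__":
    # Hypothetical R1 rates (presses per minute) paired with the requirements used here.
    for per_min, t in [(10, 15), (1, 45), (1, 90), (1, 135)]:
        share = fraction_time_abstinent(per_min / 60, t)
        print(f"{per_min}/min, {t} s: {share:.3f}")
```

Under these assumptions, a rat still pressing R1 10 times per minute would meet even the 15-s requirement only about 8% of the time (e^(−2.5) ≈ 0.082), so most pellets armed by the VI 10-s schedule would be withheld; at 1 press per minute, the 45-s requirement would be met about 47% of the time (e^(−0.75) ≈ 0.47).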

Experiment 2

Like Experiment 1, Experiment 2 involved an acquisition phase, a response elimination phase, and a final resurgence test. To test the replicability of the results of Experiment 1, it included an Extinction Control Group and a 45-s Negative Contingency group. However, it also included a Yoked group, which received a new treatment in Phase 2. For rats in this group, a reinforcer was made available for delivery after the next R2 response whenever a linked “master” rat in the Negative Contingency group earned one. There was thus no negative contingency between R1 responses and reinforcement for Group Yoked. However, the yoking procedure meant that these rats would receive a distribution of reinforcers over time very similar to that of the Negative Contingency group. If the negative contingency reduces resurgence by allowing the animal to learn R1 extinction in the context of long intervals between reinforcers, then the final level of resurgence in Group Yoked should be the same as that in the Negative Contingency group. If there is an additional effect of learning the negative contingency, it should suppress resurgence in the final test below the level observed in the Yoked group.

Subjects and Apparatus

The subjects were 32 naive female Wistar rats of the same age and from the same stock as those described in Experiment 1. The apparatus was also the same.

Procedure

Magazine training and R1 conditioning (Phase 1) proceeded exactly as described in Experiment 1.

Response elimination (Phase 2)

Over the next eight days, the rats received treatments identical to those in Experiment 1 except as noted. In all groups, R1 was available throughout the sessions but was never reinforced (i.e., it was extinguished). Group Extinction Control (n = 8) was reinforced on a VI 10-s schedule for pressing R2. Group 45-s Negative Contingency (n = 12) received the same treatment as its namesake in Experiment 1: During Days 1-4, R2 presses were reinforced on a VI 10-s schedule contingent on 15 s of abstinence from R1, and on Days 5-8, R2 presses were reinforced on the VI 10-s schedule contingent on 45 s of abstinence. For animals in Group Yoked (n = 12), a pellet became available for pressing R2 whenever a “master” animal from the negative contingency group earned one. The yoked and negative contingency groups were thus approximately matched on reinforcement frequency and distribution, but there was no negative contingency between R1 and the reinforcement of R2 in the yoked group. All sessions ended, as usual, with retraction of the levers at the end of 30 min.
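The yoking rule can be sketched as a small piece of state attached to each yoked rat. In the Python sketch below, each pellet earned by the master rat arms one pellet for the yoked partner, delivered on its next R2 press, and R1 presses have no programmed consequence. The class name is hypothetical, and the handling of a master earning several pellets before the yoked rat presses R2 (here they are queued rather than lost) is an assumption the text does not settle.

```python
from dataclasses import dataclass


@dataclass
class YokedSchedule:
    """Hypothetical yoking rule: each pellet earned by the master rat arms one
    pellet for the yoked rat, delivered on its next R2 press. R1 presses have no
    programmed consequence, so there is no negative contingency.
    Assumption: pellets armed before the yoked rat presses R2 are queued."""
    pending: int = 0

    def master_earned(self) -> None:
        self.pending += 1

    def r1_press(self) -> bool:
        return False  # R1 is on extinction for every group

    def r2_press(self) -> bool:
        if self.pending > 0:
            self.pending -= 1
            return True
        return False


if __name__ == "__main__":
    yoked = YokedSchedule()
    # Hypothetical event stream: (source, event) pairs in time order.
    events = [("master", "pellet"), ("yoked", "R1"), ("yoked", "R2"), ("yoked", "R2")]
    for source, event in events:
        if source == "master":
            yoked.master_earned()
        elif event == "R1":
            print("R1 reinforced:", yoked.r1_press())
        else:
            print("R2 reinforced:", yoked.r2_press())
```

Because the yoked rat's R1 presses never enter the rule, any reduction in resurgence it shares with its master can be attributed to the timing of reinforcers rather than to the abstinence requirement.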

Resurgence test (Phase 3)

As in Experiment 1, there was a final 30-min test session in which R1 and R2 were both available, but never reinforced.

Results

Lever Pressing

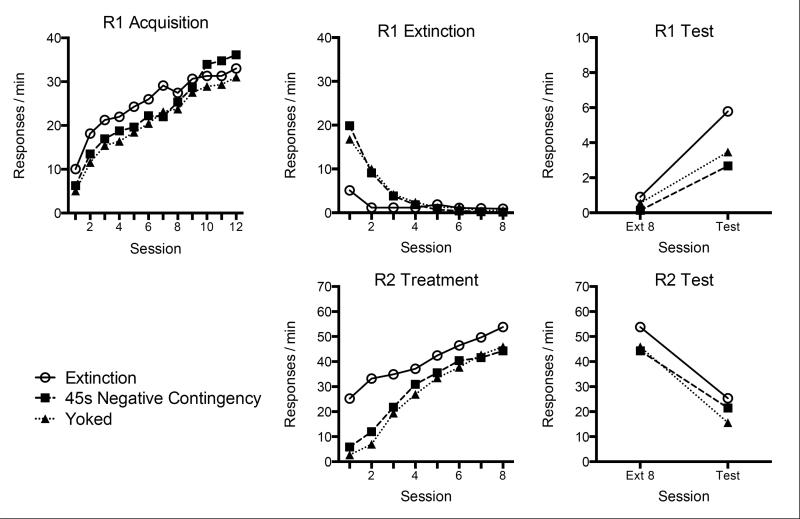

Lever press data from the three phases of Experiment 2 are presented in Figure 3. As in Experiment 1, R2 responding replaced R1 responding during Phase 2 (middle panels). In the resurgence test (upper right), all groups displayed a significant resurgence of R1 responding. As before, Group 45-s Negative Contingency showed an attenuated level of resurgence. So did Group Yoked; importantly, Group Yoked and Group 45-s Negative Contingency did not differ in their magnitude of resurgence.

Figure 3.

Results of Experiment 2. The upper panels summarize R1 responding during acquisition (left), response elimination (middle), and resurgence testing compared to the final extinction session (right). The lower panels summarize responding on R2 during response elimination (middle) and resurgence testing compared to the final extinction session (right).

During Phase 1, R1 responding proceeded as expected, and all groups clearly increased responding over sessions, F(11, 319) = 64.48, MSE = 29.33, p < .001. Neither the main effect of Group nor the Group × Session interaction was reliable, Fs < 1.06.

In Phase 2, when reinforcement was now available for R2, R1 responding decreased while R2 responding increased. However, differences between groups emerged. First considering R1 responding, a 3 (Group) × 8 (Session) ANOVA indicated a Session effect, F(7, 203) = 78.89, MSE = 8.45, p < .001, as well as a main effect of Group, F(2, 29) = 10.66, MSE = 18.63, p < .001, and a Group × Session interaction, F(14, 203) = 10.23, MSE = 8.45, p < .001. LSD tests collapsed over session indicated that Group Extinction made fewer R1 presses than each of the other groups (ps < .01). One-way ANOVAs conducted on each session to decompose the interaction revealed differences during Session 1, F(2, 29) = 11.40, MSE = 48.58, p < .001, Session 2, F(2, 29) = 17.44, MSE = 12.24, p < .001, Session 7, F(2, 29) = 3.76, MSE = 0.37, p < .05, and Session 8, F(2, 29) = 4.34, MSE = 0.34, p < .05. During Sessions 1 and 2, LSD tests indicated that Group Extinction made fewer R1 responses than either Group 45-s Negative Contingency or Group Yoked (ps ≤ .01). During Sessions 7 and 8, Group Extinction responded more than Group 45-s Negative Contingency (ps ≤ .05). No other differences were noted for R1 responding during Phase 2.
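Each session-by-session one-way ANOVA compares three groups, so its numerator has exactly 2 degrees of freedom, and the upper-tail p-value then has a closed form: if X follows F(2, d), then P(X > x) = (1 + 2x/d)^(-d/2). The sketch below uses that identity, with only the standard library, to check reported p-values against their F statistics; the function name is hypothetical, and the identity does not hold for other numerator df.

```python
def f_upper_tail_df1_2(f_value: float, df2: int) -> float:
    """Upper-tail p-value for an F statistic with 2 numerator degrees of freedom.

    Uses the closed form P(F > x) = (1 + 2x / df2) ** (-df2 / 2), which is
    valid only when the numerator df is exactly 2.
    """
    if f_value < 0 or df2 <= 0:
        raise ValueError("f_value must be >= 0 and df2 must be > 0")
    return (1.0 + 2.0 * f_value / df2) ** (-df2 / 2.0)


# Session-wise one-way ANOVAs on R1 in Phase 2: 3 groups, 32 rats -> F(2, 29).
for label, f in [("Session 7", 3.76), ("Session 8", 4.34)]:
    print(label, round(f_upper_tail_df1_2(f, 29), 3))
# Session 7 0.035
# Session 8 0.022
```

For statistics with a numerator df other than 2, a general routine such as SciPy's F distribution survival function would be needed instead.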

Now considering R2, a 3 (Group) × 8 (Session) ANOVA indicated that responding increased over the sessions during Phase 2, F(7, 203) = 95.67, MSE = 64.09, p < .001. There was a significant Group × Session interaction, F(14, 203) = 3.07, MSE = 64.09, p < .05, as well as a Group main effect, F(2, 29) = 3.23, MSE = 1066.49, p = .05. One-way ANOVAs decomposing the interaction revealed group differences during Session 1, F(2, 29) = 53.49, p < .001, Session 2, F(2, 29) = 16.17, p < .001, and Session 3, F(2, 29) = 3.73, p < .05. LSD tests indicated that Group Extinction made more R2 responses than the other groups during Sessions 1-3 (ps ≤ .05). No differences in R2 responding were noted between Group 45-s Negative Contingency and Group Yoked.
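The degrees of freedom reported throughout follow from the mixed design (3 groups, 32 rats, one repeated factor). A minimal sketch, assuming a balanced design with no missing cells and uncorrected F tests (no sphericity adjustment); the function name is hypothetical:

```python
def mixed_anova_df(n_subjects: int, n_groups: int, n_levels: int) -> dict:
    """(numerator, denominator) df for a Group x repeated-factor mixed ANOVA.

    Assumes a balanced design, no missing cells, and no sphericity correction.
    """
    between_error = n_subjects - n_groups               # subjects within groups
    within_error = between_error * (n_levels - 1)       # subjects x repeated factor
    return {
        "group": (n_groups - 1, between_error),
        "within": (n_levels - 1, within_error),
        "interaction": ((n_groups - 1) * (n_levels - 1), within_error),
    }


print(mixed_anova_df(32, 3, 8))
# {'group': (2, 29), 'within': (7, 203), 'interaction': (14, 203)}
print(mixed_anova_df(32, 3, 40))
# {'group': (2, 29), 'within': (39, 1131), 'interaction': (78, 1131)}
```

With 8 sessions this gives 29 × 7 = 203 error df, and with 40 three-minute intervals it gives 29 × 39 = 1131, matching the Phase 2 lever-press and reinforcer analyses; 12 acquisition sessions give 29 × 11 = 319.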

During resurgence testing, all groups exhibited a clear increase in R1 responding. A 3 (Group) × 2 (Session: Ext 8 vs. Resurgence Test) ANOVA revealed a Session effect, F(1, 29) = 92.62, MSE = 1.98, p < .001. There was also a significant Group × Session interaction, F(2, 29) = 3.64, MSE = 1.98, p < .05, and a reliable main effect of Group, F(2, 29) = 8.02, MSE = 2.29, p < .01. LSD tests revealed that all three groups increased R1 responding between the final session of Phase 2 and the resurgence test (ps < .001). In addition, during the resurgence test, Group Extinction responded more than either Group 45-s Negative Contingency (p < .01) or Group Yoked (p < .05). Importantly, there was no difference in R1 responding between the negative contingency and yoked groups during testing (p = .33).

In the resurgence test, all groups also decreased their rates of R2 responding compared with the final session of Phase 2, F(2, 29) = 3.64, MSE = 2.29, p < .05. However, neither a Group effect nor a Group × Session interaction was observed, largest F(2, 29) = 1.27, MSE = 318.58, p = .30.

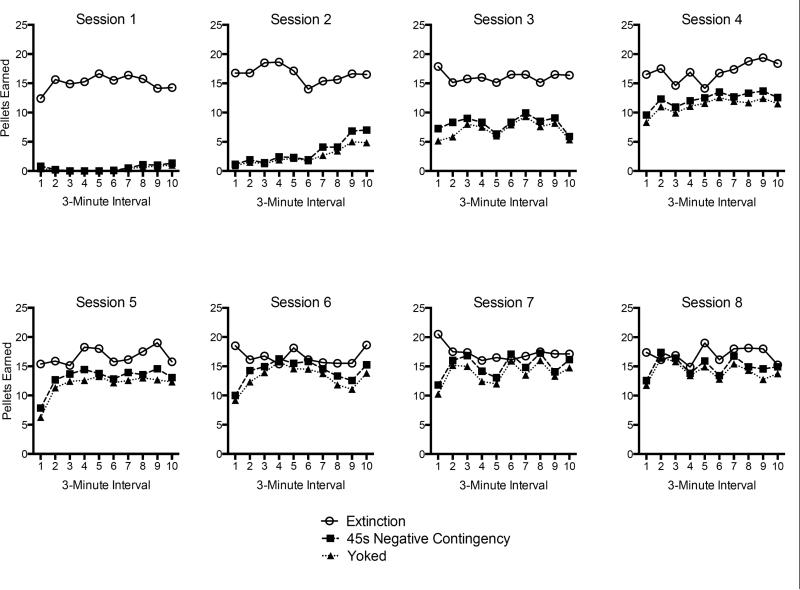

Reinforcers earned during Phase 2

As shown in Figure 4, both the negative contingency and the yoking procedure caused the rats to earn fewer reinforcers than Group Extinction Control during Phase 2. During the first four sessions, when the negative contingency group was receiving the initial 15-s minimum R1-reinforcer interval, a 3 (Group) × 40 (3-Min Interval) ANOVA indicated that the number of reinforcers earned increased over bins, F(39, 1131) = 21.54, MSE = 15.24, p < .001. There was a significant Group × Interval interaction, F(78, 1131) = 3.63, MSE = 15.24, p < .001, and a main effect of Group, F(2, 29) = 47.52, MSE = 280.66, p < .001. LSD tests that collapsed over interval revealed that Group Extinction earned more pellets than either of the other groups (ps < .001). The yoking procedure was effective in the sense that the negative contingency and yoked groups did not differ in the number of reinforcers earned (p = .49). In Sessions 5 through 8, when Group 45-s Negative Contingency was receiving the final 45-s negative contingency, a 3 (Group) × 40 (3-Min Interval) ANOVA again demonstrated that the number of reinforcers changed over bins, F(39, 1131) = 3.08, MSE = 19.70, p < .001. A main effect of Group, F(2, 29) = 13.40, MSE = 96.78, p < .001, also indicated differences between groups, although the Group × Interval interaction was not reliable, F(78, 1131) < 1, MSE = 19.80. LSD tests collapsing over interval indicated that Group Extinction Control earned more reinforcers than either of the other groups (ps < .001). There was no difference between Group 45-s Negative Contingency and Group Yoked (p = .08). The pattern was maintained during the final session of Phase 2 (Session 8), where the groups still differed, F(2, 29) = 7.41, MSE = 262.16, p < .01, and LSD tests indicated that Group Extinction earned more reinforcers than either of the other groups (ps < .05), which did not differ from each other (p = .17).

Figure 4.

Number of pellets earned during each of the response elimination (Phase 2) sessions by the three groups in Experiment 2. Data are shown over ten 3-min intervals within each session.
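The 40-level Interval factor in the reinforcer analyses comes from dividing each 30-min session into ten 3-min bins and pooling four sessions. A minimal binning sketch, assuming pellet times are recorded in seconds from session onset; the helper names are hypothetical:

```python
from typing import Iterable, List


def pellets_per_bin(pellet_times_s: Iterable[float],
                    session_len_s: int = 1800,
                    bin_s: int = 180) -> List[int]:
    """Count pellets in consecutive bins of one session (default: 10 x 3 min)."""
    n_bins = session_len_s // bin_s
    counts = [0] * n_bins
    for t in pellet_times_s:
        # Ignore any timestamps outside the 30-min session window.
        if 0 <= t < session_len_s:
            counts[int(t // bin_s)] += 1
    return counts


def bins_across_sessions(sessions: Iterable[Iterable[float]]) -> List[int]:
    """Concatenate per-session bin counts, e.g. 4 sessions -> 40 bins."""
    out: List[int] = []
    for pellet_times in sessions:
        out.extend(pellets_per_bin(pellet_times))
    return out


print(pellets_per_bin([0, 179.9, 180, 1799]))
# [2, 1, 0, 0, 0, 0, 0, 0, 0, 1]
```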

Discussion

As in Experiment 1, the negative contingency reduced the number of pellets earned in the response elimination phase (Group 45-s Negative Contingency). In addition, the yoking procedure approximately equated Group Yoked with Group 45-s Negative Contingency on the distribution of pellets earned during that phase. The two groups therefore had a similar opportunity to learn that R1 extinction was in force in the context of infrequent pellet deliveries. Consistent with the context hypothesis, they showed equivalently attenuated, though not eliminated, resurgence during the final test. Evidently, the negative contingency between R1 and reinforcement of R2 contributed little beyond this. The findings are thus consistent with the idea that the negative contingency reduced resurgence by allowing the rat to learn R1 extinction in the relative absence of pellets, the “context” that was also present during resurgence testing.

General Discussion

In both experiments, the introduction of a negative or “abstinence” contingency between the original behavior (R1) and reinforcement of R2 reduced, but did not eliminate, resurgence. The fact that resurgence still occurred after a negative contingency is consistent with the view that Phase-1 learning can persist through many retroactive interference treatments (e.g., Bouton, 1993). Experiment 2 further found a similar reduction but not elimination of resurgence in a yoked group that received the same temporal distribution of reinforcers during the response elimination phase, but without the negative contingency. Thus, the negative contingency per se did not appear to matter; the sparse distribution of Phase-2 reinforcers that it created did. The results are thus consistent with the hypothesis that the negative contingency reduced resurgence by allowing the animal to learn R1 extinction in the “context” of spaced or infrequent reinforcer delivery.

The results are consistent with prior work on the effectiveness of “thinning” schedules and the use of very lean reinforcement schedules in reducing resurgence (Leitenberg et al., 1975; Winterbauer & Bouton, 2012; Sweeney & Shahan, 2013b). In fact, one way to think of the present effect of the negative contingency is that it produced a kind of “thinning” treatment in reverse. Instead of beginning the response elimination phase with a rich schedule of reinforcement for R2 and ending with a very lean one, the negative contingency and yoked conditions began with a lean schedule and ended with a richer one. Clinically, the overall implication may be that the sequence of rich-to-lean or lean-to-rich might not be as important as merely giving the patient an opportunity to learn to inhibit responding at some point in a lean context that is similar to ones that might be encountered in the world outside the clinic. Of course, we did not compare the effects of rich-to-lean and lean-to-rich sequences here, and it may turn out that some sequences are more effective than others. Moreover, although we found no impact of the negative contingency beyond its effect on reinforcer distribution (Experiment 2), it is possible that more sensitive tests, or the presence of other variables that might modulate the effects of the negative contingency, might one day produce evidence of the contingency's impact. However, the present results are consistent with the view that the effect of the abstinence contingency was primarily to give the subject an opportunity to learn R1 extinction under conditions like those of resurgence testing.

The results are thus consistent with the contextual analysis of resurgence (e.g., Bouton et al., 2012; Bouton & Swartzentruber, 1991; Winterbauer & Bouton, 2010). That view takes as its starting point the extensive evidence that extinction performance can be relatively specific to the context in which it is learned (e.g., Bouton, 2002, 2004; Bouton et al., 2012). It is important to note that “context” has been shown to involve a wide variety of background stimuli, including not only the room or physical apparatus in which learning occurs, but also interoceptive drug state, mood state, time, and recent events such as the presence or absence of reinforcers (e.g., Bouton et al., 1993; Bouton, 1991, 2002). On this view, resurgence occurs in the typical paradigm because the transition from response elimination to resurgence testing provides a noticeable context change and thus allows an ABC renewal effect (e.g., Bouton et al., 2011; Todd, 2013; Todd et al., 2012). By the same logic, resurgence was reduced in the present experiments, and in the previous lean reinforcement and thinning experiments (Leitenberg et al., 1975; Sweeney & Shahan, 2013b; Winterbauer & Bouton, 2012), because the no-pellet context of testing was similar to the minimal-pellet context in which extinction was learned. Learning to extinguish R1 during earlier periods of lean reinforcement thus generalized, or transferred, to the resurgence test. Presumably, transfer was not complete, and attenuated resurgence still occurred, because the conditions of resurgence testing (which immediately follows sessions of relatively rich reinforcement of R2) were still somewhat different from those in which extinction had been learned.

One advantage of the contextual approach is that it integrates resurgence with other well-studied “relapse” effects that occur after extinction, such as renewal, spontaneous recovery, reinstatement, and rapid reacquisition, and that it uses the conceptual tools developed in their analysis (e.g., see Bouton & Woods, 2008, for a review). It is also compatible with successful earlier theories of extinction (e.g., Amsel, 1967; Capaldi, 1967, 1994), which, ever since the discovery of the partial reinforcement extinction effect, have likewise emphasized the role of “contextual” factors in extinction (e.g., see Bouton et al., 2012, or Vurbic & Bouton, in press, for further discussion).

An alternative explanation may be provided by a quantitative model of resurgence proposed by Shahan and Sweeney (2011). That model extends behavioral momentum theory (e.g., Nevin & Grace, 2000) and suggests that when reinforcement of R2 is added to the extinction of R1, it disrupts R1 beyond the effect of extinction alone while also strengthening a process that indirectly increases the potential strength of R1. When reinforcers are withdrawn during resurgence testing, the source of disruption is removed, and R1 responding can return. The model successfully predicts the current finding that R1 responding declined more rapidly in the extinction controls than in the negative contingency and yoked groups during Phase 2: the higher rate of reinforcement earned by the extinction controls would have generated stronger disruption. The model can also explain previous findings that lean or thinning schedules of R2 reinforcement reduce resurgence, because leaner schedules produce less disruption and less indirect strengthening of R1, and it would explain the current effect of reinforcer density in the same terms. However, Winterbauer et al. (2013) noted that the model is challenged by the fact that resurgence can be observed even after very extensive Phase 2 training under some conditions, whereas the model predicts that resurgence should weaken over Phase 2 (e.g., Sweeney & Shahan, 2013a). It is also worth noting that the model addresses resurgence specifically and does not account for other “relapse” effects that occur after extinction, such as renewal and reinstatement (in which responding returns after a context change or when noncontingent reinforcers are presented again; see Podlesnik & Shahan, 2009, 2010).

One potential problem for either the contextual view or the Shahan-Sweeney view is that differences in reinforcement rate during Phase 2 were not perfectly related to the extent of resurgence in Experiment 1. Specifically, the negative contingency groups differed in their rates of reinforcement during the response elimination phase of Experiment 1, but did not differ in their final levels of resurgence. However, since reinforcement rate was similarly low among the groups during early sessions, when the groups all had the same 15-s minimum interval requirement, it is conceivable that later differences might have added relatively little to the final resurgence strength. That is, we know very little about the function relating the amount of time spent in a low reinforcement situation to the final level of resurgence. For this reason, the lack of a perfect correlation between Phase 2 reinforcement rate and final resurgence may not be fatal to either view.

Another explanation of resurgence was suggested by Leitenberg et al. (1970), who argued that under conditions that yield resurgence, R2 might compete with R1 so strongly that the animal has little opportunity to learn that R1 is being extinguished. On this view, response elimination procedures that allow higher levels of R1 responding should allow more opportunity for extinction learning. Consistent with this possibility, the present negative contingency and yoked groups both made more R1 responses than the extinction control groups during the response elimination phases, and both showed less resurgence. However, although the response suppression mechanism may be especially plausible when reinforcement of R2 produces very strong (e.g., complete) suppression of R1, resurgence can still occur when animals have made a substantial number of R1 extinction responses (e.g., over 1000 in Winterbauer & Bouton, 2012); the rats in the extinction control groups of the current experiments made an average of 416 unreinforced R1 responses during extinction. Winterbauer and Bouton (2010) also found that resurgence still occurred in animals whose R2 reinforcement schedules produced as much R1 responding as, or even more than, control groups that received simple extinction of R1 without R2 training. Such results clearly indicate that response suppression is not required for resurgence, although its role in the present experiments remains unclear.

In summary, the present experiments found that introducing a negative or “abstinence” contingency reduced, but did not eliminate, the resurgence effect. The results are consistent with the hypothesis that the contingency allowed the animal to learn to extinguish its responding in a low-pellet “context” that was similar to the no-pellet “context” of resurgence testing. One implication is that clinical interventions should be conducted in ways that encourage generalization between the intervention and relapse situations. Finally, in addition to providing new empirical and theoretical information, the present experiments introduce a new method that might provide a step toward a better model of the relapse that can occur after the termination of a contingency management treatment.

Acknowledgments

This research was supported by NIH Grant R01 DA033123 to MEB. We thank Eric Thrailkill, Sydney Trask, and Drina Vurbic for their comments on the manuscript. Send reprint requests to Mark E. Bouton, Department of Psychology, University of Vermont, Burlington, VT 05405-134.

References

- Amsel A. Partial reinforcement effects on vigor and persistence: Advances in frustration theory derived from a variety of within-subjects experiments. In: Spence KW, Spence JT, editors. Psychology of Learning and Motivation. Vol. 1. Academic Press; New York: 1967. pp. 1–65.

- Bouton ME. A contextual analysis of fear extinction. In: Martin PR, editor. Handbook of behavior therapy and psychological science: An integrative approach. Pergamon Press; New York: 1991. pp. 435–453.

- Bouton ME. Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychological Bulletin. 1993;114:80–99. doi: 10.1037/0033-2909.114.1.80.

- Bouton ME. Context, ambiguity, and unlearning: Sources of relapse after behavioral extinction. Biological Psychiatry. 2002;52:976–986. doi: 10.1016/s0006-3223(02)01546-9.

- Bouton ME. Context and behavioral processes in extinction. Learning & Memory. 2004;11:485–494. doi: 10.1101/lm.78804.

- Bouton ME, Rosengard C, Achenbach GG, Peck CA, Brooks DC. Effects of contextual conditioning and unconditional stimulus presentation on performance in appetitive conditioning. The Quarterly Journal of Experimental Psychology. 1993;46:63–95.

- Bouton ME, Swartzentruber D. Sources of relapse after extinction in Pavlovian and instrumental learning. Clinical Psychology Review. 1991;11:123–140.

- Bouton ME, Todd TP, Vurbic D, Winterbauer NE. Renewal after the extinction of free operant behavior. Learning & Behavior. 2011;39:57–67. doi: 10.3758/s13420-011-0018-6.

- Bouton ME, Winterbauer NE, Todd TP. Relapse processes after the extinction of instrumental learning: renewal, resurgence, and reacquisition. Behavioural Processes. 2012;90:130–141. doi: 10.1016/j.beproc.2012.03.004.

- Bouton ME, Winterbauer NE, Vurbic D. Context and extinction: Mechanisms of relapse in drug self-administration. In: Haselgrove M, Hogarth L, editors. Clinical Applications of Learning Theory. Psychology Press; East Sussex, UK: 2012. pp. 103–134.

- Bouton ME, Woods AM. Extinction: Behavioral mechanisms and their implications. In: Byrne JH, Sweatt D, Menzel R, Eichenbaum H, Roediger H, editors. Learning and Memory: A Comprehensive Reference. Vol. 1. 2008. pp. 151–171.

- Capaldi EJ. A sequential hypothesis of instrumental learning. Psychology of Learning and Motivation. 1967;1:67–156.

- Capaldi EJ. The sequential view: From rapidly fading stimulus traces to the organization of memory and the abstract concept of number. Psychonomic Bulletin & Review. 1994;1:156–181. doi: 10.3758/BF03200771.

- da Silva SP, Maxwell ME, Lattal KA. Concurrent resurgence and behavioral history. Journal of the Experimental Analysis of Behavior. 2008;90:313–331. doi: 10.1901/jeab.2008.90-313.

- Doughty AH, da Silva SP, Lattal KA. Differential resurgence and response elimination. Behavioural Processes. 2007;75:115–128. doi: 10.1016/j.beproc.2007.02.025.

- Fisher EB, Green L, Calvert AL, Glasgow RE. Incentives in the modification and cessation of cigarette smoking. In: Schachtman TR, Reilly S, editors. Associative Learning and Conditioning Theory: Human and Non-Human Applications. Oxford University Press; Oxford: 2011. pp. 321–342.

- Heil SH, Yoon JH, Higgins ST. Pregnant and postpartum women. In: Higgins ST, Silverman K, Heil SH, editors. Contingency Management in Substance Abuse Treatment. Guilford Press; New York: 2008. pp. 182–201.

- Herrnstein RJ. On the law of effect. Journal of the Experimental Analysis of Behavior. 1970;13:243–266. doi: 10.1901/jeab.1970.13-243.

- Higgins ST, Heil SH, Lussier JP. Clinical implications of reinforcement as a determinant of substance use disorders. Annual Review of Psychology. 2004;55:431–461. doi: 10.1146/annurev.psych.55.090902.142033.

- Higgins ST, Silverman K, Heil SH. Contingency Management in Substance Abuse Treatment. Guilford Press; New York: 2008.

- Kearns DN, Weiss SJ. Contextual renewal of cocaine seeking in rats and its attenuation by the conditioned effects of an alternative reinforcer. Drug and Alcohol Dependence. 2007;90:193–202. doi: 10.1016/j.drugalcdep.2007.03.006.

- Leitenberg H, Rawson RA, Bath K. Reinforcement of competing behavior during extinction. Science. 1970;169:301–303. doi: 10.1126/science.169.3942.301.

- Leitenberg H, Rawson RA, Mulick JA. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Lieving GA, Lattal KA. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Nakajima S, Urushihara K, Masaki T. Renewal of operant performance formerly eliminated by omission or noncontingency training upon return to the acquisition context. Learning and Motivation. 2002;33:510–525.

- Nevin JA, Grace RC. Behavioral momentum and the law of effect. Behavioral and Brain Sciences. 2000;23:73–90. doi: 10.1017/s0140525x00002405.

- Pacitti WA, Smith NF. A direct comparison of four methods for eliminating a response. Learning and Motivation. 1977;8:229–237.

- Podlesnik CA, Jimenez-Gomez C, Shahan TA. Resurgence of alcohol seeking produced by discontinuing non-drug reinforcement as an animal model of drug relapse. Behavioural Pharmacology. 2006;17:369–374. doi: 10.1097/01.fbp.0000224385.09486.ba.

- Podlesnik CA, Shahan TA. Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior. 2009;37:357–364. doi: 10.3758/LB.37.4.357.

- Podlesnik CA, Shahan TA. Extinction, relapse, and behavioral momentum. Behavioural Processes. 2010;84:400–411. doi: 10.1016/j.beproc.2010.02.001.

- Quick SL, Pyszczynski AD, Colston KA, Shahan TA. Loss of alternative non-drug reinforcement induces relapse of cocaine-seeking in rats: Role of dopamine D1 receptors. Neuropsychopharmacology. 2011;36:1015–1020. doi: 10.1038/npp.2010.239.

- Shahan TA, Sweeney MM. A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior. 2011;95:91–108. doi: 10.1901/jeab.2011.95-91.

- Sweeney MM, Shahan TA. Behavioral momentum and resurgence: Effects of time in extinction and repeated resurgence tests. Learning & Behavior. 2013a (in press). doi: 10.3758/s13420-013-0116-8.

- Sweeney MM, Shahan TA. Effects of high, low, and thinning rates of alternative reinforcement on response elimination and resurgence. Journal of the Experimental Analysis of Behavior. 2013b;100:102–116. doi: 10.1002/jeab.26.

- Todd TP. Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2013;39:193–207. doi: 10.1037/a0032236.

- Todd TP, Winterbauer NE, Bouton ME. Effects of the amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior. 2012;40:145–157. doi: 10.3758/s13420-011-0051-5.

- Vurbic D, Bouton ME. A contemporary behavioral perspective on extinction. In: McSweeney FK, Murphy ES, editors. The Wiley-Blackwell Handbook of Operant and Classical Conditioning. John Wiley & Sons; New York: in press.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence of an extinguished instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes. 2010;36:343–353. doi: 10.1037/a0017365.

- Winterbauer NE, Bouton ME. Effects of thinning the rate at which the alternative behavior is reinforced on resurgence of an extinguished instrumental response. Journal of Experimental Psychology: Animal Behavior Processes. 2012;38:279–291. doi: 10.1037/a0028853.

- Winterbauer NE, Lucke S, Bouton ME. Some factors modulating the strength of resurgence after extinction of an instrumental behavior. Learning and Motivation. 2013;44:60–71. doi: 10.1016/j.lmot.2012.03.003.