Abstract

Background

An increased focus on learning acute care skills has been called for in undergraduate medical curricula. This study investigated whether a simulation-based curriculum improved senior medical students' ability to manage acute coronary syndrome (ACS), as measured during a Clinical Practice Exam (CPX). We hypothesized that simulation training would improve overall performance compared with targeted didactics or historical controls.

Methods

All fourth-year medical students (N=291) over 2 years at our institution were included in this study. In the third year of medical school, the “Control” group received no intervention, the “Didactic” group received a targeted didactic curriculum, and the “Simulation” group participated in small group simulation training and the didactic curriculum. For intergroup comparison on the CPX, we calculated the percentage of correct actions completed by the student. Data are presented as mean ± SD, with significance defined as p<0.05.

Results

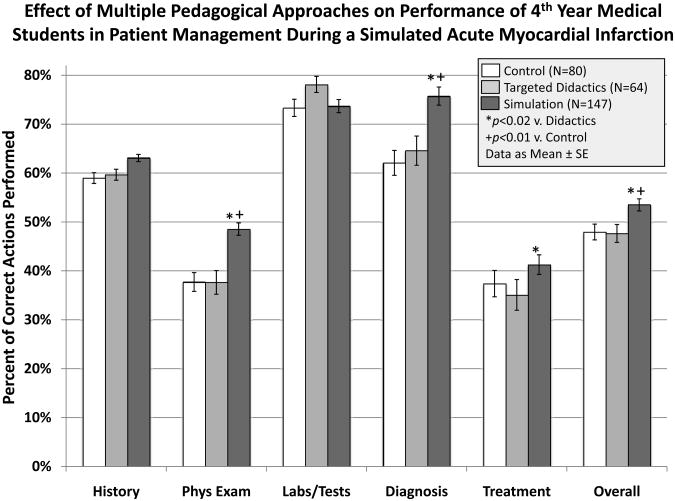

There was a significant improvement in overall performance with Simulation (53.5 ± 8.9%) versus both Didactics (47.7 ± 9.0%) and Control (47.9 ± 9.8%) (p<0.001). Performance on the physical exam component was significantly better in Simulation (48.5 ± 16.2%) versus both Didactics (37.6 ± 13.1%) and Control (37.7 ± 15.7%), as was performance on the diagnosis component: Simulation (75.7 ± 24.2%) versus both Didactics (64.6 ± 25.1%) and Control (62.1 ± 24.2%) (p<0.02 for all comparisons).

Discussion

Simulation training had a modest impact on overall CPX performance in the management of a simulated ACS. Further studies are needed to determine how to improve curricula on the care of unstable patients.

Keywords: medical student, simulation, deliberate practice, curriculum, acute coronary syndrome

Introduction

Each year in the United States, millions of patients are hospitalized for medical conditions requiring urgent assessment and treatment, such as acute coronary syndrome (ACS).1-4 Best-practice guidelines have been published for proper patient assessment and management for ACS and other acute illnesses.5-10 Adherence to these guidelines dramatically improves patient outcomes.1,4,7,11-15 However, an equally large body of research has shown that overall adherence to guidelines by physicians is poor.7,16-25

Some sources have recently reported that little training is included in most medical school curricula to prepare future interns for the care of unstable patients.26,27 Several studies have demonstrated that simulation-based medical education (SBME) can improve performance in the management of unstable patients immediately after training.28-30 Accordingly, our institution sought to improve the training of its students for the management of acute medical conditions. One part of this curricular expansion was to teach students how to assess acute chest pain and manage ACS. We undertook the current study to investigate whether this new simulation-based curriculum improved the senior medical student's ability to properly manage a standardized patient (SP) presenting with ACS. Our hypothesis was that SBME combined with a targeted didactic curriculum would improve student adherence to published guidelines for the assessment and management of simulated ACS several months after initial training, as compared both to exposure to the targeted didactic curriculum alone and to historical controls.

Methods

The MUSC Institutional Review Board reviewed this study protocol and waived the need for IRB approval.

Students who participated in the third-year Internal Medicine Clerkship during the first half of year 1 were considered the “Control” group. This group received no curricular intervention. At the midpoint of year 1, we instituted a targeted didactic curriculum during the Clerkship. Students who took the Clerkship after this change in year 1 were the “Didactic” group, whose targeted didactic curriculum included two hours of lectures entitled “The Approach to the Unstable Patient.” The content of the didactic curriculum covered the initial assessment, differential diagnosis, and management of a patient presenting with acute chest pain, including the proper initial management steps of ACS. In the second year of the study, the “Simulation” group participated in small group simulation training in addition to the targeted didactic curriculum.

We administer the CPX in the first quarter of the senior year of medical school at our institution. All 4th-year medical students (N=291) who took an 8-station Clinical Performance Exam (CPX) over the 2-year period were included (N=144 and N=147 for years 1 and 2 of testing, respectively). The standard 7-station CPX was administered along with an added eighth station that featured an unstable patient (ACS, specifically acute ST-elevation myocardial infarction [STEMI]). Student performance on the ACS station was not included in the grade received or recorded in their transcript. The students were unaware at the time of testing that the station was not included in their grade.

We performed all simulation training and testing sessions in a setting that replicates an emergency room bay at our institution, including patient bed, bedside monitors, medical gases, etc. The environment included SPs playing the role of patient and nurse, both of whom were trained to respond to the participant via a standardized script and the use of realistic actions such as medication administration. Each station was graded by the SP playing the nurse role according to checklists developed through a modified Delphi technique. For the STEMI station, this checklist was constructed via best practice in line with current American Heart Association publications along with review by a group of interventional cardiologists at our institution according to previously reported methods (see Table 1).31-34 All students received the didactics or the didactics plus simulation a minimum of 2 months prior to taking the CPX.

Table 1. Grading Checklist for ACS Station.

| Item | Assessment |
|---|---|
| History | |
| 1 | Performed hand hygiene |
| 2 | Introduced self to patient |
| 3 | Assessed level of consciousness (alertness/orientation: person, place, time, etc.) |
| 4 | Acquired History of Present Illness (asked questions about chest pain: where, how long, how bad, etc.) |
| 5 | Acquired Past Medical History (do you have medical problems for which you see a doctor?) |
| 6 | Acquired Past Surgical History (have you had surgery? When?) |
| 7 | Acquired Family Hx (does anyone in your family have heart disease; other health problems?) |
| 8 | Acquired Social Hx (alcohol, tobacco, illicit drugs) |
| 9 | Asked about Allergies (allergies to foods or medications?) |
| 10 | Asked about Current Medications |
| 11 | Asked about last dose of Sildenafil (Viagra) |
| Physical Exam (including obtaining vital signs) | |
| 12 | Applied bedside ECG |
| 13 | Confirmed patent IV in place or requested IV placement (is there a working IV?) |
| 14 | Placed Pulse Oximeter |
| 15 | Applied BP cuff |
| 16 | Checked temperature |
| 17 | Checked BP in both arms |
| 18 | Auscultated heart (MUST auscultate in 4 points on chest and over carotids) |
| 19 | Auscultated lungs (Credit for >3 lung fields on EACH side) |
| 20 | Auscultated abdomen (Credit for >2 areas of auscultation PLUS palpation and “does this hurt?”/any pain?) |
| 21 | Examined extremities (Credit for checking pulses in both arms and checking for edema in legs) |
| 22 | Examined patient's neck |
| Differential Diagnosis | |
| 23 | Listed correct DDX (Acute Coronary (Acute MI, Heart Attack, STEMI), Pulmonary Embolus (PE), Pericarditis, Esophageal Rupture (Boerhaave's), Aortic Dissection, Pneumothorax (PTX)) |
| Labs and Tests | |
| 24 | Ordered 12-lead ECG |
| 25 | Ordered Portable Chest x-ray |
| 26 | Ordered correct labs (BMP, CBC, Cardiac Enzymes, LFTs, Coags (PT, PTT, INR), d-dimer) |
| Diagnosis | |
| 27 | Correctly diagnosed “Anterior-Lateral STEMI” (ST elevation MI) |
| 28 | Correctly assessed CXR: no acute cardiopulmonary problem/normal aorta/no air in mediastinum/no pericardial effusion |
| 29 | Correctly assessed labs [need to mention elevated Troponin and Glc] |
| Management | |
| 30 | Placed supplemental oxygen (NC if SaO2>90%, FM if SaO2<90%; must titrate for SaO2>94%) |
| 31 | Ordered Immediate Cardiology Consult |
| 32 | Requested/mentioned to activate cath lab |
| 33 | Ordered Nitroglycerin (sublingual, paste, or infusion) |
| 34 | Ordered Aspirin 325mg and verbalized that patient is to chew this |
| 35 | Ordered heparin bolus and infusion (okay to NOT know dose) |
| 36 | Participant stated that it was safe to give Heparin b/c CXR normal and BP equal in both arms |
| 37 | Ordered Plavix (clopidogrel load) 300-600mg PO |
| 38 | Ordered Lipitor or Simvastatin 80 mg PO |
| 39 | Ordered Lopressor (metoprolol) 5mg IV |
| 40 | Ordered bolus 1L Normal Saline or Lactated Ringer's |
| 41 | Ordered Mucomyst 600mg PO to be given immediately |
| 42 | Requested monitor for transport to cath lab |

For grading purposes, we calculated the percentage of correct actions completed by the student in the STEMI station. To evaluate performance on different components of the STEMI checklist by type of training curriculum, and to see whether certain areas accounted for the poor performance, we performed an F-test based on a multivariate analysis of variance (MANOVA) model, followed by one-way ANOVA on performance scores by checklist component. The components were: History (11 items), Physical Exam (11 items), Labs/Tests (3 items), Diagnosis (3 items), and Management/Treatment Plan (13 items). Because 3 groups were being compared, we used the Tukey-Kramer correction for multiple comparisons within each ANOVA model.35 Data are presented as mean ± SD. All statistical analyses were conducted using SAS v9.3 (Cary, NC), and significance was defined as p<0.05.
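The percent-correct scoring described above can be sketched in a few lines of Python. This is an illustration only, not the authors' scoring software. The item ranges are read off Table 1; treating Differential Diagnosis as its own one-item component and computing the overall score as an unweighted fraction of all 42 items are assumptions; and the names `COMPONENTS`, `percent_correct`, and `score_station`, as well as the example student, are hypothetical.

```python
# Checklist components as item-number ranges taken from Table 1.
# range(a, b) covers items a through b - 1.
COMPONENTS = {
    "History": range(1, 12),                  # items 1-11
    "Physical Exam": range(12, 23),           # items 12-22
    "Differential Diagnosis": range(23, 24),  # item 23 (assumed separate component)
    "Labs/Tests": range(24, 27),              # items 24-26
    "Diagnosis": range(27, 30),               # items 27-29
    "Management": range(30, 43),              # items 30-42
}


def percent_correct(completed, items):
    """Percentage of the given checklist items that appear in `completed`."""
    items = list(items)
    done = sum(1 for item in items if item in completed)
    return 100.0 * done / len(items)


def score_station(completed):
    """Overall and per-component percent-correct scores for one student."""
    completed = set(completed)
    scores = {
        name: percent_correct(completed, items)
        for name, items in COMPONENTS.items()
    }
    # Assumption: the overall score is the unweighted share of all 42 items.
    scores["Overall"] = percent_correct(completed, range(1, 43))
    return scores


# Hypothetical student (not study data): the item numbers the grader checked off.
example = score_station(
    [1, 2, 4, 5, 9, 10, 12, 14, 15, 18, 19, 23, 24, 27, 30, 33, 34]
)
for name, value in example.items():
    print(f"{name}: {value:.1f}%")
```

Keeping the component boundaries in one mapping makes it easy to check that the item counts agree with those listed in the text (11, 11, 3, 3, and 13, plus the single differential diagnosis item).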

Results

The students were divided into 3 groups based upon the curricular intervention that they received: historical controls (Control, N=80), the targeted didactic curriculum (Didactics, N=64), and the targeted didactic curriculum plus small group simulation training (Simulation, N=147). The MANOVA indicated that there was significant (p<0.0001) variability in domain scores across the 3 groups. Results of the individual ANOVAs indicate that overall performance was significantly better with Simulation (53.5 ± 8.9%) versus both Didactics (47.7 ± 9.0%, p<0.02) and Control (47.9 ± 9.8%, p<0.01). Performance on the physical exam component was significantly better in Simulation (48.5 ± 16.2%) versus both Didactics (37.6 ± 13.1%, p<0.02) and Control (37.7 ± 15.7%, p<0.01), as was performance on the diagnosis component [Simulation (75.7 ± 24.2%) versus both Didactics (64.6 ± 25.1%, p<0.02) and Control (62.1 ± 24.2%, p<0.01)]. There was no significant difference in overall score or any component score when comparing Didactics with Control. Figure 1 illustrates all of these comparisons.
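For readers who want to see how a one-way ANOVA and a Tukey-Kramer comparison relate to the reported group sizes, means, and SDs, the following Python sketch recomputes the test statistics for the overall score from those summary values alone. It is not the authors' SAS analysis: it works from rounded published numbers rather than student-level data, so its results are approximate, and the function names `anova_from_summary` and `tukey_kramer_q` are hypothetical. Converting the statistics to p-values would require the F and studentized range distributions from a statistics package, which is deliberately left out here.

```python
from math import sqrt

# (n, mean, SD) of the overall percent-correct score, as reported above.
groups = {
    "Control": (80, 47.9, 9.8),
    "Didactics": (64, 47.7, 9.0),
    "Simulation": (147, 53.5, 8.9),
}


def anova_from_summary(groups):
    """One-way ANOVA F statistic from group sizes, means, and SDs."""
    n_total = sum(n for n, _, _ in groups.values())
    grand_mean = sum(n * mean for n, mean, _ in groups.values()) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups.values())
    # Within-group sum of squares: recovered from each group's sample variance.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups.values())
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    return f_stat, df_between, df_within, ms_within


def tukey_kramer_q(group_a, group_b, ms_within):
    """Studentized range statistic for one pair of groups of unequal size."""
    (n_a, mean_a, _), (n_b, mean_b, _) = group_a, group_b
    standard_error = sqrt(ms_within / 2 * (1 / n_a + 1 / n_b))
    return abs(mean_a - mean_b) / standard_error


f_stat, df_b, df_w, ms_w = anova_from_summary(groups)
q_sim_ctrl = tukey_kramer_q(groups["Simulation"], groups["Control"], ms_w)
q_sim_did = tukey_kramer_q(groups["Simulation"], groups["Didactics"], ms_w)
q_did_ctrl = tukey_kramer_q(groups["Didactics"], groups["Control"], ms_w)

print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
print(f"q Simulation vs Control   = {q_sim_ctrl:.2f}")
print(f"q Simulation vs Didactics = {q_sim_did:.2f}")
print(f"q Didactics vs Control    = {q_did_ctrl:.2f}")
```

The Tukey-Kramer form of the standard error, with the term 1/n_a + 1/n_b, is what accommodates the unequal group sizes (80, 64, and 147) in this study.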

Figure 1.

This figure illustrates the performance in the STEMI scenario by educational intervention concerning overall performance and performance in different components of the grading checklist. The Simulation group performed better than both Didactics and Control overall and in the Physical Exam and Diagnosis components, although only to a modest degree.

Finally, the Simulation group performed significantly better than the Didactic group on the treatment component of the checklist (41.3 ± 15.0% vs. 35.1 ± 14.6%, p<0.02). Of note, the physical exam and treatment plan components of the checklist were two of the lowest scoring components in all three groups. Both components were at least 10% lower than every other component by intra-group comparison, with the exception of the differential diagnosis component among the Simulation group (see Figure 1).

Discussion

Interns must be able to assess and manage, with supervision, life-threatening situations, including ACS, at least through the initial stages.26,27,36-38 However, the best pedagogical approach for preparing medical students to enter into this role is not known. We therefore compared 3 training curricula for preparing medical students to assess and manage a patient with ACS. Our data illustrate that SBME training produced a modest improvement in overall performance in the management of a simulated patient with this condition. This finding contributes to what is known in this domain in a number of ways.

A number of studies have shown that SBME can markedly improve medical student performance in the management of the simulated unstable patient as compared to traditional training, in the short term.29,30 Specifically, McCoy et al. reported in a small randomized, crossover trial that SBME was superior to didactics in training medical students to assess and manage simulated unstable patient scenarios.29 However, they tested students on the same day that the educational intervention was given. In addition, the students in their study were 4th-year medical students who had self-selected to take an Emergency Medicine elective that involved this training and testing. Thus, their finding that SBME can produce near-expert performance (>90% correct steps) in 4th-year medical students managing an ACS may be tempered by our data.

We have shown that this level of performance is not achieved across an entire medical school class or when the time of testing is not coincident with training. It is very likely that there is a significant decline in patient management skills over time for all students, as has been shown with respect to Advanced Cardiac Life Support testing in as little as three months after training.39-41 It is concerning that our students performed poorly with respect to history, physical exam, and treatments. Our students perform close to the national average for all portions of USMLE exams. Simulation-based evaluation of the manner in which students assess and begin management of an unstable patient may be revealing a weakness in students that other testing is not discerning. However, as we showed only a modest improvement with the use of SBME, the curriculum needed to attain a high level of skill needs to be defined, along with whether deliberate practice (DP) and/or recurrent training can maintain this skill level over time.

Concerning the maintenance of high-level performance over time, McGaghie and colleagues recently reported that SBME with DP is superior to traditional medical school curricula in a variety of specialties. There are 9 elements that make up DP, per McGaghie: highly motivated learners; clear objectives; appropriate level of difficulty; focused, repetitive practice; reliable measurements; informative feedback; error correction followed by more DP; the learner is able to master the task in the time needed; and advancement to the next task.28 In our study design, the students had only one practice session in the simulation center. Perhaps the reason there was only a modest improvement is that multiple opportunities for DP, with debriefing sessions, are needed. In addition, in our curriculum there was no system of direct advancement to the next level of performance when a certain knowledge or skill set had been demonstrated. In a meta-analysis by McGaghie and colleagues, the authors argue that the growing literature suggests that traditional clinical training is inadequate to prepare students properly in order to improve clinical performance and patient safety.28 While it is likely true that SBME with DP is an effective addition to a traditional curriculum, future research needs to define how best to train medical students to reach a high level of adherence to guidelines in medical simulation involving acute care and then maintain that high level of performance into their intern year for treating acute cardiac situations, along with a myriad of other actual clinical conditions.42

A few weaknesses of our study design should be mentioned. First, concerning the improved rate of correct diagnosis in the Simulation group, it is most likely that this is due to having practiced the process of diagnosing and treating a patient in acute distress during the simulation training sessions. However, this also could be due to a loss of test integrity from year to year as students share experiences. Second, the students were not randomized in this study. Student schedules are assigned by a computer, so there was no deliberate placement of students. However, there is the possibility that the Simulation group performed better due to some baseline difference between groups. Third, this study was done at the beginning of the fourth year. Many students took courses in the Intensive Care Unit (ICU) or Emergency Department (ED) during their fourth year, so presumably those students would be more prepared for intern year.

While the Liaison Committee on Medical Education (LCME) and the Accreditation Council for Graduate Medical Education (ACGME) do not currently require students to master management of unstable patients, many argue that training in this domain is lacking and should be included.26,27 Most CPX tests evaluate a student in the ambulatory setting, which might not be equivalent to evaluating a student on the acute management of an unstable patient. Furthermore, based upon this study, we believe that the addition of such training is needed. Delineating an effective pedagogical approach that can have lasting results should be the aim of future research.

Conclusion

An SBME curriculum intervention had a modest impact on performance when students were assessed at least 2 months after initial training, as compared to targeted didactics and historical controls. Future research needs to elucidate the best methods by which to train students to assess and manage patients with unstable conditions and to retain these skills during their intern year.

Acknowledgments

Funding: This project was supported by the South Carolina Clinical & Translational Research Institute, Medical University of South Carolina's CTSA, NIH/NCATS Grant Number UL1TR000062.

Footnotes

Disclaimer: A portion of these data was presented as an oral abstract at the 2011 Annual Meeting of the Clerkship Directors in Internal Medicine (CDIM).

Contributor Information

Deborah J. DeWaay, Email: dewaay@musc.edu, MUSC Department of Internal Medicine, Medical University of South Carolina, 96 Jonathan Lucas Street, MSC 623 Charleston, SC 29425, Phone: 843-792-1302, Fax: 843-792-0448.

Matthew D. McEvoy, Vanderbilt University School of Medicine.

Donna H. Kern, Medical University of South Carolina.

Louise A. Alexander, Vanderbilt University School of Medicine.

Paul J. Nietert, Medical University of South Carolina.

References

- 1. Milani RV, Lavie CJ, Dornelles AC. The impact of achieving perfect care in acute coronary syndrome: the role of computer assisted decision support. American heart journal. 2012 Jul;164(1):29–34. doi: 10.1016/j.ahj.2012.04.004.

- 2. Mannino DM, Braman S. The epidemiology and economics of chronic obstructive pulmonary disease. Proceedings of the American Thoracic Society; Oct 1 2007; pp. 502–506.

- 3. Rathier MO, Baker WL. A review of recent clinical trials and guidelines on the prevention and management of delirium in hospitalized older patients. Hospital practice. 2011 Oct;39(4):96–106. doi: 10.3810/hp.2011.10.928.

- 4. Calvin JE, Shanbhag S, Avery E, Kane J, Richardson D, Powell L. Adherence to evidence-based guidelines for heart failure in physicians and their patients: lessons from the Heart Failure Adherence Retention Trial (HART). Congestive heart failure. 2012 Mar-Apr;18(2):73–78. doi: 10.1111/j.1751-7133.2011.00263.x.

- 5. Cantor WJ, Morrison LJ. Guidelines for STEMI. CMAJ: Canadian Medical Association journal = journal de l'Association medicale canadienne. 2005 May 24;172(11):1425. doi: 10.1503/cmaj.1041728. author reply 1426.

- 6. Dechant LM. UA/NSTEMI: Are you following the latest guidelines? Nursing. 2012 Sep;42(9):26–33. doi: 10.1097/01.NURSE.0000418611.61100.ca. quiz 34.

- 7. Lodewijckx C, Sermeus W, Vanhaecht K, et al. Inhospital management of COPD exacerbations: a systematic review of the literature with regard to adherence to international guidelines. Journal of evaluation in clinical practice. 2009 Dec;15(6):1101–1110. doi: 10.1111/j.1365-2753.2009.01305.x.

- 8. Loomba RS, Arora R. ST elevation myocardial infarction guidelines today: a systematic review exploring updated ACC/AHA STEMI guidelines and their applications. American journal of therapeutics. 2009 Sep-Oct;16(5):e7–e13. doi: 10.1097/MJT.0b013e31818d40df.

- 9. O'Donnell C, Verbeek R. Guidelines for STEMI. CMAJ: Canadian Medical Association journal = journal de l'Association medicale canadienne. 2005 May 24;172(11):1425–1426. doi: 10.1503/cmaj.1041729. author reply 1426.

- 10. Sykes PK. Prevention and management of postoperative delirium among older patients on an orthopedic surgical unit: a best practice implementation project. Journal of nursing care quality. 2012 Apr-Jun;27(2):146–153. doi: 10.1097/NCQ.0b013e31823f8573.

- 11. Asche CV, Leader S, Plauschinat C, et al. Adherence to current guidelines for chronic obstructive pulmonary disease (COPD) among patients treated with combination of long-acting bronchodilators or inhaled corticosteroids. International journal of chronic obstructive pulmonary disease. 2012;7:201–209. doi: 10.2147/COPD.S25805.

- 12. Haut ER, Lau BD, Kraenzlin FS, et al. Improved prophylaxis and decreased rates of preventable harm with the use of a mandatory computerized clinical decision support tool for prophylaxis for venous thromboembolism in trauma. Archives of surgery. 2012 Oct;147(10):901–907. doi: 10.1001/archsurg.2012.2024.

- 13. Kalla K, Christ G, Karnik R, et al. Implementation of guidelines improves the standard of care: the Viennese registry on reperfusion strategies in ST-elevation myocardial infarction (Vienna STEMI registry). Circulation. 2006 May 23;113(20):2398–2405. doi: 10.1161/CIRCULATIONAHA.105.586198.

- 14. Mangin D. Adherence to evidence-based guidelines is the key to improved health outcomes for general practice patients: NO. Journal of primary health care. 2012 Jun;4(2):158–160.

- 15. Vause J. Adherence to evidence-based guidelines is the key to improved health outcomes for general practice patients: YES. Journal of primary health care. 2012 Jun;4(2):156–158.

- 16. Corrado A, Rossi A. How far is real life from COPD therapy guidelines? An Italian observational study. Respiratory medicine. 2012 Jul;106(7):989–997. doi: 10.1016/j.rmed.2012.03.008.

- 17. Fantini MP, Compagni A, Rucci P, Mimmi S, Longo F. General practitioners' adherence to evidence-based guidelines: a multilevel analysis. Health care management review. 2012 Jan-Mar;37(1):67–76. doi: 10.1097/HMR.0b013e31822241cf.

- 18. Ganz DA, Glynn RJ, Mogun H, Knight EL, Bohn RL, Avorn J. Adherence to guidelines for oral anticoagulation after venous thrombosis and pulmonary embolism. Journal of general internal medicine. 2000 Nov;15(11):776–781. doi: 10.1046/j.1525-1497.2000.91022.x.

- 19. Jochmann A, Neubauer F, Miedinger D, Schafroth S, Tamm M, Leuppi JD. General practitioner's adherence to the COPD GOLD guidelines: baseline data of the Swiss COPD Cohort Study. Swiss medical weekly. 2010 Apr 21; doi: 10.4414/smw.2010.13053.

- 20. Seidu S, Khunti K. Non-adherence to diabetes guidelines in primary care - the enemy of evidence-based practice. Diabetes research and clinical practice. 2012 Mar;95(3):301–302. doi: 10.1016/j.diabres.2012.01.015.

- 21. Van Eijk MM, Kesecioglu J, Slooter AJ. Intensive care delirium monitoring and standardised treatment: a complete survey of Dutch Intensive Care Units. Intensive & critical care nursing: the official journal of the British Association of Critical Care Nurses. 2008 Aug;24(4):218–221. doi: 10.1016/j.iccn.2008.04.005.

- 22. Chan PS, Krumholz HM, Nichol G, Nallamothu BK. American Heart Association National Registry of Cardiopulmonary Resuscitation I. Delayed time to defibrillation after in-hospital cardiac arrest. The New England journal of medicine. 2008 Jan 3;358(1):9–17. doi: 10.1056/NEJMoa0706467.

- 23. Chan PS, Nichol G, Krumholz HM, Spertus JA, Nallamothu BK. American Heart Association National Registry of Cardiopulmonary Resuscitation I. Hospital variation in time to defibrillation after in-hospital cardiac arrest. Archives of internal medicine. 2009 Jul 27;169(14):1265–1273. doi: 10.1001/archinternmed.2009.196.

- 24. Mhyre JM, Ramachandran SK, Kheterpal S, Morris M, Chan PS. American Heart Association National Registry for Cardiopulmonary Resuscitation I. Delayed time to defibrillation after intraoperative and periprocedural cardiac arrest. Anesthesiology. 2010 Oct;113(4):782–793. doi: 10.1097/ALN.0b013e3181eaa74f.

- 25. Kumbhani DJ, Fonarow GC, Cannon CP, et al. Predictors of Adherence to Performance Measures in Patients with Acute Myocardial Infarction. The American journal of medicine. 2013 Aug 24;126(74):1–9. doi: 10.1016/j.amjmed.2012.02.025.

- 26. Fessler HE. Undergraduate medical education in critical care. Critical care medicine. 2012 Nov;40(11):3065–3069. doi: 10.1097/CCM.0b013e31826ab360.

- 27. Tallentire VR, Smith SE, Skinner J, Cameron HS. The preparedness of UK graduates in acute care: a systematic literature review. Postgraduate medical journal. 2012 Jul;88(1041):365–371. doi: 10.1136/postgradmedj-2011-130232.

- 28. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Academic medicine: journal of the Association of American Medical Colleges. 2011 Jun;86(6):706–711. doi: 10.1097/ACM.0b013e318217e119.

- 29. McCoy CE, Menchine M, Anderson C, Kollen R, Langdorf MI, Lotfipour S. Prospective randomized crossover study of simulation vs. didactics for teaching medical students the assessment and management of critically ill patients. The Journal of emergency medicine. 2011 Apr;40(4):448–455. doi: 10.1016/j.jemermed.2010.02.026.

- 30. Steadman RH, Coates WC, Huang YM, et al. Simulation-based training is superior to problem-based learning for the acquisition of critical assessment and management skills*. Critical care medicine. 2006;34(1):151–157. doi: 10.1097/01.ccm.0000190619.42013.94.

- 31. O'Connor RE, Brady W, Brooks SC, et al. Part 10: acute coronary syndromes: 2010 American Heart Association Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2010 Nov 2;122(18 Suppl 3):S787–817. doi: 10.1161/CIRCULATIONAHA.110.971028.

- 32. Kushner FG, Hand M, Smith SC, Jr, et al. 2009 focused updates: ACC/AHA guidelines for the management of patients with ST-elevation myocardial infarction (updating the 2004 guideline and 2007 focused update) and ACC/AHA/SCAI guidelines on percutaneous coronary intervention (updating the 2005 guideline and 2007 focused update): a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. Journal of the American College of Cardiology. 2009 Dec 1;54(23):2205–2241. doi: 10.1016/j.jacc.2009.10.015.

- 33. Antman EM, Anbe DT, Armstrong PW, et al. ACC/AHA guidelines for the management of patients with ST-elevation myocardial infarction; A report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines (Committee to Revise the 1999 Guidelines for the Management of patients with acute myocardial infarction). Journal of the American College of Cardiology. 2004 Aug 4;44(3):E1–E211. doi: 10.1016/j.jacc.2004.07.014.

- 34. McEvoy MD, Smalley JC, Nietert PJ, et al. Validation of a detailed scoring checklist for use during advanced cardiac life support certification. Simulation in healthcare: journal of the Society for Simulation in Healthcare. 2012 Aug;7(4):222–235. doi: 10.1097/SIH.0b013e3182590b07.

- 35. Kramer CY. Extension of Multiple Range Tests to Group Means with Unequal Numbers of Replications. Biometrics. 1956;12:307–310.

- 36. Young JS, Dubose JE, Hedrick TL, Conaway MR, Nolley B. The use of “war games” to evaluate performance of students and residents in basic clinical scenarios: a disturbing analysis. The Journal of trauma. 2007 Sep;63(3):556–564. doi: 10.1097/TA.0b013e31812e5229.

- 37. Mayo PH, Hackney JE, Mueck JT, Ribaudo V, Schneider RF. Achieving house staff competence in emergency airway management: results of a teaching program using a computerized patient simulator. Critical care medicine. 2004 Dec;32(12):2422–2427. doi: 10.1097/01.ccm.0000147768.42813.a2.

- 38. Kelly C, Noonan CL, Monagle JP. Preparedness for internship: a survey of new interns in a large Victorian health service. Australian health review: a publication of the Australian Hospital Association. 2011 May;35(2):146–151. doi: 10.1071/AH10885.

- 39. Smith KK, Gilcreast D, Pierce K. Evaluation of staff's retention of ACLS and BLS skills. Resuscitation. 2008 Jul;78(1):59–65. doi: 10.1016/j.resuscitation.2008.02.007.

- 40. O'Steen DS, Kee CC, Minick MP. The retention of advanced cardiac life support knowledge among registered nurses. Journal of nursing staff development: JNSD. 1996 Mar-Apr;12(2):66–72.

- 41. Gass DA, Curry L. Physicians' and nurses' retention of knowledge and skill after training in cardiopulmonary resuscitation. Canadian Medical Association journal. 1983 Mar 1;128(5):550–551.

- 42. McGaghie WC, Draycott TJ, Dunn WF, Lopez CM, Stefanidis D. Evaluating the impact of simulation on translational patient outcomes. Simulation in healthcare: journal of the Society for Simulation in Healthcare. 2011 Aug;6(Suppl):S42–47. doi: 10.1097/SIH.0b013e318222fde9.